HE-DMDeception: Adversarial Attack Network for 3D Object Detection Based on Human Eye and Deep Learning Model Deception

Abstract

1. Introduction

- (1) A novel framework, HE-DMDeception, is proposed to jointly optimize human visual deception and deep model deception for generating stealthy and effective adversarial camouflage;

- (2) For human visual deception, a CycleGAN-based camouflage network is employed to generate highly camouflaged background textures, which serve as the initial input for subsequent deep model deception;

- (3) A SAM-based segmentation pipeline with semi-supervised fine-tuning is introduced to mitigate the scarcity of annotated vehicle masks;

- (4) NMS-targeted and attention-dispersion loss terms are designed to explicitly disrupt detection pipelines while preserving camouflage fidelity.
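
To make the NMS-targeted idea in contribution (4) more concrete, the following is a minimal sketch of standard greedy non-maximum suppression in plain Python. It is not the authors' implementation: the helper names `iou` and `greedy_nms`, the box coordinates, the scores, and the 0.5 threshold are all illustrative assumptions. It only shows why confident predictions with low mutual IoU survive duplicate filtering, which is the behaviour an NMS-targeted loss would try to provoke.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept by greedy NMS, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        # Keep a box only if it does not overlap any already kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept


# Clean case (illustrative): three heavily overlapping predictions of one vehicle.
clean_boxes = [(10, 10, 110, 60), (12, 11, 112, 62), (9, 8, 108, 59)]
clean_scores = [0.90, 0.80, 0.75]

# Attacked case (hypothetical): confident predictions that barely overlap.
attacked_boxes = [(10, 10, 110, 60), (80, 40, 180, 90), (0, 50, 60, 110)]
attacked_scores = [0.90, 0.88, 0.85]

clean_kept = greedy_nms(clean_boxes, clean_scores)
attacked_kept = greedy_nms(attacked_boxes, attacked_scores)
print("boxes kept (clean):", len(clean_kept))
print("boxes kept (attacked):", len(attacked_kept))
```

In the clean case the duplicates collapse to a single detection, whereas in the hypothetical attacked case every box passes the IoU check and remains as a separate, spurious detection.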

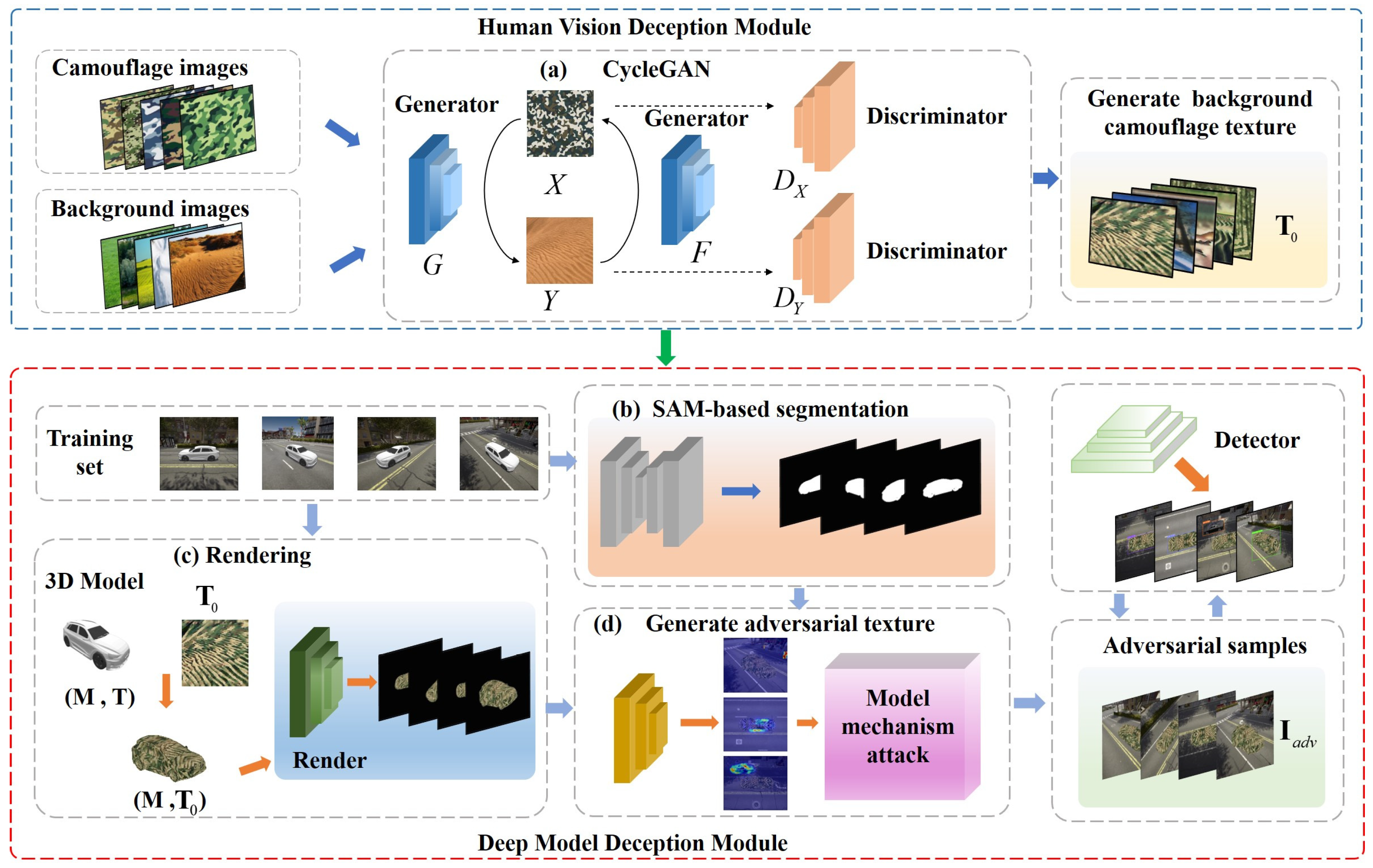

2. Network Architecture

2.1. Human Vision Deception Module

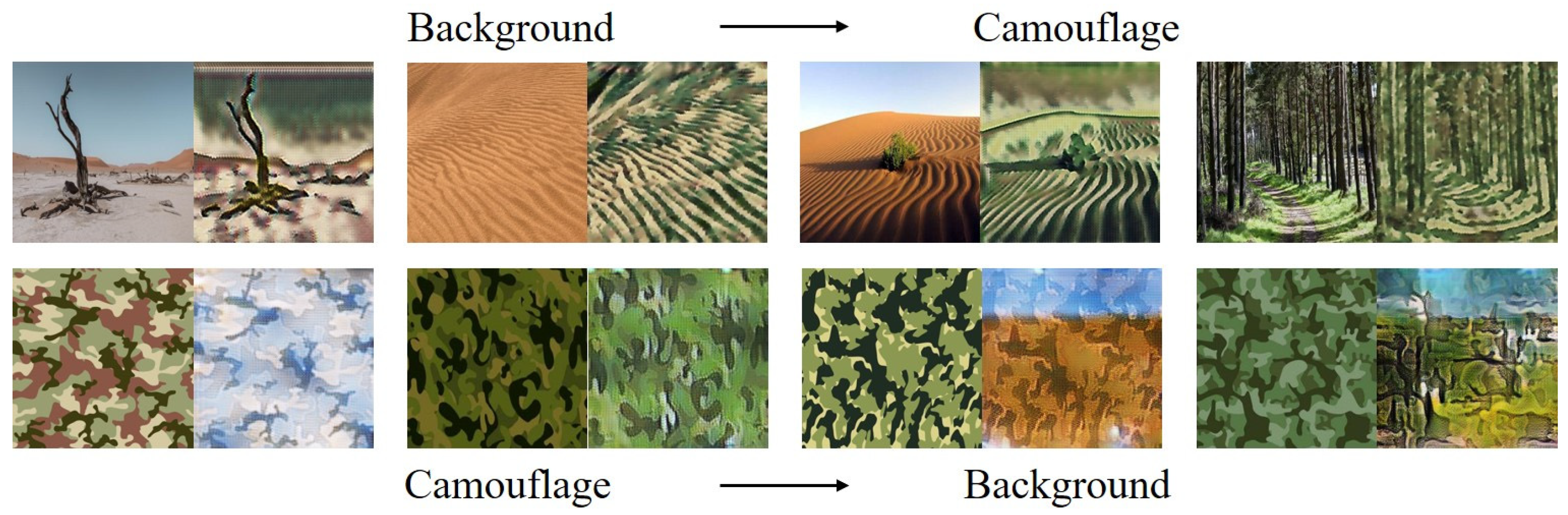

2.1.1. Camouflage-Style Background Dataset

2.1.2. Cycle-Consistent Generative Adversarial Network

2.1.3. Neural Renderer

2.2. Deep Model Deception Module

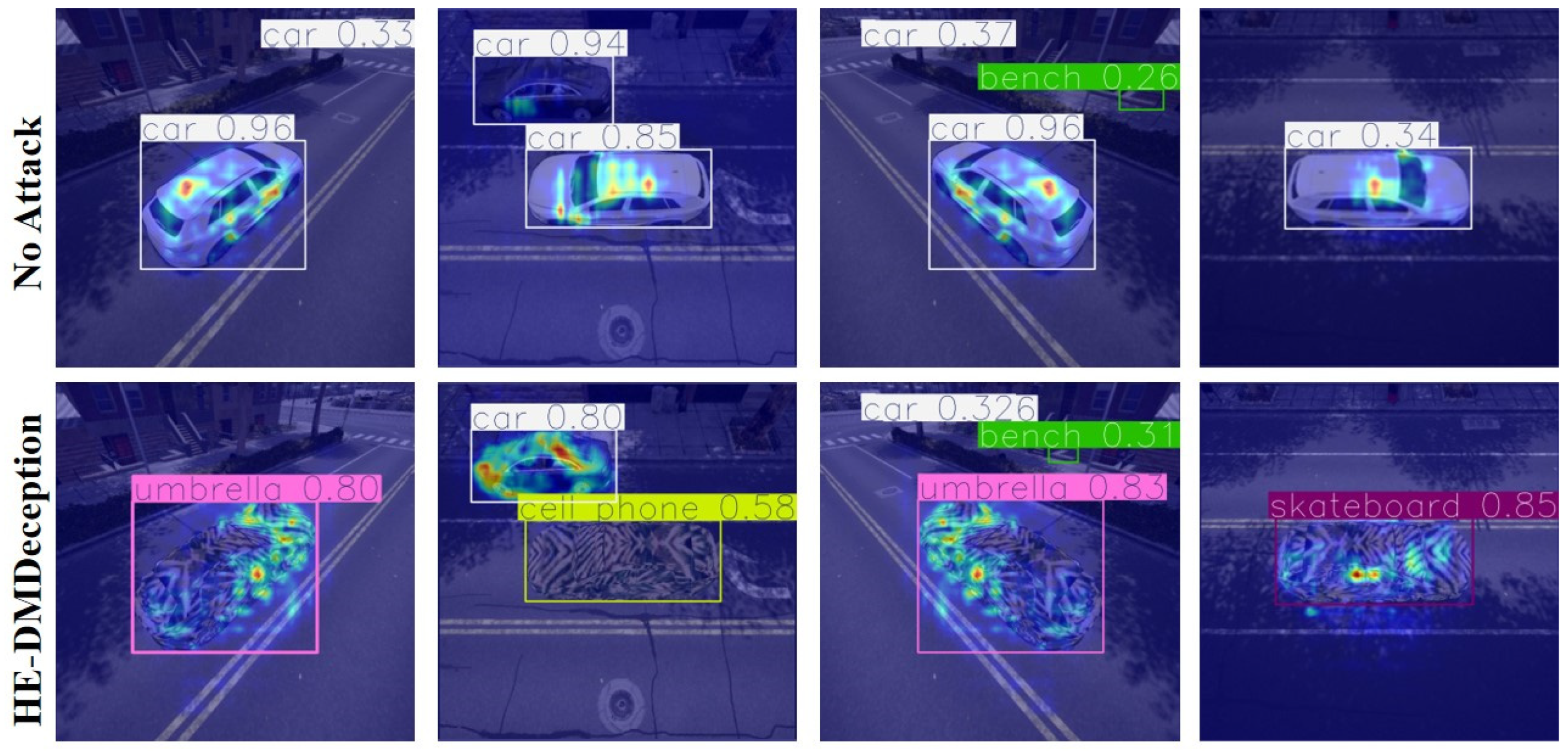

2.2.1. NMS Mechanism Attack

2.2.2. Attention Mechanism Attack

2.2.3. Adversarial Camouflage Texture Constraints

2.2.4. The Overall Loss Function

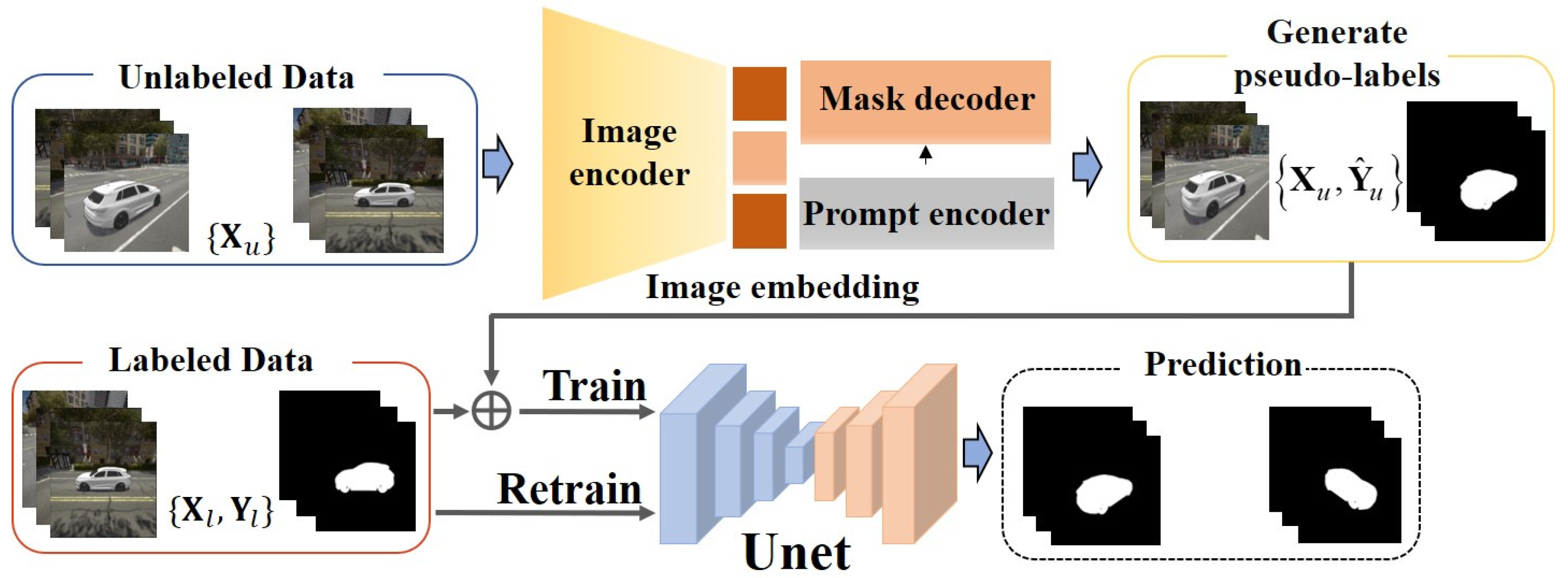

2.3. Image Segmentation Module

3. Experimental Setup

3.1. Datasets

3.2. Evaluation Metrics

4. Analysis and Discussion

5. Conclusions

6. Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, J.; Liu, A.; Yin, Z.; Liu, S.; Tang, S.; Liu, X. Dual Attention Suppression Attack: Generate Adversarial Camouflage in Physical World. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 8561–8570.

2. Zeng, X.; Liu, C.; Wang, Y.S.; Qiu, W.; Xie, L.; Tai, Y.W.; Tang, C.K.; Yuille, A.L. Adversarial Attacks Beyond the Image Space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4302–4311.

3. Xiao, C.; Yang, D.; Li, B.; Deng, J.; Liu, M. MeshAdv: Adversarial Meshes for Visual Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6898–6907.

4. Zhang, Y.; Foroosh, H.; David, P.; Gong, B. CAMOU: Learning Physical Vehicle Camouflages to Adversarially Attack Detectors in the Wild. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–20.

5. Huang, L.; Gao, C.; Zhou, Y.; Xie, C.; Yuille, A.L.; Zou, C.; Liu, N. Universal Physical Camouflage Attacks on Object Detectors. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 717–726.

6. Wang, D.; Jiang, T.; Sun, J.; Zhou, W.; Gong, Z.; Zhang, X.; Yao, W.; Chen, X. FCA: Learning a 3D Full-Coverage Vehicle Camouflage for Multi-View Physical Adversarial Attack. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22–29 February 2022; AAAI Press: Palo Alto, CA, USA, 2022; Volume 36, pp. 2414–2422.

7. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2223–2232.

8. Kato, H.; Ushiku, Y.; Harada, T. Neural 3D Mesh Renderer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 3907–3916.

9. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 4–11 October 2023; pp. 4015–4026.

10. Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. In Proceedings of the 1st Annual Conference on Robot Learning (CoRL), Mountain View, CA, USA, 13–15 November 2017; PMLR: Palo Alto, CA, USA, 2017; pp. 1–16.

11. Wang, Y.; Lv, H.; Kuang, X.; Zhao, G.; Tan, Y.-a.; Zhang, Q.; Hu, J. Towards a Physical-World Adversarial Patch for Blinding Object Detection Models. Inf. Sci. 2021, 556, 459–471.

12. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

13. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

14. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

15. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

16. Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO (Version 8.0.0) [Computer Software]. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 27 August 2025).

17. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–23 June 2024; pp. 16965–16974.

18. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision – ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

19. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

20. He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

21. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

22. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

23. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

24. Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized Gradient-Based Visual Explanations for Deep Convolutional Networks. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

| Category | Methods | Key Idea | Advantages | Disadvantages |
|---|---|---|---|---|
| Patch-based methods | Dual Attention Suppression (DAS) [1] | Generate localized adversarial patches by concentrating noise on target objects. | Easy to implement with strong local attacks. | Limited to local regions; sensitive to occlusion and patch placement; performs poorly in multi-view scenarios. |
| Camouflage-based methods with differentiable renderer | Attacks Beyond the Image Space [2], MeshAdv [3] | Optimize texture of 3D object via differentiable renderer. | Alters visual attributes of entire object; texture optimized for 3D surfaces; effective in single-view attacks. | Computationally intensive; may produce low-quality textures; limited robustness under partial occlusion; effectiveness decreases if camouflaged region is obscured. |
| Camouflage-based methods with non-differentiable renderer | CAMOU [4], Universal Physical Camouflage Attack (UPC) [5] | Apply optimized camouflage to 3D object via non-differentiable renderer. | Enables real-world application; allows multiple refinements on surface. | Hard to optimize globally; limited texture quality; less effective in multi-view scenarios; sensitive to surface geometry. |
| Full-coverage methods | FCA [6] | Combines full-coverage camouflage generation network and deep model deception module. | Generates high-fidelity, visually subtle textures that remain effective across multi-view, partially occluded, and viewpoint-agnostic scenarios, achieving a balance between texture quality and adversarial potency. | Slightly more computationally complex than patch-based methods; requires neural renderer for texture mapping. |

| Loss Term | Purpose/Mechanism | Weight |
|---|---|---|
| NMS Mechanism Attack Loss | This attack disrupts NMS by inflating confidence scores and lowering IoU to prevent proper duplicate filtering, resulting in cluttered false positives. | |
| Attention Mechanism Attack Loss | This attack weakens the model’s feature extraction by dispersing its attention, leading to misclassification and missed detections. | |
| Camouflage Similarity Loss | This constraint ensures the adversarial texture remains stealthy and visually plausible in real-world scenarios by minimizing its distortion from the original pattern. | |
| Classification Loss | This core loss function minimizes the model’s probability score for the correct class, driving the adversarial misclassification. | |
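
As a rough illustration of how the four loss terms listed above might be combined, the sketch below forms a weighted sum in plain Python. The weighted-sum form is an assumption suggested only by the Weight column of the table; the class `LossWeights`, the function `total_loss`, and every numeric value are hypothetical placeholders, since the paper's actual weights and formula are not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossWeights:
    # Hypothetical placeholders: the actual weights are not stated in this text.
    nms: float = 1.0
    attention: float = 1.0
    camouflage: float = 1.0
    classification: float = 1.0


def total_loss(l_nms, l_att, l_cam, l_cls, w=LossWeights()):
    """Weighted sum of the four loss terms (assumed form, not the paper's formula)."""
    return (
        w.nms * l_nms
        + w.attention * l_att
        + w.camouflage * l_cam
        + w.classification * l_cls
    )


# Made-up per-term values, with the camouflage constraint weighted more heavily.
example = total_loss(0.8, 0.5, 0.1, 1.2, LossWeights(camouflage=2.0))
print(round(example, 3))
```

Raising the camouflage weight in such a scheme would favour visual stealth over attack strength, which is the trade-off the camouflage similarity constraint is described as controlling.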

| Method | Inception-V3 accuracy (%) | VGG-19 accuracy (%) | ResNet-152 accuracy (%) | DenseNet accuracy (%) |
|---|---|---|---|---|
| Raw | 58.33 | 40.28 | 41.67 | 46.53 |
| MeshAdv | 40.28 | 34.03 | 38.89 | 36.11 |
| CAMOU | 40.28 | 29.17 | 31.25 | 45.14 |
| UPC | 35.41 | 33.33 | 33.33 | 41.67 |
| DAS | 31.94 | 27.78 | 29.86 | 41.67 |
| HE-DMDeception | 30 | 24.4 | 23 | 32 |
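
The accuracy figures above are easier to compare as drops relative to the Raw row. The short script below is only a reading aid: it copies the table values verbatim and computes the percentage-point drop per classifier. The names `accuracy_drop` and `lowest_per_model` are illustrative helpers, not part of the paper.

```python
models = ["Inception-V3", "VGG-19", "ResNet-152", "DenseNet"]

# Accuracy (%) copied from the table above, in the column order of `models`.
accuracy = {
    "Raw": [58.33, 40.28, 41.67, 46.53],
    "MeshAdv": [40.28, 34.03, 38.89, 36.11],
    "CAMOU": [40.28, 29.17, 31.25, 45.14],
    "UPC": [35.41, 33.33, 33.33, 41.67],
    "DAS": [31.94, 27.78, 29.86, 41.67],
    "HE-DMDeception": [30, 24.4, 23, 32],
}


def accuracy_drop(method):
    """Percentage-point drop relative to the Raw row, per model."""
    return {
        m: round(accuracy["Raw"][i] - accuracy[method][i], 2)
        for i, m in enumerate(models)
    }


def lowest_per_model():
    """Name of the method with the lowest accuracy for each model."""
    return {
        m: min(accuracy, key=lambda name: accuracy[name][i])
        for i, m in enumerate(models)
    }


for method in accuracy:
    if method != "Raw":
        print(method, accuracy_drop(method))
print(lowest_per_model())
```

On these numbers, HE-DMDeception yields the lowest accuracy for all four classifiers, with the largest drop on Inception-V3.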

| Method | YOLOv8 P@0.5 (%) | RT-DETR P@0.5 (%) | Faster-RCNN P@0.5 (%) | SSD P@0.5 (%) | MaskRCNN P@0.5 (%) |
|---|---|---|---|---|---|
| Raw | 100 | 100 | 86.04 | 81.54 | 89.24 |
| MeshAdv | 100 | 100 | 71.84 | 66.44 | 80.84 |
| CAMOU | 99.31 | 98 | 69.64 | 73.81 | 76.44 |
| UPC | 100 | 100 | 76.94 | 74.58 | 81.97 |
| DAS | 92.36 | 91 | 62.11 | 68.81 | 70.21 |
| FCA | 65.28 | 66 | 24.31 | 29.17 | 29.17 |
| HE-DMDeception | 50 | 55 | 20 | 25 | 25 |
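
For a single-number comparison across detectors, the P@0.5 values above can be averaged per method. The script below is a reading aid built only from the table values; the unweighted mean and the helper names `mean_p` and `margin_over_fca` are illustrative choices, not metrics reported in the paper.

```python
detectors = ["YOLOv8", "RT-DETR", "Faster-RCNN", "SSD", "MaskRCNN"]

# P@0.5 (%) copied from the table above, in the column order of `detectors`.
p_at_05 = {
    "Raw": [100, 100, 86.04, 81.54, 89.24],
    "MeshAdv": [100, 100, 71.84, 66.44, 80.84],
    "CAMOU": [99.31, 98, 69.64, 73.81, 76.44],
    "UPC": [100, 100, 76.94, 74.58, 81.97],
    "DAS": [92.36, 91, 62.11, 68.81, 70.21],
    "FCA": [65.28, 66, 24.31, 29.17, 29.17],
    "HE-DMDeception": [50, 55, 20, 25, 25],
}


def mean_p(method):
    """Unweighted mean of P@0.5 over the five detectors."""
    values = p_at_05[method]
    return sum(values) / len(values)


# Methods ordered from strongest attack (lowest mean P@0.5) to weakest.
ranking = sorted(p_at_05, key=mean_p)

# Per-detector gap between FCA and HE-DMDeception, in percentage points.
margin_over_fca = {
    d: round(p_at_05["FCA"][i] - p_at_05["HE-DMDeception"][i], 2)
    for i, d in enumerate(detectors)
}

for method in ranking:
    print(f"{method}: {mean_p(method):.2f}")
print(margin_over_fca)
```

By this measure HE-DMDeception has the lowest mean P@0.5, followed by FCA, and its margin over FCA is widest on YOLOv8 and RT-DETR.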

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, P.; Liu, Y.; Liu, H.; Teng, Y.; Ni, J.; Xiaobo, Z.; Wang, J. HE-DMDeception: Adversarial Attack Network for 3D Object Detection Based on Human Eye and Deep Learning Model Deception. Information 2025, 16, 867. https://doi.org/10.3390/info16100867
