A Survey of EEG-Based Approaches to Classroom Attention Assessment in Education

Abstract

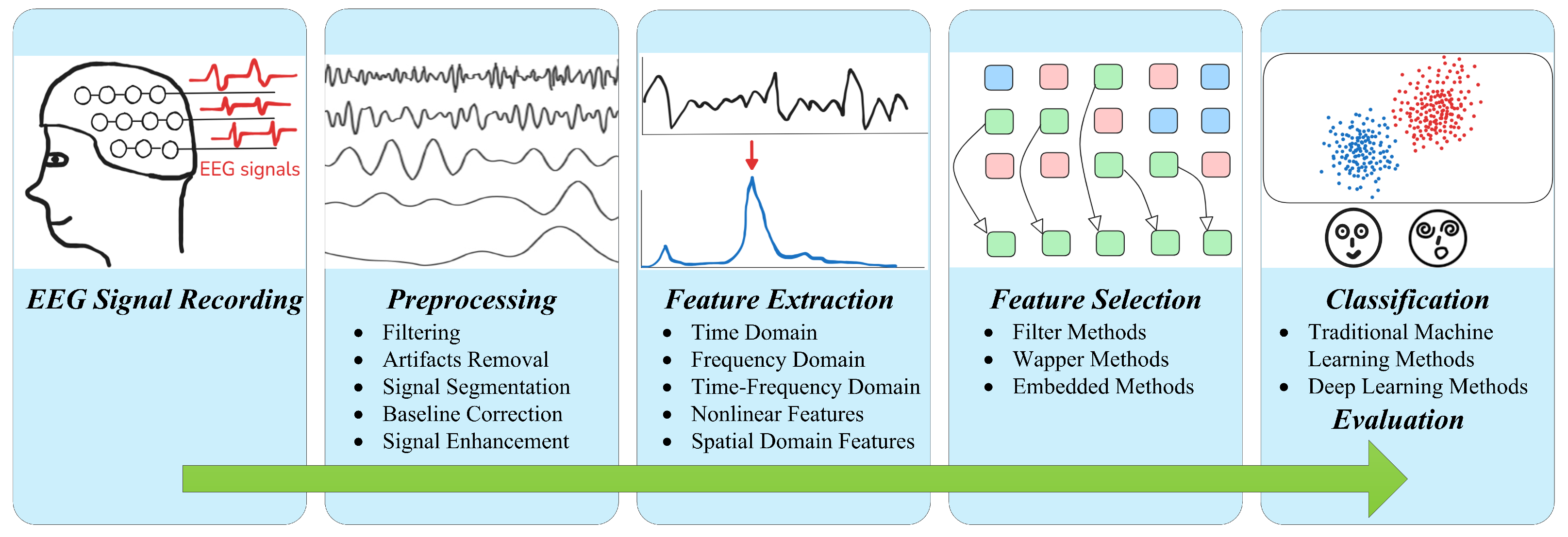

1. Introduction

2. EEG Signal Recording

2.1. EEG Signal

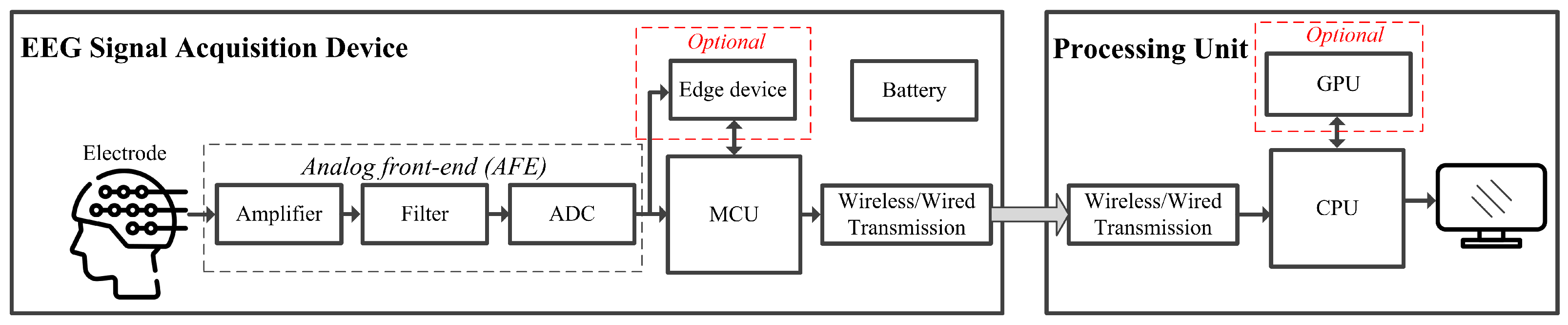

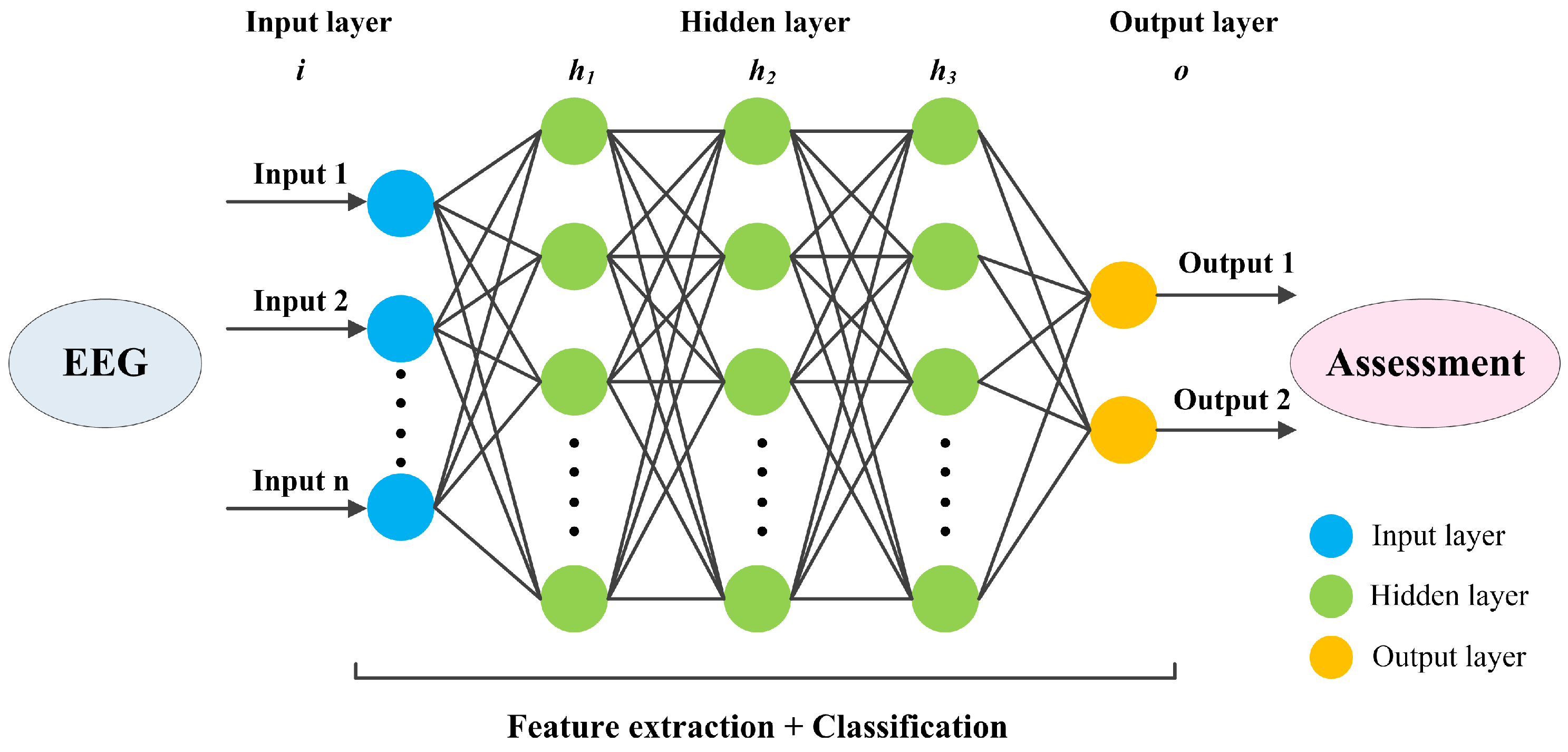

2.2. Hardware Setup for EEG Signal Processing

2.3. EEG Signal Acquisition

3. EEG Signal Preprocessing

3.1. Filtering

3.2. Artifact Removal

3.3. Signal Segmentation and Baseline Correction

3.4. Signal Enhancement

4. EEG Signal Feature Extraction and Selection

4.1. Time-Domain Features

4.2. Frequency-Domain Features

4.3. Time–Frequency-Domain Features

4.4. Nonlinear Features

4.4.1. Fractal Dimension

4.4.2. Entropy

4.5. Spatial-Domain Features

4.6. Comparison of EEG Signal Feature Extraction Methods

4.7. EEG Signal Feature Selection

5. Classification and Evaluation Metrics

5.1. Traditional Machine Learning Methods

5.1.1. SVM Methods

5.1.2. KNN Methods

5.1.3. Random Forest Methods

5.1.4. Comparison of Traditional Machine Learning Methods

5.2. Deep Learning Methods

5.2.1. CNN Methods

5.2.2. RNN Methods

5.2.3. Transformer Methods

5.2.4. Hybrid Model Methods

5.2.5. Comparison of Deep Learning Methods

5.3. Evaluation Metrics

6. Datasets

7. Traditional Methods for Attention Assessment

7.1. Statistical Analysis

7.2. ERP Methods

8. Discussion

8.1. High-Quality EEG Data Acquisition

8.1.1. Electrode Design

8.1.2. Noise and Artifact Removal with Deep Learning Methods

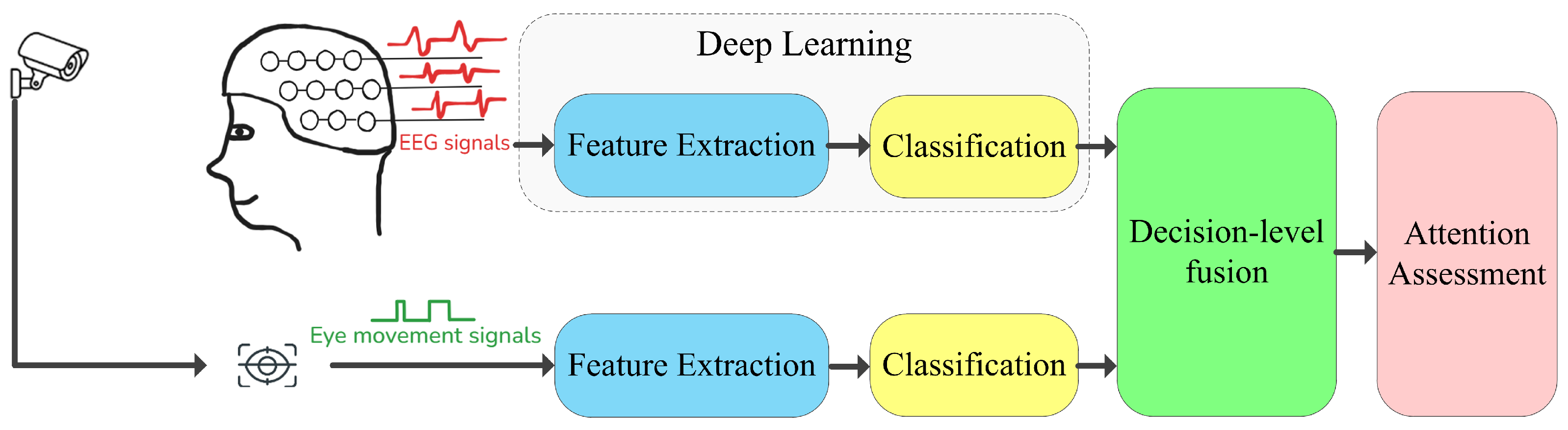

8.2. Multimodal Data Fusion

8.2.1. Methods Combined with Eye Movement

8.2.2. Methods Combined with Facial Expression

8.2.3. Comparison of Multimodal Methods

8.3. Complexity of EEG Data

8.3.1. Complexity of Signal

8.3.2. Complexity of Features

8.3.3. Complexity of Interpretability and Explainability

8.4. Hardware Setups for Deep Learning Method Implementation

8.4.1. System Architecture Design

8.4.2. Real-Time Signal Processing and Low Power Consumption

9. Future Work

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| EEG | Electroencephalogram |
| CPT | Conners’ Continuous Performance Test |
| WCST | Wisconsin Card Sorting Test |
| CNN | Convolutional neural network |
| BCI | Brain–computer interface |
| ADHD | Attention deficit hyperactivity disorder |
| SNR | Signal-to-noise ratio |
| AFE | Analog front-end |
| MCU | Microcontroller unit |
| GPU | Graphics processing unit |
| FPGA | Field-programmable gate array |
| ASIC | Application-specific integrated circuit |
| ADC | Analog-to-digital converter |
| EOG | Electrooculography |
| EMG | Electromyography |
| ECG | Electrocardiography |
| ICA | Independent component analysis |
| WT | Wavelet transform |
| EMD | Empirical mode decomposition |
| IMF | Intrinsic mode function |
| ERP | Event-related potential |
| CCA | Canonical correlation analysis |
| SSVEP | Steady-state visually evoked potential |
| FFT | Fast Fourier transform |
| PSD | Power spectral density |
| TBR | Theta/beta ratio |
| STFT | Short-time Fourier transform |
| HHT | Hilbert–Huang transform |
| WVD | Wigner–Ville distribution |
| WPD | Wavelet packet decomposition |
| FD | Fractal dimension |
| HFD | Higuchi fractal dimension |
| LLE | Largest Lyapunov exponent |
| CD | Correlation dimension |
| KFD | Katz fractal dimension |
| CSP | Common spatial pattern |
| FB-CSP | Filter bank common spatial pattern |
| RSF | Riemannian geometry-based spatial filtering |
| CFS | Correlation-based feature selection |
| RFE | Recursive feature elimination |
| GA | Genetic algorithm |
| LASSO | Least absolute shrinkage and selection operator |
| PCA | Principal component analysis |
| KNN | K-nearest neighbor |
| RNN | Recurrent neural network |
| SVM | Support vector machine |
| LSTM | Long short-term memory |
| GRU | Gated recurrent unit |
| RMSE | Root mean squared error |
| AUC-ROC | Area under the receiver operating characteristic curve |
| PDMS | Polydimethylsiloxane |
| ANOVA | Analysis of variance |
| GAN | Generative adversarial network |
| DWSAE | Deep wavelet sparse autoencoder |
| SHAP | SHapley Additive exPlanations |
| RISC | Reduced instruction set computer |

References

- Carini, R.M.; Kuh, G.D.; Klein, S.P. Student engagement and student learning: Testing the linkages. Res. High. Educ. 2006, 47, 1–32. [Google Scholar] [CrossRef]

- Raca, M.; Kidzinski, L.; Dillenbourg, P. Translating head motion into attention-towards processing of student’s body-language. In Proceedings of the 8th International Conference on Educational Data Mining, Madrid, Spain, 26–29 July 2015. [Google Scholar]

- Blume, F.; Hudak, J.; Dresler, T.; Ehlis, A.C.; Kühnhausen, J.; Renner, T.J.; Gawrilow, C. NIRS-based neurofeedback training in a virtual reality classroom for children with attention-deficit/hyperactivity disorder: Study protocol for a randomized controlled trial. Trials 2017, 18, 41. [Google Scholar] [CrossRef] [PubMed]

- Eom, H.; Kim, K.; Lee, S.; Hong, Y.J.; Heo, J.; Kim, J.J.; Kim, E. Development of virtual reality continuous performance test utilizing social cues for children and adolescents with attention-deficit/hyperactivity disorder. Cyberpsychol. Behav. Soc. Netw. 2019, 22, 198–204. [Google Scholar] [CrossRef] [PubMed]

- Gil-Berrozpe, G.; Sánchez-Torres, A.; Moreno-Izco, L.; Lorente-Omeñaca, R.; Ballesteros, A.; Rosero, Á.; Peralta, V.; Cuesta, M. Empirical validation of the wcst network structure in patients. Eur. Psychiatry 2021, 64, S519. [Google Scholar] [CrossRef]

- Schepers, J.M. The construction and evaluation of an attention questionnaire. SA J. Ind. Psychol. 2007, 33, 16–24. [Google Scholar] [CrossRef]

- Krosnick, J.A. Response strategies for coping with the cognitive demands of attitude measures in surveys. Appl. Cogn. Psychol. 1991, 5, 213–236. [Google Scholar] [CrossRef]

- Larson, R.B. Controlling social desirability bias. Int. J. Mark. Res. 2019, 61, 534–547. [Google Scholar] [CrossRef]

- Chiang, H.S.; Hsiao, K.L.; Liu, L.C. EEG-based detection model for evaluating and improving learning attention. J. Med. Biol. Eng. 2018, 38, 847–856. [Google Scholar] [CrossRef]

- Gupta, S.K.; Ashwin, T.; Guddeti, R.M.R. Students’ affective content analysis in smart classroom environment using deep learning techniques. Multimed. Tools Appl. 2019, 78, 25321–25348. [Google Scholar] [CrossRef]

- Pabba, C.; Kumar, P. An intelligent system for monitoring students’ engagement in large classroom teaching through facial expression recognition. Expert Syst. 2022, 39, e12839. [Google Scholar] [CrossRef]

- Zhu, X.; Ye, S.; Zhao, L.; Dai, Z. Hybrid attention cascade network for facial expression recognition. Sensors 2021, 21, 2003. [Google Scholar] [CrossRef] [PubMed]

- Rosengrant, D.; Hearrington, D.; O’Brien, J. Investigating student sustained attention in a guided inquiry lecture course using an eye tracker. Educ. Psychol. Rev. 2021, 33, 11–26. [Google Scholar] [CrossRef]

- Wang, Y.; Lu, S.; Harter, D. Multi-sensor eye-tracking systems and tools for capturing student attention and understanding engagement in learning: A review. IEEE Sens. J. 2021, 21, 22402–22413. [Google Scholar] [CrossRef]

- Aoki, F.; Fetz, E.; Shupe, L.; Lettich, E.; Ojemann, G. Increased gamma-range activity in human sensorimotor cortex during performance of visuomotor tasks. Clin. Neurophysiol. 1999, 110, 524–537. [Google Scholar] [CrossRef]

- Ni, D.; Wang, S.; Liu, G. The EEG-Based Attention Analysis in Multimedia m-Learning. Comput. Math. Methods Med. 2020, 2020, 4837291. [Google Scholar] [CrossRef]

- Xu, K.; Torgrimson, S.J.; Torres, R.; Lenartowicz, A.; Grammer, J.K. EEG data quality in real-world settings: Examining neural correlates of attention in school-aged children. Mind Brain Educ. 2022, 16, 221–227. [Google Scholar] [CrossRef]

- Al-Nafjan, A.; Aldayel, M. Predict students’ attention in online learning using EEG data. Sustainability 2022, 14, 6553. [Google Scholar] [CrossRef]

- Wang, T.S.; Wang, S.S.; Wang, C.L.; Wong, S.B. Theta/beta ratio in EEG correlated with attentional capacity assessed by Conners Continuous Performance Test in children with ADHD. Front. Psychiatry 2024, 14, 1305397. [Google Scholar] [CrossRef] [PubMed]

- Bazanova, O.M.; Auer, T.; Sapina, E.A. On the efficiency of individualized theta/beta ratio neurofeedback combined with forehead EMG training in ADHD children. Front. Hum. Neurosci. 2018, 12, 3. [Google Scholar] [CrossRef]

- Obaidan, H.B.; Hussain, M.; Almajed, R. EEG_DMNet: A deep multi-scale convolutional neural network for electroencephalography-based driver drowsiness detection. Electronics 2024, 13, 2084. [Google Scholar] [CrossRef]

- Jan, J.E.; Wong, P.K. Behaviour of the alpha rhythm in electroencephalograms of visually impaired children. Dev. Med. Child Neurol. 1988, 30, 444–450. [Google Scholar] [CrossRef]

- Ursuţiu, D.; Samoilă, C.; Drăgulin, S.; Constantin, F.A. Investigation of music and colours influences on the levels of emotion and concentration. In Proceedings of the Online Engineering & Internet of Things: Proceedings of the 14th International Conference on Remote Engineering and Virtual Instrumentation REV 2017, New York, NY, USA, 15–17 March 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 910–918. [Google Scholar]

- Kawala-Sterniuk, A.; Browarska, N.; Al-Bakri, A.; Pelc, M.; Zygarlicki, J.; Sidikova, M.; Martinek, R.; Gorzelanczyk, E.J. Summary of over fifty years with brain-computer interfaces—A review. Brain Sci. 2021, 11, 43. [Google Scholar] [CrossRef]

- Klimesch, W. EEG alpha and theta oscillations reflect cognitive and memory performance: A review and analysis. Brain Res. Rev. 1999, 29, 169–195. [Google Scholar] [CrossRef] [PubMed]

- Turkeš, R.; Mortier, S.; De Winne, J.; Botteldooren, D.; Devos, P.; Latré, S.; Verdonck, T. Who is WithMe? EEG features for attention in a visual task, with auditory and rhythmic support. Front. Neurosci. 2025, 18, 1434444. [Google Scholar] [CrossRef]

- Attar, E.T. Eeg waves studying intensively to recognize the human attention behavior. In Proceedings of the 2023 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 3–4 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5. [Google Scholar]

- Sharma, M.; Kumar, M.; Kushwaha, S.; Kumar, D. Quantitative electroencephalography–A promising biomarker in children with attention deficit/hyperactivity disorder. Arch. Ment. Health 2022, 23, 129–132. [Google Scholar] [CrossRef]

- Newson, J.J.; Thiagarajan, T.C. EEG frequency bands in psychiatric disorders: A review of resting state studies. Front. Hum. Neurosci. 2019, 12, 521. [Google Scholar] [CrossRef]

- Shi, T.; Li, X.; Song, J.; Zhao, N.; Sun, C.; Xia, W.; Wu, L.; Tomoda, A. EEG characteristics and visual cognitive function of children with attention deficit hyperactivity disorder (ADHD). Brain Dev. 2012, 34, 806–811. [Google Scholar] [CrossRef] [PubMed]

- Samal, P.; Hashmi, M.F. Role of machine learning and deep learning techniques in EEG-based BCI emotion recognition system: A review. Artif. Intell. Rev. 2024, 57, 50. [Google Scholar] [CrossRef]

- Magazzini, L.; Singh, K.D. Spatial attention modulates visual gamma oscillations across the human ventral stream. Neuroimage 2018, 166, 219–229. [Google Scholar] [CrossRef]

- Rashid, U.; Niazi, I.K.; Signal, N.; Taylor, D. An EEG experimental study evaluating the performance of Texas instruments ADS1299. Sensors 2018, 18, 3721. [Google Scholar] [CrossRef]

- Li, G.; Chung, W.Y. A context-aware EEG headset system for early detection of driver drowsiness. Sensors 2015, 15, 20873–20893. [Google Scholar] [CrossRef] [PubMed]

- Liu, H.; Zhu, Z.; Wang, Z.; Zhao, X.; Xu, T.; Zhou, T.; Wu, C.; Pignaton De Freitas, E.; Hu, H. Design and implementation of a scalable and high-throughput EEG acquisition and analysis system. Moore More 2024, 1, 14. [Google Scholar] [CrossRef]

- Aslam, A.R.; Altaf, M.A.B. An on-chip processor for chronic neurological disorders assistance using negative affectivity classification. IEEE Trans. Biomed. Circuits Syst. 2020, 14, 838–851. [Google Scholar] [CrossRef]

- Zanetti, R.; Arza, A.; Aminifar, A.; Atienza, D. Real-time EEG-based cognitive workload monitoring on wearable devices. IEEE Trans. Biomed. Eng. 2021, 69, 265–277. [Google Scholar] [CrossRef]

- Gonzalez, H.A.; George, R.; Muzaffar, S.; Acevedo, J.; Hoeppner, S.; Mayr, C.; Yoo, J.; Fitzek, F.H.; Elfadel, I.M. Hardware acceleration of EEG-based emotion classification systems: A comprehensive survey. IEEE Trans. Biomed. Circuits Syst. 2021, 15, 412–442. [Google Scholar] [CrossRef]

- Li, P.; Cai, S.; Su, E.; Xie, L. A biologically inspired attention network for EEG-based auditory attention detection. IEEE Signal Process. Lett. 2021, 29, 284–288. [Google Scholar] [CrossRef]

- Boyle, N.B.; Dye, L.; Lawton, C.L.; Billington, J. A combination of green tea, rhodiola, magnesium, and B vitamins increases electroencephalogram theta activity during attentional task performance under conditions of induced social stress. Front. Nutr. 2022, 9, 935001. [Google Scholar] [CrossRef]

- Cowley, B.U.; Juurmaa, K.; Palomäki, J. Reduced power in fronto-parietal theta EEG linked to impaired attention-sampling in adult ADHD. Eneuro 2022, 9. [Google Scholar] [CrossRef] [PubMed]

- Das, N.; Bertrand, A.; Francart, T. EEG-based auditory attention detection: Boundary conditions for background noise and speaker positions. J. Neural Eng. 2018, 15, 066017. [Google Scholar] [CrossRef]

- Cai, S.; Zhu, H.; Schultz, T.; Li, H. EEG-based auditory attention detection in cocktail party environment. APSIPA Trans. Signal Inf. Process. 2023, 12, e22. [Google Scholar] [CrossRef]

- Putze, F.; Eilts, H. Analyzing the importance of EEG channels for internal and external attention detection. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Honolulu, HI, USA, 1–4 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 4752–4757. [Google Scholar]

- Trajkovic, J.; Veniero, D.; Hanslmayr, S.; Palva, S.; Cruz, G.; Romei, V.; Thut, G. Top-down and bottom-up interactions rely on nested brain oscillations to shape rhythmic visual attention sampling. PLoS Biol. 2025, 23, e3002688. [Google Scholar] [CrossRef] [PubMed]

- Li, R.; Zhang, Y.; Fan, G.; Li, Z.; Li, J.; Fan, S.; Lou, C.; Liu, X. Design and implementation of high sampling rate and multichannel wireless recorder for EEG monitoring and SSVEP response detection. Front. Neurosci. 2023, 17, 1193950. [Google Scholar] [CrossRef]

- Lei, J.; Li, X.; Chen, W.; Wang, A.; Han, Q.; Bai, S.; Zhang, M. Design of a compact wireless EEG recorder with extra-high sampling rate and precise time synchronization for auditory brainstem response. IEEE Sens. J. 2021, 22, 4484–4493. [Google Scholar] [CrossRef]

- Valentin, O.; Ducharme, M.; Crétot-Richert, G.; Monsarrat-Chanon, H.; Viallet, G.; Delnavaz, A.; Voix, J. Validation and benchmarking of a wearable EEG acquisition platform for real-world applications. IEEE Trans. Biomed. Circuits Syst. 2018, 13, 103–111. [Google Scholar] [CrossRef]

- Lin, C.T.; Wang, Y.; Chen, S.F.; Huang, K.C.; Liao, L.D. Design and verification of a wearable wireless 64-channel high-resolution EEG acquisition system with wi-fi transmission. Med. Biol. Eng. Comput. 2023, 61, 3003–3019. [Google Scholar] [CrossRef]

- Zhou, S.; Zhang, W.; Liu, Y.; Chen, X.; Liu, H. Real-Time Driver Attention Detection in Complex Driving Environments via Binocular Depth Compensation and Multi-Source Temporal Bidirectional Long Short-Term Memory Network. Sensors 2025, 25, 5548. [Google Scholar] [CrossRef]

- Pichandi, S.; Balasubramanian, G.; Chakrapani, V. Hybrid deep models for parallel feature extraction and enhanced emotion state classification. Sci. Rep. 2024, 14, 24957. [Google Scholar] [CrossRef]

- Yuan, S.; Yan, K.; Wang, S.; Liu, J.X.; Wang, J. EEG-Based Seizure Prediction Using Hybrid DenseNet–ViT Network with Attention Fusion. Brain Sci. 2024, 14, 839. [Google Scholar] [CrossRef] [PubMed]

- Alemaw, A.S.; Slavic, G.; Zontone, P.; Marcenaro, L.; Gomez, D.M.; Regazzoni, C. Modeling interactions between autonomous agents in a multi-agent self-awareness architecture. IEEE Trans. Multimed. 2025, 27, 5035–5049. [Google Scholar] [CrossRef]

- Kim, S.K.; Kim, J.B.; Kim, H.; Kim, L.; Kim, S.H. Early Diagnosis of Alzheimer’s Disease in Human Participants Using EEGConformer and Attention-Based LSTM During the Short Question Task. Diagnostics 2025, 15, 448. [Google Scholar] [CrossRef]

- Jin, J.; Chen, Z.; Cai, H.; Pan, J. Affective eeg-based person identification with continual learning. IEEE Trans. Instrum. Meas. 2024, 73, 4007716. [Google Scholar] [CrossRef]

- Liu, H.W.; Wang, S.; Tong, S.X. DysDiTect: Dyslexia Identification Using CNN-Positional-LSTM-Attention Modeling with Chinese Dictation Task. Brain Sci. 2024, 14, 444. [Google Scholar] [CrossRef]

- Li, M.; Yu, P.; Shen, Y. A spatial and temporal transformer-based EEG emotion recognition in VR environment. Front. Hum. Neurosci. 2025, 19, 1517273. [Google Scholar] [CrossRef] [PubMed]

- Kwon, Y.H.; Shin, S.B.; Kim, S.D. Electroencephalography based fusion two-dimensional (2D)-convolution neural networks (CNN) model for emotion recognition system. Sensors 2018, 18, 1383. [Google Scholar] [CrossRef] [PubMed]

- Chauhan, N.; Choi, B.J. Regional contribution in electrophysiological-based classifications of attention deficit hyperactive disorder (ADHD) using machine learning. Computation 2023, 11, 180. [Google Scholar] [CrossRef]

- Djamal, E.C.; Ramadhan, R.I.; Mandasari, M.I.; Djajasasmita, D. Identification of post-stroke EEG signal using wavelet and convolutional neural networks. Bull. Electr. Eng. Inform. 2020, 9, 1890–1898. [Google Scholar] [CrossRef]

- Ieracitano, C.; Mammone, N.; Hussain, A.; Morabito, F.C. A novel explainable machine learning approach for EEG-based brain-computer interface systems. Neural Comput. Appl. 2022, 34, 11347–11360. [Google Scholar] [CrossRef]

- Homan, R.W. The 10–20 electrode system and cerebral location. Am. J. EEG Technol. 1988, 28, 269–279. [Google Scholar] [CrossRef]

- Koessler, L.; Maillard, L.; Benhadid, A.; Vignal, J.P.; Felblinger, J.; Vespignani, H.; Braun, M. Automated cortical projection of EEG sensors: Anatomical correlation via the international 10–10 system. Neuroimage 2009, 46, 64–72. [Google Scholar] [CrossRef]

- Meng, Y.; Liu, Y.; Wang, G.; Song, H.; Zhang, Y.; Lu, J.; Li, P.; Ma, X. M-NIG: Mobile network information gain for EEG-based epileptic seizure prediction. Sci. Rep. 2025, 15, 15181. [Google Scholar] [CrossRef]

- Paul, A.; Hota, G.; Khaleghi, B.; Xu, Y.; Rosing, T.; Cauwenberghs, G. Attention state classification with in-ear EEG. In Proceedings of the 2021 IEEE Biomedical Circuits and Systems Conference (BioCAS), Berlin, Germany, 7–9 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5. [Google Scholar]

- Holtze, B.; Rosenkranz, M.; Jaeger, M.; Debener, S.; Mirkovic, B. Ear-EEG measures of auditory attention to continuous speech. Front. Neurosci. 2022, 16, 869426. [Google Scholar] [CrossRef] [PubMed]

- Munteanu, D.; Munteanu, N. Comparison Between Assisted Training and Classical Training in Nonformal Learning Based on Automatic Attention Measurement Using a Neurofeedback Device. eLearn. Softw. Educ. 2019, 1, 302. [Google Scholar]

- Rivas, F.; Sierra-Garcia, J.E.; Camara, J.M. Comparison of LSTM-and GRU-Type RNN networks for attention and meditation prediction on raw EEG data from low-cost headsets. Electronics 2025, 14, 707. [Google Scholar] [CrossRef]

- Klimesch, W.; Doppelmayr, M.; Russegger, H.; Pachinger, T.; Schwaiger, J. Induced alpha band power changes in the human EEG and attention. Neurosci. Lett. 1998, 244, 73–76. [Google Scholar] [CrossRef]

- Cai, S.; Zhang, R.; Zhang, M.; Wu, J.; Li, H. EEG-based auditory attention detection with spiking graph convolutional network. IEEE Trans. Cogn. Dev. Syst. 2024, 16, 1698–1706. [Google Scholar] [CrossRef]

- Cai, S.; Su, E.; Xie, L.; Li, H. EEG-based auditory attention detection via frequency and channel neural attention. IEEE Trans. Hum.-Mach. Syst. 2021, 52, 256–266. [Google Scholar] [CrossRef]

- Liu, N.H.; Chiang, C.Y.; Chu, H.C. Recognizing the degree of human attention using EEG signals from mobile sensors. Sensors 2013, 13, 10273–10286. [Google Scholar] [CrossRef]

- Ko, L.W.; Komarov, O.; Hairston, W.D.; Jung, T.P.; Lin, C.T. Sustained attention in real classroom settings: An EEG study. Front. Hum. Neurosci. 2017, 11, 388. [Google Scholar] [CrossRef]

- Ciccarelli, G.; Nolan, M.; Perricone, J.; Calamia, P.T.; Haro, S.; O’sullivan, J.; Mesgarani, N.; Quatieri, T.F.; Smalt, C.J. Comparison of two-talker attention decoding from EEG with nonlinear neural networks and linear methods. Sci. Rep. 2019, 9, 11538. [Google Scholar] [CrossRef]

- Nogueira, W.; Cosatti, G.; Schierholz, I.; Egger, M.; Mirkovic, B.; Büchner, A. Toward decoding selective attention from single-trial EEG data in cochlear implant users. IEEE Trans. Biomed. Eng. 2019, 67, 38–49. [Google Scholar] [CrossRef]

- Sinha, S.R.; Sullivan, L.R.; Sabau, D.; Orta, D.S.J.; Dombrowski, K.E.; Halford, J.J.; Hani, A.J.; Drislane, F.W.; Stecker, M.M. American clinical neurophysiology society guideline 1: Minimum technical requirements for performing clinical electroencephalography. Neurodiagn. J. 2016, 56, 235–244. [Google Scholar] [CrossRef]

- Choi, Y.; Kim, M.; Chun, C. Effect of temperature on attention ability based on electroencephalogram measurements. Build. Environ. 2019, 147, 299–304. [Google Scholar] [CrossRef]

- Bleichner, M.G.; Mirkovic, B.; Debener, S. Identifying auditory attention with ear-EEG: CEEGrid versus high-density cap-EEG comparison. J. Neural Eng. 2016, 13, 066004. [Google Scholar] [CrossRef]

- Wang, J.; Wang, W.; Hou, Z.G. Toward improving engagement in neural rehabilitation: Attention enhancement based on brain–computer interface and audiovisual feedback. IEEE Trans. Cogn. Dev. Syst. 2019, 12, 787–796. [Google Scholar] [CrossRef]

- Minguillon, J.; Lopez-Gordo, M.A.; Pelayo, F. Trends in EEG-BCI for daily-life: Requirements for artifact removal. Biomed. Signal Process. Control 2017, 31, 407–418. [Google Scholar] [CrossRef]

- Radüntz, T.; Scouten, J.; Hochmuth, O.; Meffert, B. Automated EEG artifact elimination by applying machine learning algorithms to ICA-based features. J. Neural Eng. 2017, 14, 046004. [Google Scholar] [CrossRef] [PubMed]

- Noorbasha, S.K.; Sudha, G.F. Removal of EOG artifacts and separation of different cerebral activity components from single channel EEG—An efficient approach combining SSA–ICA with wavelet thresholding for BCI applications. Biomed. Signal Process. Control 2021, 63, 102168. [Google Scholar] [CrossRef]

- Phadikar, S.; Sinha, N.; Ghosh, R. Automatic eyeblink artifact removal from EEG signal using wavelet transform with heuristically optimized threshold. IEEE J. Biomed. Health Inform. 2020, 25, 475–484. [Google Scholar] [CrossRef]

- Nayak, A.B.; Shah, A.; Maheshwari, S.; Anand, V.; Chakraborty, S.; Kumar, T.S. An empirical wavelet transform-based approach for motion artifact removal in electroencephalogram signals. Decis. Anal. J. 2024, 10, 100420. [Google Scholar] [CrossRef]

- Maddirala, A.K.; Veluvolu, K.C. ICA with CWT and k-means for eye-blink artifact removal from fewer channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 1361–1373. [Google Scholar] [CrossRef]

- Patel, R.; Janawadkar, M.P.; Sengottuvel, S.; Gireesan, K.; Radhakrishnan, T.S. Suppression of eye-blink associated artifact using single channel EEG data by combining cross-correlation with empirical mode decomposition. IEEE Sens. J. 2016, 16, 6947–6954. [Google Scholar] [CrossRef]

- Wang, G.; Teng, C.; Li, K.; Zhang, Z.; Yan, X. The removal of EOG artifacts from EEG signals using independent component analysis and multivariate empirical mode decomposition. IEEE J. Biomed. Health Inform. 2015, 20, 1301–1308. [Google Scholar] [CrossRef]

- Fathima, S.; Ahmed, M. Hierarchical-variational mode decomposition for baseline correction in electroencephalogram signals. IEEE Open J. Instrum. Meas. 2023, 2, 4000208. [Google Scholar] [CrossRef]

- Lo, P.C.; Leu, J.S. Adaptive baseline correction of meditation EEG. Am. J. Electroneurodiagn. Technol. 2001, 41, 142–155. [Google Scholar] [CrossRef]

- Kessler, R.; Enge, A.; Skeide, M.A. How EEG preprocessing shapes decoding performance. Commun. Biol. 2025, 8, 1039. [Google Scholar] [CrossRef] [PubMed]

- Xu, N.; Gao, X.; Hong, B.; Miao, X.; Gao, S.; Yang, F. BCI competition 2003-data set IIb: Enhancing P300 wave detection using ICA-based subspace projections for BCI applications. IEEE Trans. Biomed. Eng. 2004, 51, 1067–1072. [Google Scholar] [CrossRef]

- Maddirala, A.K.; Shaik, R.A. Separation of sources from single-channel EEG signals using independent component analysis. IEEE Trans. Instrum. Meas. 2017, 67, 382–393. [Google Scholar] [CrossRef]

- Lin, C.T.; Huang, C.S.; Yang, W.Y.; Singh, A.K.; Chuang, C.H.; Wang, Y.K. Real-Time EEG Signal Enhancement Using Canonical Correlation Analysis and Gaussian Mixture Clustering. J. Healthc. Eng. 2018, 2018, 5081258. [Google Scholar] [CrossRef]

- Kalunga, E.; Djouani, K.; Hamam, Y.; Chevallier, S.; Monacelli, E. SSVEP enhancement based on Canonical Correlation Analysis to improve BCI performances. In Proceedings of the 2013 Africon, Pointe aux Piments, Mauritius, 9–12 September 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–5. [Google Scholar]

- Cai, S.; Li, P.; Su, E.; Xie, L. Auditory attention detection via cross-modal attention. Front. Neurosci. 2021, 15, 652058. [Google Scholar] [CrossRef]

- Khanmohammadi, S.; Chou, C.A. Adaptive seizure onset detection framework using a hybrid PCA–CSP approach. IEEE J. Biomed. Health Inform. 2017, 22, 154–160. [Google Scholar] [CrossRef]

- Molla, M.K.I.; Tanaka, T.; Osa, T.; Islam, M.R. EEG signal enhancement using multivariate wavelet transform application to single-trial classification of event-related potentials. In Proceedings of the 2015 IEEE International Conference on Digital Signal Processing (DSP), Singapore, 21–24 July 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 804–808. [Google Scholar]

- Sita, G.; Ramakrishnan, A. Wavelet domain nonlinear filtering for evoked potential signal enhancement. Comput. Biomed. Res. 2000, 33, 431–446. [Google Scholar] [CrossRef]

- Maki, H.; Toda, T.; Sakti, S.; Neubig, G.; Nakamura, S. EEG signal enhancement using multi-channel Wiener filter with a spatial correlation prior. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 2639–2643. [Google Scholar]

- Yadav, S.; Saha, S.K.; Kar, R. An application of the Kalman filter for EEG/ERP signal enhancement with the autoregressive realisation. Biomed. Signal Process. Control 2023, 86, 105213. [Google Scholar] [CrossRef]

- Singh, A.K.; Krishnan, S. Trends in EEG signal feature extraction applications. Front. Artif. Intell. 2023, 5, 1072801. [Google Scholar] [CrossRef]

- Dallmer-Zerbe, I.; Popp, F.; Lam, A.P.; Philipsen, A.; Herrmann, C.S. Transcranial alternating current stimulation (tACS) as a tool to modulate P300 amplitude in attention deficit hyperactivity disorder (ADHD): Preliminary findings. Brain Topogr. 2020, 33, 191–207. [Google Scholar] [CrossRef]

- Zhang, G.; Luck, S.J. Variations in ERP data quality across paradigms, participants, and scoring procedures. Psychophysiology 2023, 60, e14264. [Google Scholar] [CrossRef]

- Mehmood, R.M.; Bilal, M.; Vimal, S.; Lee, S.W. EEG-based affective state recognition from human brain signals by using Hjorth-activity. Measurement 2022, 202, 111738. [Google Scholar] [CrossRef]

- Raj, V.; Hazarika, J.; Hazra, R. Feature selection for attention demanding task induced EEG detection. In Proceedings of the 2020 IEEE Applied Signal Processing Conference (ASPCON), Kolkata, India, 7–9 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 11–15. [Google Scholar]

- Harmony, T. The functional significance of delta oscillations in cognitive processing. Front. Integr. Neurosci. 2013, 7, 83. [Google Scholar] [CrossRef] [PubMed]

- Matsuo, M.; Higuchi, T.; Ichibakase, T.; Suyama, H.; Takahara, R.; Nakamura, M. Differences in Electroencephalography Power Levels between Poor and Good Performance in Attentional Tasks. Brain Sci. 2024, 14, 527. [Google Scholar] [CrossRef] [PubMed]

- Wang, C.; Wang, X.; Zhu, M.; Pi, Y.; Wang, X.; Wan, F.; Chen, S.; Li, G. Spectrum power and brain functional connectivity of different EEG frequency bands in attention network tests. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Virtual, 1–5 November 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 224–227. [Google Scholar]

- Sharma, A.; Singh, M. Assessing alpha activity in attention and relaxed state: An EEG analysis. In Proceedings of the 2015 1st International Conference on Next Generation Computing Technologies (NGCT), Dehradun, India, 4–5 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 508–513. [Google Scholar]

- Yang, X.; Fiebelkorn, I.C.; Jensen, O.; Knight, R.T.; Kastner, S. Differential neural mechanisms underlie cortical gating of visual spatial attention mediated by alpha-band oscillations. Proc. Natl. Acad. Sci. USA 2024, 121, e2313304121. [Google Scholar] [CrossRef] [PubMed]

- Kahlbrock, N.; Butz, M.; May, E.S.; Brenner, M.; Kircheis, G.; Häussinger, D.; Schnitzler, A. Lowered frequency and impaired modulation of gamma band oscillations in a bimodal attention task are associated with reduced critical flicker frequency. Neuroimage 2012, 61, 216–227. [Google Scholar] [CrossRef]

- Van Son, D.; De Blasio, F.M.; Fogarty, J.S.; Angelidis, A.; Barry, R.J.; Putman, P. Frontal EEG theta/beta ratio during mind wandering episodes. Biol. Psychol. 2019, 140, 19–27. [Google Scholar] [CrossRef]

- Deshmukh, M.; Khemchandani, M.; Mhatre, M. Impact of brain regions on attention deficit hyperactivity disorder (ADHD) electroencephalogram (EEG) signals: Comparison of machine learning algorithms with empirical mode decomposition and time domain analysis. Appl. Neuropsychol. Child 2025, 1–17.

- Sharma, Y.; Singh, B.K. Classification of children with attention-deficit hyperactivity disorder using Wigner-Ville time-frequency and deep expEEGNetwork feature-based computational models. IEEE Trans. Med. Robot. Bionics 2023, 5, 890–902.

- Xu, X.; Nie, X.; Zhang, J.; Xu, T. Multi-level attention recognition of EEG based on feature selection. Int. J. Environ. Res. Public Health 2023, 20, 3487.

- Ke, Y.; Chen, L.; Fu, L.; Jia, Y.; Li, P.; Zhao, X.; Qi, H.; Zhou, P.; Zhang, L.; Wan, B.; et al. Visual attention recognition based on nonlinear dynamical parameters of EEG. Bio-Med. Mater. Eng. 2014, 24, 349–355.

- Wang, Y.; Zhou, W.; Yuan, Q.; Li, X.; Meng, Q.; Zhao, X.; Wang, J. Comparison of ictal and interictal EEG signals using fractal features. Int. J. Neural Syst. 2013, 23, 1350028.

- Lee, M.W.; Yang, N.J.; Mok, H.K.; Yang, R.C.; Chiu, Y.H.; Lin, L.C. Music and movement therapy improves quality of life and attention and associated electroencephalogram changes in patients with attention-deficit/hyperactivity disorder. Pediatr. Neonatol. 2024, 65, 581–587.

- Cura, O.K.; Akan, A.; Atli, S.K. Detection of Attention Deficit Hyperactivity Disorder based on EEG feature maps and deep learning. Biocybern. Biomed. Eng. 2024, 44, 450–460.

- Lu, Y.; Wang, M.; Zhang, Q.; Han, Y. Identification of auditory object-specific attention from single-trial electroencephalogram signals via entropy measures and machine learning. Entropy 2018, 20, 386.

- Canyurt, C.; Zengin, R. Epileptic activity detection using mean value, RMS, sample entropy, and permutation entropy methods. J. Cogn. Syst. 2023, 8, 16–27.

- Angulo-Ruiz, B.Y.; Munoz, V.; Rodríguez-Martínez, E.I.; Cabello-Navarro, C.; Gomez, C.M. Multiscale entropy of ADHD children during resting state condition. Cogn. Neurodyn. 2023, 17, 869–891.

- Geirnaert, S.; Francart, T.; Bertrand, A. Fast EEG-based decoding of the directional focus of auditory attention using common spatial patterns. IEEE Trans. Biomed. Eng. 2020, 68, 1557–1568.

- Cai, S.; Su, E.; Song, Y.; Xie, L.; Li, H. Low Latency Auditory Attention Detection with Common Spatial Pattern Analysis of EEG Signals. In Proceedings of the INTERSPEECH, Shanghai, China, 25–29 October 2020; pp. 2772–2776.

- Niu, Y.; Chen, N.; Zhu, H.; Jin, J.; Li, G. Music-oriented auditory attention detection from electroencephalogram. Neurosci. Lett. 2024, 818, 137534.

- Wang, Y.; He, H. Electroencephalogram emotion recognition based on manifold geomorphological features in Riemannian space. IEEE Intell. Syst. 2024, 39, 23–36.

- Xu, G.; Wang, Z.; Zhao, X.; Li, R.; Zhou, T.; Xu, T.; Hu, H. Attentional state classification using amplitude and phase feature extraction method based on filter bank and Riemannian manifold. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 4402–4412.

- Anuragi, A.; Sisodia, D.S.; Pachori, R.B. Mitigating the curse of dimensionality using feature projection techniques on electroencephalography datasets: An empirical review. Artif. Intell. Rev. 2024, 57, 75.

- Li, Y.; Li, T.; Liu, H. Recent advances in feature selection and its applications. Knowl. Inf. Syst. 2017, 53, 551–577.

- Hu, B.; Li, X.; Sun, S.; Ratcliffe, M. Attention recognition in EEG-based affective learning research using CFS+KNN algorithm. IEEE/ACM Trans. Comput. Biol. Bioinform. 2016, 15, 38–45.

- Liang, Z.; Wang, X.; Zhao, J.; Li, X. Comparative study of attention-related features on attention monitoring systems with a single EEG channel. J. Neurosci. Methods 2022, 382, 109711.

- Kaongoen, N.; Choi, J.; Jo, S. Speech-imagery-based brain–computer interface system using ear-EEG. J. Neural Eng. 2021, 18, 016023.

- Dias, N.S.; Kamrunnahar, M.; Mendes, P.M.; Schiff, S.J.; Correia, J.H. Feature selection on movement imagery discrimination and attention detection. Med. Biol. Eng. Comput. 2010, 48, 331–341.

- McCann, M.T.; Thompson, D.E.; Syed, Z.H.; Huggins, J.E. Electrode subset selection methods for an EEG-based P300 brain-computer interface. Disabil. Rehabil. Assist. Technol. 2015, 10, 216–220.

- Huang, Y.; Zhang, Z.; Yang, Y.; Mo, P.C.; Zhang, Z.; He, J.; Hu, S.; Wang, X.; Li, Y. Exploring Skin Potential Signals in Electrodermal Activity: Identifying Key Features for Attention State Differentiation. IEEE Access 2024, 12, 100832–100847.

- Alirezaei, M.; Sardouie, S.H. Detection of human attention using EEG signals. In Proceedings of the 2017 24th National and 2nd International Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 30 November–1 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–5.

- Chen, C.M.; Wang, J.Y.; Yu, C.M. Assessing the attention levels of students by using a novel attention aware system based on brainwave signals. Br. J. Educ. Technol. 2017, 48, 348–369.

- Zheng, W.; Chen, S.; Fu, Z.; Zhu, F.; Yan, H.; Yang, J. Feature selection boosted by unselected features. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 4562–4574.

- Alickovic, E.; Lunner, T.; Gustafsson, F.; Ljung, L. A tutorial on auditory attention identification methods. Front. Neurosci. 2019, 13, 153.

- Moon, J.; Kwon, Y.; Park, J.; Yoon, W.C. Detecting user attention to video segments using interval EEG features. Expert Syst. Appl. 2019, 115, 578–592.

- Maniruzzaman, M.; Hasan, M.A.M.; Asai, N.; Shin, J. Optimal channels and features selection based ADHD detection from EEG signal using statistical and machine learning techniques. IEEE Access 2023, 11, 33570–33583.

- Huang, Z.; Cheng, L.; Liu, Y. Key feature extraction method of electroencephalogram signal by independent component analysis for athlete selection and training. Comput. Intell. Neurosci. 2022, 2022, 6752067.

- Zhou, S.; Gao, T. Brain activity recognition method based on attention-based RNN mode. Appl. Sci. 2021, 11, 10425.

- Jin, C.Y.; Borst, J.P.; Van Vugt, M.K. Predicting task-general mind-wandering with EEG. Cogn. Affect. Behav. Neurosci. 2019, 19, 1059–1073.

- Peng, C.J.; Chen, Y.C.; Chen, C.C.; Chen, S.J.; Cagneau, B.; Chassagne, L. An EEG-based attentiveness recognition system using Hilbert–Huang transform and support vector machine. J. Med. Biol. Eng. 2020, 40, 230–238.

- Chen, X.; Bao, X.; Jitian, K.; Li, R.; Zhu, L.; Kong, W. Hybrid EEG Feature Learning Method for Cross-Session Human Mental Attention State Classification. Brain Sci. 2025, 15, 805.

- Sahu, P.K.; Jain, K. Sustained attention detection in humans using a prefrontal theta-EEG rhythm. Cogn. Neurodyn. 2024, 18, 2675–2687.

- Esqueda-Elizondo, J.J.; Juárez-Ramírez, R.; López-Bonilla, O.R.; García-Guerrero, E.E.; Galindo-Aldana, G.M.; Jiménez-Beristáin, L.; Serrano-Trujillo, A.; Tlelo-Cuautle, E.; Inzunza-González, E. Attention measurement of an autism spectrum disorder user using EEG signals: A case study. Math. Comput. Appl. 2022, 27, 21.

- de Brito Guerra, T.C.; Nóbrega, T.; Morya, E.; de M. Martins, A.; de Sousa, V.A., Jr. Electroencephalography signal analysis for human activities classification: A solution based on machine learning and motor imagery. Sensors 2023, 23, 4277.

- Demidova, L.; Klyueva, I.; Pylkin, A. Hybrid approach to improving the results of the SVM classification using the random forest algorithm. Procedia Comput. Sci. 2019, 150, 455–461.

- Tibrewal, N.; Leeuwis, N.; Alimardani, M. The promise of deep learning for BCIs: Classification of motor imagery EEG using convolutional neural network. bioRxiv 2021.

- Craik, A.; He, Y.; Contreras-Vidal, J.L. Deep learning for electroencephalogram (EEG) classification tasks: A review. J. Neural Eng. 2019, 16, 031001.

- Toa, C.K.; Sim, K.S.; Tan, S.C. Emotiv insight with convolutional neural network: Visual attention test classification. In Proceedings of the International Conference on Computational Collective Intelligence, Ho Chi Minh City, Vietnam, 12–15 November 2025; Springer: Berlin/Heidelberg, Germany, 2021; pp. 348–357.

- Vandecappelle, S.; Deckers, L.; Das, N.; Ansari, A.H.; Bertrand, A.; Francart, T. EEG-based detection of the locus of auditory attention with convolutional neural networks. eLife 2021, 10, e56481.

- Wang, Y.; Shi, Y.; Du, J.; Lin, Y.; Wang, Q. A CNN-based personalized system for attention detection in wayfinding tasks. Adv. Eng. Inform. 2020, 46, 101180.

- Geravanchizadeh, M.; Roushan, H. Dynamic selective auditory attention detection using RNN and reinforcement learning. Sci. Rep. 2021, 11, 15497.

- Lu, Y.; Wang, M.; Yao, L.; Shen, H.; Wu, W.; Zhang, Q.; Zhang, L.; Chen, M.; Liu, H.; Peng, R.; et al. Auditory attention decoding from electroencephalography based on long short-term memory networks. Biomed. Signal Process. Control 2021, 70, 102966.

- Lee, Y.E.; Lee, S.H. EEG-transformer: Self-attention from transformer architecture for decoding EEG of imagined speech. In Proceedings of the 2022 10th International Winter Conference on Brain-Computer Interface (BCI), Gangwon-do, Republic of Korea, 21–23 February 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–4.

- Xu, Y.; Du, Y.; Li, L.; Lai, H.; Zou, J.; Zhou, T.; Xiao, L.; Liu, L.; Ma, P. AMDET: Attention based multiple dimensions EEG transformer for emotion recognition. IEEE Trans. Affect. Comput. 2023, 15, 1067–1077.

- Xu, Z.; Bai, Y.; Zhao, R.; Hu, H.; Ni, G.; Ming, D. Decoding selective auditory attention with EEG using a transformer model. Methods 2022, 204, 410–417.

- Ding, Y.; Lee, J.H.; Zhang, S.; Luo, T.; Guan, C. Decoding Human Attentive States from Spatial-temporal EEG Patches Using Transformers. arXiv 2025, arXiv:2502.03736.

- Zhang, Y.; Zhang, Y.; Wang, S. An attention-based hybrid deep learning model for EEG emotion recognition. Signal Image Video Process. 2023, 17, 2305–2313.

- Geravanchizadeh, M.; Shaygan Asl, A.; Danishvar, S. Selective Auditory Attention Detection Using Combined Transformer and Convolutional Graph Neural Networks. Bioengineering 2024, 11, 1216.

- Zhao, X.; Lu, J.; Zhao, J.; Yuan, Z. Single-Channel EEG Classification of Human Attention with Two-Branch Multiscale CNN and Transformer Model. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–8.

- Xue, Y.; Wu, Y.; Zhang, S.; Feng, J. Attention Recognition Based on EEG Using Multi-Model Classification. In Proceedings of the 2025 4th International Conference on Electronics, Integrated Circuits and Communication Technology (EICCT), Chengdu, China, 11–13 July 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 459–463.

- EskandariNasab, M.; Raeisi, Z.; Lashaki, R.A.; Najafi, H. A GRU–CNN model for auditory attention detection using microstate and recurrence quantification analysis. Sci. Rep. 2024, 14, 8861.

- Li, C.; Huang, X.; Song, R.; Qian, R.; Liu, X.; Chen, X. EEG-based seizure prediction via Transformer guided CNN. Measurement 2022, 203, 111948.

- Li, L.; Fan, C.; Zhang, H.; Zhang, J.; Yang, X.; Zhou, J.; Lv, Z. MHANet: Multi-scale Hybrid Attention Network for Auditory Attention Detection. arXiv 2025, arXiv:2505.15364.

- Das, N.; Francart, T.; Bertrand, A. Auditory Attention Detection Dataset KULeuven; Zenodo: Geneva, Switzerland, 2019.

- Fuglsang, S.A.; Wong, D.; Hjortkjær, J. EEG and Audio Dataset for Auditory Attention Decoding; Zenodo: Geneva, Switzerland, 2018.

- Fu, Z.; Wu, X.; Chen, J. Congruent audiovisual speech enhances auditory attention decoding with EEG. J. Neural Eng. 2019, 16, 066033.

- Fan, C.; Zhang, J.; Zhang, H.; Xiang, W.; Tao, J.; Li, X.; Yi, J.; Sui, D.; Lv, Z. MSFNet: Multi-scale fusion network for brain-controlled speaker extraction. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, Australia, 28 October–1 November 2024; pp. 1652–1661.

- Broderick, M.P.; Anderson, A.J.; Di Liberto, G.M.; Crosse, M.J.; Lalor, E.C. Electrophysiological correlates of semantic dissimilarity reflect the comprehension of natural, narrative speech. Curr. Biol. 2018, 28, 803–809.

- Nguyen, N.D.T.; Phan, H.; Geirnaert, S.; Mikkelsen, K.; Kidmose, P. AADNet: An end-to-end deep learning model for auditory attention decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 2025, 33, 2695–2706.

- Zhang, Y.; Cai, H.; Nie, L.; Xu, P.; Zhao, S.; Guan, C. An end-to-end 3D convolutional neural network for decoding attentive mental state. Neural Netw. 2021, 144, 129–137.

- Geravanchizadeh, M.; Gavgani, S.B. Selective auditory attention detection based on effective connectivity by single-trial EEG. J. Neural Eng. 2020, 17, 026021.

- Reichert, C.; Tellez Ceja, I.F.; Sweeney-Reed, C.M.; Heinze, H.J.; Hinrichs, H.; Dürschmid, S. Impact of stimulus features on the performance of a gaze-independent brain-computer interface based on covert spatial attention shifts. Front. Neurosci. 2020, 14, 591777.

- Delvigne, V.; Wannous, H.; Dutoit, T.; Ris, L.; Vandeborre, J.P. PhyDAA: Physiological dataset assessing attention. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 2612–2623.

- Jaeger, M.; Mirkovic, B.; Bleichner, M.G.; Debener, S. Decoding the attended speaker from EEG using adaptive evaluation intervals captures fluctuations in attentional listening. Front. Neurosci. 2020, 14, 603.

- Torkamani-Azar, M.; Kanik, S.D.; Aydin, S.; Cetin, M. Prediction of reaction time and vigilance variability from spatio-spectral features of resting-state EEG in a long sustained attention task. IEEE J. Biomed. Health Inform. 2020, 24, 2550–2558.

- Liu, H.; Zhang, Y.; Liu, G.; Du, X.; Wang, H.; Zhang, D. A Multi-Label EEG Dataset for Mental Attention State Classification in Online Learning. In Proceedings of the ICASSP 2025—2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–5.

- Poulsen, A.T.; Kamronn, S.; Dmochowski, J.; Parra, L.C.; Hansen, L.K. EEG in the classroom: Synchronised neural recordings during video presentation. Sci. Rep. 2017, 7, 43916.

- Zhang, Z.X.; Xu, S.Q.; Zhou, E.N.; Huang, X.L.; Wang, J. Research on attention EEG based on Jensen-Shannon Divergence. Adv. Mater. Res. 2014, 884, 512–515.

- Schupp, H.T.; Flaisch, T.; Stockburger, J.; Junghöfer, M. Emotion and attention: Event-related brain potential studies. Prog. Brain Res. 2006, 156, 31–51.

- Tan, C.; Zhou, H.; Zheng, A.; Yang, M.; Li, C.; Yang, T.; Chen, J.; Zhang, J.; Li, T. P300 event-related potentials as diagnostic biomarkers for attention deficit hyperactivity disorder in children. Front. Psychiatry 2025, 16, 1590850.

- Datta, A.; Cusack, R.; Hawkins, K.; Heutink, J.; Rorden, C.; Robertson, I.H.; Manly, T. The P300 as a marker of waning attention and error propensity. Comput. Intell. Neurosci. 2007, 2007, 093968.

- Tao, M.; Sun, J.; Liu, S.; Zhu, Y.; Ren, Y.; Liu, Z.; Wang, X.; Yang, W.; Li, G.; Wang, X.; et al. An event-related potential study of P300 in preschool children with attention deficit hyperactivity disorder. Front. Pediatr. 2024, 12, 1461921.

- Wang, Z.; Ding, Y.; Yuan, W.; Chen, H.; Chen, W.; Chen, C. Active claw-shaped dry electrodes for EEG measurement in hair areas. Bioengineering 2024, 11, 276.

- Zhu, Y.; Bayin, C.; Li, H.; Shu, X.; Deng, J.; Yuan, H.; Shen, H.; Liang, Z.; Li, Y. A flexible, stable, semi-dry electrode with low impedance for electroencephalography recording. RSC Adv. 2024, 14, 34415–34427.

- Wang, T.; Yao, S.; Shao, L.H.; Zhu, Y. Stretchable Ag/AgCl Nanowire Dry Electrodes for High-Quality Multimodal Bioelectronic Sensing. Sensors 2024, 24, 6670.

- Kaveh, R.; Doong, J.; Zhou, A.; Schwendeman, C.; Gopalan, K.; Burghardt, F.L.; Arias, A.C.; Maharbiz, M.M.; Muller, R. Wireless user-generic ear EEG. IEEE Trans. Biomed. Circuits Syst. 2020, 14, 727–737.

- Sun, W.; Su, Y.; Wu, X.; Wu, X. A novel end-to-end 1D-ResCNN model to remove artifact from EEG signals. Neurocomputing 2020, 404, 108–121.

- Mahmud, S.; Hossain, M.S.; Chowdhury, M.E.; Reaz, M.B.I. MLMRS-Net: Electroencephalography (EEG) motion artifacts removal using a multi-layer multi-resolution spatially pooled 1D signal reconstruction network. Neural Comput. Appl. 2023, 35, 8371–8388.

- Zhang, H.; Wei, C.; Zhao, M.; Liu, Q.; Wu, H. A novel convolutional neural network model to remove muscle artifacts from EEG. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1265–1269.

- Gao, T.; Chen, D.; Tang, Y.; Ming, Z.; Li, X. EEG reconstruction with a dual-scale CNN-LSTM model for deep artifact removal. IEEE J. Biomed. Health Inform. 2022, 27, 1283–1294.

- Erfanian, A.; Mahmoudi, B. Real-time ocular artifact suppression using recurrent neural network for electro-encephalogram based brain-computer interface. Med. Biol. Eng. Comput. 2005, 43, 296–305.

- Liu, Y.; Höllerer, T.; Sra, M. SRI-EEG: State-based recurrent imputation for EEG artifact correction. Front. Comput. Neurosci. 2022, 16, 803384.

- Jiang, R.; Tong, S.; Wu, J.; Hu, H.; Zhang, R.; Wang, H.; Zhao, Y.; Zhu, W.; Li, S.; Zhang, X. A novel EEG artifact removal algorithm based on an advanced attention mechanism. Sci. Rep. 2025, 15, 19419.

- Wang, S.; Luo, Y.; Shen, H. An improved Generative Adversarial Network for Denoising EEG signals of brain-computer interface systems. In Proceedings of the 2022 China Automation Congress (CAC), Xiamen, China, 25–27 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 6498–6502.

- Tibermacine, I.E.; Russo, S.; Citeroni, F.; Mancini, G.; Rabehi, A.; Alharbi, A.H.; El-Kenawy, E.S.M.; Napoli, C. Adversarial denoising of EEG signals: A comparative analysis of standard GAN and WGAN-GP approaches. Front. Hum. Neurosci. 2025, 19, 1583342.

- Dong, Y.; Tang, X.; Li, Q.; Wang, Y.; Jiang, N.; Tian, L.; Zheng, Y.; Li, X.; Zhao, S.; Li, G.; et al. An approach for EEG denoising based on Wasserstein generative adversarial network. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 3524–3534.

- Nguyen, H.A.T.; Le, T.H.; Bui, T.D. A deep wavelet sparse autoencoder method for online and automatic electrooculographical artifact removal. Neural Comput. Appl. 2020, 32, 18255–18270.

- Acharjee, R.; Ahamed, S.R. Automatic Eyeblink artifact removal from Single Channel EEG signals using one-dimensional convolutional Denoising autoencoder. In Proceedings of the 2024 International Conference on Computer, Electrical & Communication Engineering (ICCECE), Kolkata, India, 2–3 February 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–7.

- Nagar, S.; Kumar, A. Orthogonal features based EEG signals denoising using fractional and compressed one-dimensional CNN AutoEncoder. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 2474–2485.

- Pu, X.; Yi, P.; Chen, K.; Ma, Z.; Zhao, D.; Ren, Y. EEGDnet: Fusing non-local and local self-similarity for EEG signal denoising with transformer. Comput. Biol. Med. 2022, 151, 106248.

- Chen, J.; Pi, D.; Jiang, X.; Xu, Y.; Chen, Y.; Wang, X. Denosieformer: A transformer-based approach for single-channel EEG artifact removal. IEEE Trans. Instrum. Meas. 2023, 73, 2501116.

- Chuang, C.H.; Chang, K.Y.; Huang, C.S.; Bessas, A.M. ART: Artifact removal transformer for reconstructing noise-free multichannel electroencephalographic signals. arXiv 2024, arXiv:2409.07326.

- Yin, J.; Liu, A.; Li, C.; Qian, R.; Chen, X. A GAN guided parallel CNN and transformer network for EEG denoising. IEEE J. Biomed. Health Inform. 2023, 29, 3930–3941.

- Cai, Y.; Meng, Z.; Huang, D. DHCT-GAN: Improving EEG signal quality with a dual-branch hybrid CNN–transformer network. Sensors 2025, 25, 231.

- Zhu, L.; Lv, J. Review of studies on user research based on EEG and eye tracking. Appl. Sci. 2023, 13, 6502.

- Qin, Y.; Yang, J.; Zhang, M.; Zhang, M.; Kuang, J.; Yu, Y.; Zhang, S. Construction of a Quality Evaluation System for University Course Teaching Based on Multimodal Brain Data. Recent Patents Eng. 2025; in press.

- Song, Y.; Feng, L.; Zhang, W.; Song, X.; Cheng, M. Multimodal Emotion Recognition based on the Fusion of EEG Signals and Eye Movement Data. In Proceedings of the 2024 IEEE 25th China Conference on System Simulation Technology and its Application (CCSSTA), Tianjin, China, 21–23 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 127–132.

- Zhao, M.; Gao, H.; Wang, W.; Qu, J. Research on human-computer interaction intention recognition based on EEG and eye movement. IEEE Access 2020, 8, 145824–145832.

- Gong, X.; Dong, Y.; Zhang, T. CoDF-Net: Coordinated-representation decision fusion network for emotion recognition with EEG and eye movement signals. Int. J. Mach. Learn. Cybern. 2024, 15, 1213–1226.

- Guo, J.J.; Zhou, R.; Zhao, L.M.; Lu, B.L. Multimodal emotion recognition from eye image, eye movement and EEG using deep neural networks. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3071–3074.

- Li, X.; Wei, W.; Zhao, K.; Mao, J.; Lu, Y.; Qiu, S.; He, H. Exploring EEG and eye movement fusion for multi-class target RSVP-BCI. Inf. Fusion 2025, 121, 103135.

- Singh, P.; Tripathi, M.K.; Patil, M.B.; Shivendra; Neelakantappa, M. Multimodal emotion recognition model via hybrid model with improved feature level fusion on facial and EEG feature set. Multimed. Tools Appl. 2025, 84, 1–36.

- Zhao, Y.; Chen, D. Expression EEG multimodal emotion recognition method based on the bidirectional LSTM and attention mechanism. Comput. Math. Methods Med. 2021, 2021, 9967592.

- Wu, Y.; Li, J. Multi-modal emotion identification fusing facial expression and EEG. Multimed. Tools Appl. 2023, 82, 10901–10919.

- Li, D.; Wang, Z.; Wang, C.; Liu, S.; Chi, W.; Dong, E.; Song, X.; Gao, Q.; Song, Y. The fusion of electroencephalography and facial expression for continuous emotion recognition. IEEE Access 2019, 7, 155724–155736.

- Jin, X.; Xiao, J.; Jin, L.; Zhang, X. Residual multimodal Transformer for expression-EEG fusion continuous emotion recognition. CAAI Trans. Intell. Technol. 2024, 9, 1290–1304.

- Rayatdoost, S.; Rudrauf, D.; Soleymani, M. Multimodal gated information fusion for emotion recognition from EEG signals and facial behaviors. In Proceedings of the 2020 International Conference on Multimodal Interaction, Virtual, 25–29 October 2020; pp. 655–659.

- Liu, S.; Wang, Z.; An, Y.; Li, B.; Wang, X.; Zhang, Y. DA-CapsNet: A multi-branch capsule network based on adversarial domain adaption for cross-subject EEG emotion recognition. Knowl.-Based Syst. 2024, 283, 111137.

- Unsworth, N.; Miller, A.L. Individual differences in the intensity and consistency of attention. Curr. Dir. Psychol. Sci. 2021, 30, 391–400.

- Matthews, G.; Reinerman-Jones, L.; Abich IV, J.; Kustubayeva, A. Metrics for individual differences in EEG response to cognitive workload: Optimizing performance prediction. Personal. Individ. Differ. 2017, 118, 22–28.

- Zhang, B.; Xu, M.; Zhang, Y.; Ye, S.; Chen, Y. Attention-ProNet: A Prototype Network with Hybrid Attention Mechanisms Applied to Zero Calibration in Rapid Serial Visual Presentation-Based Brain–Computer Interface. Bioengineering 2024, 11, 347.

- Leblanc, B.; Germain, P. On the Relationship Between Interpretability and Explainability in Machine Learning. arXiv 2023, arXiv:2311.11491.

- Khare, S.K.; Acharya, U.R. An explainable and interpretable model for attention deficit hyperactivity disorder in children using EEG signals. Comput. Biol. Med. 2023, 155, 106676.

- Şahin, E.; Arslan, N.N.; Özdemir, D. Unlocking the black box: An in-depth review on interpretability, explainability, and reliability in deep learning. Neural Comput. Appl. 2025, 37, 859–965.

- Miao, Z.; Zhao, M.; Zhang, X.; Ming, D. LMDA-Net: A lightweight multi-dimensional attention network for general EEG-based brain-computer interfaces and interpretability. NeuroImage 2023, 276, 120209.

- Shawly, T.; Alsheikhy, A.A. EEG-based detection of epileptic seizures in patients with disabilities using a novel attention-driven deep learning framework with SHAP interpretability. Egypt. Inform. J. 2025, 31, 100734.

- Gonzalez, H.A.; Muzaffar, S.; Yoo, J.; Elfadel, I.M. BioCNN: A hardware inference engine for EEG-based emotion detection. IEEE Access 2020, 8, 140896–140914.

- Fang, W.C.; Wang, K.Y.; Fahier, N.; Ho, Y.L.; Huang, Y.D. Development and validation of an EEG-based real-time emotion recognition system using edge AI computing platform with convolutional neural network system-on-chip design. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 645–657.

- Li, J.Y.; Fang, W.C. An edge AI accelerator design based on HDC model for real-time EEG-based emotion recognition system with RISC-V FPGA platform. In Proceedings of the 2024 IEEE International Symposium on Circuits and Systems (ISCAS), Singapore, 19–22 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–5.

| EEG Band | Frequency Range (Hz) | Mental Status |
|---|---|---|
| Delta | 0.1–4 | Deep sleep, unconsciousness. |
| Theta | 4–8 | Deep relaxation, internal focus, meditation. |
| Low Alpha | 8–10 | Wakeful relaxation, consciousness, good mood, calmness. |
| High Alpha | 10–12 | Enhanced self-awareness and concentration. |
| Low Beta | 12–18 | Thinking and focused attention. |
| High Beta | 18–30 | Cognitive activity, alertness. |
| Low Gamma | 30–50 | Cognitive processing, self-control. |
| High Gamma | 50–70 | Engaged in memory, hearing, reading, and speaking. |
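
As a minimal sketch of how the band boundaries in the table above might be applied, the following Python snippet maps a frequency to its band and estimates the relative power per band with a plain discrete Fourier transform. The function names (`band_of`, `relative_band_power`), the half-open interval convention at band edges, and the 128 Hz sampling rate in the usage example are illustrative assumptions, not something specified by the survey; a production pipeline would more likely use a windowed estimator such as Welch's method.

```python
import cmath
import math

# Band edges in Hz, copied from the table above.
# Each band is treated as the half-open interval [low, high) (an assumption).
BANDS = [
    ("delta", 0.1, 4.0),
    ("theta", 4.0, 8.0),
    ("low_alpha", 8.0, 10.0),
    ("high_alpha", 10.0, 12.0),
    ("low_beta", 12.0, 18.0),
    ("high_beta", 18.0, 30.0),
    ("low_gamma", 30.0, 50.0),
    ("high_gamma", 50.0, 70.0),
]


def band_of(freq_hz):
    """Return the name of the band containing freq_hz, or None if outside 0.1-70 Hz."""
    for name, low, high in BANDS:
        if low <= freq_hz < high:
            return name
    return None


def relative_band_power(samples, fs):
    """Estimate the fraction of signal power in each band using a naive DFT.

    samples: sequence of floats from one EEG channel.
    fs: sampling rate in Hz.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    power = {name: 0.0 for name, _, _ in BANDS}
    for k in range(1, n // 2 + 1):
        name = band_of(k * fs / n)  # frequency of DFT bin k
        if name is None:
            continue
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(centered)
        )
        power[name] += abs(coeff) ** 2
    total = sum(power.values())
    return {name: (p / total if total else 0.0) for name, p in power.items()}


# Usage: a synthetic 10.5 Hz sinusoid, 2 s long, sampled at an assumed 128 Hz.
fs = 128
signal = [math.sin(2 * math.pi * 10.5 * i / fs) for i in range(2 * fs)]
rel = relative_band_power(signal, fs)
print(max(rel, key=rel.get))
```

The naive DFT keeps the sketch dependency-free; its quadratic cost is acceptable only for short windows such as the two-second segment used here.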

| Device | Channels | Resolution | Max. Sample Rate | Interface |
|---|---|---|---|---|
| BioSemi ActiveTwo system | 280 | 24-bit | 16 kHz | USB |
| actiCHamp | 160 | 24-bit | 25 kHz | USB |
| BrainAmp DC | 256 | 16-bit | 5 kHz | USB |
| Neuroscan EEG | 64 | 24-bit | 20 kHz | USB |
| DSI-24 | 19 | 16-bit | 300 Hz | Bluetooth/USB |
| Emotiv Epoc X | 14 | 16-bit | 2048 Hz | Bluetooth/USB |
| Emotiv FLEX 2 Saline | 32 | 16-bit | 2048 Hz | Bluetooth/USB |
| MindWave Mobile 2 | 1 | 12-bit | 512 Hz | Bluetooth |
| Versatile EEG | 32 | 24-bit | 256 Hz | Bluetooth |
| Li et al. [46] | 32 | 16-bit | 30 kHz | Wi-Fi |
| Lei et al. [47] | 8 | 24-bit | 16 kHz | Bluetooth |
| Valentin et al. [48] | 8 | 24-bit | 4 kHz | USB |
| Liu et al. [35] | 16 | 24-bit | 1000 Hz | Wi-Fi |
| Liu et al. [35] | 192 | 24-bit | 4000 Hz | Fiber/USB/Wi-Fi |
| Lin et al. [49] | 64 | 24-bit | 300/512 Hz | Wi-Fi |
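
The columns of the device table can be combined into a rough upper bound on the raw data rate a link has to carry: channels × bits per sample × sample rate. The Python sketch below works this out for three of the listed devices. It assumes that every channel is streamed at the maximum sample rate with no packet overhead or compression, which a given device may not actually support; the `Amplifier` class and its method name are hypothetical helpers introduced only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Amplifier:
    """Hypothetical container for one row of the device table."""
    name: str
    channels: int
    bits_per_sample: int
    max_sample_rate_hz: int

    def raw_bits_per_second(self) -> int:
        # Upper bound: all channels at the maximum rate, no protocol overhead.
        return self.channels * self.bits_per_sample * self.max_sample_rate_hz


# Values copied from the device table above.
DEVICES = [
    Amplifier("Emotiv Epoc X", 14, 16, 2048),
    Amplifier("MindWave Mobile 2", 1, 12, 512),
    Amplifier("DSI-24", 19, 16, 300),
]

for dev in DEVICES:
    print(f"{dev.name}: {dev.raw_bits_per_second() / 1e3:.1f} kbit/s")
```

Under these assumptions the consumer headsets stay well below 1 Mbit/s, which is one plausible reason Bluetooth is a common interface for them, whereas research-grade systems with hundreds of channels and kHz-range sampling need wired or Wi-Fi links.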

| Model | No. of CUDA Cores | Memory | Memory Bandwidth | Reference |
|---|---|---|---|---|
| GeForce RTX 3090 | 10,496 | 24 GB | 936.2 GB/s | [50] |
| GeForce RTX 3080 | 8704 | 10 GB | 760.3 GB/s | [51] |
| GeForce RTX 3080 Ti | 10,240 | 12 GB | 912.4 GB/s | [52] |
| GeForce RTX 3070 | 5888 | 8 GB | 448.0 GB/s | [53] |
| GeForce RTX 3070 Ti | 6144 | 8 GB | 608.3 GB/s | [54] |
| GeForce RTX 3060 | 3584 | 12 GB | 360.0 GB/s | [55] |
| GeForce RTX 3060 Ti | 4864 | 8 GB | 448.0 GB/s | [56] |
| GeForce RTX 4090 | 16,384 | 24 GB | 1.01 TB/s | [57] |
| GeForce GTX 1070 | 1920 | 8 GB | 256.3 GB/s | [58] |
| GeForce GTX 1070 Ti | 2432 | 8 GB | 256.3 GB/s | [59] |
| GeForce GTX 1050 | 640 | 2 GB | 112.1 GB/s | [60] |
| GeForce RTX 2080 Ti | 4352 | 11 GB | 616.0 GB/s | [61] |

| Methods | Advantages | Challenges | Scenarios |
|---|---|---|---|
| ICA | Effectively separates mixed signals; unsupervised; supports multi-channel analysis. | High computational complexity; artifact components must be identified manually. | Multi-channel EEG; EOG artifacts, EMG artifacts. |
| WT | Localized time–frequency analysis, multi-resolution features, good computational efficiency. | Depends on the choice of wavelet basis; frequency band aliasing. | Transient artifacts, EOG artifacts, EMG artifacts. |
| EMD | Good adaptability, local feature extraction, nonlinear processing capability. | Mode mixing; signal quality degrades at the segment ends. | Single-channel EEG; non-stationary, nonlinear artifacts. |

| Methods | Advantages | Challenges |
|---|---|---|
| Time-domain features | Simple to implement with low computational complexity; suitable for short-term stationary signals. | Cannot capture important frequencies or complex time–frequency relationships. |
| Frequency-domain features | Capture the characteristics of the signal in specific frequency bands. | Cannot capture the time-varying characteristics of the signal. |
| Time–frequency-domain features | Capture time and frequency information simultaneously, enabling analysis of non-stationary signals. | High computational complexity; results depend on the choice of decomposition algorithm. |
| Nonlinear features | Capture chaotic behavior and complex dynamic changes in the signal. | High computational complexity; sensitive to parameter settings. |
| Spatial-domain features | Suitable for multi-channel EEG data and for analyzing interactions between brain regions. | Depend on sensor layout; generalization ability may be limited. |

| Methods | Advantages | Challenges |
|---|---|---|
| SVM | Handles high-dimensional data effectively and usually provides high classification accuracy. | Training is slow on large datasets, and performance is sensitive to the choice of kernel function. |
| KNN | Simple to implement and understand, requires no training, and performs well on low-dimensional data or small sample sets. | Sensitive to sample size and dimensionality, because computational cost grows with data size; the value of k must be chosen to suit the data structure. |
| Random forest | Handles a large number of features and is robust to noise; usually shows good classification performance and good resistance to overfitting. | The model is complex, requires more computing resources, and is not well suited to time-series features. |
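
To make the KNN row concrete, the following Python sketch shows why the method needs no training phase yet becomes more expensive as the dataset grows: every prediction computes the distance from the query to all stored samples. The two-dimensional feature vectors and the "attentive"/"inattentive" labels are made-up toy data, and `knn_predict` is a hypothetical helper name; none of it is taken from the studies surveyed here.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training samples.

    There is no training step: the full training set is scanned for every
    query, so the cost of one prediction grows linearly with the sample count.
    """
    neighbors = sorted(
        (math.dist(x, query), label) for x, label in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in neighbors[:k])
    return votes.most_common(1)[0][0]


# Toy feature vectors (illustrative values only), two per class cluster.
train_x = [
    (0.20, 0.90), (0.30, 0.80), (0.25, 0.85),
    (0.90, 0.20), (0.80, 0.30), (0.85, 0.10),
]
train_y = [
    "attentive", "attentive", "attentive",
    "inattentive", "inattentive", "inattentive",
]

print(knn_predict(train_x, train_y, (0.28, 0.82), k=3))
```

Choosing an odd k for a two-class problem, as done here, avoids tied votes; larger k smooths the decision boundary at the cost of blurring small clusters.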

| Methods | Advantages | Challenges |
|---|---|---|
| CNN | Good at capturing spatial features, computationally efficient, and trainable in parallel. | Not well suited to capturing the temporal dynamics of time-series signals; training requires large amounts of data. |
| RNN | Good at capturing temporal dynamics and sequential relationships; suitable for processing continuous EEG signals. | Suffers from vanishing gradients, long training times, and poor parallelism. |
| Transformer | Suitable for long time-series analysis, identifies global features, and parallelizes efficiently. | High computational complexity and resource requirements; performance degrades on small datasets. |
| Hybrid models | Combine the advantages of multiple models and are more adaptable. | Complex structure; training and parameter tuning are difficult and usually require more computing resources. |

| Datasets | Sampling Rate (Hz) | No. of Subjects | No. of Channels | Stimuli |
|---|---|---|---|---|
| KUL [169] | 8192 | 16 | 64 | Audio |
| DTU [170] | 512 | 18 | 64 | Audio |
| PKU [171] | 500 | 16 | 64 | Audio |
| AVED [172] | 1000 | 20 | 32 | Audio & Visual |
| Cocktail Party [173] | 512 | 33 | 128 | Audio |
| Das et al. [42] | 8192 | 28 | 64 | Audio |
| EventAAD [174] | 1000 | 24 | 32 | Audio |
| Zhang et al. [175] | 1000 | 30 | 28 | Visual |
| Geravanchizadeh et al. [176] | 512 | 40 | 128 | Audio |
| Reichert et al. [177] | 250 | 18 | 14 | Visual |
| Ciccarelli et al. [74] | 1000 | 11 | 64 | Audio |
| Delvigne et al. [178] | 500 | 11 | 32 | VR headsets |
| Jaeger et al. [179] | 500 | 21 | 94 | Audio |
| Torkamani et al. [180] | N/A | 10 | 64 | Visual |
| Liu et al. [181] | 500 | 20 | 32 | Visual |
| Ko et al. [73] | 1000 | 18 | 32 | Visual |
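
Because the sampling rates in the table span 250 Hz to 8192 Hz, combining datasets usually means resampling to a common rate first. The sketch below only works out the rational up/down factors for a handful of the listed rates; the 250 Hz target and the helper name `resampling_ratio` are assumptions made for illustration, not recommendations from the surveyed studies.

```python
from fractions import Fraction

# Native sampling rates (Hz) copied from the dataset table.
native_rates_hz = {
    "KUL": 8192,
    "DTU": 512,
    "PKU": 500,
    "AVED": 1000,
    "Reichert et al.": 250,
}

TARGET_RATE_HZ = 250  # assumed common rate, chosen only for illustration


def resampling_ratio(native_hz: int, target_hz: int) -> Fraction:
    """Return target/native in lowest terms (numerator = up, denominator = down)."""
    return Fraction(target_hz, native_hz)


ratios = {
    name: resampling_ratio(hz, TARGET_RATE_HZ)
    for name, hz in native_rates_hz.items()
}

for name, ratio in ratios.items():
    print(f"{name}: up={ratio.numerator}, down={ratio.denominator}")
```

Rates such as 500 Hz and 1000 Hz reduce to plain decimation by 2 and 4, whereas 512 Hz and 8192 Hz need a true rational resampler (up by 125, then down by 256 or 4096). One option for applying such factors with anti-alias filtering is `scipy.signal.resample_poly(x, up, down)`.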

| Methods | Advantages | Challenges | Scenarios |
|---|---|---|---|
| CNN | Automatically learns spatial features, is robust to structured noise, and is computationally efficient. | Weak at modeling temporal dependencies and may require large amounts of data. Limited effect on high-frequency noise. | EMG artifacts, EOG artifacts, high-frequency noise/artifacts, noise caused by poor electrode contact. |
| RNN | Good at temporal sequence modeling and at handling variable-length sequences. | Slow training, vanishing or exploding gradients, and high computing resource requirements. | ECG artifacts, EOG artifacts, and long-term artifacts. |
| GAN | Learns complex distributions; suitable for non-stationary signals. | Training is unstable, requires careful tuning, and has a high computational cost. | Mixed artifacts (e.g., EMG + EOG) and complex physiological noise. |
| Autoencoder | Learns without labels, removes redundant information, and has a simple structure that is easy to train. | The effect on complex noise is limited, and useful information may be lost. | EMG artifacts, EOG artifacts. |
| Transformer | Captures global spatial–temporal dependencies and transient dynamic features; high denoising performance. | Requires a large amount of data, high model complexity, and high computing resource consumption. | Global artifacts (e.g., EOG, ECG) and complex time-dependent noise. |

| Methods | Advantages | Challenges |
|---|---|---|
| EEG + Eye movement | High objective accuracy and difficult to disguise. Facilitates real-time assessment. Strong resistance to interference. | High-precision eye tracking devices may be required. Data synchronization is complex. Eye movement data lack features for emotion recognition. |
| EEG + Facial expression | Strong link to emotional state. Low deployment cost. Suitable for large-class teaching. | Facial expressions are easy to disguise. Real-time performance is poor. Sensitive to the environment. |
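
The "data synchronization" challenge in the eye-movement row can be sketched as nearest-timestamp matching. The example below is a minimal illustration that assumes both streams are already timestamped on a shared clock; the function names, the 20 ms tolerance `max_gap_s`, and the timestamps are all hypothetical.

```python
from bisect import bisect_left


def nearest_index(sorted_times, t):
    """Index of the entry in `sorted_times` closest to time `t`."""
    if not sorted_times:
        raise ValueError("sorted_times must not be empty")
    i = bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    before, after = sorted_times[i - 1], sorted_times[i]
    return i if (after - t) < (t - before) else i - 1


def align(eeg_epoch_times, gaze_times, max_gap_s=0.02):
    """Pair each EEG epoch time with the nearest gaze sample, or None if too far."""
    pairs = []
    for t in eeg_epoch_times:
        j = nearest_index(gaze_times, t)
        pairs.append(j if abs(gaze_times[j] - t) <= max_gap_s else None)
    return pairs


# Hypothetical timestamps in seconds on a shared clock.
eeg_epoch_times = [0.00, 0.50, 1.00, 1.50]
gaze_times = [0.001, 0.017, 0.034, 0.498, 0.515, 1.010, 1.300]

pairs = align(eeg_epoch_times, gaze_times)
print(pairs)
```

Epochs with no gaze sample inside the tolerance are marked `None` instead of being matched to a distant sample, so dropouts in the eye tracker stay visible to later fusion steps. Putting the two devices on a shared clock in the first place is the harder part and is not addressed by this sketch.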

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, L.; Yu, Y.; Qin, Y.; Zhang, S. A Survey of EEG-Based Approaches to Classroom Attention Assessment in Education. Information 2025, 16, 860. https://doi.org/10.3390/info16100860
