Speaking like Humans, Spreading like Machines: A Study on Opinion Manipulation by Artificial-Intelligence-Generated Content Driving the Internet Water Army on Social Media

Abstract

1. Introduction

- (1) It focuses on the core characteristics of the new IWA (AESB) and analyzes its published content in depth to detect AIGC, thereby broadening the dimensions for identifying such groups.

- (2) It systematically evaluates the opinion manipulation effects of AESB at the node, community, and network levels and examines the differences in manipulation effectiveness between the two forms of IWA, offering deeper insights into the dissemination patterns and impacts of these actors within the online public opinion ecosystem.

2. Related Work

3. Methodology

3.1. Detection of Social Bot Accounts

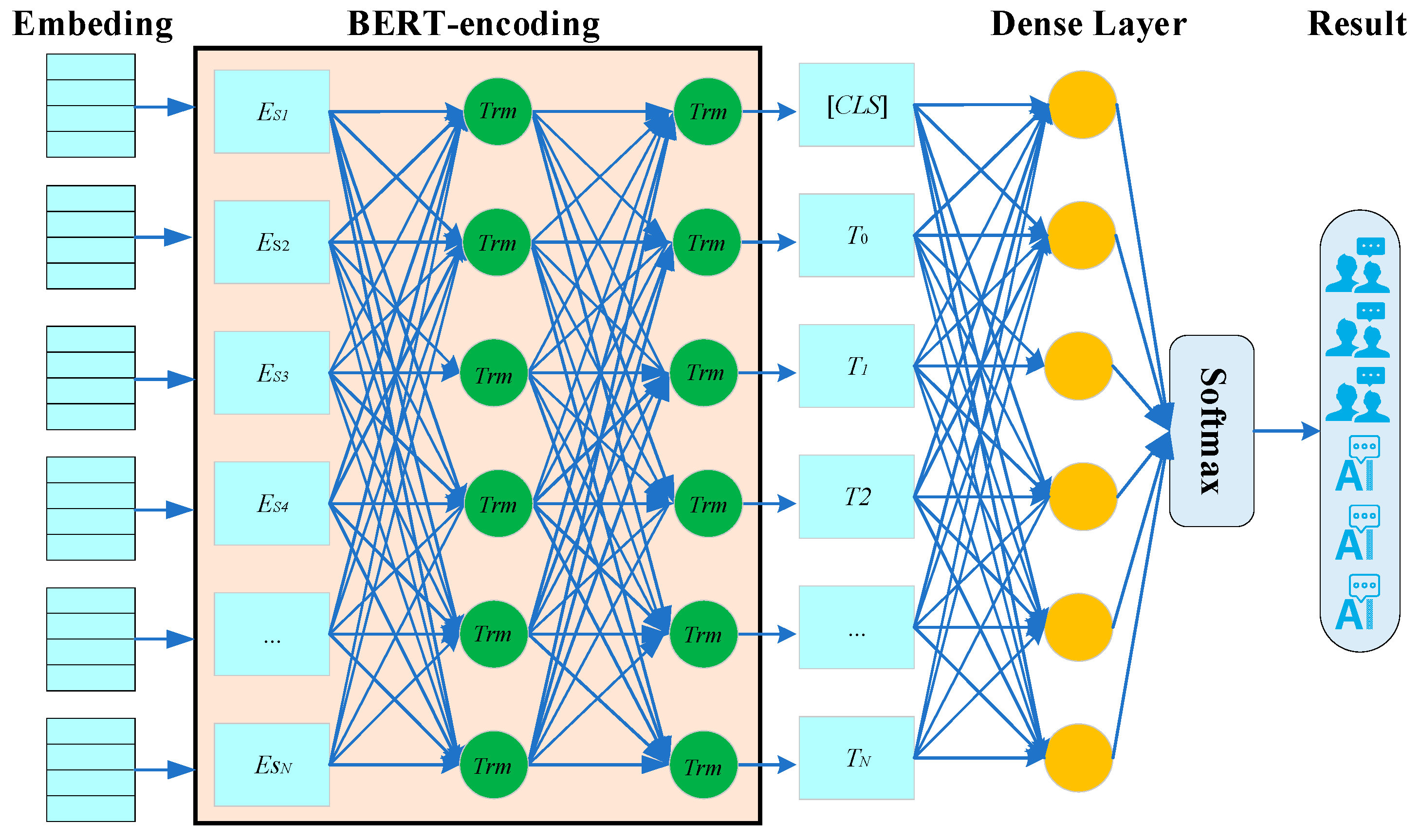

3.2. Identification of AESB Content

3.3. Quantifying the Degree of Opinion Manipulation

3.3.1. Construction of the Opinion Diffusion Network

3.3.2. Definition of Quantitative Indicator System

| Primary Indicator | Secondary Indicator | Indicator Description | Reference |
|---|---|---|---|
| Node Influence | Degree Centrality | Measures the activity of nodes based on the number of comments. | [22,40] |
| | Sentiment Deviation | Assesses sentiment guidance based on sentiment deviation differences. | [41,42] |
| | Topic Focus Degree | Measures the similarity between comments and main topics. | [43] |
| Community Influence | Modularity | Measures cohesion within communities and reflects structural compactness. | [44] |
| | Community Polarization Index | Quantifies intra-community consensus by combining sentiment and topic factors. | [45] |
| | Cross-Community Penetration Rate | Measures the ability of content to break through information cocoons. | [46] |
| Network Influence | Spread Speed | Calculates the number of comments disseminated per unit of time. | [47] |
| | Diffusion Breadth | Measures the overall scope of information spread within the network. | [48] |
| | Diffusion Depth | Reflects the penetration capability of information, based on comment time intervals. | [49,50] |
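As an illustration of how the three node-level indicators in the table might be computed, the sketch below assumes a comment network given as (commenter, post author) pairs, per-comment sentiment scores, and per-node topic distributions (for example, from LDA). The concrete formulas (degree normalized by n - 1, sentiment deviation as a node's mean sentiment minus the global mean, topic focus as cosine similarity to the main-topic distribution) are assumptions for illustration, not the authors' exact definitions, and all function names are hypothetical.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean

# Hypothetical formulas: the exact definitions used in the study are not given here.


def degree_centrality(edges):
    """Normalized degree centrality on the undirected comment network: distinct neighbors / (n - 1)."""
    neighbors = defaultdict(set)
    for u, v in edges:
        if u != v:  # ignore self-comments
            neighbors[u].add(v)
            neighbors[v].add(u)
    n = len(neighbors)
    if n < 2:
        return {node: 0.0 for node in neighbors}
    return {node: len(nbrs) / (n - 1) for node, nbrs in neighbors.items()}


def sentiment_deviation(scores_by_node):
    """Each node's mean comment sentiment minus the mean over all comments (assumed definition)."""
    all_scores = [s for scores in scores_by_node.values() for s in scores]
    global_mean = mean(all_scores)
    return {node: mean(scores) - global_mean for node, scores in scores_by_node.items()}


def topic_focus(node_topics, main_topics):
    """Cosine similarity between a node's topic distribution and the main-topic distribution."""
    dot = sum(a * b for a, b in zip(node_topics, main_topics))
    norm = sqrt(sum(a * a for a in node_topics)) * sqrt(sum(b * b for b in main_topics))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    edges = [("a", "b"), ("a", "c"), ("a", "d"), ("b", "c")]
    print(degree_centrality(edges))
    print(sentiment_deviation({"a": [0.9, 0.7], "b": [0.0], "c": [-0.6]}))
    print(topic_focus([0.7, 0.2, 0.1], [0.6, 0.3, 0.1]))
```

Cosine similarity is used because it compares the shape of two topic distributions regardless of how many comments a node posted.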

- (1) Node Influence

- (2) Community Influence

- (3) Network Influence
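The community-level indicators can be sketched in the same spirit. The block below computes each community's contribution to Newman's modularity, Q = Σ_c [L_c/m - (d_c/2m)²], where L_c is the number of edges inside community c, d_c the total degree of its nodes, and m the total number of edges. The cross-community penetration rate is assumed here to be the share of a community's edge endpoints that lead to other communities. The community partition is taken as given (for example, from a community detection algorithm), the function name is hypothetical, and the polarization index is omitted because its formula is not stated in this section.

```python
from collections import defaultdict


def community_indicators(edges, membership):
    """Per-community modularity contribution and (assumed) cross-community penetration rate.

    edges: iterable of undirected (u, v) pairs; membership: node -> community id.
    """
    m = 0
    internal = defaultdict(int)    # L_c: edges with both endpoints in community c
    degree_sum = defaultdict(int)  # d_c: summed degree of nodes in community c
    for u, v in edges:
        m += 1
        cu, cv = membership[u], membership[v]
        degree_sum[cu] += 1
        degree_sum[cv] += 1
        if cu == cv:
            internal[cu] += 1
    result = {}
    for c, d_c in degree_sum.items():
        q_c = internal[c] / m - (d_c / (2 * m)) ** 2
        crossing = d_c - 2 * internal[c]  # edge endpoints in c whose other end lies outside c
        result[c] = {"modularity": q_c, "penetration": crossing / d_c}
    return result


if __name__ == "__main__":
    # Two triangles joined by a single bridge edge (3, 4).
    edges = [(1, 2), (2, 3), (1, 3), (4, 5), (5, 6), (4, 6), (3, 4)]
    membership = {1: "A", 2: "A", 3: "A", 4: "B", 5: "B", 6: "B"}
    res = community_indicators(edges, membership)
    print(res, sum(r["modularity"] for r in res.values()))
```

Summing the per-community terms gives the global Q; keeping them separate is what allows a per-community distribution like the one discussed for modularity at the community level.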

4. Experiment

4.1. Data Collection and Preprocessing

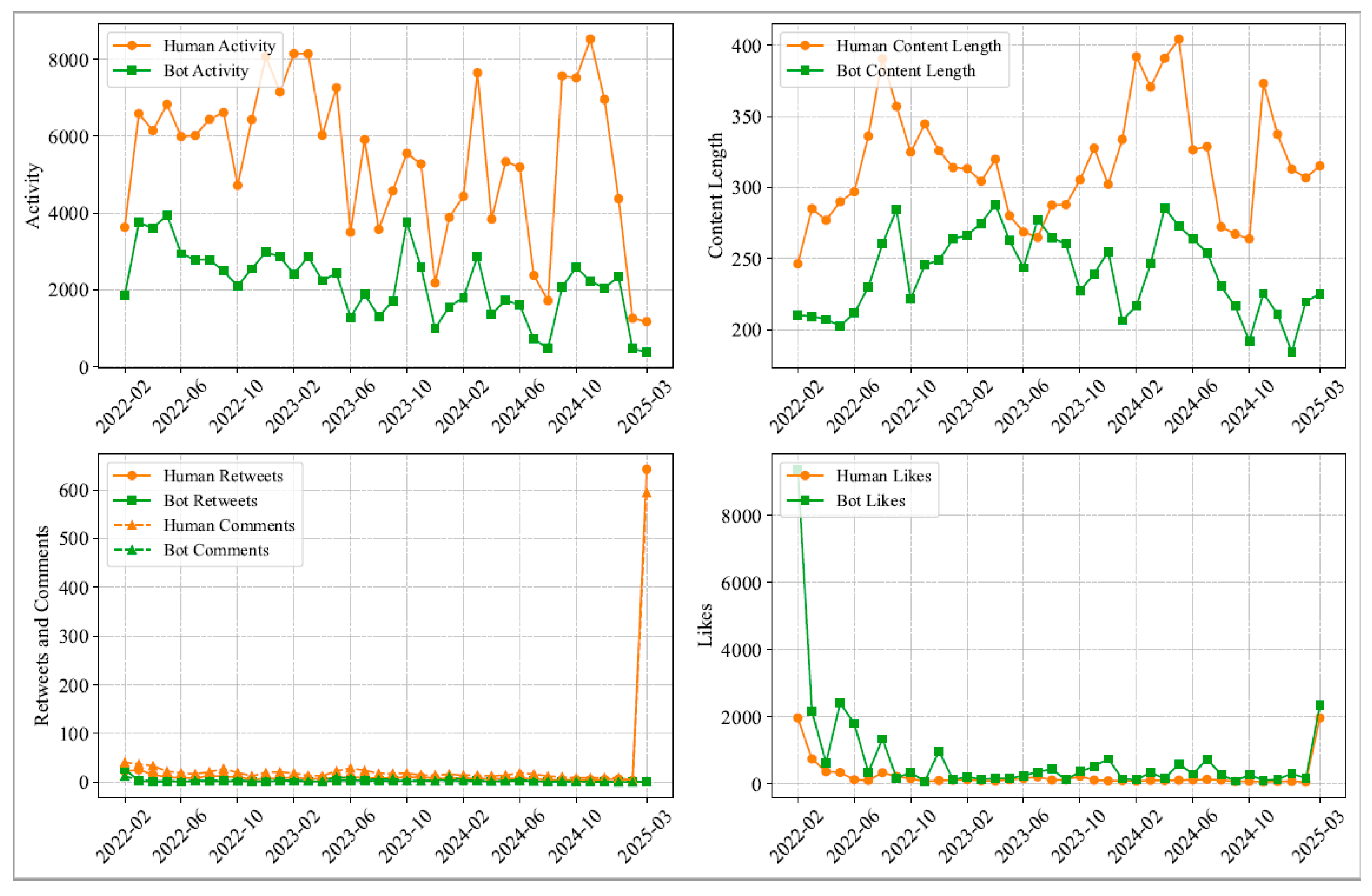

4.2. Social Bot Detection

4.3. AESB Content Identification

4.3.1. AIGC Model Training

4.3.2. Misclassification Causes

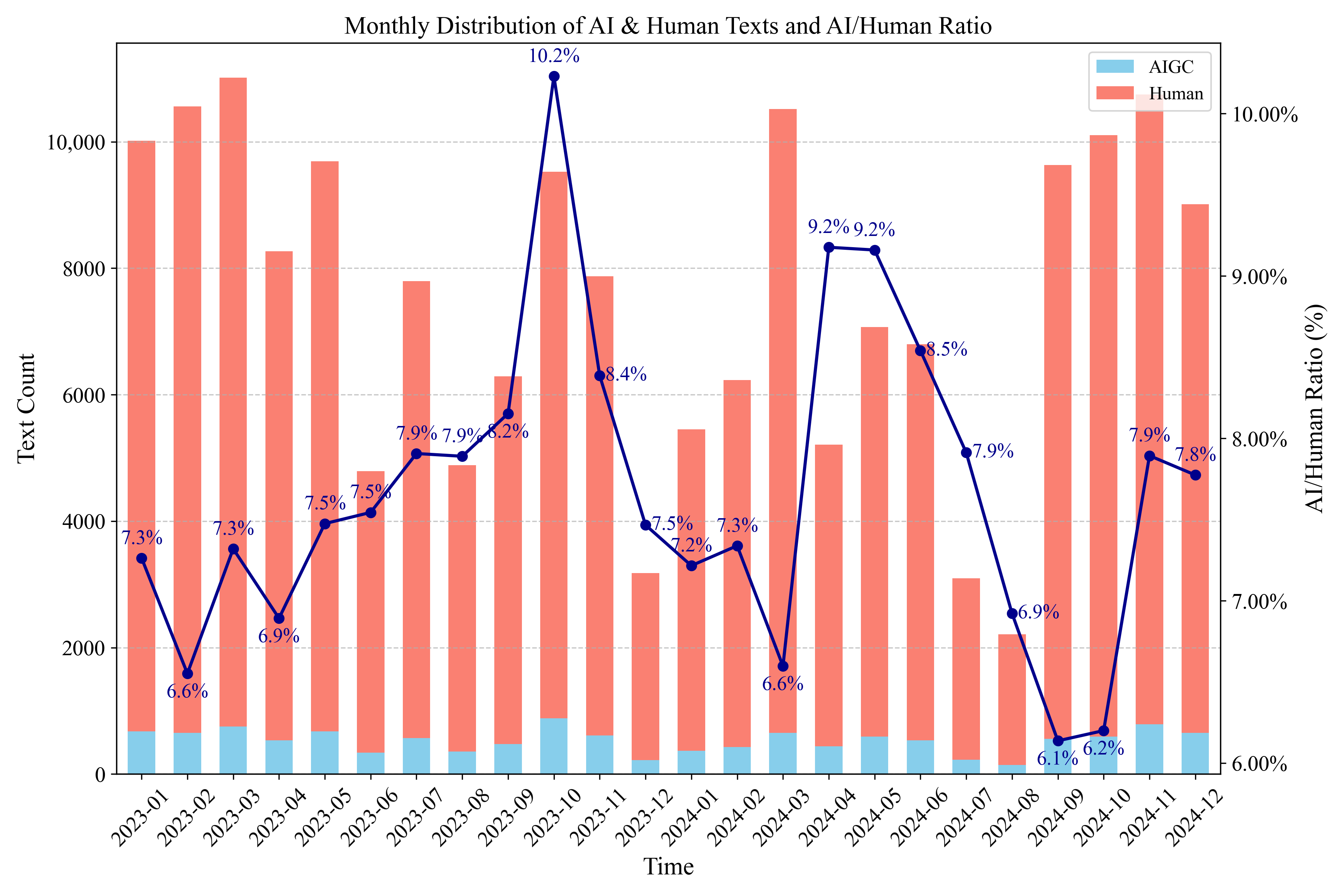

4.3.3. AIGC Identification

- (1) Topic distribution trends. Perplexity-based selection for LDA topic modeling yielded an optimal number of topics of 8 for AIGC and 12 for UGC. As shown in Figure 5, both text types covered general themes such as the “Trend of the Russia–Ukraine Conflict” and “Global Impact” to a similar extent, indicating shared attention to core events. However, AIGC texts concentrated on more structured themes (e.g., “Global Leaders’ Attention” and “Economic Impact”), while UGC texts reflected more interactive and contextual concerns (e.g., “Territorial Dispute,” “Peace Talks,” and “Trump’s Involvement”). This suggests that AIGC tends to exhibit greater topical focus and structural coherence, whereas human-generated content is more diverse and more sensitive to event-specific details and social sentiment.

- (2) Language style differences. Four linguistic features were examined: type-token ratio (lexical diversity), sentiment score, average character count, and average word count. Independent samples t-tests were conducted, with results summarized in Table 4. AIGC texts displayed significantly lower lexical diversity than UGC, suggesting a tendency toward more standardized vocabulary. No significant difference was found in sentiment scores (p = 0.359), indicating similar emotional expression between the two. In terms of length, AIGC texts had higher average character and word counts, with greater variance, suggesting that AI tends to generate more structured and information-rich content.
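Perplexity-based selection of the number of topics K usually relies on the standard held-out perplexity used with LDA, shown below for M test documents, where N_d is the number of words in document d and p(w_d) is the model's likelihood of that document; lower values indicate a better fit. The rule used to arrive at 8 and 12 topics is not stated, so the arg min below is an assumption; in practice K is also often chosen where the perplexity curve flattens.

```latex
\mathrm{perplexity}_K(D_{\text{test}}) = \exp\!\left( - \frac{\sum_{d=1}^{M} \log p_K(\mathbf{w}_d)}{\sum_{d=1}^{M} N_d} \right),
\qquad
K^{*} = \arg\min_{K} \, \mathrm{perplexity}_K(D_{\text{test}})
```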

4.4. Opinion Manipulation Analysis

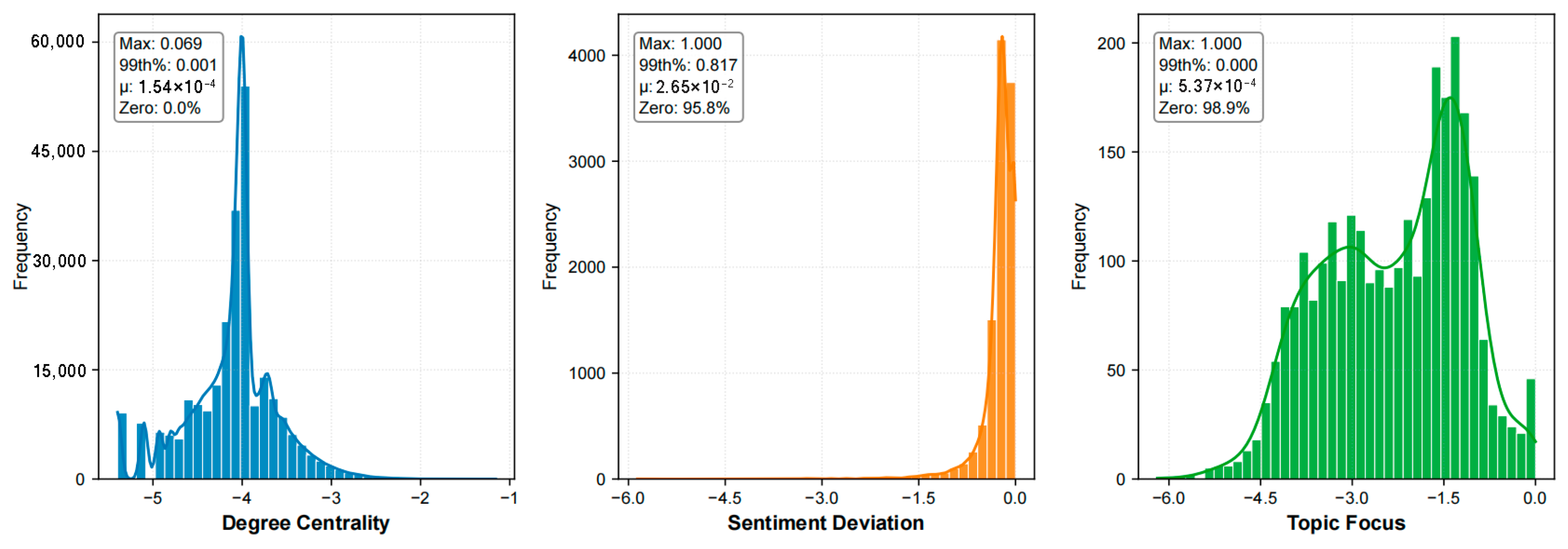

- (1)

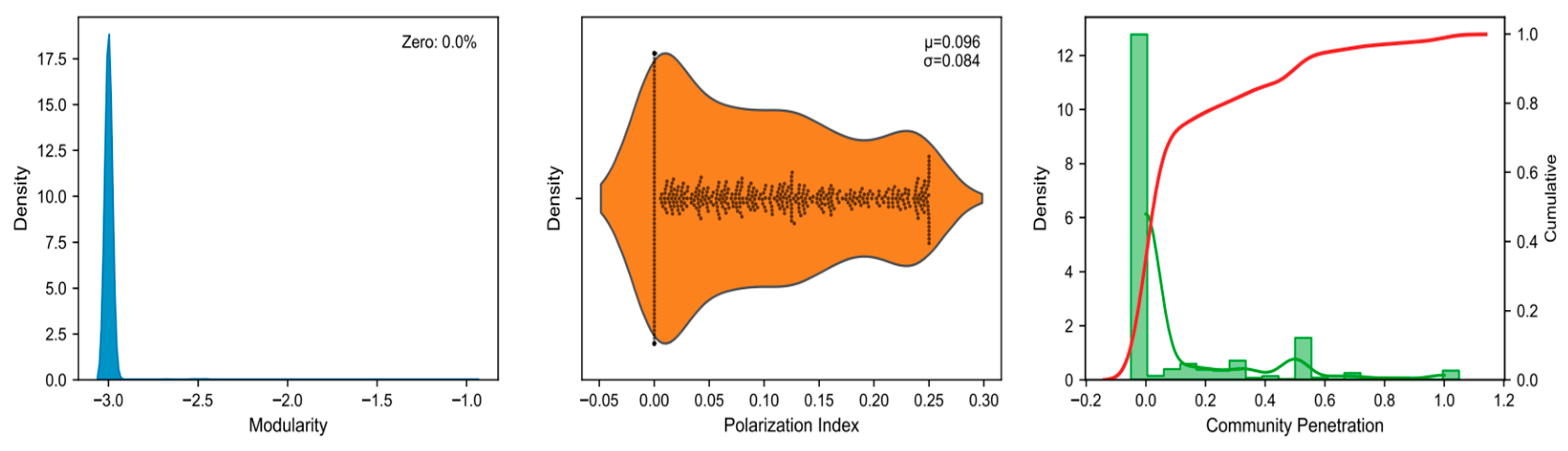

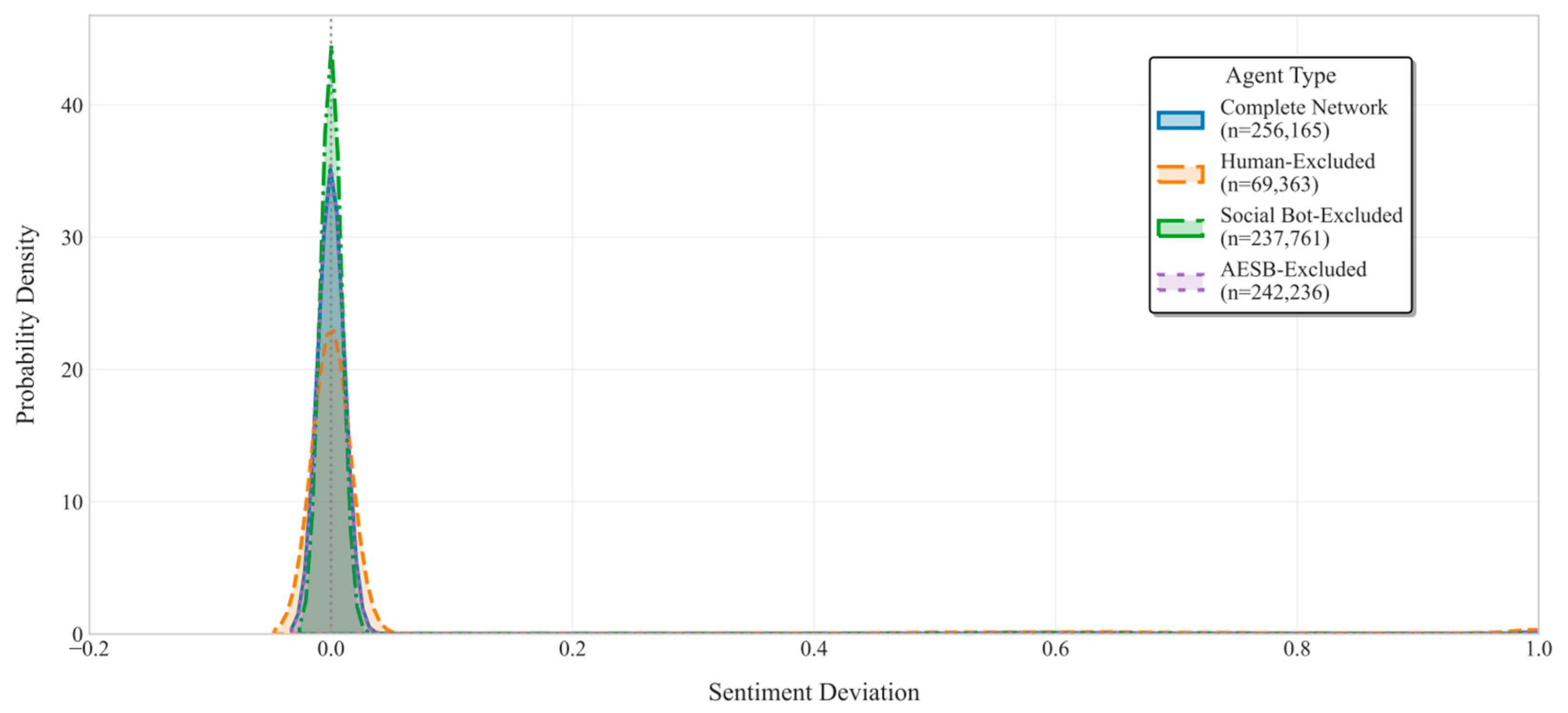

- At the node level, Figure 6 presents the distributions of, and relationships among, three metrics: degree centrality, sentiment deviation, and topic focus. The horizontal axis shows metric values, and the vertical axis shows frequency. First, the strongly right-skewed distribution of degree centrality indicates highly unequal network connectivity: the vast majority of nodes occupy peripheral positions with minimal influence, whereas a small number of nodes have exceptionally high connectivity and serve as core hubs within the network. These hubs are likely key nodes that guide information flow and amplify specific content, so identifying and monitoring them is crucial for understanding and intercepting malicious opinion manipulation. Second, most nodes (95.8%) have sentiment deviation values near zero, suggesting that their content tends to be emotionally neutral, while a minority exhibit strong positive or negative deviations. Although few in number, these nodes may play a critical role by setting the emotional tone of public discussions through highly polarized content, thereby intensifying conflicts and steering the emotional trajectory of public opinion. Finally, the distribution of topic focus is also right-skewed. Most nodes discuss a diverse range of topics, which may reflect a camouflage strategy or dispersed guiding intentions. In contrast, nodes with high topic focus consistently concentrate on a limited set of topics; by producing dense, repetitive content that steers network discussion toward specific issues, they may shape public discourse effectively and enhance the coherence and impact of their opinion-guiding actions.

- (2)

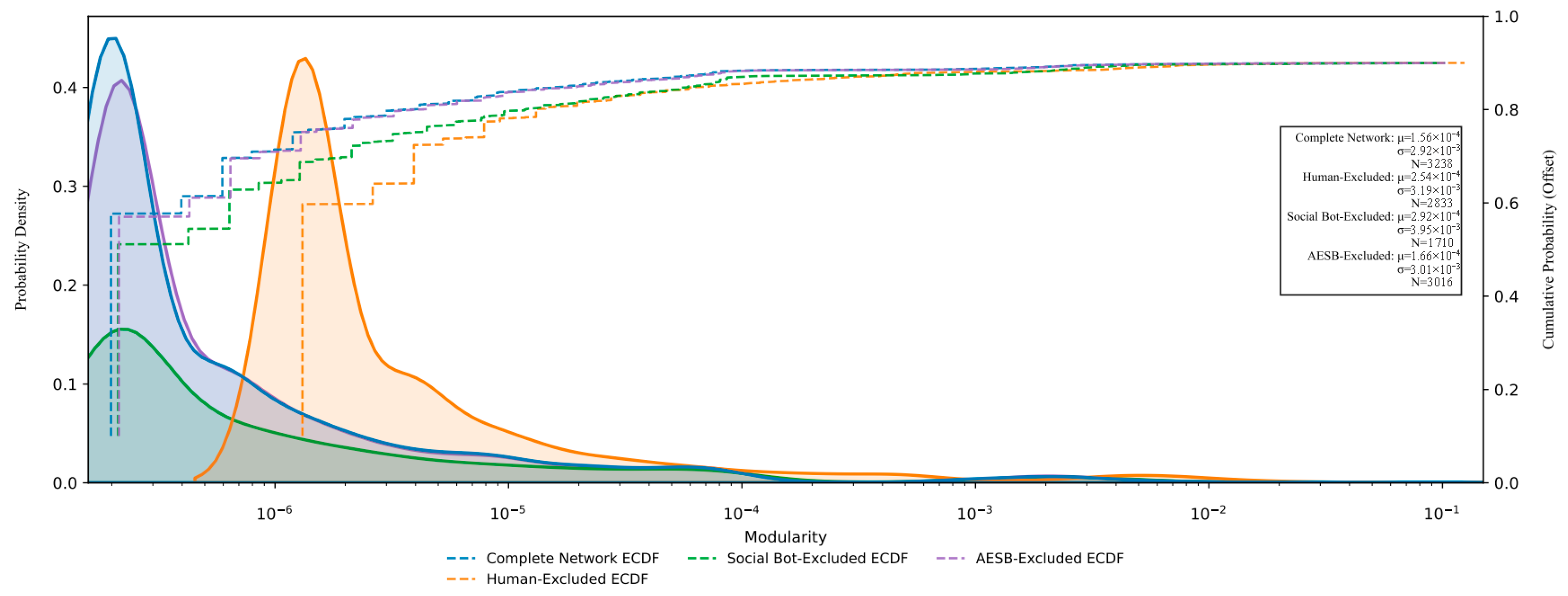

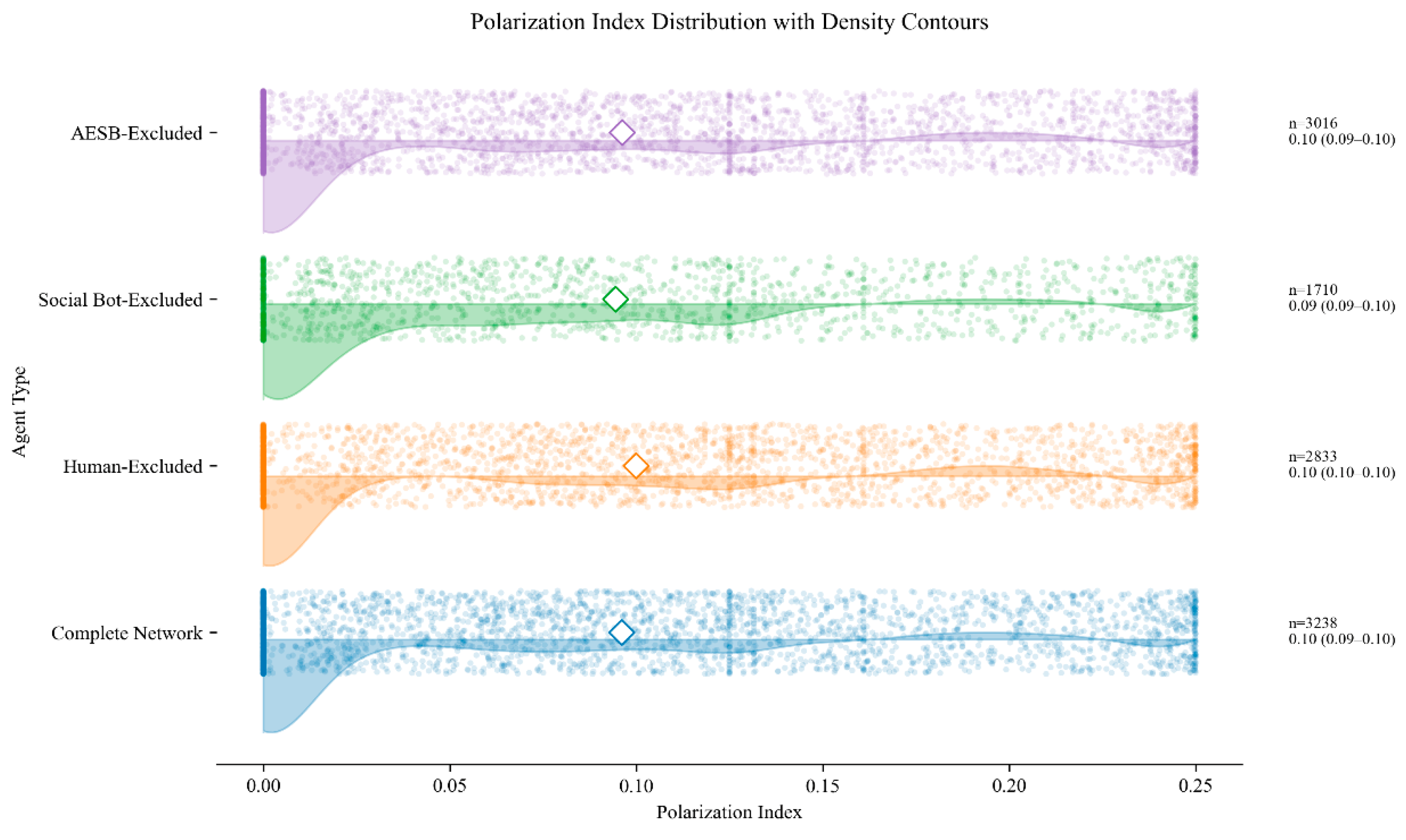

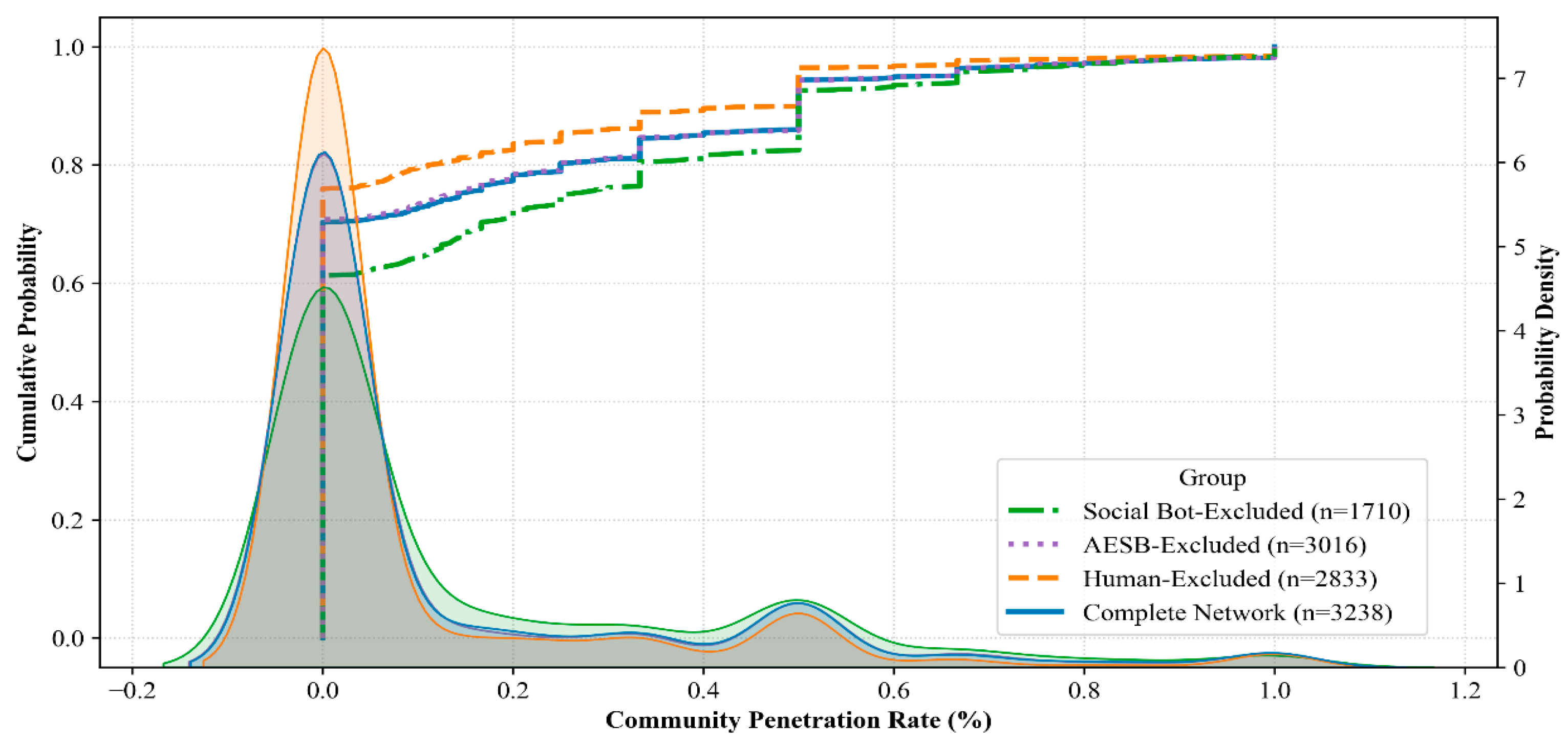

- At the community level, Figure 7 presents the distributions of, and relationships among, modularity, the community polarization index, and the cross-community penetration rate. First, the modularity distribution indicates that most communities have relatively loose internal connections and lack tightly cohesive structures, while a few communities display extremely high modularity values. Because modularity compares the observed within-community edges with those expected under random wiring, this means that internal connectivity in these high-modularity communities far exceeds what random connection would produce. Such tightly knit communities form the core layers of the network: their dense internal structures facilitate highly unified group opinions, accelerate internal information propagation, and reinforce group identity, making them strategic bases for coordinated manipulative actions. Second, the distribution of the community polarization index shows that most communities maintain relatively balanced opinion distributions, whereas a subset is highly polarized. These polarized communities may continuously reinforce their members’ preexisting viewpoints through algorithmic recommendations or selective attention, ultimately driving group attitudes toward extremes. Finally, the generally low cross-community penetration rates indicate that most communities remain relatively isolated, with limited ability to spread internal information outward. A minority of communities, however, show high cross-community penetration. These communities act as crucial channels between groups, enabling agendas or polarized viewpoints established within the core layers to reach other communities and real user groups, thereby amplifying influence over public opinion on a broader scale.

- (3)

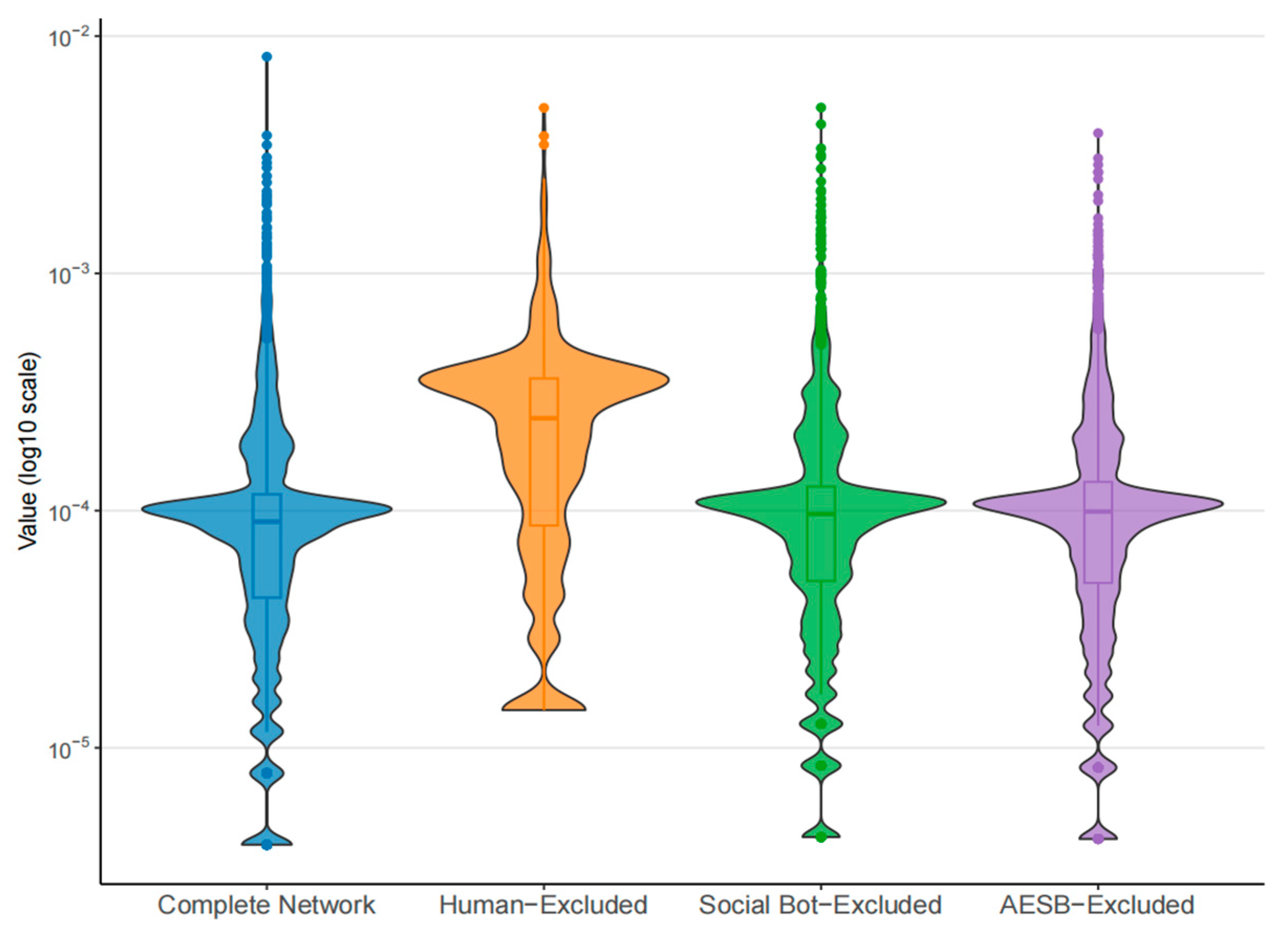

- At the network level, the global network is analyzed quantitatively using three indicators: spread speed, diffusion breadth, and diffusion depth. The results show that information spreads with high speed, low breadth, and high depth. Specifically, the average spread speed reaches 25.51 posts per hour, reflecting efficient public opinion diffusion on social media. However, diffusion breadth is low: the average number of comments per post amounts to only a small fraction of the total number of nodes. This points to strong centralization, with most comments and attention concentrated on a few popular posts, while many ordinary posts have limited influence and attract little discussion. Diffusion depth, by contrast, is relatively high, with an average comment time lag of 34.4 h, indicating sustained penetration of information within specific groups. Overall, online opinion diffusion is marked by concentrated spread and lasting influence: highly active nodes and specific communities amplify opinion guidance, while structures with strong cross-community penetration serve as key channels that break information silos and promote diverse discussions.
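The three network-level quantities discussed above (spread speed as items per hour, breadth as mean comments per post relative to the number of nodes, depth as the mean post-to-comment time lag) can be sketched as follows. The data layout, the observation window (first post to last comment), and counting nodes as post authors plus commenters are assumptions for illustration, the function name is hypothetical, and the toy data do not reproduce the reported 25.51 per hour or 34.4 h.

```python
from datetime import datetime
from statistics import mean


def network_indicators(posts, comments):
    """Spread speed, diffusion breadth and diffusion depth under assumed operationalizations.

    posts: post_id -> (author, posted_at); comments: list of (post_id, commenter, commented_at).
    """
    per_post = {pid: 0 for pid in posts}  # comment count per post, zero for uncommented posts
    lags_h = []
    nodes = {author for author, _ in posts.values()}
    for pid, user, ts in comments:
        per_post[pid] += 1
        nodes.add(user)
        lags_h.append((ts - posts[pid][1]).total_seconds() / 3600)
    # Observation window: first post to last comment, in hours.
    start = min(t for _, t in posts.values())
    end = max(ts for _, _, ts in comments)
    window_h = (end - start).total_seconds() / 3600
    return {
        "spread_speed_per_h": len(comments) / window_h,
        "diffusion_breadth": mean(list(per_post.values())) / len(nodes),
        "diffusion_depth_h": mean(lags_h),
    }


if __name__ == "__main__":
    posts = {"p1": ("a1", datetime(2024, 1, 1, 0)), "p2": ("a2", datetime(2024, 1, 1, 12))}
    comments = [
        ("p1", "u1", datetime(2024, 1, 1, 1)),
        ("p1", "u2", datetime(2024, 1, 1, 3)),
        ("p2", "u3", datetime(2024, 1, 1, 13)),
        ("p2", "u1", datetime(2024, 1, 1, 16)),
    ]
    print(network_indicators(posts, comments))
```

On this toy input, 4 comments over a 16 h window give 0.25 per hour, 2 comments per post over 5 nodes give a breadth of 0.4, and the lags of 1, 3, 1, and 4 h give a depth of 2.25 h.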

5. Results

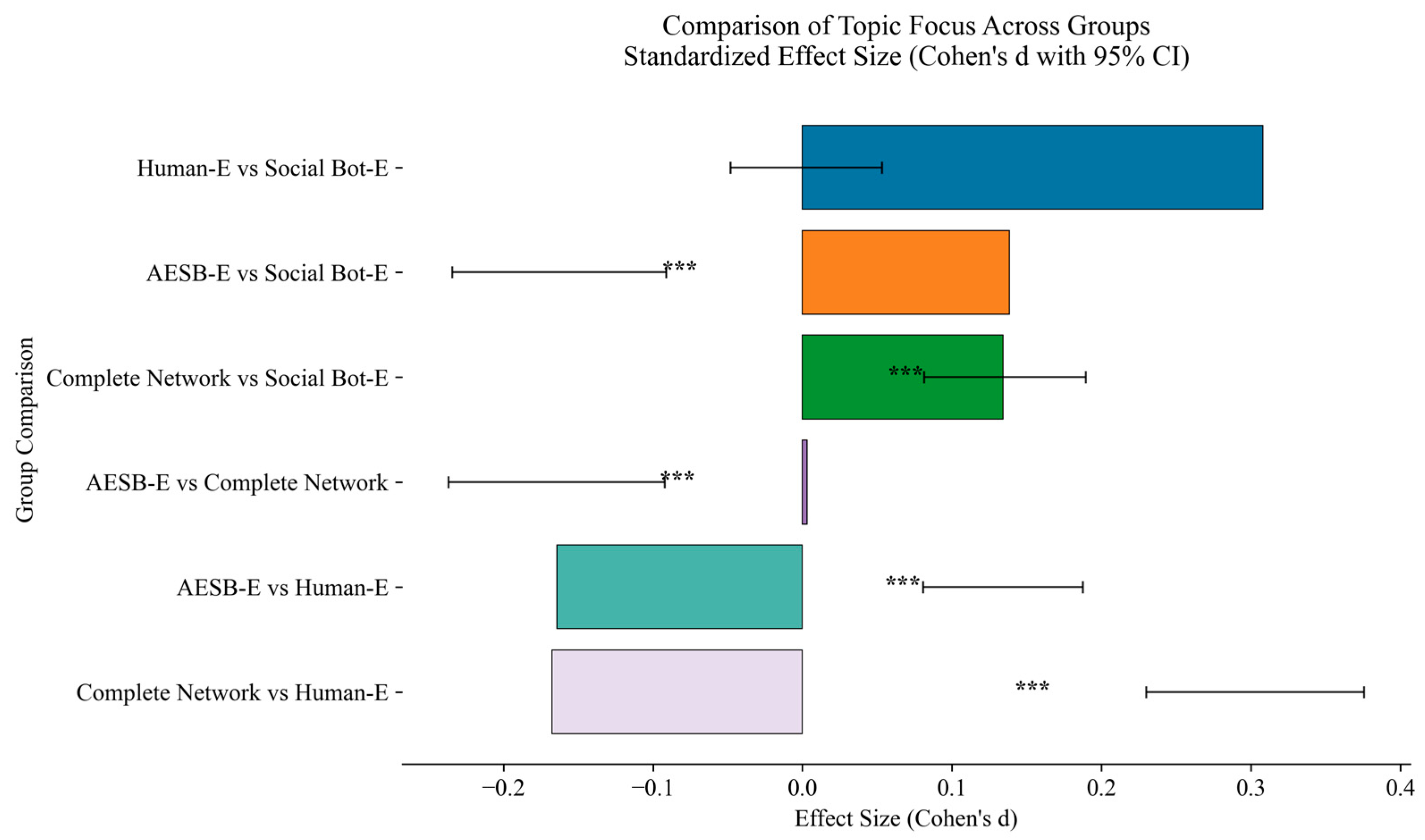

5.1. Node-Level Structural Influence

5.2. Community-Level Structural Influence

5.3. Network-Level Structural Influence

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Skoric, M.M.; Liu, J.; Jaidka, K. Electoral and public opinion forecasts with social media data: A meta-analysis. Information 2020, 11, 187.

- Chen, C.; Wu, K.; Srinivasan, V.; Zhang, X. Battling the internet water army: Detection of hidden paid posters. In Proceedings of the IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), Niagara, ON, Canada, 25–28 August 2013.

- Wang, K.; Xiao, Y.; Xiao, Z. Detection of internet water army in social network. In Proceedings of the 2014 International Conference on Computer, Communications and Information Technology (CCIT 2014), Beijing, China, 15–17 October 2014.

- Chen, L.; Chen, J.; Xia, C. Social network behavior and public opinion manipulation. J. Inf. Secur. Appl. 2022, 64, 103060.

- Hajli, N.; Saeed, U.; Tajvidi, M.; Shirazi, F. Social bots and the spread of disinformation in social media: The challenges of artificial intelligence. Br. J. Manag. 2022, 33, 1238–1253.

- Lopez-Joya, S.; Diaz-Garcia, J.A.; Ruiz, M.D.; Martin-Bautista, M.J. Dissecting a social bot powered by generative AI: Anatomy, new trends and challenges. Soc. Netw. Anal. Min. 2025, 15, 7.

- Xie, Z. Who is spreading AI-generated health rumors? A study on the association between AIGC interaction types and the willingness to share health rumors. Soc. Media Soc. 2025, 11, 20563051251323391.

- Ferrara, E. Social bot detection in the age of ChatGPT: Challenges and opportunities. First Monday 2023, 28, 13185.

- Zeng, K.; Wang, X.; Zhang, Q.; Zhang, X.; Wang, F.Y. Behavior modeling of internet water army in online forums. In Proceedings of the 19th IFAC World Congress, Cape Town, South Africa, 24–29 August 2014.

- Zhao, B.; Ren, W.; Zhu, Y.; Zhang, H. Manufacturing conflict or advocating peace? A study of social bots agenda building in the Twitter discussion of the Russia-Ukraine war. J. Inf. Technol. Polit. 2024, 21, 176–194.

- Al-Rawi, A.; Shukla, V. Bots as active news promoters: A digital analysis of COVID-19 tweets. Information 2020, 11, 461.

- Gong, X.; Cai, M.; Meng, X.; Yang, M.; Wang, T. How Social Bots Affect Government-Public Interactions in Emerging Science and Technology Communication. Int. J. Hum.-Comput. Interact. 2025, 41, 1–16.

- Ferrara, E.; Varol, O.; Davis, C.; Menczer, F.; Flammini, A. The rise of social bots. Commun. ACM 2016, 59, 96–104.

- Ross, B.; Pilz, L.; Cabrera, B.; Brachten, F.; Neubaum, G.; Stieglitz, S. Are social bots a real threat? An agent-based model of the spiral of silence to analyse the impact of manipulative actors in social networks. Eur. J. Inf. Syst. 2019, 28, 394–412.

- Luo, H.; Meng, X.; Zhao, Y.; Cai, M. Rise of social bots: The impact of social bots on public opinion dynamics in public health emergencies from an information ecology perspective. Telemat. Inform. 2023, 85, 102051.

- Cheng, C.; Luo, Y.; Yu, C. Dynamic mechanism of social bots interfering with public opinion in network. Physica A 2020, 551, 124163.

- Cai, M.; Luo, H.; Meng, X.; Cui, Y.; Wang, W. Network distribution and sentiment interaction: Information diffusion mechanisms between social bots and human users on social media. Inf. Process. Manag. 2023, 60, 103197.

- Stella, M.; Ferrara, E.; De Domenico, M. Bots increase exposure to negative and inflammatory content in online social systems. Proc. Natl. Acad. Sci. USA 2018, 115, 12435–12440.

- Orabi, M.; Mouheb, D.; Al Aghbari, Z.; Kamel, I. Detection of bots in social media: A systematic review. Inf. Process. Manag. 2020, 57, 102250.

- Feng, S.; Wan, H.; Wang, N.; Tan, Z.; Luo, M.; Tsvetkov, Y. What does the bot say? Opportunities and risks of large language models in social media bot detection. arXiv 2024, arXiv:2402.00371.

- Gurajala, S.; White, J.S.; Hudson, B.; Voter, B.R.; Matthews, J.N. Profile characteristics of fake Twitter accounts. Big Data Soc. 2016, 3, 2053951716674236.

- Cai, M.; Luo, H.; Meng, X.; Cui, Y.; Wang, W. Differences in behavioral characteristics and diffusion mechanisms: A comparative analysis based on social bots and human users. Front. Phys. 2022, 10, 875574.

- Pozzana, I.; Ferrara, E. Measuring bot and human behavioral dynamics. Front. Phys. 2020, 8, 125.

- Subrahmanian, V.S.; Azaria, A.; Durst, S.; Kagan, V.; Galstyan, A.; Lerman, K.; Zhu, L.; Ferrara, E.; Flammini, A.; Menczer, F. The DARPA Twitter Bot Challenge. Computer 2016, 49, 38–46.

- Kudugunta, S.; Ferrara, E. Deep neural networks for bot detection. Inf. Sci. 2018, 467, 312–322.

- Chu, Z.; Gianvecchio, S.; Wang, H.; Jajodia, S. Detecting automation of Twitter accounts: Are you a human, bot, or cyborg? IEEE Trans. Dependable Secur. Comput. 2012, 9, 811–824.

- Mazza, M.; Avvenuti, M.; Cresci, S.; Tesconi, M. Investigating the difference between trolls, social bots, and humans on Twitter. Comput. Commun. 2022, 196, 23–36.

- Gilani, Z.; Farahbakhsh, R.; Tyson, G.; Wang, L.; Crowcroft, J. An in-depth characterisation of bots and humans on Twitter. arXiv 2017, arXiv:1704.01508.

- Chavoshi, N.; Hamooni, H.; Mueen, A. DeBot: Twitter bot detection via warped correlation. In Proceedings of the IEEE International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016.

- Varol, O.; Ferrara, E.; Davis, C.; Menczer, F.; Flammini, A. Online human-bot interactions: Detection, estimation, and characterization. In Proceedings of the International AAAI Conference on Web and Social Media (ICWSM), Montréal, QC, Canada, 15–18 May 2017.

- Dickerson, J.P.; Kagan, V.; Subrahmanian, V.S. Using Sentiment to Detect Bots on Twitter: Are Humans More Opinionated Than Bots? In Proceedings of the IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Beijing, China, 17–20 August 2014.

- Ilias, L.; Kazelidis, I.M.; Askounis, D. Multimodal detection of bots on X (Twitter) using transformers. IEEE Trans. Inf. Forensics Secur. 2024, 19, 7320–7334.

- Benjamin, V.; Raghu, T.S. Augmenting social bot detection with crowd-generated labels. Inf. Syst. Res. 2023, 34, 487–507.

- Wasserman, S.; Faust, K. Social Network Analysis: Methods and Applications; Cambridge University Press: Cambridge, UK, 1994.

- Gardasevic, S.; Jaiswal, A.; Lamba, M.; Funakoshi, J.; Chu, K.-H.; Shah, A.; Sun, Y.; Pokhrel, P.; Washington, P. Public health using social network analysis during the COVID-19 era: A systematic review. Information 2024, 15, 690.

- Peng, Y.; Zhao, Y.; Hu, J. On the role of community structure in evolution of opinion formation: A new bounded confidence opinion dynamics. Inf. Sci. 2023, 621, 672–690.

- Xiong, M.; Wang, Y.; Cheng, Z. Research on modeling and simulation of information cocoon based on opinion dynamics. In Proceedings of the 2021 9th International Conference on Information Technology: IoT and Smart City, Guangzhou, China, 22–25 December 2021.

- Zhang, Z.-K.; Liu, C.; Zhan, X.-X.; Lu, X.; Zhang, C.-X.; Zhang, Y.-C. Dynamics of information diffusion and its applications on complex networks. Phys. Rep. 2016, 651, 1–34.

- Zhang, L.; Peng, T.-Q. Breadth, depth, and speed: Diffusion of advertising messages on microblogging sites. Internet Res. 2015, 25, 453–470.

- Zhang, J.; Luo, Y. Degree Centrality, Betweenness Centrality, and Closeness Centrality in Social Network. In Proceedings of the 2nd International Conference on Modelling, Simulation and Applied Mathematics, Bangkok, Thailand, 26–27 February 2017.

- Del Vicario, M.; Zollo, F.; Caldarelli, G.; Scala, A.; Quattrociocchi, W. Mapping social dynamics on Facebook: The Brexit debate. Soc. Netw. 2017, 50, 6–16.

- Zannettou, S.; Sirivianos, M.; Blackburn, J.; Kourtellis, N. The web of false information: Rumors, fake news, hoaxes, clickbait, and various other shenanigans. J. Data Inf. Qual. 2019, 11, 1–37.

- Matsubara, Y.; Sakurai, Y.; Prakash, B.A.; Li, L.; Faloutsos, C. Rise and Fall Patterns of Information Diffusion: Model and Implications. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012.

- Newman, M.E. Modularity and community structure in networks. Proc. Natl. Acad. Sci. USA 2006, 103, 8577–8582.

- Conover, M.; Ratkiewicz, J.; Francisco, M.; Gonçalves, B.; Menczer, F.; Flammini, A. Political Polarization on Twitter. In Proceedings of the International AAAI Conference on Web and Social Media, Barcelona, Spain, 17–21 July 2011.

- Centola, D. The spread of behavior in an online social network experiment. Science 2010, 329, 1194–1197.

- Liben-Nowell, D.; Kleinberg, J. Tracing information flow on a global scale using Internet chain-letter data. Proc. Natl. Acad. Sci. USA 2008, 105, 4633–4638.

- Glik, D.; Prelip, M.; Myerson, A.; Eilers, K. Fetal alcohol syndrome prevention using community-based narrowcasting campaigns. Health Promot. Pract. 2008, 9, 93.

- Del Vicario, M.; Bessi, A.; Zollo, F.; Petroni, F.; Scala, A.; Caldarelli, G.; Stanley, H.E.; Quattrociocchi, W. The spreading of misinformation online. Proc. Natl. Acad. Sci. USA 2016, 113, 554–559.

- Lobel, I.; Sadler, E. Information diffusion in networks through social learning. Theor. Econ. 2015, 10, 807–851.

- Sufi, F. Social media analytics on Russia–Ukraine cyber war with natural language processing: Perspectives and challenges. Information 2023, 14, 485.

- Marigliano, R.; Ng, L.H.X.; Carley, K.M. Analyzing digital propaganda and conflict rhetoric: A study on Russia’s bot-driven campaigns and counter-narratives during the Ukraine crisis. Soc. Netw. Anal. Min. 2024, 14, 170.

- Chen, H.; Wu, L.; Chen, J.; Lu, W.; Ding, J. A comparative study of automated legal text classification using random forests and deep learning. Inf. Process. Manag. 2022, 59, 102798.

- Borgatti, S.P.; Mehra, A.; Brass, D.J.; Labianca, G. Network analysis in the social sciences. Science 2009, 323, 892–895.

| Attribute | Dimension | Indicator | Measurement Method | Reference |
|---|---|---|---|---|
| Static Attribute | Account Profile | Information Completeness | Indicates whether the account profile is fully completed. Judged by the “profile description” field: 1 if non-empty, 0 otherwise. | [15] |
| | | Nickname Autonomy | Ratio of non-standard characters in the nickname to total characters, computed by analyzing composition, special characters, length, and AI pattern conformity (0–1). | [15] |
| | | Avatar Consistency | Assesses the frequency of avatar changes across posts, represented by the ratio of unique avatars to total posts. | [24] |
| | Influence | Verification Status | Whether the account is officially verified by the platform. Judged by the “blogger verification” field: 1 if non-empty, 0 otherwise. | [15,25] |
| | | Follower-Following Ratio | Ratio of followers to followings, computed by dividing the follower count by the following count. | [13,26] |
| | | Interaction Abnormality | Whether repost, comment, and like frequencies are abnormal. Abnormality thresholds are determined via the median absolute deviation (MAD). | [24] |
| Dynamic Behavior | Behavior Pattern | Posting Frequency | Average daily posts, calculated as total posts divided by active days. | [26] |
| | | Post Editing Degree | Degree of post modification: ratio of edited posts to total posts. | [27,28] |
| | | Activity Abnormality | Degree of abnormal posting activity (e.g., late-night posting): ratio of late-night posts to total posts. | [23,29] |
| | Behavior Quality | Content Length | Average post length, calculated as average characters per post. | [15,24,30] |
| | | Topic Diversity | Range and diversity of topics, calculated as the number of unique topic tags in posts. | [30,31] |
| | | Multimodality | Richness of media forms (e.g., images, videos): ratio of multimodal posts to total posts. | [32,33] |
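A few of the behavioral features in the table above can be computed directly from account metadata and post timestamps. The sketch below is a hypothetical illustration, not the study's feature pipeline: the 00:00–06:00 late-night window, the max(followings, 1) guard against division by zero, and the robust z-score cutoff k = 3 (with the usual 1.4826 scaling of MAD) are all assumptions.

```python
from datetime import datetime
from statistics import median

# Hypothetical feature functions loosely following the indicator table; thresholds are assumptions.


def follower_following_ratio(followers, followings):
    """Followers divided by followings; max(followings, 1) avoids division by zero (assumption)."""
    return followers / max(followings, 1)


def posting_frequency(post_times):
    """Average posts per active day (a day with at least one post)."""
    active_days = {t.date() for t in post_times}
    return len(post_times) / len(active_days) if active_days else 0.0


def late_night_ratio(post_times, start_hour=0, end_hour=6):
    """Share of posts published in [start_hour, end_hour); the 00:00-06:00 window is assumed."""
    if not post_times:
        return 0.0
    return sum(start_hour <= t.hour < end_hour for t in post_times) / len(post_times)


def mad_outliers(values, k=3.0):
    """Flag values whose robust z-score |x - median| / (1.4826 * MAD) exceeds k."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Degenerate case: more than half the values equal the median; flag any deviation.
        return [v != med for v in values]
    return [abs(v - med) / (1.4826 * mad) > k for v in values]


if __name__ == "__main__":
    times = [datetime(2024, 3, 1, 1, 30), datetime(2024, 3, 1, 14, 0), datetime(2024, 3, 2, 23, 15)]
    print(posting_frequency(times), late_night_ratio(times))
    print(mad_outliers([10, 12, 11, 13, 500]))  # e.g., like counts across posts
```

MAD is preferred over the standard deviation for thresholds like these because a single extreme count (such as 500 likes above) barely moves the median, whereas it would inflate a mean-based threshold.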

| Model | Accuracy | Precision | Recall | F1 Score | AUC | Brier Score |
|---|---|---|---|---|---|---|
| KNN | 0.912 | 0.912 | 0.912 | 0.911 | 0.975 | 0.058 |
| SVM | 0.941 | 0.947 | 0.941 | 0.940 | 0.928 | 0.066 |
| Naive Bayes | 0.941 | 0.947 | 0.941 | 0.940 | 0.952 | 0.059 |
| Decision Tree | 0.926 | 0.927 | 0.926 | 0.926 | 0.923 | 0.074 |
| XGBoost | 0.956 | 0.959 | 0.956 | 0.955 | 0.968 | 0.047 |
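The metrics in the model comparison table can be computed from predictions with a few lines of code. The sketch below is a plain-Python illustration on toy labels (1 = bot, 0 = human), not the authors' evaluation code, and the function names are hypothetical. In every row of the table, recall equals accuracy, which is exactly what support-weighted averaging of per-class recall always yields; that pattern suggests, but does not confirm, that weighted averages were reported, so the sketch uses that averaging.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_prf(y_true, y_pred):
    """Support-weighted precision, recall and F1 over all classes."""
    support = Counter(y_true)
    n = len(y_true)
    precision = recall = f1 = 0.0
    for c, n_c in support.items():
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        predicted = sum(p == c for p in y_pred)
        prec = tp / predicted if predicted else 0.0
        rec = tp / n_c
        f = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        w = n_c / n  # class weight = share of true labels in class c
        precision += w * prec
        recall += w * rec
        f1 += w * f
    return precision, recall, f1


def roc_auc(y_true, scores):
    """AUC as the probability that a random bot outscores a random human (ties count 1/2)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def brier_score(y_true, probs):
    """Mean squared difference between predicted bot probability and the 0/1 label."""
    return sum((p - y) ** 2 for y, p in zip(y_true, probs)) / len(y_true)


if __name__ == "__main__":
    y = [1, 1, 0, 0]             # toy labels: 1 = bot, 0 = human
    prob = [0.9, 0.4, 0.6, 0.1]  # toy predicted bot probabilities
    pred = [int(p >= 0.5) for p in prob]
    print(accuracy(y, pred), weighted_prf(y, pred), roc_auc(y, prob), brier_score(y, prob))
```

The pairwise AUC is quadratic in sample size and is meant only to make the definition explicit; AUC and the Brier score use the probabilities directly, which is why they can rank models differently from the threshold-based metrics.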

| Feature | AIGC Mean | UGC Mean | AIGC Std | UGC Std | p-Value |
|---|---|---|---|---|---|
| Type-Token Ratio | 0.686 | 0.714 | 0.172 | 0.144 | <0.001 |
| Sentiment Score | 0.786 | 0.766 | 0.359 | 0.372 | 0.359 |
| Average Characters | 553 | 295 | 741 | 475 | <0.001 |
| Average Words | 312 | 166 | 417 | 271 | <0.001 |
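The comparison in Table 4 pairs a lexical-diversity measure with independent samples t-tests. A minimal plain-Python sketch follows, with hypothetical function names. It assumes regex word tokenization (Chinese text would need a word segmenter first), uses Welch's unequal-variance t statistic because the two groups' standard deviations differ noticeably, and approximates the two-sided p-value with the normal distribution, which is reasonable only for large samples. Whether variances were pooled is not stated; for exact p-values, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs Welch's test.

```python
import re
from math import erfc, sqrt
from statistics import mean, variance


def type_token_ratio(text):
    """Unique tokens / total tokens, using a simple regex tokenizer (assumption)."""
    tokens = re.findall(r"\w+", text.lower())
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def welch_t_test(a, b):
    """Welch's t statistic, Welch-Satterthwaite df, and a normal-approximation two-sided p-value."""
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    p_approx = erfc(abs(t) / sqrt(2))  # equals 2 * (1 - Phi(|t|)); adequate only for large df
    return t, df, p_approx


if __name__ == "__main__":
    print(type_token_ratio("The cat saw the dog"))
    print(welch_t_test([1, 2, 3], [4, 5, 6]))
```

With thousands of texts per group, the normal approximation and the exact t-distribution p-value agree closely; for small toy samples like the one above, the exact test would give a larger p-value.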

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, J.; Zhang, D.; Zhu, J.; Wang, F.; Bi, C. Speaking like Humans, Spreading like Machines: A Study on Opinion Manipulation by Artificial-Intelligence-Generated Content Driving the Internet Water Army on Social Media. Information 2025, 16, 850. https://doi.org/10.3390/info16100850
