Boosting LiDAR Point Cloud Object Detection via Global Feature Fusion

Abstract

1. Introduction

2. Materials and Methods

2.1. Point Cloud-Based Target Detection Methods

2.2. State Space Models

2.3. Network Architecture

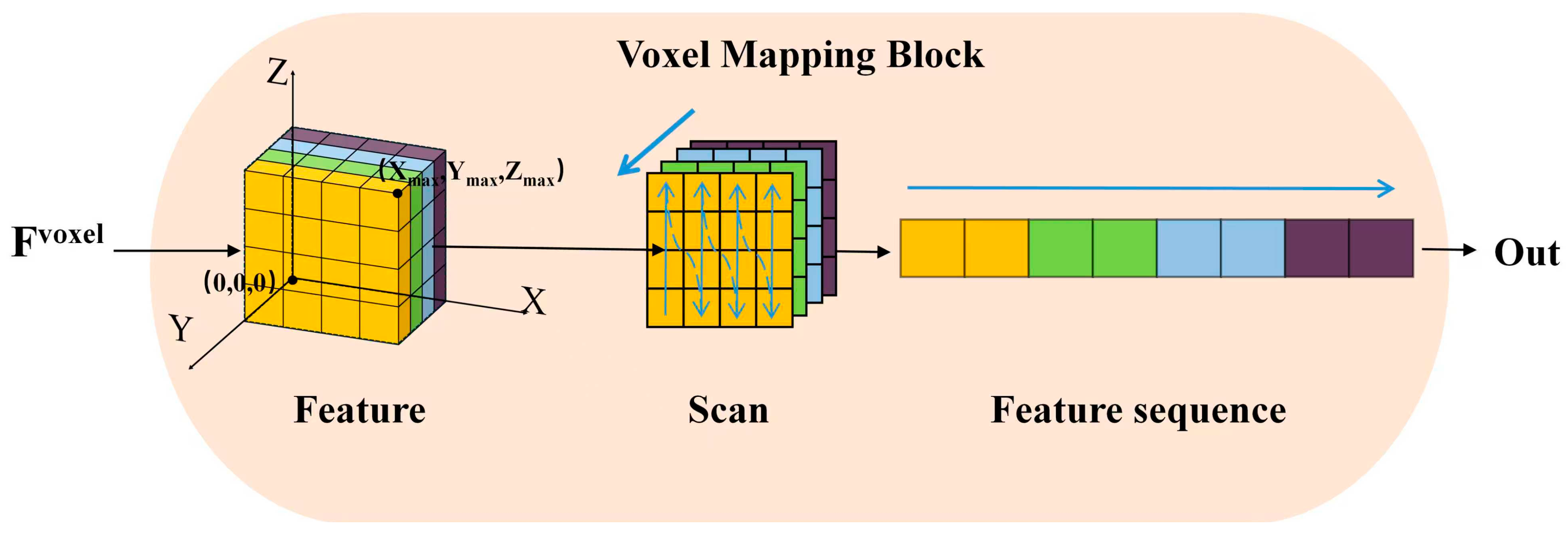

2.4. Point Cloud Feature Extract Block

| Algorithm 1: Bidirectional Voxel Mapping Block (VMB) |
|---|
| Input: voxel feature tensor F_voxel ∈ ℝ^(X_max × Y_max × Z_max × C) |
| Output: serialized bidirectional feature sequence F_out |
| Initialize empty sequences Sq+ ← [], Sq− ← [] |
| # Forward traversal (Sq+) |
| for x = 0 to X_max−1 do |
| for y = 0 to Y_max−1 do |
| for z = 0 to Z_max−1 do |
| Sq+.append(F_voxel[x, y, z, :]) |
| end for |
| end for |
| end for |
| # Reverse traversal (Sq−) |
| for x = X_max−1 downto 0 do |
| for y = Y_max−1 downto 0 do |
| for z = Z_max−1 downto 0 do |
| Sq−.append(F_voxel[x, y, z, :]) |
| end for |
| end for |
| end for |
| # Feature extraction using two SSMs |
| F+ ← SSM(Sq+) |
| F− ← SSM(Sq−) |
| # Fusion and dimensionality reduction |
| F_fused ← Fuse(F+, F−) |
| F_out ← Reduce(F_fused, target_dim = C) |
| return F_out |
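The traversal in Algorithm 1 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `toy_ssm` is a hypothetical stand-in for the SSM (a simple decaying recurrence), the reverse-direction output is flipped back into forward order before fusion, and `Fuse` plus `Reduce` are replaced by an element-wise mean so that the output keeps C channels. The function names are illustrative only.

```python
from itertools import product


def serialize_bidirectional(f_voxel):
    """Flatten a dense voxel grid into forward and reverse sequences.

    f_voxel[x][y][z] is a length-C list of channel features.
    """
    x_max, y_max, z_max = len(f_voxel), len(f_voxel[0]), len(f_voxel[0][0])
    # product() varies the last index fastest, matching the nested
    # x -> y -> z loops of the forward traversal.
    sq_plus = [f_voxel[x][y][z]
               for x, y, z in product(range(x_max), range(y_max), range(z_max))]
    # Counting all three axes down visits the same voxels in exactly the
    # opposite order, so the reverse traversal is the reversed list.
    sq_minus = sq_plus[::-1]
    return sq_plus, sq_minus


def toy_ssm(seq, decay=0.5):
    """Hypothetical stand-in for an SSM: h_t = decay * h_{t-1} + x_t."""
    state = [0.0] * len(seq[0])
    outputs = []
    for feat in seq:
        state = [decay * s + v for s, v in zip(state, feat)]
        outputs.append(state)
    return outputs


def voxel_mapping_block(f_voxel, decay=0.5):
    sq_plus, sq_minus = serialize_bidirectional(f_voxel)
    f_plus = toy_ssm(sq_plus, decay)
    # Assumption: flip the reverse-direction output back so that row i of
    # both feature lists refers to the same voxel before fusion.
    f_minus = toy_ssm(sq_minus, decay)[::-1]
    # Assumption: Fuse + Reduce is an element-wise mean, which keeps C channels.
    return [[0.5 * (a + b) for a, b in zip(p, m)]
            for p, m in zip(f_plus, f_minus)]


# Tiny 2 x 2 x 2 grid with C = 1; each feature is the voxel's flat index.
grid = [[[[float(4 * x + 2 * y + z)] for z in range(2)]
         for y in range(2)] for x in range(2)]
f_out = voxel_mapping_block(grid)
print(len(f_out), len(f_out[0]))  # 8 1
```

One consequence worth noting: because the reverse traversal counts all three axes down, Sq− is simply Sq+ reversed, so a dense grid only needs to be serialized once.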

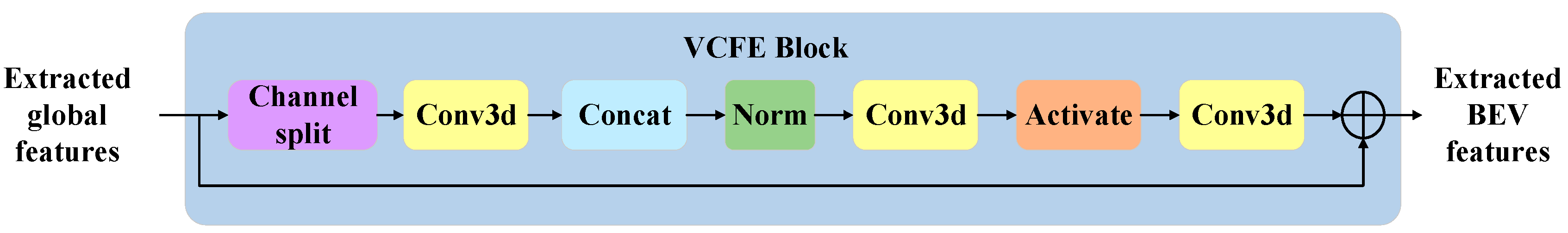

2.5. Voxel Channel Feature Extract

3. Results

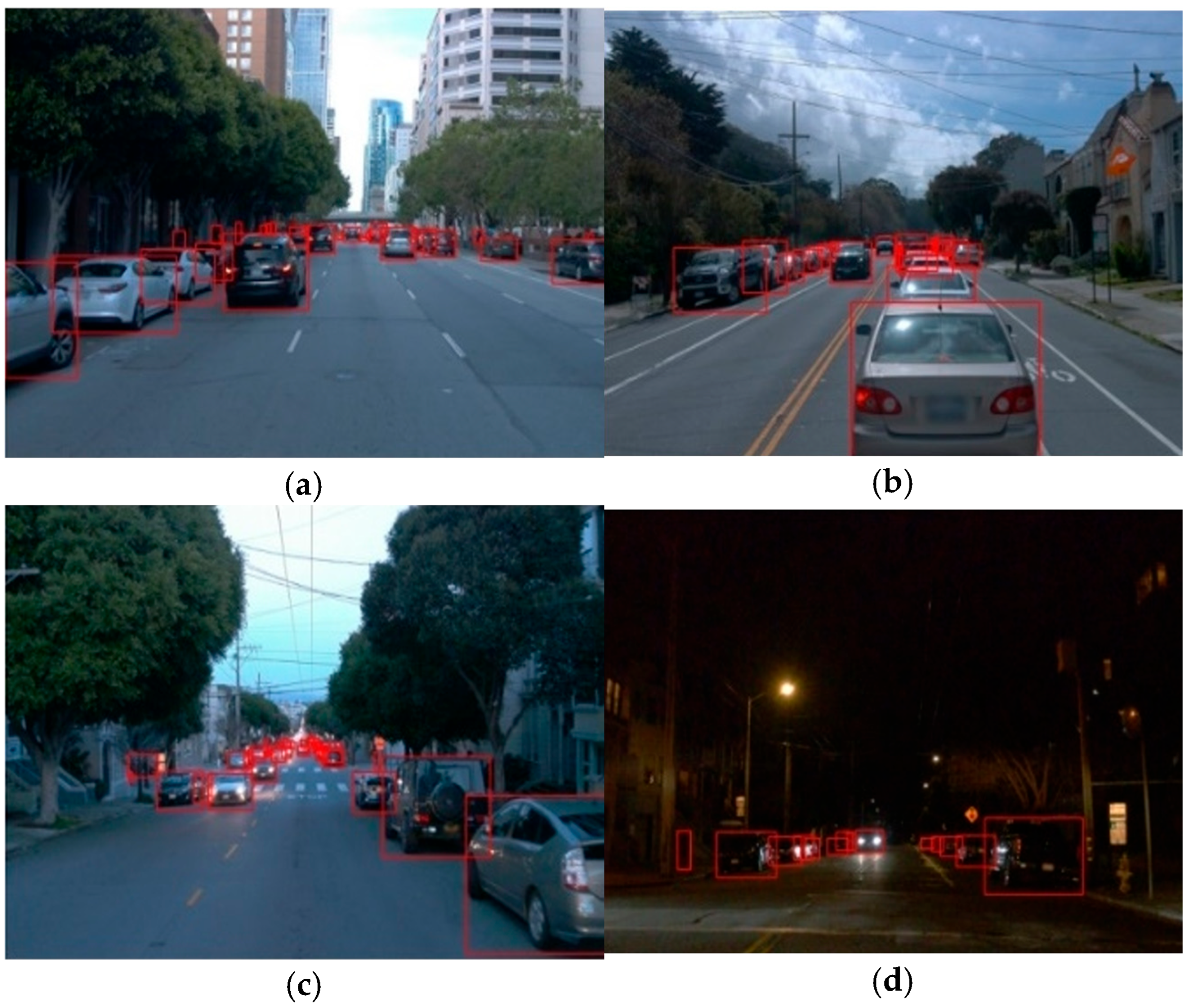

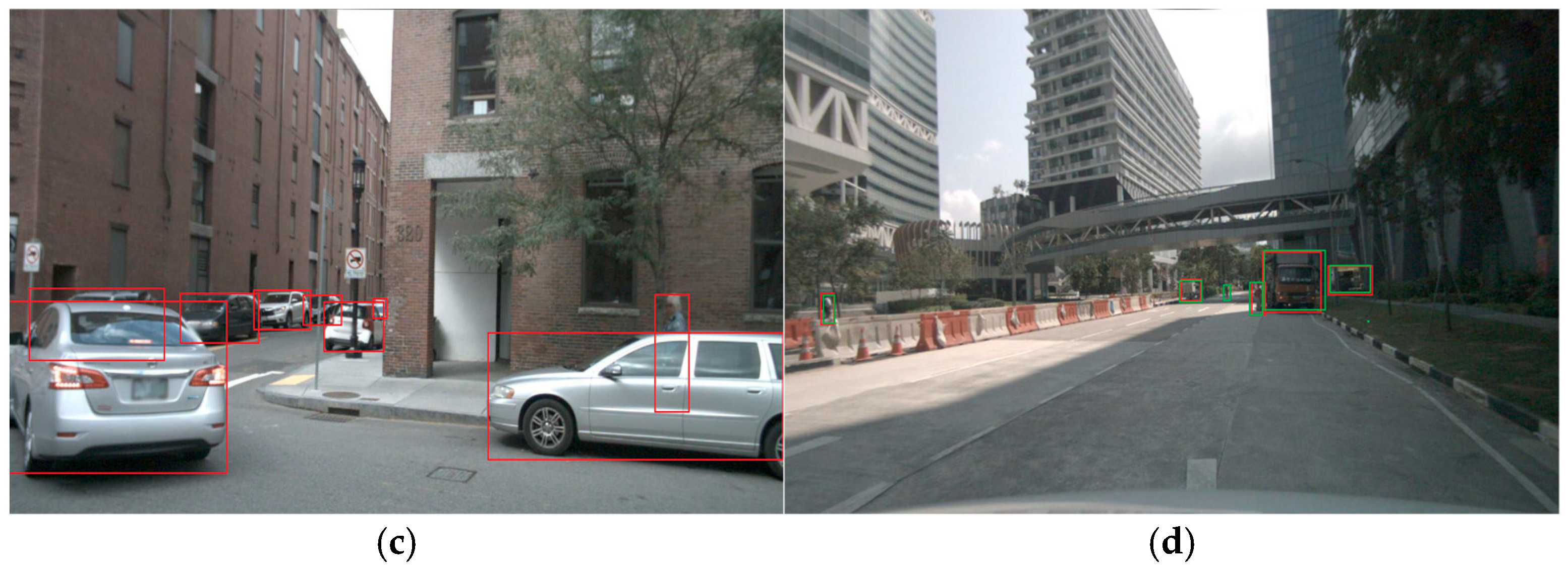

3.1. Datasets

3.2. Experimental Details

3.3. Experimental Results

| Method | NDS | mAP | Car | Truck | Bus | Trailer | C.V. | Ped. | Motor | Bicycle | T.C. | Barrier |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3DSSD [10] | 56.4 | 42.7 | 81.2 | 47.2 | 61.4 | 30.5 | 12.6 | 70.2 | 36.0 | 8.6 | 31.1 | 47.9 |
| PointPillars [15] | 44.9 | 29.5 | 70.5 | 25.0 | 34.4 | 20.0 | 4.5 | 59.9 | 16.7 | 1.6 | 29.6 | 33.2 |
| SECOND [16] | - | 27.1 | 75.5 | 21.9 | 29.0 | 13.0 | 0.4 | 59.9 | 16.9 | 0 | 22.5 | 32.2 |
| DSVT [23] | 71.1 | 66.4 | 87.4 | 62.6 | 75.9 | 42.1 | 25.3 | 88.2 | 74.8 | 58.7 | 77.8 | 70.9 |
| SASA [52] | 61.0 | 45.0 | 76.8 | 45.0 | 66.2 | 36.5 | 16.1 | 69.1 | 39.6 | 16.9 | 29.9 | 53.6 |
| TransFusion-L [53] | 70.1 | 65.5 | 86.9 | 60.8 | 73.1 | 43.4 | 25.2 | 87.5 | 72.9 | 57.3 | 77.2 | 70.3 |
| FCOS-LiDAR [54] | 57.1 | 63.2 | 82.1 | 52.3 | 65.2 | 33.6 | 18.3 | 84.1 | 58.5 | 35.3 | 73.4 | 67.9 |
| PVT-SSD [28] | 65.0 | 53.6 | 79.4 | 43.48 | 62.1 | 34.2 | 21.7 | 79.8 | 53.4 | 38.2 | 56.6 | 67.1 |
| LDGF (ours) | 71.4 | 66.9 | 88.1 | 63.8 | 77.7 | 45.0 | 28.6 | 88.2 | 74.1 | 58.2 | 78.7 | 66.6 |

| Method | Modalities | Drawbacks | mAP | NDS |
|---|---|---|---|---|
| CRN [48] | Camera + Radar | Complex sensor calibration, moderate computational overhead | 57.5 | 62.4 |
| CRAFT [49] | Camera + Radar | High training data demand, sensitive to radar sparsity | 41.1 | 52.3 |
| RCBEVDet [50] | Camera + Radar | Real-time performance is hardware-dependent, lower depth precision vs. LiDAR | 55.0 | 63.9 |
| RCBEVDet++ [51] (ResNet50) | Camera + Radar | Increased computational complexity, sensitive to radar point sparsity | 51.9 | 60.4 |
| RCBEVDet++ [51] (ViT-Large) | Camera + Radar | Increased computational complexity, sensitive to radar point sparsity | 67.3 | 72.7 |
| LDGF (ours) | LiDAR (only) | Limited to single modality, no multi-modal gains | 66.9 | 71.4 |

3.4. Ablation Experiments

| Ablation | Car Level 1 AP | Car Level 1 APH | Car Level 2 AP | Car Level 2 APH | Pedestrian Level 1 AP | Pedestrian Level 1 APH | Pedestrian Level 2 AP | Pedestrian Level 2 APH |
|---|---|---|---|---|---|---|---|---|
| Baseline | 72.18 | 71.53 | 64.59 | 63.94 | 79.75 | 74.25 | 73.92 | 68.67 |
| +PFEB | 72.33 | 71.82 | 64.79 | 64.32 | 80.16 | 74.47 | 74.37 | 68.97 |
| +VCFE | 72.84 | 72.29 | 65.53 | 64.79 | 80.46 | 75.15 | 74.75 | 69.69 |

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, Y.; Wen, J.; Gong, R.; Ren, B.; Li, W.; Cheng, C.; Liu, H.; Sebe, N. PVAFN: Point-Voxel Attention Fusion Network with Multi-Pooling Enhancing for 3D Object Detection. Expert Syst. Appl. 2025, 281, 127608.
2. Zheng, Q.; Wu, S.; Wei, J. VoxT-GNN: A 3D Object Detection Approach from Point Cloud Based on Voxel-Level Transformer and Graph Neural Network. Inf. Process. Manag. 2025, 62, 104155.
3. Zhang, C.; Wang, H.; Cai, Y.; Chen, L.; Li, Y. TransFusion: Transformer-Based Multi-Modal Fusion for 3D Object Detection in Foggy Weather Based on Spatial Vision Transformer. IEEE Trans. Intell. Transp. Syst. 2024, 25, 10652–10666.
4. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.
5. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108.
6. Choy, C.; Gwak, J.Y.; Savarese, S. 4D spatio-temporal convnets: Minkowski convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3075–3084.
7. Deng, J.; Zhang, S.; Dayoub, F.; Ouyang, W.; Zhang, Y.; Reid, I. PoIFusion: Multi-Modal 3D Object Detection via Fusion at Points of Interest. arXiv 2024, arXiv:2403.09212.
8. Zhang, Y.; Wang, Y.; Cui, Y.; Chau, L.-P. 3DGeoDet: General-purpose geometry-aware image-based 3D object detection. arXiv 2025, arXiv:2506.09541.
9. Hamilton, J.D. State-space models. Handb. Econom. 1994, 4, 3039–3080.
10. Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3dssd: Point-based 3d single stage object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11040–11048.
11. Zhang, Y.; Hu, Q.; Xu, G.; Ma, Y.; Wan, J.; Guo, Y. Not all points are equal: Learning highly efficient point-based detectors for 3d lidar point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18953–18962.
12. Shi, S.; Wang, X.; Li, H. Pointrcnn: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.
13. Graham, B.; Engelcke, M.; Van Der Maaten, L. 3D semantic segmentation with submanifold sparse convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9224–9232.
14. Graham, B.; Van der Maaten, L. Submanifold sparse convolutional networks. arXiv 2017, arXiv:1706.01307.
15. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.
16. Yan, Y.; Mao, Y.; Li, B. Second: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.
17. Zheng, W.; Tang, W.; Chen, S.; Jiang, L.; Fu, C.-W. Cia-ssd: Confident iou-aware single-stage object detector from point cloud. Proc. AAAI Conf. Artif. Intell. 2021, 35, 3555–3562.
18. Wang, H.; Chen, Z.; Cai, Y.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. Voxel-RCNN-Complex: An effective 3D point cloud object detector for complex traffic conditions. IEEE Trans. Instrum. Meas. 2022, 71, 1–12.
19. Liu, W.; Zhu, D.; Luo, H.; Li, Y. 3D Object Detection in LiDAR Point Clouds Fusing Point Attention Mechanism. Acta Photonica Sin. 2023, 52, 221–231.
20. Chen, Y.; Liu, J.; Zhang, X.; Qi, X.; Jia, J. Voxelnext: Fully sparse voxelnet for 3d object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 21674–21683.
21. An, P.; Duan, Y.; Huang, Y.; Ma, J.; Chen, Y.; Wang, L.; Yang, Y.; Liu, Q. SP-Det: Leveraging Saliency Prediction for Voxel-based 3D Object Detection in Sparse Point Cloud. IEEE Trans. Multimed. 2024, 26, 2795–2808.
22. Yin, T.; Zhou, X.; Krahenbuhl, P. Center-based 3d object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11784–11793.
23. Wang, H.; Shi, C.; Shi, S.; Lei, M.; Wang, S.; He, D.; Schiele, B.; Wang, L. Dsvt: Dynamic sparse voxel transformer with rotated sets. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13520–13529.
24. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538.
25. Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H. PV-RCNN++: Point-voxel feature set abstraction with local vector representation for 3D object detection. Int. J. Comput. Vis. 2023, 131, 531–551.
26. Sheng, H.; Cai, S.; Liu, Y.; Deng, B.; Huang, J.; Hua, X.S.; Zhao, M.J. Improving 3d object detection with channel-wise transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2743–2752.
27. Liu, H.; Dong, Z.; Tian, S. Object Detection Network Fusing Point Cloud and Voxel Information. Comput. Eng. Des. 2024, 45, 2771–2778.
28. Yang, H.; Wang, W.; Chen, M.; Lin, B.; He, T.; Chen, H.; He, X.; Ouyang, W. Pvt-ssd: Single-stage 3d object detector with point-voxel transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13476–13487.
29. Deng, P.; Zhou, L.; Chen, J. PVC-SSD: Point-Voxel Dual-Channel Fusion with Cascade Point Estimation for Anchor-Free Single-Stage 3D Object Detection. IEEE Sens. J. 2024, 24, 14894–14904.
30. Tian, F.; Wu, B.; Zeng, H.; Zhang, M.; Hu, Y.; Xie, Y.; Wen, C.; Wang, Z.; Qin, X.; Han, W.; et al. A shape-attention Pivot-Net for identifying central pivot irrigation systems from satellite images using a cloud computing platform: An application in the contiguous US. GIScience Remote Sens. 2023, 60, 2165256.
31. Liu, M.; Wang, W.; Zhao, W. PVA-GCN: Point-voxel absorbing graph convolutional network for 3D human pose estimation from monocular video. Signal Image Video Process. 2024, 18, 3627–3641.
32. Xu, W.; Fu, T.; Cao, J.; Zhao, X.; Xu, X.; Cao, X.; Zhang, X. Mutual information-driven self-supervised point cloud pre-training. Knowl.-Based Syst. 2025, 307, 112741.
33. Spatial Sparse Convolution Library. 2022. Available online: https://github.com/traveller59/spconv (accessed on 23 September 2025).
34. Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.
35. Gu, A.; Johnson, I.; Goel, K.; Saab, K.; Dao, T.; Rudra, A.; Ré, C. Combining recurrent, convolutional, and continuous-time models with linear state space layers. Adv. Neural Inf. Process. Syst. 2021, 34, 572–585.
36. Smith, J.T.H.; Warrington, A.; Linderman, S.W. Simplified state space layers for sequence modeling. arXiv 2022, arXiv:2208.04933.
37. Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.
38. Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. Vmamba: Visual state space model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.
39. Yu, W.; Wang, X. Mambaout: Do we really need mamba for vision? arXiv 2024, arXiv:2405.07992.
40. Zhou, Y.; Sun, P.; Zhang, Y.; Anguelov, D.; Gao, J.; Guo, J.; Ngiam, J.; Vasudevan, V. End-to-end multi-view fusion for 3d object detection in lidar point clouds. In Proceedings of the Conference on Robot Learning, Virtual, 16–18 November 2020; pp. 923–932.
41. Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuscenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11621–11631.
42. Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2446–2454.
43. Liu, Y.X.; Pan, X.; Zhu, J. 3D Pedestrian Detection Based on Pointpillar: SelfAttention-pointpillar. In Proceedings of the 2024 9th International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Okinawa, Japan, 21–23 November 2024; Volume 9, pp. 1–10.
44. Sheng, H.; Cai, S.; Zhao, N.; Deng, B.; Huang, J.; Hua, X.-S.; Zhao, M.-J.; Lee, G.H. Rethinking IoU-based optimization for single-stage 3D object detection. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2022; pp. 544–561.
45. Ming, Q.; Miao, L.; Ma, Z.; Zhao, L.; Zhou, Z.; Huang, X.; Chen, Y.; Guo, Y. Deep dive into gradients: Better optimization for 3D object detection with gradient-corrected IoU supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5136–5145.
46. Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From points to parts: 3d object detection from point cloud with part-aware and part-aggregation network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2647–2664.
47. Guan, T.; Wang, J.; Lan, S.; Chandra, R.; Wu, Z.; Davis, L.; Manocha, D. M3detr: Multi-representation, multi-scale, mutual-relation 3d object detection with transformers. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 772–782.
48. Kim, Y.; Shin, J.; Kim, S.; Lee, I.-J.; Choi, J.W.; Kum, D. CRN: Camera Radar Net for Accurate, Robust, Efficient 3D Perception. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV 2023), Paris, France, 2–6 October 2023; pp. 17569–17580.
49. Kim, Y.; Kim, S.; Choi, J.W.; Kum, D. Craft: Camera-radar 3d object detection with spatio-contextual fusion transformer. Proc. AAAI Conf. Artif. Intell. 2023, 37, 1160–1168.
50. Lin, Z.; Liu, Z.; Xia, Z.; Wang, X.; Wang, Y.; Qi, S.; Dong, Y.; Dong, N.; Zhang, L.; Zhu, C. Rcbevdet: Radar-camera fusion in bird’s eye view for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 14928–14937.
51. Lin, Z.; Liu, Z.; Wang, Y.; Zhang, L.; Zhu, C. RCBEVDet++: Toward high-accuracy radar-camera fusion 3D perception network. arXiv 2024, arXiv:2409.04979.
52. Chen, C.; Chen, Z.; Zhang, J.; Tao, D. SASA: Semantics-augmented set abstraction for point-based 3d object detection. Proc. AAAI Conf. Artif. Intell. 2022, 36, 221–229.
53. Bai, X.; Hu, Z.; Zhu, X.; Huang, Q.; Chen, Y.; Fu, H.; Tai, C.-L. Transfusion: Robust lidar-camera fusion for 3d object detection with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1090–1099.
54. Tian, Z.; Chu, X.; Wang, X.; Wei, X.; Shen, C. Fully convolutional one-stage 3d object detection on lidar range images. Adv. Neural Inf. Process. Syst. 2022, 35, 34899–34911.

| Dataset | Split | Day | Night | Dawn | All |
|---|---|---|---|---|---|
| Waymo Open Dataset | Train | 646 | 79 | 73 | 798 |
| Waymo Open Dataset | Percentage of training set | 80.95% | 9.90% | 9.15% | 100% |
| Waymo Open Dataset | Val | 160 | 23 | 19 | 202 |
| Waymo Open Dataset | Percentage of validation set | 79.21% | 11.39% | 9.41% | 100% |
| Waymo-mini | Train | 113 | 14 | 13 | 140 |
| Waymo-mini | Percentage of training set | 80.71% | 10.00% | 9.29% | 100% |
| Waymo-mini | Val | 28 | 4 | 3 | 35 |
| Waymo-mini | Percentage of validation set | 80.00% | 11.43% | 8.57% | 100% |

| Method | Vehicle Level 1 AP | Vehicle Level 1 APH | Vehicle Level 2 AP | Vehicle Level 2 APH | Pedestrian Level 1 AP | Pedestrian Level 1 APH | Pedestrian Level 2 AP | Pedestrian Level 2 APH | Cyclist Level 1 AP | Cyclist Level 1 APH | Cyclist Level 2 AP | Cyclist Level 2 APH |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointPillars [15] | 61.67 | 60.83 | 54.34 | 53.58 | 58.33 | 52.73 | 53.02 | 48.02 | 56.18 | 55.76 | 54.8 | 53.98 |
| SECOND [16] | 61.72 | 61.04 | 54.34 | 53.72 | 58.69 | 53.46 | 53.43 | 48.92 | 53.85 | 52.34 | 52.12 | 51.4 |
| DSVT [23] | 72.18 | 71.53 | 64.59 | 63.94 | 79.75 | 74.25 | 73.92 | 68.67 | 74.32 | 73.28 | 71.89 | 70.86 |
| PV-RCNN [24] | 68.2 | 67.4 | 60.35 | 59.63 | 67.32 | 62.78 | 61.36 | 56.9 | 67.63 | 66.16 | 65.46 | 64.32 |
| RDIoU [44] | 64.62 | 64 | 56.98 | 56.42 | - | - | - | - | - | - | - | - |
| GCIoU [45] | 64.68 | 64.29 | 57.18 | 56.67 | - | - | - | - | - | - | - | - |
| PartA2 [46] | 66.72 | 66.09 | 58.93 | 58.36 | 65.67 | 60.4 | 59.72 | 54.28 | 64.08 | 63.83 | 62.02 | 61.62 |
| M3DETR [47] | 67.31 | 66.56 | 59.48 | 58.81 | 63.78 | 58.63 | 57.92 | 52.45 | 66.59 | 65.15 | 64.45 | 63.18 |
| LDGF (ours) | 72.84 | 72.29 | 65.3 | 64.79 | 80.46 | 75.15 | 74.75 | 69.69 | 74.98 | 74.08 | 72.58 | 71.7 |

| Method | Latency (ms) | FPS |
|---|---|---|
| PointPillars [15] | 41.12 | 24.32 |
| SECOND [16] | 58.83 | 17 |
| DSVT [23] | 213 | 3.69 |
| PV-RCNN [24] | 408.83 | 2.45 |
| RDIoU [44] | 71.45 | 14 |
| GCIoU [45] | 64.93 | 15.4 |
| PartA2 [46] | 141.3 | 7.08 |
| M3DETR [47] | 895.15 | 1.07 |
| LDGF (ours) | 152.2 | 6.57 |
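The two timing columns can be related by a short calculation. The sketch below assumes that latency is the per-frame processing time in milliseconds, so that FPS = 1000 / latency; this unit is an assumption that most rows bear out numerically. The helper names `fps_from_latency_ms` and `inconsistent_rows`, and the 0.1 FPS tolerance, are illustrative choices rather than anything defined in the paper.

```python
# (method, latency, reported FPS) copied from the timing table.
ROWS = [
    ("PointPillars [15]", 41.12, 24.32),
    ("SECOND [16]", 58.83, 17.0),
    ("DSVT [23]", 213.0, 3.69),
    ("PV-RCNN [24]", 408.83, 2.45),
    ("RDIoU [44]", 71.45, 14.0),
    ("GCIoU [45]", 64.93, 15.4),
    ("PartA2 [46]", 141.3, 7.08),
    ("M3DETR [47]", 895.15, 1.07),
    ("LDGF (ours)", 152.2, 6.57),
]


def fps_from_latency_ms(latency_ms):
    """Frames per second implied by a per-frame latency (assumed to be in ms)."""
    return 1000.0 / latency_ms


def inconsistent_rows(rows, tol=0.1):
    """Names of rows whose reported FPS differs from 1000 / latency by more than tol."""
    return [name for name, latency, fps in rows
            if abs(fps_from_latency_ms(latency) - fps) > tol]


for name, latency, fps in ROWS:
    print(f"{name:18s} implied {fps_from_latency_ms(latency):6.2f}  reported {fps}")
print("rows outside tolerance:", inconsistent_rows(ROWS))
```

Under this assumption, the LDGF row is self-consistent (152.2 ms corresponds to about 6.57 FPS). The DSVT row is the only one outside the tolerance, since 213 ms would correspond to about 4.69 FPS; the two columns may have been measured separately, so this is only a prompt to check the original measurements.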

| Ablation | Car Level 1 AP | Car Level 1 APH | Car Level 2 AP | Car Level 2 APH | Pedestrian Level 1 AP | Pedestrian Level 1 APH | Pedestrian Level 2 AP | Pedestrian Level 2 APH |
|---|---|---|---|---|---|---|---|---|
| Baseline | 72.18 | 71.53 | 64.59 | 63.94 | 79.75 | 74.25 | 73.92 | 68.67 |
| Sq+ | 72.21 | 71.68 | 65.33 | 64.23 | 79.81 | 74.27 | 74.15 | 68.58 |
| Sq+ Sq− | 72.33 | 71.82 | 64.79 | 64.32 | 80.16 | 74.47 | 74.37 | 68.97 |

| Channel Size | Car Level 1 AP | Car Level 1 APH | Car Level 2 AP | Car Level 2 APH | Pedestrian Level 1 AP | Pedestrian Level 1 APH | Pedestrian Level 2 AP | Pedestrian Level 2 APH |
|---|---|---|---|---|---|---|---|---|
| 1 | 72.33 | 71.82 | 64.79 | 64.32 | 80.16 | 74.47 | 74.37 | 68.97 |
| 2 | 72.67 | 72.15 | 65.09 | 64.61 | 80.20 | 74.55 | 74.33 | 68.97 |
| 4 | 72.84 | 72.29 | 65.53 | 64.79 | 80.46 | 75.15 | 74.75 | 69.69 |
| 8 | 72.58 | 72.06 | 64.99 | 64.5 | 79.72 | 74.19 | 73.93 | 68.68 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Tian, F.; Sun, J.; Liu, Y. Boosting LiDAR Point Cloud Object Detection via Global Feature Fusion. Information 2025, 16, 832. https://doi.org/10.3390/info16100832
