Abstract

Game reviews heavily influence public perception. User feedback is crucial for developers, offering valuable insights for improving game quality. In this research, we applied topic modeling to Metacritic reviews of Elden Ring using Latent Dirichlet Allocation (LDA), Bidirectional Encoder Representations from Transformers (BERT), and a hybrid model combining both, to identify effective methods for extracting underlying themes from player feedback. We analyzed and interpreted the models’ outputs to understand what the reviews discuss, and we compared the differences and similarities among the three to determine which provides the most valuable and instructive information. Our findings indicate that each method successfully identified keywords, with some overlap among the identified words. The LDA model had the highest silhouette score, indicating the most distinct clustering. The LDA-BERT model had a 1% higher coherence score than LDA, indicating more meaningful topics.

Keywords:

game reviews; topic modeling; Elden Ring; Metacritic; LDA; BERT; game development; player feedback; user experience

1. Introduction

Game reviews and ratings are a critical tool that not only shapes players’ opinions about a game but also affects its commercial success. Research shows that some games fail before release due to a lack of community acceptance [1] and that gamers are a group of users who are extremely difficult to satisfy [2]. Assessing game success relies heavily on player involvement with the game [3], which makes game quality an important issue. Game developers need a deeper awareness of player issues in order to improve how users perceive the quality of their games. One way to achieve this is through the analysis of game reviews and feedback.

According to one definition, game reviews provided by regular users are a type of video game journalism [4], and their objective is to explain and expound on which titles will make the gaming experience more enjoyable [5]. The purpose of game reviews is to inform the user about the game’s role and genre within the gaming spectrum. Many game reviews are so well written that they encourage readers to purchase the game [6], which suggests that a review can spark a reader’s interest in a new genre even when the game does not match the genre the reader was originally looking for.

Reviews and ratings from critics and players alike are pivotal for the video game industry, offering deep insights into a game’s design, quality, and appeal, and significantly affecting game sales [7,8]. These evaluations are essential for developers and publishers, informing design improvements and marketing strategies and contributing to a game’s success [9]. They also help players make informed gaming choices. More broadly, reviews shape industry trends and future game development. To tackle the challenge of analyzing extensive review data, topic modeling has become an invaluable analytical tool, helping to distill and interpret large datasets [10].

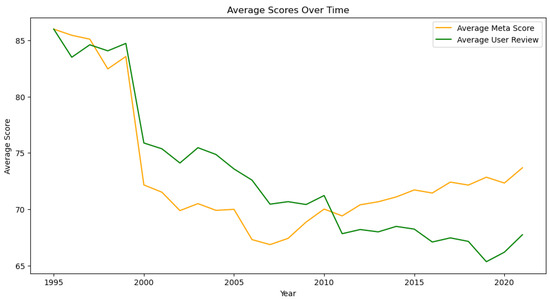

We conducted a comprehensive analysis of a large dataset from “Top Video Games 1995–2021—Metacritic” on Kaggle, containing 18,800 video game review entries, to understand industry trends and how review factors correlate with player engagement metrics. This analysis provided key insights into player sentiments and experiences and guided our use of topic modeling to further explore player feedback and its implications for game development. One of these analyses, illustrated in Figure 1, compares critics’ “Average Meta Score” with users’ “Average User Review” scores over 25 years. This comparison reveals diverging trends and emphasizes the complexity of sentiment analysis. Initially, both scores declined, with critic scores stabilizing around 2005 while user scores continued to decrease. A significant gap emerged after 2000, reflecting differing assessment criteria. More recently, user scores have improved marginally, whereas critic scores have remained stable. This disparity highlights the importance of in-depth sentiment analysis for understanding the differing expectations and perspectives of critics and users and for identifying the underlying causes of the score gap.

Figure 1.

A comparison between average Meta score and User score from 1995 to 2020.

2. Research Questions

Our research aims to bridge the gap in game review analysis by applying advanced Natural Language Processing (NLP) techniques such as Latent Dirichlet Allocation (LDA), Bidirectional Encoder Representations from Transformers (BERT), and a hybrid LDA-BERT model on our dataset of Elden Ring reviews. We focus on the following research questions:

- What are the most frequently mentioned components in Elden Ring reviews?

  - This question seeks to identify the key themes and aspects of the game that players discuss most frequently in their reviews.

- How do the different NLP techniques (LDA, BERT, and LDA-BERT) compare in terms of their ability to extract meaningful topics from the reviews?

  - This question aims to evaluate the effectiveness of each method in capturing the underlying themes and sentiments in the player feedback.

- Which method provides the most comprehensive and actionable insights for game developers?

  - This question addresses the practical implications of the different topic modeling techniques, focusing on which method offers the most valuable insights for improving game design and player experience.

3. Related Work

In this section, we review related literature that employs similar methodologies to our study.

Topic modeling is a common statistical approach that extracts latent variables from huge datasets [11]. In 2003, Blei et al. revolutionized topic modeling by introducing Latent Dirichlet Allocation (LDA), a method that identifies latent themes in extensive text collections by treating documents as mixtures of topics. This approach has significantly influenced text analysis and NLP, offering a method to uncover underlying themes in large datasets [12].

Previous work has applied Latent Dirichlet Allocation (LDA) across various domains. For instance, Huang et al. [13] utilized LDA to analyze online product reviews, aiming to improve business insights by uncovering key themes and sentiments. Similarly, Heng et al. [14] applied LDA to consumer feedback to explore factors influencing online purchase decisions. In the realm of autonomous systems and bioinformatics, Girdhar et al. [15] demonstrated the utility of LDA in understanding autonomous underwater vehicle operations, while Liu et al. [16] employed LDA to analyze gene expression data in bioinformatics.

The application of LDA extends to social media and online content analysis as well. Hong et al. [17] applied LDA to Twitter data to examine user behaviors and content trends. Tran et al. [18] used LDA to assess public sentiment on social media during health crises, and Lubis et al. [19] explored LDA’s effectiveness in summarizing online discussions. Kwon et al. [20] also employed LDA to analyze content on online forums, highlighting shifts in public opinion over time. In the context of movie reviews, studies by Duan et al. [21], Hennig-Thurau et al. [22], Hu et al. [23], Joshi et al. [24], King et al. [25], Reinstein and Snyder [26], and Wang et al. [27] have explored the use of LDA to identify and analyze themes and sentiments in viewer feedback on different datasets, which are crucial for understanding audience reception and predicting box office success.

Research on video game reviews has significantly benefited from LDA to uncover diverse insights. Yu et al. [28] applied LDA to e-sports game reviews on Steam, a digital distribution platform and community hub for video games. Similarly, Jeffrey et al. [29] utilized it for sentiment analysis and feature summarization in Steam reviews. Zagal et al. [30] focused on the readability and thematic content of game reviews. Li et al. [31] and Lin et al. [32] examined video game playability and the impact of reviews on player engagement and game success, respectively. Bond et al. [33] used review analysis to distinguish between successful and unsuccessful games, while Kang et al. [34] investigated the helpfulness of Steam reviews. Moreover, McCallum et al. [35] applied topic modeling to understand gaming communities, and Choi et al. [36] explored online reviewer behaviors. Gifford [37] and Kwak et al. [38] analyzed the content and identified success factors in game reviews. Significantly, Livingston et al. [39] explored how review tone and source influence player experiences, offering a nuanced understanding of the impact of reviews on gaming perceptions. Santos et al. [40] analyzed discrepancies in video game reviews, highlighting how amateur (player) feedback, characterized by emotional and polarized ratings, influences game reputation predictions.

BERTopic is an effective neural topic modeling technique that can be applied to many kinds of text input and produces topic representations that take the semantic associations between words into account [41]. Researchers have also applied BERT and related NLP techniques to review analysis and other domains, demonstrating their utility. Yu et al. [42] conducted sentiment analysis on e-sports reviews, while Uthirapathy et al. [43] combined BERT and LDA for Twitter sentiment analysis. Fadhlurrahman et al. [44] applied BERT and BiLSTM to Steam reviews, and Jain et al. [45] developed a BERT-DCNN model for review analysis. Atagün et al. [46] compared LDA and BERT in event analysis, highlighting the adaptability of NLP techniques in extracting meaningful insights from textual data. George and Sumathi [47] developed a hybrid model that integrates Latent Dirichlet Allocation (LDA) with BERT to enhance topic modeling. Their approach combines LDA’s topic distributions with BERT’s contextual embeddings, using a scaling factor to balance the contributions of each, which improves the interpretability and coherence of the resulting topics.

While there has been substantial work using LDA and BERT for topic modeling and sentiment analysis, our study addresses several gaps in the current literature:

- Combined Use of LDA, BERT, and LDA-BERT: Unlike previous studies that often focus on a single method, we compare the effectiveness of LDA, BERT, and a hybrid LDA-BERT model in extracting meaningful topics from game reviews. This comparison provides a comprehensive understanding of each method’s strengths and weaknesses.

- Comprehensive Preprocessing: Our study includes a detailed preprocessing phase, including automated translation and typo correction, which ensures high-quality data for analysis. This step is often overlooked in other studies, which may lead to less accurate results.

- Focused Analysis on a Popular Game: By concentrating on over 8000 reviews of Elden Ring, we provide a case study that demonstrates the practical application of these methods in a real-world scenario. This focused analysis highlights specific insights that can inform game development and player engagement strategies.

- Custom Data Extraction: While most existing studies rely on pre-built software for data extraction, we developed a custom Python-based script to extract reviews directly from Metacritic. This approach allowed for greater flexibility and control over the data collection process, ensuring that we could capture a comprehensive and high-quality dataset.

- Interpretation of Results: We conducted a thorough interpretation of the topic modeling results, identifying key themes and insights that are directly applicable to game development and marketing strategies.

In summary, our work builds on the existing body of research by integrating and comparing NLP techniques, implementing comprehensive preprocessing steps, and providing actionable insights for game developers based on a focused analysis of Elden Ring reviews. This approach addresses the gaps in current research and offers a replicable methodology for future studies.

4. Methodology

Data Collection

For our game selection, we chose FromSoftware’s 2022 title Elden Ring because of its high ratings and favorable user feedback. By focusing on Elden Ring, we aimed to understand the elements that contribute to its success and how positive user reception affects a game’s reception on a broader scale. We collected 8176 reviews using a custom Python-based web scraping script, which incorporated several techniques to keep the scraping process stable. First, we used the fake_useragent library to generate diverse user-agent headers that simulate typical browser behavior, reducing the chance of the traffic being identified as automated. Second, we introduced a one-second pause between requests with the time.sleep() function, preventing excessive server load and minimizing the likelihood of access restrictions.
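The request pacing described above can be sketched with the Python standard library. This is a minimal sketch, not the authors’ script: `polite_scrape` and `fetch_html` are hypothetical helper names, the list of review-page URLs is a placeholder (the Metacritic URL scheme is not given here), and parsing review fields out of the HTML is omitted because the page structure is not described. The user-agent source and the sleep function are passed in as parameters so that `fake_useragent` can supply headers in practice and the pacing can be checked without network access.

```python
import time
import urllib.request
from typing import Callable, List, Sequence


def fetch_html(url: str, user_agent: str, timeout: float = 10.0) -> str:
    """Download one page, sending the supplied User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def polite_scrape(
    urls: Sequence[str],
    next_user_agent: Callable[[], str],
    fetch: Callable[[str, str], str] = fetch_html,
    delay_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Fetch pages one at a time, rotating user agents and pausing between requests."""
    pages: List[str] = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # one-second pause between consecutive requests
        pages.append(fetch(url, next_user_agent()))  # fresh User-Agent per request
    return pages
```

Assuming `fake_useragent` is installed, a call might look like `ua = UserAgent(); pages = polite_scrape(review_page_urls, lambda: ua.random)` after `from fake_useragent import UserAgent`, where `review_page_urls` is a hypothetical list of page URLs.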

The script extracted review content, user ratings, review dates, user names, and platforms. We implemented an automated translation process using the Google Translate API via the googletrans library to convert non-English user reviews into English, ensuring uniformity across the dataset. Language detection was performed using the langdetect library, and only non-English reviews were translated.
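The rule of translating only non-English reviews can be expressed as a small filter. In this sketch, the detector and translator are injected as functions (the parameter names are hypothetical), which keeps the logic testable offline. We assume that in the described setup the detector is `langdetect.detect`, which returns ISO 639-1 codes such as `"en"`, and that the translator wraps googletrans. The exact googletrans call differs between releases (some expose a synchronous `Translator().translate(text, dest="en").text`, while newer ones are asynchronous), so wiring it up is left to the caller.

```python
from typing import Callable, List


def translate_non_english(
    reviews: List[str],
    detect_language: Callable[[str], str],
    translate_to_english: Callable[[str], str],
) -> List[str]:
    """Translate only the reviews whose detected language is not English."""
    result: List[str] = []
    for text in reviews:
        try:
            language = detect_language(text)
        except Exception:
            # Detectors can fail on empty or symbol-only text; keep such
            # reviews unchanged instead of dropping them.
            result.append(text)
            continue
        # English reviews pass through untouched; everything else is translated.
        result.append(text if language == "en" else translate_to_english(text))
    return result
```

Note that langdetect is non-deterministic by default; its documentation recommends setting `DetectorFactory.seed = 0` for reproducible results.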

5. Data Preprocessing

Following the collection of our dataset, we initiated the preprocessing phase to refine and prepare the data for models. In order to ensure that the text data from game reviews are properly structured for efficient topic modeling, data preprocessing is an essential step in our study. The preprocessing pipeline refines the data at each stage:

- Cleaning: In this first stage, we removed elements of the text that add no useful information for analysis. For that purpose, we performed the following steps:

  - Eliminating handles, URLs, numbers, and punctuation: This step removes social media handles, web addresses, numeric characters, and punctuation, which are typically irrelevant to the text analysis task.

  - Splitting camel-cased words: This step separates words that are joined in camel case, ensuring that each word is treated individually.

  - Removing special characters and repeated letters: This step eliminates unnecessary punctuation and asterisks and reduces any character repeated more than twice in a row.

  - Removing content within parentheses and adjusting punctuation spacing: This step deletes text enclosed in parentheses and ensures proper spacing after punctuation marks.

  - Specific noise text removal: This step removes predefined phrases or patterns identified as noise, such as “product received for free”, to improve the quality of the dataset.

  - Deduplication of consecutive repeated words or phrases: This step collapses instances where the same word or phrase is repeated back to back, streamlining the text.

- Tokenization: Here, we broke down the text into its fundamental components, known as tokens, which usually correspond to words. For example, the sentence “Gamers love challenging levels” would be tokenized into [“Gamers”, “love”, “challenging”, “levels”]. This process is vital because it turns unstructured text into a format that our algorithms can interpret and analyze.

- Stop Word Removal: Stop words, which are common English words such as “a”, “the”, and “I”, were removed. We also identified and eliminated other frequent but uninformative words using a custom stop-word list tailored to game reviews. We refined this list iteratively, rerunning the analysis and adjusting the list to exclude words that added no meaningful value. Beyond standard English stop words, this process filtered out context-specific terms such as “feel”, “like”, and “spoiler”. The full list of removed stop words can be found in Table A4.

- Normalization: During normalization, we converted all text to a consistent format to reduce complexity. This involved converting all characters to lowercase, so that words like “Game” and “game” are treated as equal, and removing punctuation. This step simplifies the text and avoids counting the same word separately in different cases or forms.

- Typo Correction: We utilized the SymSpell library to correct typos in the text. Each word in the review was compared against a frequency dictionary of English words, and the closest matching suggestion was selected to replace the original word, ensuring accurate and meaningful corrections.
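The cleaning, normalization, tokenization, and stop-word steps above can be approximated with regular expressions. This is a minimal, dependency-free sketch under stated assumptions: the regex patterns, the step order, and the tiny `STOP_WORDS` set are illustrative choices rather than the exact rules used in the study (the full stop-word list is in Table A4), tokenization is a plain whitespace split, and SymSpell typo correction is omitted because it requires a frequency dictionary.

```python
import re
from typing import List

# Illustrative noise phrase taken from the text; the study's full list is not given.
NOISE_PHRASES = ["product received for free"]

# Assumed, deliberately tiny stop-word set: a few standard English words plus the
# game-review-specific terms mentioned above (the real list is in Table A4).
STOP_WORDS = {"a", "an", "the", "i", "it", "is", "and", "to", "of", "this",
              "feel", "like", "spoiler"}


def clean_review(text: str) -> str:
    """Apply the cleaning steps (illustrative patterns) and lowercase the result."""
    for phrase in NOISE_PHRASES:  # predefined noise phrases
        text = re.sub(re.escape(phrase), " ", text, flags=re.IGNORECASE)
    text = re.sub(r"\([^)]*\)", " ", text)  # delete parenthesized content
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)  # URLs
    text = re.sub(r"@\w+", " ", text)  # social media handles
    text = re.sub(r"(?<=[a-z])(?=[A-Z])", " ", text)  # "OpenWorld" -> "Open World"
    text = re.sub(r"(\w)\1{2,}", r"\1\1", text)  # "sooooo" -> "soo"
    text = re.sub(r"\d+", " ", text)  # numbers
    text = re.sub(r"[^\w\s]|_", " ", text)  # punctuation, asterisks, other symbols
    text = text.lower()  # normalization, done before deduplication so case is ignored
    # Collapse back-to-back repeats of a word or of a phrase of up to four words.
    text = re.sub(r"\b(\w+(?:\s+\w+){0,3})(?:\s+\1\b)+", r"\1", text)
    return re.sub(r"\s+", " ", text).strip()


def preprocess(text: str) -> List[str]:
    """Clean the review, split it into whitespace tokens, and drop stop words."""
    tokens = clean_review(text).split()
    return [token for token in tokens if token not in STOP_WORDS]
```

For example, `preprocess("I feel like the boss fights are brutal")` returns `["boss", "fights", "are", "brutal"]`, since “are” is not in this reduced stop-word set.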

5.1. Analytical Methods

Our analytical approach employs three distinct models to analyze the dataset: Latent Dirichlet Allocation (LDA), Bidirectional Encoder Representations from Transformers (BERT), and a hybrid model combining LDA and BERT (LDA-BERT), each offering unique insights into the data analysis process.

- Latent Dirichlet Allocation (LDA): LDA, introduced in 2003, serves as a foundational method for identifying the main themes within our corpus [12]. We set the number of topics to 10 based on a systematic evaluation of coherence scores and perplexity, together with a comparative analysis of topic uniqueness and interpretability. The model treats each review as a document and analyzes the distribution of topics and words within it to uncover underlying patterns. We implemented the LDA model with the Gensim library.

- Bidirectional Encoder Representations from Transformers (BERT): We used the ‘bert-base-uncased’ variant from HuggingFace’s Transformers library, a deep learning model well known for its natural language processing capabilities [48]. We selected this variant for its computational efficiency: with 12 transformer layers and 768 hidden units per layer, it allowed us to process our dataset within the available resources. The ‘uncased’ version processes text in lowercase, which aligns with our preprocessing steps, minimizes orthographic variation, and keeps the focus on semantic content. BERT transforms textual data into high-dimensional embeddings that capture the contextual relationships between words, which are then used for unsupervised clustering and topic analysis [49]. To identify topics in the text data, we used BERT embeddings as the primary input for clustering. The process involved the following steps:

  - Text Preprocessing: We first preprocessed the text data to clean and normalize it, ensuring consistency across the dataset.

  - Tokenization and Embedding Generation: We used the ‘bert-base-uncased’ tokenizer to break the text down into tokens from BERT’s pre-trained vocabulary, adding special tokens such as [CLS] and [SEP] to structure the input. The tokenized text was then fed into the BERT model, which generated a high-dimensional embedding for each token. The embedding associated with the [CLS] token, which represents the meaning of the entire sequence, was extracted for further analysis. These embeddings capture the contextual relationships between words in each review.

  - Clustering with K-Means: The [CLS] embeddings were used as input to a K-Means clustering algorithm. We specified the number of clusters (num_clusters = 10), which allowed us to categorize the reviews into distinct topics.

  - Topic Identification: For each cluster, we analyzed the most frequent and representative words, identified from the clustering of the BERT embeddings. These words were visualized in word clouds, where the size of each word corresponds to its frequency and importance within the cluster.

- Latent Dirichlet Allocation with Bidirectional Encoder Representations from Transformers (LDA-BERT): This hybrid approach combines the strengths of LDA and BERT to provide a comprehensive analysis of the text data. LDA generates topic vectors, in which each element is the probability of a topic within a document. BERT, on the other hand, creates high-dimensional contextual embeddings that capture the nuanced meaning of words within the text. To integrate the two effectively, we first applied a scaling factor to the LDA vectors so that they were on a scale comparable to the BERT embeddings. This step was crucial because the LDA vectors, which hold probabilities, have far fewer dimensions than the BERT embeddings, which encode richer semantic information in a high-dimensional space; without scaling, the BERT embeddings risked dominating the combined feature vector. We determined the value of the scaling factor empirically, testing multiple values and evaluating the balance between LDA’s topic representations and BERT’s semantic embeddings during clustering, and selected the value that provided the optimal balance, so that the LDA vectors contributed significantly to the combined feature vector without being overshadowed by the BERT embeddings. After scaling, we concatenated the LDA vectors with the BERT embeddings to form a combined feature vector for each document, which was then used for clustering and topic identification. By balancing the contributions of LDA and BERT, this method captures both the broad thematic structure provided by LDA and the deep contextual information offered by BERT, leading to a more nuanced understanding of user sentiment and preferences.

  - Clustering with K-Means: We applied K-Means clustering (num_clusters = 10) to the combined feature vectors, categorizing the reviews into distinct topics that integrate thematic and contextual information.

  - Topic Identification: Topic words were identified using the LDA component, which provides topic-word distributions. Because BERT embeddings do not directly represent individual words in terms of topics, the LDA vectors were used to extract the most frequent topic words, while the BERT embeddings added contextual depth. These words were visualized in word clouds, where word size reflects frequency and importance within the cluster.
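The hybrid feature construction and clustering described above can be sketched as follows. This is an illustrative, dependency-free sketch, not the study’s implementation. The sparse `(topic_id, probability)` pairs stand in for what Gensim’s `LdaModel.get_document_topics` returns, and the short embedding lists stand in for 768-dimensional [CLS] vectors from bert-base-uncased. The `scale` argument is a placeholder because the chosen scaling factor is not stated here, and K-Means is written out as plain Lloyd iterations rather than a library call such as scikit-learn’s `KMeans`. The hypothetical `top_words_per_cluster` mirrors the frequency-based word selection used for the BERT clusters; for LDA-BERT, the text instead draws topic words from LDA’s topic-word distributions.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def dense_topic_vector(sparse: Sequence[Tuple[int, float]], num_topics: int) -> Vector:
    """Turn (topic_id, probability) pairs into a fixed-length LDA vector."""
    vector = [0.0] * num_topics
    for topic_id, probability in sparse:
        vector[topic_id] = probability
    return vector


def combine_features(lda_vec: Vector, bert_vec: Vector, scale: float) -> Vector:
    """Scale the LDA vector, then concatenate it with the BERT embedding."""
    return [scale * value for value in lda_vec] + list(bert_vec)


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Vector], k: int, iterations: int = 50, seed: int = 0) -> List[int]:
    """Plain Lloyd's K-Means; returns a cluster label for every point."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # k distinct starting points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: squared_distance(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels


def top_words_per_cluster(
    token_lists: Sequence[List[str]], labels: Sequence[int], n: int = 3
) -> Dict[int, List[str]]:
    """Return the n most frequent tokens in each cluster (input for word clouds)."""
    counts: Dict[int, Counter] = {}
    for tokens, label in zip(token_lists, labels):
        counts.setdefault(label, Counter()).update(tokens)
    return {label: [word for word, _ in c.most_common(n)] for label, c in counts.items()}
```

Scaling before concatenation is the only step that lets the low-dimensional LDA part influence Euclidean distances; with a scale of 1, a handful of probabilities would be swamped by hundreds of embedding dimensions.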

5.2. Results Interpretation

In the final step, we critically analyzed each cluster to discern user preferences, providing key insights for game developers to refine products, align with consumer expectations, and enhance satisfaction. It is important to note that this analysis is inherently subjective, as it reflects our interpretations of the thematic clusters and their relevance to user preferences.

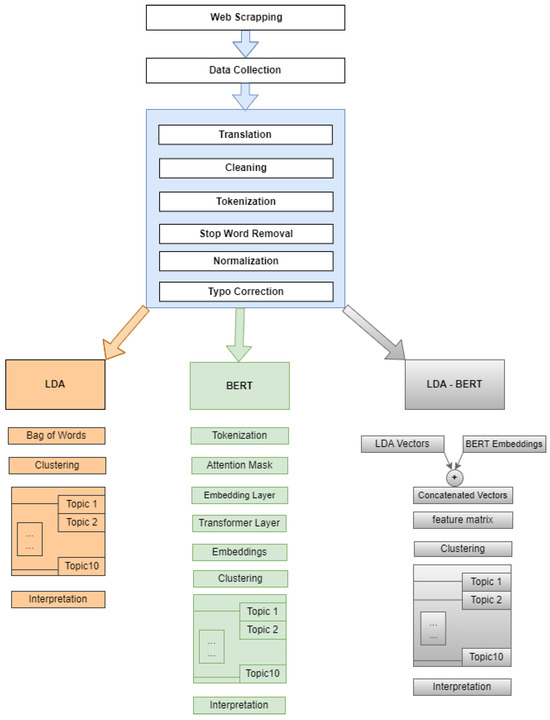

A comprehensive process flow of analysis methodologies is provided in Figure 2.

Figure 2.

Graphical summary of methodology.

6. Results

We analyzed each cluster generated by the models, recognizing that our familiarity with the game industry and player engagement strategies introduces a degree of subjectivity. The criteria we applied were based on our understanding of the key factors that drive player satisfaction and engagement in gaming. While our analysis is supported by quantitative clustering methods, the interpretation of the results reflects our knowledge of and insights into the gaming community. This approach keeps the analysis both informed and relevant to understanding player feedback.

6.1. LDA Model Results and Interpretation

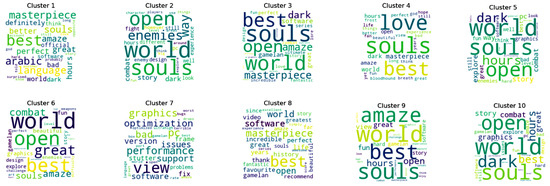

We used pyLDAvis, the Python port of the LDAvis package, to visualize the LDA topics and aid data interpretation. Because its output requires substantial space, we instead present the most salient terms for each topic as word clouds in Figure 3 and in Table A1.

Figure 3.

LDA Clusters.

6.1.1. Topic 1: Player Preferences in Open-World Gaming—World-Building, Combat, and Aesthetics

Topic 1, which accounts for 31.3% of the tokens in the analyzed corpus, centers on player discussions of important elements of open-world gaming experiences. Terms like “open”, “world”, and “explore” suggest an emphasis on large, explorable game environments. The presence of “combat”, “enemies”, and “difficulty” indicates that gameplay mechanics, particularly challenging combat, are a significant point of discussion. The words “dark” and “souls” suggest that the game draws heavily on Dark Souls, another FromSoftware franchise. The use of “design” and “story” likely points to the importance of narrative and aesthetic appeal for player immersion. This topic highlights the aspects that are pivotal to creating engaging open-world games: a blend of expansive environments, strategic combat, and immersive storytelling.

6.1.2. Topic 2: Technical Challenges and Community Solutions in PC Gaming—A Focus on Performance, Graphics, and Game Optimization

Topic 2, comprising 14.4% of the tokens, centers on technical issues in PC gaming. Frequent terms like “pc”, “issues”, “performance”, and “graphics” suggest that these discussions focus on technical performance. Words such as “stutter”, “frame”, and “drops” point to conversations about frame rate and graphical performance problems. The mention of “port” indicates concern with the quality of the PC port, which is often a source of technical problems. The terms “dark” and “souls” could imply that these technical discussions also reference the Dark Souls series, whose older titles have much lower system requirements. Additionally, terms like “fix”, “help”, and “support” reveal a community engaged in troubleshooting and seeking solutions to improve their gaming experience. This topic underscores the importance of optimization and technical support for PC players, which can significantly affect player satisfaction.

6.1.3. Topic 3: Exploring Narrative and Combat in Gaming—A Deep Dive into Game Mechanics, Storytelling, and Visual Experience

Topic 3, accounting for 11.2% of tokens, appears to center on the narrative and combat aspects of gaming. Prominent terms like “fun”, “story”, and “graphics” indicate a focus on the entertainment value and visual quality of games. Words such as “terrible”, “bad”, “enemy”, and “attack” suggest critical discussions of game mechanics or narratives. The presence of “fight”, “kill”, and “hit” reflects the combat elements of gameplay, while “empty”, “world”, and “controls” hint at evaluations of game world design and user interface. Additionally, “design”, “level”, “animations”, and “character” point towards discussions on the aesthetic and functional elements of game design. This topic suggests an engagement with the immersive and interactive components of gaming, where both narrative and action play significant roles in the gaming experience.

6.1.4. Topic 4: Celebrating Challenge and Immersion in Gaming—Discussions on Difficulty, Time Investment, and Open-World Masterpieces

Topic 4, accounting for 10.9% of tokens, explores the challenging nature of gaming. Words like “hard” and “challenge” point to player discussions about gameplay difficulty, and terms such as “love” and “masterpiece” reflect high praise for certain titles, emphasizing a community that values depth and difficulty in gaming experiences.

6.1.5. Topic 5: Embracing Exploration and Aesthetics in Gaming—A Journey through Open Worlds, Visual Beauty, and Engaging Challenges

Topic 5, with 7.6% of tokens, seems to focus on exploration and aesthetic appreciation within games. Frequent terms like “world”, “open”, “explore”, and “discovery” indicate a strong interest in open-world games that offer a variety of content and landscapes to investigate. Words such as “dark” and “souls” may refer to the atmospheric and challenging aspects of the game and to comparisons with the Dark Souls series. The presence of “beautiful”, “amazing”, and “fantastic” suggests admiration for game visuals and design, while “challenge”, “enemies”, and “fights” reflect the engaging gameplay elements. “Vast”, “experience”, and “adventure” highlight the expansive nature of gaming environments. This topic encapsulates the enjoyment of vast, open-ended game worlds that offer both beauty and challenges to players.

6.1.6. Topic 6: Critical Analysis and Diverse Opinions in Gaming—Discussions on Game Content, Performance, and Player Experiences

Topic 6, comprising 7.5% of tokens, seems to revolve around the critique and discussion of game content and performance. Key terms like “areas”, “story”, and “enemies” suggest a focus on the game narrative and the variety within game environments. “Bad”, “bugs”, and “problems” imply conversations around game issues and technical flaws. “Combat” and “score” are indicative of discussions about gameplay mechanics and player achievement. The presence of “soft”, “copy”, and “feels” could relate to the subjective experience of the game’s texture or to issues of originality and game design imitation. “Graphics” and “user” point towards discussions on visual quality and user interface, whereas “masterpiece” and “interest” suggest contrasting opinions on game quality. This topic captures the critical analysis and mixed reception that games may receive from their audience.

6.1.7. Topic 7: Appreciating the Finer Aspects of Gaming—A Focus on Narrative, Aesthetics, and Gameplay Experience

Topic 7, representing 5.9% of tokens, appears overwhelmingly positive, focusing on the praiseworthy aspects of the game. The terms “amaze”, “great”, “masterpiece”, “best”, and “beautiful” highlight strong admiration. “Story”, “breath”, and “explore” suggest an appreciation for narrative depth, world-building, and the exploratory aspects of gameplay. “Graphics”, “art”, “level”, and “design” point to visual and aesthetic appreciation. “Perfect”, “music”, and “character” indicate high regard for the game’s soundtrack, narrative, and character development. The term “wild” might refer to the game’s setting or the unpredictability of its experience. “Weapons” and “combat” likely concern gameplay mechanics. “Different direction” and “favourite history” could relate to unique game directions or a beloved historical narrative within the game. The presence of “literally” and “absolutely” emphasizes the strong positive sentiment expressed in this topic.

6.1.8. Topic 8: Balanced Perspectives in Gaming—Merging Praise with Critical Analysis in Game Reviews and Discussions

Topic 8, constituting 4.7% of tokens, seems to blend high praise with critical insights, in the manner of a balanced review. Words like “incredible”, “best”, “beautiful”, and “excellent” reflect strong positive sentiments, while “work”, “title”, “experience”, and “release” relate to production and release aspects. The mention of “soundtrack” and “performance” suggests a focus on audio and technical execution, with “formula” hinting at narrative or gameplay mechanics. Contrasting terms such as “poor” and “lack” alongside “worth” and “enjoyable” imply a spectrum of opinions, indicating thoughtful critique amid appreciation. Terms like “opinion”, “aspect”, and “however” denote detailed analysis, while “still” and “almost” acknowledge strengths alongside shortcomings. “Soft”, “hours”, “builds”, and “run” likely touch on technical discussions, pointing to software quality, time investment, and gameplay dynamics. This topic encapsulates a multifaceted conversation that balances commendation with discerning evaluation, spanning aesthetics to technical execution.

6.1.9. Topic 9: Navigating the Complexities of Tech and Gaming—A Blend of Technical Insights and User Experiences

Topic 9, with 4.0% of tokens, seems to portray a complex sentiment, possibly within the realm of technology or gaming. Dominant positive terms such as “best”, “masterpiece”, “amaze”, “awesome”, and “love” suggest a high level of appreciation and satisfaction. “Years”, “life”, “live”, and “day” could imply discussions about longevity, updates, or daily engagement with the game. Technical terms like “frame”, “optimization”, “rate”, and “performance” indicate discussions of software performance, possibly in the context of frame rates or system optimization. The presence of “bad”, “drops”, and “without” might denote criticism or issues encountered. “PC” and “language” suggest a focus on computing or localization aspects, while “wait”, “hope”, and “patches” imply anticipation of updates or fixes. “Long” might refer to the duration of support or of problems. “Buy” and “definitely” signal purchase recommendations or certainty in opinions. This topic appears to capture a nuanced dialogue that juxtaposes technical evaluation and user experience over time, reflecting a broad spectrum of user engagement and perspectives.

6.1.10. Topic 10: Exploring Strategic Depth in Gaming—Insights into Role-Playing and MOBA Genres with a Focus on Player Dynamics and Game Mechanics

Topic 10, which encompasses 2.4% of tokens, presents a diverse mix of terms that may relate to the role-playing genre and to comparisons with MOBA games. “Major” and “undo” could indicate significant gameplay mechanics or the ability to reverse actions. Expressions of gratitude (“thanks”) and negative terms (“too”, “no”) imply varied player feedback or in-game choices. “Demand” and “elder” might refer to in-game hierarchy, resources, or characters, while “simple” could reflect gameplay complexity. “League” and “legends” could indicate comparisons with League of Legends (LoL), covering its strategies, characters (champions), gameplay mechanics, or competitive e-sports scene. Words like “dead”, “die”, “enemies”, and “team” support this idea, as they are common in competitive gaming. “Finest” and “scenarios” suggest discussions of optimal strategies or game moments. “Sugar”, “music”, and “balls” are more ambiguous but could relate to game content or slang used within the community. “Big”, “are”, “front”, and “bots” may refer to AI-controlled characters or a significant element in the foreground of gameplay. Overall, this topic seems to revolve around the dynamics of a complex gaming environment with strategic depth, player interaction, and possibly a vibrant community.

6.2. BERT Model Results and Interpretation

Figure 4.

BERT Clusters.

6.2.1. Cluster 1: Crafting Culturally Rich, Challenging Gameplay with Immersive Narratives

Cluster 1 highlights players’ appreciation for games that blend challenging gameplay with deep narratives, emphasizing terms like “masters” and “perfect” alongside “narrative” and “story”. The mention of “Souls” and “Arabic” suggests a focus on culturally rich and challenging content, underscoring the value of narrative immersion in gaming.

6.2.2. Cluster 2: Immersive Combat and Story—Balancing Dark Themes with Fun Mechanics

Cluster 2 delves into the interplay between “combat” and “story”, where terms like “dark” and “fun” illustrate a player preference for games that balance challenging mechanics with enjoyable narratives, indicating a nuanced approach to game engagement.

6.2.3. Cluster 3: Exploring Vast Landscapes in the Ultimate Open World Game with High-Quality Production and Immersive Gamelan Music

In Cluster 3, terms like “open world”, “explore”, “best”, and “gamelan” highlight a strong player interest in expansive, well-crafted game worlds with immersive music, pointing to a preference for high-quality production values in gaming.

6.2.4. Cluster 4: Blending Narrative and Experience in an Open World Setting with “Bloodhound” Elements and Acclaimed Quality

Cluster 4 emphasizes the fusion of narrative and open-world experiences, with “bloodhound” and “best” suggesting a focus on detailed, high-quality game worlds that are appreciated for their depth and narrative integration.

6.2.5. Cluster 5: Embracing Challenge and Darkness—A Focus on Hard Combat, Superior PC Graphics, and Aesthetic Appeal

This cluster indicates a preference for games that offer challenging combat and high-quality graphics, with terms like “dark”, “hard”, and “PC” pointing to a player base that values both aesthetic appeal and gameplay difficulty.

6.2.6. Cluster 6: Artistic Harmony in Combat and World Design, Inspiring Beauty and Amazement

Cluster 6 showcases player appreciation for games that combine artistic design with engaging combat, using terms like “combat”, “world”, “beautiful”, and “amaze” to highlight the importance of cohesive and visually stunning game design.

6.2.7. Cluster 7: Addressing Performance Concerns—Focus on Graphics Optimization, Issue Resolution, and Developer Support

Cluster 7 reflects concerns with game performance, emphasizing the need for graphics optimization and developer support, with “graphics”, “optimization”, and “support” underscoring the community’s call for refined gaming experiences.

6.2.8. Cluster 8: Valuing Deep Lore and Longevity—Emphasizing Story, Historical Richness, and Sustained Software Support

Highlighting the importance of deep lore and game longevity, Cluster 8 uses terms like “story”, “history”, and “software” to underline player interest in games that offer rich narratives and enduring support.

6.2.9. Cluster 9: Crafting Memorable Experiences—Combining Amazing Combat with Engaging Storylines for Standout Moments

Cluster 9 focuses on creating memorable gaming experiences, merging “amaze” and “combat” with “story” to illustrate the effective combination of engaging narratives and dynamic combat in games.

6.2.10. Cluster 10: Mastering Elden Ring—Blending Story and Combat in a Dark, Challenging Open World with High-Quality Graphics

Finally, Cluster 10 centers on “Elden Ring”, where the blend of “story” and “combat” in a “dark” and “challenging” open world, coupled with high-quality graphics, highlights player preferences for immersive and complex gaming experiences.

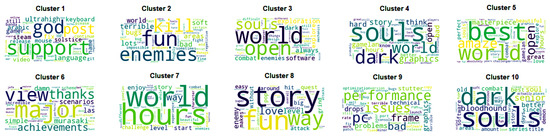

6.3. LDA-BERT Model Results and Interpretation

Figure 5.

LDA-BERT Clusters.

6.3.1. Cluster 1: Focused Discussions on Game Maintenance, Localization, and User Experience

This cluster underscores the gaming community’s focus on game maintenance, localization, and user experience, highlighting terms like “support”, “fix”, “update”, and “language”. The active discussion on localization, exemplified by mentions of “Arabic” and “English”, emphasizes the global nature of gaming and the need for inclusivity. The frequent references to “keyboard” and “mouse” point to a deep interest in interface design, while “ultrahigh” might suggest discussions on high-performance settings or graphics, underscoring a commitment to enhancing the overall gaming experience.

6.3.2. Cluster 2: Balancing Combat Enjoyment and Technical Issues in Game Discussions

This cluster delves into the balance between combat enjoyment and technical issues within games, with a strong emphasis on “enemies”, “fight”, “kill”, and “damage”, reflecting a focus on combat mechanics. Discussions also highlight technical problems, with terms like “terrible”, “bugs”, and “problem” indicating critical feedback on game performance. The juxtaposition of “fun” with technical issues underscores the community’s desire for enjoyable yet technically sound gaming experiences.

6.3.3. Cluster 3: Deep Dive into Game Design—Discussing “Souls”-like Challenges, Combat Mechanics, and Exploration

This cluster explores in-depth game design discussions, particularly focusing on “Souls”-like challenges, combat mechanics, and exploration. Terms such as “souls”, “world”, and “open” suggest a preference for challenging, expansive game environments. The emphasis on “combat” and “exploration” indicates a community valuing a blend of engaging gameplay and world discovery, highlighting the appreciation for games that offer both depth and breadth in their design.

6.3.4. Cluster 4: Discussing Complex Game Worlds and Mechanics, Emphasizing Challenge and Design in Gameplay

Discussion in this cluster centers on complex game worlds and mechanics, with a focus on challenging gameplay and intricate design, as indicated by terms like “souls”, “world”, and “dark”. The dialogue around “story”, “hours”, and “graphics” points to engagement with narrative depth and visual quality, emphasizing the value the community places on well-crafted and challenging gaming experiences.

6.3.5. Cluster 5: Celebrating Artistic and Engaging Games with Emphasis on Aesthetics, Story, and Gameplay Excellence

This cluster celebrates artistic and engaging games, highlighting a community that values aesthetics, story, and gameplay excellence. Terms like “best”, “amaze”, and “beautiful” reflect admiration for games that excel in visual and narrative aspects. Reviewers also appreciate challenging gameplay, as denoted by “combat” and “weapons”, suggesting a holistic appreciation for games that combine artistic merit with engaging mechanics.

6.3.6. Cluster 6: Artistic Harmony in Combat and World Design, Inspiring Beauty and Amazement

Cluster 6 emphasizes the interplay between combat and world design, showcasing player appreciation for games that meld artistic vision with engaging mechanics. The prominence of terms like “combat”, “world”, “beautiful”, and “amaze” reflects a community that values both the aesthetic appeal and the interactive dynamics of gaming. The discussion around “art” and “design” highlights a recognition of the craftsmanship behind game creation, underscoring the importance of a harmonious blend of visual beauty and gameplay.

6.3.7. Cluster 7: Addressing Performance Concerns—Focus on Graphics Optimization, Issue Resolution, and Developer Support

This cluster highlights the community’s focus on addressing performance concerns, particularly graphics optimization and issue resolution. The use of terms like “graphics”, “optimization”, and “issues” points to ongoing discussions about enhancing the technical aspects of gaming. The mention of “support” and “fix” suggests an active engagement with developers, indicating a collaborative effort between the community and creators to refine and improve gaming experiences, emphasizing the value placed on technical excellence and responsive support.

6.3.8. Cluster 8: Valuing Deep Lore and Longevity—Emphasizing Story, Historical Richness, and Sustained Software Support

Cluster 8 delves into the appreciation for deep lore and game longevity, with a significant focus on story, historical richness, and sustained developer support. Terms like “story”, “history”, and “software” illustrate a community that values rich narrative depth and games that offer long-term engagement. The discussion reflects a desire for continued support and updates, highlighting the importance of maintaining a vibrant and evolving game world that keeps players engaged over time.

6.3.9. Cluster 9: Crafting Memorable Experiences—Combining Amazing Combat with Engaging Storylines for Standout Moments

This cluster focuses on crafting memorable gaming experiences by combining excellent combat with engaging storylines. The prevalence of terms like “amaze”, “story”, and “combat” indicates a community preference for games that deliver standout moments through a blend of action and narrative. The emphasis on creating memorable experiences suggests that players value games that leave a lasting impact, blending dynamic gameplay with compelling storytelling.

6.3.10. Cluster 10: Mastering Elden Ring—Blending Story and Combat in a Dark, Challenging Open World with High-Quality Graphics

Cluster 10 specifically addresses discussions around mastering “Elden Ring”, highlighting the blend of story and combat in a challenging open-world setting. The focus on terms like “story”, “combat”, “dark”, and “graphics” reflects the community’s engagement with the game’s narrative depth, challenging gameplay, and visual quality. The discussion underscores a player preference for games that offer a rich, immersive experience, combining a compelling narrative with intricate gameplay mechanics in a visually stunning environment.

7. Evaluation of Study Accuracy and Validity

Our study’s reliability was evaluated using topic coherence, perplexity, and Silhouette score metrics. Higher coherence and lower perplexity indicate better model performance, while a higher Silhouette score reflects clearer clustering. The Silhouette score measures the quality of clustering by assessing how similar a document is to its own cluster compared to others [50]. Scores range from −1 to 1, with higher values indicating well-separated and distinct clusters.
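To make the Silhouette definition concrete, the sketch below computes the mean Silhouette coefficient in plain Python, following the standard formula s(i) = (b(i) − a(i)) / max(a(i), b(i)). Euclidean distance and scoring singleton clusters as 0 are assumptions made for illustration, not settings confirmed for this study; libraries such as scikit-learn provide an optimized `silhouette_score`.

```python
import math
from statistics import mean


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def silhouette_score(points, labels):
    """Mean Silhouette coefficient of a clustering (ranges from -1 to 1).

    a(i): mean distance from point i to the other members of its own cluster.
    b(i): lowest mean distance from point i to the members of any other cluster.
    s(i) = (b(i) - a(i)) / max(a(i), b(i)); points in singleton clusters score 0.
    """
    clusters = set(labels)
    if len(clusters) < 2:
        raise ValueError("the Silhouette score needs at least two clusters")

    scores = []
    for i, (p, own) in enumerate(zip(points, labels)):
        same = [euclidean(p, q) for j, (q, lab) in enumerate(zip(points, labels))
                if lab == own and j != i]
        if not same:  # singleton cluster: s(i) is defined as 0 here
            scores.append(0.0)
            continue
        a = mean(same)
        b = min(
            mean(euclidean(p, q) for q, lab in zip(points, labels) if lab == other)
            for other in clusters if other != own
        )
        denom = max(a, b)
        scores.append(0.0 if denom == 0 else (b - a) / denom)
    return mean(scores)


# Two well-separated groups score close to 1; interleaving the labels drives it negative.
pts = [(0, 0), (0, 1), (10, 0), (10, 1)]
print(silhouette_score(pts, [0, 0, 1, 1]))  # well-separated clusters
print(silhouette_score(pts, [0, 1, 0, 1]))  # poorly separated clusters
```

A score near 1 means each document sits much closer to its own cluster than to the nearest other cluster, which is the sense in which a higher Silhouette score indicates more distinct clusters.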

In our study, after generating document vectors using BERT embeddings, LDA vectors, or their combination in the LDA-BERT model, we applied the K-Means clustering algorithm and calculated the Silhouette score to determine how distinct and meaningful the clusters were.
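The clustering step described above might look like the minimal sketch below. It assumes that an LDA-BERT document vector is the LDA topic distribution, scaled by a weight `gamma`, concatenated with the document's BERT embedding; `combine_vectors`, `gamma`, and the toy vectors are hypothetical illustrations rather than the study's exact configuration. K-Means is written out with Lloyd's algorithm for transparency; in practice a library implementation such as scikit-learn's `KMeans` would be used, and its labels passed to a Silhouette scorer.

```python
import random


def combine_vectors(lda_topics, bert_embedding, gamma=1.0):
    """Hypothetical LDA-BERT vector: gamma-weighted topic distribution + BERT embedding."""
    return [gamma * x for x in lda_topics] + list(bert_embedding)


def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def nearest(v, centroids):
    """Index of the centroid closest to vector v."""
    return min(range(len(centroids)), key=lambda c: squared_distance(v, centroids[c]))


def kmeans(vectors, k, max_iter=100, seed=0):
    """Lloyd's K-Means. Returns (labels, centroids)."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]  # random initial centroids
    labels = None
    for _ in range(max_iter):
        # Assignment step: each document vector joins its nearest centroid.
        new_labels = [nearest(v, centroids) for v in vectors]
        if new_labels == labels:  # assignments unchanged: converged
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


lda = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]   # toy topic distributions
bert = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]  # toy 2-d "embeddings"
docs = [combine_vectors(t, e, gamma=2.0) for t, e in zip(lda, bert)]
labels, _ = kmeans(docs, k=2)
print(labels)
```

Scaling the LDA part with `gamma` is one way to control how much the topic distribution contributes relative to the (usually much longer) BERT embedding, which relates to the vector-scaling refinements mentioned under Future Work.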

For the LDA-BERT model, perplexity was calculated based solely on the LDA component of the model, as the BERT embeddings do not generate probability distributions. The LDA component of the LDA-BERT model produces topic distributions, which allow for the traditional computation of perplexity, similar to how it is conducted in pure LDA models. The BERT embeddings were used to enhance the semantic richness of the representation but were not involved in the perplexity computation.
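For reference, the standard LDA perplexity (as defined in Blei et al. [12]) is exp(−Σ log p(w) / N) over all N tokens, where p(w | d) = Σₖ θ_dk φ_kw mixes a document's topic proportions θ with the topic-word distributions φ. Because it needs only θ and φ, it can be computed from the LDA component alone, as described above. The plain-Python sketch below uses toy inputs; note that libraries such as gensim report a per-word variational bound rather than exactly this quantity.

```python
import math


def lda_perplexity(docs, theta, phi):
    """Perplexity of tokenized documents under fitted LDA parameters.

    docs  : list of documents, each a list of integer word ids
    theta : theta[d][k] = proportion of topic k in document d
    phi   : phi[k][w]   = probability of word id w under topic k
    """
    log_likelihood = 0.0
    n_tokens = 0
    for d, doc in enumerate(docs):
        for w in doc:
            # p(w | d) mixes the topic-word probabilities by the document's topic weights.
            p_w = sum(theta[d][k] * phi[k][w] for k in range(len(phi)))
            log_likelihood += math.log(p_w)
            n_tokens += 1
    return math.exp(-log_likelihood / n_tokens)


# A model that spreads probability evenly over a 4-word vocabulary is as
# "confused" as a fair 4-sided die, so its perplexity is (up to rounding) 4.
uniform_phi = [[0.25] * 4, [0.25] * 4]
print(lda_perplexity([[0, 1, 2, 3]], [[0.5, 0.5]], uniform_phi))
```

Lower values mean the model assigns higher probability to the observed words, which is why lower perplexity is read as better predictive performance.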

The LDA model achieved strong coherence and perplexity scores, and the LDA-BERT model achieved slightly higher coherence, while LDA produced the highest Silhouette score; together, these results support the effectiveness of our methods. Refer to Table 1 for detailed metrics. Research indicates that BERT-based models do not evaluate or recognize discourse coherence effectively because they focus on token- and sentence-level embeddings rather than on broader text structures [51]. Furthermore, BERT is not designed to compute perplexity in the traditional manner: it is trained with a masked-language-modeling objective rather than to predict text sequentially, which makes perplexity unsuitable as an evaluation metric for it [52]. Additionally, coherence and perplexity are traditionally associated with probabilistic models like LDA, where they assess the semantic similarity within topics and the model’s predictive accuracy, respectively. These metrics are not directly applicable to transformer-based models like BERT because of their different underlying architectures and operational focuses [53].
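To illustrate how a coherence metric rewards topics whose top words appear together, the sketch below implements UMass coherence (Mimno et al., 2011), which sums log((D(wᵢ, wⱼ) + 1) / D(wⱼ)) over pairs of a topic's top words, where D counts documents and wⱼ is the higher-ranked word. This section does not restate which coherence measure produced Table 1, so UMass serves here only as an easy-to-follow example, and the toy documents are hypothetical.

```python
import math
from itertools import combinations


def umass_coherence(top_words, documents):
    """UMass coherence of one topic (higher means more coherent).

    top_words : the topic's top words, ordered from most to least probable
    documents : iterable of tokenized documents (lists of words)
    """
    doc_sets = [set(doc) for doc in documents]

    def doc_freq(*words):
        # Number of documents that contain every one of the given words.
        return sum(1 for s in doc_sets if all(w in s for w in words))

    score = 0.0
    # combinations() keeps list order, so `higher` is always ranked above `lower`.
    for higher, lower in combinations(top_words, 2):
        # Assumes every top word occurs in at least one document (D(higher) > 0).
        score += math.log((doc_freq(higher, lower) + 1) / doc_freq(higher))
    return score


docs = [["open", "world", "explore"], ["open", "world"],
        ["combat", "boss"], ["open", "combat"]]
print(umass_coherence(["open", "world"], docs))    # words that co-occur
print(umass_coherence(["combat", "world"], docs))  # words that never co-occur
```

Because the score depends only on word co-occurrence counts, it applies naturally to LDA's word-list topics, which is consistent with the observation above that coherence is tied to probabilistic topic models.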

Table 1.

Evaluation Metrics for LDA, BERT, and LDA-BERT Models.

Our analysis revealed that certain game components, such as aesthetics, narrative, combat mechanics, graphics, story, and performance, were frequently highlighted by users in their reviews. We applied three models (LDA, BERT, and LDA-BERT) to explore these themes. While LDA-BERT combined LDA’s focus on word frequency with BERT’s contextual depth, the differences in coherence and perplexity scores were modest, suggesting that although LDA-BERT offers a different perspective, its numerical advantages over LDA are limited.

8. Discussion

8.1. Understanding Player Preferences and Perceptions

The analysis of Elden Ring reviews provides significant insights into player preferences and perceptions. However, we acknowledge that some clusters contained common words that reduced their interpretability and informativeness. For instance, terms like “best”, “souls”, and “like” appeared in multiple clusters, indicating high inter-cluster similarity and low intra-cluster similarity in some cases.

8.2. Emphasis on Open-World Gaming and Aesthetics

The frequent use of terms like “open”, “explore”, and “world” suggests that players highly value the ability to explore expansive game environments. Additionally, the visual appeal of the game, highlighted by terms such as “beautiful” and “design”, plays a crucial role in player satisfaction. These findings underscore the importance of creating visually stunning and immersive open-world environments in enhancing player engagement.

8.3. Technical Challenges and Performance

Although the reviews were primarily positive, some also touched on technical challenges, such as performance issues and optimization. Terms like “performance”, “graphics”, and “optimization” indicate areas where even highly acclaimed games like Elden Ring can face criticism. Addressing these technical aspects is crucial for maintaining player satisfaction.

8.4. Narrative and Combat Mechanics

Players frequently discussed the narrative and combat mechanics, emphasizing the balance between an engaging story and challenging gameplay. Terms such as “story”, “combat”, and “difficulty” reflect the critical role these elements play in the overall gaming experience.

8.5. New Insights and Implications

The use of topic modeling techniques like LDA, BERT, and LDA-BERT not only refines the extraction of meaningful topics from game reviews but also provides nuanced insights into player sentiments and preferences, which are valuable to game developers, designers, marketers, and academic researchers. Most existing studies (e.g., [42,43,44,54]) employed BERT for sentiment analysis rather than topic modeling. In contrast, our research compares three distinct methods to evaluate their efficacy in pinpointing the most crucial game components, aiming to discern which method yields deeper insights for game developers.

Another distinctive aspect of our work is its comprehensive preprocessing stage. While other studies exclude non-English reviews, we translated all such reviews to ensure that no valuable feedback was overlooked. We also identified and addressed noise in the data through a detailed and precise cleaning process, and our approach included a typo-correction step that checked words for spelling errors, improving the quality and reliability of the textual data used in our analysis. Whereas a number of studies (e.g., [4,13,14,15]) used existing software tools to extract reviews from websites, we developed a custom web scraping script to gather data from multiple pages of the Metacritic website; for instance, we collected reviews for Elden Ring from 10 different web pages.

Overall, our study can help game developers by providing detailed feedback and directing their attention to the areas that users liked or disliked. In particular, our analysis extracts in-depth feedback on combat mechanics and game difficulty from player discussions, offering developers precise insights into which gameplay elements are perceived as engaging or frustrating. This targeted feedback can help developers refine and optimize gameplay to boost player satisfaction and engagement.
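The stopword-removal and typo-correction steps could look roughly like the sketch below. The specific tool the study used for typo correction is not named here, so Python's standard `difflib.get_close_matches` stands in for it as an assumption; `VOCABULARY` is a hypothetical reference word list, and `CUSTOM_STOPWORDS` holds only a few entries taken from the custom stopword list in Table A4.

```python
import difflib
import re

# Hypothetical reference vocabulary; a real pipeline would use a full dictionary.
VOCABULARY = ["combat", "graphics", "optimization", "performance",
              "story", "world", "open", "explore"]
# A handful of entries from the custom stopword list in Table A4.
CUSTOM_STOPWORDS = {"the", "it", "is", "spoiler", "review", "boss", "like", "good"}


def correct_typo(token, vocabulary=VOCABULARY, cutoff=0.8):
    """Return the closest vocabulary word, or the token unchanged if none is close enough."""
    if token in vocabulary:
        return token
    matches = difflib.get_close_matches(token, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else token


def preprocess(review):
    """Lower-case and tokenize a review, drop custom stopwords, then fix likely typos."""
    tokens = re.findall(r"[a-z]+", review.lower())
    return [correct_typo(t) for t in tokens if t not in CUSTOM_STOPWORDS]


print(preprocess("The combta is great but the optimizaton is bad"))
```

The `cutoff` threshold trades recall for safety: a lower value corrects more misspellings but risks rewriting legitimate words into unrelated vocabulary entries.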

8.6. Commonality in Topic Modeling

It is common for topic modeling techniques like LDA and BERT to produce clusters with overlapping words, particularly when analyzing reviews for the same game. This overlap occurs due to shared themes, as reviews often discuss similar aspects of the game, leading to some common vocabulary. Additionally, players tend to use similar terms to describe their experiences, contributing to the presence of common words across different clusters.

In our analysis, words like “world”, “souls”, “best”, “open”, “game”, “combat”, “performance”, “dark”, “hours”, “enemies”, “story”, “graphics”, “fun”, “amaze”, and “great” appeared across multiple clusters in all three models, indicating recurring themes in player feedback.

To provide a quantitative measure, we calculated the average word overlap between clusters produced by the different models using the following formula:

This formula provides a measure of the vocabulary shared between two clusters. The results are as follows:

- 40% word overlap between LDA and BERT clusters.

- 40% word overlap between LDA and LDA-BERT clusters.

- 55.6% word overlap between BERT and LDA-BERT clusters.

These findings suggest a moderate degree of vocabulary similarity across the models, with the BERT and LDA-BERT clusters showing the highest overlap (55.6%). This overlap reflects the shared thematic content discussed by players.
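As a hedged illustration of how such an overlap can be computed, the sketch below assumes a Jaccard ratio, |A ∩ B| / |A ∪ B|, between two clusters' top-word lists, and averages each cluster's best match in the other model. Both choices are assumptions for illustration only, and the sketch is not expected to reproduce the percentages reported above.

```python
def jaccard(a, b):
    """Jaccard overlap of two word lists: |A ∩ B| / |A ∪ B|."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def average_best_overlap(model_a, model_b):
    """Mean, over model A's clusters, of each cluster's best Jaccard match in model B."""
    return sum(max(jaccard(ca, cb) for cb in model_b) for ca in model_a) / len(model_a)


# LDA Cluster 3 (Table A1) and BERT Cluster 3 (Table A2).
lda_c3 = ["fun", "graphics", "story", "terrible", "enemies", "fight",
          "attack", "dark", "open", "world", "software", "fps"]
bert_c3 = lda_c3 + ["bad", "empty", "understand", "master", "amazing",
                    "masterpiece", "incredible", "combat"]
print(jaccard(lda_c3, bert_c3))  # 12 shared words out of 20 distinct words
```

For example, all 12 words of LDA Cluster 3 also appear among the 20 words of BERT Cluster 3, giving a Jaccard overlap of 0.6 for that pair.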

9. Conclusions

We implemented LDA, BERT, and LDA-BERT models to analyze Elden Ring reviews, uncovering player preferences for open-world gaming, the significance of technical performance in PC games, and the interplay between narrative and combat. The study highlights the complementary strengths of traditional and advanced NLP methods, where LDA excels in topic identification and BERT in linguistic analysis. While the LDA-BERT model combines these approaches, the differences in performance metrics such as coherence and perplexity were modest, suggesting that the LDA-BERT model offers a balanced integration rather than a significant improvement. These insights underscore the importance of community feedback in game development, providing a comprehensive framework for understanding player feedback and improving game experiences.

9.1. Limitations

This study has several limitations. Interpreting topic modeling outputs is subjective, which can introduce biases and inconsistencies in identifying topics and judging their relevance, affecting the precision and applicability of the analysis. Additionally, selecting the topic count from coherence scores may not accurately represent the complexity of game-related discourse, potentially oversimplifying or fragmenting the dialogue and thus reducing the effectiveness and relevance of the analysis. Metrics such as the Silhouette, coherence, and perplexity scores also have limitations, as they do not always align with meaningful separations or with human perceptions of topic quality. Despite extensive preprocessing, some common words remained in the clusters; future work could explore advanced filtering methods or keyword extraction techniques to improve cluster quality. Finally, the analysis was conducted on a single video game known for its high rating and market success, so the findings may not generalize to games with more mixed reception.

9.2. Future Work

For a more comprehensive understanding, future work should apply our methodology to games with a broader range of reviews, including those with mixed or negative feedback. This would allow us to capture a wider spectrum of player sentiments. Additionally, efforts should be made to enhance the interpretability of topic models by integrating advanced visualization techniques and algorithmic improvements, which could reduce the reliance on human interpretation and mitigate potential biases.

Expanding the analysis to include multilingual reviews, especially for globally popular games like Elden Ring, could provide a richer, more nuanced view of player discussions across different cultures and languages. To further improve the quality of the insights, future studies should focus on more effective removal of common and uninformative words by employing advanced keyword extraction and filtering methods. This would help in generating more coherent and meaningful clusters. Moreover, we plan to explore alternative clustering techniques and further refine the scaling of LDA and BERT vectors to improve cluster separation, potentially enhancing the Silhouette scores.

Lastly, we plan to explore the potential of advanced NLP models like GPT-3 and T5 for topic modeling in game reviews, as these models may offer deeper and more nuanced insights into player feedback.

Author Contributions

Conceptualization, F.D. and L.Z.; Methodology, F.D. and L.Z.; Software, F.D.; Validation, F.D. and L.Z.; Formal analysis, F.D. and L.Z.; Investigation, F.D. and L.Z.; Writing—original draft, F.D. and L.Z.; Writing—review & editing, F.D. and L.Z.; Supervision, L.Z.; Project administration, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by NSERC Discovery grant number RGPIN-2018-05921.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The code base for this paper can be found at GitHub Repository: https://github.com/Taraneh-Deh/Topic-Modeling-in-Game-Reviews (accessed on 15 September 2024).

Acknowledgments

To revise and condense the paper, we utilized OpenAI’s ChatGPT. The AI tool did not contribute to the development of any new text.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| NLP | Natural Language Processing |

| LDA | Latent Dirichlet Allocation |

| BERT | Bidirectional Encoder Representations from Transformers |

Appendix A

Table A1.

Top Words for Each Topic Cluster of LDA Model.

| Cluster | Top Words |
|---|---|
| Cluster 1 | masterpiece, souls, open, world, explore, combat, dark, design, story, best, difficulty, enemies, graphics |
| Cluster 2 | performance, graphics, optimization, fps, issues, support, stutter, pc, technical, bugs, fix, rate, software |
| Cluster 3 | fun, graphics, story, terrible, enemies, fight, attack, dark, open, world, software, fps |
| Cluster 4 | love, souls, dark, best, masterpiece, hours, story, world, combat, great, design, fun |
| Cluster 5 | best, world, open, souls, explore, story, graphics, hours, amazing, beautiful, combat |
| Cluster 6 | combat, world, great, open, story, explore, areas, soft, bad, graphics, enemies, design |
| Cluster 7 | graphics, optimization, great, amaze, masterpiece, performance, issues, pc, support, bugs, rate |
| Cluster 8 | best, work, great, software, title, dark, incredible, amaze, perfect, world, story |
| Cluster 9 | best, bad, soft, years, history, amazing, graphics, drop, optimization, world |
| Cluster 10 | undo, major, thanks, fix, best, souls, years, great, amazing, dark |

Table A2.

Top Words for Each Topic Cluster of BERT Model.

| Cluster | Top Words |
|---|---|
| Cluster 1 | masterpiece, definitely, best, amaze, perfect, great, arabic, language, better, souls, world, hours, dark, open, story, explore, think, design |
| Cluster 2 | pc, performance, issues, graphics, gamelan, stutter, souls, enemies, fun, fps, support, attack, high, different, however, way, still, hard, look, experience |
| Cluster 3 | fun, graphics, story, terrible, enemies, fight, attack, dark, open, world, software, fps, bad, empty, understand, master, amazing, masterpiece, incredible, combat |
| Cluster 4 | love, souls, dark, best, masterpiece, hours, story, world, combat, great, design, fun, better, perfect, god |
| Cluster 5 | best, world, open, souls, explore, story, graphics, hours, amazing, beautiful, combat, discover, variety, series |
| Cluster 6 | areas, score, enemies, world, story, combat, open, hard, graphics, dark, design, great, soft, bugs, problems, amazing |
| Cluster 7 | amaze, great, masterpiece, bugs, music, combat, world, graphics, design, exploration, beautiful, favourite, breath, optimization, optimized |
| Cluster 8 | title, work, software, incredible, best, world, story, amazing, years, history, dark, optimization, experience, greatest, simple |
| Cluster 9 | best, bad, issues, frame, years, optimization, software, performance, view, graphics, drops, rate, soft, buy, wait |
| Cluster 10 | undo, major, thanks, fix, world, open, souls, combat, amazing, story, years, graphics, demanded, murasaki, finest |

Table A3.

Top Words for Each Topic Cluster of LDA-BERT Model.

| Cluster | Top Words |
|---|---|
| Cluster 1 | good, post, support, ultrahigh, language, release, mouse, keyboard, arabic, fix, stutter, pc, fps, video |
| Cluster 2 | kill, fun, game, terrible, bugs, world, frame, soft, pc, technical, exploration, fix, support, enemy |
| Cluster 3 | souls, world, open, exploration, dark, combat, amazing, always, difficulty, said, team, design, story |
| Cluster 4 | world, best, graphics, story, hard, think, gamelan, combat, better, masterpiece, hours, hour |
| Cluster 5 | best, world, open, souls, masterpiece, amaze, beautiful, hours, love, explore, graphics, pc |
| Cluster 6 | major, perfect, view, achievements, incredible, think, scenarios, quest, combat |
| Cluster 7 | world, hours, weapon, level, start, challenge, enjoy, enemies, great, combat |
| Cluster 8 | story, fun, way, love, levels, attack, quest, think, issues, support, rate, bugs, performance |
| Cluster 9 | pc, bad, bugs, patch |
| Cluster 10 | dark, souls, old, combat, boat, series, title, amount, actually, love, Bloodhound |

Table A4.

List of Custom Stopwords Removed.

| Custom Stopwords |
|---|
| done, a, for, i, the, expand, click, contain, spoiler, it, be, in, one, get, even, year, guess, see, got, feel, want, tell, absolute, every, is, some, would, else, in, de, said, us, by, little, decided, bethesda, let, must, gam, thousands, los, la, al, to, contains, of, the, ago, much, really, ever, games, played, bosses, go, like, good, say, lot, diego, que, give, review, reviews, people, everyone, never, per, boss, also, many, new, may, back, try, vet, made, make, could, spoilers, first, una, fps, not, find |

References

- Alferova, L. Researching Potential Customers for the Video Game as a Service in the European Market. Hämeen Ammattikorkeakoulu University of Applied Sciences. 2016. Available online: https://www.theseus.fi/handle/10024/117058 (accessed on 15 September 2024).

- Chambers, C.; Feng, W.C.; Sahu, S.; Saha, D. Measurement-based characterization of a collection of on-line games. In Proceedings of the 5th ACM SIGCOMM Conference on Internet Measurement, Berkeley, CA, USA, 19–21 October 2005; p. 1. [Google Scholar]

- Dehghani, F.; Zaman, L. Facial Emotion Recognition in VR Games. In Proceedings of the 2023 IEEE Conference on Games (CoG), Boston, MA, USA, 21–24 August 2023; pp. 1–4. [Google Scholar] [CrossRef]

- Zagal, J.P.; Ladd, A.; Johnson, T. Characterizing and understanding game reviews. In Proceedings of the 4th International Conference on Foundations of Digital Games, Orlando, FL, USA, 26–30 April 2009; pp. 215–222. [Google Scholar]

- McNamara, A. Up against the wall: Game makers take on the press. In Proceedings of the Game Developer’s Conference, San Francisco, CA, USA, 18 February 2008; Volume 8. [Google Scholar]

- Stuart, K. State of play: Is there a role for the New Games Journalism. The Guardian. Available online: https://www.theguardian.com/technology/gamesblog/2005/feb/22/stateofplayi (accessed on 15 September 2024).

- Sherrick, B.; Schmierbach, M. The effects of evaluative reviews on market success in the video game industry. Comput. Games J. 2016, 5, 185–194. [Google Scholar] [CrossRef]

- Greenwood-Ericksen, A.; Poorman, S.R.; Papp, R. On the validity of Metacritic in assessing game value. Eludamos J. Comput. Game Cult. 2013, 7, 101–127. [Google Scholar] [CrossRef] [PubMed]

- Vieira, A.; Brandão, W. Evaluating Acceptance of Video Games using Convolutional Neural Networks for Sentiment Analysis of User Reviews. In Proceedings of the 30th ACM Conference on Hypertext and Social Media, Hof, Germany, 17–20 September 2019; pp. 273–274. [Google Scholar]

- Hu, Y.; Boyd-Graber, J.; Satinoff, B.; Smith, A. Interactive topic modeling. Mach. Learn. 2014, 95, 423–469. [Google Scholar] [CrossRef]

- Blei, D.M. Probabilistic topic models. Commun. ACM 2012, 55, 77–84. [Google Scholar] [CrossRef]

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022. [Google Scholar]

- Huang, J.; Rogers, S.; Joo, E. Improving restaurants by extracting subtopics from yelp reviews. In Proceedings of the iConference 2014 (Social Media Expo), Berlin, Germany, 4–7 March 2014. [Google Scholar]

- Heng, Y.; Gao, Z.; Jiang, Y.; Chen, X. Exploring hidden factors behind online food shopping from Amazon reviews: A topic mining approach. J. Retail Consum. Serv. 2018, 42, 161–168. [Google Scholar] [CrossRef]

- Girdhar, Y.; Giguere, P.; Dudek, G. Autonomous adaptive underwater exploration using online topic modeling. In Experimental Robotics, Proceedings of the 13th International Symposium on Experimental Robotics, Quebec City, QC, Canada, 18–21 June 2012; Springer: Cham, Switzerland, 2013; pp. 789–802. [Google Scholar]

- Liu, L.; Tang, L.; Dong, W.; Yao, S.; Zhou, W. An overview of topic modeling and its current applications in bioinformatics. SpringerPlus 2016, 5, 1–22. [Google Scholar] [CrossRef]

- Hong, L.; Davison, B.D. Empirical study of topic modeling in twitter. In Proceedings of the First Workshop on Social Media Analytics, Washington, DC, USA, 25–28 July 2010; pp. 80–88. [Google Scholar]

- Tran, T.; Ba, H.; Huynh, V.N. Measuring hotel review sentiment: An aspect-based sentiment analysis approach. In Uncertainty in Knowledge Modelling and Decision Making, Proceedings of the 7th International Symposium, IUKM 2019, Nara, Japan, 27–29 March 2019; Springer: Cham, Switzerland, 2019; pp. 393–405.

- Lubis, F.F.; Rosmansyah, Y.; Supangkat, S.H. Topic discovery of online course reviews using LDA with leveraging reviews helpfulness. Int. J. Electr. Comput. Eng. 2019, 9, 426.

- Kwon, H.J.; Ban, H.J.; Jun, J.K.; Kim, H.S. Topic modeling and sentiment analysis of online review for airlines. Information 2021, 12, 78.

- Duan, W.; Gu, B.; Whinston, A.B. Do online reviews matter?—An empirical investigation of panel data. Decis. Support Syst. 2008, 45, 1007–1016.

- Hennig-Thurau, T.; Marchand, A.; Hiller, B. The relationship between reviewer judgments and motion picture success: Re-analysis and extension. J. Cult. Econ. 2012, 36, 249–283.

- Hu, N.; Pavlou, P.A.; Zhang, J. Can online reviews reveal a product’s true quality? Empirical findings and analytical modeling of online word-of-mouth communication. In Proceedings of the 7th ACM Conference on Electronic Commerce, Ann Arbor, MI, USA, 11–15 June 2006; pp. 324–330.

- Joshi, M.; Das, D.; Gimpel, K.; Smith, N.A. Movie reviews and revenues: An experiment in text regression. In Proceedings of the Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics, Los Angeles, CA, USA, 2–4 June 2010; pp. 293–296.

- King, T. Does film criticism affect box office earnings? Evidence from movies released in the US in 2003. J. Cult. Econ. 2007, 31, 171–186.

- Reinstein, D.A.; Snyder, C.M. The influence of expert reviews on consumer demand for experience goods: A case study of movie critics. J. Ind. Econ. 2005, 53, 27–51.

- Wang, F.; Liu, X.; Fang, E.E. User reviews variance, critic reviews variance, and product sales: An exploration of customer breadth and depth effects. J. Retail. 2015, 91, 372–389.

- Yu, Y.; Nguyen, B.H.; Yu, F.; Huynh, V.N. Discovering topics of interest on Steam community using an LDA approach. In Advances in the Human Side of Service Engineering, Proceedings of the International Conference on Applied Human Factors and Ergonomics, Virtual, 25–29 July 2021; Springer: Cham, Switzerland, 2021; pp. 510–517.

- Jeffrey, R.; Bian, P.; Ji, F.; Sweetser, P. The Wisdom of the Gaming Crowd. In Proceedings of the 2020 Annual Symposium on Computer-Human Interaction in Play, Virtual, 2–4 November 2020; pp. 272–276.

- Zagal, J.P.; Tomuro, N.; Shepitsen, A. Natural language processing in game studies research: An overview. Simul. Gaming 2012, 43, 356–373.

- Li, X.; Zhang, Z.; Stefanidis, K. A data-driven approach for video game playability analysis based on players’ reviews. Information 2021, 12, 129.

- Lin, D.; Bezemer, C.P.; Zou, Y.; Hassan, A.E. An empirical study of game reviews on the Steam platform. Empir. Softw. Eng. 2019, 24, 170–207.

- Bond, M.; Beale, R. What makes a good game?: Using reviews to inform design. In Proceedings of the British Computer Society Conference on Human-Computer Interaction, Cambridge, UK, 1–5 September 2009.

- Kang, H.N.; Yong, H.R.; Hwang, H.S. A Study of analyzing on online game reviews using a data mining approach: STEAM community data. Int. J. Innov. Manag. Technol. 2017, 8, 90.

- McCallum, A.; Wang, X.; Mohanty, N. Joint group and topic discovery from relations and text. In Statistical Network Analysis: Models, Issues, and New Directions, Proceedings of the ICML Workshop on Statistical Network Analysis, Pittsburgh, PA, USA, 29 June 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 28–44.

- Choi, H.S.; Maasberg, M. An empirical analysis of experienced reviewers in online communities: What, how, and why to review. Electron. Mark. 2022, 32, 1293–1310.

- Gifford, B. Reviewing the Critics: Examining Popular Video Game Reviews through a Comparative Content Analysis. Ph.D. Thesis, Cleveland State University, Cleveland, OH, USA, 2013.

- Kwak, M.; Park, J.S.; Shon, J.G. Identifying Critical Factors for Successful Games by Applying Topic Modeling. J. Inf. Process. Syst. 2022, 18, 130–145.

- Livingston, I.; Nacke, L.; Mandryk, R. The Impact of Negative Game Reviews and User Comments on Player Experience. In Proceedings of the Sandbox 2011: ACM SIGGRAPH Symposium on Video Games, Vancouver, BC, Canada, 10 August 2011.

- Santos, T.; Lemmerich, F.; Strohmaier, M.; Helic, D. What’s in a Review: Discrepancies Between Expert and Amateur Reviews of Video Games on Metacritic. Proc. ACM Hum.-Comput. Interact. 2019, 3, 140.

- Grootendorst, M. BERTopic: Neural topic modeling with a class-based TF-IDF procedure. arXiv 2022, arXiv:2203.05794.

- Yu, Y.; Dinh, D.T.; Nguyen, B.H.; Yu, F.; Huynh, V.N. Mining Insights from Esports Game Reviews with an Aspect-Based Sentiment Analysis Framework. IEEE Access 2023, 11, 61161–61172.

- Uthirapathy, S.E.; Sandanam, D. Topic Modelling and Opinion Analysis On Climate Change Twitter Data Using LDA And BERT Model. Procedia Comput. Sci. 2023, 218, 908–917.

- Fadhlurrahman, J.A.M.; Herawati, N.A.; Aulya, H.R.W.; Puspasari, I.; Utama, N.P. Sentiment Analysis of Game Reviews on STEAM using BERT, BiLSTM, and CRF. In Proceedings of the 2023 International Conference on Electrical Engineering and Informatics (ICEEI), IEEE, Bandung, Indonesia, 10–11 October 2023; pp. 1–6.

- Jain, P.K.; Quamer, W.; Saravanan, V.; Pamula, R. Employing BERT-DCNN with sentic knowledge base for social media sentiment analysis. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 10417–10429.

- Atagün, E.; Hartoka, B.; Albayrak, A. Topic Modeling Using LDA and BERT Techniques: Teknofest Example. In Proceedings of the 2021 6th International Conference on Computer Science and Engineering (UBMK), Ankara, Turkey, 15–17 September 2021; pp. 660–664.

- George, L.; Sumathy, P. An integrated clustering and BERT framework for improved topic modeling. Int. J. Inf. Technol. 2023, 15, 2187–2195.

- Pang, G.; Lu, K.; Zhu, X.; He, J.; Mo, Z.; Peng, Z.; Pu, B. Aspect-level sentiment analysis approach via BERT and aspect feature location model. Wirel. Commun. Mob. Comput. 2021, 2021, 1–13.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Jiang, Y.; Song, X.; Harrison, J.; Quegan, S.; Maynard, D. Comparing Attitudes to Climate Change in the Media using sentiment analysis based on Latent Dirichlet Allocation. In Proceedings of the 2017 EMNLP Workshop: Natural Language Processing Meets Journalism, Copenhagen, Denmark, 7 September 2017; pp. 25–30.

- Zhao, W.; Strube, M.; Eger, S. DiscoScore: Evaluating Text Generation with BERT and Discourse Coherence. arXiv 2022, arXiv:2201.11176.

- Miaschi, A.; Brunato, D.; Dell’Orletta, F.; Venturi, G. What Makes My Model Perplexed? A Linguistic Investigation on Neural Language Models Perplexity. In Proceedings of the 2nd Workshop on Knowledge Extraction and Integration for Deep Learning Architectures, Virtual, 10 June 2021; pp. 40–47.

- Kim, H.; Lee, W.; Lee, E.H.; Kim, S. Review of evaluation and interpretation method for LDA model. Korean Data Anal. Soc. 2023, 8, 1299–1310.

- Sasson, G.; Kenett, Y.N. A Mirror to Human Question Asking: Analyzing the Akinator Online Question Game. Big Data Cogn. Comput. 2023, 7, 26.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).