Abstract

This paper discusses the design and evaluation of a set of heuristics (U + A) to assess the usability and accessibility of interactive systems in terms of cognitive diversity in an integrated way. Method: An analysis of the usability and accessibility aspects that have the greatest impact on people with cognitive disabilities is presented. A set of cognitive usability and accessibility heuristics targeted at people with cognitive disabilities is generated with the support of generative artificial intelligence (GenAI). Result: Several interactive systems are evaluated to validate the U + A heuristics. A quantitative classification of the evaluated systems is obtained, i.e., the “U + A-index”, which reveals the most and least usable and accessible systems for people with cognitive and learning disabilities. Conclusions: This study demonstrates that it is possible to carry out a classification of heuristic criteria considering accessibility, usability, and cognitive disability. The results also show that the selection of criteria proposed by GenAI is optimal and that this technology can be used to improve usability and accessibility of interactive systems in a practical way. Yet, further evaluations are needed to support the results of the analyzed proposal.

1. Introduction

This paper discusses an exploratory study aimed at designing and evaluating a set of usability and accessibility heuristics focused on cognitive diversity with the support of generative AI (GenAI). The combination of accessibility and usability guidelines/principles is key to designing inclusive and effective interfaces as doing so allows designers and developers to draw on best practices and design knowledge to cater for different types of users in a cost-effective way. This paper proposes a novel and practical approach for generating a single numerical index which quantifies the level of accessibility and usability of interactive systems for people with cognitive disabilities.

According to the Global Digital report [1], the number of internet users in 2024 will reach 5.35 billion, with a year-on-year growth of 1.8% in the last 12 months. The World Health Organization [2] estimates that between 15% and 20% of the population, approximately one billion people worldwide, live with a disability [3]. Among them, around 200 million people live with an intellectual disability, representing 2.6% of the world’s population [4]. The Universal Declaration of Human Rights states that people with intellectual disabilities have the right to enjoy technology and to perceive, understand, navigate, and interact appropriately in any user interface [5]. However, efforts made to improve web accessibility for people with cognitive and intellectual disabilities remain limited. This is due mainly to the fact that cognitive impairments are the least understood disability categories [6]. Also, much of the literature related to cognitive disabilities comes from medicine, which does not directly address issues related to website accessibility [7].

Usability and accessibility are two fundamental concepts of interactive systems. Usability is ‘the ability of software to be understood, learned, used and attractive to the user, under specific conditions of use’ [8]. The main factors that measure usability are navigability, ease of learning, speed of use, user efficiency, and error rates. Accessibility is a practice that ‘ensures that websites, technologies and tools are designed to be used by people with disabilities’ [9]. Web accessibility concerns many types of disabilities, including visual, hearing, physical, cognitive, neurological, and speech impairments. In addition, it benefits other people, including older people whose functional abilities decline as a result of aging. Accessibility is measured based on automatic evaluation tools, manual evaluation by an expert, and user testing of people with disabilities [10].

Usability is a concept that is closely linked to accessibility. Usability is a necessary but not sufficient condition for good accessibility. And accessibility alone does not guarantee usability [11]. For example, a system may be usable for some users. Yet, it might not be accessible for people with disabilities because, for example, it does not provide good color contrast and users with low vision may not be able to perceive the elements with which they can interact. An accessible application, which meets the accessibility criteria, does not guarantee a good user experience for all users. Despite being technically accessible, it may have a confusing navigation structure. Thus, designing a system that meets usability and accessibility objectives rests upon dealing with both aspects.

Yet, bringing together usability and accessibility is difficult, as each of them addresses different issues, as we have discussed above. However, both concepts aim to improve the user experience and are a key part of inclusive design, which truly benefits all users. Thus, this paper argues that designing a metric or index that combines usability and accessibility is worth the effort. To this end, we focus on inspection and evaluation techniques: heuristic evaluation [12] in the case of usability, and verification of WCAG criteria [13] in the case of accessibility. We focus on inspection methods because they are widely used in usability evaluation [14,15]. We also focus on usability heuristics and accessibility guidelines because they play a key role in user interface design [16]. In both cases, a set of criteria are checked to observe whether they are fulfilled in an interface and subsequently obtain a quantitative index of the evaluated system. These criteria were selected with the support of ChatGPT and evaluated in case studies with four websites.

In summary, the main contributions of this paper are as follows:

- A unique list of heuristic criteria focused on the evaluation of systems for people with cognitive and learning disabilities and, additionally, for older people.

- An objective numerical index of cognitive usability and accessibility evaluation.

- A case study that evaluates the cognitive usability and accessibility heuristics.

- A ranking of usable and accessible systems for people with cognitive disabilities.

2. Background and Related Work

Section 2.1 discusses usability and accessibility with respect to the objectives of this paper. Section 2.2 focuses on evaluation metrics and the potential of GenAI to generate heuristics and metrics.

2.1. Usability and Accessibility, Context and Evaluation

To assess usability, several methods exist [17]. These are classified into (i) inspection, where a set of experts analyze the degree of usability of a system based on an examination of a system’s interface; (ii) enquiry, where a system is analyzed through observations of its use and with interviews and/or questionnaires; and (iii) testing, where users perform tasks on the system and the results are analyzed to determine the suitability of the interface [18]. This paper focuses on heuristic evaluation, which belongs to the first category (inspection).

Heuristic evaluation (HE), which is an inspection method based on expert evaluation, makes it possible to identify a large number of problems with few financial resources and little time [19,20]. HE is guided by heuristic principles [21] to identify user interface designs that violate these principles. Studies such as [22] indicate that the most commonly used heuristics are those proposed by Nielsen [23], but also point out the need to adapt them to new technologies and contexts: mobile applications [24], artificial intelligence [25], educational games [26], and the metaverse [27]. All these references are contextualized in a web environment without taking into account people with cognitive disabilities, but it is relevant to consider other areas such as the design of mobile applications for people with cognitive disabilities [28] or how IoT systems are presented to be useful to all people [29]. All these studies show the importance of usability evaluation considering HE. They also propose different sets of heuristic criteria (depending on the domain) aimed at evaluating each system or environment. HE can yield qualitative results, indicating aspects of a system to be improved according to the criteria analyzed, as well as quantitative results, indicating the proportion of criteria that the system fulfills [30]. However, most of the abovementioned investigations do not consider a numerical quantification to obtain a single index of the level of usability of the evaluated system. A noteworthy exception is [31,32], wherein Granollers proposes a list of heuristic criteria that synthesize J. Nielsen’s Heuristic Usability Principles for User Interface Design [23] and B. Tognazzini’s Interface Design Principles [33]. This integrated set of heuristics enables us to assess each principle in an integrated way and generate a single quantitative index at the end of the evaluation. A proof of concept was carried out and we were able to quickly measure the level of usability and accessibility of an interactive system [34].

Web accessibility evaluation methods are qualitative and/or quantitative [35,36]. Qualitative methods are applied to assess the compliance of a website with the WCAG (Web Content Accessibility Guidelines) by using automatic tools [37] or with some heuristics by experts manually [38]. Quantitative methods calculate the level of accessibility of the website with the use of accessibility metrics [39]. The WCAG [13] provide us with an important framework for evaluating the accessibility of a system. These guidelines include many of the evaluation points needed to improve the accessibility of systems for people with disabilities. Yet, they do not address in much depth the evaluations directly related to cognitive and comprehension disabilities.

In this regard, W3C offers the document ‘Making Content Usable for People with Cognitive and Learning Disabilities’ [40], which is also known as ‘the COGA Guidelines’. These criteria are a complementary guide to the WCAG, presenting concrete advice on how to make content understandable for people with cognitive and learning disabilities, ensuring that they can navigate, interact with, and access digital information as successfully as possible.

The research work presented here is based on the guidelines of Granollers [31,32], WCAG 2.2 [41] and the COGA Guidelines [40]. This paper extends these previous works by proposing a set of heuristics that combine the key aspects of usability and accessibility for people with cognitive disabilities using generative AI.

2.2. Usability and Accessibility Metrics and Generative AI

Metrics are important because they (i) quantify the performance of a system, (ii) provide objective data that serve as a basis for making decisions about the system, and (iii) allow us to observe the evolution of the system and to measure the impact of changes made to it over time. Metrics also enable us to determine whether a system achieves its objectives and to identify areas that require optimization. Obtaining in a single value (or numerical index) the level of usability and accessibility of a system makes it possible to quantify the effort needed to improve the system under evaluation. The present work proposes a usability and accessibility index (U + A-index) that will make it possible to address the aforementioned issues.

Designing a usability + accessibility index (U + A-index) is not straightforward, as several studies argue [42,43,44,45]. Although researchers have been concerned with providing mechanisms that serve as guidelines for web systems in terms of usability and accessibility requirements, we are not aware of any tool that provides a unique value that helps specialists to verify the extent to which these requirements are present in a web system.

Generative AI tools have recently been used to conduct studies on interface evaluations [46,47,48]. Indeed, there is also a growing interest in the HCI community in exploring the potential of generative AI in interaction design [49]. Although generative AI has biases and hallucinations [50], we consider that generative AI could be used to help us select a set of heuristic criteria to evaluate usability and accessibility aspects focused on specific users, for example, users with disabilities. Previous research combines usability and accessibility measurements in a single index in an e-commerce environment without GenAI [34] and it does not focus on specific users with disabilities. This paper addresses the evaluation of systems considering usability and accessibility criteria in environments more suitable for people with cognitive disabilities.

3. Designing a Set of Cognitive Usability and Accessibility Heuristics

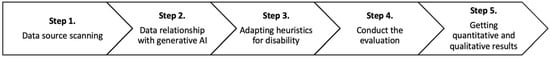

This section presents the steps that have been carried out to develop the usability and accessibility evaluation proposal with a focus on people with cognitive disabilities. The steps are important to carry out a systematic and accurate evaluation of the usability and accessibility of a system, especially adapted to the needs of people with cognitive disabilities. These steps are outlined next and depicted in Figure 1.

Figure 1.

Steps for development of the research.

The criteria to be evaluated are collected in raw form (step 1) based on existing criteria in the literature. Afterwards, they are interrelated through the GenAI (step 2) in order to synthesize the important points of each type of evaluation (usability and accessibility). The results of GenAI are validated and subsequently adapted to people with specific disabilities in step 3. In this specific case, we focused on people with cognitive disabilities. The evaluation itself is carried out in the system (step 4) to obtain the validations of the evaluation experts, and finally, the results of the evaluation (step 5) are synthesized in a single quantifiable index of the level of usability and accessibility of the system according to people with cognitive disabilities. Each step plays an important role in ensuring that the criteria chosen for the assessment are relevant, adapted, and validated, thus enabling meaningful results to be obtained.

3.1. Step 1. Exploring the Data Source

In order to develop a proposal for how to evaluate usability and accessibility focused on people with cognitive disabilities, relevant sources of information were compiled from the scientific literature and from reference sites, and organized into the following tables:

- Table: ‘Heuristic Criteria’: The heuristic principles developed by Granollers [32,51] were selected because they are a generic set of heuristics to evaluate interactive systems, including web pages, and generate an index that indicates the level of usability of the analyzed system.

- Table: ‘WCAG’: WCAG 2.2 [41] was chosen as the latest W3C recommendation at the time of writing this paper.

- Table: ‘COGA Guidelines’: ‘Making Content Usable for People with Cognitive and Learning Disabilities’ [40], focusing on assessments related to cognitive impairment.

- Table: ‘Disabilities’: The list of disabilities contained in the WCAG 2.2 document “understanding” [52], which shows how WCAG 2.2 impacts certain users with disabilities.

3.2. Step 2. Obtaining Criteria According to the Data Relationship with Generative AI

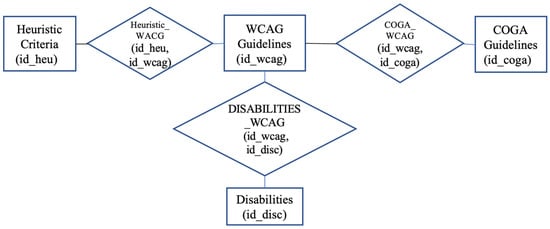

Using the data sources presented in step 1, links between the data were established and the resulting relationships explored (see Figure 2). The following pairs of tables were linked:

Figure 2.

Relational scheme of the information on which the heuristics were based (all relationships are n.n.).

- Disabilities and WCAG (disabilities–WCAG relationship).

- Heuristic criteria and WCAG (heuristic–WCAG relationship).

- COGA guidelines and WCAG (COGA–WCAG relationship).

Data linkage means identifying, for a given criterion, the WCAG guideline(s) to which it is most closely related. For example, heuristic criterion 1.1 The application visibly includes the page title refers to WCAG criterion 2.4.2 Page titles. Similarly, for each COGA guideline criterion, we looked for the WCAG criterion to which it was most closely related. For example, COGA criterion 5.1 Limit interruptions relates to WCAG 2.2.4 Avoidable interruptions. These links were made with the aim of obtaining a list of usability and accessibility criteria forming a system in which WCAG was the common link and in which the information could be filtered easily.
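As a minimal sketch of how such n:n links could be stored, the Python snippet below keeps each relationship as a list of pairs and groups them by the shared WCAG identifier. Only the two pairs quoted above (heuristic 1.1 with WCAG 2.4.2, and COGA 5.1 with WCAG 2.2.4) come from the text; the data layout and the helper name `index_by_wcag` are illustrative assumptions and not the authors' implementation.

```python
from collections import defaultdict

# Pairs quoted in the text: (criterion id, WCAG criterion id).
HEURISTIC_WCAG = [("1.1", "2.4.2")]  # heuristic criterion 1.1 -> WCAG 2.4.2
COGA_WCAG = [("5.1", "2.2.4")]       # COGA criterion 5.1 -> WCAG 2.2.4


def index_by_wcag(pairs):
    """Group criterion ids by the WCAG criterion they are linked to (n:n)."""
    grouped = defaultdict(set)
    for criterion_id, wcag_id in pairs:
        grouped[wcag_id].add(criterion_id)
    return dict(grouped)


# Hypothetical lookups: WCAG id -> set of linked criterion ids.
heuristics_by_wcag = index_by_wcag(HEURISTIC_WCAG)
coga_by_wcag = index_by_wcag(COGA_WCAG)
```

Keying both relationships by the WCAG identifier is one way to make WCAG the common link through which the other tables can be filtered.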

No previous works were found that specifically addressed the relationships between the proposed linkages (heuristic criteria–WCAG and COGA guidelines–WCAG), which meant that performing this work manually would have been effortful and time-consuming. For this reason, it was decided to use generative AI (ChatGPT) to automatically generate the correspondences between the data in the tables in step 1.

It should be noted that the use of the generative AI system was exploratory, as it was initially expected that the results would not be sufficiently accurate. However, as the research progressed, the relationships generated were found to be adequate, leading to the continuation of data production with generative AI. The validity of these data was further verified in the use case described in Section 4.

We explain below how the data for each of the data relationships (which are n.n. and formalized in a table) were obtained from the above sources (see Figure 2).

‘WCAG_Disabilities’: There are references in the literature to a correspondence between disability and WCAG [13], and these data provided a solid basis for charting the remaining relationships. However, they had to be completed manually so as to adapt them to version 2.2 and level AAA of WCAG. We drew on the section ‘beneficiaries’ in the document “understanding” [45] to do so. The results are presented in Table 1.

Table 1.

‘WCAG_Disabilities’: List of WCAG criteria and disabilities.

‘Heuristics_WCAG’ relationship: Previous works have explored the link between generic heuristic criteria and WCAG [45,53]. Yet, these works (e.g., [32]) have not addressed the specific heuristic criteria analyzed in this paper. As this was a complex task to carry out manually, generative AI was used to obtain the data. Table 2 is the result, and the steps taken were as follows:

Table 2.

‘Heuristics_WCAG’ relationship.

- First, we introduced the table “heuristic criteria” into a generative AI tool.

- Afterwards, we wrote this prompt in ChatGPT: ‘Relate each of the rows in the table (of “Heuristics criteria”) to the WCAG 2.2 guidelines. Indicates whether it relates to one or more criteria of the WCAG 2.2 guidelines (or maybe none). Displays the result in a table’.

- Validation: To validate the data generated by GenAI, we randomly observed whether the heuristic criterion matched the WCAG criterion to which it was related. Although this is not a thorough validation, it was performed consciously and was an important point in the experimentation. We wanted to test whether the results obtained were satisfactory without manual intervention in this step.
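The random spot check described in the validation step could be sketched as follows. This is only an illustration under stated assumptions: the function name `sample_for_review`, the sample size, and all pairs except ("1.1", "2.4.2") are hypothetical, and the text does not state how many links were actually inspected.

```python
import random


def sample_for_review(pairs, k, seed=None):
    """Return up to k distinct, randomly chosen links for manual checking."""
    rng = random.Random(seed)  # a seed makes the selection reproducible
    return rng.sample(pairs, min(k, len(pairs)))


# Hypothetical generated links; only ("1.1", "2.4.2") is quoted in the text.
generated = [("1.1", "2.4.2"), ("H-a", "W-a"), ("H-b", "W-b"), ("H-c", "W-c")]
to_check = sample_for_review(generated, k=2, seed=0)
```

Each sampled pair would then be read by a person to judge whether the heuristic criterion and the WCAG criterion really address the same concern.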

‘COGA_WCAG’ relationship: For these data, we found a previous study in the literature where the guidelines in WCAG were considered for each of the COGA recommendations, but no academic reference was found. An expert in the field of natural language who had worked on the translation of the COGA recommendations into Spanish [54] was asked. This expert told the first author that no official reference was available. Therefore, in this step, we considered performing the generation manually. Yet, it was a tedious job with the possibility of error. For this reason, we used generative AI as follows (Table 3):

Table 3.

‘COGA_WCAG’ relationship.

- First, we introduced the table “COGA guidelines” into ChatGPT.

- Afterwards, we wrote this prompt: ‘According to the COGA criteria table that has been entered into the system, associate each of the specific recommendations for improving cognitive accessibility with the relevant WCAG 2.2 guidelines. It links each row with a WCAG criterion. Displays the result in a table’.

- Validation: Same as above.

Note that not all relationships were generated using generative AI. For ‘Heuristics_WCAG’ and ‘COGA_WCAG’, we used generative AI because there were no references in the literature, but for the relation ‘WCAG_Disabilities’, we used previous research that provided a solid basis for moving forward in obtaining the remaining relationships [55]. In this context, the relationships considered in the experimentation included both the proposals generated by generative AI (‘Heuristics_WCAG’ and ‘COGA_WCAG’) and those established by experts (‘WCAG_Disabilities’). This combination sought to provide a more robust and grounded framework for the research.

Although the data obtained from the Generative AI tools may contain approximate relationships and there is a margin of error to improve, these data served as an important starting point to begin to test the relationship between usability and accessibility with cognitive disabilities.

3.3. Step 3. Adapting Heuristics for Disability

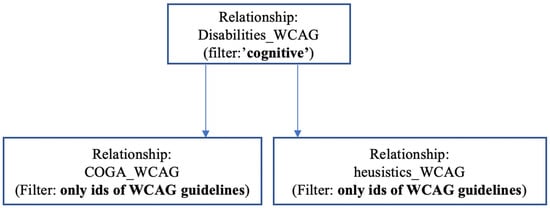

The aim of this step was to use the data obtained and related (in step 2) to select only the heuristic criteria and COGA guidelines that directly affect people with cognitive disabilities (see Figure 3 for the outline of the process). Therefore, the rows of ‘WCAG_Disabilities’ in which the disability is ‘cognitive’ were selected (see Table 4).

Figure 3.

Information filters with ‘cognitive’ disability.

Table 4.

‘WCAG_Disabilities’. Relationship between WCAG and disability where disability is ‘cognitive or learning’.

From these data, WCAG filtering was performed in each of the tables ‘Heuristics_WCAG’ and ‘COGA_WCAG’, with the purpose of selecting only the heuristic criteria and COGA guidelines that most directly impacted people with cognitive disabilities. Table 5 shows the selection of the heuristic criteria, and Table 6 shows the selection of the COGA guidelines.
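The filtering step can be illustrated with a short Python sketch. It assumes each relationship is available as a list of pairs; the function name `filter_by_disability`, the disability labels, and every row except the heuristic 1.1 to WCAG 2.4.2 link are hypothetical placeholders, not the contents of Tables 1 to 6.

```python
def filter_by_disability(criterion_wcag, wcag_disabilities, disability):
    """Keep criteria linked to at least one WCAG criterion tagged with `disability`."""
    relevant_wcag = {wcag for wcag, tag in wcag_disabilities if tag == disability}
    return sorted({crit for crit, wcag in criterion_wcag if wcag in relevant_wcag})


# Hypothetical rows of the 'WCAG_Disabilities' relationship: (WCAG id, disability).
wcag_disabilities = [("2.4.2", "cognitive"), ("2.4.2", "visual"), ("1.4.3", "visual")]
# Hypothetical rows of a criterion-to-WCAG relationship: (criterion id, WCAG id).
heuristics_wcag = [("1.1", "2.4.2"), ("9.9", "1.4.3")]

selected = filter_by_disability(heuristics_wcag, wcag_disabilities, "cognitive")
```

The same function could be applied to the COGA relationship, since both tables share WCAG as the joining column.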

Table 5.

‘Heuristics_WCAG’ relationship. Link between heuristic criteria and WCAG from Table 2.

Table 6.

‘COGA_WCAG’ relationship. Link between COGA guidelines and WCAG from Table 3.

3.4. Step 4. Conducting the Assessment

This step consisted of evaluating the system with the ‘heuristic criteria’ (Table 5) and the ‘COGA guidelines’ (Table 6) selected in step 3, that is, only those with a more direct impact on people with cognitive disabilities. For this purpose, an MS Excel document (with two different sheets) was used to collect all the evaluator’s data.

There are three possible responses from the evaluator:

- ‘Pass’ indicates that the assessed criterion is met in the assessed system.

- ‘Fail’ indicates that the assessed criterion is not met in the assessed system.

- ‘NA’ indicates “not applicable” and is selected by the assessor when the criterion cannot be assessed in the system.

Each of these outcomes has a value associated with it:

- ‘Pass’ is a 1 when the criterion is met in the system.

- ‘Fail’ is a 0 when the criterion is not fulfilled in the system.

- ‘NA’ is an empty field and will not be considered in the computation of the “U + A-index”.

The evaluator can also include a textual observation that can clarify why they have chosen each option in the evaluation. This information can later be used to enrich the final quantitative result.

3.5. Step 5. Quantitative and Qualitative Results

Once the evaluators had completed their assessments of the usability and accessibility criteria, we proceeded to obtain the “U + A-index” for each of the evaluations. For this purpose, the criteria rated ‘Pass’, each with a value of ‘1’, were added up. Criteria that the assessor had classified as ‘Not Applicable’ (NA) were excluded from the calculation so that they did not distort the results.

No specific weighting was considered for each of the criteria, since the filter of criteria to be applied in each evaluation (usability or accessibility) already eliminated unimportant aspects in the evaluation, and only the most relevant criteria were evaluated according to the evaluated disability. This was the case for both the U-index (1) and the A-index (2). The “U + A-index” (3) result was appropriate and optimized for the specific users selected in the evaluation.

The formula to derive the U-index is presented below:

U-index = [Σ of “PASS”/(All Heuristic Criteria − NA)] × 100 (1)

The formula to derive the A-index is as follows:

A-index = [Σ of “PASS”/(All COGA guidelines − NA)] × 100 (2)

Finally, both indices are added together and divided by two to obtain a single index:

U + A-index = (U-index + A-index)/2 (3)
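The three formulas can be expressed as a short Python sketch. The response labels follow the ‘Pass’/‘Fail’/‘NA’ values defined in step 4; the function names `index` and `ua_index` and the sample response lists are illustrative assumptions, not part of the authors' spreadsheet.

```python
def index(responses):
    """Percentage of 'Pass' among applicable criteria; 'NA' answers are excluded."""
    applicable = [r for r in responses if r != "NA"]
    if not applicable:
        raise ValueError("no applicable criteria to score")
    passed = sum(1 for r in applicable if r == "Pass")
    return 100 * passed / len(applicable)


def ua_index(usability_responses, accessibility_responses):
    """Unweighted mean of the U-index and the A-index."""
    return (index(usability_responses) + index(accessibility_responses)) / 2


# Hypothetical evaluator responses for one website.
u_responses = ["Pass", "Pass", "Fail", "NA", "Pass"]  # 3 passes out of 4 applicable
a_responses = ["Pass", "Fail", "NA", "Fail", "Fail"]  # 1 pass out of 4 applicable
combined = ua_index(u_responses, a_responses)
```

Because ‘NA’ answers are removed from the denominator, two websites can be scored on different numbers of criteria and still receive comparable percentages.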

4. Use Case

A use case was studied to validate the proposal, which allowed for the evaluation of usability (with the heuristics) and accessibility (with the COGA) of interactive systems for people with cognitive disabilities.

4.1. Scope of This Study

Four (Spanish and Catalan) websites were selected. These websites are designed to be accessed by people with cognitive disabilities and older people. Fundació Viver Bell-lloc and Fundació Auria were chosen because they are websites aimed at presenting foundational information to people with cognitive disabilities and can usually be used by them. Imserso and SeniorTIC were chosen because they are two portals that offer information to the elderly.

- Fundació Viver Bell-lloc: https://www.vivelloc.cat/ca/ (accessed on 1 October 2024).

- Fundació Auria: https://www.auria.org/ (accessed on 1 October 2024).

- Imserso: https://imserso.es/web/imserso (accessed on 1 October 2024).

- SeniorTIC: https://www.seniortic.org/ (accessed on 1 October 2024).

Evaluating the homepage alone allows critical usability and accessibility issues to be identified from the user’s first contact, as it contains representative design and navigation elements. It is also an efficient way to conduct an evaluation when resources are limited [56].

4.2. Experimentation

4.2.1. Selection of Usability and Accessibility Guidelines

The starting point was the data from ‘Step 3. Adapting Heuristics for Disability’, which revealed the ‘heuristic criteria’ and ‘COGA guidelines’ with the strongest impact on people with cognitive disabilities. We evaluated the homepages of the selected websites.

4.2.2. Implementation of the Assessment

We assessed all the items in each list (usability and accessibility). The items ‘passed’ the evaluation if they were met on the website. ‘NA’ was used when the criterion could not be applied because the website did not contain it.

4.2.3. Results

Once the assessments had been carried out, the calculations presented in step 5 of the proposal were performed in order to obtain a single cognitive U + A-index. Table 7 and Table 8 present the ‘pass’, ‘fail’, or ‘NA’ result given to each criterion in the evaluation. These tables also include the points associated with each answer, together with the number of questions considered in the calculation of the index.

Table 7.

List of results of heuristic criteria evaluation.

Table 8.

List of results of COGA guideline evaluation.

As for the assessment of the U-index based on the ‘heuristic criteria’, we produced the results presented in Table 7.

Table 7 shows the identifier of the heuristic, the description of the heuristic criterion, and the WCAG criterion that is related to it for information purposes. Then, for each website evaluated, the answer, the score associated with the answer, and the question number to be considered in the calculation of the index are presented.

Thus, ‘Viver Bell-lloc’ has a U-index of 75%; ‘Fundació Auria’ has a U-index of 57%; ‘SeniorTIC’ has a U-index of 75%; and finally, ‘Imserso’ has a U-index of 75%.

Table 7 shows that the criteria met by each website were different, despite the fact that the same U-index result was obtained in three of the four websites evaluated. As we can see in Table 7, the assessments differ in how links are presented, which are not distinguishable on all websites, and in the font size, which could be improved to facilitate the legibility of the content.

The assessed U-index was very low for Fundació Auria (only 57%), because the main page of the website presents the information in a large font size and in an uncommon interactive format (there are titles in boxes that, when clicked, display more information).

As for the assessment of the A-index (cognitive) based on the ‘COGA guidelines’, we derive the results presented in Table 8.

Table 8 shows the identifier and description of the criterion, and the associated guideline from WCAG is presented here for information purposes. Then, for each website evaluated, the rating (pass/fail/NA), the points associated with each answer, and the question number to be considered in the calculation of the index are presented.

Table 8 shows that the A-index has more variation in the results. ‘Viver Bell-lloc’ has an A-index of 56%; ‘Fundació Auria’ has an A-index of 25%; ‘SeniorTIC’ has an A-index of 67%; and finally, ‘Imserso’ has an A-index of 56%.

The lowest value was obtained in the evaluation of the ‘Auria foundation’ as it presented a homepage interface with complex interactions. In the case of the other websites, all presented similar results, with the SeniorTIC website showing the best A-index result because it presents the information better when blog articles are displayed.

Once the U-index and A-index were calculated, the final calculation was made: U + A-index (cognitive). The results are shown in Table 9.

Table 9.

U + A-index results table.

Table 9 shows that three of the websites (Viver Bell-lloc, SeniorTIC, and Imserso) are similar in terms of usability and accessibility, with the SeniorTIC site being better in terms of cognitive accessibility. The highest U + A-index value is obtained by the SeniorTIC website. With regard to the ‘Auria foundation’, the website presents the information in a way that is not very comprehensible and with an unusual structure and interaction. We conclude that this website is not suitable for users with cognitive disabilities, as its information is aimed more at companies.
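As a hedged illustration of how such a ranking can be derived, the sketch below applies formula (3) to the rounded U-index and A-index percentages reported in the text above. The variable names are illustrative, and because the inputs are already rounded, the resulting values may differ slightly from those in Table 9.

```python
# (U-index, A-index) percentages as reported in the text of Section 4.2.3.
reported = {
    "Viver Bell-lloc": (75, 56),
    "Fundació Auria": (57, 25),
    "SeniorTIC": (75, 67),
    "Imserso": (75, 56),
}

# Formula (3): unweighted mean of the two indices.
ua_by_site = {site: (u + a) / 2 for site, (u, a) in reported.items()}

# Highest combined index first; ties keep their original order.
ranking = sorted(ua_by_site, key=ua_by_site.get, reverse=True)
```

Under these inputs SeniorTIC ranks first and Fundació Auria last, which matches the ordering described in the text.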

4.2.4. Specific Recommendations for Improving the Sites Analyzed

As can be seen from the results obtained, it is important for a website to have a clear and specific purpose (Heuristic criterion: 1.4. COGA guideline: 1.1) so that users can easily understand what actions they can perform (Heuristic criteria: 3.1, 5.1, and 5.2. COGA guideline: 1.4). It should have a simple structure with the possibility of finding information quickly (Heuristic criteria: 5.3, 6.3, and 10.3. COGA guidelines: 2.2 and 2.4). The content should be presented in clear text, to make it easy to read for all users (Heuristic criteria: 9.2 and 9.4. COGA guidelines: 3.2, 3.4, 3.8, and 3.9). All interactive elements should have labels to improve comprehension as well as providing clear instructions on how to complete a task (Heuristic criteria: 7.2 and 8.2. COGA guidelines: 4.6 and 4.7). It is also important to provide end-users with useful feedback to help them solve errors and carry out tasks (Heuristic criterion: 11.3. COGA guidelines: 5.4 and 7.5). It can be seen from Table 7 and Table 8 that not all criteria could be analyzed, but they are nonetheless relevant to the systems.

5. Discussion

We consider that addressing usability and accessibility with respect to cognitive diversity and developing a set of cognitive usability and accessibility heuristics was worth the effort, and the results seem to confirm it. Although usability and accessibility are key aspects of the user experience of interactive systems, we argue that cognitive impairments are the least understood in usability and accessibility research. To fill (partially) this gap, we developed a list of heuristics that bring together usability and accessibility issues, hoping to facilitate the design and evaluation of better interactive systems, and quantified the usability and accessibility of interactive systems for people with cognitive disabilities. This approach does not aim to in any way eliminate the involvement of end-users in design and evaluation activities. Yet, the approach put forward in this paper might complement end-user activities and help designers to quantify and improve the usability and accessibility of interactive systems without the intervention of end-users. The guidelines were developed with the support of generative artificial intelligence, because the task of creating an integrated set of heuristics turned out to be far from straightforward, as discussed below. The results generated by GenAI were validated by the authors of this paper. A case study shows that the new set of heuristics can be effectively used.

The development of a set of criteria for assessing cognitive usability and accessibility, with people with intellectual disabilities in mind, would have been considerably more difficult without the use of generative AI. The main difficulty lies in the need to establish a relationship between WCAG and the COGA guidelines or heuristic criteria. Matching guidelines manually compounds this complexity, as there is a high chance of making errors because of, for instance, evaluator fatigue, the transfer of information between multiple sources, or difficulty in interpreting data from different domains, such as usability and accessibility. We therefore decided to address this challenge with the support of generative AI, which significantly reduced the time, effort, and resources required by generating criteria filters that provided a solid basis for evaluation. Because GenAI is not free from errors, the proposals it generated were evaluated. They were sufficiently accurate and contributed to minimizing the human errors described above. These results present an interesting future scenario of generative AI in HCI [49].

The exploratory case study shows that cognitive U + A heuristics enable us to identify errors and quantify the level of usability and accessibility of web systems. The websites evaluated present different degrees of compliance with the heuristics, and this level corresponds to well-known design aspects, such as the navigation structure and complex or unnatural interactions, which suggests that the heuristics are valid and might be applied by usability and accessibility professionals. The U + A-index displays the websites organized in a ranking, which was another objective of the research presented in this paper.

There is room for considering whether the combined heuristics and proposed approach can be applied to other domains and technologies. Although addressing this question warrants further research, previous research and professional practice in accessibility and usability show that usability inspection methods and accessibility guidelines can be applied to different types of technologies and users. This suggests that the approach put forward in this paper can also be adapted. Future studies could delve into this issue.

Limitations

Regarding the quantification of usability, it might be argued that we could have conducted an SUS survey [57]. However, an SUS survey is often conducted at the end of a user test, so that the user can assess their satisfaction with a system. With the results of the HE evaluation, an expert assessment is obtained, which can be much more objective (and less biased by user tastes). Calculating a usability index that takes into account the SUS score from user testing was not addressed in this research work, where only expert evaluations were considered. The incorporation of user evaluations could be a further step to enriching the quantitative (and qualitative) data of the evaluations, and above all, to obtaining an index that better reflects reality (combining an evaluation by experts and an evaluation by users). This could be performed in future studies.

Regarding the evaluation of accessibility with WCAG, a numerical index of the level of compliance with each criterion can be obtained. However, accessibility criteria tend to be related to more than one type of disability, i.e., there is no one-to-one relationship between accessibility issues and disabilities. Also, the usefulness of the quantification proposed in this article is focused on a very specific profile of users (people with cognitive disabilities). Future research can explore the U + A heuristics with specific types of intellectual disabilities.

It might be possible to complement this research by including user evaluations in order to check whether the results obtained in the expert evaluations correspond to the direct results obtained in terms of effectiveness, efficiency, and satisfaction of users (in this case, with cognitive disabilities) in using the system. Gathering subjective user ratings (for example, using System Usability Scale surveys) alongside expert evaluations would provide a well-rounded view of the index’s applicability. This could be carried out in future studies. The main objective of this study was to explore the possibility of bringing together usability and accessibility guidelines in a practical approach with positive results.

We are aware that this experimentation was carried out in a very restricted scope. We evaluated four websites in the same domain, which limits the conclusions we can draw from this study. This is in fact one of the limitations of all case studies. We do not seek to generalize, as case studies are not good for generalization; instead, we wanted to explore a particular research issue in some detail. Yet, we are confident that the results will be significant in other domains and with other technologies, as the heuristics are not tied to one specific area or type of technology, though adjustments might be needed. We plan to extend the evaluations to other websites, including e-commerce, education, and government sites, as well as other types of interactive systems, such as mobile apps and IoT devices. This will provide a broader understanding of the performance of these heuristics in various environments and identify areas where further refinement is needed.

Regarding the formula proposed to obtain the single index of cognitive U + A, it may be necessary to adjust the weights of each of the criteria; however, in this initial proposal, all the criteria have been considered to have the same weight and equal importance in terms of usability and accessibility. This choice is supported by the fact that the criteria were already filtered so that only those relevant to users with a specific disability, in this case cognitive disability, are analyzed. In a next iteration of the calculation process, different weightings could be considered for each usability and accessibility criterion in order to adjust the index more precisely to the context of the users with disabilities considered in the evaluation of the system.

In order to improve the accuracy and relevance of the U + A-index, consideration should be given to adjusting the weighting of its calculation. This approach could include giving more weight to critical heuristics, such as ease of navigation for users with memory problems, or the presentation of easy-to-read text. This could result in a score that is more in line with reality and the rating system. That idea was considered in the current research, but it was decided to postpone its implementation to a future stage. Adjusting the weights of the formula depends on further research, especially research involving end-users. This is due to the need to identify, in a very specific way and by means of additional analyses, those factors that have a greater impact according to the specific disability, and which therefore allow a better-adapted value of the U + A-index to be obtained. The research presented in this paper opens the door to this possibility.
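To illustrate the weighting idea discussed above, the following is a minimal Python sketch and not the formula used in this study. It assumes that each criterion has already been scored on a scale from 0 to 1 and that the index is a weighted mean of those scores. The function name `weighted_index`, the example scores, and the weights are hypothetical; the criterion identifiers are used only as labels.

```python
from typing import Mapping, Optional


def weighted_index(
    scores: Mapping[str, float],
    weights: Optional[Mapping[str, float]] = None,
) -> float:
    """Return the weighted mean of per-criterion scores.

    Assumption (not taken from the paper): every score lies in [0, 1].
    Criteria without an explicit weight default to 1.0, so calling the
    function without weights gives the equal-weight case.
    """
    if not scores:
        raise ValueError("at least one criterion score is required")
    weights = weights or {}
    weighted_sum = 0.0
    total_weight = 0.0
    for criterion, score in scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {criterion!r} must be in [0, 1]")
        weight = weights.get(criterion, 1.0)
        if weight < 0.0:
            raise ValueError(f"weight for {criterion!r} must be non-negative")
        weighted_sum += weight * score
        total_weight += weight
    if total_weight == 0.0:
        raise ValueError("the weights must not all be zero")
    return weighted_sum / total_weight


# Hypothetical scores for three criteria (illustrative values only).
scores = {"5.3": 1.0, "9.2": 0.5, "11.3": 0.0}

equal_weight = weighted_index(scores)                     # (1.0 + 0.5 + 0.0) / 3
navigation_heavy = weighted_index(scores, {"5.3": 2.0})   # (2.0 + 0.5 + 0.0) / 4
print(equal_weight, navigation_heavy)
```

In this sketch, doubling the weight of one criterion moves the index toward that criterion's score, which is the kind of adjustment that would need to be calibrated with end-user research before being adopted.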

We want to highlight that the data collection through the generative AI system was an incipient result, and it is necessary to explore other generative AI tools to obtain complementary classifications and allow for a more accurate approximation of the criteria to be analyzed according to a selected type of disability.

It should also be noted that we only performed random checks of some criteria (the raw results of the generative AI shown in tables) to verify that the data were correctly related to the guidelines. Although this is not a thorough validation, it was conducted to explore the possibility of obtaining useful enough results without manual intervention in this activity. This opens the door to somewhat generalizing and customizing the heuristics for each type of disability, always with the supervision of human experts.

6. Conclusions

The research work discussed in this paper offers the potential for evaluating usability and accessibility together. The proposal focuses on the context of users with cognitive disabilities. Generative AI was used to generate a set of cognitive usability and accessibility heuristics. These GenAI-generated heuristics are useful to evaluate the usability and accessibility of websites for people with intellectual disabilities. A ranking of websites optimized for people with cognitive and learning disabilities has been obtained.

The results motivate us to encourage developers to use these heuristics in a variety of areas: webpages, mobile applications, and other systems. These heuristics can potentially help to inform the design and evaluation of more inclusive systems.

Future research should include the development of a U + A-index to address different types of disabilities, because the process carried out to obtain this index is ‘scalable’ and can be modified to take into account other disabilities. For example, different lists of usability and accessibility heuristics could be created for different disabilities (total visual, low vision, motor, and hearing) if another disability was selected in step 3 of the methodology. Also, evaluation of other websites considering usability and accessibility heuristics as well as considering different user profiles with disabilities is needed. Future research may also include the development of AI-based tools for continuous and automatic monitoring and adjustment. These tools would enable continuous assessment of website accessibility, allowing for real-time identification of usability issues and suggestions for improvement in compliance with heuristic standards.

Author Contributions

Conceptualization, A.P.A., S.S.B.; methodology, A.P.A.; validation, A.P.A.; investigation, A.P.A. and S.S.B.; data curation, A.P.A.; writing—original draft preparation, A.P.A. and S.S.B.; writing—review and editing, A.P.A. and S.S.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data is contained within the article.

Acknowledgments

We thank the reviewers for their constructive and positive suggestions and comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Digital 2024: Global Overview Report. 2024. Available online: https://datareportal.com/reports/digital-2024-global-overview-report (accessed on 25 October 2024).

- World Report on Disability 2011. Available online: https://www.who.int/teams/noncommunicable-diseases/sensory-functions-disability-and-rehabilitation/world-report-on-disability (accessed on 25 October 2024).

- World Health Organization: Summary World Report on Disability. 2011. Available online: https://cutt.ly/ZrDBPje (accessed on 25 October 2024).

- Eser, A. Intellectual Disability Statistics: Key Facts on Global Impact Revealed. World Metrics. 23 July 2024. Available online: https://worldmetrics.org/intellectual-disability-statistics/ (accessed on 25 October 2024).

- Universal Declaration of Human Rights. 2022. Available online: https://www.un.org/es/about-us/universal-declaration-of-human-rights (accessed on 25 October 2024).

- Novak, M.E.; Paciello, M.G. La Conquista de la Accesibilidad de X-Windows: Desarrollo para Personas con Discapacidades; The Paciello Group: Clearwater, FL, USA, 2002.

- Hudson, R.; Weakley, R.; Firminger, P. Una Frontera de Accesibilidad: Discapacidades Cognitivas y Dificultades de Aprendizaje. Webusability—Servicios de Accesibilidad y Usabilidad. 2005. Available online: https://usability.com.au/2004/12/an-accessibility-frontier-cognitive-disabilities-and-learning-difficulties-2004/ (accessed on 25 October 2024).

- ISO/CD 9241-11; Ergonomics of Human-System Interaction–Part 11: Guidance on Usability. ISO: Geneva, Switzerland, 1998. Available online: http://www.iso.org/iso/catalogue_detail.htm?csnumber=63500 (accessed on 25 October 2024).

- Introduction to Web Accessibility. 2018. Available online: https://www.w3.org/standards/webdesign/accessibility (accessed on 25 October 2024).

- Evaluating Web Accessibility Overview. 2020. Available online: https://www.w3.org/WAI/test-evaluate/ (accessed on 25 October 2024).

- Quintal, C.; Macías, J.A. Measuring and improving the quality of development processes based on usability and accessibility. Univ. Access Inf. Soc. 2021, 20, 203–221.

- González, M.P.; Pascual, A.; Lorés, J. Evaluación heurística. In Introducción a la Interacción Persona-Ordenador; AIPO: Asociación Interacción Persona-Ordenador: Zaragoza, Spain, 2001; Available online: https://aipo.es/wp-content/uploads/2022/02/LibroAIPO.pdf (accessed on 25 October 2024).

- WCAG 2 Overview. 2024. Available online: https://www.w3.org/WAI/standards-guidelines/wcag/ (accessed on 25 October 2024).

- Hollingsed, T.; Novick, D.G. Usability inspection methods after 15 years of research and practice. In Proceedings of the 25th Annual ACM International Conference on Design of Communication (SIGDOC ’07), El Paso, TX, USA, 22–24 October 2007; Association for Computing Machinery: New York, NY, USA, 2007; pp. 249–255.

- Or, C.K.; Chan, A.H.S. Inspection methods for usability evaluation. In User Experience Methods and Tools in Human-Computer Interaction, 1st ed.; CRC Press: Boca Raton, FL, USA, 2024; p. 23.

- Sauer, J.; Sonderegger, A.; Schmutz, S. Usability, user experience and accessibility: Towards an integrative model. Ergonomics 2020, 63, 1207–1220.

- Cockton, G. Usability Evaluation. Interaction Design Foundation—IxDF. 1 January 2014. Available online: https://www.interaction-design.org/literature/book/the-encyclopedia-of-human-computer-interaction-2nd-ed/usability-evaluation (accessed on 25 October 2024).

- La Interacción Persona Ordenador. Asociación en Interacción Persona Ordenador. 2001. ISBN: 84-607-2255-4. Available online: https://aipo.es/educacion/material-editado-por-aipo/?id_rec=2 (accessed on 25 October 2024).

- Polson, P.; Rieman, J.; Wharton, C.; Olson, J.; Kitajima, M. Usability inspection methods: Rationale and examples. In Proceedings of the 8th Human Interface Symposium (HIS92), Kawasaki, Japan, 10–12 September 1992; pp. 377–384.

- Jeffries, R.; Miller, J.R.; Wharton, C.; Uyeda, K. User interface evaluation in the real world: A comparison of four techniques. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 27 April–2 May 1991; ACM: New York, NY, USA, 1991; pp. 119–124.

- Nielsen, J. Finding usability problems through heuristic evaluation. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Monterey, CA, USA, 3–7 May 1992; ACM: New York, NY, USA, 1992; pp. 373–380.

- Jimenez, C.; Lozada, P.; Rosas, P. Usability heuristics: A systematic review. In Proceedings of the 2016 IEEE 11th Colombian Computing Conference (CCC), Popayan, Colombia, 27–30 September 2016; pp. 1–8.

- Nielsen, J. 10 Usability Heuristics for User Interface Design. Available online: https://www.nngroup.com/articles/ten-usability-heuristics/ (accessed on 25 October 2024).

- Dourado, D.; Antonio, M.; Canedo, E.D. Usability Heuristics for Mobile Applications—A Systematic Review. In Proceedings of the 20th International Conference on Enterprise Information Systems—Volume 2, ICEIS, Funchal, Portugal, 21–24 March 2018; pp. 483–494.

- Kuhail, M.A.; Farooq, S.; Almutairi, S. Recent Developments in Chatbot Usability and Design Methodologies. In Trends, Applications, and Challenges of Chatbot Technology; Kuhail, M., Shawar, B.A., Hammad, R., Eds.; IGI Global: Hershey, PA, USA, 2023; pp. 1–23.

- Vieira, E.; Silveira, A.; Martins, R. Heuristic Evaluation on Usability of Educational Games: A Systematic Review. Inform. Educ. 2019, 18, 427–442.

- Omar, K.; Fakhouri, H.; Zraqou, J.; Marx Gómez, J. Usability Heuristics for Metaverse. Computers 2024, 13, 222.

- Dekelver, J.; Kultsova, M.; Shabalina, O.; Borblik, J.; Pidoprigora, A.; Romanenko, R. Design of mobile applications for people with intellectual disabilities. Commun. Comput. Inf. Sci. 2015, 535, 823–836.

- Abou-Zahra, S.; Brewer, J.; Cooper, M. Web Standards to Enable an Accessible and Inclusive Internet of Things (IoT). In Proceedings of the 14th International Web for All Conference (W4A ’17), Perth, Australia, 2–4 April 2017; Article 9. Association for Computing Machinery: New York, NY, USA, 2017; pp. 1–4.

- González, M.; Masip, L.; Granollers, T.; Oliva, M. Análisis Cuantitativo en un Experimento de Evaluación Heurística. In Proceedings of the IX Congreso Internacional Interacción, Albacete, Spain, 9–11 June 2008.

- Bonastre, L.; Granollers, T. A set of heuristics for user experience evaluation in Ecommerce websites. In Proceedings of the Seventh International Conference on Advances in Computer-Human Interactions, ACHI 2014, Virtual Event, 24–29 July 2021; pp. 27–34, ISBN 978-1-61208-325-4.

- Granollers, T.; Albets, M. Heuristic Evaluations New Proposal. 2021. Available online: https://web.archive.org/web/20240713190018/ (accessed on 25 October 2024).

- Tognazzini, B. First Principles, HCI Design, Human Computer Interaction (HCI), Principles of HCI Design, Usability Testing. 2014. Available online: http://www.asktog.com/basics/firstPrinciples.html (accessed on 25 October 2024).

- Pascual-Almenara, A.; Saltiveri, T.; Navarro, J.; Albets, M. Enhancing Usability Assessment with a Novel Heuristics-Based Approach Validated in an Actual Business Setting. J. Interact. Syst. 2024, 15, 615–631.

- Onay Durdu, P.; Soydemir, Ö.N. A Systematic Review of Web Accessibility Metrics. In App and Website Accessibility Developments and Compliance Strategies; Akgül, Y., Ed.; IGI Global: Hershey, PA, USA, 2022; pp. 77–108.

- Masri, F.; Luján-Mora, S. A Combined Agile Methodology for the Evaluation of Web Accessibility. In Proceedings of the IADIS International Conference Interfaces and Human Computer Interaction 2011 (IHCI 2011), Rome, Italy, 24–26 July 2011; pp. 423–428, ISBN 978-972-8939-52-6.

- Web Accessibility Evaluation Tools List. 2024. Available online: https://www.w3.org/WAI/test-evaluate/tools/list/ (accessed on 25 October 2024).

- Brajnik, G.; Vigo, M. Automatic Web Accessibility Metrics: Where We Were and Where We Went. In Web Accessibility; Human–Computer Interaction Series; Springer: London, UK, 2019.

- Freire, A.P.; Fortes, R.P.M.; Turine, M.A.S.; Paiva, D.M.B. An evaluation of web accessibility metrics based on their attributes. In Proceedings of the 26th Annual ACM International Conference on Design of Communication (SIGDOC ’08), Lisbon, Portugal, 22–24 September 2008; Association for Computing Machinery: New York, NY, USA, 2008; pp. 73–80.

- Making Content Usable for People with Cognitive and Learning Disabilities. 2021. Available online: https://www.w3.org/TR/coga-usable (accessed on 25 October 2024).

- Web Content Accessibility Guidelines 2.2 (WCAG). World Wide Web Consortium (W3C). 2023. Available online: http://www.w3.org/TR/WCAG22/ (accessed on 25 October 2024).

- Dias, A.L.; de Mattos Fortes, R.P.; Masiero, P.C. Heua: A heuristic evaluation with usability and accessibility requirements to assess web systems. In Proceedings of the 11th Web for All Conference, Seoul, Republic of Korea, 7 April 2014; pp. 18:1–18:4.

- Rodrigues, S.; Fortes, R. A Checklist for the Evaluation of Web Accessibility and Usability for Brazilian Older Adults. J. Web Eng. (JWE) 2020, 19, 63–108.

- Tateo, L. Web accessibility and usability: Limits and perspectives. In Proceedings of the Workshop on Technology Enhanced Learning Environments for Blended Education, Foggia, Italy, 5–6 October 2021.

- Moreno, L.; Martinez, P.; Ruíz-Mezcua, B. A Bridge to Web Accessibility from the Usability Heuristics. In Proceedings of the 5th Symposium of the Workgroup Human-Computer Interaction and Usability Engineering of the Austrian Computer Society, USAB 2009, Linz, Austria, 9–10 November 2009; pp. 290–300.

- Bisante, A.; Datla, V.S.V.; Panizzi, E.; Trasciatti, G.; Zeppieri, S. Enhancing Interface Design with AI: An Exploratory Study on a ChatGPT-4-Based Tool for Cognitive Walkthrough Inspired Evaluations. In Proceedings of the 2024 International Conference on Advanced Visual Interfaces (AVI ’24), Arenzano, Italy, 3–7 June 2024; Article 41. Association for Computing Machinery: New York, NY, USA, 2024; pp. 1–5.

- Acosta-Vargas, P.; Acosta-Vargas, G.; Salvador-Acosta, B.; Jadán-Guerrero, J. Addressing Web Accessibility Challenges with Generative Artificial Intelligence Tools for Inclusive Education. In Proceedings of the 2024 Tenth International Conference on eDemocracy & eGovernment (ICEDEG), Lucerne, Switzerland, 24–26 June 2024; pp. 1–7.

- York, E. Evaluating ChatGPT: Generative AI in UX Design and Web Development Pedagogy. In Proceedings of the 41st ACM International Conference on Design of Communication (SIGDOC ’23), Orlando, FL, USA, 26–28 October 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 197–201.

- Interactions. Volume XXXI.1 January—February 2024. Available online: https://interactions.acm.org/archive/toc/january-february-2024/ (accessed on 25 October 2024).

- Siontis, K.C.; Attia, Z.I.; Asirvatham, S.J.; Friedman, P.A. ChatGPT hallucinating: Can it get any more humanlike? Eur. Heart J. 2024, 45, 321–323.

- Pascual-Almenara, A.; Granollers-Saltiveri, T. Combining two inspection methods: Usability heuristic evaluation and WCAG guidelines to assess e-commerce web-sites. In Human-Computer Interaction; Ruiz, P.H., Agredo-Delgado, V., Kawamoto, A.L.S., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 1–16.

- WCAG 2.2 Understanding Docs. 2023. Available online: https://www.w3.org/WAI/WCAG22/Understanding/ (accessed on 25 October 2024).

- Casare, A.; Guimarães da Silva, C.; Martins, P.; Moraes, R. Mapping WCAG Guidelines to Nielsen’s Heuristics. In Proceedings of the 16th International Conference on Applied Computing, Cagliari, Italy, 7–9 November 2019.

- García Muñoz, O. Pautas de Accesibilidad Cognitiva Web. García Muñoz, O (Plena Inclusión Madrid). 2020. Available online: https://plenainclusionmadrid.org/wp-content/uploads/2020/12/Guia-Pautas-Accesibilidad-2020-final.pdf (accessed on 25 October 2024).

- Campoverde-Molina, M.; Luján-Mora, S.; García, L.V. Empirical Studies on Web Accessibility of Educational Websites: A Systematic Literature Review. IEEE Access 2020, 8, 91676–91700.

- Nielsen, J.; Tahir, M. Homepage Usability: 50 Websites Deconstructed; New Riders Press: Indianapolis, IN, USA, 2001.

- Brooke, J. SUS: A ‘quick and dirty’ usability scale. In Usability Evaluation in Industry; Jordan, P.W., Thomas, B., Weerdmeester, A., McClelland, I.I., Eds.; Taylor & Francis: London, UK, 1996; pp. 189–194.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).