Author Contributions

Conceptualization, J.D.C. and G.W.; methodology, G.W., A.C., Y.C. and Y.J.; formal analysis, G.W., A.C., Y.C. and Y.J.; investigation, G.W.; resources, A.C., Y.C. and Y.J.; data curation, G.W.; writing—original draft preparation, G.W., A.C., Y.C. and Y.J.; software, L.X.; supervision, J.D.C.; project administration, J.D.C. and G.W.; funding acquisition, J.D.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Amazon Alexa AI grant.

Data Availability Statement

Our annotated corpus and annotation guidelines are publicly available under the Apache 2.0 license at https://github.com/emorynlp/MLCG (accessed on 27 July 2023).

Acknowledgments

We gratefully acknowledge the support of the Amazon Alexa AI grant. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of Alexa AI.

Conflicts of Interest

The authors declare no conflicts of interest. The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Li, X.; Palmer, M.; Xue, N.; Ramshaw, L.; Maamouri, M.; Bies, A.; Conger, K.; Grimes, S.; Strassel, S. Large Multi-lingual, Multi-level and Multi-genre Annotation Corpus. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), Portorož, Slovenia, 23–28 May 2016; pp. 906–913.
2. Zeldes, A.; Simonson, D. Different Flavors of GUM: Evaluating Genre and Sentence Type Effects on Multilayer Corpus Annotation Quality. In Proceedings of the 10th Linguistic Annotation Workshop Held in Conjunction with ACL 2016 (LAW-X 2016), Berlin, Germany, 11 August 2016; pp. 68–78.
3. Zeldes, A. The GUM corpus: Creating multilayer resources in the classroom. Lang. Resour. Eval. 2017, 51, 581–612.
4. Zeldes, A. Multilayer Corpus Studies; Routledge: Abingdon, UK, 2018.
5. Gessler, L.; Peng, S.; Liu, Y.; Zhu, Y.; Behzad, S.; Zeldes, A. AMALGUM—A Free, Balanced, Multilayer English Web Corpus. In Proceedings of the 12th Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 5267–5275.
6. Grishman, R.; Sundheim, B.M. Message understanding conference-6: A brief history. In Proceedings of the COLING 1996 Volume 1: The 16th International Conference on Computational Linguistics, Copenhagen, Denmark, 5–9 August 1996.
7. Doddington, G.R.; Mitchell, A.; Przybocki, M.A.; Ramshaw, L.A.; Strassel, S.M.; Weischedel, R.M. The automatic content extraction (ACE) program-tasks, data, and evaluation. In Proceedings of the LREC, Lisbon, Portugal, 26–28 May 2004; Volume 2, pp. 837–840.
8. Hovy, E.; Marcus, M.; Palmer, M.; Ramshaw, L.; Weischedel, R. OntoNotes: The 90% solution. In Proceedings of the Human Language Technology Conference of the NAACL, New York, NY, USA, 4–9 June 2006; pp. 57–60.
9. Silvano, P.; Leal, A.; Silva, F.; Cantante, I.; Oliveira, F.; Mario Jorge, A. Developing a multilayer semantic annotation scheme based on ISO standards for the visualization of a newswire corpus. In Proceedings of the 17th Joint ACL-ISO Workshop on Interoperable Semantic Annotation, Groningen, The Netherlands, 14–18 June 2021; pp. 1–13.
10. Bamman, D.; Lewke, O.; Mansoor, A. An Annotated Dataset of Coreference in English Literature. In Proceedings of the 12th Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 44–54.
11. Chen, H.; Fan, Z.; Lu, H.; Yuille, A.; Rong, S. PreCo: A Large-scale Dataset in Preschool Vocabulary for Coreference Resolution. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 172–181.
12. Guha, A.; Iyyer, M.; Bouman, D.; Boyd-Graber, J. Removing the training wheels: A coreference dataset that entertains humans and challenges computers. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Denver, CO, USA, 31 May–5 June 2015; pp. 1108–1118.
13. Apostolova, E.; Tomuro, N.; Mongkolwat, P.; Demner-Fushman, D. Domain adaptation of coreference resolution for radiology reports. In Proceedings of the BioNLP: Proceedings of the 2012 Workshop on Biomedical Natural Language Processing, Montreal, QC, Canada, 3–8 June 2012; pp. 118–121.
14. Pustejovsky, J.; Castano, J.; Robert, I.; Sauri, R. TimeML: Robust Specification of Event and Temporal Expressions in Text. New Dir. Quest. Answ. 2003, 3, 28–34.
15. Verhagen, M.; Gaizauskas, R.; Schilder, F.; Hepple, M.; Katz, G.; Pustejovsky, J. SemEval-2007 Task 15: TempEval Temporal Relation Identification. In Proceedings of the Fourth International Workshop on Semantic Evaluations (SemEval-2007), Prague, Czech Republic, 23–24 June 2007; pp. 75–80.
16. Verhagen, M.; Saurí, R.; Caselli, T.; Pustejovsky, J. SemEval-2010 Task 13: TempEval-2. In Proceedings of the 5th International Workshop on Semantic Evaluation, Uppsala, Sweden, 15–16 July 2010; pp. 57–62.
17. UzZaman, N.; Llorens, H.; Derczynski, L.; Allen, J.; Verhagen, M.; Pustejovsky, J. SemEval-2013 Task 1: TempEval-3: Evaluating Time Expressions, Events, and Temporal Relations. In Proceedings of the Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 2: Proceedings of the Seventh International Workshop on Semantic Evaluation (SemEval 2013), Atlanta, GA, USA, 14–15 June 2013; pp. 1–9.
18. Ning, Q.; Wu, H.; Roth, D. A Multi-Axis Annotation Scheme for Event Temporal Relations. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 1318–1328.
19. Zhang, Y.; Xue, N. Neural Ranking Models for Temporal Dependency Structure Parsing. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3339–3349.
20. Yao, J.; Qiu, H.; Min, B.; Xue, N. Annotating Temporal Dependency Graphs via Crowdsourcing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 5368–5380.
21. Van Gysel, J.E.; Vigus, M.; Chun, J.; Lai, K.; Moeller, S.; Yao, J.; O’Gorman, T.; Cowell, A.; Croft, W.; Huang, C.R.; et al. Designing a Uniform Meaning Representation for Natural Language Processing. KI-Künstliche Intell. 2021, 35, 343–360.
22. Bethard, S.; Kolomiyets, O.; Moens, M.F. Annotating Story Timelines as Temporal Dependency Structures. In Proceedings of the Eighth International Conference on Language Resources and Evaluation (LREC’12), Istanbul, Turkey, 23–25 May 2012; pp. 2721–2726.
23. Mostafazadeh, N.; Chambers, N.; He, X.; Parikh, D.; Batra, D.; Vanderwende, L.; Kohli, P.; Allen, J. A Corpus and Cloze Evaluation for Deeper Understanding of Commonsense Stories. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 839–849.
24. O’Gorman, T.; Wright-Bettner, K.; Palmer, M. Richer Event Description: Integrating event coreference with temporal, causal and bridging annotation. In Proceedings of the 2nd Workshop on Computing News Storylines (CNS 2016), Austin, TX, USA, 5 November 2016; pp. 47–56.
25. Araki, J. Extraction of Event Structures from Text. Ph.D. Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, 2018.
26. Talmy, L. Force dynamics in language and cognition. Cogn. Sci. 1988, 12, 49–100.
27. Wolff, P.; Klettke, B.; Ventura, T.; Song, G. Expressing Causation in English and Other Languages. In Categorization Inside and Outside the Laboratory: Essays in Honor of Douglas L. Medin; American Psychological Association: Washington, DC, USA, 2005; pp. 29–48.
28. Mirza, P.; Sprugnoli, R.; Tonelli, S.; Speranza, M. Annotating Causality in the TempEval-3 Corpus. In Proceedings of the EACL 2014 Workshop on Computational Approaches to Causality in Language (CAtoCL), Gothenburg, Sweden, 26 April 2014; pp. 10–19.
29. Mostafazadeh, N.; Grealish, A.; Chambers, N.; Allen, J.; Vanderwende, L. CaTeRS: Causal and Temporal Relation Scheme for Semantic Annotation of Event Structures. In Proceedings of the Fourth Workshop on Events, San Diego, CA, USA, 17 June 2016; pp. 51–61.
30. Sloman, S.; Barbey, A.; Hotaling, J. A Causal Model Theory of the Meaning of Cause, Enable, and Prevent. Cogn. Sci. 2009, 33, 21–50.
31. Cao, A.; Geiger, A.; Kreiss, E.; Icard, T.; Gerstenberg, T. A Semantics for Causing, Enabling, and Preventing Verbs Using Structural Causal Models. In Proceedings of the 45th Annual Conference of the Cognitive Science Society, Sydney, Australia, 26–29 July 2023.
32. Beller, A.; Bennett, E.; Gerstenberg, T. The language of causation. In Proceedings of the 42nd Annual Conference of the Cognitive Science Society, Online, 29 July–1 August 2020.
33. Gerstenberg, T.; Goodman, N.D.; Lagnado, D.; Tenenbaum, J. How, whether, why: Causal judgements as counterfactual contrasts. In Proceedings of the CogSci 2015, Pasadena, CA, USA, 22–25 July 2015; pp. 1–6.
34. Gerstenberg, T.; Goodman, N.D.; Lagnado, D.; Tenenbaum, J. A counterfactual simulation model of causal judgments for physical events. Psychol. Rev. 2021, 128, 936.
35. Bonial, C.; Babko-Malaya, O.; Choi, J.; Hwang, J.; Palmer, M. PropBank Annotation Guidelines; Center for Computational Language and Education Research Institute of Cognitive Science, University of Colorado at Boulder: Boulder, CO, USA, 2010.
36. Dunietz, J. Annotating and Automatically Tagging Constructions of Causal Language. Ph.D. Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, 2018.
37. Cao, A.; Williamson, G.; Choi, J.D. A Cognitive Approach to Annotating Causal Constructions in a Cross-Genre Corpus. In Proceedings of the 16th Linguistic Annotation Workshop (LAW-XVI) within LREC2022, Marseille, France, 24 June 2022; pp. 151–159.
38. Dunietz, J.; Levin, L.; Carbonell, J. The BECauSE Corpus 2.0: Annotating Causality and Overlapping Relations. In Proceedings of the 11th Linguistic Annotation Workshop, Valencia, Spain, 3 April 2017; pp. 95–104.
39. Lu, J.; Ng, V. Event coreference resolution: A survey of two decades of research. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 5479–5486.
40. Vilain, M.; Burger, J.D.; Aberdeen, J.; Connolly, D.; Hirschman, L. A model-theoretic coreference scoring scheme. In Proceedings of the Sixth Message Understanding Conference (MUC-6): Proceedings of a Conference, Columbia, MD, USA, 6–8 November 1995.
41. Bagga, A.; Baldwin, B. Algorithms for scoring coreference chains. In Proceedings of the First International Conference on Language Resources and Evaluation Workshop on Linguistics Coreference, Granada, Spain, 28–30 May 1998.
42. Luo, X. On coreference resolution performance metrics. In Proceedings of the Human Language Technology Conference and Conference on Empirical Methods in Natural Language Processing, Vancouver, BC, Canada, 6–8 October 2005; pp. 25–32.
43. Lewis, P.; Oguz, B.; Rinott, R.; Riedel, S.; Schwenk, H. MLQA: Evaluating Cross-lingual Extractive Question Answering. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7315–7330.
44. Verhagen, M. Temporal closure in an annotation environment. Lang. Resour. Eval. 2005, 39, 211–241.
45. Mani, I.; Verhagen, M.; Wellner, B.; Lee, C.M.; Pustejovsky, J. Machine Learning of Temporal Relations. In Proceedings of the 21st International Conference on Computational Linguistics and 44th Annual Meeting of the Association for Computational Linguistics, Sydney, Australia, 17–18 July 2006; pp. 753–760.

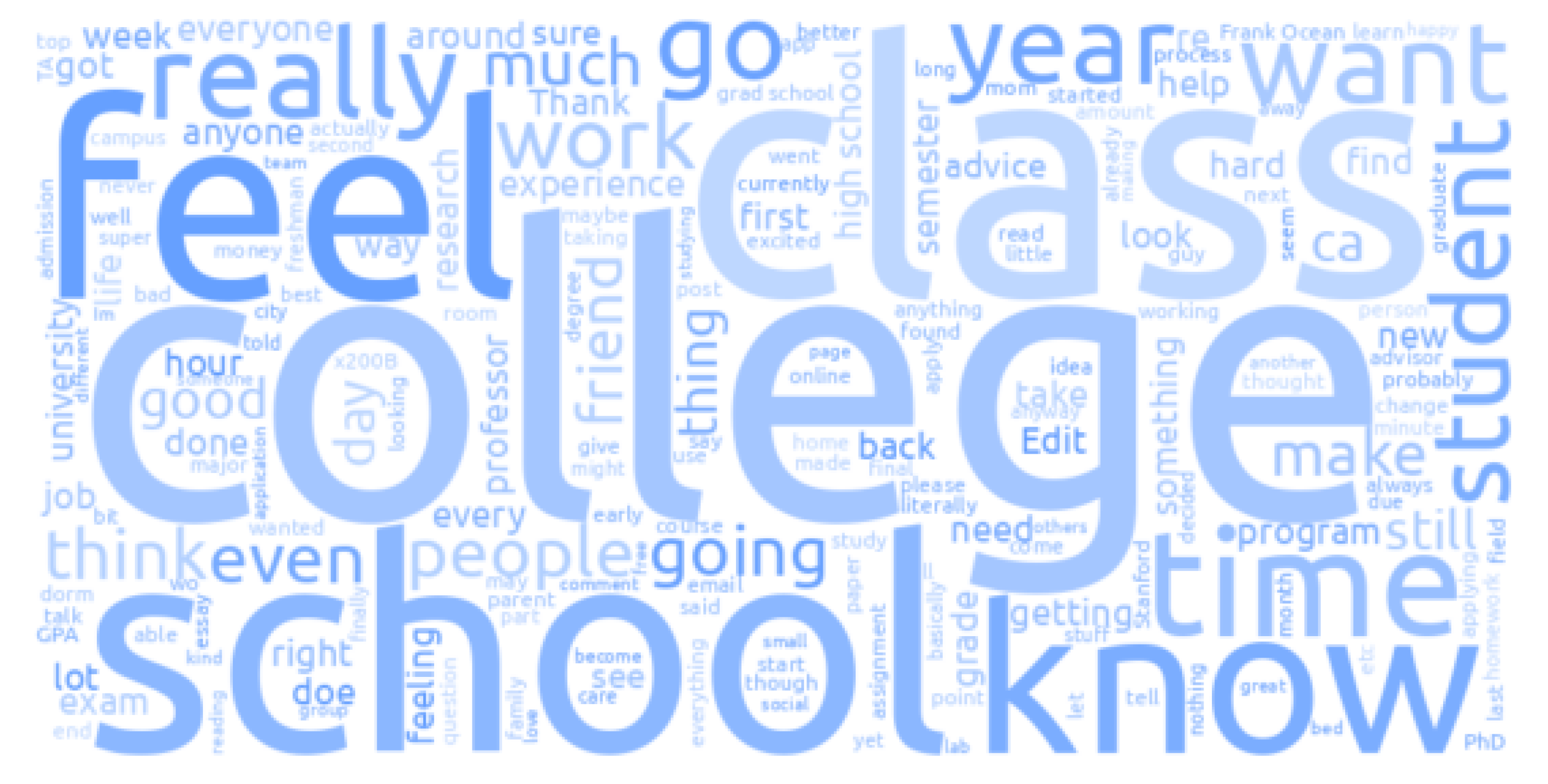

Figure 1. Use of document situation and post mentions.

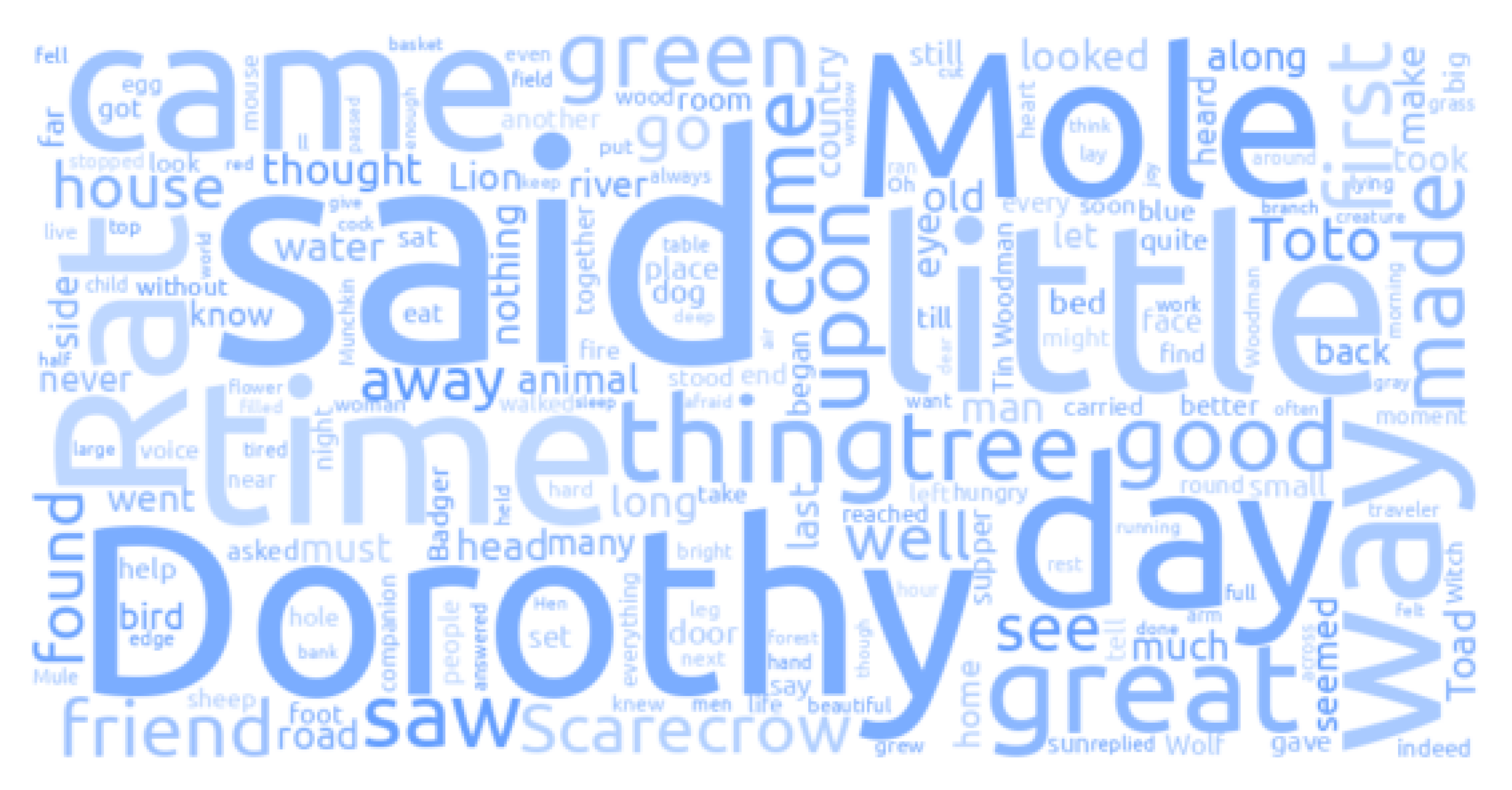

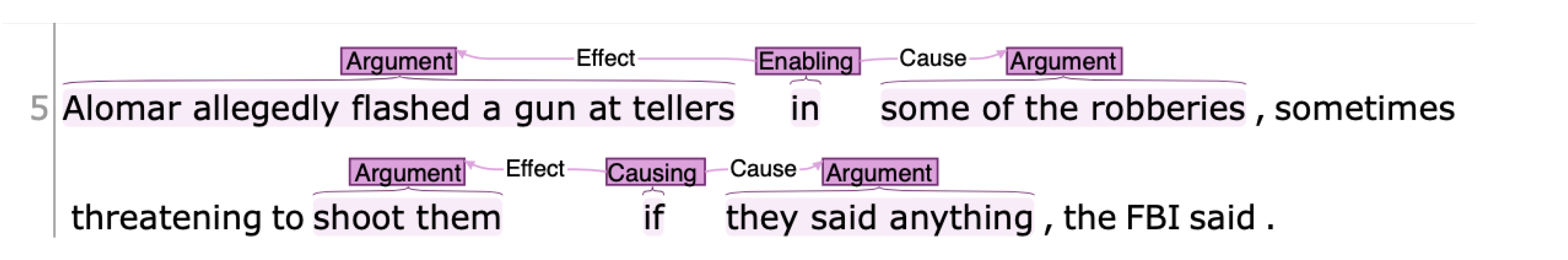

Figure 2. Example causal constructions in the Constructicon.

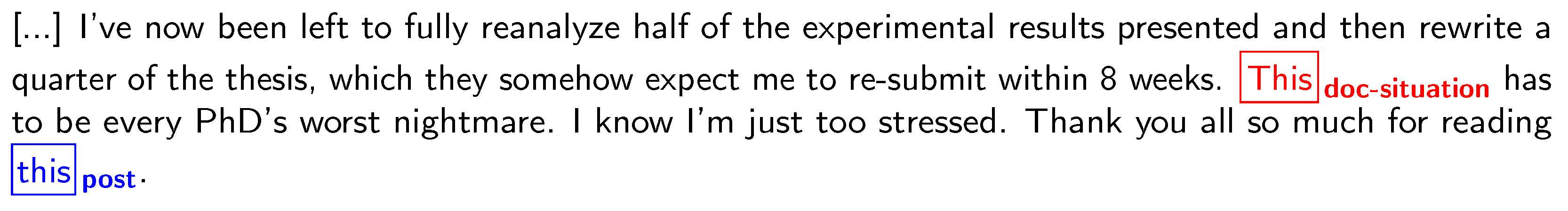

Figure 3. Example of causal annotation using the INCEpTION tool. The image shows the annotation of the fifth sentence in a news document. Span labels represent cause type; edge labels represent argument type.

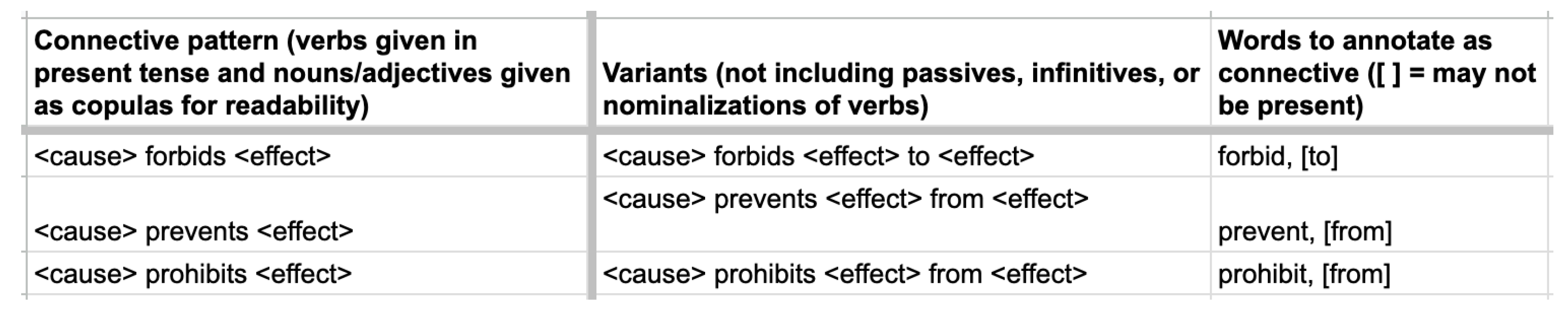

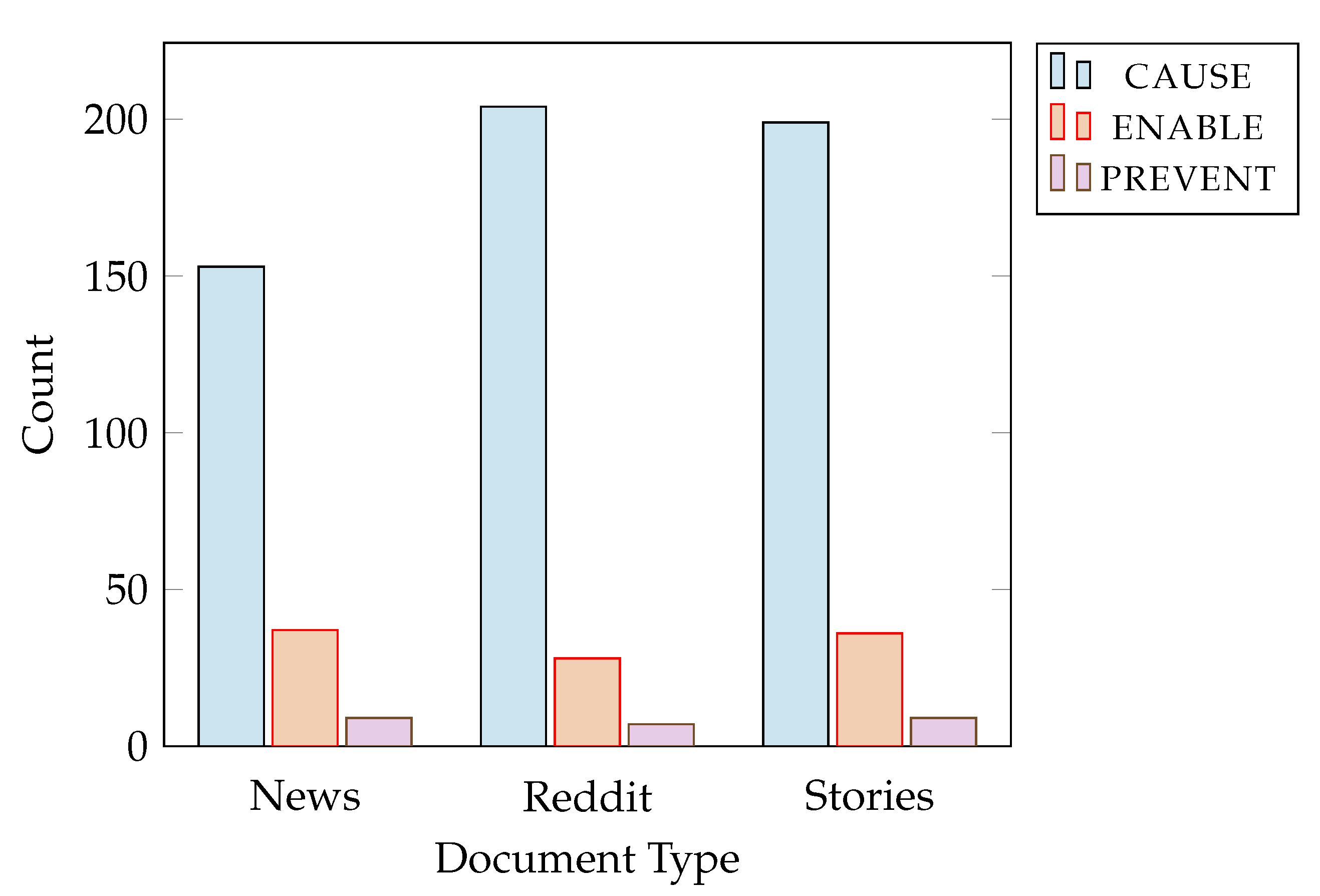

Figure 4. Bar graph of causal counts as presented in Table 13.

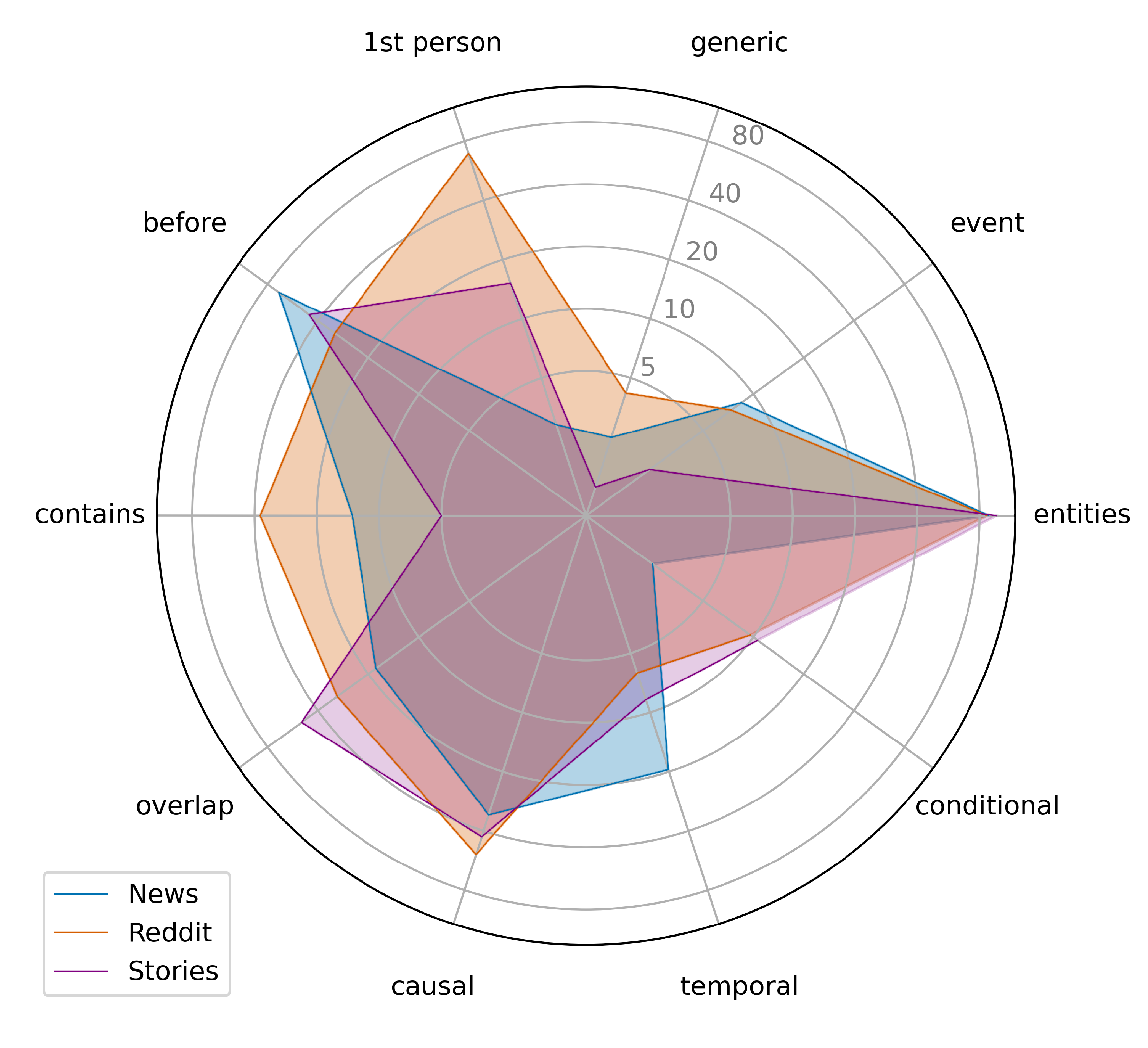

Figure 5. Visual representation of genre characteristics. Coreference: 1st person, generic, event, and entities. Causal: conditional connectives (‘if’), causal connectives (‘for’, ‘to’, ‘because’, ‘so’), and temporal connectives (‘when’, ‘after’). Temporal: overlap, contains/contained, and before/after. All percentages are from the tables above. The graph is scaled logarithmically.

Table 1. Defining cause, enable, and prevent according to [27].

| | Patient Tendency toward Result | Affector–Patient Concordance | Occurrence of Result |
|---|---|---|---|
| cause | N | N | Y |
| enable | Y | Y | Y |
| prevent | Y | N | N |
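As a reading aid, the three rows of Table 1 can be treated as a lookup from three yes/no features to a causation type. The sketch below is only an illustration of that reading: the function name `classify_causation`, the boolean encoding, and the choice to return `None` for combinations the table does not list are assumptions, not part of the annotation guidelines.

```python
from typing import Optional

# Rows of Table 1, keyed by
# (patient tendency toward result, affector-patient concordance, occurrence of result).
# True stands for "Y" and False for "N" (an encoding assumed here for illustration).
CAUSE_TYPES = {
    (False, False, True): "cause",
    (True, True, True): "enable",
    (True, False, False): "prevent",
}


def classify_causation(tendency: bool, concordance: bool, result: bool) -> Optional[str]:
    """Return the causation type for a feature combination listed in Table 1.

    Combinations that the table does not list return None (hypothetical choice).
    """
    return CAUSE_TYPES.get((tendency, concordance, result))


if __name__ == "__main__":
    print(classify_causation(False, False, True))
    print(classify_causation(True, True, True))
    print(classify_causation(True, False, False))
```

Only three of the eight possible feature combinations are defined by the table, which is why the sketch returns `None` rather than guessing a label for the rest.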

Table 2. Table of temporal relations.

| Relation | Definition |
|---|---|
| A before B | event A finished before event B started |
| A after B | event A started after event B finished |
| A contains B | the run time of event A contains the time of event B |
| A contained-in B | the run time of event A is contained in the time of event B |
| A overlap B | the run times of events A and B overlap |
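The definitions in Table 2 can be illustrated with numeric intervals. This is a minimal sketch under stated assumptions: events are modelled as closed intervals with known start and end times (the corpus itself is annotated from text, not from timestamps), and the `Interval` type, the `temporal_relation` function, and the handling of identical or touching intervals as overlap are hypothetical choices made here.

```python
from typing import NamedTuple


class Interval(NamedTuple):
    """Hypothetical run time of an event, with start <= end."""
    start: float
    end: float


def temporal_relation(a: Interval, b: Interval) -> str:
    """Map two event run times to one of the Table 2 relation labels."""
    if a.end < b.start:
        return "before"        # A finished before B started
    if a.start > b.end:
        return "after"         # A started after B finished
    if a == b:
        return "overlap"       # assumption: identical run times count as overlap
    if a.start <= b.start and b.end <= a.end:
        return "contains"      # A's run time contains B's
    if b.start <= a.start and a.end <= b.end:
        return "contained-in"  # A's run time lies inside B's
    return "overlap"           # partial overlap, including touching endpoints


if __name__ == "__main__":
    print(temporal_relation(Interval(0, 1), Interval(2, 3)))
    print(temporal_relation(Interval(0, 10), Interval(2, 3)))
    print(temporal_relation(Interval(0, 5), Interval(3, 8)))
```

Checking before and after first keeps the remaining branches limited to intervals that share at least one point, so the containment tests only need to compare endpoints.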

Table 3. Corpus composition by data source.

| | Causal | Coref | Temporal |
|---|---|---|---|
| CNN | 50 | 50 | 50 |
| Fables | 50 | 50 | 50 |
| Reddit | 110 | 110 | 160 |
| Reuters | 50 | 50 | 50 |
| Wind in the Willows | - | - | 50 |
| Wizard of Oz | 50 | 50 | 50 |
| Total | 310 | 310 | 410 |

Table 4. Token and sentence count, average document length in tokens and sentences, and average sentence length in tokens for each genre.

| | News | Reddit | Stories | Total |
|---|---|---|---|---|
| Documents | 100 | 160 | 150 | 410 |
| Tokens | 14,442 | 22,668 | 21,412 | 58,522 |
| Sentences | 606 | 1140 | 851 | 2597 |
| Tokens per document (avg.) | 144.42 | 141.68 | 142.75 | 142.74 |
| Sentences per document (avg.) | 6.06 | 7.13 | 5.67 | 6.33 |
| Tokens per sentence (avg.) | 23.83 | 19.88 | 25.16 | 22.53 |

Table 5. Comparison of coreference performance on different text types annotated using the same guidelines.

| Text Type | MUC | B³ | CEAF | Avg. |
|---|---|---|---|---|
| News | 68.73 | 65.43 | 64.70 | 66.29 |
| Reddit | 85.29 | 80.21 | 71.61 | 79.04 |
| Stories | 88.75 | 83.12 | 75.49 | 82.45 |
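The Avg. column in Table 5 is consistent with an unweighted mean of the three metric scores in each row. The table does not state how the average was computed, so the sketch below is only a check of that assumption; the `SCORES` dictionary and the `mean_score` helper are names introduced here for illustration.

```python
# Scores copied from Table 5; each tuple holds the three metric columns in table order.
SCORES = {
    "News": (68.73, 65.43, 64.70),
    "Reddit": (85.29, 80.21, 71.61),
    "Stories": (88.75, 83.12, 75.49),
}


def mean_score(values: tuple) -> float:
    """Unweighted mean of the metric scores, rounded to two decimals (assumed method)."""
    return round(sum(values) / len(values), 2)


if __name__ == "__main__":
    for text_type, values in SCORES.items():
        print(text_type, mean_score(values))
```

Under this assumption the computed values match the reported averages for all three text types.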

Table 6. Count of mention types in different text types.

| Category | News n | News Freq. | News Mean | Reddit n | Reddit Freq. | Reddit Mean | Stories n | Stories Freq. | Stories Mean |
|---|---|---|---|---|---|---|---|---|---|
| entity | 1497 | 88.6% | 14.97 | 1947 | 86.8% | 17.70 | 2241 | 96.2% | 22.41 |
| event | 143 | 8.5% | 1.43 | 167 | 7.4% | 1.52 | 56 | 2.4% | 0.56 |
| generic | 42 | 2.5% | 0.42 | 95 | 4.2% | 0.86 | 32 | 1.4% | 0.32 |
| doc-situation | 0 | 0.0% | 0.00 | 27 | 1.2% | 0.25 | 0 | 0.0% | 0.00 |
| post | 7 | 0.4% | 0.07 | 6 | 0.2% | 0.05 | 1 | 0.04% | 0.01 |
| Total | 1689 | 100% | 16.89 | 2242 | 100% | 20.38 | 2330 | 100% | 23.30 |

Table 7. Count of pronouns in different text types.

| Category | News n | News Freq. | News Mean | Reddit n | Reddit Freq. | Reddit Mean | Stories n | Stories Freq. | Stories Mean |
|---|---|---|---|---|---|---|---|---|---|
| 1st pers. | 9 | 2.9% | 0.09 | 1081 | 69.4% | 9.83 | 204 | 15.2% | 2.04 |
| All pronouns | 311 | 100% | 3.11 | 1557 | 100% | 14.15 | 1346 | 100% | 13.46 |

Table 8. Statistics on coreference annotation in different text types. Column ‘1st PP’ shows statistics for first-person pronouns.

| | News Overall | News 1st PP | Reddit Overall | Reddit 1st PP | Stories Overall | Stories 1st PP |
|---|---|---|---|---|---|---|
| Chains per document (avg.) | 6.41 | 0.02 | 5.50 | 1.09 | 5.93 | 0.68 |
| Mentions per chain (avg.) | 2.64 | 4.50 | 3.72 | 9.01 | 3.94 | 3.00 |
| Characters per mention (avg.) | 10.45 | 1.33 | 4.30 | 1.35 | 5.90 | 1.50 |
| Token distance to antecedent (avg.) | 29.58 | 10.25 | 16.04 | 10.85 | 14.87 | 9.20 |

Table 9. Inter-annotator agreement (Krippendorff’s α) across genres.

| | News | Reddit | Stories |
|---|---|---|---|
| Events | 0.86 | 0.75 | 0.85 |
| Relations | 0.56 | 0.47 | 0.48 |

Table 10. Average reference time count per document.

| | DCTs per Document (avg.) | Events per Document (avg.) |
|---|---|---|
| News | 1.00 | 7.57 |
| Reddit | 1.16 | 10.32 |
| Stories | 1.00 | 11.54 |

Table 11. Representation of relation type across genre, and % of relation type connected to the DCT.

| | News % of Rels | News % DCT | Reddit % of Rels | Reddit % DCT | Stories % of Rels | Stories % DCT |
|---|---|---|---|---|---|---|
| before/after | 68.51% | 79.30% | 31.68% | 58.47% | 45.11% | 19.80% |
| contains/contained-in | 13.46% | 82.14% | 37.55% | 90.97% | 4.98% | 7.21% |
| overlap | 18.03% | 0.00% | 30.77% | 0.00% | 49.91% | 0.00% |

Table 12. Comparison of causal relation annotation performance on different text types using the same guidelines. κ indicates Cohen’s kappa, which was only calculated for agreed spans (in line with [36]).

| | News | Reddit | Stories | Overall |
|---|---|---|---|---|
| Spans () | 0.74 | 0.81 | 0.72 | 0.75 |
| Argument labels () | 0.86 | 0.93 | 0.91 | 0.90 |
| Connective spans () | 0.75 | 0.82 | 0.75 | 0.77 |
| Types of causation () | 0.89 | 0.78 | 0.82 | 0.83 |

Table 13. Counts of cause type across different text types.

| | News n | News Percent | Reddit n | Reddit Percent | Stories n | Stories Percent | Total |
|---|---|---|---|---|---|---|---|
| cause | 153 | 76.88% | 204 | 85.36% | 199 | 81.56% | 556 |
| enable | 37 | 18.59% | 28 | 11.72% | 36 | 14.75% | 101 |
| prevent | 9 | 4.52% | 7 | 2.93% | 9 | 3.69% | 25 |
| Total | 199 | 100% | 239 | 100% | 244 | 100% | 682 |

Table 14. Comparison of popular connectives across different document types.

| Connective | News n | News Freq. | Reddit n | Reddit Freq. | Stories n | Stories Freq. | Total n | Total Freq. |
|---|---|---|---|---|---|---|---|---|
| for | 29 | 14.57% | 28 | 11.72% | 45 | 18.44% | 102 | 14.96% |
| to | 29 | 14.57% | 32 | 13.39% | 34 | 13.93% | 95 | 13.93% |
| if | 5 | 2.51% | 23 | 9.62% | 26 | 10.66% | 54 | 7.92% |
| because | 3 | 1.51% | 44 | 18.41% | 4 | 1.64% | 51 | 7.48% |
| so | 2 | 1.01% | 22 | 9.21% | 22 | 9.02% | 46 | 6.74% |
| when | 13 | 6.53% | 11 | 4.60% | 20 | 8.20% | 44 | 6.45% |
| after | 26 | 13.07% | 4 | 1.67% | 1 | 0.41% | 31 | 4.55% |
| Total | 107 | 53.77% | 164 | 68.62% | 152 | 62.30% | 423 | 62.02% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).