The Detection of COVID-19 in Chest X-rays Using Ensemble CNN Techniques

Abstract

1. Introduction

2. Materials and Methods

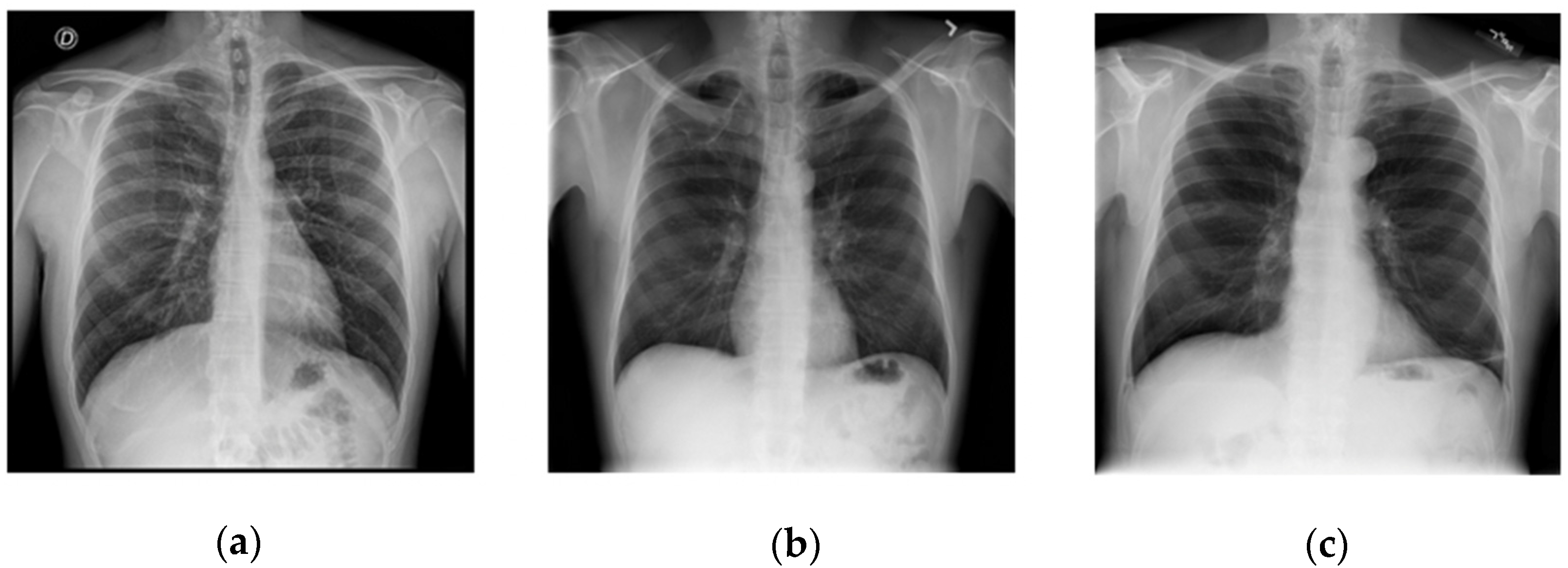

2.1. Datasets

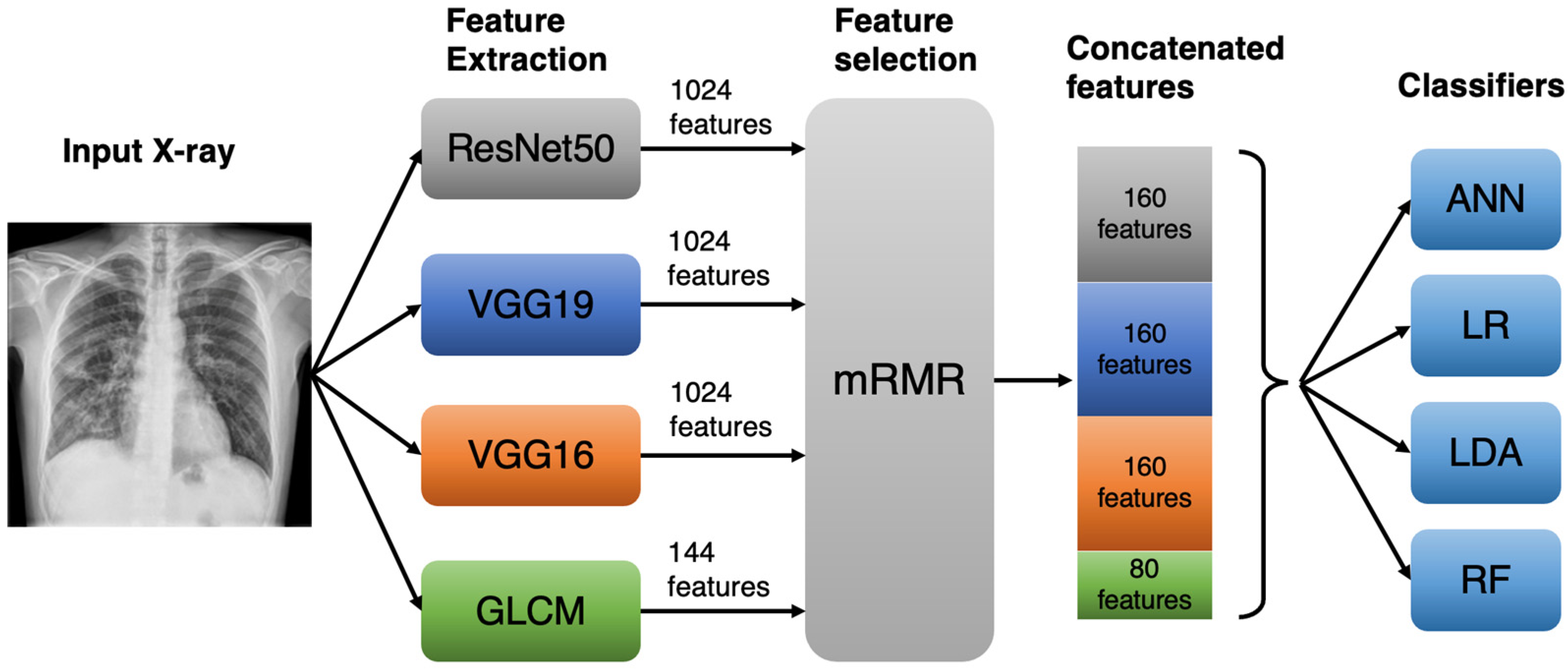

2.2. Model Overview

2.3. Convolutional Neural Networks (CNNs)

2.4. Grey-Level Co-Occurrence Matrix Features

2.5. mRMR Feature Selection
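mRMR (minimum redundancy, maximum relevance) selects features greedily. At each step it keeps the candidate that is most relevant to the class label and least redundant with the features already kept [31,32]. The reference list points to the mrmr Python package [26]. The sketch below is only a rough pure-Python illustration, not that package or the configuration used in this study. It assumes one common variant: relevance is the one-way ANOVA F-statistic, redundancy is the mean absolute Pearson correlation with the already selected features, and the score is their quotient. All function names and the toy data are hypothetical.

```python
from statistics import mean


def f_statistic(feature, labels):
    """One-way ANOVA F-statistic of one feature across the class labels (relevance)."""
    classes = sorted(set(labels))
    n, k = len(feature), len(classes)
    grand_mean = mean(feature)
    groups = [[x for x, y in zip(feature, labels) if y == c] for c in classes]
    between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups) / (k - 1)
    within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups) / (n - k)
    return between / within if within > 0 else float("inf")


def abs_pearson(a, b):
    """Absolute Pearson correlation between two feature columns (redundancy)."""
    mean_a, mean_b = mean(a), mean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return abs(cov) / (var_a * var_b) ** 0.5


def mrmr_score(name, columns, relevance, selected):
    """Relevance divided by the mean redundancy with already selected features."""
    if not selected:
        return relevance[name]
    redundancy = mean(abs_pearson(columns[name], columns[s]) for s in selected)
    return relevance[name] / max(redundancy, 1e-6)


def mrmr_select(columns, labels, k):
    """Greedily pick k feature names from columns (dict: name -> one value per image)."""
    relevance = {name: f_statistic(col, labels) for name, col in columns.items()}
    selected, candidates = [], sorted(columns)
    while candidates and len(selected) < k:
        best = max(candidates,
                   key=lambda name: mrmr_score(name, columns, relevance, selected))
        selected.append(best)
        candidates.remove(best)
    return selected


labels = [0, 0, 1, 1, 2, 2]
columns = {
    "a": [0, 1, 10, 11, 10, 11],      # separates class 0 from the rest
    "b": [0, 1, 10, 11, 10, 11],      # exact duplicate of "a"
    "c": [0, 1.2, 0, 1.2, 10, 11.2],  # separates class 2, somewhat less cleanly
}
print(mrmr_select(columns, labels, 2))  # ['a', 'c']
```

The duplicate column "b" is exactly as relevant as "a", but once "a" has been chosen it is passed over in favour of the less relevant yet non-redundant "c". This is the property that motivates mRMR when features from several extractors are pooled.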

2.6. Classification Process

2.6.1. Random Forest Classifier (RF)

2.6.2. Linear Discriminant Analysis (LDA)

2.6.3. Logistic Regression (LR)

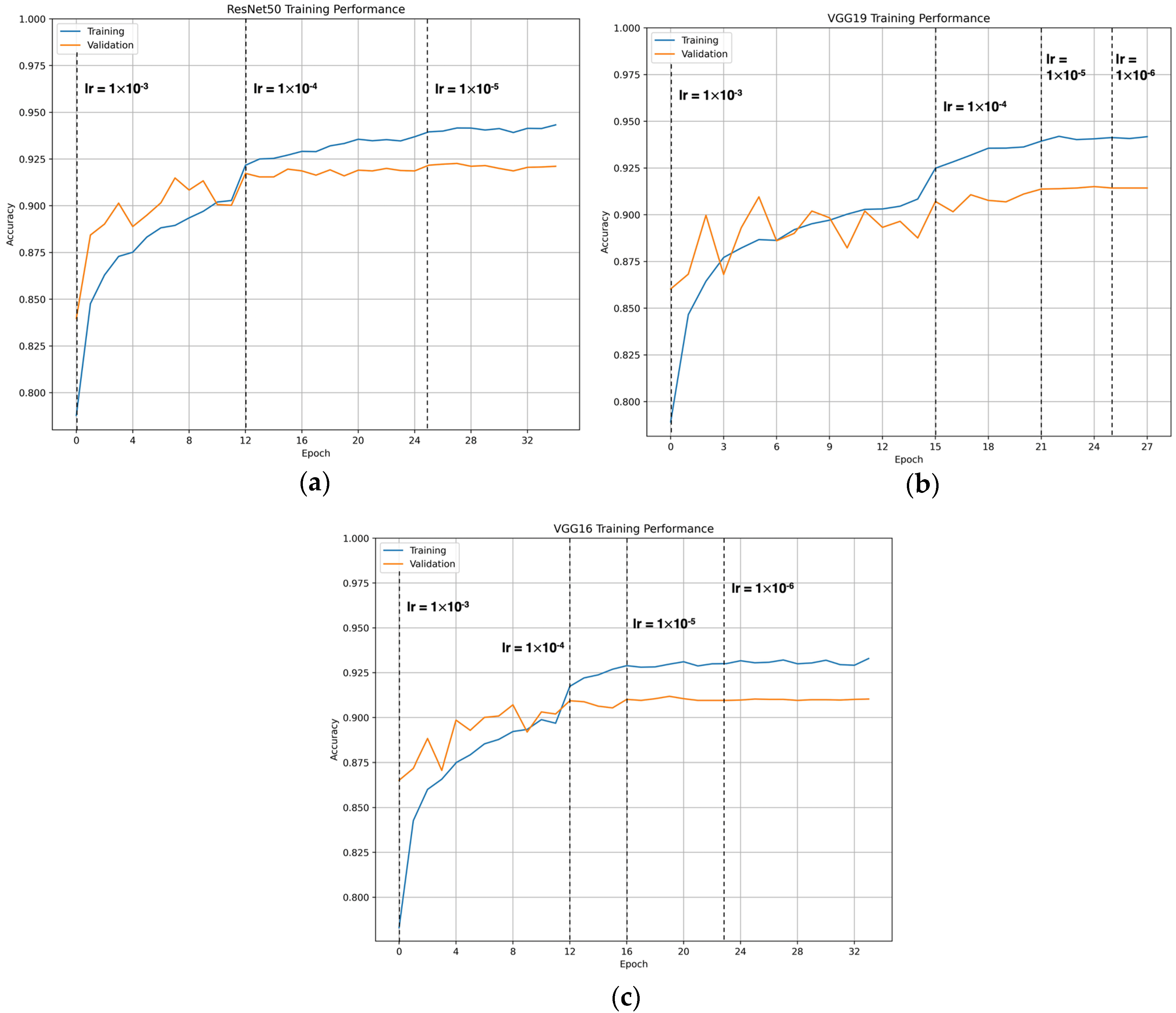

2.6.4. Artificial Neural Network (ANN)

2.7. Generalizability of Models

- (1) Training and testing on the COVID-QU-Ex dataset.
- (2) Training and testing on the Mostafiz et al. (2022) dataset.
- (3) Training on the COVID-QU-Ex dataset and testing on the Mostafiz et al. (2022) dataset.
- (4) Training on the Mostafiz et al. (2022) dataset and testing on the COVID-QU-Ex dataset.
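The four scenarios above amount to evaluating every pairing of a training dataset with a testing dataset. The sketch below shows that protocol in pure Python under stated assumptions. A toy nearest-centroid classifier stands in for the classifiers of Section 2.6, and the dataset names "A" and "B" and their one-feature samples are hypothetical placeholders for the two X-ray datasets.

```python
from itertools import product
from statistics import mean


def fit_nearest_centroid(samples):
    """Toy stand-in classifier: store the mean feature vector of each class."""
    by_class = {}
    for features, label in samples:
        by_class.setdefault(label, []).append(features)
    return {label: [mean(column) for column in zip(*rows)]
            for label, rows in by_class.items()}


def predict_nearest_centroid(model, features):
    """Assign the class whose centroid is closest in squared Euclidean distance."""
    return min(model, key=lambda label: sum(
        (f - c) ** 2 for f, c in zip(features, model[label])))


def evaluate_generalizability(datasets, fit, predict):
    """Accuracy for every (training dataset, testing dataset) pairing.

    datasets maps a name to {"train": [...], "test": [...]}, where each split is a
    list of (feature_vector, label) pairs. With two datasets this yields four
    scenarios: two within-dataset and two cross-dataset evaluations.
    """
    models = {name: fit(split["train"]) for name, split in datasets.items()}
    results = {}
    for train_name, test_name in product(datasets, repeat=2):
        test = datasets[test_name]["test"]
        correct = sum(predict(models[train_name], x) == y for x, y in test)
        results[(train_name, test_name)] = correct / len(test)
    return results


# Hypothetical one-feature data: dataset "B" is a shifted copy of dataset "A",
# mimicking a change in acquisition conditions between image sources.
datasets = {
    "A": {"train": [([0.0], "normal"), ([1.0], "covid")],
          "test": [([0.1], "normal"), ([0.9], "covid")]},
    "B": {"train": [([2.0], "normal"), ([3.0], "covid")],
          "test": [([2.1], "normal"), ([2.9], "covid")]},
}
for pair, accuracy in evaluate_generalizability(
        datasets, fit_nearest_centroid, predict_nearest_centroid).items():
    print(pair, accuracy)
```

In this toy case, within-dataset accuracy is perfect while both cross-dataset pairings drop to 0.5. This is the kind of gap that scenarios (3) and (4) are designed to expose.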

2.8. Classification Metrics

3. Results

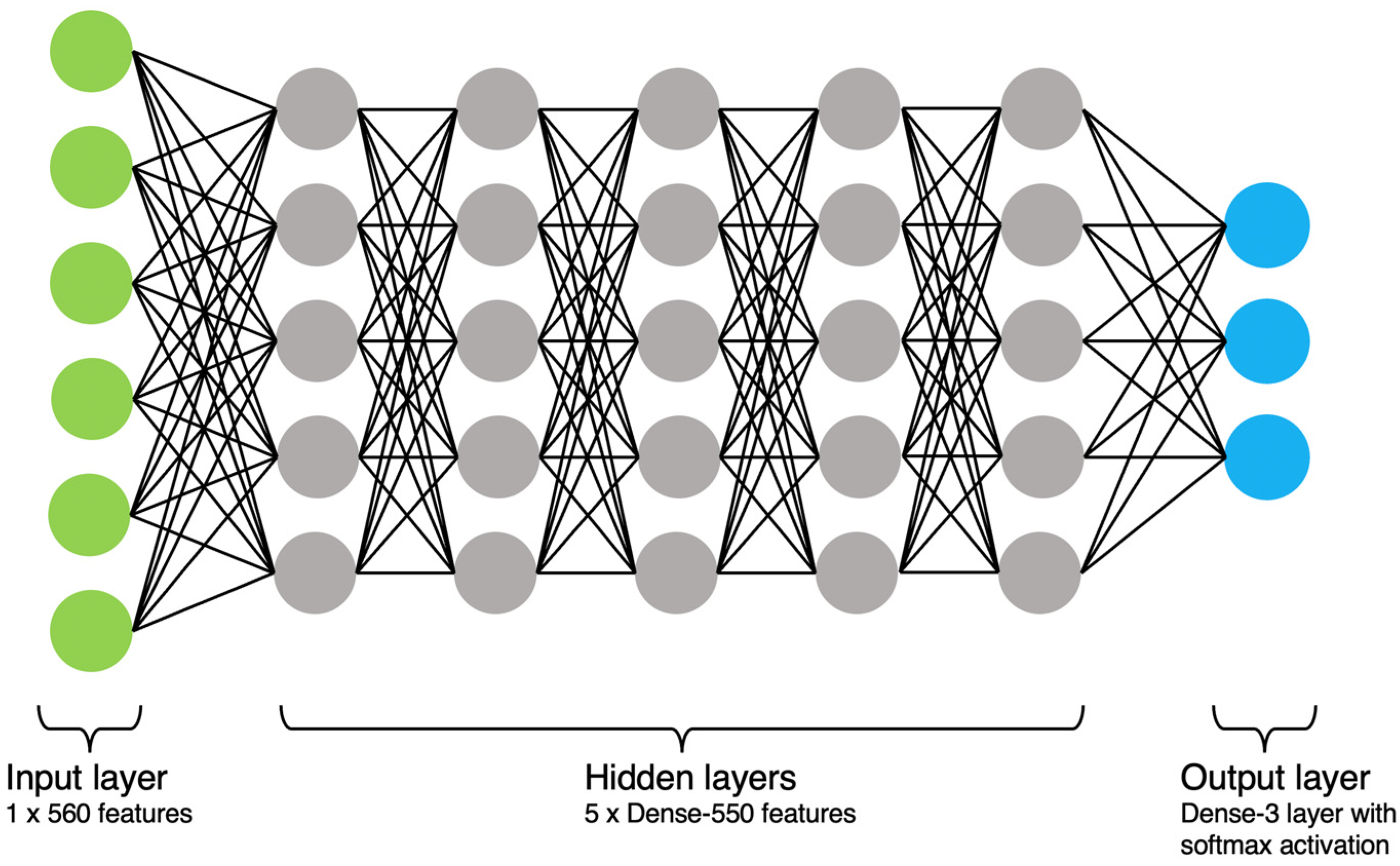

3.1. CNN Training Results

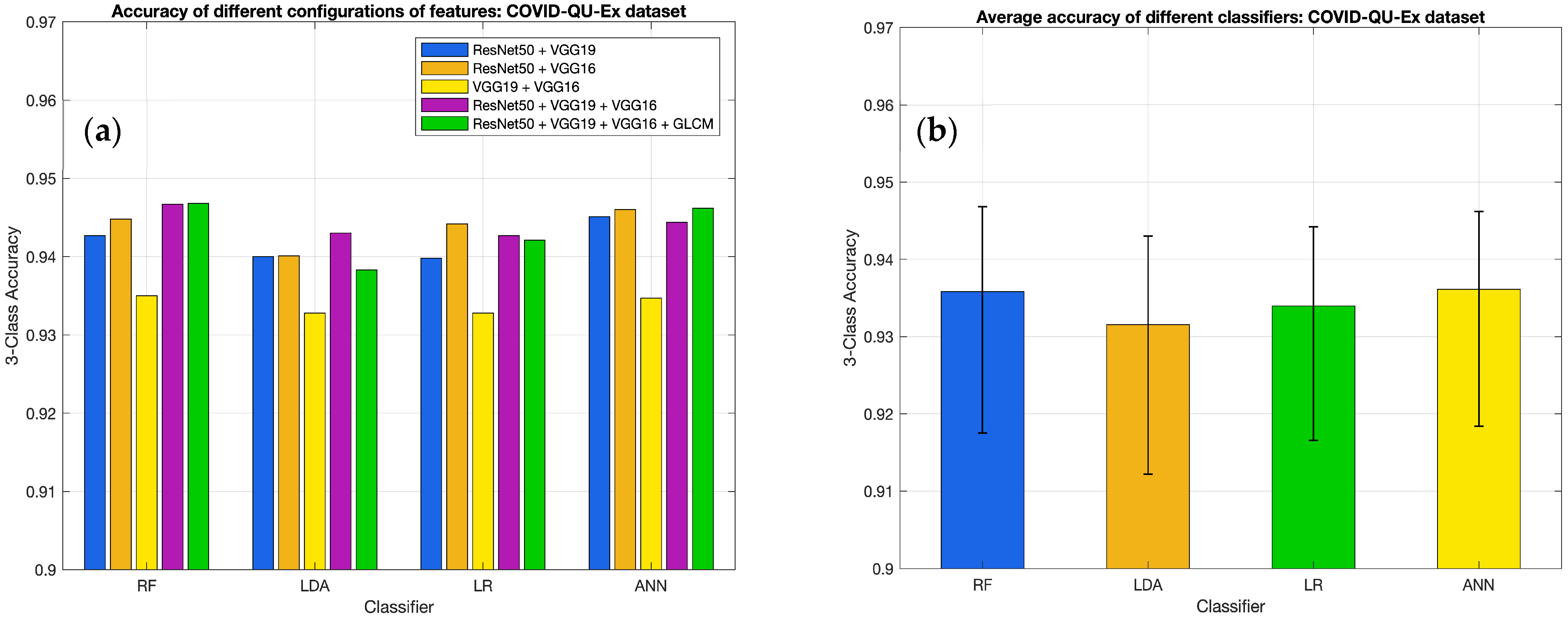

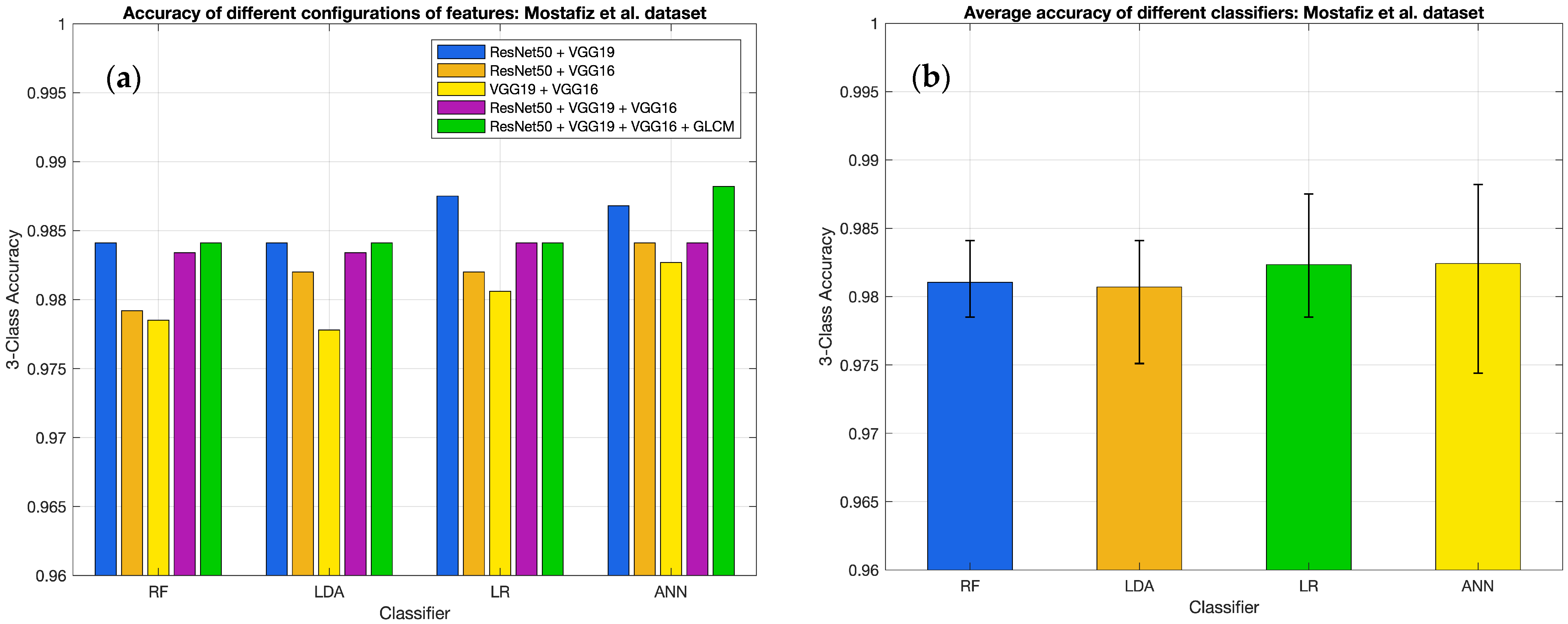

3.2. Results for Different Classifiers

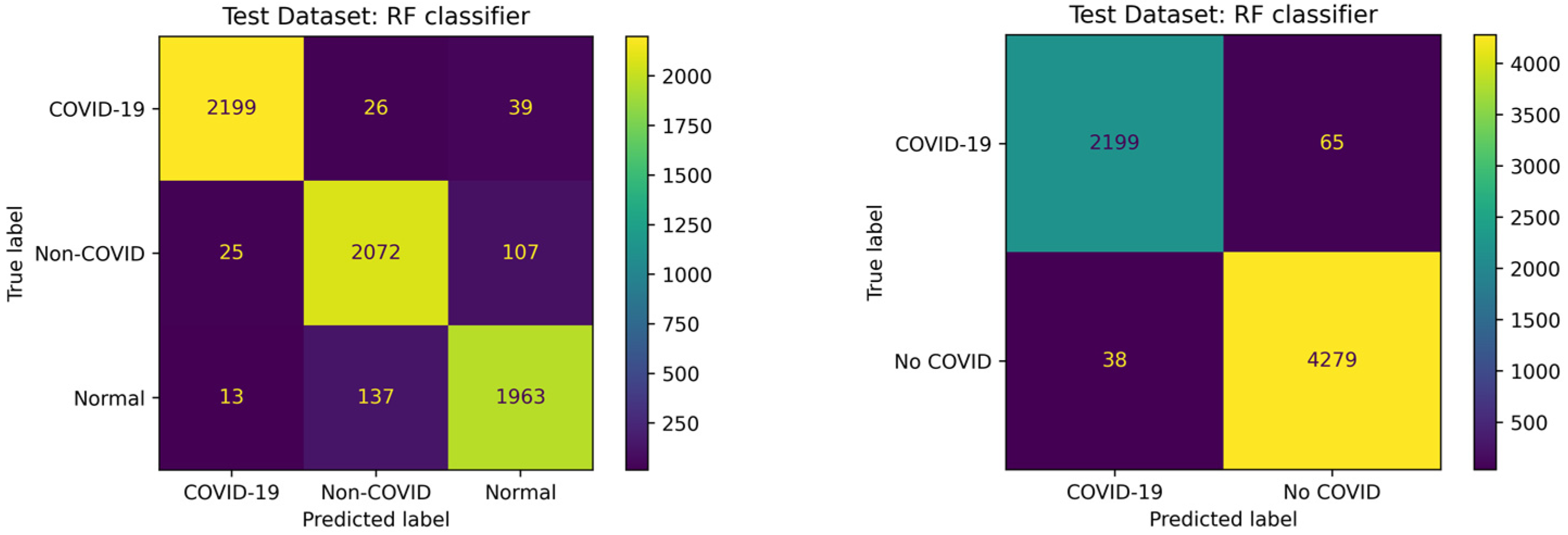

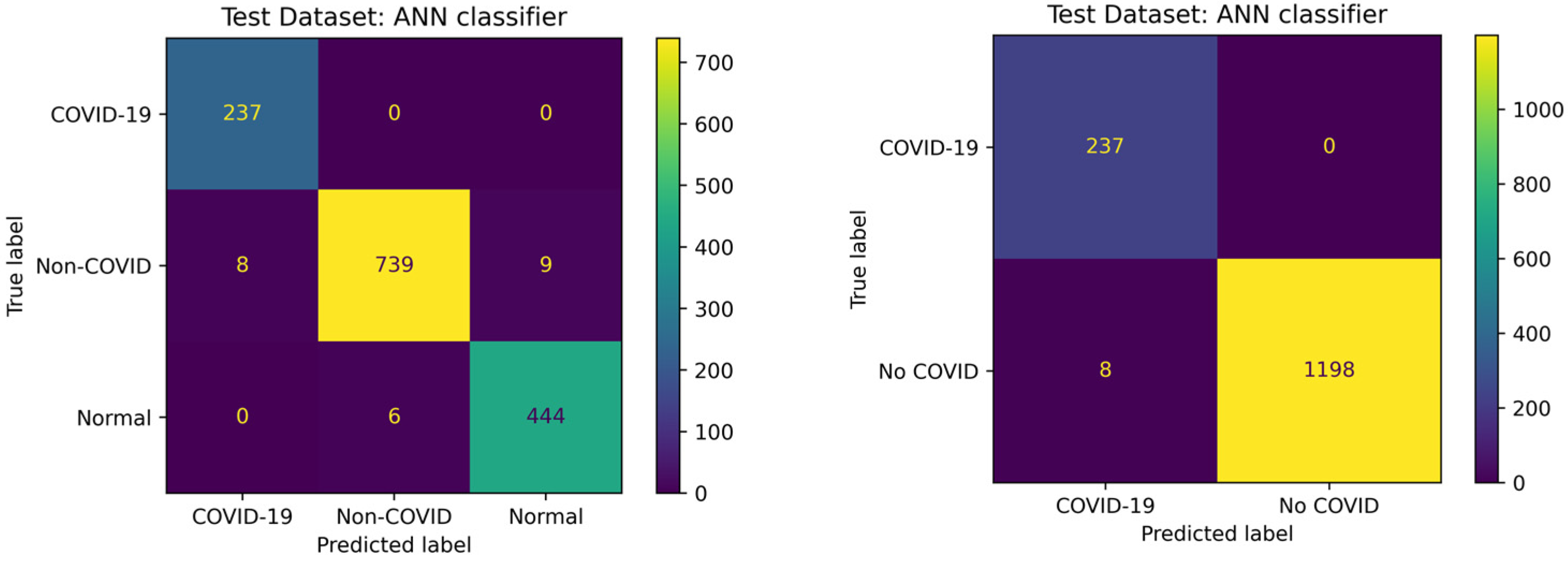

3.3. COVID-19 Detection Performance

3.4. Generalizability of Models to Unseen Data

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Kanji, J.N.; Zelyas, N.; MacDonald, C.; Pabbaraju, K.; Khan, M.N.; Prasad, A.; Hu, J.; Diggle, M.; Berenger, B.M.; Tipples, G. False Negative Rate of COVID-19 PCR Testing: A Discordant Testing Analysis. Virol. J. 2021, 18, 13.

- [2] Rodriguez-Ruiz, A.; Lång, K.; Gubern-Merida, A.; Broeders, M.; Gennaro, G.; Clauser, P.; Helbich, T.H.; Chevalier, M.; Tan, T.; Mertelmeier, T.; et al. Stand-Alone Artificial Intelligence for Breast Cancer Detection in Mammography: Comparison with 101 Radiologists. JNCI J. Natl. Cancer Inst. 2019, 111, 916–922.

- [3] Wang, L.; Lin, Z.Q.; Wong, A. COVID-Net: A Tailored Deep Convolutional Neural Network Design for Detection of COVID-19 Cases from Chest X-Ray Images. Sci. Rep. 2020, 10, 19549.

- [4] Sufian, A.; Ghosh, A.; Sadiq, A.S.; Smarandache, F. A Survey on Deep Transfer Learning to Edge Computing for Mitigating the COVID-19 Pandemic. J. Syst. Archit. 2020, 108, 101830.

- [5] Zouch, W.; Sagga, D.; Echtioui, A.; Khemakhem, R.; Ghorbel, M.; Mhiri, C.; Hamida, A.B. Detection of COVID-19 from CT and Chest X-Ray Images Using Deep Learning Models. Ann. Biomed. Eng. 2022, 50, 825–835.

- [6] Mostafiz, R.; Uddin, M.S.; Alam, N.-A.; Reza, M.; Rahman, M.M. Covid-19 Detection in Chest X-Ray through Random Forest Classifier Using a Hybridization of Deep CNN and DWT Optimized Features. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 3226–3235.

- [7] Sethy, P.K.; Behera, S.K.; Ratha, P.K.; Biswas, P. Detection of Coronavirus Disease (COVID-19) Based on Deep Features and Support Vector Machine. Int. J. Math. Eng. Manag. Sci. 2020, 5, 643–651.

- [8] Saha, P.; Sadi, M.S.; Islam, M. EMCNet: Automated COVID-19 Diagnosis from X-Ray Images Using Convolutional Neural Network and Ensemble of Machine Learning Classifiers. Inform. Med. Unlocked 2021, 22, 100505.

- [9] Karim, A.M.; Kaya, H.; Alcan, V.; Sen, B.; Hadimlioglu, I.A. New Optimized Deep Learning Application for COVID-19 Detection in Chest X-Ray Images. Symmetry 2022, 14, 1003.

- [10] Toğaçar, M.; Ergen, B.; Cömert, Z.; Özyurt, F. A Deep Feature Learning Model for Pneumonia Detection Applying a Combination of MRMR Feature Selection and Machine Learning Models. IRBM 2020, 41, 212–222.

- [11] Tahir, A.M.; Chowdhury, M.; Qiblawey, Y.; Khandakar, A.; Rahman, T.; Kiranyaz, S.; Khurshid, U.; Ibtehaz, N.; Mahmud, S.; Ezeddin, M. COVID-QU-Ex Dataset; Kaggle: San Francisco, CA, USA, 2021.

- [12] Chowdhury, M.E.H.; Rahman, T.; Khandakar, A.; Mazhar, R.; Kadir, M.A.; Mahbub, Z.B.; Islam, K.R.; Khan, M.S.; Iqbal, A.; Emadi, N.A.; et al. Can AI Help in Screening Viral and COVID-19 Pneumonia? IEEE Access 2020, 8, 132665–132676.

- [13] Rahman, T.; Khandakar, A.; Qiblawey, Y.; Tahir, A.; Kiranyaz, S.; Abul Kashem, S.B.; Islam, M.T.; Al Maadeed, S.; Zughaier, S.M.; Khan, M.S.; et al. Exploring the Effect of Image Enhancement Techniques on COVID-19 Detection Using Chest X-Ray Images. Comput. Biol. Med. 2021, 132, 104319.

- [14] De la Iglesia Vayá, M.; Saborit-Torres, J.M.; Montell Serrano, J.A.; Oliver-Garcia, E.; Pertusa, A.; Bustos, A.; Cazorla, M.; Galant, J.; Barber, X.; Orozco-Beltrán, D.; et al. BIMCV COVID-19+: A Large Annotated Dataset of RX and CT Images from COVID-19 Patients. arXiv 2021, arXiv:2006.01174.

- [15] ml-workgroup. COVID-19-Image-Repository (png directory, master branch). GitHub. Available online: https://github.com/ml-workgroup/covid-19-image-repository (accessed on 22 June 2023).

- [16] SIRM – Società Italiana di Radiologia Medica e Interventistica. 2022. Available online: https://sirm.org/ (accessed on 22 June 2023).

- [17] Eurorad.org. Available online: https://www.eurorad.org/homepage (accessed on 22 June 2023).

- [18] COVID-19 Chest X-ray Image Repository. 2020. Available online: https://figshare.com/articles/dataset/COVID-19_Chest_X-Ray_Image_Repository/12580328/3 (accessed on 22 June 2023).

- [19] Haghanifar, A. COVID-CXNet. 2023. Available online: https://github.com/armiro/COVID-CXNet (accessed on 22 June 2023).

- [20] RSNA Pneumonia Detection Challenge. Available online: https://kaggle.com/competitions/rsna-pneumonia-detection-challenge (accessed on 22 June 2023).

- [21] Chest X-ray Images (Pneumonia). Available online: https://www.kaggle.com/datasets/paultimothymooney/chest-xray-pneumonia (accessed on 22 June 2023).

- [22] Mostafiz, R. Chest-X-ray. GitHub. 2020. Available online: https://github.com/rafid909/Chest-X-ray (accessed on 22 June 2023).

- [23] Cohen, J.P.; Morrison, P.; Dao, L. COVID-19 Image Data Collection. arXiv 2020, arXiv:2003.11597.

- [24] Dadario, A.M.V. COVID-19 X rays; Kaggle: San Francisco, CA, USA, 2020.

- [25] Kermany, D.; Zhang, K.; Goldbaum, M. Labeled Optical Coherence Tomography (OCT) and Chest X-ray Images for Classification; Mendeley Data, Version 2; Elsevier Inc.: Amsterdam, The Netherlands, 2018.

- [26] Smazzanti. mRMR Python Package. GitHub. 2022. Available online: https://github.com/smazzanti/mrmr (accessed on 4 January 2023).

- [27] Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- [28] Chollet, F. Keras: Deep Learning for Humans. Available online: https://keras.io/ (accessed on 4 January 2023).

- [29] Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- [30] Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- [31] Ding, C.; Peng, H. Minimum Redundancy Feature Selection from Microarray Gene Expression Data. J. Bioinform. Comput. Biol. 2005, 3, 185–205.

- [32] Peng, H.; Long, F.; Ding, C. Feature Selection Based on Mutual Information Criteria of Max-Dependency, Max-Relevance, and Min-Redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

- [33] Zhao, Z.; Anand, R.; Wang, M. Maximum Relevance and Minimum Redundancy Feature Selection Methods for a Marketing Machine Learning Platform. In Proceedings of the 2019 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Washington, DC, USA, 5–8 October 2019; pp. 442–452.

- [34] Menze, B.H.; Kelm, B.M.; Masuch, R.; Himmelreich, U.; Bachert, P.; Petrich, W.; Hamprecht, F.A. A Comparison of Random Forest and Its Gini Importance with Standard Chemometric Methods for the Feature Selection and Classification of Spectral Data. BMC Bioinform. 2009, 10, 213.

- [35] Fawagreh, K.; Gaber, M.M.; Elyan, E. Random Forests: From Early Developments to Recent Advancements. Syst. Sci. Control. Eng. 2014, 2, 602–609.

- [36] Liu, R.; Gillies, D.F. Overfitting in Linear Feature Extraction for Classification of High-Dimensional Image Data. Pattern Recognit. 2016, 53, 73–86.

- [37] Schober, P.; Vetter, T.R. Logistic Regression in Medical Research. Obstet. Anesthesia Dig. 2021, 132, 365–366.

- [38] Upadhyay, S.; Tanwar, P.S. Classification of Benign-Malignant Pulmonary Lung Nodules Using Ensemble Learning Classifiers. In Proceedings of the 2021 6th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 8–10 July 2021; pp. 1–8.

- [39] Majumder, S.; Ullah, M.A. Feature Extraction from Dermoscopy Images for an Effective Diagnosis of Melanoma Skin Cancer. In Proceedings of the 2018 10th International Conference on Electrical and Computer Engineering (ICECE), Dhaka, Bangladesh, 20–22 December 2018; pp. 185–188.

- [40] Senior, A.; Heigold, G.; Ranzato, M.; Yang, K. An Empirical Study of Learning Rates in Deep Neural Networks for Speech Recognition. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 6724–6728.

- [41] Johny, A.; Madhusoodanan, K.N. Dynamic Learning Rate in Deep CNN Model for Metastasis Detection and Classification of Histopathology Images. Comput. Math. Methods Med. 2021, 2021, e5557168.

- [42] Hooda, R.; Mittal, A.; Sofat, S. Automated TB Classification Using Ensemble of Deep Architectures. Multimed. Tools Appl. 2019, 78, 31515–31532.

- [43] Futoma, J.; Simons, M.; Panch, T.; Doshi-Velez, F.; Celi, L.A. The Myth of Generalisability in Clinical Research and Machine Learning in Health Care. Lancet Digit. Health 2020, 2, e489–e492.

- [44] Prusa, J.; Khoshgoftaar, T.M.; Seliya, N. The Effect of Dataset Size on Training Tweet Sentiment Classifiers. In Proceedings of the 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 9–11 December 2015; pp. 96–102.

- [45] Althnian, A.; AlSaeed, D.; Al-Baity, H.; Samha, A.; Dris, A.B.; Alzakari, N.; Abou Elwafa, A.; Kurdi, H. Impact of Dataset Size on Classification Performance: An Empirical Evaluation in the Medical Domain. Appl. Sci. 2021, 11, 796.

- [46] Luo, D.; Ding, C.; Huang, H. Linear Discriminant Analysis: New Formulations and Overfit Analysis. Proc. AAAI Conf. Artif. Intell. 2011, 25, 417–422.

| Dataset and Classes | Train | Validation | Test | Total |
|---|---|---|---|---|
| COVID-QU-Ex [11] | | | | |
| COVID-19 | 7290 | 1826 | 2264 | 11,380 |
| Non-COVID Pneumonia | 7082 | 1762 | 2204 | 11,048 |
| Normal | 6730 | 1686 | 2113 | 10,529 |
| Total | 21,102 | 5274 | 6581 | 32,957 |
| Mostafiz et al. (2022) [6,22] | | | | |
| COVID-19 | 442 | 111 | 237 | 790 |
| Non-COVID Pneumonia | 1410 | 353 | 756 | 2519 |
| Normal | 840 | 210 | 450 | 1500 |
| Total | 2692 | 674 | 1443 | 4809 |

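| GLCM Property | Meaning | Equation |
|---|---|---|
| Contrast | Measure of local variations in pixel values. | $\sum_{i,j=0}^{N-1} P_{i,j}\,(i-j)^2$ |
| Dissimilarity | Measure of absolute difference in pixel intensities. | $\sum_{i,j=0}^{N-1} P_{i,j}\,\lvert i-j \rvert$ |
| Homogeneity | Measure of the local homogeneity of pixels in the image. | $\sum_{i,j=0}^{N-1} \frac{P_{i,j}}{1+(i-j)^2}$ |
| ASM (angular second moment) | Measure of overall homogeneity of pixels in the image. | $\sum_{i,j=0}^{N-1} P_{i,j}^2$ |
| Energy | Square root of ASM. | $\sqrt{\mathrm{ASM}}$ |
| Correlation | Measure of how linearly correlated pairs of pixels are over the whole image. | $\sum_{i,j=0}^{N-1} P_{i,j}\,\frac{(i-\mu_i)(j-\mu_j)}{\sigma_i \sigma_j}$ |

Here $P_{i,j}$ is the normalised co-occurrence probability of grey levels $i$ and $j$, $N$ is the number of grey levels, $\mu_i = \sum_{i,j} i\,P_{i,j}$, $\mu_j = \sum_{i,j} j\,P_{i,j}$, $\sigma_i^2 = \sum_{i,j} P_{i,j}(i-\mu_i)^2$ and $\sigma_j^2 = \sum_{i,j} P_{i,j}(j-\mu_j)^2$.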
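The GLCM properties listed above can be computed directly from a normalised co-occurrence matrix [29]. The pure-Python sketch below is illustrative only. It assumes a single pixel offset (one pixel to the right), a symmetric matrix and an image already quantised to `levels` grey levels. The distances, angles and number of grey levels used in this study are not restated here, and in practice a library routine such as scikit-image's `graycomatrix`/`graycoprops` would typically be used instead. The function names and the test image are hypothetical.

```python
from math import sqrt


def glcm(image, levels, dx=1, dy=0, symmetric=True):
    """Normalised grey-level co-occurrence matrix P for a single pixel offset.

    image is a list of rows of integer grey levels in [0, levels); each pixel is
    paired with the pixel dy rows down and dx columns to the right.
    """
    counts = [[0] * levels for _ in range(levels)]
    n_rows, n_cols = len(image), len(image[0])
    for r in range(n_rows):
        for c in range(n_cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < n_rows and 0 <= c2 < n_cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                if symmetric:  # also count the reverse pair (j, i)
                    counts[j][i] += 1
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def glcm_properties(p):
    """Standard GLCM texture properties of a normalised co-occurrence matrix."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    var_i = sum(v * (i - mu_i) ** 2 for i, _, v in cells)
    var_j = sum(v * (j - mu_j) ** 2 for _, j, v in cells)
    cov = sum(v * (i - mu_i) * (j - mu_j) for i, j, v in cells)
    asm = sum(v ** 2 for _, _, v in cells)
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "dissimilarity": sum(v * abs(i - j) for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "ASM": asm,
        "energy": sqrt(asm),
        # Correlation is undefined for a constant image; report 1.0 by convention.
        "correlation": cov / sqrt(var_i * var_j) if var_i > 0 and var_j > 0 else 1.0,
    }


checkerboard = [[0, 1, 0, 1],
                [1, 0, 1, 0]]
print(glcm_properties(glcm(checkerboard, levels=2)))
```

For this two-level checkerboard every horizontal neighbour pair differs, so contrast and dissimilarity are 1, homogeneity and ASM are 0.5, and the correlation is -1 (perfectly anti-correlated neighbours).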

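| Metric | Equation |
|---|---|
| Accuracy | $\frac{TP+TN}{TP+TN+FP+FN}$ |
| Precision | $\frac{TP}{TP+FP}$ |
| Sensitivity | $\frac{TP}{TP+FN}$ |
| Specificity | $\frac{TN}{TN+FP}$ |
| F1-score | $2 \times \frac{\text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}$ |

Here TP, TN, FP and FN denote the numbers of true positives, true negatives, false positives and false negatives, respectively.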
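For a three-class problem, these metrics are usually computed one class at a time (that class as positive, the other two as negative) and then averaged. The pure-Python sketch below assumes macro averaging (an unweighted mean over classes) and reports multi-class accuracy as correctly classified images over all images. The averaging scheme behind the reported values is an assumption here, and the function names are hypothetical.

```python
def one_vs_rest_counts(cm, k):
    """TP, FP, FN, TN for class k, treating every other class as negative.

    cm[i][j] counts images of true class i predicted as class j.
    """
    total = sum(map(sum, cm))
    tp = cm[k][k]
    fp = sum(row[k] for row in cm) - tp
    fn = sum(cm[k]) - tp
    tn = total - tp - fp - fn
    return tp, fp, fn, tn


def safe_div(num, den):
    """Division that returns 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def macro_metrics(cm):
    """Overall accuracy plus macro-averaged precision, sensitivity, specificity, F1."""
    n = len(cm)
    per_class = []
    for k in range(n):
        tp, fp, fn, tn = one_vs_rest_counts(cm, k)
        precision = safe_div(tp, tp + fp)
        sensitivity = safe_div(tp, tp + fn)
        specificity = safe_div(tn, tn + fp)
        f1 = safe_div(2 * precision * sensitivity, precision + sensitivity)
        per_class.append((precision, sensitivity, specificity, f1))
    precision, sensitivity, specificity, f1 = (sum(col) / n for col in zip(*per_class))
    # Multi-class accuracy: correctly classified images over all images.
    accuracy = sum(cm[k][k] for k in range(n)) / sum(map(sum, cm))
    return {"accuracy": accuracy, "precision": precision, "sensitivity": sensitivity,
            "specificity": specificity, "f1": f1}


# Hypothetical counts; rows/columns: COVID-19, Non-COVID pneumonia, Normal.
cm = [[50, 2, 3],
      [4, 40, 6],
      [1, 5, 44]]
print(macro_metrics(cm))
```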

| CNN | COVID-QU-Ex Dataset: Simple Training (40 Epochs) | COVID-QU-Ex Dataset: LR Reduction + Early Stopping | Mostafiz et al. Dataset: Simple Training (40 Epochs) | Mostafiz et al. Dataset: LR Reduction + Early Stopping |
|---|---|---|---|---|
| ResNet50 | 0.9184 | 0.9337 | 0.9785 | 0.9806 |
| VGG19 | 0.9123 | 0.9189 | 0.9674 | 0.9709 |
| VGG16 | 0.8991 | 0.9231 | 0.9729 | 0.9757 |
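"LR Reduction + Early Stopping" refers to lowering the learning rate when the validation loss stops improving and halting training once it has stalled for longer. Keras [28] provides this behaviour through its `ReduceLROnPlateau` and `EarlyStopping` callbacks. The pure-Python sketch below only replays a simplified version of that logic over a given sequence of validation losses to show how the two mechanisms interact. The factor and patience values are hypothetical and are not the settings used in this study.

```python
def replay_lr_reduction_and_early_stopping(val_losses, initial_lr=1e-3, factor=0.1,
                                           lr_patience=3, stop_patience=6):
    """Replay plateau-based LR reduction and early stopping over validation losses.

    Returns the learning rate used in each epoch that was run and the index of
    the epoch with the lowest validation loss (whose weights would be kept).
    """
    lr = initial_lr
    best_loss, best_epoch = float("inf"), 0
    epochs_since_best = 0     # drives early stopping
    epochs_since_change = 0   # drives learning-rate reduction
    lrs = []
    for epoch, loss in enumerate(val_losses):
        lrs.append(lr)
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_since_best = epochs_since_change = 0
            continue
        epochs_since_best += 1
        epochs_since_change += 1
        if epochs_since_best >= stop_patience:
            break                 # stop training: no improvement for too long
        if epochs_since_change >= lr_patience:
            lr *= factor          # plateau: shrink the step size
            epochs_since_change = 0
    return lrs, best_epoch


losses = [1.0, 0.8, 0.9, 0.85, 0.7, 0.75, 0.76, 0.77, 0.78, 0.79, 0.6]
lrs, best = replay_lr_reduction_and_early_stopping(
    losses, initial_lr=1.0, factor=0.5, lr_patience=2, stop_patience=4)
print(lrs, best)
```

Here the learning rate is halved twice and training stops after nine epochs, keeping epoch 4 as the best. The later improvement at epoch 10 is never reached, which is the usual trade-off of early stopping. Setting both patience values larger than the number of epochs reduces the sketch to simple fixed-length training.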

| Classifier and Metrics | ResNet50 | VGG19 | VGG16 | ResNet50 + VGG19 | ResNet50 + VGG16 | VGG19 + VGG16 | ResNet50 + VGG19 + VGG16 | ResNet50 + VGG19 + VGG16 + GLCM |
|---|---|---|---|---|---|---|---|---|
| RF | | | | | | | | |
| Accuracy | 0.9307 | 0.9175 | 0.9225 | 0.9427 | 0.9448 | 0.9350 | 0.9467 | 0.9468 |
| Precision | 0.9304 | 0.9172 | 0.9221 | 0.9425 | 0.9445 | 0.9348 | 0.9463 | 0.9465 |
| Sensitivity | 0.9302 | 0.9170 | 0.9221 | 0.9422 | 0.9445 | 0.9346 | 0.9461 | 0.9463 |
| Specificity | 0.9655 | 0.9589 | 0.9614 | 0.9715 | 0.9726 | 0.9676 | 0.9735 | 0.9735 |
| F1-score | 0.9303 | 0.9171 | 0.9221 | 0.9423 | 0.9445 | 0.9346 | 0.9462 | 0.9464 |
| LDA | | | | | | | | |
| Accuracy | 0.9268 | 0.9122 | 0.9193 | 0.9400 | 0.9401 | 0.9328 | 0.9430 | 0.9383 |
| Precision | 0.9265 | 0.9119 | 0.9189 | 0.9397 | 0.9398 | 0.9327 | 0.9427 | 0.9386 |
| Sensitivity | 0.9264 | 0.9117 | 0.9189 | 0.9396 | 0.9397 | 0.9325 | 0.9426 | 0.9379 |
| Specificity | 0.9635 | 0.9563 | 0.9598 | 0.9701 | 0.9702 | 0.9666 | 0.9716 | 0.9693 |
| F1-score | 0.9264 | 0.9118 | 0.9189 | 0.9396 | 0.9397 | 0.9325 | 0.9426 | 0.9380 |
| LR | | | | | | | | |
| Accuracy | 0.9304 | 0.9166 | 0.9231 | 0.9398 | 0.9442 | 0.9328 | 0.9427 | 0.9421 |
| Precision | 0.9301 | 0.9165 | 0.9227 | 0.9395 | 0.9437 | 0.9327 | 0.9424 | 0.9418 |
| Sensitivity | 0.9300 | 0.9163 | 0.9227 | 0.9393 | 0.9437 | 0.9325 | 0.9423 | 0.9416 |
| Specificity | 0.9654 | 0.9585 | 0.9617 | 0.9701 | 0.9722 | 0.9666 | 0.9715 | 0.9712 |
| F1-score | 0.9300 | 0.9163 | 0.9227 | 0.9394 | 0.9437 | 0.9325 | 0.9423 | 0.9417 |
| ANN | | | | | | | | |
| Accuracy | 0.9299 | 0.9184 | 0.9243 | 0.9451 | 0.9460 | 0.9347 | 0.9444 | 0.9462 |
| Precision | 0.9298 | 0.9183 | 0.9240 | 0.9448 | 0.9471 | 0.9347 | 0.9443 | 0.9460 |
| Sensitivity | 0.9295 | 0.9178 | 0.9241 | 0.9447 | 0.9472 | 0.9343 | 0.9440 | 0.9457 |
| Specificity | 0.9651 | 0.9594 | 0.9623 | 0.9727 | 0.9739 | 0.9675 | 0.9723 | 0.9732 |
| F1-score | 0.9295 | 0.9180 | 0.9239 | 0.9447 | 0.9471 | 0.9344 | 0.9440 | 0.9458 |

| Classifier and Metrics | ResNet50 | VGG19 | VGG16 | ResNet50 + VGG19 | ResNet50 + VGG16 | VGG19 + VGG16 | ResNet50 + VGG19 + VGG16 | ResNet50 + VGG19 + VGG16 + GLCM |
|---|---|---|---|---|---|---|---|---|
| RF | | | | | | | | |
| Accuracy | 0.9813 | 0.9785 | 0.9792 | 0.9841 | 0.9792 | 0.9785 | 0.9834 | 0.9841 |
| Precision | 0.9798 | 0.9794 | 0.9801 | 0.9827 | 0.9770 | 0.9792 | 0.9820 | 0.9827 |
| Sensitivity | 0.9844 | 0.9814 | 0.9786 | 0.9862 | 0.9831 | 0.9817 | 0.9858 | 0.9862 |
| Specificity | 0.9897 | 0.9879 | 0.9883 | 0.9911 | 0.9888 | 0.9881 | 0.9907 | 0.9911 |
| F1-score | 0.9821 | 0.9804 | 0.9794 | 0.9844 | 0.9800 | 0.9804 | 0.9838 | 0.9844 |
| LDA | | | | | | | | |
| Accuracy | 0.9827 | 0.9764 | 0.9751 | 0.9841 | 0.9820 | 0.9778 | 0.9834 | 0.9841 |
| Precision | 0.9813 | 0.9741 | 0.9779 | 0.9825 | 0.9805 | 0.9760 | 0.9820 | 0.9831 |
| Sensitivity | 0.9860 | 0.9804 | 0.9762 | 0.9872 | 0.9855 | 0.9816 | 0.9864 | 0.9872 |
| Specificity | 0.9904 | 0.9874 | 0.9848 | 0.9912 | 0.9901 | 0.9882 | 0.9907 | 0.9912 |
| F1-score | 0.9836 | 0.9772 | 0.9770 | 0.9848 | 0.9830 | 0.9787 | 0.9842 | 0.9851 |
| LR | | | | | | | | |
| Accuracy | 0.9820 | 0.9785 | 0.9799 | 0.9875 | 0.9820 | 0.9806 | 0.9841 | 0.9841 |
| Precision | 0.9805 | 0.9792 | 0.9829 | 0.9877 | 0.9799 | 0.9810 | 0.9836 | 0.9836 |
| Sensitivity | 0.9855 | 0.9821 | 0.9802 | 0.9890 | 0.9870 | 0.9827 | 0.9881 | 0.9881 |
| Specificity | 0.9901 | 0.9881 | 0.9875 | 0.9929 | 0.9908 | 0.9892 | 0.9915 | 0.9915 |
| F1-score | 0.9830 | 0.9806 | 0.9815 | 0.9884 | 0.9834 | 0.9819 | 0.9858 | 0.9858 |
| ANN | | | | | | | | |
| Accuracy | 0.9806 | 0.9785 | 0.9744 | 0.9868 | 0.9841 | 0.9827 | 0.9841 | 0.9882 |
| Precision | 0.9787 | 0.9777 | 0.9719 | 0.9854 | 0.9839 | 0.9834 | 0.9839 | 0.9872 |
| Sensitivity | 0.9837 | 0.9832 | 0.9793 | 0.9895 | 0.9856 | 0.9849 | 0.9861 | 0.9916 |
| Specificity | 0.9893 | 0.9891 | 0.9870 | 0.9928 | 0.9907 | 0.9905 | 0.9914 | 0.9940 |
| F1-score | 0.9812 | 0.9802 | 0.9753 | 0.9875 | 0.9847 | 0.9841 | 0.9850 | 0.9893 |

| Metric | COVID-QU-Ex Dataset with RF | Mostafiz et al. Dataset with ANN |
|---|---|---|
| Three-Class Accuracy | 0.9468 | 0.9882 |
| Binary Accuracy | 0.9843 | 0.9986 |
| Sensitivity to COVID-19 | 0.9713 | 1.0 |
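Binary accuracy exceeds three-class accuracy for both datasets. This is what one would expect if the binary figures come from merging Non-COVID pneumonia and Normal into a single non-COVID class: confusions between those two classes then stop counting as errors, while every COVID-19 error still counts. The pure-Python sketch below assumes that derivation, which is not spelled out in this table. It uses hypothetical counts and a hypothetical function name.

```python
def covid_vs_rest(cm, covid=0):
    """Collapse a multi-class confusion matrix into COVID-19 vs. non-COVID-19.

    cm[i][j] counts images of true class i predicted as class j; `covid` is the
    (assumed) index of the COVID-19 class. All other classes are merged into one
    negative class, so errors between them no longer count.
    """
    total = sum(map(sum, cm))
    tp = cm[covid][covid]
    fn = sum(cm[covid]) - tp
    fp = sum(row[covid] for row in cm) - tp
    tn = total - tp - fn - fp
    multi_class_accuracy = sum(cm[k][k] for k in range(len(cm))) / total
    return {
        "multi_class_accuracy": multi_class_accuracy,
        "binary_accuracy": (tp + tn) / total,
        "covid_sensitivity": tp / (tp + fn),
    }


# Hypothetical counts; rows/columns: COVID-19, Non-COVID pneumonia, Normal.
cm = [[90, 6, 4],
      [2, 80, 18],
      [1, 9, 90]]
print(covid_vs_rest(cm))
```

With these counts, the 27 pneumonia/normal confusions lower the three-class accuracy to about 0.867 but leave the binary accuracy at about 0.957. COVID-19 sensitivity (0.9) depends only on the first row.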

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kuzinkovas, D.; Clement, S. The Detection of COVID-19 in Chest X-rays Using Ensemble CNN Techniques. Information 2023, 14, 370. https://doi.org/10.3390/info14070370
