Improving the Effectiveness and Efficiency of Web-Based Search Tasks for Policy Workers

Abstract

1. Introduction

RQ1: What work and search tasks do policy workers (PWs) at the municipality of Utrecht perform?

RQ2: How can we design functionality that enables PWs to complete their web-based search tasks more effectively and efficiently?

2. Related Work

2.1. Generic Task Models

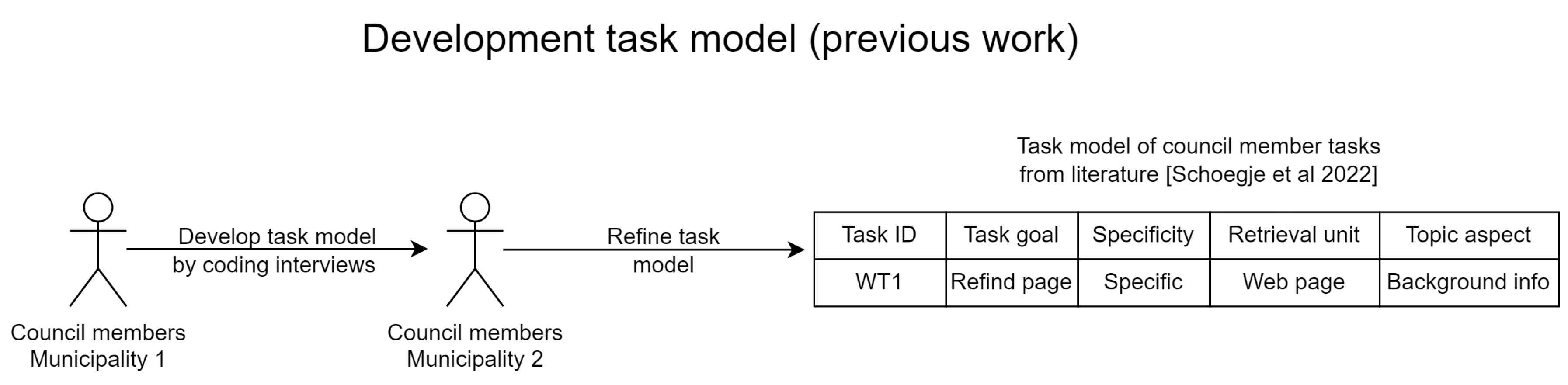

2.2. Applying Task Models to a Domain

2.3. Tasks of Policy Workers

3. Method for Identifying Policy Worker Tasks

3.1. Round 1 Interviews

3.1.1. Participants

3.1.2. Semi-Structured Interviews

3.1.3. Codebook

3.2. Round 2 Interviews

3.2.1. Participants

3.2.2. Interviews

4. Task Analysis Results

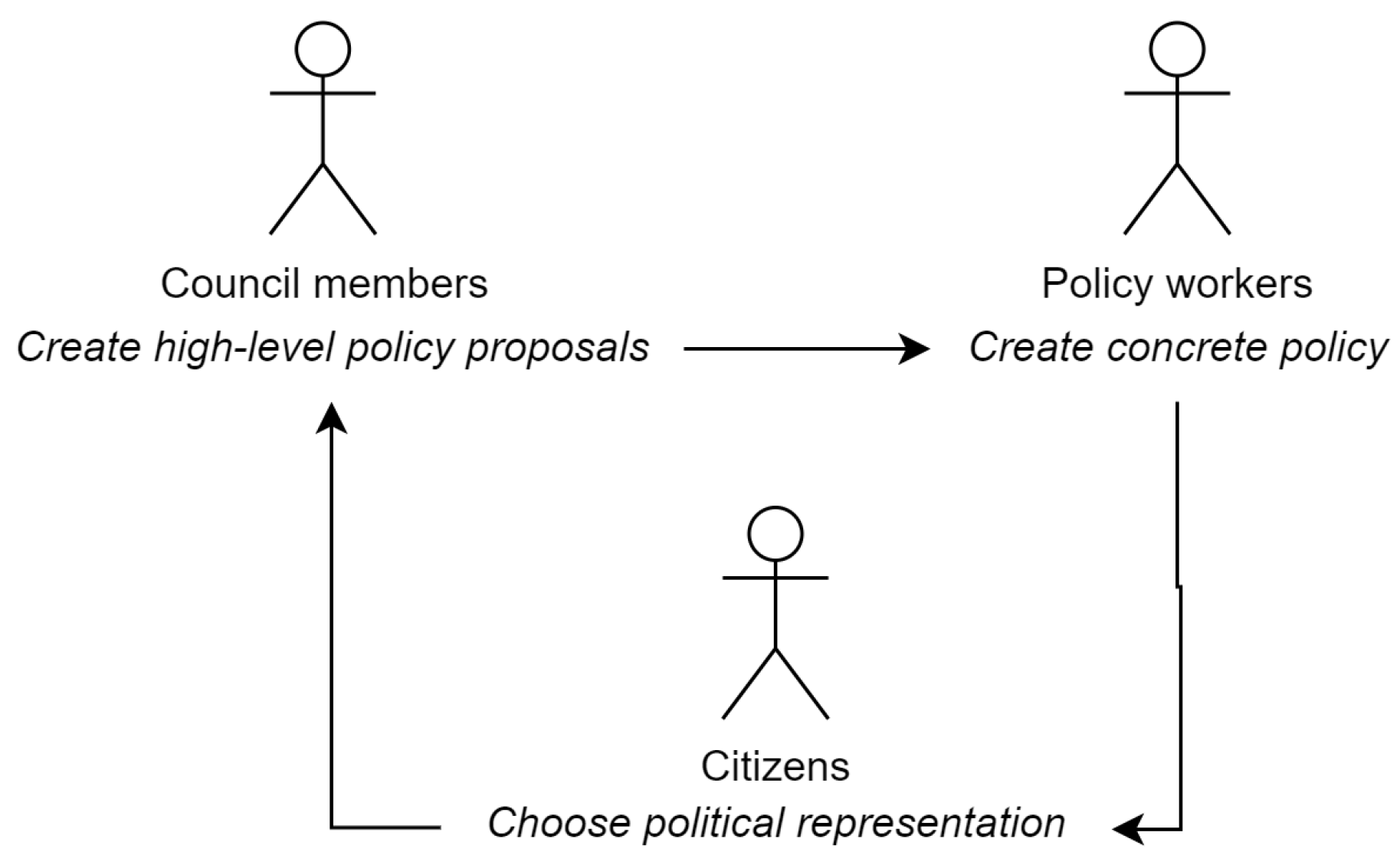

4.1. Work Tasks

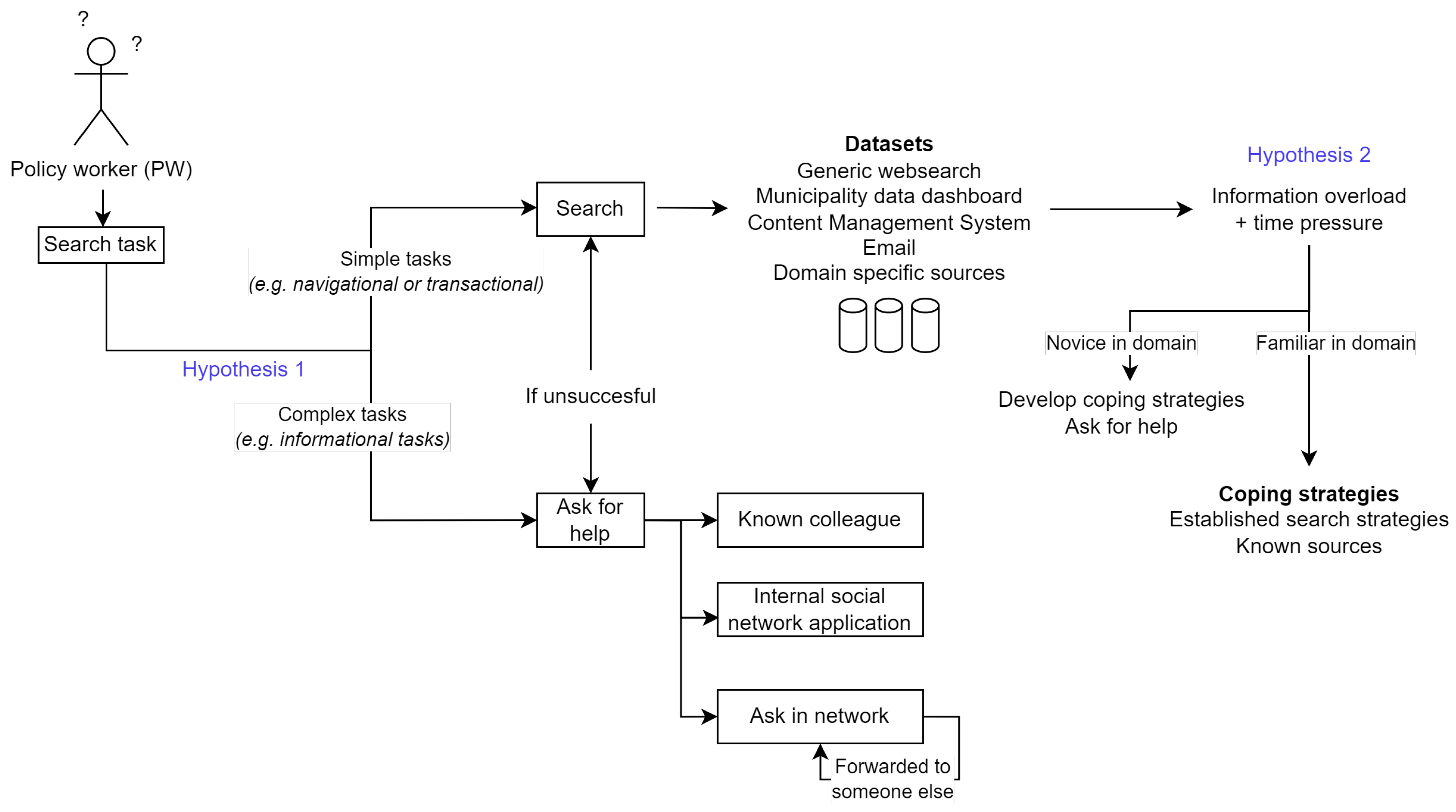

4.2. Search Tasks

4.3. Overview of Information Seeking

4.3.1. Role of Expert Search

- The expert knows where relevant data are located, both inside and outside the organisation;

- The expert can give context to the data, e.g., what is trustworthy and worthwhile;

- The expert can give advice on the work task;

- The expert can help avoid performing redundant work;

- The time spent actively searching is reduced.

4.3.2. Information Overload

4.4. Conclusions: How to Better Support PW Web Search Tasks

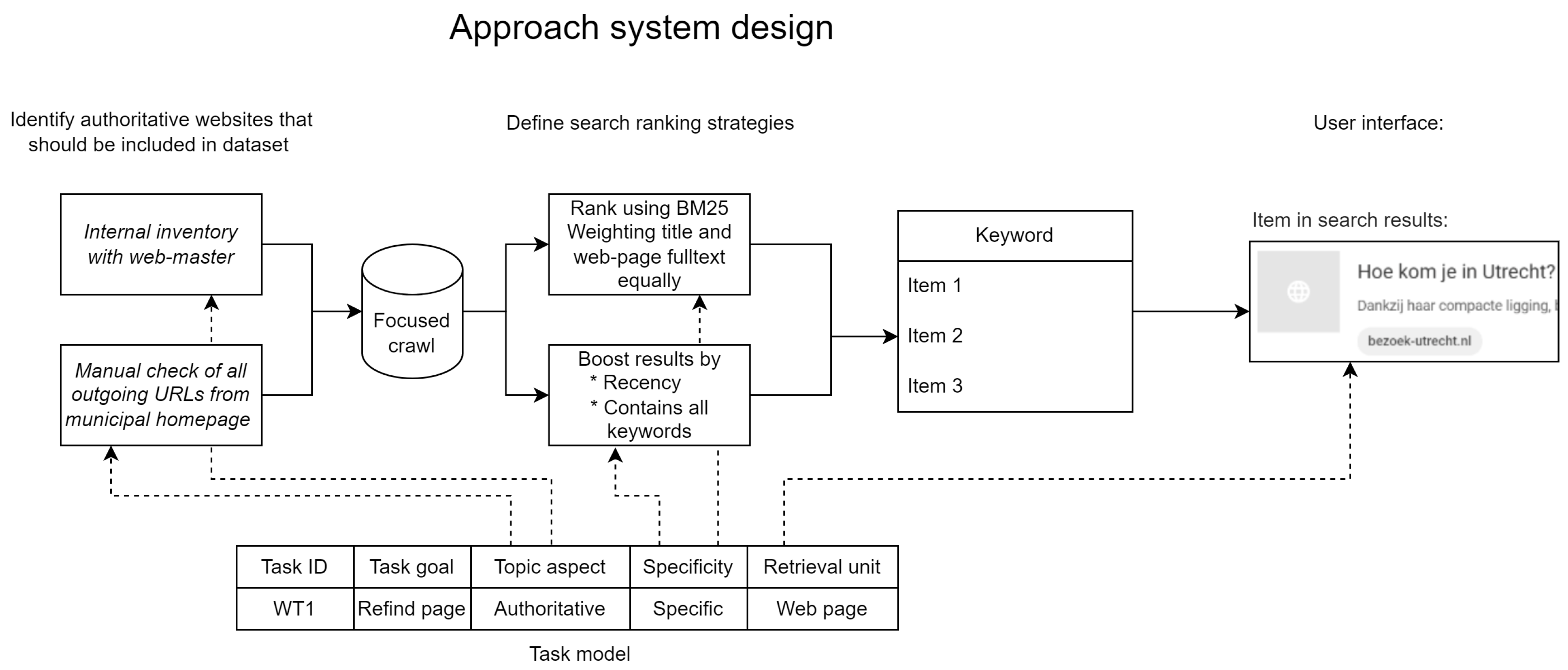

5. Search System Development

5.1. Focused Crawl

5.2. UI Elements

5.3. Ranking Functions

6. Method

6.1. Task Construction

6.1.1. Simple Tasks

6.1.2. Complex Tasks

6.2. Task Design

6.3. Participants

6.4. Data Preparation

6.5. Metrics

7. Results

7.1. Effectiveness

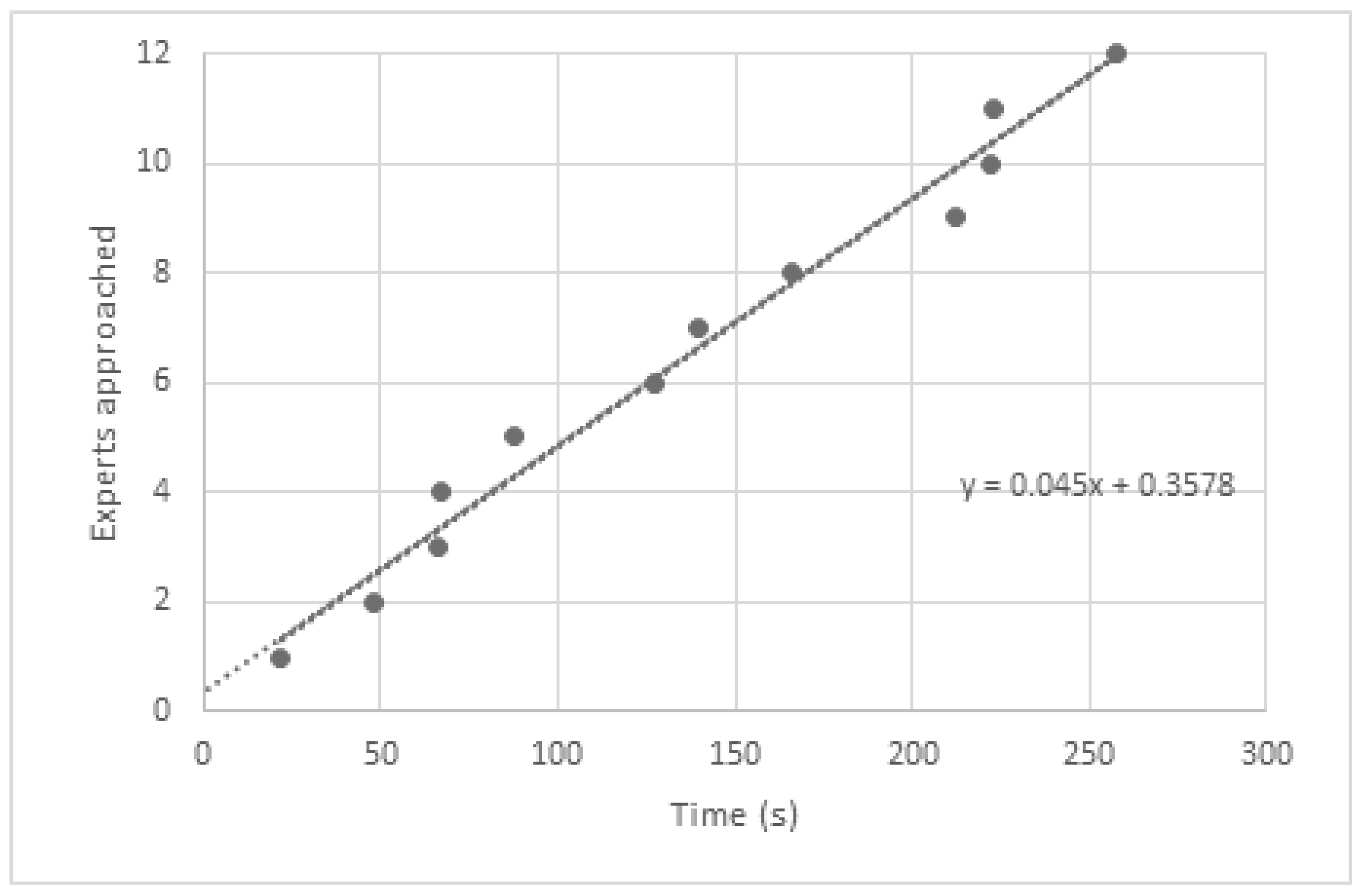

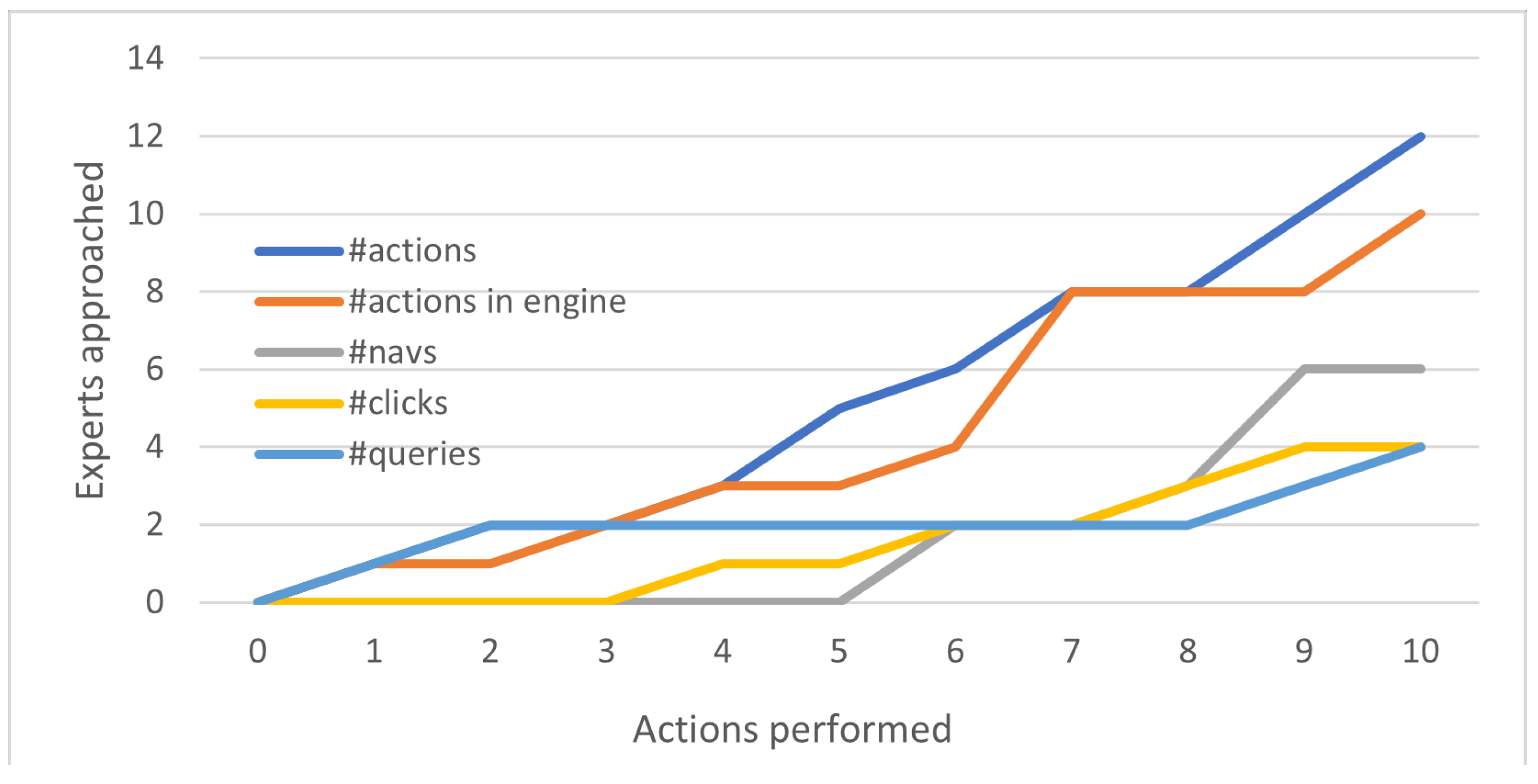

7.2. Efficiency

7.3. User Satisfaction

7.4. Search Behaviour

8. Discussion

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| ID | Task Description | Topic Aspect | Information Sources | Retrieval Unit | Complexity |
|---|---|---|---|---|---|
| WT1 | Monitor my domain | Topic background | Domain-dependent | Various | Hard |
| WT2 | Learn a new domain | Topic background | Domain-dep., Experts | Various | Hard |
| WT3 | Answer questions (by CMs) | Topic background, policy | web | Various | Easy |
| WT4 | Give advice on a project | Topic background, policy | web, domain-dep. | Various | Medium |
| WT5 | Research a complex problem | Topic background, policy | web, domain-dep. | Various | Hard |
| WT6 | Perform an internal service | Resource | Intranet, colleagues | Action | Easy |
| WT7 | Maintain/update information | Resource | Utrecht’s webpages, including its copies | Pages, Documents | Easy |

| ID | Task Objective | Task Motivation | (Web) Search Intention | Found n Times (PWs) | Found n Times (Non-PWs) |
|---|---|---|---|---|---|
| ST1 | Find a domain expert | Ask for advice | Informational/Advice | 5 | 3 |
| ST2 | Find out who works on x | Ask a request | Resource/Obtain | 7 | 2 |
| ST3 | Explore colleagues’ tasks | Avoid overlap in work | Informational/List | 5 | 3 |
| ST4 | Find a data coach | Find all relevant data | Informational/Advice | 4 | 1 |
| ST5 | Re-find most recent policy | Check compliance | Navigational | 4 | 2 |
| ST6 | Find structured data | Decision-making or giving advice | Informational/Download | 4 | 1 |
| ST7 | Quickly (re)find a fact | Answer council’s question | Informational/Closed directed | 6 | 0 |
| ST8 | Find intranet page | Find info or perform action | Resource/Interact | 0 | 2 |

| Task ID | Task Objective (informal description) | Channels (where do you search?) | Topic Aspect (what aspect of the query topic is important now?) | Retrieval Unit (what information do you need?) | Search Goal (specificity of need) |
|---|---|---|---|---|---|
| ST1 | Find a domain expert | WIW, colleagues | Expertise (knowledge) | Contact info, picture | Specific |
| ST2 | Find who works on x | WIW, colleagues | Expertise (tasks) | Contact info | Specific |
| ST3 | Explore colleagues’ tasks | WIW, colleagues | Expertise (tasks) | Contact info, contact tasks | Amorphous |
| ST4 | Find a data coach | Known people | Expertise (knowledge) | Contact info | Specific |
| ST5 | Find most recent policy | web, Desktop, iBabs | Policy | Policy document | Specific |
| ST6 | Find structured data | web, colleagues | Data | Dataset, meta-data | Amorphous |
| ST7 | Quickly (re)find a fact | web, mail | Information (Fact) | Fact | Specific |
| ST8 | Find intranet page | Intranet | Internal info/service | Fact or page | Specific |

Simple tasks (), per condition:

| Effectiveness | Correct | Approx | Wrong | Expert | Time Limit |
|---|---|---|---|---|---|
| Proposed | 100% | 0% | 0% | 0% | 0% |
| Other | 87% | 3% | 7% | 0% | 3% |

| Efficiency | Complete | Time to correct | Avg time | Time to expert | Actions to correct |
|---|---|---|---|---|---|
| Proposed | 100% | 27 s | 27 s | - | 2.6 |
| Other | 87% | 38 s | 46 s | - | 3.1 |

| Behaviour | #engines | #queries | #clicks | #navs | #total |
|---|---|---|---|---|---|
| Proposed | 1.00 | 1.19 | 1.04 | 0.38 | 2.61 |
| Other | 1.00 | 3.00 | 0.92 | 1.54 | 5.46 |

Complex tasks (), per condition:

| Effectiveness | Correct | Approx | Wrong | Expert | Time Limit |
|---|---|---|---|---|---|
| Proposed | 33% | 0% | 0% | 54% | 13% |
| Other | 27% | 7% | 20% | 33% | 13% |

| Efficiency | Complete | Time to correct | Avg time | Time to expert | Actions to correct |
|---|---|---|---|---|---|
| Proposed | 16% | 141 s | 148 s | 107 s | 4.5 |
| Other | 17% | 170 s | 186 s | 178 s | 8 |

| Behaviour | #engines | #queries | #clicks | #navs | #total |
|---|---|---|---|---|---|
| Proposed | 1.00 | 2.00 | 0.75 | 1.75 | 5.00 |
| Other | 1.33 | 3.00 | 3.00 | 2.00 | 8.00 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Schoegje, T.; de Vries, A.; Hardman, L.; Pieters, T. Improving the Effectiveness and Efficiency of Web-Based Search Tasks for Policy Workers. Information 2023, 14, 371. https://doi.org/10.3390/info14070371

Schoegje T, de Vries A, Hardman L, Pieters T. Improving the Effectiveness and Efficiency of Web-Based Search Tasks for Policy Workers. Information. 2023; 14(7):371. https://doi.org/10.3390/info14070371

Chicago/Turabian Style: Schoegje, Thomas, Arjen de Vries, Lynda Hardman, and Toine Pieters. 2023. "Improving the Effectiveness and Efficiency of Web-Based Search Tasks for Policy Workers" Information 14, no. 7: 371. https://doi.org/10.3390/info14070371

APA Style: Schoegje, T., de Vries, A., Hardman, L., & Pieters, T. (2023). Improving the Effectiveness and Efficiency of Web-Based Search Tasks for Policy Workers. Information, 14(7), 371. https://doi.org/10.3390/info14070371