Abstract

This work describes a learning tool named ARGeoITS that combines augmented reality with an intelligent tutoring system to support geometry learning. It reports a study conducted in Mexico to measure the impact on students’ learning and motivation of two different learning systems: an intelligent tutoring system with augmented reality (ARGeoITS) and a system with only augmented reality (ARGeo). To study the effect of the type of technology (ARGeoITS, ARGeo) and time of assessment (pre-, post-) on learning gains and motivation, we applied a 2 × 2 factorial design with 106 middle school students. Pretest and post-test questionnaires were administered to each group to determine the students’ learning gains, and an IMMS motivational survey was used to evaluate the students’ motivation. The results show that: (1) students who used the intelligent tutoring system ARGeoITS achieved a higher mean post-test score (7.47) than those who used ARGeo (6.83); and (2) both the ARGeoITS and ARGeo learning tools have a positive impact on students’ motivation. The research findings imply that intelligent tutoring systems that integrate augmented reality can be exploited as an effective learning environment to help middle–high school students learn difficult topics such as geometry.

1. Introduction

Learning geometry helps students develop their logical reasoning ability, which implies analyzing and elaborating arguments about spatial forms, shapes, and abstract math concepts [1]. However, geometry tends to be abstract, and many students encounter difficulties and show poor performance [2]. Some researchers claim that to improve students’ geometrical reasoning abilities, learning activities should sustain students’ motivation and adapt to their knowledge and psychological conditions [3,4].

Augmented reality (AR) is a technology that enhances the user’s actual physical surroundings by overlaying virtual elements such as images, videos, and virtual items [5]. AR technology might be useful both to facilitate the visualization of geometric shapes and to foster psychological states such as motivation towards learning. AR technology could help students easily understand basic geometry concepts, since it supplements their sensory perception of the real world through the addition of computer-generated content to the students’ environment in real-time [6]. Moreover, AR attracts students’ attention due to its interactive possibilities [7]. In recent years, many researchers have focused their works on AR applied to education [8,9,10,11], particularly in areas of study such as science, technology, engineering, and mathematics [12]. Although AR has proven to foster motivation and engagement, it does not always positively impact learning outcomes. Consequently, some researchers suggest integrating AR technology into learning environments with the purpose of guiding learning activities in accordance with the knowledge or psychological state of students [13]. An effective choice for this integration is the incorporation of AR into an intelligent tutoring system (ITS) [14]. Intelligent tutoring systems are computer-based systems that provide personalized learning support to the student, according to their current (or projected) performance or skill in a task [15,16]. ITSs provide personalized and interactive help so that the content dynamically adapts to the student, who can “learn by doing” in realistic and meaningful contexts while receiving feedback [17].

ITSs have been built using different artificial intelligence (AI) techniques such as neural networks, Bayesian networks, data mining, and fuzzy logic, and they have proven their efficiency in many different fields of knowledge [18]. For example, in the field of English learning, the work of [19] combines a neural network with a fuzzy system to adapt learning content. In [20], a fuzzy-logic- and constraint-based student model (CBM) for an intelligent tutoring system was developed to teach Turkish students how to use punctuation correctly. In the field of computer programming learning, Ref. [21] implemented an intelligent multi-agent with a Bayesian technique for updating the student model, estimating the learner’s level of knowledge, and adapting the learning content. In the area of chemistry and molecular biology, Ref. [22] applied a data mining technique for learners’ evaluation and adaptive feedback. In the study field of the human circulatory system, Ref. [23] worked with intelligent multi-agents, neural networks, and different sensors for learners’ knowledge evaluation, automatic facial expression recognition, and emotion measurement. Finally, in the field of mathematics, Ref. [24] presented a Bayesian network for classifying the learner’s affective states and adapting the feedback generation. In recent years, ITSs have incorporated new technological strategies to give their operation more emotional intelligence and simulate empathy. Two examples of this kind of strategy are the incorporation of emotion recognition [25] and the inclusion of motivational techniques such as gamification [26].

This study aims to assess the learning effectiveness and the impact on motivation of using an ITS with an AR interface for practicing the basic principles of geometry. The activities were designed according to the curricular objectives and subject matter of the Mexican middle school geometry curriculum. The interface was compared to a similar AR-based lesson that encompasses identical learning objectives and content but lacks an ITS.

The study is unique in that it researches the use of AR technology embedded into an ITS within a real school setting for practicing geometry at a middle school level, while also comparing the use of the AR interface with or without an ITS guide. The study can help us better understand whether AR technology is more effective when used in combination with a tutoring system.

This work is organized as follows: Section 2 presents the related works. Section 3 describes the architecture of the learning applications developed for the study. Section 4 explains the general structure of the methodology. Section 5 presents the results of the evaluation of academic performance and motivation. Finally, discussions and conclusions are described in Section 6.

2. Related Works

This section mentions the most relevant related works that support this research, which involves AR in education and ITSs enhanced with AR.

2.1. AR in Education

AR allows users to interact in real-time with virtual elements in real contexts. This distinctive aspect of AR technology provides new opportunities to promote learning and allows the deployment of constructive learning environments [27]. The rapid development of smart mobile devices has increased the number of AR applications in education, ranging from the use of AR for augmented books [28,29,30] to deploying inquiry-based learning activities [31,32,33] and fostering learning via exploration [34,35,36]. Regardless of how AR is used in developing learning activities, studies have demonstrated that AR allows students to learn new procedures in real conditions [37]; additionally, most of the studies claim that AR-enabled learning environments can enhance learning motivation, engagement, and learning effectiveness [38]. The use of AR in education can make activities fun and interesting for students, allow them to be monitored, increase their interaction with the learning tool, and help them understand abstract concepts at their own learning pace. Teachers, for their part, feel that AR enhances student creativity, participation, and attention to the academic work [39].

An application to help students learn programming using marker-based AR was developed by [40]. This study focused on usability, efficiency, flow experience, and user perception. The efficiency of learning emphasizes the levels of competitiveness of the students to successfully solve the proposed exercises. To measure the efficiency of student learning, the authors recorded the number of tasks successfully completed by the student during the session using the system. On the other hand, [41] presented three AR-based applications to help students understand and learn abstract concepts in probability and statistics. The authors examined the relationship between student performance and their attitudes when interacting with the application. They also evaluated the student learning gains when using their applications. The results showed that the applications are useful for improving students’ learning achievements and attitudes. An AR application for teaching geometry in middle schools was proposed by [42], where the students could create different segments by using two or more markers. They also made geometric solids using marker-based AR. The authors showed that by using AR in geometry lessons, they created conditions for positive emotional interaction between the student and the teacher.

Currently, most AR-based learning systems have two main limitations. First, they promote distraction probably due to the novelty effect [32,43,44]. Second, they provide instructions linearly with no feedback about any eventual mistake [45]. To alleviate these problems, some researchers are including scaffolding approaches in their AR learning environments [46,47], while others provide intelligent tutoring systems to guide students through the learning process in a more accurate way [45,48,49].

2.2. ITSs Supported with AR

The field of ITSs supported with AR has been scarcely explored, and most of the currently published work focuses on finding learning activities that may be convenient and relevant to be carried out in the new interactive environments, with possible help for students in relation to the activities that they perform or with basic scaffolding techniques [12,50].

Intelligent tutoring systems use artificial intelligence techniques to represent the knowledge that is essential in the teaching–learning process such as domain knowledge, pedagogical strategy knowledge, and knowledge about the student’s present state [51]. Instruction and learning support delivered using an ITS tend to provide higher learning gains than the classroom and static instruction. The effectiveness of ITSs is tied to their capabilities to adapt themselves to the characteristics of the students.

Some researchers claim that ITSs based on a desktop computer paradigm disconnect the real world and the tutoring instruction, thus degrading the interest and motivation of students [45,52]. In this regard, AR technology has been used in education or training as the main interface module to support the rest of the ITS components (student and pedagogical modules). For example, the Motherboard Assembly Tutor (MAT), designed by [48], integrates AR technology to provide an adaptive training experience for students. In their work, students learn the process of assembling components such as a RAM, CPU chip, or heat sink on a motherboard guided by the MAT tutor, which overlays the assembly parts on the motherboard using AR technology. The results showed that MAT users improved test scores by 25% and found the solution 30% faster compared with users who trained without the ITS. One limitation of this work was the small sample of participants. Similarly, the project ARTWILD proposed by [53] used the Generalized Intelligent Framework for Tutoring (GIFT) with Markov decision processes for inferences made by the intelligent tutoring system. Authors used Metaio Creator and Unity 3D to support military training tasks in environments that are not specially designed to support combat training. They developed a software architecture that provides a standard messaging interface between the apprentice, the sensor, the tutor, and the pedagogy module. Additionally, [54] presented IARTS, an ITS using AR working together with the assistance of a virtual tutor and an adaptive guide for solving math problems. The learning tool engages the student in a variety of interactive ways, enhancing the student with rich content unique to three-dimensional learning environments. The tutor combined with AR technology uses a head-mounted display to guide students through the cabling of a network topology by overlaying arrows and digital icons on the ports of the hardware. 
The messages are displayed only when the learner experiences difficulties, allowing learners to remain motivated by practicing themselves. Likewise, AdapTutAr is a project designed by [55] to be an adaptive task tutoring system that enables experts to record machine task tutorials via embodied demonstration and train learners with different AR tutoring contents adapting to each user’s characteristics. The system enables an expert to record a tutorial that can be adaptively learned by different workers. For this purpose, it uses a convolutional neural network for machine state prediction based on bounding boxes. The authors evaluated the accuracy of the low-level state recognition on a mockup machine with nine component types, and further evaluated the overall adaptation model via a remote user study in a VR environment.

The combination of augmented reality technology and fuzzy logic in an ITS has the potential to significantly enhance student learning in several ways by engaging different cognitive mechanisms. Firstly, AR can capture students’ attention by overlaying digital information on the real-world environment [56], while fuzzy logic can use rules and reasoning to adapt the presented information to the student’s level of understanding, making the content more engaging and relevant to individual learners [16]. Secondly, AR can provide visual and spatial cues that help students understand complex concepts by visualizing abstract ideas [56]. Fuzzy logic can also personalize the learning experience by adapting the content presentation based on the student’s prior knowledge and current performance [57]. Lastly, AR can provide students with opportunities to solve problems and make decisions in a real-world context [58]. Fuzzy logic can assist students in making informed decisions by analyzing data and providing feedback on the best course of action [59].

3. Materials and Methods

3.1. The Architecture of the Learning Tool

ARGeoITS is an intelligent tutoring system designed to promote an effective learning experience and allow middle school students to practice basic concepts of geometry. The system includes a fuzzy logic engine that adapts its tutoring to previous students’ performance, and it is enhanced with an AR-based interface to easily visualize geometric shapes required for the learning activities.

3.2. Architectural Model

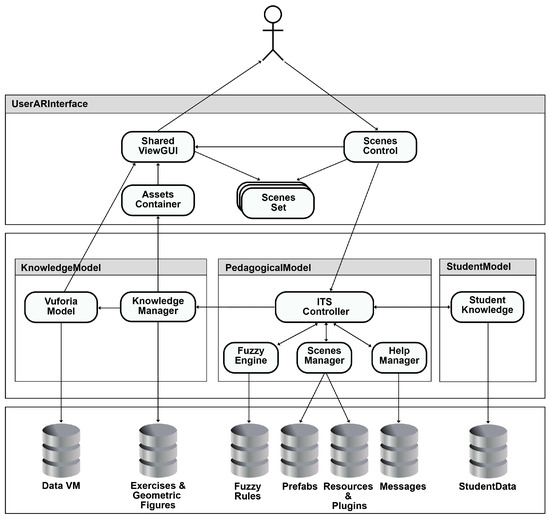

The architecture of the system follows the traditional architecture of an ITS, with 4 modules to encapsulate the main processes; these modules are called KnowledgeModel, PedagogicalModel, StudentModel, and UserARInterface. Figure 1 shows the distribution and connection of these modules. Each component within the architecture is briefly described as follows:

Figure 1. ARGeoITS architecture.

(1) UserARInterface is the main component through which the student interacts with the system. It is responsible both for building the scenes required for each student according to their knowledge level and for handling the interaction between the student and the system. Its internal components serve two purposes: showing the geometry study topics and letting the student select options that control the flow of tutor execution. It contains 4 components: ScenesControl, ScenesSet, SharedViewGUI, and AssetsContainer.

- SharedViewGUI. This component is a graphical user interface (GUI) that dynamically adapts to the screen of the mobile device. Its function is to present to the user the content of the tutor distributed in topics of study, exercises, and quizzes.

- ScenesSet. This component contains a set of scenes designed for each of the elements of the tutor. The user interacts with different scenes, among which are: entering the system, main menu of options, exercise settings, and instructions panel.

- ScenesControl. This component manages the scenes where the user interacts with the system. The ITS executes different processes based on the user’s actions.

- AssetsContainer. This component is a container for all the assets required to show the tutor’s exercises on the screen. It contains the digital elements that are used through the Vuforia markers (images and gameObjects).

(2) PedagogicalModel. This component is responsible for making decisions to manage the teaching–learning process. The general functionality of the tutor depends on the information produced by the interaction of the student with the tutor in real-time. The system adapts the instruction to the user’s needs by providing help or empathetic messages during its execution. This component interacts with the StudentModel and the KnowledgeModel. It contains 4 elements: ITSController, FuzzyEngine, ScenesManager, and HelpManager.

- ITSController. This is the main component of the pedagogical model of the tutor. The ITSController is the central manager of the system, and is responsible for instantiating objects and system variables, communicating with the models and the controllers, and managing the student interaction. It is the component that receives the options selected by the user in the interaction scenes and performs the corresponding actions. It is responsible for selecting the exercises students must perform based on the recommendations made by the Fuzzy Inference Engine, and it is responsible for providing feedback to the student. For example: when the user enters the system (LogIn), the ITSController calls on the StudentModel to configure the student model with the student information that is stored in the database.

- FuzzyEngine. This component implements the fuzzy logic that the tutor uses to infer how to adapt the pedagogical model to the student’s needs. It also controls the flow and the rules of the current exercise. The fuzzy system contains linguistic variables, fuzzy sets, and various labels to represent time, the number of correct answers, the number of errors, and how many times the user asks for help. The fuzzy rules for the inference system are stored separately in a database. We explain this component in detail later.

- ScenesManager. This component manages the scenes that are sent to the UserARInterface module. The component controls the flow of scenes from the main menu and accesses the databases of prefabs, resources, and plugins to configure the current exercise based on the user’s needs.

- HelpManager. This component manages help messages to solve the exercises, e.g., it shows the formula to calculate the area or volume of a geometric figure, it presents concepts used in the exercise, and it also selects motivational messages to be sent to the student when the fuzzy system determines the need for them based on user interaction.

(3) KnowledgeModel. This component represents the knowledge of the tutor’s domain (geometry), integrating the augmented reality assets. It is responsible for managing teaching material, such as (1) topics, (2) exercises required to learn how to compute base areas, prism volume, the sum of volumes of quadrangular prisms, and the identification of cuts in cylinders and cones, and (3) related questions including the statement, possible answers, correct answer, feedback, and level of difficulty. It contains 2 components: KnowledgeManager and VuforiaModel.

- KnowledgeManager. This component manages the knowledge stored in the teaching resources. This knowledge reaches the student in different stages of interaction with the tutor. The component accesses the Exercises and Geometric Figures database to send the assets to the Asset Container and also interacts with the VuforiaModel component to produce the resources needed to emulate the AR.

- VuforiaModel. This component is responsible for providing the UserARInterface with the assets to emulate the AR Camera and other Vuforia elements. The component accesses the DB DataModel to obtain the markers and scripts required to display augmented reality exercises on the mobile device.

(4) StudentModel. This component represents the student model, which contains personal and system usage information. It contains a component called StudentKnowledge.

- StudentKnowledge. This component is responsible for creating a representation of the student’s cognitive model. It exchanges student information with the ITSController, including their level of knowledge, topics visited, level of difficulty of visited exercises, number of unsuccessful attempts in each exercise, time spent in each exercise, and test scores. The component consults personal information in the student’s database and records the information from the current session, which is captured based on the student’s interaction with the tutor.

Through ScenesControl, the application collects the student’s performance in the current exercise. The ITSController receives this information and invokes StudentKnowledge to update the student’s profile, while the FuzzyEngine makes the fuzzy inferences. Once the fuzzy rules are executed, the complexity level of the following exercise is obtained, and the KnowledgeManager sends the elements to the AssetsContainer while the VuforiaModel loads the augmented objects on the SharedViewGUI; thus, both components update the user interface.

On the AR side, the main function of the VuforiaModel component is to load the augmented objects and send them to the SharedViewGUI to update the graphical user interface. VuforiaModel has a file in XML format with information about the recognition of markers, which are detected by the camera of the mobile device. Once the detected marker is validated as the one requested, the relevant information is displayed on the screen so that the student can solve the requested problem.
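The update cycle described in this section (performance collected, student profile updated, next difficulty inferred, next exercise selected) can be sketched as follows. This is a minimal illustration, not the ARGeoITS source: the class names mirror the architecture, but every method name, the `ExerciseResult` type, and the crisp placeholder logic inside `FuzzyEngine.next_level` are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ExerciseResult:
    """Hypothetical record of what ScenesControl collects for one exercise."""
    correct: int
    mistakes: int
    seconds: float
    help_requests: int


@dataclass
class StudentKnowledge:
    """Keeps the session history and the current difficulty level."""
    history: list = field(default_factory=list)
    level: str = "normal"

    def record(self, result, level):
        self.history.append(result)
        self.level = level


class FuzzyEngine:
    """Placeholder: crisp stand-in rules, NOT the 81 fuzzy rules of the tutor."""

    def next_level(self, result):
        if result.mistakes > result.correct or result.help_requests > 3:
            return "weak"
        return "hard" if result.mistakes == 0 else "normal"


class KnowledgeManager:
    """Returns an identifier for an exercise of the requested difficulty."""

    def exercise_for(self, level):
        return f"exercise[{level}]"


class ITSController:
    """Coordinates the student model, inference engine, and knowledge model."""

    def __init__(self, student, engine, knowledge):
        self.student = student
        self.engine = engine
        self.knowledge = knowledge

    def on_exercise_finished(self, result):
        level = self.engine.next_level(result)      # infer the next difficulty
        self.student.record(result, level)          # update the student profile
        return self.knowledge.exercise_for(level)   # pick the next exercise


student = StudentKnowledge()
controller = ITSController(student, FuzzyEngine(), KnowledgeManager())
next_exercise = controller.on_exercise_finished(ExerciseResult(4, 0, 60.0, 0))
```

Keeping the inference engine behind a single `next_level` call is one way to let the same controller drive either an adaptive tutor or a fixed, linear sequence of exercises.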

3.3. The Fuzzy Inference System

Learning and assessing a student’s level of knowledge are not simple jobs in general, because they are influenced by elements that cannot be readily observed and assessed, particularly in ITSs, where there is no real-life interaction between a teacher and students [60]. Fuzzy logic is one possible strategy to deal with uncertainty. In real-world issues, fuzzy logic is utilized to deal with the uncertainty generated by imprecise and missing data, as well as human subjectivity. The application of fuzzy logic in a learning environment can improve the learning environment by allowing intelligent decisions about learning content to be sent to the learner, as well as personalized feedback to be offered to each learner. Fuzzy logic may also diagnose a learner’s level of understanding of a topic and forecast the learner’s level of understanding of related concepts.

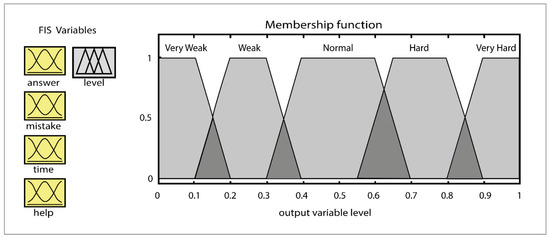

In ARGeoITS, the Fuzzy Engine component handles the personalization in the system. The engine uses 4 fuzzy input variables: the number of right answers, the number of mistakes made by the student, the number of assistances requested, and the time dedicated to completing the last exercise. These variables represent the state of the student solving the exercise, with the values low, regular, or high. The output variable level is computed by 81 fuzzy rules that consider the total of correct answers, the total errors made while solving the exercises, the time to solve the current exercise, and the amount of help requested to solve the problem. The possible values of output level are very weak, weak, normal, hard, and very hard. Figure 2 shows an example of fuzzy sets.

Figure 2. Fuzzy set example of the answer, mistake, time, help, and level.

The linguistic variable answer has a range between 1 and 20. It increases by two with each exercise. The answer fuzzy sets are low, regular, and high. The linguistic variable mistake is in the range of 0 and 10 and includes fuzzy sets low, regular, and high. The linguistic variable time ranges from 1 to 150 s per exercise; it includes the fuzzy sets slow, normal, and fast. The linguistic variable help ranges from 0 to 15 and includes the fuzzy sets low, regular, and high.
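To make the fuzzification step concrete, the sketch below maps a raw input value to membership degrees in three fuzzy sets. The variable ranges are taken from the text; the shapes and breakpoints (shoulder and triangular functions anchored at the minimum, midpoint, and maximum of each range) are assumptions for illustration, since the actual membership functions are only shown in Figure 2. For simplicity, the labels low, regular, and high are used for every variable, including time.

```python
def falling(x, a, b):
    """Membership 1 up to a, decreasing linearly to 0 at b."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    return (b - x) / (b - a)


def rising(x, a, b):
    """Membership 0 up to a, increasing linearly to 1 at b."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def triangle(x, a, b, c):
    """Membership 0 at a, peak of 1 at b, back to 0 at c."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Ranges come from the text; the breakpoints below are ASSUMED, not the authors'.
RANGES = {"answer": (1, 20), "mistake": (0, 10), "time": (1, 150), "help": (0, 15)}


def fuzzify(variable, value):
    """Return the membership degree of value in each of the three sets."""
    lo, hi = RANGES[variable]
    mid = (lo + hi) / 2
    value = min(hi, max(lo, value))  # clamp out-of-range readings
    return {
        "low": falling(value, lo, mid),
        "regular": triangle(value, lo, mid, hi),
        "high": rising(value, mid, hi),
    }


degrees = fuzzify("mistake", 2.5)
```

With these assumed breakpoints, 2.5 mistakes is half "low" and half "regular", which illustrates how one reading can belong to two sets at once.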

Each of the four fuzzy input variables is normalized to a range of values between 0 and 1 to obtain the next level of difficulty. The output variable is assessed linguistically using one of the membership functions at the end of the current exercise. The level of difficulty obtained can belong to more than two membership functions. The membership function that has the maximum membership results in the appropriate fuzzy linguistic value to represent the difficulty level of the following exercise. The level of difficulty assigned to the next exercise is very weak, weak, normal, hard, and very hard, depending on whether the score in the last exercise is under 20%, 10%, 30%, 55%, or 80%, respectively.
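The normalization and maximum-membership selection described above can be sketched as follows. The five output labels are those of the text; the evenly spaced triangular sets on [0, 1] and their width are assumptions for illustration, and the crisp value passed to `pick_level` stands for whatever output the inference step produced.

```python
def normalize(value, lo, hi):
    """Scale value from [lo, hi] to [0, 1], clamping out-of-range input."""
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))


# ASSUMED: five evenly spaced triangular sets for the output variable "level".
LEVEL_PEAKS = {
    "very weak": 0.0,
    "weak": 0.25,
    "normal": 0.5,
    "hard": 0.75,
    "very hard": 1.0,
}
WIDTH = 0.25  # distance from a peak at which membership drops to zero


def level_memberships(x):
    """Membership degree of x in each output set."""
    return {
        label: max(0.0, 1.0 - abs(x - peak) / WIDTH)
        for label, peak in LEVEL_PEAKS.items()
    }


def pick_level(x):
    """Choose the label whose membership degree is largest."""
    degrees = level_memberships(x)
    return max(degrees, key=degrees.get)


time_normalized = normalize(75.5, 1, 150)  # time range from the text
chosen = pick_level(0.6)
```

Here 0.6 has non-zero membership in both "normal" and "hard"; the maximum-membership rule resolves the overlap in favor of "normal".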

We used the AForge.NET 2.0 library to define the fuzzy sets [61]. An example of a fuzzy rule is as follows:

- IF (answer is high) and (time is low) and (mistake is regular) and (help is low) THEN level is hard
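As a rough sketch of how such a rule can be evaluated, the example below enumerates the antecedent combinations (three labels for each of four inputs gives the 81 rules mentioned earlier) and computes the firing strength of the example rule. Using the minimum to combine the clauses joined by "and" is an assumption (a common choice of t-norm), and the membership degrees are hypothetical values, not data from the study.

```python
from itertools import product

LABELS = ("low", "regular", "high")
INPUTS = ("answer", "time", "mistake", "help")

# One label per input variable: 3 ** 4 = 81 possible antecedents.
antecedents = list(product(LABELS, repeat=len(INPUTS)))

# The example rule from the text, encoded as a plain dictionary.
rule = {
    "if": {"answer": "high", "time": "low", "mistake": "regular", "help": "low"},
    "then": "hard",
}


def firing_strength(rule, memberships):
    """Combine the clause degrees with min (ASSUMED operator for 'and')."""
    return min(memberships[var][label] for var, label in rule["if"].items())


# Hypothetical membership degrees after one finished exercise.
memberships = {
    "answer": {"low": 0.0, "regular": 0.2, "high": 0.8},
    "time": {"low": 0.7, "regular": 0.3, "high": 0.0},
    "mistake": {"low": 0.4, "regular": 0.6, "high": 0.0},
    "help": {"low": 1.0, "regular": 0.0, "high": 0.0},
}

strength = firing_strength(rule, memberships)  # degree to which "level is hard"
```

The weakest clause (mistake is regular, at 0.6) limits how strongly the rule supports the conclusion.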

For the purposes of this study, the developers implemented a simplified version of ARGeoITS named ARGeo [62]. Both applications covered the same learning topics and offered students the same activities. Fuzzy logic was chosen to implement the inference engine of the ITS because it is simple and efficient when working on reasoning problems, especially in computer programming and mathematics [63,64]. As the field of study of mathematics is based on numbers, geometric spaces, and patterns, fuzzy logic reasoning represents a good choice for problem solving and decision making in this field [18]. Additionally, fuzzy systems require little processing power, and thus performance is not affected when the system is deployed on mobile devices. The Fuzzy Inference Engine of ARGeoITS is used to suggest the next exercise for each student to solve according to their previous performance. In contrast, ARGeo followed a linear workflow with no learning material adaptation to control the learning activities presented to students.

4. Method

The main purpose of this research study was to assess the impact of intelligent tutoring systems enhanced with augmented reality technology on students’ motivation and learning gain regarding basic principles of geometry. To this end, two learning tools were designed: ARGeo [65] and ARGeoITS [66], which are available for download at https://argeo-apps.web.app/ (accessed on 20 December 2022) [67]. A video at https://youtu.be/IBKfJ4OstJ4 (accessed on 20 December 2022) briefly explains how to work through the exercises included in ARGeo and ARGeoITS. The former is an image-based AR application that allows students to practice the basic principles of geometry. The latter is ARGeo enhanced with an intelligent tutoring system based on a fuzzy logic engine to guide students in their learning activities. An experimental design of two groups with random selection was used to compare the two learning tools on participants’ motivation and the acquisition of basic concepts of geometry. Specifically, the present study poses the following three research questions:

RQ1: Is there any difference in students’ learning outcomes depending on which of the two learning applications they used?

RQ2: Is there any difference in students’ motivation toward the instructional material depending on which of the two proposed learning applications they used?

RQ3: Are there any differences in the four factors that measure student motivation depending on which of the two proposed teaching scenarios was used?

4.1. Participants

To select the students who participated in this evaluation, we went to different schools (public and private) in our city, considering different academic backgrounds according to the type of school.

The experiment involved 106 (grade 9) middle school students (age 13–15, M = 14.07, SD = 0.707). Students were randomly assigned to the control or experimental groups, with 24 females and 29 males in the control group, and 22 females and 31 males in the experimental group. The students in the control group used the ARGeo learning tool while the students in the experimental group used ARGeoITS. The data collected from five students were removed from the sample due to missing values (they did not participate in the post-test evaluation). A text document was provided to students and their parents outlining the purpose of the research and their right to withdraw at any moment. Informed consent was obtained from every participant. Figure 3 illustrates two students interacting with the learning tool and Figure 4 shows two students solving volume of regular prism exercises.

Figure 3. Students using the learning tool.

Figure 4. Students solving volume of regular prism exercises.

4.2. Measurement Instruments

4.2.1. Pretest and Post-Test Questionnaires

Two questionnaires (pretest and post-test) were designed by the researchers to measure students’ knowledge of basic geometry concepts before and after the learning activity. Middle school teachers who participated in this study examined and validated both pretest (see Appendix A) and post-test questionnaires (see Appendix B). Pretest and post-test instruments consisted of 8 multiple-choice questions, each worth 1.25 points. Next, we present an example of a question from these questionnaires:

What is the volume of a square prism considering that each side measures 5 cm and has a height of 10 cm?

- 150 cm³

- 250 cm³

- 225 cm³

- 200 cm³
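For reference, and assuming that "each side" refers to the sides of the square base, the volume asked for in this sample question is the base area times the height:

```latex
V = s^{2} h = (5\,\text{cm})^{2} \times 10\,\text{cm} = 250\,\text{cm}^{3}
```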

4.2.2. Motivational Survey

We used the IMMS survey to measure the impact of the AR application on motivation toward the instructional material. The goal of this instrument is to assess how motivated students are for a particular course [68]. IMMS measures four motivation factors: Attention, Relevance, Confidence, and Satisfaction (ARCS). It contains 36 questions with 5-point Likert scale items, so the possible total score lies between 36 and 180. In this study, total scores ranged from 111 to 168. An example of a question from this questionnaire is:

The way the material was presented using augmented reality technology helped me keep my attention.

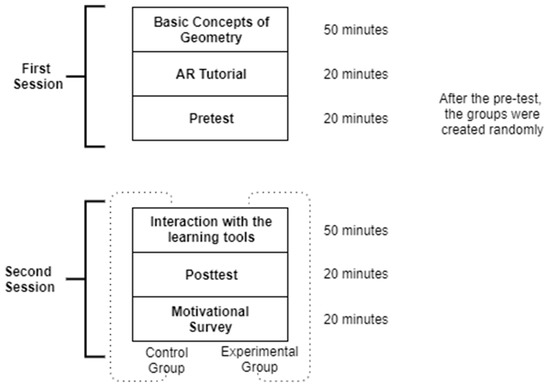

4.3. Procedure

The experiment was conducted in two sessions. During the first session, all students received a 50 min lesson on the basic principles of geometry. Later, all students received a 20 min tutorial on augmented reality, covering how it works and how to use the markers correctly. For the final activity of the first session, the students were given 20 min to respond to the pretest.

During the second session, each student received a tablet with their respective learning tool: ARGeo for students allocated to the control group and ARGeoITS for students allocated to the experimental group. The group of researchers provided both groups with instructions to carry out the intervention. The students interacted with the learning tools for 50 min. During this time, the students solved the geometry problems displayed on their respective tablets. Whenever there were doubts or technical issues with the learning tools, the research group was available to offer support and help students continue with the interaction. Later, the students answered a 20 min post-test and a 20 min survey to measure their learning and motivation levels, respectively. Figure 5 shows the steps of the entire intervention.

Figure 5. Steps of the intervention.

5. Results

5.1. Research Question 1

Is there any difference in students’ learning outcomes depending on which of the two learning applications they used?

The Shapiro–Wilk test was applied to examine the normality of the pretest data. The result (W = 0.964, p-value = 0.109) does not reject the hypothesis of normality, so the data can be treated as normally distributed. Therefore, parametric tests can be used for the rest of the analysis. Table 1 shows the descriptive analysis of students’ pretest scores for both the control (M = 5.79, SD = 1.489) and the experimental (M = 5.64, SD = 1.688) groups.

Table 1. Descriptive statistics of pretest data.

An ANOVA test was carried out to compare the background knowledge of the students who used the ARGeo learning tool (control group) with that of the students who used the ARGeoITS learning tool (experimental group). The test showed no statistically significant difference between the groups (F(1, 106) = 0.182, p-value = 0.670). Therefore, it can be assumed that both groups had similar background knowledge of the basic concepts of geometry.
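The between-group comparison described above can be sketched with a hand-computed one-way ANOVA. This is a minimal illustration using only the Python standard library, not the authors' analysis script; the function name `one_way_anova` and the score lists are hypothetical, and the p-value (which requires the F distribution) is left to a statistics package.

```python
from statistics import mean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of each score around its own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical pretest scores, for illustration only (not the study's data).
control = [5.0, 6.5, 7.0, 4.5, 6.0]
experimental = [5.5, 6.0, 6.5, 5.0, 7.5]

f_stat, df_b, df_w = one_way_anova(control, experimental)
print(f"F({df_b}, {df_w}) = {f_stat:.3f}")
```

A small F value means the difference between group means is small relative to the spread within groups, which is the pattern expected when two groups start from similar background knowledge.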

The Shapiro–Wilk test was conducted to examine the normality of the data obtained from the post-test. The result (W = 0.962, p-value = 0.090) gives no evidence against normality (p > 0.05), so the data can be treated as normally distributed. Therefore, parametric tests can be used for the rest of the analysis.

Table 2 shows the descriptive analysis of both groups’ post-test scores. As we can observe, students in both groups (control and experimental) show an increment in the outcome from the pretest to the post-test; the mean in both groups is higher in the post-test.

Table 2. Descriptive statistics of post-test data.

An ANOVA test was conducted to compare the main effect of the type of experiment (control, experimental) on post-test scores. The analysis revealed a statistically significant difference between the groups (F(1, 106) = 4.752, p-value = 0.032). The mean achievement score was higher in the experimental group (M = 7.47, SD = 1.601) than in the control group (M = 6.83, SD = 1.424), indicating a better learning outcome for students in the experimental group.
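To make the pretest-to-post-test change explicit, the reported group means can be subtracted directly. The sketch below uses only the means quoted in this section; the dictionary layout and the helper `mean_gain` are illustrative names, and it assumes the same students took both tests, so that the difference of the group means equals the mean individual gain.

```python
# Group means as reported in the text (pretest and post-test).
reported_means = {
    "control (ARGeo)": {"pre": 5.79, "post": 6.83},
    "experimental (ARGeoITS)": {"pre": 5.64, "post": 7.47},
}


def mean_gain(pre, post):
    """Difference between post-test and pretest means, rounded to 2 decimals."""
    return round(post - pre, 2)


gains = {
    group: mean_gain(m["pre"], m["post"]) for group, m in reported_means.items()
}

for group, gain in gains.items():
    print(f"{group}: mean gain = {gain:.2f} points")
```

Under that assumption, the mean gain is 1.04 points for the control group and 1.83 points for the experimental group.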

Table 3 shows the results of the univariate ANOVA test comparing post-test scores by the type of school the students attended.

Table 3. ANOVA test of post-test data and type of school.

This ANOVA test compared the post-test scores of two groups of students defined by the type of school they attended (public or private). The results showed a statistically significant difference between the two groups (F(1, 106) = 6.675, p-value = 0.011). Students from private schools (M = 7.62, SD = 1.396) performed significantly better in the post-test than students from public schools (M = 6.84, SD = 1.566).

5.2. Research Question 2

Is there any difference in students’ motivation toward the instructional material depending on which of the two learning applications proposed they used?

The minimum and maximum scores of the IMMS instrument [68] are 36 and 180, respectively, since the instrument has 36 items and each item was scored on a five-point categorical scale. The total scores in the control group ranged from 111 to 159, and the scores in the experimental group ranged from 128 to 168. These results indicate that students were moderately motivated when the module was taught within the AR-based learning environment and more motivated when it was taught within the ITS-enhanced AR learning environment.
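The score range stated above follows from simple arithmetic, and the same arithmetic relates total scores to per-item means on the 1 to 5 scale. The following sketch assumes items are coded 1 to 5 and that any reverse-worded items have already been recoded; the function names are illustrative.

```python
N_ITEMS = 36                  # number of IMMS items, as stated in the text
SCALE_MIN, SCALE_MAX = 1, 5   # assumed coding of the five-point scale


def score_range(n_items=N_ITEMS, lo=SCALE_MIN, hi=SCALE_MAX):
    """Smallest and largest possible total score."""
    return n_items * lo, n_items * hi


def per_item_mean(total, n_items=N_ITEMS):
    """Convert a total score into a mean rating per item."""
    return total / n_items


print(score_range())  # (36, 180)

# Observed extremes reported in the text for each group.
for label, total in [
    ("control min", 111),
    ("control max", 159),
    ("experimental min", 128),
    ("experimental max", 168),
]:
    print(f"{label}: total = {total}, per-item mean = {per_item_mean(total):.2f}")
```

Expressed per item, the observed totals span roughly 3.08 to 4.42 in the control group and 3.56 to 4.67 in the experimental group.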

The Shapiro–Wilk test was used to examine the normality of the difference in motivation between the two teaching scenarios (W = 0.960, p-value = 0.079). Since the test gives no evidence against normality (p > 0.05), it can be assumed that the difference in motivation is normally distributed [69]. Therefore, parametric tests can be used for the rest of the analysis.

A two-factor ANOVA test was conducted to compare students’ motivation scores by type of experiment. The results indicate a statistically significant difference in motivation between students from the experimental group (M = 4.36, SD = 0.346) and students from the control group (M = 4.24, SD = 0.032), F(1, 106) = 5.70, p-value = 0.019. According to these results, students using ARGeoITS were more motivated towards the learning activity than those using ARGeo.

5.3. Research Question 3

Are there any differences in the four factors that measure student motivation depending on which of the two teaching scenarios proposed were used?

To measure the internal consistency of the motivation items, Cronbach’s alpha coefficient was calculated for the items belonging to each IMMS motivation factor. For the internal reliability of the statements belonging to the same factor to be considered satisfactory, Cronbach’s alpha should be greater than 0.7 [70]. The alpha values obtained for each factor are at a satisfactory level of reliability (α > 0.7), as shown in Table 4.
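Cronbach's alpha for a set of k items is α = k/(k − 1) · (1 − Σ s²ᵢ / s²ₜ), where s²ᵢ is the variance of item i and s²ₜ is the variance of the respondents' total scores. A minimal sketch of this standard formula follows; the function name `cronbach_alpha` and the response matrix are hypothetical and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row per respondent,
    one column per item), using sample variances."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")

    items = list(zip(*responses))                       # columns = items
    item_var_sum = sum(variance(col) for col in items)  # sum of item variances
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical answers of five students to three items of one factor.
attention_items = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

alpha = cronbach_alpha(attention_items)
print(f"alpha = {alpha:.3f}, satisfactory: {alpha > 0.7}")
```

The closer the items of a factor move together across respondents, the larger the total-score variance is relative to the item variances, and the closer alpha gets to 1.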

Table 4. Reliability analysis for each ARCS factor of the IMMS.

Table 5 shows the descriptive statistics for all subscales of each factor from the IMMS motivational survey to determine the motivational impact in both groups of students. For the four scales that describe motivation toward the learning activities, the highest mean values were attained by students in the experimental group.
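Subscale means of the kind summarized above are obtained by averaging each student's ratings over the items that belong to a factor. The sketch below shows the mechanics only: the item-to-factor mapping is a hypothetical eight-item placeholder, whereas the real assignment of the 36 items to the four ARCS factors is defined by the IMMS instrument [68].

```python
from statistics import mean

# Hypothetical mapping of item numbers to ARCS factors (placeholder only;
# the actual IMMS assigns its 36 items to the four factors).
FACTOR_ITEMS = {
    "Attention": [1, 2],
    "Relevance": [3, 4],
    "Confidence": [5, 6],
    "Satisfaction": [7, 8],
}


def subscale_means(responses, factor_items=FACTOR_ITEMS):
    """Mean rating per factor for one student.

    responses: dict mapping item number -> rating on the 1-5 scale.
    """
    return {
        factor: mean(responses[i] for i in items)
        for factor, items in factor_items.items()
    }


# Hypothetical ratings from one student.
student = {1: 5, 2: 4, 3: 3, 4: 3, 5: 4, 6: 5, 7: 2, 8: 4}
for factor, value in subscale_means(student).items():
    print(f"{factor}: {value:.2f}")
```

Averaging these per-student values within each group gives group-level subscale means that can then be compared factor by factor.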

Table 5. Descriptive statistics for all subscales of IMMS results.

A univariate ANOVA test was carried out to compare motivation results between the control group and the experimental group for each factor measured by the IMMS survey. The results indicate that for the Attention and Confidence motivation factors, there was a statistically significant difference in favor of the experimental group (see Table 6). This suggests that the students in the experimental group were more motivated than those in the control group on these two factors.

Table 6. Univariate ANOVA results for each factor of the IMMS survey.

6. Discussions and Conclusions

In this study, we examined whether learning activities guided by an intelligent tutoring system enhanced with an augmented reality interface improve (1) students’ learning outcomes and (2) their motivation toward the learning activities, compared with an equivalent application that does not include the ITS module. The main findings and their implications are discussed below.

Regarding the learning effectiveness of both applications, the statistical analysis of the pretest and post-test scores showed that the students who used ARGeoITS scored significantly higher than the students who used ARGeo. This result is consistent with the findings of previous studies [45,48]. The personalization of the learning activities suggested to students, based on participants’ present knowledge state [46,71], participants’ actions [72], the psychological states of students [73], and a comparison of participants’ behavior with the behavior of an expert [74], has a positive impact on learning outcomes [41,75]. Further studies are required to understand the factors that contribute to the impact of ITS on learning outcomes. In this sense, mixed-method studies including qualitative information could be useful.

The quantitative results of this research study also showed that the use of AR technology in both learning environments studied had a positive effect on the motivation toward learning activities [76,77]. This was an expected result since motivation has been highlighted in the educational area as an affordance of this emerging technology [11,78,79]. Moreover, when comparing the impact of two AR-based learning applications on students’ motivation toward learning activities, we identified that students who were guided by the intelligent tutoring system enhanced with AR were significantly more motivated than those that were using the learning application without the ITS.

The support of the ITS, which presents students with exercises matched to their skills and knowledge, is essential for them to improve their spatial skills step by step within the field of geometry. A tool driven by an inference engine that infers or forecasts the next exercises may give students greater confidence and motivation, which could indicate the importance of managing the student’s learning progress according to how and when they solved the proposed exercises. Additionally, the difference in motivation may be explained by the personalized guidance and monitoring that the ITS provides to the student. However, further studies are necessary to confirm this hypothesis.

Based on the results of this study, the ITS application enhanced with AR technology was more effective than the AR-based learning application without the ITS module [48], both in promoting students’ knowledge of the basic principles of geometry and in fostering motivation toward the learning activities.

Despite the above-mentioned findings, this work has some limitations. First, the assessment involved short-term retention of the basic principles of geometry. Further work should include long-term evaluations to verify that the knowledge has been effectively acquired. Second, biases may have occurred during data collection, since the data were self-reported and we did not independently verify their accuracy. Third, this study evaluated a small sample of students. A larger sample is needed to analyze the impact of the tool in different contexts. For future work, we plan to extend the framework to cover intersections with 3D figures such as cones or cylinders and to examine in more depth the qualitative impact of the tool on students’ learning.

Author Contributions

All authors contributed to the study, conceptualization, and methodology of this work; the software was developed by A.U.-P.; the validation and the formal analysis by M.B.I. and A.U.-P.; the investigation and resources by M.B.I., R.Z.-C., M.L.B.-E. and L.-M.G.-B.; the writing—original draft preparation by A.U.-P. and M.B.I.; the writing—review and editing by all the authors; the supervision by M.B.I., R.Z.-C. and M.L.B.-E. All authors have read and agreed to the published version of the manuscript.

Funding

The work described in this paper was fully supported by a scholarship from CONACYT (Consejo Nacional de Ciencia y Tecnología) in México and a grant from PRODEP (511-6/2019-8474). This work was also co-funded by the Madrid Regional Government, Spain, through the Project e-Madrid-CM (P2018/TCS-4307) and by the Spanish Ministry of Science, Innovation, and Universities through Project Smartlet (TIN2017-85179-C3-1-R). The publication is part of the I+D+i project “H2O Learn” (PID2020-112584RB-C31). These three projects have also been co-funded by the Structural Funds (FSE and FEDER), Spain.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We appreciate the support provided by teachers, principals, and students from middle schools who participated in this experiment.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Pretest Questionnaire

Appendix B. Posttest Questionnaire

References

- Kusumah, Y.S.; Martadiputra, B.A.P. Investigating the Potential of Integrating Augmented Reality into the 6E Instructional 3D Geometry Model in Fostering Students’ 3D Geometric Thinking Processes. Int. J. Interact. Mob. Technol. 2022, 16.

- Halat, E.; Jakubowski, E.; Aydin, N. Reform-Based Curriculum and Motivation in Geometry. EURASIA J. Math. Sci. Technol. Educ. 2008, 4, 285–292.

- Idris, N. Teaching and Learning of Mathematics: Making Sense and Developing Cognitives Abilities; Utusan Publications & Distributors Sdn.Bhd.: Kuala Lumpur, Malaysia, 2006.

- Alfat, S.; Maryanti, E. The Effect of STAD Cooperative Model by GeoGebra Assisted on Increasing Students’ Geometry Reasoning Ability Based on Levels of Mathematics Learning Motivation. J. Phys. Conf. Ser. 2019, 1315, 012028.

- Azuma, R.; Baillot, Y.; Behringer, R.; Feiner, S.; Julier, S.; MacIntyre, B. Recent Advances in Augmented Reality. IEEE Comput. Graph. Appl. 2001, 21, 34–47.

- Azuma, R. A Survey of Augmented Reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385.

- Lisowski, D.; Ponto, K.; Fan, S.; Probst, C.; Sprecher, B. Augmented Reality into Live Theatrical Performance. In Springer Handbook of Augmented Reality; Springer: Berlin, Germany, 2023; pp. 433–450.

- Laine, T.H. Mobile Educational Augmented Reality Games: A Systematic Literature Review and Two Case Studies. Computers 2018, 7, 19.

- Nincarean, D.; Alia, M.B.; Halim, N.D.A.; Rahman, M.H.A. Mobile Augmented Reality: The Potential for Education. Procedia—Soc. Behav. Sci. 2013, 103, 657–664.

- Wang, M.; Callaghan, V.; Bernhardt, J.; White, K.; Peña-Rios, A. Augmented Reality in Education and Training: Pedagogical Approaches and Illustrative Case Studies. J. Ambient Intell. Humaniz. Comput. 2018, 9, 1391–1402.

- Di Serio, Á.; Ibáñez, M.B.; Kloos, C.D. Impact of an Augmented Reality System on Students’ Motivation for a Visual Art Course. Comput. Educ. 2013, 68, 586–596.

- Mystakidis, S.; Christopoulos, A.; Pellas, N. A Systematic Mapping Review of Augmented Reality Applications to Support STEM Learning in Higher Education. Educ. Inf. Technol. 2022, 27, 1883–1927.

- Ibañez, M.B.; Delgado-Kloos, C. Augmented Reality for STEM Learning: A Systematic Review. Comput. Educ. 2018, 123, 109–123.

- Yasin, M.; Utomo, R.A. Design of Intelligent Tutoring System (ITS) Based on Augmented Reality (AR) for Three-Dimensional Geometry Material. AIP Conf. Proc. 2023, 2569, 040001.

- Troussas, C.; Krouska, A.; Virvou, M. A Multilayer Inference Engine for Individualized Tutoring Model: Adapting Learning Material and Its Granularity. Neural Comput. Appl. 2021, 35, 61–75.

- Chrysafiadi, K.; Papadimitriou, S.; Virvou, M. Cognitive-Based Adaptive Scenarios in Educational Games Using Fuzzy Reasoning. Knowl.-Based Syst. 2022, 250, 109111.

- Murray, T. Authoring Intelligent Tutoring Systems: An Analysis of the State of the Art. Int. J. Artif. Intell. Educ. 1999, 10, 98–129.

- Mousavinasab, E.; Zarifsanaiey, N.; Niakan Kalhori, S.R.; Rakhshan, M.; Keikha, L.; Ghazi Saeedi, M. Intelligent Tutoring Systems: A Systematic Review of Characteristics, Applications, and Evaluation Methods. Interact. Learn. Environ. 2021, 29, 142–163.

- Chen, C.-M.; Li, Y.-L. Personalised Context-Aware Ubiquitous Learning System for Supporting Effective English Vocabulary Learning. Interact. Learn. Environ. 2010, 18, 341–364.

- Karaci, A. Intelligent Tutoring System Model Based on Fuzzy Logic and Constraint-Based Student Model. Neural Comput. Appl. 2019, 31, 3619–3628.

- Hooshyar, D.; Ahmad, R.B.; Yousefi, M.; Yusop, F.D.; Horng, S.-J. A Flowchart-Based Intelligent Tutoring System for Improving Problem-Solving Skills of Novice Programmers. J. Comput. Assist. Learn. 2015, 31, 345–361.

- Bryfczynski, S. BeSocratic: An Intelligent Tutoring System for the Recognition, Evaluation, and Analysis of Free-Form Student Input. Ph.D. Thesis, Clemson University, Clemson, SC, USA, 2012.

- Harley, J.M.; Bouchet, F.; Hussain, M.S.; Azevedo, R.; Calvo, R. A Multi-Componential Analysis of Emotions during Complex Learning with an Intelligent Multi-Agent System. Comput. Human Behav. 2015, 48, 615–625.

- Grawemeyer, B.; Mavrikis, M.; Holmes, W.; Gutierrez-Santos, S.; Wiedmann, M.; Rummel, N. Affecting Off-Task Behaviour: How Affect-Aware Feedback Can Improve Student Learning. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016; pp. 104–113.

- Wu, C.; Huang, Y.; Hwang, J.-P. Review of Affective Computing in Education/Learning: Trends and Challenges. Br. J. Educ. Technol. 2016, 47, 1304–1323.

- Dicheva, D.; Dichev, C.; Agre, G.; Angelova, G. Gamification in Education: A Systematic Mapping Study. J. Educ. Technol. Soc. 2015, 18, 75–88.

- Lee, T.; Wen, Y.; Chan, M.Y.; Azam, A.B.; Looi, C.K.; Taib, S.; Ooi, C.H.; Huang, L.H.; Xie, Y.; Cai, Y. Investigation of Virtual & Augmented Reality Classroom Learning Environments in University STEM Education. Interact. Learn. Environ. 2022.

- Polyzou, S.; Botsoglou, K.; Zygouris, N.C.; Stamoulis, G. Interactive Books for Preschool Children: From Traditional Interactive Paper Books to Augmented Reality Books: Listening to Children’s Voices through Mosaic Approach. Education 3-13 2022.

- Yang, R.Y.H. Designing Augmented Reality Picture Books for Children. In Conceptual Practice-Research and Pedagogy in Art, Design, Creative Industries, and Heritage; Department of Art and Design, The Hang Seng University of Hong Kong: Hong Kong, China, 2022; Volume 1, pp. 29–33.

- Radu, I.; Huang, X.; Kestin, G.; Schneider, B. How Augmented Reality Influences Student Learning and Inquiry Styles: A Study of 1-1 Physics Remote AR Tutoring. Comput. Educ. X Real. 2023, 2, 100011.

- Rossano, V.; Lanzilotti, R.; Cazzolla, A.; Roselli, T. Augmented Reality to Support Geometry Learning. IEEE Access 2020, 8, 107772–107780.

- Kamarainen, A.M.; Metcalf, S.; Grotzer, T.; Browne, A.; Mazzuca, D.; Tutwiler, M.S.; Dede, C. EcoMOBILE: Integrating Augmented Reality and Probeware with Environmental Education Field Trips. Comput. Educ. 2013, 68, 545–556.

- Squire, K.D.; Jan, M. Mad City Mystery: Developing Scientific Argumentation Skills with a Place-Based Augmented Reality Game on Handheld Computers. J. Sci. Educ. Technol. 2007, 16, 5–29.

- Ibañez, M.B.; Di Serio, Á.; Villarán, D.; Kloos, C.D. Experimenting with Electromagnetism Using Augmented Reality: Impact on Flow Student Experience and Educational Effectiveness. Comput. Educ. 2014, 71, 1–13.

- Bursali, H.; Yilmaz, R.M. Effect of Augmented Reality Applications on Secondary School Students’ Reading Comprehension and Learning Permanency. Comput. Human Behav. 2019, 95, 126–135.

- Wojciechowski, R.; Cellary, W. Evaluation of Learners’ Attitude toward Learning in ARIES Augmented Reality Environments. Comput. Educ. 2013, 68, 570–585.

- Elmqaddem, N. Augmented Reality and Virtual Reality in Education. Myth or Reality? Int. J. Emerg. Technol. Learn. 2019, 14, 234–242.

- Uriarte-Portillo, A.; Ibáñez, M.-B.; Zatarain-Cabada, R.; Barrón-Estrada, M.L. Comparison of Using an Augmented Reality Learning Tool at Home and in a Classroom Regarding Motivation and Learning Outcomes. Multimodal Technol. Interact. 2023, 7, 23.

- Koparan, T.; Dinar, H.; Koparan, E.T.; Haldan, Z.S. Integrating Augmented Reality into Mathematics Teaching and Learning and Examining Its Effectiveness. Think. Ski. Creat. 2023, 47, 101245.

- Teng, C.H.; Chen, J.Y.; Chen, Z.H. Impact of Augmented Reality on Programming Language Learning: Efficiency and Perception. J. Educ. Comput. Res. 2018, 56, 254–271.

- Cai, S.; Liu, E.; Shen, Y.; Liu, C.; Li, S.; Shen, Y. Probability Learning in Mathematics Using Augmented Reality: Impact on Student’s Learning Gains and Attitudes. Interact. Learn. Environ. 2020, 28, 560–573.

- Rashevska, N.; Semerikov, S.; Zinonos, N.; Tkachuk, V.; Shyshkina, M. Using Augmented Reality Tools in the Teaching of Two-Dimensional Plane Geometry. In Proceedings of the 3rd International Workshop on Augmented Reality in Education (AREdu 2020), Kryvyi Rih, Ukraine, 13 May 2020.

- Ibáñez, M.B.; Di-Serio, Á.; Villarán-Molina, D.; Delgado-Kloos, C. Augmented Reality-Based Simulators as Discovery Learning Tools: An Empirical Study. IEEE Trans. Educ. 2014, 58, 208–213.

- Frank, J.A.; Kapila, V. Mixed-Reality Learning Environments: Integrating Mobile Interfaces with Laboratory Test-Beds. Comput. Educ. 2017, 110, 88–104.

- Herbert, B.; Ens, B.; Weerasinghe, A.; Billinghurst, M.; Wigley, G. Design Considerations for Combining Augmented Reality with Intelligent Tutors. Comput. Graph. 2018, 77, 166–182.

- Ibáñez, M.B.; Di-Serio, A.; Villarán-Molina, D.; Delgado-Kloos, C. Support for Augmented Reality Simulation Systems: The Effects of Scaffolding on Learning Outcomes and Behavior Patterns. IEEE Trans. Learn. Technol. 2015, 9, 46–56.

- Kyza, E.A.; Georgiou, Y. Scaffolding Augmented Reality Inquiry Learning: The Design and Investigation of the TraceReaders Location-Based, Augmented Reality Platform. Interact. Learn. Environ. 2019, 27, 211–225.

- Westerfield, G.; Mitrovic, A.; Billinghurst, M. Intelligent Augmented Reality Training for Motherboard Assembly. Int. J. Artif. Intell. Educ. 2015, 25, 157–172.

- Almiyad, M.A.; Oakden, L.; Weerasinghe, A.; Billinghurst, M. Intelligent Augmented Reality Tutoring for Physical Tasks with Medical Professionals. In Proceedings of the International Conference on Artificial Intelligence in Education, Wuhan, China, 28 June–1 July 2017; pp. 450–454.

- Chen, P.; Liu, X.; Cheng, W.; Huang, R. A Review of Using Augmented Reality in Education from 2011 to 2016. In Innovations in Smart Learning; Lecture Notes in Educational Technology; Springer: Singapore, 2017; pp. 13–18.

- Nwana, H.S. Intelligent Tutoring Systems: An Overview. Artif. Intell. Rev. 1990, 4, 251–277.

- Almasri, A.; Ahmed, A.; Al-Masri, N.; Sultan, Y.A.; Mahmoud, A.Y.; Zaqout, I.; Akkila, A.N.; Abu-Naser, S.S. Intelligent Tutoring Systems Survey for the Period 2000–2018. Int. J. Acad. Eng. Res. 2019, 3, 21–37.

- LaViola, J.; Williamson, B.; Brooks, C.; Veazanchin, S.; Sottilare, R.; Garrity, P. Using Augmented Reality to Tutor Military Tasks in the Wild. In Proceedings of the Interservice/Industry Training, Simulation, and Education Conference, Orlando, FL, USA, 30 November–4 December 2015; pp. 1–10.

- Hsieh, M.-C.; Chen, S.-H. Intelligence Augmented Reality Tutoring System for Mathematics Teaching and Learning. J. Internet Technol. 2019, 20, 1673–1681.

- Huang, G.; Qian, X.; Wang, T.; Patel, F.; Sreeram, M.; Cao, Y.; Ramani, K.; Quinn, A.J. AdapTutAR: An Adaptive Tutoring System for Machine Tasks in Augmented Reality. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–15.

- Dargan, S.; Bansal, S.; Kumar, M.; Mittal, A.; Kumar, K. Augmented Reality: A Comprehensive Review. Arch. Comput. Methods Eng. 2022.

- Papakostas, C.; Troussas, C.; Krouska, A.; Sgouropoulou, C. Modeling the Knowledge of Users in an Augmented Reality-Based Learning Environment Using Fuzzy Logic. In Novel & Intelligent Digital Systems: Proceedings of the 2nd International Conference (NiDS 2022); Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2022; pp. 113–123.

- Iqbal, M.Z.; Mangina, E.; Campbell, A.G. Current Challenges and Future Research Directions in Augmented Reality for Education. Multimodal Technol. Interact. 2022, 6, 75.

- Ouyang, F.; Zheng, L.; Jiao, P. Artificial Intelligence in Online Higher Education: A Systematic Review of Empirical Research from 2011 to 2020. Educ. Inf. Technol. 2022, 27, 7893–7925.

- Alkhatlan, A.; Kalita, J. Intelligent Tutoring Systems: A Comprehensive Historical Survey with Recent Developments. arXiv 2018, arXiv:1812.09628.

- Kirillov, A. AForge.Net Framework. Available online: http://www.aforgenet.com (accessed on 25 September 2020).

- Ibáñez, M.B.; Uriarte Portillo, A.; Zatarain Cabada, R.; Barrón, M.L. Impact of Augmented Reality Technology on Academic Achievement and Motivation of Students from Public and Private Mexican Schools. A Case Study in a Middle-School Geometry Course. Comput. Educ. 2020, 145, 103734.

- Mohammed, P.; Mohan, P. Dynamic Cultural Contextualisation of Educational Content in Intelligent Learning Environments Using ICON. Int. J. Artif. Intell. Educ. 2015, 25, 249–270.

- Samarakou, M.; Prentakis, P.; Mitsoudis, D.; Karolidis, D.; Athinaios, S. Application of Fuzzy Logic for the Assessment of Engineering Students. In Proceedings of the 2017 IEEE Global Engineering Education Conference (EDUCON), Athens, Greece, 25–28 April 2017; pp. 646–650.

- Uriarte-Portillo, A.; Ibañez, M.-B.; Zatarain-Cabada, R.; Barrón-Estrada, M.-L. ARGeo 2018. Available online: https://argeo-apps.web.app/ (accessed on 20 December 2022).

- Uriarte-Portillo, A.; Ibañez, M.-B.; Zatarain-Cabada, R.; Barrón-Estrada, M.-L. ARGeoITS 2019. Available online: https://argeo-apps.web.app/ (accessed on 20 December 2022).

- Uriarte-Portillo, A.; Ibañez, M.-B.; Zatarain-Cabada, R.; Barrón-Estrada, M.-L. AR Applications for Learning Geometry. Available online: https://argeo-apps.web.app/ (accessed on 20 December 2022).

- Keller, J.M. Motivational Design for Learning and Performance: The ARCS Model Approach; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010; ISBN 9781441912497.

- Shapiro, S.; Wilk, M.B. An Analysis of Variance Test for Normality (Complete Samples). Biometrika 1965, 52, 591–611.

- George, D.; Mallery, M. Using SPSS for Windows Step by Step: A Simple Guide and Reference; Allyn & Bacon: Boston, MA, USA, 2003.

- Cakir, R. Effect of Web-Based Intelligence Tutoring System on Students’ Achievement and Motivation. Malaysian Online J. Educ. Technol. 2015, 7, 46–56.

- Arnau, D.; Arevalillo-Herráez, M.; Puig, L.; González-Calero, J.A. Fundamentals of the Design and the Operation of an Intelligent Tutoring System for the Learning of the Arithmetical and Algebraic Way of Solving Word Problems. Comput. Educ. 2013, 63, 119–130.

- Vang, R.N. Motivation in Intelligent Tutoring Systems and Game Based Learning: Why Am I Learning This? 2018. Available online: http://hdl.handle.net/1803/8847 (accessed on 2 December 2022).

- Hibbi, F.-Z.; Abdoun, O. Integrating an Intelligent Tutoring System into an Adaptive E-Learning Process. In Recent Advances in Mathematics and Technology; Springer: Berlin/Heidelberg, Germany, 2020; pp. 141–150.

- Chen, Y. Effect of Mobile Augmented Reality on Learning Performance, Motivation, and Math Anxiety in a Math Course. J. Educ. Comput. Res. 2019, 57, 1695–1722.

- Hou, H.-T.; Fang, Y.-S.; Tang, J.T. Designing an Alternate Reality Board Game with Augmented Reality and Multi-Dimensional Scaffolding for Promoting Spatial and Logical Ability. Interact. Learn. Environ. 2021.

- Hwang, G.-J.; Chen, C.-H. Influences of an Inquiry-Based Ubiquitous Gaming Design on Students’ Learning Achievements, Motivation, Behavioral Patterns, and Tendency towards Critical Thinking and Problem Solving. Br. J. Educ. Technol. 2017, 48, 950–971.

- Chiang, T.H.C.; Yang, S.J.H.; Hwang, G.-J. An Augmented Reality-Based Mobile Learning System to Improve Students’ Learning Achievements and Motivations in Natural Science Inquiry Activities. J. Educ. Technol. Soc. 2014, 17, 352–365.

- Estapa, A.; Nadolny, L. The Effect of an Augmented Reality Enhanced Mathematics Lesson on Student Achievement and Motivation. J. STEM Educ. 2015, 16, 40–49.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).