The Grossberg Code: Universal Neural Network Signatures of Perceptual Experience

Abstract

1. Introduction

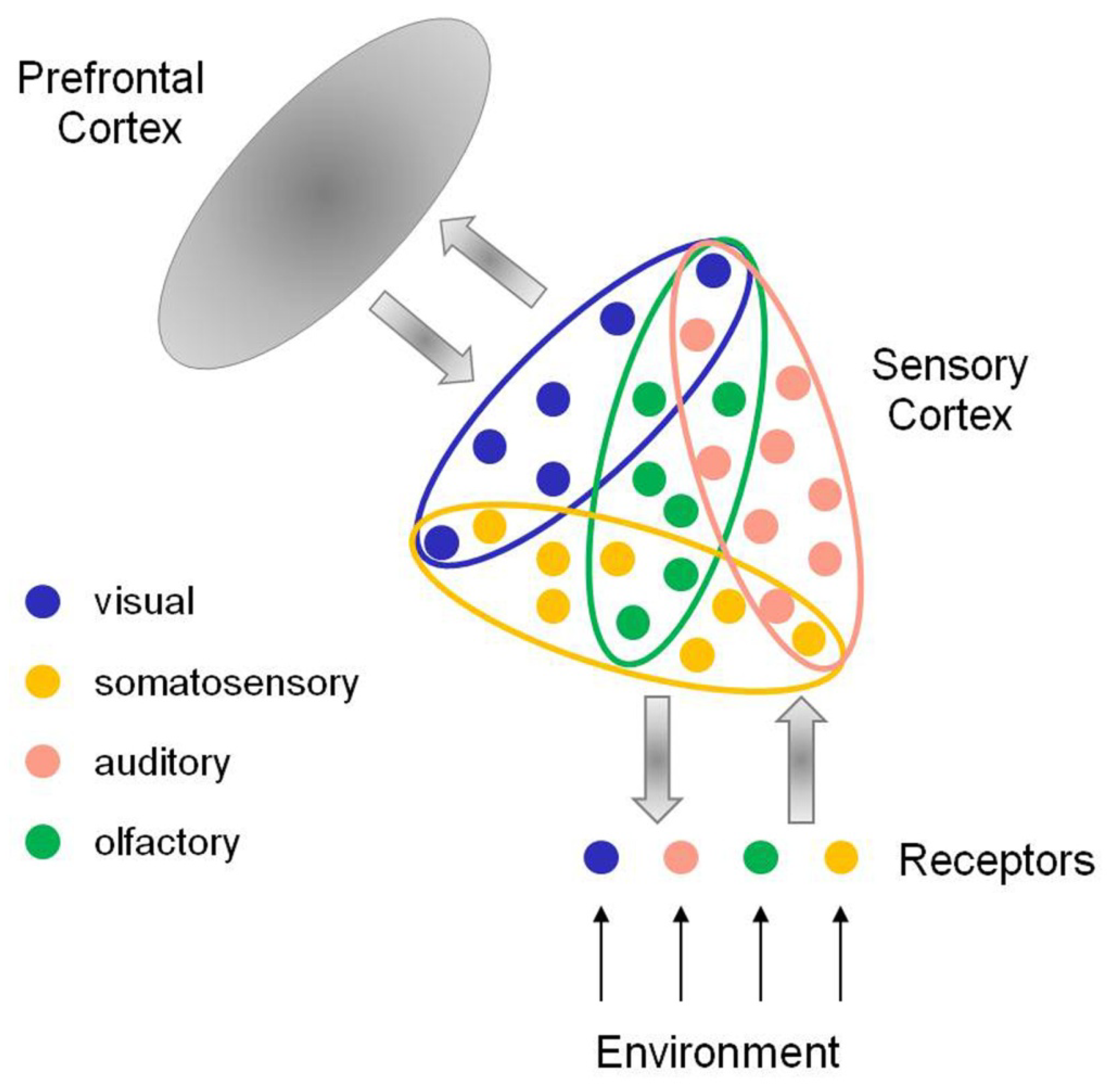

2. Contextual Modulation in the Brain

2.1. Vision

2.2. Hearing

2.3. Somatosensation

2.4. Olfaction

2.5. Prefrontal Control

3. Brain Signatures of Perceptual Experience

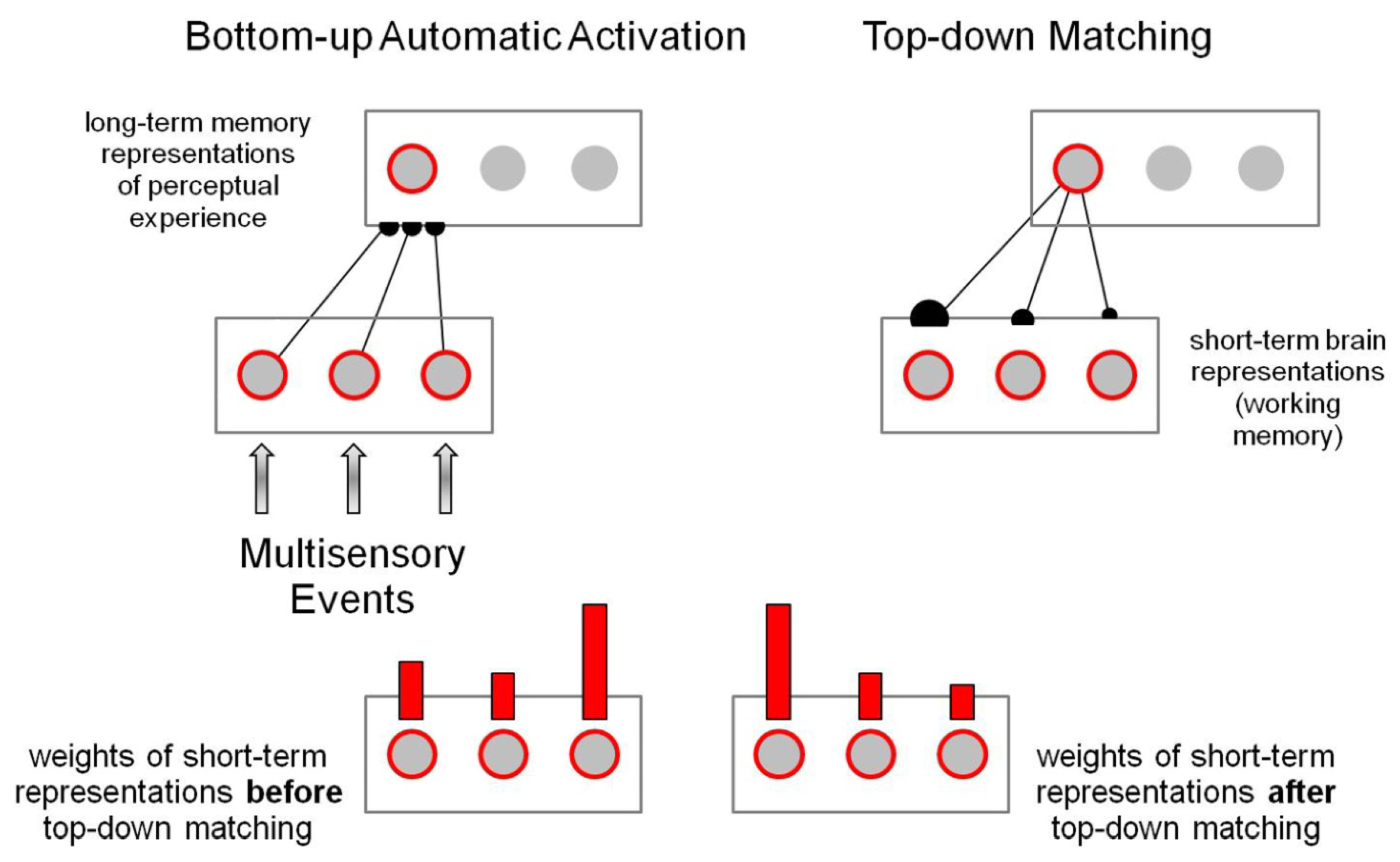

3.1. Bottom-Up Automatic Activation

3.2. Top-Down Matching

3.3. Temporary Representation for Selection and Control

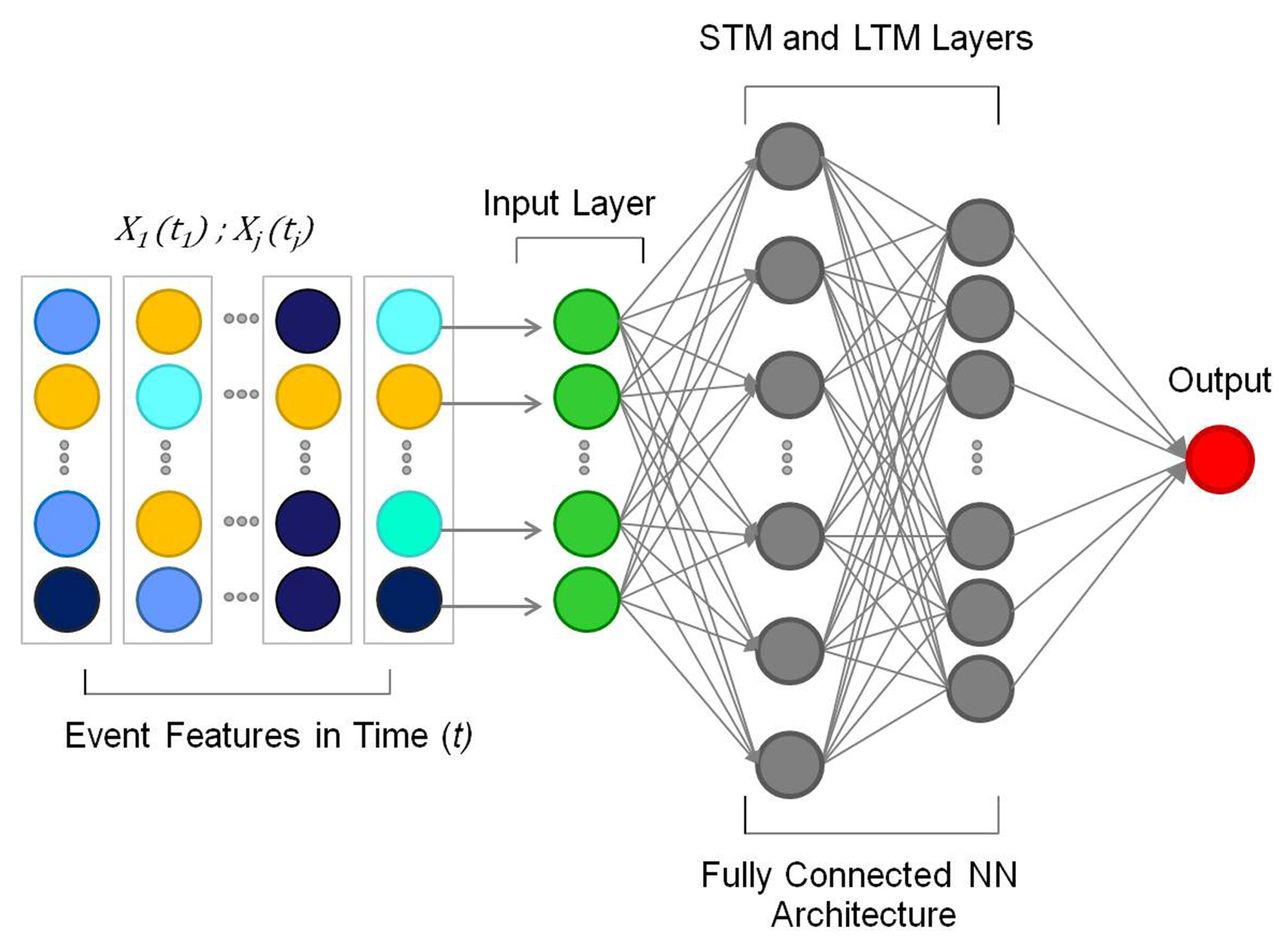

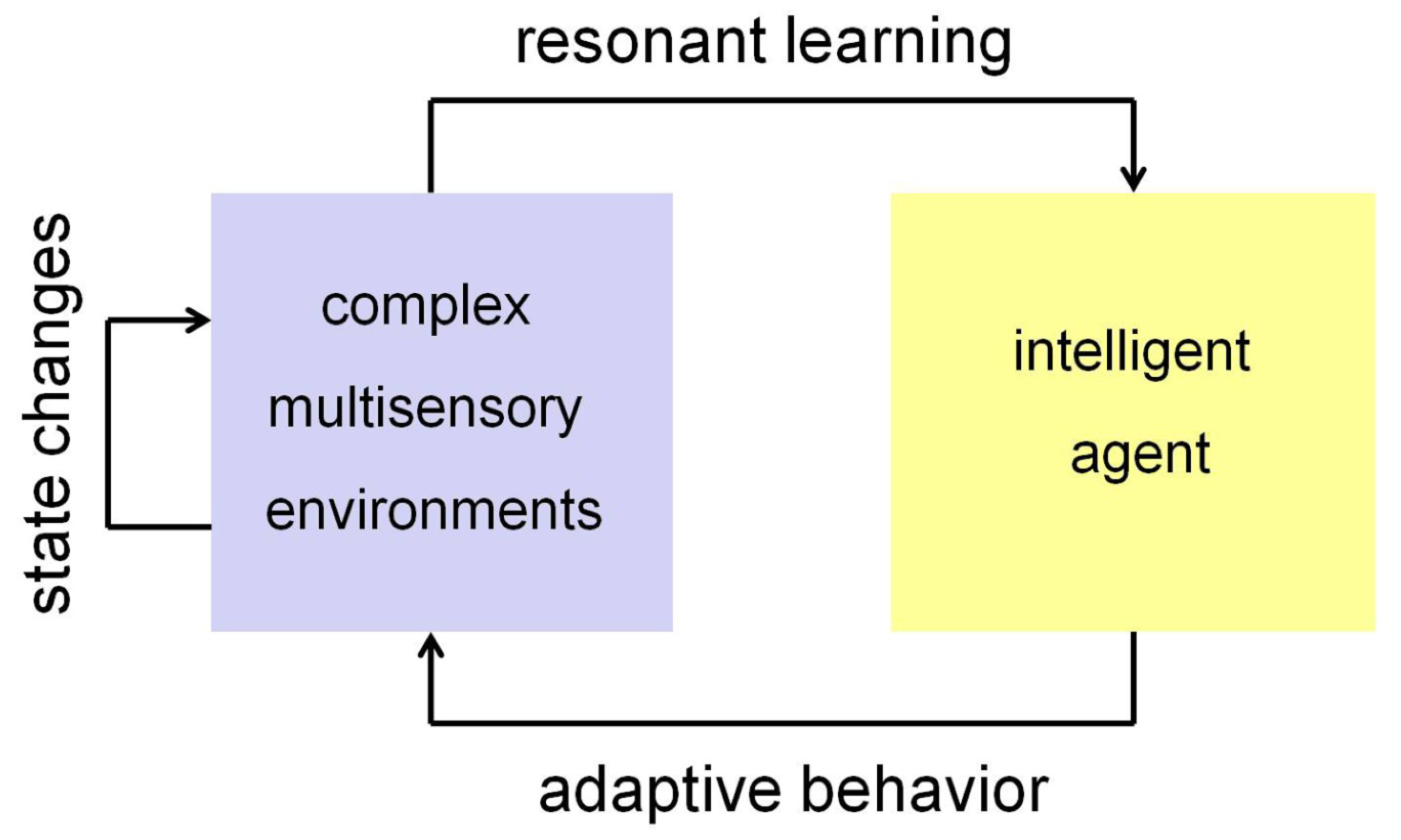

4. Towards Adaptive Intelligence in Robotics

5. Discussion

6. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

- Grossberg, S. Conscious Mind, Resonant Brain: How Each Brain Makes a Mind; Oxford University Press: Oxford, UK, 2021.

- Grossberg, S. A path toward explainable AI and autonomous adaptive intelligence: Deep Learning, adaptive resonance, and models of perception, emotion, and action. Front. Neurorobotics 2020, 14, 36.

- Jadaun, P.; Cui, C.; Liu, S.; Incorvia, J.A.C. Adaptive cognition implemented with a context-aware and flexible neuron for next-generation artificial intelligence. PNAS Nexus 2022, 1, 206.

- Dresp-Langley, B. From Biological Synapses to Intelligent Robots. Electronics 2022, 11, 707.

- Dresp-Langley, B. Seven Properties of Self-Organization in the Human Brain. Big Data Cogn. Comput. 2020, 4, 10.

- Meirhaeghe, N.; Sohn, H.; Jazayeri, M. A precise and adaptive neural mechanism for predictive temporal processing in the frontal cortex. Neuron 2021, 109, 2995–3011.e5.

- Rosenberg, R.N. The universal brain code: A genetic mechanism for memory. J. Neurol. Sci. 2021, 429, 118073.

- Hebb, D. The Organization of Behavior; John Wiley & Sons: Hoboken, NJ, USA, 1949.

- Grossberg, S. Self-organizing neural networks for stable control of autonomous behavior in a changing world. In Mathematical Approaches to Neural Networks; Taylor, J.G., Ed.; Elsevier Science Publishers: Amsterdam, The Netherlands, 1993; pp. 139–197.

- Dresp-Langley, B.; Durup, J. A plastic temporal brain code for conscious state generation. Neural Plast. 2009, 2009, e482696.

- Churchland, P.S. Brain-Wise: Studies in Neurophilosophy; MIT Press: Cambridge, MA, USA, 2002.

- Grossberg, S. How Does a Brain Build a Cognitive Code? Springer: Berlin/Heidelberg, Germany, 1982; Volume 87, pp. 1–52.

- Connor, C.E.; Knierim, J.J. Integration of objects and space in perception and memory. Nat. Neurosci. 2017, 20, 1493–1503.

- Hubel, D.H.; Wiesel, T.N. Receptive fields and functional architecture of monkey striate cortex. J. Physiol. 1968, 195, 215–243.

- Chavane, F.; Monier, C.; Bringuier, V.; Baudot, P.; Borg-Graham, L.; Lorenceau, J.; Frégnac, Y. The visual cortical association field: A Gestalt concept or a psychophysiological entity? J. Physiol. Paris 2000, 94, 333–342.

- McManus, J.N.J.; Li, W.; Gilbert, C.D. Adaptive shape processing in primary visual cortex. Proc. Natl. Acad. Sci. USA 2011, 108, 9739–9746.

- Grossberg, S. How does the cerebral cortex work? Learning, attention, and grouping by the laminar circuits of visual cortex. Spat. Vis. 1999, 12, 163–185.

- Onat, S.; Jancke, D.; König, P. Cortical long-range interactions embed statistical knowledge of natural sensory input: A voltage-sensitive dye imaging study. F1000Res 2013, 2, 51.

- Grossberg, S. Adaptive Resonance Theory: How a brain learns to consciously attend, learn, and recognize a changing world. Neural Netw. 2013, 37, 1–47.

- Kim, L.H.; Sharma, S.; Sharples, S.A.; Mayr, K.A.; Kwok, C.H.T.; Whelan, P.J. Integration of descending command systems for the generation of context-specific locomotor behaviors. Front. Neurosci. 2017, 11, 581.

- Oram, T.B.; Card, G.M. Context-dependent control of behavior in Drosophila. Curr. Opin. Neurobiol. 2022, 73, 102523.

- Spillmann, L.; Dresp-Langley, B.; Tseng, C.H. Beyond the classical receptive field: The effect of contextual stimuli. J. Vis. 2015, 15, 7.

- Lankow, B.S.; Usrey, W.M. Contextual Modulation of Feedforward Inputs to Primary Visual Cortex. Front. Syst. Neurosci. 2022, 16, 818633.

- Gilbert, C.D.; Wiesel, T.N. The influence of contextual stimuli on the orientation selectivity of cells in the primary visual cortex of the cat. Vis. Res. 1990, 30, 1689–1701.

- Dresp, B. Dynamic characteristic of spatial mechanisms coding contour structures. Spat. Vis. 1999, 12, 129–142.

- Dresp, B.; Grossberg, S. Contour integration across polarities and spatial gaps: From contrast filtering to bipole cooperation. Vis. Res. 1997, 37, 913–924.

- Dresp, B.; Grossberg, S. Spatial facilitation by color and luminance edges: Boundary, surface, and attentional factors. Vis. Res. 1999, 39, 3431–3443.

- Hubel, D.H.; Livingstone, M.S. Color and contrast sensitivity in the lateral geniculate body and primary visual cortex of the macaque monkey. J. Neurosci. 1990, 10, 2223–2237.

- Grossberg, S.; Mingolla, E. Neural dynamics of perceptual grouping: Textures, boundaries, and emergent segmentations. Percept. Psychophys. 1985, 38, 141–171.

- Grossberg, S. 3-D vision and figure-ground separation by visual cortex. Percept. Psychophys. 1994, 55, 48–121.

- Li, W.; Piëch, V.; Gilbert, C.D. Learning to link visual contours. Neuron 2008, 57, 442–451.

- Angeloni, C.; Geffen, M.N. Contextual modulation of sound processing in the auditory cortex. Curr. Opin. Neurobiol. 2017, 49, 8–15.

- Paraskevoudi, N.; SanMiguel, I. Sensory suppression and increased neuromodulation during actions disrupt memory encoding of unpredictable self-initiated stimuli. Psychophysiology 2022, 60, e14156.

- Bednárová, V.; Grothe, B.; Myoga, M.H. Complex and spatially segregated auditory inputs of the mouse superior colliculus. J. Physiol. 2018, 596, 5281–5298.

- Chou, X.L.; Fang, Q.; Yan, L.; Zhong, W.; Peng, B.; Li, H.; Wei, J.; Tao, H.W.; Zhang, L.I. Contextual and cross-modality modulation of auditory cortical processing through pulvinar mediated suppression. Elife 2020, 9, e54157.

- Sutter, M.L.; Schreiner, C.E. Physiology and topography of neurons with multipeaked tuning curves in cat primary auditory cortex. J. Neurophysiol. 1991, 65, 1207–1226.

- Pérez-Bellido, A.; Pappal, R.D.; Yau, J.M. Touch engages visual spatial contextual processing. Sci. Rep. 2018, 8, 16637.

- Lohse, M.; Zimmer-Harwood, P.; Dahmen, J.C.; King, A.J. Integration of somatosensory and motor-related information in the auditory system. Front. Neurosci. 2022, 16, 1010211.

- Dresp-Langley, B. Grip force as a functional window to somatosensory cognition. Front. Psychol. 2022, 13, 1026439.

- Imbert, M.; Bignall, K.; Buser, P. Neocortical interconnections in the cat. J. Neurophysiol. 1966, 29, 382–395.

- Imbert, M.; Buser, P. Sensory Projections to the Motor Cortex in Cats: A Microelectrode Study. In Sensory Communication; Rosenblith, W., Ed.; The MIT Press: Cambridge, MA, USA, 1961 (re-edited 2012); pp. 607–628.

- Adibi, M.; Lampl, I. Sensory Adaptation in the Whisker-Mediated Tactile System: Physiology, Theory, and Function. Front. Neurosci. 2021, 15, 770011.

- Ramamurthy, D.L.; Krubitzer, L.A. Neural Coding of Whisker-Mediated Touch in Primary Somatosensory Cortex Is Altered Following Early Blindness. J. Neurosci. 2018, 38, 6172–6189.

- Raposo, D.; Sheppard, J.P.; Schrater, P.R.; Churchland, A.K. Multisensory decision-making in rats and humans. J. Neurosci. 2012, 32, 3726–3735.

- Renard, A.; Harrell, E.R.; Bathellier, B. Olfactory modulation of barrel cortex activity during active whisking and passive whisker stimulation. Nat. Commun. 2022, 13, 3830.

- Ezzatdoost, K.; Hojjati, H.; Aghajan, H. Decoding olfactory stimuli in EEG data using nonlinear features: A pilot study. J. Neurosci. Methods 2020, 341, 108780.

- Kato, M.; Okumura, T.; Tsubo, Y.; Honda, J.; Sugiyama, M.; Touhara, K.; Okamoto, M. Spatiotemporal dynamics of odor representations in the human brain revealed by EEG decoding. Proc. Natl. Acad. Sci. USA 2022, 119, e2114966119.

- Persson, B.M.; Ambrozova, V.; Duncan, S.; Wood, E.R.; O’Connor, A.R.; Ainge, J.A. Lateral entorhinal cortex lesions impair odor-context associative memory in male rats. J. Neurosci. Res. 2022, 100, 1030–1046.

- Cansler, H.L.; Zandt, E.E.; Carlson, K.S.; Khan, W.T.; Ma, M.; Wesson, D.W. Organization and engagement of a prefrontal-olfactory network during olfactory selective attention. Cereb. Cortex 2022, bhac153.

- Xu, X.; Hanganu-Opatz, I.L.; Bieler, M. Cross-Talk of Low-Level Sensory and High-Level Cognitive Processing: Development, Mechanisms, and Relevance for Cross-Modal Abilities of the Brain. Front. Neurorobotics 2020, 14, 7.

- Botvinick, M.M.; Braver, T.S.; Barch, D.M.; Carter, C.S.; Cohen, J.D. Conflict monitoring and cognitive control. Psychol. Rev. 2001, 108, 624–652.

- Parmentier, F.B.R.; Gallego, L.; Micucci, A.; Leiva, A.; Andrés, P.; Maybery, M.T. Distraction by deviant sounds is modulated by the environmental context. Sci. Rep. 2022, 12, 21447.

- Mayer, A.R.; Ryman, S.G.; Hanlon, F.M.; Dodd, A.B.; Ling, J.M. Look Hear! The prefrontal cortex is stratified by modality of sensory input during multisensory cognitive control. Cereb. Cortex 2017, 27, 2831–2840.

- Skirzewski, M.; Molotchnikoff, S.; Hernandez, L.F.; Maya-Vetencourt, J.F. Multisensory Integration: Is Medial Prefrontal Cortex Signaling Relevant for the Treatment of Higher-Order Visual Dysfunctions? Front. Mol. Neurosci. 2022, 14, 806376.

- Walters, E.T.; Carew, T.J.; Kandel, E.R. Classical conditioning in Aplysia californica. Proc. Natl. Acad. Sci. USA 1979, 76, 6675–6679.

- Berns, G.S.; Cohen, J.D.; Mintun, M.A. Brain regions responsive to novelty in the absence of awareness. Science 1997, 276, 1272–1275.

- Wong, P.S.; Bernat, E.; Bunce, S.; Shevrin, H. Brain indices of non-conscious associative learning. Conscious. Cogn. 1997, 6, 519–544.

- Bulf, H.; Johnson, S.P.; Valenza, E. Visual statistical learning in the newborn infant. Cognition 2011, 121, 127–132.

- Rees, G.; Wojciulik, E.; Clarke, K.; Husain, M.; Frith, C.; Driver, J. Neural correlates of conscious and unconscious vision in parietal extinction. Neurocase 2002, 8, 387–393.

- Dresp-Langley, B. Why the brain knows more than we do: Non-conscious representations and their role in the construction of conscious experience. Brain Sci. 2011, 2, 1–21.

- Oberauer, K. Working Memory and Attention–A Conceptual Analysis and Review. J. Cogn. 2019, 2, 36.

- Edelman, G.M. Neural Darwinism: Selection and reentrant signaling in higher brain function. Neuron 1993, 10, 115–125.

- Judák, L.; Chiovini, B.; Juhász, G.; Pálfi, D.; Mezriczky, Z.; Szadai, Z.; Katona, G.; Szmola, B.; Ócsai, K.; Martinecz, B.; et al. Sharp-wave ripple doublets induce complex dendritic spikes in parvalbumin interneurons in vivo. Nat. Commun. 2022, 13, 6715.

- Fourneret, P.; Jeannerod, M. Limited conscious monitoring of motor performance in normal subjects. Neuropsychologia 1998, 36, 1133–1140.

- Mashour, G.A.; Roelfsema, P.; Changeux, J.P.; Dehaene, S. Conscious Processing and the Global Neuronal Workspace Hypothesis. Neuron 2020, 105, 776–798.

- Zhuang, J.; Bereshpolova, Y.; Stoelzel, C.R.; Huff, J.M.; Hei, X.; Alonso, J.M.; Swadlow, H.A. Brain state effects on layer 4 of the awake visual cortex. J. Neurosci. 2014, 34, 3888–3900.

- Cohen, M.A.; Grossberg, S. Absolute stability of global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans. Syst. Man Cybern. 1983, 13, 815–826.

- Cohen, M.A. Sustained oscillations in a symmetric cooperative competitive neural network: Disproof of a conjecture about content addressable memory. Neural Netw. 1988, 1, 217–221.

- Dresp-Langley, B. Consciousness Beyond Neural Fields: Expanding the Possibilities of What Has Not Yet Happened. Front. Psychol. 2022, 12, 762349.

- Azizi, Z.; Ebrahimpour, R. Explaining Integration of Evidence Separated by Temporal Gaps with Frontoparietal Circuit Models. Neuroscience 2023, 509, 74–95.

- Grossberg, S. Towards solving the hard problem of consciousness: The varieties of brain resonances and the conscious experiences that they support. Neural Netw. 2017, 87, 38–95.

- Grossberg, S. The embodied brain of SOVEREIGN2: From space-variant conscious percepts during visual search and navigation to learning invariant object categories and cognitive-emotional plans for acquiring valued goals. Front. Comput. Neurosci. 2019, 13, 36.

- Grossberg, S. Toward autonomous adaptive intelligence: Building upon neural models of how brains make minds. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 51–75.

- Carpenter, G.A.; Grossberg, S. Adaptive resonance theory. In Encyclopedia of Machine Learning and Data Mining; Sammut, C., Webb, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; pp. 1–17.

- Pearson, M.J.; Dora, S.; Struckmeier, O.; Knowles, T.C.; Mitchinson, B.; Tiwari, K.; Kyrki, V.; Bohte, S.; Pennartz, C.M.A. Multimodal Representation Learning for Place Recognition Using Deep Hebbian Predictive Coding. Front. Robot. AI 2021, 8, 732023.

- Bartolozzi, C.; Indiveri, G.; Donati, E. Embodied neuromorphic intelligence. Nat. Commun. 2022, 13, 1024.

- Gong, X.; Wang, Q.; Fang, F. Configuration perceptual learning and its relationship with element perceptual learning. J. Vis. 2022, 22, 2.

- Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual lifelong learning with neural networks: A review. Neural Netw. 2019, 113, 54–71.

- Wang, G.; Phan, T.V.; Li, S.; Wang, J.; Peng, Y.; Chen, G.; Qu, J.; Goldman, D.I.; Levin, S.A.; Pienta, K.; et al. Robots as models of evolving systems. Proc. Natl. Acad. Sci. USA 2022, 119, e2120019119.

- Gumaste, A.; Coronas-Samano, G.; Hengenius, J.; Axman, R.; Connor, E.G.; Baker, K.L.; Ermentrout, B.; Crimaldi, J.P.; Verhagen, J.V. A Comparison between Mouse, In Silico, and Robot Odor Plume Navigation Reveals Advantages of Mouse Odor Tracking. Eneuro 2020, 7, 212–219.

- Tekülve, J.; Fois, A.; Sandamirskaya, Y.; Schöner, G. Autonomous Sequence Generation for a Neural Dynamic Robot: Scene Perception, Serial Order, and Object-Oriented Movement. Front. Neurorobotics 2019, 13, 95.

- Axenie, C.; Richter, C.; Conradt, J. A self-synthesis approach to perceptual learning for multisensory fusion in robotics. Sensors 2016, 16, 1751.

- Holland, J.; Kingston, L.; McCarthy, C.; Armstrong, E.; O’Dwyer, P.; Merz, F.; McConnell, M. Service robots in the healthcare sector. Robotics 2021, 10, 47.

- Pozzi, L.; Gandolla, M.; Pura, F.; Maccarini, M.; Pedrocchi, A.; Braghin, F.; Piga, D.; Roveda, L. Grasping learning, optimization, and knowledge transfer in the robotics field. Sci. Rep. 2022, 12, 4481.

- Lomas, J.D.; Lin, A.; Dikker, S.; Forster, D.; Lupetti, M.L.; Huisman, G.; Habekost, J.; Beardow, C.; Pandey, P.; Ahmad, N.; et al. Resonance as a Design Strategy for AI and Social Robots. Front. Neurorobotics 2022, 16, 850489.

- Wandeto, J.M.; Dresp-Langley, B. Contribution to the Honour of Steve Grossberg’s 80th Birthday Special Issue: The quantization error in a Self-Organizing Map as a contrast and colour specific indicator of single-pixel change in large random patterns. Neural Netw. 2019, 120, 116–128.

- Dresp-Langley, B.; Wandeto, J.M. Unsupervised classification of cell imaging data using the quantization error in a Self Organizing Map. In Transactions on Computational Science and Computational Intelligence; Arabnia, H.R., Ferens, K., de la Fuente, D., Kozerenko, E.B., Olivas Varela, J.A., Tinetti, F.G., Eds.; Springer-Nature: Berlin/Heidelberg, Germany, 2021; pp. 201–210.

- Shepherd, G.M.G.; Yamawaki, N. Untangling the cortico-thalamo-cortical loop: Cellular pieces of a knotty circuit puzzle. Nat. Rev. Neurosci. 2021, 22, 389–406.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dresp-Langley, B. The Grossberg Code: Universal Neural Network Signatures of Perceptual Experience. Information 2023, 14, 82. https://doi.org/10.3390/info14020082

Chicago/Turabian style: Dresp-Langley, Birgitta. 2023. "The Grossberg Code: Universal Neural Network Signatures of Perceptual Experience." Information 14, no. 2: 82. https://doi.org/10.3390/info14020082