Mixing Global and Local Features for Long-Tailed Expression Recognition

Abstract

1. Introduction

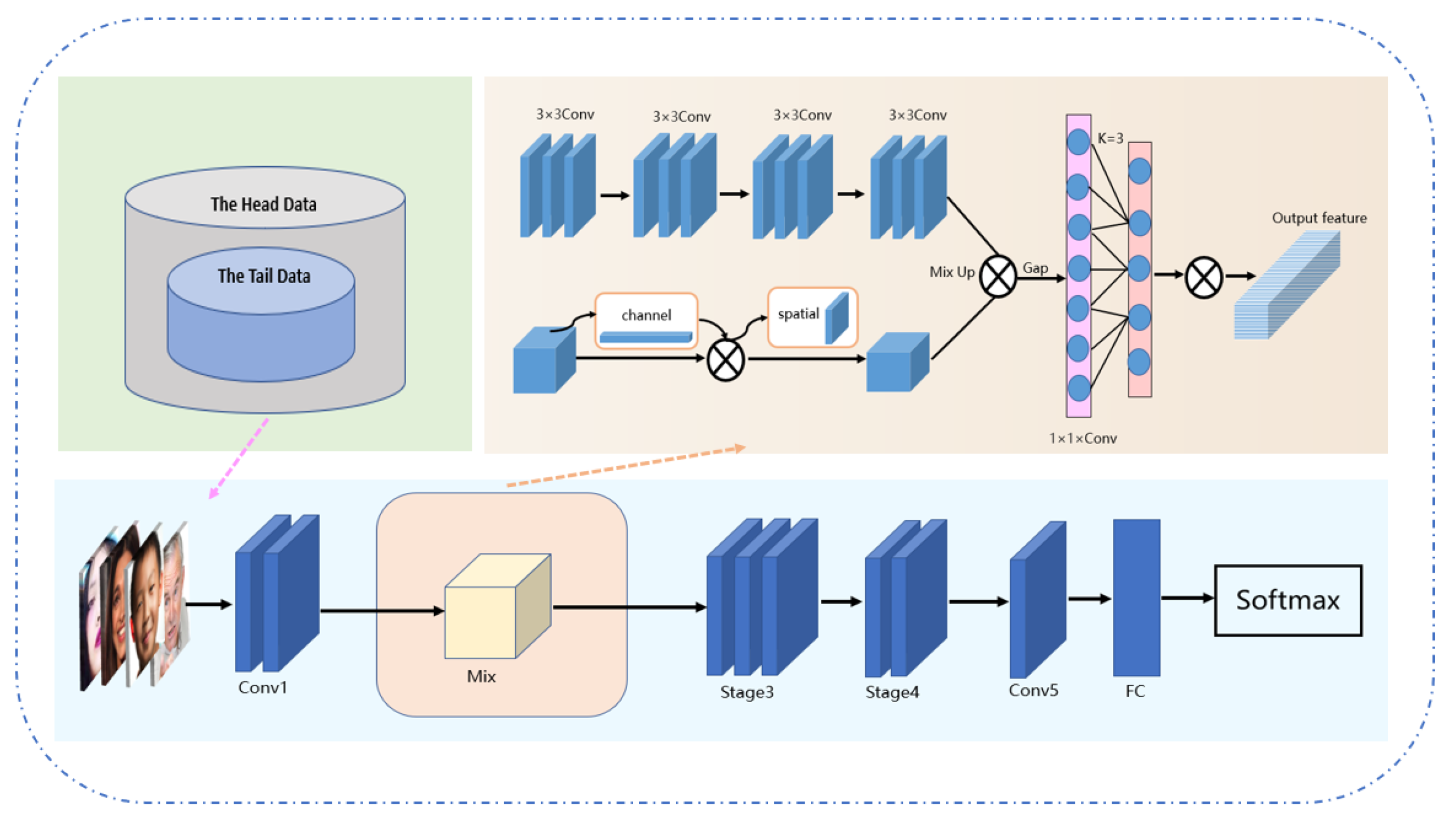

- We propose AM-FGL, an attention mechanism that combines global and local features to extract richer facial information.

- We propose BC-EDB, a data enhancement method based on CutMix, to address the imbalance of facial expression data in real-world scenes; it increases the diversity of the dataset without increasing the complexity of the model.

- We improve the recognition accuracy of minority classes and achieve state-of-the-art results on Occlusion-RAF-DB, Pose-RAF-DB (pose ⩾ 30), and Pose-RAF-DB (pose ⩾ 45), with accuracies of 86.96%, 89.74%, and 88.53%, respectively.
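To make the CutMix idea behind the second contribution concrete, the following is a minimal sketch of the generic CutMix operation described by Yun et al. [28], not the authors' BC-EDB implementation, whose sampling strategy is not specified here. It assumes images are 2D lists of grayscale values with identical shapes; the names `rand_bbox` and `cutmix` and the parameter `alpha` are illustrative.

```python
import math
import random


def rand_bbox(height, width, lam, rng):
    """Sample a box covering roughly (1 - lam) of the image area."""
    cut_ratio = math.sqrt(1.0 - lam)
    cut_h, cut_w = int(height * cut_ratio), int(width * cut_ratio)
    cy, cx = rng.randrange(height), rng.randrange(width)
    top, bottom = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, height)
    left, right = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, width)
    return top, bottom, left, right


def cutmix(img_a, label_a, img_b, label_b, num_classes, alpha=1.0, rng=None):
    """Paste a random patch of img_b into img_a and mix the one-hot labels."""
    rng = rng or random.Random()
    height, width = len(img_a), len(img_a[0])
    lam = rng.betavariate(alpha, alpha)  # mixing ratio drawn from Beta(alpha, alpha)
    top, bottom, left, right = rand_bbox(height, width, lam, rng)

    mixed = [row[:] for row in img_a]  # copy so the inputs stay untouched
    for y in range(top, bottom):
        mixed[y][left:right] = img_b[y][left:right]

    # Recompute lam from the clipped box so the label matches the pixels.
    lam = 1.0 - (bottom - top) * (right - left) / (height * width)
    target = [0.0] * num_classes
    target[label_a] += lam
    target[label_b] += 1.0 - lam
    return mixed, target, lam
```

Because the augmentation only changes the training samples and their soft labels, it adds no parameters to the network, which is consistent with the claim that diversity increases without added model complexity.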

2. Related Work

2.1. Large-Scale Expression Data Research

2.2. Research on Imbalanced Data

3. Method

3.1. Backbone Network Based on ShuffleNet-v2

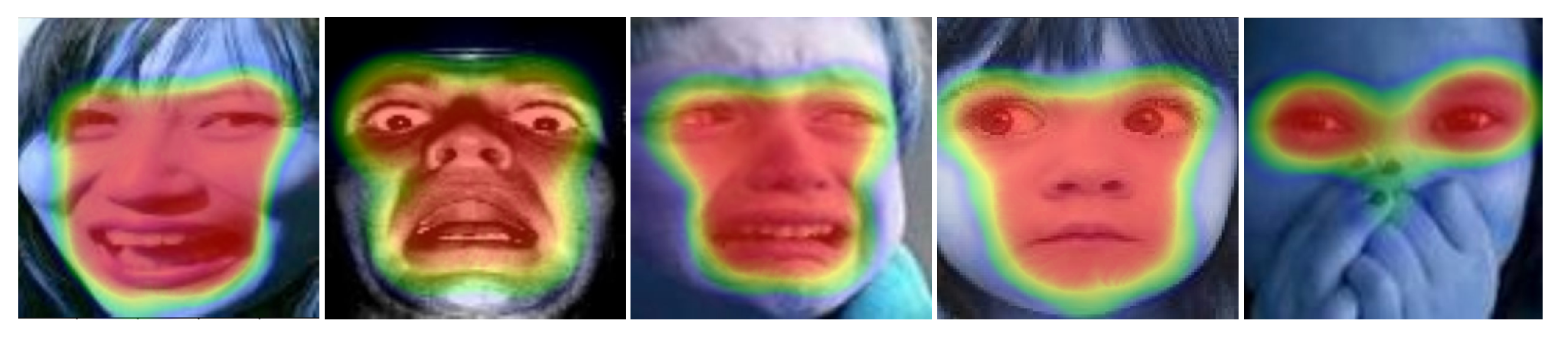

3.2. Mixing Up Global Features and Local Features Based on Attention Mechanisms

3.3. Data Enhancement Based on CutMix

4. Experiment

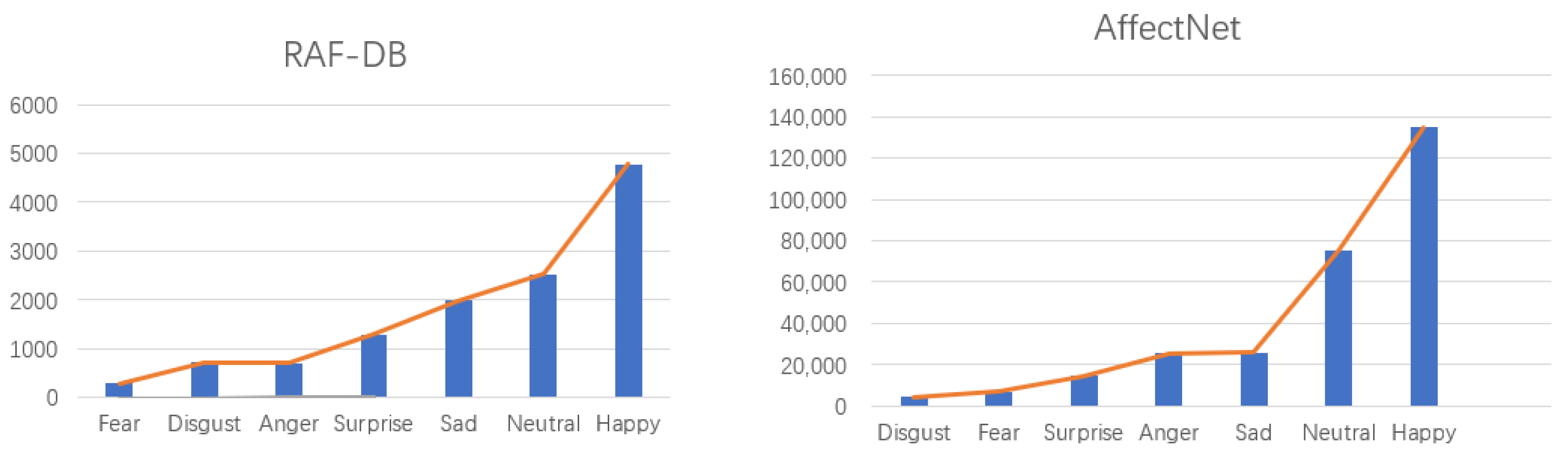

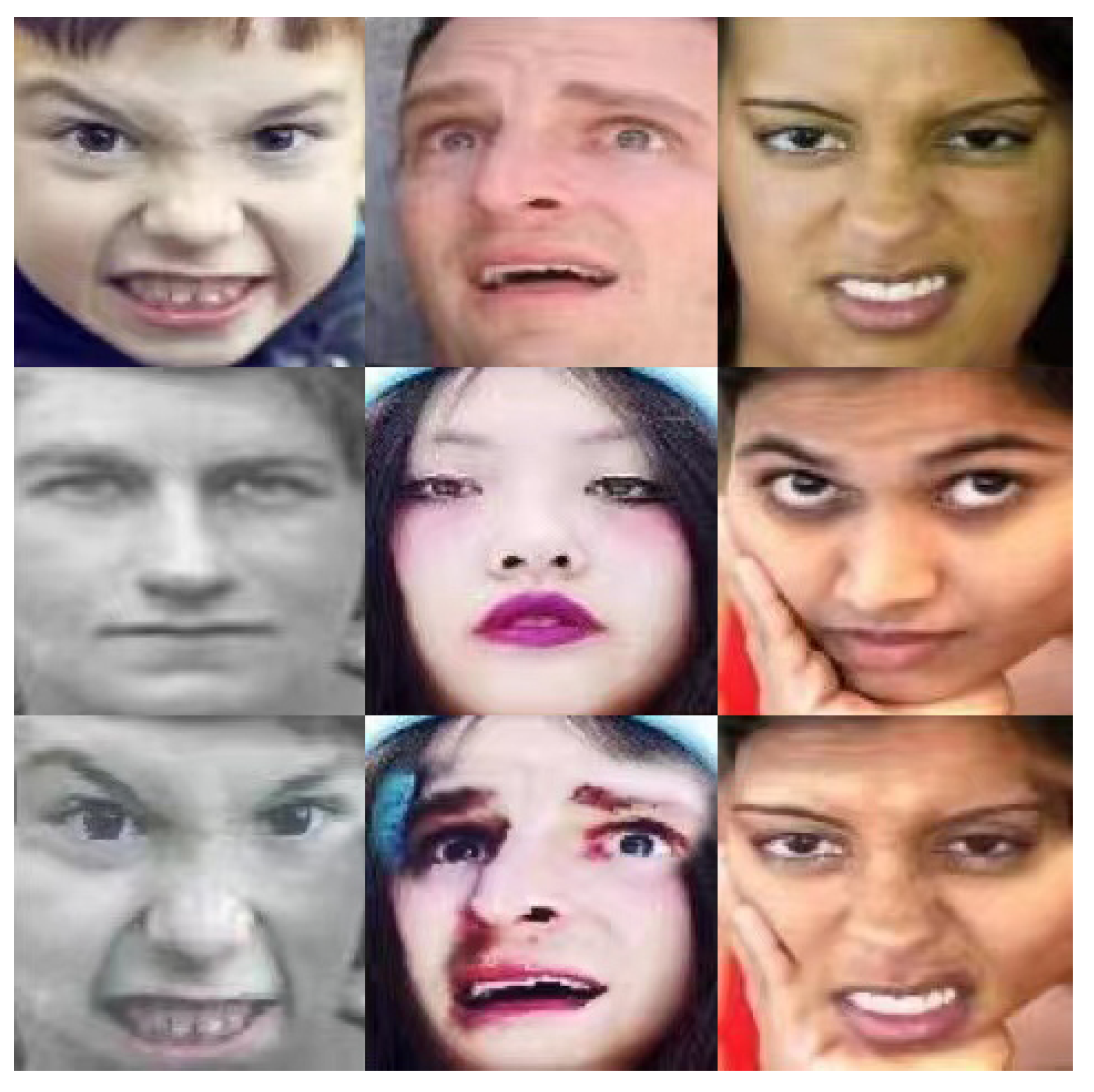

4.1. Datasets

4.2. Experimental Operation Details

4.3. Experimental Results Compared with the State-of-the-Art Method

4.4. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Pabba, C.; Kumar, P. An intelligent system for monitoring students’ engagement in large classroom teaching through facial expression recognition. Expert Syst. 2022, 39, e12839.

2. Ekman, P.; Friesen, W.V. Constants across cultures in the face and emotion. J. Personal. Soc. Psychol. 1971, 17, 124.

3. Zhan, C.; She, D.; Zhao, S.; Cheng, M.M.; Yang, J. Zero-shot emotion recognition via affective structural embedding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1151–1160.

4. Lei, J.; Liu, Z.; Zou, Z.; Li, T.; Juan, X.; Wang, S.; Yang, G.; Feng, Z. Mid-level Representation Enhancement and Graph Embedded Uncertainty Suppressing for Facial Expression Recognition. arXiv 2022, arXiv:2207.13235.

5. Liu, Z.; Miao, Z.; Zhan, X.; Wang, J.; Gong, B.; Yu, S.X. Large-scale long-tailed recognition in an open world. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2537–2546.

6. Cotter, S.F. Sparse representation for accurate classification of corrupted and occluded facial expressions. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 15–19 March 2010; pp. 838–841.

7. Kotsia, I.; Buciu, I.; Pitas, I. An analysis of facial expression recognition under partial facial image occlusion. Image Vis. Comput. 2008, 26, 1052–1067.

8. Barros, P.; Sciutti, A. I Only Have Eyes for You: The Impact of Masks On Convolutional-Based Facial Expression Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1226–1231.

9. Bourel, F.; Chibelushi, C.C.; Low, A.A. Recognition of Facial Expressions in the Presence of Occlusion. In Proceedings of the BMVC, Manchester, UK, 10–13 September 2001; pp. 1–10.

10. Ly, S.T.; Do, N.T.; Lee, G.; Kim, S.H.; Yang, H.J. Multimodal 2D and 3D for In-The-Wild Facial Expression Recognition. In Proceedings of the CVPR Workshops, Long Beach, CA, USA, 16–20 June 2019; pp. 2927–2934.

11. Li, K.; Zhao, Q. If-gan: Generative adversarial network for identity preserving facial image inpainting and frontalization. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 45–52.

12. Shome, D.; Kar, T. FedAffect: Few-shot federated learning for facial expression recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2021; pp. 4168–4175.

13. Cao, X.; Li, P. Dynamic facial expression recognition of sprinters based on multi-scale detail enhancement. Int. J. Biom. 2022, 14, 336–351.

14. Chen, Y.; Wang, J.; Chen, S.; Shi, Z.; Cai, J. Facial motion prior networks for facial expression recognition. In Proceedings of the 2019 IEEE Visual Communications and Image Processing (VCIP), Sydney, Australia, 1–4 December 2019; pp. 1–4.

15. She, J.; Hu, Y.; Shi, H.; Wang, J.; Shen, Q.; Mei, T. Dive into ambiguity: Latent distribution mining and pairwise uncertainty estimation for facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6248–6257.

16. Chen, Y.; Joo, J. Understanding and mitigating annotation bias in facial expression recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 14980–14991.

17. Ahmady, M.; Mirkamali, S.S.; Pahlevanzadeh, B.; Pashaei, E.; Hosseinabadi, A.A.R.; Slowik, A. Facial expression recognition using fuzzified Pseudo Zernike Moments and structural features. Fuzzy Sets Syst. 2022, 443, 155–172.

18. Chen, S.; Wang, J.; Chen, Y.; Shi, Z.; Geng, X.; Rui, Y. Label distribution learning on auxiliary label space graphs for facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13984–13993.

19. Gera, D.; Balasubramanian, S. Noisy Annotations Robust Consensual Collaborative Affect Expression Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3585–3592.

20. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European conference on computer vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

21. Wu, Y.; Liu, H.; Li, J.; Fu, Y. Deep face recognition with center invariant loss. In Proceedings of the on Thematic Workshops of ACM Multimedia 2017, Mountain View, CA, USA, 23–27 October 2017; pp. 408–414.

22. Wang, Y.X.; Ramanan, D.; Hebert, M. Learning to model the tail. Adv. Neural Inf. Process. Syst. 2017, 30, 7032–7042.

23. Yang, L.; Jiang, H.; Song, Q.; Guo, J. A Survey on Long-Tailed Visual Recognition. Int. J. Comput. Vis. 2022, 130, 1837–1872.

24. Zhang, X.; Fang, Z.; Wen, Y.; Li, Z.; Qiao, Y. Range loss for deep face recognition with long-tailed training data. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5409–5418.

25. Mullick, S.S.; Datta, S.; Das, S. Generative adversarial minority oversampling. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1695–1704.

26. Lee, J.; Kim, S.; Kim, S.; Park, J.; Sohn, K. Context-aware emotion recognition networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10143–10152.

27. Park, S.; Hong, Y.; Heo, B.; Yun, S.; Choi, J.Y. The Majority Can Help The Minority: Context-rich Minority Oversampling for Long-tailed Classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6887–6896.

28. Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. Cutmix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6023–6032.

29. Zhao, Z.; Liu, Q.; Zhou, F. Robust lightweight facial expression recognition network with label distribution training. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 3510–3519.

30. Antoniadis, P.; Pikoulis, I.; Filntisis, P.P.; Maragos, P. An audiovisual and contextual approach for categorical and continuous emotion recognition in-the-wild. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3645–3651.

31. Li, Y.; Zeng, J.; Shan, S.; Chen, X. Occlusion aware facial expression recognition using CNN with attention mechanism. IEEE Trans. Image Process. 2018, 28, 2439–2450.

32. Xiong, W.; He, Y.; Zhang, Y.; Luo, W.; Ma, L.; Luo, J. Fine-grained image-to-image transformation towards visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5840–5849.

33. Kuo, C.M.; Lai, S.H.; Sarkis, M. A compact deep learning model for robust facial expression recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2121–2129.

34. Gecer, B.; Deng, J.; Zafeiriou, S. Ostec: One-shot texture completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7628–7638.

35. Bao, Z.; You, S.; Gu, L.; Yang, Z. Single-image facial expression recognition using deep 3d re-centralization. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

36. Zhou, H.; Liu, J.; Liu, Z.; Liu, Y.; Wang, X. Rotate-and-render: Unsupervised photorealistic face rotation from single-view images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5911–5920.

37. Yang, H.; Zhu, K.; Huang, D.; Li, H.; Wang, Y.; Chen, L. Intensity enhancement via GAN for multimodal face expression recognition. Neurocomputing 2021, 454, 124–134.

38. Bau, D.; Zhu, J.Y.; Wulff, J.; Peebles, W.; Strobelt, H.; Zhou, B.; Torralba, A. Seeing what a gan cannot generate. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4502–4511.

39. Barros, P.; Churamani, N.; Sciutti, A. The FaceChannel: A Light-weight Deep Neural Network for Facial Expression Recognition. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 652–656.

40. Wang, K.; Peng, X.; Yang, J.; Meng, D.; Qiao, Y. Region attention networks for pose and occlusion robust facial expression recognition. IEEE Trans. Image Process. 2020, 29, 4057–4069.

41. Chu, P.; Bian, X.; Liu, S.; Ling, H. Feature space augmentation for long-tailed data. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 694–710.

42. Dong, Y.; Wang, X. A new over-sampling approach: Random-SMOTE for learning from imbalanced data sets. In Proceedings of the International Conference on Knowledge Science, Engineering and Management, Irvine, CA, USA, 12–14 December 2011; pp. 343–352.

43. Ando, S.; Huang, C.Y. Deep over-sampling framework for classifying imbalanced data. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Skopje, North Macedonia, 18–22 September 2017; pp. 770–785.

44. Hong, Y.; Han, S.; Choi, K.; Seo, S.; Kim, B.; Chang, B. Disentangling label distribution for long-tailed visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6626–6636.

45. Zhong, Y.; Deng, W.; Wang, M.; Hu, J.; Peng, J.; Tao, X.; Huang, Y. Unequal-training for deep face recognition with long-tailed noisy data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7812–7821.

46. Yin, X.; Yu, X.; Sohn, K.; Liu, X.; Chandraker, M. Feature transfer learning for face recognition with under-represented data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5704–5713.

47. Zhu, K.; Wang, Y.; Yang, H.; Huang, D.; Chen, L. Intensity enhancement via gan for multimodal facial expression recognition. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2020; pp. 1346–1350.

48. Gao, H.; An, S.; Li, J.; Liu, C. Deep balanced learning for long-tailed facial expressions recognition. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 11147–11153.

49. Zhang, H.; Su, W.; Yu, J.; Wang, Z. Weakly supervised local-global relation network for facial expression recognition. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, Yokohama, Japan, 7–15 January 2021; pp. 1040–1046.

50. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154.

51. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

52. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

53. Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

54. Li, S.; Deng, W.; Du, J. Reliable crowdsourcing and deep locality-preserving learning for expression recognition in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2852–2861.

55. Mollahosseini, A.; Hasani, B.; Mahoor, M.H. Affectnet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2017, 10, 18–31.

56. Wang, K.; Peng, X.; Yang, J.; Lu, S.; Qiao, Y. Suppressing uncertainties for large-scale facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6897–6906.

57. Deng, J.; Guo, J.; Zhou, Y.; Yu, J.; Kotsia, I.; Zafeiriou, S. RetinaFace: Single-stage dense face localisation in the wild. arXiv 2019, arXiv:1905.00641.

58. Xiong, X.; De la Torre, F. Supervised descent method and its applications to face alignment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 532–539.

59. Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.

60. Zhang, Y.; Wang, C.; Deng, W. Relative Uncertainty Learning for Facial Expression Recognition. Adv. Neural Inf. Process. Syst. 2021, 34, 17616–17627.

61. Zhang, Y.; Wang, C.; Ling, X.; Deng, W. Learn from all: Erasing attention consistency for noisy label facial expression recognition. In Proceedings of the Computer Vision—ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; pp. 418–434.

62. Farzaneh, A.H.; Qi, X. Discriminant distribution-agnostic loss for facial expression recognition in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 406–407.

63. Wen, Z.; Lin, W.; Wang, T.; Xu, G. Distract your attention: Multi-head cross attention network for facial expression recognition. arXiv 2021, arXiv:2109.07270.

64. Antoniadis, P.; Filntisis, P.P.; Maragos, P. Exploiting Emotional Dependencies with Graph Convolutional Networks for Facial Expression Recognition. In Proceedings of the 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), Jodhpur, India, 15–18 December 2021; pp. 1–8.

65. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

| Expression | Neutral | Happy | Sad | Surprise | Fear | Disgust | Anger |
|---|---|---|---|---|---|---|---|
| Number of images | 2524 | 4772 | 1982 | 1290 | 281 | 716 | 704 |

| Expression | Neutral | Happy | Sad | Surprise | Fear | Disgust | Anger |
|---|---|---|---|---|---|---|---|
| Number of images | 75,374 | 134,915 | 25,959 | 14,590 | 6878 | 4303 | 25,383 |

| Metric | Method | Neutral | Happy | Sad | Surprise | Fear | Disgust | Anger |
|---|---|---|---|---|---|---|---|---|
| Recall | EfficientFace [29] | 81.84 | 95.39 | 84.59 | 87.83 | 68.81 | 76.40 | 83.36 |
| | RUL [60] | 87.28 | 94.68 | 86.50 | 88.31 | 72.58 | 71.52 | 85.16 |
| | EAC [61] | 86.20 | 96.68 | 86.91 | 91.00 | 76.56 | 73.42 | 88.44 |
| | Ours | 85.88 | 93.64 | 85.43 | 92.73 | 78.95 | 77.62 | 89.33 |
| Macro-F1 | EfficientFace [29] | 84.92 | 94.25 | 83.79 | 85.44 | 57.24 | 57.24 | 81.97 |
| | RUL [60] | 87.54 | 95.35 | 87.77 | 87.49 | 66.18 | 69.45 | 83.28 |
| | EAC [61] | 88.06 | 96.27 | 88.79 | 90.00 | 68.70 | 72.96 | 84.14 |
| | Ours | 87.18 | 95.02 | 88.03 | 86.73 | 71.01 | 73.21 | 85.90 |

| Datasets | Methods | Recall (%) | Precision (%) | Macro-F1 (%) |
|---|---|---|---|---|
| RAF-DB | RAN [40] | 86.90 | 88.23 | 87.21 |
| | SCN [56] | 87.03 | 87.72 | 87.25 |
| | SCN * [56] | 88.14 | 88.38 | 88.17 |
| | EfficientFace [29] | 88.36 | 88.68 | 88.45 |
| | RUL [60] | 88.98 | 89.21 | 89.07 |
| | EAC [61] | 90.12 | 90.22 | 90.14 |
| | Ours | 89.11 | 89.62 | 89.26 |
| AffectNet-7 | gACNN [26] | 58.78 | 59.96 | 59.01 |
| | FMPN [14] | 61.52 | 63.01 | 62.02 |
| | DDA-Loss [62] | 62.34 | 63.33 | 62.42 |
| | EfficientFace [29] | 63.70 | 65.29 | 64.01 |
| | DAN [63] | 65.12 | 65.38 | 65.21 |
| | EfficientNet-B2 [64] | 66.13 | 67.65 | 67.34 |
| | Ours | 65.03 | 65.26 | 65.11 |
| AffectNet-8 | Weighted-Loss [55] | 58.00 | 59.24 | 58.53 |
| | RAN [40] | 59.50 | 61.02 | 59.81 |
| | SCN [56] | 60.52 | 63.52 | 61.16 |
| | EfficientFace [29] | 59.89 | 62.30 | 59.43 |
| | DAN [63] | 61.82 | 62.01 | 61.89 |
| | EfficientNet-B2 [64] | 63.02 | 63.53 | 63.06 |
| | Ours | 61.11 | 64.62 | 61.59 |
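For readers who want to reproduce the kind of recall, precision, and macro-averaged F1 figures tabulated above, the sketch below computes them from integer class labels. It assumes the standard unweighted (macro) average over classes; the exact averaging used in the paper is not stated in this section, and the function name `macro_metrics` is illustrative.

```python
def macro_metrics(y_true, y_pred, num_classes):
    """Return (macro recall, macro precision, macro F1) for integer labels."""
    tp = [0] * num_classes  # true positives per class
    fp = [0] * num_classes  # false positives per class
    fn = [0] * num_classes  # false negatives per class
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1
            fn[truth] += 1

    recalls, precisions, f1s = [], [], []
    for c in range(num_classes):
        recall = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        precision = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        recalls.append(recall)
        precisions.append(precision)
        f1s.append(f1)

    # Macro averaging gives every class equal weight regardless of its size.
    return (sum(recalls) / num_classes,
            sum(precisions) / num_classes,
            sum(f1s) / num_classes)
```

Macro averaging is a natural choice for long-tailed data because a rare class such as Fear contributes as much to the score as a frequent class such as Happy.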

| Datasets | Methods | Accuracy (%) |
|---|---|---|
| Occlusion-RAF-DB | ResNet-18 [65] | 80.19 |
| | RAN [40] | 82.72 |
| | EfficientFace [29] | 83.24 |
| | RUL [60] | 85.99 |
| | EAC [61] | 86.47 |
| | Ours | 86.96 |

| Datasets | Methods | Accuracy (%), Pose ⩾ 30 | Accuracy (%), Pose ⩾ 45 |
|---|---|---|---|
| Pose-RAF-DB | ResNet-18 [65] | 84.04 | 83.15 |
| | RAN [40] | 86.74 | 85.20 |
| | EfficientFace [29] | 88.13 | 86.92 |
| | RUL [60] | 88.37 | 87.99 |
| | EAC [61] | 89.09 | 88.35 |
| | Ours | 89.74 | 88.53 |

| Local Feature Weight | Global Feature Weight | Accuracy (%) |
|---|---|---|
| 0 | 1 | 87.68 |
| 0.2 | 0.8 | 88.12 |
| 0.3 | 0.7 | 88.61 |
| 0.4 | 0.6 | 88.21 |
| 0.5 | 0.5 | 89.11 |
| 0.6 | 0.4 | 88.38 |
| 0.7 | 0.3 | 87.98 |
| 0.8 | 0.2 | 87.92 |
| 1 | 0 | 87.78 |
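The paired values in the table above always sum to 1, which suggests a convex combination of the two feature branches. The sketch below illustrates such a weighted mix on plain feature vectors. This is an assumption about how the weights are applied, not the authors' AM-FGL code; the function name `mix_features` and the element-wise sum are illustrative.

```python
def mix_features(local_feat, global_feat, local_weight):
    """Blend two equal-length feature vectors with weights that sum to 1."""
    if not 0.0 <= local_weight <= 1.0:
        raise ValueError("local_weight must lie in [0, 1]")
    if len(local_feat) != len(global_feat):
        raise ValueError("feature vectors must have the same length")
    global_weight = 1.0 - local_weight
    return [local_weight * lf + global_weight * gf
            for lf, gf in zip(local_feat, global_feat)]


# Equal weighting, the setting with the highest accuracy in the table (89.11).
fused = mix_features([1.0, 3.0], [3.0, 1.0], local_weight=0.5)
```

Setting `local_weight` to 0 or 1 recovers the global-only or local-only case, which corresponds to the first and last rows of the table.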

| Patch | Accuracy (%) |
|---|---|
| 4 | 89.11 |
| 8 | 88.26 |
| 12 | 87.38 |
| 16 | 86.20 |

| Datasets | AM-FGL | BC-EDB | Accuracy (%) |
|---|---|---|---|
| RAF-DB | | | 88.36 |
| | ✔ | | 88.60 |
| | | ✔ | 88.91 |
| | ✔ | ✔ | 89.11 |
| AffectNet-7 | | | 63.70 |
| | ✔ | | 63.85 |
| | | ✔ | 64.78 |
| | ✔ | ✔ | 65.03 |
| AffectNet-8 | | | 59.89 |
| | ✔ | | 60.03 |
| | | ✔ | 60.52 |
| | ✔ | ✔ | 61.11 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, J.; Li, J.; Yan, Y.; Wu, L.; Xu, H. Mixing Global and Local Features for Long-Tailed Expression Recognition. Information 2023, 14, 83. https://doi.org/10.3390/info14020083
