Abstract

Traffic replay can effectively improve the fidelity of network emulation and can support emerging network verification and network security evaluation. This paper presents DTRF, a distributed traffic replay framework designed for large-scale, high-fidelity network traffic emulation on a cloud platform. To reduce the high overhead of the kernel protocol stack, a fast traffic replay strategy that bypasses the kernel is proposed to improve replay performance on a single node. To expand the emulation scale conveniently, an extensible workflow orchestration method is proposed that allows the execution of emulation tasks to be controlled and configured flexibly. The experimental results show that, compared with the traditional method, DTRF achieves on average 3.71 times the replay throughput and 4.18 times the number of concurrent streams, and it reduces the timestamp error of replayed packets by 97.8% on average. DTRF can expand the emulation scenario to a massive heterogeneous network.

1. Introduction

The cyberspace security situation is becoming increasingly severe, and challenges are arising in theoretical research, technical evaluation, and practical applications. A cyber range [1,2], built with emulation technology, is an important information infrastructure whose operating mechanism closely resembles that of real cyberspace. Cyber ranges support network attack and defence emulation and the verification of network security technology. As an important part of a cyber range, network traffic emulation [3] maps traffic from a live network environment to a test network environment, providing flexible, customizable reproduction of traffic scenarios. Network traffic emulation is therefore of great significance for evaluating and applying emerging networks and security technologies.

Although emulation tools based on discrete event simulation, such as OPNET [4] and NS3 [5], can emulate internet traffic, they suffer from low emulation throughput, insufficient fidelity, and poor scalability. Virtualization [6] and cloud computing [7] technologies provide better emulation capabilities by abstracting, reintegrating, and managing physical resources, making it feasible to reproduce large-scale real network traffic in a virtual network environment. Traffic replay [8,9,10] injects traffic collected in a live network environment directly into the virtual network environment and has attracted much attention because it effectively improves the fidelity and diversity of emulated traffic. As a result, traffic replay on cloud platforms has become the mainstream network traffic emulation scheme.

Several studies have addressed traffic replay on cloud platforms. Reference [11] studied cloud-based emulation of malicious user behaviour, but it focused mainly on malicious traffic and offered limited emulation scalability. Reference [12] proposed a traffic replay method based on IP mapping, which finds the best IP mapping by calculating the similarity between a physical network and a virtual network and then replays the traffic collected from the physical network on the mapped nodes of the virtual network on the cloud platform; however, it did not support interactive traffic replay. Reference [13] proposed an interactive traffic replay architecture on the cloud platform that uses delay optimization to improve the timing fidelity of multipoint traffic replay, but its replay throughput was low and its emulation scale limited. Reference [14] proposed ITRM, a method for interactive replay in a cloud-based virtual network. Building on Reference [12], ITRM addresses how to perform interactive traffic replay among multiple nodes in a reduced-scale virtual network scenario. ITRM has certain advantages in spatial fidelity, but its traffic-generation performance is somewhat limited, and it cannot guarantee the timing fidelity of high-speed traffic replay. These methods can emulate small-scale, low-speed network scenarios, but the volume of internet data is currently growing exponentially, which poses new challenges for network traffic emulation [15]. Emulating large-scale, high-speed, highly concurrent network traffic has become an urgent problem [16,17], and it is critical to improve the traffic-generation performance of emulation nodes and to expand the emulation scale [3].

We therefore propose DTRF, a distributed framework for network traffic emulation based on traffic replay. DTRF aims to further improve traffic replay performance on emulation nodes, such as the throughput of emulated traffic and the number of concurrent emulated streams, and to expand the emulation scale through automatic configuration rather than tedious manual operation. To this end, each emulation node replays traffic by bypassing the kernel, which shortens the replay path and reduces additional overhead, and a workflow model [18,19,20] is used to orchestrate emulation tasks and flexibly control massive numbers of emulation nodes. In summary, our main contributions are as follows:

- From a micro perspective, to tackle the high overhead of the kernel protocol stack on a single emulation node, the state control of traffic replay is implemented in user space, which shortens the traffic replay path. Additional overheads (such as interrupts, memory copying, context switching, and lock contention) are reduced, so that more CPU and memory resources are available for traffic replay and higher replay performance is obtained.

- From a macro perspective, a method for the cluster control of emulation nodes and the flexible configuration of emulation tasks in large-scale and complex virtual network scenarios is proposed, which transmits control commands in parallel on the basis of message queues and models the execution process of emulation tasks on the basis of workflows. This method improves the scalability of the emulation scale (the number of emulation nodes that can be controlled in emulation experiments) and expands the emulation scenario from a reduced-scale network to a massive heterogeneous network.

The rest of this paper is organized as follows. Section 2 describes the proposed DTRF in detail. Section 3 presents experiments and analysis that validate the DTRF. Section 4 compares the advantages and disadvantages of the DTRF with those of other mainstream traffic replay methods. Finally, Section 5 concludes the paper and outlines future work.

2. DTRF Introduction

2.1. Introduction to the DTRF Architecture

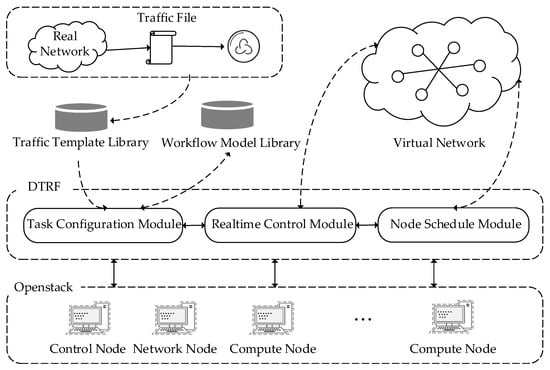

OpenStack is used as the emulation platform for the DTRF. An OpenStack deployment consists of control nodes, network nodes, and compute nodes. All virtual emulation nodes are built with Docker. The Network Time Protocol (NTP) synchronizes the clocks of the emulation nodes, using the control node's clock as the reference time axis. The distributed architecture of the DTRF on the cloud platform is shown in Figure 1.

Figure 1.

DTRF Architecture.

The DTRF includes four modules: the task configuration module, the node schedule module, the real-time control module, and the resource awareness module. These modules interact with the APIs exposed by the OpenStack components. The DTRF emulation process is as follows:

1. Platform Start-Up Stage

When the platform starts up, a traffic template library and a workflow model library are created. First, the traffic files collected from the real network environment are parsed. Then, the interaction sequence is recorded, the datagram information is loaded, emulation traffic templates are constructed using five-tuples and timestamps as features, and the templates are stored in the traffic template library. The workflow model library stores the workflow models built by the experimenter.
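The template-construction step can be sketched as follows. This is a minimal Python illustration, not DTRF's parser: it assumes the capture files have already been decoded into `(timestamp, five-tuple, bytes)` records, and the names `FiveTuple` and `build_templates` are hypothetical. Each direction of a conversation is treated as its own stream here, which is also an assumption, since the paper does not specify how the two directions are paired.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    proto: str


# A decoded packet: (capture timestamp in seconds, five-tuple, raw bytes).
Record = Tuple[float, FiveTuple, bytes]
Template = List[Tuple[float, bytes]]


def build_templates(records: Iterable[Record]) -> Dict[FiveTuple, Template]:
    """Group decoded packets into per-stream templates keyed by five-tuple.

    Packets are kept in capture order, so the captured timestamps (the timing
    feature used later for replay) are preserved within each stream.
    """
    templates: Dict[FiveTuple, Template] = defaultdict(list)
    for ts, key, payload in sorted(records, key=lambda r: r[0]):
        templates[key].append((ts, payload))
    return dict(templates)


# Illustrative input: one request direction and one response direction.
a = FiveTuple("10.0.0.1", "10.0.0.2", 5000, 80, "TCP")
b = FiveTuple("10.0.0.2", "10.0.0.1", 80, 5000, "TCP")
records = [(0.20, b, b"resp"), (0.00, a, b"req1"), (0.05, a, b"req2")]
lib = build_templates(records)
```

Sorting by timestamp before grouping means an out-of-order capture file still yields time-ordered templates.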

2. Emulation Task Configuration and Node Initialization Stage

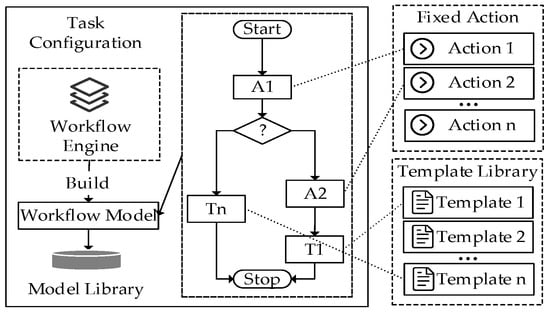

In the task configuration module, tedious and repetitive operations are predefined as fixed actions in the workflow. When configuring an emulation task, the experimenter selects the required traffic templates from the traffic template library, matches them with fixed actions, constructs a workflow model, and stores it in the workflow model library. Experimenters can also select an existing workflow model for editing. The node schedule module deploys the emulation nodes according to the initial resources of the emulation task.

3. Emulation Task Execution and Completion Stage

The experimenter starts and stops emulation tasks through the real-time control module. While an emulation task is executing, the resource awareness module periodically obtains the status of the emulation nodes and feeds it back to the node schedule module, which dynamically adjusts the emulation nodes once their emulation tasks have completed. After all emulation tasks have completed, the control node destroys all emulation nodes and reclaims their resources.

2.2. Traffic Replay Algorithm

This section introduces the replay algorithm. As described in Section 2.1, each emulation node reads streams from a traffic template and sends packets to other emulation nodes. The traffic template records each packet's body and its capture timestamp. At the beginning of Algorithm 1, the timestamp of the first packet is recorded (denoted as last_ts) and used as the starting point of the timeline; the packet is then sent, and its send time is recorded (denoted as last_send). For each subsequent packet, the gap between the timestamps of the current and previous packets is calculated (denoted as gap_ts), as is the time elapsed since the previous packet was sent (denoted as gap_send). If gap_ts exceeds gap_send, the packet is placed in the sleep queue and waits for gap_ts - gap_send before being sent; otherwise, it is sent immediately. In both cases, last_send and last_ts are then updated.

| Algorithm 1: Traffic Replay Algorithm |
| --- |
| Input: stream |
| last_ts ← 0 // captured timestamp of the previous packet |
| last_send ← 0 // actual send time of the previous packet |
| for packet in stream do |
| if last_ts == 0 then // first packet in the stream |
| last_ts ← packet_ts |
| last_send ← gettime() // the actual send time |
| else |
| gap_ts ← packet_ts - last_ts |
| gap_send ← gettime() - last_send |
| if gap_ts > gap_send then |
| sleep_time ← gap_ts - gap_send |
| sleep(sleep_time) |
| end if |
| last_send ← gettime() |
| last_ts ← packet_ts // update |
| end if |
| send packet |
| end for |
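Algorithm 1 can be sketched in Python as below. This is a minimal single-stream version under stated assumptions: `send` is a caller-supplied placeholder for the real transmit path, `clock` and `sleep` are injectable so the timing can be checked deterministically, and `None` replaces the `0` sentinel of the pseudocode so that a captured timestamp of exactly 0 is not mistaken for "no previous packet". A real multi-stream sender would hand sleeping streams to a sleep queue rather than block the thread.

```python
import time
from typing import Callable, Iterable, List, Optional, Tuple

# One stream from a traffic template: (captured timestamp in seconds, packet bytes).
Packet = Tuple[float, bytes]


def replay_stream(
    stream: Iterable[Packet],
    send: Callable[[bytes], None],
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Replay one stream, preserving the captured inter-packet gaps."""
    last_ts: Optional[float] = None    # captured timestamp of the previous packet
    last_send: float = 0.0             # actual send time of the previous packet
    for pkt_ts, payload in stream:
        if last_ts is None:            # first packet: start of the timeline
            last_ts = pkt_ts
            last_send = clock()
        else:
            gap_ts = pkt_ts - last_ts          # gap recorded in the capture
            gap_send = clock() - last_send     # time already elapsed since last send
            if gap_ts > gap_send:
                sleep(gap_ts - gap_send)       # wait out the rest of the gap
            last_send = clock()
            last_ts = pkt_ts
        send(payload)


class FakeClock:
    """Deterministic clock for demonstration: sleeping just advances time."""

    def __init__(self) -> None:
        self.now = 0.0

    def time(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.now += seconds


fake = FakeClock()
sent_at: List[float] = []
replay_stream(
    [(100.0, b"a"), (100.5, b"b"), (101.5, b"c")],
    send=lambda _payload: sent_at.append(fake.time()),
    clock=fake.time,
    sleep=fake.sleep,
)
```

Because the fake clock only advances when `sleep` is called, the recorded send times reproduce the captured gaps of 0.5 s and 1.0 s. Note that `last_send` is re-read after sleeping, as in the pseudocode, so an oversleep on one packet shifts the rest of the schedule rather than being compensated on the next packet.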

2.3. High-Performance Strategy of Traffic Replay

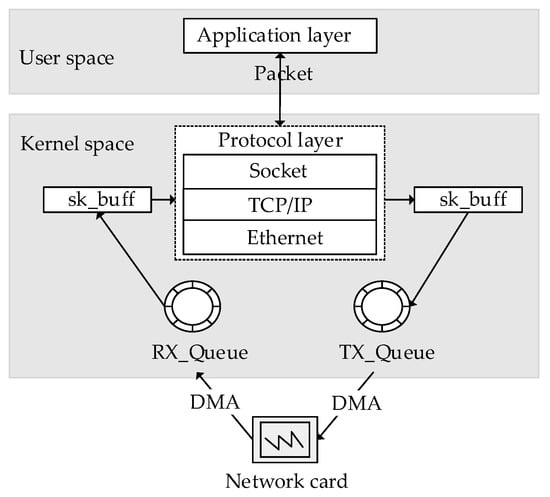

Algorithm 1 can, in theory, accurately control the traffic replay process. However, in traditional methods, packets must pass through the operating system kernel, as illustrated in Figure 2, which incurs additional CPU and memory overhead. These overheads may be negligible in low-speed network emulation, where CPU and memory resources are sufficient, but they can have a significant impact in high-speed network scenarios. From a vertical perspective, when a single stream is replayed at peak speed (that is, the replay program sends packets as fast as possible, as is common for stress testing in a cyber range), these overheads become the main factor limiting the maximum throughput of the replay program. From a horizontal perspective, when a massive number of streams are in the sleep queue, these overheads prevent some sleeping streams from being woken up in time. The following paragraphs explain how these overheads arise and describe strategies for increasing throughput and the number of concurrent streams by minimizing them.

Figure 2.

Packet path.

The kernel protocol stack receives and sends packets in interrupt-driven mode. When a packet arrives, a hardware interrupt is triggered and the packet is copied from the network card into kernel space; after processing by the kernel protocol stack, it is copied again into user space. Sending follows a similar path. Frequent interrupts, memory copying, and context switching between user space and kernel space consume large amounts of CPU and memory. We therefore use Intel's DPDK (Data Plane Development Kit) [21] to move the entire protocol processing from the kernel to user space. DPDK implements a high-performance user-mode driver that eliminates the overhead of context switching between user space and kernel space, and it provides lower and more stable latency than the original kernel driver. In user space, we also minimize copying of the packet body: packets are passed as index pointers and stored in cache queues. Packets are received and sent in polling mode, in which the CPU actively checks the network card's cache queues for packets instead of relying on interrupts to signal their arrival, so CPU time is not wasted on frequent interrupts. At high packet rates, almost every poll finds packets to process, so the CPU is rarely left spinning idle.
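The receive side of the polling and index-passing scheme can be modelled in a few lines. This is a conceptual Python sketch, not DPDK code: in DPDK the corresponding roles are played by a preallocated mbuf mempool and `rte_eth_rx_burst()`, which returns up to a requested number of packet pointers without blocking. Here `PacketPool`, `nic_deliver`, `rx_burst`, and `poll_once` are hypothetical names, and a `deque` of integer indices stands in for the NIC receive ring.

```python
from collections import deque
from typing import Callable, Deque, List


class PacketPool:
    """Preallocated packet buffers; packets are passed around by index, never copied."""

    def __init__(self, count: int, buf_size: int) -> None:
        self.bufs = [bytearray(buf_size) for _ in range(count)]
        self.lens = [0] * count
        self.free: Deque[int] = deque(range(count))

    def alloc(self) -> int:
        return self.free.popleft()

    def release(self, idx: int) -> None:
        self.free.append(idx)


def nic_deliver(pool: PacketPool, rx_ring: Deque[int], data: bytes) -> None:
    """Stand-in for the NIC: fill a pool buffer once, then enqueue only its index."""
    if len(data) > len(pool.bufs[0]):
        raise ValueError("packet larger than buffer")
    idx = pool.alloc()
    pool.bufs[idx][: len(data)] = data
    pool.lens[idx] = len(data)
    rx_ring.append(idx)


def rx_burst(rx_ring: Deque[int], max_burst: int) -> List[int]:
    """Non-blocking poll: take up to max_burst indices; returns [] if the ring is empty."""
    burst: List[int] = []
    while rx_ring and len(burst) < max_burst:
        burst.append(rx_ring.popleft())
    return burst


def poll_once(pool: PacketPool, rx_ring: Deque[int],
              handle: Callable[[memoryview], None], max_burst: int = 32) -> int:
    """One iteration of a poll-mode loop: no interrupts, no per-packet copy."""
    burst = rx_burst(rx_ring, max_burst)
    for idx in burst:
        handle(memoryview(pool.bufs[idx])[: pool.lens[idx]])  # zero-copy view
        pool.release(idx)  # buffer is recycled as soon as the handler returns
    return len(burst)


pool = PacketPool(count=8, buf_size=64)
ring: Deque[int] = deque()
for i in range(5):
    nic_deliver(pool, ring, f"p{i}".encode())

received: List[bytes] = []
counts = [poll_once(pool, ring, lambda v: received.append(bytes(v)), max_burst=2)
          for _ in range(4)]
```

In a real poll-mode loop, `poll_once` would run in `while True` on a dedicated core. The handler must finish with the `memoryview` before returning, because the buffer is recycled immediately afterwards (the demo copies with `bytes(v)` only to record the result).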

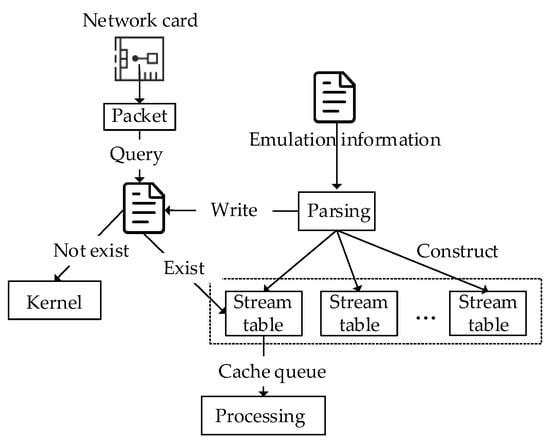

In the kernel, LVS (Linux Virtual Server) [22] distributes streams to network threads. While a thread processes its streams, the related data are held in the CPU cache. For load balancing, the kernel dynamically migrates threads across CPUs, which invalidates the cached data on the original CPU, and the overhead caused by frequent cache misses cannot be ignored [23]. We therefore bind threads to CPUs one to one, so that during a thread's life cycle, a fixed set of streams is processed on a fixed CPU. In addition, in a multithreaded environment, access to global resources such as stream tables, memory pools, and cache queues must be protected by locks, and the overhead of frequent locking cannot be ignored either [23]. To avoid locking, each DTRF thread independently manages its own data structures and accesses only its own stream table, memory pool, cache queue, and so on. Because the streams to be replayed are already determined once the emulation task has been initialized, DTRF evenly distributes the streams across the CPU cores during initialization, constructs the related data structures for each core, and writes this information to the configuration file. The specific packet-receiving process is shown in Figure 3.
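The initialization step that evenly splits the known streams across cores, giving each core its own private state, might look like the sketch below. The round-robin policy, the `CoreState` fields, the per-stream buffer count, and the JSON layout of the configuration are all illustrative assumptions. Actually pinning each worker thread to its core is platform-specific (for example `pthread_setaffinity_np` on Linux, or DPDK's own per-lcore thread launch) and is left out.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CoreState:
    """All replay state owned by one core; no other thread touches it, so no locks."""
    core_id: int
    stream_table: List[str] = field(default_factory=list)  # IDs of streams this core replays
    pool_size: int = 0                                     # packet buffers reserved for this core
    tx_queue: List[int] = field(default_factory=list)      # this core's private cache queue


def partition_streams(
    stream_ids: List[str], cores: List[int], bufs_per_stream: int = 64
) -> Dict[int, CoreState]:
    """Round-robin the known streams over the cores so shares differ by at most one."""
    states = {c: CoreState(core_id=c) for c in cores}
    for i, sid in enumerate(stream_ids):
        states[cores[i % len(cores)]].stream_table.append(sid)
    for st in states.values():
        st.pool_size = bufs_per_stream * len(st.stream_table)
    return states


def to_config(states: Dict[int, CoreState]) -> str:
    """Serialise the per-core plan for the configuration file."""
    return json.dumps(
        {str(c): {"streams": s.stream_table, "pool_size": s.pool_size}
         for c, s in states.items()},
        indent=2,
    )


plan = partition_streams([f"s{i}" for i in range(10)], cores=[2, 3, 4, 5])
config_text = to_config(plan)
```

Because no two cores share a stream table, pool, or queue, the replay threads never need a lock on these structures; the cost is that the split is fixed at initialization, which matches the text's observation that the streams are known once the task is set up.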

Figure 3.

Packet-receiving process.

In summary, we optimize the traffic replay process by reducing software overhead. More CPU and memory resources are devoted to traffic replay itself instead of being wasted on other operations (such as interrupts, memory copying, context switching, and lock contention), which improves the throughput and the number of concurrent streams of each emulation node.

2.4. Cluster Control and Flexible Configuration Strategy

Manual operation on a single emulation node is relatively simple. However, in large-scale cloud-based network traffic emulation, each node needs to replay different traffic, and manually starting the replay tasks of all nodes is too cumbersome. It is therefore necessary to develop a cluster control method for emulation nodes in large-scale virtual network scenarios.

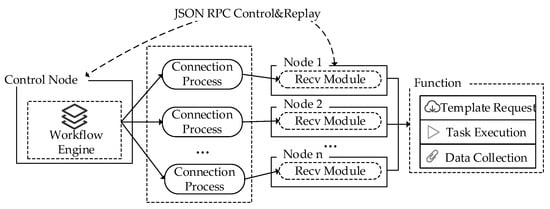

In DTRF, a distributed cluster control method based on message queues is designed to flexibly control the emulation nodes. The communication architecture is shown in Figure 4. The control node and the emulation nodes communicate through a remote procedure call (RPC) framework, in which the experimenter operates the control node to arrange emulation tasks and send control commands to the emulation nodes in batches. After an emulation node receives a command from the control node, it parses the command and executes operations such as requesting traffic templates, replaying traffic, and collecting data.

Figure 4.

Communication architecture.
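The batch dispatch of commands from the control node to the emulation nodes can be illustrated with an in-process stand-in. In this Python sketch, `queue.Queue` plays the role of the message queue and each thread plays an emulation node; the paper does not name its broker or RPC framework, so the JSON command format and the operation names (`fetch_template`, `replay`, `collect`, `stop`) are hypothetical.

```python
import json
import queue
import threading
from typing import Dict, List


def send_batch(inboxes: Dict[str, "queue.Queue[str]"], commands: Dict[str, List[dict]]) -> None:
    """Control node: serialise each node's commands and put them on that node's queue."""
    for node, cmds in commands.items():
        for cmd in cmds:
            inboxes[node].put(json.dumps(cmd))


def emulation_node(name: str, inbox: "queue.Queue[str]", results: "queue.Queue[str]") -> None:
    """Emulation node: parse each command and dispatch it until a stop command arrives."""
    handlers = {
        "fetch_template": lambda a: f"{name} fetched {a['template']}",
        "replay": lambda a: f"{name} replayed {a['template']} for {a['duration_s']} s",
        "collect": lambda a: f"{name} uploaded capture",
    }
    while True:
        cmd = json.loads(inbox.get())  # blocks until the control node sends something
        if cmd["op"] == "stop":
            return
        results.put(handlers[cmd["op"]](cmd.get("args", {})))


nodes = ["subnet1_1", "subnet3_6"]
inboxes: Dict[str, "queue.Queue[str]"] = {n: queue.Queue() for n in nodes}
results: "queue.Queue[str]" = queue.Queue()
workers = [threading.Thread(target=emulation_node, args=(n, inboxes[n], results))
           for n in nodes]
for w in workers:
    w.start()

# Each node gets its own template, but all commands go out in one batch.
send_batch(inboxes, {
    n: [
        {"op": "fetch_template", "args": {"template": f"tpl_{n}"}},
        {"op": "replay", "args": {"template": f"tpl_{n}", "duration_s": 30}},
        {"op": "collect"},
        {"op": "stop"},
    ]
    for n in nodes
})
for w in workers:
    w.join()
log = sorted(results.get() for _ in range(results.qsize()))
```

Since each node drains its own queue independently, the control node does not wait for one node before commanding the next, which is what allows commands to reach many nodes in parallel.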

In addition, a workflow engine is deployed on the control node, and the execution steps required by emulation tasks are defined as fixed actions, including scanning for connectable emulation nodes, querying the status of emulation nodes, starting emulation nodes, collecting traffic data, and terminating the emulation task. As Figure 5 shows, traffic templates are matched with actions according to the emulation requirements, and the replay rate, duration, and other parameters of each traffic template are set, yielding a variety of workflow models that allow emulation tasks to be configured flexibly.

Figure 5.

Task configuration.
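A workflow model built from fixed actions can be sketched as an ordered list of (action, parameters) steps executed by a small engine. The action names, the shared `ctx` dictionary, and the parameters `rate_mbps` and `duration_s` below are illustrative assumptions; a production workflow engine would also handle branching, retries, and failures, which this linear sketch omits.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical fixed actions: each receives its step parameters and a shared context.
def scan_nodes(params: dict, ctx: dict) -> str:
    ctx["nodes"] = [n for n in ctx["candidates"] if n not in ctx["unreachable"]]
    return f"scan: {len(ctx['nodes'])} nodes reachable"


def query_status(params: dict, ctx: dict) -> str:
    return "status: " + ", ".join(f"{n}={ctx['status'][n]}" for n in ctx["nodes"])


def start_replay(params: dict, ctx: dict) -> str:
    return (f"replay: {params['template']} at {params['rate_mbps']} Mbit/s "
            f"for {params['duration_s']} s on {len(ctx['nodes'])} nodes")


def collect_data(params: dict, ctx: dict) -> str:
    return "collect: capture files uploaded"


def terminate(params: dict, ctx: dict) -> str:
    ctx["nodes"] = []
    return "terminate: task finished"


ACTIONS: Dict[str, Callable[[dict, dict], str]] = {
    "scan_nodes": scan_nodes,
    "query_status": query_status,
    "start_replay": start_replay,
    "collect_data": collect_data,
    "terminate": terminate,
}

# A workflow model: an ordered list of (action name, parameters) steps.
Workflow = List[Tuple[str, dict]]


def run_workflow(workflow: Workflow, ctx: dict) -> List[str]:
    """Execute each step in order and return the status lines it produced."""
    return [ACTIONS[name](params, ctx) for name, params in workflow]


workflow: Workflow = [
    ("scan_nodes", {}),
    ("query_status", {}),
    ("start_replay", {"template": "http_mix", "rate_mbps": 100, "duration_s": 30}),
    ("collect_data", {}),
    ("terminate", {}),
]
ctx = {"candidates": ["n1", "n2", "n3"], "unreachable": {"n3"},
       "status": {"n1": "idle", "n2": "idle", "n3": "down"}}
run_log = run_workflow(workflow, ctx)
```

Keeping the actions fixed and storing only the step list makes a workflow model plain data, so it can be saved to and edited from a workflow model library without touching code.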

In summary, we realize flexible control and task configuration of massive numbers of emulation nodes in an automated manner. The emulation tasks of all emulation nodes can be configured centrally on the control node, and their execution controlled from there, rather than configuring the task on each emulation node separately. This makes it convenient to expand the emulation scenario from a reduced-scale network to a massive heterogeneous network.

3. Results

This section analyses and verifies the DTRF through the following experiments: (1) a comparison of replay performance in a single node and (2) a functional verification of a large-scale network emulation.

3.1. Experimental Environment

The experimental platform in this section is an OpenStack cloud platform (Mitaka release) built on multiple Dell R730 servers, with control nodes, network nodes, and compute nodes forming the basic experimental environment. All nodes run CentOS 7.2; the specific configuration is shown in Table 1.

Table 1.

OpenStack node information.

3.2. Comparison of Replay Performance

This section verifies the performance advantages of DTRF. We compare the optimized traffic replay strategy of DTRF with the classic traffic replay tool Tcpreplay [24] in terms of throughput, number of concurrent streams, and timestamp error. We create one emulation node on the cloud platform and use each method to replay traffic between the node's two network cards.

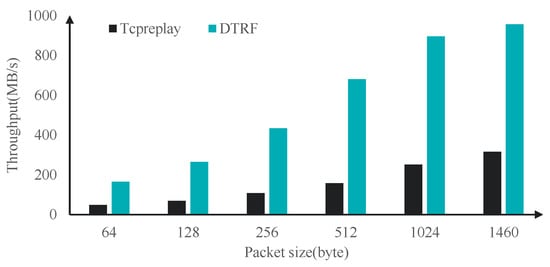

3.2.1. Comparison of Throughput

In this experiment, we replay UDP packets of 64–1460 bytes at maximum speed. The experimental results are shown in Figure 6. For packets in the 64–1046 byte range, the throughput of DTRF is much higher than that of Tcpreplay. Across the 64–1460 byte UDP replay tasks, the average packet rate of DTRF is 1609 K PPS (packets per second), while that of Tcpreplay is 434 K PPS, so on average DTRF achieves 3.71 times the throughput of Tcpreplay.
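As a quick check, the reported average packet rates give the stated ratio:

$$
\frac{\overline{\mathrm{PPS}}_{\mathrm{DTRF}}}{\overline{\mathrm{PPS}}_{\mathrm{Tcpreplay}}} = \frac{1609\ \mathrm{K}}{434\ \mathrm{K}} \approx 3.71
$$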

Figure 6.

Throughput comparison.

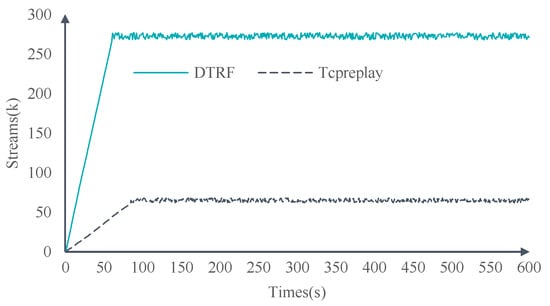

3.2.2. Comparison of Concurrent Streams

In this experiment, we create new streams at maximum speed and keep each stream alive for as long as possible. The experimental results are shown in Figure 7. Over time, the number of concurrent streams in DTRF grows at 4.52 K per second, whereas that of Tcpreplay grows at 0.76 K per second. After stabilization, DTRF maintains 272 K concurrent streams on average, versus 65 K for Tcpreplay; that is, DTRF sustains 4.18 times as many concurrent streams as Tcpreplay on average.
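Similarly, the reported averages give the concurrent-stream ratio, and the growth rates differ by an even larger factor:

$$
\frac{272\ \mathrm{K}}{65\ \mathrm{K}} \approx 4.18, \qquad \frac{4.52\ \mathrm{K/s}}{0.76\ \mathrm{K/s}} \approx 5.95
$$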

Figure 7.

Concurrent stream comparison.

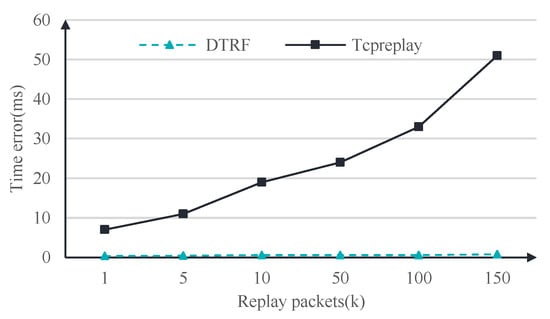

3.2.3. Comparison of Timestamp Errors

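An effective way to evaluate the timing fidelity of replayed traffic is to compare the timestamp intervals of packets in the replayed traffic file with those in the original traffic file using Equation (1). Let $\Delta t_i = t_{i+1} - t_i$ denote the timestamp interval between the $i$-th and $(i+1)$-th packets. We calculate $\Delta t_i$ for the replayed traffic file (denoted as $\Delta t_i^{\mathrm{rep}}$) and for the original traffic file (denoted as $\Delta t_i^{\mathrm{orig}}$); the timestamp error is then

$$
e_i = \Delta t_i^{\mathrm{rep}} - \Delta t_i^{\mathrm{orig}} \tag{1}
$$

The mean of the absolute timestamp errors, $\frac{1}{N-1}\sum_{i=1}^{N-1} \lvert e_i \rvert$ for a file of $N$ packets, is used to evaluate timing accuracy.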
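The timestamp-error metric can be computed directly from the timestamps of two capture files. This Python sketch assumes that the replayed and original captures contain the same packets in the same order, so that the $i$-th intervals correspond; with packet loss or reordering, packets would first have to be matched. The function names and the sample timestamps are illustrative.

```python
from typing import List, Sequence


def intervals(ts: Sequence[float]) -> List[float]:
    """Timestamp intervals between adjacent packets: t[i+1] - t[i]."""
    return [later - earlier for earlier, later in zip(ts, ts[1:])]


def mean_abs_timestamp_error(replayed: Sequence[float], original: Sequence[float]) -> float:
    """Mean of |e_i|, where e_i is the replayed interval minus the original interval."""
    if len(replayed) != len(original) or len(original) < 2:
        raise ValueError("need two aligned captures with at least two packets each")
    errors = [r - o for r, o in zip(intervals(replayed), intervals(original))]
    return sum(abs(e) for e in errors) / len(errors)


# Illustrative timestamps (seconds); absolute offsets cancel, only intervals matter.
original = [0.0, 1.0, 2.0, 4.0]
replayed = [10.0, 11.5, 12.0, 14.0]
err = mean_abs_timestamp_error(replayed, original)  # (0.5 + 0.5 + 0.0) / 3
```

Comparing intervals rather than absolute timestamps makes the metric independent of when the replay started, so the two captures do not need a shared clock origin.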

In this experiment, we replay six groups of traffic containing 1 K, 5 K, 10 K, 50 K, 100 K, and 150 K packets, respectively. The experimental results are shown in Figure 8. Across the six groups, as the number of replayed packets increases, the average error of DTRF remains stable at less than 1 ms, whereas the error of Tcpreplay grows steadily; at 150 K packets, the average error of Tcpreplay exceeds 50 ms. Compared with Tcpreplay, DTRF reduces the timestamp error of replayed packets by 97.8% on average.

Figure 8.

Timestamp error comparison.

3.2.4. Results Explanation

The throughput and the rate of new stream creation depend on the packet processing speed, which is inversely related to the overhead: the smaller the overhead, the faster packets are processed. Additionally, the per-stream overhead determines how many concurrent streams can be maintained, with a smaller cost allowing more concurrent streams. The packet processing speed also affects timing accuracy, because slower processing may leave more packets blocked in the sleep queue and not transmitted in time.

With the DPDK driver, our replay program takes full ownership of the network card without kernel involvement. It can therefore avoid the additional CPU and memory overhead described in Section 2.3, including interrupts, memory copying, context switching, and lock contention. Tcpreplay emphasizes universality and relies on the kernel at runtime, so it cannot avoid this overhead. Although Tcpreplay has an excellent software architecture, its performance is theoretically lower than that of our method, and the experimental results confirm this. Because DTRF incurs less overhead, it achieves higher performance.

3.3. DTRF Function Verification

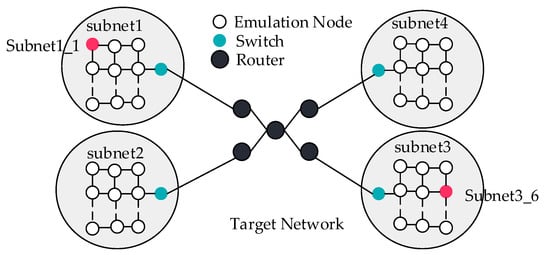

In this experiment, we use DTRF to create the network topology shown in Figure 9. Subnet1–Subnet4 are subnets containing 20 emulation nodes. Emulation traffic is replayed interactively between Subnet1 and Subnet3 and between Subnet2 and Subnet4. We select a workflow model for each emulation node on the control node and then transmit the control commands to the emulation nodes, which start their emulation tasks upon receiving them. Different types of traffic are replayed on each node, all with the same duration of 30 s. The experimental results are shown in Figure 10.

Figure 9.

Target network.

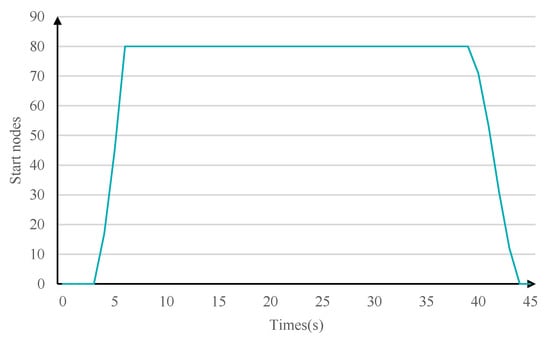

Figure 10.

Online nodes.

In the first 3 s, the control node scans for and connects to the emulation nodes. In the next 2 s, all emulation nodes start up one after another and receive the command from the control node to replay traffic for a cycle of 30 s. Finally, all nodes complete the emulation task, stop replaying traffic, and report the task execution results to the emulation control node. All tasks were executed successfully, showing that DTRF can carry out large-scale network emulation.

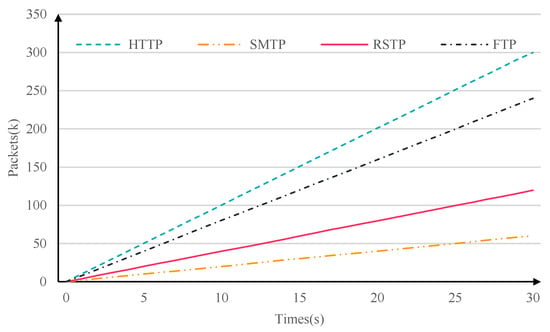

In the network topology of Figure 9, emulation traffic is replayed interactively between node subnet1_1 and node subnet3_6 at a fixed speed. The configured traffic ratio between the two nodes is shown in Table 2. The traffic replayed by the two nodes is filtered and captured at the core router and stored as a file; the parsed file is shown in Figure 11, with the capture time of the first packet as the starting point of the timeline. The traffic types and growth rates are consistent with our configuration.

Table 2.

Traffic configuration ratio.

Figure 11.

Replay results.

In summary, the experiment shows that with DTRF, the emulation tasks of all emulation nodes can be configured centrally on the control node and their execution controlled from there, rather than configuring each emulation node separately, which makes it convenient to expand the emulation scenario from a reduced-scale network to a massive heterogeneous network.

4. Discussion

Traditional methods can emulate low-speed networks with high fidelity. However, traditional methods such as ITRM focus on the spatial topology mapping of a reduced-scale network and rarely optimize the traffic-generation performance of a single node. These methods must replay traffic through the operating system kernel, which incurs expensive overhead (such as interrupts, memory copying, context switching, and lock contention), so they are not suitable for high-speed network emulation. Although Tcpreplay can replay traffic only on a single node and cannot emulate a multinode network, it has an excellent software architecture, so we compared its single-node performance with that of DTRF. Tcpreplay still replays traffic through the kernel, whereas DTRF reduces this overhead by bypassing the kernel, so DTRF achieves higher performance. In addition, we realize flexible, automated control and task configuration of massive numbers of emulation nodes, which makes it easy to expand the emulation scale. However, as the emulation scale grows, it becomes difficult to ensure the similarity between the physical and virtual topologies, which is a limitation of our approach. In summary, in the current environment, DTRF meets our requirements for large-scale, high-speed, highly concurrent network emulation.

5. Conclusions

Large-scale network emulation based on traffic replay on a cloud platform can provide a variety of traffic environments for the technical verification and performance evaluation of emerging networks. In this paper, we proposed DTRF, a distributed traffic replay framework for network emulation. The experiments showed that DTRF supports flexible configuration and automatic execution of emulation tasks in large-scale network traffic emulation scenarios, which simplifies the experimentation process, and that it offers advantages in traffic replay performance and timing fidelity. In future research, we will further study the deployment and scheduling of emulation nodes on the cloud platform and adopt different deployment strategies for different emulation tasks to further improve task concurrency and reduce the response delay of emulation tasks.

Author Contributions

Conceptualization, X.W.; methodology, X.H.; Software, X.H.; Validation, X.H. and Q.X.; Formal analysis, Q.X.; Investigation, Q.X.; Data curation, X.H.; Writing—original draft, X.H.; Writing—review & editing, X.W. and Y.L.; Supervision, X.W. and Y.L.; Project administration, X.W. and Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant Nos. 61972182 and 62172191).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Fang, B.; Jia, Y. Cyber Ranges: State-of-the-art and research challenges. J. Cyber Secur. 2016, 1, 1–9.

2. Li, F.; Wang, Q. Research on Cyber Ranges and Its Key Technologies. Comput. Eng. Appl. 2022, 58, 12–22.

3. Fu, Y.; Zhao, H.; Wang, X.F.; Liu, H.R.; An, L. State-of-the-Art Survey of Network Behavior Emulation. J. Softw. 2022, 33, 274–296.

4. Zhang, L. Opnet based emulation research on heterogeneous network for smart-home. Master’s Thesis, Beijing University of Posts and Telecommunications, Beijing, China, 2019.

5. Ru, X.; Liu, Y. A survey of new network simulator NS3. Microcomput. Its Appl. 2017, 36, 14–16.

6. Felter, W.; Ferreira, A. An updated performance comparison of virtual machines and Linux containers. In Proceedings of the 2015 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), Philadelphia, PA, USA, 29–31 March 2015; pp. 171–172.

7. Li, C.; Zhang, X. Performance evaluation on open source cloud platform for high performance computing. J. Comput. Appl. 2013, 33, 3580–3585.

8. Adeleke, O.; Bastin, N. Network Traffic Generation: A Survey and Methodology. ACM Comput. Surv. 2022, 55, 1–23.

9. Catillo, M.; Pecchia, A.; Rak, M. Demystifying the role of public intrusion datasets: A replication study of DoS network traffic data. Comput. Secur. 2021, 108, 102341.

10. Toll, M.; Behnke, I. IoTreeplay: Synchronous Distributed Traffic Replay in IoT Environments. In Proceedings of the 2022 IEEE International Conference on Cloud Engineering (IC2E), San Francisco, CA, USA, 26–30 September 2022; pp. 8–14.

11. Wen, H.; Liu, Y.; Wang, X. Malicious user behavior emulation technology for space-ground integrated network. J. Chin. Mini-Micro Comput. Syst. 2019, 40, 1658–1665.

12. Li, L.; Hao, Z. Traffic replay in virtual network based on IP-mapping. In Proceedings of the International Conference on Algorithms and Architectures for Parallel Processing, Zhangjiajie, China, 18–20 November 2015; pp. 697–713.

13. Huang, N.; Liu, Y.; Wang, X. User Behavior Emulation Technology Based on Interactive Traffic Replay. Comput. Eng. 2021, 47, 103–110.

14. Liu, H.; An, L.; Ren, J.; Wang, B. An interactive traffic replay method in a scaled-down environment. IEEE Access 2019, 7, 149373–149386.

15. Du, X.L.; Xu, G.; Li, T. Traffic Control for Data Center Network: State of Art and Future Research. J. Comput. 2021, 44, 1287–1309.

16. Zhang, Z.; Lu, G.; Zhang, C.; Gao, Y.; Wu, Y.; Zhong, G. Cyfrs: A fast recoverable system for cyber range based on real network environment. In Proceedings of the 2020 Information Communication Technologies Conference (ICTC), Nanjing, China, 29–31 May 2020; pp. 153–157.

17. Andreolini, M.; Colacino, V.G.; Colajanni, M.; Marchetti, M. A framework for the evaluation of trainee performance in cyber range exercises. Mob. Netw. Appl. 2020, 25, 236–247.

18. Xu, Y. Research and Implementation of Software Automation Test Framework Based on Workflow Model. Master’s Thesis, Nanjing University of Posts and Telecommunications, Nanjing, China, 2015.

19. Zheng, H.; Ye, C. A Game Cheating Detection System Based on Petri Nets. J. Syst. Simul. 2020, 32, 455–463.

20. Liu, C.; Wang, H. Workflow and Service Oriented E-mail System Model. Comput. Eng. 2010, 36, 68–71.

21. Shi, J.; Pesavento, D. NDN-DPDK: NDN forwarding at 100 Gbps on commodity hardware. In Proceedings of the 7th ACM Conference on Information-Centric Networking, Virtual Event, 29 September–1 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 30–40.

22. Zhang, W.S.; Wu, T.; Jin, S.Y.; Wu, Q.Y. Design and Implementation of a Virtual Internet Server. J. Softw. 2000, 11, 122–125.

23. Yang, Z.; Harris, J.R. SPDK: A development kit to build high performance storage applications. In Proceedings of the 2017 IEEE International Conference on Cloud Computing Technology and Science (CloudCom), Hong Kong, China, 11–14 December 2017; pp. 154–161.

24. Tcpreplay. Available online: https://github.com/appneta/tcpreplay (accessed on 23 October 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).