A Rumor Detection Method Based on Adaptive Fusion of Statistical Features and Textual Features

Abstract

1. Introduction

2. Related Work

2.1. Design of Handcrafted Features for Rumor Detection

2.2. Extraction of Textual Features for Rumor Detection

2.3. Rumor Detection Based on the Integration of Auxiliary Features

2.4. Rumor Detection Based on Multimodality

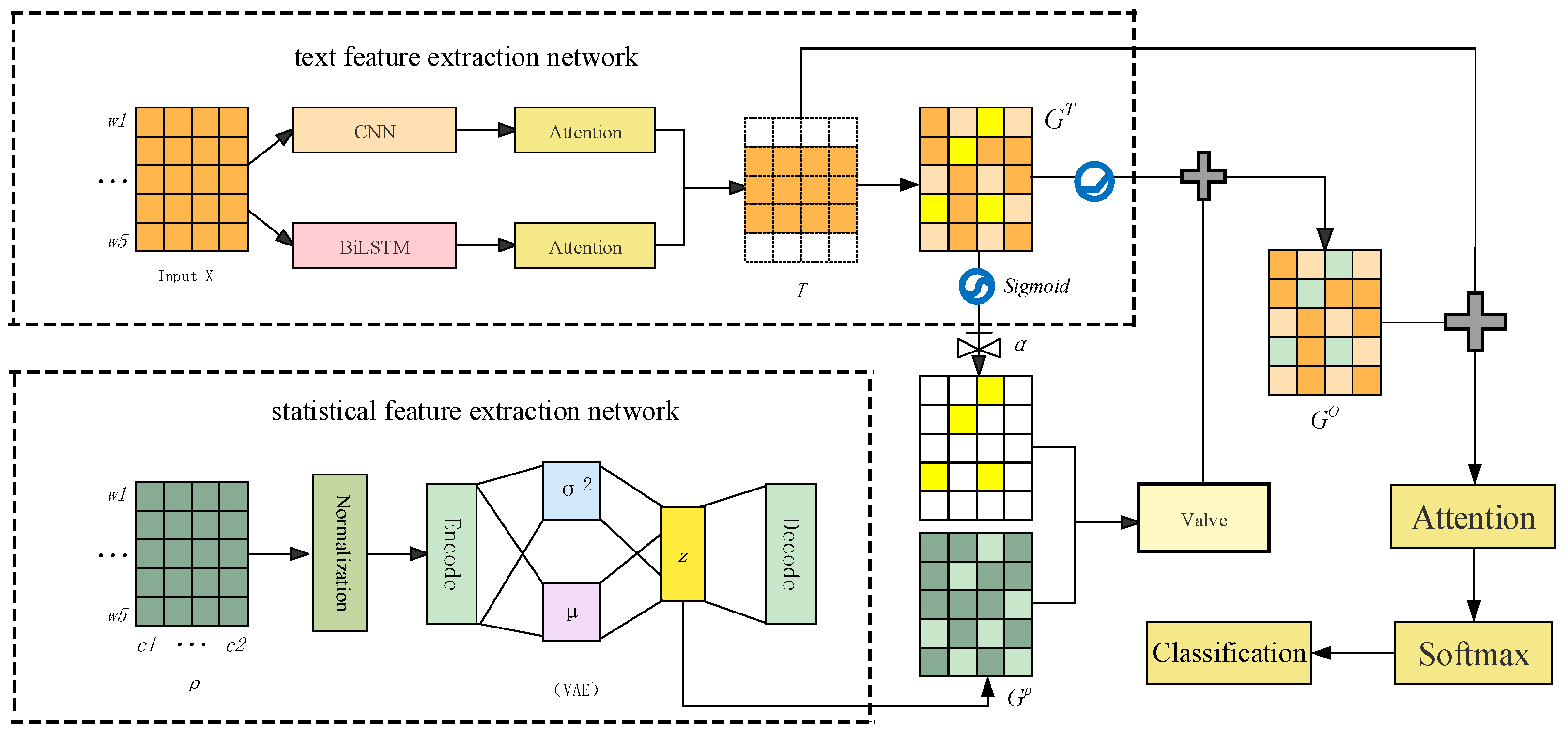

3. Materials and Methods

3.1. Word Frequency Statistical Vector Representation

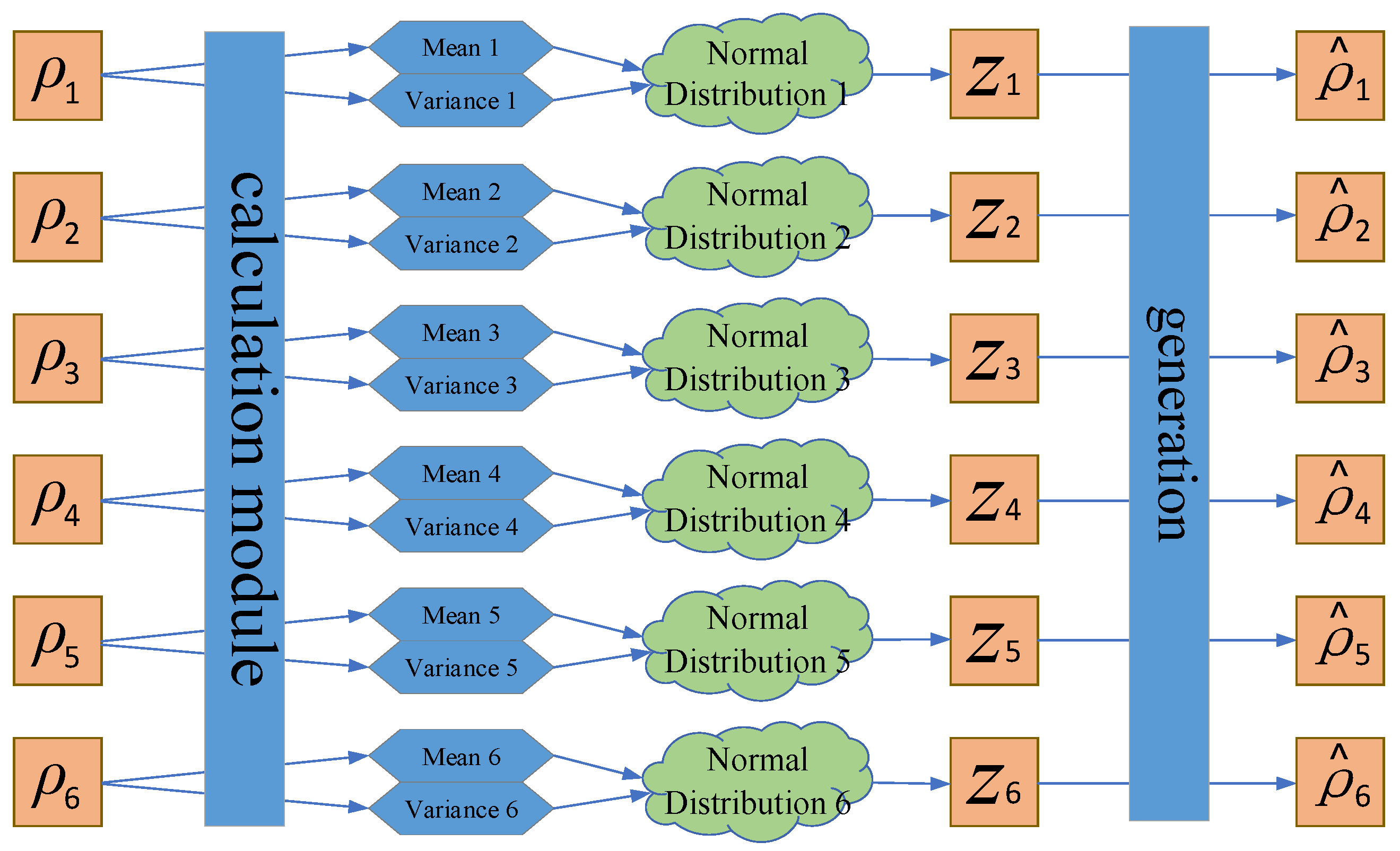

3.2. Statistical Feature Extraction Network

3.3. Textual Feature Extraction Network

3.3.1. BERT

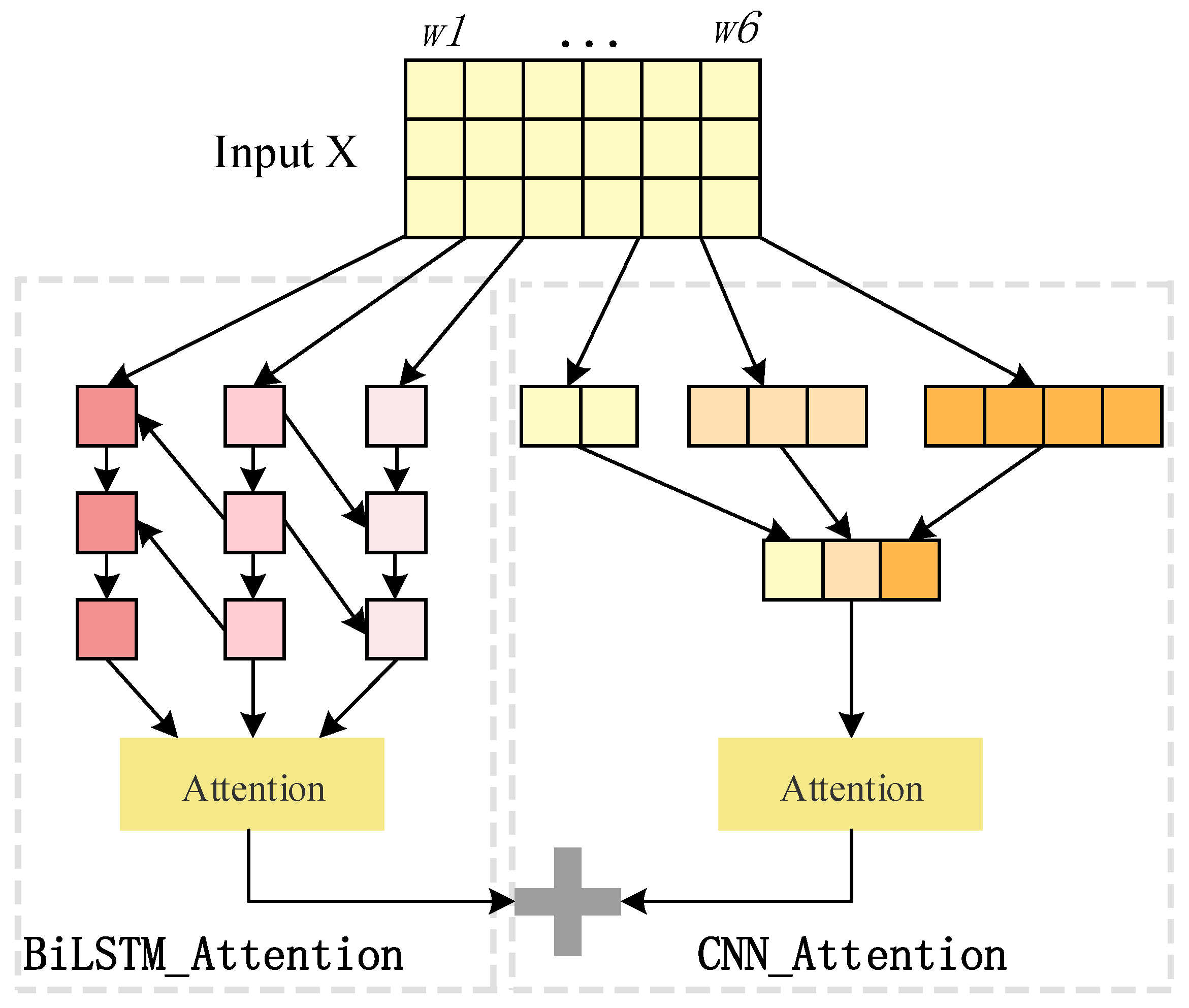

3.3.2. Bi_LSTM+Attention Layer

3.3.3. CNN+Attention Layer

3.4. Valve Component

3.5. Classifier

4. Results

4.1. The Datasets

4.2. Training Parameter Setting

4.3. Comparative Experiment and Result Analysis

4.4. Variational Autoencoders (VAE) Compared with Ordinary Autoencoders (AE)

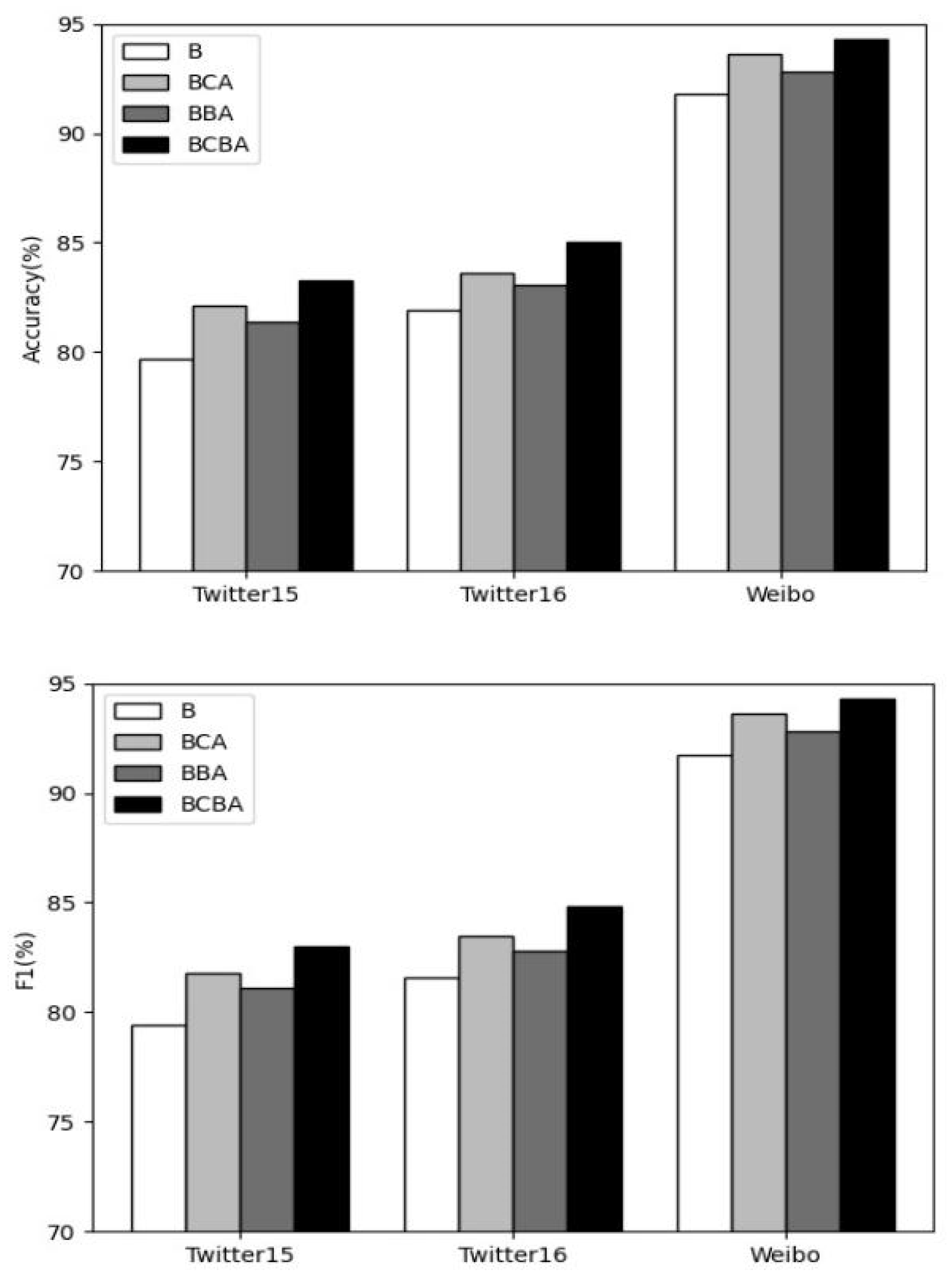

5. Ablation Study

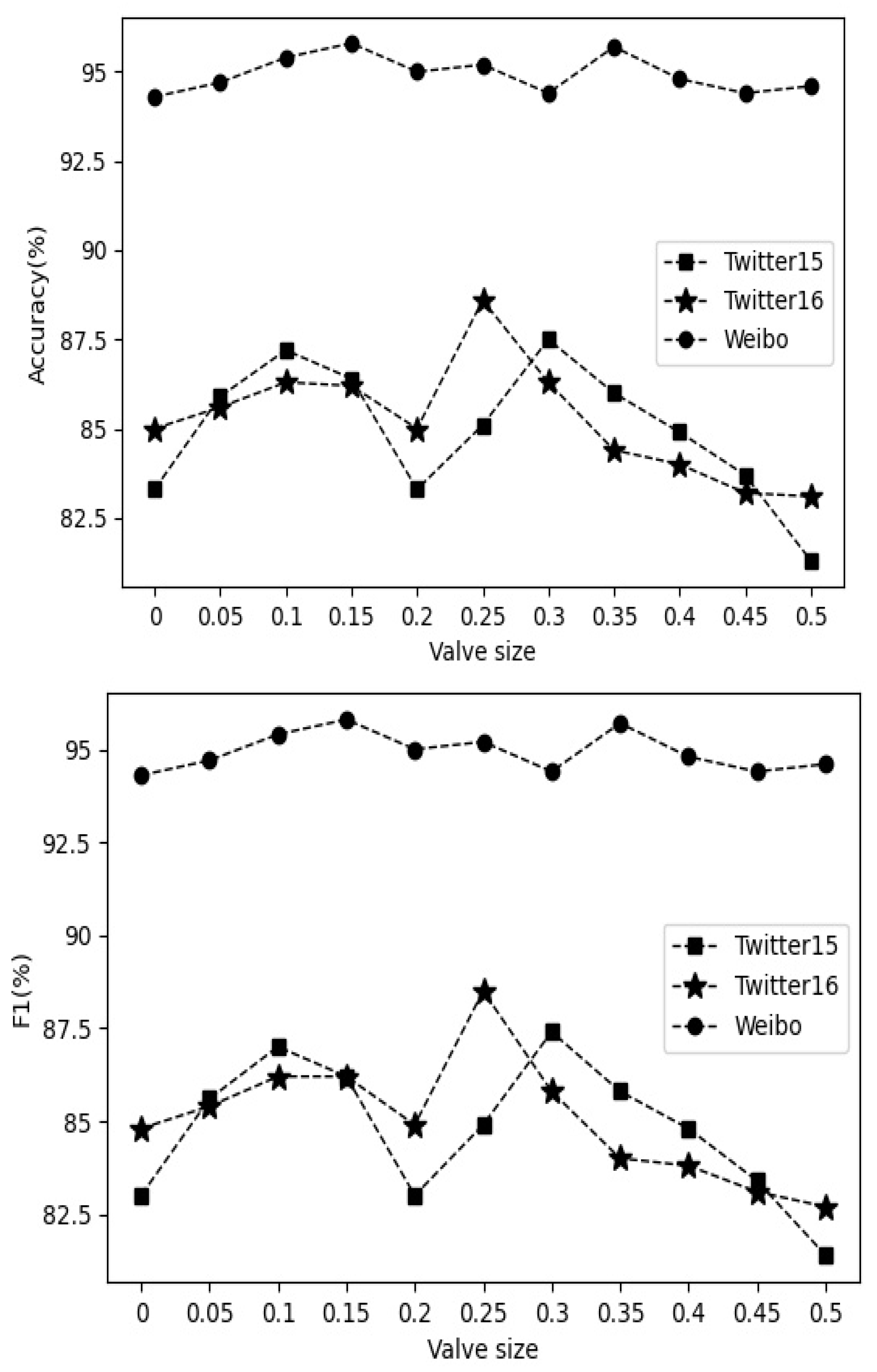

5.1. Valve Components

5.2. Textual Feature Module

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Class | Weibo | Twitter15 | Twitter16 |
|---|---|---|---|
| False rumors | 2313 | 374 | 205 |
| Non-rumors | 2351 | 370 | 205 |
| Unverified rumors | 0 | 374 | 203 |
| True rumors | 0 | 372 | 205 |
| Total | 4664 | 1490 | 818 |

| Parameter | Value |
|---|---|
| Batch size | 32 |
| Training epochs | 20 |
| Hidden layer size | 128 |
| Optimizer | Adam |
| Loss function | VAE_LOSS |
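
The loss listed above as VAE_LOSS is not spelled out in this section. As a minimal sketch, assuming it follows the standard variational autoencoder objective (a reconstruction term plus the KL divergence between the encoder's Gaussian posterior and a standard normal prior), it could be computed as below. The function name `vae_loss`, the squared-error reconstruction term, and the unweighted sum of the two terms are assumptions for illustration, not details confirmed by the source.

```python
import numpy as np


def vae_loss(x, x_recon, mu, log_var):
    """Standard VAE objective, averaged over the batch (illustrative only).

    x, x_recon : arrays of shape (batch, features), input and reconstruction
    mu, log_var: arrays of shape (batch, latent), parameters of the Gaussian
                 posterior q(z|x) produced by the encoder
    """
    # Reconstruction term: squared error per sample (an assumed choice).
    recon = np.sum((x - x_recon) ** 2, axis=1)
    # Closed-form KL(q(z|x) || N(0, I)) for a diagonal Gaussian posterior.
    kl = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)
    return float(np.mean(recon + kl))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.random((32, 10))       # batch size 32 as in the table above
    mu = np.zeros((32, 128))       # latent size set to the listed hidden size
    log_var = np.zeros((32, 128))
    print(vae_loss(x, x, mu, log_var))
```

With a perfect reconstruction and a posterior equal to the prior (`mu = 0`, `log_var = 0`), both terms vanish and the loss is 0. An ordinary autoencoder would keep only the reconstruction term.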

| Parameter | Value |
|---|---|
| Batch size | 32 |
| Training epochs | 10 |
| Optimizer | Adam |
| Loss function | Cross-entropy loss |
| Learning rate | 0.05 |
| Dropout | 0.2 |

| Model | Twitter15 Acc | Twitter15 F1 | Twitter16 Acc | Twitter16 F1 | Weibo Acc | Weibo F1 |
|---|---|---|---|---|---|---|
| DTR | 0.467 | 0.443 | 0.566 | 0.515 | 0.732 | 0.732 |
| DTC | 0.523 | 0.502 | 0.538 | 0.497 | 0.831 | 0.831 |
| RFC | 0.599 | 0.55 | 0.582 | 0.533 | 0.849 | 0.847 |
| PTK | 0.75 | 0.75 | 0.732 | 0.743 | / | / |
| RvNN | 0.749 | 0.742 | 0.737 | 0.704 | / | / |
| HD-TRANS | 0.789 | 0.787 | 0.768 | 0.765 | / | / |
| GRU | 0.646 | 0.642 | 0.633 | 0.635 | 0.91 | 0.91 |
| PPC | 0.842 | 0.824 | 0.863 | 0.850 | 0.921 | 0.921 |
| BERT_fine-tuning | 0.847 | 0.847 | 0.856 | 0.855 | 0.951 | 0.949 |
| BDCoNN | / | / | / | / | 0.957 | 0.957 |
| BCBA_GN | 0.875 | 0.874 | 0.886 | 0.885 | 0.958 | 0.958 |
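
The Acc and F1 columns above can be computed from gold and predicted labels. The sketch below assumes that F1 is macro-averaged over classes, which this section does not state. `accuracy` and `macro_f1` are illustrative helper names, and the label strings are made up for the example.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the gold label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (assumed averaging scheme)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    gold = ["rumor", "rumor", "non-rumor", "non-rumor"]
    pred = ["rumor", "non-rumor", "non-rumor", "non-rumor"]
    print(accuracy(gold, pred), macro_f1(gold, pred))
```

For multi-class data such as the four-way Twitter15 and Twitter16 labels, the same functions apply unchanged because the per-class loop runs over whatever labels are present.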

| Dataset | VAE Acc | VAE F1 | AE Acc | AE F1 |
|---|---|---|---|---|
| Twitter15 | 0.851 | 0.849 | 0.849 | 0.846 |
| Twitter16 | 0.886 | 0.885 | 0.882 | 0.879 |
| Weibo | 0.952 | 0.952 | 0.943 | 0.943 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Z.; Dan, Z.; Dong, F.; Gao, Z.; Zhang, Y. A Rumor Detection Method Based on Adaptive Fusion of Statistical Features and Textual Features. Information 2022, 13, 388. https://doi.org/10.3390/info13080388
