Integrating Human Factors in the Visualisation of Usable Transparency for Dynamic Risk Assessment

Abstract

1. Introduction

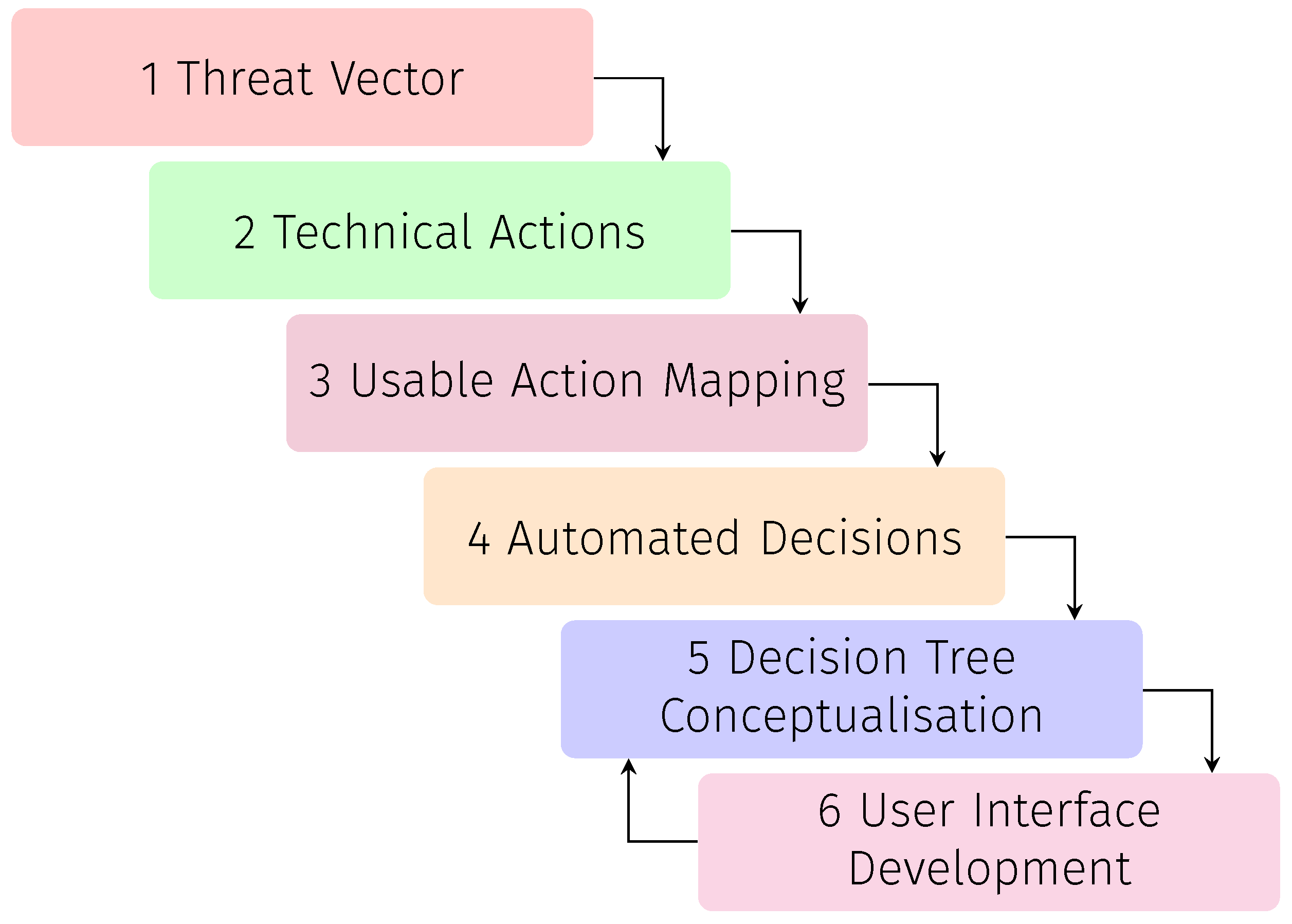

- Define and demonstrate the methodological process for mapping technical actions to usable and automatable mitigation techniques;

- Test our model in a smart home environment and present the results obtained through an interactive, user-centric approach;

- Design a decision tree conceptualisation by exploring the perception of the end users regarding security- and privacy-related risks and the associated Information Retrieval (IR) methods in the context of usable security;

- Implement the decision tree model resulting from our research and translate it into a final set of decision-making user interfaces (UIs) for monitoring and control.

2. Related Work

2.1. Security Usability Guidelines

- General principles: rely on the path of least resistance and appropriate boundaries;

- Actor-ability state maintenance: achieved by explicit authorisation, visibility, revocability and expected ability;

- Communication with the user: accomplished with a trusted path, identifiability, expressiveness and clarity.

2.2. Security and Privacy Risk Perception

2.3. Risk Assessment for Threat Mitigation as a Usability Improvement

2.4. User-Centric Approaches

- Collage building: enables the end user to be in charge of the engagement method and the extent of their contribution and experience sharing;

- Questionable concepts: facilitates the expression of opinions as provocative concepts are proposed by the designers;

- Digital portraits: enables trust to be established between the participating parties.

1. Context of use: defined by the users, tasks and environment;

2. System awareness: ensures the system is understandable for the end user by mapping the conceptual model to the user’s mental model;

3. System design: selects UI patterns to support the envisioned functionality of the final system;

4. Design evaluation: an iterative cycle through feedback collection and analysis.

3. Methodology for User Action Mapping

4. Threat Vector Landscape

4.1. Physical Attacks

4.1.1. Physical Damage (P1)

- Removing the battery: Z-Wave and Zigbee devices;

- Shutting down the device: Wi-Fi devices.

4.1.2. Malicious Device Injection (P2)

- Following the typical procedures for adding a new device to the IoT installation;

- Using a Z-Wave sniffer to demonstrate the sniffing of events via a new device.

4.1.3. Mechanical Exhaustion (P3)

4.2. Network Attacks

4.2.1. Traditional Attacks (N1)

- nmap: SYN scan, XMAS scan and full scan;

- tcpreplay, tcprewrite, Scapy: denial-of-service (DoS) and distributed denial-of-service (DDoS) attacks.
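The nmap scan types listed above differ mainly in the TCP flags set on each probe. As a rough illustration only (not the tooling used in this study), the Python sketch below builds a bare 20-byte TCP header using the flag bits defined in RFC 793: a SYN scan probe sets SYN alone, while an XMAS scan sets FIN, PSH and URG together. The helper `build_tcp_header` is hypothetical, and the checksum is deliberately left at zero because computing it requires the IP pseudo-header, which this sketch does not build.

```python
import struct

# TCP flag bits as defined in RFC 793 (byte 13 of the TCP header).
FIN, SYN, RST, PSH, ACK, URG = 0x01, 0x02, 0x04, 0x08, 0x10, 0x20

SYN_SCAN_FLAGS = SYN               # half-open (SYN) scan probe
XMAS_SCAN_FLAGS = FIN | PSH | URG  # XMAS scan probe


def build_tcp_header(src_port: int, dst_port: int, seq: int, flags: int,
                     window: int = 1024) -> bytes:
    """Return a minimal 20-byte TCP header without options.

    Hypothetical helper for illustration: the checksum field stays 0 because
    it depends on the IP pseudo-header, which is not constructed here.
    """
    data_offset = 5 << 4  # header length in 32-bit words, stored in the upper nibble
    return struct.pack(
        "!HHIIBBHHH",
        src_port, dst_port,
        seq, 0,            # sequence number, acknowledgement number
        data_offset, flags,
        window, 0, 0,      # window size, checksum (unset), urgent pointer
    )


if __name__ == "__main__":
    for name, flags in (("SYN", SYN_SCAN_FLAGS), ("XMAS", XMAS_SCAN_FLAGS)):
        header = build_tcp_header(40000, 80, 1, flags)
        print(name, len(header), hex(header[13]))
```

Sending such probes requires raw sockets and is what tools such as nmap and Scapy automate; the sketch only shows how the probe types differ on the wire.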

4.2.2. Device Impersonation (N2)

4.2.3. Side Channel Attacks (N3)

- Bluetooth: packets may be sniffed by leveraging the device’s capability (e.g., Android has a built-in sniffer);

- Z-Wave: a special device is needed to register for the network and to sniff the Z-Wave packets;

- Wi-Fi and Ethernet: traditional network sniffing tools such as Wireshark or tcpdump may be used.

4.2.4. Unusual Activities and Battery-Depleting Attacks (N4)

4.3. Software Attacks

4.3.1. Traditional Attacks (S1)

- Packet capture (PCAP) files containing malware traces: malware traces are taken from public sources (https://zeltser.com/malware-sample-sources/, accessed on 12 April 2022);

- tcpreplay, tcprewrite: these two tools are used to edit and replay the malware PCAP files against the gateway.
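Before replaying a malware capture against the gateway, it can help to sanity-check the file. The sketch below is a hypothetical helper (not part of tcpreplay, tcprewrite or GHOST) that walks the classic libpcap file format with the Python standard library only: a 24-byte global header whose magic number also reveals the byte order, followed by 16-byte per-record headers (timestamp seconds, microseconds, captured length, original length). It assumes microsecond-resolution files and does not handle the newer pcapng format.

```python
import io
import struct
from typing import BinaryIO, Iterable, Iterator, Tuple

PCAP_MAGIC_USEC = 0xA1B2C3D4          # classic pcap, microsecond timestamps
PCAP_MAGIC_USEC_SWAPPED = 0xD4C3B2A1  # same magic written in the other byte order


def iter_pcap_records(stream: BinaryIO) -> Iterator[Tuple[float, bytes]]:
    """Yield (timestamp, packet_bytes) for each record in a classic pcap stream."""
    global_header = stream.read(24)
    if len(global_header) < 24:
        raise ValueError("truncated pcap global header")
    # Decode the magic as little-endian; its value reveals the file's byte order.
    (magic,) = struct.unpack("<I", global_header[:4])
    if magic == PCAP_MAGIC_USEC:
        endian = "<"
    elif magic == PCAP_MAGIC_USEC_SWAPPED:
        endian = ">"
    else:
        raise ValueError("not a classic microsecond-resolution pcap file")

    while True:
        record_header = stream.read(16)
        if not record_header:
            return  # clean end of file
        if len(record_header) < 16:
            raise ValueError("truncated record header")
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack(endian + "IIII", record_header)
        data = stream.read(incl_len)
        if len(data) < incl_len:
            raise ValueError("truncated packet data")
        yield ts_sec + ts_usec / 1_000_000, data


def summarise_pcap(stream: BinaryIO) -> Tuple[int, int]:
    """Return (packet_count, total_captured_bytes) for a pcap stream."""
    count = total = 0
    for _ts, data in iter_pcap_records(stream):
        count += 1
        total += len(data)
    return count, total


def build_pcap_bytes(packets: Iterable[Tuple[float, bytes]], linktype: int = 1) -> bytes:
    """Serialise (timestamp, data) pairs as a little-endian classic pcap file (for testing)."""
    # Global header: magic, version 2.4, timezone offset, sigfigs, snaplen, link type (1 = Ethernet).
    chunks = [struct.pack("<IHHiIII", PCAP_MAGIC_USEC, 2, 4, 0, 0, 65535, linktype)]
    for ts, data in packets:
        sec = int(ts)
        usec = int(round((ts - sec) * 1_000_000))
        chunks.append(struct.pack("<IIII", sec, usec, len(data), len(data)))
        chunks.append(data)
    return b"".join(chunks)


if __name__ == "__main__":
    sample = build_pcap_bytes([(1.0, b"abc"), (2.5, b"de")])
    print(summarise_pcap(io.BytesIO(sample)))
```

In practice the stream would come from `open(path, "rb")` on a downloaded capture; `build_pcap_bytes` exists only to make the sketch testable without a real file.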

4.3.2. Compromised Software Attacks (S2)

- Network analysis: the periodic generation of PCAP files;

- Anomaly reporting: the periodic alerts issued;

- Configuration manager: the status of each OS process and the consumption of CPU and memory for each gateway OS process.
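The configuration-manager checks above can be pictured as a comparison between a baseline snapshot of the gateway OS processes and the current one. The sketch below is a hypothetical illustration, not GHOST's implementation: the `ProcessSample` fields, the process names in the usage example and the tolerance factors are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ProcessSample:
    """Hypothetical per-process reading: status plus CPU (%) and memory (MiB)."""
    status: str
    cpu_percent: float
    memory_mib: float


def compare_snapshots(baseline: Dict[str, ProcessSample],
                      current: Dict[str, ProcessSample],
                      cpu_factor: float = 2.0,
                      mem_factor: float = 1.5) -> List[str]:
    """List findings for processes that deviate from the baseline snapshot.

    cpu_factor and mem_factor are assumed tolerances: usage is flagged when it
    exceeds the baseline value multiplied by the factor.
    """
    findings: List[str] = []
    for name, ref in baseline.items():
        now = current.get(name)
        if now is None:
            findings.append(f"{name}: missing")
            continue
        if now.status != ref.status:
            findings.append(f"{name}: status {ref.status} -> {now.status}")
        if now.cpu_percent > ref.cpu_percent * cpu_factor:
            findings.append(f"{name}: CPU {now.cpu_percent:.1f}%")
        if now.memory_mib > ref.memory_mib * mem_factor:
            findings.append(f"{name}: memory {now.memory_mib:.1f} MiB")
    # Processes that were not present in the baseline at all (sorted for stable output).
    for name in sorted(current.keys() - baseline.keys()):
        findings.append(f"{name}: unexpected new process")
    return findings
```

A compromised module (S2) would typically surface here as a status change, a resource spike, or an unexpected extra process.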

4.3.3. Command Injection (S3)

4.3.4. Mechanical Exhaustion (S4)

4.3.5. Sleep Deprivation (S5)

5. Technical Actions

5.1. Physical Attack Actions

5.2. Network Attack Actions

5.3. Software Attack Actions

6. Decision Automation in Risk Assessment

- Real-time risk assessment;

- Decision automation;

- Security usability.

- Behaviour analyser (BA): the main purpose of this analyser is to detect any deviation from the device’s normal behaviour;

- Payload check (PC): a set of defined rules aiming to detect the presence of the user’s sensitive data within the traffic flow;

- Block rules (BR): responsible for verifying whether a destination is malicious, based on personalised settings and commonly shared threat intelligence;

- Alert processor (AP): alert extraction analytics from external input for anomaly detection.
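To illustrate the payload check (PC) idea, the sketch below applies a small set of regular-expression rules, plus a list of user-defined sensitive terms, to a decoded payload and reports which kinds of sensitive data appear. The rule labels, patterns and function name are assumptions chosen for the example; the actual PC rule set is not specified here.

```python
import re
from typing import Dict, List

# Hypothetical rule set: label -> compiled pattern.
DEFAULT_RULES: Dict[str, re.Pattern] = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def payload_check(payload: bytes, user_terms: List[str],
                  rules: Dict[str, re.Pattern] = DEFAULT_RULES) -> List[str]:
    """Return the labels of rules and user-defined terms found in the payload."""
    # Undecodable bytes are dropped so binary payloads do not raise.
    text = payload.decode("utf-8", errors="ignore")
    hits = [label for label, pattern in rules.items() if pattern.search(text)]
    lowered = text.lower()
    # Case-insensitive match on terms the user marked as sensitive (e.g. a name).
    hits.extend(f"term:{term}" for term in user_terms if term.lower() in lowered)
    return hits
```

Plain-text matching only works on unencrypted flows, so a check of this kind complements, rather than replaces, the behaviour analyser.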

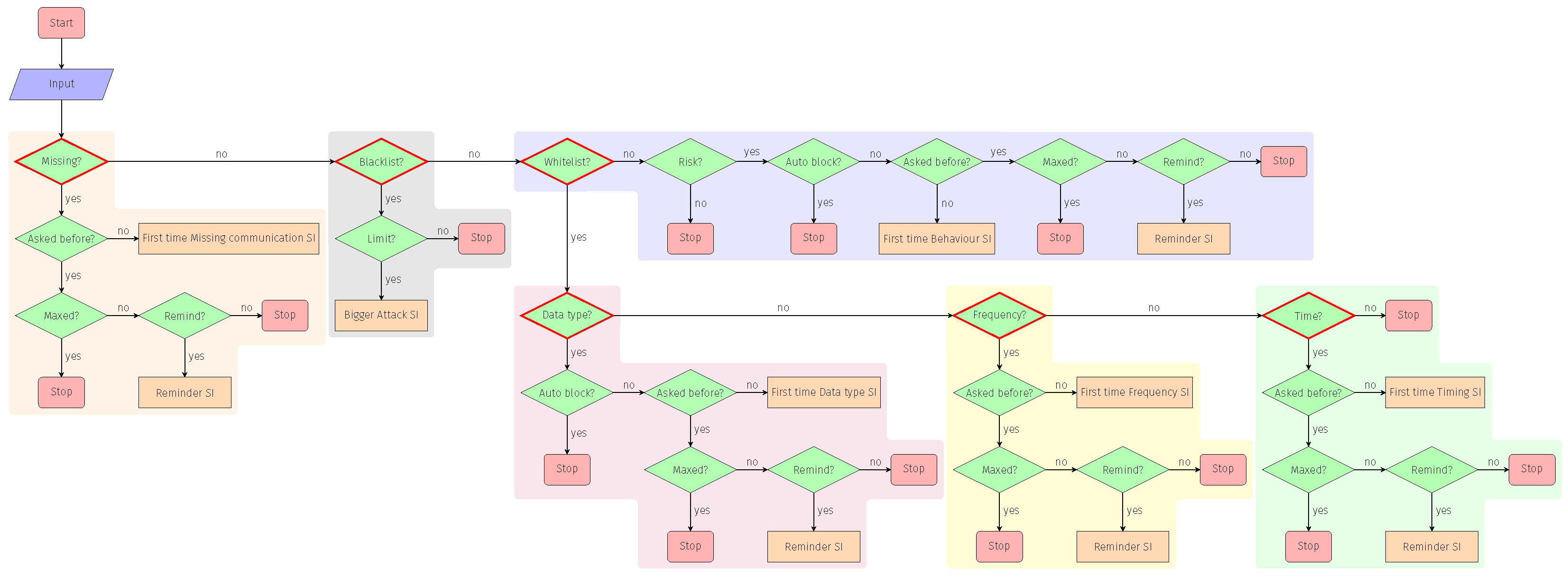

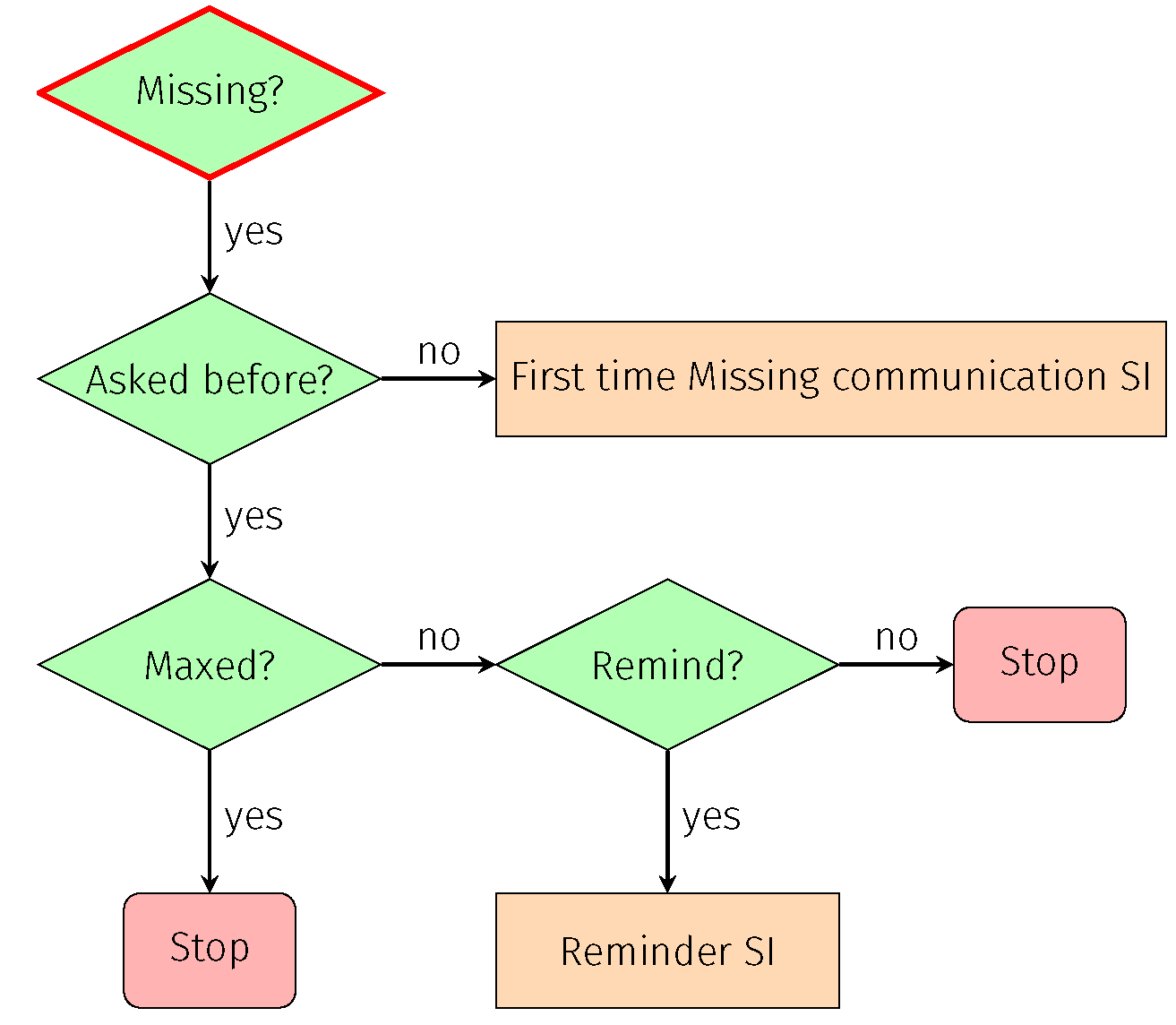

- Missing communication (absence): Absence of the device’s communication in relation to its normal behaviour;

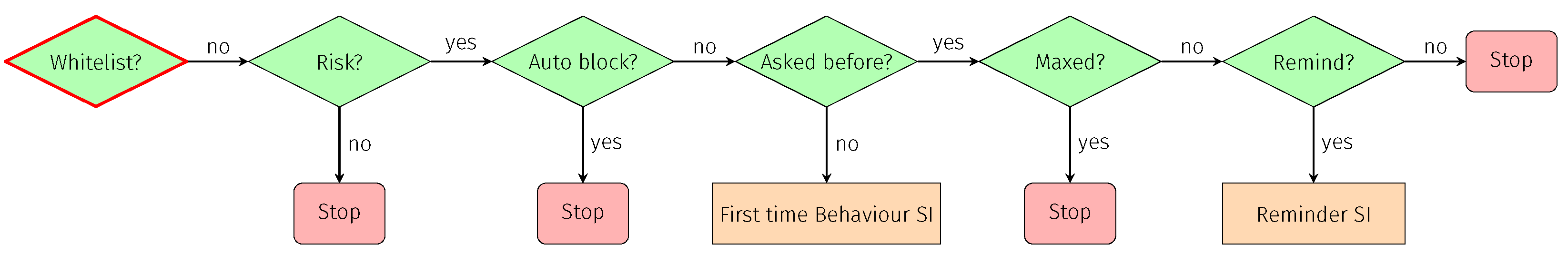

- Whitelisting: A new communication that has been neither blacklisted nor whitelisted before, whether by the user or by the GHOST solution itself;

- Data type (privacy): Private data leakage is tracked, and the user is informed of the violation of the policies defined;

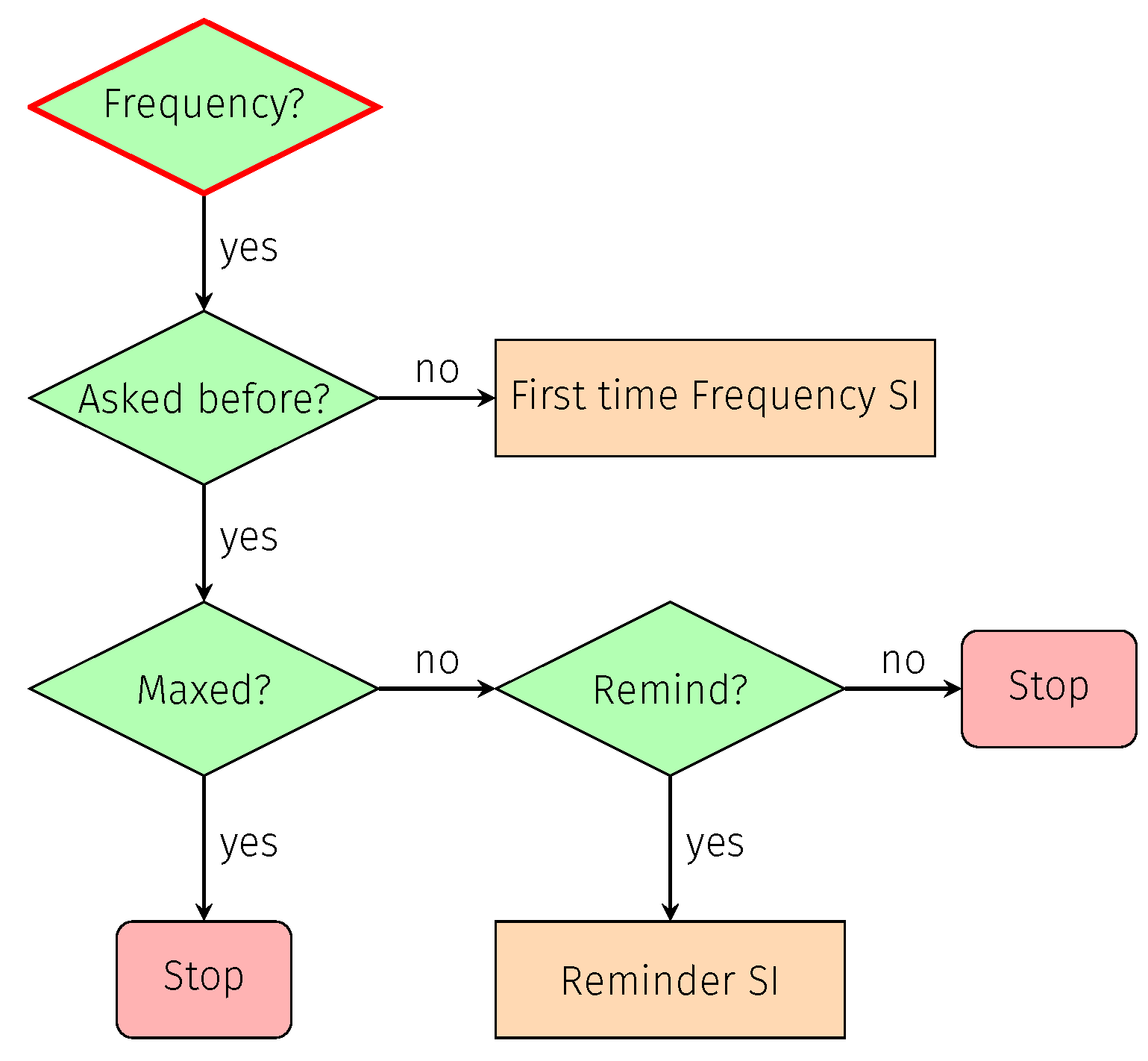

- Frequency: The communication frequency is suspicious, i.e., communication occurs too often or not often enough;

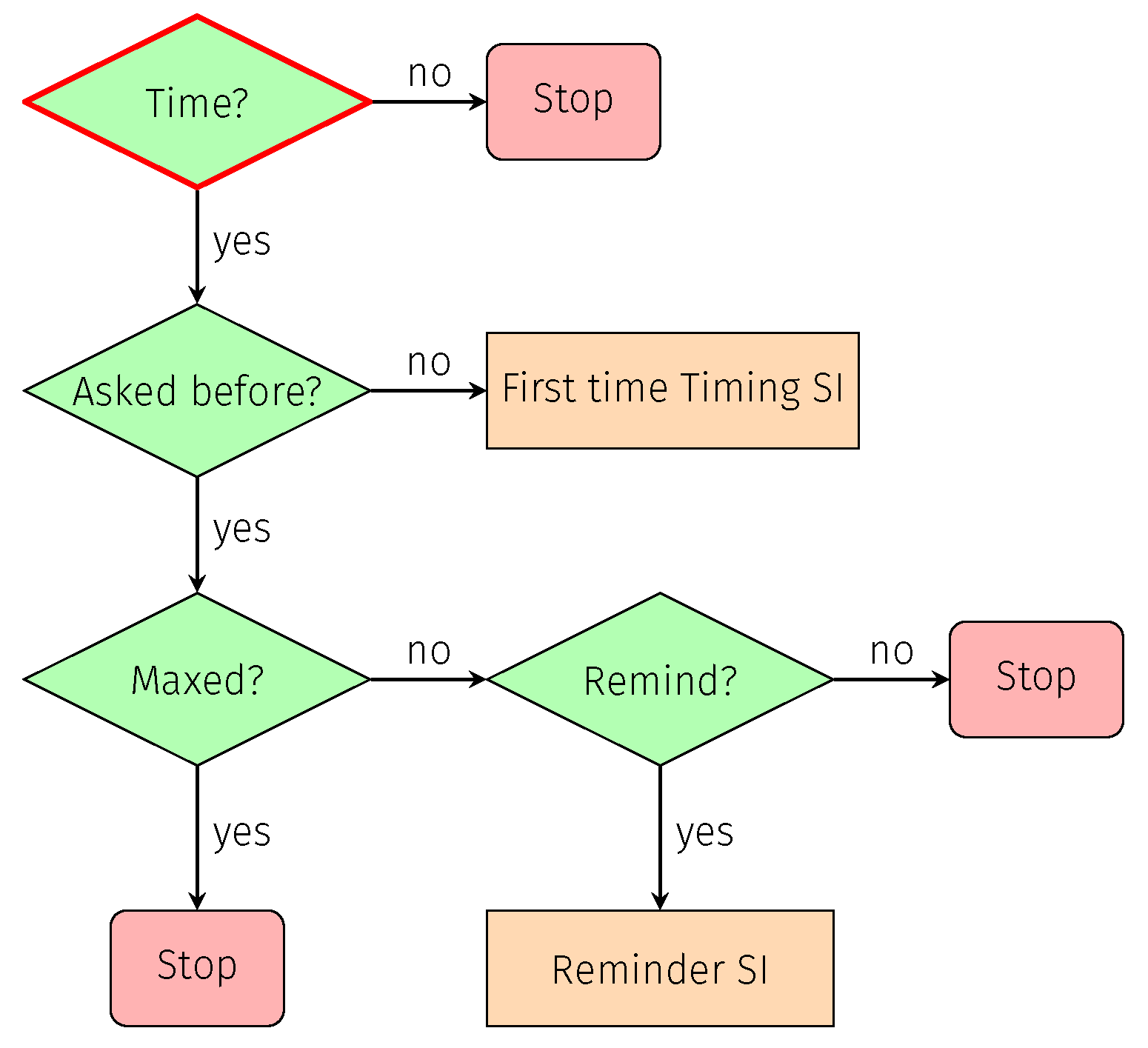

- Time (timing): The pattern of communication stays within the safe profile derived, but the actual timing is suspicious;

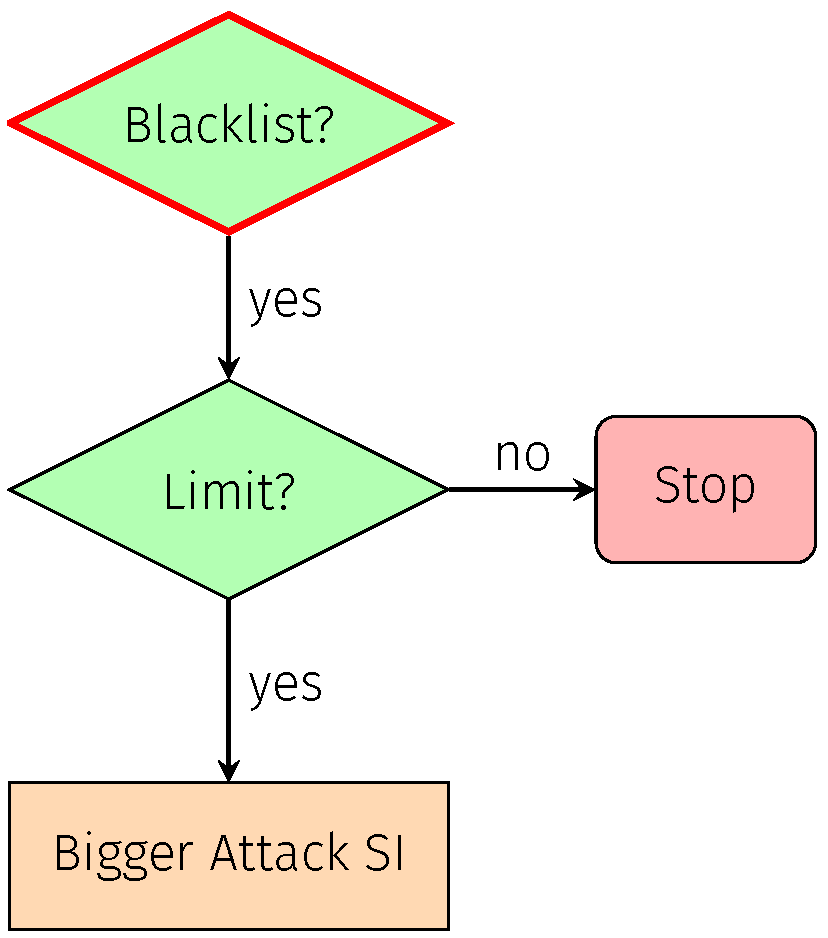

- Blacklisting: Known illegitimate communication is taking place; although it is blocked from further external propagation, it still generates activity on the internal network.
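To make the absence and frequency categories concrete, the sketch below compares the number of messages a device sent in the latest time window with a profile built from earlier windows, flagging counts outside the mean plus or minus k population standard deviations. The threshold k = 3 and the function name are assumptions for illustration; the profiling method actually used by the behaviour analyser is not described in this section.

```python
from statistics import mean, pstdev
from typing import Sequence


def classify_window(history: Sequence[int], observed: int, k: float = 3.0) -> str:
    """Classify one window's message count against historical per-window counts.

    Returns "absence", "too_often", "too_rare" or "normal".
    """
    if not history:
        raise ValueError("a behaviour profile needs at least one past window")
    mu = mean(history)
    sigma = pstdev(history)
    if observed == 0 and mu > 0:
        return "absence"  # device went silent although it normally communicates
    if observed > mu + k * sigma:
        return "too_often"
    if observed < mu - k * sigma:
        return "too_rare"
    return "normal"
```

For a device that usually sends about ten messages per window, a count of 30 would be reported as too frequent and a count of 0 as missing communication.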

7. Decision Tree Conceptualisation

7.1. Decision Branches

7.1.1. Missing Communication

7.1.2. Whitelisting

7.1.3. Data Type

7.1.4. Frequency

7.1.5. Time

7.1.6. Blacklisting

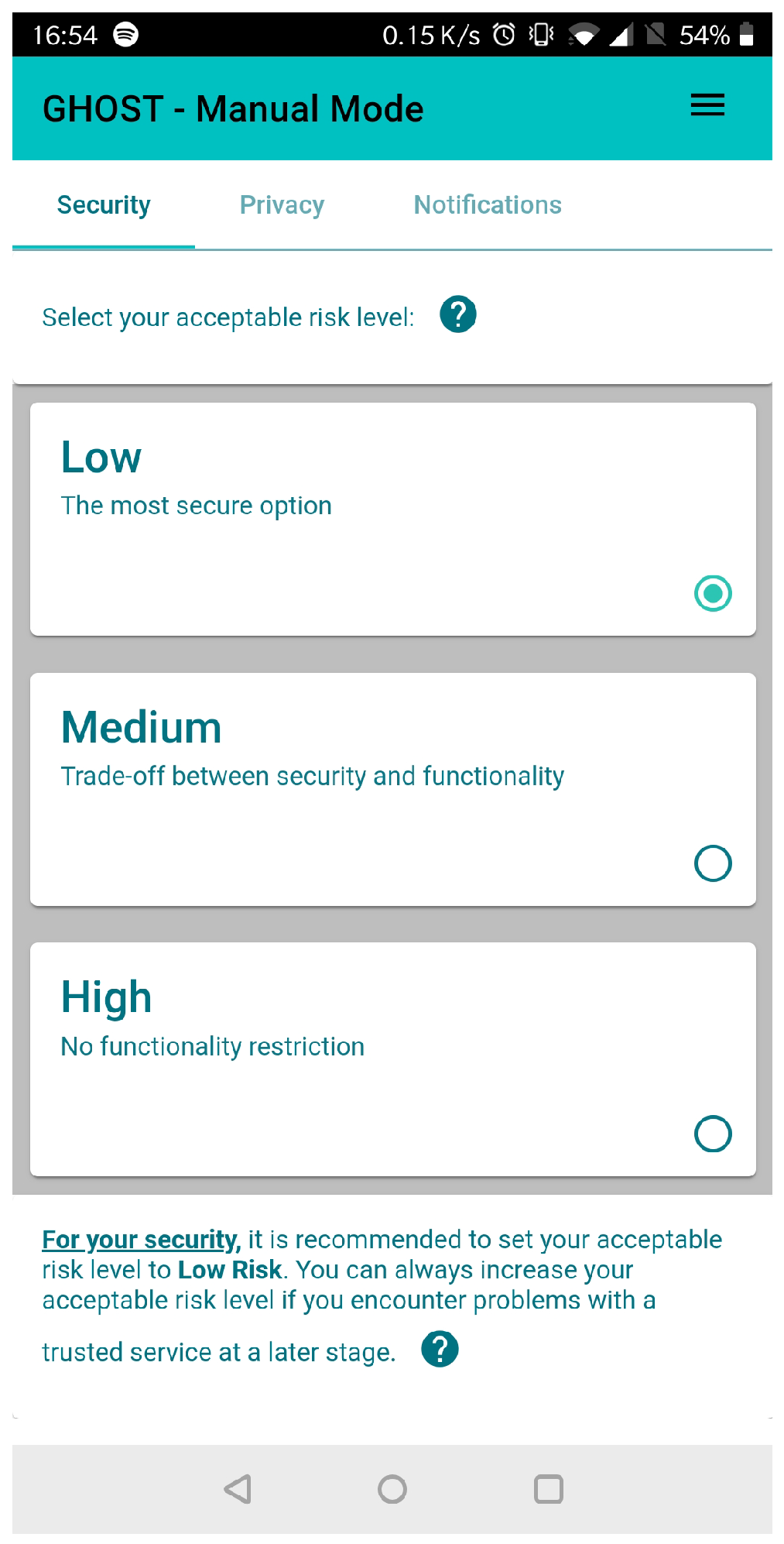

7.2. Configuring the DRA

1. The development approach was based on the requirements derived from literature research and the results from the first set of user studies. Furthermore, the categorisation of the initial set of navigation pages was developed and is outlined in Table 10.

2. For the second iteration, the Configuration (CFG) interfaces were improved in terms of their usability with a mobile device form factor, and a new menu was developed for easier access to the different CFG sections. Furthermore, the colour theme was updated to match the official project theme.

3. The third iteration of the configuration was refined based on the results from the second set of user studies and the derived requirements. The updated specifications for the categorisation of the initial set of navigation pages developed are shown in Table 11.

- 4.

7.3. Monitoring Automated DRA

- 1.

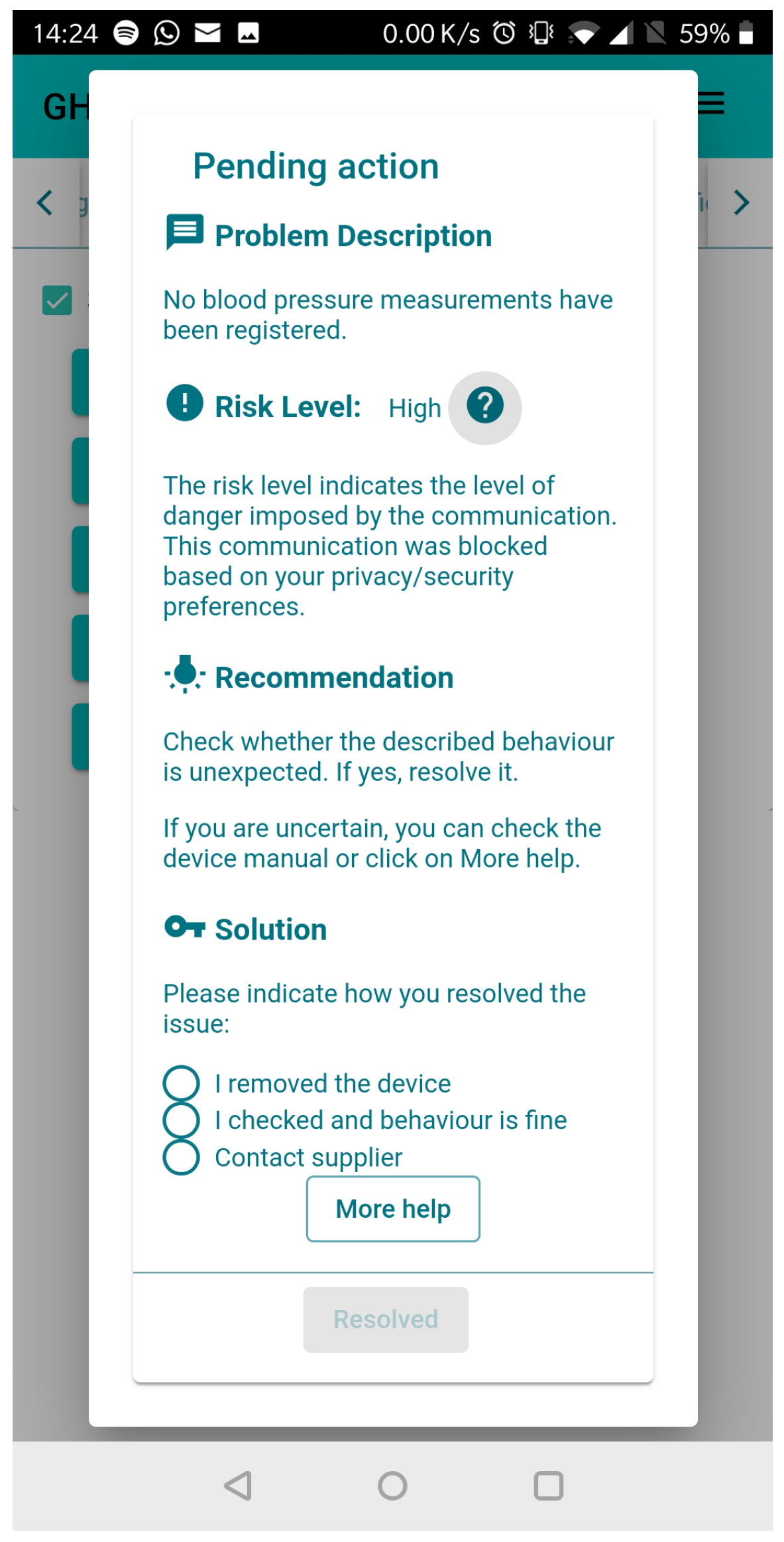

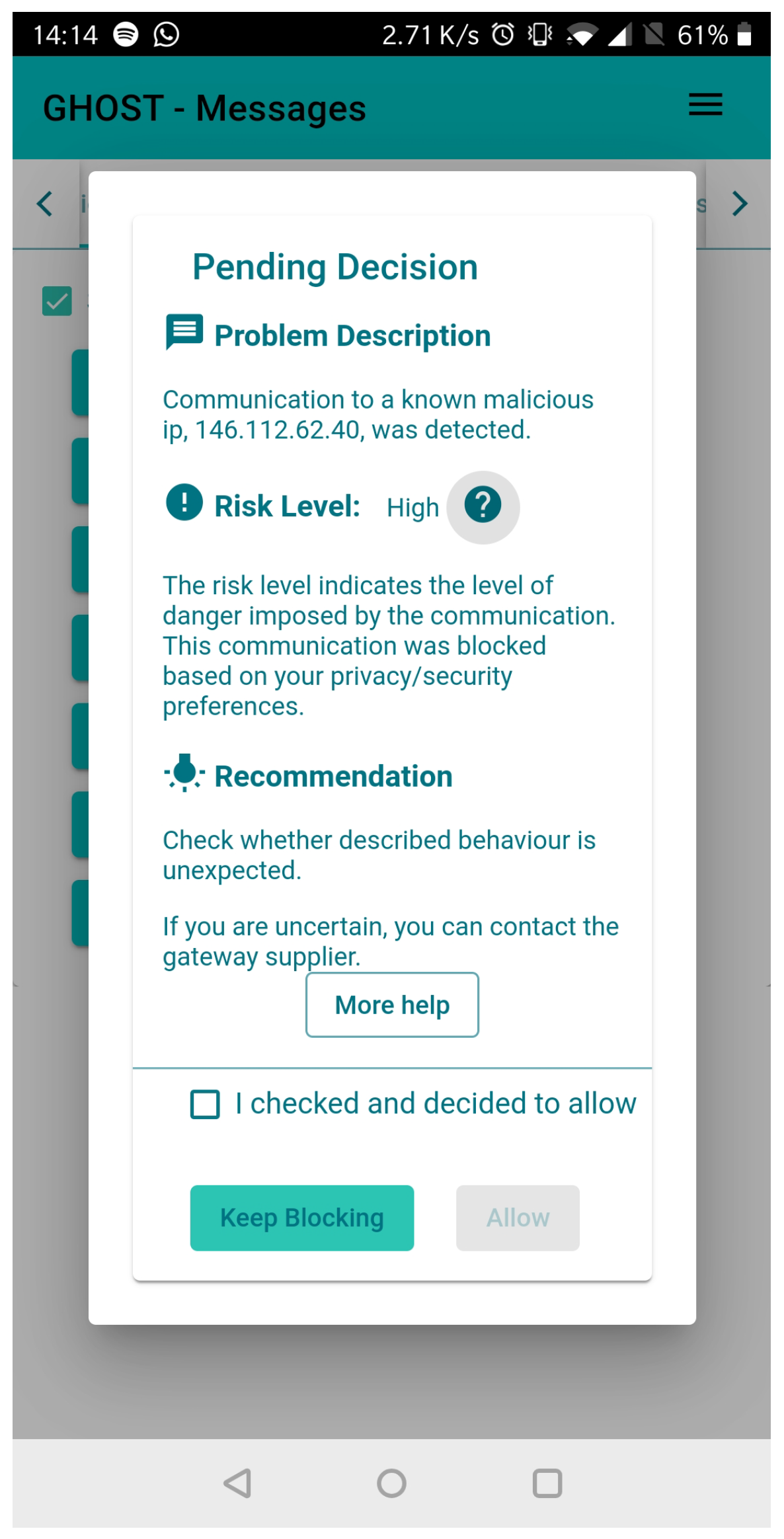

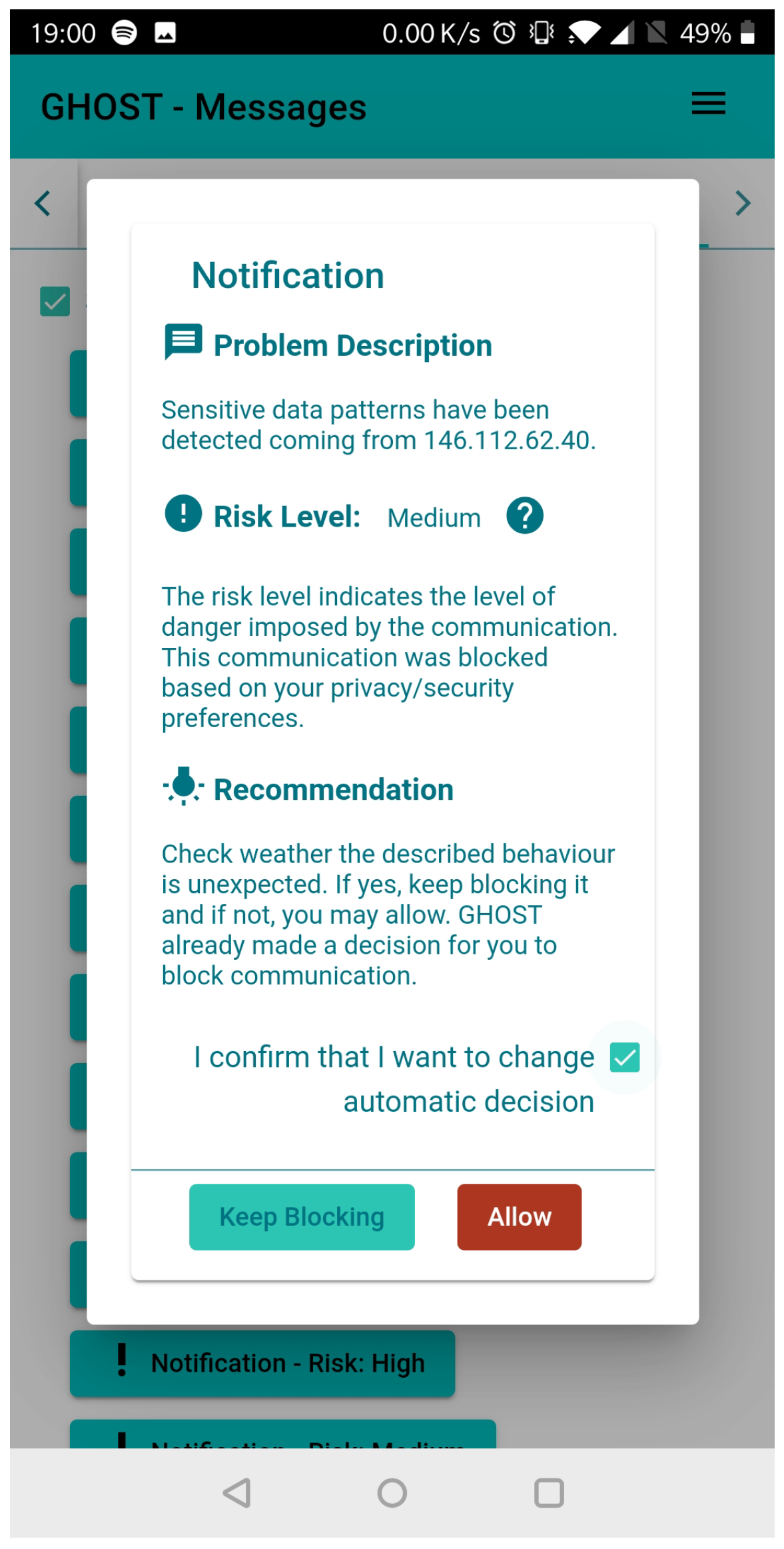

- The first iteration of the security intervention interface was developed based on the requirements from the initial literature review. Furthermore, the initial listing of possible interactions with the GHOST solution was created. This outline is summarised in Table 12.

- 2.

- To provide a more fluent and unified experience, the SI notifications and related front-end were included in the same Angular web application package as the CFG interface.

- 3.

- The second prototype was developed based on the input from the user trials, particularly by attempting to provide a more understandable and actionable input for the user’s decisions.

- 4.

- The existing SI was further fine-tuned in preparation for the third trials, where attack simulations would be performed. For this purpose, not only were additional menu items were added, but the generic system flow was also amended to reassure the end user and provide additional information on the ongoing evaluation and feedback gathering.

- 5.

8. Discussion and Future Work

- RQ1

- Limitations on automation: We started our research by identifying possible technical actions to mitigate exposure to smart home threats. As outlined in Section 5, for each attack category we derived a list of technical actions, each linked to its automation feasibility, as presented in Table 2. Only actions of a physical nature (such as physically verifying device integrity or battery state) could not be automated. We addressed this by including these actions in the mitigation advisory, which guides the user through them.

- RQ2

- Usable actions translation: Guided by the user studies and continuous feedback collection, we derived a short set of usable actions to be presented as part of the final UI. The process transformed the initial interfaces significantly, keeping information to a minimum while providing detailed, fine-tuned textual descriptions to maximise user engagement. Limiting the number of usable actions had a positive effect on the usability of the final interfaces, as shown during real-life pilot deployments, where the average System Usability Scale (SUS) score [38] increased throughout the project’s lifetime.

- RQ3

- Perception’s linkability to engagement: The differences in, and impact of, personal risk perception were a key challenge that we addressed in this research. As pointed out by Gerber et al. [17], ‘people tend to base their decisions on perceived risk instead of actual risk’, and this human aspect complicates the translation of technical risk information into a format that engages users with their digital security and privacy exposure. As a result, users are often unaware of the consequences of risks emanating from their IoT assets, since these risks are often not directly perceivable. While we derived a set of usable actions, these actions neither directly engage the user nor capture how much control a user wants or, for that matter, how much they want to be informed. For this purpose, we proposed and developed a decision tree concept and three types of awareness preferences (outlined in Section 6 and Section 7, respectively). Although the solution was refined through four iterations and proved successful during the project deployments, future research should note a limitation inherited from the published user studies [16,17,18]: they were conducted mainly in Germany, and their samples were likely biased by the use of an online recruitment panel. With our proposed solution, we provide the means to address the security and privacy perception of each individual user while preserving a high level of security by balancing automation and security preferences.
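For readers unfamiliar with the SUS score cited above, the standard scoring procedure is short enough to show in code: each of the ten items is answered on a 1 to 5 scale, odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score between 0 and 100. The functions below are a generic sketch of that procedure, not code from the project.

```python
from statistics import mean
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one completed SUS questionnaire (ten answers, each from 1 to 5)."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly ten items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("responses must be on a 1-5 scale")
    total = 0
    for index, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if index % 2 == 1 else (5 - r)
    return total * 2.5


def average_sus(questionnaires: Sequence[Sequence[int]]) -> float:
    """Average SUS score across a group of participants."""
    return mean(sus_score(q) for q in questionnaires)
```

A participant who answers 3 to every item scores 50, while the best possible answer pattern (5, 1, 5, 1, ...) scores 100.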

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AP | Alert processor |
| BA | Behaviour analyser |
| BR | Block rules |
| CFG | Configuration |
| DDoS | Distributed denial-of-service |
| DoS | Denial-of-service |
| DRA | Dynamic risk assessment |
| IoT | Internet of Things |
| IR | Information retrieval |
| ISys | Information system |
| PC | Payload check |
| PCAP | Packet capture |
| RA | Risk assessment |
| SI | Security intervention |
| SUS | System Usability Scale |
| UI | User interface |
| UX | User experience |

References

- Bansal, M.; Chana, I.; Clarke, S. A Survey on IoT Big Data. ACM Comput. Surv. 2021, 53, 131.

- Almusaylim, Z.A.; Zaman, N. A review on smart home present state and challenges: Linked to context-awareness internet of things (IoT). Wirel. Netw. 2019, 25, 3193–3204.

- Assal, H.; Chiasson, S. “Think secure from the beginning”: A survey with software developers. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–13.

- Jacobsson, A.; Boldt, M.; Carlsson, B. A risk analysis of a smart home automation system. Future Gener. Comput. Syst. 2016, 56, 719–733.

- Collen, A.; Nijdam, N.A. Can I Sleep Safely in My Smarthome? A Novel Framework on Automating Dynamic Risk Assessment in IoT Environments. Electronics 2022, 11, 1123.

- Haim, B.; Menahem, E.; Wolfsthal, Y.; Meenan, C. Visualizing insider threats: An effective interface for security analytics. In Proceedings of the 22nd International Conference on Intelligent User Interfaces Companion, Limassol, Cyprus, 13–16 March 2017; pp. 39–42.

- Realpe, P.C.; Collazos, C.A.; Hurtado, J.; Granollers, A. Towards an integration of usability and security for user authentication. In Proceedings of the XVI International Conference on Human Computer Interaction, Vilanova i la Geltru, Spain, 7–9 September 2015; p. 43.

- Preibusch, S. Privacy behaviors after Snowden. Commun. ACM 2015, 58, 48–55.

- Dhillon, G.; Oliveira, T.; Susarapu, S.; Caldeira, M. Deciding between information security and usability: Developing value based objectives. Comput. Hum. Behav. 2016, 61, 656–666.

- Andriotis, P.; Oikonomou, G.; Mylonas, A.; Tryfonas, T. A study on usability and security features of the Android pattern lock screen. Inf. Comput. Secur. 2016, 24, 53–72.

- Lee, J.H.; Kim, H. Security and Privacy Challenges in the Internet of Things [Security and Privacy Matters]. IEEE Consum. Electron. Mag. 2017, 6, 134–136.

- Alur, R.; Berger, E.; Drobnis, A.W.; Fix, L.; Fu, K.; Hager, G.D.; Lopresti, D.; Nahrstedt, K.; Mynatt, E.; Patel, S.; et al. Systems Computing Challenges in the Internet of Things. arXiv 2016, arXiv:1604.02980.

- Nurse, J.R.; Atamli, A.; Martin, A. Towards a usable framework for modelling security and privacy risks in the smart home. In Proceedings of the 4th International Conference on Human Aspects of Information Security, Privacy, and Trust, Toronto, ON, Canada, 17–22 July 2016; Volume 9750, pp. 255–267.

- Dutta, S.; Madnick, S.; Joyce, G. SecureUse: Balancing security and usability within system design. In Proceedings of the 18th International Conference on Human-Computer Interaction, Toronto, ON, Canada, 17–22 July 2016; Communications in Computer and Information Science. Volume 617, pp. 471–475.

- Augusto-Gonzalez, J.; Collen, A.; Evangelatos, S.; Anagnostopoulos, M.; Spathoulas, G.; Giannoutakis, K.M.; Votis, K.; Tzovaras, D.; Genge, B.; Gelenbe, E.; et al. From Internet of Threats to Internet of Things: A Cyber Security Architecture for Smart Homes. In Proceedings of the 2019 IEEE 24th International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD), Limassol, Cyprus, 11–13 September 2019; pp. 1–6.

- Gerber, N.; Reinheimer, B.; Volkamer, M. Home Sweet Home? Investigating Users’ Awareness of Smart Home Privacy Threats. In Proceedings of an Interactive Workshop on the Human Aspects of Smarthome Security and Privacy (WSSP), Baltimore, MD, USA, 12 August 2018.

- Gerber, N.; Reinheimer, B.; Volkamer, M. Investigating People’s Privacy Risk Perception. Proc. Priv. Enhancing Technol. 2019, 2019, 267–288.

- Duezguen, R.; Mayer, P.; Berens, B.; Beckmann, C.; Aldag, L.; Mossano, M.; Volkamer, M.; Strufe, T. How to Increase Smart Home Security and Privacy Risk Perception. In Proceedings of the 2021 IEEE 20th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Shenyang, China, 20–22 October 2021; pp. 997–1004.

- Yee, K.P. Aligning security and usability. IEEE Secur. Priv. 2004, 2, 48–55.

- Caputo, D.D.; Pfleeger, S.L.; Sasse, M.A.; Ammann, P.; Offutt, J.; Deng, L. Barriers to Usable Security? Three Organizational Case Studies. IEEE Secur. Priv. 2016, 14, 22–32.

- Balfanz, D.; Durfee, G.; Smetters, D.; Grinter, R. In search of usable security: Five lessons from the field. IEEE Secur. Priv. Mag. 2004, 2, 19–24.

- Atzeni, A.; Faily, S.; Galloni, R. Usable Security. In Encyclopedia of Information Science and Technology, 4th ed.; Khosrow-Pour, D.B.A., Ed.; IGI Global: Hershey, PA, USA, 2018; Chapter 433; pp. 5004–5013.

- Barbosa, N.M.; Zhang, Z.; Wang, Y. Do Privacy and Security Matter to Everyone? Quantifying and Clustering User-Centric Considerations About Smart Home Device Adoption. In Proceedings of the Sixteenth Symposium on Usable Privacy and Security (SOUPS 2020), Online, 7–11 August 2020; pp. 417–435.

- Emami-Naeini, P.; Agarwal, Y.; Faith Cranor, L.; Hibshi, H. Ask the experts: What should be on an IoT privacy and security label? In Proceedings of the 2020 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 18–21 May 2020; pp. 447–464.

- Tabassum, M.; Carolina, N.; Kosinski, T.; Clara, S. “I don’t own the data”: End User Perceptions of Smart Home Device Data Practices and Risks. In Proceedings of the Fifteenth USENIX Conference on Usable Privacy and Security, Santa Clara, CA, USA, 11–13 August 2019; pp. 435–450.

- Bugeja, J.; Jacobsson, A.; Davidsson, P. On Privacy and Security Challenges in Smart Connected Homes. In Proceedings of the 2016 European Intelligence and Security Informatics Conference (EISIC), Uppsala, Sweden, 17–19 August 2016; pp. 172–175.

- Vervier, P.A.; Shen, Y. Before toasters rise up: A view into the emerging IoT threat landscape. In Proceedings of the 21st International Symposium on Research in Attacks, Intrusions, and Defenses, Heraklion, Greece, 10–12 September 2018; pp. 556–576.

- Haney, J.M.; Furman, S.M.; Acar, Y. Smart home security and privacy mitigations: Consumer perceptions, practices, and challenges. In Proceedings of the 2nd International Conference on Human-Computer Interaction, Copenhagen, Denmark, 19–24 July 2020; pp. 393–411.

- Dunphy, P.; Vines, J.; Coles-Kemp, L.; Clarke, R.; Vlachokyriakos, V.; Wright, P.; McCarthy, J.; Olivier, P. Understanding the Experience-Centeredness of Privacy and Security Technologies. In Proceedings of the 2014 workshop on New Security Paradigms Workshop, NSPW ’14, Victoria, BC, Canada, 15–18 September 2014; ACM Press: New York, NY, USA, 2014; pp. 83–94.

- Collard, H.; Briggs, J. Creative Toolkits for TIPS. In Proceedings of the ESORICS 2020: European Symposium on Research in Computer Security, Guildford, UK, 17–18 September 2020; Boureanu, I., Drăgan, C.C., Manulis, M., Giannetsos, T., Dadoyan, C., Gouvas, P., Hallman, R.A., Li, S., Chang, V., Pallas, F., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 39–55.

- Victora, S. IoT Guard: Usable Transparency and Control over Smart Home IoT Devices. Ph.D. Thesis, Institut für Information Systems Engineering, Montréal, QC, Canada, 2020.

- Bada, M.; Sasse, A.M.; Nurse, J.R.C. Cyber Security Awareness Campaigns: Why do they fail to change behaviour? arXiv 2019, arXiv:1901.02672.

- Chalhoub, G.; Flechais, I.; Nthala, N.; Abu-Salma, R.; Tom, E. Factoring user experience into the security and privacy design of smart home devices: A case study. In Proceedings of the CHI ’20: CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–9.

- Feth, D.; Maier, A.; Polst, S. A user-centered model for usable security and privacy. In Proceedings of the 5th International Conference on Human Aspects of Information Security, Privacy, and Trust, Vancouver, BC, Canada, 9–14 July 2017; pp. 74–89.

- Grobler, M.; Gaire, R.; Nepal, S. User, Usage and Usability: Redefining Human Centric Cyber Security. Front. Big Data 2021, 4, 583723.

- Heartfield, R.; Loukas, G.; Budimir, S.; Bezemskij, A.; Fontaine, J.R.; Filippoupolitis, A.; Roesch, E. A taxonomy of cyber-physical threats and impact in the smart home. Comput. Secur. 2018, 78, 398–428.

- Li, Y.; McCune, J.; Baker, B.; Newsome, J.; Drewry, W.; Perrig, A. Minibox: A two-way sandbox for x86 native code. In Proceedings of the 2014 USENIX Annual Technical Conference, USENIX ATC 2014, Philadelphia, PA, USA, 19–20 June 2014; USENIX Association: Philadelphia, PA, USA, 2014; pp. 409–420.

- Lewis, J.R. The System Usability Scale: Past, Present, and Future. Int. J. Hum.-Comput. Interact. 2018, 34, 577–590.

| Attack category | Attack | ID | Validation Methodology | Software Tools |
|---|---|---|---|---|
| Physical attacks | Physical damage | P1 | Remove battery, shut down | N/A |
| | Malicious device injection | P2 | Device registration, sniffers | N/A |
| | Mechanical exhaustion | P3 | Trigger device operation | N/A |
| Network attacks | Traditional attacks | N1 | Scanning and enumeration | nmap, Scapy, tcpreplay |
| | Device impersonation | N2 | Packet injection | Scapy, tcpreplay, tcprewrite |
| | Side channel attacks | N3 | Hardware or software sniffers | Wireshark, tcpdump |
| | Unusual activities and battery-depleting attacks | N4 | Packet injection, sniffers | Scapy, tcpreplay, tcprewrite |
| Software attacks | Traditional attacks | S1 | Traffic replay | PCAP files, tcpreplay, tcprewrite |
| | Compromised software attacks | S2 | Alter module behaviour | Module-specific software |
| | Command injection | S3 | Inject legitimate commands | Specially crafted software |
| | Mechanical exhaustion | S4 | Inject legitimate commands | Specially crafted software |
| | Sleep deprivation | S5 | Inject legitimate commands | Specially crafted software |

| ID | Description | Automatable |
|---|---|---|
| T1 | Verify physical integrity | No |
| T2 | Verify battery | No |
| T3 | One-way sandboxing | Yes |
| T4 | Two-way sandboxing | Yes |
| T5 | Permit | Yes |
| T6 | Block device temporarily | Yes |
| T7 | Block device permanently | Yes |
| T8 | Drop packets for flow temporarily | Yes |
| T9 | Drop packets for flow permanently | Yes |
| T10 | Drop packets for source temporarily | Yes |
| T11 | Drop packets for source permanently | Yes |
| T12 | Restart GHOST | Yes |
| T13 | Restart module | Yes |
| T14 | Disable module temporarily | Yes |
| T15 | Disable module permanently | Yes |
| T16 | Send update request | Yes |

| ID | Description | Linked Technical Actions |
|---|---|---|
| U1 | Allow | T5, T16 |
| U2 | Block | T3, T4, T6, T7, T8, T9, T10, T11 |
| U3 | Ignore | T3, T4, T5, T6, T7, T8, T9, T10, T11, T16 |
| U4 | Remind | T3, T4, T5, T6, T7, T8, T9, T10, T11, T16 |
| U5 | Advisory | T1, T2, T12, T13, T14, T15 |
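The two tables above can be read together as a lookup: each usable action (U1 to U5) expands into its linked technical actions, and each technical action carries an automation flag. The Python sketch below transcribes the table contents directly; the helper name `split_by_automation` is hypothetical.

```python
from typing import Dict, List, Tuple

# Technical actions marked "No" in the Automatable column; all others are "Yes".
NON_AUTOMATABLE = {"T1", "T2"}

# Usable action -> linked technical actions, transcribed from the table above.
USABLE_TO_TECHNICAL: Dict[str, List[str]] = {
    "U1": ["T5", "T16"],                                                    # Allow
    "U2": ["T3", "T4", "T6", "T7", "T8", "T9", "T10", "T11"],              # Block
    "U3": ["T3", "T4", "T5", "T6", "T7", "T8", "T9", "T10", "T11", "T16"],  # Ignore
    "U4": ["T3", "T4", "T5", "T6", "T7", "T8", "T9", "T10", "T11", "T16"],  # Remind
    "U5": ["T1", "T2", "T12", "T13", "T14", "T15"],                        # Advisory
}


def split_by_automation(usable_action: str) -> Tuple[List[str], List[str]]:
    """Return (automatable, manual) technical actions linked to a usable action."""
    linked = USABLE_TO_TECHNICAL[usable_action]
    automatable = [t for t in linked if t not in NON_AUTOMATABLE]
    manual = [t for t in linked if t in NON_AUTOMATABLE]
    return automatable, manual
```

Only the Advisory action (U5) carries manual steps, which matches the observation that physical checks are the sole actions that cannot be automated.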

| Attack ID | Attack Name | Action | Action ID |
|---|---|---|---|
| P1 | Damage | Verify physical integrity | T1 |
| | | Verify battery | T2 |
| | | One-way sandboxing | T3 |
| | | Two-way sandboxing | T4 |
| | | Block device (temp or perm) | T6, T7 |
| P2 | Device injection | Permit | T5 |
| | | One-way sandboxing | T3 |
| | | Two-way sandboxing | T4 |
| | | Block device (temp or perm) | T6, T7 |
| P3 | Mechanical exhaustion | Permit | T5 |
| | | One-way sandboxing | T3 |
| | | Two-way sandboxing | T4 |
| | | Block device (temp or perm) | T6, T7 |

| Attack ID | Attack Name | Action | Action ID |
|---|---|---|---|
| N1 | Traditional | Drop packets for flow (temp or perm) | T8, T9 |
| | | Drop packets for source (temp or perm) | T10, T11 |
| N2 | Device impersonation | One-way sandboxing (temp or perm) | T3 |
| | | Two-way sandboxing (temp or perm) | T4 |
| | | Block device (temp or perm) | T6, T7 |
| N3 | Side-channel | (design time test only) | – |
| N4 | Battery attacks | Drop packets for flow (temp or perm) | T8, T9 |
| | | Drop packets for source (temp or perm) | T10, T11 |

| Attack ID | Attack Name | Action | Action ID |
|---|---|---|---|
| S1 | Traditional | Drop packets for flow (temp or perm) | T8, T9 |
| | | Drop packets for source (temp or perm) | T10, T11 |
| S2 | Software compromise | Restart module or GHOST | T12, T13 |
| | | Disable module (temp or perm) | T14, T15 |
| | | Send update request | T16 |
| S3 | Command injection | Drop packets for flow (temp or perm) | T8, T9 |
| | | Drop packets for source (temp or perm) | T10, T11 |
| S4 | Mechanical exhaustion | Drop packets for flow (temp or perm) | T8, T9 |
| | | Drop packets for source (temp or perm) | T10, T11 |
| S5 | Sleep deprivation | Drop packets for flow (temp or perm) | T8, T9 |
| | | Drop packets for source (temp or perm) | T10, T11 |
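The three attack-to-action tables above can be combined with the automation flags of the technical actions to see, per attack, which candidate actions could be applied without user involvement and which would have to go into the mitigation advisory. The sketch below transcribes the tables; `mitigation_plan` is a hypothetical helper, and it assumes, as in the technical-action table, that only T1 and T2 are non-automatable.

```python
from typing import Dict, List

# Attack ID -> candidate technical actions, transcribed from the three tables above.
ATTACK_ACTIONS: Dict[str, List[str]] = {
    "P1": ["T1", "T2", "T3", "T4", "T6", "T7"],
    "P2": ["T5", "T3", "T4", "T6", "T7"],
    "P3": ["T5", "T3", "T4", "T6", "T7"],
    "N1": ["T8", "T9", "T10", "T11"],
    "N2": ["T3", "T4", "T6", "T7"],
    "N3": [],  # side-channel attacks: design-time test only
    "N4": ["T8", "T9", "T10", "T11"],
    "S1": ["T8", "T9", "T10", "T11"],
    "S2": ["T12", "T13", "T14", "T15", "T16"],
    "S3": ["T8", "T9", "T10", "T11"],
    "S4": ["T8", "T9", "T10", "T11"],
    "S5": ["T8", "T9", "T10", "T11"],
}

# Physical checks that require the user (the only "No" rows in the automation table).
NON_AUTOMATABLE = {"T1", "T2"}


def mitigation_plan(attack_id: str) -> Dict[str, List[str]]:
    """Split an attack's candidate actions into automatable and advisory-only ones."""
    actions = ATTACK_ACTIONS[attack_id]
    return {
        "automated": [t for t in actions if t not in NON_AUTOMATABLE],
        "advisory": [t for t in actions if t in NON_AUTOMATABLE],
    }
```

Under this reading, physical damage (P1) is the only attack whose mitigation necessarily includes advisory steps.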

| Attack ID | Apt Analyser | Rationale |
|---|---|---|
| P1 | BA | Absence or change in behaviour of communication |
| P2 | BA and AP | No behaviour profile present and alert propagation on non-registered device |
| P3 | BA and AP | Absence or change in behaviour of communication and alert propagation on anomalous traffic |
| N1 | AP | Alert propagation in threat detection |
| N2 | BA and AP | Absence or change in behaviour of communication and alert propagation in anomalous traffic |
| N3 | BA, PC and AP | Absence or change in behaviour of communication, presence of sensitive data and alert propagation in anomalous traffic |
| N4 | BA and AP | Absence or change in behaviour of communication and alert propagation in anomalous traffic |
| S1 | AP | Alert propagation in threat detection |
| S2 | BA, PC and BR | Absence or change in behaviour of communication, presence of sensitive data and attempted communication with malicious destination |
| S3 | BA and BR | Absence or change in behaviour of communication and attempted communication with malicious destination |
| S4 | BA and AP | Absence or change in behaviour of communication and alert propagation in anomalous traffic |
| S5 | BA and AP | Absence or change in behaviour of communication and alert propagation in anomalous traffic |
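Inverting the attack-to-analyser table shows which attacks each analyser is relied upon for; for example, the payload check (PC) appears only for N3 and S2, and the block rules (BR) only for S2 and S3. The sketch below transcribes the table and performs that inversion; `coverage_by_analyser` is a hypothetical helper name.

```python
from collections import defaultdict
from typing import Dict, List

# Attack ID -> analysers considered apt for detecting it (transcribed from the table above).
ATTACK_ANALYSERS: Dict[str, List[str]] = {
    "P1": ["BA"], "P2": ["BA", "AP"], "P3": ["BA", "AP"],
    "N1": ["AP"], "N2": ["BA", "AP"], "N3": ["BA", "PC", "AP"], "N4": ["BA", "AP"],
    "S1": ["AP"], "S2": ["BA", "PC", "BR"], "S3": ["BA", "BR"],
    "S4": ["BA", "AP"], "S5": ["BA", "AP"],
}


def coverage_by_analyser(mapping: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Invert attack -> analysers into analyser -> attacks it helps detect."""
    coverage: Dict[str, List[str]] = defaultdict(list)
    for attack_id, analysers in mapping.items():
        for analyser in analysers:
            coverage[analyser].append(attack_id)
    return dict(coverage)
```

The inversion also makes the reliance on the behaviour analyser visible: BA is listed for ten of the twelve attacks.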

| Name | Description | Informed on Any Decision | Allow Risk-Controlled Automation |
|---|---|---|---|
| Raise Awareness | Stay informed of any decisions that GHOST made by displaying a corresponding notification. The GHOST system will automatically block any suspicious communication as soon as the maximum risk level is exceeded. | Yes | Yes |
| Enforced Awareness | Stay informed of any decisions that GHOST made by displaying a corresponding notification. GHOST will not make any automatic decisions when the maximum risk level is exceeded. One will be constantly prompted to review suggested actions and make decisions oneself. | Yes | No |
| Problem Awareness | Stay informed of decisions that GHOST made only when the maximum risk level is exceeded, by displaying a corresponding notification. GHOST will automatically block any suspicious communication as soon as the maximum risk level is exceeded. | No | Yes |
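The three awareness preferences can be read as a small decision function: given the user's preference and whether the maximum risk level has been exceeded, decide whether to notify, whether GHOST blocks automatically, and whether the user must be prompted. The sketch below encodes the table under the assumption that each evaluated communication yields one decision; the enum, dataclass and function names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Awareness(Enum):
    RAISE = "Raise Awareness"
    ENFORCED = "Enforced Awareness"
    PROBLEM = "Problem Awareness"


@dataclass(frozen=True)
class Outcome:
    notify: bool       # show a notification for this decision
    auto_block: bool   # GHOST blocks the suspicious communication itself
    prompt_user: bool  # the user must review and choose an action


def decide(preference: Awareness, max_risk_exceeded: bool) -> Outcome:
    """Map an awareness preference and the current risk state to an outcome."""
    if preference is Awareness.RAISE:
        # Informed on any decision; automatic blocking once the maximum risk is exceeded.
        return Outcome(notify=True, auto_block=max_risk_exceeded, prompt_user=False)
    if preference is Awareness.ENFORCED:
        # Informed on any decision; no automation, so the user is asked to decide.
        return Outcome(notify=True, auto_block=False, prompt_user=max_risk_exceeded)
    # PROBLEM: notified only when the maximum risk is exceeded, and then GHOST blocks.
    return Outcome(notify=max_risk_exceeded, auto_block=max_risk_exceeded, prompt_user=False)
```

The two table columns map onto the fields: "Informed on Any Decision" governs `notify` when the risk stays below the maximum, and "Allow Risk-Controlled Automation" governs `auto_block` when it is exceeded.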

| Awareness preference | Absence | Whitelisting | Privacy | Frequency | Timing | Blacklisting |
|---|---|---|---|---|---|---|
| Raise | - | Automatable | Automatable | Automatable | Automatable | Automatable |
| Enforced | - | - | - | - | - | Automatable |
| Problem | - | Automatable | Automatable | Automatable | Automatable | Automatable |
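The matrix above amounts to a lookup from awareness preference and risk category to whether automation is permitted. Two features stand out when it is written as data: missing communication (absence) is never automated under any preference, and under Enforced Awareness only blacklisting is. The sketch below transcribes the matrix; the lower-case keys and the function name are hypothetical.

```python
from typing import Dict, Set

CATEGORIES = ("absence", "whitelisting", "privacy", "frequency", "timing", "blacklisting")

# Categories marked "Automatable" for each awareness preference (transcribed from the matrix above).
AUTOMATABLE: Dict[str, Set[str]] = {
    "raise": {"whitelisting", "privacy", "frequency", "timing", "blacklisting"},
    "enforced": {"blacklisting"},
    "problem": {"whitelisting", "privacy", "frequency", "timing", "blacklisting"},
}


def may_automate(preference: str, category: str) -> bool:
    """True if the matrix marks this category as automatable for the preference."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown risk category: {category}")
    return category in AUTOMATABLE[preference]
```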

| Category | Specification |
|---|---|
| Welcome | Initial welcome screen |
| User registration | Initial user registration and authentication set-up |
| Dedicated device registration | User’s interfacing device registration |
| Smart home environment | Configuration of the smart home, aiming to provide settings for: |
| Mode selection | Three configuration modes are envisioned to target different user profiles: |
| Step-by-step configuration | Detailed configuration of the GHOST main features: |

| Category | Specification |
|---|---|
| Mode selection | The three configuration modes are updated to differentiate between different target user profiles: |
| Step-by-step configuration | Detailed configuration of the GHOST main features: |

| Category | Specification |
|---|---|
| Mismatching device behaviour | |
| Communication with blocked src or dst | Attempt to initiate communication with blocked party |
| ‘New’ src or dst (unknown) | |
| Device parameter anomalies | An overview of the device profile and its activity (e.g., battery power) |
| Payload-related | |
| Mitigation action | Hypothesis presentation with possible suggestion for mitigation recommendation |
| Current status | Display of the risk level’s current status in relation to defined accepted level for security and privacy risks |
| Predicted status | A risk level estimation representation after the evaluation of the current communication-associated risk |
| Impacts (text) | Possible impact score value |
| Recommendations | Mitigation action to support the decrease of the raised risk level |
| History of risk assessments | Interface link to historical data visualisation |
| History on suspicious activity | Interface link to historical data visualisation |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Collen, A.; Szanto, I.-C.; Benyahya, M.; Genge, B.; Nijdam, N.A. Integrating Human Factors in the Visualisation of Usable Transparency for Dynamic Risk Assessment. Information 2022, 13, 340. https://doi.org/10.3390/info13070340