Human Autonomy in the Era of Augmented Reality—A Roadmap for Future Work

Abstract

1. Introduction and Definition of the Problem Space

2. Nudging versus Behavior Modification

3. Autonomy and the Relation to Privacy

Autonomy

4. Approaches to Ensure Autonomy in the Era of AR

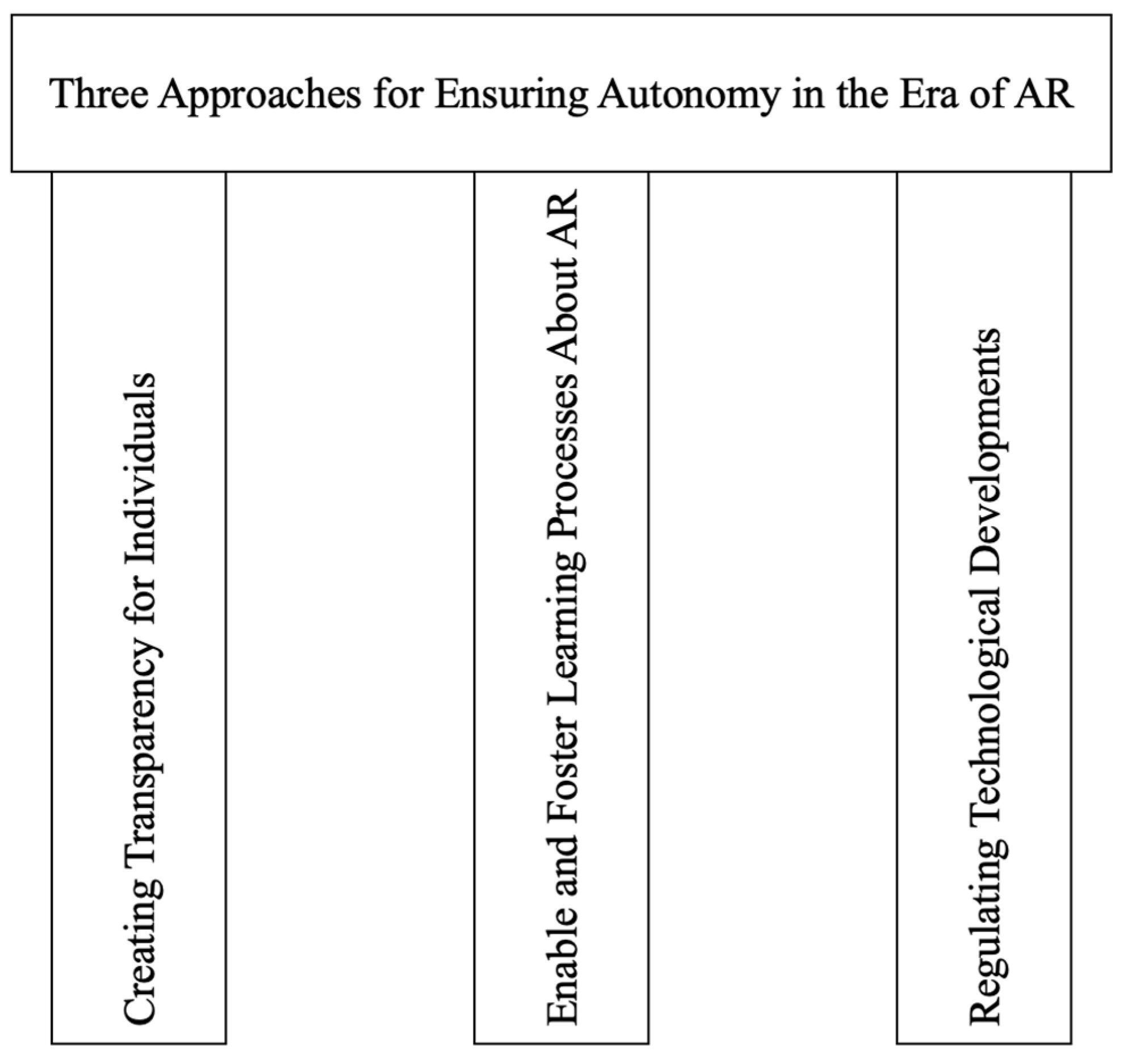

4.1. Creating Transparency for Individuals

4.2. Enable and Foster Learning Processes about AR

4.3. Regulating Technological Developments before They Are Widely Used

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Harborth, D. Human Autonomy in the Era of Augmented Reality—A Roadmap for Future Work. Information 2022, 13, 289. https://doi.org/10.3390/info13060289
