Improving the Adversarial Robustness of Neural ODE Image Classifiers by Tuning the Tolerance Parameter

Abstract

1. Introduction

- We provide a complete study of neural ODE image classifiers and of how their robustness against adversarial attacks, such as the Carlini and Wagner attack, varies with the ODE solver tolerance;

- We demonstrate the defensive properties offered by ODE nets in a zero-knowledge adversarial scenario;

- We analyze how the robustness offered by neural ODE nets varies in the more stringent scenario of an active attacker who changes the attack-time solver tolerance.
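
To make the role of the solver tolerance concrete, the following is a minimal sketch, not the implementation studied in this work. It assumes a toy scalar ODE in place of a learned ODE block and a hand-written adaptive Heun-Euler integrator; the names `dynamics` and `odeint_adaptive` and the tolerance values are hypothetical. It illustrates that a looser tolerance lets the solver take fewer, larger steps, so the same dynamics produce a slightly different output for each tolerance.

```python
import math


def dynamics(h, t):
    """Toy scalar stand-in (an assumption) for the learned dynamics f(h, t)."""
    return math.sin(3.0 * t) - 0.5 * h


def odeint_adaptive(f, h0, t0, t1, tol):
    """Integrate dh/dt = f(h, t) from t0 to t1 with an embedded Heun-Euler pair.

    A step is accepted only when the local error estimate is at most `tol`.
    Returns the final state and the number of evaluations of f.
    """
    t, h, step, nfe = t0, h0, (t1 - t0) / 10.0, 0
    while t1 - t > 1e-12:
        step = min(step, t1 - t)               # never step past t1
        k1 = f(h, t)
        k2 = f(h + step * k1, t + step)
        nfe += 2
        euler = h + step * k1                  # first-order estimate
        heun = h + 0.5 * step * (k1 + k2)      # second-order estimate
        err = abs(heun - euler)                # local error estimate
        if err <= tol:                         # accept the step
            t, h = t + step, heun
        # Grow or shrink the step from the error estimate, within [0.2x, 2x].
        factor = 0.9 * math.sqrt(tol / err) if err > 0.0 else 2.0
        step *= min(2.0, max(0.2, factor))
    return h, nfe


# Sweep the tolerance: the output and the solver cost both depend on it.
for tol in (1e-1, 1e-2, 1e-3, 1e-4, 1e-6):
    out, nfe = odeint_adaptive(dynamics, 1.0, 0.0, 1.0, tol)
    print(f"tol={tol:.0e}  output={out:.6f}  function evaluations={nfe}")
```

In a real neural ODE classifier the state is a feature map and the solver is typically a higher-order adaptive method, but the tolerance plays the same role: it bounds the per-step error and therefore controls how closely the computed features follow the exact trajectory.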

2. Related Work

3. Background

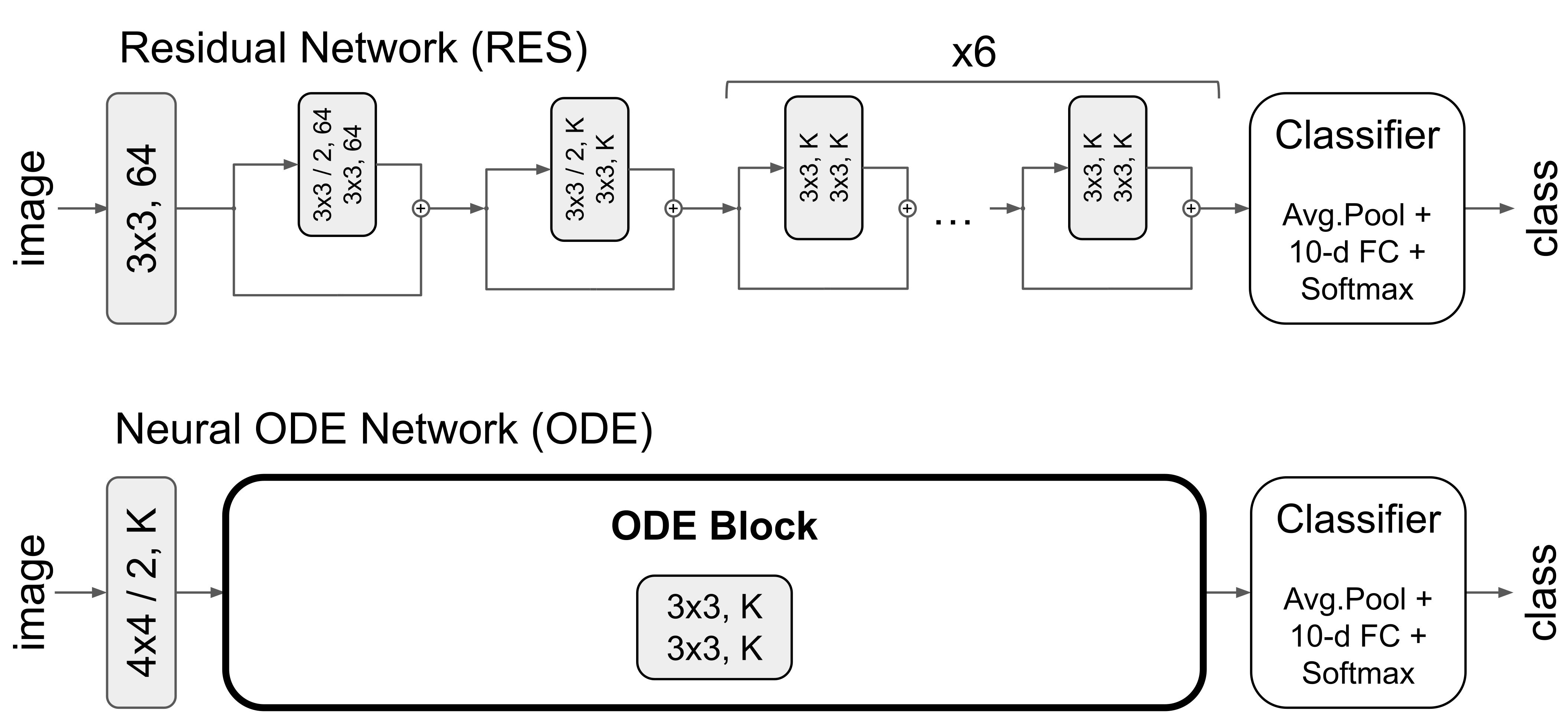

3.1. The Neural ODE Networks

3.2. The Carlini and Wagner Attack

4. Robustness via Tolerance Variation

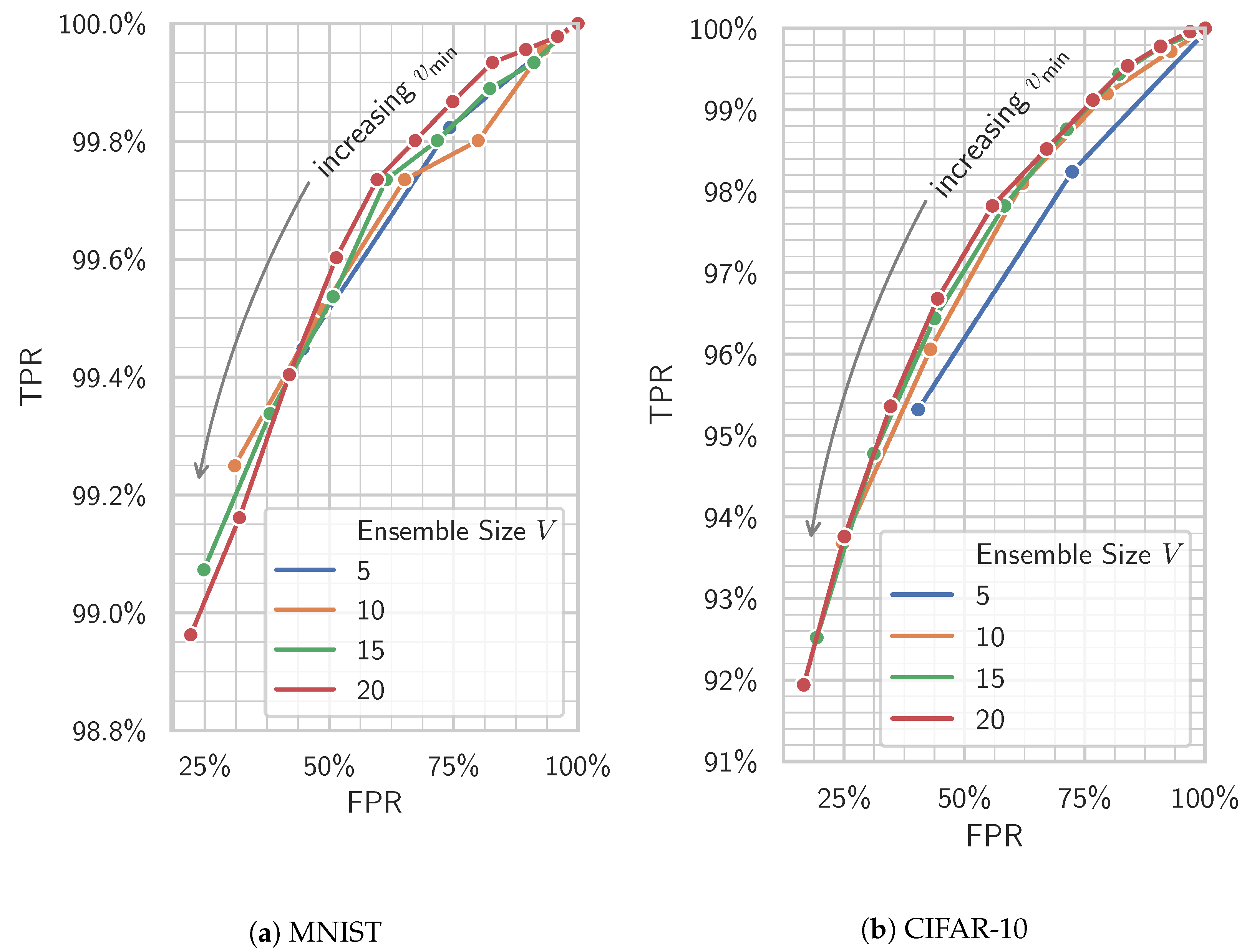

4.1. Defensive Tolerance Randomization

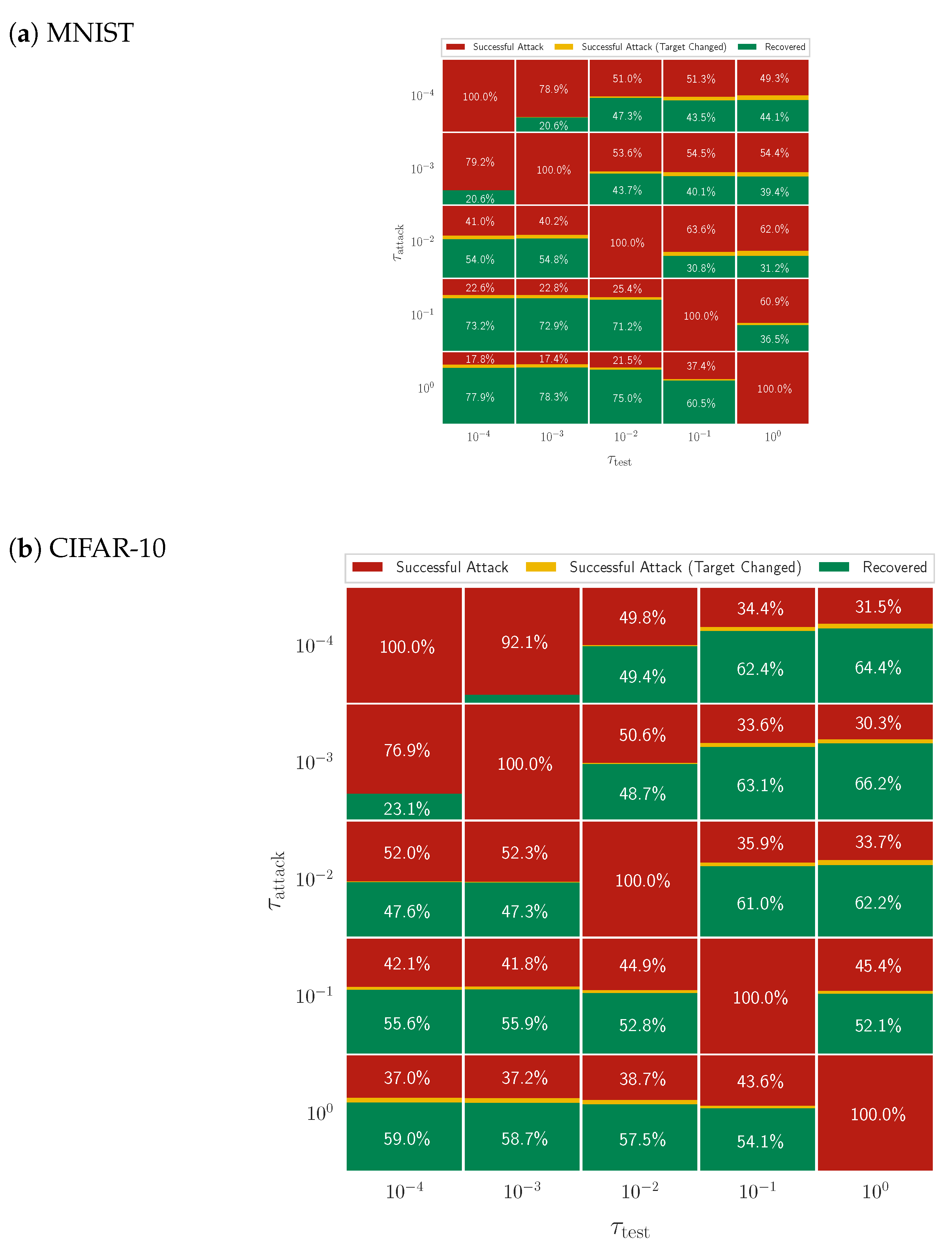

5. Robustness under Adaptive Attackers

6. Experimental Details

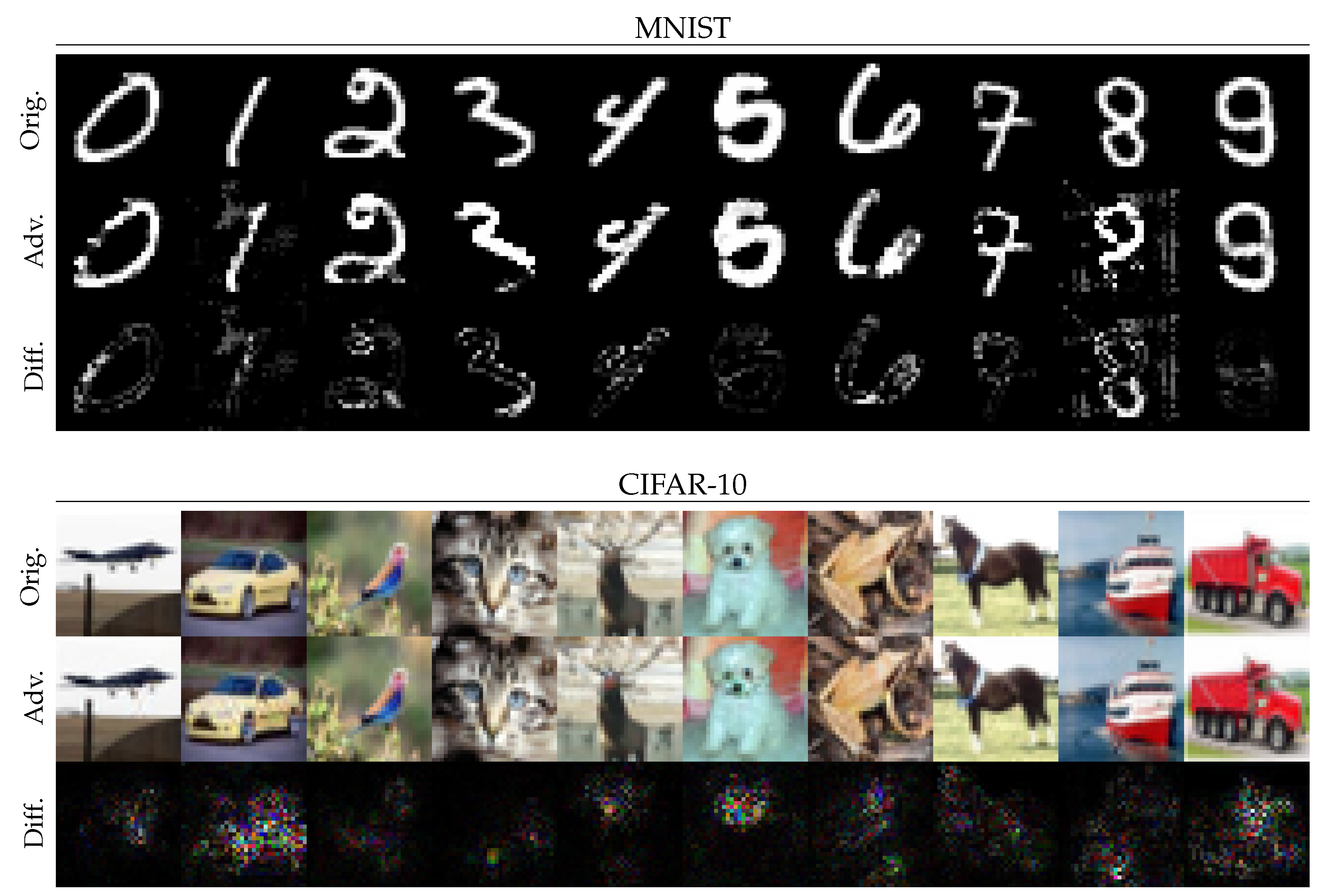

6.1. Datasets: MNIST and CIFAR-10

6.2. The Training Phase

6.3. Carlini and Wagner Attack Implementation Details

7. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | MNIST Err (%) | MNIST ASR (%) | MNIST Pert () | CIFAR-10 Err (%) | CIFAR-10 ASR (%) | CIFAR-10 Pert () |
|---|---|---|---|---|---|---|
| RES | 0.4 | 99.7 | 1.1 | 7.3 | 100 | 2.6 |
| ODE | 0.5 | 99.7 | 1.4 | 9.1 | 100 | 2.2 |
| ODE | 0.5 | 90.7 | 1.7 | 9.2 | 100 | 2.4 |
| ODE | 0.6 | 74.4 | 1.9 | 9.3 | 100 | 4.1 |
| ODE | 0.8 | 71.6 | 1.7 | 10.6 | 100 | 8.0 |
| ODE | 1.2 | 69.7 | 1.9 | 11.3 | 100 | 13.7 |
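
The trade-off in the MNIST columns of the table can be read off with a few lines of arithmetic. The sketch below assumes that Err is the classification error and ASR the attack success rate. The table lists five ODE rows without distinguishing labels, so the row names used here (`ODE #1` to `ODE #5`) and the helper `deltas_vs_baseline` are hypothetical and follow row order only.

```python
# MNIST columns copied from the table: (model, Err %, ASR %).
# Row names for the ODE entries are positional placeholders (an assumption).
mnist = [
    ("RES", 0.4, 99.7),
    ("ODE #1", 0.5, 99.7),
    ("ODE #2", 0.5, 90.7),
    ("ODE #3", 0.6, 74.4),
    ("ODE #4", 0.8, 71.6),
    ("ODE #5", 1.2, 69.7),
]


def deltas_vs_baseline(rows, baseline="RES"):
    """Return (model, change in Err, change in ASR) in percentage points."""
    base_err, base_asr = next((e, a) for m, e, a in rows if m == baseline)
    return [
        (m, round(e - base_err, 1), round(a - base_asr, 1))
        for m, e, a in rows
        if m != baseline
    ]


for model, d_err, d_asr in deltas_vs_baseline(mnist):
    print(f"{model}: error {d_err:+.1f} pts, attack success rate {d_asr:+.1f} pts")
```

Each line of output pairs the cost in classification error with the change in attack success rate for one ODE row, relative to the RES row.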

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Carrara, F.; Caldelli, R.; Falchi, F.; Amato, G. Improving the Adversarial Robustness of Neural ODE Image Classifiers by Tuning the Tolerance Parameter. Information 2022, 13, 555. https://doi.org/10.3390/info13120555