Tool Wear Prediction Based on a Multi-Scale Convolutional Neural Network with Attention Fusion

Abstract

1. Introduction

2. Related Work

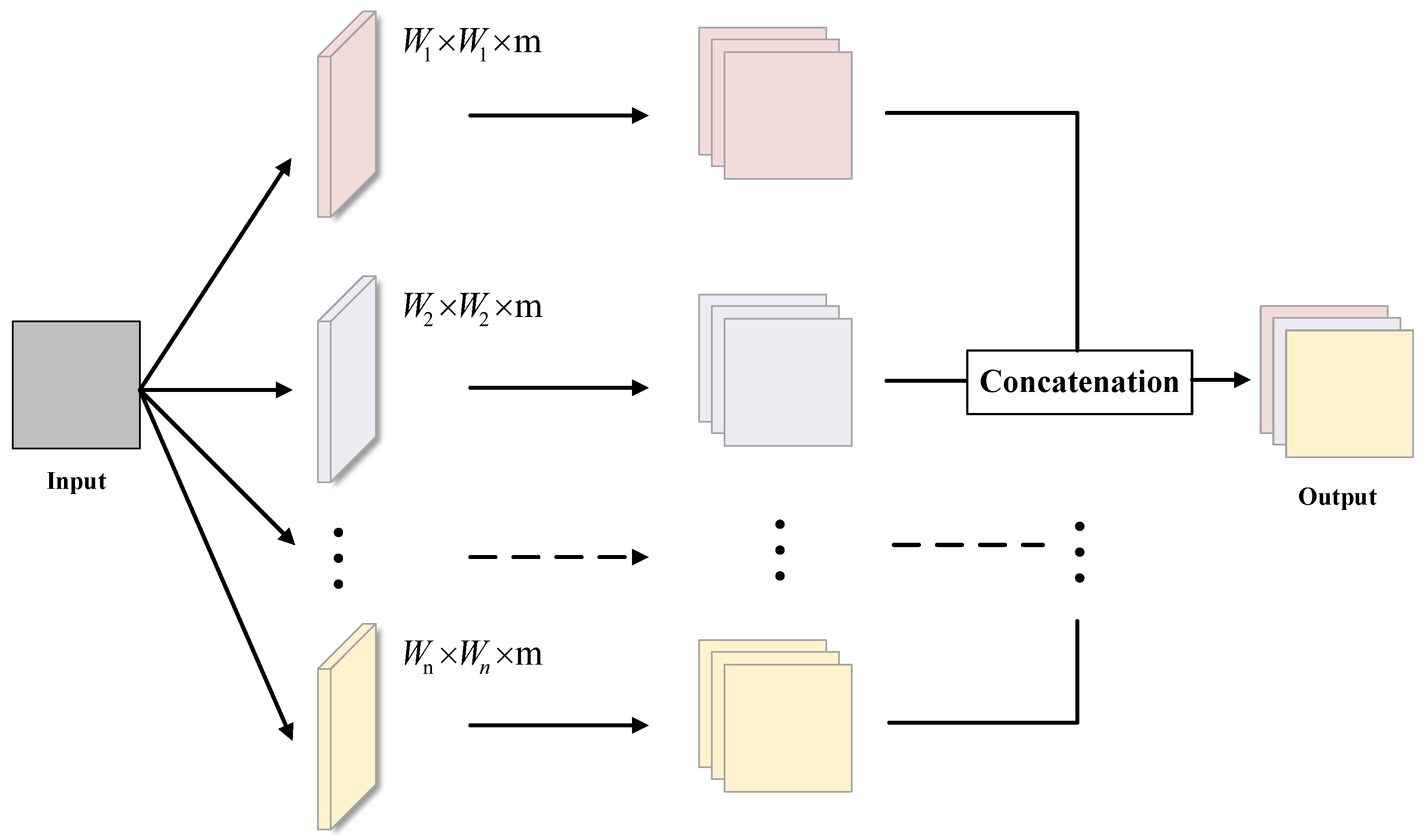

2.1. Multi-Scale Convolutional Neural Network

2.2. Attention Mechanism

3. Method

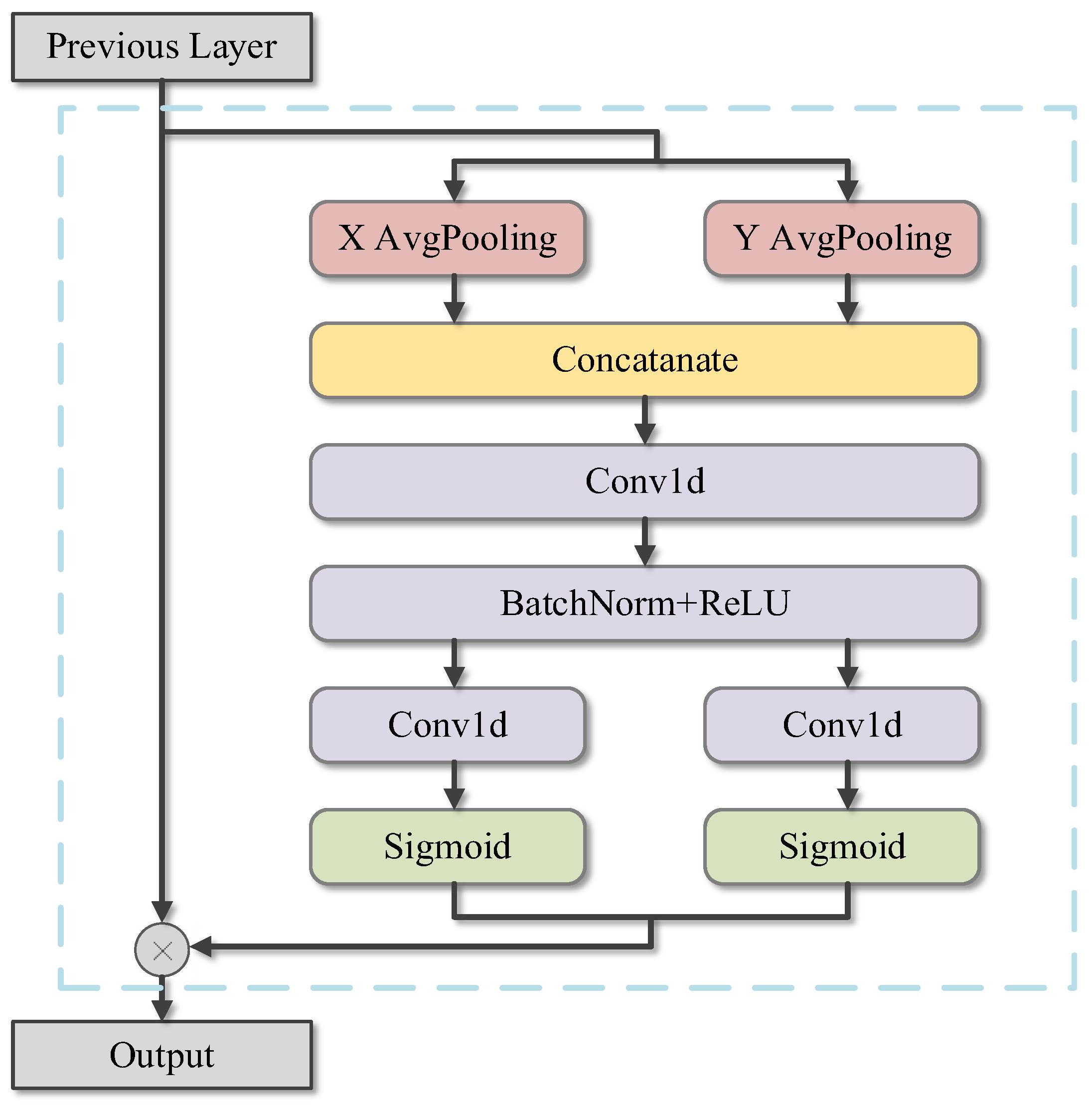

3.1. Attention Fusion Module

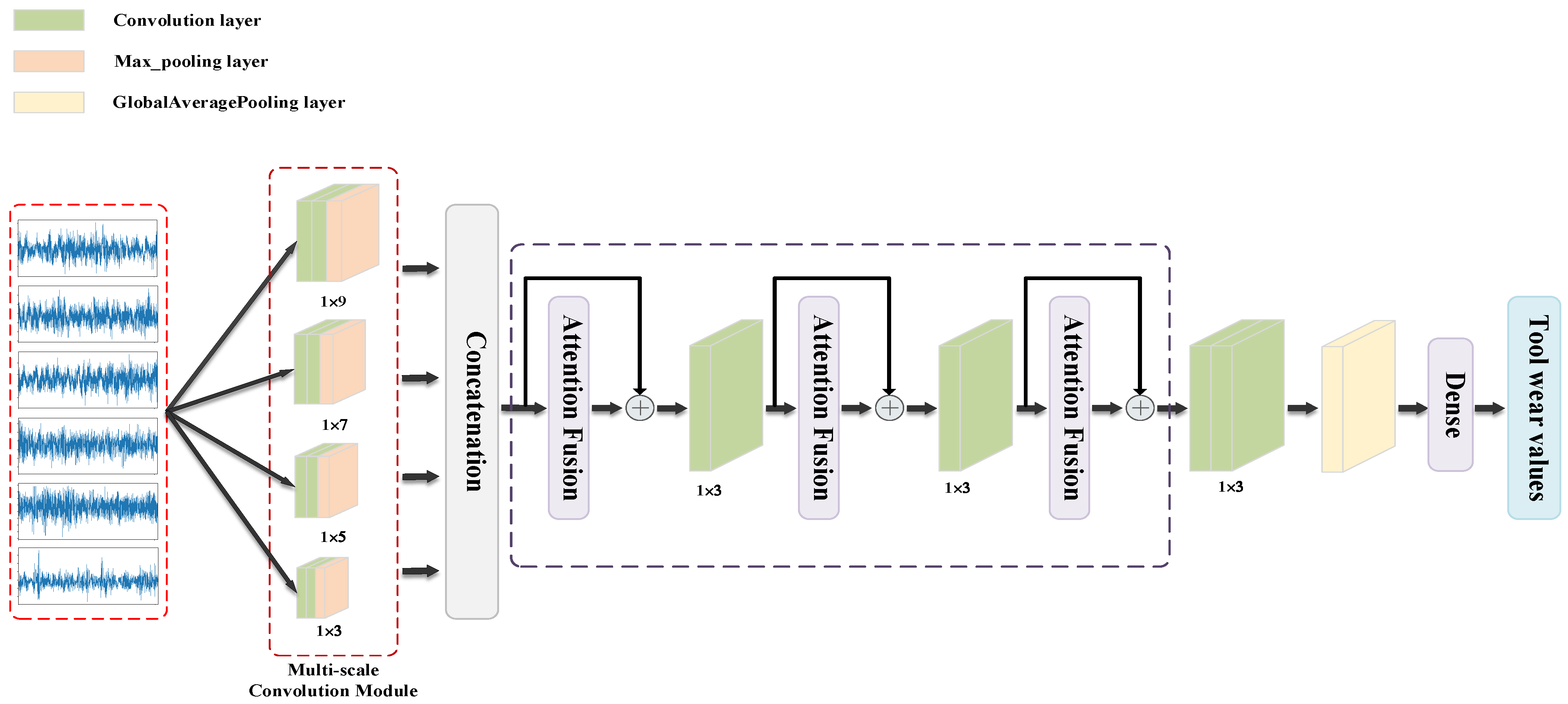

3.2. The Multi-Scale Convolutional Network with Attention Fusion

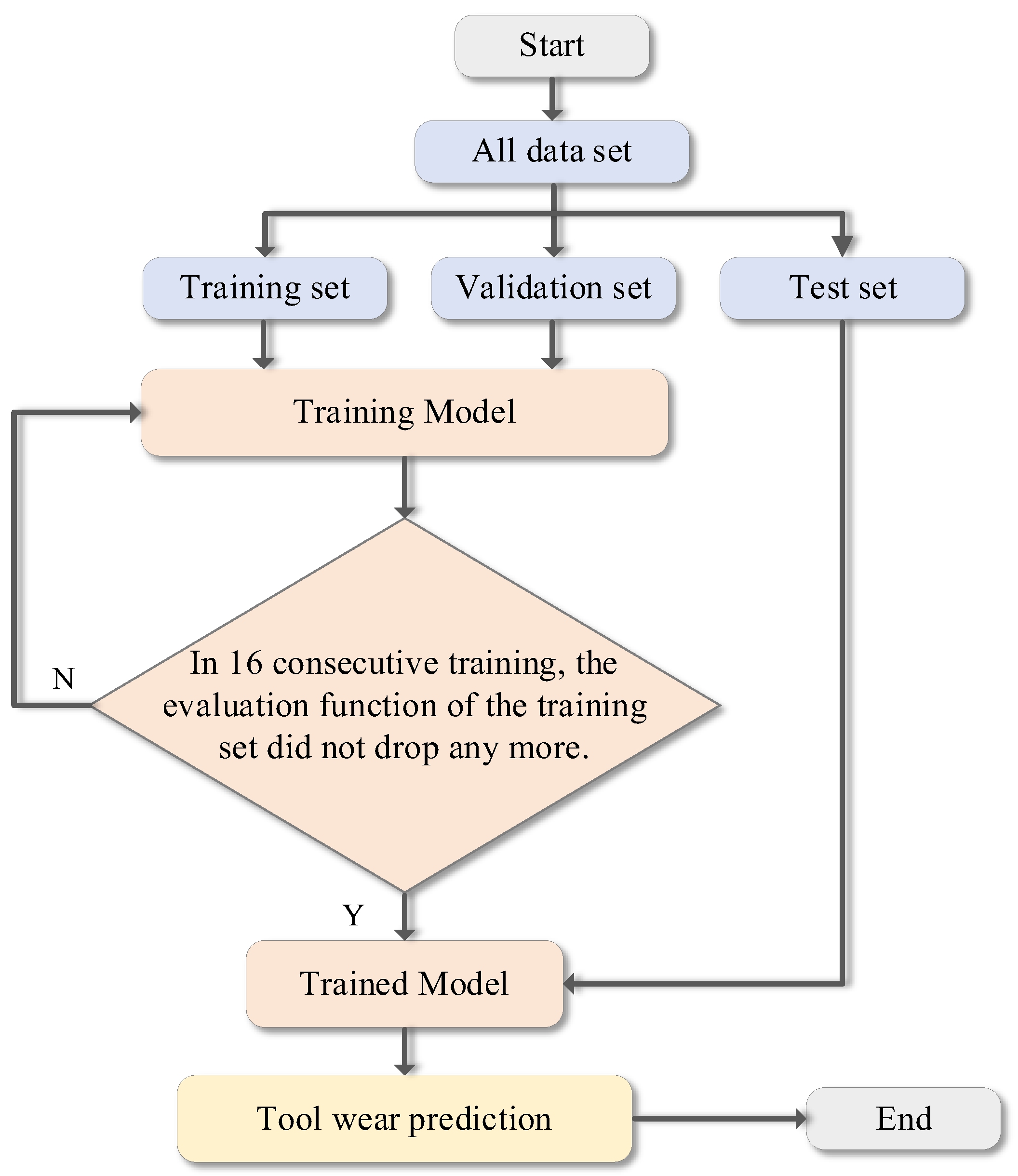

3.3. The Flowchart of the Proposed Method

(1) The pre-processed dataset is divided into a training set, a validation set, and a test set.

(2) The training set is fed into the model, and the model updates its parameters by gradient descent on the training loss. The validation set is used to compute the validation loss, on which the learning strategy bases its decision whether training should continue.

(3) After training is complete, the test set is fed into the trained model to evaluate its tool wear prediction performance.
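The three steps above can be illustrated with a minimal, self-contained sketch. It is not the authors' network: a one-variable linear model stands in for the MSCNN, and the split ratios (70/15/15), learning rate, and patience-based early stopping are all hypothetical choices used only to show how the training set drives the gradient updates while the validation loss decides when to stop, before the test set is used once for evaluation.

```python
import random


def split_dataset(samples, train_ratio=0.7, val_ratio=0.15, seed=0):
    """Shuffle and split samples into training, validation and test sets (hypothetical ratios)."""
    rng = random.Random(seed)
    shuffled = samples[:]
    rng.shuffle(shuffled)
    n_train = int(len(shuffled) * train_ratio)
    n_val = int(len(shuffled) * val_ratio)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


def mse(w, b, data):
    """Mean squared error of the stand-in model y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train_with_early_stopping(train, val, lr=0.1, max_epochs=2000, patience=20):
    """Step (2): gradient descent on the training loss; stop once the validation
    loss has not improved for `patience` consecutive epochs (hypothetical strategy)."""
    w, b = 0.0, 0.0
    best_loss, best_w, best_b = float("inf"), w, b
    epochs_without_improvement = 0
    n = len(train)
    for _ in range(max_epochs):
        # Gradients of the training MSE with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train) / n
        w -= lr * grad_w
        b -= lr * grad_b
        # The validation loss only decides whether to continue; it never drives the updates
        val_loss = mse(w, b, val)
        if val_loss < best_loss:
            best_loss, best_w, best_b = val_loss, w, b
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_w, best_b


# Usage on synthetic data (hypothetical): y = 2x + 1 plus small Gaussian noise
rng = random.Random(42)
data = [(x, 2 * x + 1 + rng.gauss(0, 0.05)) for x in (rng.random() for _ in range(200))]
train, val, test = split_dataset(data)               # step (1)
w, b = train_with_early_stopping(train, val)          # step (2)
test_mse = mse(w, b, test)                            # step (3)
print(f"w={w:.3f}, b={b:.3f}, test MSE={test_mse:.4f}")
```

Keeping the parameters with the best validation loss, rather than the last ones, is one common way to realize such a learning strategy; the paper's actual stopping rule may differ.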

4. Experiment

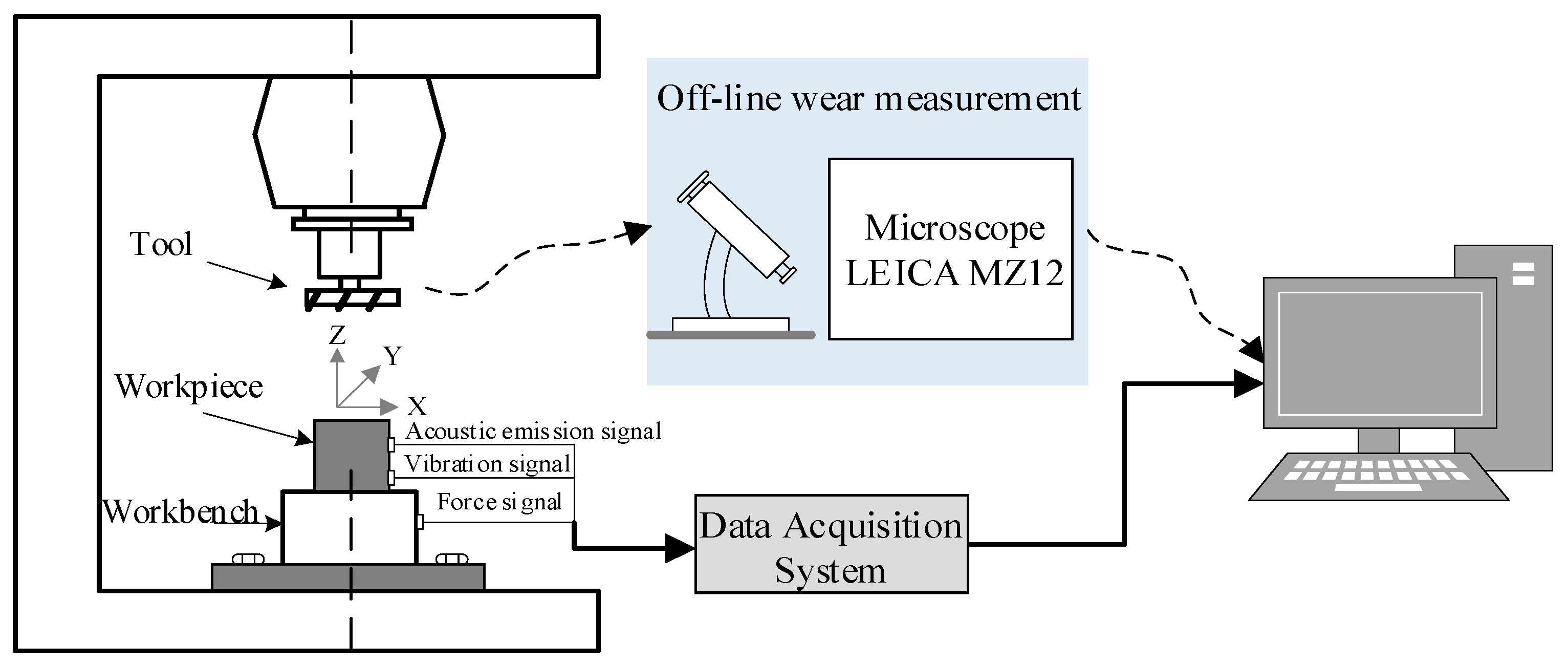

4.1. Data Description

4.2. Data Processing

4.3. Parameter Settings

4.4. Evaluation Indicators

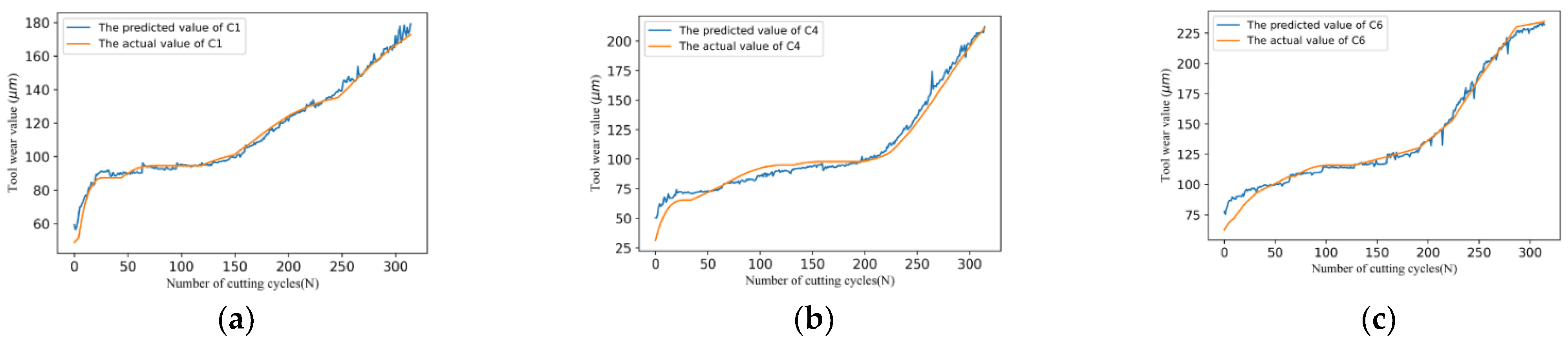

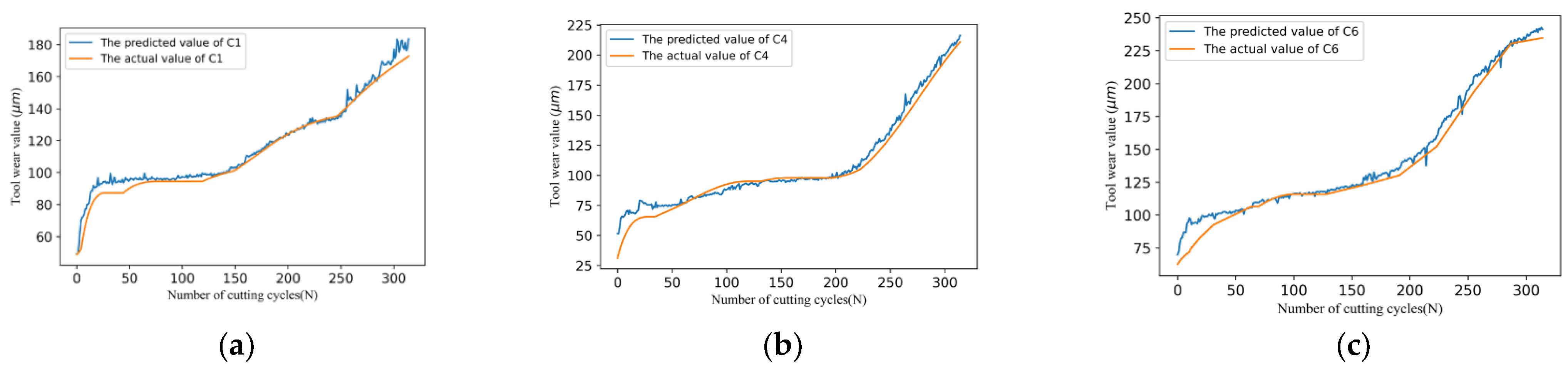

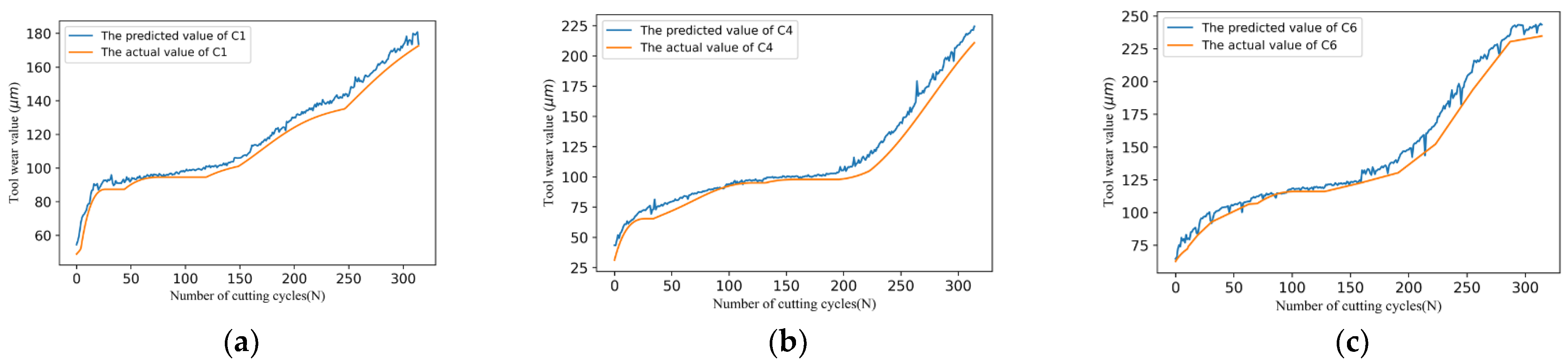

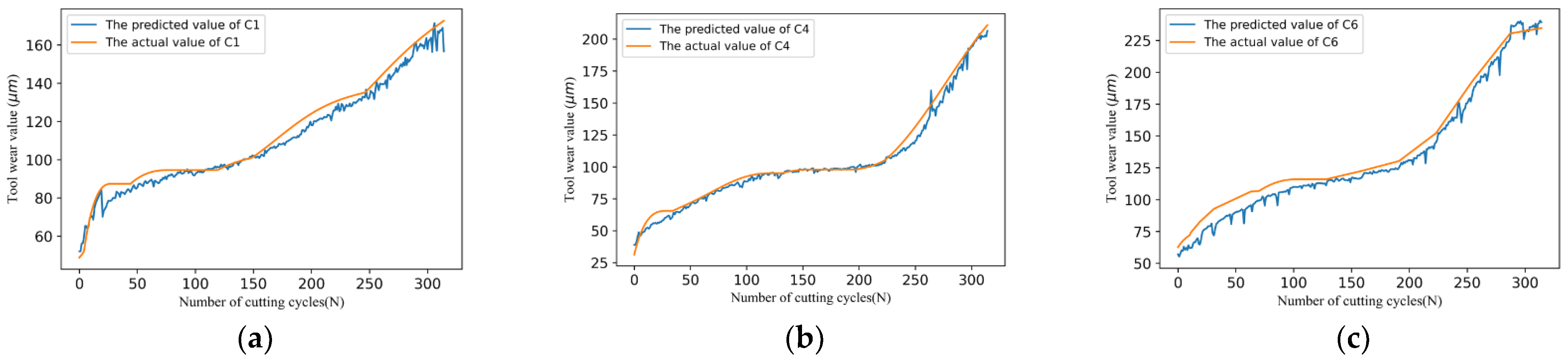

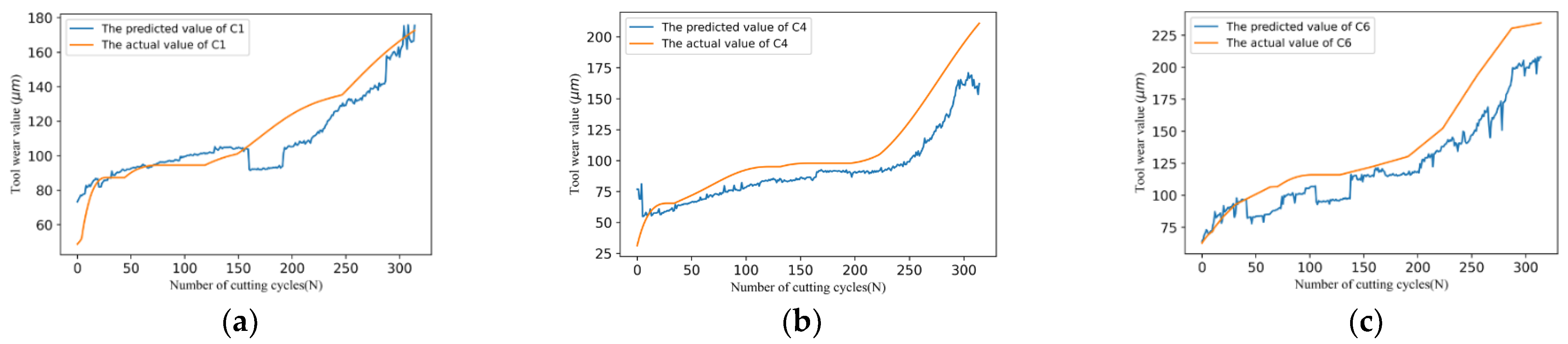

4.5. Experimental Results and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Spindle Speed (r/min) | Feed Rate (mm/min) | Radial Depth of Cut (mm) | Axial Depth of Cut (mm) | Sampling Frequency (kHz) |
|---|---|---|---|---|
| 10,400 | 1555 | 0.125 | 0.2 | 50 |
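As a quick reading aid for the cutting parameters above (our own arithmetic, not a value reported by the authors), the feed per spindle revolution and the number of samples recorded per revolution follow directly from the table. The symbols $v_f$ (feed rate), $n$ (spindle speed) and $f_s$ (sampling frequency) are notation introduced here for illustration.

```latex
\begin{aligned}
f_{\mathrm{rev}} &= \frac{v_f}{n} = \frac{1555\ \mathrm{mm/min}}{10{,}400\ \mathrm{r/min}} \approx 0.1495\ \mathrm{mm/r} \\
N_{\mathrm{rev}} &= \frac{60\, f_s}{n} = \frac{60\ \mathrm{s/min} \times 50{,}000\ \mathrm{samples/s}}{10{,}400\ \mathrm{r/min}} \approx 288.5\ \mathrm{samples/r}
\end{aligned}
```

Roughly 288 samples therefore span one spindle revolution, which gives a sense of how many revolutions a fixed-length signal window covers.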

| Model | MAE ± STD | MSE ± STD | MAPE/% ± STD | R² ± STD |
|---|---|---|---|---|
| MSCNN | 5.65 ± 2.42 | 49.13 ± 31.68 | 4.94 ± 0.19 | 0.968 ± 0.02 |
| MSCNN + SE | 5.39 ± 2.06 | 51.56 ± 42.06 | 4.76 ± 0.17 | 0.955 ± 0.05 |
| MSCNN + ELCA | 5.44 ± 1.33 | 50.71 ± 22.98 | 4.84 ± 0.11 | 0.969 ± 0.01 |
| MSCNN + MAS | 5.59 ± 0.59 | 45.92 ± 13.39 | 4.98 ± 0.06 | 0.967 ± 0.01 |
| MSCNN + CBAM | 11.53 ± 6.52 | 223.01 ± 217.29 | 9.48 ± 0.05 | 0.873 ± 0.12 |
| The proposed model | 4.29 ± 1.11 | 34.00 ± 13.91 | 3.70 ± 0.09 | 0.975 ± 0.01 |
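The formulas behind these indicators are not reproduced in this text, so the sketch below assumes the standard definitions of MAE, MSE, MAPE (in %) and the coefficient of determination R², and further assumes that each "± STD" entry is the sample standard deviation over repeated runs. The function names and the example wear values are hypothetical.

```python
import statistics


def mae(y_true, y_pred):
    """Mean absolute error (standard definition, assumed)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error (standard definition, assumed)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean absolute percentage error in %; assumes no true value is zero."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mean_std(values):
    """Mean and sample standard deviation of a metric over repeated runs (assumed protocol)."""
    return statistics.mean(values), statistics.stdev(values)


# Usage with hypothetical measured vs. predicted wear values
y_true = [100.0, 120.0, 140.0, 160.0]
y_pred = [104.0, 118.0, 143.0, 155.0]
print(mae(y_true, y_pred), mse(y_true, y_pred), mape(y_true, y_pred), r2(y_true, y_pred))
```

Because MSE squares each error, a few large deviations inflate it far more than MAE, which is one reason the MSE spread in the table can be much wider than the MAE spread for the same model.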

| Model | MAE | MSE | MAPE/% |
|---|---|---|---|
| MSCNN + ELCA | 49.57 | 3037.93 | 72.71 |
| MSCNN + MAS | 35.29 | 1815.92 | 40.17 |
| MSCNN + CBAM | 75.73 | 6434.62 | 194.94 |
| The proposed model | 30.08 | 1783.13 | 30.24 |

| Model | MAE | MSE | MAPE/% |
|---|---|---|---|
| MSCNN + ELCA | 19.53 | 647.84 | 15.29 |
| MSCNN + MAS | 18.51 | 659.42 | 15.03 |
| MSCNN + CBAM | 22.34 | 810.00 | 18.16 |
| The proposed model | 17.04 | 527.03 | 14.21 |

| Model | MAE | MSE | MAPE/% |
|---|---|---|---|
| MSCNN + ELCA | 13.96 | 234.46 | 15.64 |
| MSCNN + MAS | 22.79 | 574.39 | 24.99 |
| MSCNN + CBAM | 20.59 | 597.66 | 15.83 |
| The proposed model | 6.41 | 84.045 | 6.01 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, Q.; Wu, D.; Huang, H.; Zhang, Y.; Han, Y. Tool Wear Prediction Based on a Multi-Scale Convolutional Neural Network with Attention Fusion. Information 2022, 13, 504. https://doi.org/10.3390/info13100504