What Constitutes Fairness in Games? A Case Study with Scrabble

Abstract

1. Introduction

2. Literature Review and Related Work

2.1. Artificial Intelligence (AI) and Fairness in Games

2.2. Game Refinement Theory

2.3. Gamified Experience and the Notion of Fairness

2.3.1. Definition of Outcome Fairness

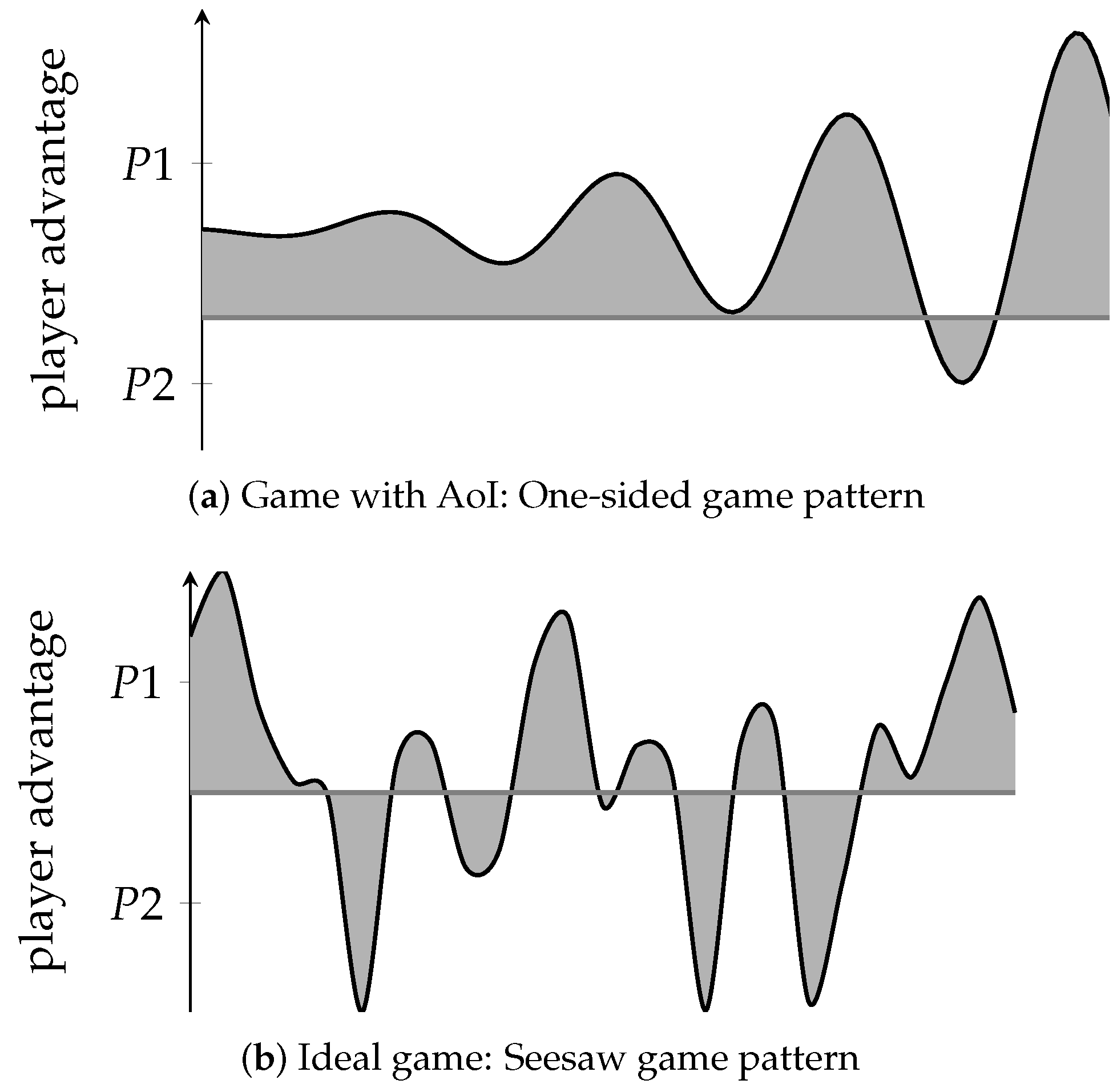

2.3.2. Definition of Process Fairness

- (1) Board games:

- (2) Scoring games: Let W and L be the number of advantages and the number of disadvantages in games whose pattern has an observable score. The score rate p is given by (10), which implies that W and L are almost equal when a game is fair.

2.3.3. Momentum, Force, and Potential Energy in Games

2.4. Evolution of Fair Komi in Go

3. The Proposed Assessment Method

3.1. Dynamic versus Static Komi

3.2. Scrabble AI and Play Strategies

- Mid-game: This phase lasts from the beginning until there are nine or fewer tiles left in the bag.

- Pre-endgame: This phase starts when nine tiles are left in the bag. It is designed to attempt to yield a good end-game situation.

- Endgame: During this phase, there are no tiles left in the bag, and the state of the Scrabble game becomes a perfect information game.
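The three phases above depend only on how many tiles remain in the bag, so the split can be sketched as a small classifier. This is a minimal illustration, not the engine's actual code: the names `Phase` and `scrabble_phase` are hypothetical, and treating every count from one to nine as pre-endgame is an assumption read off the descriptions above.

```python
from enum import Enum


class Phase(Enum):
    MID_GAME = "mid-game"
    PRE_ENDGAME = "pre-endgame"
    ENDGAME = "endgame"


def scrabble_phase(tiles_in_bag: int) -> Phase:
    """Map the number of tiles left in the bag to a game phase.

    Assumption: 1 to 9 tiles counts as pre-endgame, 0 tiles as endgame,
    and anything above 9 as mid-game.
    """
    if tiles_in_bag < 0:
        raise ValueError("tiles_in_bag must be non-negative")
    if tiles_in_bag == 0:
        # Empty bag: both racks can be deduced, so the game is
        # a perfect information game from here on.
        return Phase.ENDGAME
    if tiles_in_bag <= 9:
        return Phase.PRE_ENDGAME
    return Phase.MID_GAME


if __name__ == "__main__":
    for tiles in (86, 10, 9, 1, 0):
        print(tiles, scrabble_phase(tiles).value)
```

A player agent could call such a function once per turn to decide which search strategy to apply.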

4. Computational Experiment and Result Analysis

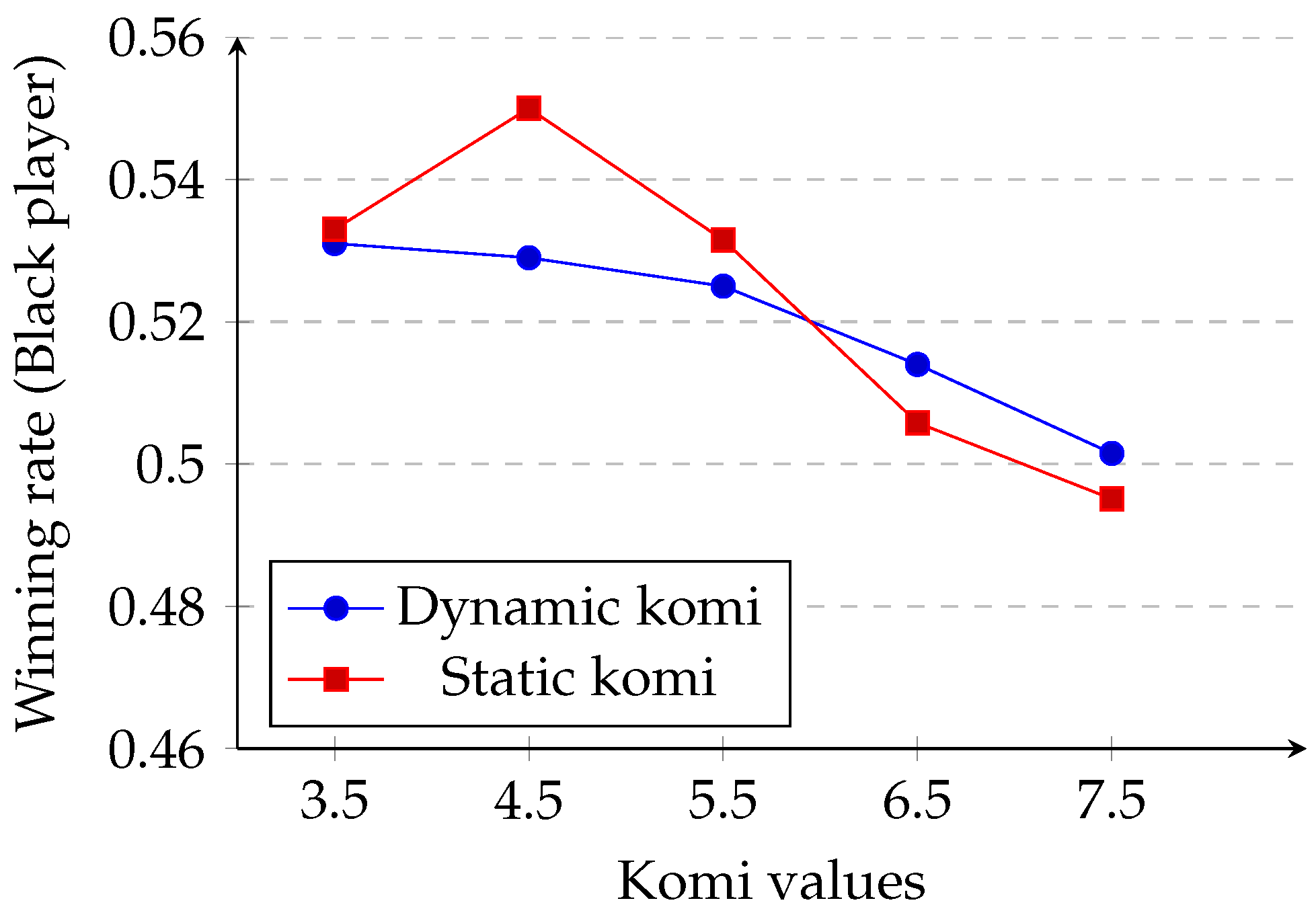

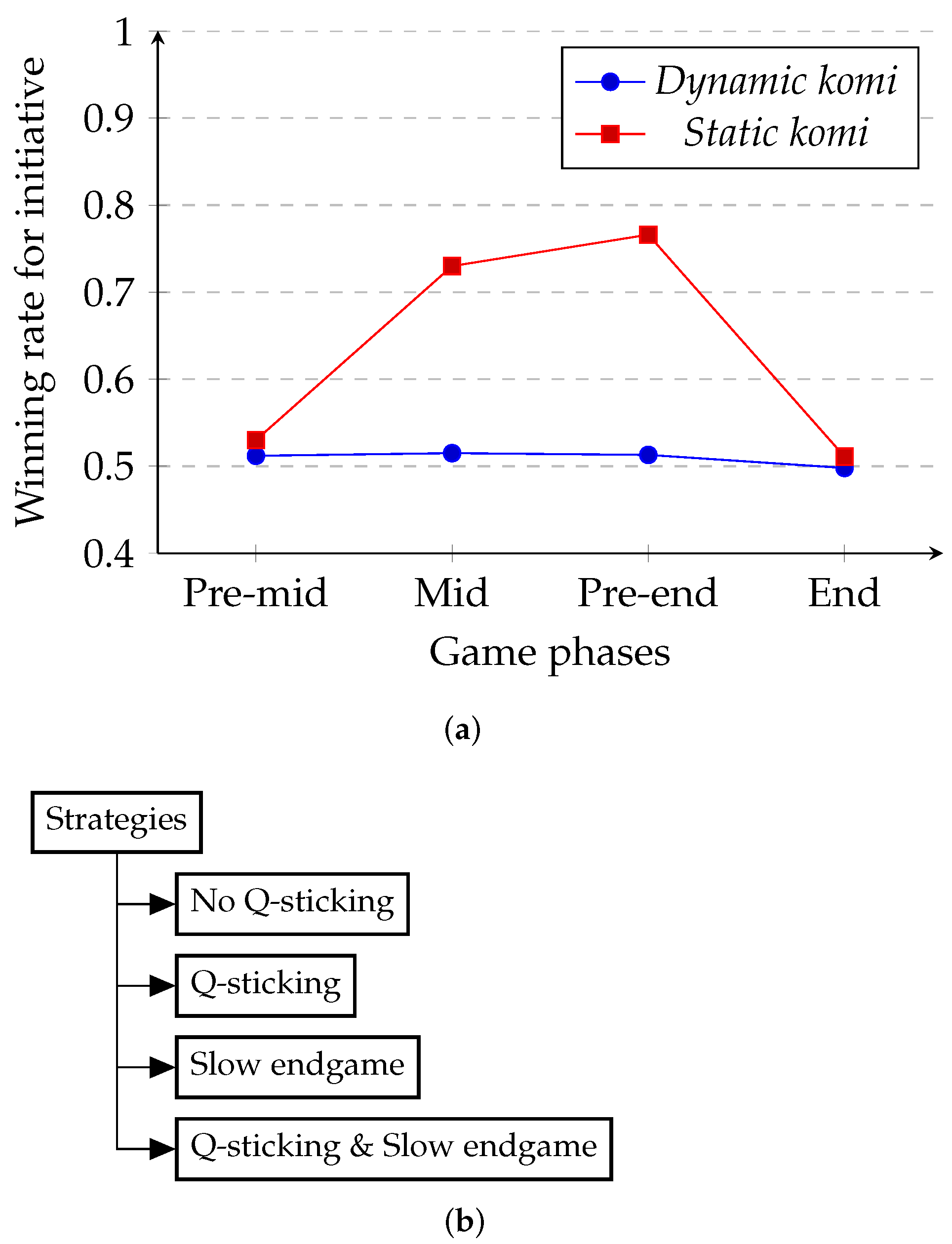

4.1. Analysis of Dynamic Komi in the Game of Go

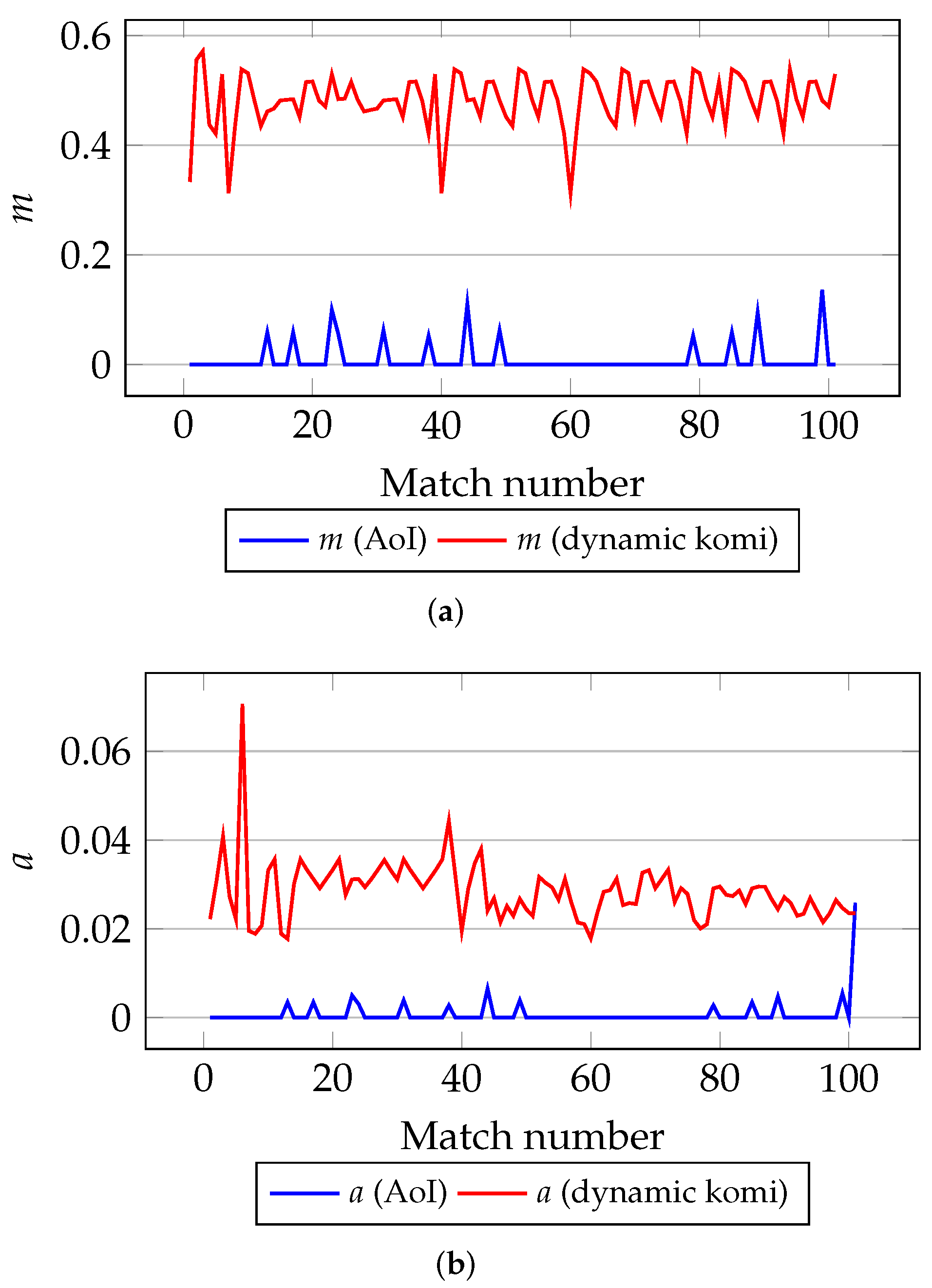

4.2. Analysis of Dynamic Komi in the Game of Scrabble

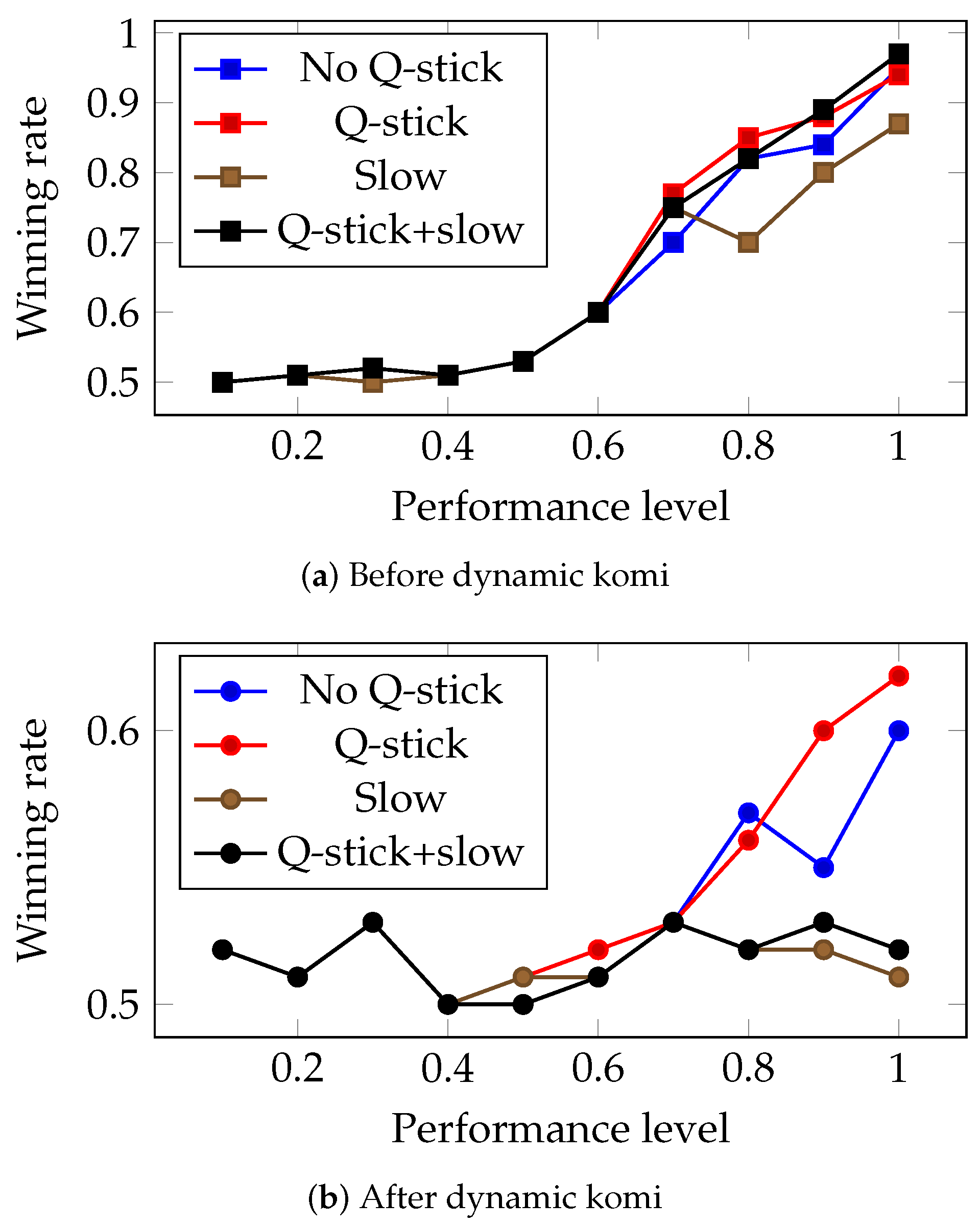

4.3. Optimal Play Strategy in Scrabble

4.4. Link between Play Strategy and Fairness

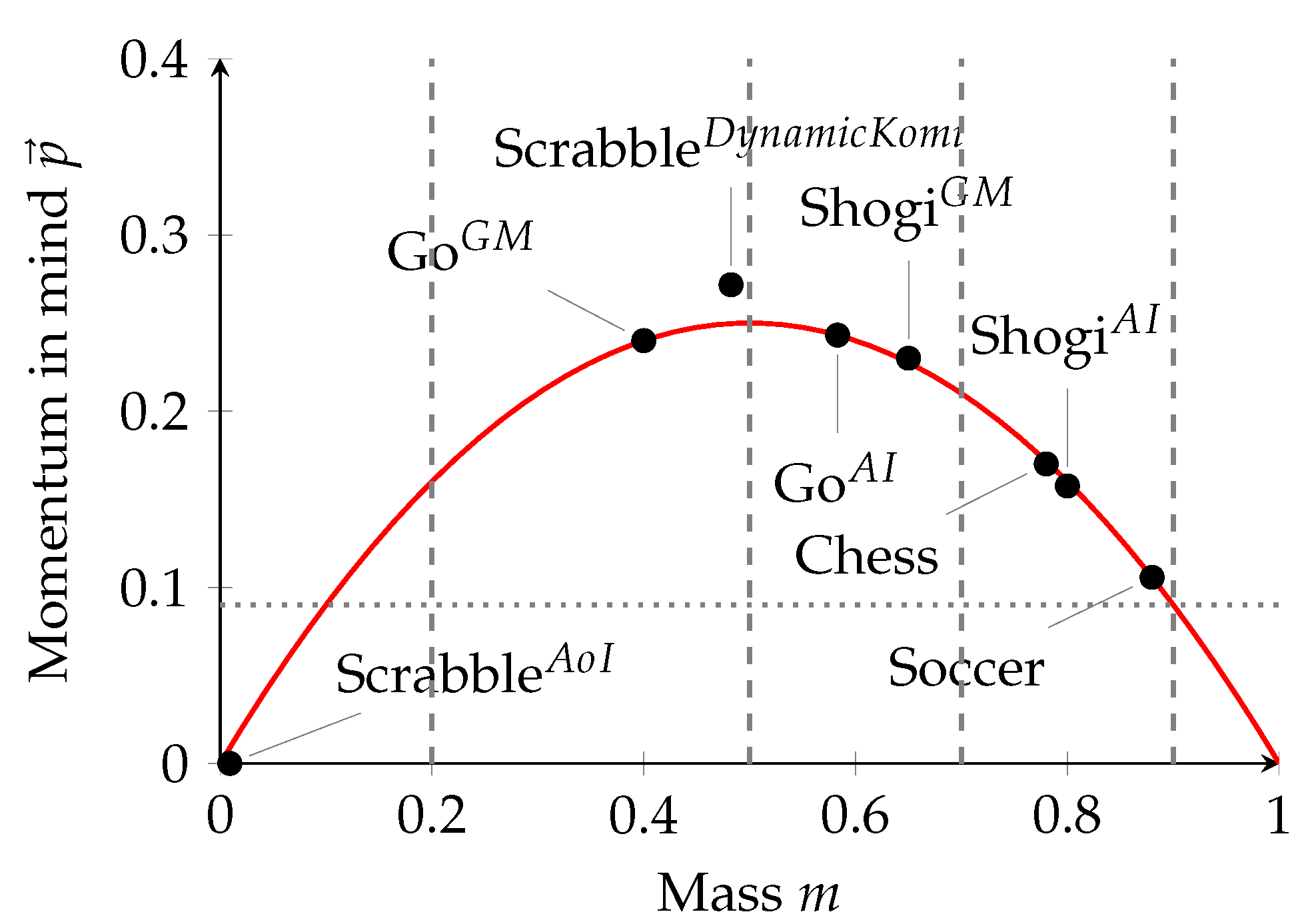

4.5. Interpretation of m with Respect to Fairness from Entertainment Perspective

4.6. Interpretation of m with Respect to Fairness from the Physics-in-Mind Perspective

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Player | No. of Games | Winning Probability |
|---|---|---|
| Black | 6701 | 53.15% |
| White | 5906 | 46.85% |
| Total | 12,607 | |

| Komi Value | Static Komi: Black | Static Komi: White | Dynamic Komi: Black | Dynamic Komi: White |
|---|---|---|---|---|
| 3.5 | 53.30% | 46.70% | 53.10% | 46.90% |
| 4.5 | 55.00% | 45.00% | 52.90% | 47.10% |
| 5.5 | 53.15% | 46.85% | 52.50% | 47.50% |
| 6.5 | 50.58% | 49.42% | 51.40% | 48.60% |
| 7.5 | 49.51% | 50.49% | 50.15% | 49.85% |
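One way to read the komi table above is to measure how far Black's winning rate sits from an even 50% split for each komi value. The sketch below does that with the table's own numbers. Using the absolute deviation from 50% as a "fairness gap" is an assumption of this example, and the names `fairness_gap` and `fairest_komi` are hypothetical.

```python
# Black's winning rate (%) per komi value, copied from the table above.
STATIC_KOMI = {3.5: 53.30, 4.5: 55.00, 5.5: 53.15, 6.5: 50.58, 7.5: 49.51}
DYNAMIC_KOMI = {3.5: 53.10, 4.5: 52.90, 5.5: 52.50, 6.5: 51.40, 7.5: 50.15}


def fairness_gap(black_win_pct: float) -> float:
    """Absolute distance of Black's winning rate from an even 50% split."""
    return abs(black_win_pct - 50.0)


def fairest_komi(black_win_by_komi: dict) -> float:
    """Return the komi value whose winning rate is closest to 50%."""
    return min(black_win_by_komi, key=lambda k: fairness_gap(black_win_by_komi[k]))


if __name__ == "__main__":
    for label, table in (("static", STATIC_KOMI), ("dynamic", DYNAMIC_KOMI)):
        komi = fairest_komi(table)
        print(f"{label}: komi {komi}, gap {fairness_gap(table[komi]):.2f} points")
```

Under this measure, a smaller gap means the two sides win closer to equally often at that komi value.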

| Game | G or B | T or D | GR |
|---|---|---|---|
| Xiangqi | 38.00 | 95.00 | 0.065 |
| Soccer | 2.64 | 22.00 | 0.073 |
| Basketball | 36.38 | 82.01 | 0.073 |
| Chess | 35.00 | 80.00 | 0.074 |
| Go | 250.00 | 208.00 | 0.076 |
| Table tennis | 54.86 | 96.47 | 0.077 |
| UNO® | 0.98 | 12.68 | 0.078 |
| DotA® | 68.60 | 106.20 | 0.078 |
| Shogi | 80.00 | 115.00 | 0.078 |
| Badminton | 46.34 | 79.34 | 0.086 |
| Scrabble * | 2.79 | 31.54 | 0.053 |
| Scrabble ** | 10.25 | 39.56 | 0.080 |
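In the table above, the last value in each row is numerically consistent with the square root of the first value divided by the second, which is the form usually given for the game refinement measure. The sketch below recomputes it for a few rows. The formula is an inference from the numbers rather than something stated in this section, and the function name `refinement` is hypothetical.

```python
import math


def refinement(g: float, t: float) -> float:
    """Square root of the first table value divided by the second.

    Assumption: this matches the measure reported in the last column;
    the relation is inferred from the tabulated numbers.
    """
    return math.sqrt(g) / t


# (first value, second value, reported third value), copied from the table.
ROWS = {
    "Xiangqi": (38.00, 95.00, 0.065),
    "Chess": (35.00, 80.00, 0.074),
    "Go": (250.00, 208.00, 0.076),
    "Scrabble *": (2.79, 31.54, 0.053),
    "Scrabble **": (10.25, 39.56, 0.080),
}

if __name__ == "__main__":
    for game, (g, t, reported) in ROWS.items():
        print(f"{game}: computed {refinement(g, t):.4f}, reported {reported}")
```

The computed values agree with the reported ones up to rounding in the third decimal place.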

| Game | G or B | T or D | m | p | F | E_p |
|---|---|---|---|---|---|---|
| Chess | 35.00 | 80.00 | 0.7813 | 0.1708 | 0.0152 | 0.07474 |
| Go | 250.00 | 208.00 | 0.4000 | 0.2400 | 0.0015 | 0.2880 |
| Go | 220.00 | 264.00 | 0.5830 | 0.2431 | 0.0026 | 0.2028 |
| Shogi | 80.00 | 115.00 | 0.6500 | 0.2300 | 0.0073 | 0.1593 |
| Shogi | 80.00 | 204.00 | 0.8040 | 0.1575 | 0.0063 | 0.0640 |
| Soccer | 2.64 | 22.00 | 0.8800 | 0.1056 | 0.0704 | 0.0253 |
| Scrabble * | 2.79 | 31.54 | 0.0091 | 0.00006 | 0.000004 | 0.000001 |
| Scrabble ** | 10.25 | 39.56 | 0.4824 | 0.27170 | 0.0137 | 0.3061 |
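For the chess, Go, and soccer rows of the table above, three of the tabulated values can be reproduced from the first two numbers in each row. The sketch below assumes a velocity v equal to B/(2D) for board games and G/T for scoring games, a mass m = 1 - v, a momentum m·v, and a potential energy 2·m·v². These relations are inferred from the numbers, not quoted from this section, and all names are hypothetical. The remaining column is left out because this section does not state how it is derived.

```python
def velocity(first: float, second: float, board_game: bool) -> float:
    """Assumed velocity: B / (2 * D) for board games, G / T for scoring games."""
    return first / (2.0 * second) if board_game else first / second


def motion_measures(first: float, second: float, board_game: bool) -> dict:
    """Return the assumed mass, momentum, and potential energy for one row."""
    v = velocity(first, second, board_game)
    m = 1.0 - v                   # assumed mass
    return {
        "m": m,
        "p": m * v,               # assumed momentum
        "E_p": 2.0 * m * v ** 2,  # assumed potential energy
    }


if __name__ == "__main__":
    rows = {
        "Chess": (35.00, 80.00, True),
        "Go": (250.00, 208.00, True),
        "Soccer": (2.64, 22.00, False),
    }
    for game, (first, second, board_game) in rows.items():
        measures = motion_measures(first, second, board_game)
        print(game, {name: round(value, 4) for name, value in measures.items()})
```

The Scrabble rows do not follow these relations from their first two values, so they are not included in the example.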

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aung, H.P.P.; Khalid, M.N.A.; Iida, H. What Constitutes Fairness in Games? A Case Study with Scrabble. Information 2021, 12, 352. https://doi.org/10.3390/info12090352