An Inspection and Classification System for Automotive Component Remanufacturing Industry Based on Ensemble Learning

Abstract

1. Introduction

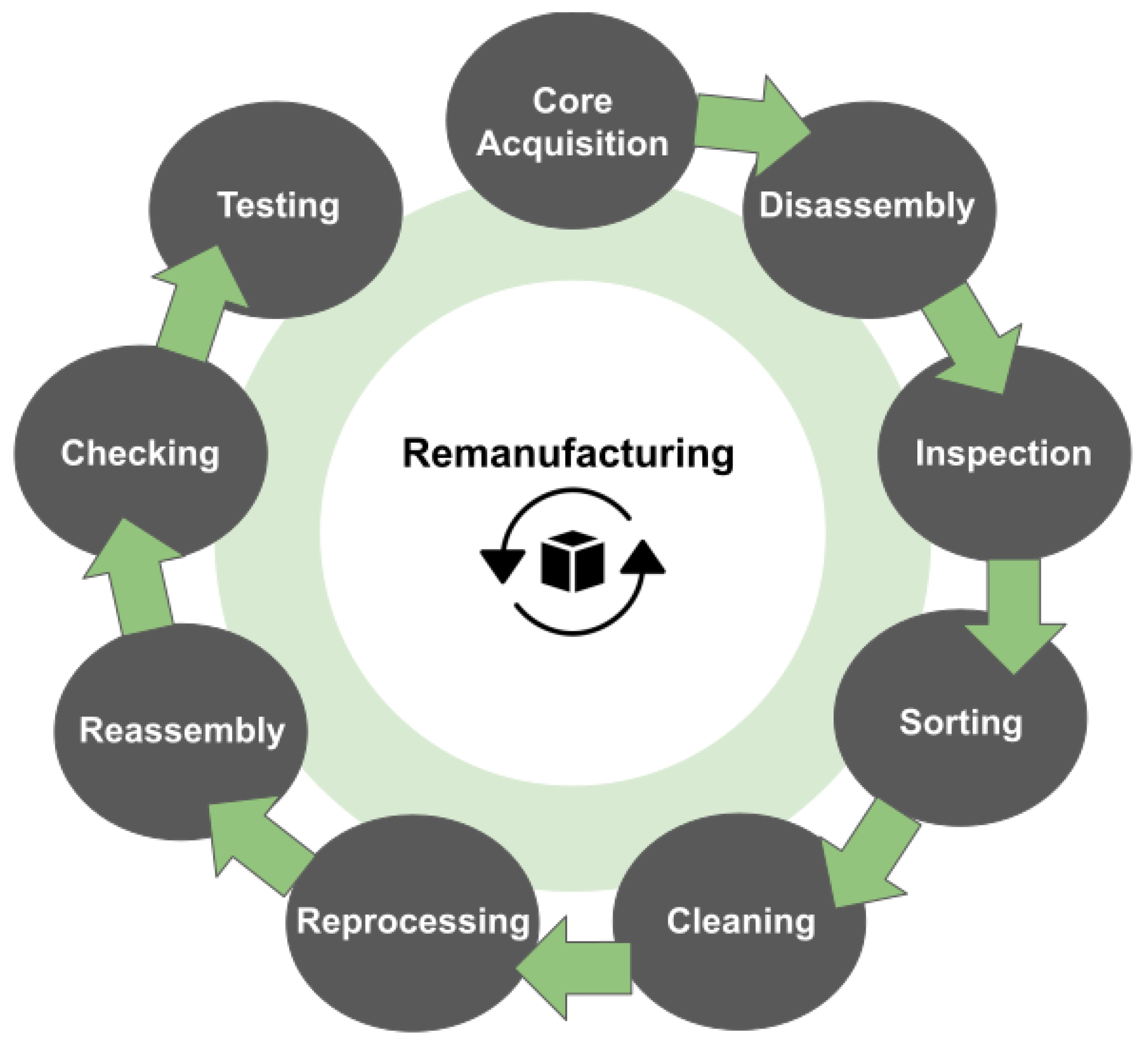

1.1. Remanufacturing Process in the Manufacturing Industry

1.2. Machine Vision Applications for Quality Control

1.3. Main Contributions

2. Materials and Methods

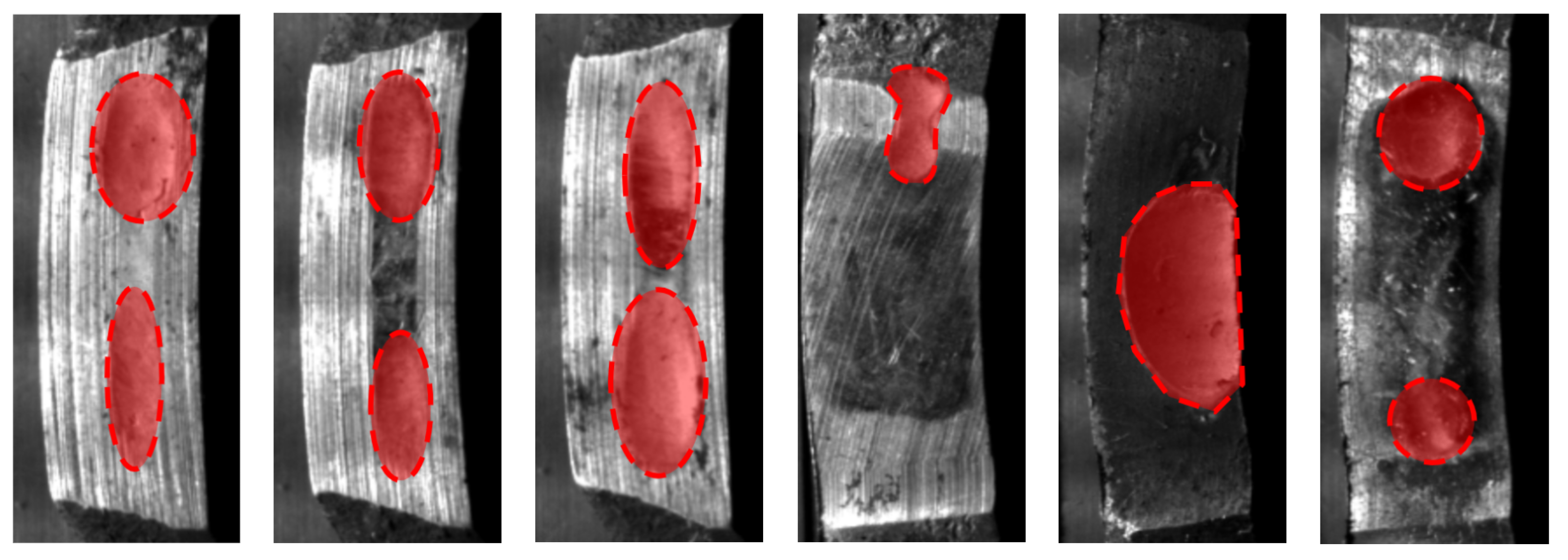

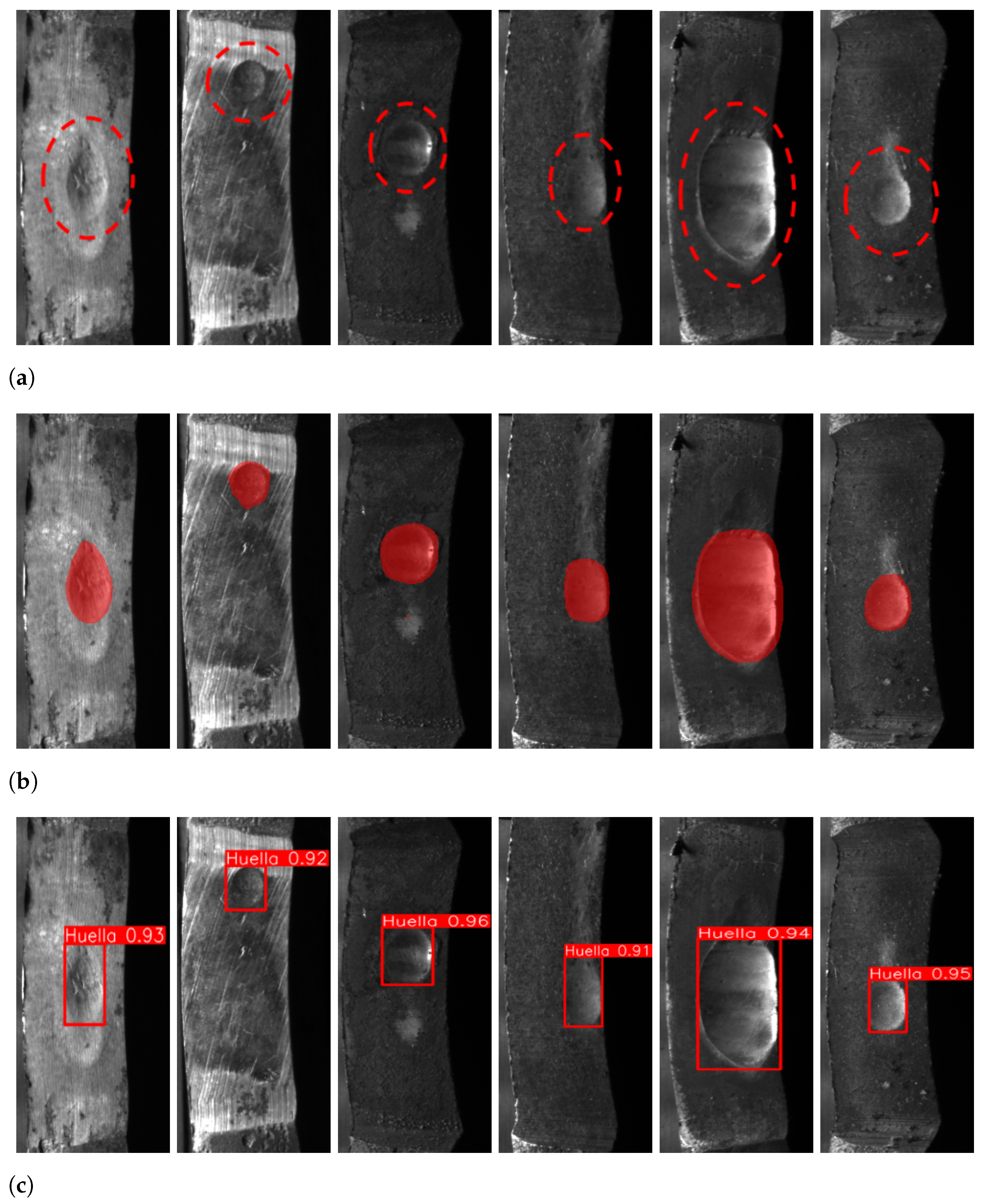

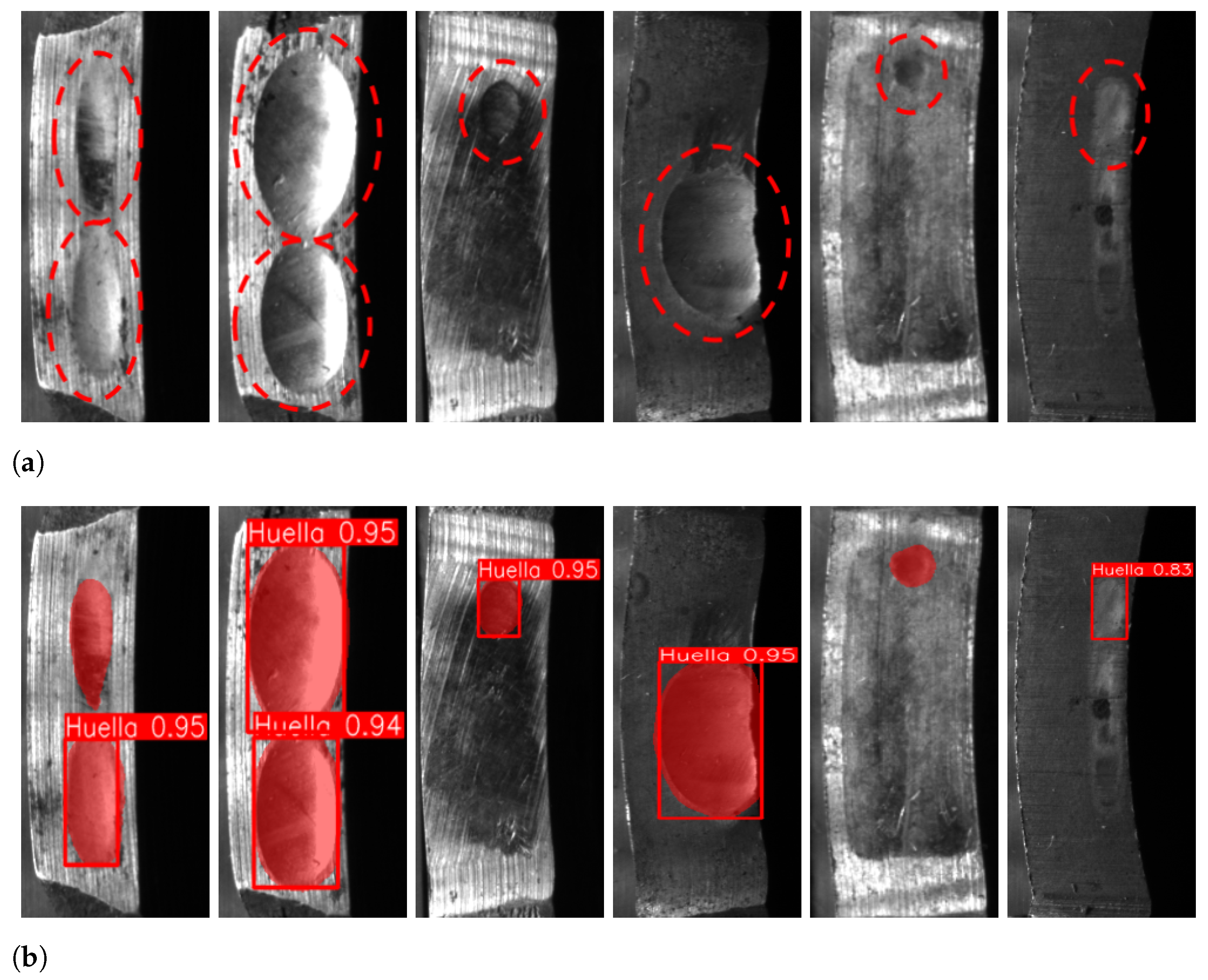

2.1. Characteristics of Inspected Components

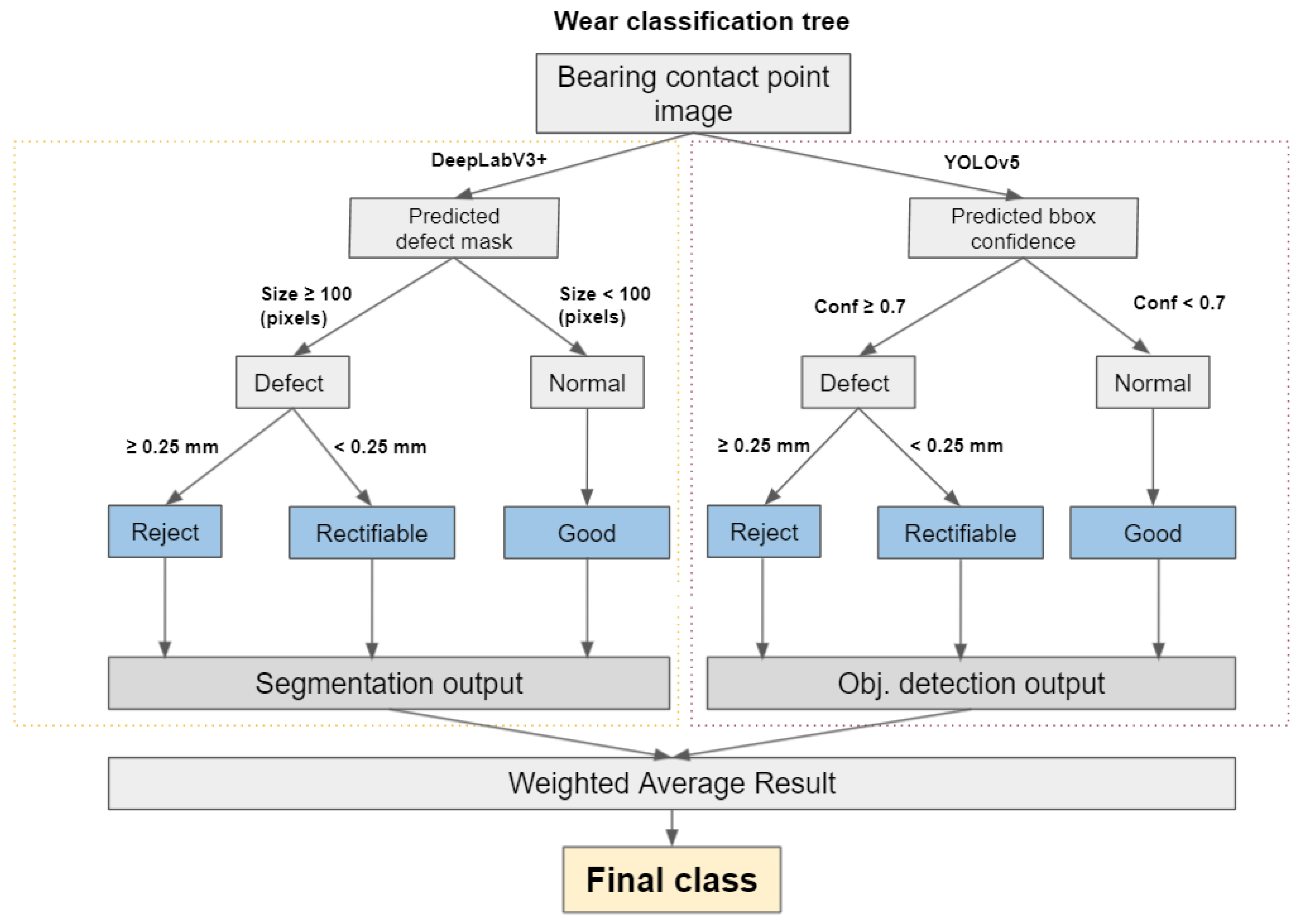

- If the cage has a wear diameter smaller than 0.25 mm, it is rectifiable;

- If the cage has a wear diameter equal to or greater than 0.25 mm, it is rejectable.
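The two rules above amount to a single threshold test on the measured wear diameter. A minimal sketch follows; only the 0.25 mm threshold comes from the text, while the function name, the constant name, and the rejection of negative inputs are illustrative assumptions.

```python
# Threshold from the rules above; all names here are hypothetical.
WEAR_THRESHOLD_MM = 0.25


def classify_cage(wear_diameter_mm: float) -> str:
    """Return 'rectifiable' or 'rejectable' for a measured wear diameter in mm."""
    if wear_diameter_mm < 0:
        # Assumption: a negative diameter signals a measurement error.
        raise ValueError("wear diameter must be non-negative")
    # Strictly below the threshold is rectifiable; equal or above is rejectable.
    if wear_diameter_mm < WEAR_THRESHOLD_MM:
        return "rectifiable"
    return "rejectable"


labels = [classify_cage(d) for d in (0.10, 0.25, 0.40)]
```

Note that the boundary value of exactly 0.25 mm falls on the rejectable side, as the second rule states.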

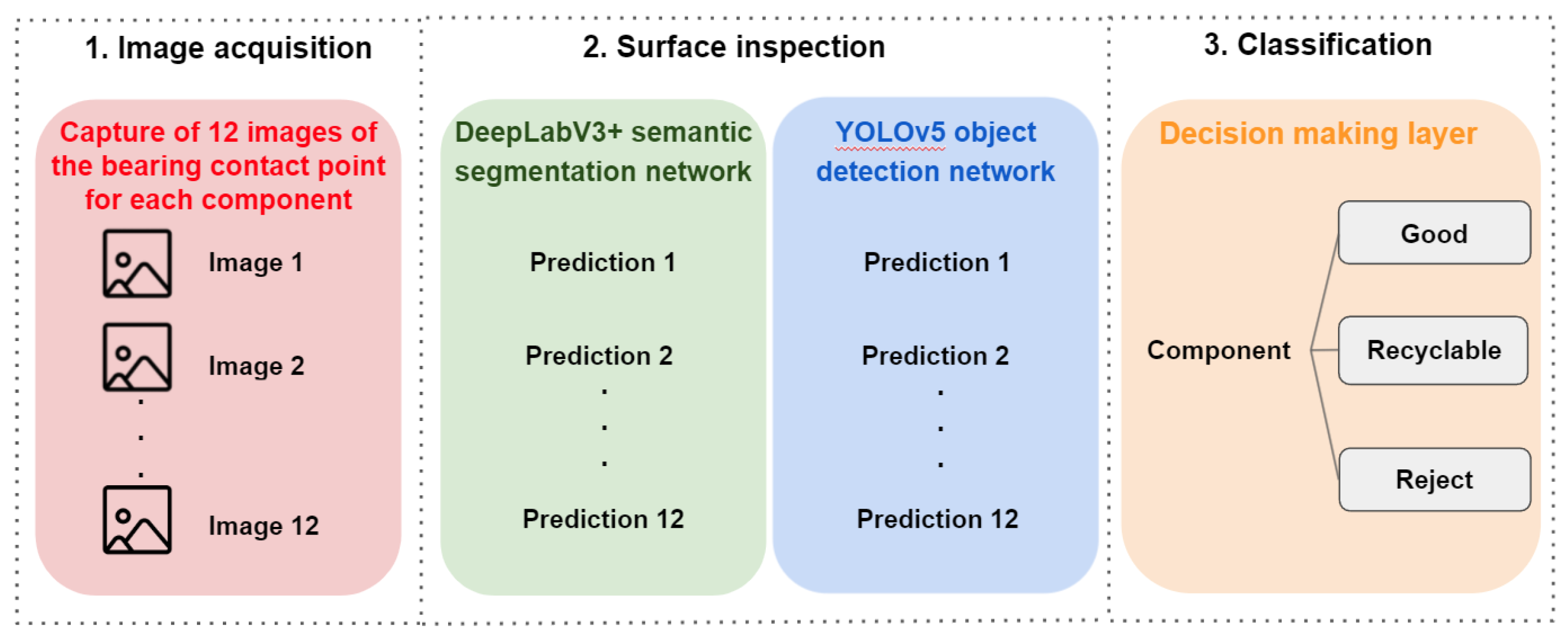

2.2. Proposed Inspection and Evaluation Pipeline

2.2.1. Step 1: Image Acquisition

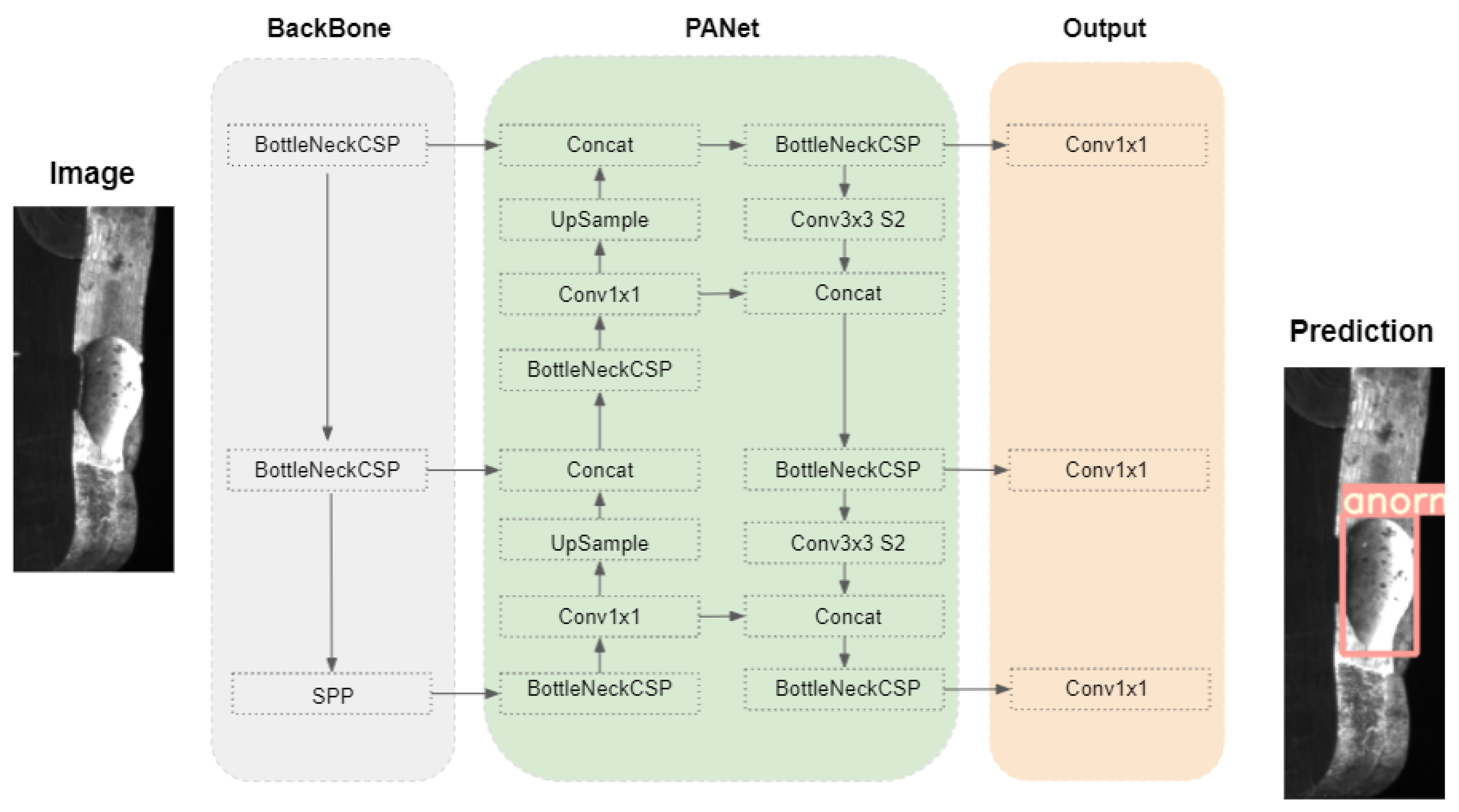

2.2.2. Step 2: Surface Inspection

2.2.3. Step 3: Classification Layer

2.3. Evaluation Metrics

- True Positives (TP): a defect is correctly detected as a defect;

- True Negatives (TN): a normal sample is correctly detected as normal;

- False Positives (FP): a normal sample is mistakenly detected as a defect;

- False Negatives (FN): a defect is mistakenly detected as normal.
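The four outcomes defined above can be tallied by comparing ground-truth and predicted labels sample by sample. The sketch below assumes binary labels where `True` means "defect"; the function name and the example labels are hypothetical and are not taken from the study's data.

```python
from collections import Counter


def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP and FN for binary labels where True means 'defect'."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth and pred:
            counts["TP"] += 1  # defect detected as defect
        elif not truth and not pred:
            counts["TN"] += 1  # normal detected as normal
        elif not truth and pred:
            counts["FP"] += 1  # normal mistakenly detected as defect
        else:
            counts["FN"] += 1  # defect mistakenly detected as normal
    return {key: counts[key] for key in ("TP", "TN", "FP", "FN")}


# Hypothetical labels for six wear zones (True = defect, False = normal).
ground_truth = [True, True, False, False, True, False]
predictions = [True, False, False, True, True, False]
result = confusion_counts(ground_truth, predictions)
```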

3. Results and Discussion

3.1. Dataset Generation

3.2. Performance Comparison between Traditional Methods and Deep Neural Networks

3.3. Individual Evaluation of the Deep Neural Networks Models Performance

3.4. Analysis of the Model Ensemble Performance

3.5. Component Final Classification Results

3.6. Results Summary

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Total Set | Training Set | Validation Set | Test Set |
|---|---|---|---|---|
| Number of remanufactured components | 55 | 36 | 9 | 10 |
| Number of images (12 wear zones per component) | 660 | 432 | 108 | 120 |
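The image counts in the table equal 12 times the component counts in every subset, which is consistent with a split made at component level so that all wear zones of one component stay in the same subset. The sketch below illustrates such a split under that assumption; the seeded random shuffle, the integer component IDs, and the function name are hypothetical and do not describe how the original split was drawn.

```python
import random

ZONES_PER_COMPONENT = 12  # from the table: 12 wear zones per component


def split_components(component_ids, n_train, n_val, n_test, seed=0):
    """Shuffle component IDs and cut them into train/val/test groups."""
    ids = list(component_ids)
    if n_train + n_val + n_test != len(ids):
        raise ValueError("subset sizes must add up to the number of components")
    random.Random(seed).shuffle(ids)  # assumption: seeded random assignment
    return {
        "train": ids[:n_train],
        "val": ids[n_train:n_train + n_val],
        "test": ids[n_train + n_val:],
    }


# Subset sizes from the table: 55 components split 36 / 9 / 10.
splits = split_components(range(55), n_train=36, n_val=9, n_test=10)
image_counts = {name: len(ids) * ZONES_PER_COMPONENT for name, ids in splits.items()}
```

Splitting by component rather than by image avoids placing images of the same physical part in both the training and the test set.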

| Method | TP | TN | FP | FN |
|---|---|---|---|---|
| Decision Tree | 46 | 20 | 32 | 22 |
| Gaussian Naive Bayes | 54 | 28 | 24 | 14 |
| SVM | 59 | 24 | 28 | 9 |
| DeepLabV3+ | 51 | 50 | 2 | 17 |
| UNet | 50 | 48 | 13 | 9 |
| YOLOv3 | 53 | 36 | 10 | 21 |
| YOLOv5 | 60 | 44 | 8 | 8 |
| YOLOv5+DeepLabV3+ | 62 | 50 | 2 | 6 |

| Model | DeepLabV3+ | YOLOv5 | YOLOv5 + DeepLabV3+ |
|---|---|---|---|
| Accuracy (%) | 84.17 | 86.67 | 93.33 |
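The accuracy values above can be reproduced from the TP/TN/FP/FN counts in the preceding table, taking accuracy as (TP + TN) divided by the total number of test images. The sketch below does this for the three models listed; the precision and recall helpers use the standard textbook definitions and are included only as an illustration, since the tables do not report them.

```python
# (TP, TN, FP, FN) counts copied from the per-method results table.
counts = {
    "DeepLabV3+": (51, 50, 2, 17),
    "YOLOv5": (60, 44, 8, 8),
    "YOLOv5+DeepLabV3+": (62, 50, 2, 6),
}


def accuracy_pct(tp, tn, fp, fn):
    """Share of correctly classified images, in percent."""
    return 100.0 * (tp + tn) / (tp + tn + fp + fn)


def precision(tp, tn, fp, fn):
    """Fraction of predicted defects that are real defects (standard definition)."""
    return tp / (tp + fp)


def recall(tp, tn, fp, fn):
    """Fraction of real defects that were detected (standard definition)."""
    return tp / (tp + fn)


accuracies = {name: round(accuracy_pct(*c), 2) for name, c in counts.items()}
# accuracies == {"DeepLabV3+": 84.17, "YOLOv5": 86.67, "YOLOv5+DeepLabV3+": 93.33}
```

Each row sums to 120, the number of test images, so the three percentages are directly comparable.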

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Saiz, F.A.; Alfaro, G.; Barandiaran, I. An Inspection and Classification System for Automotive Component Remanufacturing Industry Based on Ensemble Learning. Information 2021, 12, 489. https://doi.org/10.3390/info12120489