Designing Automated Deployment Strategies of Face Recognition Solutions in Heterogeneous IoT Platforms

Abstract

1. Introduction

- To obtain (near) real-time responses, DNN models need to be processed locally rather than on remote servers, as server-device data transfer would add considerable delays. In such cases, the computational cost of DNN inference could exceed the computational resources available in many IoT devices. Besides, these devices could have different kinds of processors (XPUs: CPUs, GPUs, FPGAs, etc.), which require specific DNN inference engines (Intel’s OpenVINO, Google’s TensorFlow Lite, NVIDIA’s TensorRT, Facebook’s PyTorch, etc.) [6].

- To allow users to enroll on one device and authenticate on another while respecting their privacy in compliance with laws such as the EU’s General Data Protection Regulation (GDPR), biometric data needs to be managed securely, preventing intruders from gaining access.

- Besides the high heterogeneity of the IoT devices with which users might interact (in terms of shape, functionality, sensing, and computing capabilities), users’ interaction capabilities might also vary widely, from fully active to fully assisted. All users should be able to interact satisfactorily with the deployed FR system during the face enrollment and verification stages.

2. Related Work

2.1. User Interaction on Face Recognition Systems

2.2. Deployment of Face Recognition Systems in IoT Platforms

3. Proposed Approach

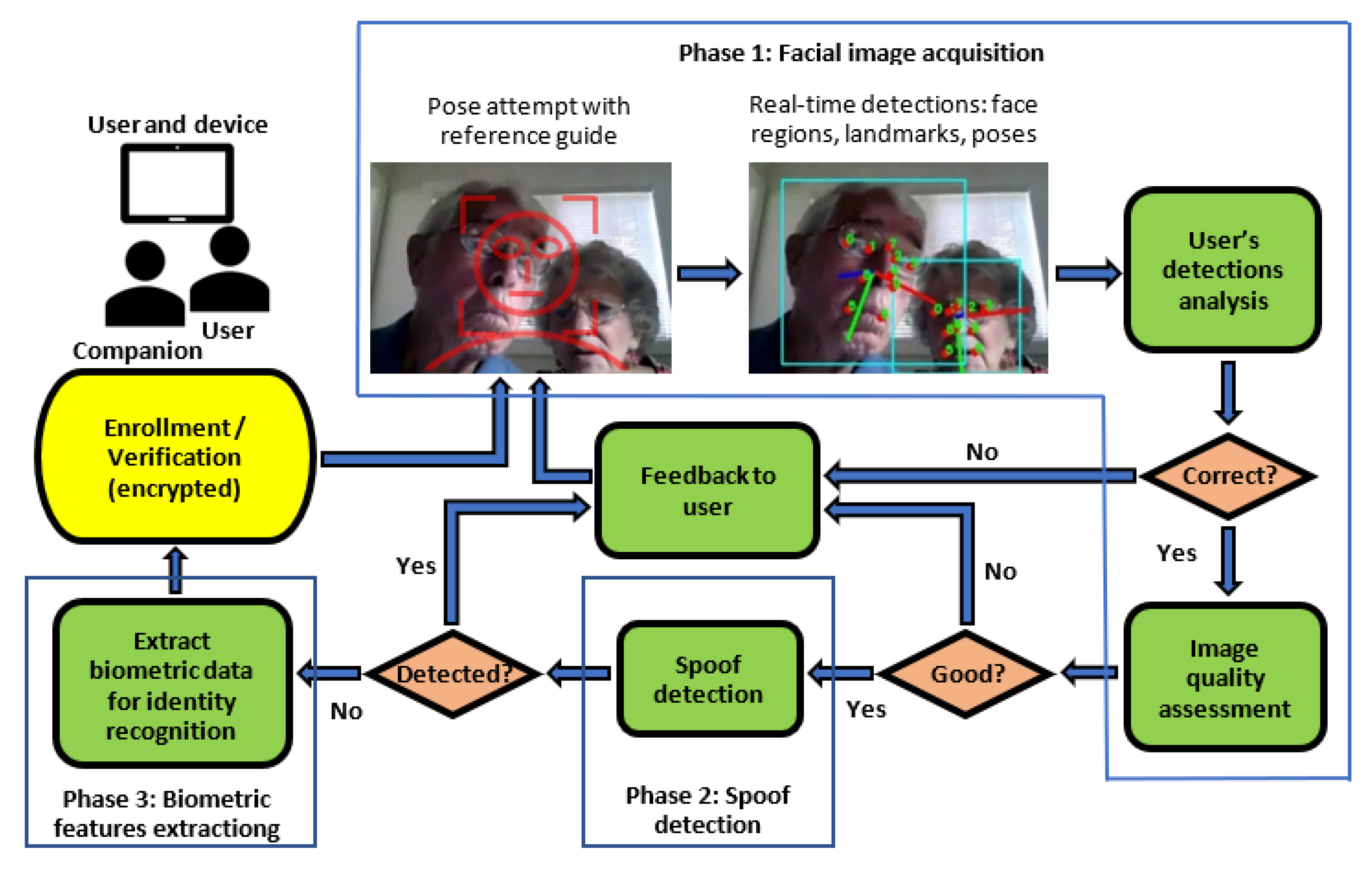

3.1. User Interaction Workflow for Face Verification

3.2. Deployment of Algorithms

- Problem: New device with heterogeneous hardware (i.e., it might have one or more kinds of processors, in some cases including DNN accelerators).

- Solution: The optimal DNN IE and DNN model configuration package for the target device.
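The problem/solution pairing above can be pictured as a small record type. The following is a minimal sketch only: the class names, the `similarity` function (a Jaccard overlap of processor sets), the `retrieve` helper, and the package labels are all hypothetical illustrations, not the representation or similarity measure used by the authors.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Problem:
    """Problem side of a case: the processor kinds found on the device."""
    processors: FrozenSet[str]


@dataclass(frozen=True)
class Solution:
    """Solution side of a case: DNN IE plus model configuration package."""
    inference_engine: str
    model_package: str  # hypothetical label for a configuration package


@dataclass(frozen=True)
class Case:
    problem: Problem
    solution: Solution


def similarity(a: Problem, b: Problem) -> float:
    """Jaccard overlap of processor sets (an assumed, illustrative measure)."""
    union = a.processors | b.processors
    if not union:
        return 1.0
    return len(a.processors & b.processors) / len(union)


def retrieve(case_base: List[Case], query: Problem) -> Optional[Case]:
    """Return the stored case most similar to the query, or None if empty."""
    if not case_base:
        return None
    return max(case_base, key=lambda case: similarity(case.problem, query))


# Two made-up stored cases and one new device to look up.
case_base = [
    Case(Problem(frozenset({"CPU", "GPU", "HDDL"})),
         Solution("OpenVINO", "fp16-package")),
    Case(Problem(frozenset({"GPU", "DLA"})),
         Solution("TensorRT", "int8-package")),
]
new_device = Problem(frozenset({"CPU", "GPU"}))
best = retrieve(case_base, new_device)
```

Here `best` is the stored case whose processor set overlaps most with the new device; in a full case-based reasoning cycle that case would then be adapted and evaluated rather than reused verbatim.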

3.2.1. Case Retrieval

3.2.2. Case Reuse/Adaptation

3.2.3. Case Evaluation

| Algorithm 1. Case evaluation. | |
|---|---|
| Input: Heterogeneous hardware device configurations (HCONF), Image/request inference batch sizes list (IB), DNN model candidates (DMC), DNN IE (DIE), Testing dataset for benchmarking (TEST_DATA) | |
| Output: List of optimal heterogeneous hardware configurations per batch (OHC) | |
| 1 | For batch in IB: |
| 2 | &nbsp;&nbsp;For hetero_device_conf in HCONF: |
| 3 | &nbsp;&nbsp;&nbsp;&nbsp;For IE in DIE: |
| 4 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;DM = get_suitable_precision_DNN_models(IE, DMC, hetero_device_conf) |
| 5 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;DB = load_database_for_benchmarking(TEST_DATA) |
| 6 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;load_models_to_IE(IE, DM, DB, hetero_device_conf) |
| 7 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;Aff = Get_estimated_layer_affinities_from_DM(IE, DM, hetero_device_conf) |
| 8 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;If (all_layers_supported(IE, hetero_device_conf, DM) = OK): |
| 9 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;make_benchmark(IE, DM, hetero_device_conf, Aff, DB) |
| 10 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;Perf_list_device = Store_performance_metrics(DM, hetero_device_conf, IB) |
| 11 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;OHC.append(find_optimal_hconf_per_batch(Perf_list_device, IB)) |
| 12 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;Else: |
| 13 | &nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;&nbsp;discard_device_configuration(DM, hetero_device_conf) |
| 14 | Return OHC |
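The nested loops of Algorithm 1 can be sketched in Python as follows. This is one possible reading, not the authors' implementation: model loading and layer-affinity estimation are folded into the two callables passed in, latency in milliseconds is assumed as the single performance metric, and the optimum is chosen once per batch size after all configurations have been benchmarked. The `fake_*` functions and the `engine_a`/`engine_b` names are invented stand-ins so that the example runs without any hardware.

```python
from typing import Callable, Dict, List, NamedTuple, Optional, Sequence


class Result(NamedTuple):
    batch: int
    hconf: str
    engine: str
    latency_ms: float  # assumed metric; the source does not name one here


def evaluate_cases(
    hconfs: Sequence[str],
    batch_sizes: Sequence[int],
    engines: Sequence[str],
    all_layers_supported: Callable[[str, str], bool],
    make_benchmark: Callable[[str, str, int], float],
) -> Dict[int, Optional[Result]]:
    """Return the lowest-latency (hconf, engine) pair for each batch size."""
    optimal: Dict[int, Optional[Result]] = {}
    for batch in batch_sizes:
        results: List[Result] = []
        for hconf in hconfs:
            for engine in engines:
                if not all_layers_supported(engine, hconf):
                    continue  # discard this device configuration
                latency = make_benchmark(engine, hconf, batch)
                results.append(Result(batch, hconf, engine, latency))
        # None when every configuration was discarded for this batch size.
        optimal[batch] = min(results, key=lambda r: r.latency_ms, default=None)
    return optimal


def fake_supported(engine: str, hconf: str) -> bool:
    """Stand-in support check: engine_b cannot run all layers on the GPU."""
    return not (engine == "engine_b" and hconf == "GPU")


def fake_benchmark(engine: str, hconf: str, batch: int) -> float:
    """Stand-in latency model: the GPU has fixed overhead but scales better."""
    latency = 2.0 * batch if hconf == "CPU" else 6.0 + 0.5 * batch
    return latency + (1.0 if engine == "engine_b" else 0.0)


ohc = evaluate_cases(
    hconfs=["CPU", "GPU"],
    batch_sizes=[1, 8],
    engines=["engine_a", "engine_b"],
    all_layers_supported=fake_supported,
    make_benchmark=fake_benchmark,
)
```

With these made-up numbers the CPU is selected for a batch size of 1 and the GPU for a batch size of 8, which illustrates why the optimum is stored per batch size rather than once per device.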

3.2.4. Case Retaining

3.2.5. Updating the New Trained DNN Models

3.2.6. Running the Face Recognition System

3.3. Biometric Data Management

4. Results and Discussion

4.1. Qualitative Evaluation

4.2. Quantitative Evaluation

- IEI TANK AIoT Developer Kit embedded PC with an Intel Mustang V100 MX8 DNN acceleration card. This hardware contains an Intel CPU, a GPU, and a High Density Deep Learning (HDDL) card (Mustang) compatible with Intel’s OpenVINO DNN IE.

- NVIDIA Jetson Xavier AGX 32GB. This hardware contains a 512-core NVIDIA Volta™ GPU with 64 Tensor Cores and 2 NVDLA (dla0, dla1) DNN accelerators, compatible with NVIDIA’s TensorRT DNN IE.
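As a rough illustration of how the two test devices could be described as inputs to a benchmarking step, the sketch below lists each device's processors and enumerates candidate processor combinations. The dictionary layout, the `candidate_configs` helper, and the idea of trying every non-empty combination are assumptions made for illustration; only the processor and engine names are taken from the device descriptions above. Whether a given combination is actually usable depends on the inference engine.

```python
from itertools import combinations
from typing import Dict, List, Tuple

# Processor and engine names follow the two device descriptions;
# the structure itself is a hypothetical convenience.
DEVICES: Dict[str, Dict[str, object]] = {
    "IEI TANK AIoT Developer Kit": {
        "engine": "OpenVINO",
        "processors": ["CPU", "GPU", "HDDL"],
    },
    "NVIDIA Jetson Xavier AGX": {
        "engine": "TensorRT",
        "processors": ["GPU", "dla0", "dla1"],
    },
}


def candidate_configs(processors: List[str]) -> List[Tuple[str, ...]]:
    """Enumerate every non-empty combination of the given processors."""
    return [
        combo
        for size in range(1, len(processors) + 1)
        for combo in combinations(processors, size)
    ]


tank_configs = candidate_configs(DEVICES["IEI TANK AIoT Developer Kit"]["processors"])
jetson_configs = candidate_configs(DEVICES["NVIDIA Jetson Xavier AGX"]["processors"])
```

Each device with three processors yields seven candidate combinations, from single-processor runs up to all three processors together.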

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Sathyan, M. Chapter Six-Industry 4.0: Industrial Internet of Things (IIOT). Adv. Comput. 2020, 117, 129–164.
2. Oloyede, M.O.; Hancke, G.P.; Myburgh, H.C. A review on face recognition systems: Recent approaches and challenges. Multimed. Tools Appl. 2020, 79, 27891–27922.
3. Taskiran, M.; Kahraman, N.; Erdem, C.E. Face recognition: Past, present and future (a review). Digit. Signal Process. 2020, 106, 102809.
4. Kortli, Y.; Jridi, M.; Al Falou, A.; Atri, M. Face recognition systems: A survey. Sensors 2020, 20, 342.
5. Jain, A.K.; Flynn, P.; Ross, A.A. (Eds.) Handbook of Biometrics; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2008.
6. Reddi, V.J.; Kanter, D.; Mattson, P.; Duke, J.; Nguyen, T.; Chukka, R.; Shiring, K.; Tan, K.; Charlebois, M.; Chou, W.; et al. MLPerf mobile inference benchmark. arXiv 2020, arXiv:2012.02328.
7. Elordi, U.; Bertelsen, A.; Unzueta, L.; Aranjuelo, N.; Goenetxea, J.; Arganda-Carreras, I. Optimal deployment of face recognition solutions in a heterogeneous IoT platform for secure elderly care applications. Procedia Comput. Sci. 2021, 192, 3204–3213.
8. Bajenaru, L.; Marinescu, I.A.; Dobre, C.; Prada, G.I.; Constantinou, C.S. Towards the development of a personalized healthcare solution for elderly: From user needs to system specifications. In Proceedings of the International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Bucharest, Romania, 25–27 June 2020; pp. 1–6.
9. Bhattacharjee, A.; Barve, Y.; Gokhale, A.; Kuroda, T. A model-driven approach to automate the deployment and management of Cloud Services. In Proceedings of the International Conference on Utility and Cloud Computing Companion (UCC Companion), Zurich, Switzerland, 17–20 December 2018; pp. 109–114.
10. Li, Y.; Su, X.; Ding, A.Y.; Lindgren, A.; Liu, X.; Prehofer, C.; Riekki, J.; Rahmani, R.; Tarkoma, S.; Hui, P. Enhancing the internet of things with knowledge-driven software-defined networking technology: Future perspectives. Sensors 2020, 20, 3459.
11. Shaaban, A.M.; Schmittner, C.; Gruber, T.; Mohamed, A.B.; Quirchmayr, G.; Schikuta, E. CloudWoT—A reference model for knowledge-based IoT solutions. In Proceedings of the International Conference on Information Integration and Web-based Applications & Services (iiWAS2018), Yogyakarta, Indonesia, 19–21 November 2018; pp. 272–281.
12. Blanco-Gonzalo, R.; Lunerti, C.; Sanchez-Reillo, R.; Guest, R. Biometrics: Accessibility challenge or opportunity? PLoS ONE 2018, 13, e0194111; Erratum in PLoS ONE 2018, 13, e0196372.
13. Hofbauer, H.; Debiasi, L.; Krankl, S.; Uhl, A. Exploring presentation attack vulnerability and usability of face recognition systems. IET Biom. 2021, 10, 219–232.
14. Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All one needs to know about fog computing and related edge computing paradigms: A complete survey. J. Syst. Archit. 2019, 98, 289–330.
15. Hu, P.; Ning, H.; Qiu, T.; Zhang, Y.; Luo, X. Fog computing based face identification and resolution scheme in internet of things. IEEE Trans. Ind. Inform. 2017, 13, 1910–1920.
16. Hu, P.; Ning, H.; Qiu, T.; Song, H.; Wang, Y.; Yao, X. Security and privacy preservation scheme of face identification and resolution framework using fog computing in internet of things. IEEE Internet Things J. 2017, 4, 1143–1155.
17. Wang, Y.; Nakachi, T. A privacy-preserving learning framework for face recognition in edge and cloud networks. IEEE Access 2020, 8, 136056–136070.
18. Mao, Y.; Yi, S.; Li, Q.; Feng, J.; Xu, F.; Zhong, S. A privacy-preserving deep learning approach for face recognition with edge computing. In Proceedings of the USENIX Workshop on Hot Topics in Edge Computing (HotEdge ’18), Boston, MA, USA, 10 July 2018; pp. 1–6.
19. Bazarevsky, V.; Kartynnik, Y.; Vakunov, A.; Raveendran, K.; Grundmann, M. BlazeFace: Sub-millisecond neural face detection on mobile GPUs. arXiv 2019, arXiv:1907.05047.
20. Kartynnik, Y.; Ablavatski, A.; Grishchenko, I.; Grundmann, M. Real-time facial surface geometry from monocular video on mobile GPUs. arXiv 2019, arXiv:1907.06724.
21. Schlett, T.; Rathgeb, C.; Henniger, O.; Galbally, J.; Fiérrez, J.; Busch, C. Face image quality assessment: A literature survey. arXiv 2020, arXiv:2009.01103.
22. Kavita, K.; Walia, G.S.; Rohilla, R. A contemporary survey of unimodal liveness detection techniques: Challenges & opportunities. In Proceedings of the International Conference on Intelligent Sustainable Systems (ICISS), Thoothukudi, India, 3–5 December 2020; pp. 848–855.
23. Zhang, Y.; Yin, Z.; Li, Y.; Yin, G.; Yan, J.; Shao, J.; Liu, Z. CelebA-Spoof: Large-scale face anti-spoofing dataset with rich annotations. In Computer Vision–ECCV. Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2020; pp. 70–85.
24. Swapna, M.; Sharma, Y.K.; Prasad, B.M.G. A survey on face recognition using convolutional neural network. In Data Engineering and Communication Technology. Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2020; p. 1079.
25. Aamodt, A.; Plaza, E. Case-based reasoning: Foundational issues, methodological variations, and system approaches. AI Commun. 1994, 7, 39–59.
26. Kwon, H.; Lai, L.; Pellauer, M.; Krishna, T.; Chen, Y.H.; Chandra, V. Heterogeneous dataflow accelerators for multi-DNN workloads. In Proceedings of the IEEE International Symposium on High-Performance Computer Architecture (HPCA), Seoul, Korea, 27 February–3 March 2021.
27. Mai, G.; Cao, K.; Yuen, P.C.; Jain, A.K. Face image reconstruction from deep templates. arXiv 2017, arXiv:1703.00832.
28. Boddeti, V.N. Secure face matching using fully homomorphic encryption. In Proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems (BTAS), Redondo Beach, CA, USA, 22–25 October 2018.
29. Papp, D.; Zombor, M.; Buttyan, L. TEE based protection of cryptographic keys on embedded IoT devices. Ann. Math. Inform. 2020, 53, 245–256.
30. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andretto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.
31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
32. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision (ECCV); Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

| Method | Face Recognition Approach | Assistance for Interaction | Deployment of Algorithms | Privacy in the IoT Platform |
|---|---|---|---|---|
| [15,16] | Pretrained Haar-based model for FD and LBP features for FIR to be trained on the cloud. | Not considered | Manually predefined | Schemes for authentication, session key agreement, data encryption, and data integrity checking for secure data transmission and storage. |
| [17] | Discriminative dictionary learning for FIR that needs to be trained. | Not considered | Manually predefined | Biometric data encrypted with a low complexity encrypting algorithm based on random unitary transformation. |
| [18] | DNN for FIR split in two parts: one deployed on the user side and the other on the edge server side. | Not considered | Manually predefined | Differential privacy for user’s confidential datasets. No cryptographic tools used to keep user side lightweight. |
| [7] | Pretrained DNN models for FLD, PGR, SAD, and FIR deployed on fog gateway and client devices suitable for DNN inference. | Real-time visual feedback based on FLD and PGR to guide the user during enrollment and verification. | Automated selection of the appropriate DNN inference engine, DNN model configurations, and batch size, based on IoT device characteristics. | Biometric data homomorphically encrypted. All computations are performed on the private network. Biometric data not sent to the cloud. |
| Ours | Pretrained DNN models for FLD, PGR, IQA, SAD, and FIR deployed on fog gateway and client devices suitable for DNN inference. | Real-time visual feedback based on FLD, PGR and IQA to guide the user during enrollment and verification. | Automated selection of the appropriate DNN inference engine, DNN model configurations, and batch size, by means of a knowledge-driven approach. | Biometric data homomorphically encrypted. All computations are performed on the private network. Biometric data not sent to the cloud. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Elordi, U.; Lunerti, C.; Unzueta, L.; Goenetxea, J.; Aranjuelo, N.; Bertelsen, A.; Arganda-Carreras, I. Designing Automated Deployment Strategies of Face Recognition Solutions in Heterogeneous IoT Platforms. Information 2021, 12, 532. https://doi.org/10.3390/info12120532