Abstract

Humans are capable of learning new concepts from small numbers of examples. In contrast, supervised deep learning models usually lack the ability to extract reliable predictive rules from limited data scenarios when attempting to classify new examples. This challenging scenario is commonly known as few-shot learning. Few-shot learning has garnered increased attention in recent years due to its significance for many real-world problems. Recently, new methods relying on meta-learning paradigms combined with graph-based structures, which model the relationship between examples, have shown promising results on a variety of few-shot classification tasks. However, existing work on few-shot learning is only focused on the feature embeddings produced by the last layer of the neural network. The novel contribution of this paper is the utilization of lower-level information to improve the meta-learner performance in few-shot learning. In particular, we propose the Looking-Back method, which could use lower-level information to construct additional graphs for label propagation in limited data settings. Our experiments on two popular few-shot learning datasets, miniImageNet and tieredImageNet, show that our method can utilize the lower-level information in the network to improve state-of-the-art classification performance.

1. Introduction

Deep learning (DL) is already ubiquitous in our daily lives, including image-based object detection [1], face recognition [2], medical imaging, and healthcare [3]. While DL is outperforming traditional machine learning methods in these aforementioned application areas [4], a major downside of DL is that it requires large amounts of data to achieve good performance [5]. Few-shot learning (FSL) is a subfield of DL that focuses on training DL models under scarce data regimes, thereby opening possibilities for applying DL to new problem areas where the amount of labeled data is limited.

In FSL settings, datasets are comprised of large numbers of categories (i.e., class labels), but only a few examples per class are available. The main objective of FSL is the design of methods that achieve good generalization performance from the limited number of examples per category. The overarching concept of FSL is very general and applies to different data modalities and tasks like image classification [6], object detection [7], and text classification [8]. However, most FSL research is focused on image classification, so we will use the terms examples and images (in a supervised learning context) interchangeably.

Most FSL methods use an episodic training strategy known as meta-learning [9], where a meta-learner is trained on (classification) tasks with the goal to learn to perform well on new, unseen tasks. Many of the most recent FSL methods are based on episodic meta-learning, such as prototypical networks [10], relation networks [11], model agnostic meta-learning frameworks [12], and LSTM-based meta-learning [13]. Another successful approach to FSL is the use of transfer learning, where models are trained on large datasets and then appropriately transferred to smaller datasets that contain the novel target classes; examples include weight imprinting [14], dynamic few-shot object recognition with attention modules [15], and few-shot image classification by predicting parameters from activation values [16].

Apart from recent developments in FSL, many researchers have recently proposed methods for implementing graph neural networks (GNNs) to extend deep learning approaches for graph-structured data. In this context, graphs are used as data structures for modeling the relationships (edges) between data instances (nodes), which was first proposed via the graph neural network model [17] and extended via graph convolutional networks [18], semi-supervised graph convolutional networks [19], graph attention networks [20], and message passing neural networks [21]. Since FSL methods are centered around modeling relationships between the examples in the support and query datasets, GNNs have also gained a growing interest in FSL research, including approaches aggregating node information from densely connected support and query image graphs [22], transductive inference [23], and edge-labeling [24]. GNNs can be computationally prohibitive on large datasets. However, we shall note that one of the significant characteristics of FSL is that datasets for meta-training and meta-testing contain only “few” examples per class, such that the computational cost of graph construction becomes small in FSL.

Previous research has shown that FSL can be improved by incorporating additional information. For instance, unlabeled data, which is used in conventional [25], self-trained [26], and transfer learning-based [27] semi-supervised FSL, could improve the predictive performance of FSL models. Also, FSL benefits from the inclusion of additional modalities (e.g., textual information describing the images to be classified), which was demonstrated via an adaptive cross-model approach enhancing metric-based FSL [28] as well as cross-modal FSL utilizing latent features from aligned autoencoders [29]. While the aforementioned works showed that additional external information benefits FSL, we raise the question of whether additional internal information can be useful as well.

While the incorporation of additional information can be beneficial, the utilization of additional internal information is not very common in FSL research, and only two recent research papers explored this approach, i.e., Li et al.’s deep nearest neighbor neural network [30] and the dense classification network by Lifchitz et al. [31]. In these works, the researchers expanded the feature embeddings (the low-dimensional representation) of the data inputs (i.e., images), extracted from the last layer in the neural network, to higher-dimensional embeddings. These higher-dimensional embeddings were split into several smaller vectors, such that multiple embedding vectors corresponded to the same image. In the DN4 model proposed by Li et al. [30], the last layer’s feature embeddings were expanded to form many local descriptors. The dense classification network by Lifchitz et al. [31] expanded the feature embeddings to three separate vectors that are used for computing the cross-entropy loss during training.

When it comes to utilizing additional internal information, both DN4 [30] and the dense classification network [31] only considered the last layer’s information. In contrast to existing work on FSL, we consider additional information that is hidden in the earlier layers of the neural network. We hypothesize that such internal information benefits an FSL model’s predictive performance. More specifically, the extra information hidden in the network considered in this work is comprised of the feature embeddings that can be obtained from layers before the last layer. We propose using a graph structure to integrate this lower-level information into the neural network, since graph structures are well-suited for modeling relationships in data.

We refer to the FSL method proposed in this paper as Looking-Back, because unlike DN4 [30] and the dense classification network [31], this method fully utilizes previous layers’ feature embeddings (i.e., lower-level information) rather than focusing on the final layer’s feature embeddings alone. During training, the lower-level information is expected to help the meta-learner to absorb more information overall. Although this lower-level information may not be as useful as the embedding vectors obtained from the last layer, we hypothesize that the lower-level information has a positive impact on the meta-learner. To test this hypothesis, we adopt the widely used Conv-64F [30] in few-shot learning as a backbone, and construct graphs for label propagation, following the transductive propagation network (TPN) [23], to capture lower-level information.

Besides the feature embeddings of the last layer, the previous layers’ feature embeddings are also used for computing the pair-wise similarities between the inputs, based on relational network modules [23]. In the Looking-Back method, three groups of pair-wise similarity measures are computed. The similarity scores between all support and query images in one episode yield three separate graph Laplacians, which are used for iterative label propagation to generate three separate cross-entropy losses. The losses from lower-level features are used during meta-training and, as the experimental results indicate, enhance the performance of the meta-learner. After meta-training, we adopt the last layer’s feature embeddings for testing on new tasks (i.e., images with class labels that are not seen during training) in a transductive fashion. As the experimental results reveal, the resulting FSL models have a better predictive performance on new, unseen tasks compared to models generated by meta-learners that do not utilize lower-level information.

The contributions of this work can be summarized as follows:

- We propose a novel FSL meta-learning method, Looking-Back, that utilizes lower-level information from hidden layers, which is different from existing FSL methods that only use feature embedding of the last layer during meta-training.

- We implement our Looking-Back method using a graph neural network, which fully utilizes the advantage of graph structures for few-shot learning to absorb the lower-level information in the hidden layers of the neural network.

- We evaluate our proposed Looking-Back method on two popular FSL datasets, miniImageNet and tieredImageNet, and achieve new state-of-the-art results, providing supporting evidence that using lower-level information could result in better meta-learners in FSL tasks.

2. Related Work

In this section, we discuss the recent developments in FSL with a focus on methods related to our work. We group these related FSL methods into two main categories, meta-learning-based approaches and transfer learning-based approaches.

2.1. Meta-Learning

FSL, based on meta-learning, typically uses episodic training strategies. In each episode, the meta-learner is trained on a meta-task, which can be thought of as an image classification task. During training, these tasks are drawn randomly from the training dataset across the episodes. During the model evaluation, tasks are chosen from a separate test dataset, which consists of images from novel classes that are not contained in the training dataset.

In N-way K-shot FSL, when a meta-learner is trained on several tasks sampled from the training dataset, each training task is subdivided into a support set and a query set. Each task consists of N unique class labels, and the support set consists of K labeled images per class. Utilizing the support set, the model learns to predict the image labels in the query set. After training, the meta-learner is then evaluated on new tasks sampled from the test set. Similar to the training tasks, each new task consists of N unique class labels with K images (in the support set) each. However, to assess how well the meta-learner performs on new tasks, the classes in the test dataset do not overlap with the classes in the training set.
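The episode construction described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' data pipeline: the function name `sample_episode`, the dictionary layout, and the toy dataset are all hypothetical.

```python
import random

def sample_episode(labels_to_items, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot episode.

    labels_to_items: dict mapping a class label to a list of example ids.
    Returns (support, query), each a list of (example_id, episode_label) pairs.
    """
    # Pick N distinct classes for this episode.
    classes = rng.sample(sorted(labels_to_items), n_way)
    support, query = [], []
    for episode_label, cls in enumerate(classes):
        # Draw K support and n_query query examples without overlap.
        items = rng.sample(labels_to_items[cls], k_shot + n_query)
        support += [(x, episode_label) for x in items[:k_shot]]
        query += [(x, episode_label) for x in items[k_shot:]]
    return support, query

# Toy dataset: 8 classes with 20 example ids each (purely illustrative).
toy = {c: [f"c{c}_img{i}" for i in range(20)] for c in range(8)}
support, query = sample_episode(toy, n_way=5, k_shot=1, n_query=15,
                                rng=random.Random(0))
```

Class labels are re-indexed per episode (0 to N-1), so the meta-learner cannot rely on a fixed label-to-class mapping across tasks.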

Based on the general FSL meta-learning framework described above, we can divide meta-learning approaches further into metric-, optimization-, and graph-based meta-learning, which we discuss in the following subsections.

2.1.1. Metric-Based Meta-Learning

Metric-based methods are primarily focused on learning feature embeddings that enable similarity comparisons between support and query images. The Prototypical Network [10] used a Euclidean distance measure to compare the feature embeddings of the query images with centroids of the support images in different classes. The Relation Network [11] constructed an additional network to compute the similarity score between images directly, instead of using the Euclidean distance measure on the images’ feature embeddings similar to the Prototypical Network. DN4 [30] used a cosine similarity measure on multiple local descriptors, obtained by expanding the feature embeddings of the last layer to higher dimensions, to find the most similar images via nearest neighbor search.
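To make the centroid comparison concrete, the following sketch classifies query embeddings by their Euclidean distance to per-class centroids of the support embeddings. It only illustrates the comparison step under simplifying assumptions (embeddings are given as plain arrays, and the function name `prototype_predict` is hypothetical); it is not the training procedure of any of the cited methods.

```python
import numpy as np

def prototype_predict(support_emb, support_labels, query_emb, n_classes):
    """Assign each query embedding to the class with the nearest centroid."""
    # One centroid (prototype) per class: mean of that class's support embeddings.
    protos = np.stack([support_emb[support_labels == c].mean(axis=0)
                       for c in range(n_classes)])
    # Squared Euclidean distance between every query and every centroid.
    sq_dist = ((query_emb[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return sq_dist.argmin(axis=1)

# Toy 2-way 2-shot example with 2-dimensional embeddings.
support_emb = np.array([[0.0, 0.0], [0.0, 2.0], [10.0, 10.0], [10.0, 12.0]])
support_labels = np.array([0, 0, 1, 1])
query_emb = np.array([[1.0, 1.0], [9.0, 11.0]])
pred = prototype_predict(support_emb, support_labels, query_emb, n_classes=2)
```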

2.1.2. Optimization-Based Meta-Learning

Optimization-based methods are focused on parameter optimization and how to rapidly learn knowledge from limited training images that can be adapted to novel images. The model agnostic meta-learning framework (MAML) [12] learned a general model that can be efficiently fine-tuned to perform well on other tasks using conventional gradient descent-based optimization. While MAML used second-order partial derivatives to train the general model before task-specific fine-tuning, Reptile [32] was a first-order approximation of MAML that simplified the training procedure and boosted computational performance. Ravi and Larochelle [13] introduced a related yet different approach to optimization-based meta-learning. They proposed the use of an LSTM to model the sequence corresponding to the sequential optimization of the model parameters across different tasks.

2.1.3. Graph-Based Meta-Learning

Graph-based meta-learning uses graph structures to model the relationship between query and support images based on relative similarity measures, where each labeled and unlabeled image represents a node in the graph. There are very few treatments of graph-based methods for FSL in the literature; however, the topic has recently gained more attention in the FSL research community.

In 2017, Garcia and Bruna [22] proposed the use of a GNN for aggregating node information in an iterative fashion via a message-passing model, where the support and query images are densely connected in the graph. The edge-labeling graph neural network (EGNN) modified this approach, using edge- rather than node-label information, combined with inter-cluster dissimilarity and intra-cluster similarity measures [24]. Like GNN, the Transductive Propagation Network (TPN) considered the graph nodes for representing the feature embeddings of the images [23]. However, instead of performing inductive inference (that is, predicting test images one by one), TPN used transductive inference to predict the labels of the entire test set at once, which alleviated the low-data problem in FSL and achieved state-of-the-art performance [23].

2.2. Transfer Learning

In contrast to meta-learning, transfer learning is based on a more conventional supervised learning approach. Here, a model is pre-trained on a large dataset with an abundant number of examples per class. After pre-training on these base classes, the model is then transferred (i.e., fine-tuned) to the novel classes in a few-shot task.

The weight imprinting [14] method constructed classifiers for novel tasks by imprinting the centroids of the novel images’ feature embeddings on classifier weights. TransMatch [27] extended this concept to semi-supervised settings. The dynamic few-shot object recognition system proposed by Gidaris and Komodakis introduced an attention module during training to learn the classifier weights [15]. The dense classification network was another method based on imprinting [31]. In addition, this method expanded the feature embeddings, obtained from the last layer, to a set of vectors when computing the cross-entropy loss during training on the base classes. All cross-entropy loss terms were aggregated to compute the overall loss during backpropagation.

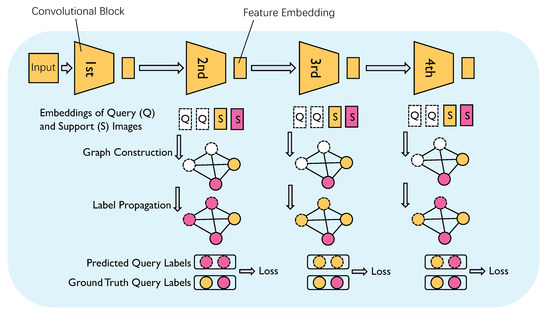

The Looking-Back method we propose in this paper (Figure 1) uses the same graph construction approach as TPN [23]. However, Looking-Back incorporates the feature embeddings from hidden layers in the graph construction procedure as well. We shall note that the simultaneous training with graphs built on lower-level information could also be seen as a particular case of multi-task learning or incremental learning, which was mentioned in [33] but is rarely adopted in FSL.

Figure 1. A conceptual overview of the proposed Looking-Back method.

3. Proposed Method

In this section, we introduce our proposed Looking-Back approach utilizing lower-level information to enhance the predictive performance of FSL models.

3.1. Problem Definition

The goal of FSL is to train predictive models that learn from and perform well on classification tasks, given only a few labeled examples per class. For instance, N-way K-shot classification can be understood as a classification task with N unique classes, where K labeled examples per class are provided for supervised learning.

In an N-way K-shot setting, the dataset for a given task is divided into a support set $\mathcal{S}$ and a query set $\mathcal{Q}$. $\mathcal{S}$ consists of examples $x_1, \dots, x_{N \times K}$ and the corresponding class labels $y_1, \dots, y_{N \times K}$. The goal is to utilize $\mathcal{S}$ to predict the class labels for the examples in $\mathcal{Q}$, $x_{N \times K + 1}, \dots, x_{N \times K + T}$, where $T$ denotes the number of query examples.

Given a large training dataset $D_{\text{train}}$, with base classes $C_{\text{base}}$, FSL meta-learning approaches sample many different N-way K-shot classification tasks randomly from $D_{\text{train}}$, to train the meta-learner for m episodes. After training, the meta-learner is given a novel N-way K-shot classification task $\mathcal{T}_{\text{novel}}$, such that the N classes do not overlap with the base classes in $C_{\text{base}}$ encountered during training. The dataset corresponding to $\mathcal{T}_{\text{novel}}$ is split into support and query sets, and the meta-learner uses the labeled examples in the support set to classify the examples in the query set.

A successful FSL meta-learner learns from the training tasks how to efficiently utilize the few labeled examples in the support set of a novel task so that the resulting model is able to predict the class labels in the unlabeled query set with good generalization performance.

Considering the general problem definition of FSL and meta-learning given above, the examples in the query set can be used in a transductive manner as suggested by [23], i.e., instead of classifying the query examples one at a time, the whole query set can be propagated into the network all at once, which improves the predictive performance compared to classifying each query example independently [23].

3.2. Feature Extractor Module

The two predominant types of neural network backbone architectures used in FSL research are ResNet-12 [34,35,36,37] and Conv-64F [9,10,11,22,23,30]. In this work, we adopt Conv-64F since it is easier to experiment with. However, we shall note that our proposed method is architecture-agnostic and can be implemented for other types of feedforward neural networks.

Conv-64F contains four convolutional blocks where every block is constructed by one convolutional layer with 64 filters of size 3 × 3, followed by a batch normalization layer, ReLU activation, and a 2 × 2 max-pooling layer. Both the convolutional layers and the max-pooling layers have a stride of 1.

Besides extracting feature embeddings from the last layer of the last convolutional block, the proposed Looking-Back also extracts the embeddings from the last layer of the second and third convolutional block. These three feature embeddings are then used in the graph-based label propagation, as illustrated in Figure 1. The dimensions of the feature embeddings extracted from the second, third, and fourth convolutional blocks are 64 × 21 × 21, 64 × 10 × 10, and 64 × 5 × 5, respectively. Here, the number of channels, 64, is determined by the Conv-64F architecture, whereas the feature map heights and widths are a consequence of the input image dimensions given the Conv-64F architecture.
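The reported spatial sizes can be checked with a short calculation. The sketch below is an illustration under assumptions that are not stated in the text: an 84 × 84 input image, 3 × 3 convolutions with a padding of 1, and 2 × 2 max-pooling that halves the resolution (rounding down). Under these assumptions, the second, third, and fourth blocks produce the 21, 10, and 5 pixel feature maps listed above. The function name is hypothetical.

```python
def conv64f_spatial_sizes(input_size=84, n_blocks=4, kernel=3, padding=1, pool=2):
    """Spatial size of the feature map after each convolutional block."""
    sizes = []
    size = input_size
    for _ in range(n_blocks):
        # 3x3 convolution with stride 1 (padding of 1 is an assumption).
        size = size + 2 * padding - kernel + 1
        # 2x2 max-pooling assumed to halve the resolution, rounding down.
        size = size // pool
        sizes.append(size)
    return sizes

sizes = conv64f_spatial_sizes()
print(sizes)  # [42, 21, 10, 5]
```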

3.3. Graph Construction Module

In the original work of TPN [23], the authors proposed a pair-wise similarity function that used an example-wise length-scale parameter. Adopting this mechanism, for the output of i-th convolutional block, we compute the similarity of two images () via

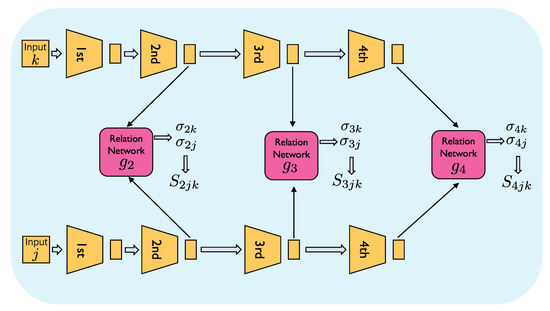

which measures the distance between the two feature embeddings. Here is a scale parameter for the feature embedding computed by a relation network module, which is described in the next paragraph. As illustrated in Figure 2, we use a separate relation network for the second, third, and fourth convolutional block, since the dimensions and information contents of the respective feature embeddings differ.

Figure 2. Computing the similarity between a pair of images, inputs j and k, based on the feature embeddings produced by the 2nd, 3rd, and 4th convolutional block (layer). The similarity values computed by the relation network modules are then used to construct multiple graphs for label propagation.

The overall architecture of the relation network module, which computes $\sigma^{i}_j$ and $\sigma^{i}_k$, is similar to the architecture used by Liu et al. [23]. Each relation network module consists of two convolutional blocks, followed by two fully-connected layers. Each convolutional block is composed of a 3 × 3 convolutional layer with a stride of 1, a batch normalization layer, ReLU activation, and a 2 × 2 max-pooling layer with a stride of 1.
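As a rough illustration of the pair-wise similarity with example-wise scales, the following NumPy sketch computes a similarity matrix for the embeddings of one convolutional block. Several choices here are assumptions made for the example only: the distance is taken to be the squared Euclidean distance, the embeddings are random toy vectors, and the scale values are hypothetical placeholders (in the method they would be produced by the relation network module).

```python
import numpy as np

def pairwise_similarity(emb, sigma):
    """Similarity matrix for the embeddings of one block.

    emb:   (n, d) array, one flattened feature embedding per image.
    sigma: (n,) array of positive per-example scales (placeholder values here).
    """
    scaled = emb / sigma[:, None]                    # divide each embedding by its scale
    diff = scaled[:, None, :] - scaled[None, :, :]
    sq_dist = (diff ** 2).sum(axis=-1)               # assumed distance: squared Euclidean
    return np.exp(-0.5 * sq_dist)

rng = np.random.default_rng(0)
emb = rng.normal(size=(6, 8))           # toy embeddings, not real features
sigma = rng.uniform(0.5, 2.0, size=6)   # hypothetical scale values
W = pairwise_similarity(emb, sigma)
```

In Looking-Back, one such matrix would be computed per block (second, third, and fourth), each with its own relation network.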

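In the Looking-Back model, we compute multiple symmetric normalized graph Laplacians [38] via

$$L^{i} = (D^{i})^{-1/2}\, W^{i}\, (D^{i})^{-1/2}, \quad (2)$$

where $W^{i}$ is the matrix of pair-wise similarities computed from the output of the i-th convolutional block and $D^{i}$ is the diagonal matrix whose d-th diagonal element is the sum of the d-th row of $W^{i}$. Here, we only keep the m largest values in every row of $W^{i}$ to construct an m-nearest neighbor graph for each layer during episodic training to improve computational efficiency as suggested by Liu et al. [23].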
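The graph construction step can be sketched as follows: keep the m largest similarities per row, then apply the symmetric normalization with the degree matrix. This is a minimal sketch, not the authors' implementation. In particular, symmetrizing the neighbor mask (so that the sparsified graph stays undirected) is an assumption of this example, and the function name is hypothetical.

```python
import numpy as np

def normalized_laplacian(W, m):
    """Symmetric normalization of an m-nearest-neighbor similarity graph."""
    n = W.shape[0]
    # Indices of the m largest similarities in every row.
    keep = np.argsort(W, axis=1)[:, -m:]
    mask = np.zeros_like(W, dtype=bool)
    mask[np.arange(n)[:, None], keep] = True
    # Assumption: keep an edge if either endpoint selected it (undirected graph).
    mask = mask | mask.T
    W_knn = np.where(mask, W, 0.0)
    # Degree of each node = sum of its row; small constant avoids division by zero.
    deg = W_knn.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg + 1e-12)
    return d_inv_sqrt[:, None] * W_knn * d_inv_sqrt[None, :]

rng = np.random.default_rng(0)
A = rng.random((6, 6))
W = (A + A.T) / 2                 # toy symmetric similarity matrix
lap = normalized_laplacian(W, m=3)
```

With the symmetric normalization, all eigenvalues of the result lie in [-1, 1], which keeps the subsequent label propagation well-behaved for a propagation coefficient below 1.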

3.4. Classification Loss

After constructing different graphs for multiple layers as explained in Section 3.3, label propagation [39] is used to compute the prediction (i.e., class-membership) scores for the query images [23].

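Let $Y$ be an initial score matrix with one row per image in the episode and one column per class. For a given image $x_j$ in the support set,

$$Y_{jc} = \begin{cases} 1 & \text{if } y_j = c, \\ 0 & \text{otherwise,} \end{cases} \quad (3)$$

and the rows of $Y$ that correspond to query images are zero.

The label propagation process is an iterative process

$$F_{t+1} = \alpha L^{i} F_t + (1 - \alpha)\, Y, \quad (4)$$

where $F_t$ is the predicted label at time step t. The predicted scores for an input image’s feature embedding from the i-th convolutional block are computed via

$$F^{i*} = (I - \alpha L^{i})^{-1}\, Y, \quad (5)$$

where I is the identity matrix, $L^{i}$ is the normalized graph Laplacian of that feature embedding from the i-th convolutional block, and $\alpha$ is a hyperparameter controlling propagation rate.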
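The relationship between the iterative propagation and the closed-form solution can be illustrated on a toy graph. The sketch below is written under assumptions: the six-node graph with two clusters, the function names, and the number of iterations are illustrative choices and not taken from the paper. One labeled node per cluster plays the role of the support set. Note that the iteration converges to the closed-form scores multiplied by one minus the propagation coefficient, which does not change the predicted class.

```python
import numpy as np

def propagate_closed_form(lap, Y, alpha):
    """Closed-form scores: solve (I - alpha * lap) F = Y."""
    n = lap.shape[0]
    return np.linalg.solve(np.eye(n) - alpha * lap, Y)

def propagate_iterative(lap, Y, alpha, n_steps):
    """Repeatedly mix neighbor scores with the initial labels."""
    F = Y.copy()
    for _ in range(n_steps):
        F = alpha * lap @ F + (1 - alpha) * Y
    return F

# Toy graph: nodes 0-2 form one cluster, nodes 3-5 another (weak cross links).
W = np.full((6, 6), 0.1)
W[:3, :3] = 1.0
W[3:, 3:] = 1.0
deg = W.sum(axis=1)
lap = W / np.sqrt(np.outer(deg, deg))   # symmetric normalization

# Nodes 0 and 3 are labeled (support); all other rows stay zero (query).
Y = np.zeros((6, 2))
Y[0, 0] = 1.0
Y[3, 1] = 1.0

alpha = 0.99
F_closed = propagate_closed_form(lap, Y, alpha)
F_iter = propagate_iterative(lap, Y, alpha, n_steps=3000)
pred = F_closed.argmax(axis=1)
```

Solving the linear system with `np.linalg.solve` avoids forming the matrix inverse explicitly, which is usually the numerically safer choice.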

After computing the prediction scores, we obtain class-membership probability scores for the feature embeddings from the i-th convolutional block by applying a softmax function as follows:

$$P(\tilde{y}^{i}_j = k \mid x_j) = \frac{\exp\left(F^{i*}_{jk}\right)}{\sum_{n=1}^{N} \exp\left(F^{i*}_{jn}\right)}, \quad (6)$$

where $\tilde{y}^{i}_j$ is the predicted class label for feature embedding of the j-th input image from the i-th convolutional block, and $F^{i*}_{jk}$ is the predicted score at the k-th position.

The total loss term is the combination of cross-entropy loss for different layers’ features:

$$J = \sum_{i=2}^{4} \lambda_i\, J^{i}_{\text{CE}}, \quad (7)$$

where $J^{i}_{\text{CE}}$ is the cross-entropy loss computed from the class-membership probabilities of the i-th convolutional block, and $\lambda_i$ is a relative weight for the cross-entropy loss term of the feature embeddings from the i-th convolutional block and is a hyperparameter during the episodic training.

The feature embeddings from the second ($i = 2$) and third ($i = 3$) convolutional block containing lower-level information are only used during training to improve the feature extractor module (Section 3.2). In both the validation and test stage, the class labels are obtained from the prediction on feature embeddings of the last convolutional block only, that is, the fourth convolutional block, $i = 4$.
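The combination of the per-block losses, and the use of only the last block at test time, can be sketched as follows. The score matrices below are random placeholders standing in for label propagation outputs, and the function names, the dictionary keyed by block index, and the choice of which examples are scored are assumptions of this example.

```python
import numpy as np

def softmax(scores):
    """Row-wise softmax over class scores."""
    shifted = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(scores, labels):
    """Mean negative log-probability of the true class."""
    probs = softmax(scores)
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.log(picked + 1e-12).mean())

def total_loss(scores_per_block, labels, weights):
    """Weighted sum of the cross-entropy losses of all blocks."""
    return sum(weights[i] * cross_entropy(scores_per_block[i], labels)
               for i in scores_per_block)

rng = np.random.default_rng(0)
labels = np.array([0, 1, 2, 3, 4, 0, 1, 2])
# Placeholder propagation scores for blocks 2, 3, and 4 (8 images, 5 classes).
scores_per_block = {i: rng.normal(size=(8, 5)) for i in (2, 3, 4)}
weights = {2: 1.0, 3: 1.0, 4: 1.0}

loss = total_loss(scores_per_block, labels, weights)   # used during meta-training
test_pred = scores_per_block[4].argmax(axis=1)          # only block 4 at test time
```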

4. Experiments

In this section, we evaluate the proposed Looking-Back method on two popular FSL benchmark datasets, i.e., miniImageNet [13] and tieredImageNet [25], and compare with other state-of-the-art FSL methods.

4.1. Datasets

miniImageNet. The miniImageNet dataset is widely used for comparing different few-shot learning methods [13]. It is a small subset of ImageNet [40] that consists of 100 classes with 600 examples per class. For our experiments, we split the dataset into 64 classes for training, 16 classes for validation, and 20 classes for testing following [13].

tieredImageNet. Similar to miniImageNet, the tieredImageNet dataset is a small, simplified version of ImageNet proposed by [25]. Different from miniImageNet, tieredImageNet has a hierarchical or tiered structure consisting of 34 larger classes, where each larger class contains 10 to 30 smaller classes (i.e., related subcategories). tieredImageNet contains 608 smaller classes and 779,165 images in total. We split the dataset as described in [25], resulting in a training set consisting of 20 larger classes, a validation set consisting of 6 larger classes, and the test set consisting of 8 larger classes. The advantage of splitting the dataset based on the larger classes, as opposed to splitting into the subclasses, is that this approach creates a clearer distinction between training, test, and validation sets.

4.2. Implementation Details

As mentioned before, we adopted the Conv-64F architecture (Section 3.2) as the backbone for our model. During training, we used the three layers’ feature embeddings as shown in Figure 1 and Figure 2. For label propagation, we chose the same hyperparameters as described in [23], setting $\alpha$ (the propagation coefficient, Equations (4) and (5)) to 0.99 and m (the number of largest values kept per row when constructing the graph Laplacians) to 20. Moreover, we gave equal weighting to the individual loss terms when computing the total loss (Equation (7)), that is, setting $\lambda_2$, $\lambda_3$, and $\lambda_4$ to 1.

During the episodic training, each episode was a 5-way K-shot task with 15 query images per class, mimicking the testing scenario. We used the Adam optimizer [41] to train the model and set the initial learning rate to 0.001. For miniImageNet, the learning rate was decayed by a multiplicative factor of 0.8 every 5000 episodes. The same multiplicative factor was used for decaying the learning rate when training on tieredImageNet, but it was decayed more frequently, every 2000 episodes, due to the larger size and complexity of tieredImageNet.
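The learning rate schedule can be written as a small function. This sketch assumes a staircase decay, meaning that the rate is held constant within each interval and multiplied by the factor at each interval boundary; the function name and argument names are hypothetical.

```python
def learning_rate(episode, base_lr=0.001, factor=0.8, step=5000):
    """Learning rate at a given episode under staircase decay (assumed)."""
    return base_lr * factor ** (episode // step)

# miniImageNet setting: decay every 5000 episodes.
lr_mini = [learning_rate(e) for e in (0, 4999, 5000, 10000)]
# More frequent decay, every 2000 steps, as described for tieredImageNet.
lr_tiered = learning_rate(10000, step=2000)
```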

To evaluate the model on the test set, we randomly sampled 600 5-way K-shot tasks from an independent test set with $K = 1$ and $K = 5$, respectively. In both scenarios, $K = 1$ and $K = 5$, there were 15 query samples in each class (that is, 75 query examples in total), which were used to compute the prediction accuracy for a given task or episode. The overall prediction accuracy of a given model was then calculated by averaging the prediction accuracy across these 600 episodes.
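The evaluation protocol amounts to computing one accuracy per episode and then summarizing the 600 values. The sketch below assumes a normal approximation for the 95% confidence interval (1.96 times the standard error), which is a common convention but is not spelled out in the text. The accuracy values are placeholders, not experimental results, and the function names are hypothetical.

```python
import math
import statistics

def episode_accuracy(predicted, true):
    """Fraction of correctly classified query examples in one episode."""
    correct = sum(p == t for p, t in zip(predicted, true))
    return correct / len(true)

def mean_and_ci95(accuracies):
    """Mean accuracy and half-width of a normal-approximation 95% interval."""
    mean = statistics.fmean(accuracies)
    std_err = statistics.stdev(accuracies) / math.sqrt(len(accuracies))
    return mean, 1.96 * std_err

# Placeholder accuracies for 600 episodes (alternating values, not real results).
accuracies = [0.4, 0.6] * 300
mean, half_width = mean_and_ci95(accuracies)
```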

4.3. Results and Discussion

4.3.1. Overall performance

In this section, we compare our proposed Looking-Back method to other state-of-the-art FSL methods. All neural network implementations are based on a Conv-64F backbone architecture for feature extraction as described in Section 3.2. Following the established conventions, we consider both 5-way 1-shot and 5-way 5-shot settings for the performance comparisons, using the two common FSL benchmark datasets miniImageNet and tieredImageNet as described in Section 4.1. The accuracy is computed as the average of 600 test episodes (as described in Section 4.2) with a 95% confidence interval. As the results for miniImageNet (Table 1) and tieredImageNet (Table 2) indicate, our proposed Looking-Back method achieves state-of-the-art results on both datasets, in both the 5-way 1-shot and 5-way 5-shot scenarios.

Table 1. Accuracy (in %) on miniImageNet with 95% confidence interval. Best results are shown in bold.

Table 2. Accuracy (in %) on tieredImageNet with 95% confidence interval. Best results are shown in bold.

4.3.2. Comparing Looking-Back and TPN Training in a “Higher Shot” Setting

The performance comparisons between Looking-Back and TPN [23] (Table 1 and Table 2) provide supporting evidence that utilizing lower-level information, which is contained in previous layers’ feature embeddings, substantially improves the predictive performance of our Looking-Back method. In this section, we investigate whether the lower-level information can also enhance the performance in a “Higher Shot” setting.

In FSL, it is common to use support sets of similar size during meta-training and testing. However, some researchers found that using larger support sets during meta-training (i.e., increasing the number of “shots”) can improve the predictive performance of FSL systems based on evaluation on the same (i.e., smaller shot) test sets [10,30]. Similar observations have been made in the original TPN paper [23], where the authors described that increasing the number of examples in the support sets during meta-training (referred to as “Higher Shot”) can improve the predictive accuracy during testing. However, using a larger number of shots during meta-training than testing does not always improve the predictive performance, and it is still an open area of research [43].

Although “Higher Shot” training is not the focus of this paper, we conducted experiments with higher shots and report the results in Table 3, adopting the procedure described in the original TPN paper [23] to enable fair comparisons. The results in Table 3 indicate that Looking-Back utilizing lower-level information outperforms TPN in a “Higher Shot” setting as well.

Table 3. Accuracy (in %) after training with higher shots. Here, the system evaluated on a 1-shot test set was trained in a 5-shot setting, and the system evaluated on a 5-shot test set was trained in a 10-shot setting. Best results are shown in bold.

Table 4 summarizes the performance gain of Looking-Back over TPN for the regular meta-training scenario (same number of shots in the training and test tasks, Table 1 and Table 2) and meta-training with higher shots (Table 3). From Table 4, we can observe that on both datasets, the improvement in 1-shot settings is larger than in 5-shot settings, for same-shot as well as higher-shot meta-training. We argue that when more support images are available (higher shot), the role of utilizing lower-level information becomes less important. The main rationale behind using previous layers’ feature embeddings is to use additional lower-level information when information from the final layer’s feature embedding is scarce. Intuitively, the benefit of using lower-level information diminishes if a meta-learner can utilize a larger number of examples in the support set.

Table 4. Performance gain (in % points) of Looking-Back vs. transductive propagation network (TPN) on the test sets when using lower-level information in same-shot (Same) and higher-shot (Higher) training.

4.3.3. Influence of Higher-Shot Training on Looking-Back

As indicated by the results in Table 4 and hypothesized in the previous section, our Looking-Back method could be more useful when the data is more scarce. This is likely because the more information is available during training (i.e., the support sets consist of additional examples in higher-shot settings), the more negligible the information from earlier layers becomes as supportive information.

In a 1-shot setting, we were still able to observe that the lower-level information used by Looking-Back models benefits the model performance when training in the higher shots setting, as summarized in Table 5. However, in the presence of a larger number of images, using lower-level information during training results in more limited improvements (5-shot test setting on tieredImageNet) or may have a small detrimental impact (5-shot test settings on miniImageNet) as shown in Table 5. This finding provides further evidence that the lower-level information has a more beneficial effect when the data is more scarce.

Table 5. Performance gain (in % points) of Looking-Back when trained with higher shots compared to training with same shots.

4.3.4. Why Only the Last Layer’s Information Is Used during Inference

Both DN4 [30] and the dense classification network [31] use the entire expanded feature embeddings of the last layer during training as well as inference. One of the main reasons we only use the feature embeddings of the last layer during inference is that the lower-level information from previous layers is used to augment the graph construction during training but does not have equal relevance for the prediction task during inference. In contrast to Looking-Back, in both DN4 and the dense classification network, all parts of the expanded feature embeddings are on the same footing.

To test our hypothesis that the feature embeddings of the last layer bear the most relevance for the prediction task, we compared the prediction accuracy of Looking-Back when using different layers for the class label prediction. As indicated by the results in Table 6, the prediction accuracy of the 4th (last) layer is higher than the prediction accuracy of the 3rd layer, and the accuracy of the 3rd layer is higher than the accuracy of the 2nd layer, supporting the hypothesis that the last layer contains the most useful information.
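The layer-wise comparison described above can be sketched as follows. This is a minimal illustration, not the implementation used in the experiments: it applies the closed-form label propagation of Zhou et al. [39], F* = (I - αS)^(-1) Y, to a Gaussian similarity graph built from one layer's embeddings at a time. The fixed kernel width `sigma`, the value of `alpha`, and the synthetic embeddings (class centers plus noise standing in for real layer outputs) are assumptions made for this example.

```python
import numpy as np

def propagate_labels(emb, y_support, n_way, alpha=0.99, sigma=1.0):
    """Closed-form label propagation on a Gaussian similarity graph.

    emb: (n, d) embeddings, support examples first, then query examples.
    y_support: (n_support,) integer labels of the support examples.
    Returns the predicted labels of the query examples.
    """
    n, n_s = emb.shape[0], y_support.shape[0]
    d2 = ((emb[:, None, :] - emb[None, :, :]) ** 2).sum(-1)
    W = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)                      # no self-loops
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(1) + 1e-12)
    S = W * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]  # D^(-1/2) W D^(-1/2)
    Y = np.zeros((n, n_way))
    Y[np.arange(n_s), y_support] = 1.0            # one-hot rows for support only
    F = np.linalg.solve(np.eye(n) - alpha * S, Y)
    return F[n_s:].argmax(1)

def toy_embeddings(y_all, noise, rng, dim=2):
    """Synthetic stand-in for one layer's embeddings (assumption, not real data)."""
    centers = 10.0 * np.arange(y_all.max() + 1)[:, None] * np.ones((1, dim))
    return centers[y_all] + noise * rng.standard_normal((y_all.size, dim))

rng = np.random.default_rng(0)
n_way = 5
y_support = np.arange(n_way)                 # 5-way 1-shot support set
y_query = np.repeat(np.arange(n_way), 15)    # 15 query examples per class
y_all = np.concatenate([y_support, y_query])

# Assumed noise levels: earlier layers are simulated as less class-separated.
layer_noise = {"layer 2": 4.0, "layer 3": 2.0, "layer 4": 0.1}
accuracies = {}
for name, noise in layer_noise.items():
    pred = propagate_labels(toy_embeddings(y_all, noise, rng), y_support, n_way)
    accuracies[name] = float((pred == y_query).mean())
```

With real data, `toy_embeddings` would be replaced by the flattened feature maps of the corresponding layer for the same episode, so that each layer is evaluated on identical support and query sets.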

Table 6.

Different layers’ prediction accuracy (in %) on 5-way tasks after label propagation with same-shot training.

5. Conclusions

In this paper, we propose a new approach to FSL that captures additional information inside the feature-extracting network to improve prediction performance. In particular, the proposed Looking-Back method employs a graph structure to utilize the lower-level information from previous layers’ feature embeddings, which differs from existing methods that only focus on expansions of the last layer’s feature embeddings. Experiments on two popular FSL datasets provide evidence that utilizing lower-level information improves the performance of FSL meta-learners.

Author Contributions

Conceptualization, Z.Y. and S.R.; investigation and validation, S.R. and Z.Y.; data curation, Z.Y.; writing—original draft preparation, Z.Y. and S.R.; writing—review and editing, Z.Y. and S.R.; visualization, Z.Y. and S.R.; supervision, S.R.; project administration, S.R.; funding acquisition, S.R. All authors have read and agreed to the published version of the manuscript.

Funding

Support for this review article was provided by the Office of the Vice Chancellor for Research and Graduate Education at the University of Wisconsin-Madison with funding from the Wisconsin Alumni Research Foundation.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318.

- Wani, M.A.; Bhat, F.A.; Afzal, S.; Khan, A.I. Supervised deep learning in face recognition. In Advances in Deep Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 95–110.

- Wang, W.; Liang, D.; Chen, Q.; Iwamoto, Y.; Han, X.H.; Zhang, Q.; Hu, H.; Lin, L.; Chen, Y.W. Medical image classification using deep learning. In Deep Learning in Healthcare; Springer: Berlin/Heidelberg, Germany, 2020; pp. 33–51.

- Raschka, S.; Patterson, J.; Nolet, C. Machine learning in Python: Main developments and technology trends in data science, machine learning, and artificial intelligence. Information 2020, 11, 193.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. 2019, 53, 63.

- Kang, B.; Liu, Z.; Wang, X.; Yu, F.; Feng, J.; Darrell, T. Few-shot object detection via feature reweighting. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8420–8429.

- Bao, Y.; Wu, M.; Chang, S.; Barzilay, R. Few-shot Text Classification with Distributional Signatures. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Wierstra, D. Matching networks for one shot learning. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 3630–3638.

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 4077–4087.

- Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.S.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

- Ravi, S.; Larochelle, H. Optimization as a model for few-shot learning. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Qi, H.; Brown, M.; Lowe, D.G. Low-shot learning with imprinted weights. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5822–5830.

- Gidaris, S.; Komodakis, N. Dynamic few-shot visual learning without forgetting. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4367–4375.

- Qiao, S.; Liu, C.; Shen, W.; Yuille, A.L. Few-shot image recognition by predicting parameters from activations. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7229–7238.

- Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The graph neural network model. IEEE Trans. Neural Netw. 2009, 20, 61–80.

- Duvenaud, D.K.; Maclaurin, D.; Iparraguirre, J.; Bombarell, R.; Hirzel, T.; Aspuru-Guzik, A.; Adams, R.P. Convolutional networks on graphs for learning molecular fingerprints. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 2224–2232.

- Kipf, T.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2017, arXiv:1609.02907.

- Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph attention networks. arXiv 2018, arXiv:1710.10903.

- Gilmer, J.; Schoenholz, S.S.; Riley, P.F.; Vinyals, O.; Dahl, G.E. Neural message passing for quantum chemistry. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 1263–1272.

- Garcia, V.; Estrach, J.B. Few-shot learning with graph neural networks. In Proceedings of the 6th International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Liu, Y.; Lee, J.; Park, M.; Kim, S.; Yang, E.; Hwang, S.J.; Yang, Y. Learning to propagate labels: Transductive propagation network for few-shot learning. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Kim, J.; Kim, T.; Kim, S.; Yoo, C.D. Edge-labeling graph neural network for few-shot learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 11–20.

- Ren, M.; Triantafillou, E.; Ravi, S.; Snell, J.; Swersky, K.; Tenenbaum, J.B.; Larochelle, H.; Zemel, R.S. Meta-Learning for semi-supervised few-shot classification. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Li, X.; Sun, Q.; Liu, Y.; Zhou, Q.; Zheng, S.; Chua, T.S.; Schiele, B. Learning to self-train for semi-supervised few-shot classification. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 10276–10286.

- Yu, Z.; Chen, L.; Cheng, Z.; Luo, J. TransMatch: A Transfer-Learning Scheme for Semi-Supervised Few-Shot Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12856–12864.

- Xing, C.; Rostamzadeh, N.; Oreshkin, B.; Pinheiro, P.O. Adaptive cross-modal few-shot learning. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 4848–4858.

- Schonfeld, E.; Ebrahimi, S.; Sinha, S.; Darrell, T.; Akata, Z. Generalized zero-and few-shot learning via aligned variational autoencoders. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8247–8255.

- Li, W.; Wang, L.; Xu, J.; Huo, J.; Gao, Y.; Luo, J. Revisiting local descriptor based image-to-class measure for few-shot learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7253–7260.

- Lifchitz, Y.; Avrithis, Y.; Picard, S.; Bursuc, A. Dense classification and implanting for few-shot learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9250–9259.

- Nichol, A.; Achiam, J.; Schulman, J. On first-order meta-learning algorithms. arXiv 2018, arXiv:1803.02999.

- Mallya, A.; Lazebnik, S. PackNet: Adding multiple tasks to a single network by iterative pruning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7765–7773.

- Mishra, N.; Rohaninejad, M.; Chen, X.; Abbeel, P. A simple neural attentive meta-learner. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Oreshkin, B.; López, P.R.; Lacoste, A. TADAM: Task dependent adaptive metric for improved few-shot learning. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 721–731.

- Lee, K.; Maji, S.; Ravichandran, A.; Soatto, S. Meta-learning with differentiable convex optimization. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 10657–10665.

- Sun, Q.; Liu, Y.; Chua, T.S.; Schiele, B. Meta-transfer learning for few-shot learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 403–412.

- Chung, F.R. Spectral Graph Theory; American Mathematical Soc. Press: Providence, RI, USA, 1997.

- Zhou, D.; Bousquet, O.; Lal, T.N.; Weston, J.; Schölkopf, B. Learning with local and global consistency. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 13–18 December 2004; pp. 321–328.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Fort, S. Gaussian prototypical networks for few-shot learning on omniglot. arXiv 2018, arXiv:1708.02735.

- Cao, T.; Law, M.T.; Fidler, S. A theoretical analysis of the number of shots in few-shot learning. arXiv 2020, arXiv:1909.11722.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).