1. Introduction

In an era of digital transformation, traditional bureaucratic organizational structures tend to be replaced by e-government projects. Information and Communications Technology (ICT) has enabled a reduction in administrative costs and leveraged the immense potential of technology for more efficient public services. Digital public services (also called e-government) are delivered to citizens by the government using ICT. Examples of digital public services include income tax declaration, notification and assessment; the issuing of birth and marriage certificates; the renewal of a driver’s license; and other requests for and deliveries of permissions and licenses. By using digital services, the government can deliver information and services to citizens anytime, anywhere, and on any platform or device.

The adoption of digital services in the public sector is complex and challenging, particularly in developing countries [1]. This is due to the inefficiency of public organizations, the shortage of skilled human resources, poor ICT infrastructure, low standards of living and large rural populations [2]. Many developing countries have implemented specific e-government initiatives to make full use of the potential benefits of e-government [3]. In this sense, the demand for a systematic, continuous and effective assessment of the provision of digital public services contrasts with the lack of clarity regarding performance indicators, as service evaluation is a broad theme [4].

The development of e-government creates a need for its continuous evaluation around the world [5]. The literature offers several approaches to evaluating the performance of e-government. The readiness assessment, for example, examines the maturity of the e-government environment by evaluating the awareness, willingness, and preparedness of e-government stakeholders and identifying the enabling factors for the development of e-government [6]. The main advantage of this approach is its use of quantifiable indicators that provide an overview of the maturity of e-government [3]. However, using the readiness perspective to evaluate e-government is often criticized for neglecting the demands of citizens and the impact of digital services on citizens and society.

There have been many other attempts to evaluate e-government across the world's regions. In the UK, the performance of digital public services is assessed considering three public value evaluation dimensions: quality of public service delivery, outcomes, and trust [7]. Quality of public service delivery is evaluated through the level of information provision, level of use, availability of choice, user satisfaction, user priorities, fairness, and cost savings. The European e-Government Action Plan 2016–2020 [8] assesses e-government in terms of the reduction of the administrative burden on citizens, the improvement of citizens’ satisfaction and the inclusiveness of public services. The Russian Federation [9] assesses effectiveness in terms of the quality of public services, trust, and outcomes. The Agency for the Development of Electronic Administration in France [10] proposes a framework for evaluating the public value of information technology in government with a focus on the financial benefits of e-government projects for citizens. One of the critical issues is how to evaluate and assess the success of such projects. The traditional value assessment methods used in the business field are not adequate for this purpose, as business and government hold different value perspectives and have different concerns [11].

Some of the more traditional models for service evaluation are based on efficiency, efficacy and effectiveness [12]. Effectiveness can be defined as the perception of changes made; efficacy refers to the extent to which the intended goals were achieved; and efficiency means doing more with fewer resources [13]. Among these three criteria, effectiveness plays a key role in producing the desired social outcomes. Indeed, it aims to guarantee practical results, as it would be useless to have the most satisfactory result if it were not possible to realize the impact of the service on society. In this sense, effectiveness is closely tied to the pursuit of user satisfaction.

The great challenge for the measurement of effectiveness in the public sector is to obtain valid data to inform the Public Administration of the results of the services. There is no consensus in the literature on the best indicator to measure the effectiveness of a public service. There is a gap on this subject, mainly regarding the importance of the indicators considering the needs of the user. In this sense, evaluating the effectiveness of public services from the perspective of the user is an opportunity to detect the factors that hinder or facilitate their impact on society.

This work is a systematic study carried out to identify the most common effectiveness-based indicators of compliance with the expectations of users of digital public services. For this purpose, a bibliographic review was performed to retrieve the most frequent indicators of effectiveness in the literature. Then, a perception survey was undertaken with academics and professionals in digital government to measure the relevance of the indicators and identify correlations between them. This perception survey with experts in the area of digital government is an important contribution of this work to the literature, since it presents a quantitative analysis of the relevance of each indicator for measuring the effectiveness of public services. Based on the results, we present some discussions and suggestions for the uptake of the indicators in the evaluation of public services.

This paper is organized as follows: Section 2 presents the steps of our systematic literature mapping; Section 3 briefly discusses some important results raised by our literature review; Section 4 presents the results of a perception survey carried out with experts in digital government; finally, Section 5 presents the final considerations of this paper.

2. Methodology: Mapping Study Planning

To support this research, we used methods of bibliometric studies [14] and systematic literature reviews [15]. Bibliometric studies are quantitative approaches to identify, evaluate and monitor published studies available in each area or line of research. They aim to examine how disciplines, fields of research, specialties and individual publications relate. Unlike bibliometric studies, the Systematic Literature Review is performed to identify publications and analyze their contents and consolidated results. Formal procedures for retrieving documents from digital publication bases, selection criteria, and well-established routines to extract and record data are followed. In this work, we adopted the Population, Intervention, Comparison, Outcome, Context (P.I.C.O.C) criteria to frame research questions [15,16]. The population of this study was defined as “e-gov”, the intervention was defined as “effectiveness”, the outcome was defined as “definition”, and the context was defined as “government”. A comparison scheme was not set. This formal procedure was useful to postulate an initial search string.
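As an illustration of how P.I.C.O.C terms can be turned into a Boolean search string, the following minimal sketch joins one OR group per facet with AND and wraps the result in the SCOPUS `TITLE-ABS-KEY` field code. The helper names `or_group` and `build_query` are hypothetical, and the single-term lists simply repeat the terms quoted above; the output is not the string reported in Table 1.

```python
# Hypothetical sketch: derive a Boolean search string from P.I.C.O.C terms.
# The facet terms repeat those quoted in the text; the resulting query is an
# illustration only and is NOT the search string reported in Table 1.

def or_group(terms):
    """Join the synonyms of one facet into a quoted, parenthesised OR group."""
    quoted = [f'"{term}"' for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(picoc):
    """AND together one OR group per non-empty facet, searching title, abstract and keywords."""
    groups = [or_group(terms) for terms in picoc.values() if terms]
    return "TITLE-ABS-KEY(" + " AND ".join(groups) + ")"


picoc = {
    "population": ["e-gov"],
    "intervention": ["effectiveness"],
    "comparison": [],  # no comparison scheme was set, so this facet is skipped
    "outcome": ["definition"],
    "context": ["government"],
}

query = build_query(picoc)
print(query)
```

Adding synonyms to a facet list (for example, further spellings of "e-gov") would widen that facet's OR group without changing the rest of the string.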

In this study, the search was performed in the SCOPUS database. This database was chosen because of its advanced search engine, which allows the use of complex Boolean expressions, as well as several useful filters to refine the set of publications retrieved. SCOPUS also indexes publications from different sources, including ACM, Springer Link, and IEEE. Moreover, a high percentage of the articles in these databases is expected to be indexed in SCOPUS as well [17].

The research problem was: How does effectiveness support evaluations of digital public services from the perspective of the user? Based on this problem, the following research questions were formulated:

Then, an initial query string was defined, as presented in Table 1.

After running the search string in Table 1, a total of 550 papers were found. Then, we used SCOPUS’ own resources to evaluate this set of papers bibliometrically.

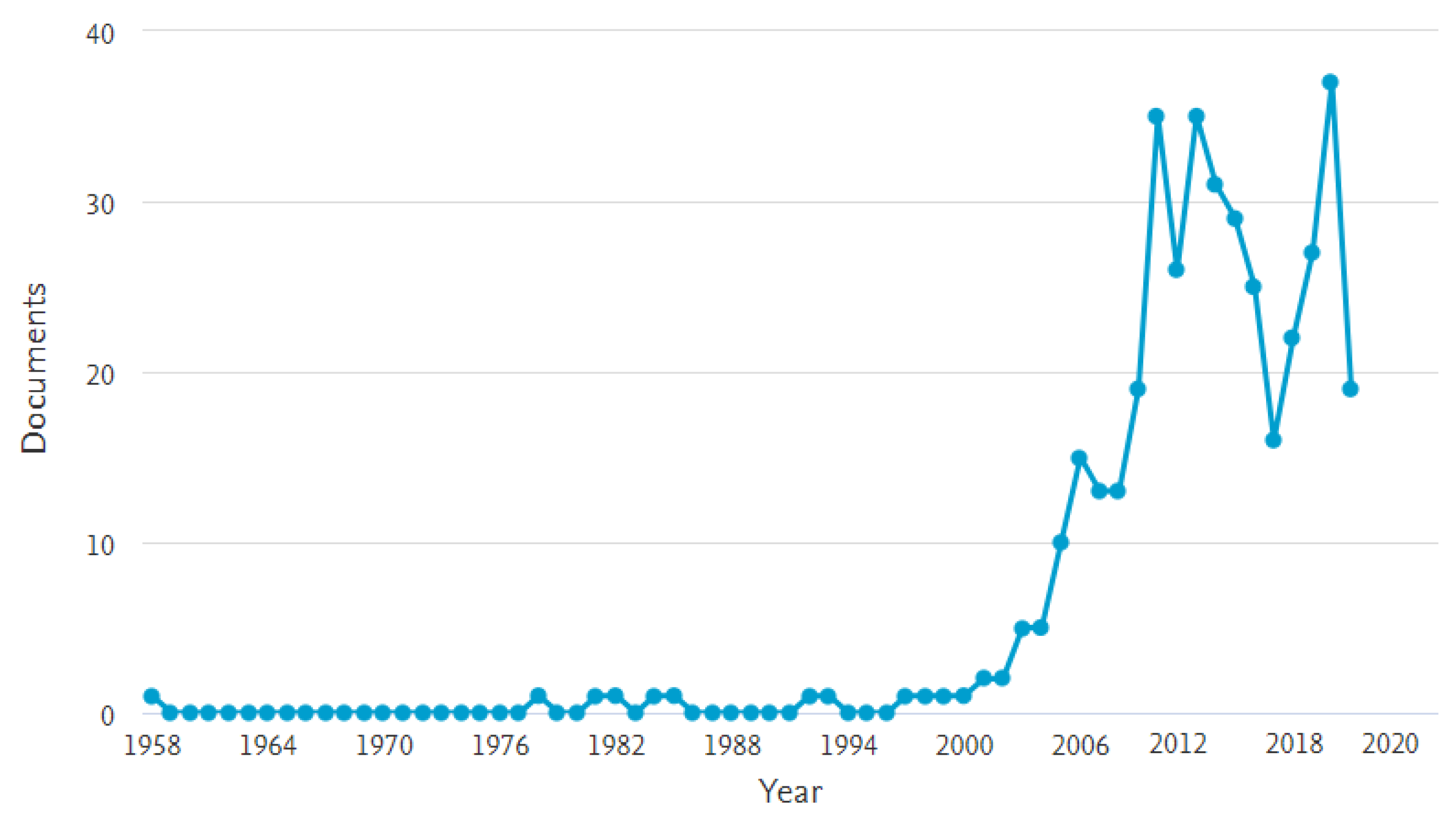

Figure 1 shows that most of the publications retrieved were published after 2009. Before this year, only four documents were, in fact, related to our study. This supported the idea of refining the search for publications since 2009. The ongoing year of 2020 was also included in the search, so papers still in press were considered.

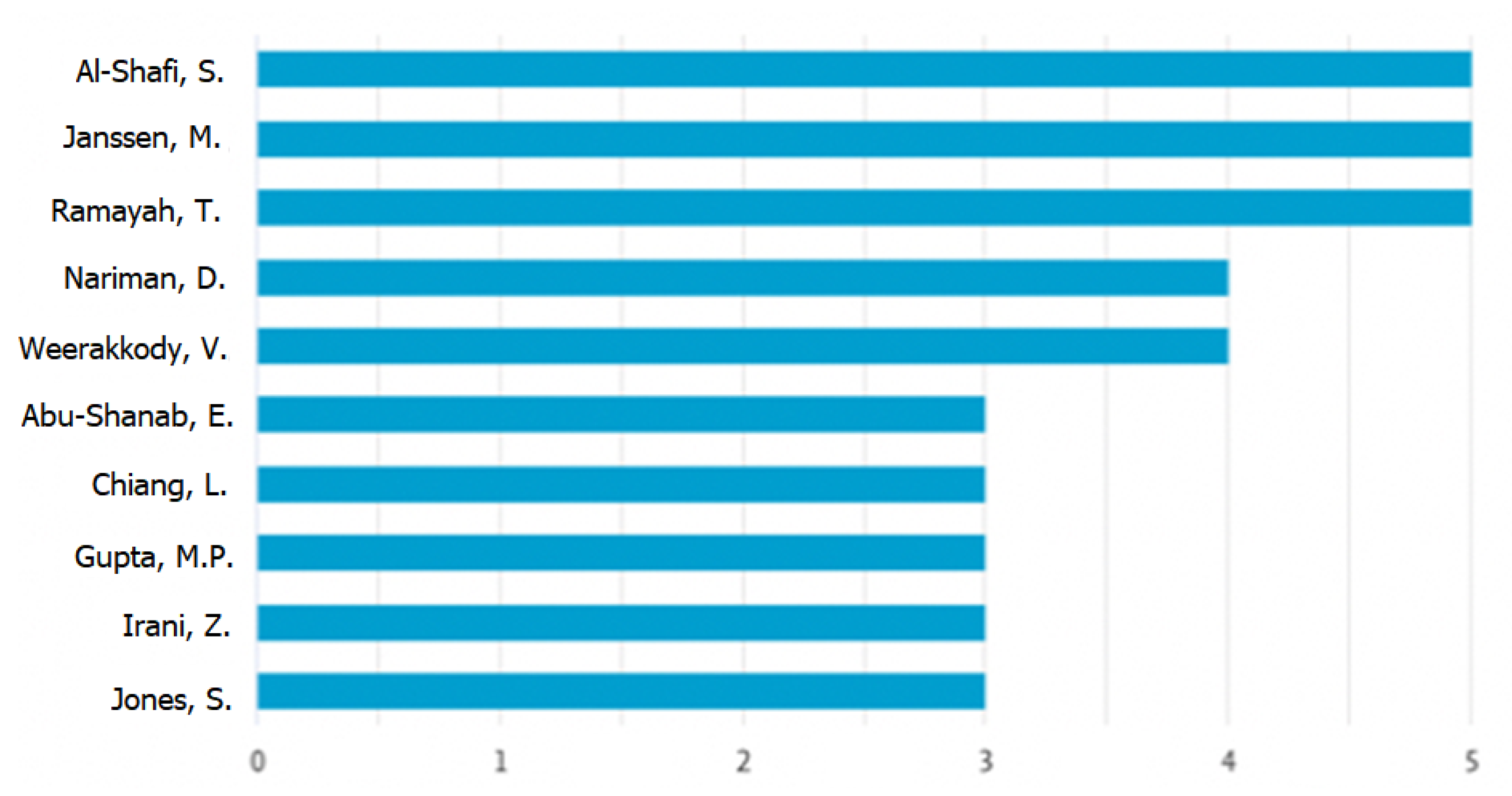

Figure 2 shows that the subject is still little explored, as the top 10 authors found have published between 2 and 5 papers.

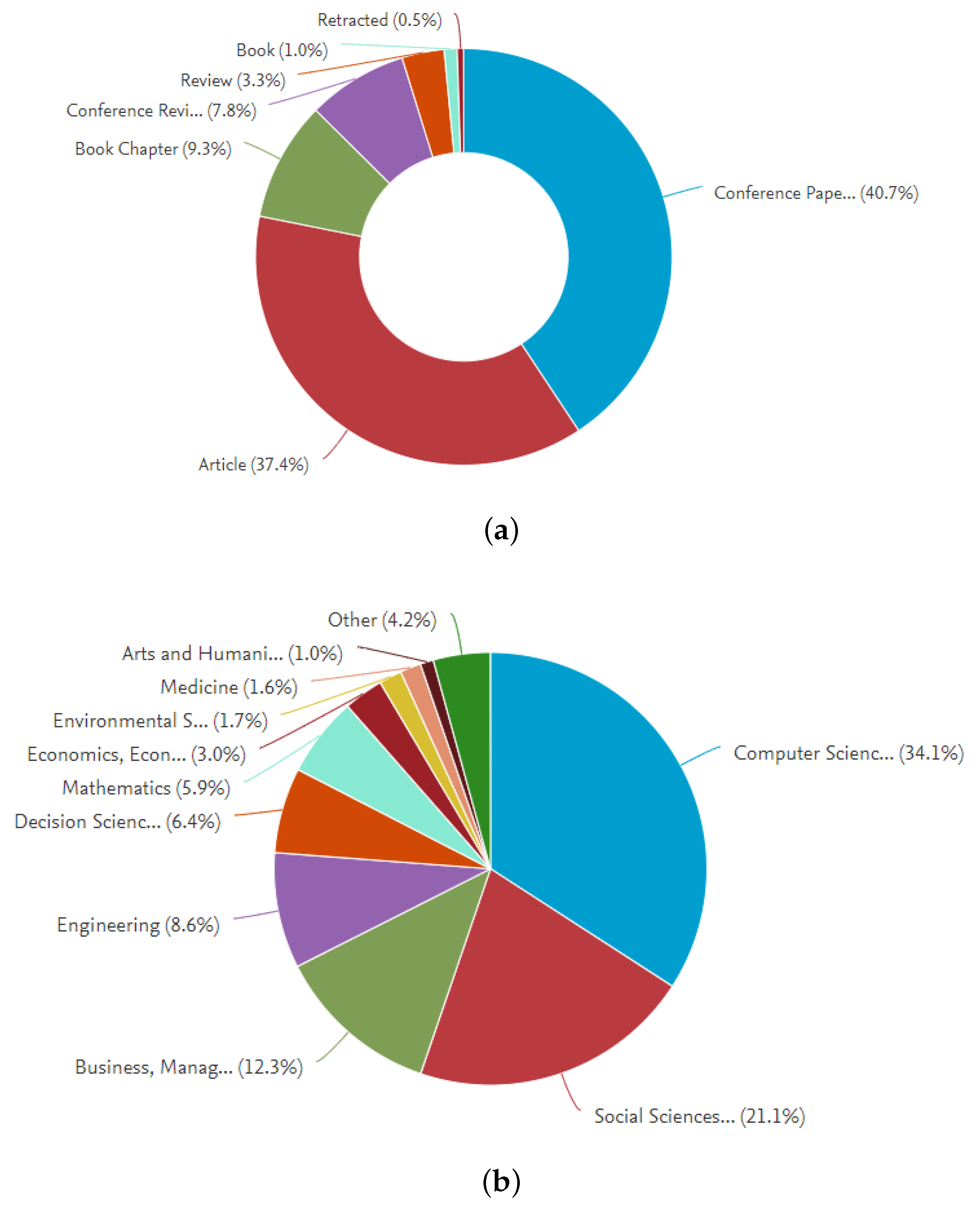

Figure 3a,b show the results by type of document and area. Newspaper articles and conference papers, in addition to papers in the areas of computer science, social science, business and management, and engineering, comprised most publications retrieved.

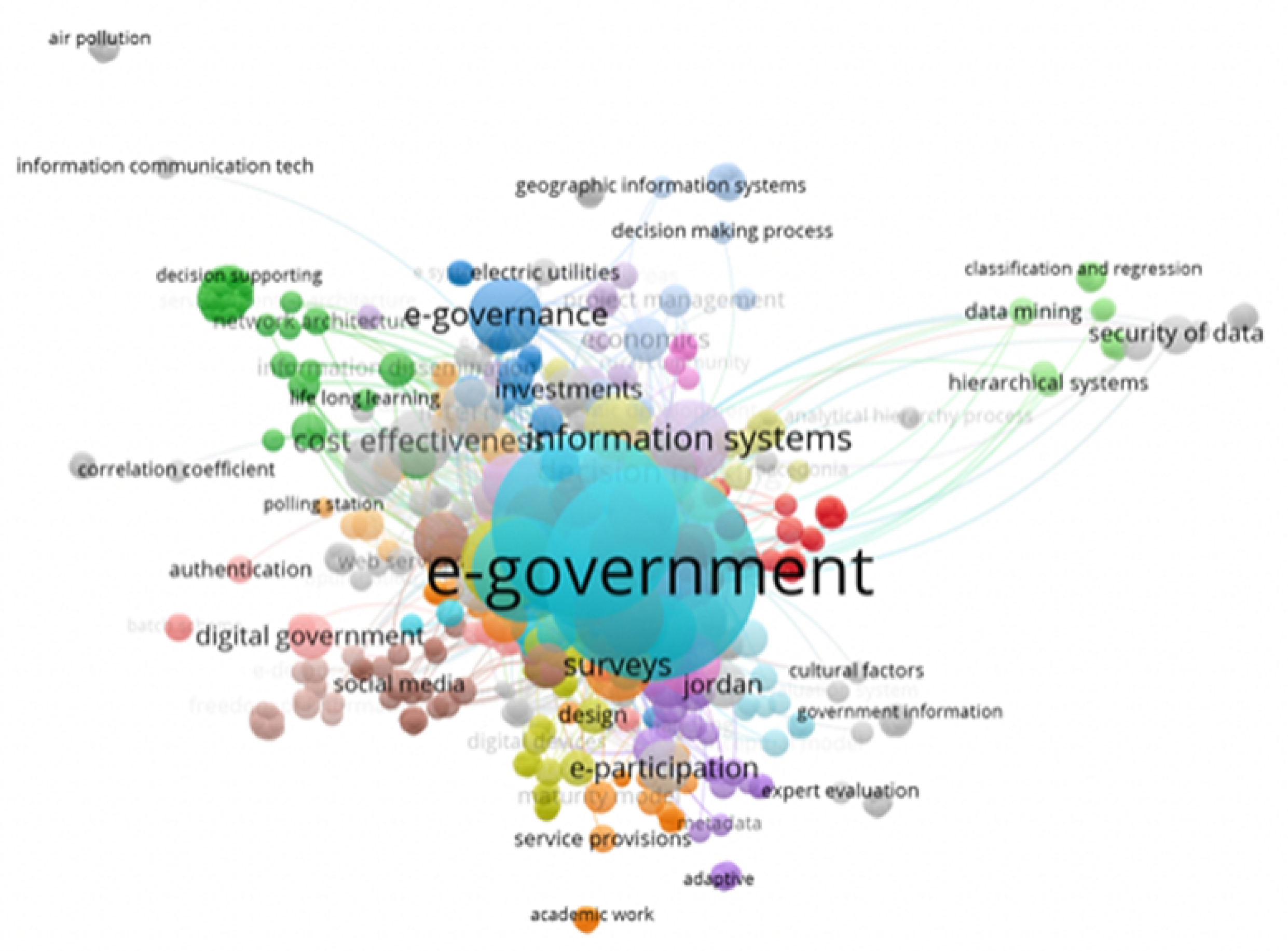

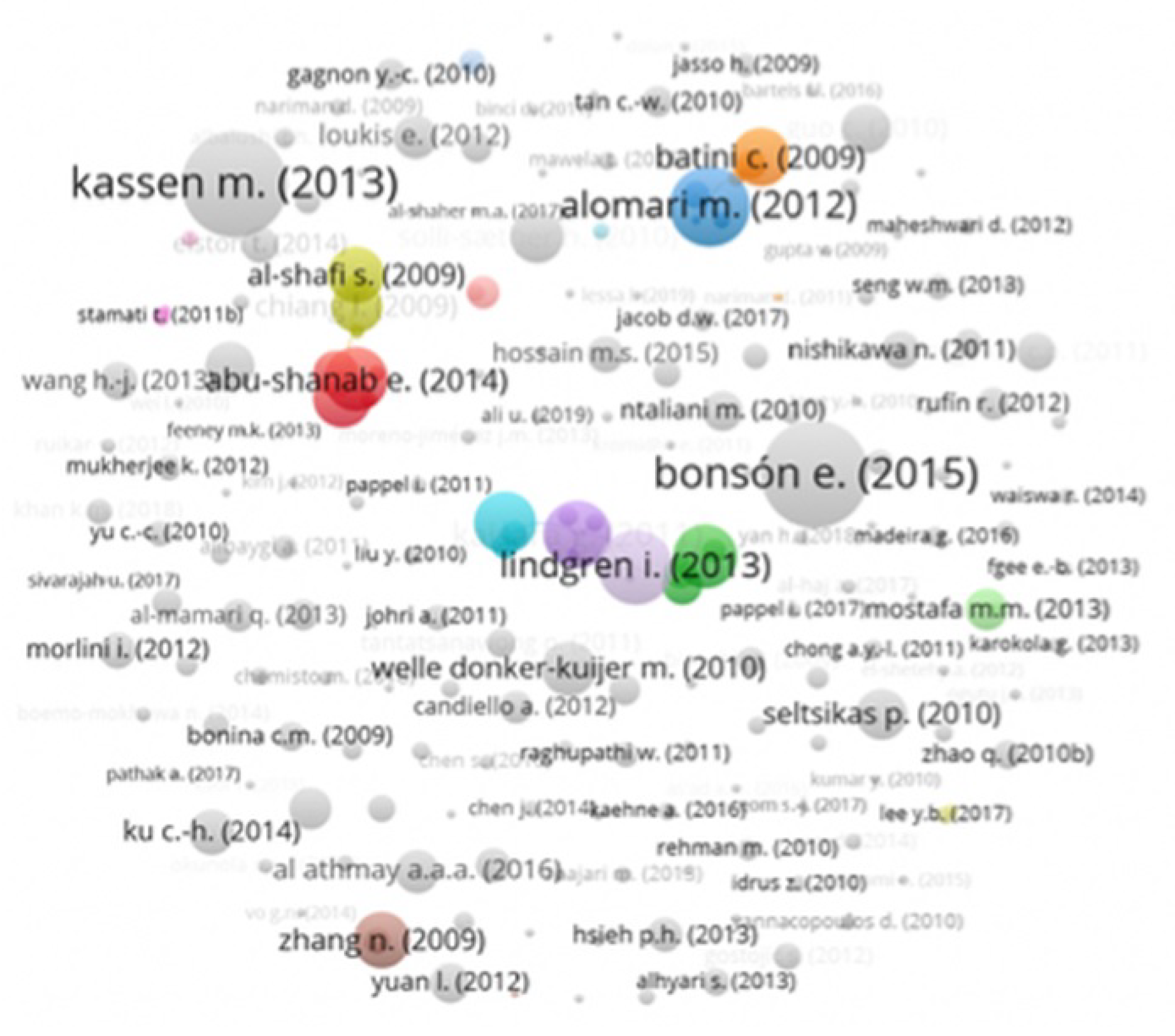

Next, the metadata generated by SCOPUS for the retrieved papers were evaluated with the VOSviewer software, version 1.6.15. This evaluation generated the graphs presented in Figure 4 and Figure 5. Figure 4 refers to the co-citation of keywords used in the 550 papers retrieved by the search string in Table 1. Figure 5 refers to the co-citation of authors. Co-citation analysis is a useful tool for identifying the most cited studies, authors and keywords, which are therefore considered to have the greatest influence on the subject. In fact, the word cloud of the co-citations overlaps with the frequency of authors described in Figure 2.

The search string was refined after the bibliometric evaluation of the preliminary results. When the new search string, presented in Table 2, was run, 289 records were retrieved. The titles and abstracts of these 289 documents were read, and the following criteria were applied to select those that would be read in full:

the paper should contain the keywords in the title or abstract;

the paper should contain concepts related to digital government;

the paper should answer at least one research question;

the paper should approach the point of view of users of digital government services.

By applying these criteria, the set of 289 papers was reduced to 120 papers, which were read by our research team. The data collected from the papers were listed on a digital spreadsheet in a collaborative work environment. The consolidated results are reported as follows.
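The screening step can be pictured as a conjunction of the four criteria. The sketch below is an assumption-laden illustration, not the procedure actually used: the `Record` fields, the `KEYWORDS` tuple and the example records are hypothetical, the three content criteria are modelled as yes/no judgements recorded by a reviewer, and "contain the keywords" is read as requiring every keyword.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """One retrieved paper plus the reviewer's yes/no judgements (hypothetical fields)."""
    title: str
    abstract: str
    about_digital_government: bool   # criterion 2, judged by a reviewer
    answers_research_question: bool  # criterion 3, judged by a reviewer
    takes_user_perspective: bool     # criterion 4, judged by a reviewer


# Hypothetical keyword list; the real keywords come from the refined search string.
KEYWORDS = ("effectiveness", "e-government")


def has_keywords(record, keywords=KEYWORDS):
    """Criterion 1: every keyword appears in the title or abstract (case-insensitive)."""
    text = f"{record.title} {record.abstract}".lower()
    return all(keyword.lower() in text for keyword in keywords)


def passes_screening(record):
    """A record is kept for full reading only if all four criteria hold."""
    return (
        has_keywords(record)
        and record.about_digital_government
        and record.answers_research_question
        and record.takes_user_perspective
    )


# Made-up records, for illustration only.
records = [
    Record("Effectiveness of e-government portals", "A user survey.", True, True, True),
    Record("Effectiveness of e-government back offices", "Staff workflows.", True, True, False),
    Record("Cloud cost models", "Pricing analysis.", False, False, False),
]

selected = [record for record in records if passes_screening(record)]
print(len(selected), "of", len(records), "records kept for full reading")
```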

3. Mapping Study—Discussion of Results

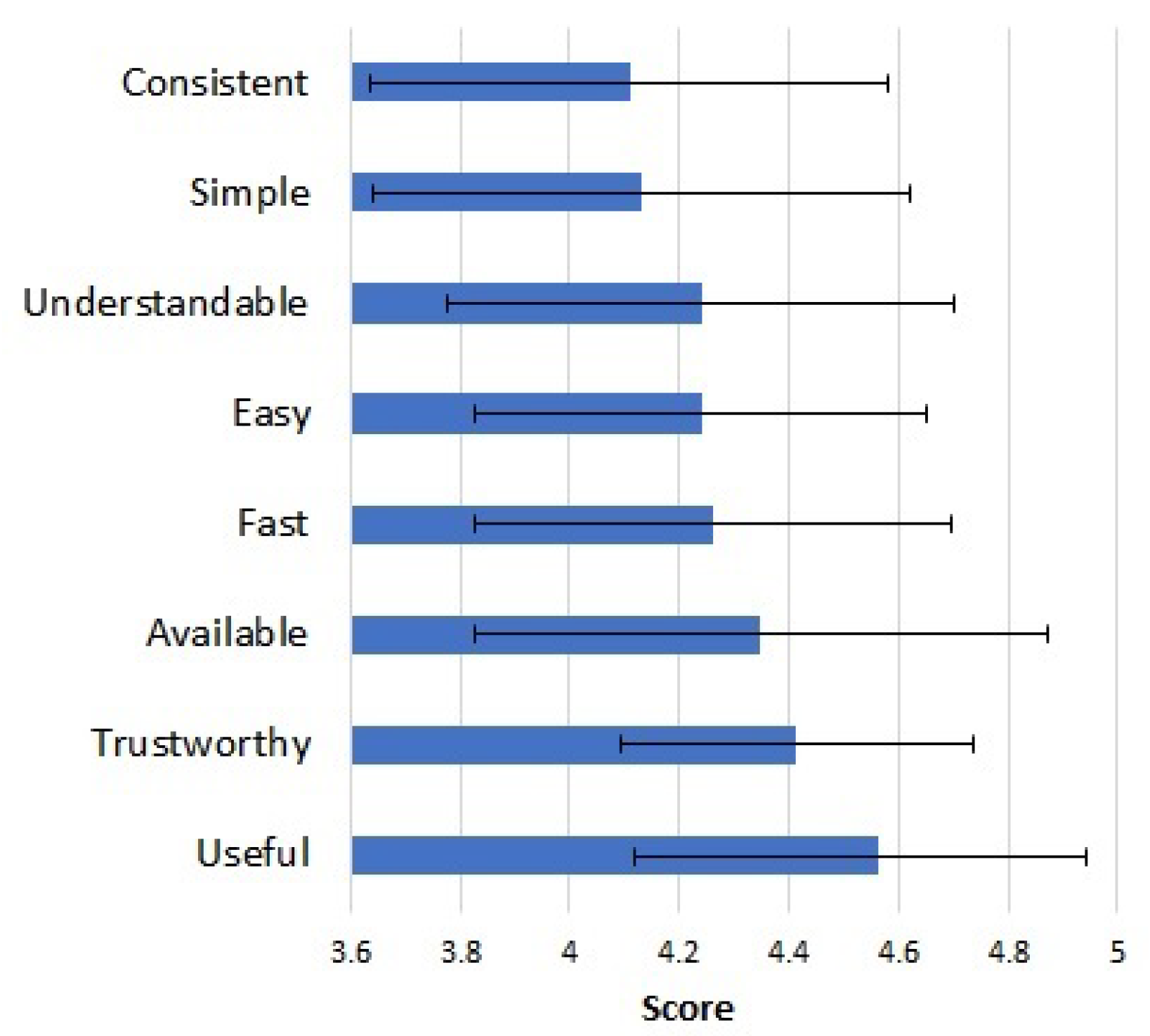

After the bibliographic research, we performed a quantitative analysis to identify the indicators related to effectiveness found in the 120 selected papers. Table 3 shows the most common indicators used to evaluate the effectiveness of digital public services.
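A frequency count of this kind can be sketched as follows. The paper identifiers and the indicator sets assigned to them are invented for illustration and do not reproduce the data behind Table 3; only the indicator labels are taken from this paper.

```python
from collections import Counter

# Made-up extraction sheet: which indicators each paper was coded with.
# Identifiers and assignments are illustrative assumptions, not the study's data.
papers = {
    "paper_a": {"useful", "simple"},
    "paper_b": {"useful", "trustworthy"},
    "paper_c": {"useful", "simple", "fast"},
}

# Count in how many papers each indicator appears (each paper counts once per indicator).
counts = Counter(
    indicator for indicators in papers.values() for indicator in indicators
)

# Rank by descending frequency, breaking ties alphabetically for a stable order.
ranking = sorted(counts.items(), key=lambda item: (-item[1], item[0]))

for indicator, frequency in ranking:
    print(f"{indicator}: {frequency}")
```

Storing the indicators of each paper as a set prevents a paper that mentions the same indicator several times from being counted more than once.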

The most frequent indicators of effectiveness are related to the ease of the service and usefulness. This is because many papers applied the Technology Acceptance Model (TAM) [18] to evaluate the effectiveness of digital services. In this model, effectiveness is analyzed considering the acceptance of new technologies by users of digital public services, i.e., the model seeks to predict the acceptance of systems based on the measurement of the intentions of the user and to explain such intentions through their attitudes, subjective norms, perceived usefulness, perceived ease of use and related variables. TAM consists of six dimensions, illustrated in Figure 6: (i) external variables, (ii) perceived usefulness, (iii) perceived ease of use, (iv) attitude towards using, (v) behavioral intention to use and (vi) actual system use.

One of the key issues of TAM is the influence of external variables on the beliefs, attitudes and behaviors of the users. The impact of perceived usefulness and perceived ease of use on the uptake of a system is then observed. These two indicators are related: greater ease of use can improve performance, allowing the user to produce more with the same effort, and therefore it affects perceived usefulness. The application of TAM to the public sector has been studied in countries such as India [19,20], Saudi Arabia [21,22], Tanzania [23] and Jordan [24]. Other works, such as [22], integrated the DeLone and McLean IS success model [25] with TAM. Cultural issues have also been taken into consideration by using the theory of personal values.

The ‘useful’ indicator is a fundamental driver of usage intentions affecting the perceived effectiveness of digital public services. This indicator has been used extensively in information systems and technology research [26] and refers to “the extent to which a person believes that using a particular technology will enhance her/his job performance” [18]. The service management literature has stated that the service delivery system has a direct impact on service value [27]. It has been argued that the perceived e-service system delivery process is directly related to the usefulness of the service, yielding a component of value for public services. Some works (e.g., [28]) presented an extension of the TAM model to define the determinants of perceived usefulness and intentions of use related to social influence and cognitive processes. Such determinants are also related to the external variables of the original model.

The ‘simple’ indicator refers to the process of user interaction with the services. It goes beyond the usability of the services [29], as a service can be easy to use while its delivery process still demands redundant steps and/or too much bureaucracy. According to [30], e-service delivery has greater potential for success in public sector tasks that have low or limited levels of complexity. Simple processes yield a better user experience and improve quality and consistency, as simple e-services are easier to learn, easier to change and faster to execute [24].

Presently, security and privacy concerns are increasing with the rapid growth of online services, and users are becoming more and more reluctant to give their personal information online [31]. The ‘trustworthy’ indicator can refer both to the perceived security of the system used to provide the service and to trust in the institution that provides the service. In fact, trust in the system is especially important for services that use the Internet as a transaction channel [32], particularly those that require online payments [33], as this issue affects the quality and intention to use. The concept of trust regarding the use of e-government services is also justified by its relevance in the context of a political system, specific institutions or organizations, and political staff [34]. According to [35], trust in the institution and in the government is an important factor for the adoption and intention to use the service.

The use of communication technology has provided an opportunity to improve the quality of the service through electronic interactions [36]. The ‘available’ indicator refers to a variable of technical quality related to readiness and absence of interruptions in access to digital systems [37]. Methodologies have been proposed to describe the availability of e-services by modelling the evolutionary path of the digital interface between public agencies and users, such as the model proposed by the European Commission (2001) [38] in a report on the provision of public e-services, which measures the level of online sophistication of the services. ‘Available’ also refers to the coverage of the public service: it should be available to everyone, regardless of where the users live [39].

The ‘understandable’ indicator concerns the presentation of information with a view to simplicity in the execution of different transactions and in navigation along the service journey. It can be associated with web design and service complexity. According to [24], the adequacy, attractiveness and good organization of information in websites make the service more comprehensible to all citizens.

The ‘consistent’ indicator refers to the coherent maintenance and presentation of e-services in terms of design, organization and interactivity to optimize and meet the expectations of users [40]. According to [29], the consistency of a digital service is related to the perception of how easy it is for users to find what they are looking for. E-government services must be accessible and well-designed, and should follow established standards [41]. Maintaining consistency in the layout of the service is essential so that the pattern of interactions is the same for every process: once learned, it can be replicated in other contexts. In addition, the experience of use becomes much more engaging because users do not feel lost. Often the reason users do not interact with applications is this feeling, caused by the lack of consistency and standardization.

Finally, the ‘fast’ indicator refers to the ability to finish the service quickly. As organizations prepare for digitalization, so must their IT departments. This means they have to respond more quickly to requests from different groups of users, increase infrastructure flexibility, and improve the use of current resources [42]. An important advantage of ICT is that it promotes more efficient and cost-effective government, allows greater public access to information, and makes government more transparent and accountable to citizens [43]. Such initiatives particularly benefit rural areas by connecting regional and local offices with central government ministries. They also allow national government agencies to communicate and interact with their local constituencies and improve public services. To answer these needs, traditional approaches and modes of IT management are often insufficient. Public sector IT departments should adjust their operations in response to digitalization efforts such as smart cities and digital transformation. In this sense, the IT development process within the organization should be improved, i.e., how the IT department can better respond to the needs of business units. For this purpose, adjustments are required both in management and in daily operations. Moreover, changes should not be made only internally within the IT department, as the whole organization should be involved.

5. Final Considerations

The evaluation of public services has become an important aspect of the decision-making process by managers and public institutions. This evaluation increases the probability of obtaining better results and finding unexpected results. Monitoring and evaluation are always based on indicators that assist in decision-making, allowing for better performance, for more rational planning and for a clearer and more objective accountability.

This study aimed to identify the indicators commonly adopted in the literature to measure the effectiveness of digital public services regarding the perception of the user. Based on a literature review and a subsequent perception survey with experts in digital government, two groups of semantically correlated indicators were found. While the first group of indicators refers to factors commonly related to the quality of information, usability and technical performance of the service, the second one refers to the usefulness of the service. Based on the indicators and the statistical analyses carried out in our work, managers can create a more complete model for evaluating the effectiveness of their services, thus potentially increasing the satisfaction of their users with the service.

The ‘useful’ indicator may also refer to the value that citizens attribute to their experience in public services and that can be understood as “public value”. In other words, it refers to the provision of services that are actually necessary and will be used [40]. It provides a new way of thinking about the evaluation of government activities and a new conceptualization of the public interest and the creation of social value. In a citizen-centered approach, developing services without considering the demands of users may lead to low rates of service use. In this sense, the Public Administration should minimize wasteful and unnecessary public services to save costs that may generate fiscal stress. Providing appropriate services narrows the state management apparatus to its core functions, whereby it can provide better services and respond to demands for transparency and accountability.

According to [28], due to the nature of public services, effectiveness can be considered based not only on the quality perceived individually by users, but also on its social interest. The social interest of a public service is related to the government’s duty-power in guaranteeing the basic rights of the citizens [44]. For essential services (for example, those related to health), the usefulness and social need for the service are clear to the citizens. On the other hand, the social importance of other services (for example, the payment of fees and taxes) is sometimes not so clear, as the direct benefits are not noticeable for the citizen. This observation is important since the application and implication of service evaluation may be contingent on the perspective taken [45].

The literature is still unclear regarding how to measure the personal and social impact of public services. This gap is an opportunity for advances in research in this area, especially in developing countries [46]. Such impact could be analyzed, for example, considering the category of service delivery, i.e., the ways in which services are delivered to users. In this way, it is possible to capture a clear articulation of the nature, boundaries, components and elements of specific e-service experiences, and to further investigate the interaction between these factors and the dimensions of service quality [47]. Another possibility is to perform ethnographic studies on the digital transformation of services. Ethnography may be used as a method to collect qualitative information from the observation of people carrying out daily tasks and interacting in complex social environments [48]. An ethnographic analysis is pertinent when new technologies are studied, as it helps to find out and explain why many services are not welcomed or used.

Social indicators end up being less frequently assessed in the literature on the evaluation of digital services, but that does not make them less relevant. In recent years, the concept of public value has become popular in the United States, the European Union, Australia and even in developing countries due to its ability to investigate the performance of public services from the point of view of citizens [49].

Although all studies reviewed identified indicators of effectiveness, very few made recommendations about how to turn these qualitative indicators into quantitative scores. This is also a limitation of this work. Several studies noted difficulties associated with the development of quantitative measures. This work enriches the discussion on the evaluation of effectiveness as a tool to measure how well the quality of a public service meets the expectations of its users. The next stage of our study is to develop a practical model and apply it to real users. This model is being developed in partnership with the Digital Government Secretariat of the Brazilian Ministry of Economy.