Adverse Drug Event Detection Using a Weakly Supervised Convolutional Neural Network and Recurrent Neural Network Model

Abstract

1. Introduction

2. Related Work

3. Methods

3.1. Word Embedding

3.2. Framework of the WSM-CNN-LSTM Model

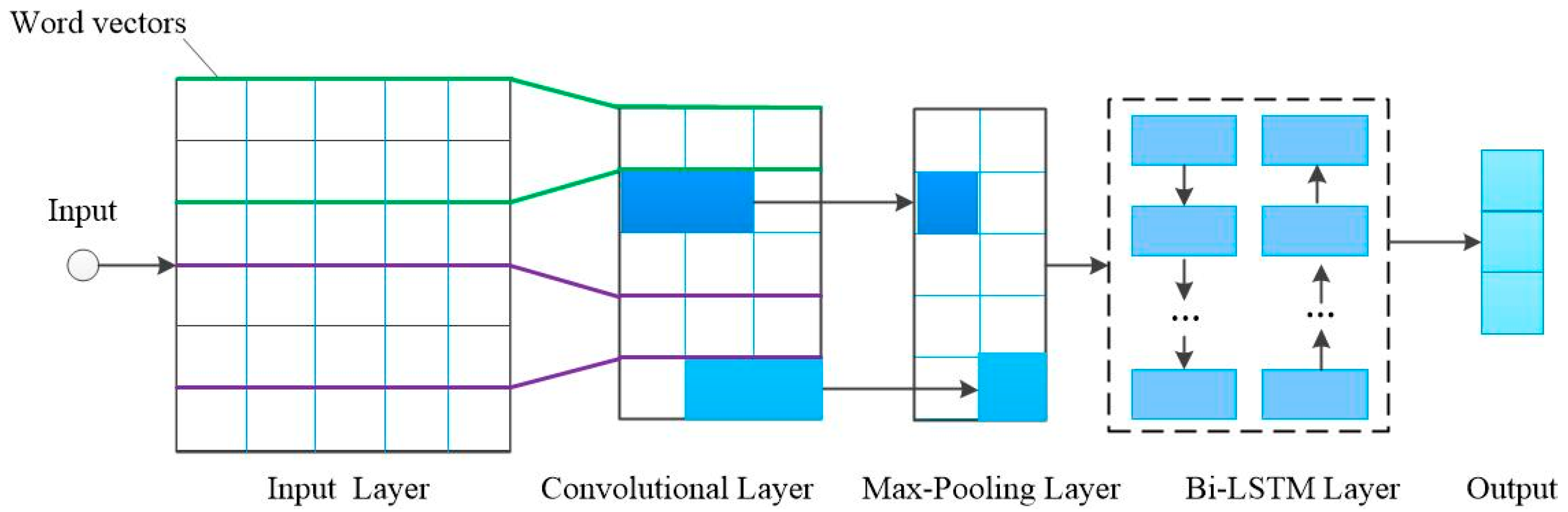

3.3. CNN-LSTM Model
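The subsections below name the layer sequence of the model: convolution, max-pooling with dropout, Bi-LSTM, a fully connected layer and softmax. As an illustration only, the following plain-Python forward pass strings those layers together over a sequence of word embeddings. It is a sketch under stated assumptions, not the authors' implementation. All sizes are hypothetical, weights are random and untrained, the convolution uses ReLU with no padding, pooling is non-overlapping, LSTM biases are omitted, the Bi-LSTM output is taken to be the concatenation of the final forward and backward hidden states, and the three output classes are assumed to be negative, neutral and positive.

```python
import math
import random

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    e = [math.exp(x - m) for x in v]
    s = sum(e)
    return [x / s for x in e]

def rand_matrix(rng, rows, cols, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def conv1d(seq, filters, width):
    """Apply each filter to every window of `width` consecutive embeddings (no padding), then ReLU."""
    out = []
    for t in range(len(seq) - width + 1):
        window = [x for vec in seq[t:t + width] for x in vec]  # flatten the window
        out.append([relu(v) for v in matvec(filters, window)])
    return out

def max_pool(seq, size):
    """Non-overlapping max-pooling over time per feature map; a trailing partial window is dropped."""
    return [[max(col) for col in zip(*seq[t:t + size])]
            for t in range(0, len(seq) - size + 1, size)]

class LSTM:
    def __init__(self, rng, in_dim, hid):
        self.hid = hid
        # One weight matrix per gate (input, forget, output, candidate) acting on [x; h].
        # Biases are omitted for brevity (hypothetical simplification).
        self.W = {k: rand_matrix(rng, hid, in_dim + hid) for k in "ifoc"}

    def run(self, seq):
        h, c = [0.0] * self.hid, [0.0] * self.hid
        for x in seq:
            z = x + h  # list concatenation: [x; h]
            i = [sigmoid(v) for v in matvec(self.W["i"], z)]
            f = [sigmoid(v) for v in matvec(self.W["f"], z)]
            o = [sigmoid(v) for v in matvec(self.W["o"], z)]
            g = [math.tanh(v) for v in matvec(self.W["c"], z)]
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h  # final hidden state

class CNNBiLSTM:
    def __init__(self, emb_dim=8, n_filters=6, width=3, pool=2, hid=5, n_classes=3, seed=0):
        rng = random.Random(seed)
        self.width, self.pool = width, pool
        self.filters = rand_matrix(rng, n_filters, width * emb_dim)
        self.fwd = LSTM(rng, n_filters, hid)
        self.bwd = LSTM(rng, n_filters, hid)
        self.fc = rand_matrix(rng, n_classes, 2 * hid)

    def predict(self, emb_seq):
        feats = conv1d(emb_seq, self.filters, self.width)        # (T - width + 1) x n_filters
        pooled = max_pool(feats, self.pool)                       # about half as many time steps
        # Dropout only acts during training, so it is a no-op in this inference sketch.
        h = self.fwd.run(pooled) + self.bwd.run(pooled[::-1])     # concatenate both directions
        return softmax(matvec(self.fc, h))                        # fully connected + softmax

rng = random.Random(42)
sentence = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(12)]  # 12 tokens, 8-dim embeddings
probs = CNNBiLSTM().predict(sentence)
print([round(p, 3) for p in probs])
```

With 12 tokens and a filter width of 3, the convolution yields 10 time steps and pooling halves them to 5, so the Bi-LSTM reads a shorter sequence of local n-gram features rather than the raw embeddings.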

3.3.1. Convolutional Layer

3.3.2. Max-Pooling and Dropout Layer

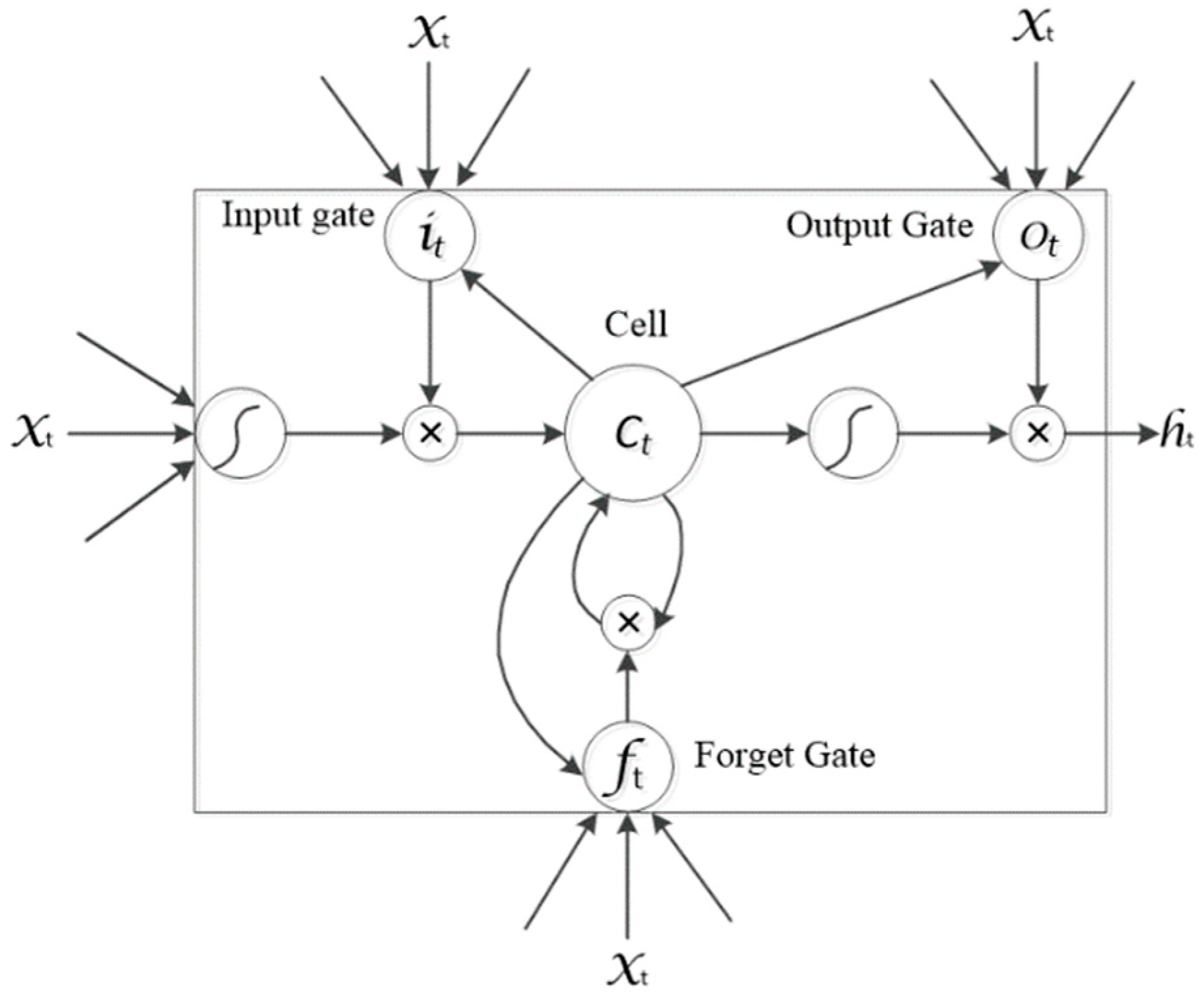

3.3.3. Bi-LSTM Layer

3.3.4. Fully Connected Layer

3.3.5. Softmax Layer

3.4. Weakly Supervised Mechanism

4. Experiments and Discussion

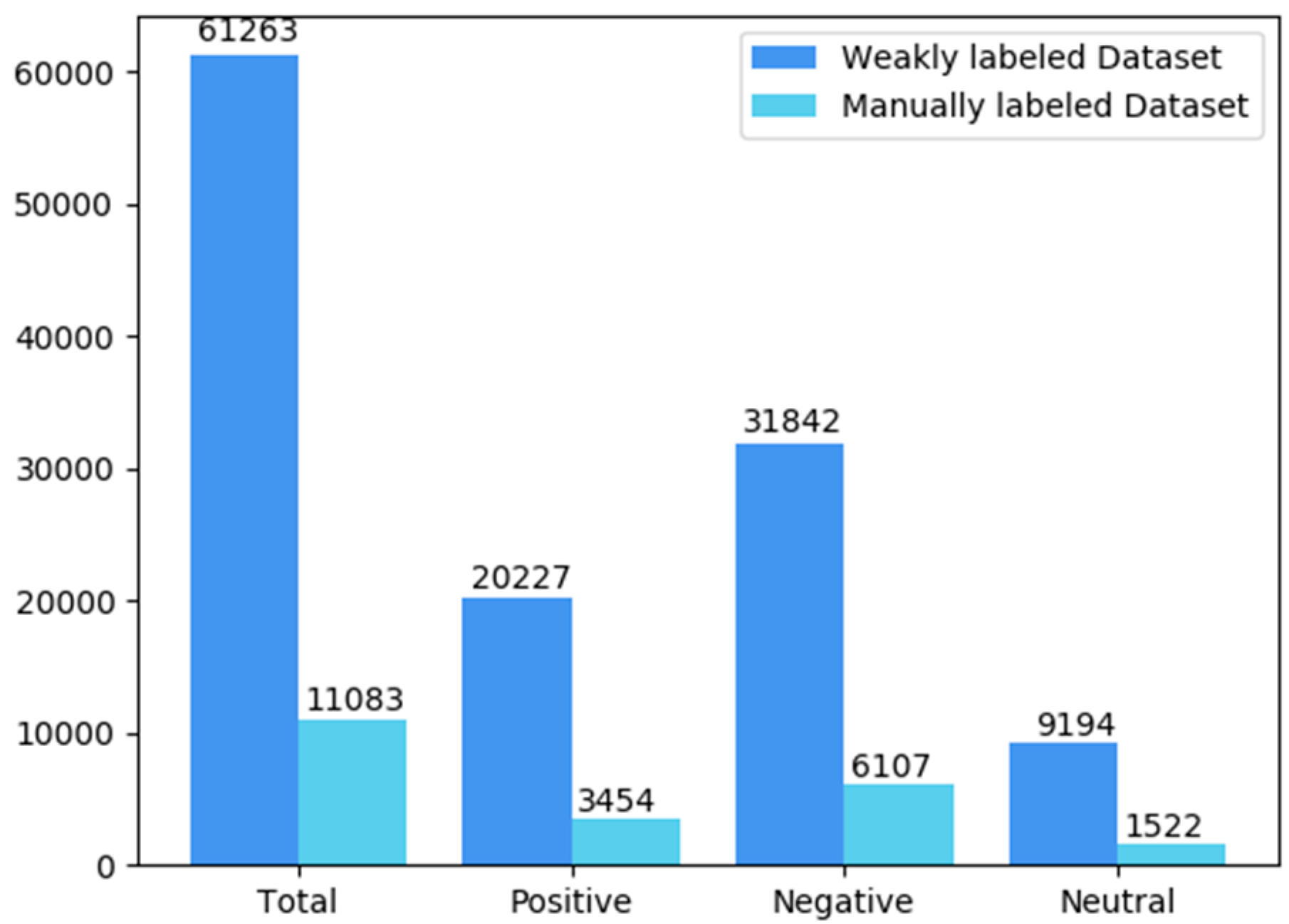

4.1. Dataset

4.2. Experimental Setup

4.3. Comparison Models

- SVM. A support vector machine using trigram features and the LIBLINEAR classifier;

- CNN-rand. We trained the CNN on the labeled dataset with randomly initialized network parameters;

- Weakly supervised mechanism CNN model (WSM-CNN). The CNN-based network was first trained on the weakly labeled data, and the labeled data were then used to fine-tune the network parameters initialized in this way;

- LSTM-rand. We trained the LSTM on the labeled dataset with randomly initialized network parameters;

- Weakly supervised mechanism LSTM model (WSM-LSTM). The LSTM-based network was first trained on the weakly labeled data, and the labeled data were then used to fine-tune the network parameters initialized in this way;

- CNN-LSTM-rand. We trained the combined CNN-LSTM model on the labeled dataset with randomly initialized network parameters.
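The difference between the "-rand" and "WSM" variants above lies only in where training starts: from random parameters, or from parameters first trained on weakly labeled data. The sketch below shows that two-stage schedule in miniature. To keep it short it swaps the neural network for a two-weight logistic regression trained by SGD; the synthetic data, the 20% label-noise rate, the learning rate and the epoch counts are all hypothetical. It illustrates the order of the training stages only and is not expected to reproduce the gains reported in the results tables.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, w, epochs, lr):
    """Plain SGD on the logistic loss, starting from the given weights `w`."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]  # gradient is (p - y) * x
    return w

def accuracy(data, w):
    hits = sum((sum(wi * xi for wi, xi in zip(w, x)) > 0) == bool(y) for x, y in data)
    return hits / len(data)

rng = random.Random(0)

def sample(n, noise):
    """Hypothetical data: x = [bias, feature]; the label is feature > 0, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        f = rng.uniform(-1, 1)
        y = int(f > 0)
        if rng.random() < noise:
            y = 1 - y
        data.append(([1.0, f], y))
    return data

weak = sample(500, noise=0.2)  # large, noisy set standing in for weakly labeled data
gold = sample(20, noise=0.0)   # small, clean set standing in for labeled data
test = sample(1000, noise=0.0)

init = [rng.uniform(-0.1, 0.1) for _ in range(2)]  # random initialization

# "-rand" variant: train on the labeled data only, starting from random parameters.
w_rand = train(gold, init, epochs=5, lr=0.1)
# "WSM" variant: train on the weak labels first, then fine-tune on the labeled data.
w_wsm = train(gold, train(weak, init, epochs=5, lr=0.1), epochs=5, lr=0.1)

print(f"rand: {accuracy(test, w_rand):.3f}  WSM: {accuracy(test, w_wsm):.3f}")
```

The fine-tuning stage reuses the same `train` function; only the starting weights differ, which is the whole distinction between the two families of comparison models.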

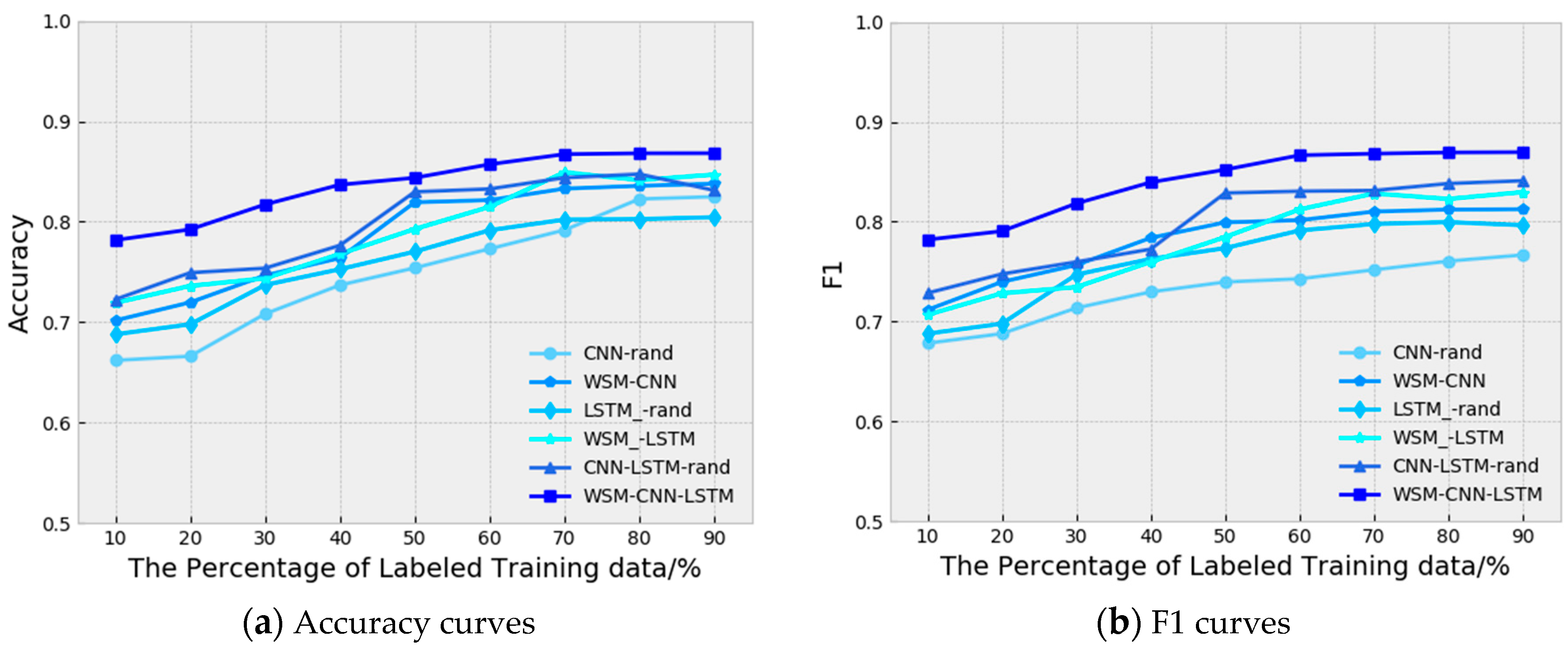

4.4. Experimental Results and Discussion

4.4.1. Weakly Supervised Model Performance

4.4.2. Rand Compared with the WSM

4.4.3. Macro-F1 Result of Our Model

4.4.4. Impact of the Labeled Training Data Size on Our Model

5. Conclusions and Future Work Discussion

Author Contributions

Funding

Conflicts of Interest


| Drug Rating | Satisfaction Level | General Meaning |
|---|---|---|
| 1 | Dissatisfied | I would not recommend taking this medicine |
| 2 | Not satisfied | This medicine did not work to my satisfaction |
| 3 | Somewhat Satisfied | This medicine helped somewhat |
| 4 | Satisfied | This medicine helped |
| 5 | Very Satisfied | This medicine cured me or helped me a great deal |
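A rating scale like the one above can serve as a cheap source of weak labels. The sketch below maps each rating to a class label; the grouping it uses (1 to 2 negative, 3 neutral, 4 to 5 positive) is a hypothetical choice made for illustration and is not a rule stated in this article, and the review texts are invented.

```python
# Satisfaction levels from the rating table above.
SATISFACTION = {
    1: "Dissatisfied",
    2: "Not satisfied",
    3: "Somewhat Satisfied",
    4: "Satisfied",
    5: "Very Satisfied",
}

def weak_label(rating: int) -> str:
    """Map a 1-5 drug rating to a weak class label.

    The grouping (1-2 negative, 3 neutral, 4-5 positive) is a hypothetical
    choice for illustration, not a rule taken from this article.
    """
    if rating not in SATISFACTION:
        raise ValueError(f"rating must be an integer from 1 to 5, got {rating!r}")
    if rating <= 2:
        return "negative"
    if rating == 3:
        return "neutral"
    return "positive"

# Hypothetical (review text, rating) pairs; the labeler looks only at the rating.
reviews = [
    ("Terrible headaches, stopped after a week", 1),
    ("It helped a bit but I felt dizzy", 3),
    ("Cleared my symptoms completely", 5),
]
for text, rating in reviews:
    print(f"{rating} ({SATISFACTION[rating]}) -> {weak_label(rating)}: {text}")
```

Because the label comes from the rating rather than from a human reading the text, some labels will be wrong (for example, a satisfied patient who still reports a side effect), which is why such data are treated as weak supervision rather than ground truth.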

| Method | Accuracy (%) | F1 (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|
| SVM | 80.69 | 75.93 | 81.56 | 71.02 |
| CNN-rand | 79.17 | 75.18 | 80.21 | 70.74 |
| WSM-CNN | 83.29 | 81.01 | 82.47 | 79.60 |
| LSTM-rand | 80.71 | 79.77 | 81.86 | 77.78 |
| WSM-LSTM | 84.92 | 82.82 | 83.02 | 82.62 |
| CNN-LSTM-rand | 83.78 | 83.12 | 85.30 | 81.05 |
| WSM-CNN-LSTM | 86.72 | 86.81 | 87.92 | 85.73 |
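F1 is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Recomputing it from the precision and recall columns of the table above reproduces every reported F1 value to two decimal places, which is consistent with the F1 column using this standard definition. The row values below are copied from the table; the helper name `f1` is illustrative.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (inputs and output in %)."""
    return 2 * precision * recall / (precision + recall)

# (precision, recall, reported F1) copied from the table above.
ROWS = {
    "SVM": (81.56, 71.02, 75.93),
    "CNN-rand": (80.21, 70.74, 75.18),
    "WSM-CNN": (82.47, 79.60, 81.01),
    "LSTM-rand": (81.86, 77.78, 79.77),
    "WSM-LSTM": (83.02, 82.62, 82.82),
    "CNN-LSTM-rand": (85.30, 81.05, 83.12),
    "WSM-CNN-LSTM": (87.92, 85.73, 86.81),
}

for name, (p, r, reported) in ROWS.items():
    print(f"{name:14s} computed F1 = {f1(p, r):.2f}  reported = {reported:.2f}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, the low recall of the SVM and CNN-rand baselines (about 71%) keeps their F1 near 75% even though their precision exceeds 80%.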

| Method | Accuracy (%) | F1 (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|
| SVM | 80.42 | 77.68 | 82.01 | 73.78 |
| CNN-rand | 78.36 | 74.76 | 79.76 | 70.97 |
| WSM-CNN | 82.94 | 80.41 | 81.91 | 78.19 |
| LSTM-rand | 80.32 | 79.63 | 81.28 | 77.29 |
| WSM-LSTM | 84.56 | 82.01 | 82.36 | 81.92 |
| CNN-LSTM-rand | 82.79 | 82.83 | 85.12 | 80.15 |
| WSM-CNN-LSTM | 85.67* | 85.57* | 86.88* | 84.16* |
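One way to read the comparison between the "-rand" and WSM models in the table above is as a percentage-point gain for each backbone. The short computation below does this for accuracy and F1 using values copied from the table; the dictionary and function names are illustrative.

```python
# (accuracy, F1) in % copied from the table above.
RAND = {"CNN": (78.36, 74.76), "LSTM": (80.32, 79.63), "CNN-LSTM": (82.79, 82.83)}
WSM = {"CNN": (82.94, 80.41), "LSTM": (84.56, 82.01), "CNN-LSTM": (85.67, 85.57)}

def gains(rand, wsm):
    """Percentage-point change from each -rand model to its WSM counterpart, per metric."""
    return {k: tuple(round(b - a, 2) for a, b in zip(rand[k], wsm[k])) for k in rand}

for backbone, (d_acc, d_f1) in gains(RAND, WSM).items():
    print(f"{backbone:9s} accuracy {d_acc:+.2f}  F1 {d_f1:+.2f}")
```

Weak supervision improves every backbone. The accuracy gain is largest for the CNN (+4.58 points) and smallest for the combined CNN-LSTM (+2.88 points), which already starts from the strongest randomly initialized baseline.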

| Negative | Neutral | Positive |
|---|---|---|
| 89.73 | 78.94 | 91.25 |
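Assuming the three values above are per-class F1 scores (%) for the negative, neutral and positive classes, the macro-F1 is their unweighted mean, so each class counts equally regardless of how many examples it contains:

$$
\text{Macro-F1} = \frac{F1_{\text{neg}} + F1_{\text{neu}} + F1_{\text{pos}}}{3} = \frac{89.73 + 78.94 + 91.25}{3} = \frac{259.92}{3} = 86.64
$$

Under this reading, the neutral class, with the lowest score, is what pulls the macro average below both the negative and positive scores.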

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, M.; Geng, G. Adverse Drug Event Detection Using a Weakly Supervised Convolutional Neural Network and Recurrent Neural Network Model. Information 2019, 10, 276. https://doi.org/10.3390/info10090276
