Abstract

The ocean connects all continents and is an important space for human activities. Ship detection with electro-optical images has shown great potential because a wide range of imaging spectral bands is available, and it therefore strongly supports human activities in the ocean. Selecting a suitable spectral band makes it possible to acquire usable images in complex marine environments, which is a prerequisite for ship detection. This paper provides an overview of ship detection methods with electro-optical images in marine environments. Ship detection methods with sea–sky backgrounds include traditional and deep learning methods. Traditional ship detection methods comprise the following steps: preprocessing, sea–sky line (SSL) detection, region of interest (ROI) extraction, and identification. Deep learning is promising for ship detection; however, it requires a large amount of labeled data to build a robust model, and it has not yet been sufficiently optimized for ship detection in marine environments.

1. Introduction

Human activities in the ocean are becoming more frequent and include maritime transportation, maritime trade, marine fishing, and military activities [1]. Ships are important carriers for human activities in the ocean, so the monitoring and management of maritime ships is critical.

With the rise of autonomous vehicles, autonomous ships have attracted more attention, especially cargo ships operating over both long and short distances and special ships participating in high-risk military operations [2]. Some ships are equipped with automatic identification systems (AISs) and broadcast their own position and other information through radio signals, which can be received by surrounding ships to avoid collisions. However, small marine craft (canoes, kayaks, sailboats, small fishing boats, etc.) are often not equipped with AIS [3], and the signals emitted by AIS are vulnerable to interference. Therefore, both ship management in the marine environment and the unmanned and autonomous navigation of ships require the ability to detect ships accurately.

Radar is widely used in ship detection, but it has some limitations. Radar is vulnerable to interference from objects around a port, such as metal facilities. In addition, it cannot detect small nonmetallic (wood or glass fiber) marine craft [4]. For synthetic aperture radar (SAR), speckle noise and motion blurring make feature extraction difficult [5,6,7,8]. Moreover, because of the SAR imaging mechanism, the images are difficult to comprehend and interpret [5].

Ship detection with electro-optical images has attracted the interest of researchers. The images are intuitive and easy for the human eye to understand, and the wide range of available spectral bands meets the imaging needs of various scenarios. Visible spectrum imaging has high resolution and can obtain color and texture information. Thermal infrared can image a scene at night and in low-light conditions. Short-wave infrared is less affected by atmospheric scattering and penetrates fog and haze well. With an appropriate choice of spectral band, clear and usable images can be obtained under various light and weather conditions, which benefits the accuracy and robustness of ship detection.

There have been some reviews on ship detection. For example, Kanjir [3] reviewed articles on ship detection using optical satellite images. Prasad [9] paid more attention to video-based object detection and tracking in the marine environment. Spraul [10] compared the performance of several deep learning methods in maritime ship detection. Fingas [11] discussed ship detection methods based on airborne platforms. These authors all mentioned the subject of ship detection. However, ship detection with electro-optical images in marine environments still lacks sufficient discussion. For example, discussions on applicable scenarios of different imaging spectra are lacking. The characteristics of a marine environment and a ship’s appearance make ship detection different from generic object detection. Therefore, ship detection workflows in marine environments are special. In addition to traditional ship detection methods, deep learning has been introduced into ship detection by researchers in recent years. In the open sea, there is often insufficient texture information, and it is difficult to select appropriate hand-crafted features. Deep learning can automatically learn robust features from data. However, current deep learning methods do not fully consider the characteristics of ship detection in marine environments, which limits their performance. To our knowledge, there is no article that fully discusses these issues.

This paper focuses on ship detection with electro-optical images in marine environments to meet the needs of ship management and flow monitoring in ports and coasts, as well as the unmanned and autonomous navigation of ships. In this paper, Section 2 introduces the applicable scenarios of different imaging spectra and the image characteristics of ship detection in marine environments. Section 3 presents the traditional ship detection methods and deep learning methods and discusses the advantages and limitations of different methods. Section 4 presents concluding remarks.

2. Electro-Optical Images in Ship Detection: Spectral Bands and Characteristics

Acquiring clear and effective images is a prerequisite for ship detection. Appropriate spectral bands yield better images in harsh and changeable marine environments. Although objects in an original image can be enhanced with image processing methods, doing so increases the computational burden, especially on embedded devices. The characteristics of electro-optical images, including the appearance characteristics of ships and the components of maritime scenarios, determine the ship detection workflow.

2.1. Image Spectral Bands in Ship Detection

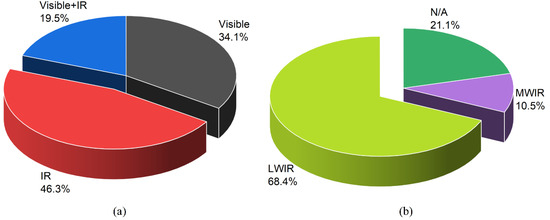

To achieve accurate ship detection in various maritime scenarios (night vision, fog, or haze), it is worth selecting a suitable imaging spectral band. The changeable weather and lighting conditions in marine environments make it difficult for imagers to obtain clear and effective images. This paper tallies the spectral bands commonly used in ship detection. Table 1 shows the types of electro-optical images commonly used in ship detection. Figure 1 shows the percentage of use of each type of optical image. The statistics show that infrared images are used the most, followed by visible images. Among the infrared image types, the most commonly used is long-wave infrared (LWIR).

Table 1.

Types of electro-optical images commonly used in ship detection.

Figure 1.

The use of images with different spectra in ship detection: (a) The share of the usage of image spectral bands in ship detection. Infrared images were the most commonly used, followed by visible-band images. (b) Use of infrared images in different bands; LWIR was used the most.

Different imaging spectral bands have unique advantages. LWIR and medium-wave infrared (MWIR) sensors can form images without any natural (sun or moon) or artificial light. At night, the temperature difference between a ship’s power cabin and the sea–sky background is beneficial for thermal infrared imaging. However, some small marine craft (canoes, etc.) reach thermal equilibrium with the seawater after long-term immersion. In these cases, LWIR is usually unable to distinguish the target. In daytime ship detection, LWIR imaging has no obvious advantages [51]. Visible-band camera technology is well established and widely used. In daylight or in clear weather, visible-band cameras can provide more information than LWIR and MWIR imaging. However, visible-band cameras are sensitive to sudden changes in light, and severe weather conditions reduce their effectiveness. Short-wave infrared (SWIR) is currently rarely used but has great potential. Both SWIR and visible-band cameras rely on reflected light for imaging, and their imaging mechanisms are similar. Therefore, SWIR can obtain more information, such as texture, than thermal infrared. SWIR also penetrates fog and haze much better than sensors operating in the visible spectral range, especially over long distances [53].

Limited by their spectral response or imaging mechanism, single-band sensors can hardly achieve high spatial resolution, good penetration (through smoke, fog, and rain), and high sensitivity at the same time. Combining sensors of different bands can meet the needs of all-day and all-weather ship detection in a marine environment and cope better with complex conditions. For example, the combination of thermal infrared and visible-band cameras can provide daytime and nighttime detection. A visible-band camera has a high resolution and can also compensate for the texture and color information missing from thermal infrared. SWIR penetrates fog and haze well and can effectively supplement LWIR and visible-band imaging. Currently, most researchers use images of different spectral bands separately. Multi-sensor data fusion can provide comprehensive information, exploit the advantages of each sensor, and obtain more accurate detection results. However, data fusion also increases the computational load. Existing fusion methods mainly include pixel-level fusion, feature-level fusion, and decision-level fusion [54]. Farahnakian [50] used three fusion methods to detect ships; their results show that feature-level fusion performs better than the other fusion architectures.
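As a rough illustration of the simplest of the three levels, pixel-level fusion of two frames can be sketched as a weighted average. The function name `fuse_pixel_level`, the weight `w_visible`, and the assumption that both frames are already co-registered and normalized to [0, 1] are choices made here for illustration and are not taken from [50] or [54].

```python
import numpy as np

def fuse_pixel_level(visible, infrared, w_visible=0.5):
    """Weighted average of two co-registered grayscale frames in [0, 1]."""
    visible = np.asarray(visible, dtype=float)
    infrared = np.asarray(infrared, dtype=float)
    if visible.shape != infrared.shape:
        # Pixel-level fusion only makes sense after spatial registration.
        raise ValueError("frames must be registered to the same shape")
    return w_visible * visible + (1.0 - w_visible) * infrared
```

Feature-level and decision-level fusion would instead combine intermediate feature maps or per-sensor detection results, respectively.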

2.2. Image Characteristics with a Sea–Sky Background

Ship detection in marine environments is usually performed with a sea–sky background, and the components of typical scenarios include sea, sky, and sea–sky regions. In sea–sky regions, the sea–sky line (SSL) is the boundary between the sea and sky regions. Ships usually appear near the SSL, so the SSL can be used as a pivotal clue for ship detection. In the following discussion on the workflow of ship detection, it can be seen that the SSL is the main reason for the difference between ship detection and generic object detection.

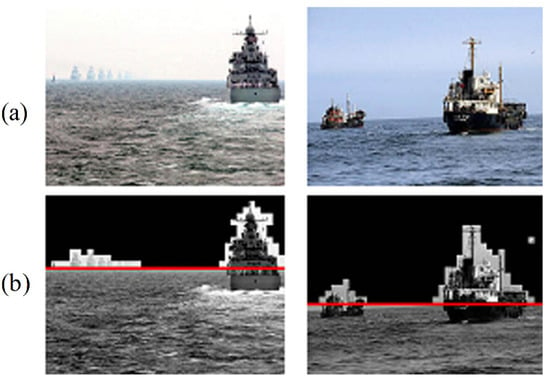

Figure 2 shows typical scenes encountered in ship detection in a marine environment.

Figure 2.

Typical scene depicting ship detection in a marine environment: (a) original images; (b) processed images including sea–sky line detection. Zhang et al. [55]. Copyright (2021), with permission from Elsevier.

Unlike when observing ships from satellite or airborne platforms, the complete contour of a ship cannot be obtained in a marine environment. A ship’s appearance changes with the detection distance and view angle and is easily affected by lighting and occlusion. In addition, wide intraclass variations in some types of ships make it difficult to classify ships [56]. These factors make it challenging to classify ships by type (such as passenger ships and cargo ships). Research on related computer vision problems, such as person re-identification [57] and vehicle re-identification [58,59,60], may provide some inspiration, because these tasks face similar problems such as the effect of different viewing angles on the appearance of a target. Currently, most researchers only distinguish between ships and non-ships in ship detection in marine environments.

The marine environment is complex and changeable. The motion of floating offshore platforms, buoys, and ships is affected by waves, currents, wind, and variations in water depth owing to the tide [61,62,63,64]. For example, in certain seas, such as Nigeria’s Bonga Field in offshore West Africa, near-surface currents are usually powerful and vary with time and location [63]. However, for ship detection in marine environments, most researchers pay more attention to the impact of changes in ship appearance on identification. For some types of ships in particular, wide intraclass variations bring challenges to ship detection [56].

3. Ship Detection Methods from Electro-Optical Images

Ship detection can be regarded as a subcategory of generic object detection. Due to the particularity of a marine environment and the characteristics of ships, ship detection cannot fully follow the paradigm of generic object detection. Currently, deep learning has achieved impressive performance in object detection, and more researchers have introduced deep learning into ship detection. Detectors based on deep learning can be directly used for ship detection, but it is worth adapting them to the characteristics of marine environments. For ease of understanding, this paper uses illustrations to show the ship detection workflow for both traditional methods and deep learning methods.

3.1. Traditional Ship Detection Methods

From the current literature, this paper summarizes the workflow of ship detection in marine environments. Traditional object detection uses sliding windows of different scales to search for candidate regions. In ship detection, locating the SSL can effectively reduce the search range of candidate areas and reduce interference. The SSL is the main reason for the difference between ship detection and generic object detection. In addition, in the region of interest (ROI) extraction and ship identification stages, many researchers use the SSL as an important clue.

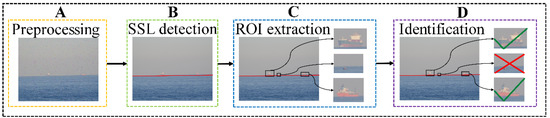

The workflow of ship detection is shown in Figure 3. Traditional ship detection consists of four steps: preprocessing, SSL detection, ROI extraction, and identification.

Figure 3.

Workflow of the traditional ship detection method in marine environments. (A) Preprocessing. (B) SSL detection. (C) ROI extraction. (D) Identification.

3.1.1. Preprocessing

The captured images usually contain noise and interfering objects such as sea clutter and clouds. The first ship detection step for some researchers is to remove or mitigate the environmental effects.

Tang [26] used Gaussian smoothing to filter noise. Li [43] used gray morphological reconstruction based on opening-and-closing operations to address background clutter. Lu [33] used a median filter to eliminate noise. Sun [32] used a wavelet transform to suppress the background. Bouma [52] estimated the background intensity and subtracted the background intensity from the image to eliminate the change in the background.
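Two of the preprocessing ideas above, median filtering and background subtraction, can be sketched in a few lines. This is only an illustration under stated assumptions: the 3×3 window, the function names, and the use of each row's median as the background estimate are choices made here and are not the exact procedures of Lu [33] or Bouma [52].

```python
import numpy as np

def median_filter3(img):
    """3x3 median filter with edge replication (suppresses impulse noise)."""
    img = np.asarray(img, dtype=float)
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    # Stack the nine shifted views of the image and take the per-pixel median.
    windows = [padded[r:r + h, c:c + w] for r in range(3) for c in range(3)]
    return np.median(np.stack(windows), axis=0)

def subtract_row_background(img):
    """Subtract each row's median as a crude background-intensity estimate."""
    img = np.asarray(img, dtype=float)
    return img - np.median(img, axis=1, keepdims=True)
```

A row-wise estimate is used because, with a roughly horizontal horizon, the background intensity tends to vary more from row to row than along a row.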

In preprocessing, it is difficult to completely eliminate the environmental effects. At present, most of the preprocessing methods used are only for significant noise interference and have trouble addressing strong sea clutter or some extreme situations.

3.1.2. SSL Detection

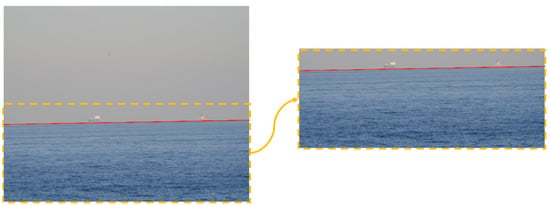

In maritime scenarios, the SSL is the boundary between the sky and the ocean, and it helps to locate the ocean area and reduce the search range. Figure 4 shows how the candidate search regions are reduced after the SSL is detected. In long-range ship detection, ships usually appear near the SSL. Some methods also rely on the SSL in subsequent steps such as ROI extraction and identification. Many researchers, such as Prasad [9] and Lipschutz [65], have summarized related methods for SSL detection. This paper summarizes the common methods of SSL extraction in ship detection.

Figure 4.

After the sea–sky line is detected, it can be used to effectively reduce the candidate search regions.

Transformation

Methods based on transformation first extract all edges in an image to generate an edge map. Then, the Hough transform (Wei [46], Özertem [30], and Chen [12]) or Radon transform (Tang [26]) is used to detect all lines in the image. Finally, the correct SSL is selected from the candidate lines according to some criteria. For example, Tang [26] comprehensively judged the candidate SSL locations through a gradient transform, the gradient direction, and the average gray difference between the sea and sky.

The SSL detection method based on transformation is mature and easy to use, but it requires image edge extraction. Therefore, when the contrast of an image is low or the sea–sky boundary is blurred, it is difficult to obtain a clear edge image. An edge detector only responds to local changes and is easily disturbed by noise. In this case, the detection of the SSL is difficult or results in misidentification. In addition, the brightness of the sea surface is nonuniform, and sea clutter can produce many edge features, resulting in misjudgments.
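The voting step of a Hough-transform approach can be sketched as follows, assuming that a binary edge map has already been computed and that the SSL is simply the line with the most votes. The function name and the one-pixel, one-degree accumulator resolution are assumptions made here; the cited works apply additional selection criteria.

```python
import numpy as np

def hough_dominant_line(edges, n_theta=180):
    """Return (rho, theta) of the strongest line in a binary edge map.

    Lines are parameterized as rho = x*cos(theta) + y*sin(theta).
    """
    edges = np.asarray(edges, dtype=bool)
    h, w = edges.shape
    thetas = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    diag = int(np.ceil(np.hypot(h, w)))
    # Accumulator rows cover rho in [-diag, diag].
    acc = np.zeros((2 * diag + 1, n_theta), dtype=int)
    ys, xs = np.nonzero(edges)
    for j, theta in enumerate(thetas):
        rhos = np.round(xs * np.cos(theta) + ys * np.sin(theta)).astype(int)
        # Each edge pixel votes for one rho bin at this angle.
        acc[:, j] = np.bincount(rhos + diag, minlength=2 * diag + 1)
    i, j = np.unravel_index(np.argmax(acc), acc.shape)
    return i - diag, thetas[j]
```

For a horizontal horizon at image row y0, the result is approximately rho = y0 and theta = π/2. Strong clutter edges also vote, which is why a single accumulator maximum can be misleading in the low-contrast cases described above.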

Region Features

Generally, the various features of the sea or sky region change slowly, but there will be drastic changes at the sea–sky boundary. Therefore, the location of the SSL can be determined according to the change in features in an image. This article summarizes the features that are used to describe regions as shown in Table 2. It is difficult to classify various regional description features and describe their advantages and disadvantages. In particular, many authors use combined features. For details on the proposed algorithms, please refer to the original works.

Table 2.

The features used to describe regions in sea–sky line detection.

Region-feature methods represent an image by a set of features and distinguish regions by the differences in these features. They consider global image features and are therefore less affected by local noise. Nonetheless, they need to determine the distribution of features in an image, which requires a large amount of computation.
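A deliberately simple instance of the region-feature idea uses a single feature, the mean intensity of each image row, and places the SSL where this feature changes most abruptly. The sketch assumes a horizontal SSL and a grayscale image; the function name is hypothetical, and the features listed in Table 2 are considerably richer than this.

```python
import numpy as np

def ssl_row_from_intensity(img):
    """Estimate a horizontal SSL as the row with the largest mean-intensity jump."""
    img = np.asarray(img, dtype=float)
    row_means = img.mean(axis=1)
    jumps = np.abs(np.diff(row_means))   # jumps[i]: change between rows i and i+1
    return int(np.argmax(jumps)) + 1     # index of the first row below the boundary
```

Because each row mean averages over the full image width, isolated noisy pixels have little influence on the result.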

Semantic Segmentation

Semantic segmentation can mark the pixels and make a more detailed division of regions (ocean, sky, and mixed region) to obtain a more accurate location of the SSL. For example, Yang [70] used the Gaussian mixture model (GMM) and Jeong [71] used a neural network to semantically segment images. A segmented image can be used to determine the sky and ocean area and locate the SSL.

This method is usually complex or needs labeled training samples. Due to the large amount of computation, semantic segmentation may not meet the real-time requirement on embedded devices with limited computing resources.

Summary of SSL Detection Methods

Table 3 shows the advantages and limitations of each SSL detection method.

Table 3.

Summary of the sea–sky line detection methods.

3.1.3. ROI Extraction

After the SSL is located, the next step is to find the areas containing candidate ships, that is, the ROIs. Some researchers use SSL information, while others use common threshold methods. For low-contrast images, such as thermal infrared images, some researchers use saliency detection to extract ROIs. Frequency domain methods are also commonly used.

SSL-Based

The SSL is an effective clue for ROI extraction. Reasonable use of the SSL can effectively reduce the scope of a search, especially for long-range detection, where ships usually appear near the SSL.

Researchers search for candidate ROIs near the SSL and usually combine other information to comprehensively judge the correct ROI. For example, Tang [26] used gradient and shape information, Lu [33] used the distance rule, and Chen [12] used the mutation of gray values. Some researchers also use a fixed-height search range near the SSL (Fefilatyev [25]) or cut the image directly near the SSL to extract ROIs (Shan [18]).

However, the location error of the SSL will reduce the accuracy of ROI extraction. Therefore, the premise of using this method is to obtain the accurate position of the SSL.
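The idea of searching a fixed-height band around the SSL for abrupt gray-value changes can be sketched as below. This is a loose illustration rather than the algorithm of any cited work: it assumes a horizontal SSL at a known row, and the function name, the band half-height, and the median-absolute-deviation outlier rule with factor `k` are all assumptions.

```python
import numpy as np

def candidate_columns_near_ssl(img, ssl_row, half_height=5, k=3.0):
    """Flag columns whose mean intensity in a band around the SSL is an outlier."""
    img = np.asarray(img, dtype=float)
    top = max(0, ssl_row - half_height)
    bottom = min(img.shape[0], ssl_row + half_height + 1)
    profile = img[top:bottom].mean(axis=0)             # one value per column
    median = np.median(profile)
    mad = np.median(np.abs(profile - median)) + 1e-9   # robust spread estimate
    return np.nonzero(np.abs(profile - median) > k * mad)[0]
```

Runs of adjacent flagged columns give the horizontal extent of candidate ROIs. If `ssl_row` is wrong by more than `half_height`, the band misses the ships entirely, which is the sensitivity to SSL location error noted above.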

Threshold-Based

There is usually an obvious intensity difference between ships and the background. Ships and the background can be distinguished by a threshold. Extracting ROIs based on thresholds is a traditional method.

Researchers use different methods to determine the appropriate threshold. Wang [72] analyzed the complexity of an image. Singh [38] used linguistic quantifiers to determine the appropriate threshold. Bouma [52] estimated the intensity of the background and detected the target using hysteresis thresholding.

When the contrast between a ship and the background is low (especially for thermal infrared images), it is difficult to determine an appropriate threshold. In addition, sea clutter can also affect threshold-based methods.
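Hysteresis thresholding, mentioned above, uses two thresholds: pixels above the high threshold seed a detection, and pixels above the low threshold are kept only if they are connected to a seed. A minimal sketch follows; the 8-connectivity and the function name are assumptions, and the background estimation that precedes this step in [52] is omitted.

```python
import numpy as np
from collections import deque

def hysteresis_threshold(img, low, high):
    """Keep pixels >= low that are 8-connected to at least one pixel >= high."""
    img = np.asarray(img, dtype=float)
    weak = img >= low
    mask = img >= high                      # strong pixels seed the region growing
    queue = deque(zip(*np.nonzero(mask)))
    h, w = img.shape
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                rr, cc = r + dr, c + dc
                inside = 0 <= rr < h and 0 <= cc < w
                if inside and weak[rr, cc] and not mask[rr, cc]:
                    mask[rr, cc] = True
                    queue.append((rr, cc))
    return mask
```

Compared with a single threshold, this keeps the dimmer parts of a ship that touch a bright core while rejecting isolated weak responses such as small clutter peaks.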

Saliency Detection

Sometimes the contrast of an image is low and the intensity distribution is uneven. To detect ships effectively in such images, visual saliency detection can be used to find salient regions according to global information instead of relying on local information, which suppresses interference from local noise to a certain extent. The data-driven, bottom-up visual saliency detection method is commonly used. It judges the difference between the target region and its surrounding pixels by color, brightness, edges, and other features.

Researchers have used different strategies to detect salient regions. Li [43] used intensity and contrast features. Liu [27] proposed an improved light nonlocal depth feature (NLDF) for low-contrast infrared images. Mumtaz [37] used graph-based visual saliency (GBVS). Lin [20] fused a variety of visual features, such as gradient texture, brightness, and color features, to obtain a salient image.

A large area of strong sea clutter can cause salient regions to be misidentified. Therefore, the suppression of sea clutter is a key issue.

Frequency Domain

There are usually obvious differences between the sea surface and a ship in the frequency domain. The background and target can be separated by setting an appropriate threshold.

The commonly used frequency domain methods are the Fourier transform and wavelet transform methods. Zhou [41] combined the fractional Fourier transform (FRFT) and high-order statistical filtering. The target and sea clutter were separated by a high-order statistical curve (HOSC). Sun [32] used a wavelet transform.

When the sea clutter is strong, it is difficult to separate the background and a ship simply by the features of the frequency spectrum; however, when these methods are combined with morphological analysis, they can usually achieve better results. For example, Zhou [41] combined shape features to obtain more accurate results.
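The basic frequency-domain separation can be illustrated with an ordinary 2-D Fourier transform: the slowly varying background is concentrated at low spatial frequencies, so removing those bins leaves compact targets. This is a generic high-pass sketch, not the FRFT and HOSC method of [41] or the wavelet method of [32]; the function name and the `cutoff` radius are assumptions.

```python
import numpy as np

def fft_highpass(img, cutoff=2):
    """Zero out spatial frequencies within `cutoff` bins of DC, then invert."""
    img = np.asarray(img, dtype=float)
    spectrum = np.fft.fftshift(np.fft.fft2(img))   # DC moves to (h // 2, w // 2)
    h, w = img.shape
    yy, xx = np.ogrid[:h, :w]
    dist = np.hypot(yy - h // 2, xx - w // 2)
    spectrum[dist <= cutoff] = 0.0
    return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum)))
```

The filtered image would then be thresholded to obtain candidate regions. Strong sea clutter also has high-frequency content, which is why this step alone is insufficient in rough seas.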

Summary of the ROI Extraction Methods

Table 4 summarizes the advantages and disadvantages of various ROI extraction methods.

Table 4.

Summary of the ROI extraction methods.

3.1.4. Identification

The extracted ROIs may contain false alarms such as sea clutter and islands. This step removes false alarms and identifies the correct candidate ships. There are two kinds of recognition results: ship and non-ship. Few authors mention the classification of ship types. This article is more concerned with identifying the correct ships and non-ships.

Prior Knowledge

Ships have characteristic sizes, shapes, lengths, and widths, so some researchers have used prior knowledge to identify ships.

Tang [26] used the aspect ratio, the contrast ratio, the duty ratio, and other features for ship recognition. Özertem [30] removed false alarms according to prior knowledge of a ship’s size and the size of the target’s edge on the SSL. Lu [33] found that a ship is usually near the SSL and has the brightest grayscale in an infrared image, so the distance rule and gray rule were combined to determine whether a target was a ship.

However, the robustness of ship identification using prior knowledge is weak, and it is easily disturbed by waves, clouds, and islands in the ocean, resulting in false alarms.
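A rule-based check of this kind can be sketched as a simple predicate on a candidate bounding box. All thresholds below (`min_area`, `aspect_range`, `max_ssl_dist`) are illustrative placeholders rather than values from the cited works, and the function name is hypothetical.

```python
def passes_ship_priors(box, ssl_row, min_area=20,
                       aspect_range=(1.0, 8.0), max_ssl_dist=30):
    """Check a candidate box (x1, y1, x2, y2), in pixels, against simple priors."""
    x1, y1, x2, y2 = box
    width, height = x2 - x1, y2 - y1
    if width <= 0 or height <= 0:
        return False
    aspect = width / height
    # Vertical distance from the SSL to the box; zero when the box straddles it.
    ssl_dist = max(y1 - ssl_row, ssl_row - y2, 0)
    return (width * height >= min_area
            and aspect_range[0] <= aspect <= aspect_range[1]
            and ssl_dist <= max_ssl_dist)
```

Fixed thresholds like these are easy to interpret, but an island or a wave crest of similar size and position passes the same test, which reflects the weak robustness noted above.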

Classifier

Some researchers have used feature vectors to describe ships and used samples to train classifiers to obtain more robust results.

Xu [40] proposed a rotation-invariant descriptor, a circle histogram of oriented gradient (C-HOG), to describe ships. This descriptor can identify infrared ships at different rotation angles. A support vector machine (SVM) was then used as the classifier. Lin [29] used three types of features (size, shape, and texture) to construct a 10-dimensional feature vector describing a ship and trained an SVM offline to remove false alarms effectively.

This approach requires appropriate hand-crafted features to be selected, and the performance of the classifier depends on the training samples.
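The classifier-based step can be illustrated with a linear soft-margin SVM trained by batch subgradient descent on the hinge loss. This is only a sketch: the function names `train_linear_svm` and `predict` and the hyperparameters `lam`, `epochs`, and `lr` are assumptions made here, and the text above does not state which kernel or solver [40] or [29] used.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, epochs=200, lr=0.1):
    """Fit w, b by batch subgradient descent on the regularized hinge loss.

    X: (n, d) feature vectors; y: labels in {-1, +1} (non-ship / ship).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1.0               # samples that violate the margin
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / len(y)
        grad_b = -y[active].sum() / len(y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(X, w, b):
    """Return +1 (ship) or -1 (non-ship) for each feature vector."""
    return np.where(np.asarray(X, dtype=float) @ w + b >= 0.0, 1, -1)
```

In practice the rows of `X` would be hand-crafted descriptors such as the size, shape, and texture features mentioned above, and the labels would come from annotated ROIs.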

Summary of Ship Identification

Table 5 summarizes the various ship identification methods.

Table 5.

Summary of the various ship identification methods.

3.2. Ship Detection Based on Deep Learning

Deep learning has great potential in various fields, such as biology and physics, and it is not limited to computer vision. It breaks through some of the limitations of traditional methods and has achieved remarkable results.

Currently, in object detection, most of the state-of-the-art methods use deep learning networks as the backbone and detection networks to extract features from images and perform classification and location tasks [73]. At present, ship detection based on deep learning mostly borrows methods from object detection.

Many open-source deep learning frameworks provide basic deep learning components and rich application programming interfaces (APIs), which make it convenient for researchers to apply deep learning methods to ship detection. Popular open-source deep learning frameworks are shown in Table 6.

Table 6.

Summary of deep learning frameworks.

3.2.1. One-Stage and Two-Stage Detectors

Traditional object detection methods using hand-crafted features have encountered a development bottleneck. Convolutional neural networks (CNNs) can extract high-level semantic information from images and obtain more robust features. In 2012, AlexNet [80] achieved impressive results in the ImageNet competition. Since then, some researchers have tried to introduce CNNs into object detection. In 2014, Girshick et al. proposed R-CNN [81] and used CNN for object detection for the first time. At present, object detection based on deep learning has become mainstream.

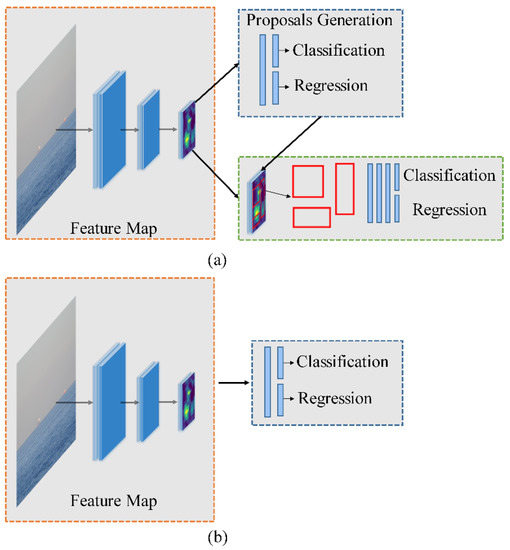

Object detection based on deep learning can be divided into two categories: (a) two-stage detectors, which mainly include R-CNN [81], Fast R-CNN [82], and Faster R-CNN [83]; (b) one-stage detectors, which mainly include YOLO (YOLO v2 [84] and YOLO v3 [85]) and SSD (SSD [86] and DSSD [87]).

The two-stage detectors have a proposal generation stage, and the generated proposals are sent to the next stage for object classification and location regression. A one-stage detector directly predicts the category of objects on the extracted feature map and regresses the positions of the objects. Figure 5 shows two-stage and one-stage detectors. Two-stage detectors usually have better detection performance, while one-stage detectors have faster inference speeds.

Figure 5.

(a) Two-stage detector, which is composed of a backbone network, region proposal network, and detection network. (b) One-stage detector that predicts the bounding box directly from the input image.

3.2.2. Deep Learning in Ship Detection

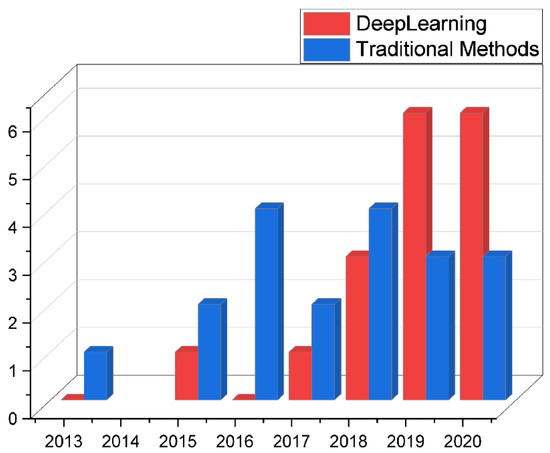

In view of the excellent performance of CNNs in the field of object detection, more researchers have used deep learning for ship detection in recent years. Figure 6 shows the development trend of traditional methods and deep learning methods in ship detection.

Figure 6.

Development trend of traditional methods and deep learning methods in ship detection.

One-Stage and Two-Stage Detectors in Ship Detection

Table 7 lists the CNN models commonly used in ship detection.

Table 7.

Application of deep learning models in ship detection.

Many researchers have used one-stage detectors because detection speed must be considered in ship detection. For example, anticollision warning places high demands on the real-time performance of ship detection. One-stage models usually have faster inference speeds and can meet real-time requirements. In addition, many shipborne platforms need to limit the volume and power consumption of computing equipment. Shipborne platforms usually use embedded devices for ship detection, and one-stage models, which require less computation and power, are suitable for deployment on such devices.

Two-stage detectors usually have better detection accuracy but require more computation and consume more power. Shore-based static platforms usually monitor ships in a port, where ships move slowly and there is no need to strictly limit the volume and power consumption of the computing device. In these cases, a two-stage model can be deployed on a desktop computer.

As the observation distance changes, ships appear at different scales in an image, and the sizes of ships are also diverse. This scale variation challenges current detection networks based on predesigned anchors [24]. In addition, in the long-distance detection of small marine craft, the ship usually occupies only a few pixels in the image, which is unfavorable for feature extraction by a CNN. One-stage networks in particular may miss small marine craft in an image.

Currently, researchers mainly start with two factors to improve the performance of detection networks in ship detection: multiscale features and anchor settings.

Researchers use multiscale features or high-resolution feature maps to improve the ability to detect ships of different sizes. Generally, in a neural network, shallow features contain more structural and geometric information of an object [89], which is conducive to the regression of the target. High-level features represent more semantic information, which is conducive to the classification of objects [89]. Therefore, combining the features of different layers can provide complementary information. To enhance the detection of small marine craft, Chen [15] extracted proposals of different scales in a multilayer convolution feature map. In the detection network, they used high-resolution convolution feature maps to extract targets. Hu [28] used a scale transform module to establish a feature pyramid network (FPN) to cope with the changes in ship appearance caused by different imaging distances. The module used context information to combine high-level features and low-level features. Shan [24] used ResNet-50 as a backbone network and added an FPN structure to address scale variation, especially for small marine craft. They used the feature maps output by three convolutional layers as the inputs of three region proposal networks (RPNs), which improved the discriminative ability at different scales.
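The way an FPN-style top-down pathway combines high-level and low-level features can be sketched with plain arrays. The sketch assumes that all levels already have the same number of channels and that each level has half the spatial resolution of the previous one; a real FPN uses learned 1×1 lateral and 3×3 output convolutions, which are omitted here, and the function names are hypothetical.

```python
import numpy as np

def upsample2x(feat):
    """Nearest-neighbor 2x upsampling of a (C, H, W) feature map."""
    return feat.repeat(2, axis=1).repeat(2, axis=2)

def top_down_merge(features):
    """Merge feature maps ordered fine -> coarse into pyramid levels.

    Each coarser (more semantic) level is upsampled and added to the next
    finer (more geometric) level, starting from the coarsest map.
    """
    merged = [np.asarray(features[-1], dtype=float)]
    for lateral in reversed(features[:-1]):
        merged.append(np.asarray(lateral, dtype=float) + upsample2x(merged[-1]))
    return merged[::-1]   # back to fine -> coarse order
```

The finest output level keeps the resolution needed for small ships while also carrying semantic information propagated from the deeper layers.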

Anchors are a set of initial, fixed-size candidate boxes [28]. The shape and size of an anchor affect the accuracy and speed of object recognition and regression. The diversity of ship shapes and sizes, as well as changes in the observation distance, makes the selection of anchors challenging. Currently, the commonly used method is to analyze the ground truth bounding boxes in the training set to select suitable shapes and sizes for the anchors. For example, Hu [28] and Shan [24] used k-means clustering to cluster all ground truth bounding boxes in the training data set, and the cluster centers were used as the initial anchors. However, the effect of this method depends on whether the training data set covers a sufficient range of ship sizes and shapes.
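Anchor selection by k-means can be sketched as clustering the (width, height) pairs of the ground truth boxes. The sketch below uses 1 − IoU as the distance, as popularized by YOLO v2 [84]; whether [28] and [24] used this distance or the Euclidean distance is not stated above, so this choice, the function names, and the random initialization are assumptions.

```python
import numpy as np

def wh_iou(boxes, centers):
    """IoU between (n, 2) and (k, 2) width/height pairs aligned at one corner."""
    inter = (np.minimum(boxes[:, None, 0], centers[None, :, 0])
             * np.minimum(boxes[:, None, 1], centers[None, :, 1]))
    area_b = boxes[:, 0] * boxes[:, 1]
    area_c = centers[:, 0] * centers[:, 1]
    return inter / (area_b[:, None] + area_c[None, :] - inter)

def kmeans_anchors(boxes, k, iters=50, seed=0):
    """Cluster (width, height) pairs; return k anchors sorted by area."""
    boxes = np.asarray(boxes, dtype=float)
    rng = np.random.default_rng(seed)
    centers = boxes[rng.choice(len(boxes), size=k, replace=False)]
    for _ in range(iters):
        # Smallest 1 - IoU distance is the same as largest IoU.
        assign = np.argmax(wh_iou(boxes, centers), axis=1)
        new_centers = np.array([
            boxes[assign == j].mean(axis=0) if np.any(assign == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return centers[np.argsort(centers[:, 0] * centers[:, 1])]
```

If the training set contains only large, nearby ships, no cluster center will represent distant small craft, which is the data dependence noted above.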

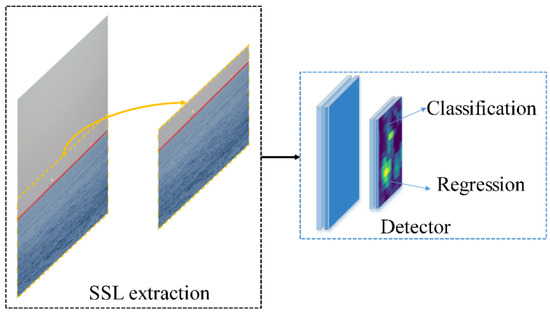

Discussion about the SSL

Current deep learning-based ship detection methods have not considered the use of the SSL. As mentioned in Section 3.1.2, the SSL is important in ship detection because it can effectively reduce the search area and remove some interference areas. To the best of our knowledge, the SSL has not yet been applied in ship detection based on deep learning.

Some researchers screen potential candidate regions before sending an image to the detection network. For example, Marie [14] analyzed video streams to determine potential key points of objects or backgrounds. Using the SSL may be a more effective way to reduce the candidate regions, and the computational cost of lightweight SSL extraction methods is acceptable. In two-stage detectors, proposal generation is time consuming, so detecting the SSL first can improve their efficiency while retaining their advantage in detection accuracy. Figure 7 shows this process.

Figure 7. Before sending an image to the detector, SSL detection can reduce the search for candidate regions.
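The idea of restricting the detector to the neighborhood of the SSL can be sketched as below. The example is only an assumption-laden illustration of the proposal, not an existing method: it assumes the SSL has already been found and is horizontal (a tilted SSL would need a rotated or per-column band), the band margins `above` and `below` are arbitrary values, and `detector` stands for any hypothetical callable that returns boxes as (x1, y1, x2, y2) in the coordinates of the image it receives.

```python
def ssl_band(image_height, ssl_row, above=40, below=80):
    """Return (top, bottom) rows of a search band around a horizontal SSL."""
    top = max(0, ssl_row - above)
    bottom = min(image_height, ssl_row + below)
    return top, bottom


def detect_in_band(image, ssl_row, detector, above=40, below=80):
    """Run `detector` only on the band around the SSL and map boxes back."""
    top, bottom = ssl_band(len(image), ssl_row, above, below)
    crop = image[top:bottom]          # keep full width, fewer rows
    boxes = detector(crop)            # boxes in crop coordinates
    # Shift the y coordinates back into the full-image frame.
    return [(x1, y1 + top, x2, y2 + top) for x1, y1, x2, y2 in boxes]


def dummy_detector(crop):
    """Stand-in for a real detector; always reports one fixed box."""
    return [(100, 30, 160, 50)]


# A blank 480 x 640 image stored as a list of rows, with the SSL at row 200.
image = [[0] * 640 for _ in range(480)]
detections = detect_in_band(image, ssl_row=200, detector=dummy_detector)
```

Here the detector sees 120 of the 480 rows, and the reported box is returned as (100, 190, 160, 210) in full-image coordinates.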

3.3. Comparison of Traditional Methods and Deep Learning

Traditional ship detection methods do not need a large number of training samples, consume relatively few computing resources, and are highly interpretable. However, they adapt poorly to complex scenes and depend on the selection of appropriate hand-crafted features. This selection is especially difficult for thermal infrared images, which lack detailed object information and retain only the contour and spatial information of an object.

Deep learning models avoid the selection of hand-crafted features; they automatically learn more robust image features through backbone networks and then locate and recognize objects. Deep learning methods adapt well to complex scenes, which matters in marine environments because these often undergo drastic environmental changes. However, to obtain good prediction results, a deep learning model requires a large number of training samples; otherwise, it will overfit. At present, there are few public ship data sets, and the existing ones are still much smaller in scale than generic object data sets.

Table 8 summarizes the advantages and disadvantages of traditional and deep learning methods.

Table 8. Advantages and disadvantages of traditional methods and deep learning methods in ship detection.

3.4. Validation

Compared with generic object detection, ship detection lacks public data sets, especially for multispectral data. Many researchers use private data sets for research on ship detection, which hinders comparison among different methods. Therefore, this paper only describes methods for evaluating the performance of ship detection.

The intersection over union (IoU) is used to measure the localization accuracy in ship detection. A detection is checked by testing whether the IoU between the predicted bounding box and the ground truth is greater than a predefined threshold. IoU is defined as in Equation (1):

$$\mathrm{IoU} = \frac{\mathrm{Area}(bbox \cap gt)}{\mathrm{Area}(bbox \cup gt)} \tag{1}$$

where bbox is the predicted bounding box, gt is the ground truth, and Area denotes the number of pixels. If the IoU is greater than the predefined threshold, the predicted box is considered to correctly detect a ship; this is a true positive (TP). A false detection is a false positive (FP), and a missed detection is a false negative (FN). After obtaining the total numbers of TPs, FPs, and FNs, the precision and recall are computed as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$
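The evaluation described above can be sketched in a few lines of Python. Several details are assumptions made for the example rather than prescriptions of this paper: boxes are axis-aligned (x1, y1, x2, y2) tuples with integer pixel coordinates treated as half-open ranges, the threshold defaults to 0.5, and predictions are matched to ground truths greedily in input order, with each ground truth used at most once. The function names `iou` and `evaluate` are hypothetical.

```python
def iou(bbox, gt):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(bbox[0], gt[0]), max(bbox[1], gt[1])
    ix2, iy2 = min(bbox[2], gt[2]), min(bbox[3], gt[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_bbox = (bbox[2] - bbox[0]) * (bbox[3] - bbox[1])
    area_gt = (gt[2] - gt[0]) * (gt[3] - gt[1])
    union = area_bbox + area_gt - inter
    return inter / union if union > 0 else 0.0


def evaluate(predictions, ground_truths, threshold=0.5):
    """Return (precision, recall, tp, fp, fn) for one image."""
    matched = set()  # indices of ground truths already claimed by a prediction
    tp = fp = 0
    for pred in predictions:
        best_iou, best_j = 0.0, None
        for j, gt in enumerate(ground_truths):
            if j in matched:
                continue
            score = iou(pred, gt)
            if score > best_iou:
                best_iou, best_j = score, j
        if best_j is not None and best_iou > threshold:
            matched.add(best_j)
            tp += 1      # correct detection
        else:
            fp += 1      # false detection
    fn = len(ground_truths) - len(matched)  # missed ships
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, tp, fp, fn


# Two ships in the ground truth; one is found, one prediction is spurious.
ground_truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [(1, 1, 10, 10), (50, 50, 60, 60)]
result = evaluate(predictions, ground_truths)
```

In this toy case the first prediction overlaps the first ship with an IoU of 0.81, so the counts are one TP, one FP, and one FN, giving a precision and a recall of 0.5 each.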

4. Conclusions

This paper reviewed ship detection methods with electro-optical images in marine environments, covering two main parts: electro-optical images and ship detection methods. Suitable imaging spectral bands can suppress interference from the environment to capture clear and effective images. At present, visible and LWIR images are the most commonly used by researchers. Although SWIR images are rarely used, they are promising for ship detection in marine environments. The combination of multiple spectral bands can better cope with complex marine environments. Among the traditional ship detection methods, the use of the SSL distinguishes ship detection from generic object detection, because the location of the SSL can effectively reduce the search for candidate regions. Deep learning avoids the selection of hand-crafted features, uses artificial neural networks to classify and regress objects, and achieves impressive results in ship detection. However, current deep learning methods do not use SSL information, which is worth considering in future research. In addition, deep learning relies on labeled training samples, which limits its application in ship detection because few public ship data sets exist at present.

Author Contributions

Conceptualization, S.F., Z.L. and X.Z.; methodology, Y.L. (Yongfu Li); formal analysis, L.W.; investigation, B.L., Y.D. and Y.L. (Yunxia Liu); resources, C.F. and J.L.; writing—original draft preparation, L.W.; writing—review and editing, Y.L. (Yongfu Li) and S.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The permission granted by Yang Zhang, Qing-Zhong Li and Feng-Ni Zang of Ocean University of China, and by Elsevier, to use the adapted image in Figure 2 is gratefully acknowledged.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AIS | Automatic Identification System |
| API | Application Programming Interface |
| C-HOG | Circle Histogram of Oriented Gradient |
| CNN | Convolutional Neural Network |
| FN | False Negative |
| FP | False Positive |
| FPN | Feature Pyramid Network |
| FRFT | Fractional Fourier Transform |
| GBVS | Graph-Based Visual Saliency |
| GMM | Gaussian Mixture Model |
| HOSC | High-Order Statistical Curve |
| IoU | Intersection over Union |
| IR | Infrared |
| LWIR | Long-Wave Infrared |
| MWIR | Medium-Wave Infrared |
| NLDF | Nonlocal Depth Feature |
| ROI | Region of Interest |
| RPN | Region Proposal Network |
| SAR | Synthetic Aperture Radar |
| SDM | Standard Deviation Map |
| SSL | Sea–Sky Line |
| SVM | Support Vector Machine |
| SWIR | Short-Wave Infrared |
| TP | True Positive |

References

1. Zhu, C.; Zhou, H.; Wang, R.; Guo, J. A novel hierarchical method of ship detection from spaceborne optical image based on shape and texture features. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3446–3456.
2. Kretschmann, L.; Burmeister, H.-C.; Jahn, C. Analyzing the economic benefit of unmanned autonomous ships: An exploratory cost-comparison between an autonomous and a conventional bulk carrier. Res. Transp. Bus. Manag. 2017, 25, 76–86.
3. Kanjir, U.; Greidanus, H.; Oštir, K. Vessel detection and classification from spaceborne optical images: A literature survey. Remote Sens. Environ. 2018, 207, 1–26.
4. Bao, X.; Zinger, S.; Wijnhoven, R. Ship detection in port surveillance based on context and motion saliency analysis. In Proceedings of the Video Surveillance and Transportation Imaging Applications, Burlingame, CA, USA, 3–7 February 2013; p. 86630D.
5. Chen, S.; Wang, H.; Xu, F.; Jin, Y.-Q. Target classification using the deep convolutional networks for SAR images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4806–4817.
6. Lin, Z.; Ji, K.; Leng, X.; Kuang, G. Squeeze and excitation rank faster R-CNN for ship detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2018, 16, 751–755.
7. Kang, M.; Ji, K.; Leng, X.; Lin, Z. Contextual region-based convolutional neural network with multilayer fusion for SAR ship detection. Remote Sens. 2017, 9, 860.
8. Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. Automatic ship detection based on RetinaNet using multi-resolution Gaofen-3 imagery. Remote Sens. 2019, 11, 531.
9. Prasad, D.K.; Rajan, D.; Rachmawati, L.; Rajabally, E.; Quek, C. Video processing from electro-optical sensors for object detection and tracking in a maritime environment: A survey. IEEE Trans. Intell. Transp. Syst. 2017, 18, 1993–2016.
10. Spraul, R.; Sommer, L.; Schumann, A. A comprehensive analysis of modern object detection methods for maritime vessel detection. In Proceedings of the Artificial Intelligence and Machine Learning in Defense Applications II, Online Only, 21–25 September 2020; p. 1154305.
11. Fingas, M.; Brown, C. Review of ship detection from airborne platforms. Can. J. Remote Sens. 2001, 27, 379–385.
12. Chen, Z.; Li, B.; Tian, L.F.; Chao, D. Automatic detection and tracking of ship based on mean shift in corrected video sequences. In Proceedings of the 2017 2nd International Conference on Image, Vision and Computing (ICIVC), Chengdu, China, 2–4 June 2017; pp. 449–453.
13. Cane, T.; Ferryman, J. Evaluating deep semantic segmentation networks for object detection in maritime surveillance. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.
14. Marie, V.; Bechar, I.; Bouchara, F. Real-time maritime situation awareness based on deep learning with dynamic anchors. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.
15. Chen, W.; Li, J.; Xing, J.; Yang, Q.; Zhou, Q. A maritime targets detection method based on hierarchical and multi-scale deep convolutional neural network. In Proceedings of the Tenth International Conference on Digital Image Processing (ICDIP 2018), Shanghai, China, 11–14 May 2018; p. 1080616.
16. Chen, X.; Qi, L.; Yang, Y.; Postolache, O.; Yu, Z.; Xu, X. Port ship detection in complex environments. In Proceedings of the 2019 International Conference on Sensing and Instrumentation in IoT Era (ISSI), Lisbon, Portugal, 29–30 August 2019; pp. 1–6.
17. Liu, L.; Liu, G.; Chu, X.; Jiang, Z.; Zhang, M.; Ye, J. Ship Detection and Tracking in Nighttime Video Images Based on the Method of LSDT. J. Phys. Conf. Ser. 2019, 1187, 042074.
18. Shan, X.; Zhao, D.; Pan, M.; Wang, D.; Zhao, L. Sea-Sky line and its nearby ships detection based on the motion attitude of visible light sensors. Sensors 2019, 19, 4004.
19. Gal, O. Object Identification in Maritime Environments for ASV Path Planner. Int. J. Data Sci. Adv. Anal. 2019, 1, 18–26, ISSN 2563-4429.
20. Lin, C.; Chen, W.; Zhou, H. Multi-Visual Feature Saliency Detection for Sea-Surface Targets through Improved Sea-Sky-Line Detection. J. Mar. Sci. Eng. 2020, 8, 799.
21. Chen, X.; Qi, L.; Yang, Y.; Luo, Q.; Postolache, O.; Tang, J.; Wu, H. Video-based detection infrastructure enhancement for automated ship recognition and behavior analysis. J. Adv. Transp. 2020, 2020, 7194342.
22. Lee, S.-J.; Roh, M.-I.; Oh, M.-J. Image-based ship detection using deep learning. Ocean Syst. Eng. 2020, 10, 415–434.
23. Feng, J.; Li, B.; Tian, L.; Dong, C. Rapid Ship Detection Method on Movable Platform Based on Discriminative Multi-Size Gradient Features and Multi-Branch Support Vector Machine. IEEE Trans. Intell. Transp. Syst. 2020, 1–11.
24. Shan, Y.; Zhou, X.; Liu, S.; Zhang, Y.; Huang, K. SiamFPN: A deep learning method for accurate and real-time maritime ship tracking. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 315–325.
25. Fefilatyev, S.; Goldgof, D.; Shreve, M.; Lembke, C. Detection and tracking of ships in open sea with rapidly moving buoy-mounted camera system. Ocean Eng. 2012, 54, 1–12.
26. Tang, D.; Sun, G.; Wang, D.-H.; Niu, Z.-D.; Chen, Z.-P. Research on infrared ship detection method in sea-sky background. In Proceedings of the International Symposium on Photoelectronic Detection and Imaging 2013: Infrared Imaging and Applications, Beijing, China, 25–27 June 2013; p. 89072H.
27. Liu, Z.; Jiang, T.; Zhang, T.; Li, Y. IR ship target saliency detection based on lightweight non-local depth features. In Proceedings of the 2019 3rd International Conference on Electronic Information Technology and Computer Engineering (EITCE), Xiamen, China, 18–20 October 2019; pp. 1681–1686.
28. Hu, Z.; Qin, H.; Peng, X.; Yue, T.; Yue, H.; Luo, G.; Zhu, W. Infrared polymorphic target recognition based on single step cascade neural network. In Proceedings of the AOPC 2019: AI in Optics and Photonics, Beijing, China, 7–9 July 2019; p. 113420T.
29. Lin, J.; Yu, Q.; Chen, G. Infrared ship target detection based on the combination of Bayesian theory and SVM. In Proceedings of the MIPPR 2019: Automatic Target Recognition and Navigation, Wuhan, China, 2–3 November 2019; p. 1142919.
30. Özertem, K.A. A fast automatic target detection method for detecting ships in infrared scenes. In Proceedings of the Automatic Target Recognition XXVI, Baltimore, MD, USA, 17–21 April 2016; p. 984404.
31. Wang, X.; Zhang, T. Clutter-adaptive infrared small target detection in infrared maritime scenarios. Opt. Eng. 2011, 50, 067001.
32. Sun, Y.-Q.; Tian, J.-W.; Liu, J. Background suppression based-on wavelet transformation to detect infrared target. In Proceedings of the 2005 International Conference on Machine Learning and Cybernetics, Guangzhou, China, 18–21 August 2005; pp. 4611–4615.
33. Lu, J.-W.; He, Y.-J.; Li, H.-Y.; Lu, F.-L. Detecting small target of ship at sea by infrared image. In Proceedings of the 2006 IEEE International Conference on Automation Science and Engineering, Shanghai, China, 8–10 October 2006; pp. 165–169.
34. Bai, X.; Chen, Z.; Zhang, Y.; Liu, Z.; Lu, Y. Infrared ship target segmentation based on spatial information improved FCM. IEEE Trans. Cybern. 2015, 46, 3259–3271.
35. Leira, F.S.; Johansen, T.A.; Fossen, T.I. Automatic detection, classification and tracking of objects in the ocean surface from UAVs using a thermal camera. In Proceedings of the 2015 IEEE Aerospace Conference, Big Sky, MT, USA, 7–14 March 2015; pp. 1–10.
36. Bai, X.; Liu, M.; Wang, T.; Chen, Z.; Wang, P.; Zhang, Y. Feature based fuzzy inference system for segmentation of low-contrast infrared ship images. Appl. Soft Comput. 2016, 46, 128–142.
37. Mumtaz, A.; Jabbar, A.; Mahmood, Z.; Nawaz, R.; Ahsan, Q. Saliency based algorithm for ship detection in infrared images. In Proceedings of the 2016 13th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 12–16 January 2016; pp. 167–172.
38. Singh, R.; Vashisht, M.; Qamar, S. Role of linguistic quantifier and digitally approximated Laplace operator in infrared based ship detection. Int. J. Syst. Assur. Eng. Manag. 2017, 8, 1336–1342.
39. Zhang, Y.; Shang, J.; Xie, B.; Ding, R.; Zhang, Z. Research for infrared ship target characteristics based on space-based detection. In Proceedings of the Advanced Optical Imaging Technologies, Beijing, China, 11–13 October 2018; p. 108160U.
40. Xu, G.; Wang, J.; Qi, S. Ship detection based on rotation-invariant HOG descriptors for airborne infrared images. In Proceedings of the MIPPR 2017: Pattern Recognition and Computer Vision, Xiangyang, China, 28–29 October 2017; p. 1060912.
41. Zhou, A.; Xie, W.; Pei, J. Infrared maritime target detection using the high order statistic filtering in fractional Fourier domain. Infrared Phys. Technol. 2018, 91, 123–136.
42. Schöller, F.E.; Plenge-Feidenhans, M.K.; Stets, J.D.; Blanke, M. Assessing deep-learning methods for object detection at sea from LWIR images. IFAC Pap. 2019, 52, 64–71.
43. Li, Y.; Li, Z.; Zhu, Y.; Li, B.; Xiong, W.; Huang, Y. Thermal infrared small ship detection in sea clutter based on morphological reconstruction and multi-feature analysis. Appl. Sci. 2019, 9, 3786.
44. Westlake, S.T.; Volonakis, T.N.; Jackman, J.; James, D.B.; Sherriff, A. Deep learning for automatic target recognition with real and synthetic infrared maritime imagery. In Proceedings of the Artificial Intelligence and Machine Learning in Defense Applications II, Online Only, 21–25 September 2020; p. 1154309.
45. Islam, M.M.; Islam, M.N.; Asari, K.V.; Karim, M.A. Anomaly based vessel detection in visible and infrared images. In Proceedings of the Image Processing: Machine Vision Applications II, San Jose, CA, USA, 18–22 January 2009; p. 72510B.
46. Wei, H.; Nguyen, H.; Ramu, P.; Raju, C.; Liu, X.; Yadegar, J. Automated intelligent video surveillance system for ships. In Proceedings of the Optics and Photonics in Global Homeland Security V and Biometric Technology for Human Identification VI, Orlando, FL, USA, 13–17 April 2009; p. 73061N.
47. Nita, C.; Vandewal, M. CNN-based object detection and segmentation for maritime domain awareness. In Proceedings of the Artificial Intelligence and Machine Learning in Defense Applications II, Online Only, 21–25 September 2020; p. 1154306.
48. Zhang, M.M.; Choi, J.; Daniilidis, K.; Wolf, M.T.; Kanan, C. VAIS: A dataset for recognizing maritime imagery in the visible and infrared spectrums. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 10–16.
49. Ribeiro, R.; Cruz, G.; Matos, J.; Bernardino, A. A data set for airborne maritime surveillance environments. IEEE Trans. Circuits Syst. Video Technol. 2017, 29, 2720–2732.
50. Farahnakian, F.; Heikkonen, J. Deep learning based multi-modal fusion architectures for maritime vessel detection. Remote Sens. 2020, 12, 2509.
51. Stets, J.D.; Schöller, F.E.; Plenge-Feidenhans, M.K.; Andersen, R.H.; Hansen, S.; Blanke, M. Comparing spectral bands for object detection at sea using convolutional neural networks. J. Phys. Conf. Ser. 2019, 1357, 012036.
52. Bouma, H.; de Lange, D.-J.J.; van den Broek, S.P.; Kemp, R.A.; Schwering, P.B. Automatic detection of small surface targets with electro-optical sensors in a harbor environment. In Proceedings of the Electro-Optical Remote Sensing, Photonic Technologies, and Applications II, Cardiff, Wales, UK, 15–18 September 2008; p. 711402.
53. Perić, D.; Livada, B. Analysis of SWIR Imagers Application in Electro-Optical Systems. In Proceedings of the 4th International Conference on Electrical, Electronics and Computing Engineering, IcETRAN, Kladovo, Serbia, 5–8 June 2017; pp. 5–8.
54. Farahnakian, F.; Movahedi, P.; Poikonen, J.; Lehtonen, E.; Makris, D.; Heikkonen, J. Comparative analysis of image fusion methods in marine environment. In Proceedings of the 2019 IEEE International Symposium on Robotic and Sensors Environments (ROSE), Ottawa, ON, Canada, 17–18 June 2019; pp. 1–8.
55. Zhang, Y.; Li, Q.-Z.; Zang, F.-N. Ship detection for visual maritime surveillance from non-stationary platforms. Ocean Eng. 2017, 141, 53–63.
56. Shi, Q.; Li, W.; Tao, R.; Sun, X.; Gao, L. Ship classification based on multifeature ensemble with convolutional neural network. Remote Sens. 2019, 11, 419.
57. Wu, D.; Zheng, S.-J.; Zhang, X.-P.; Yuan, C.-A.; Cheng, F.; Zhao, Y.; Lin, Y.-J.; Zhao, Z.-Q.; Jiang, Y.-L.; Huang, D.-S. Deep learning-based methods for person re-identification: A comprehensive review. Neurocomputing 2019, 337, 354–371.
58. Liu, X.; Liu, W.; Mei, T.; Ma, H. A deep learning-based approach to progressive vehicle re-identification for urban surveillance. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 869–884.
59. Zhou, Y.; Liu, L.; Shao, L. Vehicle re-identification by deep hidden multi-view inference. IEEE Trans. Image Process. 2018, 27, 3275–3287.
60. Zhou, Y.; Shao, L. Aware attentive multi-view inference for vehicle re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6489–6498.
61. Amaechi, C.V.; Chesterton, C.; Butler, H.O.; Wang, F.; Ye, J. An Overview on Bonded Marine Hoses for sustainable fluid transfer and (un) loading operations via Floating Offshore Structures (FOS). J. Mar. Sci. Eng. 2021, 9, 1236.
62. Amaechi, C.V.; Wang, F.; Ye, J. Mathematical Modelling of Bonded Marine Hoses for Single Point Mooring (SPM) Systems, with Catenary Anchor Leg Mooring (CALM) Buoy application—A Review. J. Mar. Sci. Eng. 2021, 9, 1179.
63. Amaechi, C.V.; Chesterton, C.; Butler, H.O.; Wang, F.; Ye, J. Review on the design and mechanics of bonded marine hoses for Catenary Anchor Leg Mooring (CALM) buoys. Ocean Eng. 2021, 242, 110062.
64. Odijie, A.C.; Wang, F.; Ye, J. A review of floating semisubmersible hull systems: Column stabilized unit. Ocean Eng. 2017, 144, 191–202.
65. Lipschutz, I.; Gershikov, E.; Milgrom, B. New methods for horizon line detection in infrared and visible sea images. Int. J. Comput. Eng. Res. 2013, 3, 1197–1215.
66. Fefilatyev, S.; Smarodzinava, V.; Hall, L.O.; Goldgof, D.B. Horizon detection using machine learning techniques. In Proceedings of the 2006 5th International Conference on Machine Learning and Applications (ICMLA’06), Orlando, FL, USA, 14–16 December 2006; pp. 17–21.
67. Liang, D.; Zhang, W.; Huang, Q.; Yang, F. Robust sea-sky-line detection for complex sea background. In Proceedings of the 2015 IEEE International Conference on Progress in Informatics and Computing (PIC), Nanjing, China, 18–20 December 2015; pp. 317–321.
68. Sun, Y.; Fu, L. Coarse-fine-stitched: A robust maritime horizon line detection method for unmanned surface vehicle applications. Sensors 2018, 18, 2825.
69. Liang, D.; Liang, Y. Horizon detection from electro-optical sensors under maritime environment. IEEE Trans. Instrum. Meas. 2019, 69, 45–53.
70. Yang, W.; Li, H.; Liu, J.; Xie, S.; Luo, J. A sea-sky-line detection method based on Gaussian mixture models and image texture features. Int. J. Adv. Robot. Syst. 2019, 16, 1–12.
71. Jeong, C.; Yang, H.S.; Moon, K. Horizon detection in maritime images using scene parsing network. Electron. Lett. 2018, 54, 760–762.
72. Wang, A.-B.; Wang, C.-X.; Su, W.-X.; Dong, Y.-F. Adaptive segmentation algorithm for ship target under complex background. In Proceedings of the 2010 3rd International Conference on Advanced Computer Theory and Engineering (ICACTE), Chengdu, China, 20–22 August 2010; pp. V2-219–V2-223.
73. Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A survey of deep learning-based object detection. IEEE Access 2019, 7, 128837–128868.
74. TensorFlow. Available online: https://www.tensorflow.org (accessed on 28 November 2021).
75. Keras. Available online: https://keras.io/ (accessed on 28 November 2021).
76. PyTorch. Available online: https://pytorch.org/ (accessed on 28 November 2021).
77. MXNet. Available online: https://mxnet.apache.org (accessed on 28 November 2021).
78. CNTK. Available online: https://docs.microsoft.com/en-us/cognitive-toolkit/ (accessed on 28 November 2021).
79. Theano. Available online: https://pypi.org/project/Theano/ (accessed on 28 November 2021).
80. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.
81. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.
82. Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.
83. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.
84. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.
85. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. All the Code Is Online. Available online: https://pjreddie.com/yolo/ (accessed on 28 November 2021).
86. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.
87. Fu, C.-Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. Dssd: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659. Available online: https://github.com/chengyangfu/caffe/tree/dssd (accessed on 28 November 2021).
88. Gal, O. High Level Mission Assignment Optimization. Int. J. Data Sci. Adv. Anal. 2020, 2, 26–29.
89. Oksuz, K.; Cam, B.C.; Kalkan, S.; Akbas, E. Imbalance problems in object detection: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3388–3415.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).