Fishing Net Health State Estimation Using Underwater Imaging

Abstract

1. Introduction

- A visual-information-based framework for underwater fishing net health state estimation is proposed.

- Net-opening structure analysis and blocked percentage estimation methods are introduced to infer the health state of each net cell.

- A Virtual Fishing Net (VFN) dataset is built, which simulates fishing nets under different conditions.

- Seven evaluation metrics are introduced to evaluate both the intermediate results and the final estimation results.

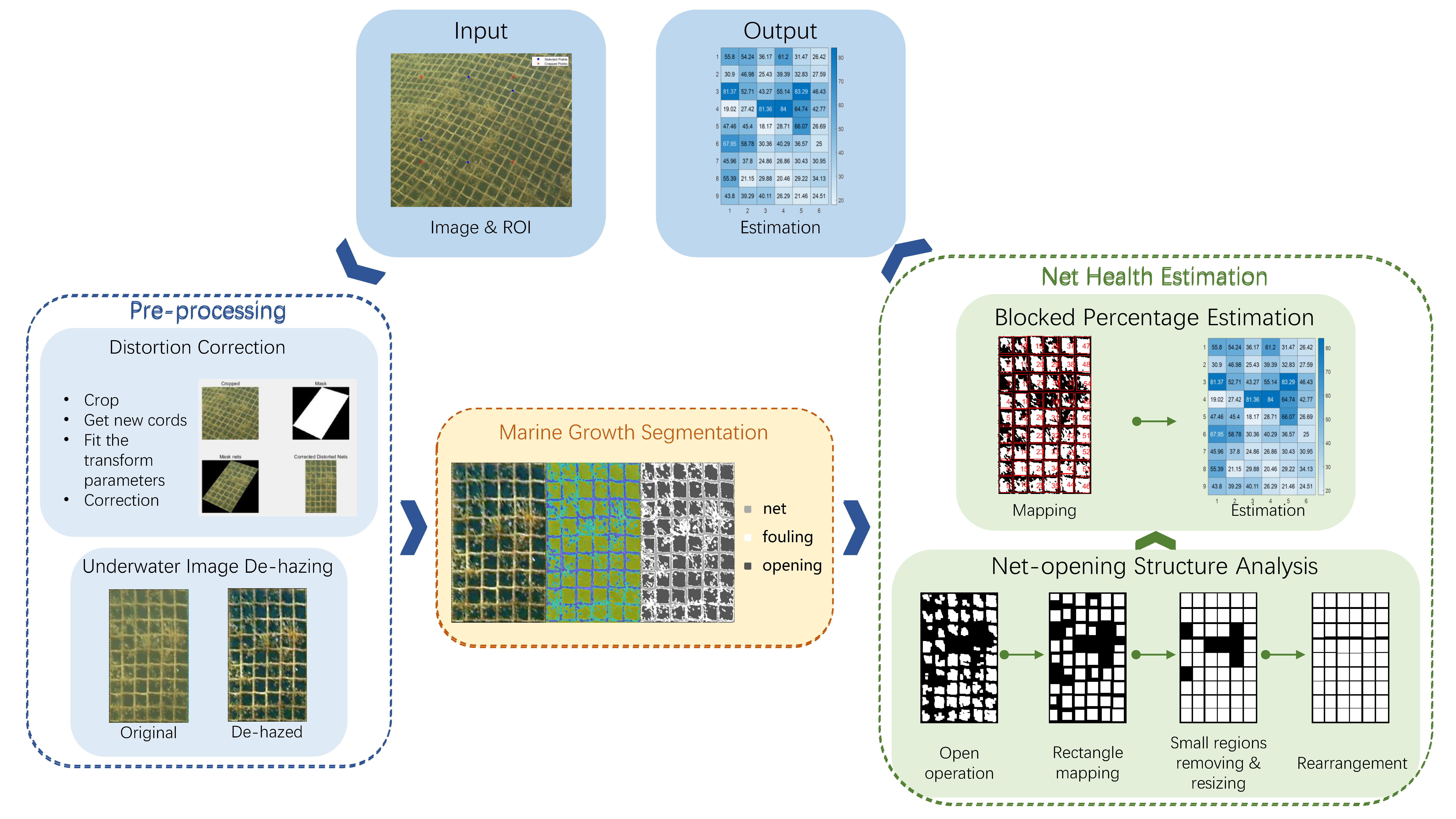

2. Methodology

2.1. Pre-Processing

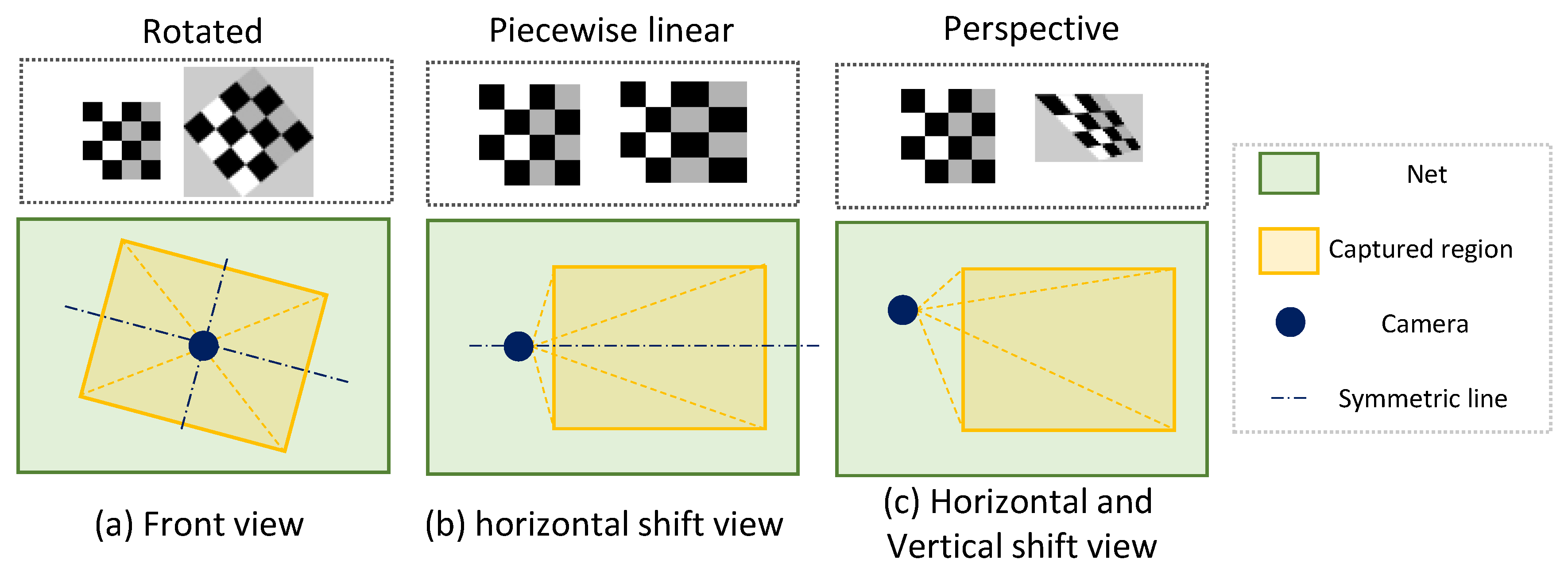

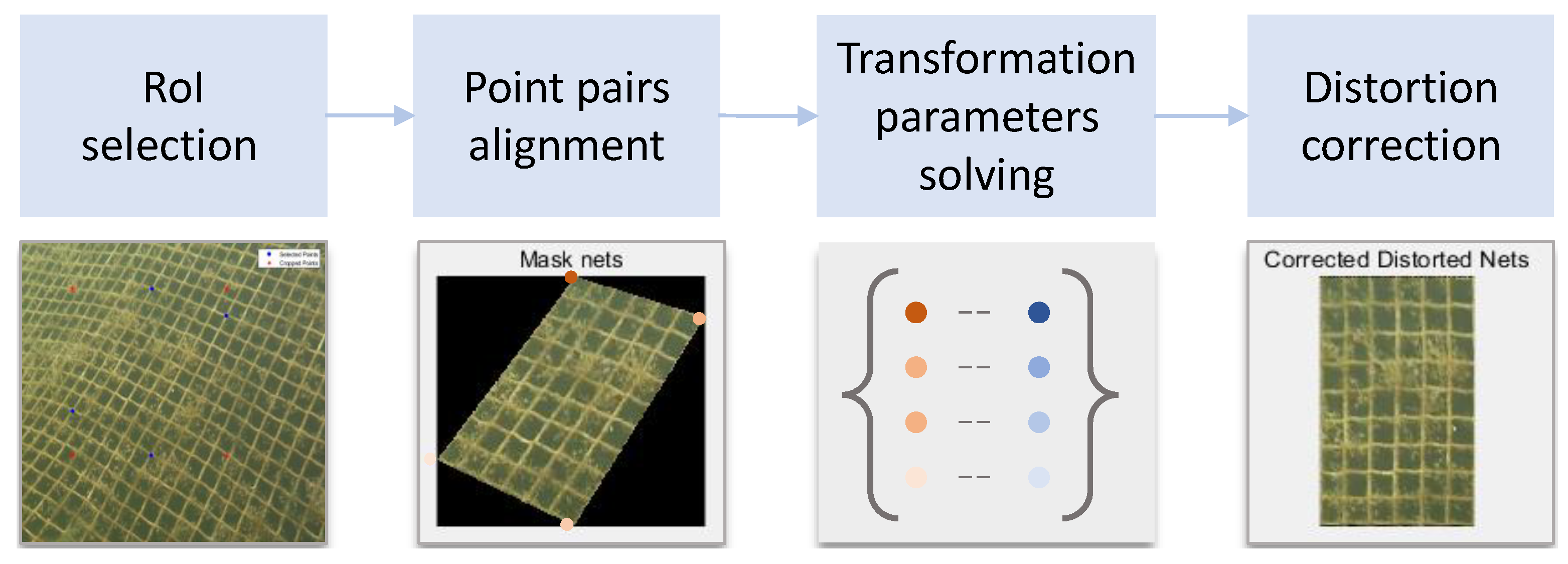

2.1.1. Distortion Correction

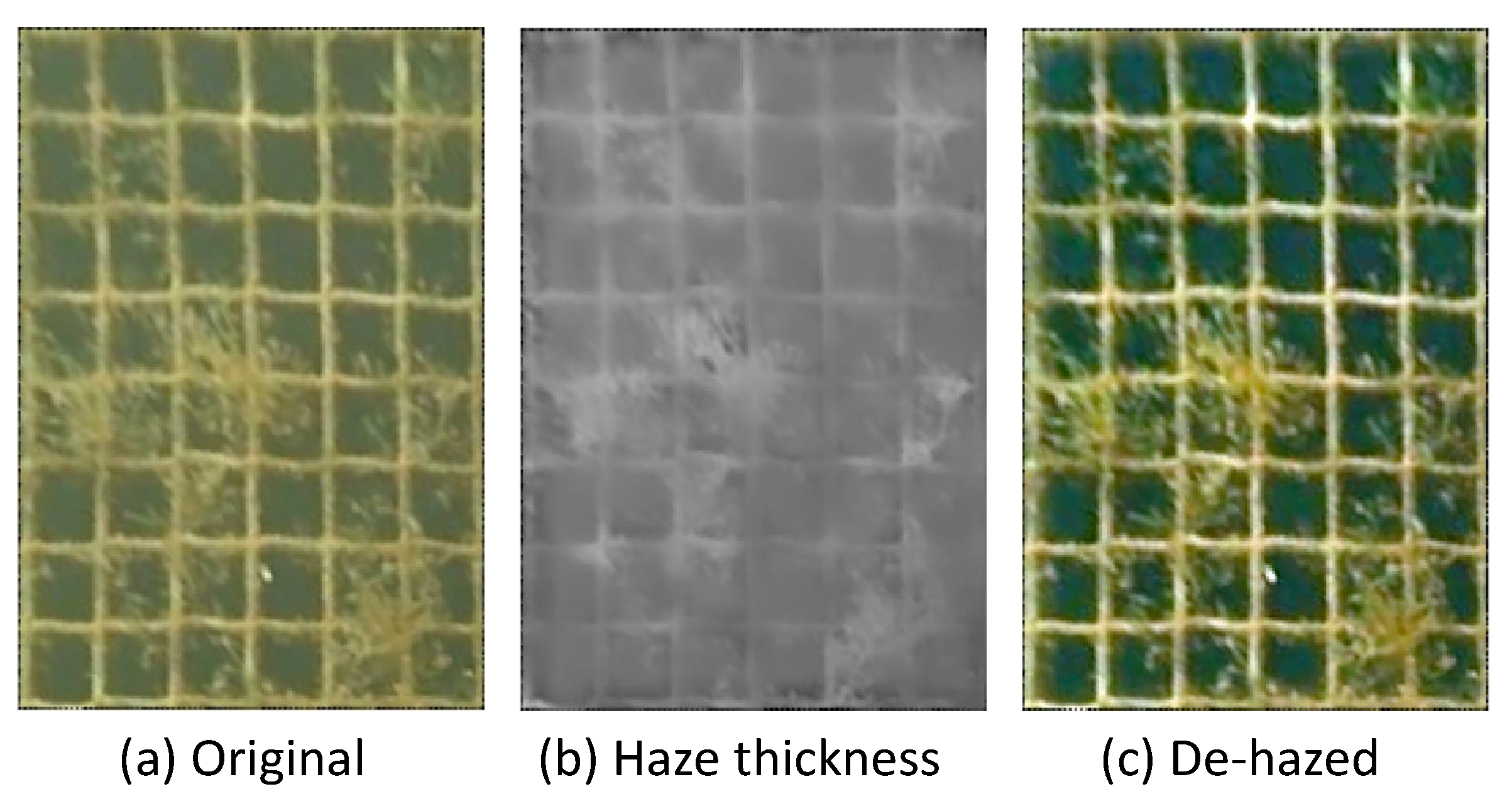

2.1.2. Underwater Image De-Hazing

2.2. Marine Growth Segmentation

Algorithm 1. Main steps of k-means++.
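Algorithm 1 names k-means++ as the clustering step for marine growth segmentation. The following is a minimal sketch of standard k-means++ seeding (each new centre is sampled with probability proportional to its squared distance from the nearest centre already chosen), followed by ordinary Lloyd iterations. It assumes pixels are given as colour tuples; the feature representation, the value of `k`, and the function names `kmeans_pp_seed` and `kmeans` are illustrative assumptions and are not taken from the paper.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_pp_seed(points, k, rng):
    """Choose k initial centres by D^2-weighted sampling (k-means++ seeding)."""
    centres = [rng.choice(points)]
    # Squared distance from every point to its nearest centre so far.
    d2 = [sq_dist(p, centres[0]) for p in points]
    while len(centres) < k:
        if sum(d2) > 0:
            nxt = rng.choices(points, weights=d2, k=1)[0]
        else:
            # Every point coincides with a centre: fall back to uniform sampling.
            nxt = rng.choice(points)
        centres.append(nxt)
        d2 = [min(d, sq_dist(p, nxt)) for d, p in zip(d2, points)]
    return centres


def assign(points, centres):
    """Index of the nearest centre for each point."""
    return [min(range(len(centres)), key=lambda j: sq_dist(p, centres[j]))
            for p in points]


def kmeans(points, k, n_iter=50, seed=0):
    """k-means++ seeding followed by Lloyd iterations."""
    rng = random.Random(seed)
    centres = kmeans_pp_seed(points, k, rng)
    for _ in range(n_iter):
        labels = assign(points, centres)
        new_centres = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centres.append(
                    tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                # Keep an empty cluster's centre unchanged.
                new_centres.append(centres[j])
        if new_centres == centres:
            break
        centres = new_centres
    return centres, assign(points, centres)


# Hypothetical usage: two colour groups standing in for "growth" and "water".
pixels = [(0.1, 0.5, 0.1)] * 20 + [(0.9, 0.9, 0.9)] * 20
centres, labels = kmeans(pixels, k=2)
```

The seeding step is the only part that distinguishes k-means++ from plain k-means; spreading the initial centres apart tends to reduce the chance of a poor local optimum.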

2.3. Net Health Estimation

2.3.1. Net-Opening Structure Analysis

Algorithm 2. Region resizing and rearrangement.

2.3.2. Blocked Percentage Estimation

3. Experiments

3.1. Dataset

3.1.1. Real Scene Dataset

3.1.2. Virtual Fishing Net (VFN) Dataset

3.2. Evaluation Metrics

3.2.1. For Segmentation Results
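The results table reports Precision, Recall, and F1-Score for the segmentation stage. A minimal sketch of how these three scores are conventionally computed from a predicted binary mask and a ground-truth mask is given below. It assumes masks are nested lists of 0/1 values with 1 marking marine growth; the mask encoding and the function name `segmentation_scores` are assumptions made for illustration.

```python
def segmentation_scores(pred, truth):
    """Pixel-wise precision, recall and F1 for two binary masks of equal shape."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1          # predicted positive, truly positive
            elif p and not t:
                fp += 1          # predicted positive, truly negative
            elif t and not p:
                fn += 1          # missed positive
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical 2x3 masks.
pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
precision, recall, f1 = segmentation_scores(pred, truth)
```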

3.2.2. For Location Results
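The results table lists mIoU and stdIoU for this stage. The sketch below shows one conventional way to obtain both, assuming each located net cell is an axis-aligned box `(x0, y0, x1, y1)` already paired with its ground-truth box, and assuming stdIoU is the population standard deviation of the per-cell IoU values. The box representation, the pairing, the choice of population rather than sample deviation, and the function names are all assumptions rather than details confirmed by the paper.

```python
from statistics import mean, pstdev


def box_iou(a, b):
    """Intersection over union of two axis-aligned boxes (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix1 - ix0) * max(0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def iou_summary(pred_boxes, true_boxes):
    """Mean and population standard deviation of IoU over paired boxes."""
    ious = [box_iou(p, t) for p, t in zip(pred_boxes, true_boxes)]
    return mean(ious), pstdev(ious)


# Hypothetical pairs of predicted and ground-truth net cells.
pred_boxes = [(0, 0, 2, 2), (0, 0, 2, 2)]
true_boxes = [(0, 0, 2, 2), (0, 0, 1, 2)]
m_iou, std_iou = iou_summary(pred_boxes, true_boxes)
```

A high mean with a low standard deviation indicates that cells are located both accurately and consistently across the image.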

3.2.3. For Blocked Percentage Results
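The results table abbreviates the two blocked-percentage metrics as PCC and mError. The sketch below reads PCC as the Pearson correlation coefficient between estimated and ground-truth blocked percentages, and mError as the mean absolute error between them. Both readings are assumptions, as are the function names and the representation of blocked percentages as fractions in [0, 1].

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def mean_abs_error(estimated, truth):
    """Mean absolute difference between estimates and ground truth."""
    return sum(abs(e - t) for e, t in zip(estimated, truth)) / len(estimated)


# Hypothetical per-cell blocked fractions.
estimated = [0.1, 0.4, 0.8]
truth = [0.2, 0.5, 0.9]
pcc = pearson(estimated, truth)
m_error = mean_abs_error(estimated, truth)
```

The two numbers are complementary: a constant offset in the estimates leaves the correlation untouched but shows up directly in the mean error.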

3.3. Quantitative Evaluation on Real Scene

3.4. Quantitative Evaluation on Virtual Scene

3.4.1. Variations in Image Capture Angles

3.4.2. Variations in Underwater Situations

3.4.3. Conclusions

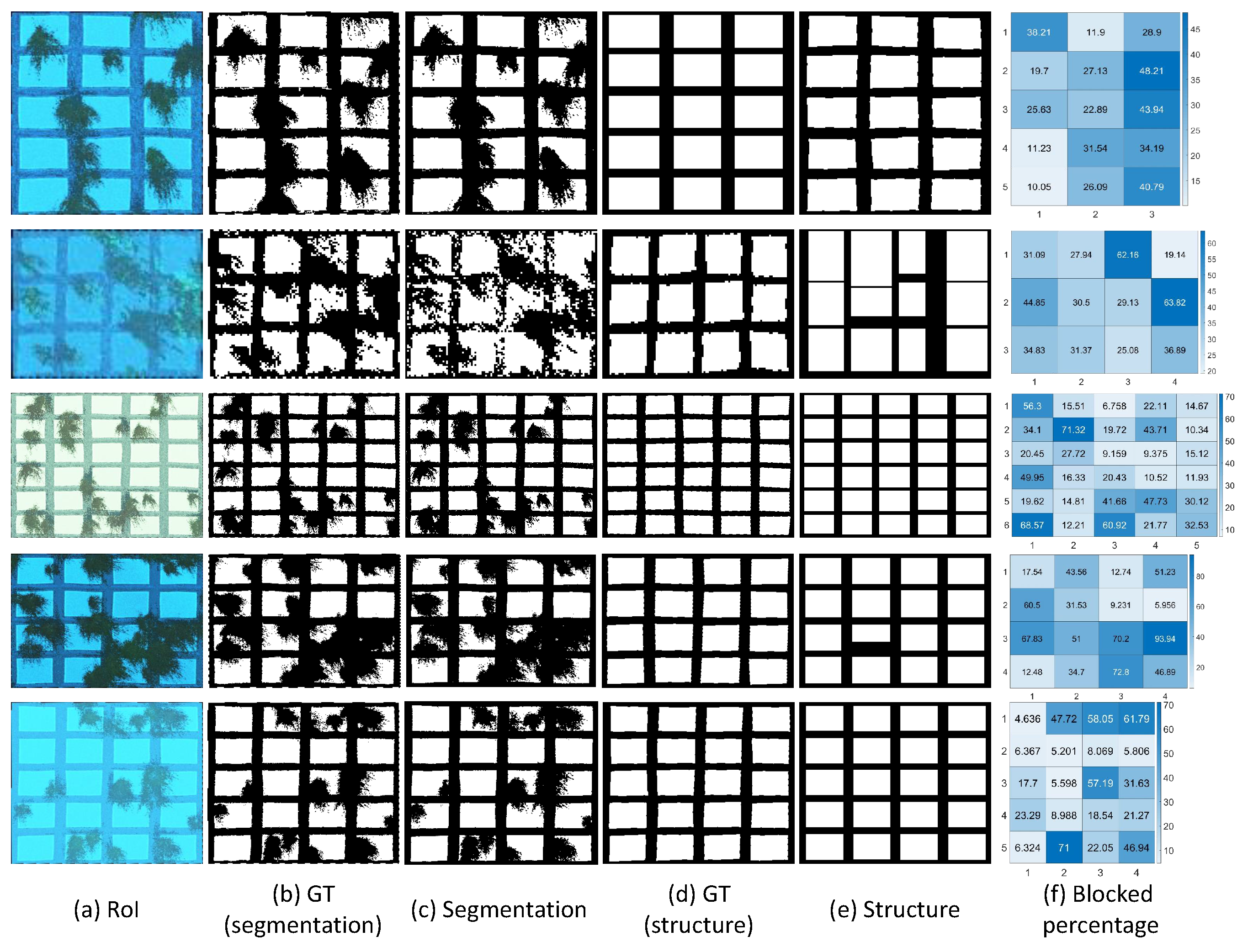

3.5. Qualitative Evaluation

4. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest


| Item | Specification |
|---|---|
| Image sensor | Sony IMX 117 1/2.3 inch CMOS |
| Lens | FOV 90°, f/2.8, infinity focus |
| Image resolution | 4000 × 3000 |
| Video resolution | 4K (3840 × 2160) 30P |

| Variation | Seg. Eval. Precision↑ | Seg. Eval. Recall↑ | Seg. Eval. F1-Score↑ | Struc. Eval. mIoU↑ | Struc. Eval. stdIoU↓ | Bloc. Eval. PCC↑ | Bloc. Eval. mError↓ |
|---|---|---|---|---|---|---|---|
| Front | 0.9892 | 0.9439 | 0.9660 | 0.8776 | 0.0539 | 0.9715 | 0.0616 |
| Left/Right | 0.9414 | 0.9269 | 0.9328 | 0.8313 | 0.0770 | 0.9253 | 0.0690 |
| Up/Down | 0.9808 | 0.9327 | 0.9561 | 0.8690 | 0.0630 | 0.9430 | 0.1652 |
| Mean of angles | 0.9705 | 0.9345 | 0.9516 | 0.8593 | 0.0646 | 0.9466 | 0.0986 |
| Color | 0.9853 | 0.9544 | 0.9695 | 0.8932 | 0.0582 | 0.9778 | 0.0466 |
| Density | 0.9618 | 0.9548 | 0.9583 | 0.8630 | 0.0899 | 0.9757 | 0.0546 |
| Visibility | 0.9848 | 0.9553 | 0.9698 | 0.8958 | 0.0530 | 0.9765 | 0.0574 |
| Mean of all | 0.9739 | 0.9447 | 0.9617 | 0.8717 | 0.0658 | 0.9616 | 0.0757 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Citation

Qiu, W.; Pakrashi, V.; Ghosh, B. Fishing Net Health State Estimation Using Underwater Imaging. J. Mar. Sci. Eng. 2020, 8, 707. https://doi.org/10.3390/jmse8090707
