Progressive Color Correction and Vision-Inspired Adaptive Framework for Underwater Image Enhancement

Abstract

1. Introduction

- (1) A progressive underwater image color correction algorithm is proposed to improve color balance while mitigating the risk of overcompensation.

- (2) A vision-inspired underwater image enhancement algorithm is designed to reveal latent information in the image while preserving scene naturalness and fine details.

- (3) The proposed method is evaluated on the Color-Check7, UIEB, RUIE, and OceanDark datasets and benchmarked against state-of-the-art methods in terms of quantitative metrics, qualitative results, and computational efficiency.

2. Related Work

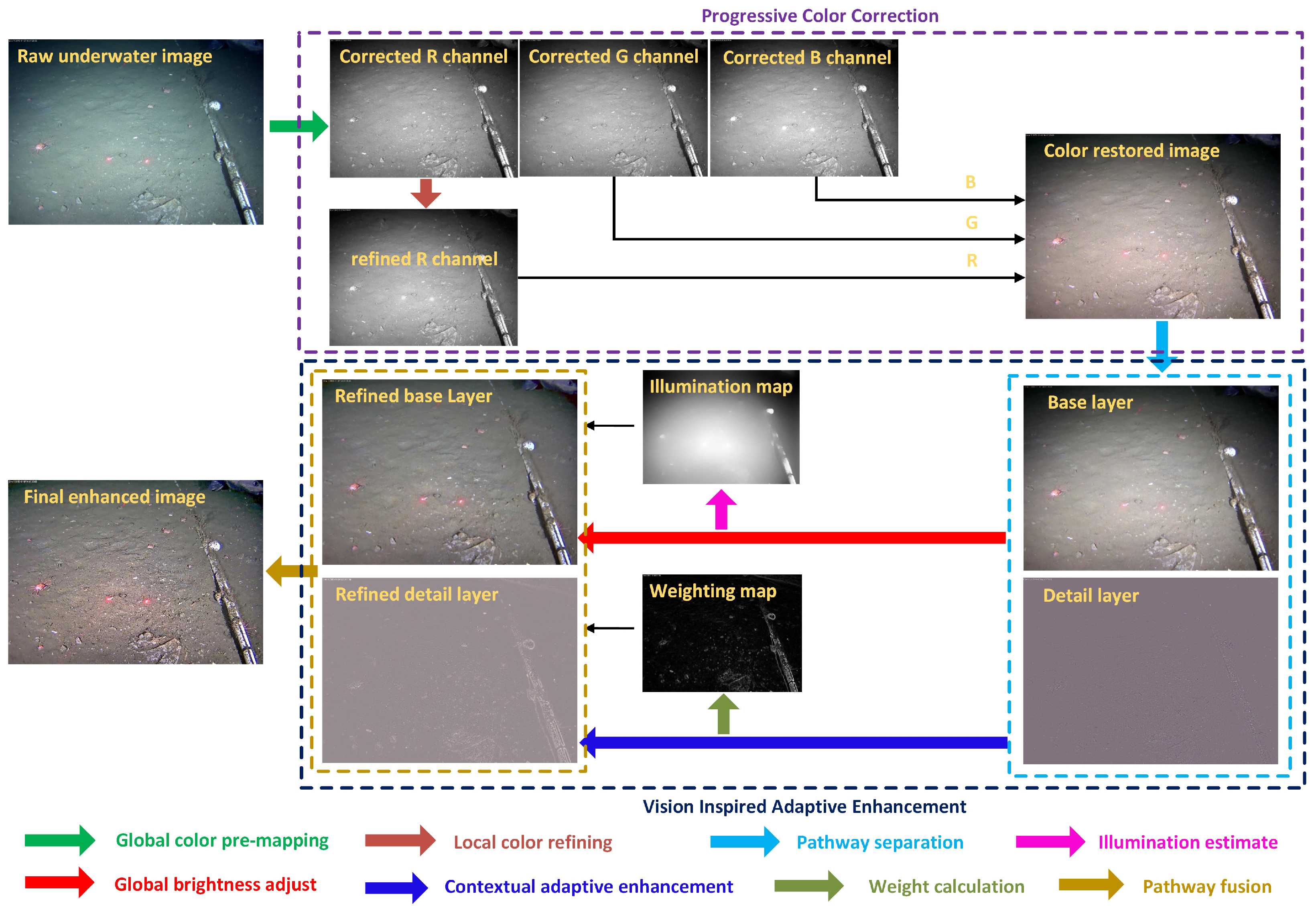

3. Methodology

3.1. Progressive Color Correction

3.1.1. Global Color Pre-Mapping

3.1.2. Local Color Refining

3.2. Vision-Inspired Adaptive Image Enhancement

3.2.1. Pathway Separation

3.2.2. Global Brightness Adjustment

3.2.3. Contextual Adaptive Enhancement

| Algorithm 1: Vision-Inspired Adaptive Enhancement for Underwater Images |
|---|
| Input: Color-corrected image; parameters. |
| Output: Enhanced image. |
| 6: Obtain the detail-preserving enhanced detail layer via Equation (20). |
| 7: Obtain the enhanced image via Equation (21). |

4. Results and Analysis

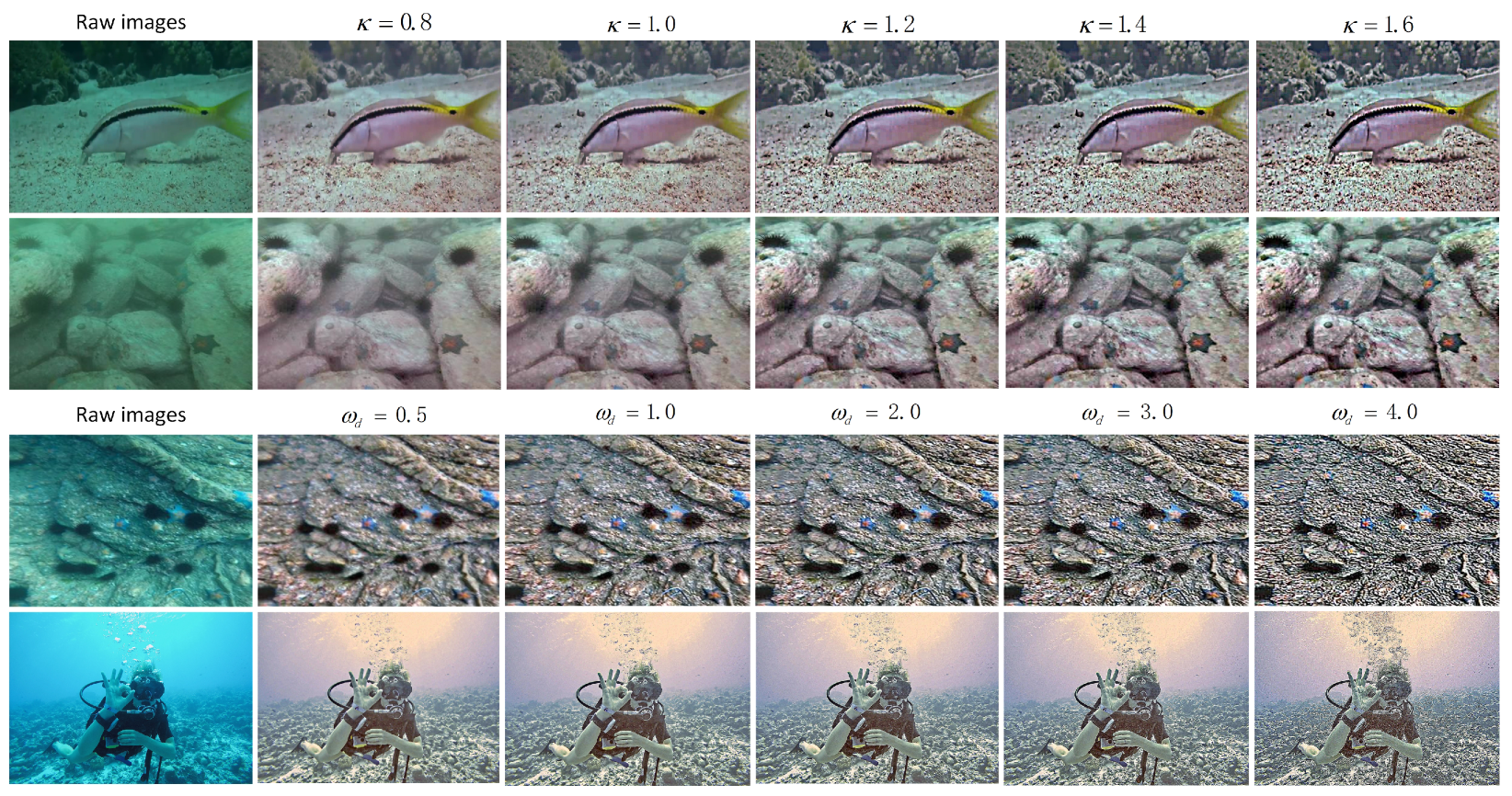

4.1. Parameter Settings

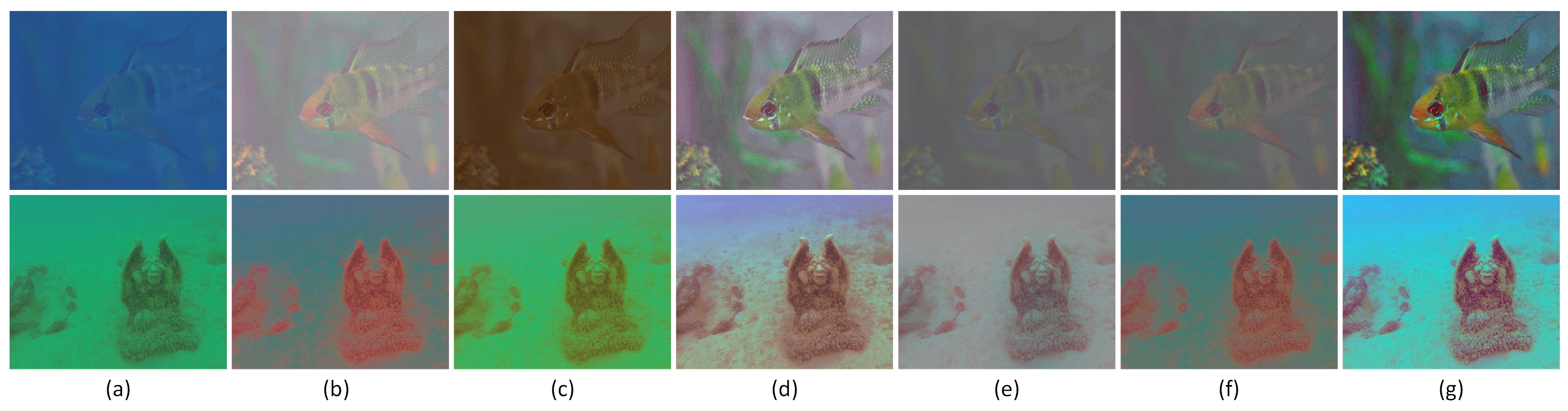

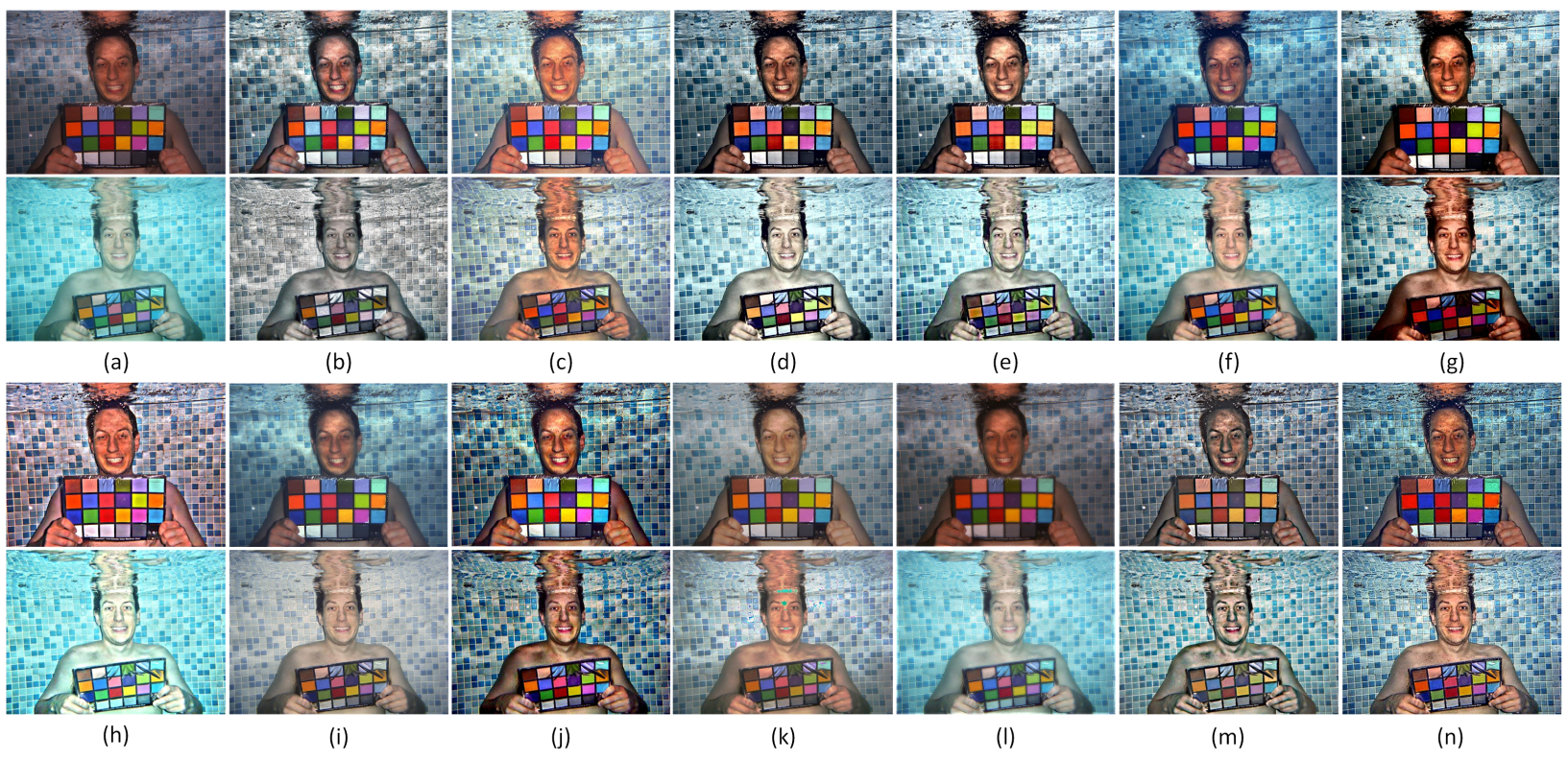

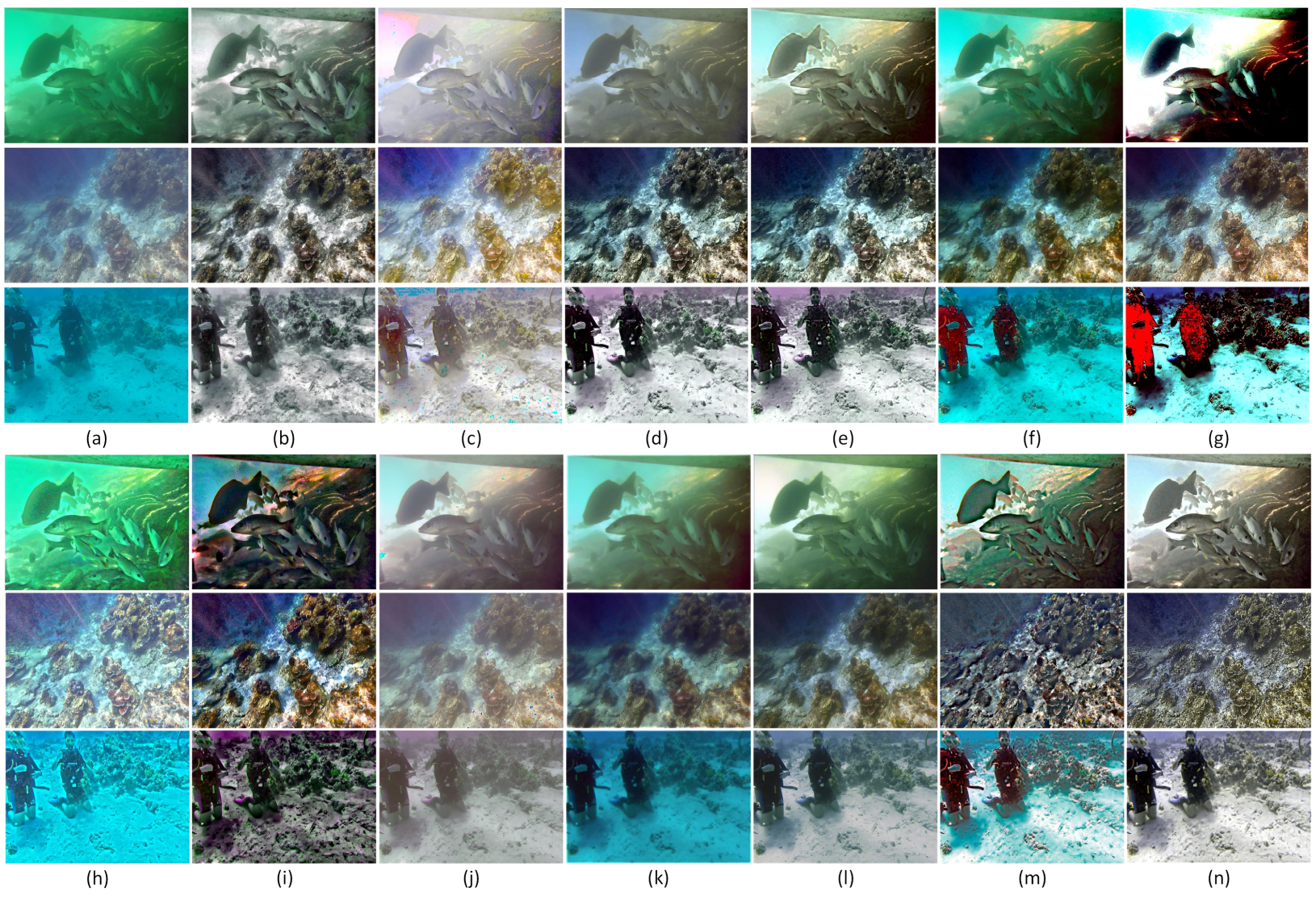

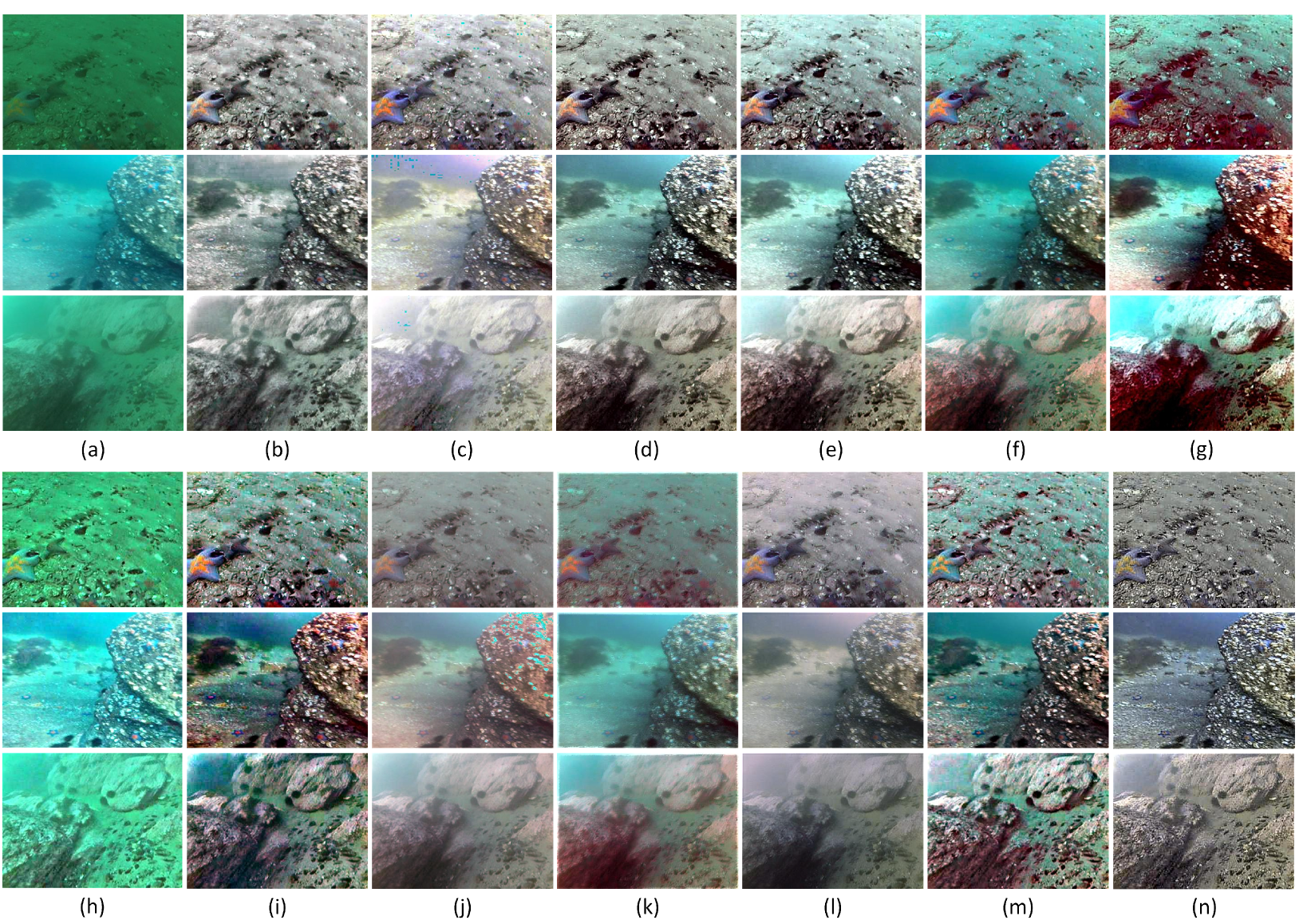

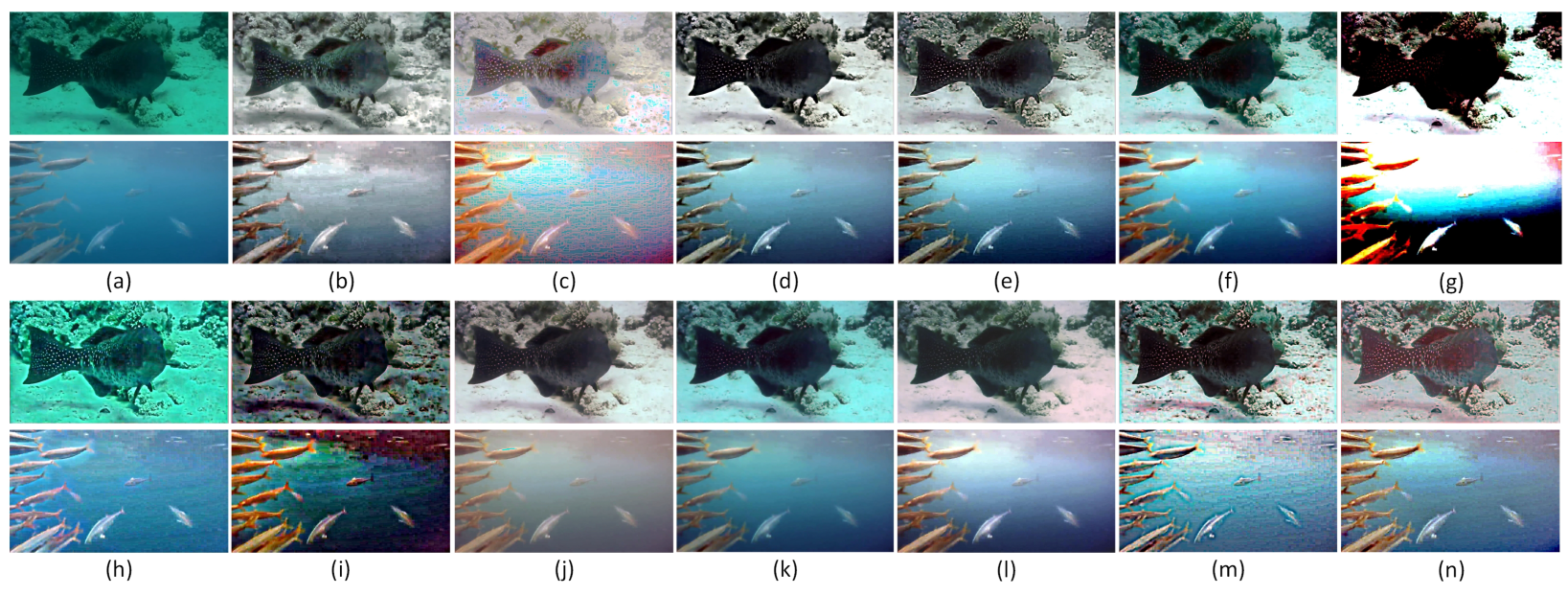

4.2. Comparisons on the Color-Check7 Dataset

4.3. Comparisons on the UIEB Dataset

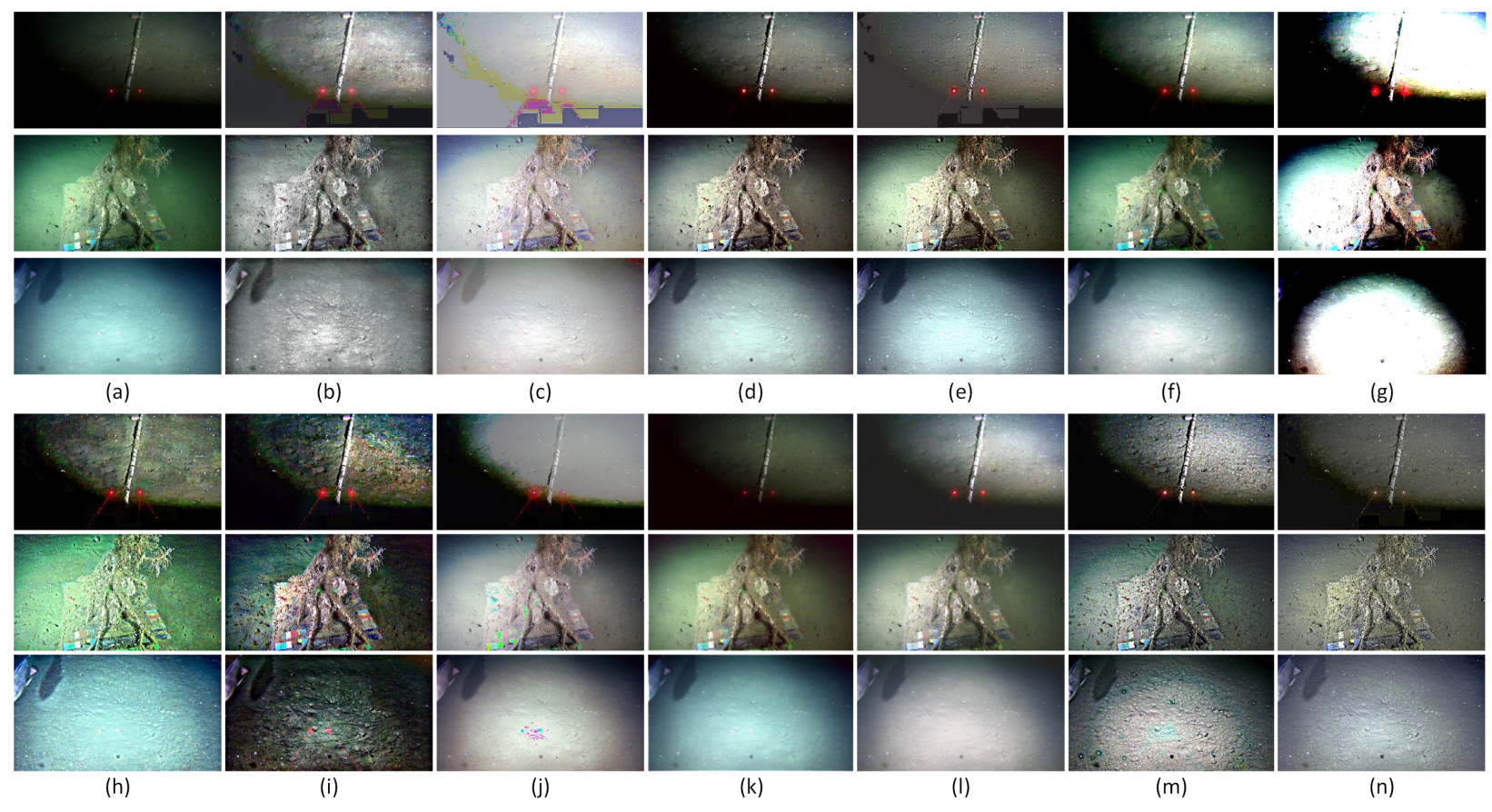

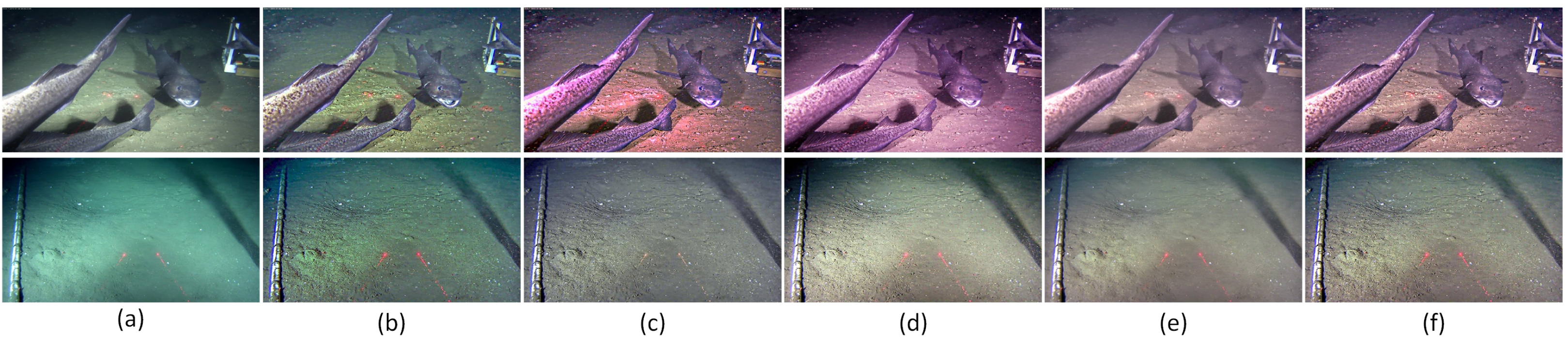

4.4. Comparisons on the RUIE and OceanDark Datasets

4.5. Ablation Study

4.6. Runtime Analysis

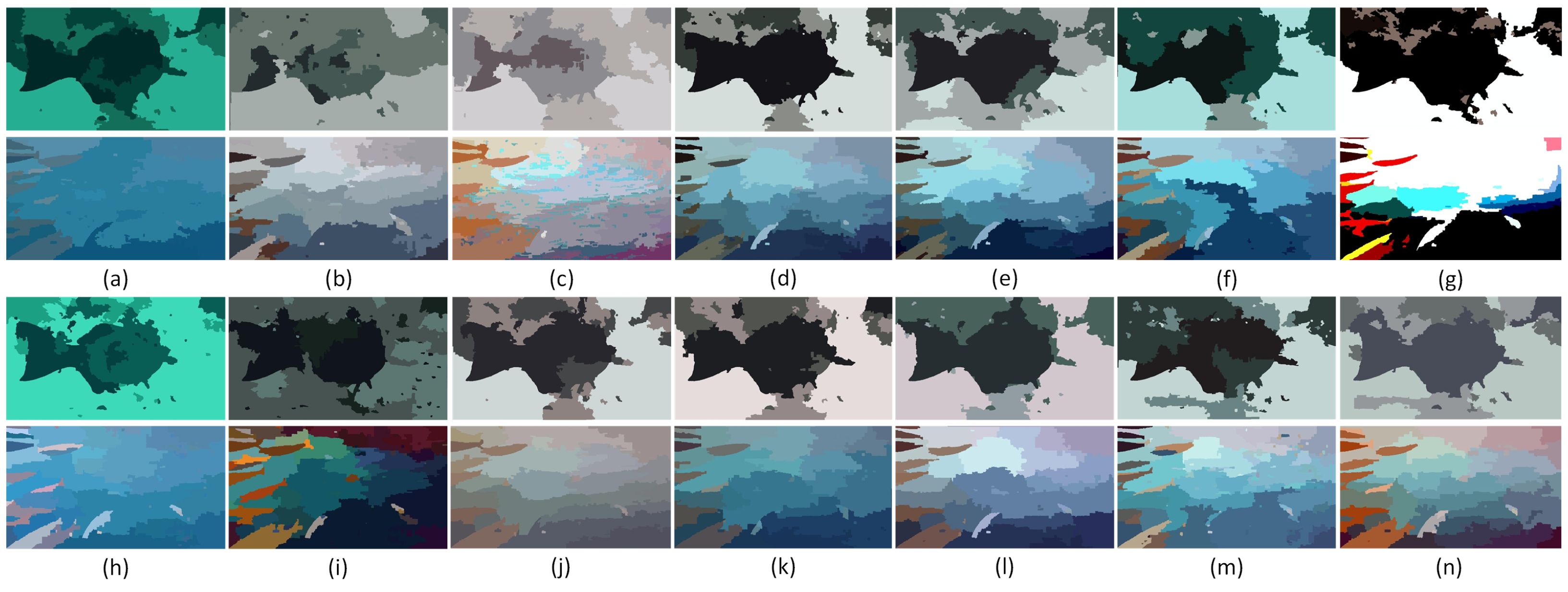

4.7. Application to Image Segmentation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Methods | Raw Image | ACDC | PDRMRV | MMLE | WWPF | MCLA | HLRP | L2UWE | SPDF | UDHTV | HFM | WaterNet | UTransNet | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| T6000 | 23.277 | 28.513 | 12.166 | 27.543 | 26.053 | 23.619 | 20.803 | 12.954 | 24.672 | 10.454 | 12.519 | 25.033 | 23.189 | 11.563 |
| T8000 | 29.081 | 18.630 | 12.051 | 19.048 | 19.416 | 18.551 | 20.427 | 11.163 | 20.238 | 10.633 | 11.696 | 20.417 | 21.948 | 10.249 |
| D10 | 22.189 | 21.644 | 13.536 | 20.604 | 22.151 | 23.253 | 16.617 | 13.158 | 20.903 | 12.477 | 13.344 | 20.462 | 18.716 | 12.254 |
| TS1 | 24.341 | 20.152 | 9.103 | 18.000 | 21.172 | 20.458 | 12.932 | 12.161 | 19.118 | 9.412 | 14.185 | 21.806 | 18.438 | 9.538 |
| W60 | 22.070 | 25.601 | 11.726 | 22.729 | 23.569 | 22.131 | 14.776 | 12.312 | 23.816 | 11.664 | 13.914 | 23.791 | 20.762 | 11.043 |
| Z33 | 23.262 | 23.045 | 13.485 | 26.136 | 25.155 | 22.913 | 36.186 | 15.336 | 24.465 | 14.333 | 13.953 | 24.463 | 23.461 | 12.637 |
| W80 | 26.172 | 24.831 | 11.303 | 21.578 | 23.056 | 21.526 | 16.996 | 10.527 | 20.623 | 11.707 | 13.566 | 22.845 | 20.766 | 10.182 |
| Average | 24.342 | 23.202 | 11.910 | 22.234 | 22.939 | 21.779 | 19.820 | 12.516 | 21.976 | 11.526 | 13.311 | 22.688 | 21.040 | 11.067 |
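The Average row of the table above can be checked by taking the arithmetic mean of the seven per-row values in a column. The following is a minimal sketch for three of the columns; the dictionary layout and the helper name `column_average` are illustrative choices, not part of the original work, and the numbers are copied directly from the table.

```python
from statistics import mean

# Values copied from the table above, in row order
# T6000, T8000, D10, TS1, W60, Z33, W80.
columns = {
    "Raw Image": [23.277, 29.081, 22.189, 24.341, 22.070, 23.262, 26.172],
    "UDHTV": [10.454, 10.633, 12.477, 9.412, 11.664, 14.333, 11.707],
    "Proposed": [11.563, 10.249, 12.254, 9.538, 11.043, 12.637, 10.182],
}

# Averages as reported in the last row of the table.
reported_average = {"Raw Image": 24.342, "UDHTV": 11.526, "Proposed": 11.067}


def column_average(values):
    """Arithmetic mean of one column, rounded to three decimals like the table."""
    return round(mean(values), 3)


for name, values in columns.items():
    print(f"{name}: computed {column_average(values):.3f}, "
          f"reported {reported_average[name]:.3f}")
```

For these three columns the recomputed means agree with the reported Average row to three decimals.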

| Methods | UIEB ↑ | UIEB ↑ | UIEB ↑ | UIEB ↓ | UIEB ↑ | UIEB ↑ | RUIE ↑ | RUIE ↑ | RUIE ↑ | RUIE ↓ | RUIE ↑ | RUIE ↑ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ACDC | 9.762 | 96.073 | 26.429 | 1.056 | 2.447 | 0.526 | 10.526 | 104.784 | 30.907 | 0.903 | 2.586 | 0.553 |
| PDRMRV | 10.332 | 100.615 | 30.126 | 1.163 | 3.449 | 0.581 | 11.484 | 115.715 | 32.455 | 1.186 | 3.328 | 0.572 |
| MMLE | 12.020 | 102.066 | 31.220 | 1.107 | 2.710 | 0.536 | 10.776 | 103.329 | 38.370 | 0.929 | 2.802 | 0.579 |
| WWPF | 11.660 | 105.163 | 27.185 | 1.164 | 2.451 | 0.514 | 11.152 | 112.237 | 37.163 | 0.872 | 2.929 | 0.563 |
| MCLA | 8.165 | 73.561 | 32.536 | 1.412 | 2.175 | 0.529 | 8.116 | 73.130 | 40.154 | 1.536 | 1.814 | 0.502 |
| HLRP | 7.052 | 68.190 | 26.482 | 1.029 | 2.258 | 0.469 | 7.409 | 70.247 | 35.708 | 1.336 | 3.229 | 0.547 |
| L2UWE | 12.565 | 115.816 | 30.183 | 1.364 | 2.018 | 0.374 | 11.337 | 120.940 | 31.037 | 1.349 | 2.267 | 0.401 |
| SPDF | 15.161 | 130.258 | 27.676 | 0.915 | 3.040 | 0.566 | 11.702 | 138.135 | 29.537 | 0.981 | 2.848 | 0.553 |
| UDHTV | 8.748 | 78.461 | 32.715 | 1.146 | 2.769 | 0.551 | 9.017 | 75.398 | 34.126 | 1.406 | 2.533 | 0.527 |
| HFM | 7.260 | 72.009 | 34.247 | 1.391 | 2.326 | 0.492 | 9.443 | 87.596 | 43.533 | 1.548 | 1.922 | 0.516 |
| WaterNet | 10.591 | 90.163 | 29.155 | 1.088 | 2.819 | 0.527 | 11.665 | 108.283 | 30.308 | 0.902 | 3.057 | 0.572 |
| UTransNet | 11.191 | 110.530 | 31.490 | 1.286 | 2.731 | 0.544 | 12.238 | 123.541 | 34.048 | 0.916 | 2.97 | 0.563 |
| Proposed | 14.860 | 128.227 | 32.219 | 0.883 | 3.588 | 0.603 | 13.836 | 133.357 | 45.184 | 0.891 | 3.514 | 0.610 |

| Methods | ↑ | ↑ | ↑ | ↓ | ↑ | ↑ |
|---|---|---|---|---|---|---|
| ACDC | 5.912 | 67.048 | 22.825 | 0.837 | 2.257 | 0.511 |
| PDRMRV | 7.692 | 82.775 | 24.983 | 0.844 | 2.916 | 0.547 |
| MMLE | 9.519 | 87.131 | 26.782 | 0.831 | 2.426 | 0.517 |
| WWPF | 9.385 | 84.269 | 21.329 | 0.841 | 2.269 | 0.491 |
| MCLA | 5.866 | 64.578 | 25.837 | 0.925 | 2.084 | 0.549 |
| HLRP | 5.625 | 61.574 | 22.334 | 1.422 | 2.683 | 0.513 |
| L2UWE | 10.745 | 90.674 | 21.603 | 0.894 | 2.012 | 0.536 |
| SPDF | 13.236 | 116.174 | 22.740 | 0.855 | 2.806 | 0.531 |
| UDHTV | 5.917 | 66.233 | 26.175 | 0.948 | 2.553 | 0.549 |
| HFM | 5.434 | 62.113 | 25.068 | 0.904 | 2.127 | 0.473 |
| WaterNet | 8.379 | 80.158 | 23.256 | 0.836 | 3.066 | 0.577 |
| UTransNet | 9.016 | 86.441 | 24.698 | 0.859 | 2.511 | 0.528 |
| Proposed | 12.966 | 103.368 | 26.427 | 0.817 | 3.115 | 0.593 |

| Methods | ↑ | ↑ | ↑ | ↓ | ↑ | ↑ |
|---|---|---|---|---|---|---|
| -w/o CC | 8.531 | 78.072 | 18.194 | 1.792 | 1.363 | 0.254 |
| -w/o LCR | 10.273 | 93.785 | 21.869 | 1.207 | 3.082 | 0.586 |
| -w/o GBA | 9.385 | 87.156 | 25.806 | 1.386 | 2.238 | 0.318 |
| -w/o CAE | 6.249 | 52.328 | 26.063 | 0.941 | 2.725 | 0.572 |
| Proposed | 12.966 | 103.368 | 26.427 | 0.817 | 3.115 | 0.593 |
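One way to read the ablation table above is to express each ablated variant as a relative drop from the full method. The sketch below does this for the first metric column only, which the header marks as higher-is-better; the metric's name is not recoverable from the table, and the variable and function names are illustrative assumptions.

```python
# First metric column (marked "↑", higher is better) from the ablation table.
# The metric name is not given in the table header, so it is left unnamed here.
full_method = 12.966
ablated = {
    "w/o CC": 8.531,
    "w/o LCR": 10.273,
    "w/o GBA": 9.385,
    "w/o CAE": 6.249,
}


def relative_drop(full, variant):
    """Percentage by which the variant falls below the full method."""
    return (full - variant) / full * 100.0


drops = {name: relative_drop(full_method, value) for name, value in ablated.items()}

# Print variants from the largest to the smallest drop.
for name, drop in sorted(drops.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {drop:.1f}% below the full method")
```

On this column, removing CAE produces the largest drop and removing LCR the smallest. The ordering can differ on the other columns, so this covers one metric only and does not rank the components overall.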

| Image Resolution | ACDC | PDRMRV | MMLE | WWPF | MCLA | HLRP | L2UWE | SPDF | UDHTV | HFM | WaterNet | UTransNet | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 400 × 300 | 0.72 | 15.77 | 0.16 | 0.42 | 2.26 | 1.27 | 5.15 | 1.43 | 2.44 | 1.20 | 0.12 | 0.27 | 0.69 |
| 1280 × 720 | 3.08 | 137.50 | 1.29 | 2.83 | 12.60 | 5.26 | 38.20 | 9.37 | 19.94 | 5.25 | 1.17 | 1.53 | 4.51 |
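To put the runtime table above in context, the growth in runtime between the two resolutions can be compared with the growth in pixel count. The sketch below does this for three of the methods. The table does not state a unit, so only unitless ratios are computed, and the names `runtime`, `pixel_ratio`, and `runtime_ratio` are illustrative.

```python
# Runtimes copied from the table above as (400 x 300, 1280 x 720) pairs.
# The table does not state the unit, so only ratios are computed.
runtime = {
    "PDRMRV": (15.77, 137.50),
    "WaterNet": (0.12, 1.17),
    "Proposed": (0.69, 4.51),
}

small_pixels = 400 * 300      # 120,000 pixels
large_pixels = 1280 * 720     # 921,600 pixels
pixel_ratio = large_pixels / small_pixels


def runtime_ratio(method):
    """How many times slower a method is on the larger image."""
    small, large = runtime[method]
    return large / small


print(f"pixel count grows by a factor of {pixel_ratio:.2f}")
for method in runtime:
    print(f"{method}: runtime grows by a factor of {runtime_ratio(method):.2f}")
```

With these numbers the pixel count grows by a factor of 7.68, while the runtime of the proposed method grows by a factor of about 6.5. Two data points are too few to establish a scaling law, so this is a rough indication only.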


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Liu, W.; Wang, J.; Yang, Y. Progressive Color Correction and Vision-Inspired Adaptive Framework for Underwater Image Enhancement. J. Mar. Sci. Eng. 2025, 13, 1820. https://doi.org/10.3390/jmse13091820
