1. Introduction

With the accelerating development of global marine resource exploitation and the continuous growth of maritime transportation and fishery activities [1,2,3], maritime target monitoring has become increasingly critical for national security, marine law enforcement, environmental protection, and maritime traffic management [4,5]. Particularly in complex oceanic environments, the capability to achieve all-weather, high-efficiency, and high-precision automatic detection of key targets such as ships has emerged as a core technological requirement for building intelligent ocean monitoring systems and enhancing comprehensive maritime governance [6,7,8]. Synthetic Aperture Radar (SAR), owing to its advantages of being unaffected by weather and illumination conditions and its long-range imaging capability, has been widely employed in maritime target monitoring tasks [9,10,11]. Consequently, developing ship detection algorithms tailored for small targets in SAR imagery is essential for advancing intelligent remote sensing interpretation and enhancing maritime surveillance efficiency.

As a fundamental research direction in computer vision, object detection technology has evolved along two major paradigms: two-stage and one-stage detectors. Two-stage detectors, exemplified by Faster R-CNN, first generate region proposals through a Region Proposal Network (RPN), followed by the classification and regression of each candidate region [12,13]. Although such approaches offer reliable detection accuracy, their computational complexity limits real-time applicability. In contrast, one-stage detectors, represented by the YOLO series, directly predict object locations and classes in an end-to-end fashion, striking a better balance between inference speed and accuracy [14]. In particular, the recently released YOLOv11 model [15], which incorporates an anchor-free mechanism and an optimized feature pyramid structure, further enhances detection performance for multi-scale targets.

In the specific context of SAR image detection, researchers encounter distinctive challenges. The intrinsic characteristics of SAR imagery, such as speckle noise, geometric distortions, and complex sea clutter interference [16,17], significantly degrade the performance of traditional methods. To address this, Sun et al. proposed MSDFF-Net [18], which employs multi-scale large-kernel convolutional blocks to enhance multi-scale feature representation, thereby improving ship feature extraction under noisy backgrounds. They further introduced a dynamic feature fusion block and a Gaussian probability distribution loss to alleviate scale imbalance and better model SAR-specific scattering characteristics. Wang et al. introduced an improved version of YOLOv5 by incorporating an asymmetric pyramid module and an SIM attention mechanism to mitigate near-shore background interference [19]. Luo et al. proposed a lightweight SAR ship detection model named SHIP-YOLO [20], which is developed based on the YOLOv8n architecture. In this model, standard convolutional layers in the neck are substituted with GhostConv modules to reduce the computational load, and a re-parameterized RepGhost bottleneck is embedded within the C2f module to enhance feature representation. Zheng et al. proposed YOLO-Ssboat [21], a YOLOv8-based detector enhanced with C2f_DCNv3, MSWPN, and Dyhead_v3 to improve small ship detection under complex sea conditions, with added gradient-based wake suppression and adversarial training for robustness. The model integrates fine-grained feature extraction, multi-scale feature fusion, and multi-attention detection to address small-target challenges, while the gradient flow mechanism and adversarial training improve detection stability under wake interference. Shen et al. proposed DS-YOLO [22], a SAR ship detection model built on YOLOv11, which incorporates a space-to-depth convolution to enhance the perception of small-scale targets and a pyramid-style cross-stage attention mechanism to strengthen multi-scale feature extraction while suppressing background interference. He et al. proposed AC-YOLO [23], a lightweight SAR ship detection model that addresses the challenges of multi-scale targets, indistinct contours, and complex backgrounds. The model employs cross-scale feature fusion and a hybrid attention module to enhance feature representation while maintaining low computational cost. In addition, a new bounding box regression loss, MPDIoU, is introduced to improve localization accuracy. Tang et al. proposed BESW-YOLO [24], a lightweight multiscale ship detection model based on YOLOv8n. It introduces a bidirectional and multiscale attention feature pyramid network to enhance cross-scale feature fusion and an EMSC-C2f module to improve feature extraction while reducing parameters.

However, the aforementioned improvements have not fully addressed several intrinsic challenges associated with ship targets in SAR imagery. These targets typically exhibit highly variable geometric shapes, significant scale distribution disparities, and weak scattering characteristics, which make them easily confused by interference such as sea clutter, wave textures, and shoreline structures in high-resolution backgrounds. This low signal-to-noise imaging nature reduces the discriminability between the targets and background, especially in near-shore areas or under complex sea conditions, where ships are often obscured or appear with blurred contours, severely degrading the precision and robustness of detection algorithms. Moreover, SAR images are inherently affected by speckle noise, a type of multiplicative noise that is unevenly distributed across target and background regions, further exacerbating the uncertainty in the feature extraction process. Most critically, existing methods rarely exploit the spatial frequency variations intrinsic to SAR imagery, which carry valuable information about structural edges and background smoothness, leaving an important perspective underexplored in ship detection.

To address these challenges, we propose an enhanced detection model tailored for SAR ship detection, termed YOLO-LDFI. Built upon YOLOv11n, this model incorporates targeted improvements across four key components—backbone network, attention mechanism, detection head, and loss function—to boost feature representation capacity and robustness. Unlike existing approaches that primarily focus on spatial or semantic feature optimization, our model explicitly integrates spatial frequency information into the detection pipeline, enabling more discriminative feature extraction at ship boundaries and suppressing background clutter, thereby introducing a novel perspective for SAR ship detection. Notably, despite the integration of multiple performance-enhancing modules, YOLO-LDFI maintains a level of lightweight design that is comparable to YOLOv11n, with only 2.63 M parameters and 6.7 GFLOPs of computational cost, making it highly deployable and practically applicable.

First, the model incorporates Linear Deformable Offset Convolution (LDConv), which enhances spatial adaptability by introducing linearly learnable offset fields into the sampling process. Unlike conventional fixed-grid convolution, LDConv adaptively aligns sampling points with the geometric contours of ship targets, effectively mitigating shape distortions caused by variations in imaging angles and occlusions. This mechanism ensures the accurate extraction of highly irregular ship structures and enhances the model’s capability to perceive fine-grained spatial deformations.

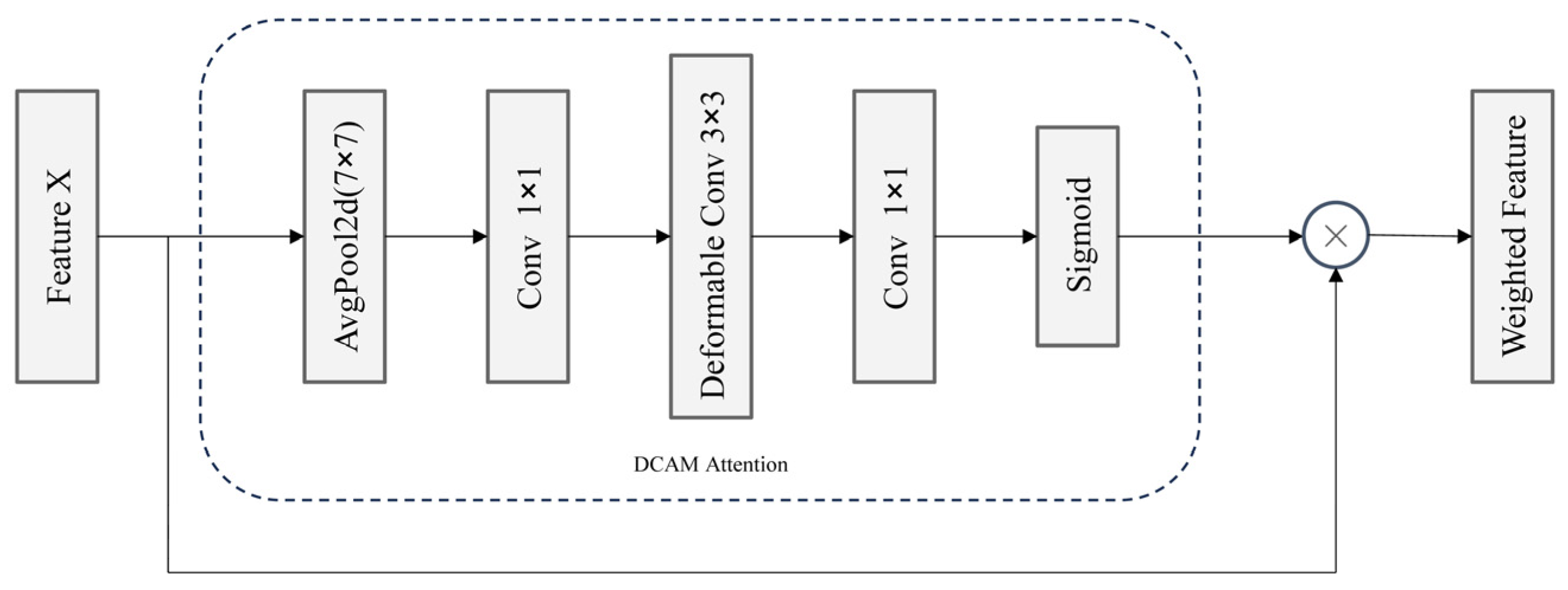

Second, we propose a Deformable Context-Aware Attention Mechanism (DCAM) to enhance spatial and contextual representation capabilities. Leveraging deformable convolutional operations, DCAM can capture non-rigid ship structures while suppressing irrelevant background interference. Its adaptive sampling strategy enables flexible focus on critical regions such as ship boundaries while ignoring misleading artifacts, thereby improving localization accuracy in complex maritime scenes.

Furthermore, the original detection head in YOLOv11 is restructured by introducing Frequency-Adaptive Dilated Convolution (FADC) to replace the standard Depthwise Separable Convolution (DWConv), resulting in a novel detection head named FAHead. FADC is designed to address the limited adaptability of fixed dilation rates when handling local structural variations. While conventional dilated convolution enlarges the receptive field, its inability to adapt to frequency variations across the image limits its effectiveness in capturing both detailed textures and broader contextual cues. FAHead resolves this by incorporating a frequency-responsive adaptive mechanism that dynamically adjusts dilation scales based on local frequency distributions. This allows the model to capture discriminative structural features of ship targets and effectively leverages spectral characteristics to enhance target detection performance in complex SAR environments, enhancing the contextual awareness of the detection head.

In addition, to mitigate misalignment and scale variation issues in bounding box regression, this work introduces the Inner-EIoU loss function as a more geometry-aware optimization strategy. While retaining EIoU’s advantages—such as penalizing center point distance, aspect ratio deviation, and overlap constraints—Inner-EIoU introduces the intersection area between the predicted and ground-truth boxes as a core metric. Unlike EIoU, which primarily focuses on external box differences, Inner-EIoU evaluates the internal structural alignment between the predicted and actual boxes, thereby guiding the model to converge more precisely to true object boundaries during training. This is especially beneficial in SAR imagery, where targets often exhibit blurred edges, low contrast, or occlusions. The proposed loss function significantly reduces bounding box regression errors and enhances the stability and accuracy of object localization.

Experiments conducted on the publicly available SAR ship detection datasets HRSID [25] and SSDD [26] demonstrate that the proposed YOLO-LDFI model outperforms existing mainstream methods in both detection accuracy and robustness. By integrating four key structural enhancements (LDConv, DCAM, FAHead, and Inner-EIoU), the model provides effective solutions to several critical challenges in SAR-based remote sensing scenarios, including target deformation and pose diversity, background interference and artifact suppression, scale variation and insufficient structural feature extraction, as well as inaccurate boundary localization and regression bias. Notably, these improvements are achieved while maintaining a lightweight architecture, ensuring the model’s practicality for real-world deployment.

2. Materials and Methods

2.1. YOLOv11

YOLOv11 [15] is an iteration of the YOLO series, officially released on 30 September 2024. Building upon the end-to-end detection framework, it achieves a superior trade-off between detection accuracy and inference speed. The model introduces a series of architectural optimizations that enhance its generalization capability in complex scenes and improve convergence behavior during training. The overall network architecture of YOLOv11 consists of three major components: an improved backbone network, an enhanced feature fusion module, and a lightweight decoupled detection head.

In the backbone, YOLOv11 replaces the C2f module from YOLOv8 with a newly designed C3k2 module. By introducing a mechanism of variable convolutional kernel sizes, this module enables the network to dynamically select appropriate kernel sizes at runtime, thereby accommodating diverse feature extraction needs. This design improves the model’s ability to capture target structural variations while maintaining computational efficiency. In the neck structure, YOLOv11 expands the original C2f module into C2PSA, which incorporates the Pyramid Squeeze Attention (PSA) mechanism to enhance multi-scale feature fusion. The PSA module employs a pyramidal channel compression and attention allocation strategy that optimizes the gradient propagation path, significantly boosting the network’s responsiveness to objects of varying scales, which is a crucial advantage for remote sensing scenarios characterized by complex backgrounds and large-scale discrepancies.

For the detection head design, YOLOv11 adopts a decoupled classification and regression architecture, where each branch incorporates two Depthwise Separable Convolutions (DWConv) to reduce parameter and computation overhead while maintaining stable detection performance. Overall, YOLOv11 continues the YOLO family’s philosophy of high efficiency, lightweight design, and ease of deployment. It achieves state-of-the-art performance across multiple public benchmarks and serves as a solid foundation for further model enhancements in downstream tasks.
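The parameter saving attributed to depthwise separable convolution can be checked with simple arithmetic. The sketch below assumes bias-free layers and illustrative channel counts (64 input channels, 64 output channels, a 3 × 3 kernel); these values and the function names are chosen for illustration only and are not taken from the YOLOv11 configuration.

```python
def conv_params(c_in, c_out, k):
    """Weight count of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def dwsep_params(c_in, c_out, k):
    """Weight count of a depthwise k x k convolution (one filter per input
    channel) followed by a 1 x 1 pointwise convolution (bias ignored)."""
    return c_in * k * k + c_in * c_out


if __name__ == "__main__":
    # Illustrative channel counts, not the actual head configuration.
    standard = conv_params(64, 64, 3)
    separable = dwsep_params(64, 64, 3)
    print(standard, separable, standard / separable)
```

Under these assumptions the separable layer needs roughly one eighth of the weights of the standard layer, which is consistent with the lightweight design goal described above.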

Notably, the decoupled detection head and multi-scale output mechanism of YOLOv11 align well with the specific demands of SAR image detection, particularly in terms of fine-grained boundary perception and scale sensitivity. Moreover, its lighter architecture compared to earlier versions facilitates deployment in resource-constrained environments, offering strong scalability and potential for future improvements. This work builds upon YOLOv11’s core structure and introduces a series of targeted enhancement modules specifically tailored to the challenges of SAR-based object detection.

2.2. YOLO-LDFI

To address the challenges posed by ship target detection in SAR imagery—such as geometric shape variability, significant scale differences, small object loss, and severe background interference—this paper proposes a lightweight and structurally adaptive detection model, termed YOLO-LDFI. The model is designed with a comprehensive focus on architectural flexibility, feature representation capability, and boundary regression accuracy.

First, to enhance the model’s adaptability to geometric deformations caused by target rotations and partial occlusions, we introduce the Linear Deformable Offset Convolution (LDConv) module. By dynamically learning offset values, LDConv adjusts the position of each sampling point to form a deformable sampling grid that better aligns with the true contours of ships. This significantly improves the network’s capacity to capture shape-variant targets.

Second, to further strengthen spatial modeling and contextual representation during feature extraction, we design a Deformable Context-Aware Module (DCAM). This module combines deformable convolution with contextual enhancement mechanisms, enabling the network to accurately localize ship boundaries and suppress sea clutter interference under complex backgrounds.

Furthermore, considering the highly dynamic spectral characteristics of SAR images, such as abrupt frequency transitions and uneven frequency distribution, we propose a Frequency-Adaptive Head (FAHead) module. FAHead replaces the standard detection head’s DWConv with a Frequency-Adaptive Dilated Convolution (FADC). This enables the model to adjust its receptive field dynamically according to local frequency information, without significantly increasing computational cost. The FADC mechanism enhances robustness in regions with high-frequency texture discontinuities and improves the model’s ability to retain fine-grained edge details in cluttered scenes or when small targets are adjacent to background structures.

Finally, to mitigate the optimization gradient deficiency of traditional IoU-based loss functions under blurred boundaries and partial overlaps in SAR imagery, we introduce an improved Inner Enhanced IoU (Inner-EIoU) loss. This loss function jointly considers center point distance, aspect ratio discrepancy, and intersection-over-union (IoU) while further incorporating a containment constraint that reflects the degree of overlap between the predicted and ground-truth boxes. This provides a more stable optimization signal when targets exhibit weak boundary contrast or ambiguous structures, leading to more accurate localization and faster convergence.

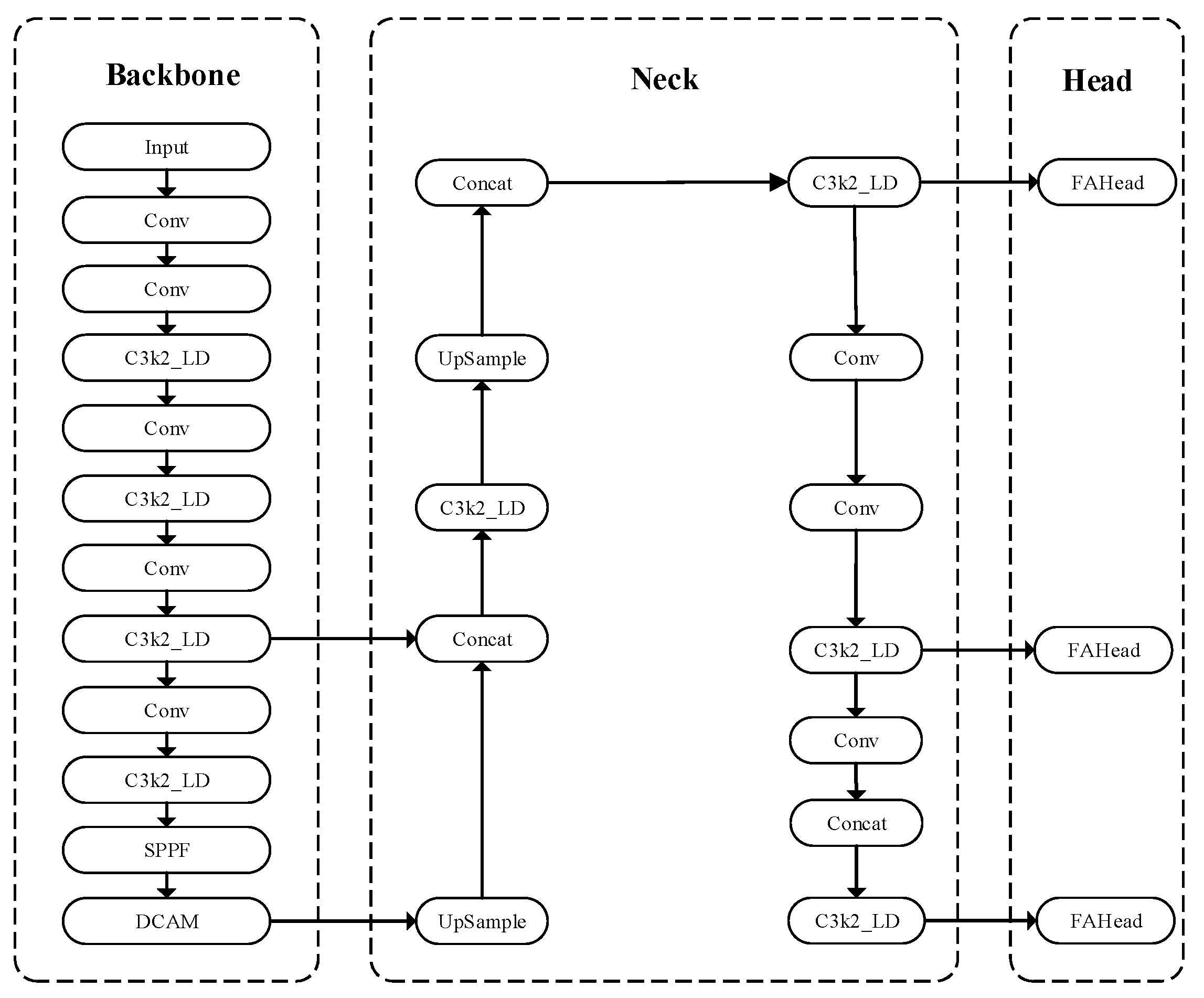

Collectively, these four enhancements (LDConv, DCAM, FAHead, and Inner-EIoU) synergistically improve the model’s adaptability to complex scenes and deformed targets in SAR images while maintaining its lightweight nature. The overall architecture of the proposed YOLO-LDFI model is illustrated in Figure 1.

2.2.1. LDConv

In SAR ship target detection tasks, objects often exhibit diverse geometric structures. Using the HRSID dataset as an example, ship targets vary from elongated ocean-going vessels to small, near-rectangular or point-like boats. Such geometric diversity makes the standard convolution operations in the backbone of YOLOv11 ill-suited to capturing target contours, especially for small ships occupying only a few dozen pixels, where sampling points often fall on the background. In addition, abrupt backscatter changes between ship edges and the surrounding sea produce discontinuous edge features, increasing the risk of small targets being missed or confused.

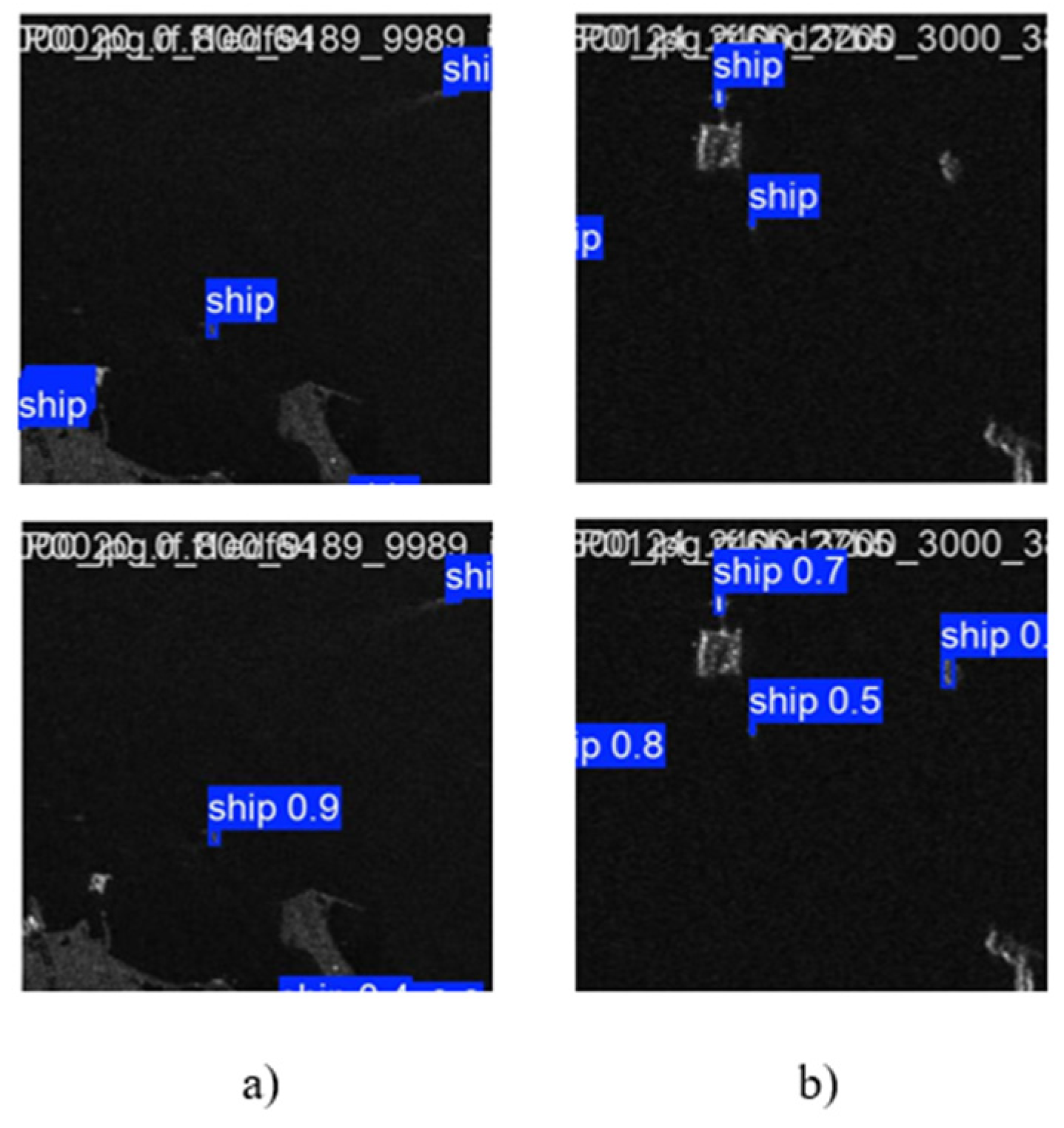

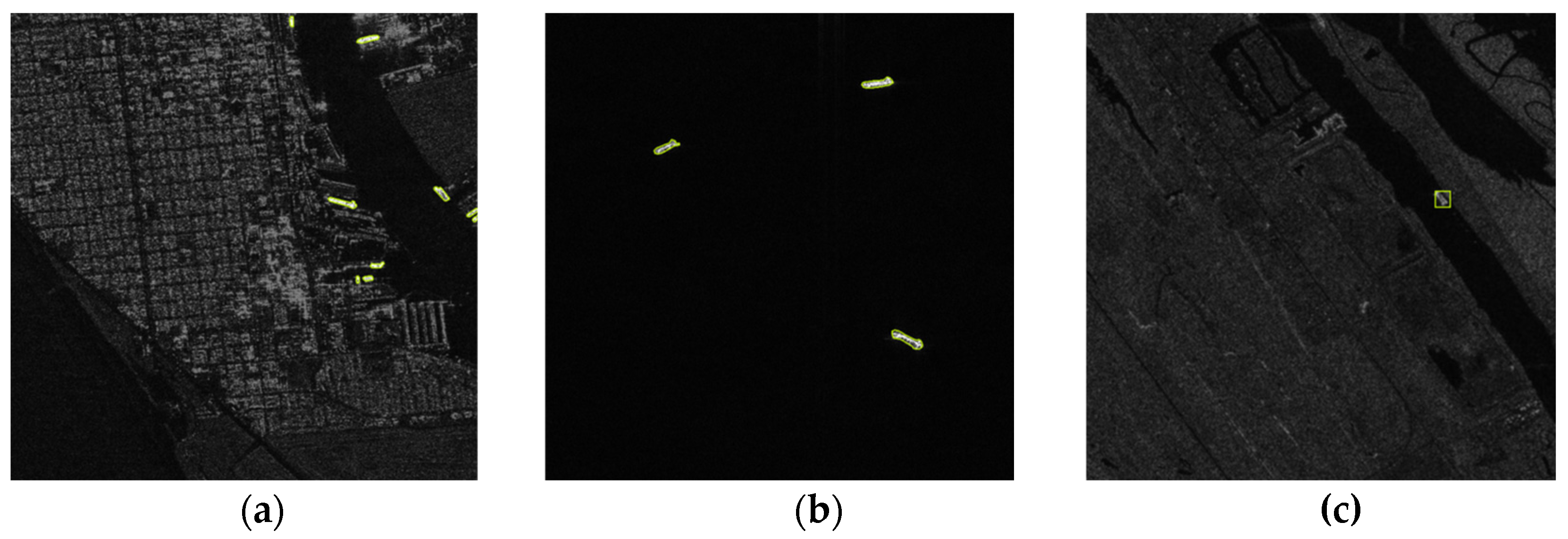

As shown in Figure 2, these limitations of YOLOv11 are clearly illustrated. In panel (a), a small boat in the lower-left corner is missed, demonstrating the inability of standard convolutions to capture small-scale targets. In panel (b), an elongated island is misclassified as a ship, indicating that blurred edges and geometric misalignment lead to feature confusion that subsequent detection heads cannot fully resolve.

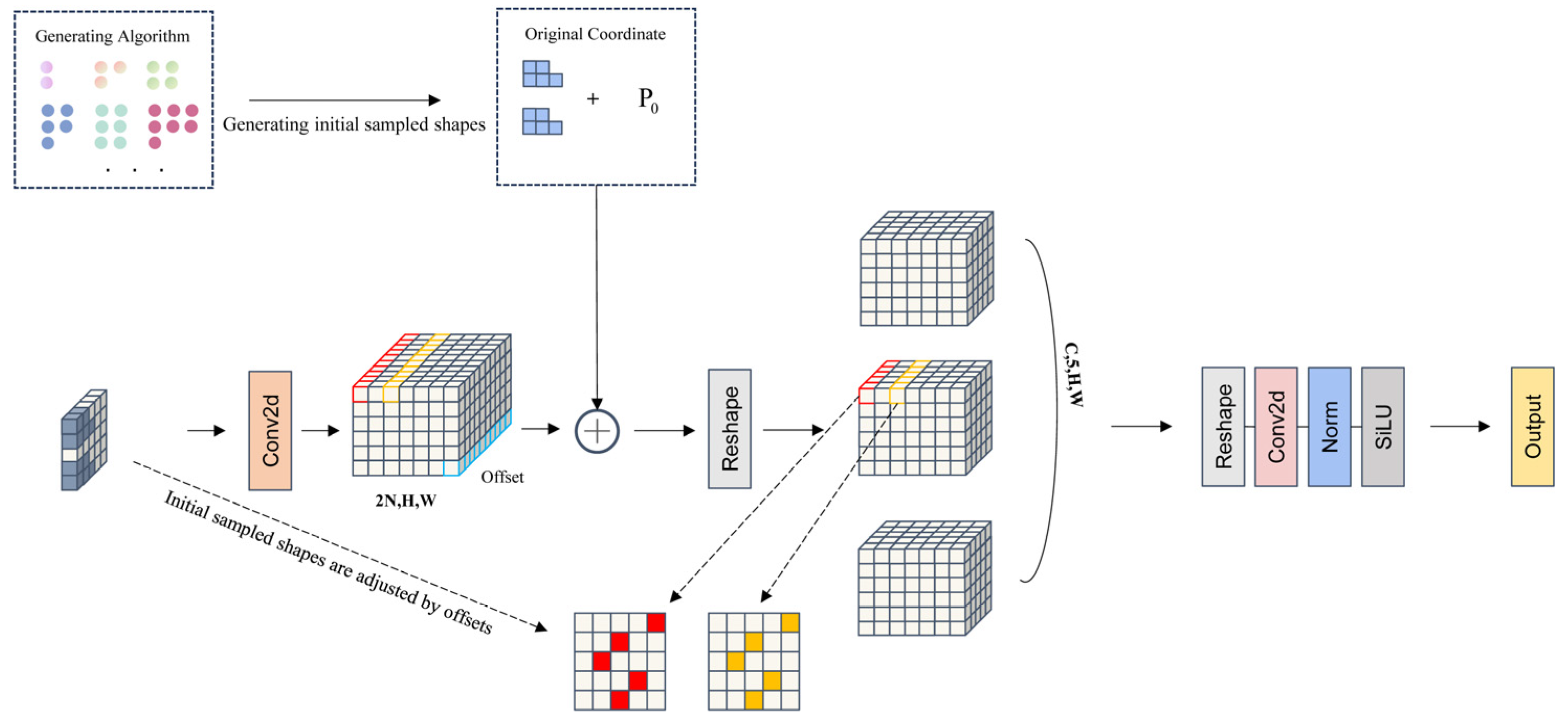

To address this, we introduce a Linear Deformable Convolution (LDConv) module, which enhances the model’s adaptability to ship targets. Notably, LDConv introduces only linearly scaled additional parameters, thereby maintaining computational efficiency. The architecture of LDConv is illustrated in Figure 3.

LDConv processes the feature extraction in three stages:

First, it generates initial sampling coordinates for arbitrarily shaped convolutional kernels based on a proposed coordinate generation algorithm. Using the top-left corner of the feature map (0,0) as the origin, the algorithm partitions the region into a regular grid and supplements it with irregular sampling points. This allows for entirely flexible sampling configurations, enabling the formation of geometrically elongated sampling patterns optimized for the aspect ratios of ship targets. The initial sampling is defined in Equation (1):

$$\mathrm{Conv}(P_0) = \sum_{P_N \in R} w \cdot x(P_0 + P_N) \quad (1)$$

Equation (1) defines three key components: $R$ denotes the set of coordinates for convolution sampling positions, $w$ corresponds to the convolutional kernel’s weight, and $x(P_0 + P_N)$ indicates the pixel intensity at the location $(P_0 + P_N)$.

Second, LDConv introduces learnable offset parameters to dynamically adjust the shape of the sampling grid. Each sampling point can adaptively shift based on the local content of the feature map. This mechanism constructs a deformation-aware sampling pattern that tightly aligns with the actual geometry of ship targets, effectively mitigating shape distortions caused by variations in imaging angles or occlusions.

Third, LDConv performs a feature resampling operation on the irregular sampling grid, applying the learned deformation to the input feature map. A convolution operation is then applied to the reorganized features, followed by Batch Normalization to stabilize the feature distribution. Finally, an SiLU activation function is employed for non-linear transformation. The smooth nature of the SiLU function preserves the continuity of features, which is particularly beneficial for capturing subtle feature variations of ship targets in SAR images.
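The first two stages can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the module used in the paper: the helper names (`initial_coords`, `bilinear`, `ldconv_at`) are hypothetical, the leftover-point rule (a partial extra row) is one plausible reading of "a regular grid supplemented with irregular sampling points", the offsets are passed in by hand rather than predicted by a learned layer, and Batch Normalization and SiLU are omitted.

```python
import math

import numpy as np


def initial_coords(num_points):
    """Initial sampling coordinates for a kernel with `num_points` samples:
    a regular grid anchored at (0, 0) plus a partial extra row for leftovers.
    Returns an array of shape (num_points, 2) holding (row, col) pairs."""
    base = round(math.sqrt(num_points))
    rows, rem = divmod(num_points, base)
    coords = [(r, c) for r in range(rows) for c in range(base)]
    coords += [(rows, c) for c in range(rem)]
    return np.array(coords, dtype=np.float64)


def bilinear(feat, y, x):
    """Bilinearly interpolate a 2-D map at fractional (y, x).
    Positions outside the map contribute zero."""
    h, w = feat.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    val = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                val += feat[yy, xx] * (1 - abs(y - yy)) * (1 - abs(x - xx))
    return val


def ldconv_at(feat, p0, weights, offsets):
    """Response at position p0: sum_n w_n * x(p0 + p_n + offset_n).
    `offsets` has shape (len(weights), 2); in a trained model it would be
    predicted from the feature map, here it is supplied by the caller."""
    coords = initial_coords(len(weights)) + offsets
    return sum(w * bilinear(feat, p0[0] + dy, p0[1] + dx)
               for w, (dy, dx) in zip(weights, coords))


if __name__ == "__main__":
    feat = np.arange(25, dtype=np.float64).reshape(5, 5)
    weights = np.ones(5)                       # five sampling points
    no_shift = np.zeros((5, 2))
    half_right = np.tile([0.0, 0.5], (5, 1))   # shift every point 0.5 px right
    print(initial_coords(5))
    print(ldconv_at(feat, (1, 1), weights, no_shift))
    print(ldconv_at(feat, (1, 1), weights, half_right))
```

With zero offsets the operation reduces to a fixed, irregularly shaped kernel; non-zero offsets move each sampling point independently, which is what lets the pattern follow an elongated ship contour.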

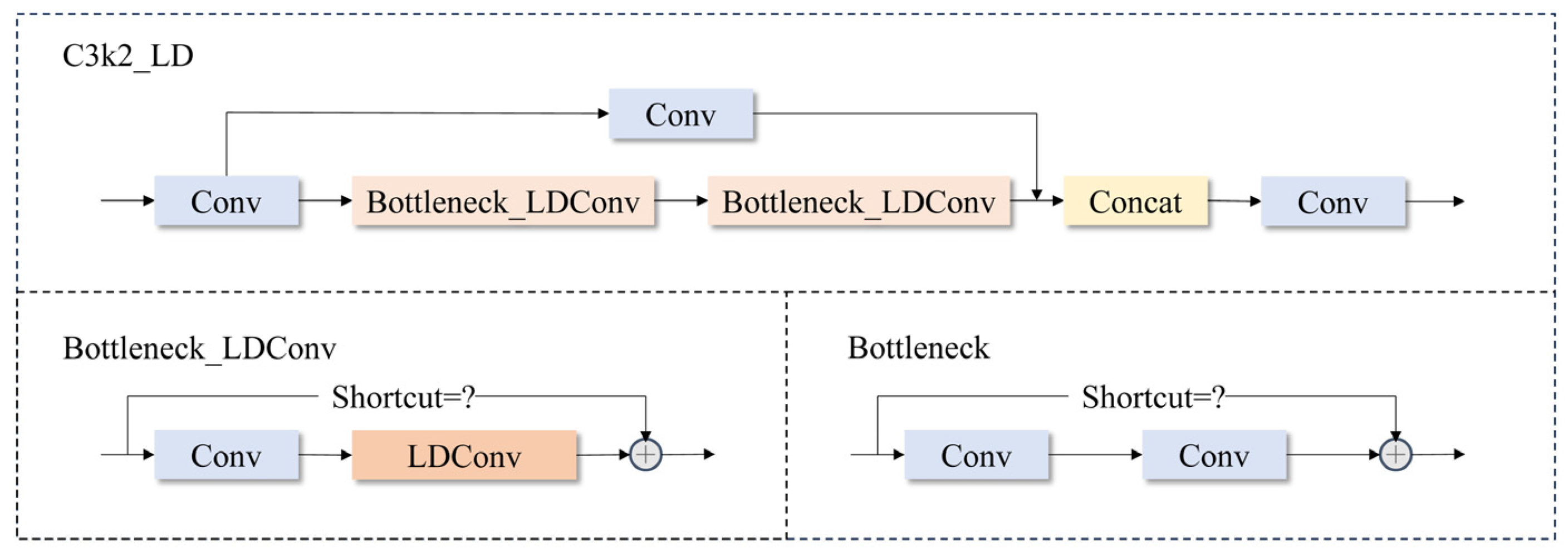

Building upon this, we further embed the LDConv module into the C3k2 structure and propose an integrated architecture termed C3k2_LD, aimed at enhancing the model’s capability for fine-grained feature modeling and representation efficiency.

Specifically, the C3k2_LD module adopts C2f as its basic framework while retaining its dual-branch structure. The main branch includes a switching mechanism controlled by the parameter c3k, which determines the type of submodule to be used. When c3k = False, the main branch employs a modified lightweight bottleneck unit, Bottleneck_LDConv, which introduces LDConv to replace the second convolutional layer in the standard residual bottleneck block.

Bottleneck_LDConv first compresses the channel dimension via a 1 × 1 convolution and then utilizes Linear Deformable Convolution (LDConv) to extract features representing spatial structural variation. Finally, a shortcut connection is applied conditionally, depending on whether the input and output channels are aligned. This design maintains low computational overhead while significantly enhancing the network’s ability to model local features such as edges and structural details. The overall structure of the C3k2_LD module is illustrated in Figure 4.

2.2.2. Deformable Context-Aware Module

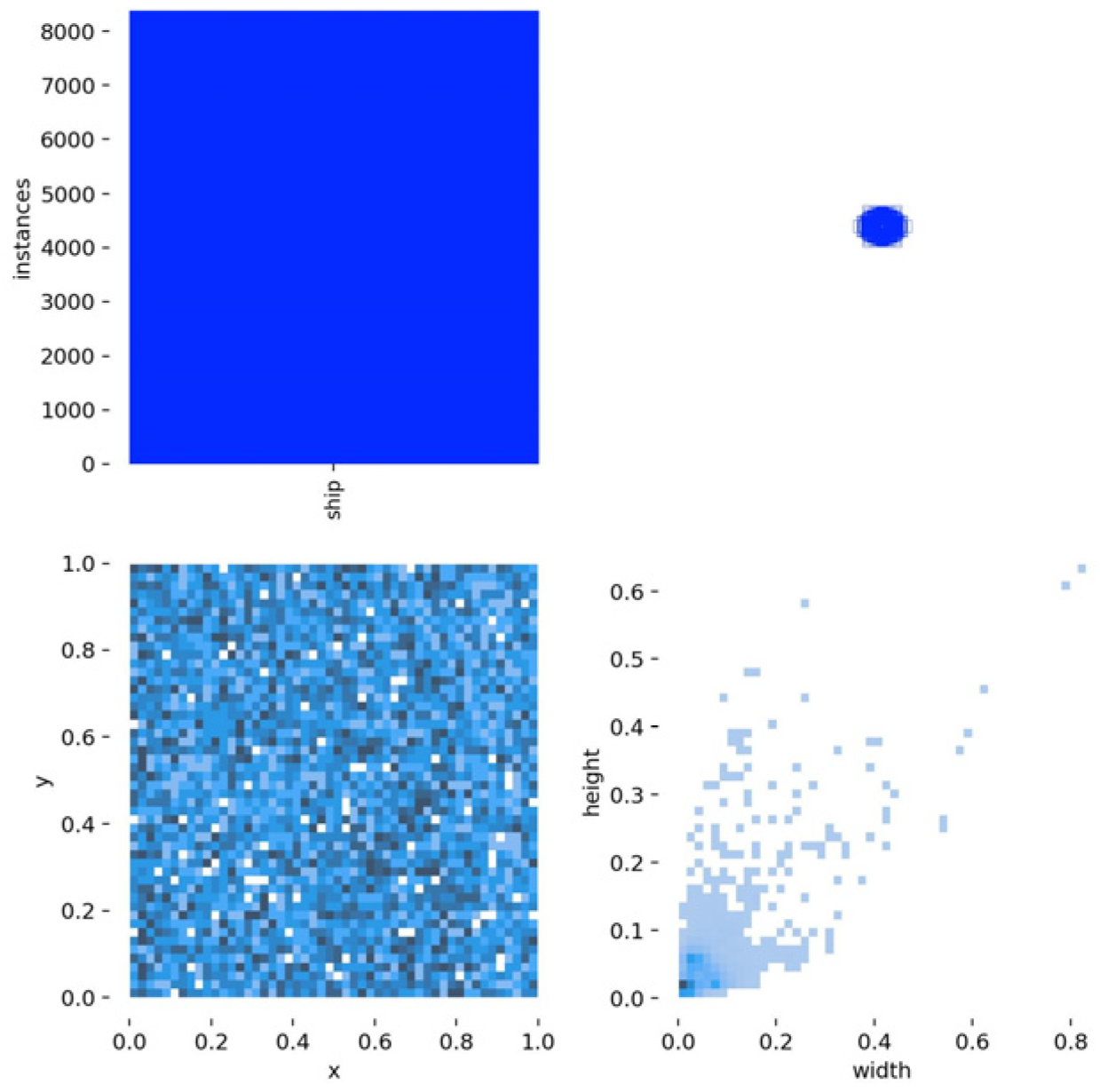

Figure 5 illustrates the distribution of ship instances in the HRSID dataset, which can reflect the common characteristics in SAR ship images. As shown, most ship targets exhibit a highly imbalanced aspect ratio, with widths generally much smaller than lengths, and their sizes predominantly fall in the small-to-medium scale range. Additionally, ship positions are scattered across the image plane without a clear spatial prior, while background regions such as sea clutter and coastal zones occupy the majority of the image. These characteristics lead to two challenges: (i) the elongated and small-scale shapes increase the risk of feature aliasing, making ships difficult to separate from surrounding noise; and (ii) the lack of positional regularity requires the network to dynamically emphasize discriminative structural cues rather than relying on fixed spatial priors.

To mitigate these issues, we design a novel attention mechanism named Deformable Context-Aware Module (DCAM). Inspired by deformable convolutions [27], which enable spatially adaptive sampling and flexible alignment with irregular object geometries, DCAM leverages these properties to better accommodate the structural variability of ship targets in SAR imagery. This module is designed to better capture spatial structures and contextual relationships during feature extraction, thereby improving detection accuracy and robustness under complex imaging conditions. The structure of the DCAM is illustrated in Figure 6.

Unlike conventional attention mechanisms that rely on static convolutional kernels within fixed receptive fields to model local responses, DCAM incorporates a dual mechanism of global context modeling and spatially adaptive sampling. First, a sliding average pooling operation with a kernel size of 7 is applied to the input feature map to aggregate contextual information. This step enhances the model’s ability to perceive regional statistical trends without introducing additional parameters, thereby enriching global semantic awareness. Subsequently, a convolution layer is applied to the pooled feature map to perform channel transformation and feature fusion while preserving spatial structural information.

On top of this, the core innovation of DCAM lies in the introduction of a Deformable Convolution operation. This mechanism enables the network to learn geometric offsets for each spatial position within the input feature, dynamically adjusting the sampling grid of the convolution kernel. By doing so, DCAM breaks free from the limitations of regular grid sampling and is particularly effective in capturing irregular contours, rotated shapes, and partially occluded targets—frequent occurrences in SAR-based ship detection tasks.

Finally, another convolution layer is applied to further refine the deformable features, followed by a Sigmoid activation function to generate the final attention map. The Sigmoid function normalizes responses across spatial positions and channels into the [0, 1] interval, thereby enhancing salient regions and suppressing background noise. This improves feature selectivity and expressive capacity, ultimately boosting the model’s sensitivity to complex targets.

Let the input feature map be denoted as $X$. The DCAM first aggregates contextual information using an average pooling operation:

$$X_{\mathrm{pool}} = \mathrm{AvgPool}_{7 \times 7}(X)$$

Then, two convolution layers and one deformable convolution (denoted as DCN) are applied to extract structural context features and generate the attention map $A$:

$$A = \sigma\left(\mathrm{Conv}\left(\mathrm{DCN}\left(\mathrm{Conv}\left(X_{\mathrm{pool}}\right)\right)\right)\right)$$

where $\sigma$ denotes the Sigmoid activation function, and DCN is the deformable convolution operator.

The final output feature map $Y$ is obtained by applying the attention map $A$ to the original input:

$$Y = X \odot A$$

where ⊙ denotes element-wise multiplication, enabling the network to focus more on semantically meaningful regions.

Through this design, the DCAM module not only enhances the model’s perception of complex ship contours but also significantly improves feature discriminability under varying backgrounds, providing more stable and distinctive feature representations for subsequent detection heads.
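The data flow of DCAM (pooling, convolution, deformable convolution, convolution, Sigmoid, gating) can be sketched with NumPy. Several simplifications are assumptions made for brevity: both convolutions are taken to be 1 × 1, the pooling uses stride 1 with zero padding, and the deformable convolution is replaced by a pluggable `spatial_op` that defaults to the identity. The sketch therefore shows the gating structure only, not the deformable sampling itself, and all function names are hypothetical.

```python
import numpy as np


def avg_pool_same(x, k=7):
    """Stride-1 average pooling with zero padding; x has shape (C, H, W)."""
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros_like(x, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += xp[:, dy:dy + x.shape[1], dx:dx + x.shape[2]]
    return out / (k * k)


def conv1x1(x, w):
    """Pointwise convolution; w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def dcam_sketch(x, w1, w2, spatial_op=lambda t: t):
    """Pool -> conv -> spatial_op -> conv -> sigmoid, then gate the input.
    `spatial_op` stands in for the deformable convolution (identity here)."""
    context = conv1x1(avg_pool_same(x), w1)
    attention = sigmoid(conv1x1(spatial_op(context), w2))
    return x * attention, attention


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 8, 8))
    y, a = dcam_sketch(x, rng.normal(size=(4, 4)), rng.normal(size=(4, 4)))
    print(y.shape, float(a.min()), float(a.max()))
```

Because the attention map lies in the unit interval, the output is a per-position, per-channel attenuation of the input, so the module can suppress clutter but never amplify a response beyond its original value.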

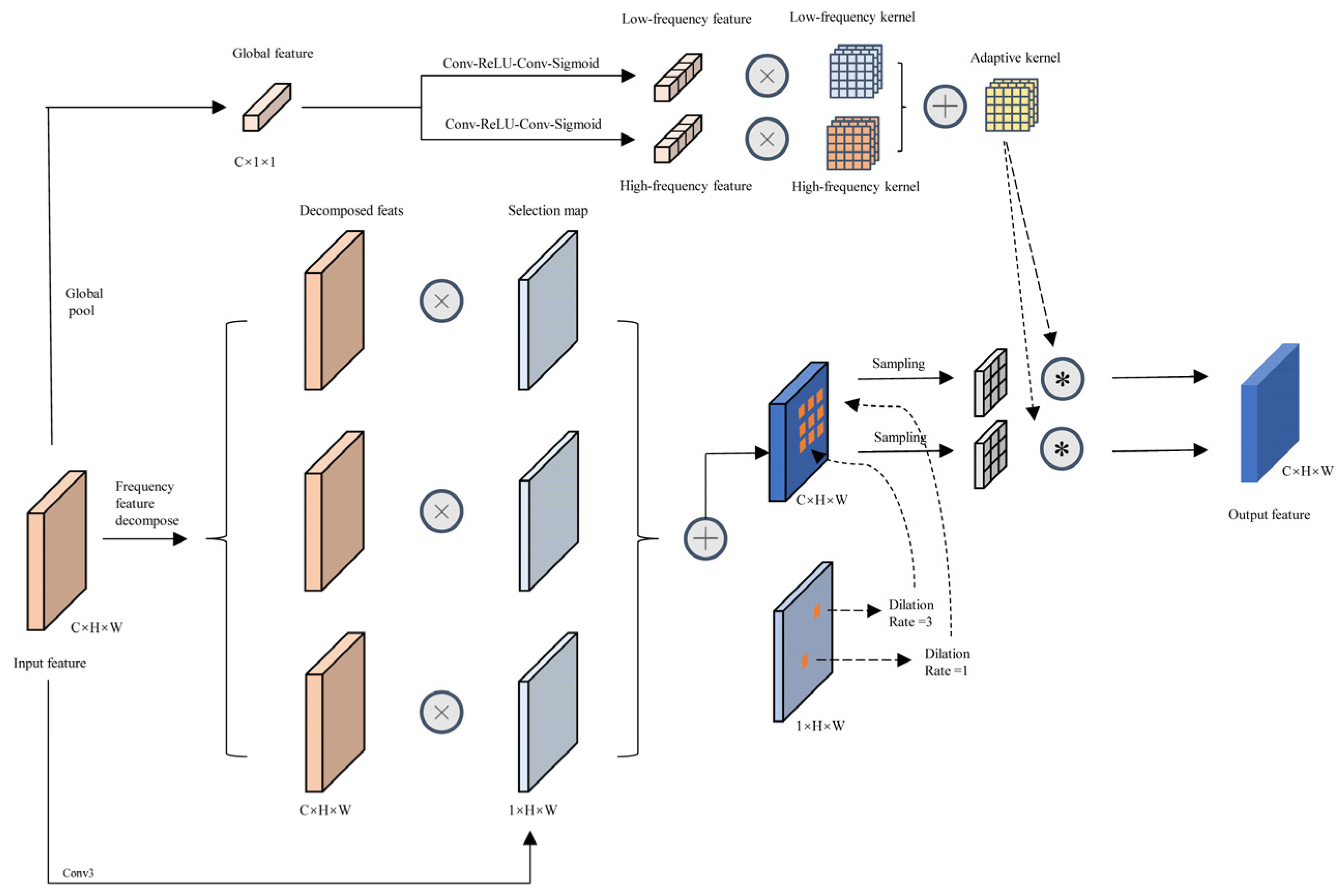

2.2.3. FAHead

During the imaging process, SAR images are often affected by electromagnetic scattering, viewpoint variations, and inherent imaging mechanisms, resulting in a spatially non-uniform distribution of spatial frequency components. For instance, structural regions such as ship edges typically exhibit localized high-frequency features, while the sea surface background tends to be low-frequency and smooth. This spatial frequency variation is of great significance in object detection, as it aids the model in distinguishing foreground objects from the background and enhances its sensitivity to structural details.

Figure 7 presents the Local High-Frequency Energy Overlay maps for both nearshore and pelagic scenarios. The highlighted red regions along ship contours indicate strong high-frequency responses that capture boundary sharpness and structural details, while the predominantly blue sea areas reflect low-frequency content associated with smooth and homogeneous backgrounds. This suggests that high-frequency energy concentrates at ship boundaries and can serve as a discriminative cue for detecting ships against cluttered maritime backgrounds.
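A local high-frequency energy map of this kind can be approximated as follows. This is a sketch under assumptions, not the procedure used to produce Figure 7: it applies an ideal high-pass filter in the Fourier domain and averages the squared response over a sliding window, and the cutoff (0.25 cycles per pixel), the window size (5), and the function names are illustrative choices.

```python
import numpy as np


def high_pass(img, cutoff=0.25):
    """Remove spatial frequencies whose radius is below `cutoff`
    (in cycles per pixel) using an ideal filter in the Fourier domain."""
    fy = np.fft.fftfreq(img.shape[0])[:, None]
    fx = np.fft.fftfreq(img.shape[1])[None, :]
    mask = np.sqrt(fx ** 2 + fy ** 2) >= cutoff
    return np.real(np.fft.ifft2(np.fft.fft2(img) * mask))


def local_energy(img, cutoff=0.25, win=5):
    """Mean squared high-pass response in a win x win window around each pixel."""
    hp2 = high_pass(img, cutoff) ** 2
    pad = win // 2
    padded = np.pad(hp2, pad, mode="edge")
    out = np.zeros_like(hp2)
    for dy in range(win):
        for dx in range(win):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (win * win)


if __name__ == "__main__":
    # Synthetic scene: a bright elongated rectangle on a flat background.
    img = np.zeros((32, 32))
    img[12:20, 8:24] = 1.0
    energy = local_energy(img)
    print("edge:", energy[12, 16], "background:", energy[0, 0])
```

On the synthetic rectangle the energy at the boundary is much larger than in the flat background, mirroring the contrast between ship contours and open sea described above.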

To effectively leverage such spatial frequency differences, we introduce the Frequency-Adaptive Dilated Convolution (FADC), which replaces the Depthwise Separable Convolution (DWConv) in the detection head of YOLOv11. FADC incorporates a local frequency analysis mechanism to adaptively adjust the dilation rate of each convolutional receptive field according to the frequency distribution of different regions in the input feature map, which can effectively prevent the loss of critical details in high-frequency regions and the introduction of redundant noise in low-frequency areas. In areas with clear textures and complete structural edges, FADC automatically enlarges the receptive field to integrate a broader contextual range, whereas in low-frequency, texture-deficient, or occluded regions, it tends to reduce the receptive field, focusing on local visible details to avoid introducing irrelevant noise. Unlike traditional dilated convolution with a fixed dilation rate, FADC performs spatially adaptive adjustment based on local frequency characteristics, allowing the receptive field to flexibly vary in response to structural and textural complexity across the image.

In addition, the FADC module integrates a frequency-decomposed convolution kernel (AdaKern), which separates the weights of conventional convolution kernels in the frequency domain and explicitly enhances the high-frequency components. This design reinforces the model’s sensitivity to structural discontinuities, occlusion-related regions, and high-frequency boundaries, enabling the network to better complete and reconstruct features in semantically discontinuous areas. As a result, the model demonstrates improved discrimination capability for typical SAR-specific challenges such as structural variation, edge occlusion, and target ambiguity. The architecture of the FADC module is illustrated in Figure 8.
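One simple way to realize such a kernel decomposition is to treat the spatial mean of each kernel as its low-frequency part and the residual as its high-frequency part, then re-weight the two. The sketch below follows that idea with fixed scalar weights; in an adaptive module these weights would be predicted from the input, and the values 1.0 and 1.5 as well as the function name `adakern` are assumptions made for illustration.

```python
import numpy as np


def adakern(weight, lam_low=1.0, lam_high=1.5):
    """Re-weight the low- and high-frequency parts of convolution kernels.

    weight: array of shape (C_out, C_in, k, k).
    Low-frequency part  = per-kernel spatial mean (a constant kernel).
    High-frequency part = residual after removing that mean.
    """
    low = weight.mean(axis=(-2, -1), keepdims=True)
    high = weight - low
    return lam_low * low + lam_high * high


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.normal(size=(2, 3, 3, 3))
    w_sharp = adakern(w)
    # The mean response is unchanged, the deviation from it is amplified.
    print(np.allclose(w_sharp.mean(axis=(-2, -1)), w.mean(axis=(-2, -1))))
```

Raising `lam_high` above `lam_low` makes the kernel respond more strongly to local intensity changes such as ship boundaries, while leaving its response to flat regions unchanged.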

2.2.4. Inner-EIoU

In object detection, bounding box regression is crucial for accurately localizing targets. Traditional IoU-based loss functions, such as IoU and its variants, measure spatial overlap between predicted and ground-truth boxes to guide regression. The mathematical definition of IoU is as follows:

$$\mathrm{IoU} = \frac{\left| B \cap B^{gt} \right|}{\left| B \cup B^{gt} \right|}$$

However, standard IoU loss fails to provide meaningful gradients when boxes do not intersect and lacks modeling of geometric misalignments like center offset or aspect ratio differences. The Enhanced IoU (EIoU) [28] loss addresses this issue. It incorporates the center distance, the width and height differences, and the geometry of the smallest enclosing box into the loss function. By jointly constraining the position, size, and shape of bounding boxes, EIoU provides stronger regression capability and optimization performance. The calculation of EIoU is defined as follows:

$$L_{EIoU} = 1 - \mathrm{IoU} + \frac{\rho^{2}\left(b, b^{gt}\right)}{c^{2}} + \frac{\rho^{2}\left(w, w^{gt}\right)}{c_{w}^{2}} + \frac{\rho^{2}\left(h, h^{gt}\right)}{c_{h}^{2}}$$

where $\rho(\cdot,\cdot)$ denotes the Euclidean distance, $b$ and $b^{gt}$ are the centers of the predicted and ground-truth boxes, $c$ is the diagonal length of the smallest enclosing box for the predicted and ground-truth boxes, and $c_{w}$ and $c_{h}$ are the width and height of the enclosing box, respectively.
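The three penalty terms are easy to follow in code. The sketch below is a plain-Python illustration for a single pair of boxes in (x1, y1, x2, y2) format; it assumes both boxes have positive width and height and omits the small epsilon that training code normally adds for numerical stability.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def eiou_loss(pred, gt):
    """EIoU loss: 1 - IoU plus center, width, and height penalties,
    each normalized by the smallest enclosing box."""
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])   # enclosing box width
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])   # enclosing box height
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    dw = (pred[2] - pred[0]) - (gt[2] - gt[0])
    dh = (pred[3] - pred[1]) - (gt[3] - gt[1])
    center = (dx * dx + dy * dy) / (cw * cw + ch * ch)
    return 1.0 - iou(pred, gt) + center + dw * dw / (cw * cw) + dh * dh / (ch * ch)


if __name__ == "__main__":
    print(eiou_loss((0, 0, 2, 2), (0, 0, 2, 2)))   # identical boxes
    print(eiou_loss((0, 0, 2, 2), (4, 0, 6, 2)))   # disjoint boxes
```

For the disjoint pair the IoU is zero, yet the center-distance term still contributes to the loss, which is the property that gives EIoU a useful gradient where plain IoU has none.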

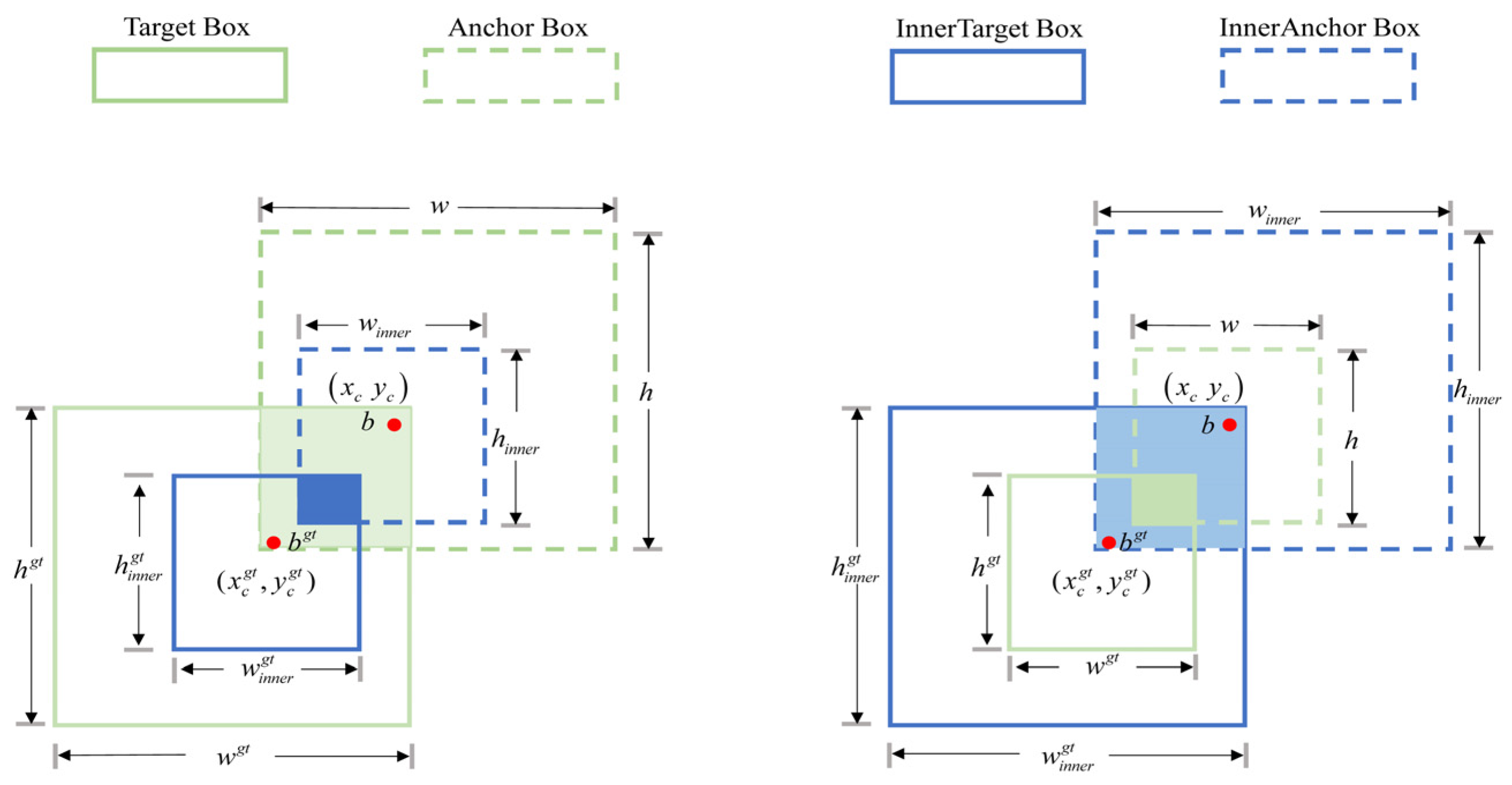

Although EIoU improves the modeling of geometric deviation by introducing center point distance and box size differences, its optimization remains limited to the geometric relationship between the predicted and ground-truth boxes. It overlooks the “inclusion relationship” between boxes, which carries potential semantic significance. For example, when the predicted box is entirely contained within the ground-truth box, or only partially overlaps it, there can still be considerable localization error. However, the drop in EIoU loss is relatively limited in such cases, making it insufficient in penalizing structural mismatches. This issue is particularly prominent in SAR ship detection tasks. Ship targets often present elongated shapes and blurred boundaries, which makes it easy for predicted boxes to resemble the contour shape but still exhibit significant boundary deviations. As a result, the localization robustness and detection accuracy are affected.

To address this problem, this paper adopts the Inner-EIoU loss function, which augments the traditional EIoU with the Inner-IoU concept of inner-region awareness [29] to enhance localization accuracy. The schematic of this method is illustrated in Figure 9. Based on EIoU, the proposed approach adds an inclusion-guided mechanism that measures whether the predicted box is fully enclosed within the ground-truth box, or vice versa. This encourages the predicted box to better fit within the ground-truth in both position and scale. The inclusion guidance improves sensitivity to partial coverage and internal misalignment, which is especially beneficial for fitting targets with unclear boundaries, severe occlusion, or background interference in SAR imagery.

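For a predicted box $B$ and a ground-truth box $B^{gt}$, Inner-IoU introduces a scaling factor $ratio$, which generates auxiliary boxes centered at the respective box centers. Let the centers of the two boxes be $(x_c, y_c)$ and $(x_c^{gt}, y_c^{gt})$, with widths and heights $(w, h)$ and $(w^{gt}, h^{gt})$, respectively. The auxiliary ground-truth box is defined as follows:

$$b_l^{gt} = x_c^{gt} - \frac{w^{gt} \cdot ratio}{2}, \quad b_r^{gt} = x_c^{gt} + \frac{w^{gt} \cdot ratio}{2}, \quad b_t^{gt} = y_c^{gt} - \frac{h^{gt} \cdot ratio}{2}, \quad b_b^{gt} = y_c^{gt} + \frac{h^{gt} \cdot ratio}{2}$$

Similarly, the auxiliary predicted box is given by the following:

$$b_l = x_c - \frac{w \cdot ratio}{2}, \quad b_r = x_c + \frac{w \cdot ratio}{2}, \quad b_t = y_c - \frac{h \cdot ratio}{2}, \quad b_b = y_c + \frac{h \cdot ratio}{2}$$

where $ratio \in [0.5, 1.5]$ is used to adjust the size of the auxiliary boxes relative to the originals.

Next, the intersection area of the two auxiliary boxes is calculated.

$$inter = \max\left(0, \min\left(b_r^{gt}, b_r\right) - \max\left(b_l^{gt}, b_l\right)\right) \cdot \max\left(0, \min\left(b_b^{gt}, b_b\right) - \max\left(b_t^{gt}, b_t\right)\right)$$

Then, their individual areas and union area are computed:

$$S^{gt} = w^{gt} h^{gt} \cdot ratio^{2}, \quad S = w h \cdot ratio^{2}, \quad union = S^{gt} + S - inter, \quad \mathrm{IoU}^{inner} = \frac{inter}{union}$$

Finally, the Inner-EIoU loss function is defined by integrating this term into the EIoU formulation:

$$L_{Inner\text{-}EIoU} = L_{EIoU} + \mathrm{IoU} - \mathrm{IoU}^{inner}$$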

Overall, the Inner-EIoU loss effectively enhances bounding box precision by explicitly considering internal inclusion relationships, thereby improving localization robustness for targets with ambiguous edges, partial occlusion, or complex backgrounds in SAR images.
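The construction described above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: boxes are assumed to be given as (center x, center y, width, height), the function names are hypothetical, the default `ratio` of 0.75 is an arbitrary value inside the stated [0.5, 1.5] range, and the loss is assumed to take the form "EIoU loss plus IoU minus inner IoU" used in the Inner-IoU formulation.

```python
def corners(box, ratio=1.0):
    """Convert (xc, yc, w, h) to (left, top, right, bottom), scaling w and h by ratio."""
    xc, yc, w, h = box
    half_w, half_h = w * ratio / 2.0, h * ratio / 2.0
    return xc - half_w, yc - half_h, xc + half_w, yc + half_h


def iou(pred, truth, ratio=1.0):
    """IoU of both boxes after scaling each about its own center.

    ratio=1.0 gives the ordinary IoU; any other value gives the inner
    (auxiliary-box) IoU.
    """
    pl, pt, pr, pb = corners(pred, ratio)
    tl, tt, tr, tb = corners(truth, ratio)
    inter_w = max(0.0, min(pr, tr) - max(pl, tl))
    inter_h = max(0.0, min(pb, tb) - max(pt, tt))
    inter = inter_w * inter_h
    union = (pr - pl) * (pb - pt) + (tr - tl) * (tb - tt) - inter
    return inter / union if union > 0 else 0.0


def eiou_loss(pred, truth, eps=1e-9):
    """EIoU loss: 1 - IoU plus center-distance, width, and height penalties."""
    pl, pt, pr, pb = corners(pred)
    tl, tt, tr, tb = corners(truth)
    # Width and height of the smallest box enclosing both boxes.
    cw = max(pr, tr) - min(pl, tl)
    ch = max(pb, tb) - min(pt, tt)
    center = ((pred[0] - truth[0]) ** 2 + (pred[1] - truth[1]) ** 2) / (cw ** 2 + ch ** 2 + eps)
    width = (pred[2] - truth[2]) ** 2 / (cw ** 2 + eps)
    height = (pred[3] - truth[3]) ** 2 / (ch ** 2 + eps)
    return 1.0 - iou(pred, truth) + center + width + height


def inner_eiou_loss(pred, truth, ratio=0.75):
    """Assumed form: L_EIoU + IoU - IoU_inner (ratio default is illustrative)."""
    return eiou_loss(pred, truth) + iou(pred, truth) - iou(pred, truth, ratio)


if __name__ == "__main__":
    truth = (50.0, 50.0, 40.0, 10.0)   # elongated, ship-like box
    shifted = (60.0, 50.0, 40.0, 10.0)  # same shape, shifted along the long axis
    print(iou(shifted, truth), iou(shifted, truth, 0.75))
    print(eiou_loss(shifted, truth), inner_eiou_loss(shifted, truth))
```

With a ratio below 1, the shrunken auxiliary boxes overlap less than the originals whenever the centers are offset, so the inner term raises the loss for a shifted prediction; for two boxes sharing the same center, the inner IoU equals the ordinary IoU and the extra term vanishes.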

3. Results

3.1. Experimental Settings

All experiments were conducted on an Ubuntu 20.04 operating system. The hardware platform was equipped with an Intel(R) Xeon(R) Platinum 8481C processor, 80 GB of RAM, and an NVIDIA GeForce RTX 4090D GPU. The implementation was based on the PyTorch 1.11.0 deep learning framework, with CUDA version 11.3 and Python version 3.8. The number of training epochs was set to 300, and the batch size was set to 32.

3.2. Dataset

To thoroughly evaluate the effectiveness and generalization capability of the proposed YOLO-LDFI model in synthetic aperture radar (SAR) ship detection tasks, we conducted experiments on two widely recognized public datasets: HRSID [25] and SSDD [26]. For training and evaluation, 70% of the images were randomly selected for training, 20% were selected for validation, and the remaining 10% were selected for testing. The key characteristics of these datasets are summarized in Table 1.
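A random 70/20/10 split of this kind can be sketched as follows. This is only an illustration: the function name, the fixed seed, and the rounding rule for the subset sizes are assumptions, since the text does not describe how the split was actually implemented.

```python
import random


def split_dataset(image_ids, train=0.7, val=0.2, seed=0):
    """Shuffle image ids reproducibly and cut them into train/val/test subsets.

    The test subset receives whatever remains after the train and val cuts.
    """
    ids = sorted(image_ids)              # fixed starting order for reproducibility
    random.Random(seed).shuffle(ids)     # seeded shuffle (seed is an assumption)
    n_train = round(len(ids) * train)
    n_val = round(len(ids) * val)
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]


if __name__ == "__main__":
    # 1160 is the SSDD image count quoted in the text.
    train_ids, val_ids, test_ids = split_dataset(range(1160))
    print(len(train_ids), len(val_ids), len(test_ids))
```

Fixing the seed keeps the three subsets identical across runs, which matters when several models are compared on the same test images.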

The HRSID dataset comprises high-resolution SAR images with various ship targets, covering complex maritime environments such as open seas, coastlines, harbors, and docks. The original data were segmented into 5604 image patches of size 800 × 800 pixels. A total of 16,591 ship instances are annotated, with small ships accounting for 54.5%, medium-sized ships for 43.5%, and large vessels for 2%. The spatial resolution of the images ranges from sub-meter (0.5 m) to medium resolution (3 m), making the dataset highly representative of real-world conditions. Sample images from the dataset are illustrated in Figure 10.

The SSDD dataset is one of the earliest and most widely used open-source benchmarks for SAR ship detection. It consists of 1160 images collected from multiple satellite platforms, including RadarSat-2, TerraSAR-X, and Sentinel-1. The images have a fixed size of 500 × 500 pixels and a spatial resolution varying between 1 and 15 m. A total of 2456 ship targets are annotated within diverse maritime scenes under different sea states and polarization modes. Sample images from the dataset are illustrated in Figure 11.

3.3. Evaluation Metrics

To comprehensively evaluate the performance of the improved model, several metrics were adopted, including mean Average Precision at an IoU threshold of 0.5 (mAP50, written as AP50 in the remainder of this section), Precision (P), Recall (R), F1-score, the number of parameters, and GFLOPs (giga floating-point operations, a measure of computational cost rather than of throughput).

Mean Average Precision (mAP) measures the overall detection performance across all classes. It is defined as follows:

$$AP = \int_0^1 P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $P(R)$ denotes the precision–recall curve, and the area under the curve corresponds to the AP value; $N$ is the number of classes and $AP_i$ is the AP of the $i$-th class.

Precision (P) reflects the proportion of true positive detections among all positive predictions. It is used to assess the model’s ability to avoid false alarms and is calculated as follows:

$$P = \frac{TP}{TP + FP}$$

where TP is the number of true positive samples and FP is the number of false positive samples.

Recall (R) represents the proportion of true positive samples correctly detected among all actual positives. It is used to assess the model’s ability to avoid missed detections:

$$R = \frac{TP}{TP + FN}$$

where FN is the number of false negative samples.

F1-score is the harmonic mean of precision and recall, providing a balanced metric that accounts for both false positives and false negatives:

$$F1 = \frac{2 \times P \times R}{P + R}$$
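The metrics above can be computed with a short pure-Python sketch. It assumes that each detection has already been matched against the ground truth at an IoU threshold of 0.5 and labeled as a hit or a miss, and it approximates the area under the precision–recall curve with the common all-point interpolation; the paper does not state which integration scheme was used, and the function names are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from raw counts (0.0 when a ratio is undefined)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(scored_hits, num_gt):
    """Area under the precision-recall curve for one class.

    scored_hits: list of (confidence, is_true_positive) pairs, one per detection.
    num_gt: number of ground-truth objects of that class.
    """
    if num_gt == 0:
        return 0.0
    ranked = sorted(scored_hits, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for _, hit in ranked:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the best precision at any higher recall,
    # which removes the zig-zag of the raw curve.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas between consecutive recall values.
    return sum((recalls[i] - recalls[i - 1]) * precisions[i]
               for i in range(1, len(recalls)))


if __name__ == "__main__":
    print(precision_recall_f1(tp=8, fp=2, fn=2))
    detections = [(0.9, True), (0.8, False), (0.7, True)]
    print(average_precision(detections, num_gt=2))
```

Because ship is the only class in both datasets, the mean over classes reduces to the single-class AP in this setting.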

3.4. Ablation Study

To verify the effectiveness of each proposed module, two groups of ablation experiments were conducted. Ablation Study 1 focused on the first three modules, namely, LDConv, the DCAM attention mechanism, and the FAHead detection head, to evaluate their individual and combined impacts on overall performance. Ablation Study 2 compared different loss functions, with a particular emphasis on the Inner-EIoU loss, to analyze their influence on detection accuracy and localization precision. The experimental setup of Ablation Study 1 is shown in Table 2, and the results for each configuration are summarized in Table 3, while the results of Ablation Study 2 are reported in Table 4.

Compared to the baseline model, the introduction of the LDConv module alone improved the AP50 by 0.7%, reaching 88.3%. Meanwhile, the number of parameters was reduced from 2.62 M to 2.49 M, and the GFLOPs dropped to 6.4. These results demonstrate that LDConv enhances the model’s ability to adapt to object deformations while also contributing to parameter compression.

In experiment ②, the model was enhanced solely by incorporating the DCAM attention mechanism. This led to an increase in AP50 to 89.3%, and the F1-score improved from 85.4 to 86.1. The results indicate that DCAM, through its dynamic context modeling capability, effectively enhances the model’s discrimination ability in complex backgrounds and low signal-to-noise SAR image regions.

In experiment ③, replacing the original detection head with the improved FAHead led to a 1.5% improvement in detection precision over the baseline. Although the introduction of FAHead slightly increased GFLOPs, the module significantly improved the model’s ability to identify key SAR-specific features such as structural discontinuities, edge occlusions, and blurred targets, partially offsetting the cost of increased computation.

In experiment ④, the joint use of LDConv and DCAM yields a 2% improvement over the baseline in AP50. The effectiveness lies in their complementary design: LDConv adaptively aligns feature sampling with object structures, while DCAM strengthens the representation of salient regions against background clutter. Their synergy produces more discriminative features, leading to enhanced detection accuracy.

In experiment ⑤, combining LDConv with FAHead yields a smaller gain, with AP50 being 0.7% lower than in experiment ④. This is likely because LDConv emphasizes the spatial alignment of features, while FAHead focuses on frequency-adaptive receptive fields; their optimization directions are not fully aligned, leading to partial redundancy and thus a reduced improvement.

In experiment ⑥, replacing LDConv with DCAM while keeping FAHead raises AP50 by 0.6% versus experiment ⑤. This is because DCAM suppresses sea clutter and steers sampling toward salient ship contours, which complements FAHead’s frequency-adaptive dilation that resolves boundary details. Compared to experiment ④, AP50 is lower because removing LDConv weakens backbone deformation alignment, which DCAM cannot fully correct.

In experiment ⑦, the model integrates three of the four key improvements (LDConv, DCAM, and FAHead) and achieves an AP50 of 90.3%, representing a 2.7% gain over the baseline. The gain arises from their complementary functions: each module addresses a different weakness in detection, namely geometric consistency, contextual discrimination, and frequency-sensitive detail. When combined, localization and classification reinforce each other, whereas omitting any one module leaves a structural gap for which the others cannot compensate, leading to degraded accuracy. This integrated effect cannot be replicated by any single module alone, highlighting the necessity of their combined application for robust performance.

In Ablation Study 2, we further investigated the impact of different loss functions on detection performance. Among the compared candidates, EIoU loss exhibited the most promising results, demonstrating its potential to enhance bounding box regression. This improvement can be attributed to its design: (i) penalizing the distance between the predicted and ground-truth centers, thereby refining localization accuracy; (ii) incorporating width and height discrepancies to ensure closer shape alignment; and (iii) leveraging the size of the minimum enclosing box to increase sensitivity to scale and position. Building upon these advantages, we further introduced an Inner-based modification to EIoU, which imposes more precise structural constraints within the bounding box. This refinement led to an additional 0.4% gain in AP50, effectively accommodating the irregular shapes and scale variations inherent in SAR ship images.
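The AP50 gains quoted in the ablation discussion can be chained into the values they imply for each configuration. The short script below is only an arithmetic cross-check built from the numbers stated in the text (the baseline itself is inferred from the LDConv result and its quoted gain); the dictionary keys are hypothetical labels, and the reported tables remain the authoritative source.

```python
# AP50 values and gains quoted in the text, in percent.
ldconv_only = 88.3               # experiment with LDConv alone
baseline = ldconv_only - 0.7     # the text gives the gain, not the baseline value

implied = {"baseline": baseline, "LDConv": ldconv_only, "DCAM": 89.3}
implied["LDConv+DCAM"] = baseline + 2.0                    # experiment 4: +2% over baseline
implied["LDConv+FAHead"] = implied["LDConv+DCAM"] - 0.7    # experiment 5: 0.7% below experiment 4
implied["DCAM+FAHead"] = implied["LDConv+FAHead"] + 0.6    # experiment 6: +0.6% over experiment 5
implied["LDConv+DCAM+FAHead"] = baseline + 2.7             # experiment 7: +2.7% over baseline
implied["all+Inner-EIoU"] = implied["LDConv+DCAM+FAHead"] + 0.4  # loss ablation: +0.4%

for name, value in implied.items():
    print(f"{name:>20s}: {value:.1f}")
```

The chained values are mutually consistent: experiment ⑦ comes out at the quoted 90.3%, and adding the 0.4% from the Inner-based loss gives 90.7%, a total of 3.1% above the inferred baseline.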

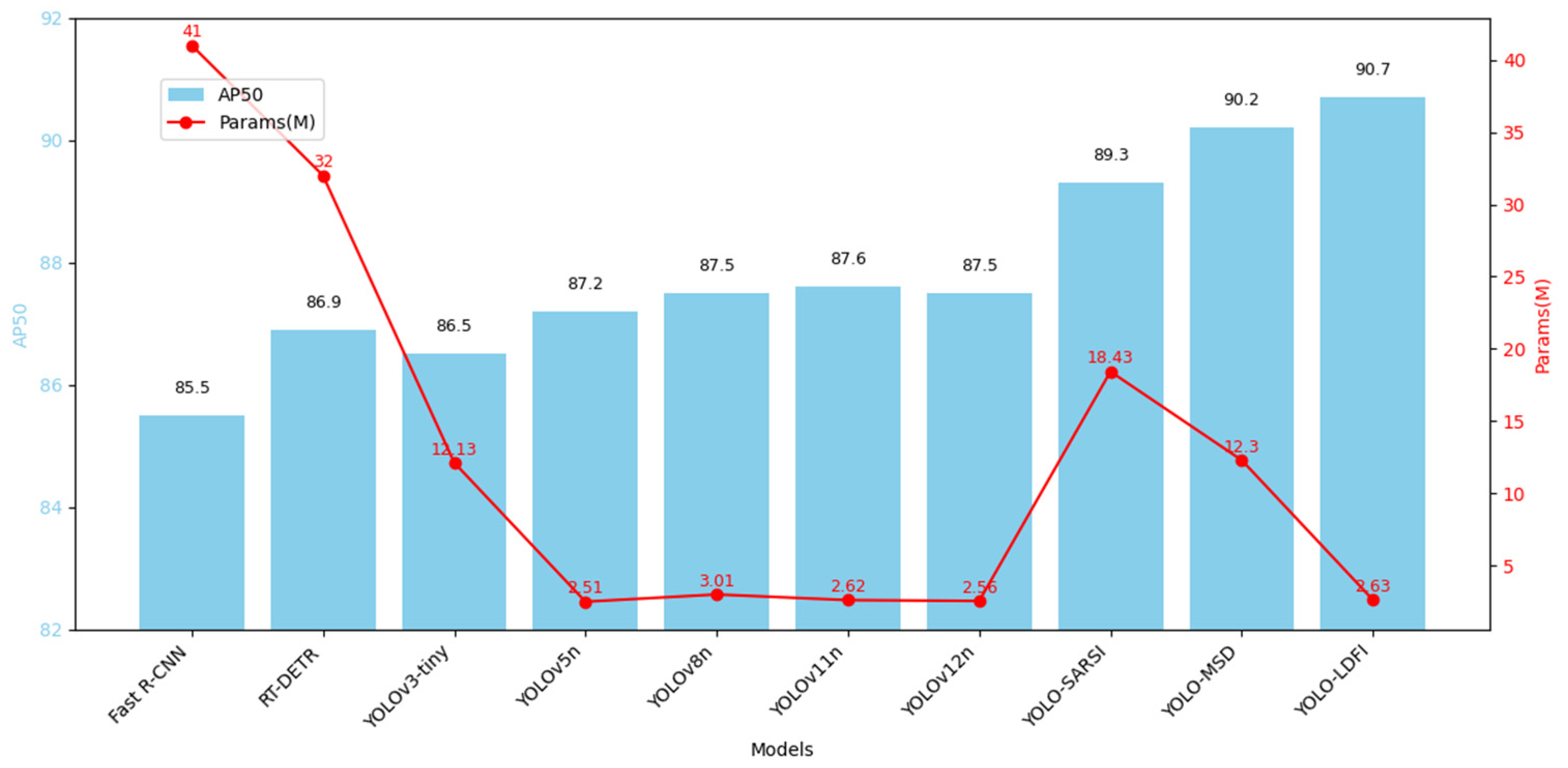

3.5. Comparison with Different Network Models

To comprehensively evaluate the performance advantages of the proposed YOLO-LDFI model in SAR ship target detection tasks, we conducted comparison experiments against a range of representative object detection models. These include the two-stage detection model R-CNN, the lightweight Transformer-based model RT-DETR, the lightweight YOLO series models YOLOv3-tiny, YOLOv5n, YOLOv8n, and YOLOv11n, and the SAR-specific improved models YOLO-SARSI [30] and YOLO-MSD [31]. All models were trained and evaluated with the same training configuration on the same SAR dataset. The evaluation metrics include AP50, Precision (P), Recall (R), F1 score, number of parameters (Params), and computational complexity (GFLOPs). The experimental results are summarized in Table 5.

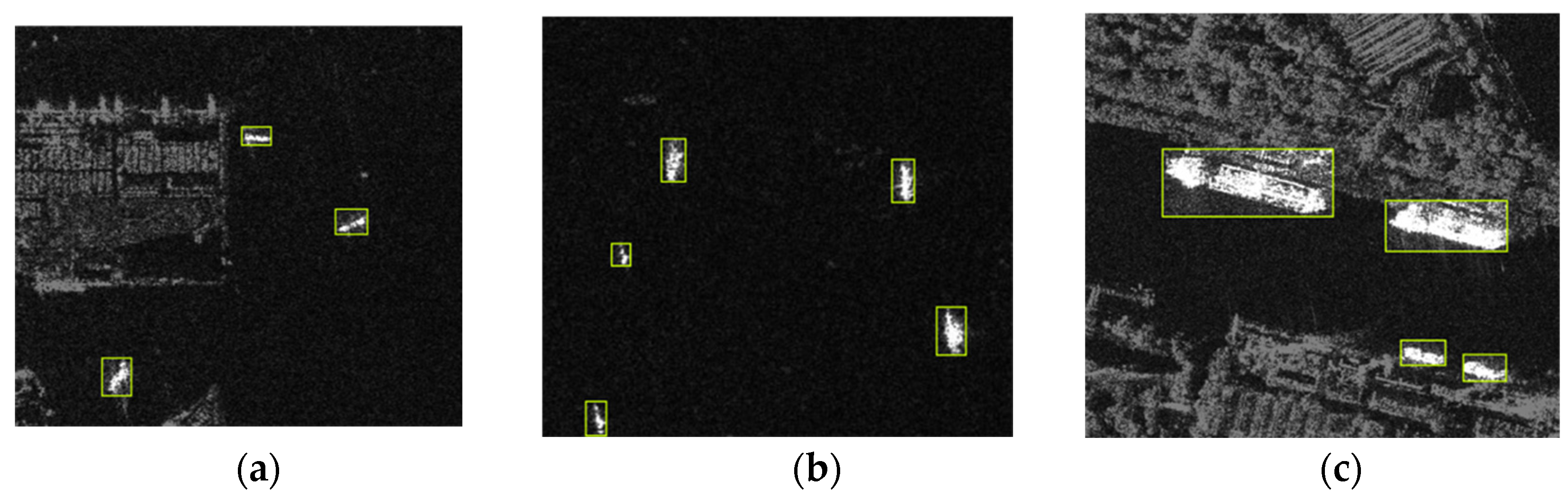

The results show that the traditional two-stage method R-CNN incurs a high computational burden, with 41 M parameters and 235 GFLOPs, making it unsuitable for real-time and resource-constrained applications. RT-DETR, as a lightweight Transformer-based detector, achieved an AP50 of 86.9% while reducing computational overhead compared to R-CNN. However, it still falls short of the YOLO series in achieving a balance between accuracy and efficiency. The visual comparisons of different models are presented in Figure 12.

Among the general-purpose YOLO models, YOLOv3-tiny, YOLOv5n, YOLOv8n, and YOLOv11n demonstrated competitive detection performance with relatively small parameter sizes. Notably, YOLOv11n outperformed both YOLOv5n and YOLOv8n in terms of AP50 and F1 score, indicating that its redesigned backbone and neck networks significantly enhanced feature extraction and scale awareness, thereby improving the overall detection capability.

For SAR-specific models, YOLO-SARSI leveraged the AMMRF multi-scale receptive field convolution block to better utilize spatial information, achieving an AP50 of 89.3%. YOLO-MSD, through the integration of a Deep Polygon Kernel Network (DPK-Net) and a Bidirectional Spatial Attention Module (BSAM), reached 90.2% in AP50, showing a clear improvement in handling SAR image features. However, these improvements came at the cost of larger parameter sizes, with YOLO-SARSI and YOLO-MSD containing 18.43 M and 12.3 M parameters, respectively, indicating substantial computational demands.

In contrast, the proposed YOLO-LDFI achieved a 0.5% improvement in AP50 over YOLO-MSD, despite using only 21.3% of its parameters, and surpassed the baseline by 3.1%. These results confirm that YOLO-LDFI, by fully considering the unique characteristics of SAR imagery (diverse geometric structures, occlusion, high noise, and blurred boundaries) and through the synergistic design of LDConv, DCAM, FAHead, and Inner-EIoU, significantly enhances detection accuracy and robustness. The model exhibits strong generalization capability and deployment value under practical constraints.

To further evaluate the generalization performance of the proposed model, we additionally conducted comparative experiments on the SSDD dataset, which includes SAR images with diverse sea states and satellite sources. The experimental configuration and results are presented in

Table 6. The visual comparisons of different models are presented in

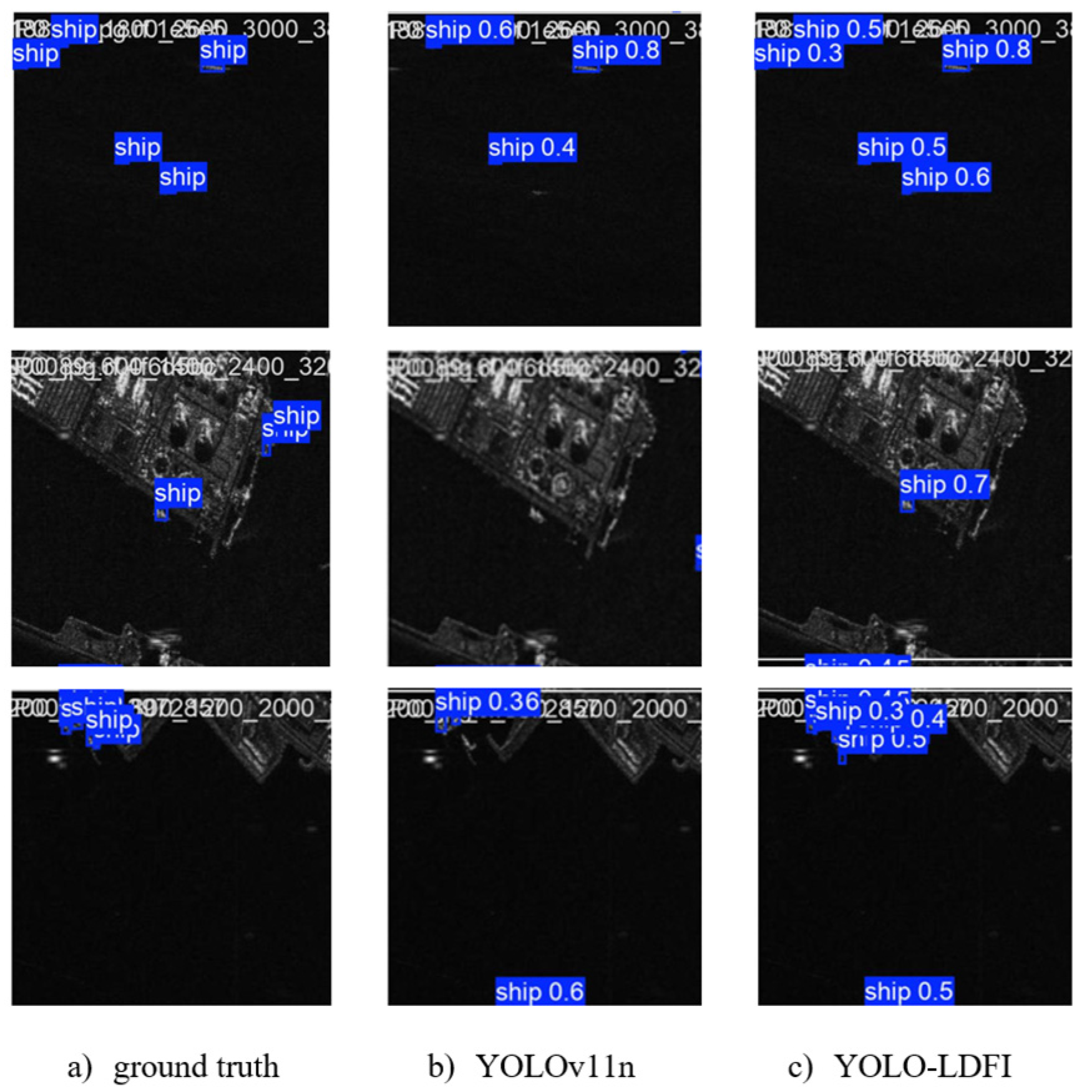

Figure 13. Consistent with the observations on the HRSID dataset, YOLO-LDFI also demonstrates superior detection performance on SSDD, particularly in terms of localization accuracy and robustness under complex maritime backgrounds.

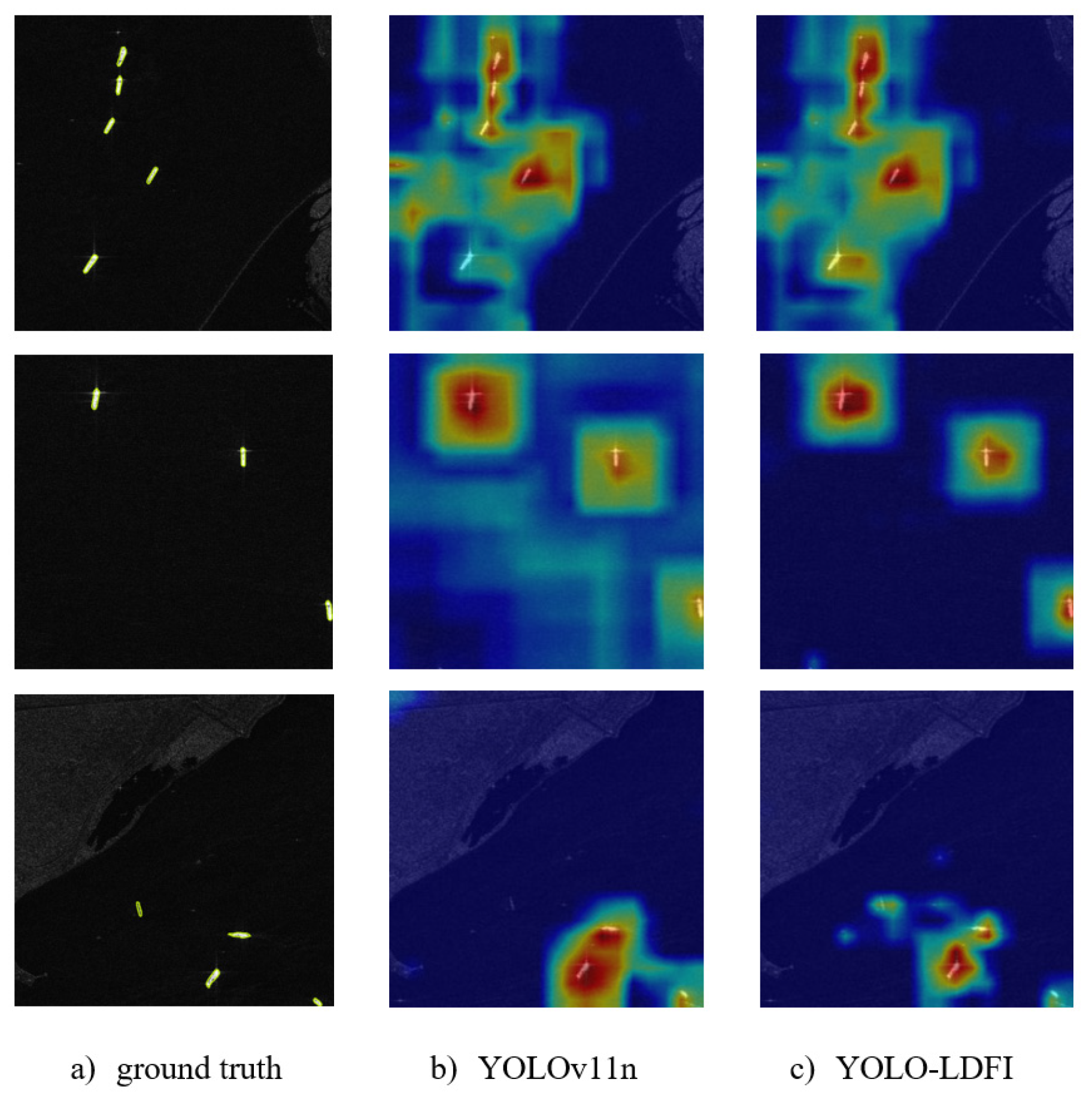

3.6. Visualization of Detection Results

To further validate the effectiveness of the proposed method in enhancing detection performance, this study employs Grad-CAM (Gradient-weighted Class Activation Mapping) to visualize the attention distribution within the models. By generating heatmaps, we illustrate how different models focus on key regions during the feature extraction process. In addition, by comparing the predicted bounding boxes and their confidence scores with the corresponding ground-truth annotations, we intuitively demonstrate the improvements brought by our model in terms of localization precision and boundary fitting accuracy. This visualization analysis not only helps reveal the model’s discrimination mechanism in complex scenarios but also supports the effectiveness of the proposed structural enhancements in improving target perception. The results are illustrated in Figure 14.
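For readers unfamiliar with Grad-CAM, the core computation can be sketched in a few lines of pure Python. The sketch assumes that the feature maps of one chosen layer and the gradients of a target score with respect to them have already been extracted (in practice via framework hooks); the paper does not state which layer or which detection score was used, so those choices, the function name, and the nested-list layout are assumptions.

```python
def grad_cam(activations, gradients):
    """Compute a normalized Grad-CAM heatmap.

    activations, gradients: lists of K channels, each an H x W nested list.
    Returns an H x W nested list with values in [0, 1].
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights: global average of each channel's gradient map.
    weights = [sum(sum(row) for row in grad) / (height * width)
               for grad in gradients]
    # Weighted sum over channels, then ReLU to keep only positive evidence.
    cam = [[max(0.0, sum(w * act[i][j] for w, act in zip(weights, activations)))
            for j in range(width)]
           for i in range(height)]
    # Scale to [0, 1] so heatmaps from different images are comparable.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


if __name__ == "__main__":
    acts = [[[1.0, 0.0], [0.0, 0.0]],      # channel 0 fires at the top-left
            [[0.0, 0.0], [0.0, 1.0]]]      # channel 1 fires at the bottom-right
    grads = [[[1.0, 1.0], [1.0, 1.0]],     # channel 0 supports the target score
             [[-1.0, -1.0], [-1.0, -1.0]]] # channel 1 opposes it
    print(grad_cam(acts, grads))
```

In the toy input, only the channel whose gradient is positive contributes to the map, so the heatmap highlights the top-left cell and suppresses the bottom-right one.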

In the first row of heatmap visualizations, the baseline YOLOv11n model exhibits noticeable attention gaps in some ship regions—most notably, it fails to detect a vessel located at the bottom of the image. Additionally, false high responses are observed in the left background sea area where no targets exist. In contrast, the heatmap from YOLO-LDFI shows accurate and focused activation on all five ships, demonstrating that the introduced DCAM and FADC mechanisms effectively enhance the model’s spatial modeling and target localization capabilities.

The images in the second row represent the experimental results of ships in the pelagic region. YOLOv11n exhibits a coarse activation pattern, where the highlighted regions extend beyond the actual ship boundaries and incorporate substantial background responses. This indicates that the baseline model tends to rely on large receptive fields without sufficient discrimination, leading to blurred spatial localization and the risk of false activations around surrounding clutter. By contrast, YOLO-LDFI produces more compact and edge-focused responses, successfully concentrating on the ships themselves while suppressing redundant background noise. Such behavior demonstrates that the proposed modules enhance the model’s ability to capture fine-grained structural cues, which is particularly beneficial for the detection of small-scale or closely spaced targets.

In the third row, the images represent the experimental results of ships in the nearshore region. Here, YOLOv11n misses a small nearshore vessel, and, as in the second row, its highlighted regions extend beyond the actual ship boundaries and incorporate substantial background responses. In contrast, YOLO-LDFI shows sharper and more selective responses that are predominantly confined to the ship areas, indicating an improved capacity to disentangle target signatures from heterogeneous background structures. This suggests that the proposed improvements not only enhance feature localization but also increase robustness against background interference.

Figure 15 presents the actual detection results of YOLO-LDFI and YOLOv11n, providing a comparative illustration of their performance in complex maritime scenarios. The visualization highlights differences in target localization, background suppression, and detection completeness between the two models.

The first row shows detection results in an offshore scenario. As observed, YOLOv11n misses three small targets, whereas YOLO-LDFI successfully detects all ships. This demonstrates that by leveraging frequency-aware feature extraction, YOLO-LDFI achieves more complete and precise detection, particularly for small or subtle targets in challenging marine environments.

The second row features a port scene characterized by dense architectural structures and complex background textures, which can amplify interference and blur object boundaries. In such scenarios, YOLOv11n fails to detect any targets, indicating its limited feature separation capability under strong background noise. On the other hand, YOLO-LDFI successfully detects a ship located at the edge of the port with a confidence score of 0.7, highlighting its superior responsiveness in high-interference environments. Some extremely small ships remain undetected, likely because of their near-noise-scale size and incomplete edge information; this reflects the general boundary perception limitations of the YOLO family when handling severely degraded targets.

In the third row, which depicts nearshore scenes with low-contrast and small objects, YOLOv11n detects only one ship with low confidence, showing evident missed detections. In contrast, YOLO-LDFI correctly identifies more targets with higher accuracy, showcasing stronger capability in small object perception and fine-grained feature extraction.

In summary, YOLO-LDFI consistently demonstrates more stable and accurate detection performance across various types of typical SAR image environments. These results further validate the effectiveness and synergistic gain of the proposed modules in addressing the challenges of complex remote sensing detection tasks.

4. Discussion

The proposed YOLO-LDFI model integrates four key architectural enhancements—LDConv, DCAM, FAHead, and Inner-EIoU—to significantly improve ship target detection performance in SAR imagery while maintaining the lightweight characteristics of the baseline YOLOv11n framework. Experimental results demonstrate that LDConv enables the dynamic alignment of ship contour features, effectively mitigating shape deformation disturbances. The DCAM module enhances the model’s spatial perception and contextual modeling capabilities, thereby improving robustness under complex background conditions. The FAHead, built upon the FADC structure, refines multi-scale feature representation and boosts detection accuracy in high-frequency regions. Furthermore, the Inner-EIoU loss function optimizes the bounding box regression strategy, resulting in improved localization accuracy and training stability.

These four components exhibit complementary and synergistic effects across multiple aspects of the detection pipeline, indicating the strong practical adaptability and integration potential of the proposed design. Notably, YOLO-LDFI achieves a 3.1% increase in AP50 and a 2.9% improvement in F1 score while keeping the total number of parameters (2.63 M) and computational complexity (6.7 GFLOPs) nearly unchanged. This underscores the model’s excellent accuracy–efficiency balance, making it well suited for deployment on resource-constrained platforms.

Future work may focus on several targeted challenges identified during the experiments to further enhance the performance and robustness of YOLO-LDFI in SAR target detection. SAR images are often characterized by distinct spectral properties and complex textures. Therefore, incorporating techniques such as multi-resolution reconstruction [34], frequency domain enhancement, or wavelet transforms could enable richer spectral decomposition and modeling of the input images, thereby improving the model’s adaptability to complex oceanic backscatter structures. Moreover, frequency resolution may play a critical role in distinguishing ship structural elements from background signals such as sea surface, wind waves, and coastline features, particularly when considering motion-induced Doppler distortions. Careful exploration of these frequency characteristics could provide deeper insights into ship feature representation and further improve detection accuracy. Additionally, the high cost of acquiring annotated SAR datasets and the significant domain gaps between different platforms remain critical challenges. To address this, future research could integrate self-supervised pretraining, contrastive learning, or few-shot domain generalization techniques with detection models to exploit structural priors and semantic cues inherent to SAR images. This would improve model adaptability under conditions of limited data or distribution shifts.

5. Conclusions

This study addresses the challenges in ship target detection within Synthetic Aperture Radar (SAR) imagery, which include complex geometric shapes, blurred edges, severe speckle interference, and the difficulty of lightweight model deployment. To this end, a lightweight and improved detection model, YOLO-LDFI, is proposed. Based on YOLOv11n, the model integrates four key modules: a lightweight deformable convolution (LDConv) with learnable offsets, designed to enhance the alignment and representation of target contours; a deformable context-aware attention mechanism (DCAM), which strengthens the model’s focus on salient regions under complex marine backgrounds; a frequency-adaptive dilated convolution module (FAHead) in the detection head, aiming to improve receptive field modulation in response to local spectral variations in SAR images; and a structurally aware regression loss function (Inner-EIoU), introduced to optimize the regression strategy for high-precision bounding box localization.

Experiments conducted on the public HRSID SAR ship detection dataset demonstrate that YOLO-LDFI achieves an AP50 of 90.7% and an F1 score of 87.0%, all while maintaining a parameter count of 2.63 M and a computational cost of 6.7 GFLOPs, thereby achieving an improved balance between detection accuracy and computational efficiency. The ablation study further confirms the effectiveness and complementarity of each module in enhancing spatial modeling, structural perception, and target localization accuracy.

Overall, YOLO-LDFI effectively combines deformable modeling, dynamic attention mechanisms, and lightweight detection architecture to provide an efficient, robust, and deployment-friendly solution for SAR ship detection. Looking ahead, the model may be further enhanced by integrating frequency-domain modeling, self-supervised learning, and cross-modal transfer strategies, aiming to improve generalization across diverse marine environments and SAR platforms. This would significantly expand its practical utility in applications such as maritime surveillance, law enforcement, and ocean security.