A Crossover Adjustment Method Considering the Beam Incident Angle for a Multibeam Bathymetric Survey Based on USV Swarms

Abstract

1. Introduction

- (1)

- The coordination of USVs leads to the superposition of systematic errors.

- (2)

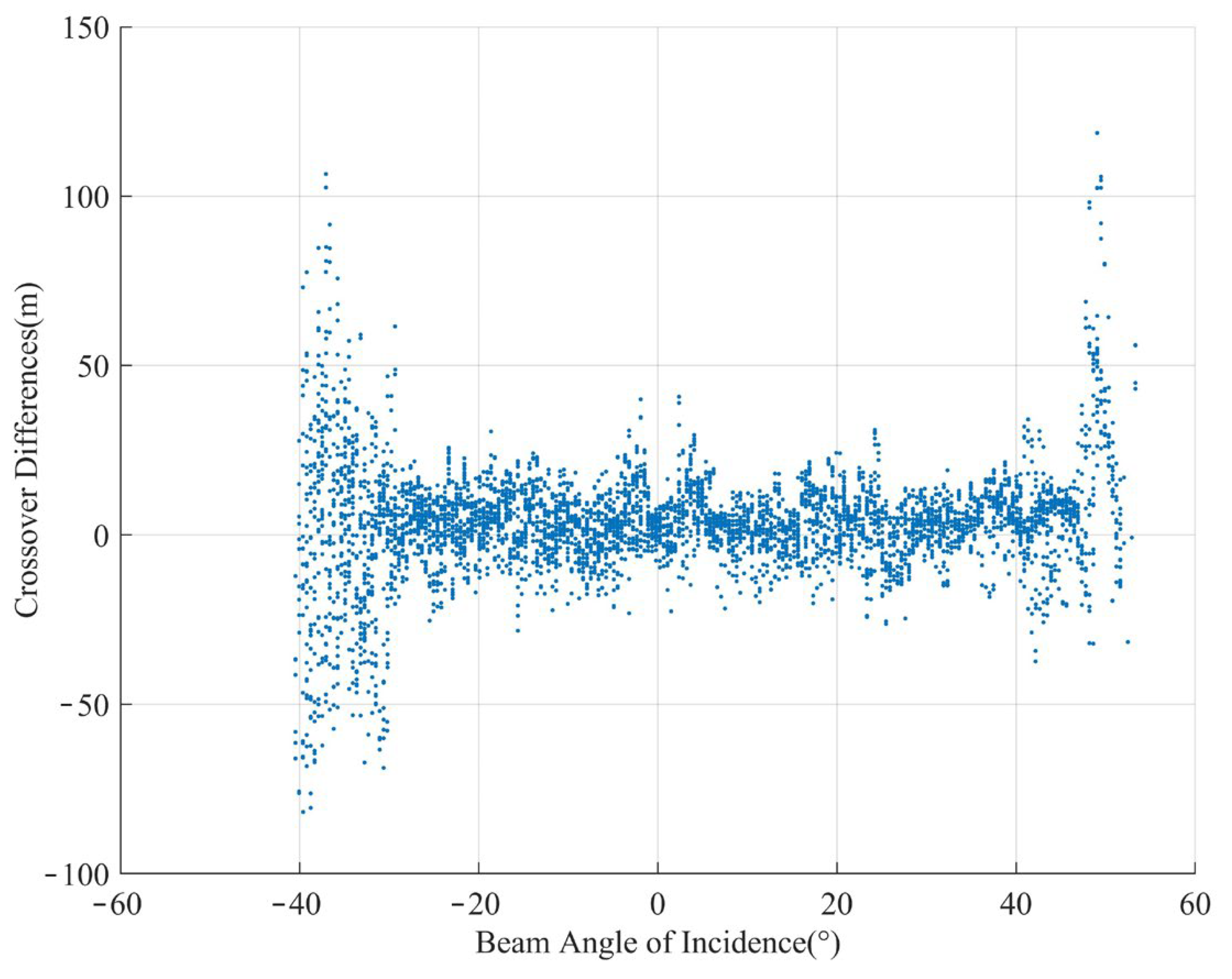

- The error propagation of the incident angle of the edge beam intensifies in the MBES.

- (1) A gross error detection model is constructed based on machine learning. An adaptive Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm is proposed. Dynamically optimizing the neighborhood radius Eps and the minimum number of samples MinPts enables the intelligent recognition of gross errors in crossover point differences.

- (2) An incident angle-weighted error compensation model is established. Taking a function of the incident angle as the weight, an improved quadratic surface model of the depth crossover differences is constructed to suppress the edge error effect of multiple beams.

- (3) A regularized weighted least-squares framework is designed. A Tikhonov regularization matrix is introduced to overcome the ill-conditioning of the normal equation and to keep the solution stable when large volumes of data are processed.
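The first contribution above can be illustrated with a minimal sketch. The article's actual rule for tuning Eps and MinPts is not reproduced in this text, so the heuristic below is an assumption: Eps is taken as a high percentile of the distance from each sample to its MinPts-th nearest neighbour (a k-distance rule), and the crossover differences are treated as one-dimensional samples. The function names `adaptive_eps`, `dbscan`, and `flag_gross_errors` are hypothetical.

```python
import numpy as np

def pairwise_distances(points):
    """Euclidean distance matrix for an (n, d) array of samples."""
    diff = points[:, None, :] - points[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=2))

def adaptive_eps(dist, min_pts, quantile=95.0):
    """Assumed heuristic: Eps is a high percentile of the distance from
    each sample to its min_pts-th nearest neighbour (k-distance)."""
    k_dist = np.sort(dist, axis=1)[:, min_pts]  # column 0 is the sample itself
    return float(np.percentile(k_dist, quantile))

def dbscan(dist, eps, min_pts):
    """Plain DBSCAN on a precomputed distance matrix; label -1 means noise."""
    n = dist.shape[0]
    neighbours = [np.flatnonzero(dist[i] <= eps) for i in range(n)]
    labels = np.full(n, -1)
    visited = np.zeros(n, dtype=bool)
    cluster = 0
    for i in range(n):
        if visited[i]:
            continue
        visited[i] = True
        if len(neighbours[i]) < min_pts:
            continue  # not a core point; stays noise unless claimed as a border point
        labels[i] = cluster
        stack = list(neighbours[i])
        while stack:
            j = stack.pop()
            if labels[j] == -1:
                labels[j] = cluster  # core or border point joins the cluster
            if not visited[j]:
                visited[j] = True
                if len(neighbours[j]) >= min_pts:
                    stack.extend(neighbours[j])  # expand only through core points
        cluster += 1
    return labels

def flag_gross_errors(diffs, min_pts=5):
    """Return a boolean mask of suspected gross errors and the Eps used."""
    pts = np.asarray(diffs, dtype=float).reshape(len(diffs), -1)
    dist = pairwise_distances(pts)
    eps = adaptive_eps(dist, min_pts)
    labels = dbscan(dist, eps, min_pts)
    return labels == -1, eps
```

The dense distance matrix keeps the sketch short but needs O(n²) memory; for survey-scale data a spatial index would be the more practical choice.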

2. Methods

2.1. Error Elimination Method for Multibeam Bathymetry Data Based on Adaptive DBSCAN

2.2. Error Correction Method for Quadratic Surface Model of Bathymetric Data Based on BIA

2.2.1. The Quadratic Surface Error Correction Model for Bathymetric Data Considering the BIA

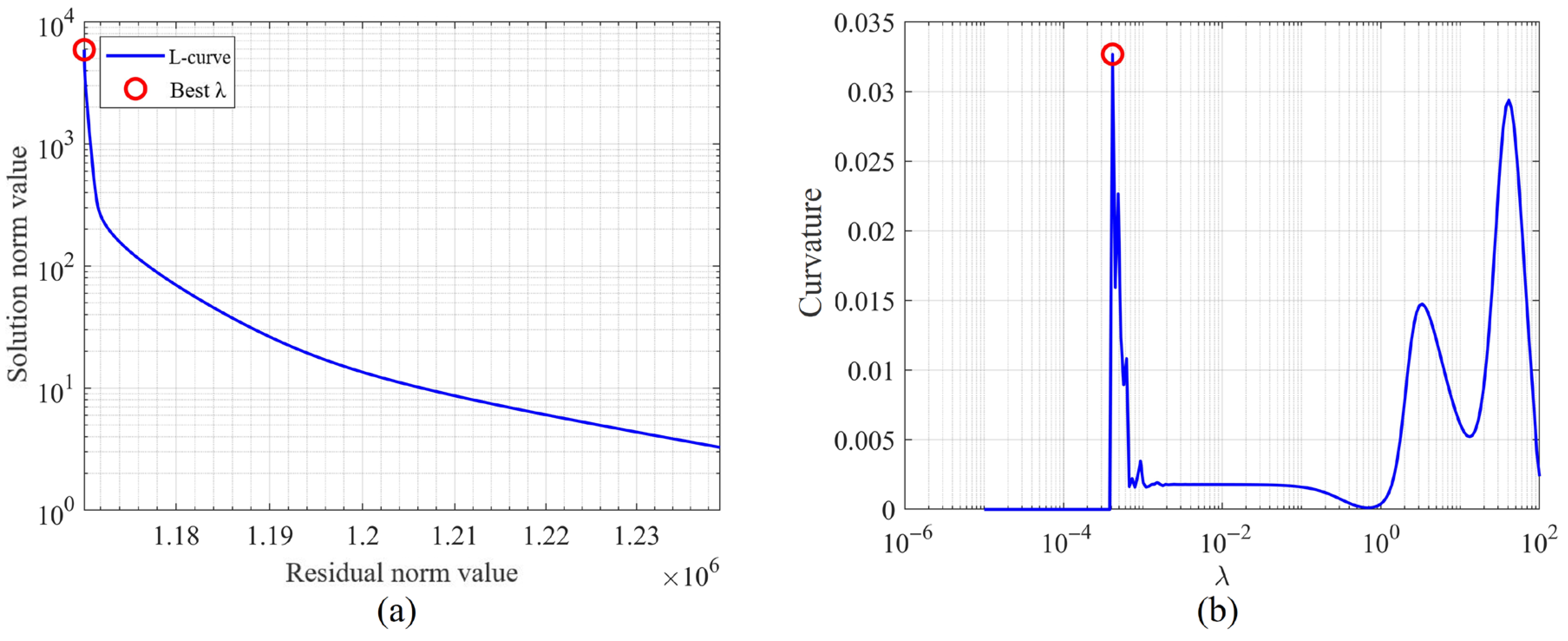

2.2.2. Regularized Weighted Least-Squares Adjustment Method for Crossover Adjustment of Bathymetric Data

- (1) Initializing: The ordinary weighted least-squares (WLS) solution is taken as the starting point of the iteration, which avoids the convergence instability caused by random initialization.

- (2) Calculating the residual: We calculate the deviation between the value predicted by the current iterative solution and the actual observed value L. The absolute value of the residuals, shown in Equation (22), is used to dynamically adjust the weights: outliers correspond to large residuals, so their weights decrease. The size of the residuals reflects how well the model fits the data points and guides the subsequent weight update.

- (3) Updating the weights: Data points with larger residuals (which may be outliers) receive lower weights, weakening their influence on the next round of parameter estimation. In this study, the residual-based weight update function is used to enhance the robustness of the model by adjusting the weights smoothly. When the residual is large, its weight is reduced to weaken its influence on the next round of solutions. When the residual is small, the weight remains essentially unchanged, and the effective water depth information is retained. The weight update function proposed in this study is essentially an equivalent variant of the Huber loss [51]. The Huber loss weight update function is: When approaching 0, it degenerates into Equation (24). The design of the denominator avoids a zero value and, at the same time, achieves a smooth adjustment that enhances the robustness of the model. In multibeam bathymetric data, outliers caused by residual systematic errors can be effectively suppressed. Based on the Huber loss, this study simplifies the algorithm steps by using a specific value. This not only improves the computational efficiency but also provides the required robustness.

- (4) Regularizing solutions: Regularization suppresses the ill-conditioning of the normal matrix and improves the numerical stability of the solution.

- (5) Stopping solutions: The iteration stops when the variation in the solutions between adjacent iterations is less than $10^{-6}$, which indicates that the parameter estimation has stabilized.
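The five steps above can be sketched as an iteratively reweighted, Tikhonov-regularized least-squares loop. This is a sketch under stated assumptions, not the article's implementation: the weight function is the classical Huber form rather than the simplified variant proposed in the study, the residuals are scaled by a median-based estimate, the regularization matrix is the identity, and the names `quadratic_design`, `huber_weight`, `robust_regularized_wls`, `alpha`, and `p0` are hypothetical. The prior weights `p0` stand in for the incident-angle weights.

```python
import numpy as np

def quadratic_design(x, y):
    """Design matrix of a quadratic surface: 1, x, y, x^2, xy, y^2."""
    return np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])

def huber_weight(u, c=1.345):
    """Classical Huber weights (assumed form): 1 inside [-c, c], c/|u| outside."""
    a = np.abs(u)
    return np.where(a <= c, 1.0, c / np.maximum(a, 1e-12))

def robust_regularized_wls(A, L, p0, alpha=1e-3, tol=1e-6, max_iter=100):
    """Steps (1)-(5): WLS start, residuals, reweighting, regularized solve, stop test."""
    R = np.eye(A.shape[1])  # assumed Tikhonov matrix: identity
    # Step (1): ordinary weighted least-squares solution as the starting point.
    X = np.linalg.solve(A.T @ (p0[:, None] * A), A.T @ (p0 * L))
    for _ in range(max_iter):
        # Step (2): residuals of the current solution.
        v = A @ X - L
        # Step (3): down-weight large residuals (scale from the median, an assumption).
        scale = 1.4826 * np.median(np.abs(v)) + 1e-12
        p = p0 * huber_weight(v / scale)
        # Step (4): regularized normal equations (A^T P A + alpha R) X = A^T P L.
        X_new = np.linalg.solve(A.T @ (p[:, None] * A) + alpha * R, A.T @ (p * L))
        # Step (5): stop when successive solutions differ by less than tol.
        if np.linalg.norm(X_new - X) < tol:
            return X_new
        X = X_new
    return X
```

A call such as `robust_regularized_wls(quadratic_design(x, y), L, np.cos(theta) ** 2)` would use a cosine-squared function of the incident angle `theta` as the prior weight; that particular weight function is only an illustrative choice.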

2.3. Evaluation Indicators

3. Materials and Experiments

3.1. Experimental Data

3.2. Experimental Process

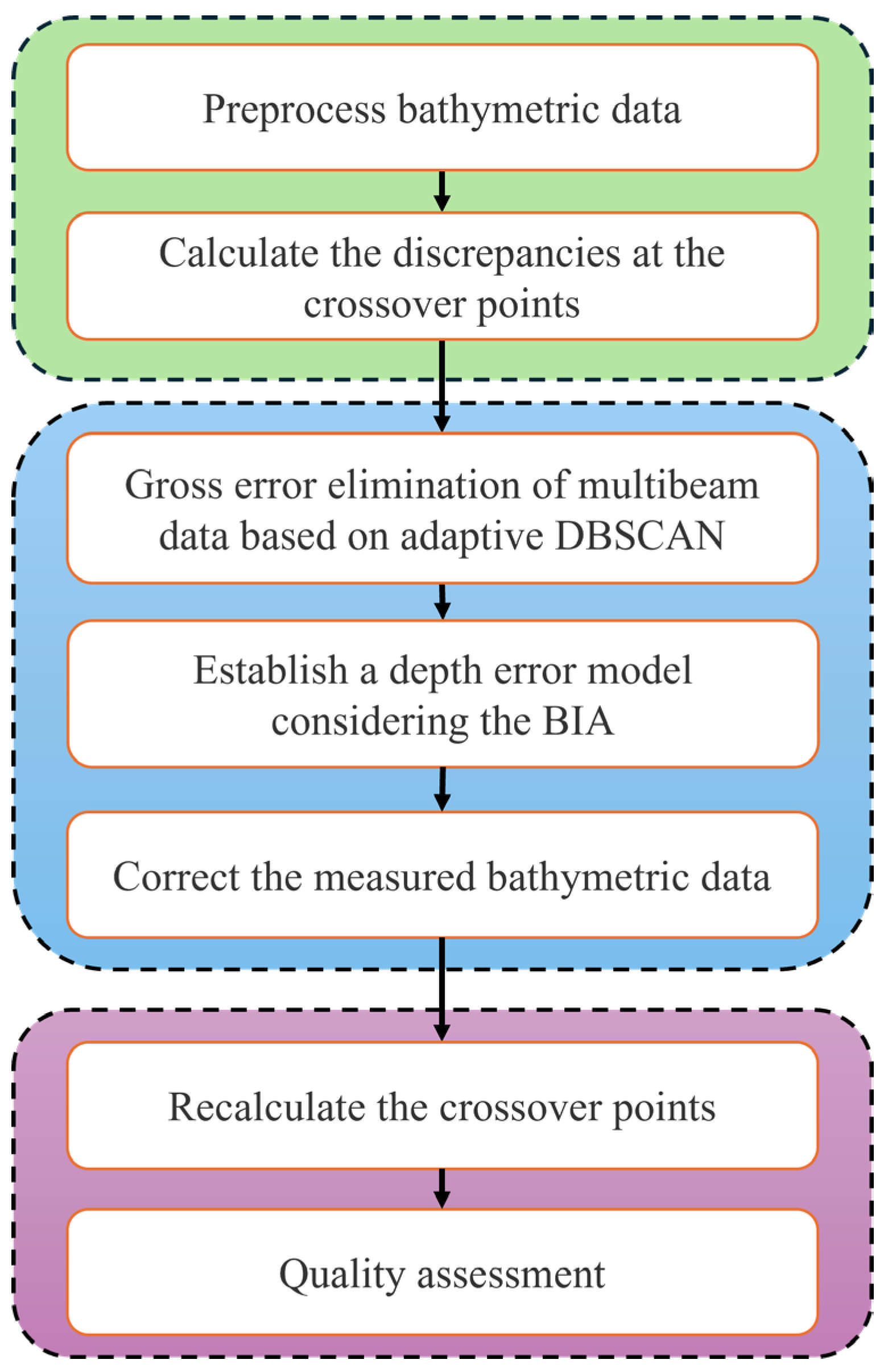

- (1)

- The preprocessing of depth measurement data: In the experiment, data processing such as draft correction, attitude correction, and water level correction was first carried out on the sounding data. Due to the influence of noise, the multibeam sounding data would contain obvious gross errors. Before fusing with high-precision sounding data, these gross errors had to be eliminated. Otherwise, the multibeam sounding data with gross errors being brought into the fused data model would have had a serious impact on the calculation.

- (2)

- Calculating the difference at the crossover of multibeam data: The 2022 edition of the Chinese National Standard for Hydrographic Surveys specification stipulates that depths within 1.0 mm between two points on a map are overlapping depths, while other parameters are not specified. Therefore, in this paper, the positions of each measurement point on the main measurement line were directly selected. This method can avoid additional depth errors and position errors generated during the grid processing of multibeam data, which may affect the accuracy and credibility of the measurement data. In the practical process of deep-sea and far-sea measurement, the scale of the survey area is generally 1:100,000. Therefore, 100 m was selected as the evaluation index for the same position. It is considered that two depths within 100 m belong to the same position and should participate in the comparison of the difference at crossover points.

- (3)

- Eliminating gross errors from multibeam data based on the proposed adaptive DBSCAN: We processed the crossover points data in accordance with the methods in Section 2.1 and set the parameters Eps and MinPts reasonably. Then, the data after the elimination of gross errors were compared with the data before the elimination of gross errors to test the effect of eliminating gross errors.

- (4)

- Establishing the depth error model considering the BIA: Using information such as the crossover point position, depth, and BIA obtained through screening, a bathymetric data correction model was established and the parameters of the characteristic equation were solved using the multiple linear regression method.

- (5)

- Correcting bathymetric data: The depth correction model based on incident angle compensation in Section 2.3 was utilized to correct the low-precision multibeam bathymetric data.

- (6)

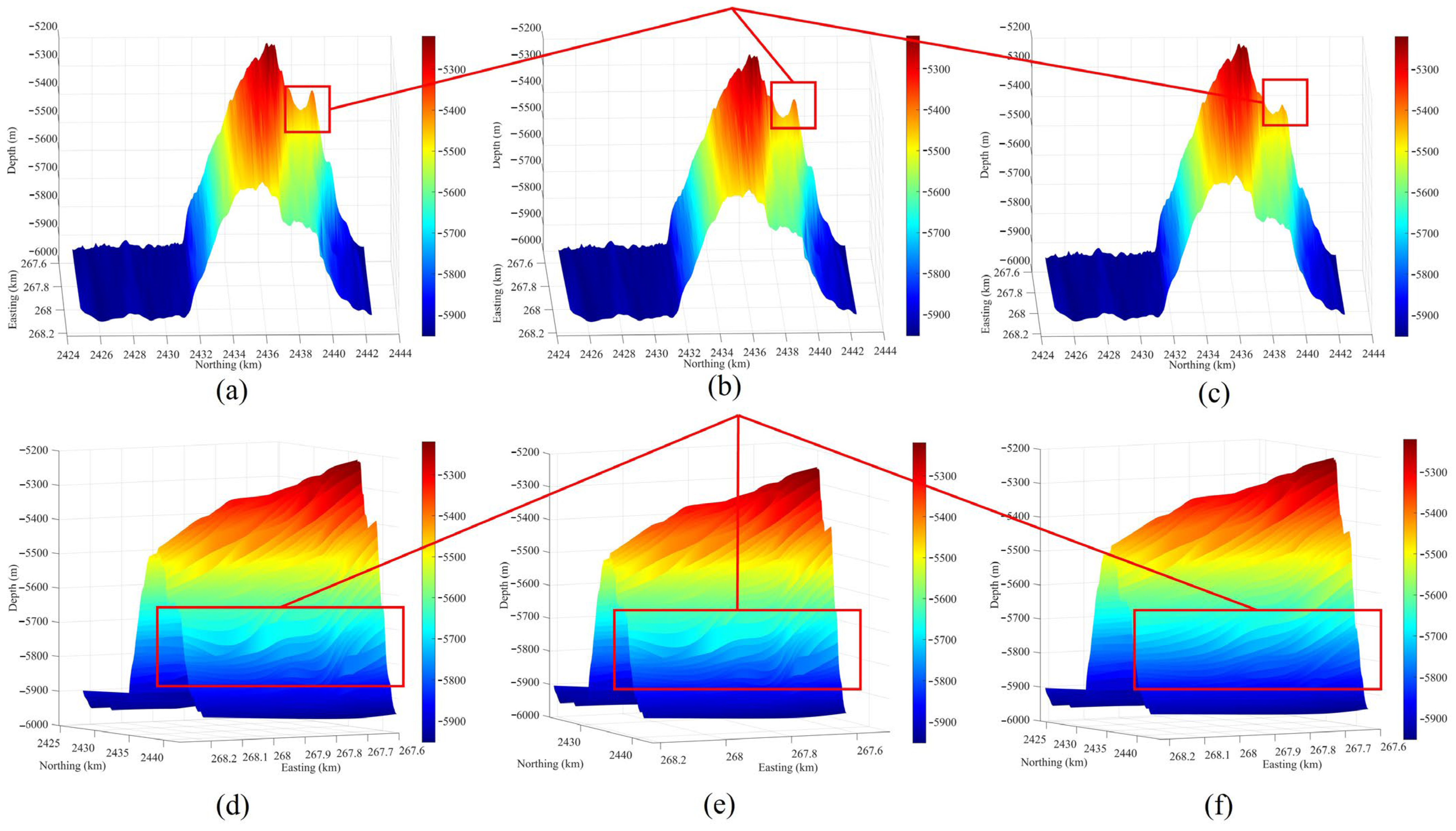

- Assessing data quality: The data for the crossover point difference before correction and the data for the crossover point difference after correction were statistically analyzed. The traditional method of fitting the conic surface to correct the depth was taken as the control group result, and the number of points with the crossover point difference exceeding the limit out of the total number of points was determined. The 2022 edition of the Chinese National Standard for Hydrographic Surveys specification stipulates that the number of points with an over-limit cross-point difference should not exceed 10% of the total points. The requirements for cross-point differences are listed in Table 3. Further, the accuracy of multibeam data was measured based on the average value, standard deviation, maximum value, and minimum value of the crossover point difference.
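Steps (2) and (6) above can be sketched as follows. The sketch assumes that soundings are given as rows of (x, y, depth) in projected metric coordinates and that each check-line sounding is paired with its nearest main-line sounding; both the pairing rule and the names `crossover_differences` and `summarize` are assumptions made for illustration. The statistics follow the abbreviations used in this article (ME as mean error, RMSE as root mean square error, MAE as maximum error).

```python
import numpy as np

def crossover_differences(main, check, radius=100.0):
    """Depth differences between check-line and main-line soundings that
    lie within `radius` metres of each other (nearest main-line point only)."""
    main = np.asarray(main, dtype=float)
    check = np.asarray(check, dtype=float)
    dx = check[:, None, 0] - main[None, :, 0]
    dy = check[:, None, 1] - main[None, :, 1]
    dist = np.hypot(dx, dy)                      # horizontal distances, shape (m, n)
    nearest = dist.argmin(axis=1)                # closest main-line point per check point
    matched = dist[np.arange(len(check)), nearest] <= radius
    return check[matched, 2] - main[nearest[matched], 2]

def summarize(dz):
    """ME, RMSE and maximum absolute error of the crossover differences."""
    dz = np.asarray(dz, dtype=float)
    return {
        "ME": float(dz.mean()),
        "RMSE": float(np.sqrt((dz ** 2).mean())),
        "MAE": float(np.abs(dz).max()),
    }
```

The brute-force distance matrix is only suitable for small examples; with thousands of crossover points a spatial index such as a k-d tree would normally replace it.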

3.3. AI-Assisted Language Polishing

4. Results and Discussion

4.1. Adaptive DBSCAN Parameter Determination and Cluster Analysis

4.2. Determination of Regularization Parameters

4.3. Comparison of the Correction Effects of the Depth Error Model

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BIA | Beam Incidence Angle |
| DBSCAN | Density-Based Spatial Clustering of Applications with Noise |
| MAE | Maximum Error |
| ME | Mean Error |
| MBES | Multibeam Echo Sounder |
| RMSE | Root Mean Square Error |
| USV | Unmanned Surface Vehicle |

References

- Quiñones, J.D.P.; Sladen, A.; Ponte, A.; Lior, I.; Ampuero, J.-P.; Rivet, D.; Meulé, S.; Bouchette, F.; Pairaud, I.; Coyle, P. High Resolution Seafloor Thermometry for Internal Wave and Upwelling Monitoring Using Distributed Acoustic Sensing. Sci. Rep. 2023, 13, 17459.

- Loureiro, G.; Dias, A.; Almeida, J.; Martins, A.; Hong, S.; Silva, E. A Survey of Seafloor Characterization and Mapping Techniques. Remote Sens. 2024, 16, 1163.

- Shang, X.; Zhao, J.; Zhang, H. Obtaining High-Resolution Seabed Topography and Surface Details by Co-Registration of Side-Scan Sonar and Multibeam Echo Sounder Images. Remote Sens. 2019, 11, 1496.

- Ashphaq, M.; Srivastava, P.K.; Mitra, D. Review of Near-Shore Satellite Derived Bathymetry: Classification and Account of Five Decades of Coastal Bathymetry Research. J. Ocean. Eng. Sci. 2021, 6, 340–359.

- Sun, H.; Li, Q.; Bao, L.; Wu, Z.; Wu, L. Progress and Development Trend of Global Refined Seafloor Topography Modeling. Geomat. Inf. Sci. Wuhan Univ. 2022, 47, 1555–1567.

- Janowski, Ł.; Tęgowski, J.; Montereale-Gavazzi, G. Editorial: Seafloor Mapping Using Underwater Remote Sensing Approaches. Front. Earth Sci. 2023, 11, 1306202.

- Yu, X.; Wang, J.; Cui, Y. An Algorithm for Sound Velocity Error Correction Using GA-SVR Considering the Distortion Characteristics of Seabed Topography Measured by Multibeam Sonar Mounted on Autonomous Underwater Vehicle. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 20209–20226.

- Grządziel, A. Method of Time Estimation for the Bathymetric Surveys Conducted with a Multi-Beam Echosounder System. Appl. Sci. 2023, 13, 10139.

- Cao, W.; Fang, S.; Zhu, C.; Feng, M.; Zhou, Y.; Cao, H. Three-Dimensional Non-Uniform Sampled Data Visualization from Multibeam Echosounder Systems for Underwater Imaging and Environmental Monitoring. Remote Sens. 2025, 17, 294.

- Sotelo-Torres, F.; Alvarez, L.V.; Roberts, R.C. An Unmanned Surface Vehicle (USV): Development of an Autonomous Boat with a Sensor Integration System for Bathymetric Surveys. Sensors 2023, 23, 4420.

- Wang, L.; Zhu, D.; Pang, W.; Zhang, Y. A Survey of Underwater Search for Multi-Target Using Multi-AUV: Task Allocation, Path Planning, and Formation Control. Ocean Eng. 2023, 278, 114393.

- Wang, J.; Tang, Y.; Jin, S.; Bian, G.; Zhao, X.; Peng, C. A Method for Multi-Beam Bathymetric Surveys in Unfamiliar Waters Based on the AUV Constant-Depth Mode. J. Ocean. Eng. Sci. 2023, 11, 1466.

- Lubczonek, J.; Kazimierski, W.; Zaniewicz, G.; Lacka, M. Methodology for Combining Data Acquired by Unmanned Surface and Aerial Vehicles to Create Digital Bathymetric Models in Shallow and Ultra-Shallow Waters. Remote Sens. 2022, 14, 105.

- Makar, A. Determination of the Minimum Safe Distance between a USV and a Hydro-Engineering Structure in a Restricted Water Region Sounding. Energies 2022, 15, 2441.

- Yang, F.; Li, J.; Han, L.; Liu, Z. The Filtering and Compressing of Outer Beams to Multibeam Bathymetric Data. Mar. Geophys. Res. 2013, 34, 17–24.

- Rezvani, M.-H.; Sabbagh, A.; Ardalan, A.A. Robust Automatic Reduction of Multibeam Bathymetric Data Based on M-Estimators. Mar. Geod. 2015, 38, 327–344.

- Ferreira, I.O.; Santos, A.D.P.D.; Oliveira, J.C.D.; Medeiros, N.D.G.; Emiliano, P.C. Spatial Outliers Detection Algorithm (SODA) Applied to Multibeam Bathymetric Data Processing. Bol. Ciênc. Geod. 2019, 25, e2019020.

- He, G.; Gao, X.; Li, L.; Gao, P. OCT Monitoring Data Processing Method of Laser Deep Penetration Welding Based on HDBSCAN. Opt. Laser Technol. 2024, 179, 111303.

- Nasaruddin, N.; Masseran, N.; Idris, W.M.R.; Ul-Saufie, A.Z. A SMOTE PCA HDBSCAN Approach for Enhancing Water Quality Classification in Imbalanced Datasets. Sci. Rep. 2025, 15, 13059.

- Li, M.; Sun, L.; Sun, Q.; Jin, S. Adjustment Model Based on Ping Structure for Swath Combination Net. Geomat. Inf. Sci. Wuhan Univ. 2011, 36, 652–655.

- Huang, C.; Lu, X.; Liu, S.; Bian, G.; Ouyang, Y.; Huang, X.; Deng, K. Examination and Assessment of Bathymetric Surveying Products, Part I: Design of Cross Point Discrepancy Array. Hydrogr. Surv. Charting 2017, 37, 11–16.

- Xu, Y.; Gao, A.; Sun, L.; Lv, Y. Adjusting the Sounding Data’s System Error Acquired in a Grid Pattern. Eng. Surv. Mapp. 2016, 25, 21–24+29.

- Jakobsson, M.; Calder, B.; Mayer, L. On the Effect of Random Errors in Gridded Bathymetric Compilations. J. Geophys. Res.-Solid Earth 2002, 107, ETG 14-1–ETG 14-11.

- Yang, F.; Li, J.; Liu, Z.; Han, L. Correction for Depth Biases to Shallow Water Multibeam Bathymetric Data. China Ocean Eng. 2013, 27, 245–254.

- Plant, N.G.; Holland, K.T.; Puleo, J.A. Analysis of the Scale of Errors in Nearshore Bathymetric Data. Mar. Geol. 2002, 191, 71–86.

- Liu, Y.; Wu, Z.; Zhao, D.; Zhou, J.; Shang, J.; Wang, M.; Zhu, C.; Lu, H. The MF Method for Multi-Source Bathymetric Data Fusion and Ocean Bathymetric Model Construction. Acta Geod. Cartogr. Sin. 2019, 48, 1171–1181.

- Guo, Y.; Zocca, S.; Dabove, P.; Dovis, F. A Post-Processing Multipath/NLoS Bias Estimation Method Based on DBSCAN. Sensors 2024, 24, 2611.

- Mehmood, Z.; Wang, Z. Hybrid iForest-DBSCAN for Anomaly Detection and Wind Power Curve Modelling. Expert. Syst. Appl. 2025, 289, 128381.

- Han, J.; Guo, X.; Jiao, R.; Nan, Y.; Yang, H.; Ni, X.; Zhao, D.; Wang, S.; Ma, X.; Yan, C.; et al. An Automatic Method for Delimiting Deformation Area in InSAR Based on HNSW-DBSCAN Clustering Algorithm. Remote Sens. 2023, 15, 4287.

- Li, Y.; Wang, J.; Zhao, H.; Wang, C.; Shao, Q. Adaptive DBSCAN Clustering and GASA Optimization for Underdetermined Mixing Matrix Estimation in Fault Diagnosis of Reciprocating Compressors. Sensors 2023, 24, 167.

- Frankl, N.; Kupavskii, A. Nearly K-Distance Sets. Discrete. Comput. Geom. 2023, 70, 455–494.

- Dirgantoro, G.P.; Soeleman, M.A.; Supriyanto, C. Smoothing Weight Distance to Solve Euclidean Distance Measurement Problems in K-Nearest Neighbor Algorithm. In Proceedings of the 2021 IEEE 5th International Conference on Information Technology, Information Systems and Electrical Engineering (ICITISEE), Purwokerto, Indonesia, 24–25 November 2021; pp. 294–298.

- Agarwal, A.; Kakade, S.M.; Lee, J.D.; Mahajan, G. On the Theory of Policy Gradient Methods: Optimality, Approximation, and Distribution Shift. J. Mach. Learn. Res. 2021, 22, 1–76.

- Alikhanov, A.A.; Asl, M.S.; Huang, C. Stability Analysis of a Second-Order Difference Scheme for the Time-Fractional Mixed Sub-Diffusion and Diffusion-Wave Equation. Fract. Calc. Appl. Anal. 2024, 27, 102–123.

- Mokhtari, F.; Akhlaghi, M.I.; Simpson, S.L.; Wu, G.; Laurienti, P.J. Sliding Window Correlation Analysis: Modulating Window Shape for Dynamic Brain Connectivity in Resting State. NeuroImage 2019, 189, 655–666.

- Luo, J. The Processing Method of Multi-beam and Single-beam Bathymetric Data Fusion. Hydrogr. Surv. Charting 2018, 38, 21–24.

- Marjetič, A.; Ambrožič, T.; Savšek, S. Use of Total Least Squares Adjustment in Geodetic Applications. Appl. Sci. 2024, 14, 2516.

- Vestøl, O.; Breili, K.; Taskjelle, T. Common Adjustment of Geoid and Mean Sea Level with Least Squares Collocation. J. Geod. 2025, 99, 40.

- Li, Z.; Peng, Z.; Zhang, Z.; Chu, Y.; Xu, C.; Yao, S.; García-Fernández, Á.F.; Zhu, X.; Yue, Y.; Levers, A.; et al. Exploring Modern Bathymetry: A Comprehensive Review of Data Acquisition Devices, Model Accuracy, and Interpolation Techniques for Enhanced Underwater Mapping. Front. Mar. Sci. 2023, 10, 1178845.

- Lurton, X. An Introduction to Underwater Acoustics: Principles and Applications, 2nd ed.; Springer: Berlin, Germany, 2010; Chapter 7.2; pp. 235–238.

- Xu, T.; Yang, Y. Robust Tikhonov Regularization Method and Its Applications. Geomat. Inf. Sci. Wuhan Univ. 2003, 28, 719–722.

- Fischer, A.; Cellmer, S.; Nowel, K. Assessment of the Double-Parameter Iterative Tikhonov Regularization for Single-Epoch Measurement Model-Based Precise GNSS Positioning. Measurement 2023, 218, 113251.

- Li, M.; Wang, L.; Luo, C.; Wu, H. A New Improved Fractional Tikhonov Regularization Method for Moving Force Identification. Structures 2024, 60, 105840.

- Gerth, D. A New Interpretation of (Tikhonov) Regularization. Inverse Probl. 2021, 37, 064002.

- Du, W.; Zhang, Y. The Calculation of High-Order Vertical Derivative in Gravity Field by Tikhonov Regularization Iterative Method. Math. Probl. Eng. 2021, 2021, 8818552.

- Xing, J.; Chen, X.-X.; Ma, L. Bathymetry Inversion Using the Modified Gravity-Geologic Method: Application of the Rectangular Prism Model and Tikhonov Regularization. Appl. Geophys. 2020, 17, 377–389.

- Li, X.; Xiong, Y.; Xu, S.; Chen, W.; Zhao, B.; Zhang, R. A Multipath Error Reduction Method for BDS Using Tikhonov Regularization with Parameter Optimization. Remote Sens. 2023, 15, 3400.

- Calvetti, D.; Morigi, S.; Reichel, L.; Sgallari, F. Tikhonov Regularization and the L-curve for Large Discrete Ill-Posed Problems. J. Comput. Appl. Math. 2000, 123, 423–446.

- Maharani, M.; Saputro, D.R.S. Generalized Cross Validation (GCV) in Smoothing Spline Nonparametric Regression Models. J. Phys. Conf. Ser. 2021, 1808, 012053.

- Wang, L.; Xu, C.; Lu, T. Ridge Estimation Method in Ill-posed Weighted Total Least Squares Adjustment. Geomat. Inf. Sci. Wuhan Univ. 2010, 35, 1346–1350.

- Tong, H. Functional Linear Regression with Huber Loss. J. Complex. 2023, 74, 101696.

| Method | X0 | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | X9 | X10 | Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| L-curve | 0.9940 | 0.9907 | 0.9962 | 1.0065 | 1.0075 | 0.9900 | 0.9971 | 1.0158 | 1.0106 | 0.9832 | 0.9940 | 0.22 |
| GCV | 0.9903 | 0.9871 | 0.9923 | 1.0029 | 1.0037 | 0.9862 | 0.9935 | 1.0120 | 1.0069 | 0.9794 | 0.9903 | 8.03 |
| Ridge trace | 0.9940 | 0.9907 | 0.9962 | 1.0065 | 1.0075 | 0.9900 | 0.9971 | 1.0158 | 1.0106 | 0.9832 | 0.9940 | 0.26 |
| True value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | - |

| Survey Area | Number of Crossover Points | ME (m) | RMSE (m) | MAE (m) |
|---|---|---|---|---|
| Z01 | 4836 | 3.76 | 16.21 | 118.61 |
| Z02 | 6102 | 2.54 | 19.64 | 127.62 |
| Z03 | 7216 | −2.58 | 24.18 | 146.77 |

| Depth Range Z (m) | Limit of the Crossover Depth Difference (m) |
|---|---|
| 0–20 | ±0.5 |
| 20–30 | ±0.6 |
| 30–50 | ±0.7 |
| 50–100 | ±1.5 |
| >100 | ±Z × 3% |
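The depth-dependent limits tabulated above, together with the 10% criterion stated in Section 3.2, can be expressed as a short check. This is a minimal sketch: the table does not say which range a boundary depth (for example, exactly 20 m) belongs to, so assigning it to the shallower range is an assumption, and the function names are hypothetical.

```python
def crossover_limit(depth_m):
    """Permitted absolute crossover depth difference (m) for a given depth (m).
    Boundary depths are assigned to the shallower range (an assumption)."""
    if depth_m <= 20.0:
        return 0.5
    if depth_m <= 30.0:
        return 0.6
    if depth_m <= 50.0:
        return 0.7
    if depth_m <= 100.0:
        return 1.5
    return 0.03 * depth_m  # deeper than 100 m: 3% of the depth

def over_limit_ratio(dz, depths):
    """Fraction of crossover points whose difference exceeds the limit."""
    pairs = list(zip(dz, depths))
    over = sum(1 for d, z in pairs if abs(d) > crossover_limit(z))
    return over / len(pairs)

def meets_standard(dz, depths, max_ratio=0.10):
    """True when no more than 10% of the crossover points are over the limit."""
    return over_limit_ratio(dz, depths) <= max_ratio
```

For a sounding at 1000 m depth, for instance, the limit evaluates to 3% of the depth, that is, 30 m.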

| Method | ME Z01 (m) | ME Z02 (m) | ME Z03 (m) | RMSE Z01 (m) | RMSE Z02 (m) | RMSE Z03 (m) | MAE Z01 (m) | MAE Z02 (m) | MAE Z03 (m) |
|---|---|---|---|---|---|---|---|---|---|
| Original data | 3.76 | 2.54 | −2.58 | 16.21 | 19.64 | 24.18 | 118.61 | 127.62 | 146.77 |
| Method 1 | 0 | 0 | 0 | 15.89 | 18.52 | 21.96 | 109.35 | 118.26 | 128.66 |
| Method 2 | 0 | 0 | 0 | 10.77 | 14.15 | 16.95 | 48.61 | 50.09 | 69.91 |
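To read the comparison above in relative terms, the RMSE values can be converted into percentage reductions with respect to the original data. The short sketch below only restates the tabulated numbers; the helper names are hypothetical.

```python
# RMSE values (m) copied from the comparison table above.
ORIGINAL_RMSE = {"Z01": 16.21, "Z02": 19.64, "Z03": 24.18}
METHOD_RMSE = {
    "Method 1": {"Z01": 15.89, "Z02": 18.52, "Z03": 21.96},
    "Method 2": {"Z01": 10.77, "Z02": 14.15, "Z03": 16.95},
}

def relative_reduction(before, after):
    """Percentage reduction of `after` relative to `before`."""
    return 100.0 * (before - after) / before

def rmse_reductions():
    """RMSE reduction (%) per method and survey area, rounded to one decimal."""
    return {
        method: {
            area: round(relative_reduction(ORIGINAL_RMSE[area], value), 1)
            for area, value in values.items()
        }
        for method, values in METHOD_RMSE.items()
    }
```

With these numbers, Method 2 lowers the RMSE by roughly 33.6%, 28.0%, and 29.9% in Z01, Z02, and Z03, respectively.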

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yuan, Q.; Xu, W.; Jin, S.; Sun, T. A Crossover Adjustment Method Considering the Beam Incident Angle for a Multibeam Bathymetric Survey Based on USV Swarms. J. Mar. Sci. Eng. 2025, 13, 1364. https://doi.org/10.3390/jmse13071364
