Visual-Based Position Estimation for Underwater Vehicles Using Tightly Coupled Hybrid Constrained Approach

Abstract

1. Introduction

1. A robust three-step hybrid tracking strategy is proposed. Feature tracking first provides a rough initial pose that converges reliably, the direct method then performs rapid sparse refinement, and the map points are finally reprojected with the refined pose. The aim is accurate pose estimation between adjacent frames and a larger, more stable set of tracked features.

- 2.

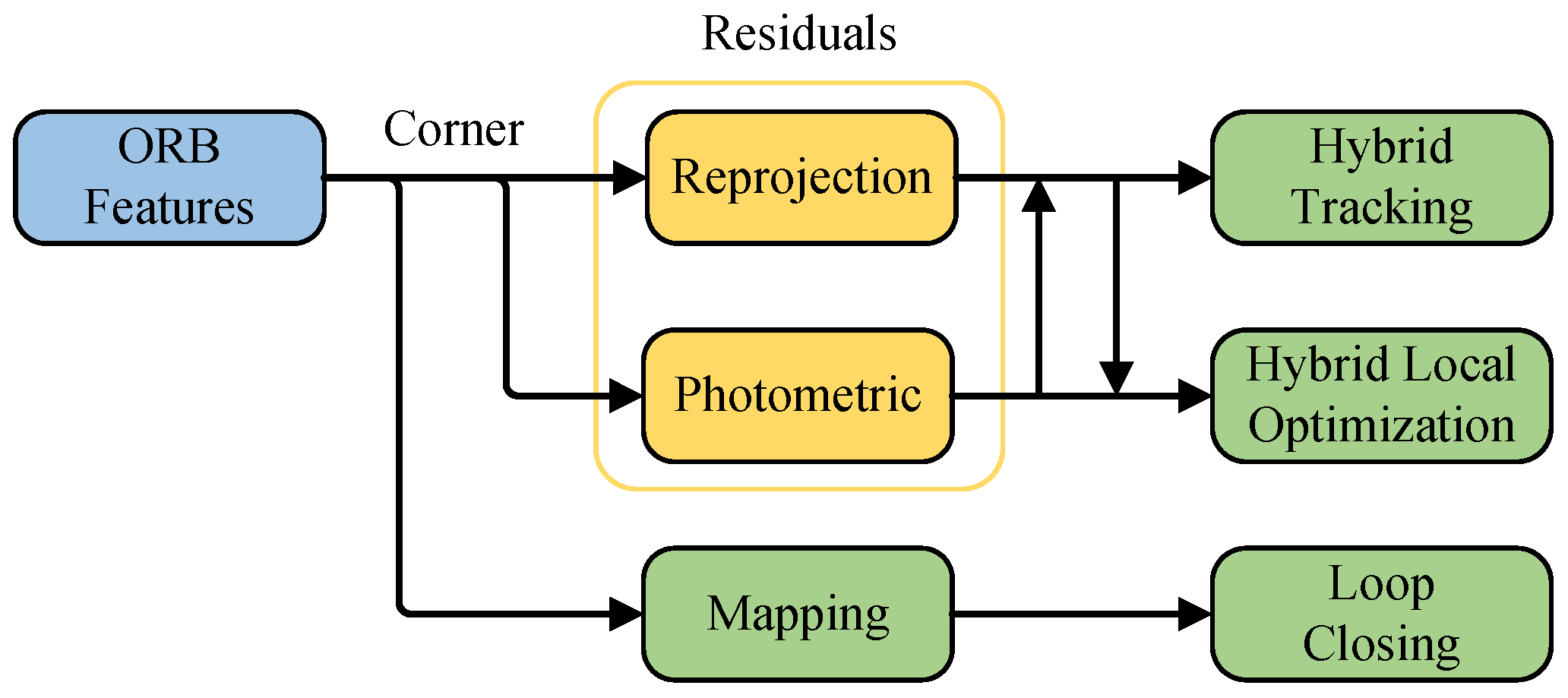

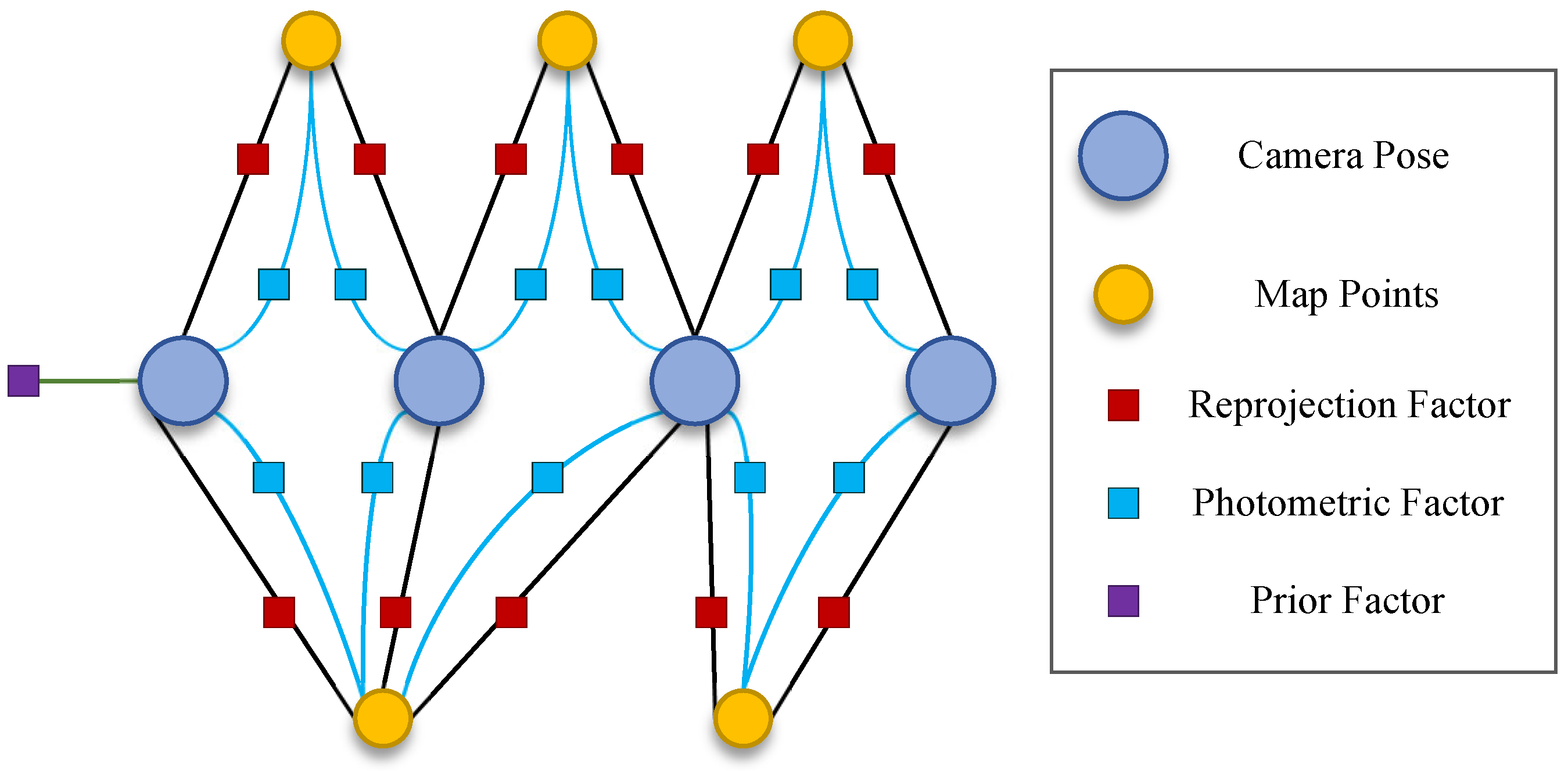

- Using reliably tracked features, a tight coupling hybrid visual optimization method is proposed. Robust features are used to jointly optimize two residuals: the reprojection error and the photometric error. This method tightly couples the hybrid VO and mapping processes, improving localization and mapping accuracy.

3. A tightly coupled hybrid monocular SLAM framework for underwater scenes, named UTH-SLAM, is constructed. The tracking stability and localization accuracy of the system are demonstrated using publicly available high-precision underwater datasets and natural underwater data.
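The joint optimization of the reprojection error and the photometric error on the same features can be illustrated with a minimal Python sketch. It is not the UTH-SLAM implementation: the Huber kernel, its thresholds, the weights `w_geo` and `w_photo`, the nearest-neighbour image lookup, and all function names are assumptions made for illustration only.

```python
import math


def transform(R, t, p):
    """Map a world point p into the camera frame: R @ p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(p_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (z > 0) to pixel coordinates."""
    x, y, z = p_cam
    return fx * x / z + cx, fy * y / z + cy


def sample(image, u, v):
    """Nearest-neighbour intensity lookup, clamped to the image border."""
    row = min(max(int(round(v)), 0), len(image) - 1)
    col = min(max(int(round(u)), 0), len(image[0]) - 1)
    return image[row][col]


def huber(r, delta):
    """Huber loss: quadratic for small residuals, linear for large ones."""
    a = abs(r)
    return 0.5 * r * r if a <= delta else delta * (a - 0.5 * delta)


def joint_cost(R, t, features, K, image, w_geo=1.0, w_photo=1.0):
    """Sum of weighted geometric and photometric costs over all features.

    Each feature is a dict with a 3D map point, the pixel where it was
    matched in the current frame, and its intensity in the reference frame.
    """
    fx, fy, cx, cy = K
    total = 0.0
    for f in features:
        u, v = project(transform(R, t, f["point_world"]), fx, fy, cx, cy)
        # Reprojection residual: distance between matched and projected pixel.
        r_geo = math.hypot(f["observed_px"][0] - u, f["observed_px"][1] - v)
        # Photometric residual: intensity difference at the projected pixel.
        r_photo = sample(image, u, v) - f["ref_intensity"]
        total += w_geo * huber(r_geo, 2.0) + w_photo * huber(r_photo, 10.0)
    return total


# Hypothetical toy data: a 5x5 image whose intensity grows with the column.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
K = (100.0, 100.0, 2.0, 2.0)
image = [[10 * c for c in range(5)] for _ in range(5)]
features = [{"point_world": (0.0, 0.0, 2.0), "observed_px": (2.0, 2.0), "ref_intensity": 20}]

print(joint_cost(IDENTITY, (0.0, 0.0, 0.0), features, K, image))
print(joint_cost(IDENTITY, (0.02, 0.0, 0.0), features, K, image))
```

In this toy case a small translation error moves the projection by one pixel, so both residuals grow at once. An optimizer minimizing such a cost over the pose would therefore be constrained by geometry and appearance simultaneously.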

2. Related Work

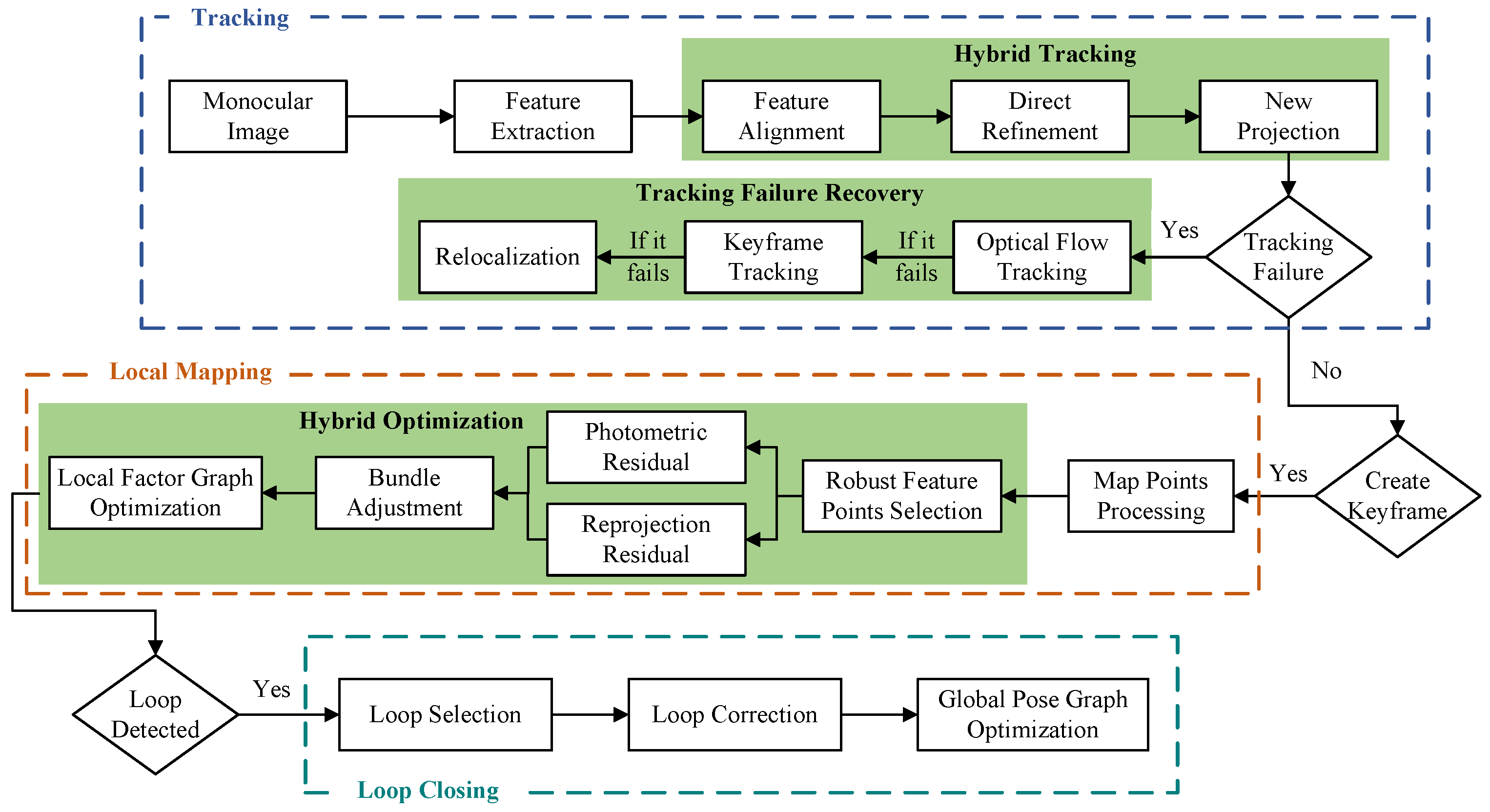

3. The Framework of UTH-SLAM

3.1. System Overview

3.2. Tracking Thread

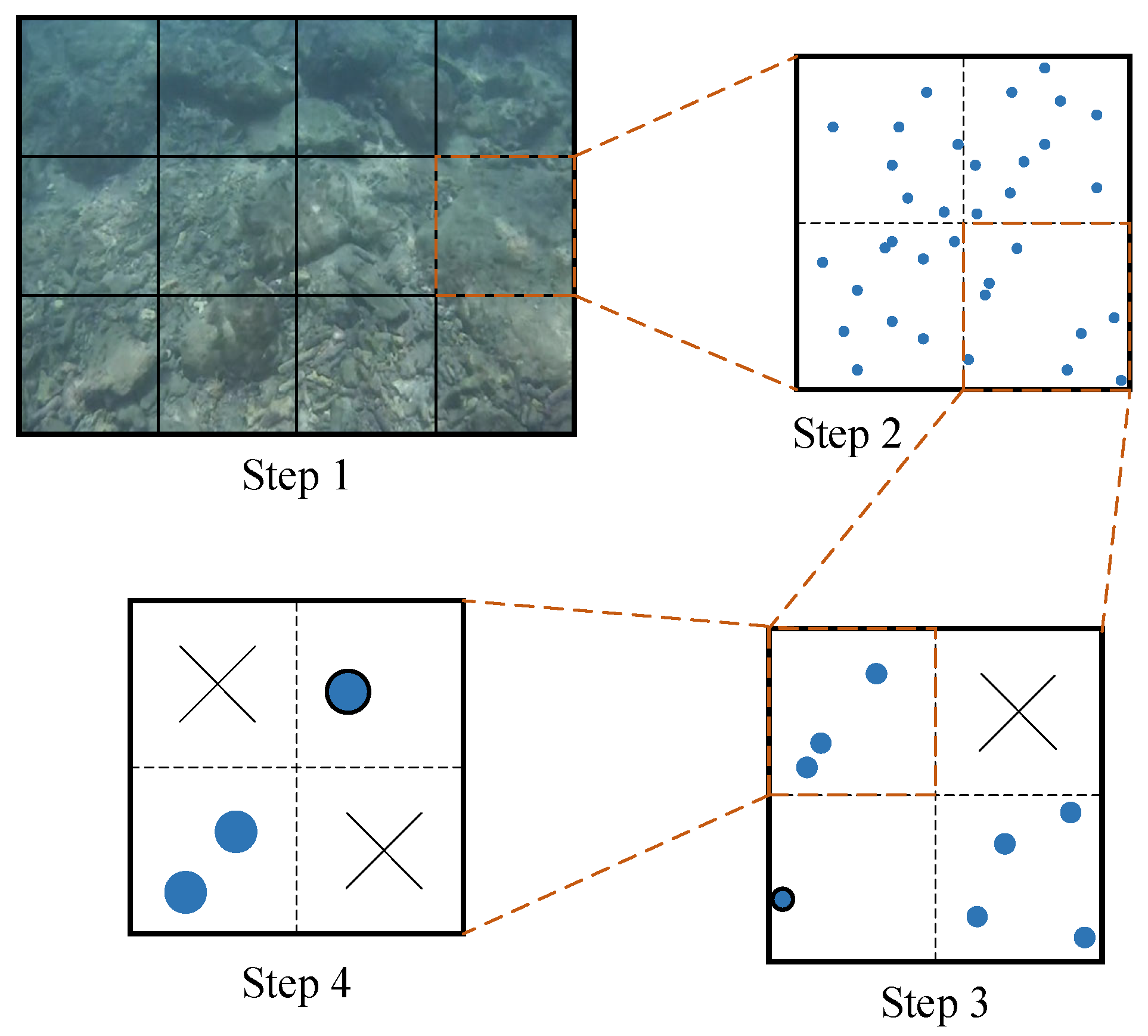

3.2.1. Feature Extraction

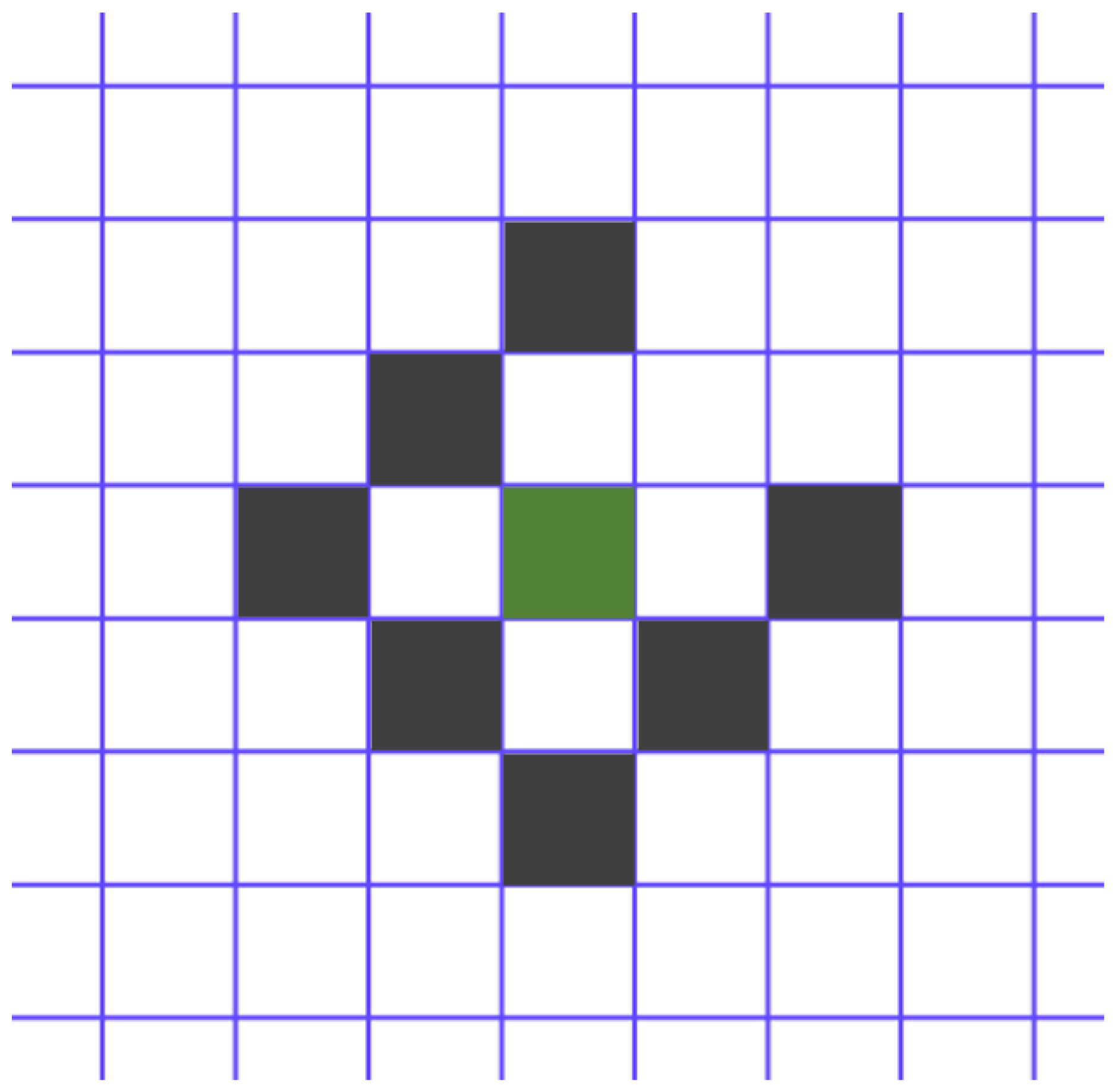

3.2.2. Hybrid Tracking Algorithm

3.2.3. Tracking Failure Recovery Module

3.3. Local Mapping Thread

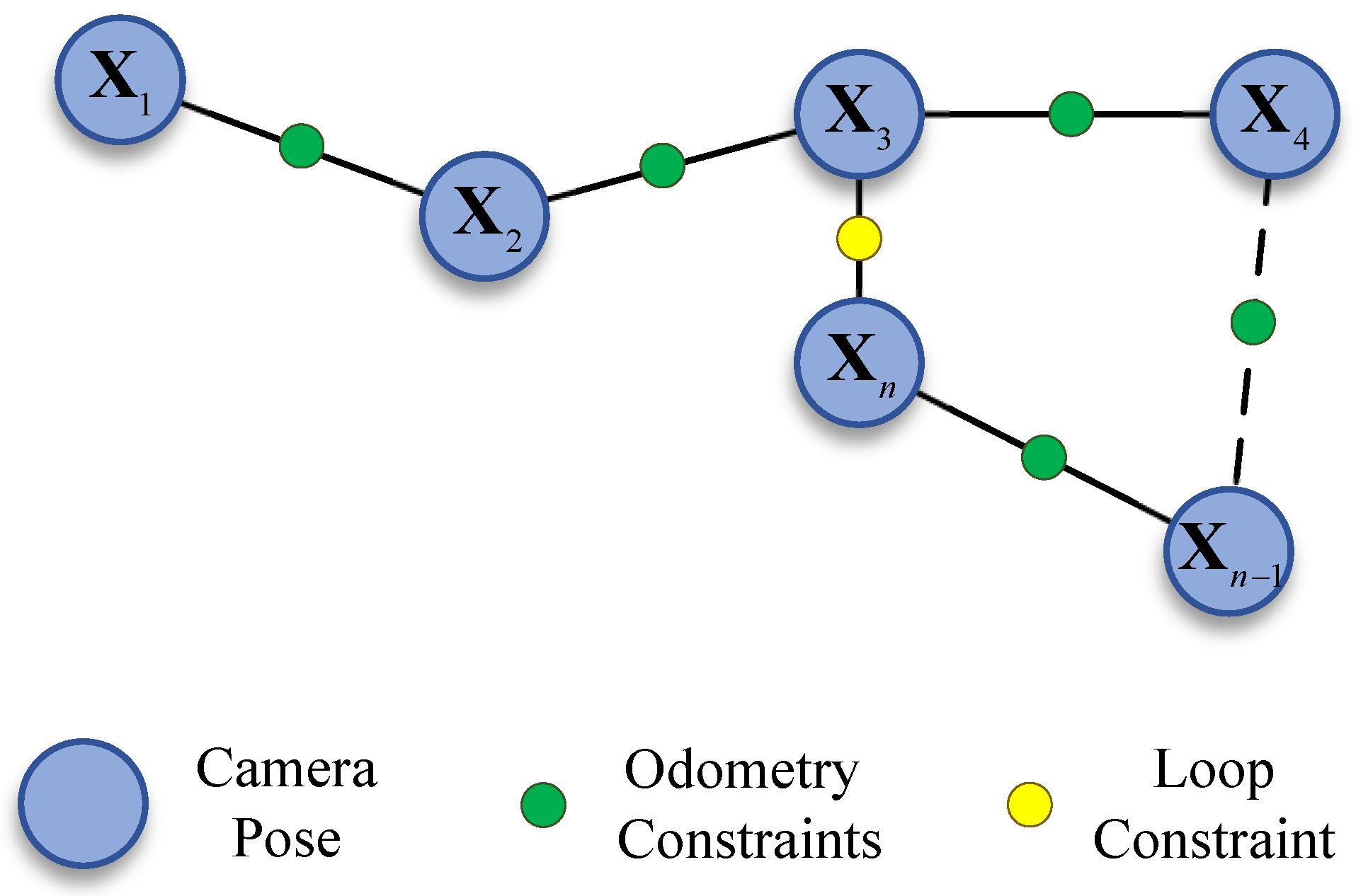

3.4. Loop Closing Thread

4. Experiments and Results

4.1. HAUD Experiments

4.1.1. Global Consistency

1. Among systems without a loop closing thread, LDSO and UTH-SLAM, which are both based on a hybrid method, outperform the other two systems. This supports the hypothesis that hybrid features or residuals improve localization accuracy by adding new constraints to pose estimation in visual scenes of good quality. The proposed hybrid approach still outperforms LDSO, with an average ATE reduction of 11.11%. This is primarily because the proposed approach exploits the strengths of both strategies not only in tracking but also in optimization, which yields more accurate keyframe pose estimates within the local map.

2. For systems with a loop closing thread, ORB-SLAM3 and LDSO perform comparably. LDSO stores all keyframes along with their associated indirect features and depth estimates in memory for loop closure. However, because it lacks the feature matching needed to detect redundant keyframes, it cannot apply a keyframe culling strategy, which leads to relatively large memory consumption. In contrast, ORB-SLAM3 manages redundant keyframes and co-visibility between keyframes more effectively. The proposed system leverages this advantage to achieve superior positioning accuracy. UTH-SLAM reduces the Avg. ATE by 20.31% and 22.72% compared to ORB-SLAM3 and LDSO, respectively.

3. All three systems tested with loop closing threads use BoW to detect loops. When a loop closure is detected successfully, the trajectory accuracy improves significantly after loop correction. The global localization accuracies of ORB-SLAM3, LDSO, and UTH-SLAM improve by 32.63%, 26.67%, and 36.25%, respectively, after enabling loop closing threads. This also demonstrates how effectively loop closure reduces system drift. In this respect the feature-based approach is more convenient than the direct method, because feature descriptors serve both tracking and loop closure detection.
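The percentages quoted in the list above follow from the Avg. ATE values reported for the HAUD sequences. The short Python sketch below recomputes them. The helper name `relative_reduction` and the dictionary layout are illustrative choices, while the numbers are copied from the Avg. row of the corresponding table.

```python
def relative_reduction(baseline, value):
    """Percentage by which `value` is lower than `baseline`."""
    return 100.0 * (baseline - value) / baseline


# Avg. ATE on HAUD, without and with loop closing (LC).
AVG_ATE = {
    "ORB-SLAM3": {"without_lc": 0.095, "with_lc": 0.064},
    "LDSO": {"without_lc": 0.090, "with_lc": 0.066},
    "UTH-SLAM": {"without_lc": 0.080, "with_lc": 0.051},
}

ours = AVG_ATE["UTH-SLAM"]

# UTH-SLAM against LDSO when no system uses loop closing.
ldso_no_lc = AVG_ATE["LDSO"]["without_lc"]
print(f"vs LDSO, without LC: {relative_reduction(ldso_no_lc, ours['without_lc']):.2f}%")

# UTH-SLAM against the other systems when loop closing is enabled.
for name in ("ORB-SLAM3", "LDSO"):
    gain = relative_reduction(AVG_ATE[name]["with_lc"], ours["with_lc"])
    print(f"vs {name}, with LC: {gain:.2f}%")

# Improvement each system obtains by enabling its own loop closing thread.
for name, ate in AVG_ATE.items():
    gain = relative_reduction(ate["without_lc"], ate["with_lc"])
    print(f"{name}, LC gain: {gain:.2f}%")
```

The recomputed values agree with the quoted figures to within the last printed digit. The comparison with LDSO under loop closing evaluates to 22.727...%, so the quoted 22.72% appears to be truncated rather than rounded.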

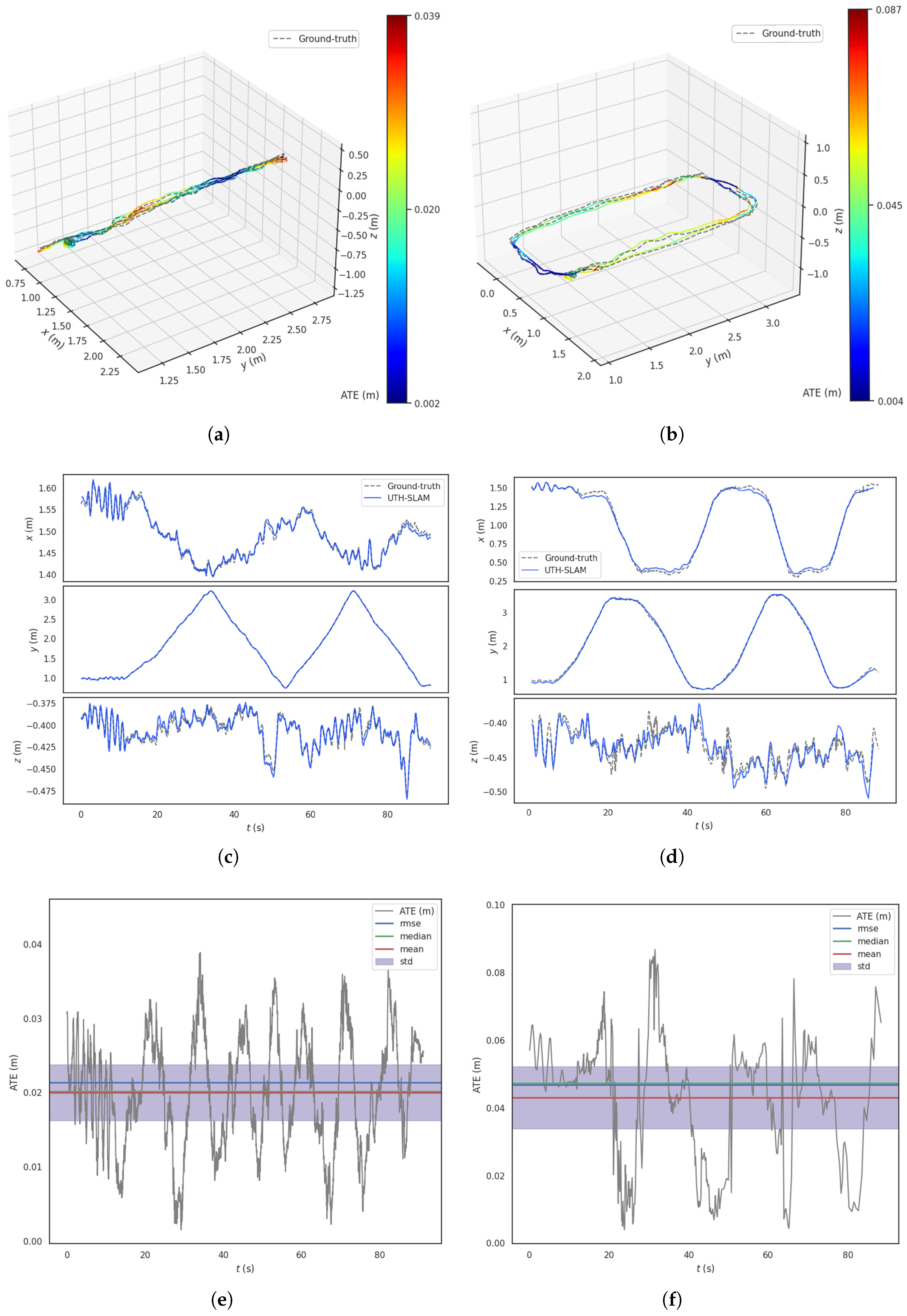

1. Figure 10a,b show the trajectories estimated by UTH-SLAM compared to the ground truth. The estimated trajectories are drawn as colored lines whose color encodes the ATE at each location. In some regions, the error fluctuates due to changes in image texture and motion. As shown in Figure 10c,d, tracking is more accurate along the x–y axes than along the z-axis, especially near the inflection point in the trajectory. The main cause is the sudden change in z-axis movement. In the first step of the proposed hybrid tracking method, the constant-velocity assumption for the carrier does not match the actual motion at the inflection point, which increases the error. Overall, UTH-SLAM demonstrates good tracking performance across all three axes.

2. The ATE fluctuates fairly uniformly over time, as shown in Figure 10e,f. The error distributions of the estimated trajectories show no large deviations, and the RMSE, mean, and median values remain closely aligned. This also demonstrates that the proposed system can maintain a relatively stable working state over extended periods, avoiding error accumulation and drastic fluctuations.
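The effect of the constant-velocity assumption at an inflection point can be made concrete with a small Python sketch. It is a translation-only simplification (rotation is ignored), and the function name and the sample positions are hypothetical, not taken from the datasets.

```python
def predict_constant_velocity(prev_pos, last_pos):
    """Extrapolate the next position by repeating the last displacement."""
    return tuple(last + (last - prev) for prev, last in zip(prev_pos, last_pos))


def axis_errors(predicted, actual):
    """Absolute prediction error along each axis (x, y, z)."""
    return tuple(abs(p - a) for p, a in zip(predicted, actual))


# Hypothetical positions in metres: the vehicle descends, then starts to ascend.
p_prev = (0.00, 0.00, -1.00)
p_last = (0.05, 0.00, -1.10)
p_actual = (0.10, 0.00, -1.00)  # z motion reverses at this frame

p_pred = predict_constant_velocity(p_prev, p_last)
print("predicted:", p_pred)
print("per-axis error:", axis_errors(p_pred, p_actual))
```

In this example the x prediction stays accurate because the horizontal motion is steady, while the z prediction overshoots by twice the per-frame displacement. The later tracking steps then have to correct this poorer initial guess, which is consistent with the larger z-axis error reported near the inflection point.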

4.1.2. Local Accuracy

4.2. AQUALOC Experiments

4.2.1. Quantitative Analysis

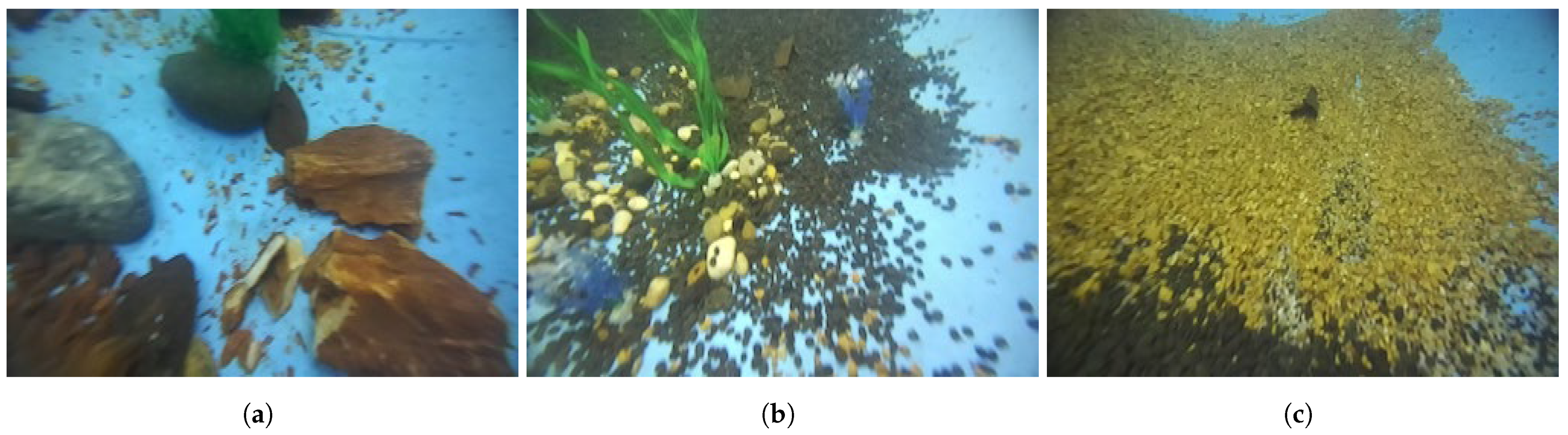

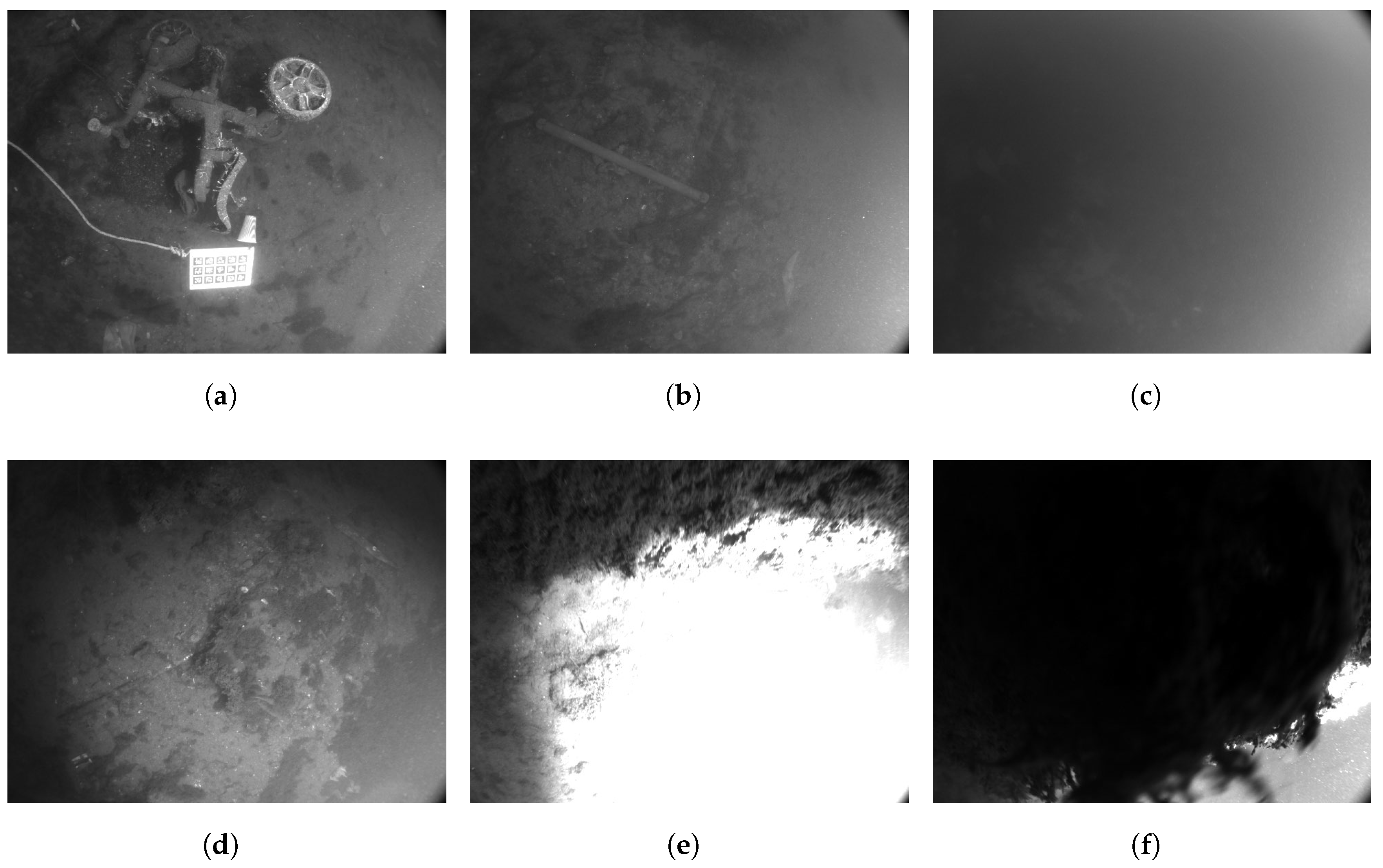

1. In natural underwater scenes, the effect of light variations becomes more pronounced, and both DSO and LDSO perform poorly. Their tracking assumes photometric invariance, which degrades pose estimation between adjacent frames and, in turn, the localization results. Furthermore, in Seq01 the ROV undergoes large-scale motion, which is challenging for direct tracking. DSO and LDSO drift drastically, with ATEs of 0.933 m and 0.709 m, respectively, whereas UTH-SLAM reaches 0.129 m. The proposed hybrid tracking strategy estimates a rough pose with a feature-based method, refines it with the direct method, and then reprojects the map points. This approach is more robust to large geometric distortions and also improves estimation accuracy. Its effectiveness is evident in the Avg. ATE, which UTH-SLAM reduces by 76% and 71.6% compared to DSO and LDSO, respectively.

2. In the harbor sequences, ORB-SLAM3 outperforms DSO and LDSO owing to its enhanced calibration of the underwater camera intrinsic parameters and its advanced processing algorithms. However, feature-based tracking is unstable in low-texture sequences, as illustrated in Figure 12c. The proposed system improves localization accuracy by 47.25% compared to ORB-SLAM3. This improvement can be attributed to the hybrid approach, which fully leverages both the feature-based and direct methods by refining the pose at the front-end and tightly coupling the two residuals at the optimization stage. This enables UTH-SLAM to generate more precise keyframe pose estimates.

4.2.2. Qualitative Analysis

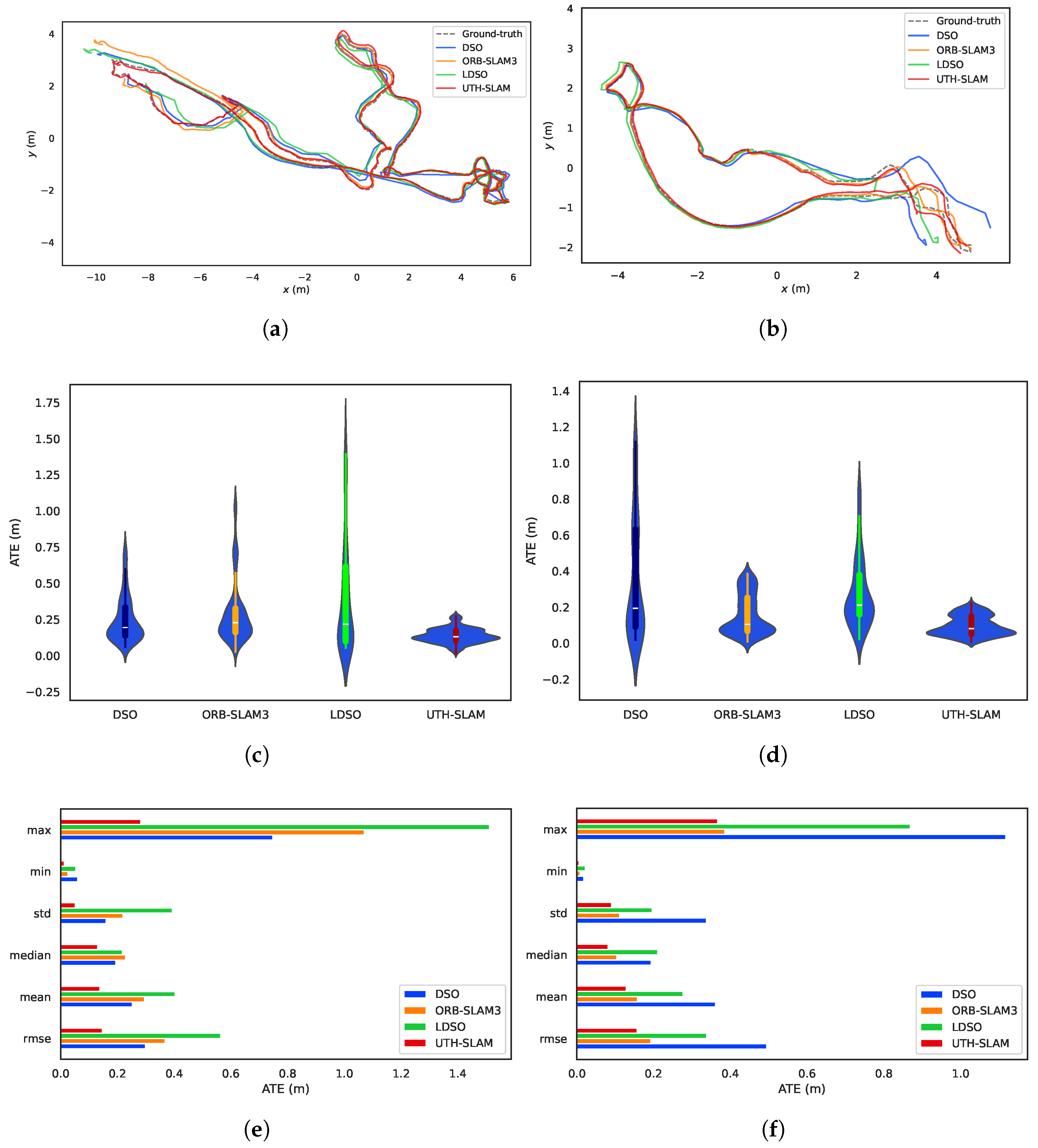

1. As shown in Figure 14a,c, both DSO and LDSO use a large number of pixel features to generate denser maps with better visibility than sparse maps. LDSO also uses corners as direct features that contribute photometric residuals. ORB descriptors are computed for these corners, enabling loop closure detection. The proposed system also constructs sparse maps, as shown in Figure 14d. The key distinction is that UTH-SLAM integrates geometric and photometric residuals more robustly by using stable features, providing stronger constraints for pose estimation and mapping.

2. The trajectories estimated by DSO, ORB-SLAM3, and LDSO show significant pose errors, especially in the initial phase, as shown in Figure 15a,b. In contrast, UTH-SLAM tracks precisely throughout the entire trajectory. This can be attributed primarily to the proposed hybrid tracking strategy, which refines the pose estimate from the initial phase of the trajectory onward and reduces the instability of the system.

3. As shown by the violin plots in Figure 15c,d, the ATE values of the trajectories estimated by the proposed system are more tightly concentrated and contain fewer outliers. The hybrid strategy is therefore more stable and robust than the other methods. The maximum, minimum, standard deviation, median, and mean values of the ATE obtained by UTH-SLAM all follow a trend similar to the RMSE of the ATE across the two sequences, as shown in Figure 15e,f. This finding underscores the consistent and reliable performance of the system.
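The ATE statistics discussed above (RMSE, mean, median, standard deviation, minimum, and maximum) can be computed from per-pose position errors as in the following Python sketch. It assumes that the estimated and ground-truth positions are already time-associated and aligned, which for a monocular system would normally include scale. The function name and the sample trajectories are hypothetical.

```python
import math
import statistics


def ate_statistics(estimated, ground_truth):
    """Summary statistics of the per-pose absolute translation error."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    # Euclidean distance between each estimated and reference position.
    errors = [math.dist(e, g) for e, g in zip(estimated, ground_truth)]
    return {
        "rmse": math.sqrt(sum(err * err for err in errors) / len(errors)),
        "mean": statistics.fmean(errors),
        "median": statistics.median(errors),
        "std": statistics.pstdev(errors),
        "min": min(errors),
        "max": max(errors),
    }


# Hypothetical three-pose example with errors of 0, 3, and 4 units.
estimated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 3.0), (2.0, 4.0, 0.0)]

for name, value in ate_statistics(estimated, ground_truth).items():
    print(f"{name}: {value:.3f}")
```

Because the RMSE weights large errors more heavily than the mean or median, closely aligned RMSE, mean, and median values indicate an error distribution without heavy outliers.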

4.3. Discussion

5. Conclusions

1. In comparison with ORB-SLAM3, which uses the feature-based method, and DSO, which uses the direct method, the proposed tightly coupled hybrid system exploits the strengths of both strategies. Its global consistency and local accuracy are demonstrated in underwater scenario tests.

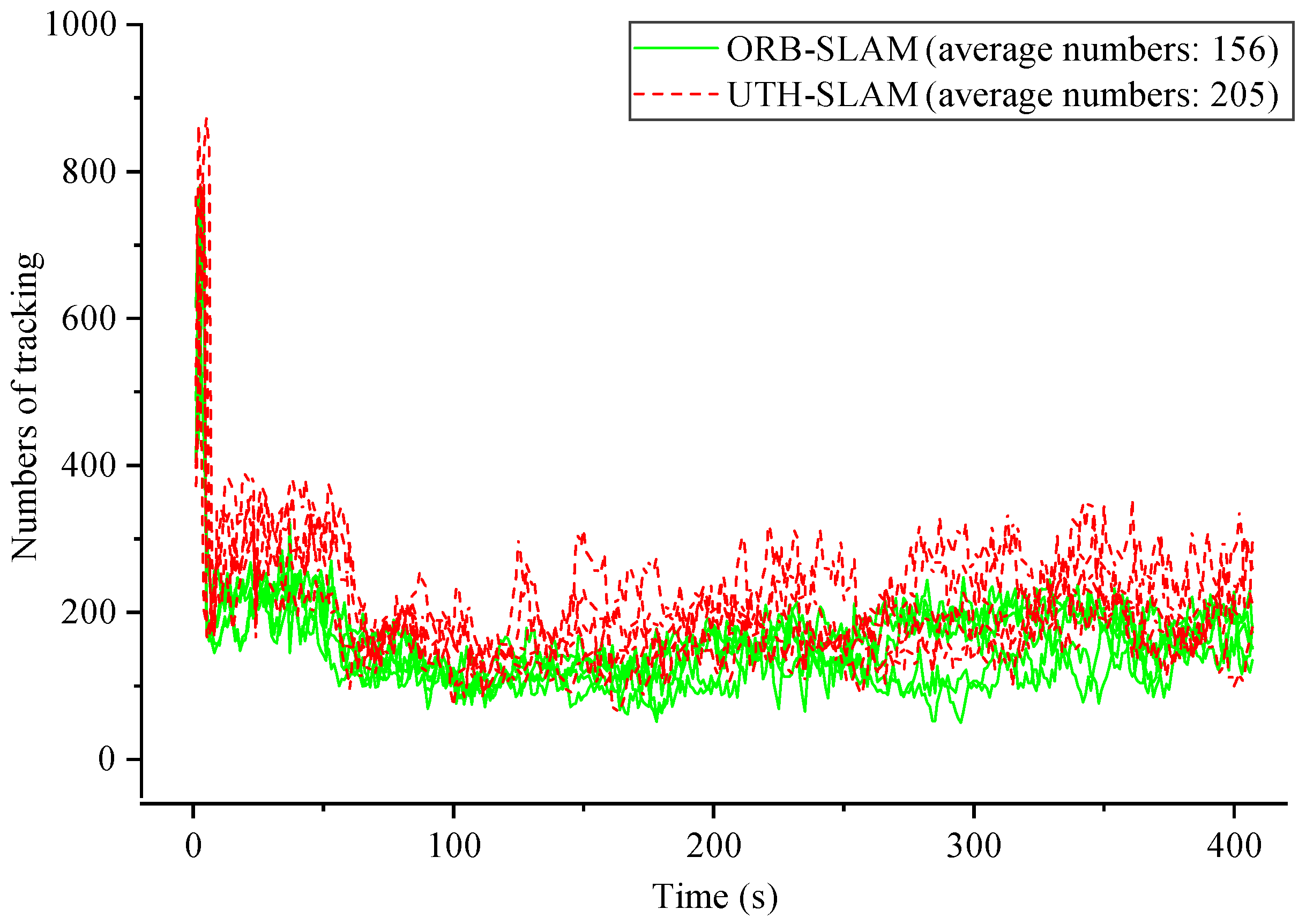

2. The hybrid tracking strategy further improves the reliability of pose estimation between adjacent frames and the stability of tracking. Compared with ORB-SLAM3, UTH-SLAM increases the number of feature tracks by 31.41%. This gain in adaptability is particularly significant in low-texture underwater environments, where it can substantially affect system performance.

3. The proposed tightly coupled optimization strategy applies two different residual constraints to a single type of feature. The experimental findings demonstrate the superior localization accuracy of the proposed method. In the challenging natural underwater environment, UTH-SLAM reduces the ATE by 76%, 47.25%, and 71.6% compared to DSO, ORB-SLAM3, and LDSO, respectively.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Seq. | DSO a (w/o LC) | ORB-SLAM3 b (Monocular, w/o LC) | ORB-SLAM3 b (Monocular, w/ LC) | LDSO c (w/o LC) | LDSO c (w/ LC) | UTH-SLAM c (Ours, w/o LC) | UTH-SLAM c (Ours, w/ LC) |
|---|---|---|---|---|---|---|---|
| Seq01 | 0.033 | 0.023 | 0.020 | 0.037 | 0.025 | 0.012 | 0.012 |
| Seq02 | 0.113 | 0.125 | 0.056 | 0.106 | 0.076 | 0.090 | 0.032 |
| Seq03 | 0.070 | 0.102 | 0.103 | 0.071 | 0.064 | 0.069 | 0.070 |
| Seq04 | 0.130 | 0.104 | 0.052 | 0.119 | 0.070 | 0.101 | 0.059 |
| Seq05 | 0.157 | 0.120 | 0.091 | 0.117 | 0.097 | 0.127 | 0.083 |
| Avg. | 0.100 | 0.095 | 0.064 | 0.090 | 0.066 | 0.080 | 0.051 |

| Seq. | DSO a (w/o LC) | ORB-SLAM3 b (Monocular, w/o LC) | ORB-SLAM3 b (Monocular, w/ LC) | LDSO c (w/o LC) | LDSO c (w/ LC) | UTH-SLAM c (Ours, w/o LC) | UTH-SLAM c (Ours, w/ LC) |
|---|---|---|---|---|---|---|---|
| Seq01 | 0.034 | 0.020 | 0.020 | 0.032 | 0.030 | 0.018 | 0.018 |
| Seq02 | 0.035 | 0.030 | 0.029 | 0.023 | 0.025 | 0.023 | 0.022 |
| Seq03 | 0.031 | 0.035 | 0.037 | 0.033 | 0.030 | 0.026 | 0.027 |
| Seq04 | 0.044 | 0.031 | 0.031 | 0.037 | 0.037 | 0.027 | 0.025 |
| Seq05 | 0.040 | 0.033 | 0.034 | 0.035 | 0.033 | 0.023 | 0.022 |
| Avg. | 0.037 | 0.030 | 0.030 | 0.032 | 0.031 | 0.023 | 0.023 |

| Seq. | DSO a | ORB-SLAM3 b (Monocular) | LDSO c | UTH-SLAM c (Ours) |
|---|---|---|---|---|
| Seq01 | 0.933 | 0.280 | 0.709 | 0.129 |
| Seq02 | 0.288 | 0.364 | 0.558 | 0.142 |
| Seq03 | 0.207 | 0.034 | 0.051 | 0.033 |
| Seq05 | 0.499 | 0.185 | 0.336 | 0.151 |
| Seq06 | 0.073 | 0.048 | 0.036 | 0.026 |
| Avg. | 0.400 | 0.182 | 0.338 | 0.096 |

| Threads | Tracking | Local Mapping | Loop Closing |
|---|---|---|---|
| ORB-SLAM3 | 12.81 | 6.83 | 3.76 |
| UTH-SLAM | 15.52 | 7.68 | 3.51 |

| Computational Cost | Memory (MB) | CPU Power (W) |
|---|---|---|
| DSO | 434 | 84.3 |
| ORB-SLAM3 | 573 | 81.9 |
| LDSO | 604 | 84.0 |
| UTH-SLAM | 637 | 81.5 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, T.; Ding, S.; Yan, X.; Lu, Y.; Jiang, D.; Qiu, X.; Lu, Y. Visual-Based Position Estimation for Underwater Vehicles Using Tightly Coupled Hybrid Constrained Approach. J. Mar. Sci. Eng. 2025, 13, 1216. https://doi.org/10.3390/jmse13071216
