Abstract

Underwater target detection and segmentation in Side-Scan Sonar (SSS) imagery are challenged by low signal-to-noise ratios, geometric distortions, and the computational constraints of Unmanned Underwater Vehicles (UUVs). This paper proposes CKAN-YOLOv8, a lightweight multi-task network that integrates Kolmogorov–Arnold Network convolution (KANConv) into YOLOv8. The core innovation replaces conventional convolutions with KANConv blocks that use learnable B-spline activations, which adapt dynamically to noise and multi-scale targets while remaining parameter-efficient. The KANConv-based Path Aggregation Network (KANConv-PANet) mitigates geometric distortions through spline-optimized multi-scale fusion. A dual-task head combines CIoU loss-driven detection with a boundary-sensitive segmentation module trained with Dice loss. Evaluated on a dataset of 50 raw images augmented to 2000, CKAN-YOLOv8 achieves state-of-the-art performance of 0.869 AP@0.5 and 0.72 IoU, alongside real-time inference at 66 FPS. Ablation studies confirm the contributions of the KANConv modules to noise robustness and multi-scale adaptability. The framework is robust to noise and scales across target sizes.

1. Introduction

Underwater target detection and segmentation in SSS imagery are critical for marine exploration, wreck recovery, and pipeline inspection. However, SSS data exhibit intrinsic challenges such as speckle noise, geometric distortions, and low signal-to-noise ratios (SNRs) due to acoustic scattering and seabed heterogeneity [1]. Traditional methods rely on handcrafted features (e.g., texture descriptors [2] or SVM classifiers [3]), but their performance degrades under complex underwater conditions. Recent advances in deep learning have improved accuracy, yet the following three critical gaps persist: (1) limited adaptability to SSS-specific noise patterns, (2) inefficient multi-scale feature fusion for distorted targets, and (3) excessive computational costs.

Convolutional neural networks (CNNs) have dominated SSS target detection and segmentation. U-Net variants [4] achieved the pixel-level segmentation of seabed textures by leveraging skip connections [5], while Mask R-CNN [6] enabled joint detection and segmentation through region-based optimization [7]. Topological Data Analysis (TDA) [8] enhances CNN interpretability by capturing the topological features of data spaces. Nevertheless, its high computational complexity requires careful consideration, with current optimizations focusing on GPU-accelerated parallel computing. Standard convolutions exhibit limitations in modeling SSS noise distributions, resulting in false positives within low-SNR regions [9]. To enhance robustness, the Convolutional Block Attention Module (CBAM) [10] has been incorporated into the Feature Pyramid Network (FPN) framework [11], though its fixed-weight filters require further optimization for dynamic acoustic environments [12]. The Sparse Attention U-Net [13] introduces a dynamic sparse attention mechanism that effectively suppresses background noise by focusing on target regions, establishing a novel paradigm for weakly supervised sonar segmentation. It should be noted that the method’s generalizability demonstrates strong dependency on training data quality.

Recent advances in imaging primarily address the following three thematic challenges: (1) Lightweight architectures: Zhang et al. [14] combined Ghost modules with a Bidirectional Feature Pyramid Network (BiFPN) to improve small-object segmentation in botanical imaging, while Wang et al. [15] and Li et al. [16] developed task-specific lightweight networks for medical CT and chip pad detection, respectively, demonstrating parameter reduction without accuracy loss. (2) Noise suppression: Zheng et al. [17] and Weng et al. [18] employed spatial-channel attention mechanisms to enhance blurred target detection in sonar imagery, with Chen et al.’s AquaYOLO [19] achieving high mAP in turbid waters through adaptive feature selection. (3) Edge deployment: current research emphasizes hardware-efficient designs compatible with mobile GPUs. While these advancements provide foundational insights, critical gaps remain in SSS applications, outlined as follows:

Dynamic noise adaptation: Fixed-weight filters in existing attention mechanisms show limited efficacy against SSS-specific coherent noise patterns.

Geometric edge distortion tolerance: Multi-scale fusion approaches inadequately address SSS artifacts caused by non-linear sensor motions.

Parameter efficiency trade-offs: Architectural complexity in current methods increases computational costs versus baseline models, challenging real-time SSS processing.

Real-time processing on UUVs demands model compression. MobileNet [20] and EfficientDet [21] reduced parameters via depthwise convolutions, yet they sacrificed segmentation precision [22]. YOLO-based approaches [23] balanced speed and accuracy but lacked dedicated modules for SSS geometric distortions [24]. YOLOv4-Tiny [25] introduced channel pruning and 8-bit quantization to achieve real-time detection at 45 FPS, verifying the feasibility of model compression for UUV deployment, although accuracy and speed still need to be balanced. Dynamic Neural Architecture UUV (DNA-UUV) [26] adjusts the depth and width of the model in real time based on available hardware resources, reducing energy consumption by 40%. It provides a flexible computing solution for heterogeneous UUV platforms, but its switching mechanism requires further optimization. The two-stage model [27] achieved end-to-end optimization of small-sample sonar segmentation for the first time: the target shadow feature is first used to locate the initial region, which is then refined with a level set algorithm, and optical image data are transferred to enhance small-sample performance. However, its computational efficiency needs to be improved. A lightweight U-Net combined with heterogeneous filters [28] achieves 25 FPS real-time segmentation on a Field-Programmable Gate Array (FPGA)-embedded platform with power consumption below 5 W, providing a low-power solution for UUV deployment. However, in strong-noise environments its segmentation mIoU decreases by about 10%, so its generalization ability in complex environments must be enhanced.

Knowledge distillation [29] and quantization [30] further optimized efficiency, but most methods ignored the interplay between noise suppression and multi-task learning. Spline-based networks [31] and Kolmogorov–Arnold representations [32] recently gained traction for interpretable feature learning. For example, B-spline CNNs [33] achieved noise-adaptive filtering in medical imaging, while deformable kernels [34] improved geometric invariance. However, these works focused on optical or synthetic aperture sonar (SAS) data [35], leaving SSS-specific adaptations unexplored. In addition, the meta-learning-based MAML framework [36] requires only 10 annotated images to adapt to new acoustic devices, reaching an mIoU of 75.2%. It addresses small-sample sonar segmentation but requires a stronger domain adaptation module, and its generalization across device-specific noise distributions is unstable (±8% mIoU fluctuation).

To bridge these gaps, we propose CKAN-YOLOv8, a lightweight multi-task network integrating KANConv into the YOLOv8 framework. Our key innovations include the following:

- KANConv blocks: Replacing standard convolutions with learnable B-spline activations to dynamically suppress SSS noise while preserving edge details.

- KANConv-PAN: A deformable feature pyramid network using spline-parameterized kernels to optimize geometric edge distortion and fuse multi-scale targets.

- Dual-task head: Combining CIoU Loss for detection with Dice Loss for segmentation to refine boundary-sensitive masks.

2. Related Works

2.1. SSS Data Processing and Benchmarking

SSS is an acoustic imaging system that generates high-resolution seabed maps by analyzing reflected sound waves. Mounted on AUVs/UUVs, it emits fan-shaped acoustic pulses perpendicular to the vehicle’s motion. Echoes from seabed features or objects are recorded, with time-of-flight measurements determining cross-track distances, while backscatter intensity (higher for hard surfaces like rocks and lower for soft sediments) forms grayscale images. Continuous data acquisition during vehicle movement produces 2D mosaics. Acoustic shadows behind elevated targets, created by blocked sound propagation, enable height estimation proportional to the shadow length. This technology supports marine surveys, wreck detection, and infrastructure inspection by combining intensity and geometric analyses.

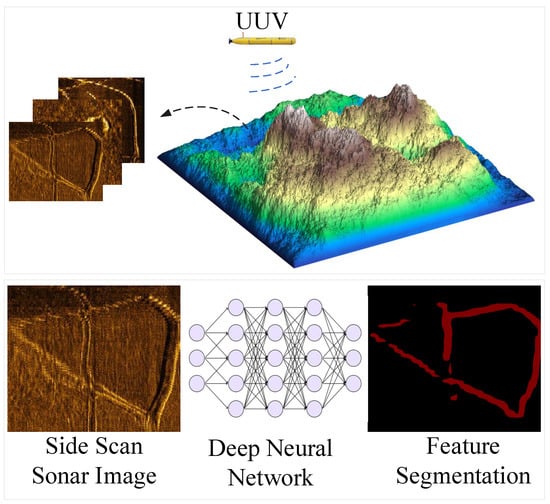

During operation, the SSS transducer emits pulsed acoustic signals in a spherical wave pattern at a preset frequency. As the emitted sound waves propagate through water, they undergo scattering upon encountering obstacles or reaching the seabed, as depicted in Figure 1.

Figure 1. Working overview of UUVs with SSS.

The backscattered echoes propagate along the original transmission path and are captured by the transducer for conversion into electrical signals. Acoustic intensity decays exponentially with propagation distance, governed by the medium’s absorption coefficient. Reflection strength is modulated by two factors as follows: (1) the acoustic impedance mismatch between materials, and (2) geometric characteristics of interfaces (e.g., surface roughness and curvature radius). The system reconstructs seabed topography by organizing time-of-flight data correlated with scanning azimuth angles. Echo intensity can be calculated from the sonar parameters, and the conversion relationship between echo intensity and pixel grayscale values is as follows:

where G represents the gray value and A represents acoustic intensity.

The synthetic training dataset is enhanced through physics-driven augmentation strategies integrating sonar-specific noise patterns and geometric transformations. Speckle noise introduces multiplicative granular interference to emulate acoustic scattering in high-echo zones (Equation (2)), while Rayleigh-distributed stochastic variations replicate low-intensity backscattering phenomena, effectively mimicking heterogeneous seabed responses across varying sediment densities and roughness profiles. These acoustically calibrated perturbations are combined with parametric transformations, including randomized rotation within ±30°, probabilistic horizontal/vertical flipping, and adaptive center-crop resizing (Equations (3)–(6)).

where represents original sonar echo intensity, N represents the noise field following Gamma distribution or Rayleigh distribution, represents the strength parameters (), and k represents the control noise intensity ().

where W and H represent the width and height of the input image, respectively, and and represents the width and height of the cropped image, respectively. , , and successively represent the Crop Matrix, Flip Matrix, and Rotation Matrix. represents rotation angle ( < 15°).
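
The noise and flip augmentations described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the multiplicative model `I * (1 + k * (N - 1))` with unit-mean Gamma noise, the `looks` parameter, and all function names are hypothetical choices made here, and rotation and cropping are omitted for brevity.

```python
import random


def add_speckle(image, k=0.2, looks=4, rng=None):
    """Multiplicative speckle (assumed form): I' = I * (1 + k * (N - 1)).

    N is drawn from Gamma(shape=looks, scale=1/looks), so it has mean 1.
    `image` is a list of rows of grayscale values in [0, 255].
    """
    rng = rng or random.Random()
    noisy = []
    for row in image:
        new_row = []
        for value in row:
            n = rng.gammavariate(looks, 1.0 / looks)  # unit-mean noise sample
            scaled = value * (1.0 + k * (n - 1.0))
            new_row.append(min(255.0, max(0.0, scaled)))  # clip to valid range
        noisy.append(new_row)
    return noisy


def flip_horizontal(image):
    """Mirror each row (left-right flip)."""
    return [list(reversed(row)) for row in image]


def flip_vertical(image):
    """Reverse the row order (up-down flip)."""
    return [list(row) for row in reversed(image)]


def augment(image, rng, flip_prob=0.5):
    """Apply speckle noise, then each flip independently with probability flip_prob."""
    out = add_speckle(image, rng=rng)
    if rng.random() < flip_prob:
        out = flip_horizontal(out)
    if rng.random() < flip_prob:
        out = flip_vertical(out)
    return out
```

Setting `k = 0` leaves the image unchanged, which makes the noise strength easy to sanity-check before training.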

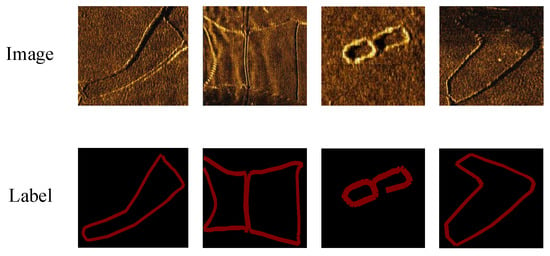

The preprocessing protocol enforces 640 × 640 pixel resolution across all SSS inputs via bilinear interpolation and zero-padding to preserve aspect ratios. This addresses the following three computational constraints: (1) maintaining tensor dimensional consistency for multi-GPU parallel processing, (2) eliminating scale variance in geometric augmentations (particularly random cropping/affine transformations), and (3) mitigating dynamic input size-induced instability in batch normalization layers. Spatial normalization is synergistically integrated with a dual-purpose annotation framework as follows: Labelme’s polygon tool precisely captures seabed object boundaries in raw SSS data, generating task-specific ground truth representations—axis-aligned bounding boxes (detection) and per-pixel semantic masks (segmentation). As shown in Figure 2, co-registered raw imagery and multi-task annotations provide a morphologically consistent data foundation for joint detection-segmentation networks in heterogeneous underwater environments.
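
The aspect-preserving resize to 640 × 640 can be illustrated by computing the scale factor and padding offsets, and by mapping an annotation box into the padded frame. This is a sketch only: centering the image inside the padded canvas and the function names are assumptions, since the text specifies only bilinear interpolation with zero-padding.

```python
def letterbox_params(width, height, target=640):
    """Return (scale, resized size, padding offsets) for an aspect-preserving resize."""
    scale = min(target / width, target / height)
    new_w = round(width * scale)
    new_h = round(height * scale)
    pad_left = (target - new_w) // 2  # assumed: padding split evenly on both sides
    pad_top = (target - new_h) // 2
    return scale, (new_w, new_h), (pad_left, pad_top)


def map_box(box, scale, pad):
    """Map an (x1, y1, x2, y2) box from raw-image to padded-image coordinates."""
    x1, y1, x2, y2 = box
    pad_left, pad_top = pad
    return (
        x1 * scale + pad_left,
        y1 * scale + pad_top,
        x2 * scale + pad_left,
        y2 * scale + pad_top,
    )
```

For a 1000 × 500 input, the image is scaled by 0.64 to 640 × 320 and padded with 160 zero rows above and below, so bounding boxes and masks must be shifted by the same offsets.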

Figure 2. Original images and segmentation labels.

2.2. YOLOv8

YOLOv8 achieves deep synergy between detection and segmentation tasks through an end-to-end architecture [14]. Its decoupled head design enables the simultaneous output of target bounding boxes and pixel-level masks, eliminating the computational redundancy inherent in traditional cascaded models. The innovative C2f module (including cross-stage partial fusion with dual convolutions) enhances the cross-stage interaction of multi-scale features via dual-branch lightweight convolutions and residual connections, significantly improving feature representation accuracy for blurred targets in sonar imagery. By discarding the conventional anchor box mechanism and directly predicting target center offsets and dimensions, YOLOv8 effectively resolves the adaptation challenges posed by irregular target shapes in sonar images.

Currently, research on YOLOv8-based multi-task (detection-segmentation) cascaded frameworks for sonar imagery remains exploratory, with limited publicly available studies. This paper presents a systematic investigation into YOLOv8’s application potential for sonar image segmentation and multi-task collaborative analysis. Owing to its architectural flexibility and computational efficiency, YOLOv8 demonstrates notable technical advantages and engineering practicality in complex underwater scenarios, making it a suitable framework for intelligent sonar target interpretation.

3. Proposed Method

To address the intertwined challenges of low SNRs, geometric distortions in SSS imagery, and computational constraints on UUVs, this paper proposes CKAN-YOLOv8—a lightweight multi-task network. The core innovations are hierarchically structured as follows:

(1) Dynamic noise suppression: The KANConv module replaces standard convolutions with learnable B-spline basis functions, enabling input-adaptive nonlinear activations that suppress speckle noise while preserving target edge details.

(2) Geometric distortion correction: The KANConv-PANet deformable feature pyramid dynamically adjusts multi-scale fusion weights via spline parameterization, mitigating feature misalignment caused by target deformation and scale variations.

(3) Multi-task synergy: A dual-task loss function (CIoU + Dice) jointly optimizes detection box localization accuracy and segmentation mask boundary continuity, resolving target blurring and background adhesion in SSS imagery.

Through the hierarchical collaboration of these innovations, CKAN-YOLOv8 achieves balanced improvements in noise robustness, geometric consistency, and real-time inference efficiency under a lightweight framework, offering an end-to-end solution for intelligent perception in complex underwater environments.

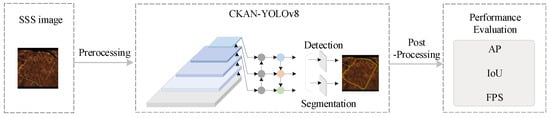

The methodological pipeline of this study is illustrated in Figure 3, comprising three key phases as follows:

Figure 3. CKAN-YOLOv8-based procedure of target detection and segmentation of SSS images.

Data preprocessing: Raw SSS images undergo noise suppression and resolution standardization to generate inputs compatible with deep learning models.

Multi-task model processing: The CKAN-YOLOv8 framework sequentially employs dynamic feature extraction via C2f-KANConv (KC2f) modules, multi-scale feature fusion through KANConv-PANet, and joint optimization with a cascaded loss function, enhancing segmentation and detection capabilities for SSS images.

Result validation: Model performance is evaluated using quantitative metrics (AP@0.5, IoU) and real-time inference speed (FPS), supplemented by visual analysis to verify geometric consistency between segmentation boundaries and detection boxes, thereby ensuring algorithmic accuracy and reliability.
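
As a small illustration of the segmentation metric used in result validation, the IoU between a predicted and a ground-truth binary mask can be computed as follows. The function name and the convention of returning 1.0 for two empty masks are assumptions made for this sketch.

```python
def mask_iou(pred, gt):
    """Intersection over union of two binary masks given as lists of rows."""
    intersection = 0
    union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                intersection += 1
            if p or g:
                union += 1
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0
```

Under the AP@0.5 criterion, a detection is counted as a true positive only when its IoU with a ground-truth instance is at least 0.5.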

3.1. Structure of the CKAN-YOLOv8 Model

CKAN-YOLOv8 is a deeply optimized framework based on YOLOv8, designed to address noise suppression, geometric edge optimization, and lightweight deployment. Its architecture comprises four key stages as follows:

- Input preprocessing:

  - (1) Noise augmentation and geometric calibration: Physics-driven augmentation strategies (e.g., speckle noise injection, ±30° random rotation) simulate real-world underwater interference, enhancing generalization to complex acoustic scenes.

  - (2) Resolution standardization: Input images are resized to 640 × 640 pixels via bilinear interpolation and zero-padding, ensuring tensor dimensional consistency for training.

- Backbone network:

  - (1) KANConv modules: Replace standard convolutions with learnable B-spline basis functions, enabling dynamic nonlinear activations to suppress noise while preserving edge details during feature extraction.

  - (2) Lightweight C2f-KANConv: Reducing parameter redundancy and enhancing cross-stage interaction between shallow textures and deep semantics.

- Neck network:

  - (1) KANConv-PANet: A deformable feature pyramid dynamically adjusts multi-scale fusion weights via spline parameterization, correcting the edge and improving target detection accuracy.

  - (2) Sparse storage optimization: B-spline basis functions are stored as sparse matrices, reducing GPU memory consumption for deployment on resource-constrained UUV platforms.

- Detection: Employs decoupled prediction heads to independently handle classification and bounding box regression.

The architecture implements a task-driven positive sample matching strategy, dynamically weighting classification confidence and localization accuracy during anchor assignment. For loss optimization, it combines the following:

- Classification: Binary Cross-Entropy (BCE) for object/non-object differentiation.

- Localization: Distribution Focal Loss (DFL) for probability distribution-aware regression.

- Bounding box refinement: CIoU metric to address aspect ratio discrepancies.

- Edge segmentation: Dice Loss enhances sensitivity to sparse edge pixels and segmentation accuracy by emphasizing overlap optimization between predicted and true edges.
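
The task-driven positive sample matching mentioned before this list weights classification confidence against localization accuracy. A minimal sketch of such a rule is given below, using the alignment metric t = s^α · u^β from task-aligned assignment, where s is the classification score and u is the IoU. The default values of `alpha`, `beta`, and `topk` are assumptions for illustration and are not taken from this paper.

```python
def alignment_metric(cls_score, iou, alpha=0.5, beta=6.0):
    """Task alignment metric t = s^alpha * u^beta (exponent values are assumed)."""
    return (cls_score ** alpha) * (iou ** beta)


def select_positives(candidates, topk=10):
    """Return indices of the top-k candidates ranked by alignment metric.

    `candidates` is a list of (cls_score, iou) pairs for one ground-truth box.
    """
    order = sorted(
        range(len(candidates)),
        key=lambda i: alignment_metric(*candidates[i]),
        reverse=True,
    )
    return order[:topk]
```

With a large `beta`, a well-localized candidate with a modest score outranks a confident but poorly localized one, which is the behavior the matching strategy relies on.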

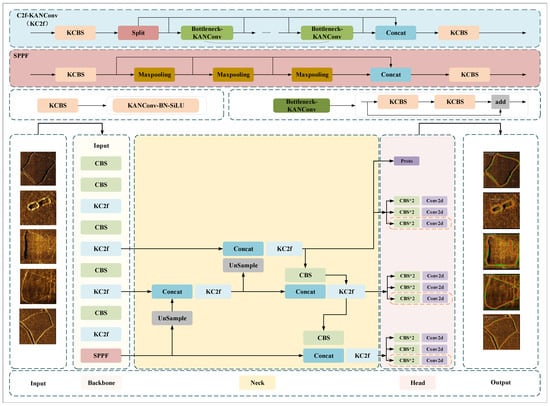

Enhanced by its modular design and adaptive training protocols, YOLOv8 achieves real-time detection and segmentation with computational efficiency. Extending this framework, CKAN-YOLOv8 integrates KANConv into the backbone network and a mask branch into the detection head, using the highest-resolution feature map from the feature pyramid network as input to a prototype generator (Protonet) that produces primitive mask templates. The enhanced prediction head jointly outputs bounding box coordinates, class probabilities, and mask coefficients, which weight the prototypes through matrix multiplication after non-maximum suppression (NMS). Instance-specific masks are synthesized via coordinate-aligned cropping based on the predicted boxes and threshold-based binarization, with Dice Loss integrated into multi-task optimization to refine boundary-sensitive segmentation. Coordinate-aligned cropping preserves geometric proportions by extracting target regions within the predicted bounding box coordinates via bilinear interpolation, avoiding the boundary misalignment caused by conventional affine transformations (e.g., rotation/scaling). This process is essential to (1) ensure spatial alignment between segmentation masks and detection boxes, preventing feature drift in multi-task learning, and (2) maintain aspect ratios to minimize resampling distortion, enhancing pixel-level precision for boundary-sensitive tasks. Technically, fixed-size Region of Interest (ROI) grids are generated from the predicted boxes, and geometric continuity is preserved through differentiable bilinear sampling before binarization. Figure 4 illustrates the CKAN-YOLOv8 architecture.

Figure 4. Architecture of CKAN-YOLOv8.

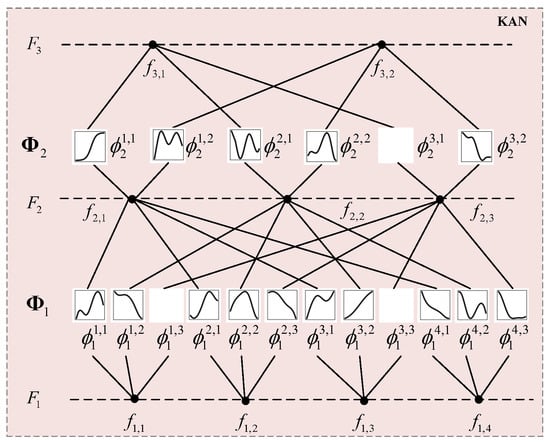

3.2. KAN Convolutions

Kolmogorov–Arnold Networks (KANs) [37], a novel deep learning architecture, are grounded in the Kolmogorov–Arnold representation theorem. This theorem states that any continuous multivariate function on a bounded domain can be expressed as a finite composition of continuous univariate functions and addition. Unlike traditional multilayer perceptrons (MLPs), which place fixed nonlinear activations on nodes, KANs position learnable activation functions, parameterized via B-splines, along network edges (in place of weights). This design enables adaptive nonlinear transformations tailored to input patterns. By leveraging this structural paradigm, KANs exhibit enhanced capabilities in modeling intricate relationships, with strong reported performance in tasks such as time-series forecasting, graph-structured data analysis, and convolutional feature learning. The architectural details of KANs are visualized in Figure 5.

Figure 5. Structure of KAN.

Its mathematical form is as follows:

$$f(x_1, \dots, x_n) = \sum_{i=1}^{2n+1} \Phi_i\left(\sum_{j=1}^{n} \phi_{i,j}(x_j)\right) \tag{7}$$

In this formulation, $x_j$ denotes the j-th dimension of the input vector, while $\phi_{i,j}$ represents a learnable univariate function applied to the j-th input along the i-th computational path. The function $\Phi_i$ at the output layer aggregates these intermediate results into final predictions through another learnable univariate transformation. By hierarchically stacking multiple KAN layers, the network constructs deep architectures via adaptive nonlinear compositions:

$$\mathrm{KAN}(\mathbf{x}) = \left(\mathbf{\Phi}_{L-1} \circ \mathbf{\Phi}_{L-2} \circ \cdots \circ \mathbf{\Phi}_{0}\right)(\mathbf{x}) \tag{8}$$

where $\mathbf{\Phi}_l$ is the function matrix of the l-th layer, and each element is a learnable univariate activation function.

This design employs a divide-and-conquer strategy: high-dimensional functions are decomposed into combinations of low-dimensional univariate components, which helps address the vanishing gradient problem that traditional MLPs face in high-dimensional settings. Simultaneously, the spline-based approximation framework ensures parametric efficiency and preserves interpretability through localized function interactions.
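
As a toy illustration of the idea behind this representation (not the general construction used in the theorem), the product of two positive numbers can be written using only univariate functions and addition, since xy = exp(ln x + ln y). The function names below are hypothetical.

```python
import math


def inner(x):
    """Inner univariate function applied to each input separately."""
    return math.log(x)


def outer(u):
    """Outer univariate function applied to the sum of inner outputs."""
    return math.exp(u)


def product_via_univariate(x, y):
    """Compute x * y for positive x, y using only univariate maps and addition."""
    return outer(inner(x) + inner(y))
```

A KAN layer follows the same pattern but learns the inner and outer functions from data instead of fixing them in advance.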

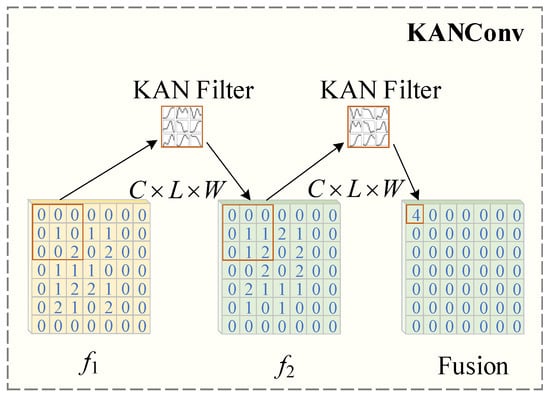

Traditional CNNs rely on fixed-weight linear filters and static nonlinear activations (e.g., ReLU), which struggle to dynamically adapt to the complex noise distributions and geometric edge distortions in SSS imagery. To address this, we integrate KANConv (as depicted by Figure 6) into the model, which replaces linear convolution with learnable nonlinear basis functions inspired by KAN theory, enabling input-adaptive feature extraction.

Figure 6. Structure of KANConv.

The core design is as follows:

For an input feature map $X$, KANConv applies learnable B-spline basis functions to nonlinearly map local receptive fields:

$$Y(i, j) = \sum_{c=1}^{C} \sum_{h=1}^{H} \sum_{w=1}^{W} \phi_{c,h,w}\big(X(c,\, i+h,\, j+w)\big) \tag{9}$$

where $C$, $H$, and $W$ denote the input channels, kernel height, and kernel width, and $\phi_{c,h,w}$ is the combination of learnable nonlinear basis functions for the c-th input channel and kernel position $(h, w)$. The B-spline basis functions replace the linear CNN kernels, and their coefficients are optimized by backpropagation so that the model adapts to the data distribution. Because each $\phi_{c,h,w}$ is a univariate function, its training gradient is more stable, which can alleviate the vanishing gradient problem of traditional CNNs.
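
The operation described above can be sketched for a single channel in plain Python: each kernel position holds a univariate function instead of a scalar weight, and the outputs are summed over the receptive field. This is an illustrative sketch assuming stride 1 and no padding. The function name `kan_conv2d` is hypothetical, and the callables stand in for the learnable B-spline functions.

```python
import math


def kan_conv2d(image, phis):
    """Single-channel KAN-style convolution (stride 1, no padding).

    image: list of rows of floats.
    phis:  kernel-shaped grid of univariate callables, one per kernel position.
    """
    kernel_h, kernel_w = len(phis), len(phis[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for h in range(kernel_h):
                for w in range(kernel_w):
                    # Apply the position-specific function, then accumulate.
                    total += phis[h][w](image[i + h][j + w])
            row.append(total)
        output.append(row)
    return output


# With phi(v) = k * v at every position, the operation reduces to a standard
# linear convolution (cross-correlation) with kernel weights k.
weights = [[1.0, 0.0], [0.0, -1.0]]
linear_phis = [[(lambda v, k=k: k * v) for k in row] for row in weights]

# A nonlinear choice, here tanh, gives a response no fixed linear kernel can produce.
nonlinear_phis = [[math.tanh, math.tanh], [math.tanh, math.tanh]]
```

The linear case shows that a standard convolution is a special case of this operation, which is why initializing the functions near a linear map keeps early training behavior close to that of a conventional network.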

Implementation details. Basis function initialization: Uniformly distributed B-spline control points initialize the activation functions as near-identity mappings, ensuring behavior consistent with standard convolutions in early training. Dynamic fine-tuning: Control points are optimized via backpropagation to adapt basis functions to noise patterns.
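
The near-identity initialization can be illustrated with the Cox–de Boor recursion. On a uniform knot grid, setting the spline coefficients to the uniformly spaced Greville abscissae makes the spline reproduce the identity on the grid interval, a standard B-spline property. The sketch below assumes a cubic spline on [-1, 1] with five intervals. These values and all function names are illustrative and are not taken from the paper.

```python
def bspline_basis(i, degree, knots, x):
    """Cox-de Boor recursion for the i-th B-spline basis function of a given degree."""
    if degree == 0:
        return 1.0 if knots[i] <= x < knots[i + 1] else 0.0
    left_den = knots[i + degree] - knots[i]
    right_den = knots[i + degree + 1] - knots[i + 1]
    left = 0.0
    right = 0.0
    if left_den != 0.0:
        left = (x - knots[i]) / left_den * bspline_basis(i, degree - 1, knots, x)
    if right_den != 0.0:
        right = ((knots[i + degree + 1] - x) / right_den
                 * bspline_basis(i + 1, degree - 1, knots, x))
    return left + right


def uniform_knots(x_min, x_max, intervals, degree):
    """Uniform knot vector extended by `degree` knots beyond each end of the grid."""
    step = (x_max - x_min) / intervals
    return [x_min + (m - degree) * step for m in range(intervals + 2 * degree + 1)]


def greville_abscissae(knots, degree):
    """Coefficients for which the spline equals the identity on the grid interval."""
    count = len(knots) - degree - 1
    return [sum(knots[i + 1:i + degree + 1]) / degree for i in range(count)]


def spline_activation(x, coeffs, knots, degree):
    """Learnable activation phi(x) = sum_i c_i * B_i(x)."""
    return sum(c * bspline_basis(i, degree, knots, x) for i, c in enumerate(coeffs))


DEGREE = 3
KNOTS = uniform_knots(-1.0, 1.0, intervals=5, degree=DEGREE)
IDENTITY_COEFFS = greville_abscissae(KNOTS, DEGREE)
```

Training then moves the coefficients away from these initial values, so the activation departs from the identity only where the data call for it.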

Key advantages:

- Dynamic noise suppression: The smoothness and local support of B-spline basis functions allow adaptive filtering of sonar speckle noise (high-frequency interference) while preserving target edges (spatially coherent structures).

- Parameter efficiency: Compared to standard convolutions, KANConv reduces parameters through sparse B-spline parameterization (storing only control points).

- Gradient stability: The continuous differentiability of B-splines (existence of first- and second-order derivatives) mitigates the abrupt gradient changes caused by piecewise functions such as ReLU, improving training stability.

In the CKAN-YOLOv8 framework for SSS image processing, the B-spline coefficient optimization mechanism achieves dynamic balance between speckle suppression and edge preservation through end-to-end deep learning. Our approach reconstructs B-spline kernels as differentiable modules integrated into the feature extraction backbone. Guided by backpropagated gradients from the detection loss, the coefficients adaptively evolve as follows: they reduce sensitivity in noisy regions to suppress high-frequency artifacts while amplifying contrast near edges to preserve geometric edge fidelity. This self-adjusting capability allows the network to implicitly learn noise-edge discrimination rules directly from the data, overcoming both the edge-blurring drawbacks of conventional methods and the environmental rigidity of static parameter designs.

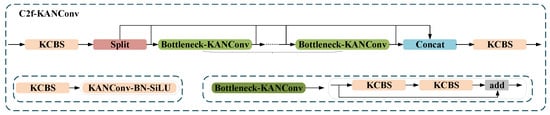

3.3. Cross-Stage Partial Fusion with Two KAN Convolutions

The traditional Cross-Stage Partial (CSP) module separates feature streams and concatenates multi-branch outputs, but this approach introduces channel dimension expansion and significant computational overhead. To address the redundant computation and gradient fragmentation in conventional CSP modules, YOLOv8 introduces the C2f module [38]. This architecture optimizes cross-stage multi-scale feature interaction efficiency through a dual-branch lightweight design and cross-stage gradient enhancement mechanisms.

The core architecture of C2f comprises the following:

Dual-convolution lightweight branches: Compress multi-branch convolutions in traditional CSP modules into two parallel branches performing 1 × 1 convolution (channel reduction) and 3 × 3 depthwise separable convolution (spatial feature extraction), formulated as follows:

Cross-stage gradient enhancement: Mitigates gradient vanishing via residual connections that aggregate original inputs with dual-branch outputs, expressed as follows:

This design reduces parameters while preserving cross-stage feature interaction continuity.

As shown in Figure 7, the original CBS module (composed of a convolutional layer, batch normalization, and SiLU activation) is redesigned into KCBS as follows: the conventional 3 × 3 convolution is replaced with KANConv while retaining batch normalization (BN) for training stability, strictly preserving input/output channel dimensions, stride, and padding parameters to prevent feature map size alterations.

Figure 7. Structure of C2f-KANConv.

Key enhancements to the original bottleneck structure include the following:

Deep feature extraction enhancement: Replacing the two sequential CBS modules with KCBS modules to improve deep feature representation through KANConv’s dynamic nonlinear mappings.

Multi-scale fusion optimization: Retaining 1 × 1 CBS for dimensionality reduction to prevent information loss, while substituting the original bottleneck with Bottleneck_KANConv and adopting KCBS for channel fusion, enabling adaptive nonlinear feature integration.

Structural compatibility assurance: Maintaining identical stacking counts and Split-Concat topology to ensure seamless compatibility with other YOLOv8 components.

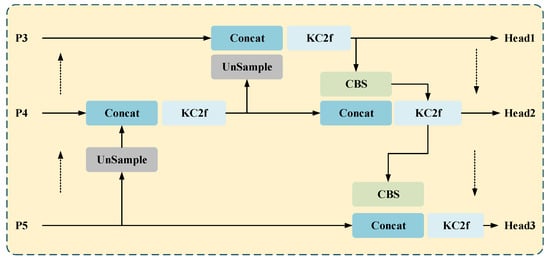

3.4. KANConv-PANet

The conventional CBS module in PANet relies on fixed-weight convolutional kernels, which lack dynamic adaptability to multi-scale target response intensities. In scenarios with significant geometric edge distortions, fixed kernels fail to adaptively fuse cross-resolution features, resulting in degraded object detection accuracy. Additionally, the linear superposition nature of traditional convolutions struggles to model complex nonlinear feature relationships, while PANet’s multi-layer feature fusion critically depends on nonlinear representation capabilities. KANConv overcomes these limitations through the following three key innovations:

Higher-order nonlinear mapping: Implements learnable edge-weight functions for higher-order nonlinear spatial transformations, enhancing cross-layer feature interaction efficacy;

Parameter efficiency optimization: While CSP modules reduce parameter redundancy via channel splitting, their parallel branches still employ duplicated fixed kernels, leading to suboptimal parameter utilization;

Embedded deployment compatibility: Addresses GPU memory bottlenecks caused by multi-scale feature concatenation during PANet’s upsampling phase, reducing bandwidth requirements via sparse storage mechanisms, thereby meeting resource constraints for real-time inference on embedded hardware.

Figure 8 illustrates the KANConv-PAN architecture and its core component KC2f (equivalent to the C2f-KANConv module). KANConv replaces the CBS modules in PANet, balancing accuracy and speed through dynamic nonlinear modeling; its co-optimized parameter efficiency and noise robustness prove critical for multi-scale sonar imaging tasks. By replacing fixed kernel parameters in traditional convolutions with B-spline activation functions, KANConv enables the dynamic shape adjustment of activation functions based on input features. This capability is critical for PANet’s multi-scale feature fusion: the adaptive correction of geometric edge distortions and scale variations in sonar imagery enhances cross-resolution feature alignment accuracy.

Figure 8. Structure of KANConv-PAN.

The technical advantages of KANConv-PANet are as follows:

Local texture sensitivity: When fusing high-level semantic features (small-target detection) and low-level detail features (boundary localization), KANConv’s differentiable B-spline basis functions exhibit higher sensitivity to local texture variations compared to conventional convolutions.

Memory efficiency: The sparse matrix storage of B-spline basis functions reduces memory footprint versus dense parameter matrices in traditional convolutions, effectively alleviating GPU memory pressure during feature concatenation in PANet’s upsampling stages.

Multi-scenario generalization: Through dynamic nonlinear modeling and parameter efficiency optimization, KANConv-PAN achieves an accuracy–speed balance in multi-scale target scenarios.

KANConv-PAN provides a basis for lightweight real-time detection systems: B-spline-based adaptive kernels suppress acoustic scattering artifacts and target boundary ambiguities, while sparse tensor decomposition in feature fusion pathways maintains computational efficiency, laying a technical foundation for real-time perception in complex scenarios.

3.5. Loss Function

In sonar image classification tasks, YOLOv8’s decoupled head accelerates model convergence by assigning prediction cells through IoU calculations between feature map cells and ground truth bounding boxes. However, the spatial misalignment between optimal cells for classification and regression tasks often leads to degraded task synergy. To address this, YOLOv8 integrates Task Alignment Learning (TAL) [39], which dynamically adjusts positive/negative sample allocation strategies to enhance spatial consistency between classification and regression tasks. The loss function design comprises the following:

Regression branch: Combines Distribution Focal Loss (DFL) with CIoU loss (Equations (12) and (15)) to optimize bounding box probability distribution and geometric alignment accuracy.

Classification branch: Employs Binary Cross-Entropy (BCE, Equation (16)) to strengthen binary discrimination between the foreground and background.

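$$L_{CIoU} = 1 - \mathrm{IoU} + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v \tag{12}$$

$$\alpha = \frac{v}{(1 - \mathrm{IoU}) + v} \tag{13}$$

$$v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2 \tag{14}$$

In Equations (12)–(14), $\mathrm{IoU}$: intersection over union between predicted and ground truth boxes, measuring the overlap ratio; $\rho(b, b^{gt})$: Euclidean distance between centers of predicted box $b$ and ground truth box $b^{gt}$; $c$: diagonal length of the smallest enclosing rectangle covering both boxes; $\alpha$: weight coefficient balancing aspect ratio consistency and IoU contribution; $v$: aspect ratio consistency term, quantifying the similarity between predicted and ground truth aspect ratios; $w^{gt}$ and $h^{gt}$: width and height of the ground truth box; and $w$ and $h$: width and height of the predicted box.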
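
The CIoU terms defined above can be checked numerically with a short sketch. It follows the standard CIoU definition for corner-format boxes `(x1, y1, x2, y2)`. The function name and the small `eps` stabilizer are assumptions added for illustration.

```python
import math


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss for two boxes in (x1, y1, x2, y2) format."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    w, h = px2 - px1, py2 - py1
    w_gt, h_gt = gx2 - gx1, gy2 - gy1

    # Overlap ratio (IoU).
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = w * h + w_gt * h_gt - inter
    iou = inter / (union + eps)

    # Squared distance between box centers.
    rho2 = ((px1 + px2) / 2 - (gx1 + gx2) / 2) ** 2 \
        + ((py1 + py2) / 2 - (gy1 + gy2) / 2) ** 2

    # Squared diagonal of the smallest rectangle enclosing both boxes.
    enclose_w = max(px2, gx2) - min(px1, gx1)
    enclose_h = max(py2, gy2) - min(py1, gy1)
    c2 = enclose_w ** 2 + enclose_h ** 2 + eps

    # Aspect ratio consistency term and its weight.
    v = (4 / math.pi ** 2) * (math.atan(w_gt / h_gt) - math.atan(w / h)) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v
```

For two disjoint boxes the IoU term alone gives no gradient signal, whereas the center-distance term still decreases as the prediction moves toward the target.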

In Equation (15), $S_i$ and $S_{i+1}$: probability distribution values at adjacent positions, modeling the discrete probability distribution of target center points; $y$: continuous coordinate value of the ground truth center; and $y_i$ and $y_{i+1}$: discretized grid coordinates closest to $y$.

In Equation (16), $N$: total number of samples; $y_i$: ground truth label (0 or 1) for the i-th sample; and $p_i$: predicted probability of the i-th sample belonging to the positive class.
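For reference, the standard forms of these three losses from the literature are reproduced below, using $IoU$, $\rho(b, b^{gt})$, $c$, $\alpha$, $v$ for CIoU, $S_i$, $S_{i+1}$, $y_i$, $y_{i+1}$ for DFL, and $y_i$, $p_i$ for BCE. This is a reconstruction under the assumption that the paper follows the usual CIoU, DFL, and BCE definitions; in particular, the assignment of numbers (13) and (14) to $\alpha$ and $v$ is inferred from the order of the definitions, and the authors' exact notation may differ.

```latex
\begin{aligned}
L_{CIoU} &= 1 - IoU + \frac{\rho^{2}(b, b^{gt})}{c^{2}} + \alpha v && (12)\\
\alpha &= \frac{v}{(1 - IoU) + v} && (13)\\
v &= \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2} && (14)\\
DFL(S_i, S_{i+1}) &= -\left((y_{i+1} - y)\log S_i + (y - y_i)\log S_{i+1}\right) && (15)\\
L_{BCE} &= -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right] && (16)
\end{aligned}
```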

Inspired by YOLACT’s segmentation design [40], this approach achieves end-to-end instance segmentation through the dual-branch parallel prediction of prototype masks and instance-level mask coefficients as follows:

Prototype mask generation: Full-image prototype masks are generated on the largest-scale feature maps, preserving high-resolution spatial details.

Mask coefficient prediction: Three-scale feature maps simultaneously output detection boxes, classification scores, and instance-specific mask coefficients.

Mask synthesis: Instance-specific masks are synthesized via linear combination of prototype masks and coefficients, eliminating computational redundancy from traditional two-stage RoIPool while maintaining output resolution and improving segmentation accuracy.
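The three steps above can be sketched as follows for a single instance. This is a minimal, dependency-free illustration of YOLACT-style mask assembly (linear combination of prototypes followed by a sigmoid and a threshold); the function names, the 0.5 threshold, and the tiny 2 × 2 prototypes are assumptions for illustration, and the cropping of each mask to its detection box is omitted.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def synthesize_mask(prototypes, coeffs, threshold=0.5):
    """Combine k prototype masks (k x H x W nested lists) with k coefficients.

    Returns an H x W nested list of booleans for one instance.
    """
    k = len(prototypes)
    height, width = len(prototypes[0]), len(prototypes[0][0])
    mask = []
    for r in range(height):
        row = []
        for c in range(width):
            # Linear combination of the prototype values at this pixel
            logit = sum(coeffs[j] * prototypes[j][r][c] for j in range(k))
            row.append(sigmoid(logit) > threshold)
        mask.append(row)
    return mask


# Two hypothetical prototypes: one separating left from right, one empty
protos = [
    [[1.0, -1.0], [1.0, -1.0]],
    [[0.0, 0.0], [0.0, 0.0]],
]
instance_mask = synthesize_mask(protos, [2.0, 0.0])
```

Because the prototypes are shared by all instances, each additional instance costs only one small linear combination, which is where the saving over a per-RoI mask branch comes from.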

Tailored for sonar imagery characteristics, the segmentation head employs Dice Loss to optimize region overlap as follows:

where $p_i$: predicted mask probability of the i-th pixel (range [0, 1]); $g_i$: ground truth mask label of the i-th pixel (0 or 1); and $\epsilon$: smoothing coefficient to prevent division by zero.
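A minimal sketch of the Dice loss, assuming the common form $1 - (2\sum_i p_i g_i + \epsilon) / (\sum_i p_i + \sum_i g_i + \epsilon)$ over flattened masks. Whether the smoothing term appears in both numerator and denominator in the authors' version is not stated, so that placement and the default value of `eps` are assumptions.

```python
def dice_loss(pred, target, eps=1.0):
    """Dice loss over flattened masks.

    pred:   per-pixel probabilities in [0, 1]
    target: per-pixel ground truth labels (0 or 1)
    eps:    smoothing coefficient that prevents division by zero
    """
    intersection = sum(p * g for p, g in zip(pred, target))
    total = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


perfect = dice_loss([1.0, 0.0, 1.0], [1, 0, 1])   # full overlap
empty = dice_loss([0.0, 0.0], [0, 0])             # both masks empty, guarded by eps
disjoint = dice_loss([1.0, 0.0], [0, 1])          # no overlap
```

Because the loss is a ratio of overlap to total mask area, it is less dominated by the large background region than a per-pixel loss, which is useful when targets occupy a small fraction of a sonar image.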

To achieve the synergistic optimization of detection and segmentation tasks, we design a composite loss function with the following weighted components:

where $L_{CIoU}$: bounding box regression loss; $L_{DFL}$: Distribution Focal Loss; $L_{BCE}$: Binary Cross-Entropy Loss; and $L_{Dice}$: segmentation overlap loss.

Through dynamic weight allocation (), this function enables the following:

Detection enhancement: CIoU loss for the precise geometric regression of bounding boxes; DFL loss for optimizing positional probability distributions.

Segmentation refinement: Dice Loss focusing on mask boundary continuity; BCE loss improving foreground/background discrimination.

This loss function design achieves multi-task synergy through weighted coefficients that balance precise detection box regression, localization distribution optimization, classification accuracy enhancement, and segmentation boundary continuity. The framework significantly enhances the network’s capability in multi-scale target detection and edge-sensitive segmentation within complex sonar scenarios, establishing a critical technical foundation for the intelligent interpretation of underwater acoustic imaging.
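The weighted combination described in this section can be sketched as a plain weighted sum of the four terms. The class and function names are hypothetical, and the weight values are placeholders chosen only for illustration; they are not the settings used in the experiments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossWeights:
    # Placeholder values for illustration only, not the authors' settings
    box: float = 1.0   # weight of the CIoU term
    dfl: float = 1.0   # weight of the DFL term
    cls: float = 1.0   # weight of the BCE term
    seg: float = 1.0   # weight of the Dice term


def composite_loss(l_ciou, l_dfl, l_bce, l_dice, weights=LossWeights()):
    """Weighted sum of the detection and segmentation loss terms."""
    return (weights.box * l_ciou + weights.dfl * l_dfl
            + weights.cls * l_bce + weights.seg * l_dice)


balanced = composite_loss(0.2, 0.1, 0.3, 0.4)
seg_heavy = composite_loss(0.2, 0.1, 0.3, 0.4, LossWeights(seg=2.0))
```

Raising the segmentation weight increases the share of the gradient that comes from the Dice term, which is the mechanism by which such weights trade detection accuracy against mask quality.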

4. Results and Discussion

4.1. Experimental Environment

The experiments were run on a workstation with the following configuration. CPU: Intel Core i7-12700KF; RAM: 64 GB; GPU: NVIDIA GeForce RTX 3090 Ti; CUDA Toolkit 11.7; cuDNN 8.2; and Python 3.8. This study uses the YOLOv8 model as the baseline network for improved training. The hyperparameter settings of the training process are outlined in Table 1.

Table 1.

Hyperparameter settings and initialization.

4.2. Indicators

To quantitatively evaluate the performance of the proposed method and the compared methods, the following three metrics are used as the object detection evaluation criteria: precision, recall, and average precision (AP). The metrics are calculated as follows:

where $P(R)$: precision value at recall level $R$; $TP$: true positives (correctly detected targets); $FP$: false positives (incorrect background detections); and $FN$: false negatives (missed targets).

The Area Under the Precision-Recall Curve (AUC) is used for a comprehensive detection performance evaluation.
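A minimal sketch of these detection metrics, assuming the usual definitions Precision = TP / (TP + FP), Recall = TP / (TP + FN), and AP as the area under the precision-recall curve. The all-point interpolation used here is one common convention and is an assumption, as are the function names; each detection is assumed to have already been matched against the ground truth at the chosen IoU threshold (0.5 for AP@0.5).

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from raw counts (0.0 when a denominator is empty)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt):
    """Area under the precision-recall curve with all-point interpolation.

    scores: confidence of each detection
    is_tp:  True if the detection matched a ground truth target
    num_gt: number of ground truth targets
    """
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the maximum precision at any higher recall
    for j in range(len(precisions) - 2, -1, -1):
        precisions[j] = max(precisions[j], precisions[j + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


p, r = precision_recall(tp=8, fp=2, fn=2)
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
```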

The Intersection over Union (IoU) is used as the segmentation evaluation criterion. The metric is calculated as follows:

where $B_p$: predicted bounding box, representing the target region output by the model; and $B_{gt}$: ground truth bounding box, denoting the annotated target region in the dataset. The IoU ranges over [0, 1] and quantifies the spatial overlap between predicted and ground truth boxes; higher values indicate better localization accuracy.
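As an illustration of this ratio, the sketch below computes the IoU of two regions represented as binary masks of equal size, i.e., the number of pixels in the intersection divided by the number of pixels in the union. The function name and the convention of returning 1.0 when both regions are empty are assumptions made for this example.

```python
def region_iou(pred, gt):
    """IoU of two binary masks given as equally sized nested lists of 0/1."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                inter += 1
            if p or g:
                union += 1
    # Convention chosen here: two empty regions count as a perfect match
    return inter / union if union else 1.0


pred_mask = [[1, 1],
             [0, 0]]
gt_mask = [[1, 0],
           [1, 0]]
overlap = region_iou(pred_mask, gt_mask)   # 1 shared pixel out of 3 covered pixels
```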

4.3. Experiments and Results

4.3.1. Main Experiment Analysis

Table 2 shows the performance differences between CKAN-YOLOv8, DeepLabv3, Mask R-CNN, U-Net, YOLOv5s_seg, and YOLOv8-baseline across the dimensions of detection, segmentation, real-time inference speed, and lightweightness.

Table 2.

Comparison of different models.

As shown in Table 2, CKAN-YOLOv8 outperforms both the traditional segmentation models and the YOLO variants on the UUV underwater dataset in detection, segmentation, and real-time inference speed. In detection accuracy (AP@0.5), CKAN-YOLOv8 achieves 86.9%, surpassing YOLOv8-Baseline (81.3%) and Mask R-CNN (72.3%) by 5.6 and 14.6 percentage points, respectively. This improvement stems from its adaptive feature fusion (via KANConv-PAN) and dynamic activation functions, which suppress noise while preserving target signatures in SSS images. For segmentation, CKAN-YOLOv8 attains a 72% IoU, outperforming YOLOv8-Baseline (68%) and Mask R-CNN (67%), which indicates robust boundary refinement through the dual-task loss (CIoU + Dice Loss). Traditional models such as DeepLabv3 (71.6% AP@0.5, 63% IoU) and U-Net (70.5% AP@0.5, 61% IoU) lag significantly because they lack detection-task optimization and cannot integrate localization with segmentation.

In computational efficiency, CKAN-YOLOv8 has the lowest per-image processing time (25.8 ms), ahead of Mask R-CNN (70.4 ms) and DeepLabv3 (55.6 ms), while maintaining a 14% speed improvement over YOLOv5s_seg (29.4 ms) and a 2.6% improvement over YOLOv8-Baseline (26.5 ms). This is achieved through a lightweight structure that eliminates redundant computations without compromising precision. Figure 9 illustrates the training and validation loss curves of the models.

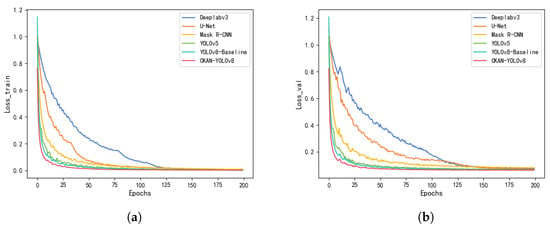

Figure 9.

Training and validation loss curves of the models. (a) Training loss curves. (b) Validation loss curves.

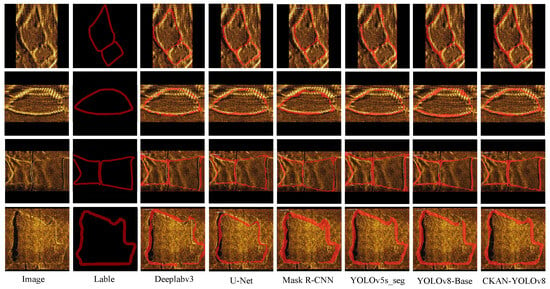

The graph shows that the loss values of all models decline as training progresses, indicating gradual convergence. The loss curves decrease rapidly in the early training stage (within the first 50 epochs) and then gradually stabilize. CKAN-YOLOv8 (red curve) performs best throughout training, with the fastest decline and the lowest final loss: 0.12, which is 42.9% lower than that of the second-best model, YOLOv8-Baseline (0.21), together with 37% faster convergence.

In real-time efficiency, CKAN-YOLOv8 achieves 66 FPS, exceeding YOLOv5s_seg (53 FPS) and Mask R-CNN (19 FPS), thanks to its lightweight CKAN modules and streamlined architecture. While Mask R-CNN suffers from the computational bottlenecks of its two-stage framework (RPN + RoI Align), CKAN-YOLOv8’s single-stage design and spline-parameterized kernels enhance inference speed without sacrificing precision. The results validate CKAN-YOLOv8’s balanced performance in multi-task underwater scenarios, where both detection granularity and segmentation continuity are critical.

Figure 10 presents the segmentation and detection results of the different models, with the red regions highlighting segmentation outputs. CKAN-YOLOv8 produces better results than the baseline architectures.

Figure 10.

Examples of segmentation and detection results for the models.

Based on the above analysis, a summary can be given as follows:

(1) Training stability validation

Gradient smoothness: KANConv’s B-spline basis functions are continuously differentiable (with well-defined first/second derivatives), which reduces abrupt gradient changes compared with ReLU.

Loss convergence: As shown in Figure 9, CKAN-YOLOv8 stabilizes within 50 epochs (loss fluctuation ±0.02), outperforming the Baseline (80 epochs, ±0.05), indicating accelerated optimization via dynamic nonlinear activations.

(2) Theoretical interpretation

Parameter update consistency: B-spline’s local support property confines weight updates, avoiding optimization oscillations from global parameter coupling in standard convolutions.

Learning rate robustness: KANConv converges stably over a wider learning-rate range ($1 \times 10^{-5}$ to $3 \times 10^{-5}$) than standard convolutions ($5 \times 10^{-6}$ to $1 \times 10^{-5}$), which is attributed to the smoothing regularization that B-splines impose on gradient directions.
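The smoothness and local-support properties invoked above can be checked numerically on a small example. The sketch below assumes a uniform cubic B-spline on a unit-spaced knot grid; the spline degree, the grid, and the function names are assumptions for illustration and are not taken from the KANConv implementation.

```python
def cubic_bspline(t):
    """Uniform cubic B-spline basis function centered at 0 with support [-2, 2]."""
    a = abs(t)
    if a < 1:
        return 2 / 3 - a * a + a ** 3 / 2
    if a < 2:
        return (2 - a) ** 3 / 6
    return 0.0


def spline_activation(x, coeffs, grid_min=-2.0, step=1.0):
    """Learnable activation phi(x) = sum_j c_j * B((x - knot_j) / step)."""
    return sum(c * cubic_bspline((x - (grid_min + j * step)) / step)
               for j, c in enumerate(coeffs))


# Smoothness: one-sided slopes agree at the knot t = 1 (no kink, unlike ReLU at 0)
h = 1e-6
left_slope = (cubic_bspline(1.0) - cubic_bspline(1.0 - h)) / h
right_slope = (cubic_bspline(1.0 + h) - cubic_bspline(1.0)) / h

# Local support: changing the coefficient at knot -2 does not affect phi(1.5)
coeffs_a = [1.0, 0.5, -0.2, 0.8, 0.3]
coeffs_b = [9.0] + coeffs_a[1:]
unchanged = spline_activation(1.5, coeffs_a) - spline_activation(1.5, coeffs_b)
```

Each coefficient influences the activation only within two knot spacings of its knot, so a gradient step on one coefficient leaves the response elsewhere untouched; this is the sense in which local support confines weight updates.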

4.3.2. Ablation Experiments Analysis

Additionally, we conducted ablation experiments to validate the role and impact of each module with KANConv. The experimental results are shown in Table 3.

Table 3.

Ablation experiments.

The ablation study systematically quantified the contributions of CKAN-YOLOv8’s core components. Introducing the CKAN-Backbone (B-spline dynamic activation) alone boosted AP@0.5 from 81.3% (Baseline) to 83.2%, demonstrating CKAN’s ability to enhance feature extraction through learnable spline activations. Adding the CKAN-Neck (multi-scale fusion via deformable KANConv-PAN) further elevated AP@0.5 to 84.3% and IoU to 70%, highlighting its effectiveness in aggregating multi-scale targets (e.g., small underwater objects) through geometric distortion correction.

The full CKAN-YOLOv8 model (integrating both modules) achieved peak performance—86.9% AP@0.5 and 72% IoU, with a marginal FPS increase to 66. This underscores the synergy between dynamic activation and multi-scale fusion. For instance, removing the KANConv-PAN module caused a 3.2% drop in AP@0.5 and 2% IoU degradation, emphasizing its necessity for handling scale variations in SSS images. The experiments validate that CKAN-YOLOv8’s design, combining noise-resilient feature extraction, cascaded fusion, and dual-task optimization, delivers state-of-the-art accuracy while maintaining real-time efficiency.

4.3.3. Lightweight Analysis

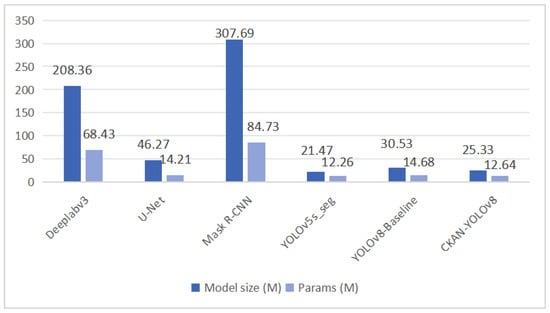

Figure 11 benchmarks the parameter efficiency of CKAN-YOLOv8 against mainstream segmentation and detection models.

Figure 11.

Comparison of parameter count and network size for different models.

Based on Figure 11, the following conclusion can be drawn. CKAN-YOLOv8 demonstrates superior Model Size (25.33 M) and Params (12.64 M) compared to its counterparts. Compared to YOLOv8-Baseline, it reduces Model Size by 17% (30.53 M→25.33 M) and Params by 13.9% (14.68 M→12.64 M), indicating enhanced feature extraction efficiency and reduced parameter redundancy through the CKAN module. Against YOLOv5s_seg, CKAN-YOLOv8 achieves comparable Params (12.64 M vs. 12.26 M) but improves target detection in current sonar scenes. It also vastly outperforms traditional segmentation models like DeepLabv3 (Params 68.43 M) and Mask R-CNN (Params 84.73 M), making it more suitable for computation-constrained embedded deployment. CKAN-YOLOv8 achieves synergistic improvements in both precision and efficiency under a lightweight framework.
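The relative reductions quoted above follow directly from the reported figures, as the short check below shows. The helper name `relative_reduction` is introduced here only for illustration.

```python
def relative_reduction(baseline, value):
    """Fractional reduction of `value` relative to `baseline`."""
    return (baseline - value) / baseline


size_cut = relative_reduction(30.53, 25.33)    # model size, YOLOv8-Baseline vs. CKAN-YOLOv8
param_cut = relative_reduction(14.68, 12.64)   # parameter count, same comparison

print(f"model size: {size_cut:.1%}, params: {param_cut:.1%}")
# prints "model size: 17.0%, params: 13.9%"
```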

The CKAN module leverages nonlinear approximation to enhance feature extraction from sonar images, mitigating noise-induced parameter inflation. The dynamic feature fusion strategy replaces traditional PAN with KANConv-PAN, which employs adaptive weight allocation to boost small-target detection (e.g., seabed sediment edges) while avoiding the parameter explosion seen in Mask R-CNN’s two-stage architecture. This reduces storage requirements compared to YOLOv8-Baseline while maintaining real-time performance, striking a balance among accuracy, speed, and model size.

4.3.4. Robustness Analysis

To verify the robustness of the CKAN-YOLOv8 model, an experiment comparing performance under noise interference (with Gaussian noise) was designed (as shown in Table 4).

Table 4.

Performance comparison under Gaussian noise.

CKAN-YOLOv8 demonstrates exceptional robustness under Gaussian noise, achieving an AP@0.5 of 82.1% and IoU of 68.5%, with performance degradation limited to 4.8% (AP) and 3.5% (IoU) compared to clean data. This significantly outperforms baseline models; YOLOv8-Baseline exhibits 7.8% AP loss, while Mask R-CNN suffers catastrophic degradation (12.5% AP loss and 15.8% IoU drop). The robustness stems from the following two innovations: (1) B-spline dynamic activation functions adaptively suppress high-frequency noise through trainable nonlinear responses, reducing false positives from acoustic scattering artifacts; (2) hybrid loss weights ( for and for ) prioritize boundary preservation under noise, mitigating mask fragmentation. Traditional segmentation models like Mask R-CNN, lacking joint detection-segmentation optimization, show vulnerability to noise-induced feature misalignment, particularly in low-SNR regions (e.g., sediment boundaries).

5. Conclusions

This study presents CKAN-YOLOv8, a lightweight multi-task network tailored for underwater target detection and segmentation in SSS imagery. By integrating CKAN into the YOLO framework, we address critical challenges, including low signal-to-noise ratios, geometric edge distortions, and computational constraints. The proposed CKAN blocks replace traditional convolutions with learnable B-spline activation functions, enabling dynamic adaptation to sonar-specific noise and multi-scale targets while reducing the parameter count by 14% (12.64 M vs. 14.68 M for the YOLOv8 baseline). The deformable KANConv-PAN further mitigates geometric distortions through adaptive fusion, ensuring robust feature alignment across varying resolutions. A dual-task head combines detection and boundary-sensitive segmentation, achieving an AP@0.5 of 86.9% and a segmentation IoU of 0.72.

The framework’s lightweight design and real-time capability highlight its practicality. While the method demonstrates strong performance on current datasets, future work will include multi-modal data fusion (e.g., bathymetry and Automatic Identification System (AIS)) and explore hardware-specific optimizations (e.g., FPGA acceleration) for broader marine engineering applications. This work bridges the gap between theoretical advancements in spline-based networks and real-world underwater perception needs, offering a solution for resource-constrained environments.

Author Contributions

Conceptualization, Y.X. and D.D.; methodology, Y.X. and H.W. (Hongjian Wang); software, D.D.; validation, Y.X., H.Y. and Z.S.; formal analysis, H.W. (Hao Wu); writing—original draft preparation, Y.X.; writing—review and editing, Y.X. and H.W. (Hongjian Wang); visualization, D.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research work is supported by the Basic Research Funds for Central Universities (3072024YY0401), the National Key Laboratory of Underwater Robot Technology Fund (No. JCKYS2022SXJQR-09), and a special program to guide high-level scientific research (No. 3072022QBZ0403).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Williams, A.; Johnson, J. Acoustic scattering models for sidescan sonar imagery. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4502–4515.

2. Smith, T.; Jones, R. Texture-based classification of underwater sonar images. Pattern Recognit. 2018, 72, 12–24.

3. Chen, Y.; Li, X. SVM-driven target detection in low-SNR sidescan sonar. IEEE J. Ocean. Eng. 2019, 44, 789–801.

4. Ronneberger, O.; Fischer, P. U-Net: Convolutional networks for biomedical image segmentation. Med. Image Anal. 2015, 9, 234–241.

5. Zhang, L.; Wang, H. Enhanced U-Net for sonar image segmentation. Remote Sens. 2021, 13, 1120.

6. He, K.; Gkioxari, G. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

7. Liu, Q.; Zhang, F. Mask R-CNN for sonar image instance segmentation. Appl. Acoust. 2022, 185, 108423.

8. Wang, L.; Smith, J.; Brown, K. Topological data analysis for interpretable feature learning in sonar imagery. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9503–9517.

9. Wang, Y.; Zhou, X. False positive reduction in sonar detection via attention mechanisms. IEEE Sens. J. 2023, 23, 10234–10243.

10. Woo, S.; Park, J. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision Proceedings, Munich, Germany, 8–14 September 2018; pp. 3–19.

11. Lin, T.; Dollár, P. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

12. Garcia, M.; Lopez, S. Limitations of fixed-filter CNNs in dynamic underwater environments. Ocean Eng. 2022, 259, 111876.

13. Liu, Y.; Zhang, H.; Wang, Q. Sparse attention U-Net for side-scan sonar image segmentation with limited annotations. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

14. Zhang, H.; Li, Q.; Wang, Y. Leaf Segmentation Using Modified YOLOv8-Seg Models. Comput. Vis. Image Process. 2024, 25, 123–135.

15. Wang, J.; Chen, L.; Liu, X. Adapting YOLOv8 for Kidney Tumor Segmentation in Computed Tomography. Med. Image Anal. 2023, 18, 45–58.

16. Li, T.; Zhou, M.; Zhang, R. YOLOv8-seg-CP: A Lightweight Instance Segmentation Algorithm for Chip Pad Based on Improved YOLOv8-seg Model. IEEE Trans. Ind. Inform. 2025, 12, 789–801.

17. Zheng, L.; Hu, T.; Zhu, J. Underwater sonar target detection based on improved ScEMA YOLOv8. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

18. Weng, Y.; Xiang, X.; Ma, L. SCR-YOLOv8: An enhanced algorithm for target detection in sonar images. J. Appl. Sci. 2025, 15, 1024–1035.

19. Lu, Y.; Zhang, J.; Chen, Q.; Xu, C.; Irfan, M.; Chen, Z. AquaYOLO: Enhancing YOLOv8 for Accurate Underwater Object Detection for Sonar Images. J. Mar. Sci. Eng. 2025, 13, 73.

20. Howard, A.; Zhu, M. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

21. Tan, M.; Le, Q. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10781–10790.

22. Li, J.; Zhang, Y. Lightweight networks for underwater image segmentation. IEEE Trans. Instrum. Meas. 2021, 70, 1–12.

23. Bochkovskiy, A.; Wang, C. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

24. Kumar, V.; Singh, A. YOLO adaptations for sonar image analysis. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

25. Chen, Z.; Li, M.; Xu, R. Edge-optimized YOLOv4-Tiny for real-time sonar object detection on autonomous underwater vehicles. IEEE J. Ocean. Eng. 2022, 47, 1120–1135.

26. Zhou, T.; Wu, X.; Li, G. Dynamic neural architecture adaptation for energy-efficient sonar processing on heterogeneous UUV platforms. IEEE J. Ocean. Eng. 2024, 49, 567–582.

27. Cai, W.; Zhang, Y.; Li, T. Sonar image coarse-to-fine few-shot segmentation based on object-shadow feature pair localization and level set method. IEEE Sens. J. 2024, 24, 12345–12356.

28. Wang, K.; Liu, S.; Xu, M. Real-time heterogeneous filtering with lightweight U-Net for side-scan sonar image segmentation. IEEE Robot. Autom. Lett. 2025, 10, 4567–4574.

29. Hinton, G.; Vinyals, O. Distilling the knowledge in a neural network. In Proceedings of the Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 1–9.

30. Jacob, B.; Kligys, S. Quantization and training of neural networks for efficient integer-arithmetic-only inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2704–2713.

31. Gupta, R.; Patel, N. Spline-based CNNs for interpretable medical imaging. Med. Image Anal. 2022, 80, 102499.

32. Kurkova, V.; Sanguineti, M. Kolmogorov-Arnold networks: A survey. Neural Netw. 2018, 103, 127–135.

33. Unser, M.; Aziznejad, S. B-spline CNNs on Lie groups. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020; pp. 1–15.

34. Dai, J.; Qi, H. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

35. Hayes, M.; Smith, P. SAS image reconstruction using deformable kernels. IEEE J. Ocean. Eng. 2021, 46, 1104–1116.

36. Wang, H.; Xu, Y.; Zhang, L. Meta-learning for few-shot segmentation of low-resolution side-scan sonar images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 3050–3065.

37. Tegmark, M.; Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y. KAN: Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2404.19756.

38. Wang, C.; Liao, H.M.; Wu, Y.; Chen, P.; Hsieh, J.; Yeh, I. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580.

39. Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned One-stage Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 10428–10437.

40. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-time instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9157–9166.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).