1. Introduction

Underwater optical imaging is a critical pillar of marine science research and maritime safety, and it has long been a major challenge for both academia and industry. Its applications span underwater infrastructure inspection [1], underwater robot navigation and obstacle avoidance, underwater search and rescue, and military reconnaissance. However, traditional underwater optical imaging methods suffer from limited detection range, severe backscatter noise from the aquatic medium, and difficulty imaging in deep-sea darkness. These problems become more pronounced in turbid water, significantly limiting the practical effectiveness of conventional underwater optical imaging.

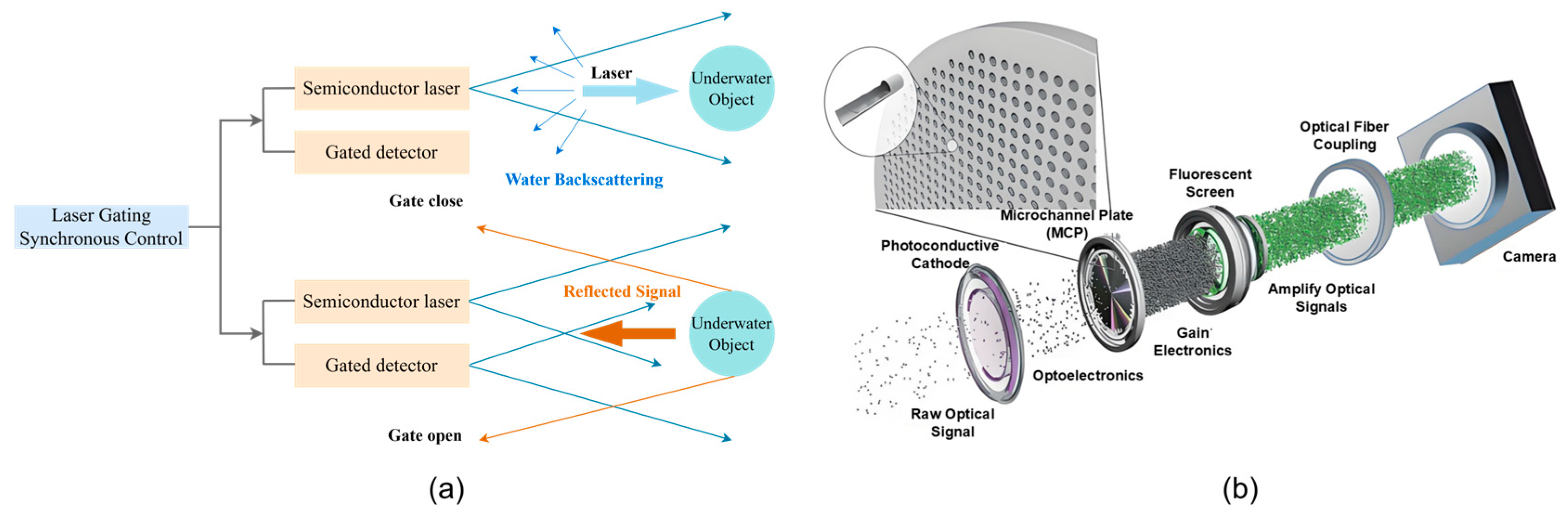

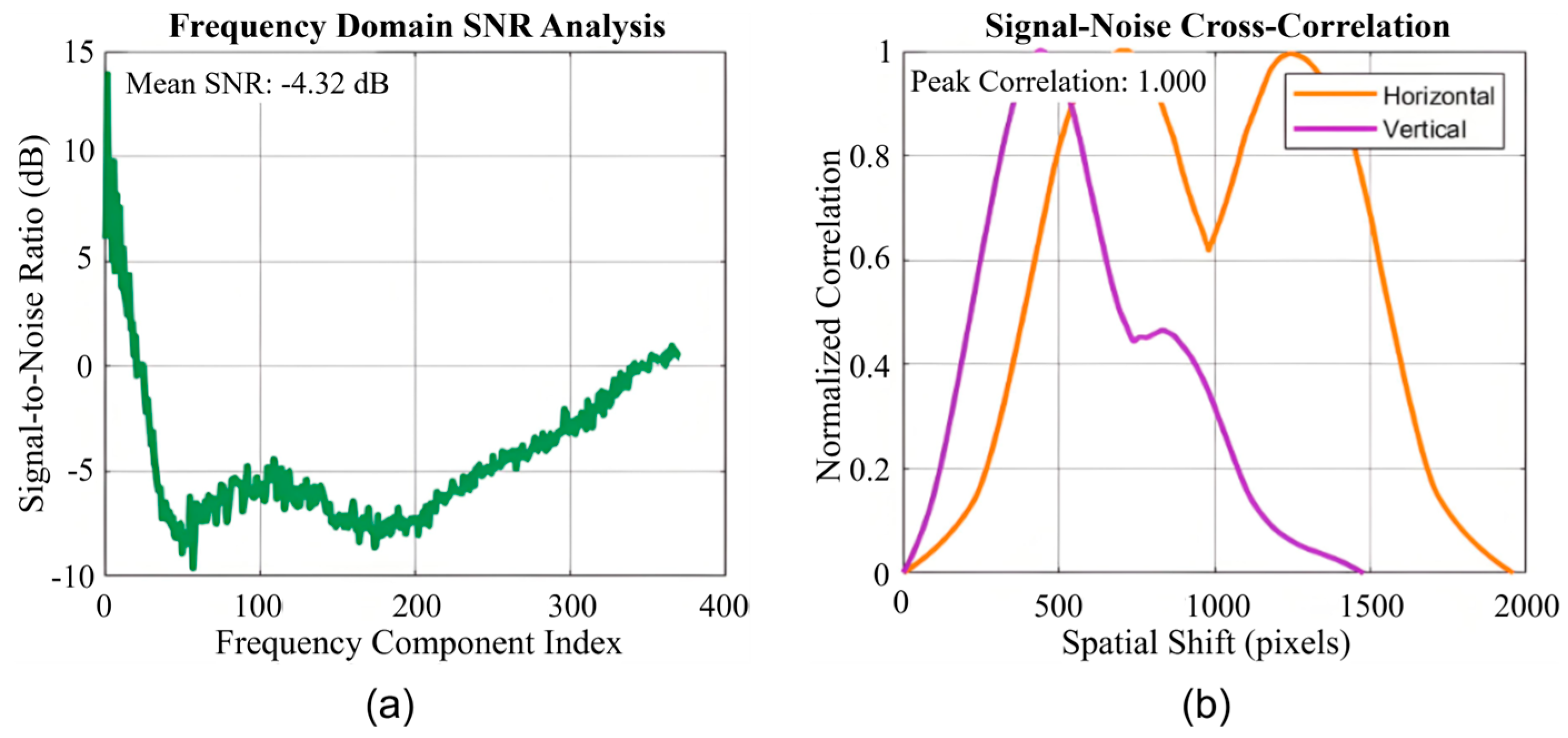

Underwater laser range-gated imaging (ULRGI) is currently one of the primary technologies applied in deep-sea exploration. To overcome the severe absorption and scattering of light in the underwater environment, this technology precisely synchronizes nanosecond pulsed laser illumination with the nanosecond gating time of an ICMOS (intensified CMOS) camera. This synchronization effectively rejects the backscatter noise [2,3] that the water and suspended particles would otherwise add to the target echo signal, enabling imaging in dark, deep-water environments. As imaging distance increases, the attenuation of the pulsed laser in seawater grows rapidly. In addition, photons are continuously scattered forward and backward by suspended particles on both the outbound and return paths, which disperses their energy. This reduces the proportion of useful signal reaching the detector, so image quality is degraded by both attenuation and scattering. Consequently, the images exhibit non-uniform sub-pixel speckle, blurring, and low contrast. Enhancing and restoring underwater laser range-gated images therefore promises to extend the detection limit of the hardware. Mounted on remotely operated vehicles (ROVs), this technology enables long-range acquisition of information on submerged communication cables, cultural relics, and biological remains.
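Range gating rests on the round-trip travel time of light: the gate must open when echoes from the target range arrive, and the gate width sets the depth of the water slice that is imaged. The sketch below illustrates this relation under stated assumptions (a seawater refractive index of about 1.33 and an ideal, unbroadened pulse); the function names and example values are hypothetical and are not taken from the system described in this paper.

```python
# Minimal sketch of range-gate timing. Assumptions: seawater refractive
# index ~1.33 and an ideal (unbroadened) pulse. Names are hypothetical.
C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s
N_WATER = 1.33            # assumed refractive index of seawater


def gate_delay_ns(target_range_m: float, n: float = N_WATER) -> float:
    """Delay between laser emission and gate opening for a target at a given range."""
    # Light travels to the target and back (2 * R) at speed c / n.
    return 2.0 * target_range_m * n / C_VACUUM * 1e9


def gate_depth_m(gate_width_ns: float, n: float = N_WATER) -> float:
    """Thickness of the water slice whose echoes fall inside the open gate."""
    # A gate of width dt accepts echoes from a slab of thickness c * dt / (2 n).
    return C_VACUUM * gate_width_ns * 1e-9 / (2.0 * n)


if __name__ == "__main__":
    print(f"gate delay for a target at 10 m: {gate_delay_ns(10.0):.1f} ns")
    print(f"slice depth for a 3 ns gate: {gate_depth_m(3.0):.3f} m")
```

Under these assumptions a target 10 m away needs a gate delay of roughly 89 ns, and a 20 ns gate corresponds to a slice about 2.25 m deep; pulse broadening in turbid water smears these boundaries, which is one source of the gating noise discussed below.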

In extreme conditions such as light-deprived seawater with high concentrations of suspended particles, plankton, and microorganisms, underwater visibility and detection range degrade further, posing severe challenges to imaging systems. Traditional underwater optical cameras are limited to imaging within 2 m [4]. Even with intense illumination, suspended particles reflect excessive light back to the lens, creating a “light haze”, much like shining a flashlight into a blizzard. This strong scattered light causes further diffusion and sensor overexposure, preventing long-range imaging. ULRGI, by contrast, achieves imaging at 3–5 times the attenuation length [5,6,7]. However, owing to its imaging mechanism, ULRGI images suffer from pulse broadening, gating noise, and residual water scattering noise. The returned target information therefore remains heavily contaminated by multiple noise sources, which makes image restoration in this field considerably more difficult.
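To see why the returned signal is so weak at 3–5 attenuation lengths, a simplified Beer–Lambert estimate helps. This is a sketch under a strong simplifying assumption: only exponential attenuation along the outbound and return paths is modeled, while geometric spreading, target reflectance, and forward-scattered photons are ignored.

```python
import math


def round_trip_transmission(attenuation_lengths: float) -> float:
    """Fraction of laser power surviving the path to the target and back.

    Simplified Beer-Lambert model: one-way transmission is exp(-c * z), so a
    target at k attenuation lengths (z = k / c) returns exp(-2 * k).
    Geometric spreading, target reflectance and forward scattering are ignored.
    """
    return math.exp(-2.0 * attenuation_lengths)


for k in (1, 3, 5):
    print(f"{k} attenuation lengths -> round-trip transmission "
          f"{round_trip_transmission(k):.2e}")
```

The loop prints 1.35e-01, 2.48e-03, and 4.54e-05: moving the target from 3 to 5 attenuation lengths costs another factor of about e^4 ≈ 55 in returned power, before any scattering losses are counted.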

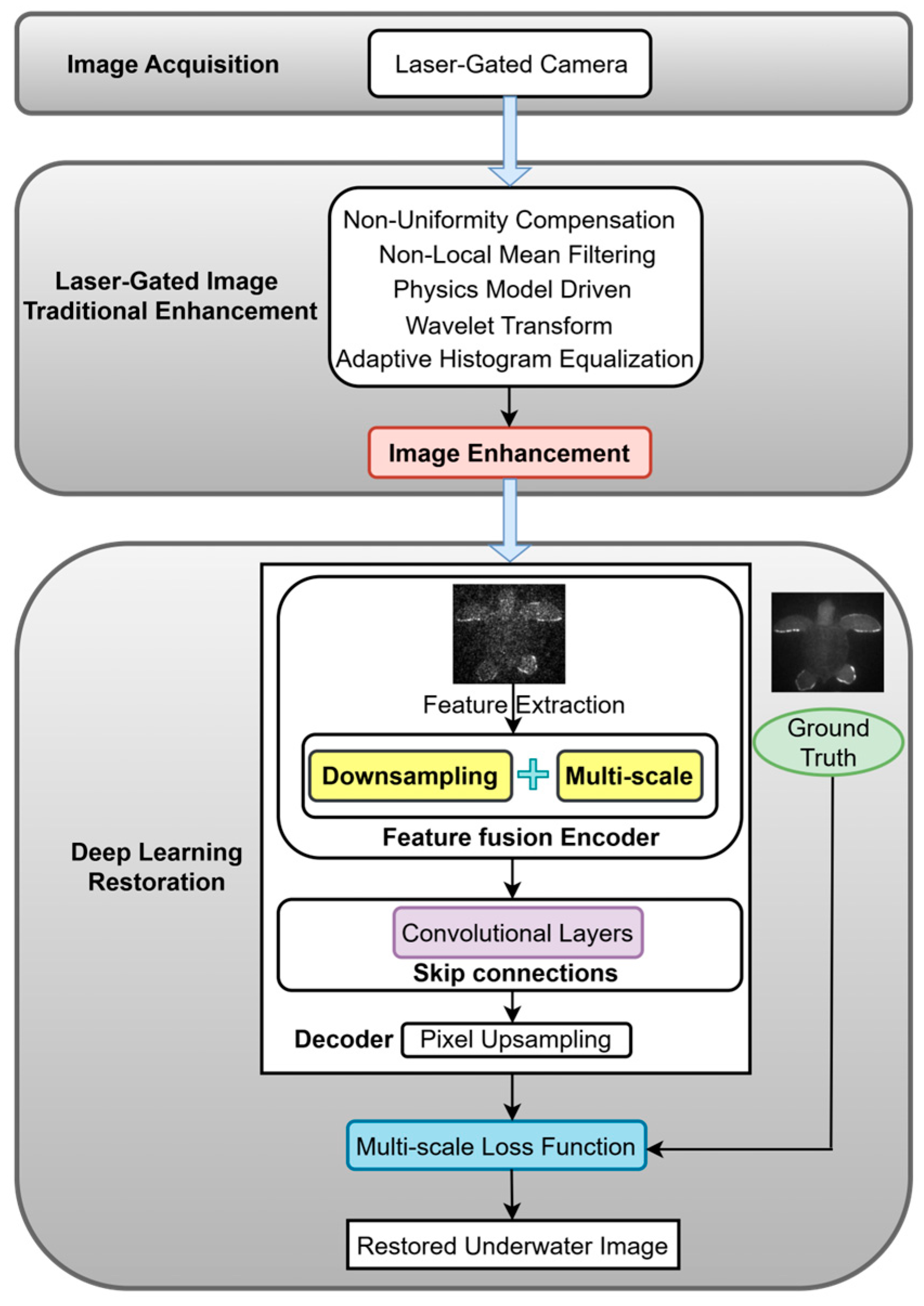

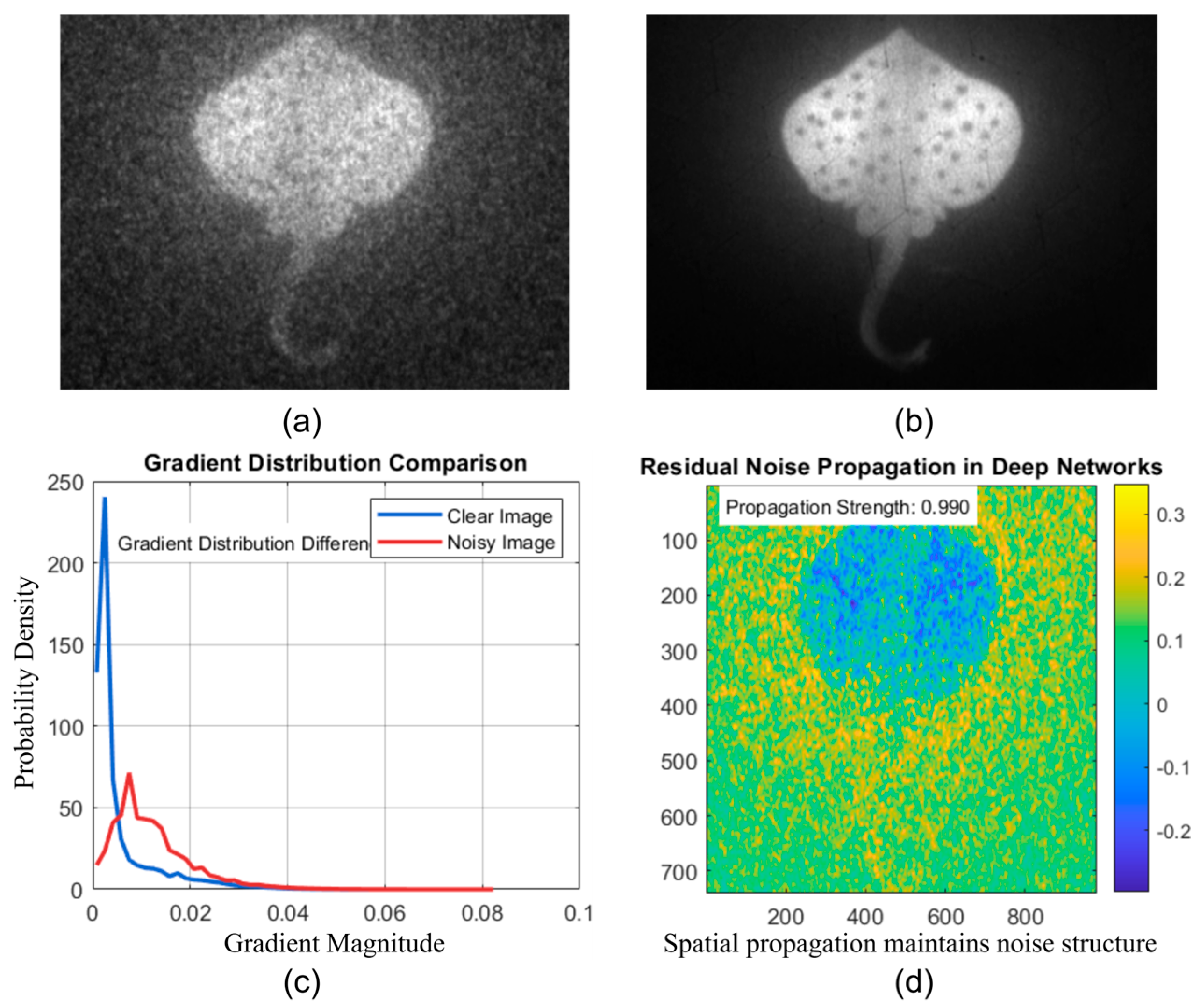

Traditional underwater image restoration techniques, as shown in Figure 1, follow two main approaches to enhancing image quality [8]: one is driven by physical models based on the optical properties of water [9], and the other relies on classical image processing algorithms. Physics-model-driven enhancement methods require precise simulation and modeling of laser transmission in water, either constructing degradation models from water scattering models for recovery or estimating the point spread function (PSF) for blind deconvolution. For instance, Hou et al. [10] incorporated sparse priors on the illumination channel into an extended underwater imaging model, while Voss and Chapin [11] used specialized instruments to measure the PSF in the ocean. However, the path from measurement to modeling involves costly equipment and many complex parameters. Consequently, the resulting underwater imaging models often lack sufficient precision, and the blind restoration algorithms built on them are too time-consuming for real-time use in practical applications. Peng [12] proposed an underwater scene depth estimation technique based on image blur and light absorption, applying the physical principles of underwater light propagation to the image formation model (IFM). However, the complex and diverse nature of the underwater environment is a core obstacle to acquiring “accurate and universal” prior knowledge. Under unfamiliar environmental conditions, the domain-specific nature of such priors often leads to suboptimal restoration.

In recent years, as research on the challenging task of underwater image enhancement has deepened, several specialized underwater optical image restoration algorithms have emerged [13,14,15,16]. Li et al. [17] proposed an iterative color correction and vision-based enhancement framework to address color distortion in underwater images. Cao et al. [18] proposed an adaptive enhancement method for small underwater targets based on dense residual denoising. Li et al. [19] introduced CMFNet, an end-to-end ultra-lightweight underwater image enhancement network. Zhang et al. [20] developed a method that fuses the frequency and spatial domains for underwater image restoration. Yang et al. [21] presented a structure-texture decomposition approach for enhancing underwater image details and edges. Among these, classical image processing algorithms cannot remove scattering noise at the physical level and often amplify it while distorting details. Single-branch end-to-end restoration networks often mistake scattered noise for target detail, producing results that violate the laws of underwater light propagation. Furthermore, physical models and network architectures remain largely “loosely coupled”: the physics does not inform the design of individual branches, which leads to abrupt performance degradation in certain scenarios.

Unlike traditional underwater optical cameras, laser-gated imaging uses pulsed beams with an approximately Gaussian intensity profile. This non-uniform distribution during underwater transmission often leads to overexposure or underexposure of local target features. Furthermore, temporal broadening of the laser pulse during transmission makes it difficult to match the gating signal precisely to the target echo. As a result, correcting this brightness bias is harder for laser-gated images than for conventional underwater images. Many researchers have therefore focused on enhancing underwater laser range-gated images; for instance, Wu et al. [22] proposed a deep-learning-based convolutional sparse coding denoising network to improve resolution, while Liu et al. [23] proposed a U-Net architecture with residual connections. However, most methods do not adequately account for the distribution of noise photons inherent in underwater imaging, which makes it difficult to recover pixels lost to scattering noise and camera dark noise. Therefore, to adapt more precisely to the core characteristics of underwater laser-gated images, existing algorithms must be redesigned to achieve efficient and reliable restoration of such images, as shown in Figure 2.

In this paper, we propose PLPGR-Net, a photon-level physics-guided underwater laser-gated image restoration network. Its main contributions are summarized as follows:

PLPGR-Net achieves effective separation of echo photons from scattering noise by integrating a water scattering background suppression module with a sparse photon perception module to model photon propagation and distribution characteristics. The network utilizes an enhanced U-Net decoder to recover high-frequency image information and introduces a multi-scale discriminator with self-attention to learn true photon distribution patterns, thereby constraining image generation. Ultimately, the algorithm overcomes the limitations of traditional multi-frame fusion methods that rely on temporal continuity, achieving high-quality reconstruction from single-frame underwater laser-gated images.

This paper proposes a multidimensional physical joint-constraint loss framework that translates underwater optical imaging principles into a differentiable optimization objective. The framework integrates gradient consistency, frequency-domain amplitude spectrum constraints, and a sparse photon-region binary cross-entropy loss to jointly guide image reconstruction at three levels: pixel, feature, and photon.

We have developed a highly integrated underwater laser-gated imaging system for underwater detection and constructed a paired dataset of “single-frame gated images and multi-frame enhanced images”, which is important for advancing the research and development of underwater equipment. Experiments demonstrate that our algorithm significantly outperforms existing denoising and underwater image restoration techniques in restoring laser-gated underwater images. This work is expected to help move underwater detection technology from passive environmental adaptation toward active sensing and reconstruction.

3. Methodology

3.1. Overview

This study focuses on resolving the strong coupling between target photons and scattered photons in underwater laser range-gated images. It eliminates the need for complex frame alignment and temporal processing, thereby avoiding motion artifacts that arise when multiple frames are stacked during target or payload platform movement. This approach is suitable for dynamic scenarios and real-time applications. Inspired by the implementation of composite multi-branch recovery tasks in a general framework [

33,

34], and addressing the poor system adaptability and unstable imaging inherent in complex underwater environments for laser-gated imaging, we propose an innovative single-frame image restoration algorithm for underwater laser-gated distance imaging: PLPGR-Net. This achieves efficient, high-quality imaging, further enabling underwater laser range-gated imaging systems with fixed hardware configurations to overcome physical limitations and achieve imaging at greater distances. As shown in

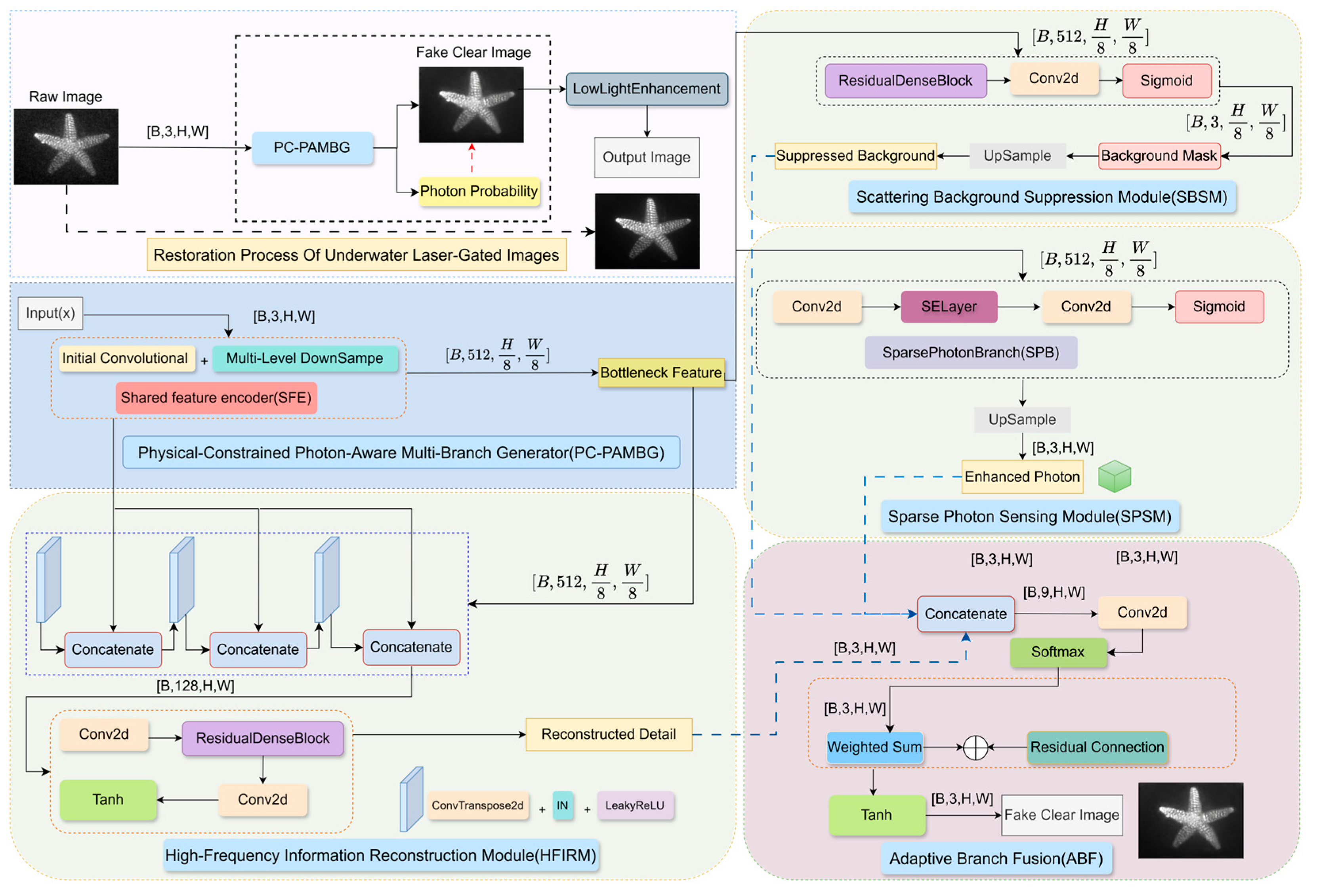

Figure 6, the proposed algorithm’s network architecture is as follows: First, an integrated underwater laser range-gated imaging system acquires time-synchronized degraded images and their corresponding high-quality reference images. PLPGR-Net undergoes end-to-end optimization training. Once fully trained and deployed, it can directly process single-frame degraded images, breaking away from the traditional multi-frame stacking paradigm, requiring only a single forward pass to output restored images. This provides highly robust optical imaging support for complex scenarios such as underwater military reconnaissance and deep-sea exploration.

This underwater laser range-gated single-frame restoration network turns a degraded single frame into a clear restored image end to end through a four-stage architecture: shared low-level feature encoding, decoupled multi-task branches, adaptive weight fusion, and multi-dimensional joint physical loss constraints. The input image is first normalized and then passed to the shared feature encoder (SFE), which downsamples it over several levels to extract initial features capturing fundamental spatial information. After multi-scale bottleneck features have been extracted progressively, the network runs three specialized modules for underwater laser range-gated images in parallel, and their outputs are fed into the adaptive branch fuser (ABF). Within this process, the Scattering Background Suppression Module (SBSM) and the Sparse Photon Sensing Module (SPSM) use physics-guided methods to suppress strong water scattering while locating and enhancing valid target information in low-photon signals. Concurrently, the High-Frequency Information Reconstruction Module (HFIRM) preserves the structural integrity and visual quality of the final output. Finally, a residual connection yields the preliminary reconstruction, and the Multi-Dimensional Joint Physical Loss Module (MDJPLM) provides feedback on output accuracy. ABF, SBSM, SPSM, HFIRM, and MDJPLM are described in detail below.

3.2. Adaptive Branch Fuser

Different regions of underwater laser range-gated images exhibit distinctly different noise-signal coupling. Target areas require enhancement of sparse photon signals, background areas require suppression of scattering noise, and edge regions demand accurate discrimination and preservation of fine features. Traditional fixed-weight fusion cannot adapt to these spatially varying requirements. The ABF module achieves spatially adaptive fusion by dynamically learning a preference weight for each branch output at every pixel location [35], overcoming the limitations of fixed-weight fusion strategies.

This module takes the outputs of the three branches as input and concatenates them along the channel dimension into a 9-channel composite feature representation. To generate the weights, a 1 × 1 convolution learns preliminary fusion weights from this representation, capturing cross-channel dependencies. A softmax is then applied across the three branch weights at each spatial location, so that the three weights at every position sum to 1 and form a probability distribution. Finally, the branch outputs are multiplied element-wise by their weights and summed, giving spatially adaptive, fine-grained fusion. The optimization objective is to minimize the supervised loss so that the fused output approximates the ground truth as closely as possible at the pixel level.
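The per-pixel softmax fusion can be sketched in a few lines. This is an illustration, not the network code: each branch is reduced to one flattened channel, and the per-pixel logits that the 1 × 1 convolution would learn are supplied by hand. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over the branch axis."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_branch_fusion(branches: Sequence[Sequence[float]],
                           logits: Sequence[Sequence[float]]) -> List[float]:
    """Fuse branch outputs pixel by pixel with softmax-normalized weights.

    branches[b][p]: value of branch b at pixel p (one channel shown).
    logits[p][b]: stand-in for the learned 1 x 1 convolution output at pixel p.
    """
    fused = []
    for p in range(len(branches[0])):
        w = softmax(logits[p])  # the branch weights at this pixel sum to 1
        fused.append(sum(w[b] * branches[b][p] for b in range(len(branches))))
    return fused


if __name__ == "__main__":
    # Pixel 0 strongly prefers branch 0; pixel 1 weights all branches equally.
    branches = [[0.1, 0.2], [0.9, 0.4], [0.5, 0.6]]
    logits = [[10.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    print(adaptive_branch_fusion(branches, logits))
```

In the example, the first pixel follows branch 0 almost exactly, while the second pixel receives the plain average of the three branches; a fixed-weight scheme would be forced to use the same mixture at both locations.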

3.3. Scattering Background Suppression Module

In underwater laser range-gated images, backscatter noise from the underwater environment appears as global background interference. This noise fundamentally arises from “the random propagation of photons reflected by impurities in the water” and severely impairs the visibility of target signals. Traditional denoising methods often struggle to distinguish target photons from scattered noise photons, either over-suppressing and losing the target or under-suppressing and leaving residual noise. The SBSM module builds on the Beer–Lambert law [36], a fundamental principle of underwater optical imaging. It uses deep learning to learn global illumination distribution patterns, modeling two physical characteristics of underwater images: the global nature of backscatter noise and the regional non-uniformity of scattering intensity. The result is a spatially varying suppression mask that precisely suppresses background scattering noise while preserving regions that may contain target signals.

The bottleneck features from the shared encoder serve as the input to this module. Residual dense blocks (RDBs) enhance these features, using dense connections and residual learning to capture complex feature dependencies. A 3 × 3 convolution then maps the 512-channel features to 3 channels, and a Sigmoid activation produces a suppression mask with values in the range 0–1. The low-resolution mask is upsampled by a factor of 8 to restore it to the input image size. Finally, element-wise multiplication of the upsampled mask with the original image suppresses the background, darkening it in a spatially adaptive manner.

In Equation (5), a learnable weight parameter (not explicitly defined in the code, so it requires a separate implementation) controls how much the enhanced features contribute to the module output, allowing the model to adjust the strength of feature enhancement adaptively.
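The mask pipeline of the SBSM (Sigmoid, 8× upsampling, element-wise multiplication) can be sketched as follows. Assumptions: a single channel instead of three, mask logits supplied directly instead of being produced by the RDBs and the 3 × 3 convolution, and nearest-neighbour interpolation, since the text does not state the upsampling mode. All names are hypothetical.

```python
import math
from typing import List

Grid = List[List[float]]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function mapping logits to (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def upsample_nearest(grid: Grid, factor: int) -> Grid:
    """Repeat every value factor x factor times (nearest-neighbour upsampling)."""
    out: Grid = []
    for row in grid:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def suppress_background(image: Grid, mask_logits: Grid, factor: int = 8) -> Grid:
    """Darken the image with a sigmoid mask predicted at 1/factor resolution."""
    mask = [[sigmoid(v) for v in row] for row in mask_logits]  # values in (0, 1)
    mask_up = upsample_nearest(mask, factor)                    # back to image size
    return [[px * m for px, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask_up)]


if __name__ == "__main__":
    image = [[1.0] * 16 for _ in range(16)]
    # Keep the top-left block, darken the top-right, halve the bottom-left.
    out = suppress_background(image, [[6.0, -6.0], [0.0, 6.0]])
    print(round(out[0][0], 4), round(out[0][15], 4), out[8][0])
```

Because the mask is multiplicative and bounded in (0, 1), this form can only darken pixels; recovering weak target photons is left to the SPSM branch.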

3.4. Sparse Photon Sensing Module

In underwater laser-gated images, target signals often appear as sparsely distributed photon points. These photon signals are easily overwhelmed by strong backscatter noise, and their photon-counting nature makes the signal discontinuous. Building on the idea of reconstructing sparse photon regions with time-of-flight-correlated single-photon counting [37], the SPSM module addresses sparse signal detection. It uses dilated convolutions to enlarge the receptive field and capture sparse but widely distributed photon events. Combined with a channel attention mechanism that focuses on the most informative feature channels, it outputs a photon presence probability map that guides subsequent signal enhancement.

First, dilated convolution is applied to the input bottleneck features. A 3 × 3 convolution with a dilation factor of 2 enlarges the receptive field from 3 × 3 to 5 × 5 without reducing resolution, effectively capturing sparse but potentially widely distributed photon signals. The resulting features are then refined by channel attention. The channel attention layer first obtains channel-level statistics through global average pooling (GAP), then learns channel importance weights through two fully connected layers, and finally multiplies these weights with the original features to recalibrate the channels. A 1 × 1 convolution maps the 64-channel features to 3 channels, and a Sigmoid activation produces the photon presence probability map. Finally, this probability map is upsampled by a factor of 8 and used for signal enhancement: the module applies a probability-map-guided additive enhancement strategy to strengthen potential target regions.
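Three ingredients of the SPSM can be made concrete: the receptive field of a dilated kernel, squeeze-and-excitation style channel attention, and probability-guided enhancement. This is a sketch, not the module itself. The ReLU between the two fully connected layers and the final sigmoid follow the common squeeze-and-excitation design and are assumptions here, as are the hypothetical enhancement form `x + alpha * p * x` and the gain `alpha`; the text only states that the enhancement is additive and guided by the probability map.

```python
import math
from typing import List, Sequence


def effective_kernel_size(k: int, dilation: int) -> int:
    """Spatial extent covered by a k x k kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)


def channel_attention(features: Sequence[Sequence[float]],
                      w1: Sequence[Sequence[float]],
                      w2: Sequence[Sequence[float]]) -> List[List[float]]:
    """Squeeze-and-excitation style channel recalibration.

    features[c] holds the flattened spatial values of channel c.
    w1 (hidden x C) and w2 (C x hidden) stand in for the two FC layers.
    """
    gap = [sum(ch) / len(ch) for ch in features]                   # global average pooling
    hidden = [max(0.0, sum(w * g for w, g in zip(row, gap))) for row in w1]  # FC + ReLU
    scale = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]                                        # FC + sigmoid, in (0, 1)
    return [[v * s for v in ch] for ch, s in zip(features, scale)]


def additive_enhancement(image: Sequence[float], prob: Sequence[float],
                         alpha: float = 0.5) -> List[float]:
    """Hypothetical probability-guided boost: x + alpha * p * x."""
    return [x + alpha * p * x for x, p in zip(image, prob)]


if __name__ == "__main__":
    print(effective_kernel_size(3, 2))  # 3 x 3 kernel, dilation 2 -> 5
    print(channel_attention([[1.0, 1.0], [2.0, 2.0]], [[0.0, 0.0]], [[0.0], [0.0]]))
    print(additive_enhancement([1.0, 1.0], [0.0, 1.0]))
```

With all-zero FC weights every channel is scaled by sigmoid(0) = 0.5, which makes the recalibration step easy to check by hand; trained weights would instead scale informative channels up relative to the others.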

3.5. High-Frequency Information Reconstruction Module

Beyond suppressing scattered noise and amplifying faint signals, the image texture must also be restored. The High-Frequency Information Reconstruction Module (HFIRM) was therefore designed specifically to restore target contours and edge details. Built on an enhanced U-Net decoder, this module exploits the multi-level features extracted during encoding and uses residual dense blocks to strengthen feature representation, so that the reconstructed image retains structural integrity close to that of the original.

The module takes the bottleneck features as input, upsamples them to 256 × 256 with a first transposed convolution, and concatenates the result along the channel dimension with the output of the encoder's second layer, forming a 512-channel fused feature map. A second transposed convolution raises the resolution to 512 × 512, and the result is concatenated with the output of the encoder's first layer to form a 256-channel feature map. A third upsampling restores the original 1024 × 1024 size while compressing the channel count to 64, after which the result is concatenated with the initial encoded features to form a 128-channel feature map. A 1 × 1 convolution then compresses this to 64 channels. Inside the RDB, five 3 × 3 convolution layers progressively concatenate the preceding features, and a 1 × 1 fusion convolution keeps 64 channels. Finally, a 3 × 3 convolution maps the features to 3 channels, and a hyperbolic tangent (tanh) activation outputs the high-frequency detail reconstruction.
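The resolutions in the decoder (256 → 512 → 1024) imply a 128 × 128 bottleneck, consistent with the 8× upsampling used in SBSM and SPSM. The sketch below checks this with the standard transposed-convolution output-size formula (the one given in the PyTorch `ConvTranspose2d` documentation). The kernel, stride, and padding values are assumptions, because the text does not state them; kernel 4, stride 2, padding 1 is simply a common way to double the resolution exactly.

```python
from typing import List


def conv_transpose_out(size: int, kernel: int = 4, stride: int = 2,
                       padding: int = 1, output_padding: int = 0,
                       dilation: int = 1) -> int:
    """Spatial output size of a transposed convolution."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel - 1) + output_padding + 1)


def decoder_resolutions(bottleneck: int, n_up: int = 3) -> List[int]:
    """Resolution after each of n_up doubling transposed convolutions."""
    sizes = [bottleneck]
    for _ in range(n_up):
        sizes.append(conv_transpose_out(sizes[-1]))
    return sizes


if __name__ == "__main__":
    print(decoder_resolutions(128))
```

A 3 × 3 kernel with stride 2, padding 1, and output padding 1 doubles the size as well, so the choice between these configurations is a design detail rather than a constraint imposed by the resolutions.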

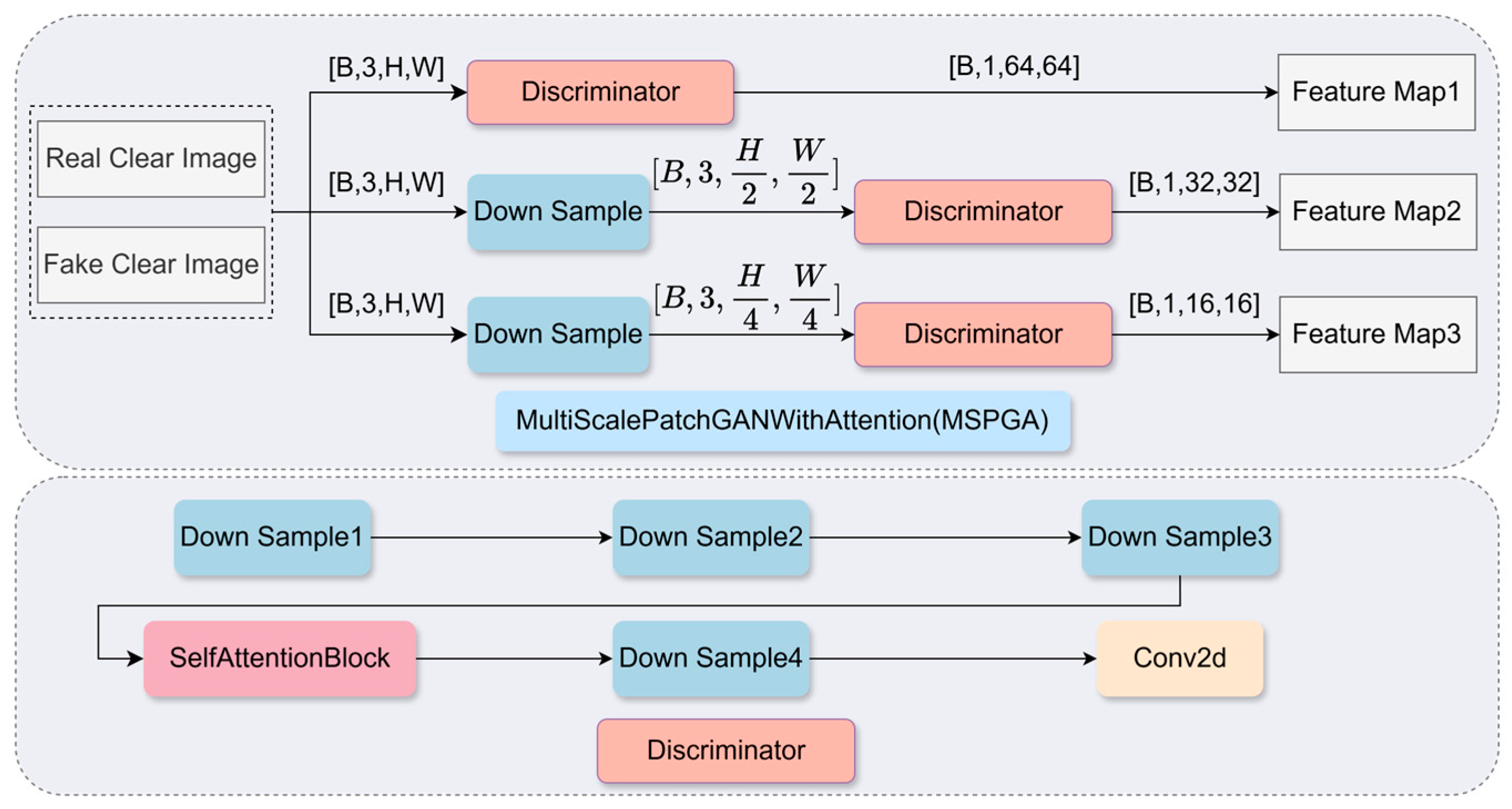

3.6. Multi-Scale PatchGAN Discriminator with Self-Attention Enhancement

Assessing the quality of generated underwater laser-gated images is challenging because generated images may have inconsistent global photon distributions. Traditional discriminators struggle to detect artifacts that are “locally realistic but globally inconsistent”. This module uses a multi-scale architecture to evaluate image authenticity at several spatial scales, and self-attention to capture long-range dependencies and judge the overall consistency of the photon distribution. This dual capability, combining multi-scale local discrimination with long-range dependency capture, indirectly constrains the generator's photon distribution and keeps the reconstruction visually coherent.

As shown in Figure 7, the discriminator takes either a generated image or a real image as input and processes it at three scales. The discriminators at each scale share the same architecture but have independent parameters. Taking the original scale as an example, features are extracted by three successive downsampling stages. Self-attention is then applied to the 256-channel features, after which deeper features are extracted. Finally, patch-level discrimination outputs an authenticity probability for each image patch. The outputs of all three scales together form the final discrimination result.

In Equation (19), $K^{\top}$ denotes the transpose of the key matrix, and $d_k$ is the dimension of the query and key vectors; dividing the dot product by $\sqrt{d_k}$ keeps its magnitude in check and prevents gradient instability. In Equation (20), a learnable weight parameter of the self-attention module controls how much the attention-enhanced features contribute to the original features.
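The attention step described by Equations (19) and (20) can be sketched with plain lists: queries, keys, and values are projected from the features, the query-key dot products are scaled by the square root of the key dimension and normalized with a softmax, and the attended features are added back with a learnable weight. This is a minimal illustration under assumptions: spatial positions are flattened into rows, the projection matrices and the weight `gamma` are supplied by hand rather than learned, and all names are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Sequence[Sequence[float]]) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    out = []
    for row in a:
        m = max(row)
        e = [math.exp(v - m) for v in row]
        s = sum(e)
        out.append([v / s for v in e])
    return out


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix,
                   gamma: float) -> Matrix:
    """Scaled dot-product self-attention with a gamma-weighted residual.

    x: N x C (one row per spatial position); wq, wk: C x d_k; wv: C x C.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(wq[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    attn = softmax_rows(scores)        # each row sums to 1
    attended = matmul(attn, v)
    return [[gamma * a + xi for a, xi in zip(ar, xr)] for ar, xr in zip(attended, x)]


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    x = [[1.0, 0.0], [0.0, 1.0]]
    print(self_attention(x, identity, identity, identity, gamma=1.0))
```

With `gamma = 0` the block reduces to an identity mapping, so the discriminator can start from purely local convolutional features and learn how much long-range context to mix in.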

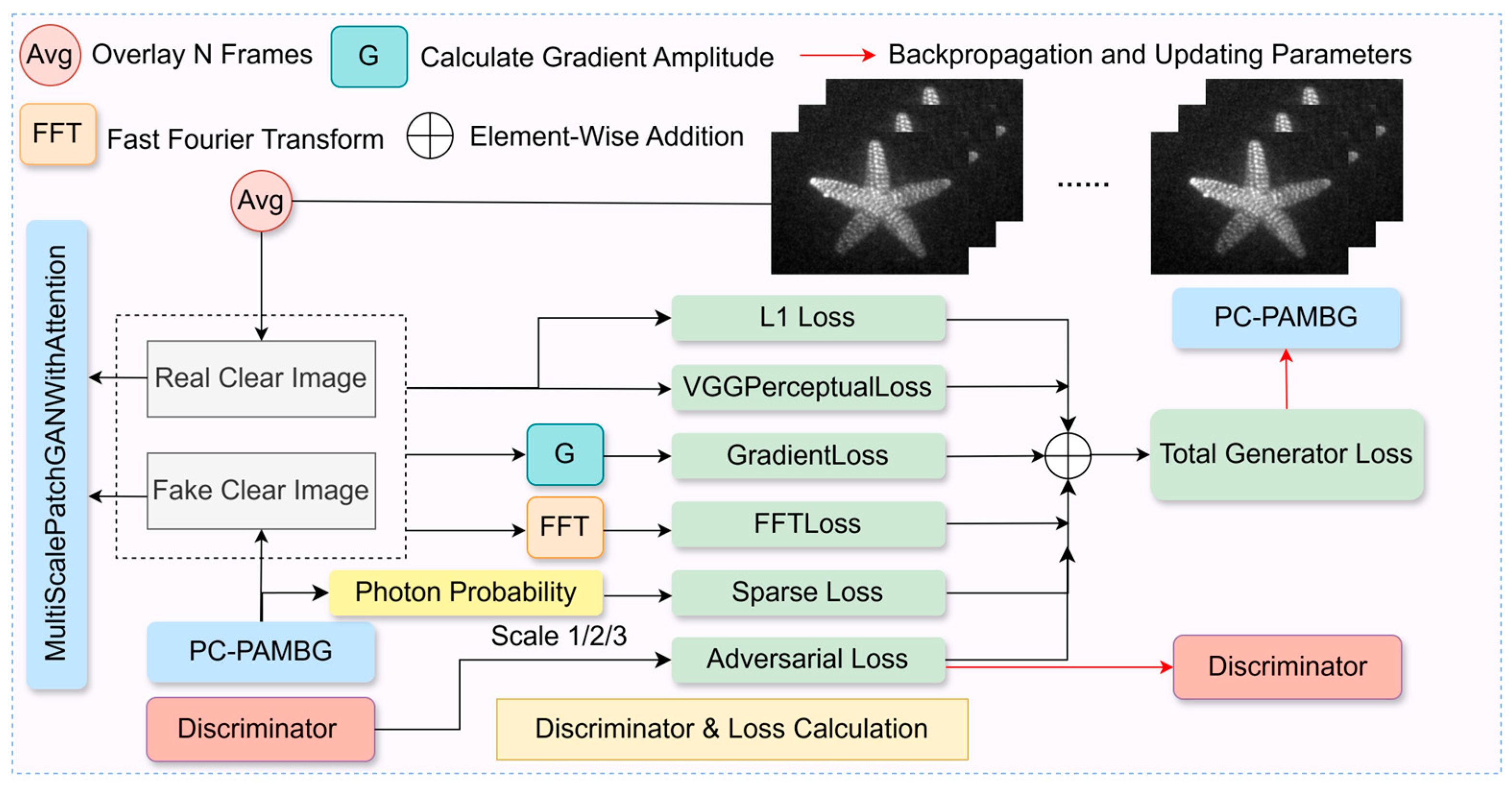

3.7. Multi-Dimensional Joint Physical Loss Module

Because a single loss function cannot capture every aspect of image quality, this module combines six loss functions with distinct physical interpretations, as shown in Figure 8. Together they cover pixel-level accuracy, feature-level fidelity, edge sharpness, frequency-domain texture consistency, and photon-level specificity, forming a comprehensive optimization objective for underwater laser-gated images that promotes both visual quality and physical plausibility.

The module takes the outputs of the forward pass as input and computes six loss components. Pixel-level, adversarial, and perceptual losses enforce consistency between generated and real images. A gradient loss sharpens edges, and a frequency-domain loss constrains texture consistency by comparing amplitude spectra obtained with the fast Fourier transform. Finally, we designed a sparse photon-assisted loss: using the brightness distribution of the clear image as weak supervision, a binary cross-entropy (BCE) term constrains the photon probability map to agree with the true signal region. This highlights target signal photons, guides the model to focus on recovering sparse photon signals, and lets it learn photon statistics, improving recovery quality in photon-sensitive regions.

In Equation (27), $\mathbb{E}$ denotes the mathematical expectation and $D(\cdot)$ the output of the discriminator. In Equation (32), the values of the respective weights are .
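The three physics-motivated terms (gradient consistency, amplitude-spectrum consistency, and sparse-photon BCE) can be sketched on small single-channel images. Assumptions, none of which are stated in the text: L1 distances for the gradient and spectrum terms, forward finite differences for gradients, a fixed brightness threshold for the weak photon labels, and a naive 2D DFT in place of an FFT to stay within the standard library. The weighting of the terms is omitted. All function names are hypothetical.

```python
import cmath
import math
from typing import List

Grid = List[List[float]]


def gradient_l1(pred: Grid, target: Grid) -> float:
    """Mean L1 distance between horizontal and vertical finite differences."""
    h, w = len(pred), len(pred[0])
    total, count = 0.0, 0
    for i in range(h):
        for j in range(w):
            if j + 1 < w:  # horizontal gradient
                total += abs((pred[i][j + 1] - pred[i][j]) - (target[i][j + 1] - target[i][j]))
                count += 1
            if i + 1 < h:  # vertical gradient
                total += abs((pred[i + 1][j] - pred[i][j]) - (target[i + 1][j] - target[i][j]))
                count += 1
    return total / count


def dft2_amplitude(img: Grid) -> Grid:
    """Amplitude spectrum |F(u, v)| via a naive 2D DFT (use an FFT in practice)."""
    h, w = len(img), len(img[0])
    return [[abs(sum(img[x][y] * cmath.exp(-2j * math.pi * (u * x / h + v * y / w))
                     for x in range(h) for y in range(w)))
             for v in range(w)] for u in range(h)]


def amplitude_l1(pred: Grid, target: Grid) -> float:
    """Mean L1 distance between the amplitude spectra of two images."""
    a, b = dft2_amplitude(pred), dft2_amplitude(target)
    n = len(a) * len(a[0])
    return sum(abs(p - t) for ra, rb in zip(a, b) for p, t in zip(ra, rb)) / n


def sparse_photon_bce(prob: Grid, clear: Grid, threshold: float = 0.5,
                      eps: float = 1e-7) -> float:
    """BCE between the photon probability map and weak labels from the clear image.

    Clear-image pixels brighter than the (hypothetical) threshold count as signal.
    """
    total, n = 0.0, 0
    for prow, crow in zip(prob, clear):
        for p, c in zip(prow, crow):
            y = 1.0 if c > threshold else 0.0
            p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
            total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
            n += 1
    return total / n
```

The gradient and spectrum terms are zero for a perfect reconstruction and penalize blurred edges and missing high-frequency energy respectively, which a plain pixel loss tends to tolerate; the BCE term acts only on the probability map, so it shapes where the SPSM branch looks for photons rather than the output intensities directly.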

4. Experimental Results

4.1. Dataset Description

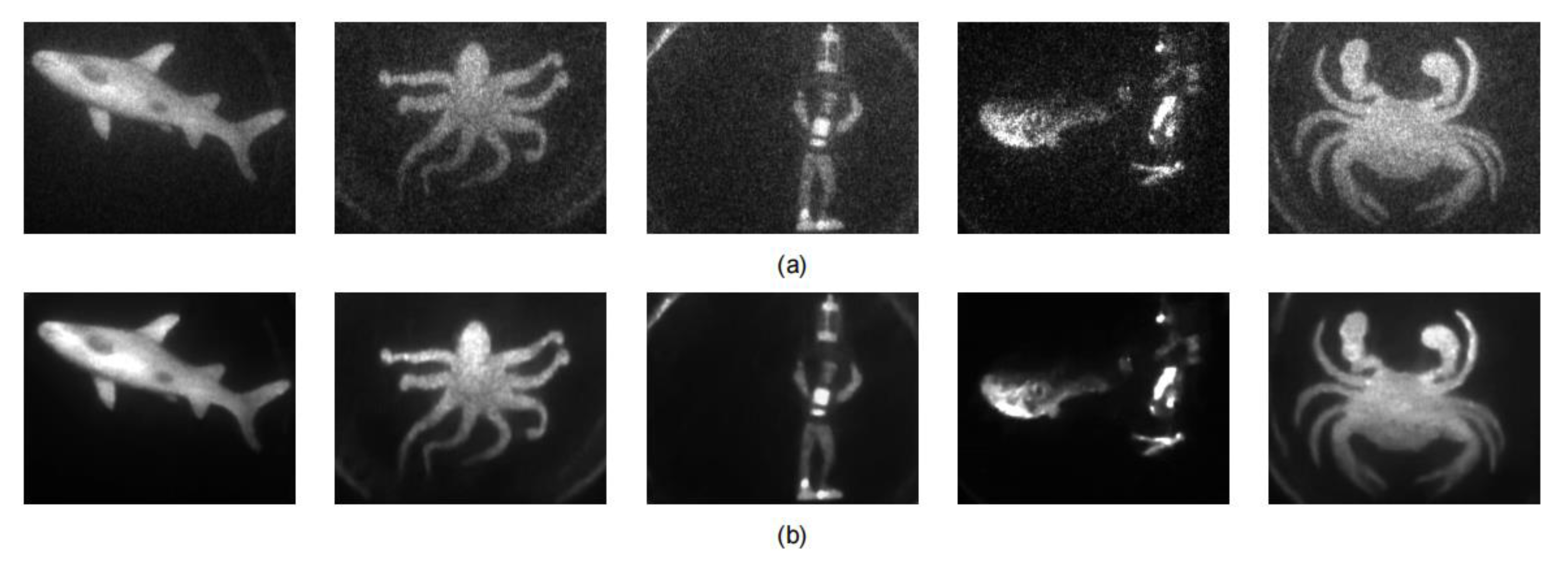

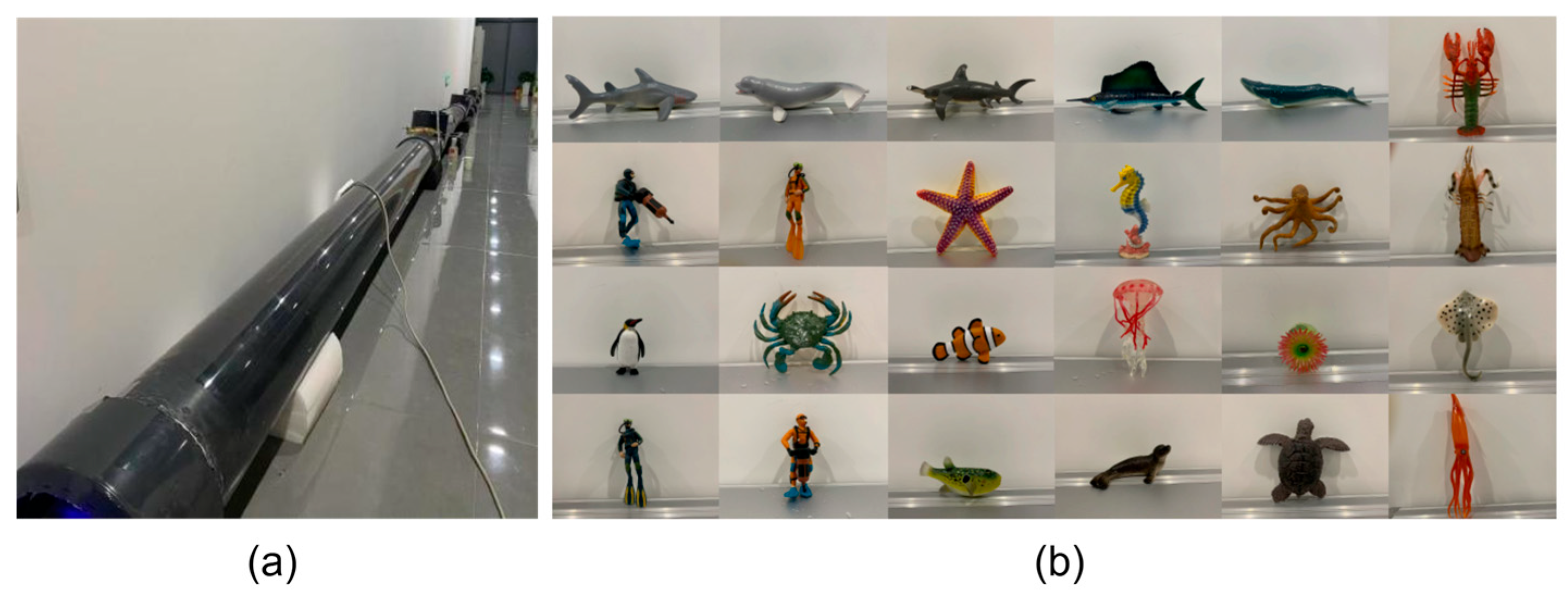

This section introduces the underwater laser-gated image dataset used for training and evaluating algorithm effectiveness. We are releasing for the first time a dataset of reconstructed underwater laser-gated slices, containing multiple “underwater real-world target scenarios” such as marine life and divers, as shown in

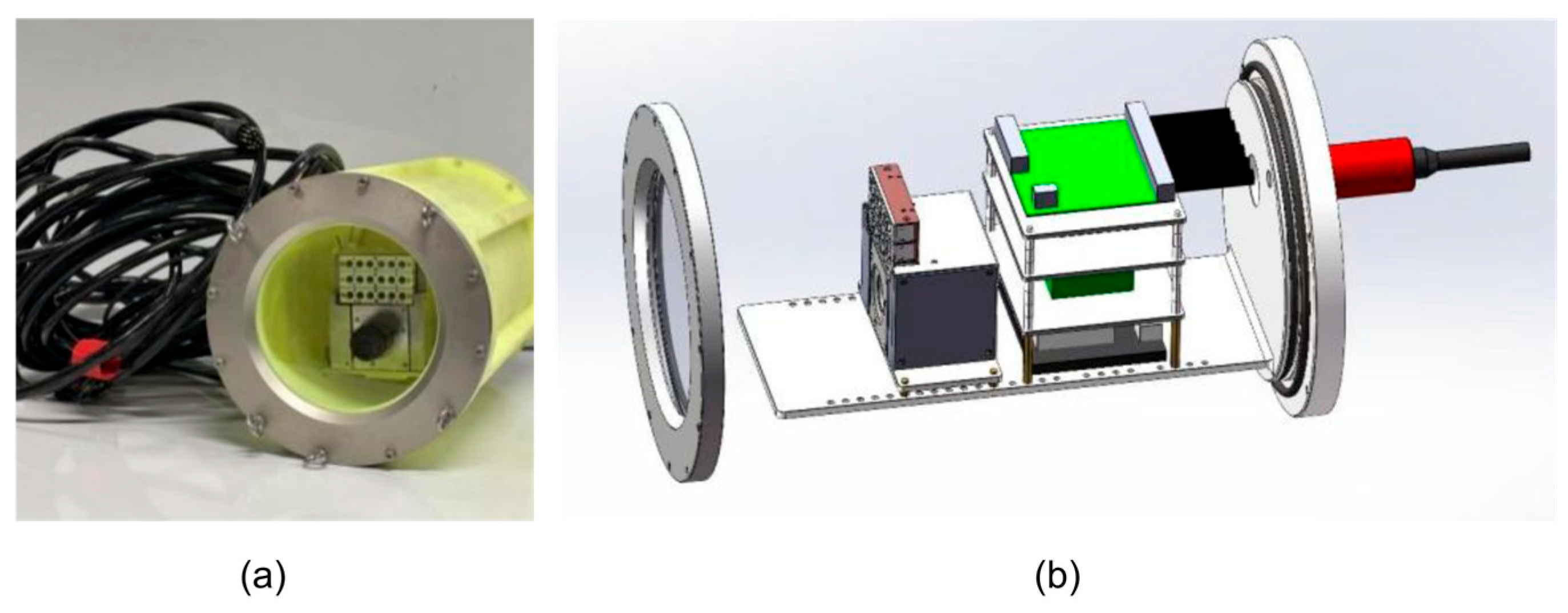

Figure 9. To construct this dataset, we designed an integrated underwater nanosecond laser imaging system.

Figure 10 shows its three-dimensional structural model. The core components include a 450 nm ultra-high repetition rate semiconductor laser with a maximum average power of approximately 1.5 W and a miniaturized nanosecond-level ultrafast single-photon gated camera featuring a maximum shutter repetition rate of 500 KHz, a minimum shutter width of 3 ns, and a frame rate up to 98 fps. The laser possesses a field of view of 6.8° in the horizontal direction and 4.9° in the vertical direction, ensuring sufficient field of view for practical aquatic applications while maintaining effective laser beam focusing during emission. To guarantee data source stability and validity, we constructed an indoor pipeline measuring 16 m in length and 20 cm in diameter as an experimental testing platform for the equipment. Over a four-month period, the underwater laser-gated imaging system captured 1120 single-frame gated images alongside corresponding clear reference images of marine life in various forms and combinations.

To obtain ultra-low-noise, high-signal-to-noise-ratio “true” images as benchmarks for supervised learning, we used a static staring method in fixed-delay mode, with an exposure time of 10 ms, a gate width of 20 ns, and a laser repetition rate of 100 kHz. By actively synchronizing the laser pulses with the camera gate, we capture the window of echo signals reflected from the target. On this basis, the number of stacked frames was adjusted dynamically from 20 to 100 according to the target's reflectivity, balancing detail preservation against noise suppression and producing a reliable reference image for each single-frame underwater laser-gated image under the experimental conditions. To simulate the diverse scattering noise of real underwater environments and improve model generalization, we set up a controlled experiment in which 1 mL of pure milk was added to the water pipe at each step as a scattering medium, producing a series of noisy single-frame images with varying noise intensities and degradation levels. Each noisy single-frame image was strictly paired with a reference image acquired at the same medium concentration.
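Two quantities behind this acquisition protocol can be checked numerically. With a 10 ms exposure at a 100 kHz repetition rate, each frame integrates 1000 laser pulses. For frame stacking, the sketch assumes the reference is a plain pixel-wise average and that the per-frame noise is independent, zero-mean, and Gaussian, in which case the noise standard deviation falls roughly as one over the square root of the frame count. These are assumptions: the text does not specify the stacking operator, and static backscatter that is identical in every frame would not average out. The function names and noise level are hypothetical.

```python
import math
import random
import statistics
from typing import List, Sequence


def pulses_per_frame(exposure_s: float, rep_rate_hz: float) -> int:
    """Number of laser pulses (and gate openings) integrated in one exposure."""
    return round(exposure_s * rep_rate_hz)


def stack_frames(frames: Sequence[Sequence[float]]) -> List[float]:
    """Average co-registered frames pixel by pixel."""
    n = len(frames)
    return [sum(px) / n for px in zip(*frames)]


def expected_std_after_stacking(n_frames: int, sigma: float = 0.1) -> float:
    """Noise std of an n-frame average under independent zero-mean noise."""
    return sigma / math.sqrt(n_frames)


def noise_std_after_stacking(n_frames: int, sigma: float = 0.1,
                             n_pixels: int = 2000, seed: int = 0) -> float:
    """Empirical noise std of an n-frame average of a flat, noisy scene."""
    rng = random.Random(seed)
    truth = 0.5
    frames = [[truth + rng.gauss(0.0, sigma) for _ in range(n_pixels)]
              for _ in range(n_frames)]
    return statistics.pstdev(stack_frames(frames))


if __name__ == "__main__":
    print(pulses_per_frame(10e-3, 100e3))
    for n in (20, 100):
        print(n, expected_std_after_stacking(n), noise_std_after_stacking(n))
```

Under these assumptions, stacking 20 to 100 frames reduces random noise by a factor of about 4.5 to 10, which is why the stack depth can be traded against target reflectivity as described above.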

4.2. Implementation Details

During training, the Adam optimizer was used to update the parameters of both the generator and the discriminator, with β1 = 0.5 and β2 = 0.999; a β1 of 0.5 is a common choice for stabilizing adversarial training. The initial learning rate was 2 × 10−4. Gradually decreasing the learning rate in later stages prevented loss oscillations and further improved the model's accuracy in separating echo signals from scattering noise. In addition, to increase the diversity of the training data, the degradation level of the images was raised progressively by adding milk to tap water, improving generalization to real underwater scenarios.
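The optimizer settings above can be illustrated with a scalar version of the standard Adam update. The decay schedule is not specified in the text, so `linear_decay_lr` below is a hypothetical example (constant for the first half of training, then linear decay to zero), not the schedule actually used.

```python
import math
from typing import Optional, Tuple


def adam_step(param: float, grad: float, m: float, v: float, t: int,
              lr: float = 2e-4, beta1: float = 0.5, beta2: float = 0.999,
              eps: float = 1e-8) -> Tuple[float, float, float]:
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad         # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1 - beta1 ** t)               # bias corrections
    v_hat = v / (1 - beta2 ** t)
    param -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


def linear_decay_lr(epoch: int, total_epochs: int, base_lr: float = 2e-4,
                    decay_start: Optional[int] = None) -> float:
    """Hypothetical schedule: constant, then linear decay to zero."""
    if decay_start is None:
        decay_start = total_epochs // 2
    if epoch < decay_start:
        return base_lr
    return base_lr * max(0.0, (total_epochs - epoch) / (total_epochs - decay_start))


if __name__ == "__main__":
    # Minimize f(x) = x^2 starting from x = 1; the gradient is 2x.
    x, m, v = 1.0, 0.0, 0.0
    for t in range(1, 4):
        x, m, v = adam_step(x, 2 * x, m, v, t)
        print(t, x)
```

Because Adam normalizes the step by the running gradient magnitude, each early step moves the parameter by roughly the learning rate regardless of gradient scale. The low β1 shortens the momentum memory, which helps the generator and discriminator react quickly to each other's updates.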

4.3. Quantitative Evaluation

To build a multidimensional evaluation framework, we quantified both the restoration performance and the practical deployment value of each method along five dimensions: objective error, structural fidelity, detail preservation, subjective perception, and model efficiency. Six key metrics from image restoration and engineering practice were selected. Mean absolute error (MAE) is a pixel-based metric that is less sensitive to outliers than squared-error metrics, making it well suited to the degradation caused by “brightness inhomogeneity due to scattering noise”. Peak signal-to-noise ratio (PSNR), derived from pixel error, gives an intuitive measure of overall restoration accuracy. The Structural Similarity Index (SSIM) [38] emphasizes perceived structural similarity and specifically quantifies improvements in “structural blurring caused by target-noise coupling”. LPIPS [39] quantifies perceptual differences in restoration quality as judged by humans, matching the subjective visual deficits caused by “detail loss due to photon sparsity”. In addition, to reflect the inherent computational cost of each model, we report the total number of model parameters (Params) and floating-point operations (FLOPs) to assess model compactness and inference efficiency, which determine deployment feasibility in practical scenarios such as underwater embedded devices. These metrics align “degradation issues–evaluation dimensions–engineering requirements”, validating the proposed method from performance through practicality.
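The two pixel-error metrics can be written directly from their definitions; the sketch below uses flattened images and assumes intensities normalized to [0, 1] (set `max_val` to 255 for 8-bit data). SSIM and LPIPS are omitted here because SSIM needs windowed local statistics and LPIPS requires a pretrained network.

```python
import math
from typing import Sequence


def mae(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error over all pixels."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def psnr(pred: Sequence[float], target: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_val ** 2 / mse)


if __name__ == "__main__":
    target = [0.5, 0.5, 0.5, 0.5]
    pred = [0.51, 0.51, 0.51, 0.51]
    print(mae(pred, target), psnr(pred, target))
```

A uniform error of 0.01 on a [0, 1] scale gives an MSE of 10^-4 and hence a PSNR of 40 dB; because PSNR is logarithmic in MSE, each further 10 dB corresponds to a tenfold reduction in squared error.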


4.4. Comparison with State-of-the-Art Methods

To comprehensively validate the performance of PLPGR-Net on underwater laser-gated image restoration, we selected advanced image restoration methods as baselines: seven algorithms spanning three categories, namely a traditional enhancement algorithm (BM3D [40]), deep learning-based image denoising and restoration algorithms (DnCNN, USRnet [41], MPRNet [43], NAFNet [44], DDNM [45]), and an underwater image restoration algorithm (CWR [42]). By covering seven representative algorithms from three technical pathways, this study validates PLPGR-Net's performance in the specific degradation scenarios of underwater laser-gated images. For a fair comparison, all methods use the same training set, comprising 80% of the total dataset, and the same test set, comprising 10% of the total. All neural networks were trained from scratch until convergence. BM3D, a non-data-driven method that requires no training, was evaluated on the same test set to keep comparison conditions consistent.

As shown in Table 1, PLPGR-Net achieved an average PSNR of 36.27 dB on underwater laser-gated images across varying photon densities. This is a 44.62% improvement over the traditional BM3D algorithm (25.08 dB), a 24.55% improvement over CWR (29.12 dB), an underwater image restoration algorithm with a similar architecture, and a 42.07% improvement over the multi-scale restoration model MPRNet (25.53 dB). We attribute this advantage to PLPGR-Net's physics-guided layers, which model the propagation of single photons: the generated photon probability map accurately distinguishes target photons from scattering noise. PLPGR-Net also shows clear advantages in structural integrity and detail preservation, reaching nearly 90% similarity to the multi-frame stacked clear images. These results indicate that it restores finer details and produces higher-resolution images.
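The relative improvements quoted above follow from the PSNR values in Table 1 as (ours − baseline) / baseline. The short check below recomputes them; note that this is a ratio of dB values, a reporting convention rather than a ratio of signal powers.

```python
def relative_gain(ours: float, baseline: float) -> float:
    """Relative PSNR improvement in percent: (ours - baseline) / baseline * 100."""
    return (ours - baseline) / baseline * 100.0


baselines = {"BM3D": 25.08, "CWR": 29.12, "MPRNet": 25.53}
for name, db in baselines.items():
    print(f"{name}: {relative_gain(36.27, db):.2f}%")
```

The loop prints 44.62%, 24.55%, and 42.07% for BM3D, CWR, and MPRNet respectively.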

In addition, PLPGR-Net is compact. It has 5.17 M parameters, far fewer than other high-performance models such as NAFNet (116.41 M) and DDNM (552.81 M), which allows efficient use of computational resources. Given the camera resolution of 1600 × 1088, the model’s moderate FLOPs keep full-resolution images tractable in practical engineering applications: a single 1600 × 1088 image is restored within one second.
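The sub-second figure depends on the hardware and the measurement protocol. A minimal, framework-agnostic timing harness might look like the following. `median_latency` and the copy-based stand-in model are hypothetical placeholders for the trained network.

```python
import statistics
import time
from typing import Any, Callable


def median_latency(fn: Callable[[Any], Any], x: Any, warmup: int = 3, runs: int = 10) -> float:
    """Median wall-clock time (seconds) of fn(x) over `runs` timed calls.

    Warm-up calls are discarded so one-off costs (lazy initialisation,
    caching, kernel compilation) do not inflate the measurement.
    """
    for _ in range(warmup):
        fn(x)
    times = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(x)
        times.append(time.perf_counter() - start)
    return statistics.median(times)


# Hypothetical stand-in for the network: copies a 1600 x 1088 frame row by row.
frame = [[0] * 1600 for _ in range(1088)]
t = median_latency(lambda img: [row[:] for row in img], frame, warmup=1, runs=3)
print(f"{t:.4f} s per 1600 x 1088 frame")
```

The median is used instead of the mean because it is less affected by occasional scheduling hiccups. On a GPU, kernels run asynchronously, so the wrapped function should synchronize the device before returning (for example with `torch.cuda.synchronize()` in PyTorch). Otherwise the timer measures only the kernel launch.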

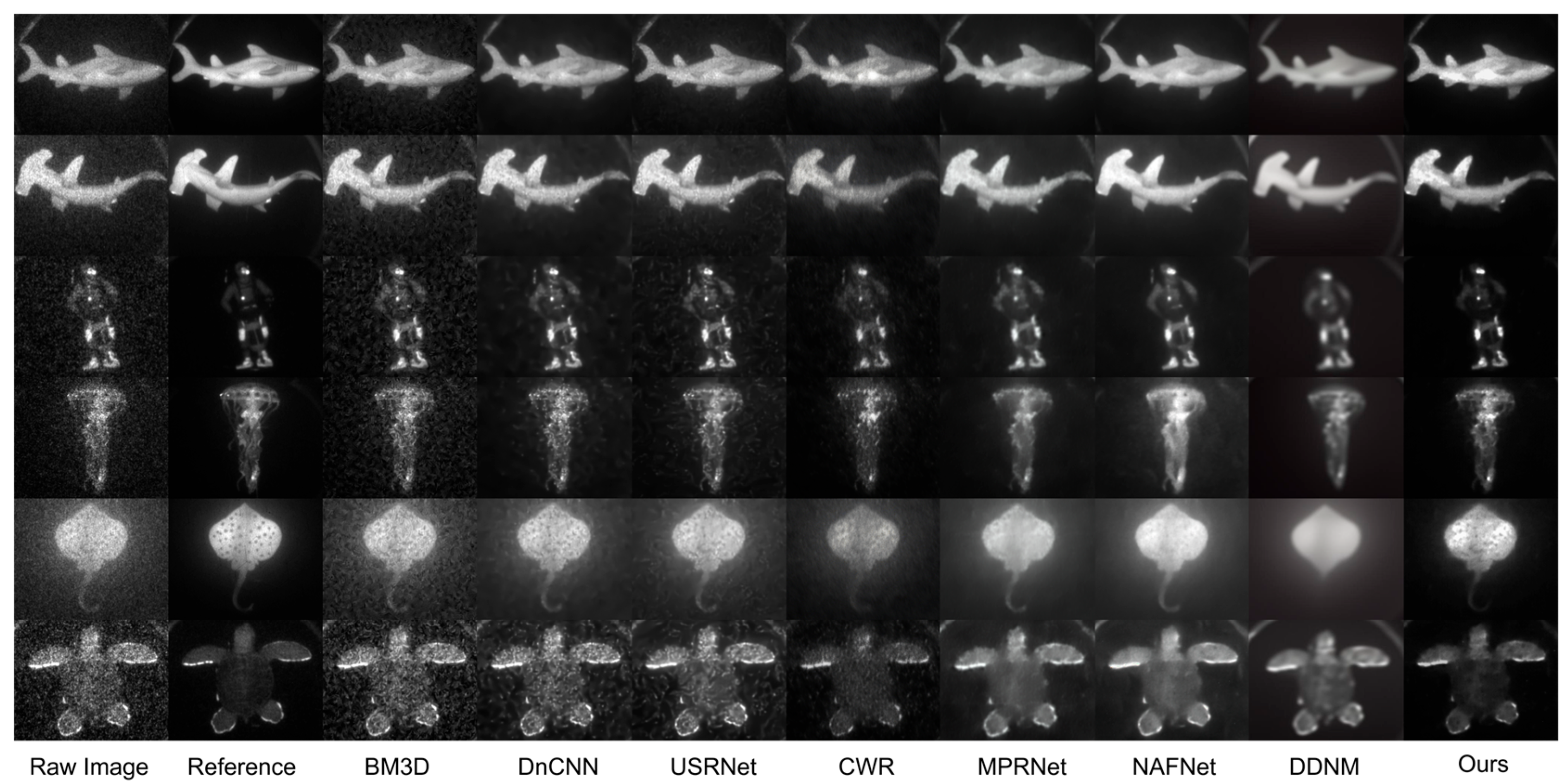

As shown in Figure 11, we evaluated the model’s generalization capability by processing images with scattering noise ranging from weak to strong. Because it preserves sparse photon signals, PLPGR-Net produces reconstructions of underwater laser-gated images that are visually more plausible and practically more useful than those of the competing algorithms. The spatial distribution of the reconstructed photon signals closely matches the actual scene, with no significant loss of genuine photons and no spurious ones. Among the deep learning restoration algorithms, DnCNN lacks a photon-specific design and therefore fails to filter out scattered noise photons, which appear as low-intensity photons. NAFNet preserves most photons but blurs the targets. Thus, although these deep learning-based methods appear visually close to PLPGR-Net, they reduce noise mainly by blurring scattered noise photons, which puts them at a clear disadvantage in preserving high-frequency details.

In summary, PLPGR-Net uses its physically guided, multi-branch adaptive fusion design to address the core visual challenges of underwater laser-gated image reconstruction. It reconstructs sparse photon signals precisely without generating artifacts. Its advantages in both quantitative performance and qualitative visual quality, together with its stability in complex scenarios, show that it is a more reliable and practical reconstruction solution for this task.
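PLPGR-Net’s adaptive fusion layer is specified elsewhere in the paper, and the sketch below should not be read as its implementation. It shows one common way to fuse several branch outputs adaptively: per-pixel weights from a softmax over branch scores, so that each output pixel is a convex combination of the branches. The array layout, the `adaptive_fuse` helper, and the random inputs are hypothetical. In a trained network the logits would come from a learned layer.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = 0) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def adaptive_fuse(branches: np.ndarray, logits: np.ndarray) -> np.ndarray:
    """Per-pixel weighted sum of B branch outputs.

    branches: (B, H, W) outputs of, e.g., background-suppression,
              sparse-photon and detail branches (hypothetical layout).
    logits:   (B, H, W) unnormalised per-pixel scores for each branch.
    """
    weights = softmax(logits, axis=0)  # weights sum to 1 at every pixel
    return (weights * branches).sum(axis=0)


rng = np.random.default_rng(0)
branches = rng.random((3, 4, 4))
fused = adaptive_fuse(branches, rng.normal(size=(3, 4, 4)))
print(fused.shape)  # (4, 4)
```

The softmax weights sum to one at every pixel, so the fused value always lies between the smallest and largest branch values. This prevents the fusion from amplifying any single branch, while still letting each region (background versus target) favour a different branch.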

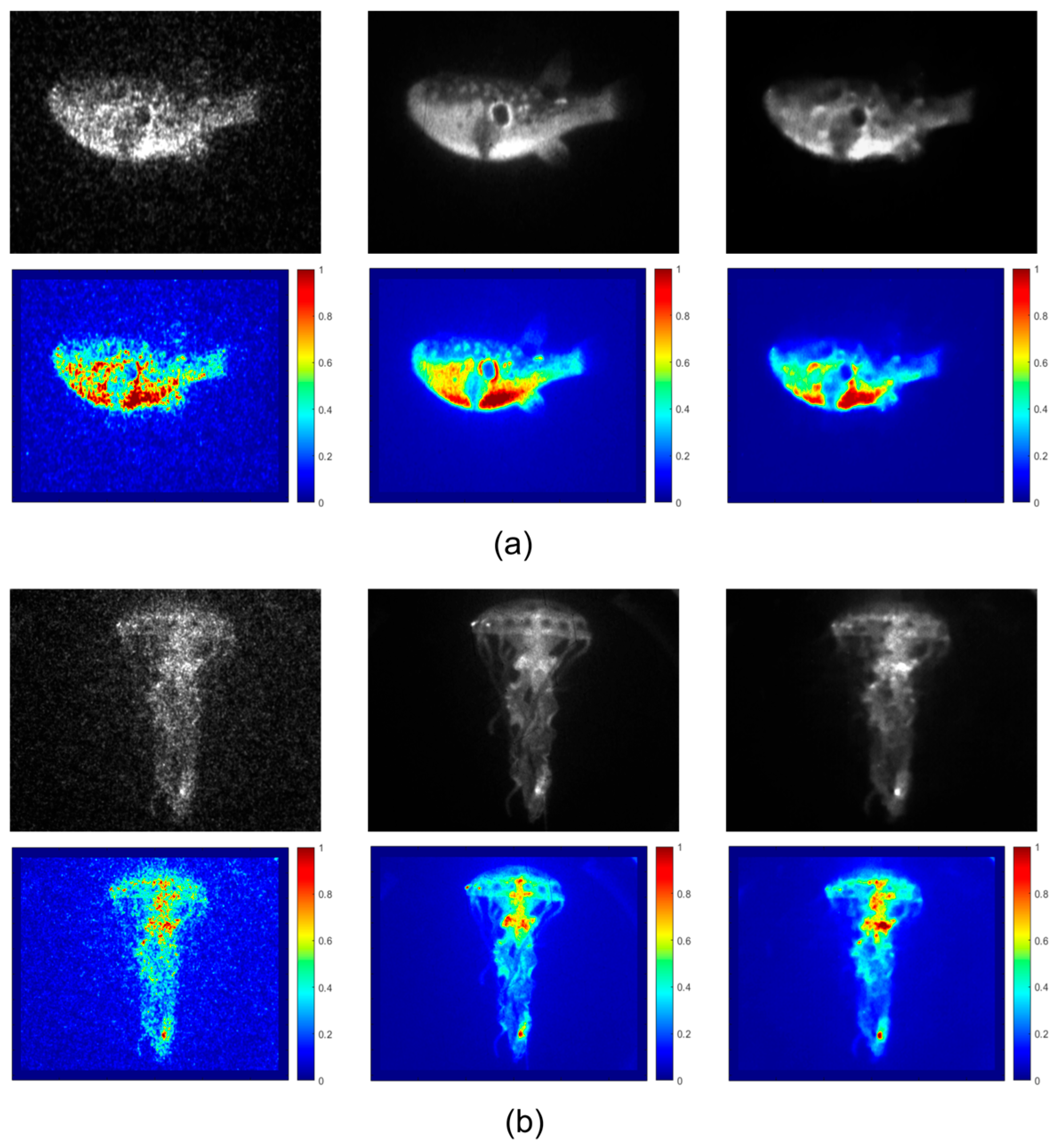

As shown in Figure 12, we constructed photon-density heatmaps of the original image, the clear reference image, and the restored image. The heatmap of the clear reference image exhibits a highly ordered photon density distribution and serves as the reference standard. The heatmap of the original image, which contains water scattering noise, clearly exposes defects in the photon distribution. Numerous randomly distributed yellow/red patches of medium-to-high brightness are interspersed within the blue, low-density regions that correspond to the water background. These noise patches blur and fragment the target contours, and some genuine photon signals become indistinguishable from them, making it impossible to visually differentiate “valid photons” from “scattered noise”. In the restored image, by contrast, the photon distribution closely approximates that of the reference. Uneven scattering noise in the background is strongly suppressed, and the medium-to-high brightness patches in the target area match the reference in shape, position, and photon density gradient. This heatmap behavior (thorough background noise removal combined with precise restoration of target photons) directly visualizes the algorithm’s ability to reconstruct true photon distributions. It also conveys how closely the restoration matches the real scene more intuitively than numerical metrics alone.
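The paper does not specify how the heatmaps were computed. One plausible construction, sketched below as an assumption, treats pixel intensity as a proxy for photon count and averages it over a sliding window to obtain a local photon-density map. The mean absolute difference between the restored and reference maps then gives a single number to accompany the visual comparison. The window size, the toy images, and the `density_map`/`density_mae` helpers are hypothetical.

```python
import numpy as np


def density_map(img: np.ndarray, win: int = 15) -> np.ndarray:
    """Local photon density: mean intensity in a win x win window (win odd),
    computed with a summed-area table; borders use edge padding."""
    r = win // 2
    padded = np.pad(img.astype(np.float64), r, mode="edge")
    # Summed-area table with a leading zero row and column.
    sat = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    total = (sat[win:win + h, win:win + w] - sat[:h, win:win + w]
             - sat[win:win + h, :w] + sat[:h, :w])
    return total / (win * win)


def density_mae(a: np.ndarray, b: np.ndarray, win: int = 15) -> float:
    """Mean absolute difference between two photon-density maps."""
    return float(np.abs(density_map(a, win) - density_map(b, win)).mean())


# Toy example: a square target plus Poisson "scattering" photons.
rng = np.random.default_rng(1)
reference = np.zeros((128, 128))
reference[40:90, 40:90] = 1.0
noisy = reference + rng.poisson(0.3, reference.shape)
print(density_mae(noisy, reference))
```

The summed-area table makes the cost independent of the window size. To render a map in the blue-to-red style described above, `matplotlib.pyplot.imshow(density_map(img), cmap="jet")` is one option.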

4.5. Ablation Studies

We conducted systematic ablation experiments to validate the effectiveness of each component of PLPGR-Net. As shown in Table 2, the experimental setup included six progressive configurations. The baseline model is a standard U-Net trained with L1 loss only. Adding the Background Suppression Module (SBSM) to the baseline raised PSNR from 26.53 dB to 31.46 dB and SSIM from 0.599 to 0.830. This mainly improves noise suppression in background regions but does not adequately restore target details. Further incorporating the Sparse Photon Sensing Module (SPSM) yielded a PSNR of 33.61 dB and an SSIM of 0.867, substantially improving the recovery of sparse target signals. Integrating the background suppression, sparse photon detection, and detail feature reconstruction branches raised PSNR to 34.41 dB and SSIM to 0.883, establishing a basic multi-branch collaborative processing capability. On top of this, introducing an adaptive fusion layer and a self-attention mechanism in the discriminator maintained a high PSNR while raising SSIM to 0.892. Visual quality also improved markedly, with a better balance between global consistency and local realism in the generated images. The full PLPGR-Net model, which integrates all proposed components and the full set of loss functions, achieved the best performance on the test set, improving PSNR by 9.74 dB and SSIM by 0.293 over the baseline (to 36.27 dB and 0.892, respectively). In visual evaluations, it also showed strong background purification, sparse photon enhancement, and detail fidelity, confirming the synergy among the modules and the effectiveness of the overall architectural design.
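The step-by-step gains implied by the ablation figures can be tabulated directly. The snippet uses only the PSNR/SSIM values stated in the text. The adaptive-fusion/self-attention configuration is left out because its PSNR is not given numerically, so the last step spans two configurations. The full-model row is computed as the baseline plus the reported improvements, and the `step_gains` helper is hypothetical.

```python
# (configuration, PSNR in dB, SSIM) as stated in the ablation text.
ablation = [
    ("Baseline U-Net (L1)", 26.53, 0.599),
    ("+ SBSM", 31.46, 0.830),
    ("+ SPSM", 33.61, 0.867),
    ("+ three branches", 34.41, 0.883),
    # Fusion/self-attention row omitted (PSNR not stated numerically).
    ("Full PLPGR-Net", 26.53 + 9.74, 0.599 + 0.293),
]


def step_gains(rows):
    """PSNR/SSIM gain of each configuration over the previous row."""
    return [(name, p1 - p0, s1 - s0)
            for (_, p0, s0), (name, p1, s1) in zip(rows, rows[1:])]


for name, d_psnr, d_ssim in step_gains(ablation):
    print(f"{name:<18} dPSNR = {d_psnr:+.2f} dB   dSSIM = {d_ssim:+.3f}")
```

The breakdown makes the diminishing returns explicit: background suppression contributes about half of the total PSNR gain, and later components add progressively smaller increments.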

5. Discussion and Conclusions

This study developed an integrated underwater laser-gated imaging platform. Using a high-precision synchronized laser emitter and a gated ICMOS camera with nanosecond-level gating, it acquired high-quality data of underwater targets under varying turbidity conditions. On this platform, we systematically built a large-scale underwater laser-gated image dataset. The dataset contains blurred-sharp image pairs covering various target types and water conditions, providing a solid data foundation for training deep learning models. Using this dataset, we proposed PLPGR-Net (Photon-Layer Photon-Gated Restoration Network), a deep sparse-photon-adaptive enhancement network. Through multi-branch collaborative processing and physics-constrained optimization, PLPGR-Net achieves high-fidelity restoration of single-frame laser-gated slices. It suppresses backscatter noise while preserving target detail, yielding clear output images with markedly improved visual quality.

This research addresses several critical challenges in underwater laser-gated imaging. First, target signals and water scattering noise are strongly coupled because both consist of photons; to tackle this, we proposed a photon coupling disentanglement mechanism that separates signal photons from noise photons. Second, to address pixel loss and photon sparsity, we designed a dedicated sparse photon enhancement branch that preserves and amplifies sparse photon signals. Third, traditional multi-frame stacking relies on a static-scene assumption and suffers from motion artifacts; to avoid both, we achieved high-quality restoration from a single underwater laser-gated slice, which substantially improves the method’s practicality and robustness. More broadly, this work offers a new technical route for pushing beyond the physical imaging range limits of underwater laser-gated systems. By integrating the representational power of deep learning, physical prior knowledge of underwater optics, and the specific characteristics of underwater laser-gated images, the approach advances underwater vision technology. It also provides technical support for marine exploration, underwater engineering, military applications, and scientific research, with both theoretical value and broad application prospects.
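The contrast with multi-frame stacking can be illustrated with a toy simulation. The sketch below is not the paper’s acquisition or reference-generation protocol. It models each gated frame as Poisson photon counts around a hypothetical expected-intensity image, averages n frames of a static scene, and spells out PSNR as 10·log10(peak²/MSE). Averaging reduces the shot-noise variance by a factor of n, so PSNR grows by roughly 10·log10(n) dB. This holds only while the scene stays still between frames, which is the assumption that single-frame restoration removes.

```python
import numpy as np


def psnr(ref: np.ndarray, est: np.ndarray, peak: float) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    mse = np.mean((ref.astype(np.float64) - est.astype(np.float64)) ** 2)
    return float(10.0 * np.log10(peak ** 2 / mse))


rng = np.random.default_rng(0)
expected = np.full((64, 64), 0.5)  # toy background: mean photons per pixel
expected[16:48, 16:48] = 4.0       # toy target region


def capture(n_frames: int) -> np.ndarray:
    """Average n independent Poisson frames of a *static* toy scene."""
    frames = rng.poisson(expected, size=(n_frames, *expected.shape))
    return frames.mean(axis=0)


for n in (1, 4, 16, 64):
    print(n, round(psnr(expected, capture(n), peak=expected.max()), 2))
```

Each fourfold increase in frame count buys about 6 dB here. If the target moved between frames, the average would smear it, which is the motion artifact that single-frame restoration avoids.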