An Unsupervised Obstacle Segmentation Method for Forward-Looking Sonar Based on Teacher–Student Transfer Learning

Abstract

1. Introduction

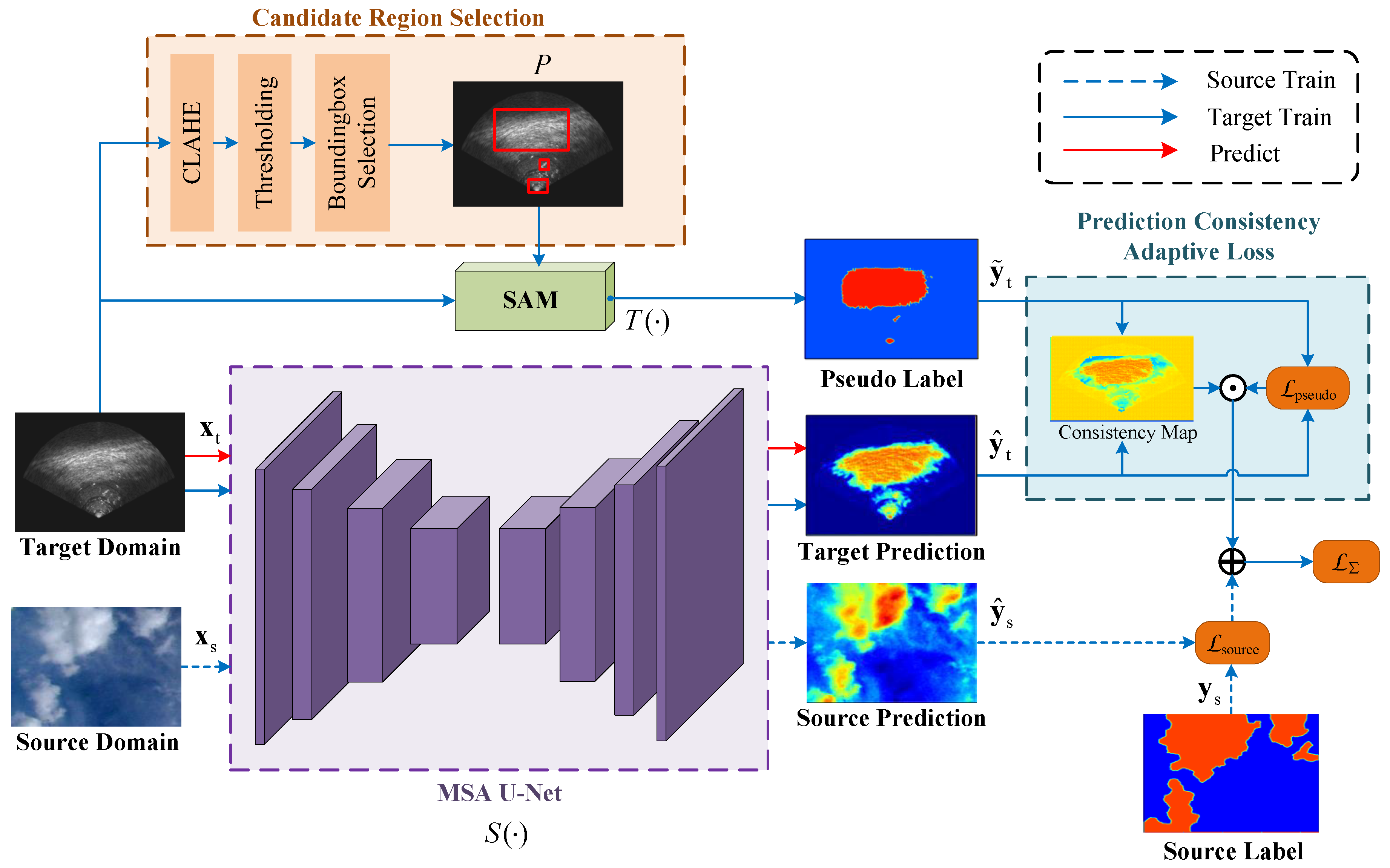

- A teacher–student framework is proposed, in which the student model is trained with supervision in the source domain (optical images) to learn basic segmentation capabilities. Simultaneously, a pre-trained SAM network serves as the teacher model and produces pseudo-labels in the target domain (sonar images) to guide the transfer learning process.

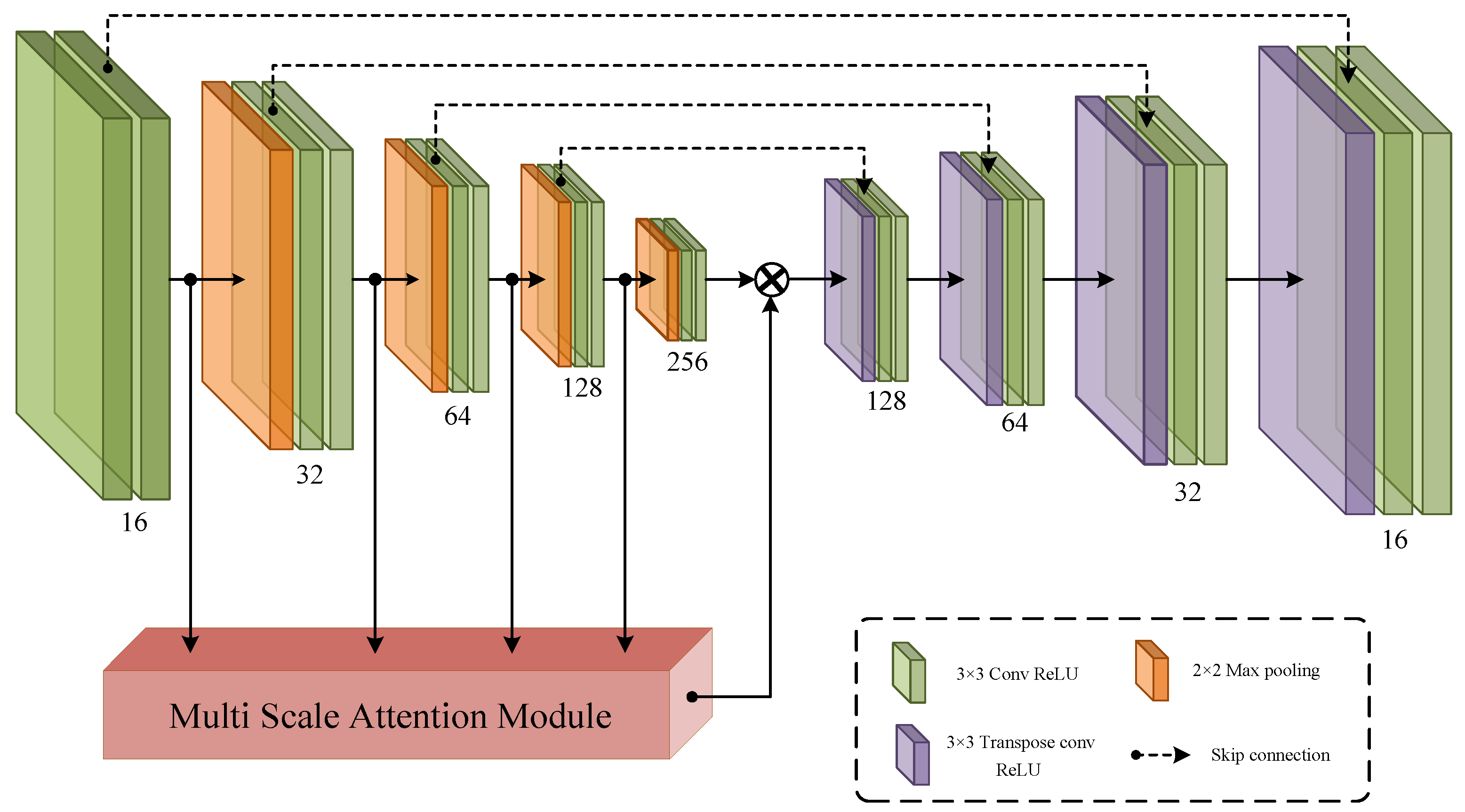

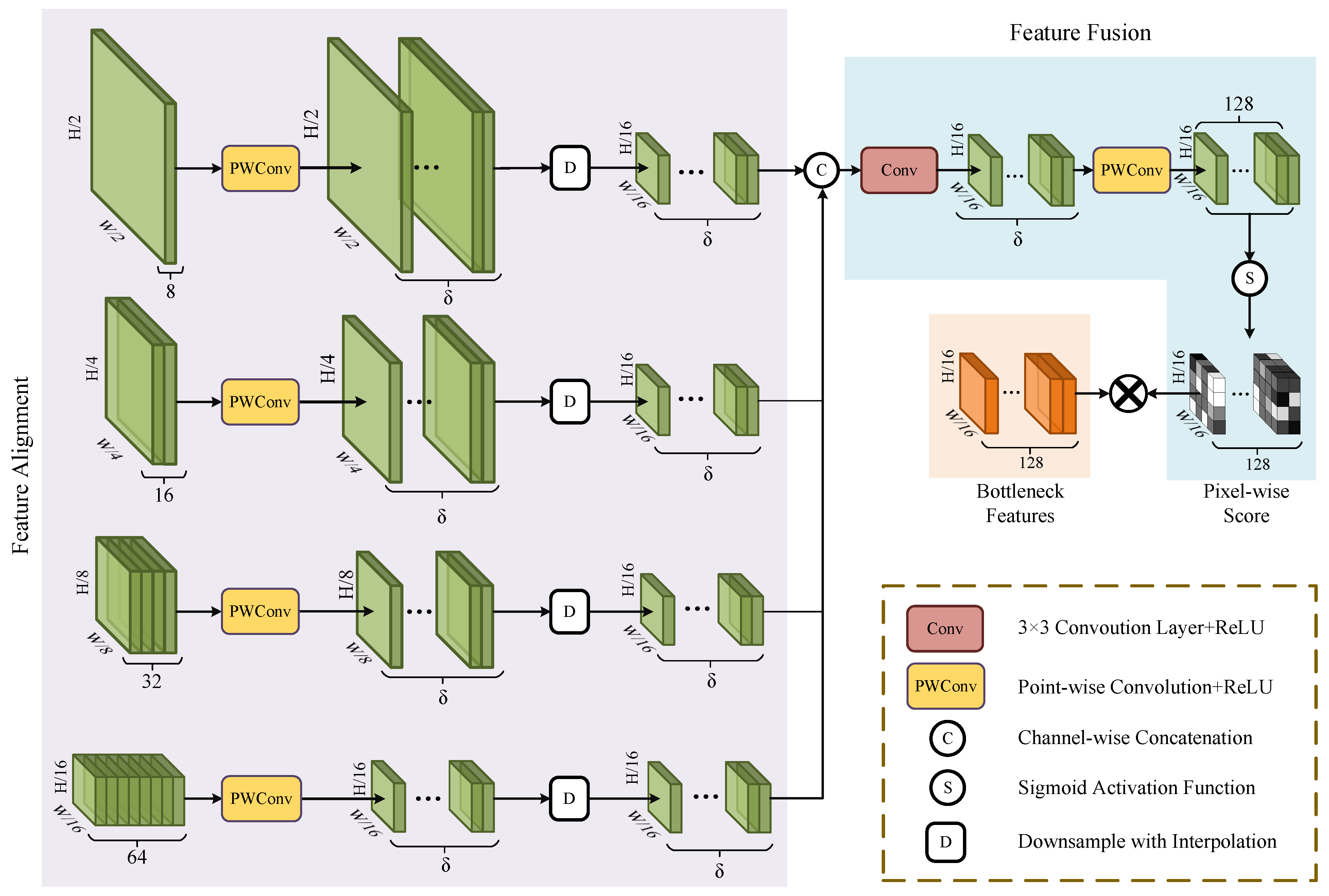

- A multi-scale attention module is designed to improve the performance of U-Net by emphasizing features across different receptive fields. This module enhances the model’s multi-scale representation by generating element-wise attention weights from the encoder features to refine the bottleneck features.

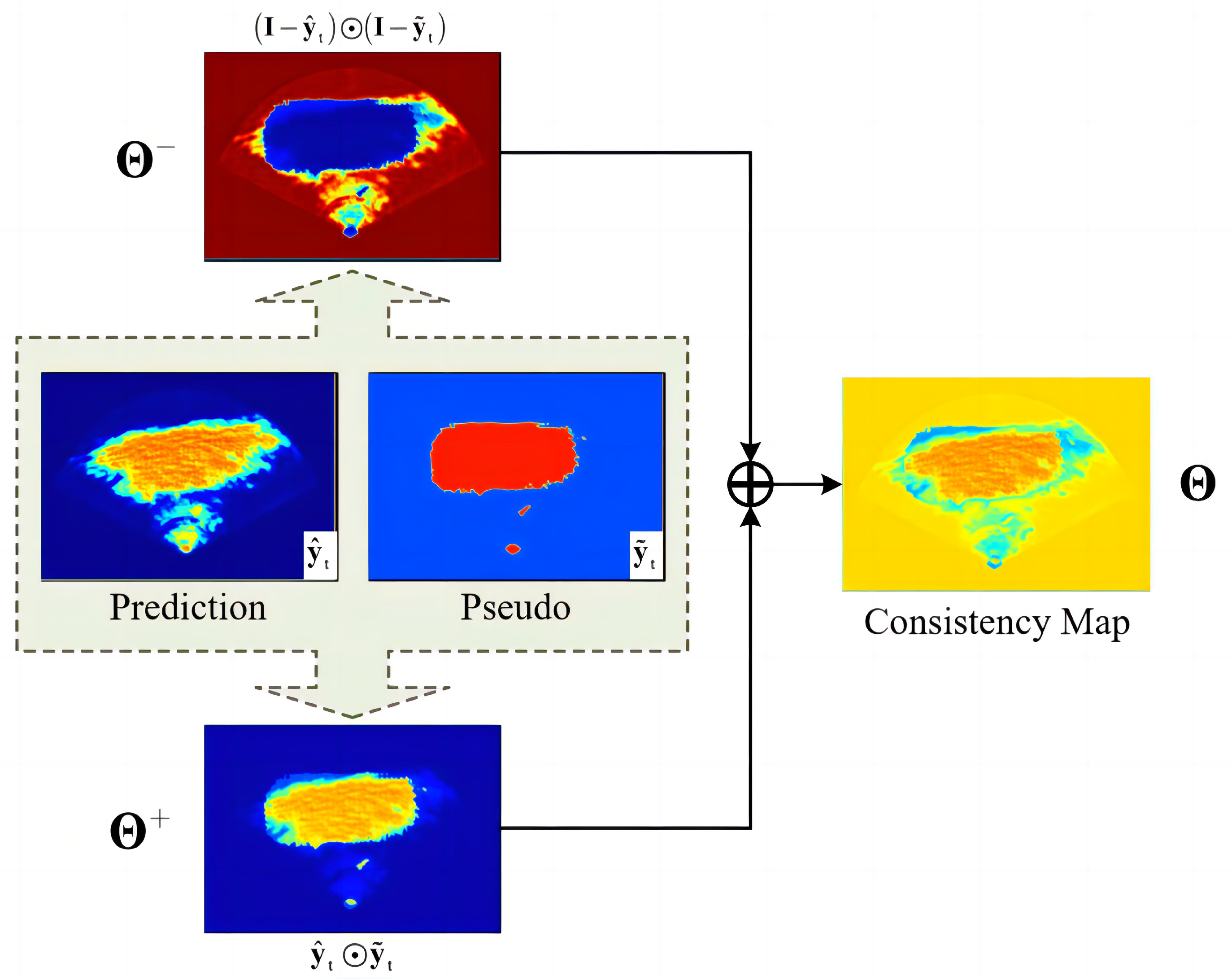

- An adaptive supervision weight adjustment method is proposed based on the consistency between pseudo-labels and student predictions. For each target-domain sample, the consistency between the teacher and student predictions is computed and represented as a pixel-wise consistency map. The guidance intensity is then adjusted dynamically according to these consistency maps.
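As a minimal sketch of the consistency-based weighting described in the last contribution, the snippet below computes a pixel-wise consistency map between a teacher pseudo-label and the student's foreground probability, then uses that map to weight a binary cross-entropy term on a target-domain image. Several choices here are assumptions made for illustration and are not taken from the paper: consistency is defined as `1 - |p_student - y_teacher|`, the map is used directly as a per-pixel weight (so disagreeing pixels are down-weighted), and the function names `consistency_map` and `weighted_pseudo_label_loss` are hypothetical.

```python
import numpy as np

def consistency_map(student_prob, pseudo_label):
    """Pixel-wise agreement in [0, 1]; 1 means the student fully agrees with the pseudo-label.

    Assumed definition for illustration: 1 - |student probability - pseudo-label|.
    """
    return 1.0 - np.abs(student_prob - pseudo_label)

def weighted_pseudo_label_loss(student_prob, pseudo_label, eps=1e-7):
    """Binary cross-entropy against the pseudo-label, weighted per pixel by consistency."""
    p = np.clip(student_prob, eps, 1.0 - eps)
    bce = -(pseudo_label * np.log(p) + (1.0 - pseudo_label) * np.log(1.0 - p))
    w = consistency_map(student_prob, pseudo_label)
    # Normalise by the total weight so the loss scale does not depend on the map's sum.
    return float(np.sum(w * bce) / (np.sum(w) + eps))

# Toy 2x2 example: the student disagrees with the teacher at pixel (1, 0).
student = np.array([[0.9, 0.2], [0.6, 0.1]])
teacher = np.array([[1.0, 0.0], [0.0, 0.0]])

cmap = consistency_map(student, teacher)
loss = weighted_pseudo_label_loss(student, teacher)
print(cmap)
print(loss)
```

In this toy case the disagreeing pixel receives the lowest weight, so it contributes less to the target-domain loss than it would under a plain mean. Whether the guidance should decrease or increase with consistency is a design decision the contribution summary does not spell out, so the direction used here should be read as one possible choice.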

2. Related Works

2.1. U-Net Sonar Image Segmentation

2.2. Unsupervised Domain Adaptation

2.3. Teacher–Student Model

3. Methodology

3.1. Overview

3.2. MSA U-Net

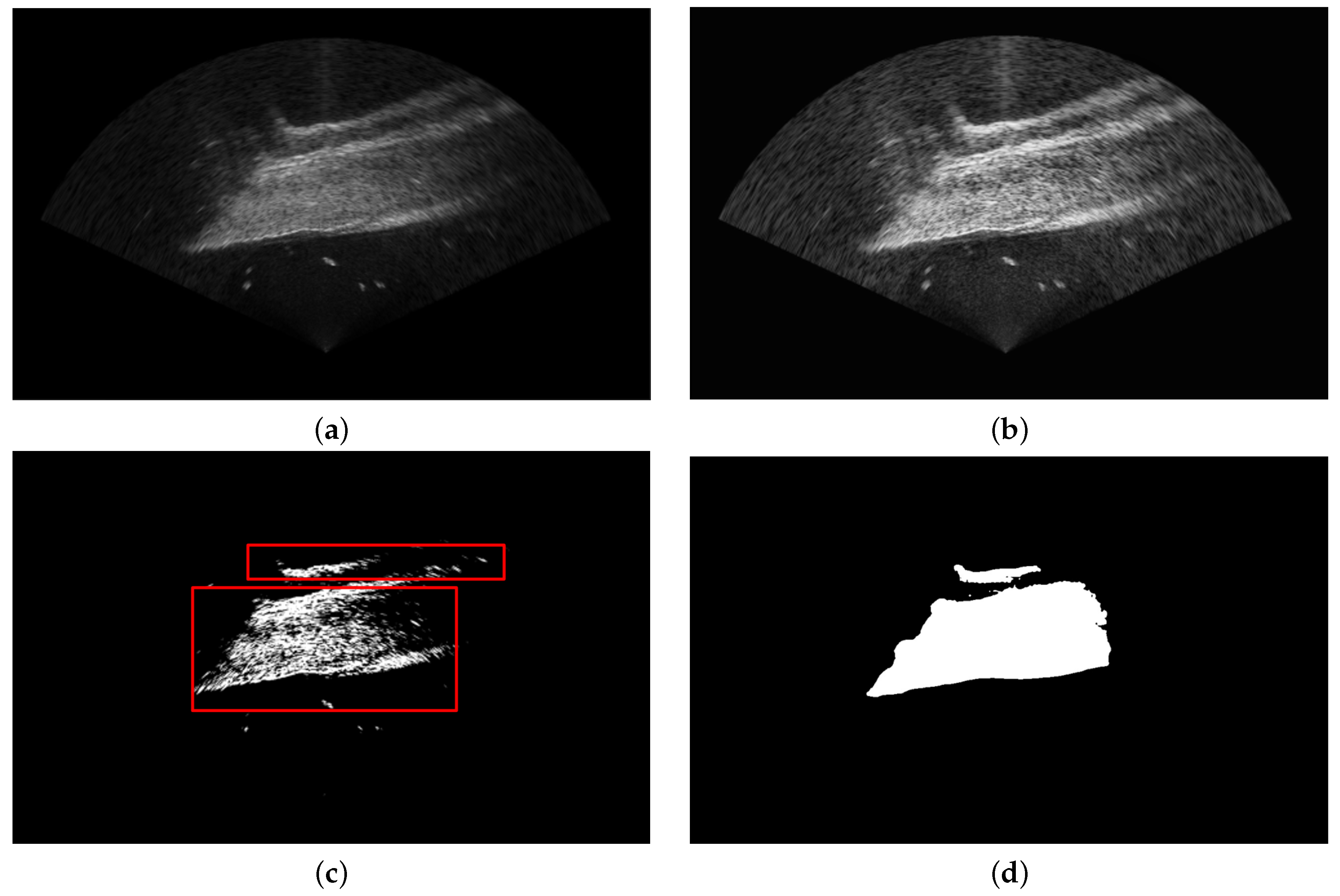
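The multi-scale attention idea summarised in the contributions (attention weights generated from encoder features and applied element-wise to the bottleneck features) can be illustrated with a small NumPy sketch. This is not the paper's MSA module: a real implementation would use learned convolutions, whereas here average pooling and a channel mean stand in for the learned projections, and the attention is spatial only (broadcast across channels). The names `avg_pool_to` and `multi_scale_attention` are hypothetical.

```python
import numpy as np

def avg_pool_to(feat, out_hw):
    """Average-pool a (C, H, W) feature map to (C, h, w); H and W must be multiples of h and w."""
    c, H, W = feat.shape
    h, w = out_hw
    return feat.reshape(c, h, H // h, w, W // w).mean(axis=(2, 4))

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def multi_scale_attention(encoder_feats, bottleneck):
    """Refine bottleneck features with weights derived from encoder features at several scales."""
    h, w = bottleneck.shape[1:]
    # One spatial summary per encoder scale; the channel mean is a stand-in for a learned projection.
    summaries = [avg_pool_to(f, (h, w)).mean(axis=0) for f in encoder_feats]
    attention = sigmoid(np.mean(summaries, axis=0))  # shape (h, w), values in (0, 1)
    return bottleneck * attention[None, :, :], attention

rng = np.random.default_rng(0)
encoder_feats = [rng.normal(size=(4, 16, 16)), rng.normal(size=(8, 8, 8))]
bottleneck = rng.normal(size=(16, 4, 4))

refined, attention = multi_scale_attention(encoder_feats, bottleneck)
print(refined.shape, attention.shape)
```

The point of the sketch is the data flow: features from different receptive fields are brought to the bottleneck resolution, fused into a weight map, and multiplied into the bottleneck features.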

3.3. Candidate Region Selection for SAM

3.4. Consistency-Aware Joint Loss Function

4. Experiments

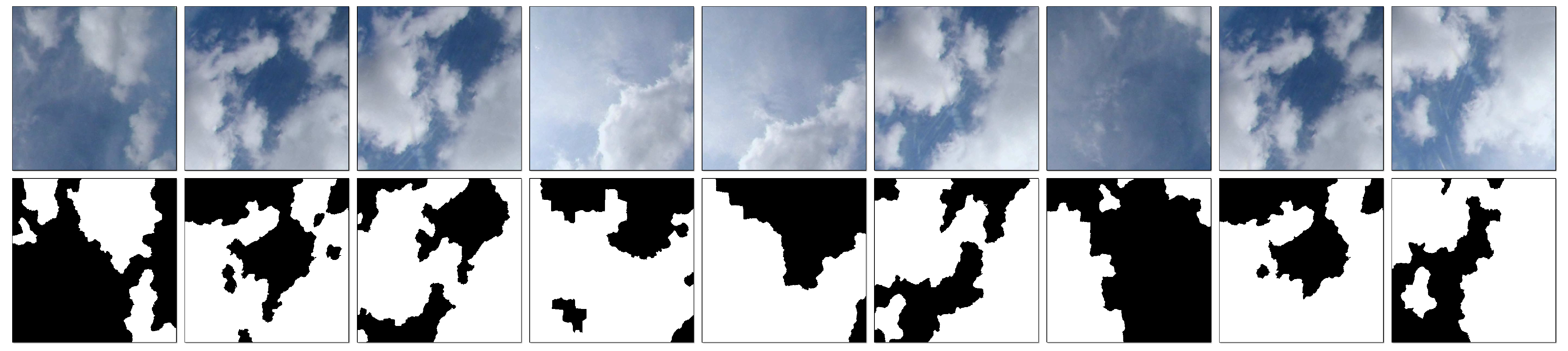

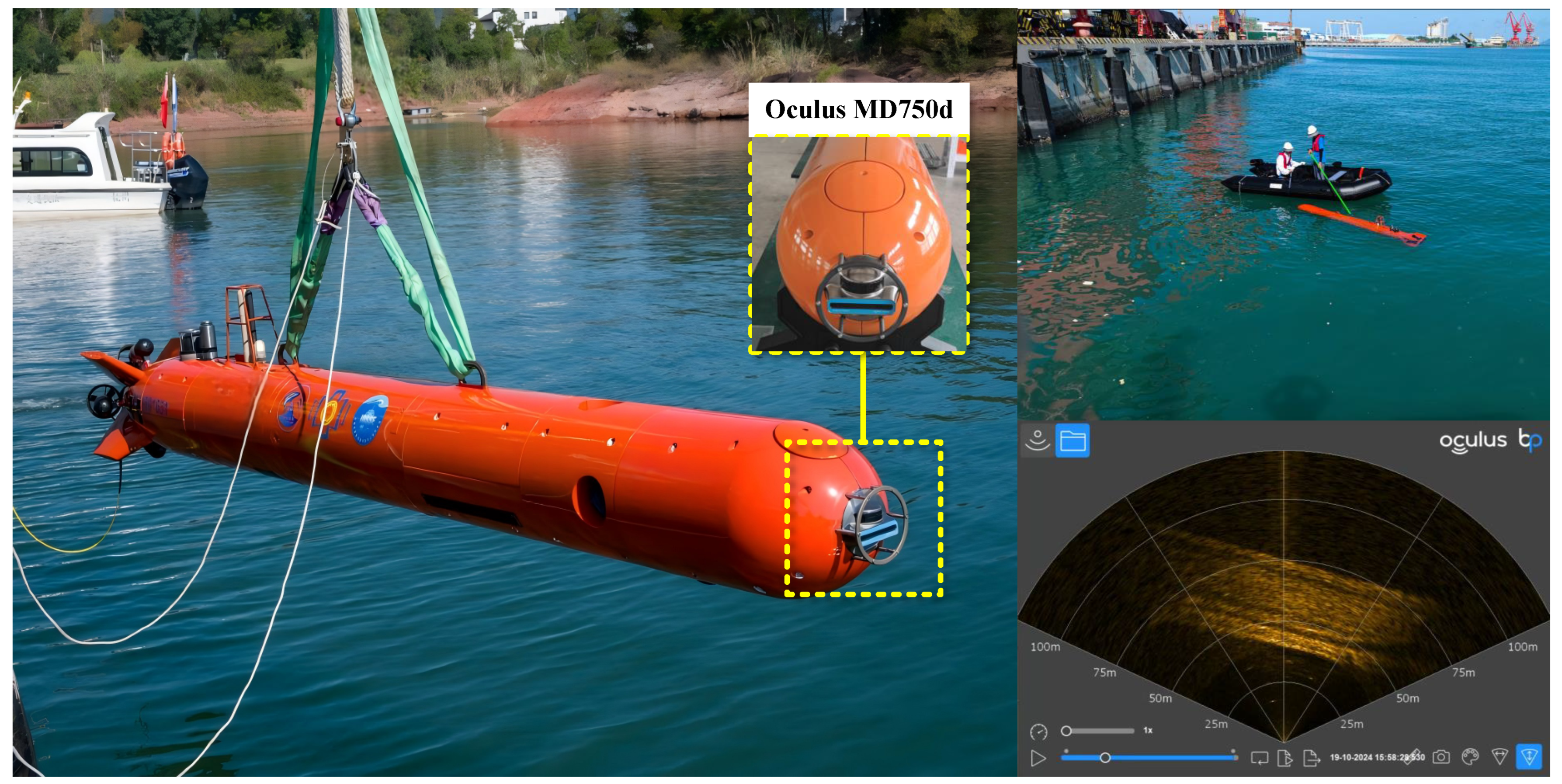

4.1. Introduction of Datasets

4.2. Experimental Setup and Evaluation

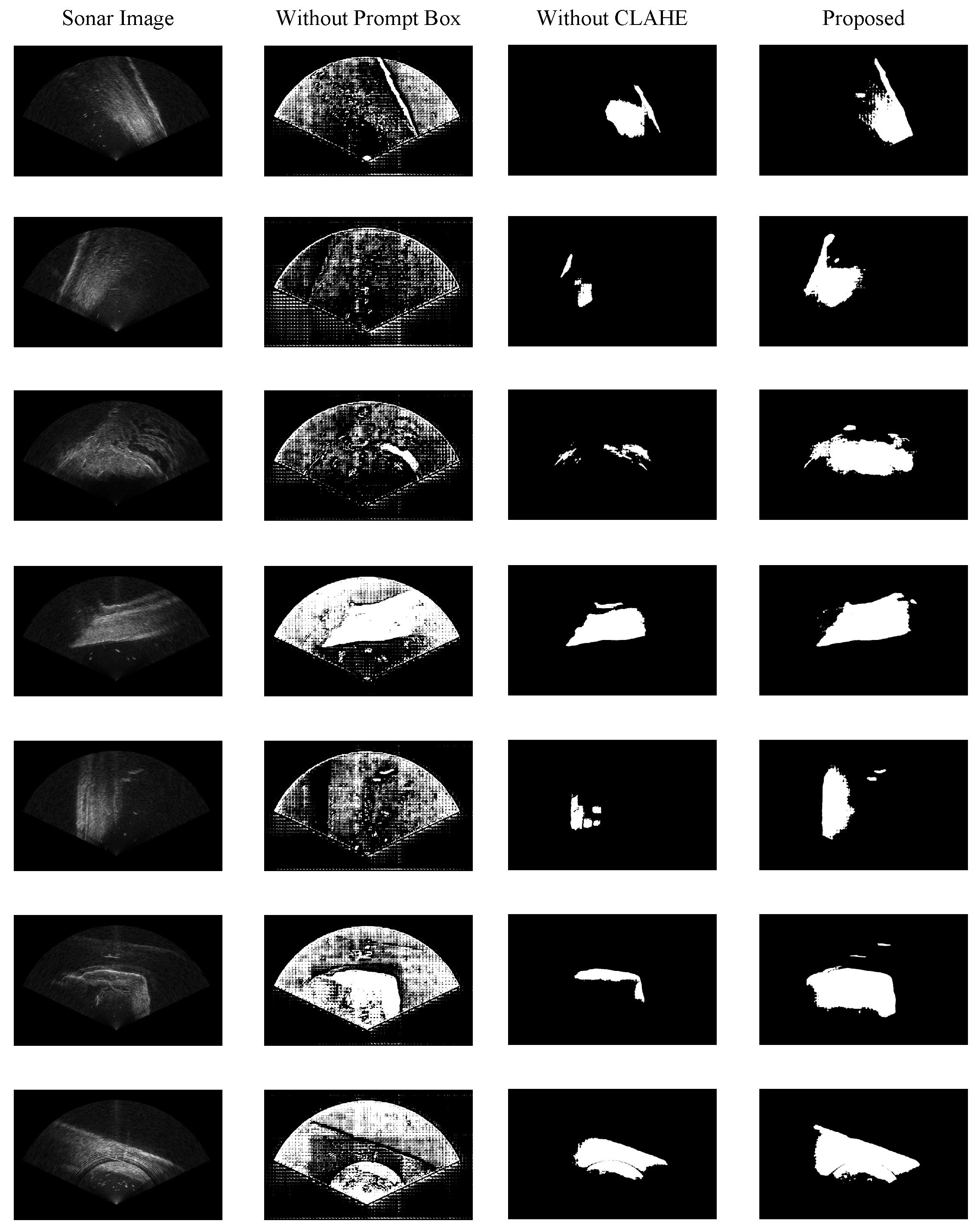

4.3. Experiments on Pseudo-Label Generation

4.4. Model Training

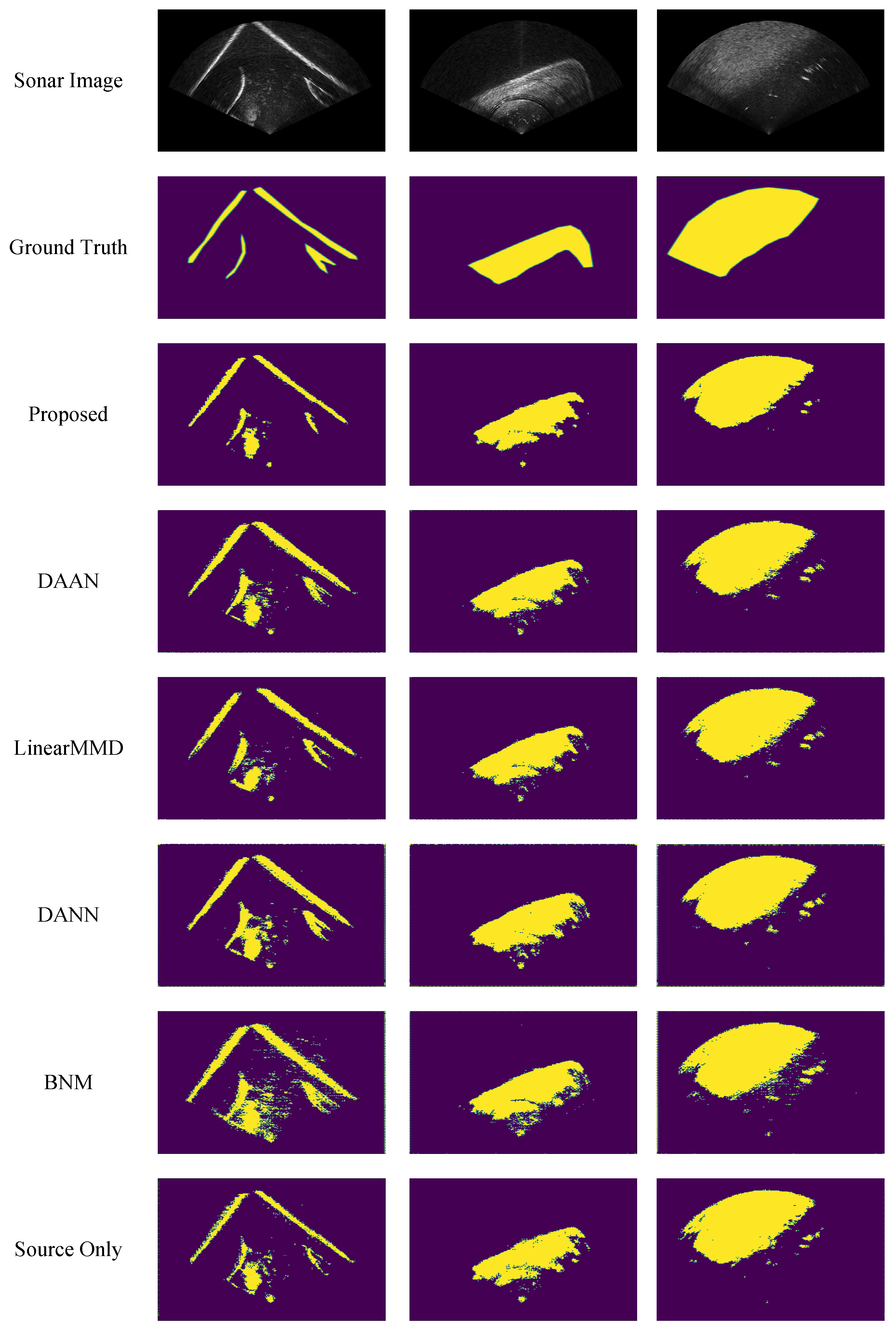

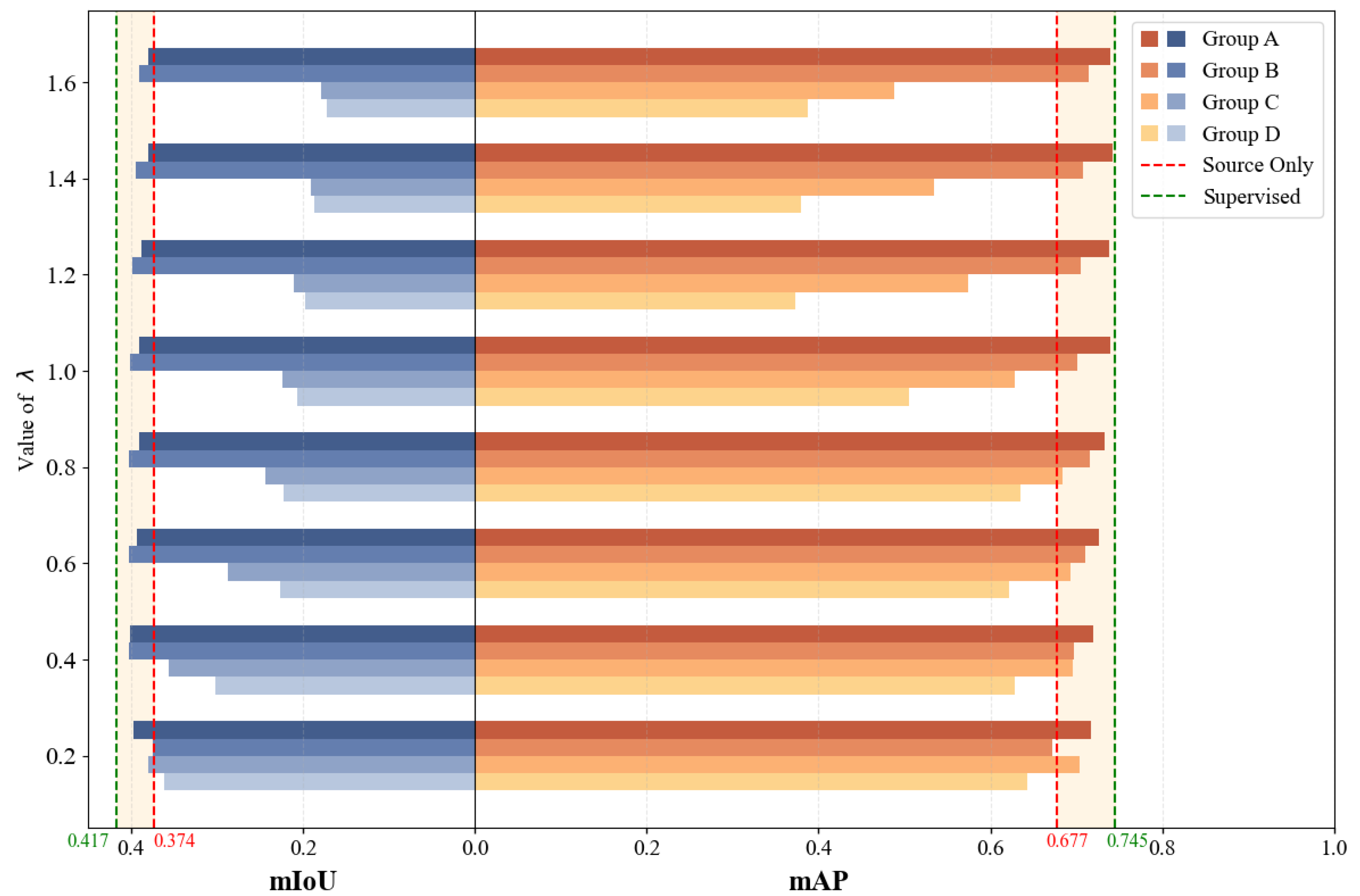

4.5. Comparative Experiments

4.6. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Vishwakarma, A. Denoising and Inpainting of Sonar Images Using Convolutional Sparse Representation. IEEE Trans. Instrum. Meas. 2023, 72, 1–9.

- Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 6, 679–698.

- Sobel, I.; Feldman, G. A 3×3 Isotropic Gradient Operator for Image Processing. Stanf. Artif. Proj. 1968, 271–272.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. Automatica 1975, 11, 23–27.

- Tian, Y.; Lan, L.; Guo, H. A Review on the Wavelet Methods for Sonar Image Segmentation. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420936091.

- Chai, Y.; Yu, H.; Xu, L.; Li, D.; Chen, Y. Deep Learning Algorithms for Sonar Imagery Analysis and Its Application in Aquaculture: A Review. IEEE Sens. J. 2023, 23, 28549–28563.

- Yu, S. Sonar Image Target Detection Based on Deep Learning. Math. Probl. Eng. 2022, 2022, 5294151.

- Choi, K.-H.; Ha, J.-E. An Adaptive Threshold for the Canny Algorithm with Deep Reinforcement Learning. IEEE Access 2021, 9, 156846–156856.

- Cao, X.; Ren, L.; Sun, C. Research on Obstacle Detection and Avoidance of Autonomous Underwater Vehicle Based on Forward-Looking Sonar. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 9198–9208.

- Khan, R.; Mehmood, A.; Akbar, S.; Zheng, Z. Underwater Image Enhancement with an Adaptive Self Supervised Network. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; pp. 1355–1360.

- Toldo, M.; Maracani, A.; Michieli, U.; Zanuttigh, P. Unsupervised Domain Adaptation in Semantic Segmentation: A Review. Technologies 2020, 8, 35.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 4015–4026.

- Dev, S.; Lee, Y.H.; Winkler, S. Color-Based Segmentation of Sky/Cloud Images From Ground-Based Cameras. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 231–242.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Tran, S.-T.; Cheng, C.-H.; Nguyen, T.-T.; Le, M.-H.; Liu, D.-G. TMD-Unet: Triple-Unet with Multi-Scale Input Features and Dense Skip Connection for Medical Image Segmentation. Healthcare 2021, 9, 54.

- Sun, Y.; Zheng, H.; Zhang, G.; Ren, J.; Shu, G. CGF-Unet: Semantic Segmentation of Sidescan Sonar Based on Unet Combined with Global Features. IEEE J. Ocean. Eng. 2024, 49, 963–975.

- Sun, Y.-C.; Gerg, I.D.; Monga, V. Iterative, Deep Synthetic Aperture Sonar Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- He, J.; Chen, J.; Xu, H.; Yu, Y. SonarNet: Hybrid CNN-Transformer-HOG Framework and Multifeature Fusion Mechanism for Forward-Looking Sonar Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–17.

- Long, M.; Cao, Y.; Wang, J.; Jordan, M.I. Learning Transferable Features with Deep Adaptation Networks. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 97–105.

- Sun, B.; Saenko, K. Deep CORAL: Correlation Alignment for Deep Domain Adaptation. In Proceedings of the Computer Vision—ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; pp. 443–450.

- Ganin, Y.; Lempitsky, V. Unsupervised Domain Adaptation by Backpropagation. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 1180–1189.

- Yu, C.; Wang, J.; Chen, Y.; Huang, M. Transfer Learning with Dynamic Adversarial Adaptation Network. In Proceedings of the 2019 IEEE International Conference on Data Mining (ICDM), Beijing, China, 8–11 November 2019; pp. 778–786.

- Sethuraman, A.V.; Skinner, K.A. STARS: Zero-Shot Sim-to-Real Transfer for Segmentation of Shipwrecks in Sonar Imagery. arXiv 2023, arXiv:2310.01667.

- Wang, Q.; Zhang, Y.; He, B. Automatic Seabed Target Segmentation of AUV via Multilevel Adversarial Network and Marginal Distribution Adaptation. IEEE Trans. Ind. Electron. 2023, 71, 749–759.

- Li, J.; Seltzer, M.L.; Wang, X.; Zhao, R.; Gong, Y. Large-Scale Domain Adaptation via Teacher-Student Learning. arXiv 2017, arXiv:1708.05466.

- Li, W.; Fan, K.; Yang, H. Teacher–Student Mutual Learning for Efficient Source-Free Unsupervised Domain Adaptation. Knowl.-Based Syst. 2023, 261, 110204.

- Zhang, H.; Tang, J.; Cao, Y.; Chen, Y.; Wang, Y.; Wu, Q.J. Cycle Consistency Based Pseudo Label and Fine Alignment for Unsupervised Domain Adaptation. IEEE Trans. Multimed. 2022, 25, 8051–8063.

- Zhao, X.; Mithun, N.C.; Rajvanshi, A.; Chiu, H.-P.; Samarasekera, S. Unsupervised Domain Adaptation for Semantic Segmentation with Pseudo Label Self-Refinement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 1–6 January 2024; pp. 2399–2409.

- Deng, Z.; Luo, Y.; Zhu, J. Cluster Alignment with a Teacher for Unsupervised Domain Adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9944–9953.

- Wang, Z.; Zhang, Y.; Zhang, Z.; Jiang, Z.; Yu, Y.; Li, L.; Li, L. Exploring Semantic Prompts in the Segment Anything Model for Domain Adaptation. Remote Sens. 2024, 16, 758.

- Yan, W.; Qian, Y.; Zhuang, H.; Wang, C.; Yang, M. Sam4udass: When SAM Meets Unsupervised Domain Adaptive Semantic Segmentation in Intelligent Vehicles. IEEE Trans. Intell. Veh. 2023, 9, 3396–3408.

- Wang, F.; Zhao, L.; Hong, S.; Wang, Z.; Liu, C.; Gao, C.; Li, J.; Li, X.; Luo, D. Dual-Domain Teacher for Unsupervised Domain Adaptation Detection. IEEE Trans. Multimed. 2025, 27, 4217–4226.

- Stenger, A.; Baudrier, É.; Naegel, B.; Passat, N. RESAMPL-UDA: Leveraging Foundation Models for Unsupervised Domain Adaptation in Biomedical Images. Pattern Recognit. Lett. 2025, 196, 221–227.

- Chen, H.; Li, L.; Chen, J.; Lin, K.-Y. Unsupervised Domain Adaptation via Double Classifiers Based on High Confidence Pseudo Label. arXiv 2021, arXiv:2105.04729.

- Yang, R.; Tian, T.; Tian, J. Versatile Teacher: A Class-Aware Teacher–Student Framework for Cross-Domain Adaptation. Pattern Recognit. 2025, 158, 111024.

- Wang, Q.; Breckon, T. Unsupervised Domain Adaptation via Structured Prediction Based Selective Pseudo-Labeling. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 6243–6250.

- Zhang, B.; Wang, Y.; Hou, W.; Wu, H.; Wang, J.; Okumura, M.; Shinozaki, T. FlexMatch: Boosting Semi-Supervised Learning with Curriculum Pseudo Labeling. Adv. Neural Inf. Process. Syst. 2021, 34, 18408–18419.

- Lee, D.-H. Pseudo-Label: The Simple and Efficient Semi-Supervised Learning Method for Deep Neural Networks. In Proceedings of the Workshop on Challenges in Representation Learning, ICML, Atlanta, GA, USA, 16–21 June 2013; Volume 3, p. 896.

- Yang, D.; Cheng, C.; Wang, C.; Pan, G.; Zhang, F. Side-Scan Sonar Image Segmentation Based on Multi-Channel CNN for AUV Navigation. Front. Neurorobotics 2022, 16, 928206.

- Zuiderveld, K. Contrast Limited Adaptive Histogram Equalization. In Graphics Gems; Academic Press: San Diego, CA, USA, 1994; pp. 474–485.

- Gao, S.; Guo, W.; Xu, G.; Liu, B.; Sun, Y.; Yuan, B. A Lightweight YOLO Network Using Temporal Features for High-Resolution Sonar Segmentation. Front. Mar. Sci. 2025, 12, 1581794.

- Ghifary, M.; Kleijn, W.B.; Zhang, M. Domain Adaptive Neural Networks for Object Recognition; Springer International Publishing: Berlin/Heidelberg, Germany, 2014.

- Cui, S.; Wang, S.; Zhuo, J.; Li, L.; Huang, Q.; Tian, Q. Towards Discriminability and Diversity: Batch Nuclear-Norm Maximization under Label Insufficient Situations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

| Hyper-Parameters | Values |
|---|---|
| Training epochs | 200 |
| Batch size | 32 |
| Learning rate | |
| Optimizer | SGD |
| Weight decay | |
| Momentum | 0.9 |
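To make the optimizer rows concrete, here is a hedged sketch of a single SGD-with-momentum update using the momentum of 0.9 listed in the table. The learning rate and weight decay cells are empty in the table, so `LR = 0.01` and `WEIGHT_DECAY = 1e-4` below are placeholders chosen purely for illustration and are not the paper's settings. The update follows one common convention (weight decay is added to the gradient, and the velocity accumulates the gradient); other formulations exist, and the function name `sgd_momentum_step` is hypothetical.

```python
import numpy as np

MOMENTUM = 0.9        # from the hyper-parameter table
LR = 0.01             # placeholder: the table does not give a value
WEIGHT_DECAY = 1e-4   # placeholder: the table does not give a value

def sgd_momentum_step(w, grad, velocity, lr=LR, momentum=MOMENTUM, weight_decay=WEIGHT_DECAY):
    """One SGD update with momentum and L2 weight decay; returns new weights and velocity."""
    g = grad + weight_decay * w           # L2 penalty folded into the gradient
    velocity = momentum * velocity + g    # accumulate a running direction
    return w - lr * velocity, velocity

# Toy objective sum(w**2), whose gradient is 2 * w.
w = np.array([1.0, -2.0])
v = np.zeros_like(w)
for _ in range(2):
    grad = 2.0 * w
    w, v = sgd_momentum_step(w, grad, v)
print(w)
```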

| Preprocessing | Dice ↑ | IoU ↑ | Precision ↑ | F1-Score ↑ |
|---|---|---|---|---|
| Proposed method | 0.5991 | 0.4699 | 0.6662 | 0.5962 |
| Without CLAHE | 0.3951 | 0.3050 | 0.6583 | 0.3746 |
| Without prompt box | 0.2408 | 0.1652 | 0.1901 | 0.2203 |
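For reference, the four metrics in the table can be computed for a single binary mask pair as sketched below. This uses the standard per-image definitions based on true positives, false positives, and false negatives; how the paper aggregates scores over the test set is not stated here, so this is an assumption-laden illustration rather than the authors' evaluation code. Note that for one binary mask pair Dice and F1 coincide by definition, so the small gap between the Dice and F1 columns above presumably comes from the aggregation procedure. The function name `binary_segmentation_metrics` is hypothetical.

```python
import numpy as np

def binary_segmentation_metrics(pred, target, eps=1e-7):
    """Dice, IoU, precision, and F1 for one pair of binary masks (1 = obstacle)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.sum(pred & target)    # obstacle pixels correctly predicted
    fp = np.sum(pred & ~target)   # background predicted as obstacle
    fn = np.sum(~pred & target)   # obstacle pixels that were missed
    precision = tp / (tp + fp + eps)
    recall = tp / (tp + fn + eps)
    return {
        "dice": 2 * tp / (2 * tp + fp + fn + eps),
        "iou": tp / (tp + fp + fn + eps),
        "precision": precision,
        "f1": 2 * precision * recall / (precision + recall + eps),
    }

# Toy masks with 2 true positives, 1 false positive, and 1 false negative.
pred = np.array([[1, 1, 0], [0, 1, 0]])
target = np.array([[1, 0, 0], [0, 1, 1]])
metrics = binary_segmentation_metrics(pred, target)
print(metrics)
```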

| | Linear-MMD [42] mIoU | Linear-MMD [42] mAP | BNM [43] mIoU | BNM [43] mAP | DANN [21] mIoU | DANN [21] mAP | DAAN [22] mIoU | DAAN [22] mAP | Proposed mIoU | Proposed mAP |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.2 | 0.382 | 0.675 | 0.384 | 0.644 | 0.366 | 0.649 | 0.383 | 0.675 | 0.404 | 0.701 |
| 0.4 | 0.383 | 0.682 | 0.368 | 0.620 | 0.375 | 0.640 | 0.382 | 0.682 | 0.406 | 0.713 |
| 0.6 | 0.386 | 0.686 | 0.364 | 0.616 | 0.381 | 0.639 | 0.386 | 0.691 | 0.405 | 0.719 |
| 0.8 | 0.384 | 0.687 | 0.364 | 0.623 | 0.370 | 0.613 | 0.385 | 0.692 | 0.408 | 0.703 |
| 1.0 | 0.391 | 0.692 | 0.364 | 0.623 | 0.364 | 0.646 | 0.391 | 0.693 | 0.405 | 0.705 |
| 1.2 | 0.383 | 0.685 | 0.365 | 0.630 | 0.359 | 0.643 | 0.383 | 0.690 | 0.398 | 0.708 |
| 1.4 | 0.380 | 0.683 | 0.364 | 0.626 | 0.374 | 0.670 | 0.380 | 0.682 | 0.395 | 0.712 |
| 1.6 | 0.379 | 0.684 | 0.365 | 0.634 | 0.369 | 0.660 | 0.380 | 0.684 | 0.396 | 0.716 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gao, S.; Guo, W.; Xu, G.; Liu, B. An Unsupervised Obstacle Segmentation Method for Forward-Looking Sonar Based on Teacher–Student Transfer Learning. J. Mar. Sci. Eng. 2025, 13, 2134. https://doi.org/10.3390/jmse13112134
