Ship-RT-DETR: An Improved Model for Ship Plate Detection and Identification

Abstract

1. Introduction

2. Related Work

2.1. Ship License Plate Detection

2.2. Ship License Plate Recognition

3. The Proposed Method

3.1. Dataset Construction

3.1.1. Image Acquisition of Ships in Xijiang

3.1.2. Annotation

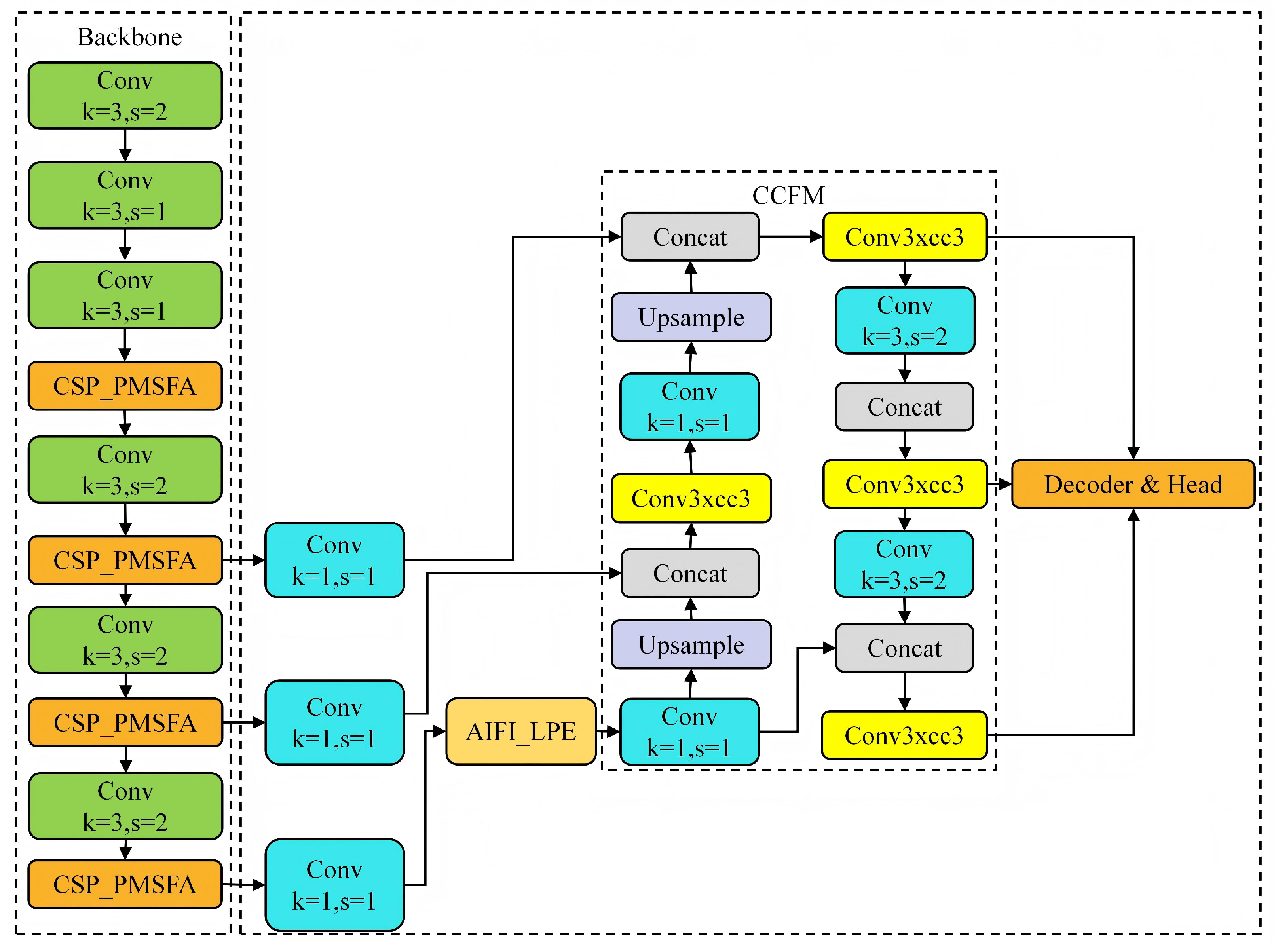

3.2. Ship Plate Detection Based on Ship-RT-DETR

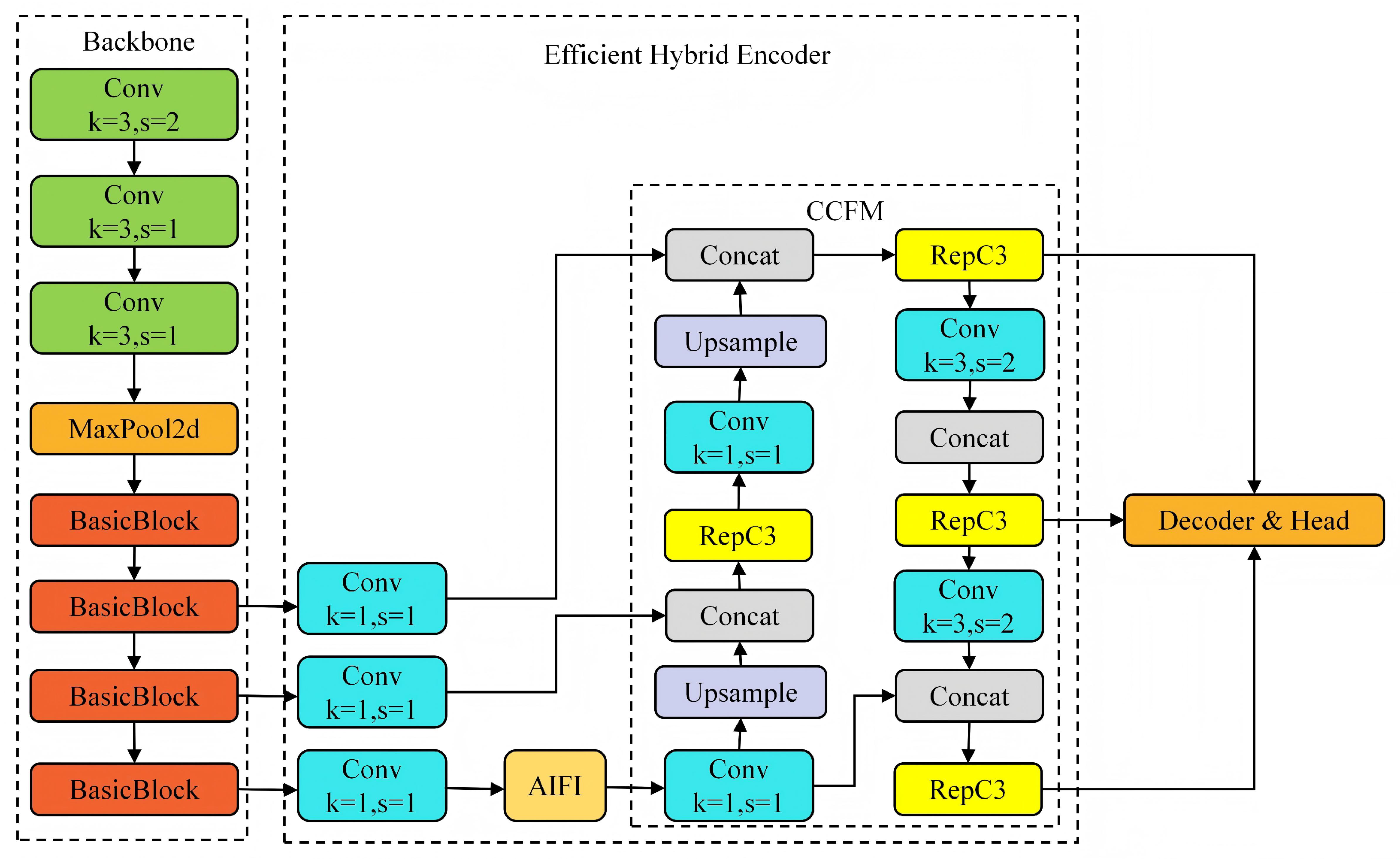

3.2.1. Overview of RT-DETR

3.2.2. Improvement of RT-DETR

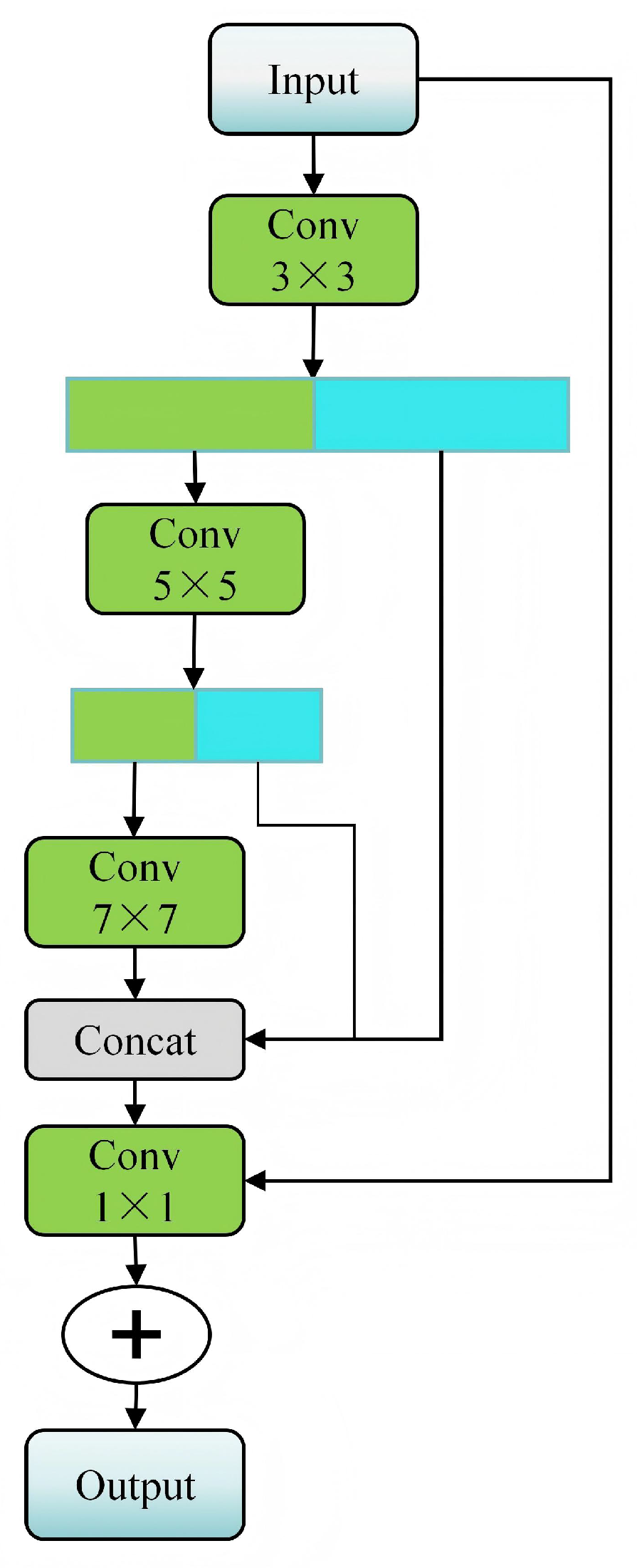

Design of CSP_PMSFA Module

Design of Conv3xcc3 Module

Design of AIFI_LPE Module

3.2.3. Using OCR for SLPR

4. Experiments

4.1. Evaluation Metrics of Ship License Plate Detection

4.2. Evaluation Metrics of Ship License Plate Recognition

5. Results

5.1. Ablation Experiment

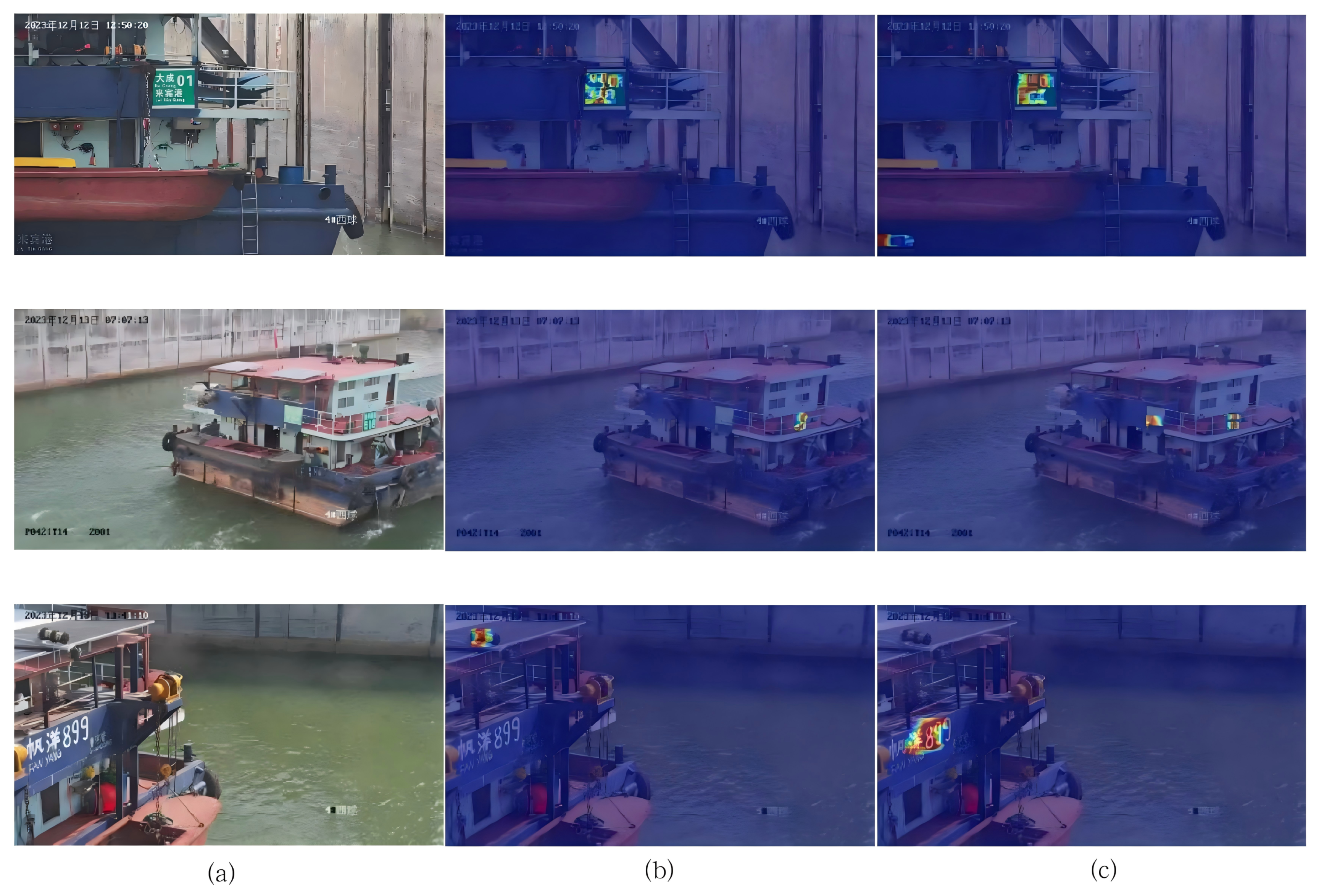

5.2. Visual Experiment

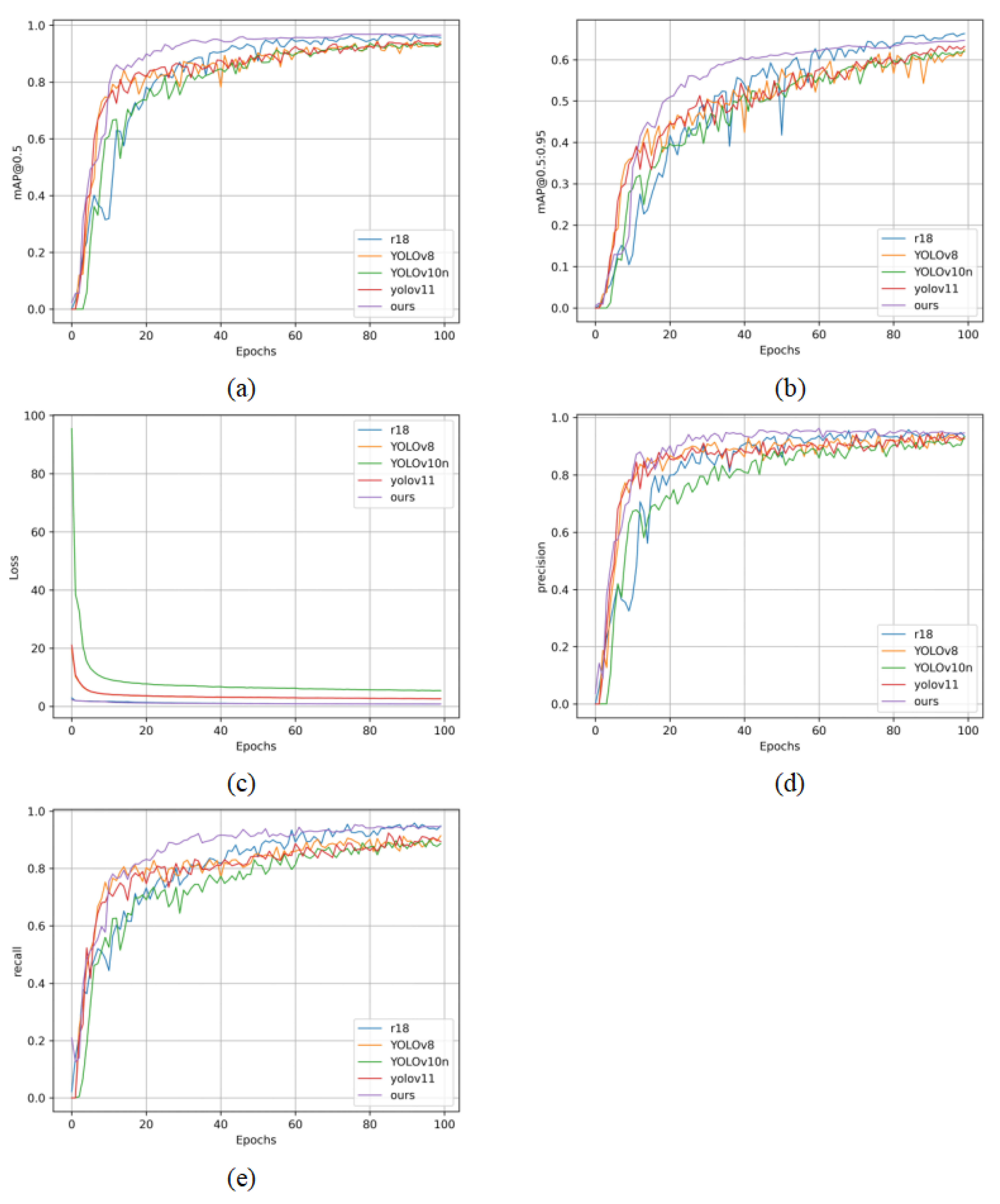

5.3. Comparative Experiment

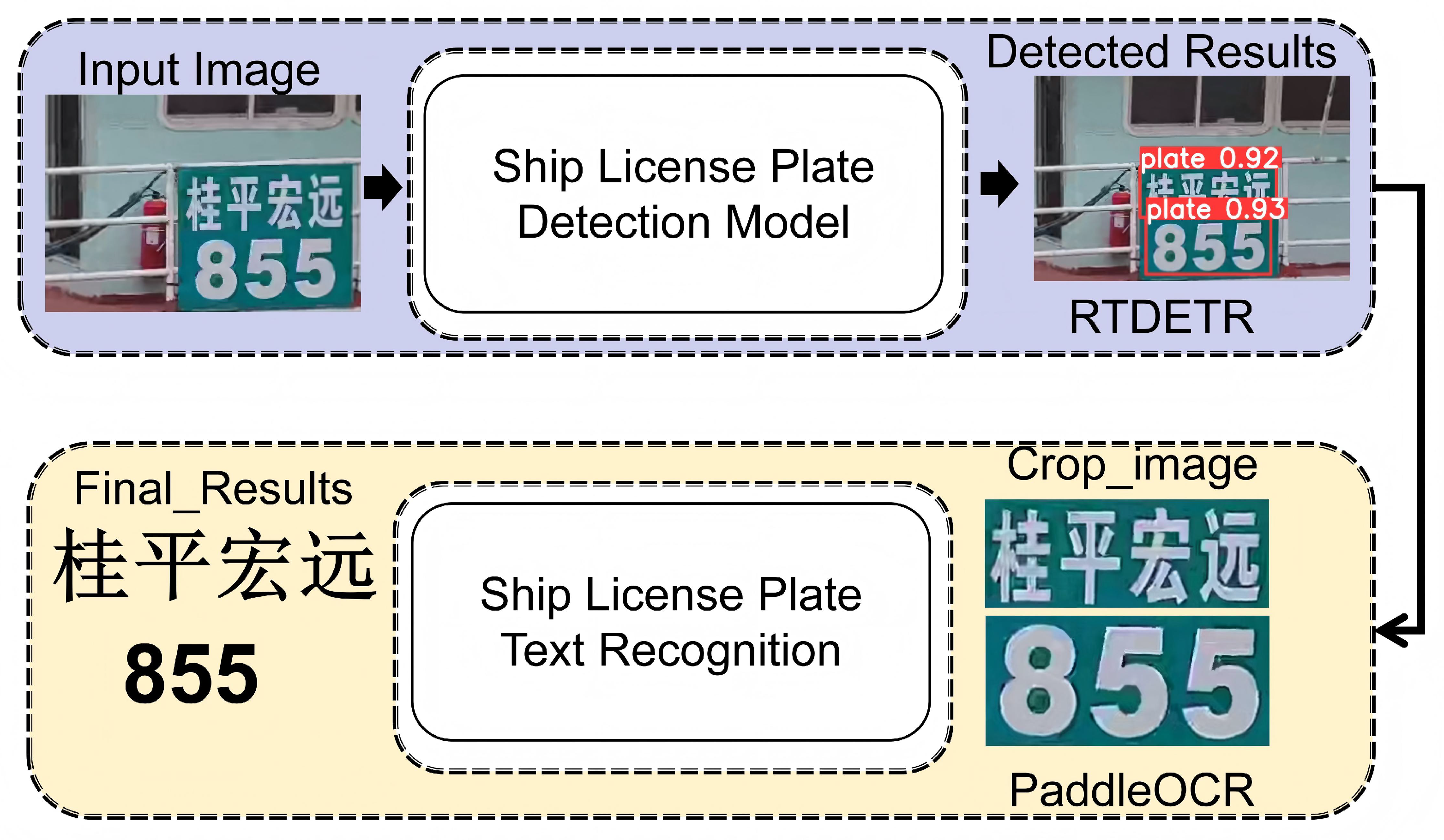

5.4. OCR

5.5. Environmental Robustness and Generalization Validation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| CSP_PMSFA | Conv3xcc3 | AIFI_LPE | P (%) | R (%) | AP@0.5 (%) | AP@0.5:0.9 (%) | Params | FPS | F1 (%) |
|---|---|---|---|---|---|---|---|---|---|
| × | × | × | 95.0 | 95.3 | 96.0 | 64.8 | 38.6 | 63.7 | 94.0 |
| ✓ | × | × | 95.3 | 94.6 | 95.6 | 63.5 | 27.6 | 66.3 | 92.0 |
| × | ✓ | × | 94.8 | 94.6 | 95.7 | 64.4 | 39.3 | 48.7 | 94.0 |
| × | × | ✓ | 94.7 | 95.3 | 95.9 | 64.9 | 38.8 | 67.4 | 94.0 |
| ✓ | ✓ | × | 96.0 | 95.3 | 95.9 | 66.2 | 28.3 | 58.3 | 95.0 |
| × | ✓ | ✓ | 96.0 | 95.3 | 95.9 | 66.2 | 39.5 | 62.4 | 93.0 |
| ✓ | ✓ | ✓ | 96.2 | 95.3 | 97.0 | 64.7 | 28.5 | 67.3 | 95.0 |
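
To make the ablation numbers easier to compare, the following minimal sketch computes the relative change between the first row (no modules enabled) and the last row (all three modules enabled). The dictionary keys and the `relative_change` helper are illustrative names, not part of the original work, and the parameter count is treated as unit-free because only the ratio matters here.

```python
# Values copied from the first (baseline) and last (all modules) rows of the table.
# Key names are illustrative; "Params" is used only as a ratio, so its unit is irrelevant.
baseline = {"P": 95.0, "R": 95.3, "AP50": 96.0, "Params": 38.6, "FPS": 63.7}
full = {"P": 96.2, "R": 95.3, "AP50": 97.0, "Params": 28.5, "FPS": 67.3}


def relative_change(old: float, new: float) -> float:
    """Return the change from old to new as a percentage of old."""
    return (new - old) / old * 100.0


# Relative change of every metric when all three modules are enabled.
changes = {name: relative_change(baseline[name], full[name]) for name in baseline}

for name, pct in changes.items():
    print(f"{name}: {baseline[name]} -> {full[name]} ({pct:+.1f}%)")
```

Under these figures, the parameter count drops by roughly 26% while FPS rises by roughly 6%, with recall unchanged.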

| Model | Accuracy | Normalized edit distance | FPS |
|---|---|---|---|
| PP-OCRv2 | 85.30% | 0.953 | 402.7 |
| PP-OCRv3 | 91.60% | 0.965 | 1613 |
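
The table does not define its two recognition metrics, so the sketch below assumes a common convention: accuracy is the fraction of exactly matched strings, and the normalized edit distance score is one minus the mean Levenshtein distance divided by the longer string's length. The function names and the sample plate strings are hypothetical and serve only as an illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance (insert, delete, substitute) via row-by-row dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def recognition_metrics(preds, targets):
    """Return (exact-match accuracy, 1 - mean normalized edit distance).

    Assumed definitions; the source table does not spell them out.
    """
    if not targets:
        raise ValueError("targets must not be empty")
    exact = 0
    norm_dist_sum = 0.0
    for pred, target in zip(preds, targets):
        exact += int(pred == target)
        longest = max(len(pred), len(target))
        if longest:
            norm_dist_sum += levenshtein(pred, target) / longest
    n = len(targets)
    return exact / n, 1.0 - norm_dist_sum / n


# Hypothetical predictions and ground-truth plate strings.
preds = ["ABC123", "ABC128", "XY99"]
targets = ["ABC123", "ABC123", "XYZ99"]
acc, ned_score = recognition_metrics(preds, targets)
print(f"accuracy={acc:.3f}, normalized edit distance score={ned_score:.3f}")
```

Under this convention a model can score high on normalized edit distance while its exact-match accuracy is noticeably lower, because a single wrong character fails the exact match but costs only a small fraction of the distance score.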

| Condition | Detection mAP@0.5 | OCR Success Rate | OCR Confidence |
|---|---|---|---|
| Sunny | 97.8% | 100.0% | 0.903 |
| Cloudy | 95.7% | 100.0% | 0.986 |
| Rainy | 95.5% | 100.0% | 0.936 |
| Foggy | 90.1% | 77.8% | 0.947 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qin, C.; Ji, X.; Mo, Z.; Mo, J. Ship-RT-DETR: An Improved Model for Ship Plate Detection and Identification. J. Mar. Sci. Eng. 2025, 13, 2205. https://doi.org/10.3390/jmse13112205
