MASS-LSVD: A Large-Scale First-View Dataset for Marine Vessel Detection

Abstract

1. Introduction

2. Relevant Work

2.1. Maritime Target Detection Datasets

2.2. Maritime Target Detection Model

3. Data Acquisition

3.1. Video Data Acquisition

3.2. Data Collection Challenges

- First-person perspective acquisition: Our dataset captures video from the ship’s bridge or bow to obtain first-person perspective images of ships. Unlike land-based or satellite imaging (as in SeaShips and SMD), shipborne first-person collection matches the navigator’s visual perception more closely, so the images are more realistic and more relevant to the onboard viewpoint. This provides training samples that are closer to real conditions for developing autonomous ship vision systems.

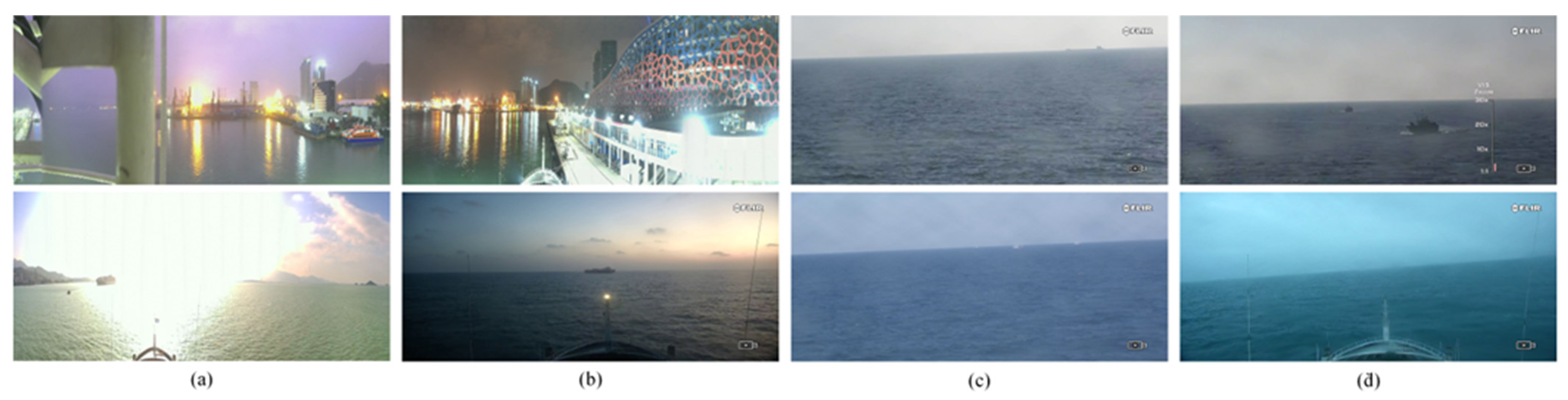

- All-weather, all-hour collection: Data collection spans daytime, dusk, and night under clear, overcast, and hazy weather, and it covers complex navigation environments. This provides a rich training basis for target detection under adverse conditions such as navigation at night or in fog. It also helps fill the gap left by existing public datasets, which mostly concentrate on daytime scenes and calm seas, and is intended to improve model generalization and robustness in real sea conditions.

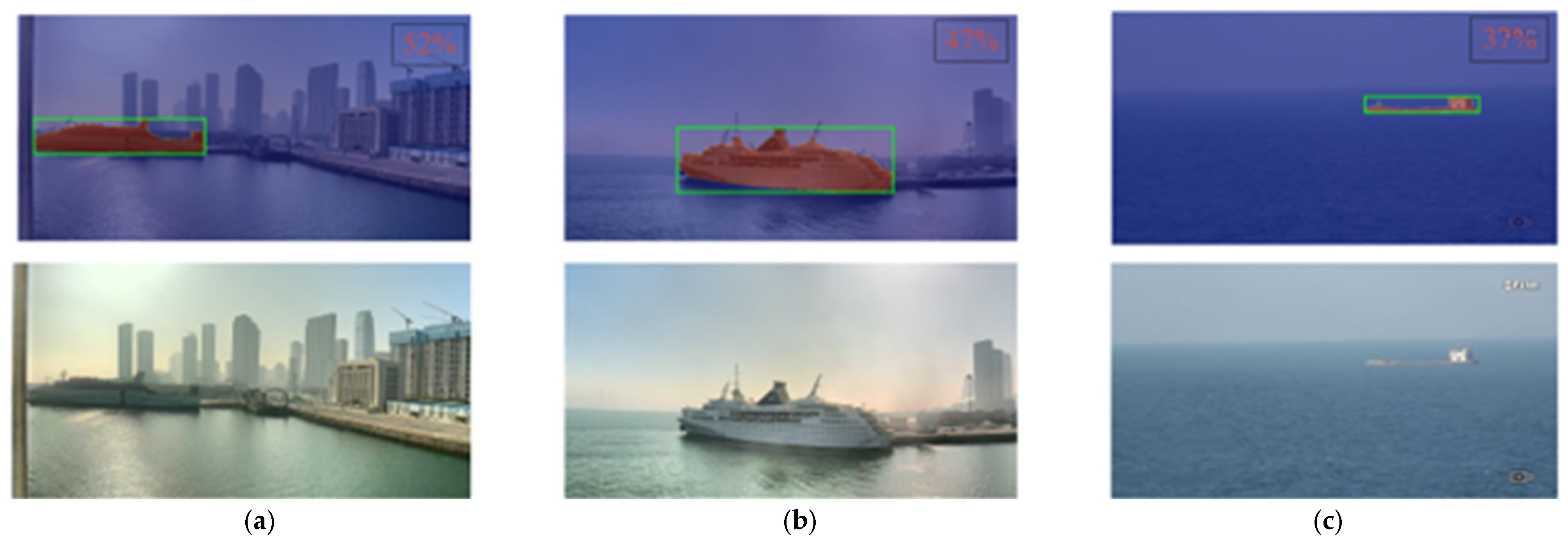

- Multimodal data collection: We simultaneously collected infrared images captured at the same times, providing a training data foundation for vision-based unmanned surface vessel (USV) systems. This supports multimodal perception and provides a usable data basis for algorithm development, engineering practice, and industrial applications in intelligent shipborne systems.

3.3. Dataset Diversity

- First-person high-definition capture: All MASS-LSVD images were captured using the shipborne high-definition visible-light camera on Dalian Maritime University’s Xinhongzhuan. Unlike land-based or satellite datasets, the shipborne first-person perspective captures dynamic features such as pitch, heading changes, and occlusions as the ship maneuvers. This helps models recognize ships more effectively from the navigation viewpoint and provides directly relevant training data for future autonomous ship vision systems.

- Extensive coverage of conditions: We collected over 4000 h of Xinhongzhuan navigation video in the Bohai Sea and other waters, covering multiple weather and lighting conditions (sunny, rainy, nighttime, haze) and various ship states (entering/exiting port, off-shore navigation, etc.), as can be seen from Figure 3. Ultimately, we selected 64,263 clear 1K-resolution images. This all-hours, all-weather sampling strategy gives the dataset broad coverage of visibility, illumination changes, and sea state complexity.

- Target-focused framing: We used the pan-tilt system to keep the target ship as centered as possible in the image and adjusted the focus (manually or automatically) to obtain clearer images of the targets.

- Multimodal sample synchronization: We also collected corresponding infrared images at the same moments, providing training samples for vision-based USV systems. This supports multimodal perception and supplies the data needed for algorithm development and engineering practice in intelligent shipborne systems.

3.4. Annotation

- We categorized the collected video into two scenarios: in-transit and near-port. For in-transit video, we extracted one image every 600 frames (about 20 s). For near-port (docked) video, where scenes change more slowly, we extracted one image every 1500 frames (about 50 s). After processing, we obtained 576,324 raw PNG images.

- Many of the raw images extracted from the video contain no ships, and in many others the ship position and orientation change very little between adjacent frames, so the raw set contains a large number of redundant images. To reduce the manual annotation workload, we filtered the raw images and ultimately retained 64,263 images.

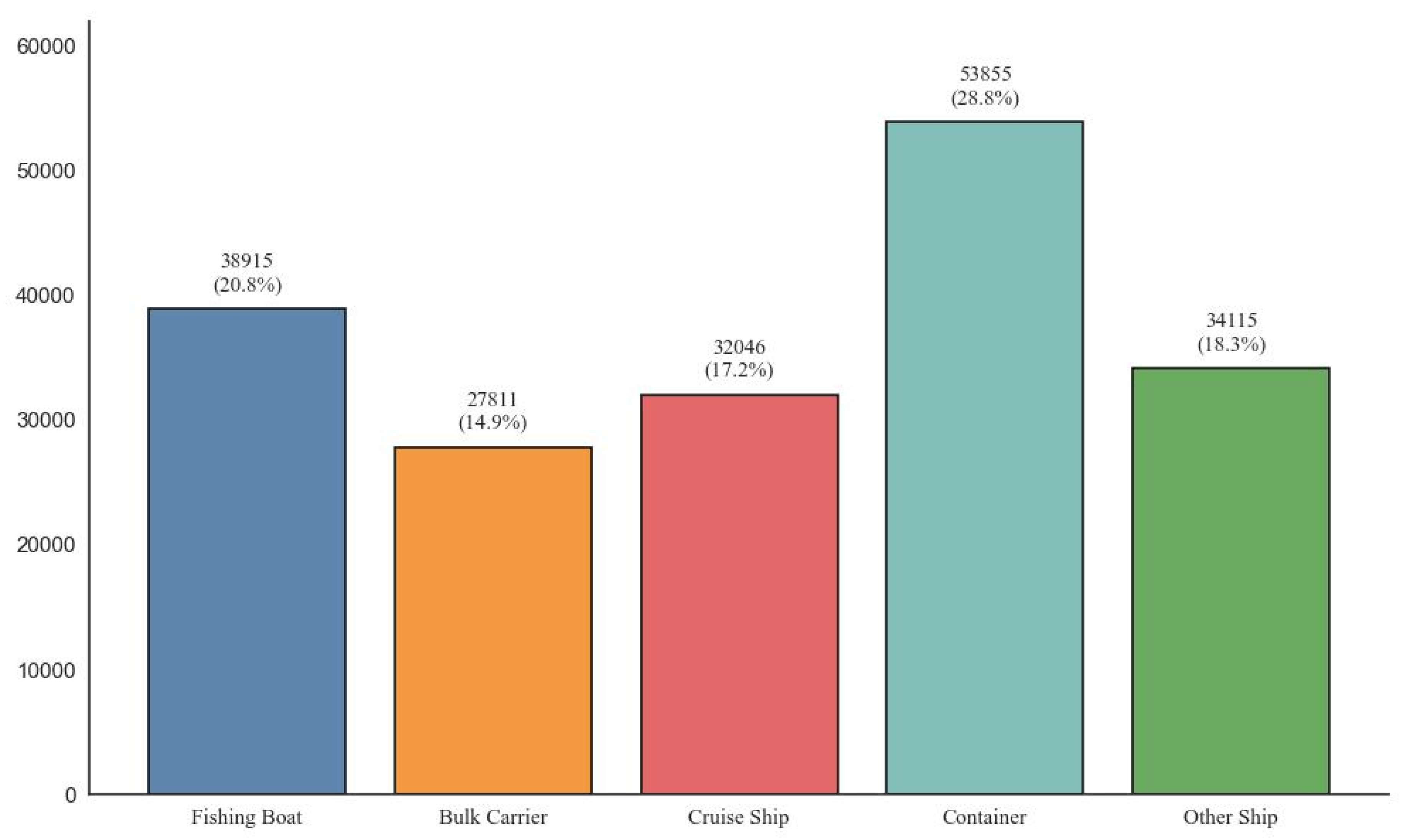

- For each retained image, we manually drew bounding boxes around vessels using the label annotation tool. Each image underwent double-blind cross-annotation to ensure the accuracy of every annotated box, and all annotation data were saved uniformly as text files compliant with the COCO dataset format. Upon completion, a total of 186,742 object bounding boxes were obtained. The dataset was then split into training and testing sets at an 8:2 ratio, keeping the proportion of annotated boxes the same across both sets wherever possible. All steps described above were performed manually. Figure 4 illustrates the number and proportion of bounding boxes for each vessel type in the dataset.
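The sampling and filtering figures above can be checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' extraction code: it assumes a source frame rate of 30 fps (inferred from "600 frames (about 20 s)", not stated in the text), and the names `stride_seconds` and `sampled_frame_indices` are hypothetical helpers introduced only for illustration.

```python
from typing import List

# Assumption: 30 fps, inferred from "600 frames (about 20 s)"; not stated in the text.
ASSUMED_FPS = 30.0

# Frame strides quoted in the text for the two scenarios.
FRAME_STRIDE = {"in_transit": 600, "near_port": 1500}

# Counts quoted in the text.
RAW_IMAGES = 576_324
RETAINED_IMAGES = 64_263
BOUNDING_BOXES = 186_742


def stride_seconds(stride: int, fps: float = ASSUMED_FPS) -> float:
    """Time between two sampled frames, in seconds."""
    return stride / fps


def sampled_frame_indices(total_frames: int, stride: int) -> List[int]:
    """Indices of the frames kept when taking one frame every `stride` frames."""
    return list(range(0, total_frames, stride))


# Share of raw images that survived filtering, and mean boxes per retained image.
retention_ratio = RETAINED_IMAGES / RAW_IMAGES
boxes_per_image = BOUNDING_BOXES / RETAINED_IMAGES

if __name__ == "__main__":
    for scenario, stride in FRAME_STRIDE.items():
        print(f"{scenario}: one frame every {stride_seconds(stride):.0f} s")
    # One hour of in-transit video at the assumed frame rate.
    one_hour = int(3600 * ASSUMED_FPS)
    kept = sampled_frame_indices(one_hour, FRAME_STRIDE["in_transit"])
    print(f"{len(kept)} sampled frames per hour of in-transit video")
    print(f"retained {retention_ratio:.1%} of raw images")
    print(f"{boxes_per_image:.2f} boxes per retained image")
```

Under these assumptions, filtering keeps roughly 11% of the raw images, and each retained image carries about 2.9 bounding boxes on average.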

4. Experiments on the MASS-LSVD Dataset

4.1. Ship Detection Algorithms

4.2. Evaluation Protocol

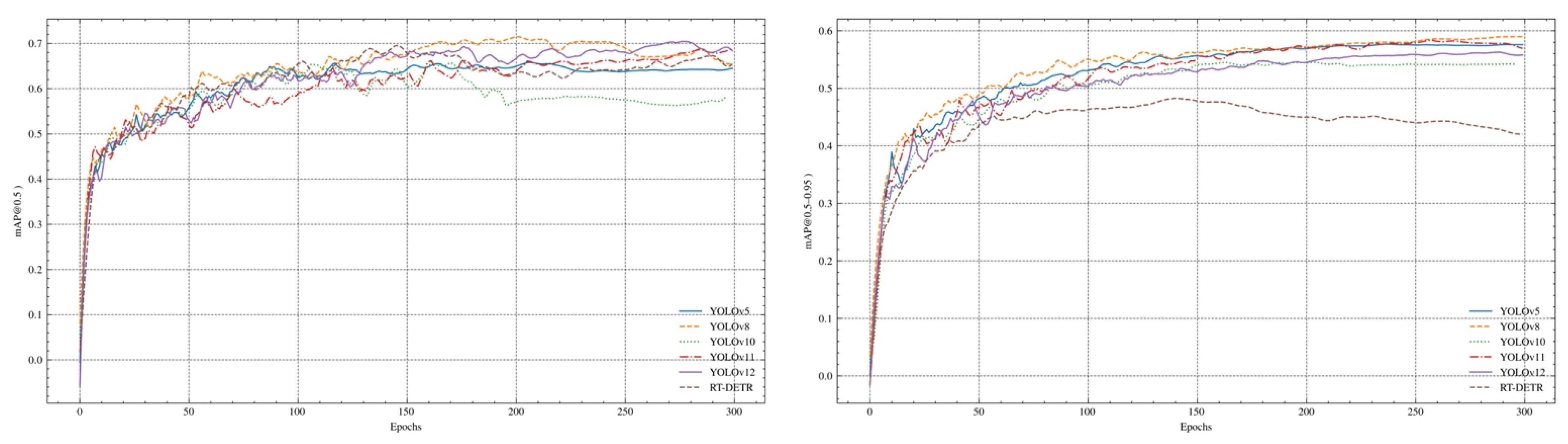

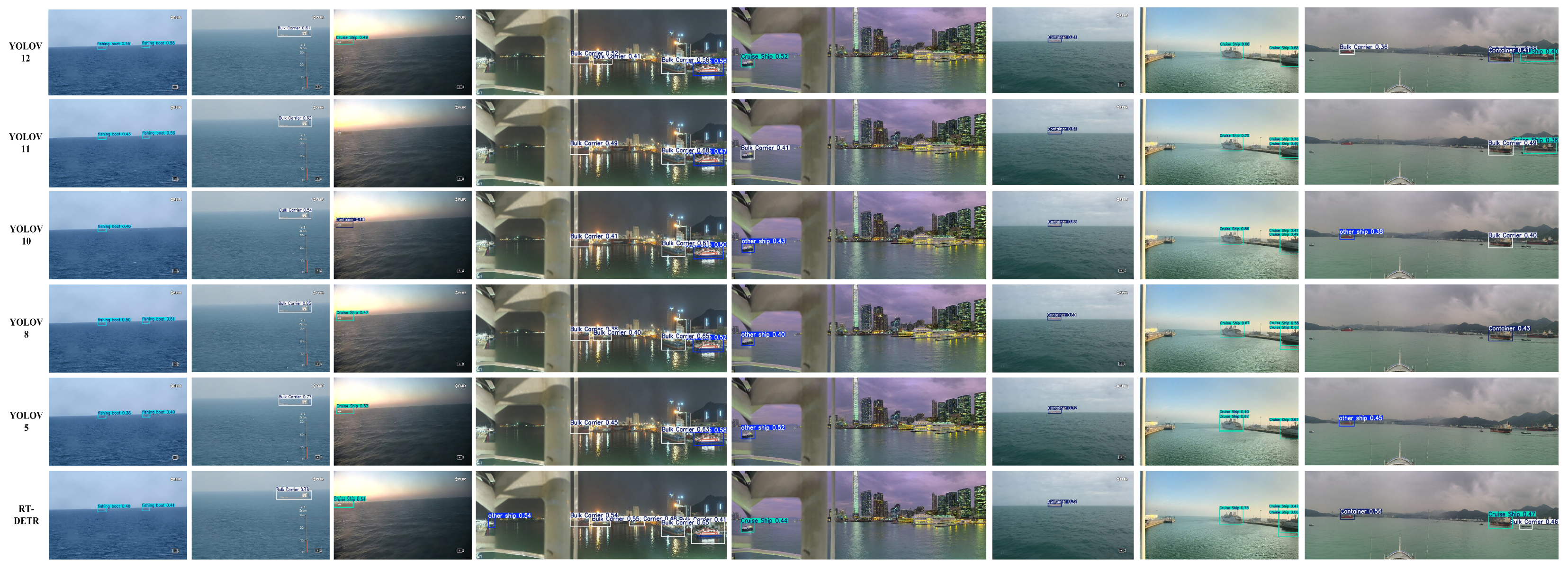

4.3. Analysis of Experimental Results

4.4. Generalization and Real-Time Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MASS | Maritime Autonomous Surface Ships |
| CNN | Convolutional Neural Network |
| YOLO | You Only Look Once |

References

- Xu, S.; Fan, J.; Jia, X.; Chang, J. Edge-Constrained Guided Feature Perception Network for Ship Detection in SAR Images. IEEE Sens. J. 2023, 23, 26828–26838.

- Varghese, R.; M., S. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Er, M.J.; Zhang, Y.; Chen, J.; Gao, W. Ship Detection with Deep Learning: A Survey. Artif. Intell. Rev. 2023, 56, 11825–11865.

- Shi, Z.; Yu, X.; Jiang, Z.; Li, B. Ship Detection in High-Resolution Optical Imagery Based on Anomaly Detector and Local Shape Feature. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4511–4523.

- Development and Application of Ship Detection and Classification Datasets: A Review. Available online: https://ieeexplore.ieee.org/document/10681575 (accessed on 5 August 2025).

- Wang, N.; Wang, Y.; Feng, Y.; Wei, Y. MDD-ShipNet: Math-Data Integrated Defogging for Fog-Occlusion Ship Detection. IEEE Trans. Intell. Transp. Syst. 2024, 25, 15040–15052.

- Tan, X.; Leng, X.; Ji, K.; Kuang, G. RCShip: A Dataset Dedicated to Ship Detection in Range-Compressed SAR Data. IEEE Geosci. Remote Sens. Lett. 2024, 21, 4004805.

- Zhao, C.; Liu, R.W.; Qu, J.; Gao, R. Deep Learning-Based Object Detection in Maritime Unmanned Aerial Vehicle Imagery: Review and Experimental Comparisons. Eng. Appl. Artif. Intell. 2024, 128, 107513.

- Zhang, C.; Pan, F.; Kim, J.; Kweon, I.S.; Mao, C. ImageNet-D: Benchmarking Neural Network Robustness on Diffusion Synthetic Object. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE Computer Soc: Los Alamitos, CA, USA, 2024; pp. 21752–21762.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing AG: Cham, Switzerland, 2014; Part IV, Volume 8693, pp. 740–755.

- Gao, W.; Cao, B.; Shan, S.; Chen, X.; Zhou, D.; Zhang, X.; Zhao, D. The CAS-PEAL Large-Scale Chinese Face Database and Baseline Evaluations. IEEE Trans. Syst. Man Cybern. Part A-Syst. Hum. 2008, 38, 149–161.

- Zheng, T.; Deng, W. Cross-Pose LFW: A Database for Studying Cross-Pose Face Recognition in Unconstrained Environments. arXiv 2018, arXiv:1708.08197.

- Shao, S.; Zhao, Z.; Li, B.; Xiao, T.; Yu, G.; Zhang, X.; Sun, J. CrowdHuman: A Benchmark for Detecting Human in a Crowd. arXiv 2018, arXiv:1805.00123.

- Neumann, L.; Karg, M.; Zhang, S.; Scharfenberger, C.; Piegert, E.; Mistr, S.; Prokofyeva, O.; Thiel, R.; Vedaldi, A.; Zisserman, A.; et al. NightOwls: A Pedestrians at Night Dataset. In Proceedings of the Computer Vision—ACCV 2018, Perth, Australia, 2–6 December 2018; Jawahar, C.V., Li, H., Mori, G., Schindler, K., Eds.; Springer International Publishing AG: Cham, Switzerland, 2019; Part I, Volume 11361, pp. 691–705.

- Fu, C.; Liu, R.; Fan, X.; Chen, P.; Fu, H.; Yuan, W.; Zhu, M.; Luo, Z. Rethinking General Underwater Object Detection: Datasets, Challenges, and Solutions. Neurocomputing 2023, 517, 243–256.

- Liu, C.; Li, H.; Wang, S.; Zhu, M.; Wang, D.; Fan, X.; Wang, Z. A Dataset and Benchmark of Underwater Object Detection for Robot Picking. In Proceedings of the 2021 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shenzhen, China, 5–9 July 2021; IEEE: New York, NY, USA, 2021.

- Zhang, M.M.; Choi, J.; Daniilidis, K.; Wolf, M.T.; Kanan, C. VAIS: A Dataset for Recognizing Maritime Imagery in the Visible and Infrared Spectrums. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; IEEE: New York, NY, USA, 2015.

- Gundogdu, E.; Solmaz, B.; Yucesoy, V.; Koc, A. MARVEL: A Large-Scale Image Dataset for Maritime Vessels. In Proceedings of the Computer Vision—ACCV 2016, Taipei, Taiwan, 20–24 November 2016; Lai, S.H., Lepetit, V., Nishino, K., Sato, Y., Eds.; Springer International Publishing AG: Cham, Switzerland, 2017; Part V, Volume 10115, pp. 165–180.

- Patino, L.; Cane, T.; Vallee, A.; Ferryman, J. PETS 2016: Dataset and Challenge. In Proceedings of the 29th IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW 2016), Las Vegas, NV, USA, 26 June–1 July 2016; IEEE: New York, NY, USA, 2016; pp. 1240–1247.

- Shao, Z.; Wu, W.; Wang, Z.; Du, W.; Li, C. SeaShips: A Large-Scale Precisely Annotated Dataset for Ship Detection. IEEE Trans. Multimed. 2018, 20, 2593–2604.

- Moosbauer, S.; Koenig, D.; Jaekel, J.; Teutsch, M. A Benchmark for Deep Learning Based Object Detection in Maritime Environments. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW 2019), Long Beach, CA, USA, 16–17 June 2019; IEEE: New York, NY, USA, 2019; pp. 916–925.

- Shi, D.; Guo, Y.; Wan, L.; Huo, H.; Fang, T. Fusing Local Texture Description of Saliency Map and Enhanced Global Statistics for Ship Scene Detection. In Proceedings of the 2015 IEEE International Conference on Progress in Informatics and Computing (IEEE PIC), Nanjing, China, 18–20 December 2015; Xiao, L., Wang, Y., Eds.; IEEE: New York, NY, USA, 2015; pp. 311–316.

- Zhu, C.; Zhou, H.; Wang, R.; Guo, J. A Novel Hierarchical Method of Ship Detection from Spaceborne Optical Image Based on Shape and Texture Features. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3446–3456.

- Yang, F.; Xu, Q.; Gao, F.; Hu, L. Ship Detection from Optical Satellite Images Based on Visual Search Mechanism. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; IEEE: New York, NY, USA, 2015; pp. 3679–3682.

- Liu, T.; Pang, B.; Zhang, L.; Yang, W.; Sun, X. Sea Surface Object Detection Algorithm Based on YOLO v4 Fused with Reverse Depthwise Separable Convolution (RDSC) for USV. J. Mar. Sci. Eng. 2021, 9, 753.

- Yang, C.; Wang, Y.; Zhang, J.; Zhang, H.; Wei, Z.; Lin, Z.; Yuille, A. Lite Vision Transformer with Enhanced Self-Attention. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; IEEE Computer Soc: Los Alamitos, CA, USA, 2022; pp. 11988–11998.

- Guo, W.; Xia, X.; Wang, X. A Remote Sensing Ship Recognition Method Based on Dynamic Probability Generative Model. Expert Syst. Appl. 2014, 41, 6446–6458.

- Zhang, Y.; Wu, C.; Guo, W.; Zhang, T.; Li, W. CFANet: Efficient Detection of UAV Image Based on Cross-Layer Feature Aggregation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5608911.

- Zhang, Y.; Liu, T.; Yu, P.; Wang, S.; Tao, R. SFSANet: Multiscale Object Detection in Remote Sensing Image Based on Semantic Fusion and Scale Adaptability. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4406410.

- What Is YOLOv5: A Deep Look into the Internal Features of the Popular Object Detector. Available online: https://arxiv.org/abs/2407.20892 (accessed on 31 July 2025).

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. In Proceedings of the 38th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dan, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE Computer Soc: Los Alamitos, CA, USA, 2024; pp. 16965–16974.

- Zeng, G.; Yu, W.; Wang, R.; Lin, A. Research on Mosaic Image Data Enhancement for Overlapping Ship Targets. arXiv 2024, arXiv:2410.17725.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. Mixup: Beyond Empirical Risk Minimization. arXiv 2021, arXiv:2105.05090.

- Ghiasi, G.; Cui, Y.; Srinivas, A.; Qian, R.; Lin, T.-Y.; Cubuk, E.D.; Le, Q.V.; Zoph, B. Simple Copy-Paste Is a Strong Data Augmentation Method for Instance Segmentation. arXiv 2021, arXiv:2012.07177.

- Adam: A Method for Stochastic Optimization. Available online: https://arxiv.org/abs/1412.6980 (accessed on 1 August 2025).

- Namgung, H.; Kim, J.-S. Collision Risk Inference System for Maritime Autonomous Surface Ships Using COLREGs Rules Compliant Collision Avoidance. IEEE Access 2021, 9, 7823–7835.

| Dataset | Annotated Images | Type | Bounding Box |
|---|---|---|---|
| VAIS [18] | 2856 | single | Available |
| MarDCT [19] | 6743 | single | Available |
| IPATCH [20] | 30,418 | single | Available |
| SeaShips [21] | 31,455 | various | Available |
| SMD [22] | 240,842 | various | Available |
| MASS-LSVD | 64,263 | various | Available |

| Model | Type | Anchor-Free | NMS-Free | End-to-End | Notable Features |
|---|---|---|---|---|---|
| YOLOv5 | CNN (One-Stage) | ✗ | ✗ | ✗ | Mosaic Aug., Anchor-Based, CIoU Loss |
| YOLOv8 | CNN (One-Stage) | ✗ | ✗ | ✗ | C2f Module, MixUp, CopyPaste |
| YOLOv10 | CNN (Unified) | ✓ | ✓ | ✓ | OD-Head, Hungarian Matching |
| YOLOv11 | CNN + Attention | ✓ | ✓ | ✓ | Cross-layer Attention, Dynamic Conv |
| YOLOv12 | CNN + Attention | ✓ | ✓ | ✓ | RepOptimizer, EMA-BN, Multi-Scale Attention |
| RT-DETR | Transformer | ✓ | ✓ | ✓ | Global Attention, AIFI+CCFM, IoU-Aware Query |

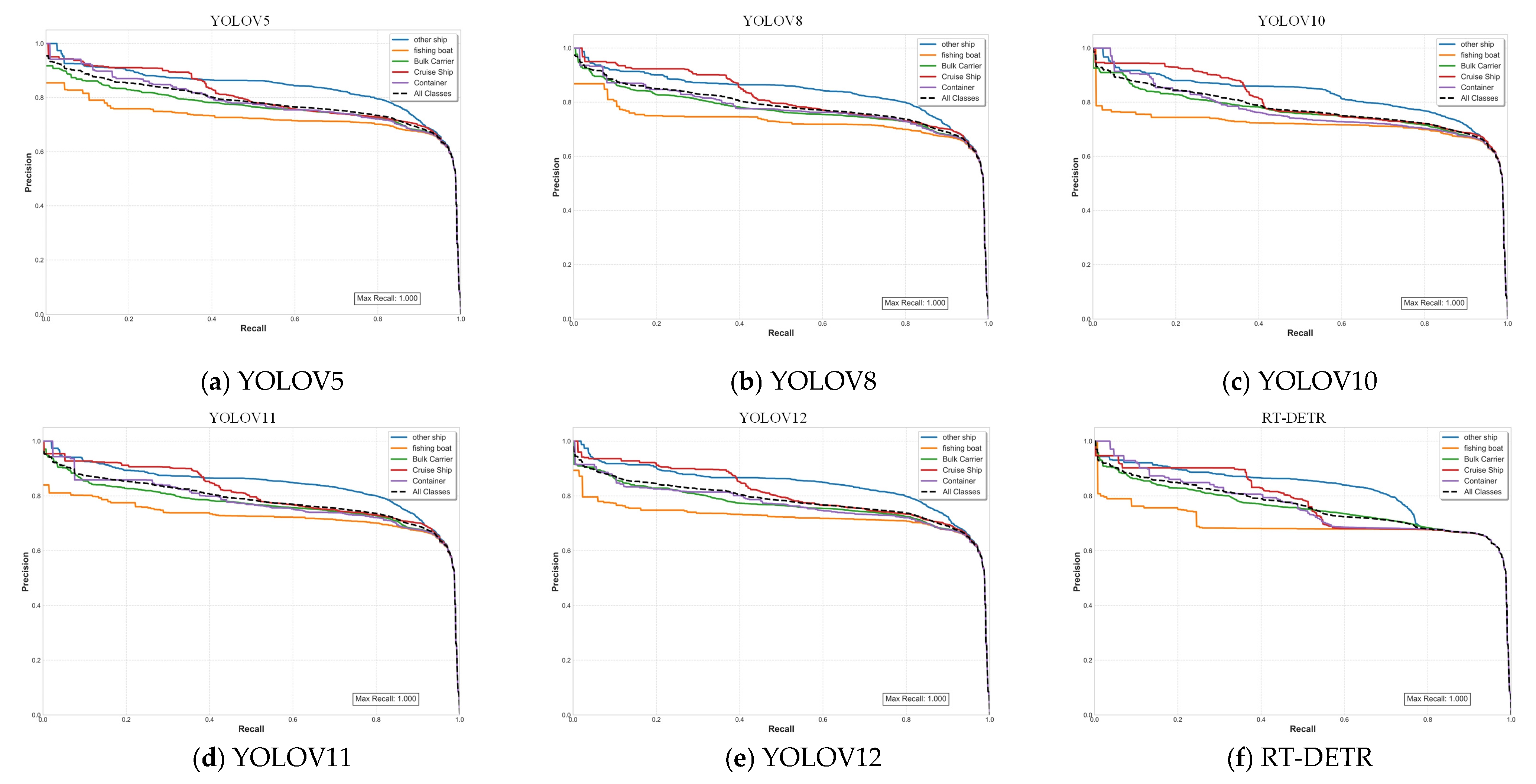

| Model | Fishing Boat | Bulk Carrier | Cruise Ship | Container | Other Ship | Average mAP | FPS (A40) |
|---|---|---|---|---|---|---|---|
| YOLOv5 | 0.713 | 0.877 | 0.908 | 0.891 | 0.916 | 0.861 | 47 |
| YOLOv8 | 0.722 | 0.859 | 0.926 | 0.903 | 0.927 | 0.867 | 63 |
| YOLOv10 | 0.774 | 0.863 | 0.886 | 0.912 | 0.922 | 0.871 | 77 |
| YOLOv11 | 0.731 | 0.872 | 0.910 | 0.908 | 0.930 | 0.873 | 49 |
| YOLOv12 | 0.778 | 0.885 | 0.923 | 0.921 | 0.938 | 0.889 | 80 |
| RT-DETR | 0.706 | 0.825 | 0.891 | 0.843 | 0.906 | 0.834 | 42 |

| Train Set | mAP@50 (MASS-LSVD) | mAP@50 (SeaShips) | mAP@50 (SMD) |
|---|---|---|---|
| MASS-LSVD | 92.60 | 48.73 | 52.64 |
| SeaShips | 58.44 | 99.26 | 47.98 |
| SMD | 31.49 | 28.56 | 98.70 |
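The cross-dataset numbers in the table above can be summarized as an in-domain score and a mean cross-domain score per training set. The sketch below is only an illustration: it assumes each row is a training set and each column is the test set in the order MASS-LSVD, SeaShips, SMD (inferred from the header and the high diagonal values), and the helper names `cross_domain_mean` and `generalization_gap` are hypothetical, not metrics defined by the paper.

```python
from statistics import mean

# mAP@50 values copied from the table, indexed as MAP50[train_set][test_set].
# Assumption: rows are training sets and columns are test sets.
MAP50 = {
    "MASS-LSVD": {"MASS-LSVD": 92.60, "SeaShips": 48.73, "SMD": 52.64},
    "SeaShips": {"MASS-LSVD": 58.44, "SeaShips": 99.26, "SMD": 47.98},
    "SMD": {"MASS-LSVD": 31.49, "SeaShips": 28.56, "SMD": 98.70},
}


def cross_domain_mean(train_set: str) -> float:
    """Mean mAP@50 over the test sets that differ from the training set."""
    return mean(
        score for test_set, score in MAP50[train_set].items() if test_set != train_set
    )


def generalization_gap(train_set: str) -> float:
    """In-domain mAP@50 minus the mean cross-domain mAP@50."""
    return MAP50[train_set][train_set] - cross_domain_mean(train_set)


if __name__ == "__main__":
    for train_set in MAP50:
        print(
            f"{train_set}: in-domain {MAP50[train_set][train_set]:.2f}, "
            f"cross-domain mean {cross_domain_mean(train_set):.2f}, "
            f"gap {generalization_gap(train_set):.2f}"
        )
```

A smaller gap means the detector loses less accuracy when moved to an unseen dataset. This is one simple way to read the table, not a substitute for the analysis in Section 4.4.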

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fan, Y.; Ju, D.; Han, B.; Sun, F.; Shen, L.; Gao, Z.; Mu, D.; Niu, L. MASS-LSVD: A Large-Scale First-View Dataset for Marine Vessel Detection. J. Mar. Sci. Eng. 2025, 13, 2201. https://doi.org/10.3390/jmse13112201
