1. Introduction

Accurate water-level prediction in coastal waterways and navigation channels is crucial for safe and efficient navigation, port operations, and coastal risk management in complex environments [1]. Port managers and ship officers generally judge the water depth of a channel from the predictions issued by water level stations, both to ensure navigation safety and to plan ship movements [2]. However, the water level trend at a given station is affected by conditions at neighboring stations. Surrounding environmental changes, such as variations in upstream inflow, periodic tidal cycles, or reservoir regulation at dams, directly influence the water level trend of a station near the coast [3]. Most conventional algorithms train models solely on historical water-level sequences [4]. They pay little attention to upstream and downstream influences on water-level trends, especially at stations near the sea. Inaccurate water-level predictions reduce the efficiency of vessel navigation and increase navigational risk, particularly at ports that schedule vessel anchoring according to tidal changes and channel water levels. Underutilized channel depths caused by inaccurate depth predictions can cost millions in delayed shipments and suboptimal cargo loading [5]. Coastal water-level predictions are also core reference data for controlling navigational risk in coastal areas, helping to identify safely navigable areas in complex environments. These limitations therefore affect, directly or indirectly, vessel navigation safety, port operations, and coastal risk management in marine and estuarine systems.

Furthermore, the intensification of hydrological extremes under climate change, manifested as increasingly severe floods and droughts, poses a critical threat to the safety and efficiency of waterway transportation [6]. Precise, real-time water level predictions support ship navigation and the management of ports and channels. They are vital for adaptive traffic management, enabling vessels to be rerouted in response to rapidly fluctuating conditions [7]. For example, during a historic event on the Rhine River in 2021, approximately 200 vessels ran aground, crippling this critical European supply corridor and triggering estimated economic losses exceeding 5 billion euros within a single month [8,9]. Such events highlight the importance of developing adaptive frameworks for water-level prediction during extreme hydrological periods. Accurate and timely forecasts are essential to ensure maritime safety, maintain logistical continuity, and mitigate severe financial and ecological consequences.

Regarding prediction algorithms, traditional statistical approaches to water level forecasting, exemplified by the Autoregressive Integrated Moving Average (ARIMA) model, rely heavily on statistical principles but often struggle to capture the dynamic, nonlinear complexities inherent in hydrological systems. For instance, Yu et al. [10] applied ARIMA to produce satisfactory short-term daily water level forecasts for flood control, shipping, and water supply management. However, its accuracy decreased significantly during sharp water level fluctuations and as the forecasting period lengthened, highlighting its limited ability to capture complex nonlinear dynamics and to maintain performance over longer lead times. Conventional machine learning methods offer greater flexibility, but they inadequately represent the complex spatiotemporal dependencies across water level stations. Studies [11,12] used Support Vector Machines (SVM) and their variants to forecast multi-step water levels in the Yangtze River. They achieved good short-term accuracy but had difficulty capturing long-term temporal patterns and spatial interactions between distant gauges without explicit feature engineering. Deep learning techniques, on the other hand, have gained prominence owing to their ability to extract relevant spatiotemporal features automatically. Zhang et al. [13] developed a hybrid convolutional neural network and long short-term memory model to predict the water level downstream of a reservoir, significantly improving computational efficiency and outperforming the benchmark methods. However, such deep learning methods still struggle to generalize under non-stationary conditions and often require large volumes of high-quality training data and high-performance hardware.

Accurate water level prediction depends critically on capturing both complex temporal dynamics and inherent spatial interdependencies, because the water level at any station is influenced by upstream conditions, lateral inflows, and downstream backwater effects within the watershed. However, prevailing modeling approaches, from the statistical methods discussed above to conventional deep learning, often inadequately represent these spatial linkages and hydrodynamic constraints. They focus primarily on temporal patterns at discrete points or rely on simplified spatial interpolation, which leads to systematic errors in capturing basin-wide hydrodynamic interactions. This limitation is acute in coastal areas and in channels near the sea, where downstream stage-flow relationships are highly dynamic. A modeling framework with an explicit topology that represents the connections and influence among water level stations is therefore necessary. To address these limitations and meet the need for accurate water level predictions, we propose the Water State-aware Spatiotemporal Graph Transformer Network (WS-STGTN). It maps the stations onto a topology that represents their relationships in terms of both spatial and temporal features: water level monitoring points are formalized as interconnected vertices of a graph, and edge weights combine similarity in geographical distance with similarity in time-series features. This framework is designed to enhance water level prediction across navigation channels and to overcome the fragmented representation inherent in current discrete or interpolation-based approaches. The contributions of this study are summarized as follows:

A dual-weight edge construction mechanism is designed that integrates geographical distance and temporal correlation, yielding a more holistic representation of the interplay among water level stations.

A state-aware weighted loss function is introduced that adjusts the importance of prediction errors according to the water level state, improving accuracy during critical periods.

A graph Transformer network is designed that employs attention mechanisms to focus on relevant information and to model complex spatiotemporal dependencies.

A precise water level prediction model, WS-STGTN, is proposed to support safe navigation, port operations, and coastal risk management in complex environments.

The remainder of this paper is organized as follows. Section 2 reviews related work on water level prediction. Section 3 details the methodology, including the definition of water level states, the construction of the graph structure, and the WS-STGTN architecture. Section 4 describes the dataset, experimental settings, and evaluation metrics. Section 5 presents and discusses the experimental results. Section 6 concludes with our findings and the implications of this work for waterway management and maritime safety.

2. Literature Review

Accurate water level prediction is crucial for effective water resource management, flood risk mitigation, and the safety and efficiency of navigation systems. Over the years, a variety of methodologies have been developed, evolving from traditional statistical models to machine learning, deep learning, and more recently, graph-based and Transformer models, with increasing attention on capturing complex spatiotemporal dynamics across rivers, lakes, and estuarine systems.

Early water level forecasting relied primarily on statistical approaches such as the Autoregressive Integrated Moving Average (ARIMA) model. ARIMA captures linear temporal dependencies using historical observations, offering straightforward interpretability and computational efficiency. Adnan et al. [14] applied ARIMA to streamflow forecasting and observed improved performance over alternative models in terms of mean absolute percentage error (MAPE) and root mean square error (RMSE). Similarly, Yu et al. [10] demonstrated ARIMA’s utility for Yangtze River water level prediction. Nevertheless, ARIMA assumes linear relationships and a series that is stationary after differencing, which limits its ability to model nonlinear dynamics and abrupt fluctuations, especially during extreme events [15].

To address the limitations of linear models, machine learning (ML) methods have been widely adopted, including Support Vector Machines (SVM), Random Forests (RF), and Gradient Boosting (GB). These models can handle nonlinear dependencies and large datasets. Castillo-Botón et al. [16] demonstrated that Support Vector Regression (SVR) achieved high precision in reservoir inflow forecasting, while Tran et al. [17] found that RF maintained robust performance for river water level prediction even with missing data. Gradient boosting methods, particularly XGBoost, have been enhanced through hybridization with evolutionary algorithms, outperforming RF and CART in multi-step water level prediction [18]. Despite these advantages, ML models require careful feature selection and engineering; improper inputs or hyperparameter tuning may lead to overfitting and limited generalization in complex hydrological systems.

Deep learning (DL) techniques, including Long Short-Term Memory (LSTM) networks and Convolutional Neural Networks (CNN), have been increasingly employed to model temporal and spatial dependencies. LSTM effectively captures long-term temporal correlations but can be sensitive to transitional hydrological dynamics, reducing performance in medium-term predictions [19]. Hybrid methods integrating signal decomposition techniques such as Variational Mode Decomposition (VMD) enable separation of dominant trends, seasonal cycles, and high-frequency noise, which are subsequently modeled using sequence-aware predictors like LSTM [20,21]. CNNs extract spatial features from sensor grids or watershed maps and can be fused with attention-enhanced LSTM to jointly capture temporal and spatial patterns, improving forecasting accuracy [22]. These approaches have also been applied to lake-level prediction; for example, Xu et al. [23] used Transformer models to simulate Poyang Lake water levels with high accuracy, demonstrating the potential of DL in both river and lake systems. However, challenges remain, including high computational cost, dependence on large labeled datasets, and potential oversimplification of complex watershed interactions [24,25].

A key limitation of prior approaches is the decoupling of temporal and spatial dependencies. Recent work has addressed this using graph neural networks (GNN) and Transformer architectures. ST-GNN and GCN-LSTM models have effectively captured spatiotemporal interactions in groundwater and river networks, outperforming standalone LSTM and traditional numerical models [26,27,28]. Transformer-based models, including BiLSTM-Transformer and ladder-Transformer frameworks, have shown superior accuracy in multi-step water level prediction for lakes and rivers, often exceeding conventional RNN-based architectures [29,30,31]. Hybrid DL architectures integrating CNN, LSTM, GRU, and Transformer components further enhance robustness and forecasting precision across various temporal scales and monitoring stations [32,33,34]. Additionally, these models have been extended to flow prediction, capturing temporal variations in streamflow and estuarine dynamics, which is crucial for flood early warning and reservoir operation [14,18].

Despite these advances, critical challenges remain. Most current methodologies focus on individual monitoring stations, neglecting the influence of surrounding hydrological and environmental factors. Temporal and spatial features are often treated separately, limiting models’ ability to capture dynamic interactions among water levels across stations, particularly in heterogeneous and complex coastal environments. Conventional loss functions uniformly weight prediction errors, ignoring the operational significance of different water level states (e.g., flood alerts vs. normal conditions). Standard graph networks frequently lack adaptive mechanisms to discern contextually relevant spatiotemporal dependencies from noisy multivariate data, reducing reliability during extreme events.

To address these deficiencies, this study proposes the WS-STGTN framework for monitoring-point water level prediction. The framework dynamically fuses temporal correlations with spatial proximity to capture synergistic effects on water propagation across hydrological networks. It incorporates state-aware optimization by applying differential weighting in the loss function, reflecting the operational importance of various water level states. Transformer-based attention mechanisms enable adaptive graph learning, allowing the model to identify contextually relevant spatiotemporal dependencies from noisy multivariate data. By integrating these strategies, WS-STGTN enhances both predictive accuracy and robustness, particularly during extreme hydrological events. A detailed description of the proposed methodology is provided in the following section.

3. Methodology

To deliver accurate water level predictions at monitoring points in coastal areas while minimizing reliance on external data, we introduce WS-STGTN, which proceeds in three stages. First, a data-driven spatiotemporal graph is constructed: hydrological stations and selected points with water level data serve as nodes (with geographic coordinates as static attributes and historical water-level series as dynamic attributes), and edges are weighted by inferred hydrological connectivity. Second, a graph Transformer is trained on this evolving graph; its multi-head self-attention simultaneously models spatial dependencies across stations and temporal dynamics within each station, directly producing future water-level sequences. Finally, a state-aware weighted mean squared error (WMSE) loss function is designed that assigns different weights to the forecasting errors of samples belonging to different hydrological states (e.g., flood, normal, and drought).

3.1. Data Transformation Approach

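To prepare raw multi-station water level series for model training, a sliding-window transformation is applied. Let $\mathbf{X} = [\mathbf{x}_1, \dots, \mathbf{x}_T] \in \mathbb{R}^{N \times T}$ denote water level data from $N$ monitoring stations over $T$ time steps, where $\mathbf{x}_t \in \mathbb{R}^{N}$ collects the observations of all stations at time $t$. Given an input window size $D$ and a prediction horizon $P$, training samples are generated as

$$\mathcal{S} = \left\{ \left(\mathbf{X}_l, \mathbf{Y}_l\right) \right\}_{l=1}^{L}, \quad \mathbf{X}_l = [\mathbf{x}_l, \dots, \mathbf{x}_{l+D-1}] \in \mathbb{R}^{N \times D}, \quad \mathbf{Y}_l = [\mathbf{x}_{l+D}, \dots, \mathbf{x}_{l+D+P-1}] \in \mathbb{R}^{N \times P} \tag{1}$$

where $L$ is the number of training instances. Each $\mathbf{X}_l$ contains $D$ sequential observations, and the corresponding $\mathbf{Y}_l$ contains the following $P$ target values. This structure facilitates temporal pattern learning across multiple stations.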
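The sliding-window transformation can be sketched in plain Python as follows. This is a minimal illustration, assuming a unit stride by default and a station-major layout (one list per station); the function name `make_windows` is hypothetical and not taken from the authors' code.

```python
from typing import List, Tuple

Series = List[List[float]]  # shape: N stations x T time steps


def make_windows(x: Series, d: int, p: int, stride: int = 1) -> List[Tuple[Series, Series]]:
    """Split an N x T multi-station series into (input, target) window pairs.

    Each input holds D consecutive observations per station; the matching
    target holds the P observations that immediately follow.
    """
    n_steps = len(x[0])
    if any(len(row) != n_steps for row in x):
        raise ValueError("all stations must share the same time axis")
    if d < 1 or p < 1 or stride < 1:
        raise ValueError("d, p and stride must be positive")
    samples = []
    # A window starting at t is valid while t + d + p <= n_steps.
    for t in range(0, n_steps - d - p + 1, stride):
        inputs = [row[t:t + d] for row in x]
        targets = [row[t + d:t + d + p] for row in x]
        samples.append((inputs, targets))
    return samples


if __name__ == "__main__":
    demo = [list(range(10)), list(range(10, 20))]  # 2 stations, T = 10
    pairs = make_windows(demo, d=4, p=2)
    print(len(pairs))  # 5 windows: T - D - P + 1 with unit stride
    print(pairs[0])    # ([[0, 1, 2, 3], [10, 11, 12, 13]], [[4, 5], [14, 15]])
```

With a unit stride the number of samples is $T - D - P + 1$; a larger stride trades sample count for less overlap between consecutive windows.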

3.2. Water Level State Definition

To capture hydrological variability, historical water levels are categorized into three regimes (flood, normal, and drought) using the k-means clustering algorithm. Given observed levels $\{x_t\}_{t=1}^{T}$, the objective is

$$\min_{\mathcal{C}_1, \mathcal{C}_2, \mathcal{C}_3} \sum_{k=1}^{3} \sum_{x_t \in \mathcal{C}_k} \left( x_t - \mu_k \right)^2 \tag{2}$$

where $\mu_k$ is the centroid of cluster $\mathcal{C}_k$. After convergence, clusters are ordered by centroid value and assigned state labels $s_t \in \{\text{drought}, \text{normal}, \text{flood}\}$. These labels later serve to adaptively weight prediction errors in the model loss function (Section 3.4), improving accuracy during extreme conditions.
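A minimal one-dimensional k-means sketch of the state labelling is shown below. It assumes the levels are pooled into a single list, uses a deterministic evenly spaced initialisation, and adopts the label convention 0 = drought, 1 = normal, 2 = flood after sorting by centroid; all of these are illustrative choices. In practice a library implementation such as scikit-learn's `KMeans` would typically be used; this version only makes the objective and the ordering step explicit.

```python
from typing import List, Tuple

STATE_NAMES = ("drought", "normal", "flood")  # assumed order after sorting centroids


def _nearest(value: float, centroids: List[float]) -> int:
    """Index of the centroid closest to value (squared distance)."""
    return min(range(len(centroids)), key=lambda c: (value - centroids[c]) ** 2)


def kmeans_states(levels: List[float], k: int = 3, iters: int = 100) -> Tuple[List[int], List[float]]:
    """Cluster scalar water levels and return centroid-ordered labels.

    Label 0 is the lowest-centroid cluster and k - 1 the highest.
    """
    lo, hi = min(levels), max(levels)
    # Deterministic start: centroids spread evenly across the observed range.
    centroids = [lo + (hi - lo) * (c + 0.5) / k for c in range(k)]
    for _ in range(iters):
        labels = [_nearest(v, centroids) for v in levels]  # assignment step
        updated = []
        for c in range(k):
            members = [v for v, lab in zip(levels, labels) if lab == c]
            # Update step: member mean; keep the old centroid if the cluster is empty.
            updated.append(sum(members) / len(members) if members else centroids[c])
        if updated == centroids:  # converged
            break
        centroids = updated
    # Relabel so that label indices increase with centroid value.
    order = sorted(range(k), key=lambda c: centroids[c])
    rank = {c: r for r, c in enumerate(order)}
    labels = [rank[_nearest(v, centroids)] for v in levels]
    return labels, [centroids[c] for c in order]


if __name__ == "__main__":
    levels = [0.1, 0.2, 0.15, 3.0, 3.1, 2.9, 7.0, 7.2, 6.9]
    labels, cents = kmeans_states(levels)
    print([STATE_NAMES[lab] for lab in labels])
```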

3.3. Dynamic Spatio-Temporal Graph Model

The accurate representation of hydrological interdependencies requires explicit modeling of both spatial connectivity and temporal dynamics. This subsection details our dual-approach methodology for capturing these complex relationships through integrated geographical and temporal similarity measures.

A dynamic graph structure is represented as $\mathcal{G} = (\mathcal{V}, \mathcal{E}, \mathbf{W})$, where the vertex set is $\mathcal{V} = \{v_1, \dots, v_N\}$, the edge set is $\mathcal{E} \subseteq \mathcal{V} \times \mathcal{V}$, and $\mathbf{W}$ represents the edge weights. In our study, the water level monitoring points are formalized as the vertices of the graph, and the historical features of each water level series serve as the corresponding node features. Edge weights combine geographical-distance similarity and time-series similarity, reflecting how strongly monitoring points influence one another. The adjacency matrix $\mathbf{A} \in \mathbb{R}^{N \times N}$ encodes pairwise relationships, where $A_{ij}$ quantifies the influence of station $j$ on point $i$.

First, the spatial connectivity matrix captures the fundamental relationships among monitoring points driven by geographical proximity. We employ a multi-step computational process to transform raw coordinates into normalized connection weights that reflect hydrodynamic interdependence. The Haversine formula computes the great-circle distance between stations $i$ and $j$ with coordinates $(\varphi_i, \lambda_i)$ and $(\varphi_j, \lambda_j)$ (latitude, longitude), as given in Equation (3):

$$d_{ij} = 2R \arcsin\left( \sqrt{ \sin^2\left(\frac{\varphi_j - \varphi_i}{2}\right) + \cos\varphi_i \cos\varphi_j \sin^2\left(\frac{\lambda_j - \lambda_i}{2}\right) } \right) \tag{3}$$

where Earth’s radius is $R = 6371$ km and angular differences are converted to radians. This formula gives the great-circle distance between two geographic coordinates on the Earth’s surface, and the use of the haversine function $\operatorname{hav}(\theta) = \sin^2(\theta/2)$ ensures numerical stability for small angular separations.
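Equation (3) maps directly to code. The following sketch assumes coordinates in decimal degrees and the 6371 km radius stated above; the function name `haversine_km` is illustrative, and the clamp on the intermediate term is a defensive addition against rounding rather than part of the published formula.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius used in Equation (3)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance (km) between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    # hav(theta) = sin^2(theta / 2) is well conditioned for small separations.
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    # Clamp so rounding can never push the argument of asin above 1.
    return 2.0 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


if __name__ == "__main__":
    # One degree of longitude along the equator is about 111.19 km.
    print(round(haversine_km(0.0, 0.0, 0.0, 1.0), 2))
```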

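Then, a normalized exponential decay function converts distances into connectivity weights:

$$w^{\mathrm{D}}_{ij} = \exp\left(-\beta\, \tilde{d}_{ij}\right), \qquad \tilde{d}_{ij} = \frac{d_{ij} - d_{\min}}{d_{\max} - d_{\min}} \tag{4}$$

Here, $\tilde{d}_{ij}$ is the distance linearly scaled into $[0, 1]$ using the minimum and maximum pairwise distances $d_{\min}$ and $d_{\max}$, and $\beta > 0$ controls the decay rate of spatial influence; a higher $\beta$ leads to faster attenuation of long-range connections.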
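A short sketch of the normalized exponential decay follows. It assumes min-max scaling over the whole distance matrix (including its zero diagonal, so the scaling reduces to dividing by the largest distance) and names the decay-rate hyperparameter `beta`; both are assumptions of the sketch rather than confirmed details of the method.

```python
import math
from typing import List

Matrix = List[List[float]]


def decay_weights(dist: Matrix, beta: float) -> Matrix:
    """Map pairwise distances to exp(-beta * scaled distance) weights.

    Distances are min-max scaled into [0, 1] over the whole matrix,
    so nearby stations receive weights close to 1.
    """
    flat = [v for row in dist for v in row]
    d_min, d_max = min(flat), max(flat)
    span = d_max - d_min
    if span == 0:
        # All distances equal: every pair is equally connected.
        return [[1.0 for _ in row] for row in dist]
    return [[math.exp(-beta * (v - d_min) / span) for v in row] for row in dist]


if __name__ == "__main__":
    d = [[0.0, 10.0, 20.0], [10.0, 0.0, 5.0], [20.0, 5.0, 0.0]]
    for b in (1.0, 5.0):
        print(b, [round(w, 3) for w in decay_weights(d, b)[0]])
```

Raising `beta` from 1 to 5 shrinks the weight of the farthest pair from about 0.37 to under 0.01, which is the faster attenuation described above.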

The waterway topology is then imposed through directional constraints, with the weights updated by the following equation:

where the elevation difference and the river length $L$ between points enforce much stronger weights for upstream connections. The spatial weights are then generated by Equation (6).

Here, $A$ represents the normalized spatial adjacency strength of the graph, obtained by averaging all valid edge weights; this makes the overall connectivity scale-invariant and comparable across different networks.

In addition, conventional Pearson correlation ignores the delay of water-flow propagation and therefore fails to account for phase misalignment when comparing hydrographs. We therefore employ Dynamic Time Warping (DTW) to quantify the morphological congruence between the water level series $\mathbf{x}_i$ and $\mathbf{x}_j$, accommodating the hydraulic time lags inherent in watershed response dynamics. A smaller DTW distance $d^{\mathrm{DTW}}_{ij}$ indicates that the two sequences have more similar morphologies. This study converts it into temporal weights through the Gaussian kernel function in Equation (7):

$$W^{\mathrm{T}}_{ij} = \exp\left( -\frac{\left(d^{\mathrm{DTW}}_{ij}\right)^2}{2\sigma^2} \right) \tag{7}$$

where $d^{\mathrm{DTW}}_{ij}$ represents the DTW distance between the water level time series of stations $i$ and $j$, and $\sigma$ is an adjustable hyperparameter that controls how quickly the weight decays with DTW distance. A higher $\sigma$ reduces the decay rate of the similarity weight, implying that significant hydrological correlations may persist between stations despite substantial DTW distances.
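The DTW-to-weight conversion can be sketched as below. The sketch assumes classic dynamic-programming DTW with an absolute-difference local cost and no warping window, and writes the Gaussian kernel as $\exp(-d^2/(2\sigma^2))$; the local cost, any warping constraint, and the exact kernel normalization are assumptions, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Unconstrained DTW with |a_i - b_j| as the local cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible predecessor alignments.
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def dtw_gaussian_weights(series: List[Sequence[float]], sigma: float) -> List[List[float]]:
    """Temporal similarity matrix W_T[i][j] = exp(-DTW(i, j)^2 / (2 sigma^2))."""
    n = len(series)
    w = [[1.0] * n for _ in range(n)]  # DTW(x, x) = 0, so the diagonal is 1
    for i in range(n):
        for j in range(i + 1, n):
            d = dtw_distance(series[i], series[j])
            w[i][j] = w[j][i] = math.exp(-d * d / (2.0 * sigma * sigma))
    return w


if __name__ == "__main__":
    upstream = [0, 0, 1, 2, 3, 3]
    downstream = [0, 1, 2, 3, 3, 3]  # same rise, one step earlier
    pointwise = sum(abs(x - y) for x, y in zip(upstream, downstream))
    print(pointwise, dtw_distance(upstream, downstream))  # 3 0.0
```

The usage example shows why DTW suits lagged hydrographs: a one-step shift yields a pointwise absolute difference of 3 but a DTW distance of 0, so the kernel assigns the pair the maximum weight of 1.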

Finally, the edge weights integrate spatial proximity and hydrograph congruence through a convex combination of geographical and temporal similarity, as shown in Equation (8):

$$A_{ij} = \eta\, W^{\mathrm{S}}_{ij} + (1 - \eta)\, W^{\mathrm{T}}_{ij} \tag{8}$$

where $W^{\mathrm{S}}_{ij}$ is the spatial weight from Equation (6), $W^{\mathrm{T}}_{ij}$ is the temporal weight from Equation (7), and $\eta \in [0, 1]$ is a hyperparameter that balances the relative importance of geographical distance weights and temporal correlation weights. A larger $\eta$ gives geographical distance more influence in determining the correlation between sites. All the combined weights form the adjacency matrix $\mathbf{A} = [A_{ij}] \in \mathbb{R}^{N \times N}$ of the graph.
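The convex combination in Equation (8) is an element-wise blend of two $N \times N$ matrices. In the sketch below the balance hyperparameter is called `eta` (an illustrative name); the range check enforces the convexity that keeps every combined weight between the two inputs.

```python
from typing import List

Matrix = List[List[float]]


def combine_adjacency(w_spatial: Matrix, w_temporal: Matrix, eta: float) -> Matrix:
    """Convex combination A = eta * W_S + (1 - eta) * W_T, element by element."""
    if not 0.0 <= eta <= 1.0:
        raise ValueError("eta must lie in [0, 1]")
    return [
        [eta * s + (1.0 - eta) * t for s, t in zip(row_s, row_t)]
        for row_s, row_t in zip(w_spatial, w_temporal)
    ]


if __name__ == "__main__":
    w_s = [[1.0, 0.2], [0.2, 1.0]]  # geographically distant pair
    w_t = [[1.0, 0.6], [0.6, 1.0]]  # hydrographs fairly similar
    print(combine_adjacency(w_s, w_t, eta=0.25))
```

With `eta = 0.25` the off-diagonal weight becomes 0.25 × 0.2 + 0.75 × 0.6 = 0.5, so temporal similarity dominates; `eta = 1` reproduces the purely spatial graph.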

3.4. WS-STGTN Model Architecture

The Water State-aware Spatio-Temporal Graph Transformer Network (WS-STGTN) employs a hierarchical encoder-decoder structure to model complex hydrological dependencies. The architecture integrates spatial topology and temporal dynamics through specialized attention mechanisms, enabling precise water level forecasting across interconnected monitoring points.

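The encoder layer employs a dual-pathway attention mechanism to capture the spatio-temporal dependencies inherent in hydrological systems. The spatial pathway uses a graph attention network that incorporates the adjacency matrix $\mathbf{A}$ generated in Section 3.3. The attention mechanism compares the features of the target node $i$ with those of each neighbor $j$ to determine the importance of that neighbor. The unnormalized attention score $e_{ij}$ is computed by Equation (9):

$$e_{ij} = \mathrm{LeakyReLU}\left( \mathbf{a}^{\top} \left[ \mathbf{W}\mathbf{h}_i \,\Vert\, \mathbf{W}\mathbf{h}_j \right] \right) \tag{9}$$

where $\mathbf{h}_i$ is the feature vector of node $i$, $\mathbf{W}$ is the shared projection matrix for feature transformation, $\mathbf{a}$ is the attention weight vector, and $\Vert$ denotes concatenation. The attention score is then normalized over the neighborhood $\mathcal{N}_i$ of node $i$ by Equation (10):

$$\alpha_{ij} = \frac{\exp\left(e_{ij}\right)}{\sum_{k \in \mathcal{N}_i} \exp\left(e_{ik}\right)} \tag{10}$$

where $\alpha_{ij}$ defines how much influence station $j$ has on station $i$.

For each node $i$, the spatial aggregation is computed as

$$\mathbf{h}'_i = \sum_{j \in \mathcal{N}_i} \alpha_{ij}\, A_{ij}\, \mathbf{W}\mathbf{h}_j \tag{11}$$

where $\mathbf{W}$ is the projection matrix and $A_{ij}$ is the adjacency weight.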
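The score, softmax, and adjacency-weighted aggregation of Equations (9) to (11) can be illustrated for a single node and a single head. This pure-Python sketch assumes LeakyReLU with negative slope 0.2 (the usual GAT default), defines neighbors as nodes with a positive adjacency weight (which includes the node itself when $A_{ii} > 0$), and reuses one projection matrix in the score and the aggregation; these are assumptions, and a trained model would learn `w` and `a` rather than take them as fixed inputs.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, h: Vector) -> Vector:
    """Matrix-vector product W h."""
    return [sum(wr * hv for wr, hv in zip(row, h)) for row in w]


def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x


def gat_aggregate(i: int, h: List[Vector], w: Matrix, a: Vector,
                  adj: Matrix) -> Tuple[Vector, Dict[int, float]]:
    """Attention-weighted aggregation for node i; returns (h'_i, alpha_ij)."""
    z = [matvec(w, hj) for hj in h]  # projected features W h_j
    neighbors = [j for j in range(len(h)) if adj[i][j] > 0]
    # Score: e_ij = LeakyReLU(a^T [W h_i || W h_j]) (list + is concatenation).
    scores = {j: leaky_relu(sum(ak * v for ak, v in zip(a, z[i] + z[j]))) for j in neighbors}
    # Softmax over the neighborhood, shifted by the max for numerical stability.
    top = max(scores.values())
    exps = {j: math.exp(s - top) for j, s in scores.items()}
    total = sum(exps.values())
    alpha = {j: e / total for j, e in exps.items()}
    # Aggregation: h'_i = sum_j alpha_ij * A_ij * W h_j.
    out = [0.0] * len(z[i])
    for j in neighbors:
        for c, v in enumerate(z[j]):
            out[c] += alpha[j] * adj[i][j] * v
    return out, alpha
```

With a zero attention vector every neighbor gets the same $\alpha_{ij}$, so the output reduces to an adjacency-weighted mean; learning `a` is what lets the layer depart from that static weighting.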

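To capture long-range temporal dependencies within each monitoring point’s water level time series, we employ a causal self-attention mechanism. This component allows the model to dynamically weigh the importance of past observations when generating representations for future predictions. The mechanism first maps the temporal input produced by the data transformation approach into three distinct semantic spaces through learned linear projections:

$$\mathbf{Q} = \mathbf{X}\mathbf{W}^{Q}, \quad \mathbf{K} = \mathbf{X}\mathbf{W}^{K}, \quad \mathbf{V} = \mathbf{X}\mathbf{W}^{V} \tag{12}$$

where $\mathbf{X}$ contains the input features generated by the data transformation approach, and $\mathbf{W}^{Q}$, $\mathbf{W}^{K}$, and $\mathbf{W}^{V}$ are learnable weights. Multi-head temporal self-attention is then computed for each head $m = 1, \dots, H$ as

$$\mathrm{head}_m = \mathrm{softmax}\left( \frac{\mathbf{Q}_m \mathbf{K}_m^{\top}}{\sqrt{d_k}} + \mathbf{M} \right) \mathbf{V}_m \tag{13}$$

where $\mathbf{Q}_m$, $\mathbf{K}_m$, and $\mathbf{V}_m$ are the per-head slices of the projections, $d_k$ is the per-head key dimension, and the causal mask $\mathbf{M}$ has entries $M_{st} = 0$ for $t \le s$ and $-\infty$ otherwise, so that each time step attends only to itself and earlier steps. These attention maps capture temporal dependencies in different representation subspaces, enabling the model to focus on diverse patterns of temporal correlation across multiple heads. The outputs of all heads are then concatenated and linearly transformed:

$$\mathrm{MultiHead}(\mathbf{X}) = \mathrm{Concat}\left(\mathrm{head}_1, \dots, \mathrm{head}_H\right) \mathbf{W}^{O} \tag{14}$$

where $\mathbf{W}^{O}$ contains learnable parameters and $\mathrm{Concat}(\cdot)$ concatenates the outputs of the different attention heads.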
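A dependency-free sketch of causal multi-head self-attention (Equations (12) to (14)) is given below. It assumes the projection matrices are passed in explicitly as nested lists, obtains heads by slicing the projected features, and implements the causal mask by restricting each query to positions $t \le s$, which is equivalent to adding $-\infty$ to the masked scores. All names are illustrative; a real implementation would use batched tensor operations in a framework such as PyTorch.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b for nested lists."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_head(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention in which step s only sees steps t <= s."""
    d_k = len(q[0])
    out = []
    for s, q_s in enumerate(q):
        # Restricting t to range(s + 1) is equivalent to a -inf mask on t > s.
        scores = [sum(x * y for x, y in zip(q_s, k[t])) / math.sqrt(d_k) for t in range(s + 1)]
        weights = softmax(scores)
        out.append([sum(w * v[t][c] for t, w in enumerate(weights)) for c in range(len(v[0]))])
    return out


def multi_head_causal(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix,
                      w_o: Matrix, n_heads: int) -> Matrix:
    """Project, split into heads, attend causally, concatenate, and project out."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_model = len(q[0])
    if d_model % n_heads:
        raise ValueError("model width must be divisible by n_heads")
    d_h = d_model // n_heads
    heads = []
    for h in range(n_heads):
        cols = slice(h * d_h, (h + 1) * d_h)
        heads.append(causal_head([r[cols] for r in q], [r[cols] for r in k], [r[cols] for r in v]))
    # Concatenate head outputs along the feature axis for every time step.
    concat = [[val for head in heads for val in head[t]] for t in range(len(x))]
    return matmul(concat, w_o)
```

Because of the mask, changing a later input step never alters the outputs of earlier steps, which is the property that prevents future water levels from leaking into representations of the past.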

Through multiple stacked spatio-temporal encoder layers, WS-STGTN progressively extracts and integrates the spatial dependencies and temporal dynamics in the water level data. Each encoder layer first aggregates information from neighboring stations via the graph attention mechanism (GAT), producing spatially context-aware node representations. A temporal self-attention mechanism then captures long-range dependencies within each sequence, enhancing the model’s ability to represent temporal patterns at each station. The node representations output by the final encoder layer are fed into a fully connected output network that predicts water levels for the next $P$ time steps.

To achieve water level state awareness, a weighted mean squared error (WMSE) loss function is designed. It assigns a weight to the prediction error of each sample according to the water level state (determined by the k-means clustering results in Section 3.2) to which the true water level $y_k$ belongs. The loss function is formulated as:

$$\mathcal{L}_{\mathrm{WMSE}} = \frac{1}{n} \sum_{k=1}^{n} w_{s_k} \left( y_k - \hat{y}_k \right)^2 \tag{15}$$

where $n$ is the number of predicted values, $\hat{y}_k$ is the prediction of $y_k$, and $w_{s_k}$ is the weight assigned according to the water level state $s_k \in \{\text{drought}, \text{normal}, \text{flood}\}$ of $y_k$. Higher weights are allocated to samples from flood and drought periods (i.e., $w_{\text{flood}}$ and $w_{\text{drought}}$ exceed $w_{\text{normal}}$), directing the model to focus on prediction accuracy during these critical hydrological phases. By minimizing the WMSE loss, the model is optimized not only to reduce overall prediction error but also to prioritize performance in hydrologically sensitive or operationally significant states, enhancing the practical reliability and state-specific perception capability of the forecasting system. The entire model is trained end-to-end by minimizing this loss. Algorithm 1 gives the pseudocode of the WS-STGTN training process, and Figure 1 illustrates the overall architecture.
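The state-aware loss is a per-element weighted squared error averaged over all predictions. The sketch below assumes normalization by the number of predictions (not by the sum of weights) and uses state names as dictionary keys; the weight values in `EXAMPLE_WEIGHTS` are purely illustrative, since the actual weights are not recoverable from this section.

```python
from typing import Dict, Sequence


def weighted_mse(y_true: Sequence[float], y_pred: Sequence[float],
                 states: Sequence[str], weights: Dict[str, float]) -> float:
    """State-aware WMSE: mean of weights[state] * squared error over all predictions."""
    if not len(y_true) == len(y_pred) == len(states):
        raise ValueError("inputs must have equal length")
    total = sum(weights[s] * (t - p) ** 2 for t, p, s in zip(y_true, y_pred, states))
    return total / len(y_true)


# Illustrative weights only; not the values used in the study.
EXAMPLE_WEIGHTS = {"drought": 2.0, "normal": 1.0, "flood": 2.0}

if __name__ == "__main__":
    y = [1.0, 3.0, 7.0]
    y_hat = [1.5, 3.0, 6.0]
    s = ["drought", "normal", "flood"]
    print(weighted_mse(y, y_hat, s, EXAMPLE_WEIGHTS))                 # extremes count double
    print(weighted_mse(y, y_hat, s, {k: 1.0 for k in EXAMPLE_WEIGHTS}))  # plain MSE
```

In a PyTorch training loop the same quantity can be written as `(w * (y_hat - y) ** 2).mean()`, where `w` is a tensor holding the state weight of each target element.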

| Algorithm 1: WS-STGTN Model Process |
|---|
| Require: historical water level time series for all stations, $\mathbf{X} \in \mathbb{R}^{N \times T}$ |
| Ensure: predicted water levels for the future $P$ time steps, $\hat{\mathbf{Y}}_i$, for each station $i$ |
| 1: Initialize model parameters: graph attention weights $\mathbf{W}$, $\mathbf{a}$, and temporal self-attention parameters $\mathbf{W}^{Q}$, $\mathbf{W}^{K}$, $\mathbf{W}^{V}$, $\mathbf{W}^{O}$ |
| 2: for $l = 1$ to $L_e$ (number of encoder layers) do |
| 3: Graph Attention Module (GAT) in layer $l$: |
| 4: for each node $i$ do |
| 5: for each neighbor $j \in \mathcal{N}_i$ do |
| 6: Compute attention coefficient $e_{ij}$ by Equation (9) |
| 7: end for |
| 8: Normalize coefficients $\alpha_{ij}$ by Equation (10) |
| 9: Aggregate neighbor features $\mathbf{h}'_i$ by Equation (11) |
| 10: end for |
| 11: Update node representations with the aggregated features $\mathbf{h}'_i$ |
| 12: Temporal Self-Attention Module in layer $l$: |
| 13: for each node $i$ do |
| 14: Project inputs to queries, keys, and values by Equation (12) |
| 15: Compute attention by Equation (13) |
| 16: Apply the multi-head mechanism by Equation (14) |
| 17: end for |
| 18: Update node representations with the temporal attention outputs |
| 19: end for |
| 20: Output layer: pass the final representations through a fully connected layer to obtain $\hat{\mathbf{Y}}$ |
| 21: Calculate the loss with the WMSE loss function in Equation (15) |
| 22: Update learnable parameters until the stopping condition is met |
| 23: return $\hat{\mathbf{Y}}$ |

4. Experiments

This section compares the performance of the proposed WS-STGTN with that of representative baselines and ablated variants for multi-step water-level forecasting on a downstream reach of the Yangtze River. Details of the data, experimental settings, and performance measures are provided in the following subsections.

4.1. Dataset Description

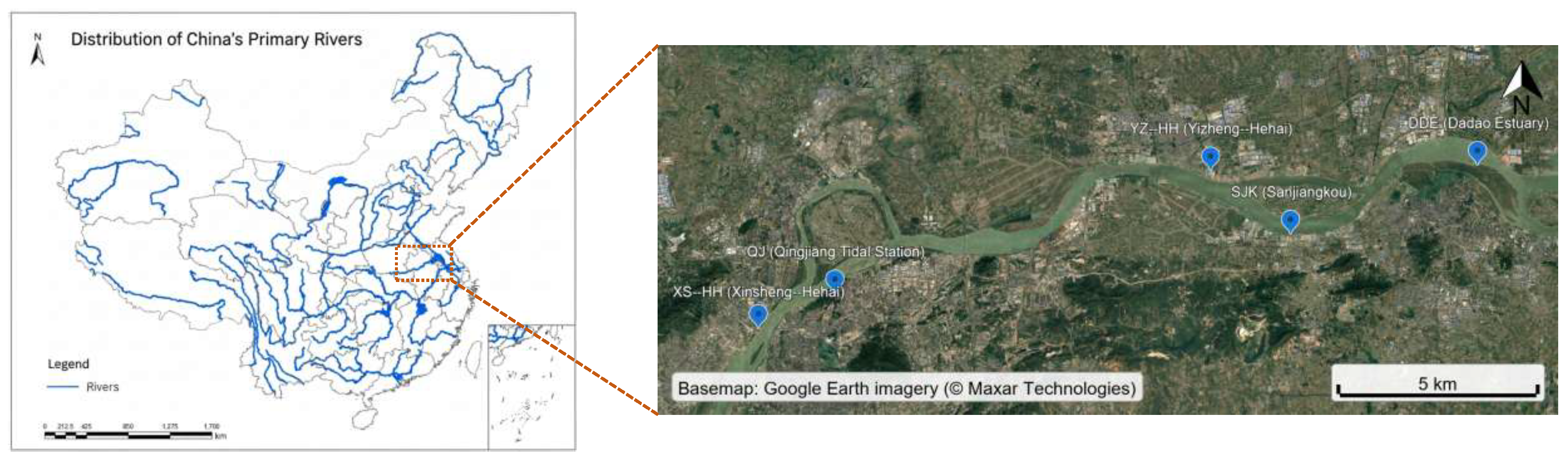

To evaluate multi-step water-level forecasting performance and examine the contribution of state-aware learning and dynamic spatio-temporal graph modeling, we employ five real-world water-level time series from the lower Yangtze River, China: Xinsheng–Hehai (XS–HH), Qingjiang Tidal Station (QJ), Sanjiangkou (SJK), Yizheng–Hehai (YZ–HH), and Dadao Estuary (DDE). Precise geographic coordinates are available for all stations (see Figure 2). After basic quality control, we retain the common observation window shared by all sites, from 21 June 2020 03:00:00 to 7 June 2023 03:00:00, sampled hourly. The fused hourly series therefore contains 26,088 timestamps per station; applying a 120-step encoder window together with a 24-step forecasting horizon yields sliding samples for model development.

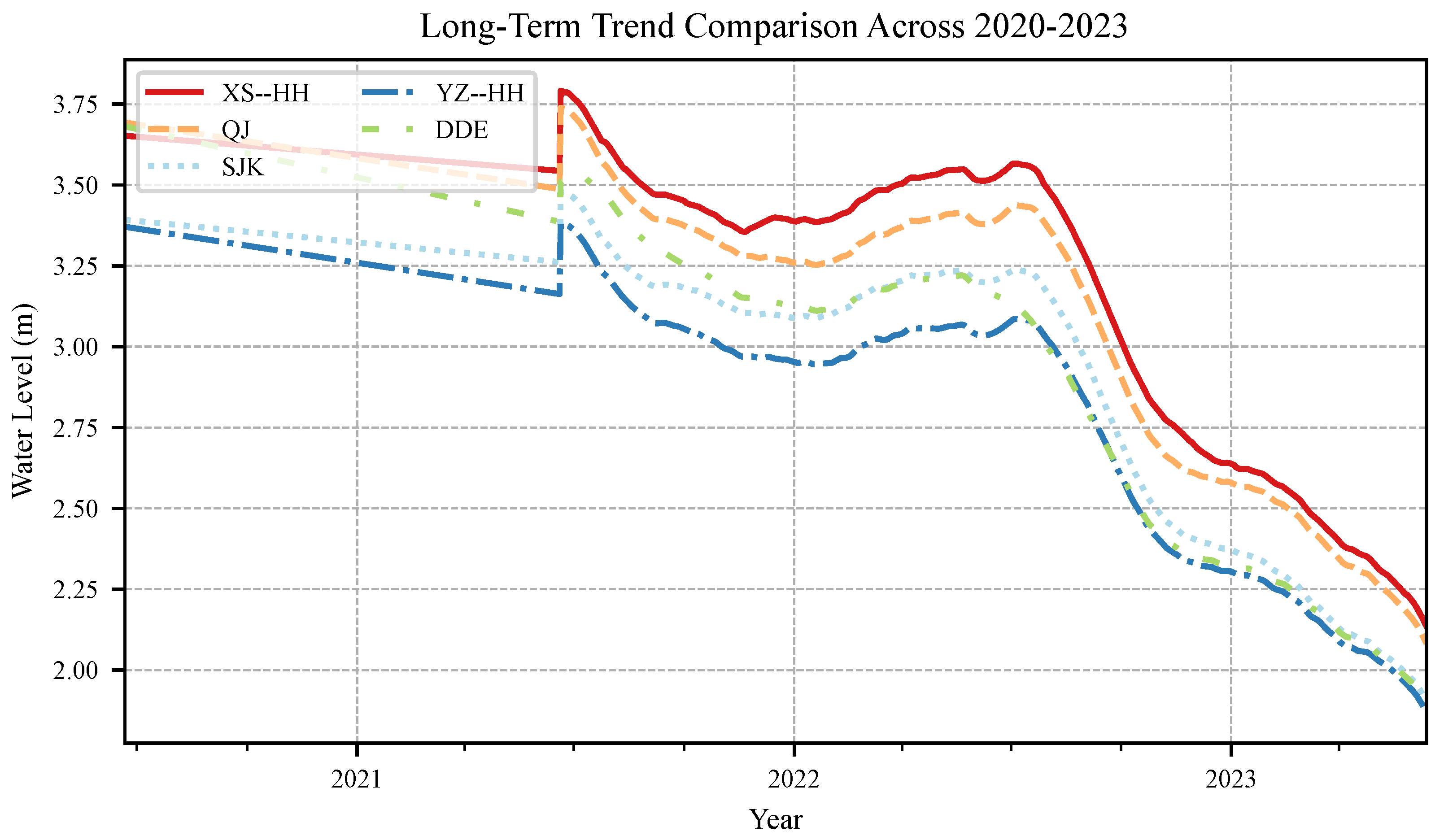

Table 1 shows that mean water levels stay between 2.75 m and 3.14 m, while the minima (0.02–0.50 m), maxima (7.20–7.87 m), and standard deviations (1.53–1.68 m) are tightly clustered, demonstrating comparable fluctuation magnitudes along the reach. The trend signatures in

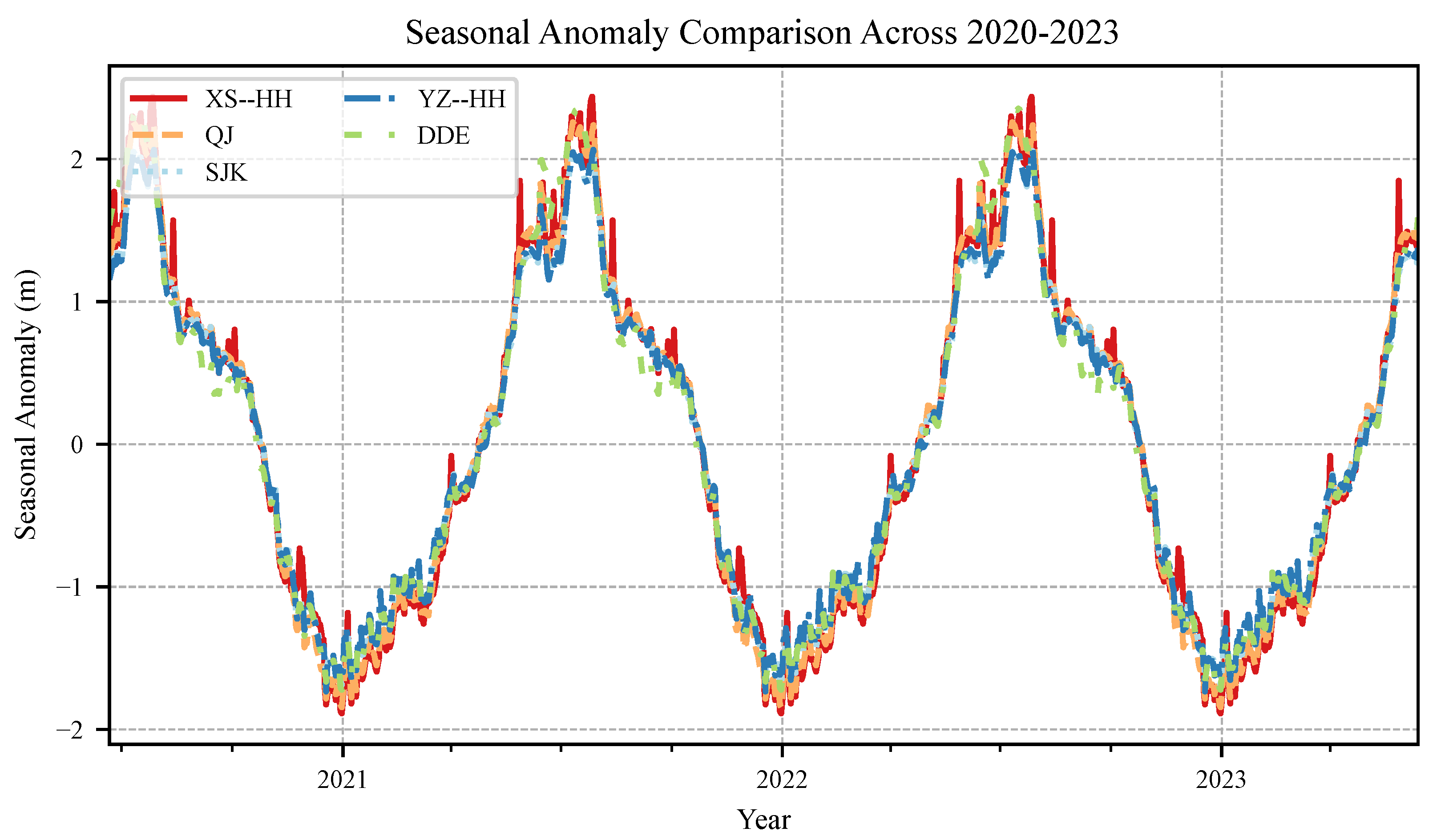

Figure 3 rise and fall synchronously, and the seasonal anomaly profiles in

Figure 4 share the same annual cycle with summer surges and winter recessions, confirming that the selected window captures representative upstream–downstream dynamics and tide-river interactions.

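Following the data transformation described in Section 3, this yields sliding windows across all stations. For convenience in downstream experiments, we also provide a flattened matrix representation. Equivalently, the transformed representation consists of the input windows $\mathbf{X}_l \in \mathbb{R}^{N \times D}$ and the targets $\mathbf{Y}_l \in \mathbb{R}^{N \times P}$, with $N = 5$, $D = 120$, and $P = 24$.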

4.2. Experimental Settings

To validate the effectiveness and robustness of WS-STGTN across diverse forecasting horizons, model capacities, and graph configurations, we design experiments that first establish multi-step-ahead prediction baselines by benchmarking against widely used alternatives from statistics, machine learning, and deep learning, including ARIMA, SVR, LSTM, GCN, STGCN, and Transformer. In addition, to quantify the contribution of key architectural components in a spatiotemporal setting, we conduct ablation studies on a graph-based variant by constructing four controlled models: removing DTW-based temporal similarity in graph construction (NoDTW), replacing the proposed weighted MSE with standard MSE (NoWMSE), substituting graph attention with graph convolution (NoGAT), and replacing temporal self-attention with LSTM (NoTimeAttn).

We explore multiple combinations of the prediction horizon $P$ and time window $D$ tailored to each dataset. Unless otherwise specified, we set $D = 120$ and $P = 24$, and evaluate performance over four prediction horizons: 1–8, 9–16, 17–24, and 1–24 steps. All time series are transformed following Liu [35]. All experiments use the common observation window described in Section 4.1, with a chronological split of 70% for training and 30% for testing. To ensure fair comparisons, we perform grid-search hyperparameter tuning to minimize MSE, focusing on a single principal hyperparameter per model: ARIMA selects the order $(p, d, q)$ by AIC over a grid of $p$, $d$, and $q$ values; SVR tunes the penalty parameter $C$; LSTM tunes the number of hidden units; GCN tunes the number of layers using the adjacency $\mathbf{A}$ from Section 3.3; STGCN tunes the temporal kernel size; and the Transformer tunes the number of attention heads. For WS-STGTN, we tune the graph balance parameter in Equation (8).

All experiments are conducted with Python 3.6.10 and PyTorch 1.7.1 (GPU build, CUDA 10.1) on an Intel(R) Core(TM) i7-8750H @ 2.20 GHz with 16 GB memory (Intel Corporation, Santa Clara, CA, USA). Reproducibility is ensured by fixing random seeds and preserving the chronological split to avoid information leakage. For each prediction horizon we report complementary metrics: MSE (Mean Squared Error), MAPE (Mean Absolute Percentage Error), and the coefficient of determination ($R^2$). Together, these metrics capture squared-error magnitude, relative error, and explained variance. Detailed definitions are provided in the following subsection.

4.3. Performance Measurement

To comprehensively evaluate the forecasting performance of all models, we use two error metrics. The Mean Absolute Percentage Error (MAPE) expresses prediction errors as a percentage of actual values:

$$\mathrm{MAPE} = \frac{100\%}{N} \sum_{i=1}^{N} \left| \frac{y_i - \hat{y}_i}{y_i} \right|$$

The Mean Squared Error (MSE) computes the average squared deviation, placing greater weight on larger errors:

$$\mathrm{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^2$$

Here, $N$ denotes the number of observations, $\hat{y}_i$ the predicted value, and $y_i$ the actual value.

Additionally, the coefficient of determination ($R^2$) is used to assess the proportion of variance explained by the model:

$$R^2 = 1 - \frac{\sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^2}{\sum_{i=1}^{N} \left( y_i - \bar{y} \right)^2}$$

where $\bar{y}$ denotes the mean of the observed values. $R^2$ provides a normalized measure of model fit, enabling comparison across datasets with differing ranges and scales.
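The three metrics can be sketched directly from their definitions. The function names are illustrative. Because Table 1 reports station minima as low as 0.02 m, MAPE can be dominated by a few near-zero observations; the guard below only rejects exact zeros and does not correct that sensitivity.

```python
from typing import Sequence


def mse(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)


def mape(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent."""
    if any(a == 0 for a in y):
        raise ValueError("MAPE is undefined for zero observations")
    return 100.0 * sum(abs((a - b) / a) for a, b in zip(y, y_hat)) / len(y)


def r2(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    ss_tot = sum((a - mean) ** 2 for a in y)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for a constant series")
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    obs, pred = [2.0, 4.0], [1.0, 5.0]
    print(mse(obs, pred), mape(obs, pred), r2(obs, pred))  # 1.0 37.5 0.0
```

The usage example also shows that $R^2 = 0$ means the model does no better than predicting the observed mean.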

5. Results and Discussion

5.1. Forecasting Performance Comparison

Table 2 reports the forecasting performance of the proposed WS-STGTN and representative baselines on the five real-world Yangtze River water-level datasets (XS–HH, QJ, SJK, YZ–HH, DDE). Performance is evaluated in terms of MSE, MAPE, and $R^2$ across different forecast horizons (1–8, 9–16, 17–24, and 1–24 steps).

On the full horizon, WS-STGTN reduces both MSE and MAPE on average relative to the Transformer, with the largest gains on YZ–HH and SJK. It also improves on LSTM in both MSE and MAPE on average, underscoring the benefits of explicitly modeling spatiotemporal dependencies and lag-aware inter-station relations beyond sequence-only architectures. The gains are consistent across horizons: they are strongest in the short and medium ranges, and the long range still benefits notably. Compared with the graph-based STGCN, WS-STGTN delivers clear additional gains, with lower average MSE and MAPE, highlighting the value of lag-aware graph construction and of dual attention (graph attention and temporal self-attention) over static convolutional aggregation. Against classical SVR, the margins are very large. The coefficient of determination ($R^2$) consistently confirms the advantage of WS-STGTN in capturing hydrological dynamics. Across all five stations, WS-STGTN attains the highest $R^2$ values, averaging around 0.95–0.97 on the full horizon, surpassing the Transformer (0.93–0.94), LSTM (0.92–0.93), and STGCN (0.90–0.91). This indicates that WS-STGTN not only reduces prediction errors but also explains a substantially greater proportion of the observed variance in water-level fluctuations. The gains are especially evident at complex stations such as YZ–HH and DDE, where nonlinear flow interactions and lagged dependencies are prominent. These results suggest that the proposed model generalizes well under different hydrological regimes, achieving both lower error magnitudes and higher explanatory power.

5.2. Statistical Analysis

In this subsection, we evaluate the proposed WS-STGTN model by comparing its performance with that of other state-of-the-art forecasting methods widely recognized for their effectiveness in multistep prediction. The contenders include SVR, TCN, LSTM, GRU, and Transformer. To ensure a fair and comprehensive evaluation, all models are trained and tested under identical experimental settings using the benchmark hydrological datasets across multiple forecasting horizons (1–24 steps). The detailed results are summarized in Table 2, from which it can be observed that WS-STGTN consistently yields the lowest prediction error across all time horizons.

To further verify that these improvements stem from systematic differences rather than random variation, we convert the Mean Absolute Percentage Error (MAPE) into an equivalent accuracy measure as follows:

$$\mathrm{Accuracy} = 1 - \mathrm{MAPE}.$$

Subsequently, we rank the five models by Accuracy for each dataset and forecasting horizon, assigning rank 1 to the most accurate model and rank 5 to the worst-performing one. To ensure a comprehensive statistical comparison, we apply the Quade test independently to the Mean Squared Error (MSE) and Accuracy (i.e., $1 - \mathrm{MAPE}$) metrics, thereby evaluating the consistency of model rankings across different performance criteria. This analysis determines whether the observed differences among models are statistically significant rather than attributable to random variability.

For the MSE metric, the Quade statistic and its corresponding p-value lead to rejection of the null hypothesis of equal median performance among the models. As shown in Figure 5a, WS-STGTN attains a weighted mean rank close to 1.0, confirming its superiority over the competing methods, whereas SVR consistently ranks last. When Accuracy, a monotonic transformation of MAPE, is analyzed, the Quade test yields an identical ranking pattern across all models (Figure 5b). Because Accuracy and MAPE produce equivalent rank sequences, the MAPE-based panel is omitted for brevity.
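A compact sketch of the Quade test follows, written from the standard textbook procedure: within-block ranks are centered, weighted by the rank of each block's range, and the resulting statistic is referred to an F distribution with $k-1$ and $(b-1)(k-1)$ degrees of freedom. It is not the authors' code. Rows are assumed to be dataset–horizon blocks and columns the compared models, rank 1 is given to the best model, and the weighted mean rank $\sum_i Q_i R_{ij} / \sum_i Q_i$ is one common way to obtain the kind of summary plotted in Figure 5. The MSE table in the usage lines is invented for illustration.

```python
import numpy as np
from scipy.stats import f, rankdata


def quade_test(scores, higher_is_better=False):
    """Quade test for k models (columns) evaluated on b blocks (rows).

    Returns (T, p_value, weighted_mean_rank); rank 1 denotes the best model.
    """
    x = np.asarray(scores, dtype=float)
    b, k = x.shape
    # Within-block ranks R_ij in 1..k (ties averaged); best score gets rank 1.
    oriented = -x if higher_is_better else x
    ranks = np.vstack([rankdata(row) for row in oriented])
    # Block weights Q_i: rank of each block's sample range (1..b), so blocks
    # in which the models differ more carry more weight.
    q = rankdata(x.max(axis=1) - x.min(axis=1))
    # Centered weighted ranks S_ij = Q_i * (R_ij - (k + 1) / 2).
    s = q[:, None] * (ranks - (k + 1) / 2.0)
    a_total = np.sum(s ** 2)                  # A
    b_treat = np.sum(s.sum(axis=0) ** 2) / b  # B
    if np.isclose(a_total, b_treat):
        # Degenerate case A == B: perfect agreement makes T unbounded;
        # with no rank variation at all (A == 0) there is no evidence.
        t_stat = 0.0 if np.isclose(a_total, 0.0) else np.inf
    else:
        t_stat = (b - 1) * b_treat / (a_total - b_treat)
    p_value = f.sf(t_stat, k - 1, (b - 1) * (k - 1))
    # Weighted mean rank of each model across blocks.
    weighted_rank = (q[:, None] * ranks).sum(axis=0) / q.sum()
    return t_stat, p_value, weighted_rank


# Hypothetical MSE table: 6 blocks (dataset-horizon pairs) x 5 models.
mse_table = [
    [0.10, 0.20, 0.30, 0.50, 0.90],
    [0.20, 0.25, 0.40, 0.60, 1.20],
    [0.15, 0.30, 0.35, 0.70, 1.00],
    [0.10, 0.22, 0.31, 0.45, 0.80],
    [0.30, 0.35, 0.50, 0.65, 1.50],
    [0.05, 0.10, 0.20, 0.30, 0.60],
]
t, p, wr = quade_test(mse_table)
print(t, p, wr)
```

For Accuracy, where larger is better, the same function would be called with `higher_is_better=True`, so that rank 1 still denotes the strongest model.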

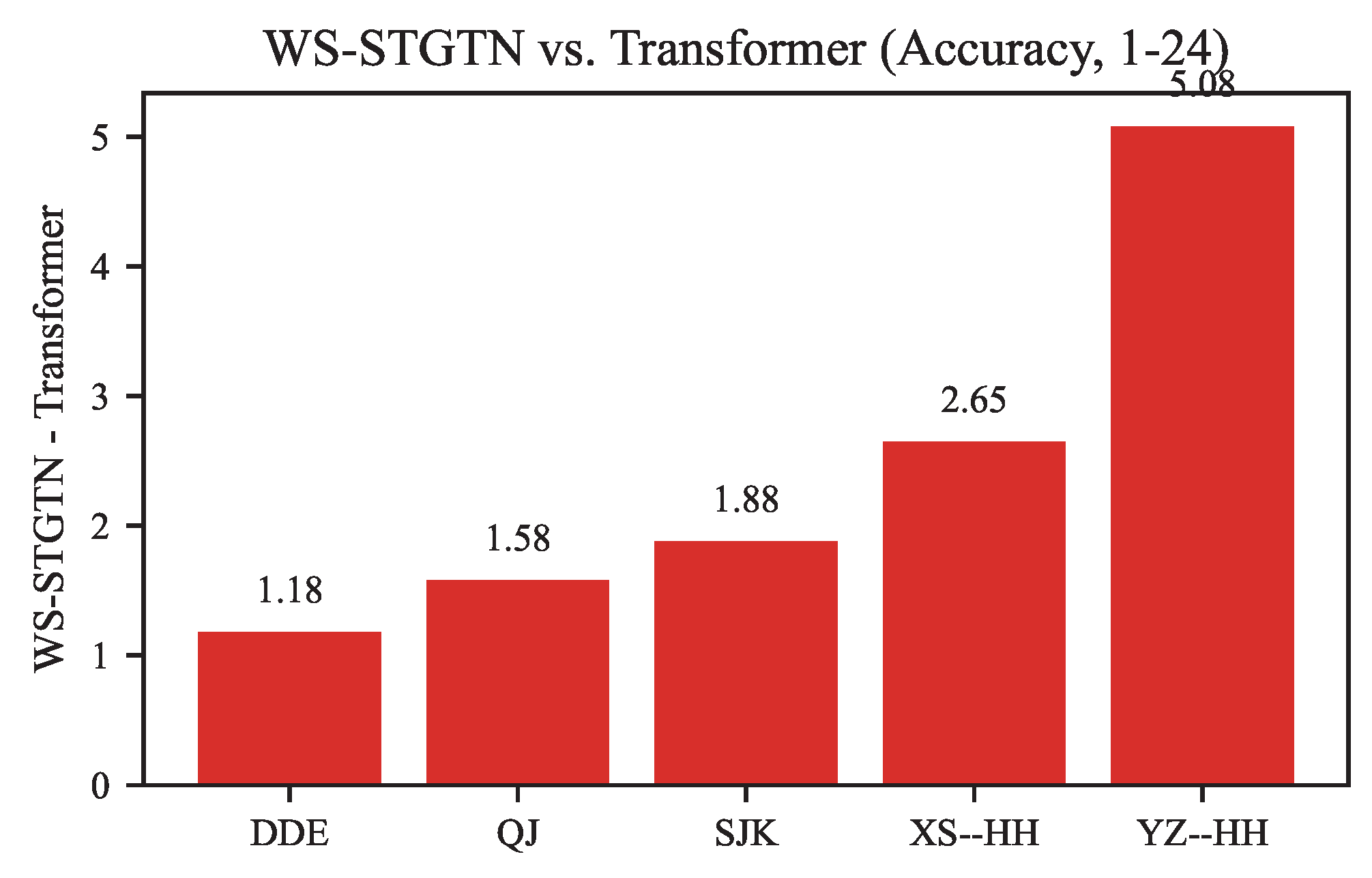

To further quantify the pairwise advantage of WS-STGTN over the strongest neural baseline, the Transformer, we conduct a nonparametric sign test on the per-dataset Accuracy gains. The performance differences are summarized in Table 3 and visualized in Figure 6. In every case, WS-STGTN surpasses the Transformer, with accuracy improvements ranging from 1.18% to 5.08%. The sign test yields five positive outcomes and no negatives, but the resulting p-value is only marginal because of the limited number of paired samples. Nevertheless, the uniformly positive differences consistently support the conclusion that the architectural innovations of WS-STGTN enhance forecasting accuracy across all hydrological stations.
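Why the p-value stays marginal can be checked with an exact binomial calculation. For five paired differences that are all positive, the smallest attainable two-sided p-value is $2 \times (1/2)^5 = 0.0625$ (one-sided: $0.03125$). The text does not state which alternative was used, so the minimal sketch below, with a hypothetical helper name, computes both.

```python
from math import comb


def sign_test_p(n_pos, n_neg, two_sided=True):
    """Exact sign test p-value for paired differences (zeros already dropped).

    Under H0 each nonzero difference is positive with probability 1/2, so the
    number of negative differences follows Binomial(n, 1/2).
    """
    n = n_pos + n_neg
    if two_sided:
        # Probability of a split at least as lopsided as observed, either way.
        k = min(n_pos, n_neg)
        tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
        return min(1.0, 2 * tail)
    # One-sided alternative: positive differences (WS-STGTN better) dominate.
    return sum(comb(n, i) for i in range(n_neg + 1)) / 2 ** n


# Five datasets, all favoring WS-STGTN over the Transformer.
print(sign_test_p(5, 0))                   # 0.0625
print(sign_test_p(5, 0, two_sided=False))  # 0.03125
```

With only five stations, no outcome can push the two-sided p-value below 0.05, which is why the uniform direction of the gains is the more informative signal here.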

5.3. Visualization of Forecasts

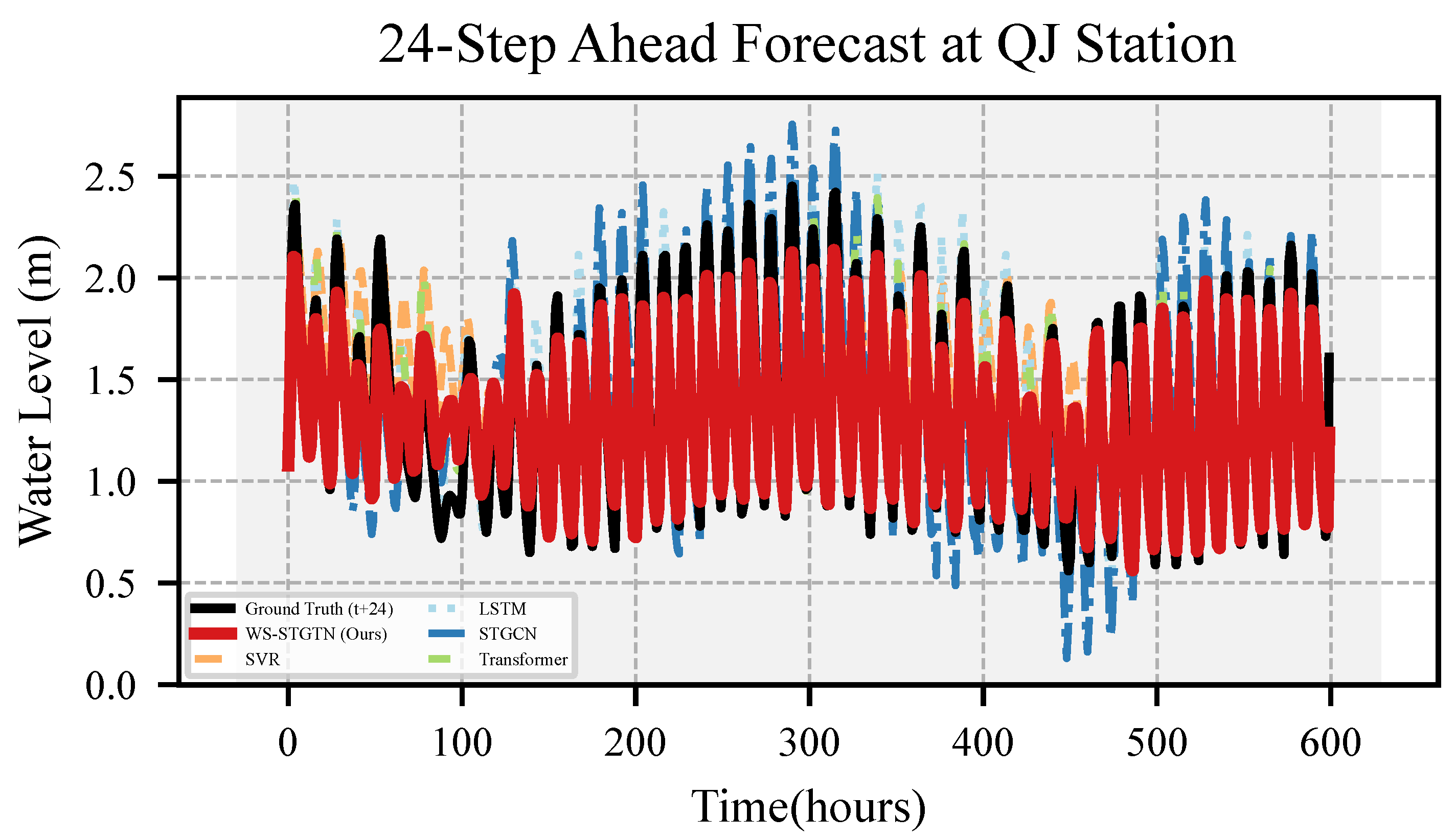

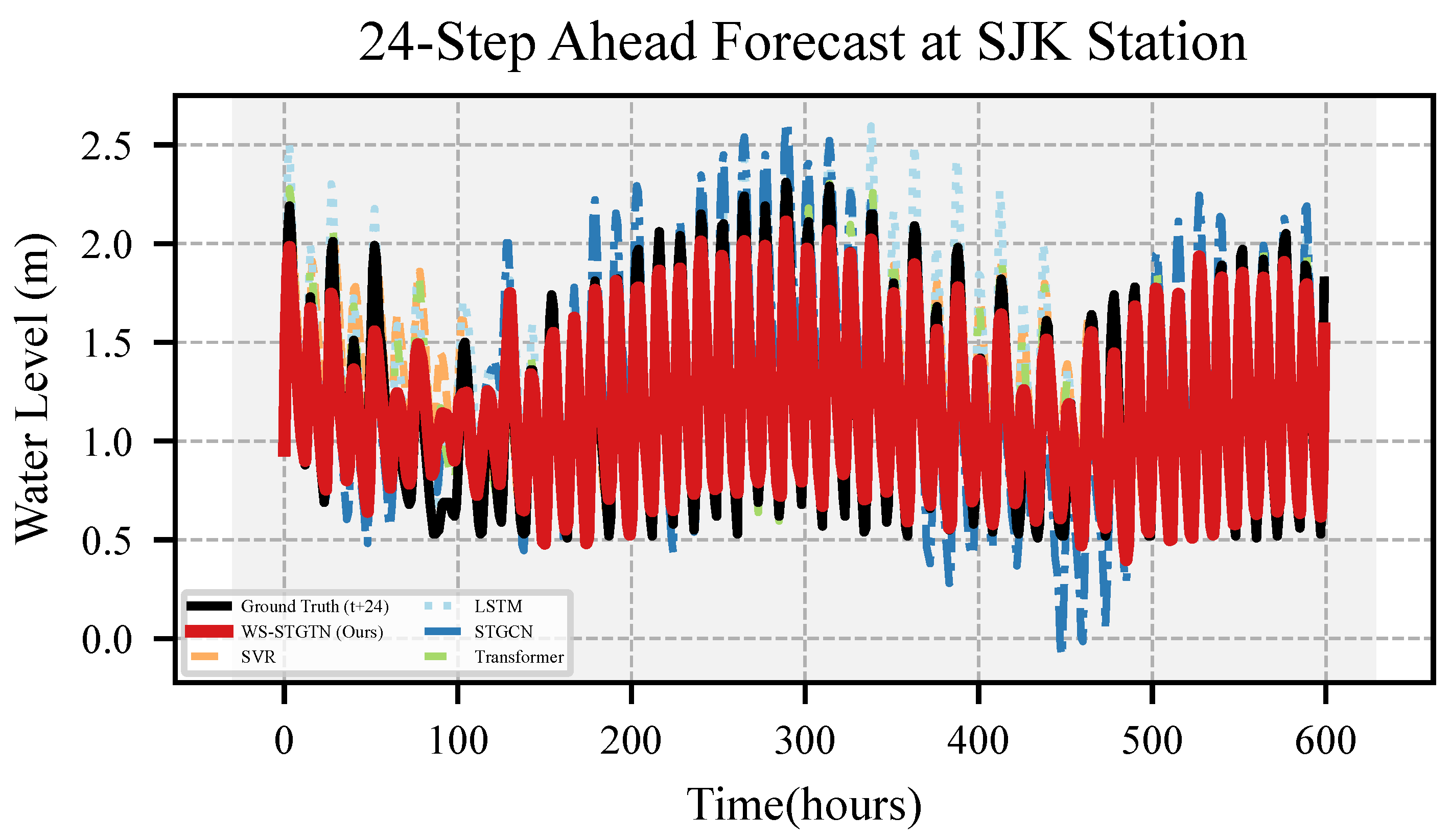

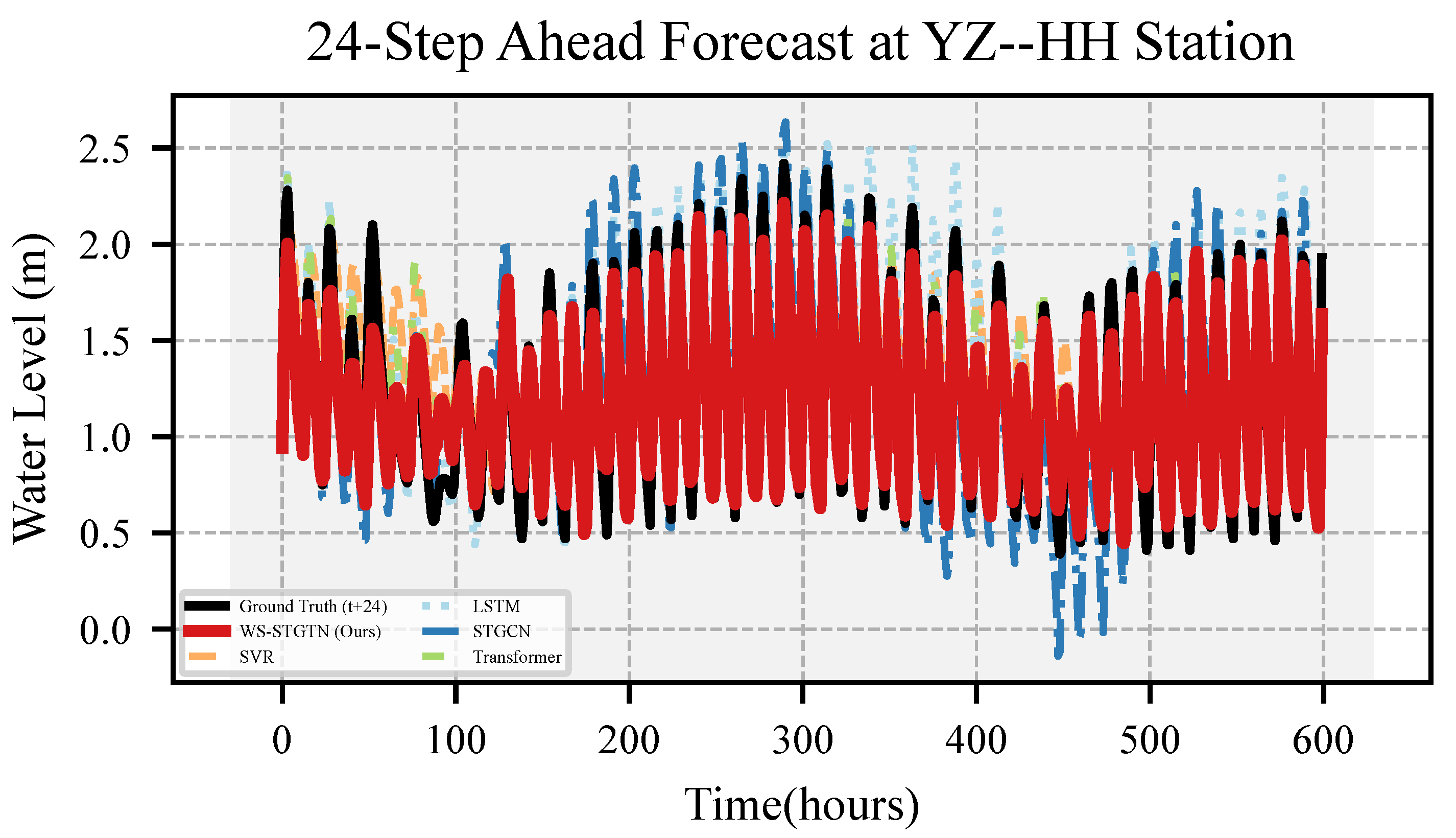

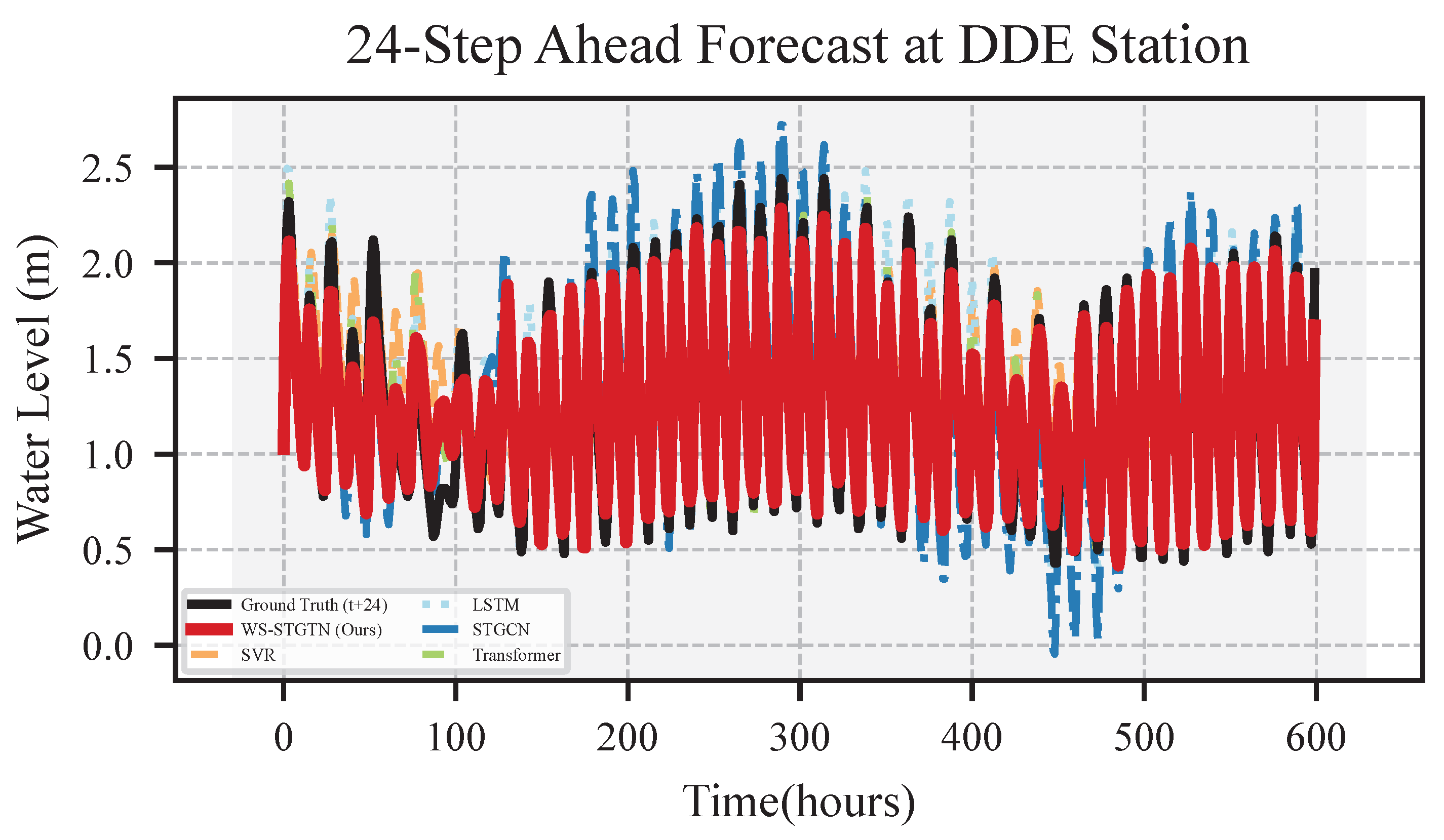

Figures 7–11 present the 24-step-ahead predictions produced by WS-STGTN and the baseline models (SVR, LSTM, STGCN, Transformer), alongside the corresponding ground truth, for all five water-level datasets. Each time step corresponds to 1 h, so the 24-step-ahead predictions span a total of 24 h.

Each figure illustrates that the trend lines of the predictions generated by WS-STGTN closely align with the respective ground truth trends across all datasets. This high degree of similarity is consistently observed, even during periods of significant water level fluctuation.

In contrast, the baseline forecasts show a noticeable lag relative to the ground truth on all datasets, suggesting that error accumulation degrades their accuracy and stability, particularly in long multi-step predictions.

Overall, WS-STGTN demonstrates a more accurate and reliable fitted trend on all five real-world datasets compared to the baselines, effectively capturing both the overall trend and local variations in water level.

5.4. Ablation Study

We conduct ablation studies to investigate the contribution of each component of WS-STGTN: temporal DTW-based similarity in graph construction, weighted MSE, graph attention, and temporal self-attention.

Table 4 shows results on the XS–HH dataset. Each variant reflects a realistic modeling scenario: NoDTW ignores temporal misalignment such as flow lag; NoWMSE omits prioritization of critical hydrological events; NoGAT replaces graph attention with convolution, mimicking static spatial aggregation; and NoTimeAttn substitutes temporal self-attention with LSTM, reflecting sequence-based modeling without global context. These variants isolate the contribution of each component under real-world forecasting conditions.
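To illustrate why the NoDTW variant loses information about flow lag, the sketch below compares textbook dynamic time warping with a lock-step (point-by-point) distance on a toy upstream pulse and the same pulse arriving two steps later downstream. The series and function names are hypothetical, and the DTW variant used by WS-STGTN (cost function, warping window, normalization) is not specified in this section.

```python
def dtw_distance(a, b):
    """Classic dynamic time warping distance with absolute-difference cost.

    O(len(a) * len(b)) time, no warping-window constraint.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # a advances while b repeats
                cost[i][j - 1],      # b advances while a repeats
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


def lockstep_distance(a, b):
    # Point-by-point comparison without warping (lag-unaware reference).
    return sum(abs(x - y) for x, y in zip(a, b))


upstream = [0, 0, 1, 3, 1, 0, 0, 0]
downstream = [0, 0, 0, 0, 1, 3, 1, 0]  # same pulse, two steps later
print(dtw_distance(upstream, downstream), lockstep_distance(upstream, downstream))
```

A similarity derived from the DTW distance treats the two stations as closely related, whereas the lock-step comparison penalizes the two-step delay even though the hydrographs have the same shape.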

5.5. Forecasting Performance Comparison

Table 2 summarizes multi-horizon results (short: 1–8 steps, medium: 9–16 steps, long: 17–24 steps, and overall: 1–24 steps) on five Yangtze River water-level datasets (XS–HH, QJ, SJK, YZ–HH, DDE). Across all datasets and horizons, WS-STGTN consistently outperforms SVR, LSTM, STGCN, and Transformer in both MSE and MAPE.
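The horizon buckets can be scored separately as sketched below. This is an illustrative sketch, not the paper's pipeline: it assumes each forecast window holds 24 hourly values (steps 1–24) and that all points inside a bucket are pooled before the metric is applied. For MSE with equal-size windows, pooling gives the same value as averaging the per-step errors. The helper names are hypothetical.

```python
from statistics import fmean

# Horizon buckets from Table 2 (1-based, inclusive; one step = 1 h).
HORIZONS = {"short": (1, 8), "medium": (9, 16), "long": (17, 24), "overall": (1, 24)}


def mse(y_true, y_pred):
    # Mean squared error over the pooled points.
    return fmean((t - p) ** 2 for t, p in zip(y_true, y_pred))


def horizon_scores(true_windows, pred_windows, metric=mse):
    """Score each horizon bucket separately.

    true_windows, pred_windows: lists of forecast windows, each holding the
    24 hourly values for steps 1..24. Points inside a bucket are pooled.
    """
    scores = {}
    for name, (first, last) in HORIZONS.items():
        flat_true = [v for w in true_windows for v in w[first - 1:last]]
        flat_pred = [v for w in pred_windows for v in w[first - 1:last]]
        scores[name] = metric(flat_true, flat_pred)
    return scores


# Toy case: a model that errs by 1.0 only during the first 8 hours.
truth = [[0.0] * 24]
pred = [[1.0] * 8 + [0.0] * 16]
print(horizon_scores(truth, pred))
```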

On the full horizon, WS-STGTN reduces errors relative to the Transformer by approximately 28% in MSE and 21% in MAPE on average, reflecting robust gains over a strong sequence model with global attention. Dataset-wise, improvements range from modest to large; the biggest benefits appear on SJK and YZ–HH, where WS-STGTN attains markedly lower MSE and MAPE than the Transformer. Compared with LSTM, the average gains are about one-third on both metrics, underscoring the value of explicitly modeling spatiotemporal dependencies and lag-aware inter-station relations beyond sequence-only architectures.

Across horizons, improvements are stable and most pronounced at short and medium ranges: averaged over datasets, WS-STGTN substantially lowers short-term (1–8 steps) MSE relative to the Transformer, while long-range (17–24 steps) forecasts still benefit notably. Relative to the graph-based STGCN, WS-STGTN secures clear additional gains, highlighting the advantages of lag-aware graph construction and dual (graph and temporal) attention over static convolutional aggregation; against classical SVR, the margins are, as expected, very large. In aggregate, WS-STGTN achieves the best performance among the compared models across datasets and horizons, and the combination of DTW-driven, lag-aware graph construction with graph attention and temporal self-attention yields robust, accurate multi-step water-level forecasting.

6. Conclusions

Accurate water level prediction is vital for ensuring navigational safety and optimizing the economic benefits of waterways. However, conventional forecasting models often fall short due to their inability to adequately model the complex and dynamic spatiotemporal relationships within river networks. In this paper, we proposed the Water State-aware Spatiotemporal Graph Transformer Network (WS-STGTN), a novel deep learning framework designed to overcome these limitations.

The core strengths of WS-STGTN lie in its two primary innovations: a dual-weight graph construction that fuses static geographical information with dynamic temporal correlations derived from DTW, and a state-aware weighted loss function that prioritizes forecast accuracy during critical high and low water level events.
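A minimal sketch of a state-aware weighted loss follows. It assumes three water-level states (low, normal, high) obtained with plain one-dimensional k-means, since k-means clustering is named as the state-definition method in the limitations, but the number of states, the per-state weights and the normalization by total weight are hypothetical choices made for illustration, not the paper's settings.

```python
from statistics import fmean


def kmeans_1d(values, k=3, iters=50):
    """Plain Lloyd's k-means on scalar water levels (k >= 2); sorted centers."""
    data = sorted(values)
    # Deterministic init: evenly spaced order statistics from min to max.
    centers = [data[round(i * (len(data) - 1) / (k - 1))] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centers[c]))
            groups[nearest].append(v)
        # Keep an empty cluster's old center instead of dividing by zero.
        new = [fmean(g) if g else centers[i] for i, g in enumerate(groups)]
        if new == centers:
            break
        centers = new
    return sorted(centers)


def assign_states(values, centers):
    # With ascending centers: 0 = low, 1 = normal, 2 = high.
    return [min(range(len(centers)), key=lambda c: abs(v - centers[c])) for v in values]


def state_weighted_mse(y_true, y_pred, states, weights):
    # Squared errors scaled by the weight of the observed state,
    # normalized by the total weight (hypothetical normalization).
    w = [weights[s] for s in states]
    num = sum(wi * (t - p) ** 2 for wi, t, p in zip(w, y_true, y_pred))
    return num / sum(w)


levels = [1.0, 1.1, 0.9, 5.0, 5.2, 4.8, 9.0, 9.1, 8.9]
centers = kmeans_1d(levels)
states = assign_states(levels, centers)
weights = {0: 2.0, 1: 1.0, 2: 2.0}  # hypothetical: low and high count double
print(centers, states)
print(state_weighted_mse([1.0, 5.0, 9.0], [2.0, 5.0, 9.0], [0, 1, 2], weights))
```

With these weights, a 1 m error during a low-water period raises the loss to 0.4, compared with 1/3 for the unweighted MSE on the same three points, which is the kind of prioritization of critical events the loss is meant to provide.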

Through extensive experiments on five real-world Yangtze River datasets (XS–HH, QJ, SJK, YZ–HH, DDE), WS-STGTN consistently outperformed representative baselines, including SVR, LSTM, STGCN, and Transformer. On the full 1–24-step horizon, WS-STGTN achieved an average reduction in MSE of 27.6% and an average improvement in Accuracy (derived from MAPE) of 21.1% relative to Transformer. Compared with LSTM, average improvements were 37% in MSE and 35% in Accuracy. Dataset-specific gains were especially notable for YZ–HH and SJK, where MSE reductions exceeded 40% and Accuracy improved by over 30%. In addition, WS-STGTN achieved the highest coefficient of determination ($R^2$) across all datasets, averaging around 0.95–0.97, which is higher than Transformer (0.93–0.94) and LSTM (0.92–0.93), demonstrating its stronger capability to explain observed water-level variance. These results quantitatively confirm the advantage of combining DTW-driven lag-aware graph construction with graph and temporal attention mechanisms. The results underscore the significant value of explicitly modeling the underlying hydrological connectivity and focusing on state-sensitive errors for robust and reliable water level forecasting.

6.1. Limitations

Despite its promising performance, this study has several limitations. First, although the graph structure is dynamic through DTW-based weighting, it relies on a fixed set of monitoring stations and does not inherently account for sudden topological changes, such as the emergence of new tributaries or man-made alterations to the waterway. In real-world hydrological environments, the graph can be updated periodically or online by recalculating inter-station relationships within a recent time window, enabling WS-STGTN to adapt to evolving flow patterns and connectivity. Future work could explore incremental or event-driven graph updates to ensure real-time adaptability, a capability that traditional graph-based and sequence-only models also lack. Such adaptive updates may introduce additional computational overhead, but this cost can be mitigated through sparse updates or asynchronous recalibration, as partially discussed in the experiments. Compared with previous approaches, WS-STGTN thus balances predictive accuracy and adaptability, offering a systematic improvement over static or purely sequential forecasting methods. Second, the definition of water-level states via k-means clustering is entirely data-driven and unsupervised; incorporating domain-specific hydrological thresholds could provide more operationally relevant state definitions. Lastly, the model’s complexity, while advantageous for capturing spatiotemporal dependencies, may require substantial computational resources for training and inference, potentially limiting deployment in resource-constrained settings.
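The periodic or online graph update described above could look roughly like the following. It is a hypothetical sketch: the fusion form `alpha * static + (1 - alpha) * exp(-DTW / sigma)`, the parameters `alpha` and `sigma`, and the choice to refresh only existing edges are assumptions, since the exact dual-weight formula is not given in this section. The station series are invented.

```python
import math


def dtw(a, b):
    """Textbook O(len(a) * len(b)) DTW distance with absolute-difference cost."""
    inf = float("inf")
    prev = [0.0] + [inf] * len(b)  # row 0 of the cumulative-cost table
    for x in a:
        cur = [inf] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            cur[j] = abs(x - y) + min(prev[j], cur[j - 1], prev[j - 1])
        prev = cur
    return prev[-1]


def update_graph(series, static_w, window, alpha=0.5, sigma=1.0):
    """Recompute fused edge weights from the most recent `window` samples.

    series:   dict station -> water levels (equal length, oldest first).
    static_w: dict (u, v) -> hypothetical static geographic weight in [0, 1].
    Returns   dict (u, v) -> alpha * static + (1 - alpha) * exp(-DTW / sigma).
    """
    recent = {name: values[-window:] for name, values in series.items()}
    fused = {}
    for (u, v), w_geo in static_w.items():
        # Dynamic weight is 1 for perfectly lag-aligned stations and decays
        # toward 0 as their recent hydrographs diverge.
        w_dyn = math.exp(-dtw(recent[u], recent[v]) / sigma)
        fused[(u, v)] = alpha * w_geo + (1 - alpha) * w_dyn
    return fused


series = {
    "A": [5, 5, 0, 0, 1, 3, 1, 0, 0, 0],  # upstream pulse
    "B": [9, 9, 0, 0, 0, 0, 1, 3, 1, 0],  # same pulse, two hours later
    "C": [0, 0] + [10] * 8,               # unrelated station
}
static = {("A", "B"): 0.4, ("A", "C"): 0.6}
print(update_graph(series, static, window=8))
```

Because only the DTW term depends on the recent window, a sparse-update scheme could refresh it for a subset of edges at a time while leaving the static term untouched.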

6.2. Future Work

Future research could extend this work in several promising directions. First, incorporating a wider range of exogenous variables, such as rainfall from weather stations and tidal information, could significantly enhance predictive accuracy. Second, exploring methods for dynamic graph evolution, where nodes and edges can be added or removed over time, would improve the model’s adaptability to long-term changes in the river system. Finally, developing a lightweight version of WS-STGTN or employing model compression techniques could facilitate its practical application in real-time, on-board vessel navigation systems.