A Novel Approach for Vessel Graphics Identification and Augmentation Based on Unsupervised Illumination Estimation Network

Abstract

1. Introduction

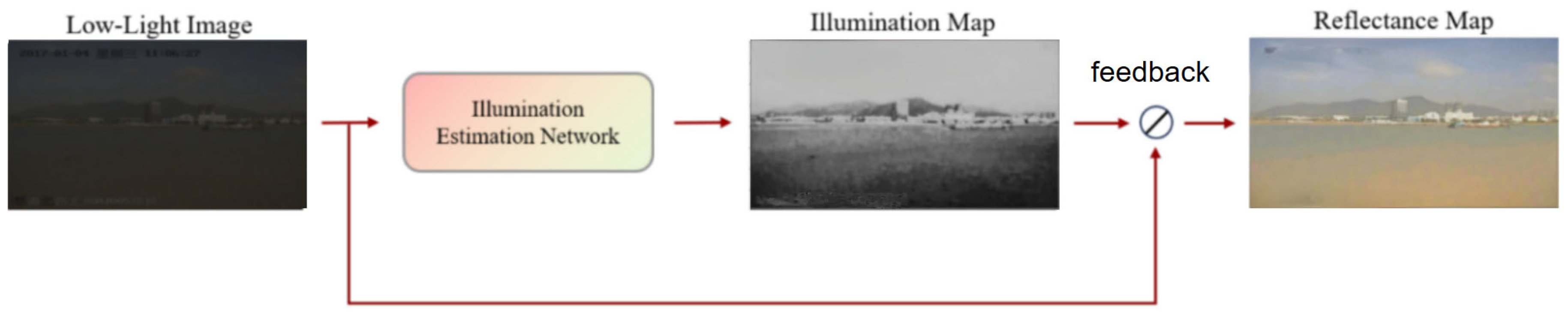

2. Methodology

3. The Image Augmentation Algorithm

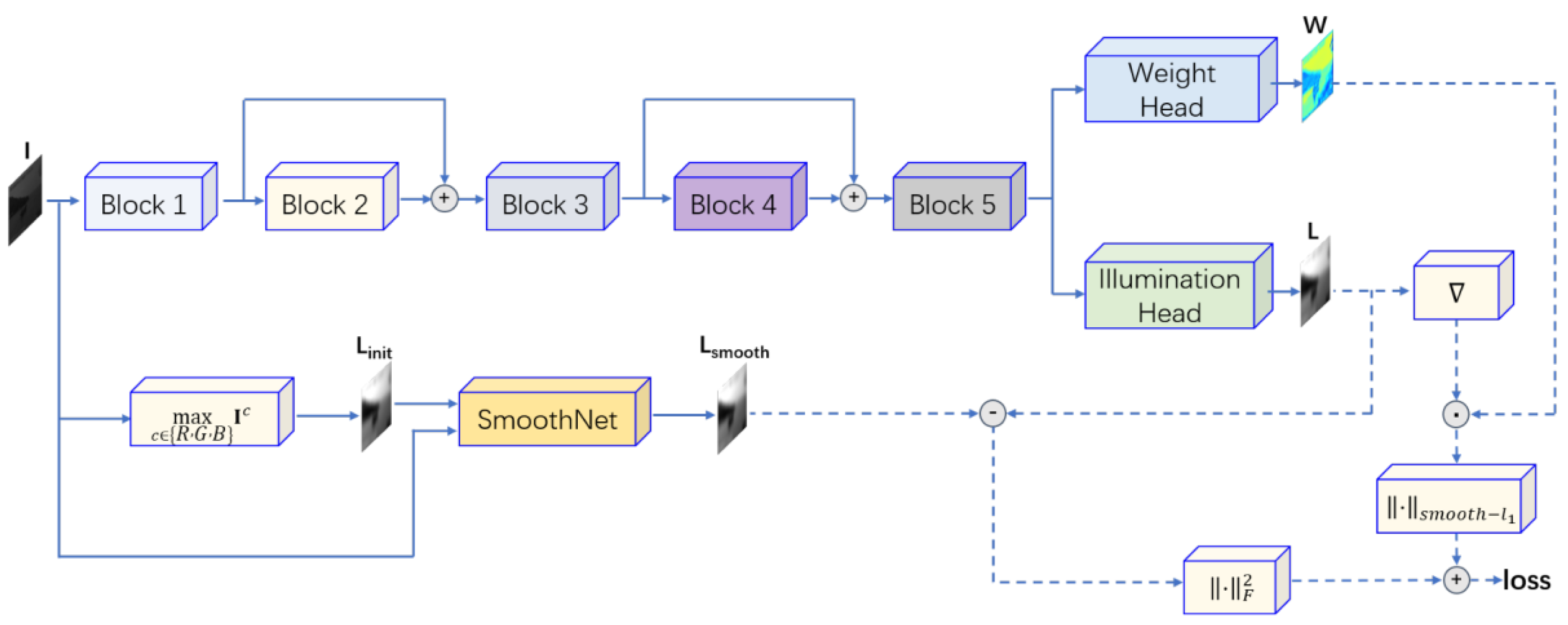

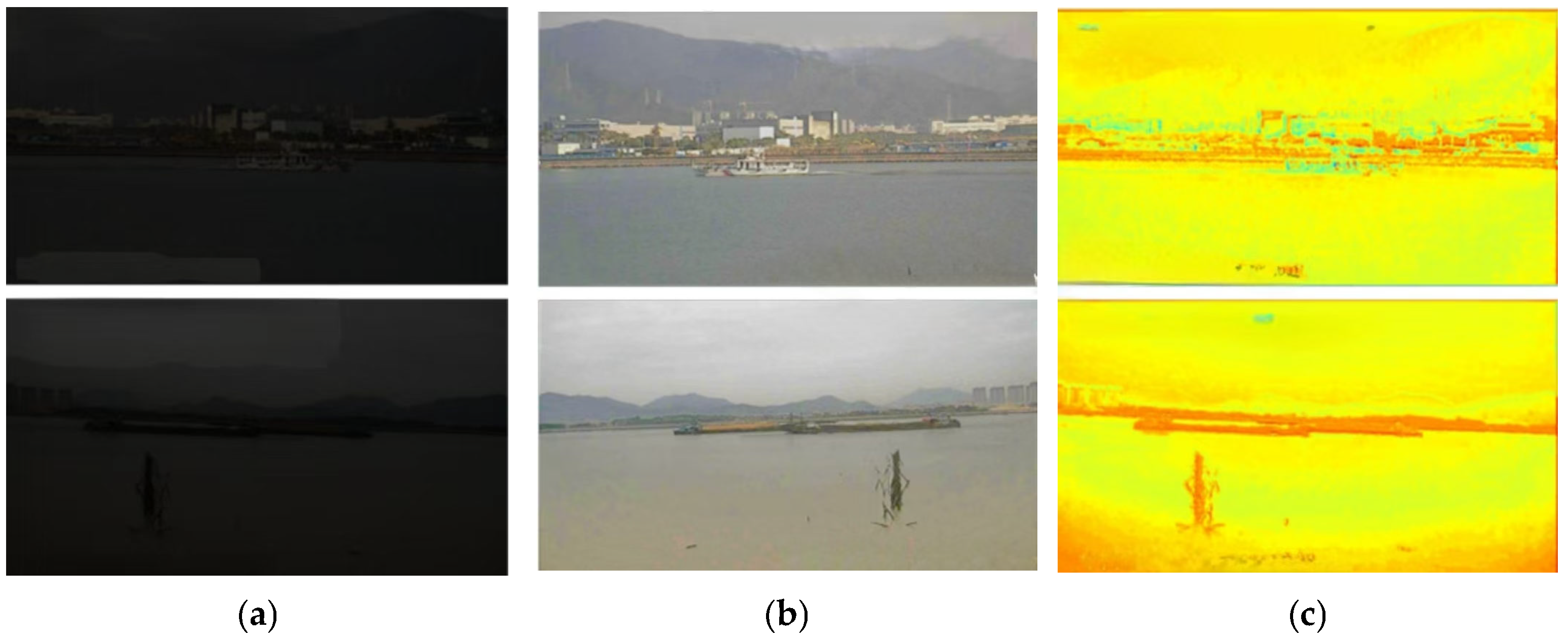

3.1. Illumination Estimation

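Algorithm 1. PyTorch code for SmoothNet implementation.

```python
import torch
import torch.nn.functional as F


class SmoothNet(torch.nn.Module):
    """Guided-filter smoothing of an illumination map L, using the input image as the guide."""

    def __init__(self, size, delta):
        super().__init__()
        # Regularizer: larger values shrink the local gain `a` (stronger smoothing) where the guide is flat.
        self.delta = delta
        # Uniform size x size averaging kernel, shaped (out_channels=1, in_channels=1, size, size).
        kernel = torch.ones(size, size)
        kernel = (1 / size**2) * torch.reshape(kernel, (1, 1, size, size))
        # Registered as a buffer so it follows module.to(device) instead of a hard-coded "cuda".
        self.register_buffer("kernel", kernel.to(torch.float32))

    def forward(self, img, L):
        # img: (N, C, H, W) image; L: (N, 1, H, W) illumination map.
        img = torch.mean(img, dim=1, keepdim=True)  # grayscale guide, (N, 1, H, W)
        # Local means over size x size windows; padding="same" keeps H and W unchanged.
        mean_img = F.conv2d(img, self.kernel, stride=1, padding="same")
        mean_L = F.conv2d(L, self.kernel, stride=1, padding="same")
        mean_IL = F.conv2d(img * L, self.kernel, stride=1, padding="same")
        cov_IL = mean_IL - mean_img * mean_L  # local covariance of guide and L
        mean_img2 = F.conv2d(img * img, self.kernel, stride=1, padding="same")
        var_img = mean_img2 - mean_img * mean_img  # local variance of the guide
        # Per-window linear model L ~ a * img + b.
        a = cov_IL / (var_img + self.delta)
        b = mean_L - a * mean_img
        # Average the coefficients of all windows covering each pixel.
        mean_a = F.conv2d(a, self.kernel, stride=1, padding="same")
        mean_b = F.conv2d(b, self.kernel, stride=1, padding="same")
        s = mean_a * img + mean_b  # smoothed illumination, locally linear in the guide
        return s
```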
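To check the arithmetic of Algorithm 1 without a GPU or PyTorch, the same computation can be restated in NumPy. This is a minimal sketch of our own, not the authors' code: `box_mean` and `guided_smooth` are hypothetical names, one image is processed at a time, and an odd window size is assumed so that symmetric zero padding matches `padding="same"`. It also exercises a basic property of this guided-filter form: if L is exactly a linear function of the grayscale guide, the output reproduces L wherever the windows do not reach the padding.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_mean(x: np.ndarray, size: int) -> np.ndarray:
    """Mean over each size x size window with zero padding (odd size assumed),
    mirroring F.conv2d(x, uniform_kernel, padding="same") in Algorithm 1."""
    assert size % 2 == 1, "this sketch assumes an odd window size"
    r = size // 2
    padded = np.pad(x, r, mode="constant", constant_values=0.0)
    windows = sliding_window_view(padded, (size, size))  # shape (H, W, size, size)
    return windows.sum(axis=(-2, -1)) / size**2


def guided_smooth(img_rgb: np.ndarray, L: np.ndarray, size: int, delta: float) -> np.ndarray:
    """Hypothetical NumPy restatement of SmoothNet.forward for one (H, W, 3) image
    and an (H, W) illumination map L."""
    I = img_rgb.mean(axis=2)  # grayscale guide, like torch.mean(img, dim=1)
    mean_I = box_mean(I, size)
    mean_L = box_mean(L, size)
    cov_IL = box_mean(I * L, size) - mean_I * mean_L
    var_I = box_mean(I * I, size) - mean_I * mean_I
    a = cov_IL / (var_I + delta)
    b = mean_L - a * mean_I
    return box_mean(a, size) * I + box_mean(b, size)


rng = np.random.default_rng(0)
img = rng.random((32, 32, 3))
L = 2.0 * img.mean(axis=2) + 0.1  # exactly linear in the guide
s = guided_smooth(img, L, size=5, delta=1e-10)
interior = slice(4, -4)  # 2 * (size // 2) pixels away from every border
print(np.abs(s[interior, interior] - L[interior, interior]).max())
```

Pixels within `2 * (size // 2)` of the border differ from L because the zero padding biases the local means there, which is also how the PyTorch listing behaves.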

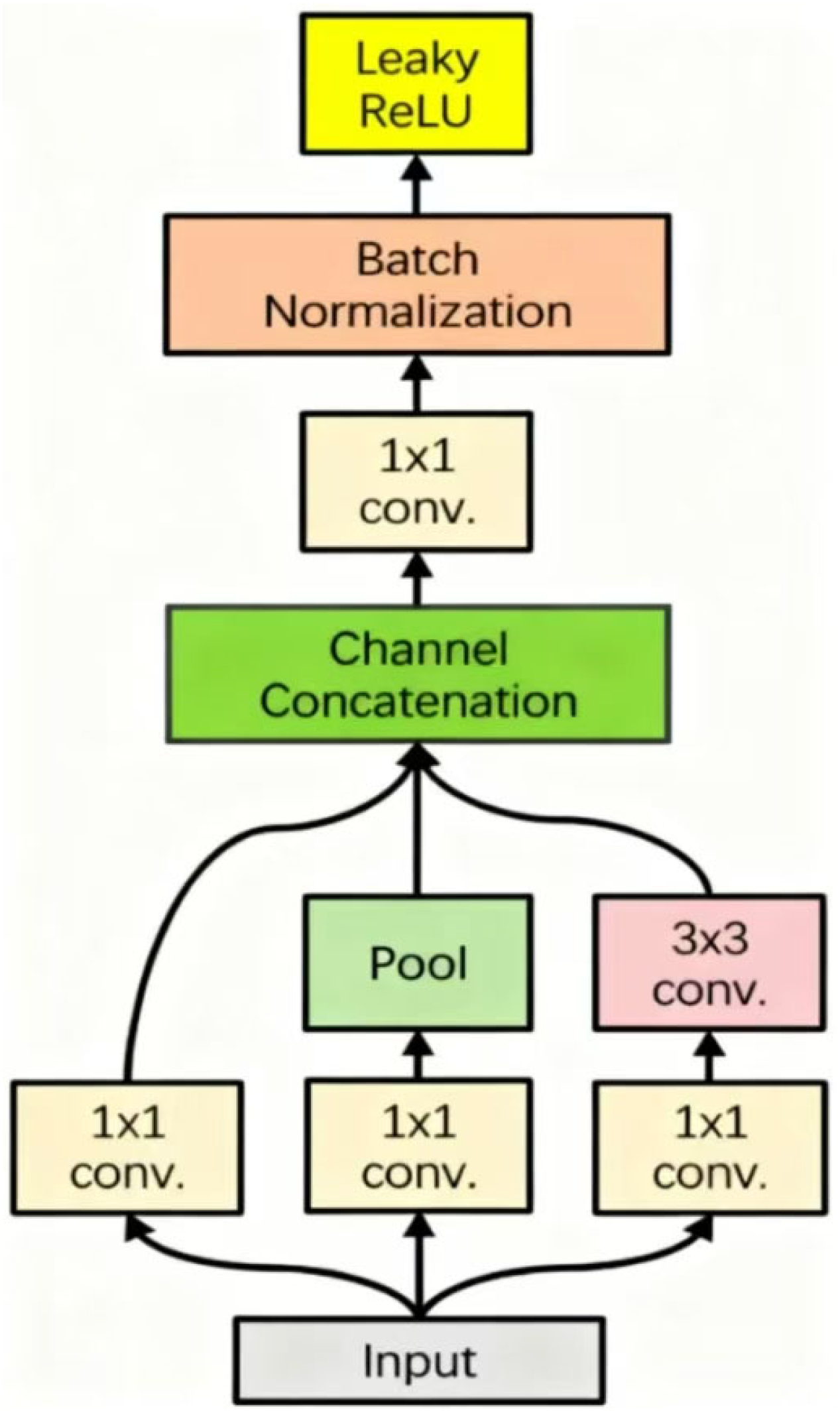

3.2. Introduction to the Architecture of Illumination Estimation Networks

3.3. Learnable Weight Matrix

3.4. Reflectance Estimation

4. Experiment and Result Analysis

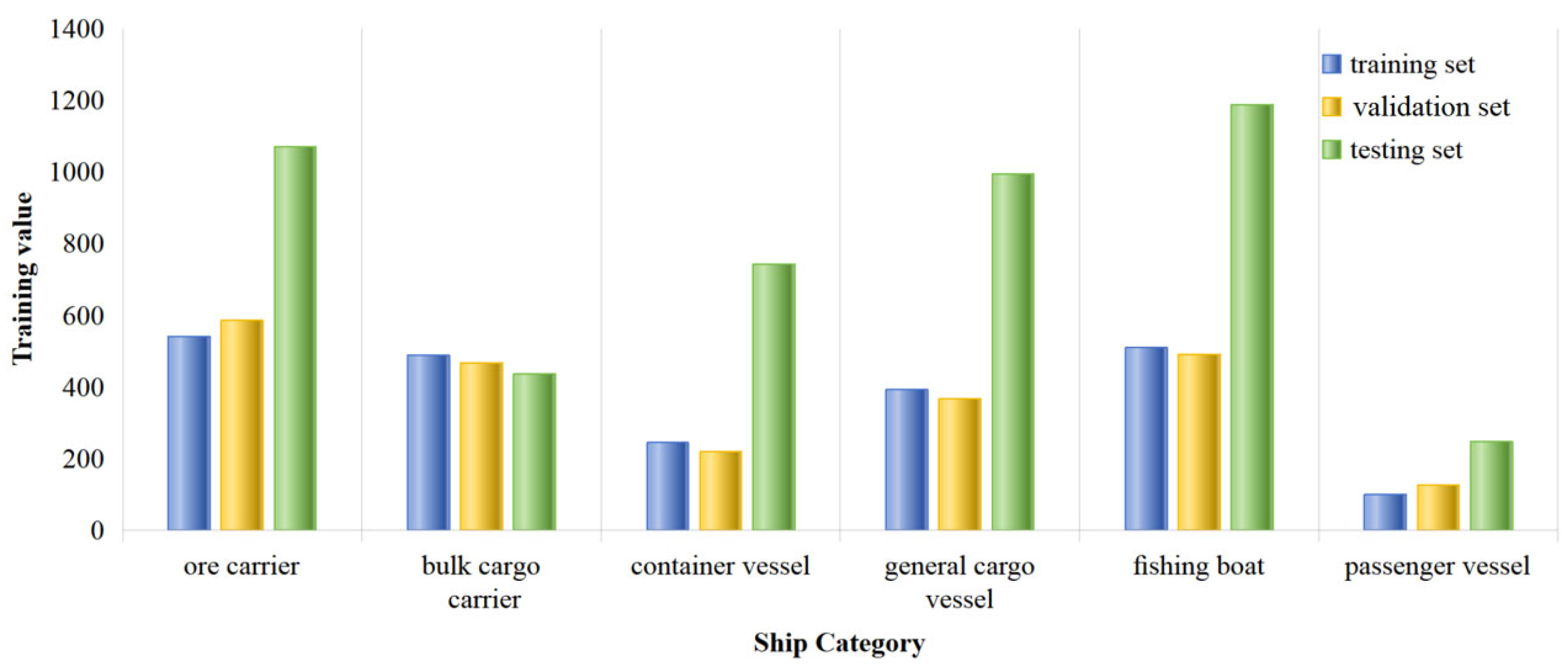

4.1. Dataset

4.2. Training IEN

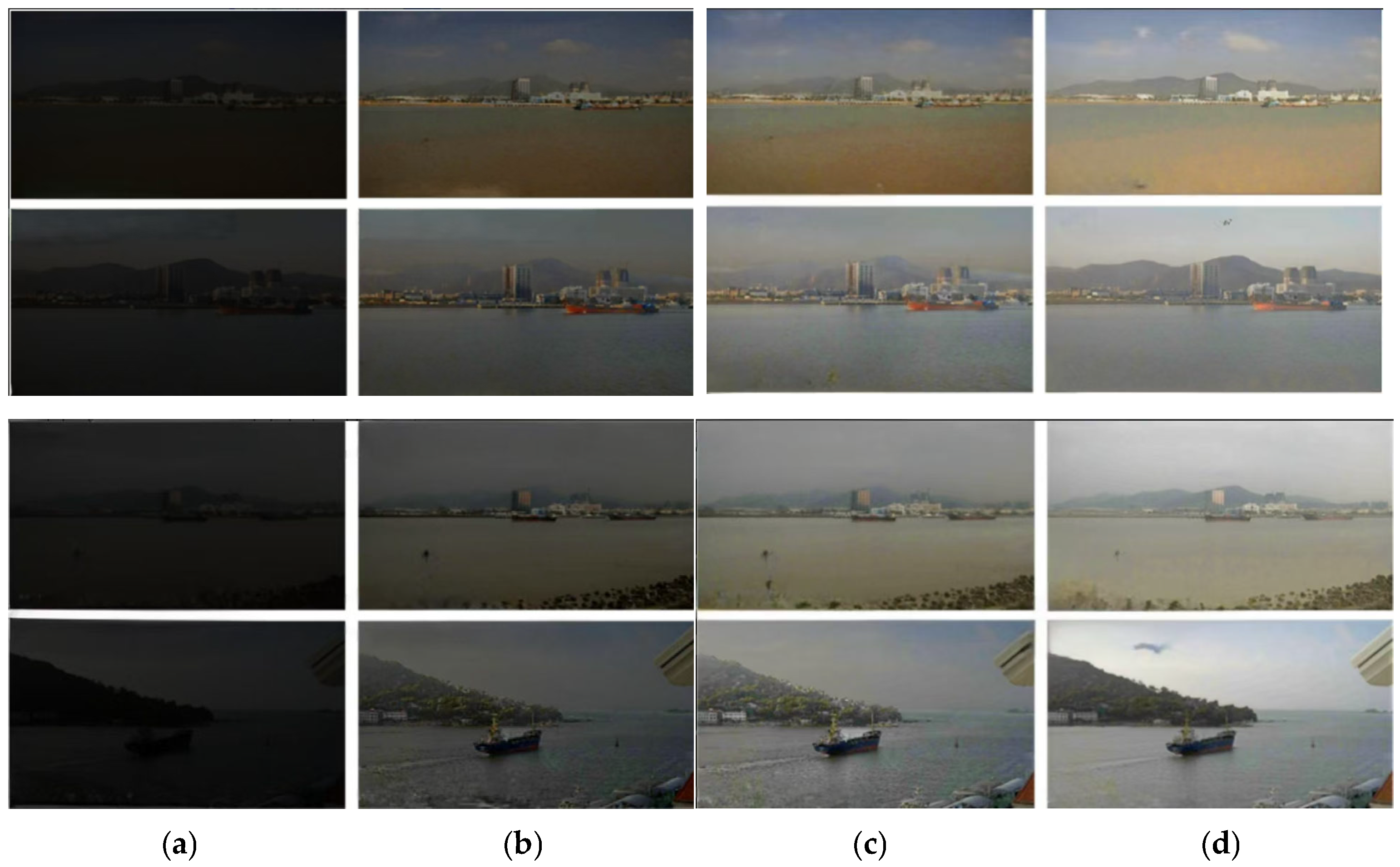

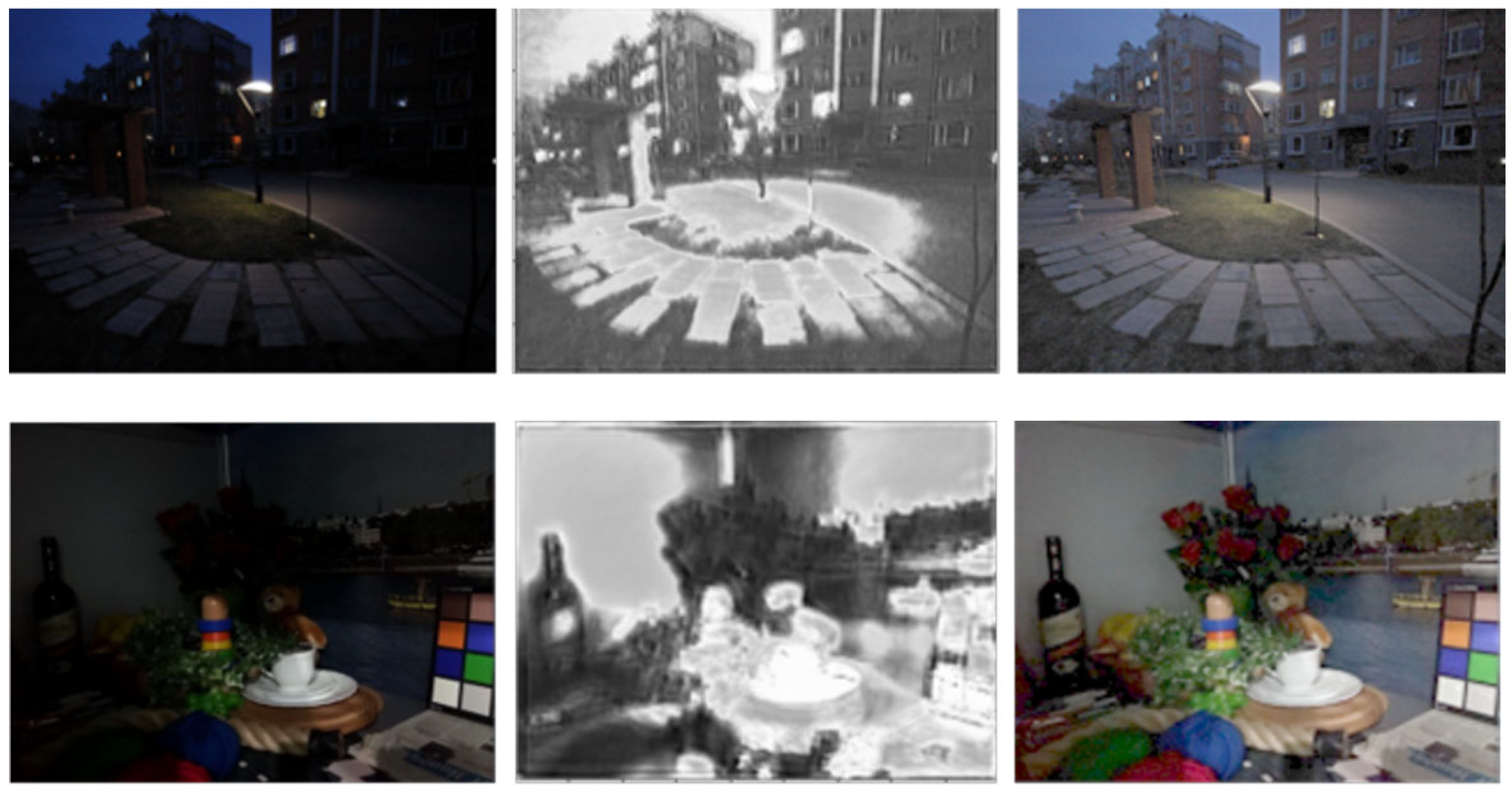

4.3. Comparison with Unsupervised Approaches

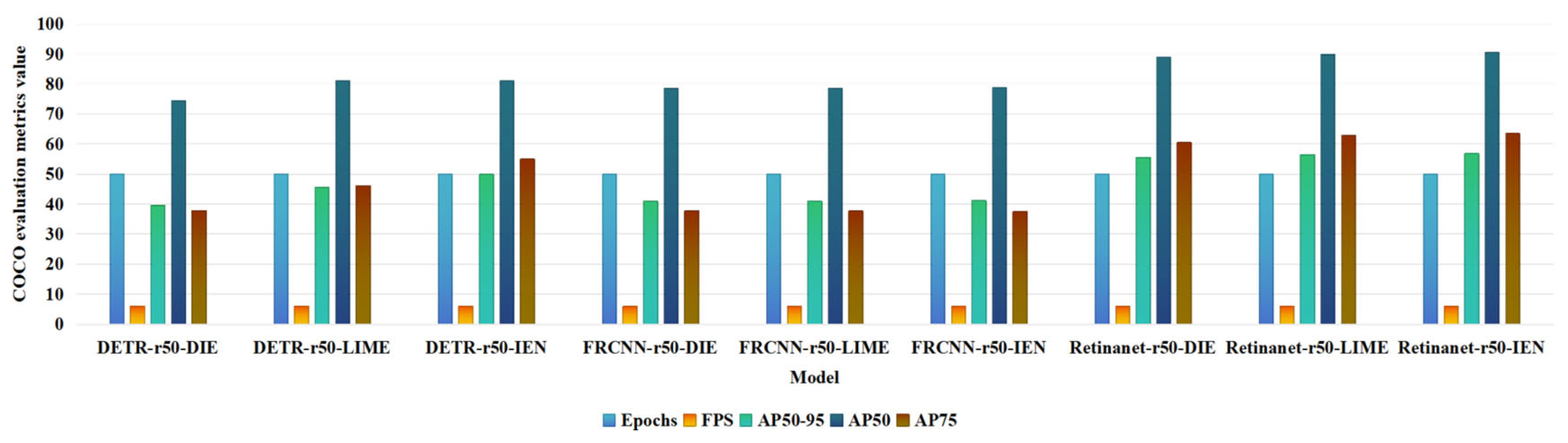

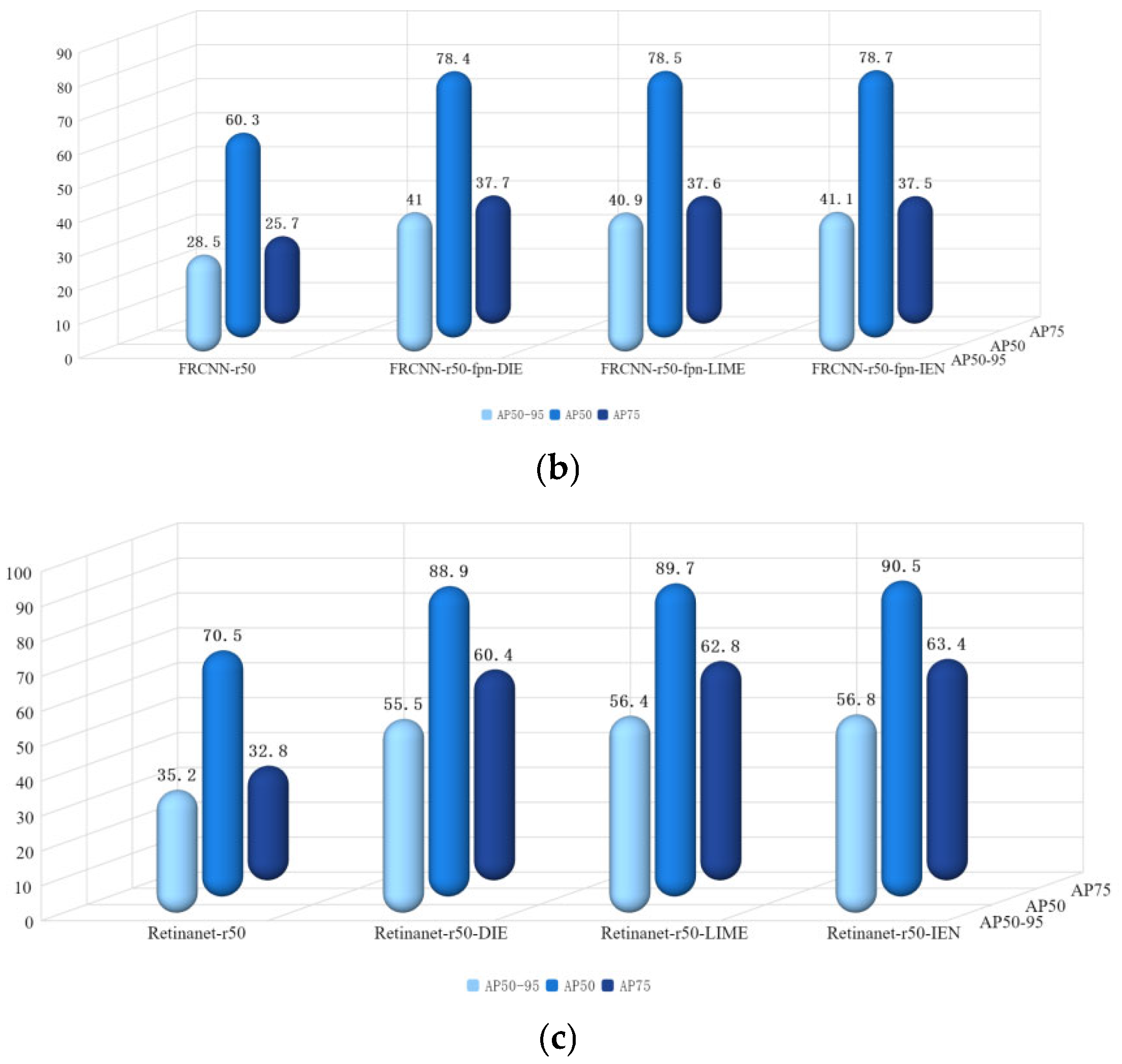

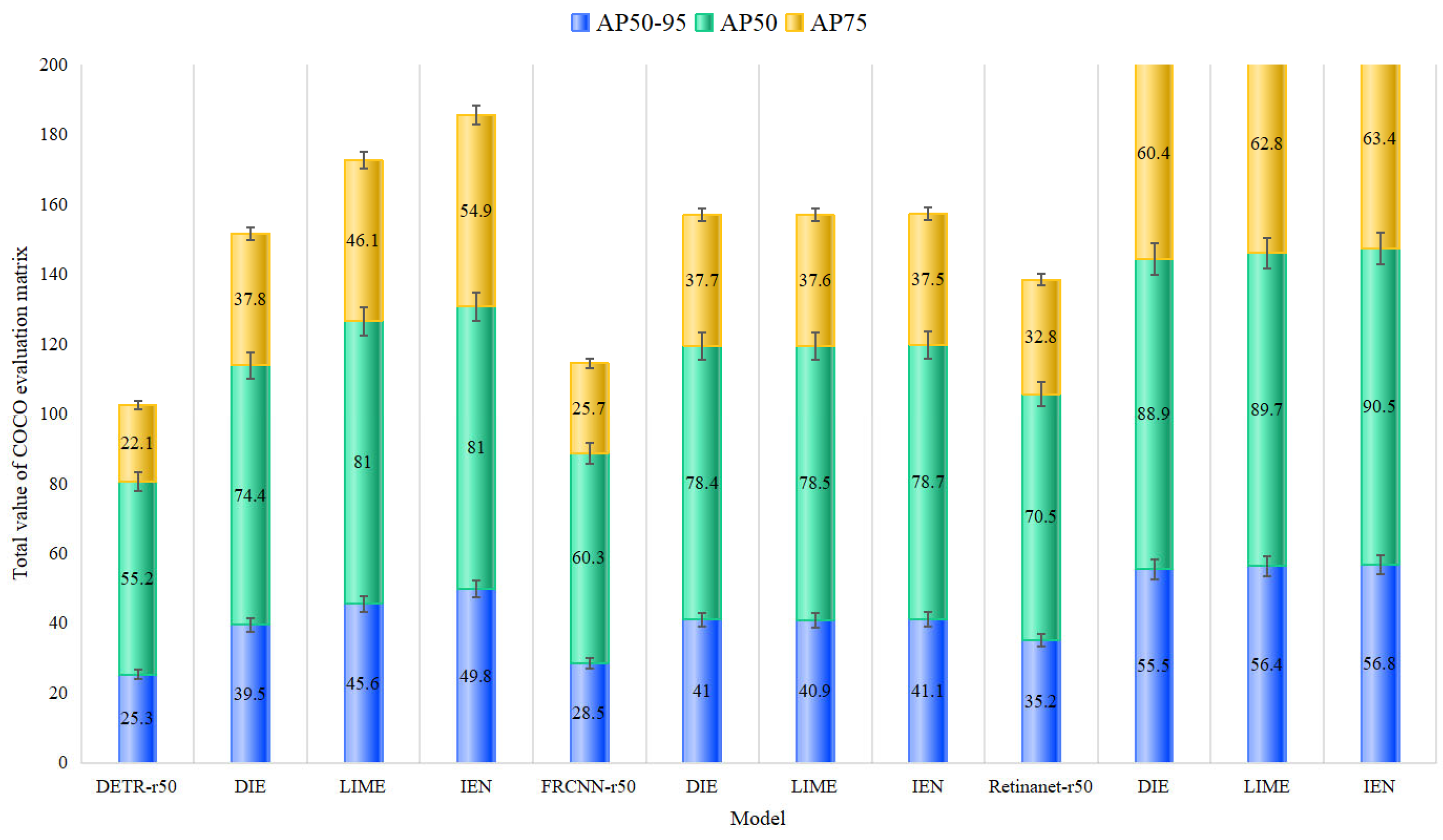

4.4. Vessel Identification on Augmented Images

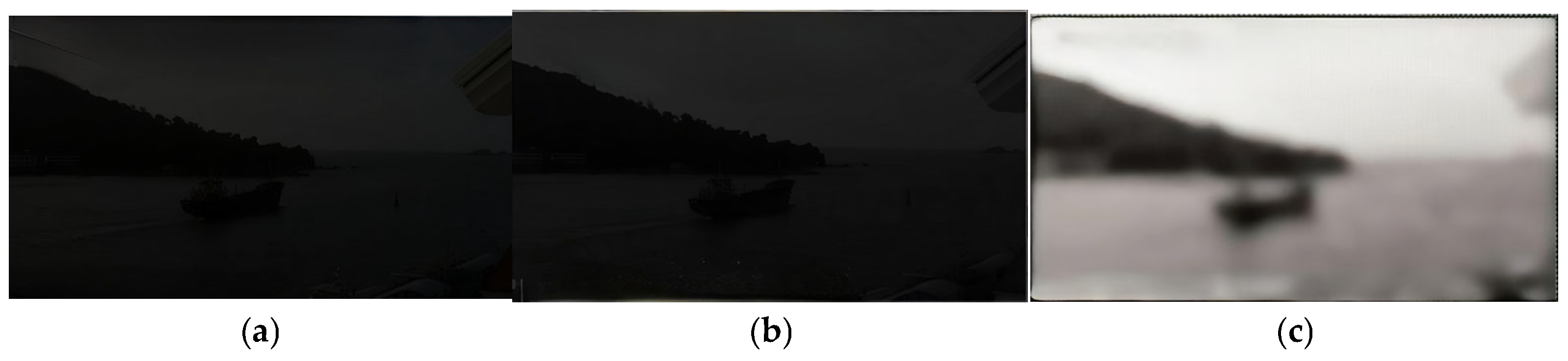

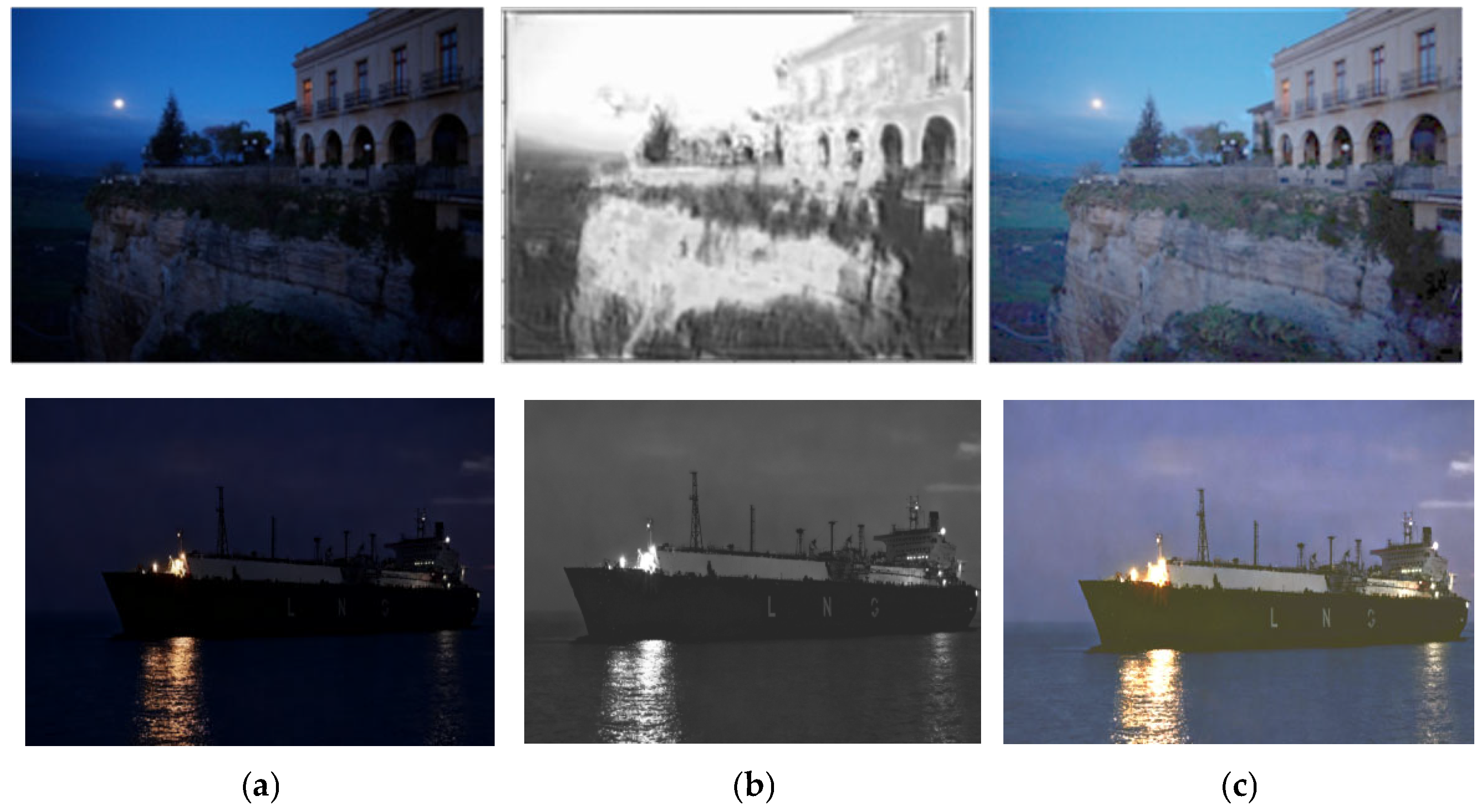

4.5. Generalization to Open-World Low-Light Images

4.6. Verification of Physical Rationality of Enhanced Images

5. Discussions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Category | Training Set | Validation Set | Testing Set |
|---|---|---|---|
| ore carrier | 542 | 586 | 1071 |
| bulk cargo carrier | 489 | 468 | 437 |
| container vessel | 245 | 219 | 744 |
| general cargo vessel | 393 | 368 | 995 |
| fishing boat | 510 | 492 | 1188 |
| passenger vessel | 100 | 126 | 248 |
| total | 2279 | 2259 | 4683 |
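The dataset table can be checked, and its class balance made explicit, with a few lines of arithmetic. The snippet below is only an illustrative calculation on the counts copied from the table; `counts`, `totals` and `train_share` are hypothetical names.

```python
# Per-class image counts copied from the dataset table: (training, validation, testing).
counts = {
    "ore carrier": (542, 586, 1071),
    "bulk cargo carrier": (489, 468, 437),
    "container vessel": (245, 219, 744),
    "general cargo vessel": (393, 368, 995),
    "fishing boat": (510, 492, 1188),
    "passenger vessel": (100, 126, 248),
}

# Column sums; these should match the "total" row.
totals = tuple(sum(split) for split in zip(*counts.values()))

# Fraction of the training set contributed by each class.
train_share = {name: n[0] / totals[0] for name, n in counts.items()}

print(totals)
for name, share in train_share.items():
    print(f"{name}: {share:.1%}")
```

The column sums agree with the "total" row, and the training split is imbalanced: passenger vessels make up about 4.4% of the training images, against about 23.8% for ore carriers.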

| Approach | CPU/GPU | Resolution | Mean | Standard Deviation |
|---|---|---|---|---|
| DIE | CPU | 1920 × 1080 | 78.54 | 1.23 |
| LIME | CPU | 1920 × 1080 | 40.21 | 0.87 |
| IEN | CPU | 1920 × 1080 | 10.68 | 0.34 |
| IEN | GPU | 1920 × 1080 | 0.26 | 0.02 |
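The table does not state the quantity or unit of the Mean column. Assuming it is the mean processing time per 1920 × 1080 image, the relative speed of IEN follows from ratios of the means, which are unit-free. The snippet is an illustrative calculation, not part of the paper; `mean_time` and `speedup` are hypothetical names.

```python
# Mean values copied from the runtime table, keyed by (approach, device).
# Assumption: they are per-image processing times in a common unit.
mean_time = {
    ("DIE", "CPU"): 78.54,
    ("LIME", "CPU"): 40.21,
    ("IEN", "CPU"): 10.68,
    ("IEN", "GPU"): 0.26,
}


def speedup(baseline, candidate):
    """How many times faster `candidate` is than `baseline` (ratio of mean times)."""
    return mean_time[baseline] / mean_time[candidate]


ratios = {
    "IEN (CPU) vs DIE (CPU)": speedup(("DIE", "CPU"), ("IEN", "CPU")),
    "IEN (CPU) vs LIME (CPU)": speedup(("LIME", "CPU"), ("IEN", "CPU")),
    "IEN (GPU) vs IEN (CPU)": speedup(("IEN", "CPU"), ("IEN", "GPU")),
}

for label, r in ratios.items():
    print(f"{label}: {r:.1f}x")
```

Under that assumption, IEN on the CPU is about 7.4 times faster than DIE and 3.8 times faster than LIME, and moving IEN to the GPU gives a further factor of about 41.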

| Model | Epochs | FPS | Trainable Params | AP50-95 | AP50 | AP75 |
|---|---|---|---|---|---|---|
| DETR-r50 | 50 | 6 | 41280780 | 25.3 | 55.2 | 22.1 |
| DETR-r50-DIE | 50 | 6 | 41280780 | 39.5 | 74.4 | 37.8 |
| DETR-r50-LIME | 50 | 6 | 41280780 | 45.6 | 81.0 | 46.1 |
| DETR-r50-IEN | 50 | 6 | 41280780 | 49.8 | 81.0 | 54.9 |
| FRCNN-r50 | 50 | 6 | 41102386 | 28.5 | 60.3 | 25.7 |
| FRCNN-r50-fpn-DIE | 50 | 6 | 41102386 | 41.0 | 78.4 | 37.7 |
| FRCNN-r50-fpn-LIME | 50 | 6 | 41102386 | 40.9 | 78.5 | 37.6 |
| FRCNN-r50-fpn-IEN | 50 | 6 | 41102386 | 41.1 | 78.7 | 37.5 |
| Retinanet-r50 | 50 | 6 | 32050019 | 35.2 | 70.5 | 32.8 |
| Retinanet-r50-fpn-DIE | 50 | 6 | 32050019 | 55.5 | 88.9 | 60.4 |
| Retinanet-r50-fpn-LIME | 50 | 6 | 32050019 | 56.4 | 89.7 | 62.8 |
| Retinanet-r50-fpn-IEN | 50 | 6 | 32050019 | 56.8 | 90.5 | 63.4 |
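The effect of each enhancement method is easier to compare as the change in AP50-95 relative to the unenhanced baseline of the same detector. This sketch treats the unsuffixed row of each detector as its baseline; note that the Faster R-CNN and RetinaNet baseline rows lack the `-fpn` suffix used by their enhanced rows, although the table lists identical parameter counts. The names `ap50_95` and `gains` are hypothetical.

```python
# AP50-95 copied from the detection table: (baseline, DIE, LIME, IEN) for each detector.
ap50_95 = {
    "DETR-r50": (25.3, 39.5, 45.6, 49.8),
    "FRCNN-r50": (28.5, 41.0, 40.9, 41.1),
    "Retinanet-r50": (35.2, 55.5, 56.4, 56.8),
}
methods = ("DIE", "LIME", "IEN")

gains = {}
for detector, (base, *enhanced) in ap50_95.items():
    # Absolute improvement in AP50-95 points over the detector's own baseline row.
    gains[detector] = {m: round(v - base, 1) for m, v in zip(methods, enhanced)}
    print(detector, gains[detector])
```

On these numbers, IEN gives the largest AP50-95 gain for all three detectors, but its margin over LIME is 4.2 points for DETR and only 0.2 and 0.4 points for Faster R-CNN and RetinaNet.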

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Luo, J.; Liu, Z.; Jiao, C.; Jiang, M. A Novel Approach for Vessel Graphics Identification and Augmentation Based on Unsupervised Illumination Estimation Network. J. Mar. Sci. Eng. 2025, 13, 2167. https://doi.org/10.3390/jmse13112167

Luo J, Liu Z, Jiao C, Jiang M. A Novel Approach for Vessel Graphics Identification and Augmentation Based on Unsupervised Illumination Estimation Network. Journal of Marine Science and Engineering. 2025; 13(11):2167. https://doi.org/10.3390/jmse13112167

Chicago/Turabian Style: Luo, Jianan, Zhichen Liu, Chenchen Jiao, and Mingyuan Jiang. 2025. "A Novel Approach for Vessel Graphics Identification and Augmentation Based on Unsupervised Illumination Estimation Network" Journal of Marine Science and Engineering 13, no. 11: 2167. https://doi.org/10.3390/jmse13112167

APA Style: Luo, J., Liu, Z., Jiao, C., & Jiang, M. (2025). A Novel Approach for Vessel Graphics Identification and Augmentation Based on Unsupervised Illumination Estimation Network. Journal of Marine Science and Engineering, 13(11), 2167. https://doi.org/10.3390/jmse13112167