1. Introduction

Autonomous Underwater Vehicles (AUVs) are intelligent, unmanned ocean observation platforms. They are known for their compact size, high maneuverability, and ability to operate in deep-sea environments. Equipped with a variety of sensors, AUVs can carry out thorough surveys and research in underwater environments, which allows them to perform submersible exploration largely free of temporal and spatial constraints.

In marine science, AUVs collect oceanic environmental data, survey seafloor geology and topography, and prospect for resources like oil. In marine engineering, AUVs evaluate dam structures, help maintain underwater foundations, aid divers with their tasks, and perform underwater target observation, search, and rescue missions. In military applications, AUVs detect targets, collect intelligence, perform surveillance and reconnaissance, and engage in anti-submarine warfare and related activities.

Differential equations are typically used to describe the motion model of an underwater vehicle. For a particular type of underwater vehicle,

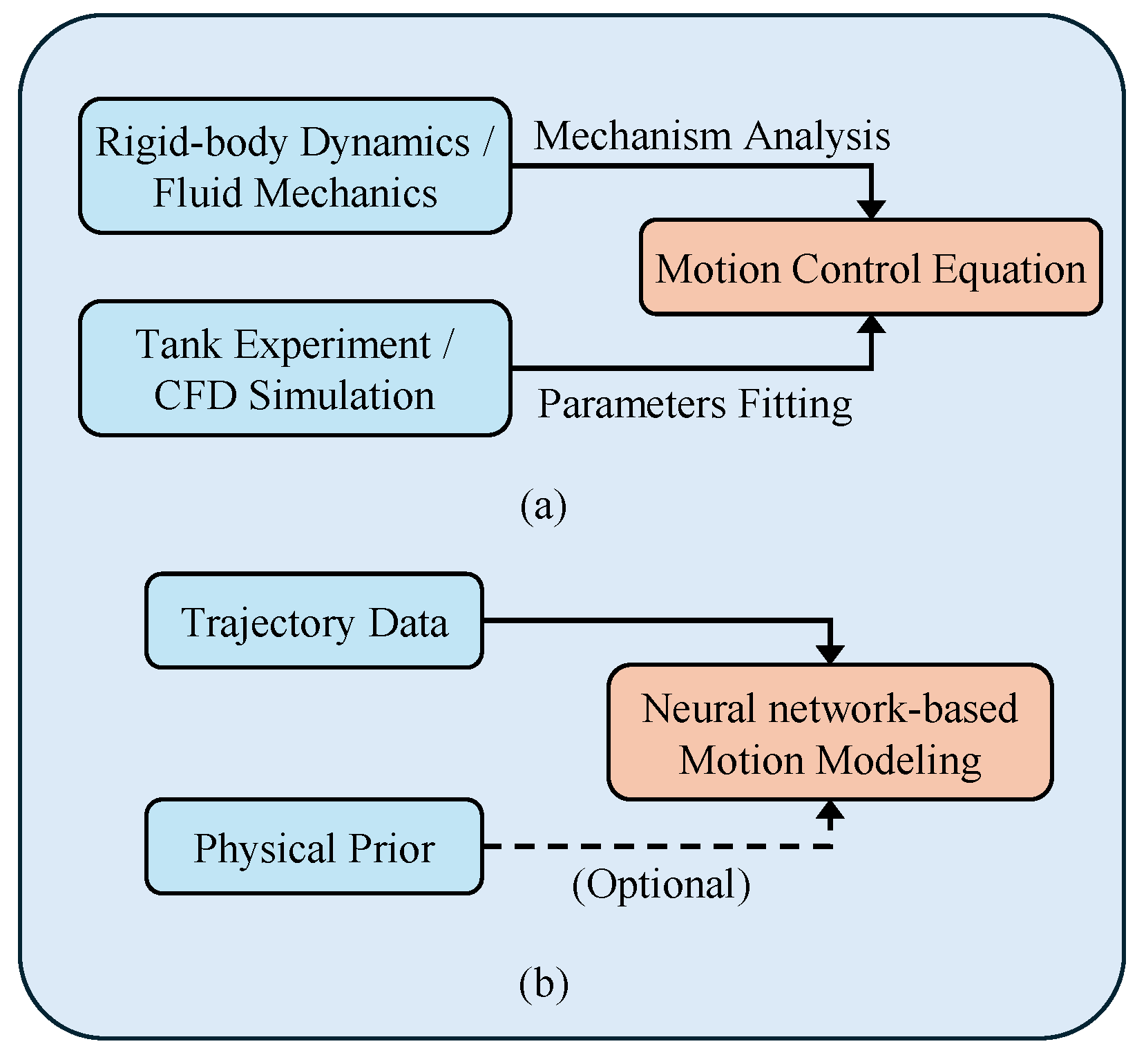

Figure 1 illustrates how certain parameters in the motion equations, like the inertial matrix and the Coriolis–centripetal moment matrix, among others, can be accurately obtained through experimental measurements or theoretical calculations once the interior structure and exterior design are established. Nevertheless, another set of parameters, such as hydrodynamic damping coefficients, require acquisition through computational fluid dynamics (CFD) simulations or water tank experiments, despite the fact that the resulting values frequently display a certain degree of discrepancy from the actual ones [

1].

Considerable research has focused on the challenge of estimating hydrodynamic parameters accurately. Among the various approaches, system identification has emerged as a common way to obtain high-precision hydrodynamic parameters, and it enables cost-effective online or offline parameter identification. The basic approach is to design parameter identification experiments and then identify and optimize the parameters using techniques such as the least squares method [2], Kalman filtering [3], Gaussian processes [4], or neural networks [5].
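As a concrete illustration of the least-squares route, the sketch below identifies surge-axis parameters from a synthetic trajectory. It assumes a hypothetical one-degree-of-freedom model $m\dot{u} = -d_1 u - d_2 u|u| + \tau$ with made-up parameter values; it is neither the REMUS 100 model nor the specific procedure of [2]. The key step is rewriting the model so that it is linear in the unknown parameters.

```python
import numpy as np

# Hypothetical 1-DOF surge model (illustrative values, not REMUS 100 data):
#   m * du/dt = -d1 * u - d2 * u * |u| + tau
M_TRUE, D1_TRUE, D2_TRUE = 30.0, 8.0, 12.0

def simulate(tau, dt=0.01, u0=0.0):
    """Forward-Euler simulation of the surge speed under the thrust sequence tau."""
    u = np.empty(len(tau) + 1)
    u[0] = u0
    for k, f in enumerate(tau):
        du = (-D1_TRUE * u[k] - D2_TRUE * u[k] * abs(u[k]) + f) / M_TRUE
        u[k + 1] = u[k] + dt * du
    return u

def identify(u, tau, dt=0.01):
    """Least-squares estimate of (m, d1, d2) from samples of u and tau.

    Rearranging the model as tau = m * du/dt + d1 * u + d2 * u|u| makes it
    linear in the unknown parameters, so ordinary least squares applies.
    """
    du = np.diff(u) / dt                 # finite-difference acceleration
    uk = u[:-1]                          # speed at the start of each step
    A = np.column_stack([du, uk, uk * np.abs(uk)])
    params, *_ = np.linalg.lstsq(A, tau, rcond=None)
    return params

rng = np.random.default_rng(0)
tau = rng.uniform(0.0, 60.0, size=2000)  # random, persistently exciting thrust input
u = simulate(tau)
m_hat, d1_hat, d2_hat = identify(u, tau)
```

With noise-free data generated by the same model, the estimates recover the true values; with real data, measurement noise and unmodeled dynamics would bias them, which is exactly the kind of limitation discussed next.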

System identification can attain the desired accuracy by minimizing the discrepancy between theoretical models and actual data [6]. Nevertheless, these methods have several limitations. First, their accuracy depends on the quality of the underlying theoretical model: if the model is flawed, an accurate motion model may remain out of reach even when the identification method itself is highly precise. Second, tailored parameter identification experiments must be designed to fully excite the dynamic characteristics of underwater vehicles, which requires a considerable amount of high-quality trajectory data. Finally, when many parameters must be identified simultaneously, the algorithm may struggle to converge. A common remedy is to decouple the motion of the underwater vehicle and identify the hydrodynamic parameters channel by channel; however, this can yield inaccurate estimates of strongly coupled parameters.

Moreover, the theoretical model itself may be inaccurate, because there is no universally accepted method for modeling the hydrodynamic damping terms. Reference [7] summarizes numerous methods that make different assumptions about the vehicle and fluid characteristics, and the resulting models differ in their applicability and computational complexity across scenarios. Furthermore, certain factors in the vehicle dynamics, including manufacturing errors, assembly discrepancies, external disturbances, and changes in physical characteristics over time, are difficult to model explicitly. Consequently, obtaining accurate long-term predictions of underwater vehicle motion from differential equations is exceedingly difficult. In addition, a motion model established through extensive experimentation applies only to a specific type of vehicle and is difficult to generalize to other vehicles.

Recently, deep learning has developed rapidly and has been applied extensively in various fields, including visual recognition [8], natural language understanding [9], and robot manipulation and control [10]. In many of these fields, deep learning has substantially outperformed traditional methods. As universal function approximators, deep neural networks can in principle approximate any continuous function on a compact domain to arbitrary accuracy. Supported by carefully designed network architectures and advanced training strategies, they can also automatically extract latent features from large amounts of data.

Modeling underwater vehicle dynamics through theoretical analysis is challenging because theoretical models are inherently incomplete and certain parameters are difficult to obtain accurately. In contrast, deep learning methods can exploit the considerable fitting capacity of neural networks to learn the dynamics of underwater vehicles directly from trajectory data, rather than merely fitting parameters within a fixed theoretical model.

It is important to note that deep neural networks are black-box models whose internal mechanisms lack interpretability. Although they usually have enough capacity to fit the training data well, in some cases they fail to capture the true underlying patterns and instead converge to local optima that are specific to the training data. When confronted with scenarios outside the training data, such models can suffer significant performance degradation, a failure to generalize that is commonly associated with over-fitting.

To improve the generalization of deep neural networks in unfamiliar contexts and to guide them toward appropriate data patterns, task-relevant prior information, i.e., biases, must be incorporated into the learning process. Approaches to embedding such bias information into neural networks fall into three categories:

a. Input bias: During data pre-processing, domain knowledge can be used to normalize data of different scales to a common scale. If the data distribution is unfavorable, domain knowledge can also be applied to adjust it, which facilitates more effective modeling of the region of interest [11].

b. Inductive bias: Designing specialized neural network architectures for specific tasks implicitly embeds prior information into the learning process. For example, convolutional neural networks exploit the translational symmetry and distributed pattern representations present in natural images; by relying on local connectivity and parameter sharing, they have revolutionized the field of computer vision [12,13,14,15].

c. Learning bias: Task-relevant prior information can also be added to the loss function as penalty terms. This is similar to multi-task learning: the learning algorithm not only fits the training data but also encourages the network predictions to satisfy certain constraints (e.g., conservation of mass and momentum, monotonicity). Typical methods include deep Galerkin methods [16] and physics-informed neural networks [17,18].
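The learning-bias strategy can be sketched as a composite loss: a data-fitting term plus a weighted penalty that is nonzero only when the prediction violates a prior constraint. The constraint used here (the energy of an unforced, dissipative system must not increase), the function name `composite_loss`, and the weight `lam` are illustrative assumptions; deep Galerkin methods and PINNs typically penalize differential-equation residuals instead.

```python
import numpy as np

def composite_loss(pred, target, energy, lam=0.1):
    """Data misfit plus a soft physics penalty (learning bias).

    pred, target: arrays of shape (T, d) with predicted and observed states.
    energy: array of shape (T,) with the energy of the predicted states.
    The penalty punishes any step-to-step energy increase, which an unforced
    dissipative system should never exhibit.
    """
    data_term = np.mean((pred - target) ** 2)
    energy_rise = np.maximum(np.diff(energy), 0.0)  # only increases are penalized
    physics_term = np.mean(energy_rise ** 2)
    return data_term + lam * physics_term

# Identical state trajectories, but the second energy profile rises over time.
traj = np.zeros((4, 2))
decaying = np.array([1.0, 0.8, 0.6, 0.5])
rising = np.array([1.0, 1.2, 1.1, 1.5])
loss_ok = composite_loss(traj, traj, decaying)   # 0.0: no constraint violation
loss_bad = composite_loss(traj, traj, rising)    # positive: energy increased twice
```

Because the penalty is soft, the constraint is encouraged rather than guaranteed; the weight `lam` trades data fit against physical consistency.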

In addition, traditional neural network methods usually handle only discrete data, whereas the sampling intervals of real motion data may be irregular. Recovering the continuous dynamics of underwater vehicles from such observations therefore remains another critical issue. In this study, we adopt the neural ordinary differential equation (neural ODE) [19] as the fundamental framework for modeling underwater vehicle dynamics. This framework combines ordinary differential equations with neural networks; it requires only the initial state of the system and can learn continuous dynamics from non-uniformly sampled observations. We then express the motion model of underwater vehicles in Hamiltonian form and use Hamiltonian neural networks (HNNs) to model the conservative and dissipative components of the system, respectively [20,21]. By handling rotational motion with the exponential map, we ensure that the resulting motion model satisfies the dynamic constraints of underwater vehicles. Finally, we compare the derived dynamic model with a fully data-driven modeling method. The experimental results show that the proposed approach offers significant advantages in model robustness and long-term prediction accuracy.

The key contributions of this work are therefore threefold:

We propose a novel physics-informed framework for AUV dynamics modeling that integrates a Port-Hamiltonian structure within a neural ordinary differential equation (NODE) to explicitly separate and learn energy-conserving and dissipative effects from data.

We ensure geometric consistency and numerical stability for long-term prediction by representing the full 6-DOF rigid-body motion on the SE(3) manifold, which inherently respects the constraints of rotational dynamics and avoids singularities.

We provide rigorous quantitative evidence demonstrating that our physics-informed approach overcomes the inherent brittleness of black-box models, maintaining high-fidelity predictions in complex, out-of-distribution scenarios where purely data-driven methods fail.
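To make the separation of energy-conserving and dissipative effects concrete, the sketch below uses the generic port-Hamiltonian form $\dot{\mathbf{x}} = (\mathbf{J} - \mathbf{R})\nabla H(\mathbf{x}) + \mathbf{G}\mathbf{u}$ on a hypothetical one-dimensional damped oscillator, where $\mathbf{J}$ is skew-symmetric (conservative) and $\mathbf{R}$ is positive semidefinite (dissipative). The matrices and constants are illustrative assumptions, not the learned AUV model; the point is that with $\mathbf{u} = 0$ the energy rate reduces to $-\nabla H^\top \mathbf{R}\, \nabla H \le 0$.

```python
import numpy as np

# Hypothetical 1-DOF oscillator: state x = (q, p), H = p^2/(2m) + k q^2/2.
m, k, c = 2.0, 5.0, 0.3
J = np.array([[0.0, 1.0], [-1.0, 0.0]])   # skew-symmetric: conservative part
R = np.array([[0.0, 0.0], [0.0, c]])      # positive semidefinite: damping
G = np.array([[0.0], [1.0]])              # input enters as a force on p

def grad_H(x):
    """Gradient (dH/dq, dH/dp) of the oscillator energy."""
    q, p = x
    return np.array([k * q, p / m])

def dynamics(x, u):
    """Port-Hamiltonian vector field (J - R) dH/dx + G u."""
    return (J - R) @ grad_H(x) + G @ u

def energy_rate(x, u):
    """dH/dt = grad_H . xdot; the J term cancels because J is skew-symmetric."""
    return grad_H(x) @ dynamics(x, u)

x = np.array([0.4, -1.1])
u0 = np.zeros(1)
rate = energy_rate(x, u0)
expected = -grad_H(x) @ R @ grad_H(x)   # pure dissipation when u = 0
```

In a learned model, $H$, $\mathbf{R}$, and $\mathbf{G}$ would be network outputs, but as long as $\mathbf{J}$ stays skew-symmetric and $\mathbf{R}$ stays positive semidefinite, this energy balance holds by construction.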

The remainder of this paper is organized as follows. Section 2 introduces the preliminaries of this work. Section 3 describes the proposed method for the dynamic modeling of underwater vehicles. Section 4 presents the experimental results and discussion, and Section 5 concludes the paper.

2. Sequence Modeling Based on Neural Ordinary Differential Equations

2.1. Problem Statement

We can formulate the motion modeling of underwater vehicles as a time series prediction problem. Assume that at time $t$ the motion state of the underwater vehicle is represented by a vector $\mathbf{x}_t$ containing both position and velocity information. Within the time interval from $t_0$ to $t_N$, the motion states then form a sequence $\mathcal{X} = \{\mathbf{x}_{t_0}, \mathbf{x}_{t_1}, \ldots, \mathbf{x}_{t_N}\}$. For simplicity, we assume that the control inputs of the underwater vehicle remain constant during this period. The motion modeling problem then amounts to predicting the sequence of motion states $\mathcal{Y} = \{\mathbf{x}_{t_{N+1}}, \ldots, \mathbf{x}_{t_{N+M}}\}$ from time $t_{N+1}$ to $t_{N+M}$ based on the known sequence $\mathcal{X}$.

In the context of deep learning, time series prediction can be cast as a supervised learning problem, which allows us to express the above problem in a more general form. Given sets $\mathcal{X}$ and $\mathcal{Y}$ sampled from an unknown distribution $p$, denoted as $(\mathcal{X}, \mathcal{Y}) \sim p$, and a loss function $\mathcal{L}$, the goal is to find a function $f$ that minimizes the expected loss

$$f^{*} = \arg\min_{f} \; \mathbb{E}_{(\mathcal{X}, \mathcal{Y}) \sim p} \left[ \mathcal{L}\big(\hat{\mathcal{Y}}, \mathcal{Y}\big) \right], \qquad \hat{\mathcal{Y}} = f(\mathcal{X}), \tag{1}$$

where $\mathcal{X}$ represents the known sequence, $\hat{\mathcal{Y}}$ and $\mathcal{Y}$ are the predicted and target sequences, respectively, and the function $f$ can be parameterized as a neural network that takes $\mathcal{X}$ as input and produces $\hat{\mathcal{Y}}$ as output.
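In practice, the expected loss is approximated by its average over sampled sequence pairs (the empirical risk). The sketch below builds (history, future) pairs from one trajectory with a sliding window and scores a naive constant-velocity predictor with a mean-squared-error loss; the window lengths, the toy straight-line trajectory, and the baseline predictor are illustrative assumptions standing in for real data and a trained network.

```python
import numpy as np

def make_pairs(traj, n_hist, n_pred):
    """Slice a trajectory of shape (T, d) into (history, future) pairs."""
    xs, ys = [], []
    for s in range(len(traj) - n_hist - n_pred + 1):
        xs.append(traj[s : s + n_hist])
        ys.append(traj[s + n_hist : s + n_hist + n_pred])
    return np.stack(xs), np.stack(ys)

def constant_velocity_predictor(hist, n_pred):
    """Toy f: extrapolate the last finite-difference step n_pred times."""
    step = hist[-1] - hist[-2]
    return hist[-1] + step * np.arange(1, n_pred + 1)[:, None]

def empirical_risk(predictor, X, Y):
    """Sample-mean approximation of E[L(f(X), Y)] with an MSE loss."""
    losses = [np.mean((predictor(x, len(y)) - y) ** 2) for x, y in zip(X, Y)]
    return float(np.mean(losses))

t = np.linspace(0.0, 2.0, 41)
traj = np.column_stack([t, 0.5 * t])        # straight-line motion: exactly linear
X, Y = make_pairs(traj, n_hist=5, n_pred=3)
risk = empirical_risk(constant_velocity_predictor, X, Y)   # ~0 for linear motion
```

Training a network amounts to searching for the predictor that minimizes this empirical risk; note that the sliding-window construction implicitly assumes uniformly spaced samples, a limitation discussed below.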

Commonly used neural network architectures for time series modeling include recurrent neural networks (RNNs), long short-term memory networks (LSTMs), and the Transformer [22]. Among them, the Transformer has gained increasing attention because of its ability to capture long-term dependencies and interactions. Compared with RNNs and LSTMs, the Transformer can process input sequences in parallel, which provides computational advantages for large-scale data. Although these sequence prediction models differ, they generally share a similar architectural form, as shown in Figure 2.

While these models are simple and can handle long-term or short-term dependencies, they are typically applied to uniformly sampled time series. When they are applied to underwater vehicle motion modeling, consecutive states in the sequence are usually required to be equally spaced in time. Non-uniformly sampled data can be handled by resampling or interpolation, but these operations may distort the original temporal information. To avoid losing valuable information, another option is to feed the time stamps of the samples into the neural network as additional inputs. However, unlike methods based on differential equations, these approaches still model the data in discrete time and may struggle to capture continuous dynamics.

2.2. Neural Ordinary Differential Equations

Neural ODE is a modeling method that combines ordinary differential equations with residual networks (ResNet) [8] to learn the dynamics of a system from data without explicitly specifying the differential equation. Unlike RNNs and similar methods, neural ODEs take only the initial state of the system as input rather than a sequence of past states. This allows neural ODEs to naturally handle non-uniformly sampled time series and to predict the dynamics of a system in continuous time.
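The property that an ODE model needs only the initial state and can be queried at arbitrary, irregular times can be sketched as follows. The vector field is a fixed, hypothetical function standing in for a trained network, and the fixed-substep fourth-order Runge–Kutta (RK4) integrator is our choice for illustration; neural ODE implementations typically use adaptive solvers.

```python
import numpy as np

def vector_field(x):
    """Hypothetical stand-in for a learned f(x): a lightly damped rotation."""
    A = np.array([[-0.1, 1.0], [-1.0, -0.1]])
    return A @ x

def rk4_step(f, x, h):
    """One classical Runge-Kutta step of size h."""
    k1 = f(x)
    k2 = f(x + 0.5 * h * k1)
    k3 = f(x + 0.5 * h * k2)
    k4 = f(x + h * k3)
    return x + h / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)

def odeint_at(f, x0, t_query, max_step=0.01):
    """Integrate from x0 at t_query[0] and return the state at each sorted query time."""
    out, x, t = [x0.copy()], x0.copy(), t_query[0]
    for t_next in t_query[1:]:
        n = max(1, int(np.ceil((t_next - t) / max_step)))
        h = (t_next - t) / n                     # number of substeps adapts to the gap
        for _ in range(n):
            x = rk4_step(f, x, h)
        out.append(x.copy())
        t = t_next
    return np.stack(out)

# Irregular observation times, e.g. from dropped or jittered sensor samples.
t_obs = np.array([0.0, 0.07, 0.31, 0.33, 1.2, 2.05])
x0 = np.array([1.0, 0.0])
states = odeint_at(vector_field, x0, t_obs)
```

No resampling or interpolation is needed: the irregular gaps simply become integration intervals of different lengths. For this linear field the exact solution is $e^{-0.1t}(\cos t, -\sin t)$, which the RK4 output matches closely.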

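As Figure 3 shows, the transformation of hidden states within a neural network can be expressed as

$$\mathbf{h}_{t+1} = f(\mathbf{h}_t, \theta_t), \tag{2}$$

where $f$ represents a network layer, $\mathbf{h}_t$ and $\theta_t$ denote the state output and weight matrix of layer $t$, and $\mathbf{h}_{t+1}$ represents the state output of layer $t+1$.

For residual networks, the expression is

$$\mathbf{h}_{t+1} = \mathbf{h}_t + f(\mathbf{h}_t, \theta_t), \tag{3}$$

where $\mathbf{h}_t$ skips the network layer at $t$ and is added to its output.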

To make the connection between the ODE and the residual network, consider a simple ordinary differential equation

$$\frac{d\mathbf{h}(t)}{dt} = f\big(\mathbf{h}(t), t, \theta\big). \tag{4}$$

Given an initial value $\mathbf{h}(0)$ and a time step of 1, the iterative solution process can be expressed as

$$\mathbf{h}(t+1) = \mathbf{h}(t) + f\big(\mathbf{h}(t), t, \theta\big). \tag{5}$$

This process is Euler's method for solving differential equations. If the layer index $t$ of the ResNet update is regarded as the time step $t$ of the Euler iteration, the forward propagation of a ResNet has the same form as the iterative solution of a differential equation.

However, there are two differences: (a) when solving an ODE, the time step can take any continuous value, whereas in a ResNet the time step is discrete, namely the layer index; (b) different residual blocks of a ResNet have different functions $f$, whereas an ODE has a single function $f$ that defines the dynamics. Accordingly, all residual blocks can be assigned the same parameters. Moreover, because the time step is no longer tied to the layer index, it can be chosen to be sufficiently small, which makes the network arbitrarily deep without adding parameters. In this way, a neural network with continuous depth, defined by an ODE, can be formulated.
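The ResNet–Euler correspondence can be checked numerically. In the sketch below, every residual block shares the same weights and applies $\mathbf{h} \leftarrow \mathbf{h} + \Delta t\, f(\mathbf{h})$, which is one Euler step; stacking more blocks with a smaller $\Delta t$ approaches the exact ODE solution. The linear layer $f(\mathbf{h}) = \mathbf{A}\mathbf{h}$ and the explicit step size $\Delta t$ are assumptions chosen so that the exact solution $e^{\mathbf{A}T}\mathbf{h}_0$ has a closed form; with $\Delta t = 1$ each block reduces to the plain residual update.

```python
import numpy as np

A = np.array([[0.0, 1.0], [-1.0, 0.0]])   # hypothetical shared layer: f(h) = A h

def resnet_forward(h0, n_blocks, total_time=1.0):
    """Stack n_blocks residual blocks that all share the same weights A.

    Each block computes h <- h + dt * f(h), i.e. one explicit Euler step of
    dh/dt = A h with step dt = total_time / n_blocks.
    """
    dt = total_time / n_blocks
    h = h0.copy()
    for _ in range(n_blocks):
        h = h + dt * (A @ h)
    return h

h0 = np.array([1.0, 0.0])
T = 1.0
exact = np.array([np.cos(T), -np.sin(T)])   # closed-form solution exp(A T) h0
errors = {n: np.linalg.norm(resnet_forward(h0, n, T) - exact) for n in (10, 100, 1000)}
# Deeper shared-weight networks (smaller dt) track the continuous-depth ODE more closely.
```

The error shrinks roughly tenfold for each tenfold increase in depth, consistent with the first-order accuracy of Euler's method; a neural ODE replaces this fixed stack with an ODE solver.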

2.3. Hamiltonian Neural Networks

A neural ODE provides a general method for learning continuous-time dynamics from non-uniformly sampled data without imposing constraints on the underlying dynamical system. This provides versatility but also makes it difficult to accurately model specific physical processes. From a learning theory perspective, this is because a neural ODE has fewer inductive biases and lacks certain necessary assumptions about the target function to be learned.

Hamiltonian mechanics describes the evolution of the system state over time in phase space, where the state is represented by generalized coordinates $\mathbf{q}$ and generalized momenta $\mathbf{p}$. The Hamiltonian function $H(\mathbf{q}, \mathbf{p})$ maps the state of the system to a scalar representing its total energy; in classical mechanics, $H$ is the sum of the kinetic and potential energies of the system. The Hamiltonian specifies a vector field in phase space that describes all possible dynamic behaviors of the system, and each state of the system lies on a unique trajectory in phase space.

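The Hamiltonian function can be expressed as

$$H(\mathbf{q}, \mathbf{p}) = T(\mathbf{q}, \mathbf{p}) + V(\mathbf{q}), \tag{6}$$

where $T$ is the kinetic energy and $V$ is the potential energy. The evolution of the state of the system over time is described by Hamilton's equations

$$\frac{d\mathbf{q}}{dt} = \frac{\partial H}{\partial \mathbf{p}}, \qquad \frac{d\mathbf{p}}{dt} = -\frac{\partial H}{\partial \mathbf{q}}. \tag{7}$$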

The direction of the vector field defined by (7) is typically called the symplectic gradient of the Hamiltonian system.

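It is easy to show that

$$\frac{dH}{dt} = \frac{\partial H}{\partial \mathbf{q}} \cdot \frac{d\mathbf{q}}{dt} + \frac{\partial H}{\partial \mathbf{p}} \cdot \frac{d\mathbf{p}}{dt} = \frac{\partial H}{\partial \mathbf{q}} \cdot \frac{\partial H}{\partial \mathbf{p}} - \frac{\partial H}{\partial \mathbf{p}} \cdot \frac{\partial H}{\partial \mathbf{q}} = 0. \tag{8}$$

This implies that the total energy of the system is conserved when the state moves along the symplectic gradient [23].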
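The conservation property can be verified numerically. For a hypothetical pendulum Hamiltonian (chosen only for illustration), the sketch checks that the energy rate along the symplectic gradient vanishes at random states, which is the algebraic cancellation behind $dH/dt = 0$, and that a symplectic leapfrog integrator keeps the energy error small over a long rollout. The leapfrog scheme is our choice here, not a method from the source.

```python
import numpy as np

OMEGA2 = 9.81   # hypothetical pendulum constant g/l (unit mass and length)

def H(q, p):
    """Pendulum energy H = p^2/2 + OMEGA2 * (1 - cos q)."""
    return 0.5 * p**2 + OMEGA2 * (1.0 - np.cos(q))

def dH_dq(q):
    return OMEGA2 * np.sin(q)

def dH_dp(p):
    return p

def symplectic_gradient(q, p):
    """Hamilton's equations: (dq/dt, dp/dt) = (dH/dp, -dH/dq)."""
    return dH_dp(p), -dH_dq(q)

# 1) dH/dt = dH/dq * dq/dt + dH/dp * dp/dt vanishes at arbitrary states.
rng = np.random.default_rng(1)
qs, ps = rng.uniform(-3, 3, 100), rng.uniform(-5, 5, 100)
dq, dp = symplectic_gradient(qs, ps)
dH_dt = dH_dq(qs) * dq + dH_dp(ps) * dp

# 2) Leapfrog (kick-drift-kick) rollout: the energy error stays bounded.
def leapfrog(q, p, h, n):
    energies = [H(q, p)]
    for _ in range(n):
        p = p - 0.5 * h * dH_dq(q)
        q = q + h * dH_dp(p)
        p = p - 0.5 * h * dH_dq(q)
        energies.append(H(q, p))
    return np.array(energies)

E = leapfrog(q=1.0, p=0.0, h=0.01, n=10_000)
max_drift = np.max(np.abs(E - E[0]))
```

Exact conservation holds for the continuous flow; a discrete integrator only approximates it, which is one reason learned Hamiltonian models still show small energy fluctuations over long horizons.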

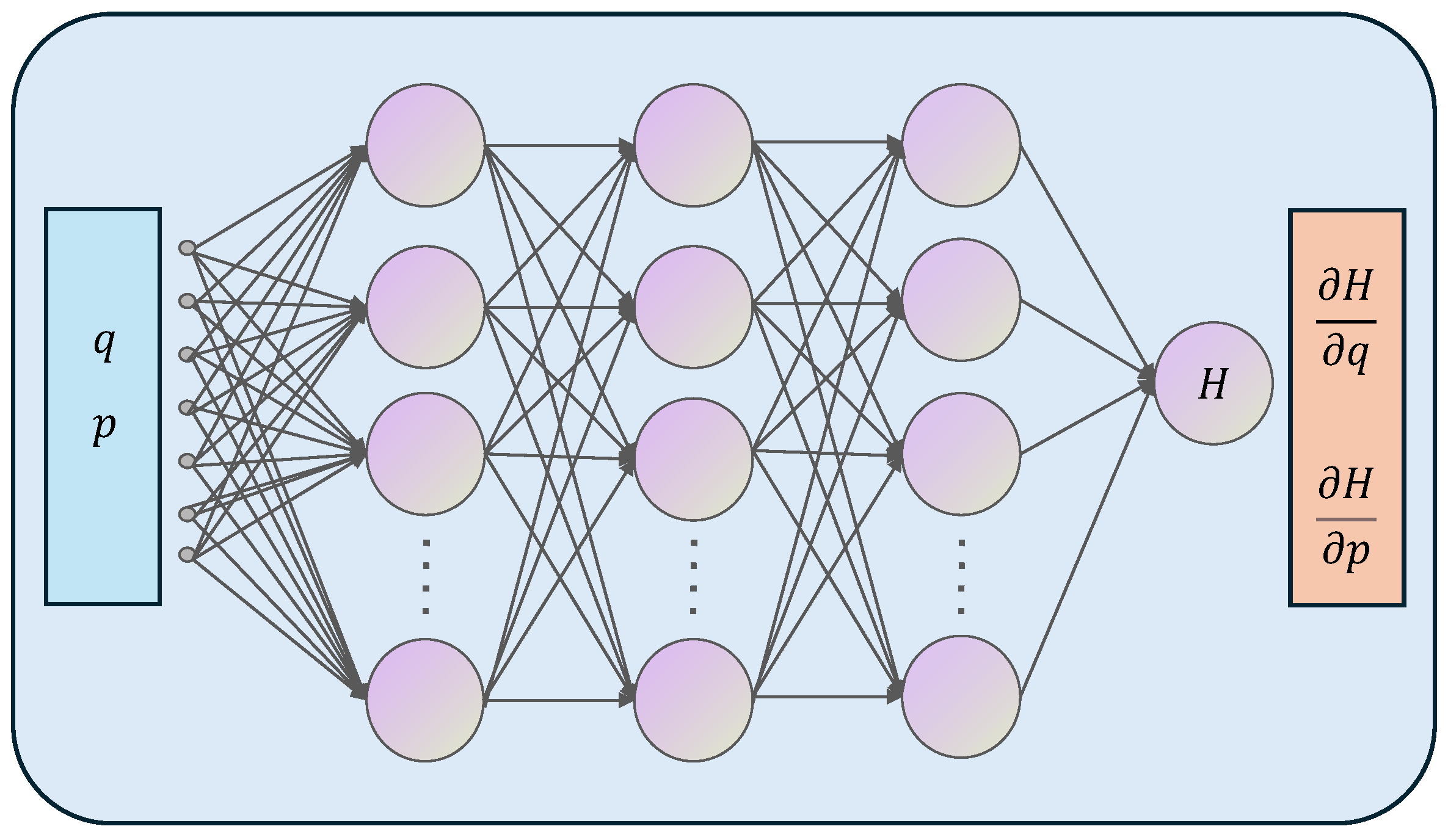

In recent years, deep neural networks have been used to learn Hamiltonian dynamics [24,25]. The Hamiltonian neural network (HNN) models the Hamiltonian function with a deep neural network and generates dynamics that satisfy (7), which ensures that the learned system obeys the law of conservation of energy.

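As Figure 4 shows, during forward computation the HNN takes the generalized coordinates and momenta of the system as input and outputs the total energy of the system $H_\theta(\mathbf{q}, \mathbf{p})$, where $\theta$ represents the learnable network parameters. During backpropagation, the derivatives of the output with respect to the inputs are computed by automatic differentiation. The loss function of the HNN can be expressed as

$$\mathcal{L}_{\mathrm{HNN}} = \left\| \frac{\partial H_\theta}{\partial \mathbf{p}} - \frac{d\mathbf{q}}{dt} \right\|_2^2 + \left\| \frac{\partial H_\theta}{\partial \mathbf{q}} + \frac{d\mathbf{p}}{dt} \right\|_2^2. \tag{9}$$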

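Let $f_\theta = \left( \dfrac{\partial H_\theta}{\partial \mathbf{p}}, -\dfrac{\partial H_\theta}{\partial \mathbf{q}} \right)$ represent the dynamics of the system's evolution over time. If the system state $\mathbf{x}(t_0) = \big(\mathbf{q}(t_0), \mathbf{p}(t_0)\big)$ at time $t_0$ is known, the neural ODE can be used to obtain the system state at time $t_1$,

$$\mathbf{x}(t_1) = \mathbf{x}(t_0) + \int_{t_0}^{t_1} f_\theta\big(\mathbf{x}(t)\big)\, dt = \mathrm{ODESolve}\big(f_\theta, \mathbf{x}(t_0), t_0, t_1\big). \tag{10}$$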

Compared to the conventional neural ODE, the HNN offers faster training speeds, better generalization performance, and the ability to learn the dynamics of conservative systems more effectively.
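The HNN loss penalizes the mismatch between $\partial H_\theta/\partial \mathbf{p}$ and $d\mathbf{q}/dt$ and between $-\partial H_\theta/\partial \mathbf{q}$ and $d\mathbf{p}/dt$. A framework-free sketch follows. It replaces automatic differentiation with central finite differences, which is our substitution for illustration only, and `H_theta` is a hypothetical quadratic energy model with parameters `theta = (a, b)` rather than a neural network.

```python
import numpy as np

def H_theta(theta, q, p):
    """Hypothetical parametric energy model: H = a * p^2 / 2 + b * q^2 / 2."""
    a, b = theta
    return 0.5 * a * p**2 + 0.5 * b * q**2

def grad_qp(H, theta, q, p, eps=1e-5):
    """Central finite differences standing in for automatic differentiation."""
    dHdq = (H(theta, q + eps, p) - H(theta, q - eps, p)) / (2 * eps)
    dHdp = (H(theta, q, p + eps) - H(theta, q, p - eps)) / (2 * eps)
    return dHdq, dHdp

def hnn_loss(theta, q, p, q_dot, p_dot):
    """|dH/dp - dq/dt|^2 + |dH/dq + dp/dt|^2, averaged over samples."""
    dHdq, dHdp = grad_qp(H_theta, theta, q, p)
    return np.mean((dHdp - q_dot) ** 2 + (dHdq + p_dot) ** 2)

# Synthetic samples from a spring-mass system with a = 1/m = 0.5 and b = k = 2.0.
rng = np.random.default_rng(2)
q, p = rng.normal(size=50), rng.normal(size=50)
q_dot, p_dot = 0.5 * p, -2.0 * q
loss_true = hnn_loss((0.5, 2.0), q, p, q_dot, p_dot)    # ~0 at the true parameters
loss_wrong = hnn_loss((1.0, 1.0), q, p, q_dot, p_dot)   # clearly positive
```

Minimizing this loss over `theta` recovers an energy function whose symplectic gradient reproduces the observed time derivatives; in a real HNN the gradients come from backpropagating through the network with respect to its inputs.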

4. Experiment and Results

4.1. Experimental Setup

The proposed HNN-based motion modeling method is validated on the REMUS 100 underwater vehicle, which is described in Table 1 [29]. The REMUS 100 is propelled axially by a stern thruster and maneuvers using symmetrically arranged rudders and elevators.

To model the Hamiltonian equations of the underwater vehicle, we employ four independent neural networks to learn the system's constituent terms: the generalized mass matrix, the potential energy, the damping matrix, and the control input matrix. All networks share a common architecture consisting of two hidden layers with 128 nodes each and the Tanh activation function. Furthermore, they are all conditioned on the same input: the 12-dimensional generalized coordinate vector, which fully describes the vehicle's position and orientation.
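A minimal NumPy sketch of the four networks follows, using the output sizes described in the following paragraph (7 for the mass matrix, 1 for the potential energy, 6 for damping, 18 for the control input matrix). The weight initialization, the function names, and the dictionary layout are hypothetical; the paper's implementation used PyTorch. The 12-dimensional input is treated as an arbitrary vector; we assume, without confirmation from this section, that it stacks a 3-D position and a flattened 3×3 rotation matrix.

```python
import numpy as np

def init_mlp(rng, sizes):
    """Random weights for a fully connected net with the given layer sizes."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def mlp_forward(params, x):
    """Tanh hidden layers followed by a linear output layer."""
    for W, b in params[:-1]:
        x = np.tanh(x @ W + b)
    W, b = params[-1]
    return x @ W + b

rng = np.random.default_rng(3)
IN_DIM, HIDDEN = 12, 128
OUT_DIMS = {"mass": 7, "potential": 1, "damping": 6, "control": 18}
nets = {name: init_mlp(rng, [IN_DIM, HIDDEN, HIDDEN, out])
        for name, out in OUT_DIMS.items()}

q = rng.normal(size=IN_DIM)          # one 12-D generalized-coordinate vector
outputs = {name: mlp_forward(params, q) for name, params in nets.items()}
```

Keeping the four networks independent means each physical term can be given its own output structure without affecting the others.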

The output structure of each network is tailored to incorporate prior physical knowledge about the vehicle, a strategy that significantly reduces the dimensionality of the learning problem and improves training efficiency. For instance, based on the vehicle's known symmetries and its operation in a low-speed regime, the mass-matrix network is designed to output only seven scalar values, corresponding to the six principal diagonal elements and the dominant off-diagonal coupling term. Similarly, the damping network learns the six diagonal elements of the hydrodynamic damping matrix. The potential-energy network outputs a single scalar, and the control-input network learns an 18-element vector that forms a 6 × 3 matrix, mapping the three control inputs (propeller revolution, rudder angle, and elevator angle) to the corresponding forces and torques in the six degrees of freedom.
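How the raw network outputs might be assembled into physical matrices is sketched below. Several details are our assumptions rather than the paper's: a softplus keeps the diagonal entries positive, the coupling term is placed symmetrically at a hypothetical index pair `coupling_idx` because this section does not state which entry it occupies, and the 18-vector is reshaped row-major into a 6 × 3 matrix. The control values are illustrative only.

```python
import numpy as np

def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return np.log1p(np.exp(-np.abs(z))) + np.maximum(z, 0.0)

def assemble(mass_out, damp_out, ctrl_out, coupling_idx=(1, 5)):
    """Map raw network outputs to mass, damping and control-input matrices.

    mass_out: 7 values -> 6 positive diagonal entries + 1 symmetric coupling term.
    damp_out: 6 values -> positive diagonal damping matrix.
    ctrl_out: 18 values -> 6x3 matrix from (propeller, rudder, elevator) to
    6-DOF forces and torques.
    coupling_idx is a hypothetical placement of the off-diagonal term.
    """
    M = np.diag(softplus(mass_out[:6]))
    i, j = coupling_idx
    M[i, j] = M[j, i] = mass_out[6]           # symmetric by construction here
    D = np.diag(softplus(damp_out))
    B = np.reshape(ctrl_out, (6, 3))
    return M, D, B

rng = np.random.default_rng(4)
M, D, B = assemble(rng.normal(size=7), rng.normal(size=6), rng.normal(size=18))
u = np.array([1500.0, 0.1, -0.05])   # illustrative (rpm, rad, rad) control input
tau = B @ u                            # 6-DOF generalized force and torque
```

Because each diagonal entry comes from its own output, nothing in this construction forces two diagonal elements to be equal.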

It is crucial to emphasize that these output simplifications are a practical application of prior knowledge, not an inherent limitation of our framework. Provided that sufficient and adequately exciting training data are available, the proposed HNN-based methodology can learn complete, dense mass and damping matrices for vehicles with more complex or less well understood dynamics.

4.2. Experimental Result

The REMUS 100 motion model provided by [29] was used to generate training data. One hundred motion trajectories of 5 s each were generated under random control inputs, starting from the initial state of

and

. The control and motion sampling frequencies were 10 Hz and 50 Hz, respectively. From each trajectory, a 3 s segment was then randomly cropped and divided into non-overlapping 0.1 s samples, with the control input held constant within each sample. This process yielded 3000 data samples in total (30 samples from each of the 100 trajectories), which were randomly split into training and testing sets at an 8:2 ratio.
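The sample count follows from the cropping scheme: a 3 s segment splits into 30 non-overlapping 0.1 s windows, so 100 trajectories yield 3000 samples, and an 8:2 split gives 2400 training and 600 test samples. The sketch reproduces this bookkeeping with placeholder arrays. The state dimension, the uniform random choice of crop start, and aligning the crop with the 10 Hz control grid (so that each window sees a single control value) are our assumptions.

```python
import numpy as np

rng = np.random.default_rng(5)
N_TRAJ, TRAJ_S, CROP_S, WIN_S = 100, 5.0, 3.0, 0.1
MOTION_HZ, CONTROL_HZ, STATE_DIM = 50, 10, 12     # STATE_DIM is a placeholder

steps_per_win = int(round(WIN_S * MOTION_HZ))      # 5 motion samples per window
wins_per_crop = int(round(CROP_S / WIN_S))         # 30 windows per 3 s crop

samples = []
for _ in range(N_TRAJ):
    traj = rng.normal(size=(int(TRAJ_S * MOTION_HZ), STATE_DIM))   # placeholder data
    # Start the crop on the 10 Hz control grid so each window sees one control value.
    n_ctrl_starts = int((TRAJ_S - CROP_S) * CONTROL_HZ) + 1
    start = rng.integers(0, n_ctrl_starts) * (MOTION_HZ // CONTROL_HZ)
    crop = traj[start : start + wins_per_crop * steps_per_win]
    samples.extend(crop.reshape(wins_per_crop, steps_per_win, STATE_DIM))

samples = np.stack(samples)
perm = rng.permutation(len(samples))
n_train = int(0.8 * len(samples))
train, test = samples[perm[:n_train]], samples[perm[n_train:]]
```

Whether a window should also include the first state of the next window as its end point depends on how the ODE targets are defined; the sketch keeps windows strictly disjoint.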

All models were implemented using the PyTorch framework. The training and evaluation were conducted on a workstation equipped with a single NVIDIA RTX 3090 GPU. For training, we used the Adam optimizer with default parameters and a batch size of 128. The learning rate was set to

and was kept constant throughout the 500 training epochs. To ensure the statistical significance and robustness of our results, all experiments were repeated five times using different random seeds for data shuffling and network initialization.

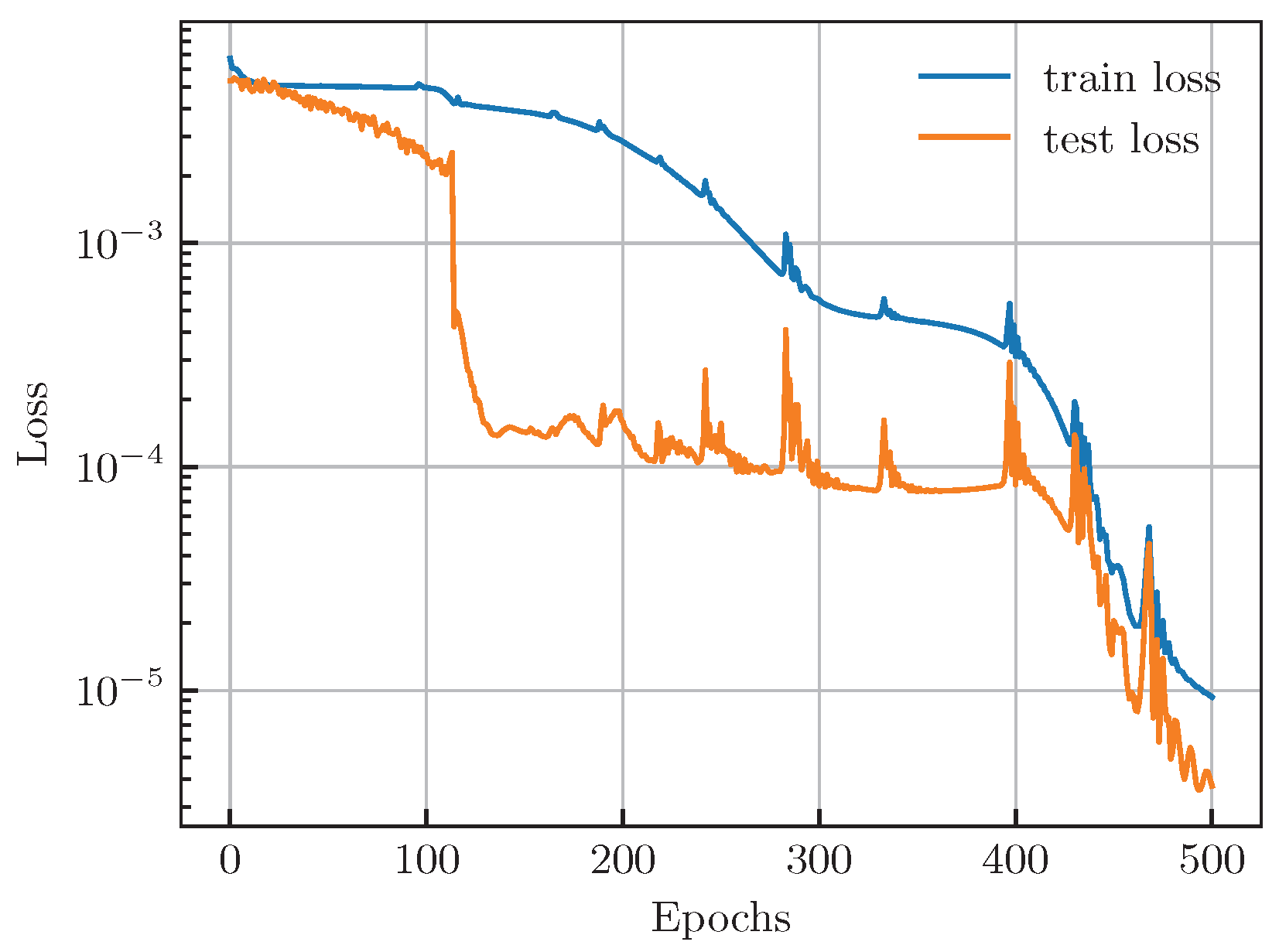

Figure 5 shows the training and test losses over the course of training.

The training loss decreases steadily, with a more rapid decline in the final 100 epochs. The test loss remains consistently lower than the training loss, although its trend is more complex. From the 100th epoch, the test loss drops rapidly to the level, after which it remains almost constant, with strong fluctuations, for the next 250 epochs. During the final 100 epochs, the test loss again decreases rapidly, eventually approaching the level. Although the loss curve is not always smooth, the loss falls to a very low level after 500 epochs, indicating that training converged successfully.

To evaluate the training results more directly, we generated 100 random motion trajectories of 10 s each using the same method described earlier and used the trained model to predict the vehicle's motion state over 10 s from the same initial state. Note that during training the model never predicted continuous trajectories longer than 0.1 s, so this evaluation provides a stronger test of its generalization ability.

Figure 6 shows the generalized mass matrix predicted by the trained model; the learned elements remain nearly constant throughout the 10 s prediction. A remarkable result is the clear symmetry discovered by the network, where

and

. This is not a trivial consequence of the model’s architecture but rather a meaningful demonstration of learning physical consistency.

Crucially, the neural network architecture imposes no prior structural constraints that would force these matrix elements to be equal; each output is parameterized and learned independently. The observed symmetry is a direct reflection of the physical properties of the REMUS 100 AUV, which, as an axisymmetric vehicle, exhibits an identical hydrodynamic response to motions in the sway and heave directions. The fact that our model autonomously discovered and internalized this fundamental physical principle purely from observing trajectory data serves as strong validation. It shows that the framework is genuinely learning the underlying system physics, distinguishing it from a simple black-box curve-fitter.

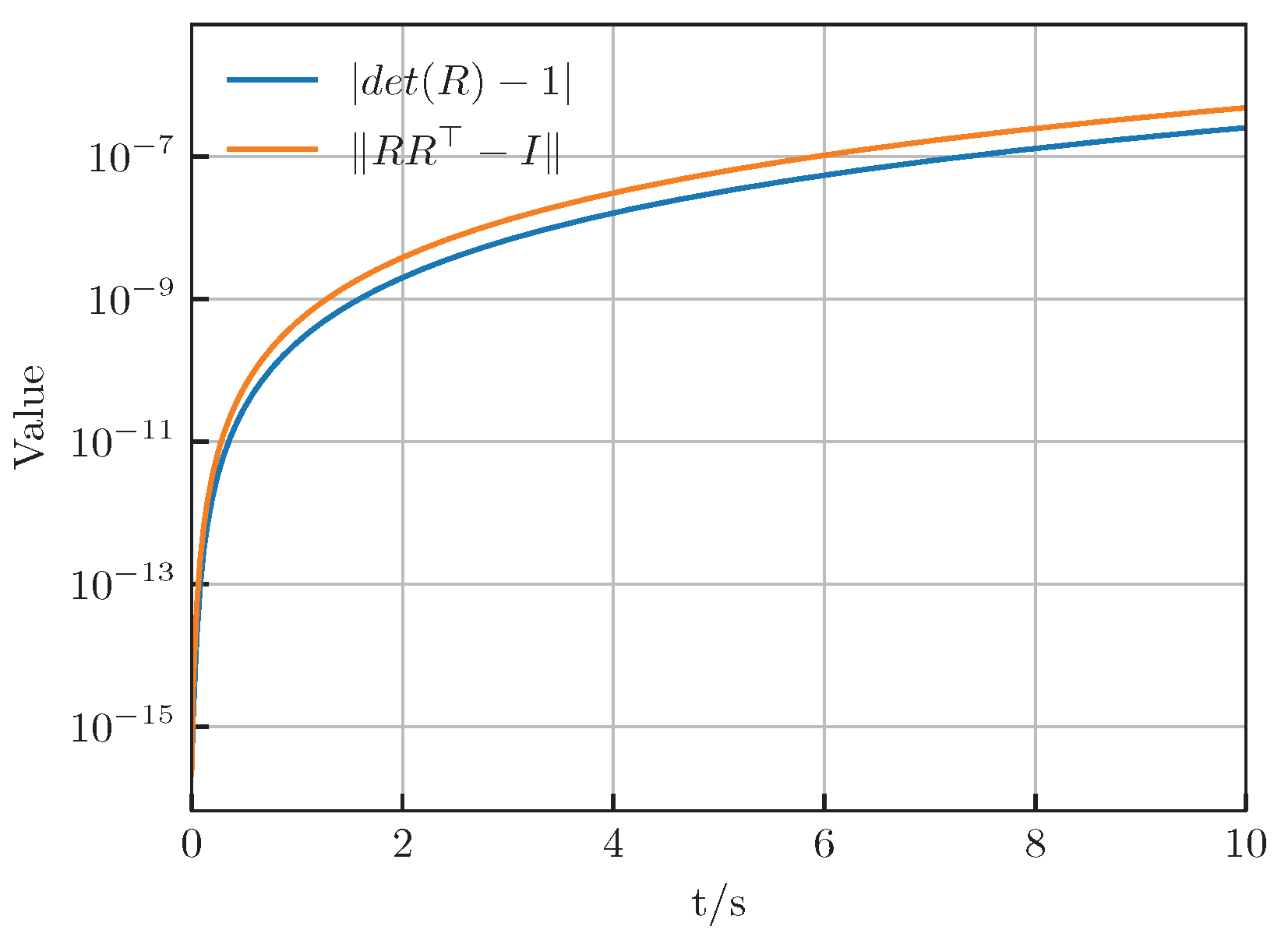

Figure 7 shows the time evolution of two quantities associated with the rotation matrix whose expected values are zero. Although they grow gradually over time, the values predicted by the Hamiltonian neural network remain small, with their absolute values peaking at around

. These values therefore remain within a narrow range. Unlike traditional modeling methods based on differential equations, our HNN-based method does not rely on complex physical priors or parameter estimation. Despite its relatively simple structure, it efficiently captures the dynamic characteristics of underwater vehicles. Its long-term predictions of rotational motion also remain highly accurate, as the subsequent case studies further demonstrate.
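Why an exponential-map update keeps rotation matrices valid can be sketched with Rodrigues' formula. Because the two Figure 7 quantities are not identified in this section, the sketch uses the orthogonality error $\lVert \mathbf{R}^\top\mathbf{R} - \mathbf{I} \rVert$ as an assumed stand-in and compares $\mathbf{R}_{k+1} = \mathbf{R}_k \exp(\hat{\boldsymbol{\omega}}\,\Delta t)$ with a naive Euler update $\mathbf{R}_{k+1} = \mathbf{R}_k(\mathbf{I} + \hat{\boldsymbol{\omega}}\,\Delta t)$. The angular velocity and step size are illustrative.

```python
import numpy as np

def hat(w):
    """Map a 3-vector to its skew-symmetric matrix, so hat(w) @ v == cross(w, v)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def so3_exp(w):
    """Rodrigues' formula for exp(hat(w)): a rotation by |w| about w/|w|."""
    theta = np.linalg.norm(w)
    K = hat(w)
    if theta < 1e-12:
        return np.eye(3) + K          # first-order limit for tiny rotations
    return (np.eye(3) + np.sin(theta) / theta * K
            + (1 - np.cos(theta)) / theta**2 * K @ K)

def orth_error(R):
    """Frobenius norm of R^T R - I; zero for an exact rotation matrix."""
    return np.linalg.norm(R.T @ R - np.eye(3))

omega = np.array([0.3, -0.2, 0.5])    # constant body angular velocity (rad/s)
dt, n_steps = 0.02, 500               # 10 s horizon
R_exp, R_euler = np.eye(3), np.eye(3)
for _ in range(n_steps):
    R_exp = R_exp @ so3_exp(omega * dt)
    R_euler = R_euler @ (np.eye(3) + hat(omega) * dt)

err_exp, err_euler = orth_error(R_exp), orth_error(R_euler)
```

The exponential-map update stays on the rotation group up to round-off, while the Euler update drifts steadily off it, which is the kind of constraint violation the exponential map is meant to prevent.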

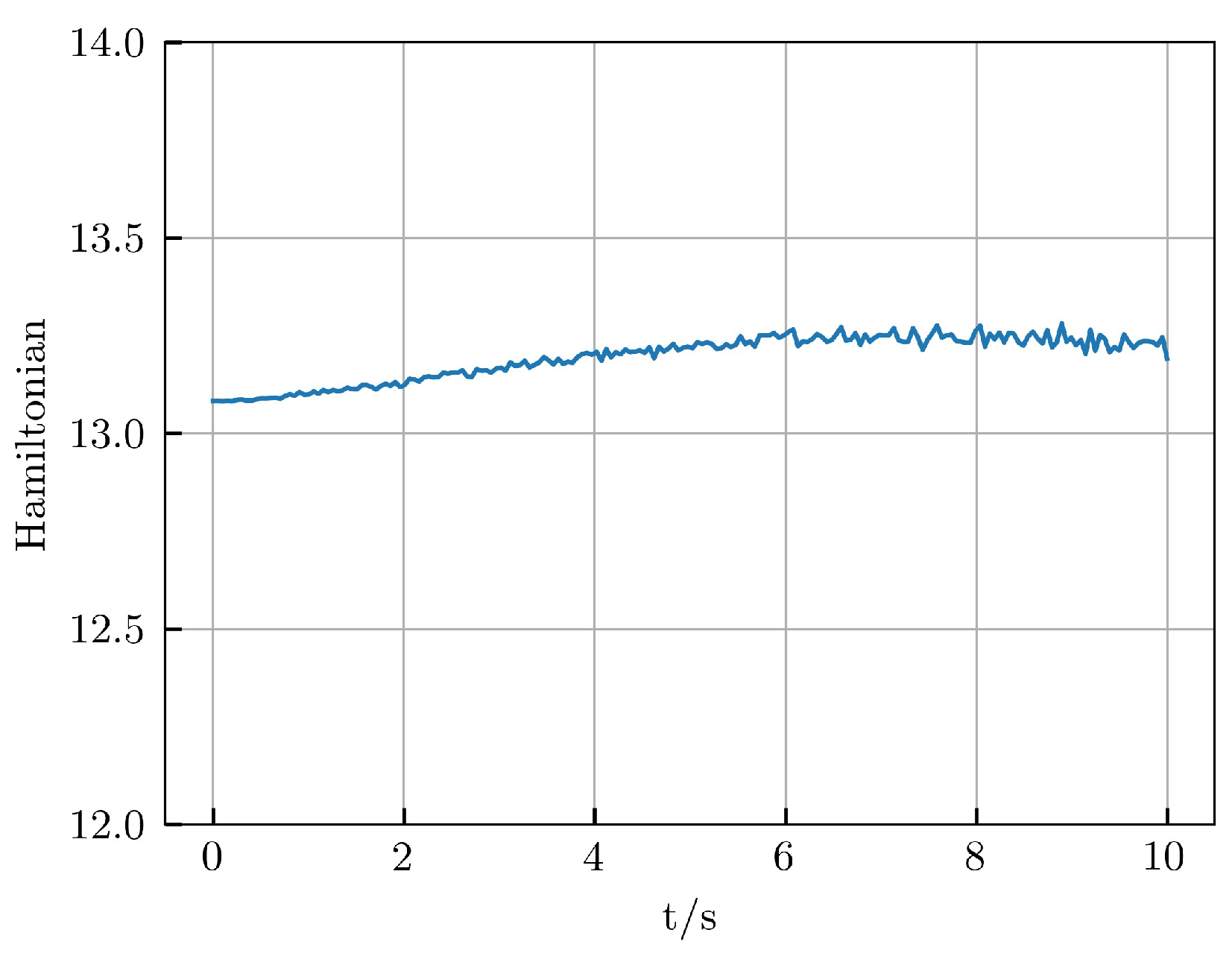

Figure 8 shows the predicted Hamiltonian over time. The Hamiltonian is not exactly constant, but its fluctuations are very small, which suggests that the model has learned the invariance of the Hamiltonian. As the prediction horizon increases, these fluctuations grow, although they remain confined to a very narrow range overall. This illustrates why predicting the long-term motion of underwater vehicles is challenging: errors tend to accumulate over time.

4.3. Case Study

This section compares our HNN method with a method based on temporal convolutional networks (TCNs) [36]. The TCN takes as input the historical motion states of the underwater vehicle over a fixed time window. It applies multiple layers of temporal convolution to compress this high-dimensional input into effective low-dimensional features in chronological order. The extracted features are passed through several fully connected layers with nonlinear activation functions to produce the acceleration at the current moment. Although the TCN has a simpler structure than our HNN, it can also perform strongly after extensive training on large amounts of data. We trained the TCN on the dataset described earlier and implemented it following the guidelines in [36].
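The core TCN operation, a causal dilated 1-D convolution, can be sketched as follows: each output depends only on the current and earlier inputs, and stacking layers with dilations 1, 2, 4 grows the receptive field exponentially with depth. The kernel, dilations, and single-channel setting are illustrative assumptions, not the configuration of [36].

```python
import numpy as np

def causal_dilated_conv(x, w, dilation):
    """y[t] = sum_j w[j] * x[t - j*dilation], with zeros before the sequence start."""
    k = len(w)
    pad = (k - 1) * dilation
    xp = np.concatenate([np.zeros(pad), x])        # left padding only -> causal
    return np.array([sum(w[j] * xp[pad + t - j * dilation] for j in range(k))
                     for t in range(len(x))])

def tcn_stack(x, w, dilations=(1, 2, 4)):
    """Stack causal dilated convolutions with a ReLU after each layer."""
    for d in dilations:
        x = np.maximum(causal_dilated_conv(x, w, d), 0.0)
    return x

def receptive_field(kernel_size, dilations):
    """Number of past inputs (including the current one) that can affect an output."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

w = np.array([0.5, 0.5])                 # hypothetical kernel of size 2
x = np.zeros(16)
x[10] = 1.0                              # impulse at t = 10
y = tcn_stack(x, w)
```

The impulse response shows the causal structure directly: outputs before t = 10 are exactly zero, and the impulse spreads forward over the receptive field of 8 steps.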

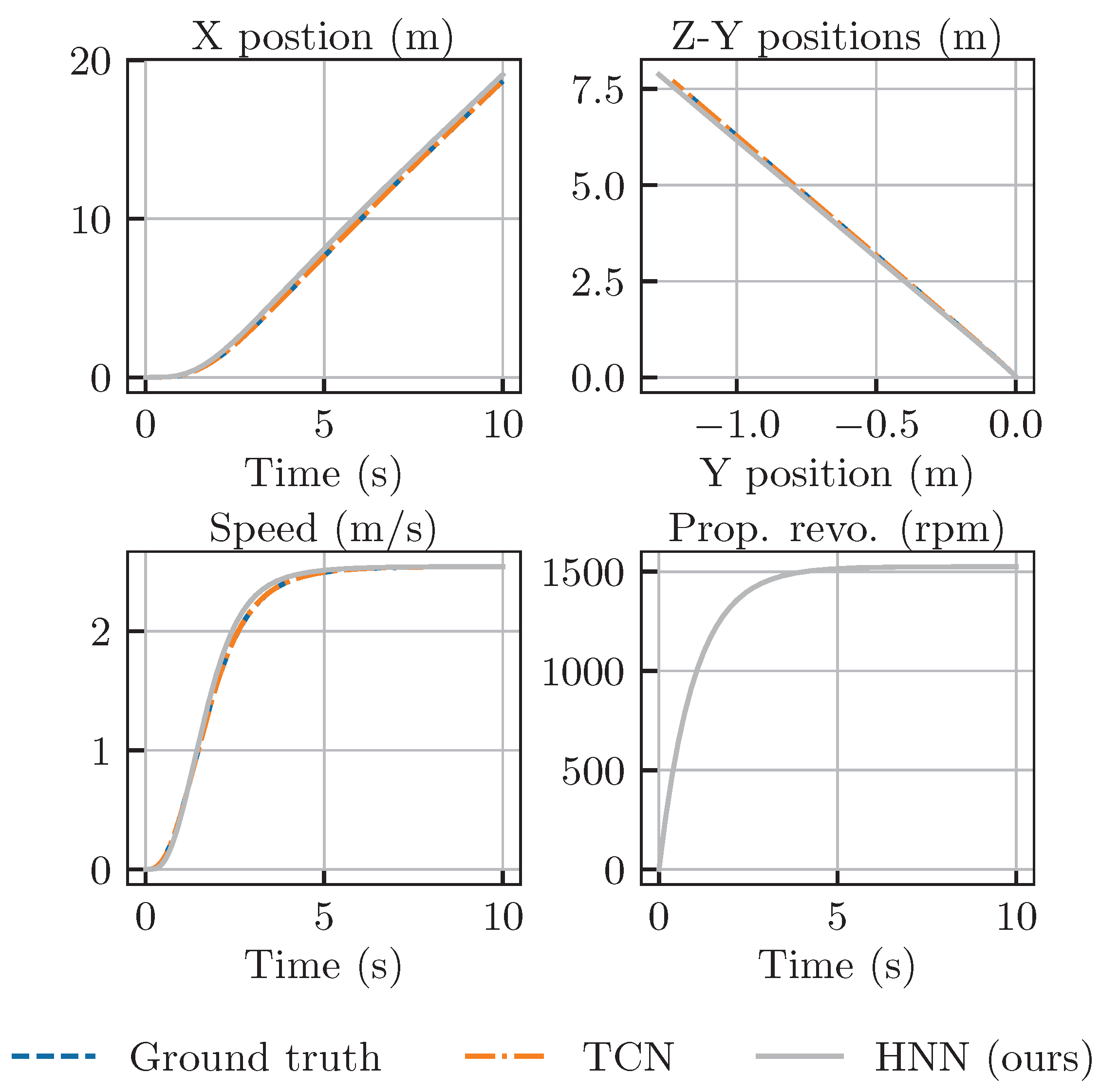

4.3.1. Simple Scenarios

Figure 9 shows how the TCN and HNN perform when predicting the straight-line motion of underwater vehicles. In this scenario, the rudder angles for both yaw and pitch are held at 0 degrees, while the propeller speed gradually increases to 1500 rpm. Both neural network-based methods simulate the vehicle's motion effectively, with remarkably similar precision; the TCN is slightly more accurate than the HNN. Notably, because of motion coupling, the vehicle does not move strictly in a straight line along the inertial X-axis. Instead, it deviates slightly in the Y and Z directions, and these deviations stabilize over time.

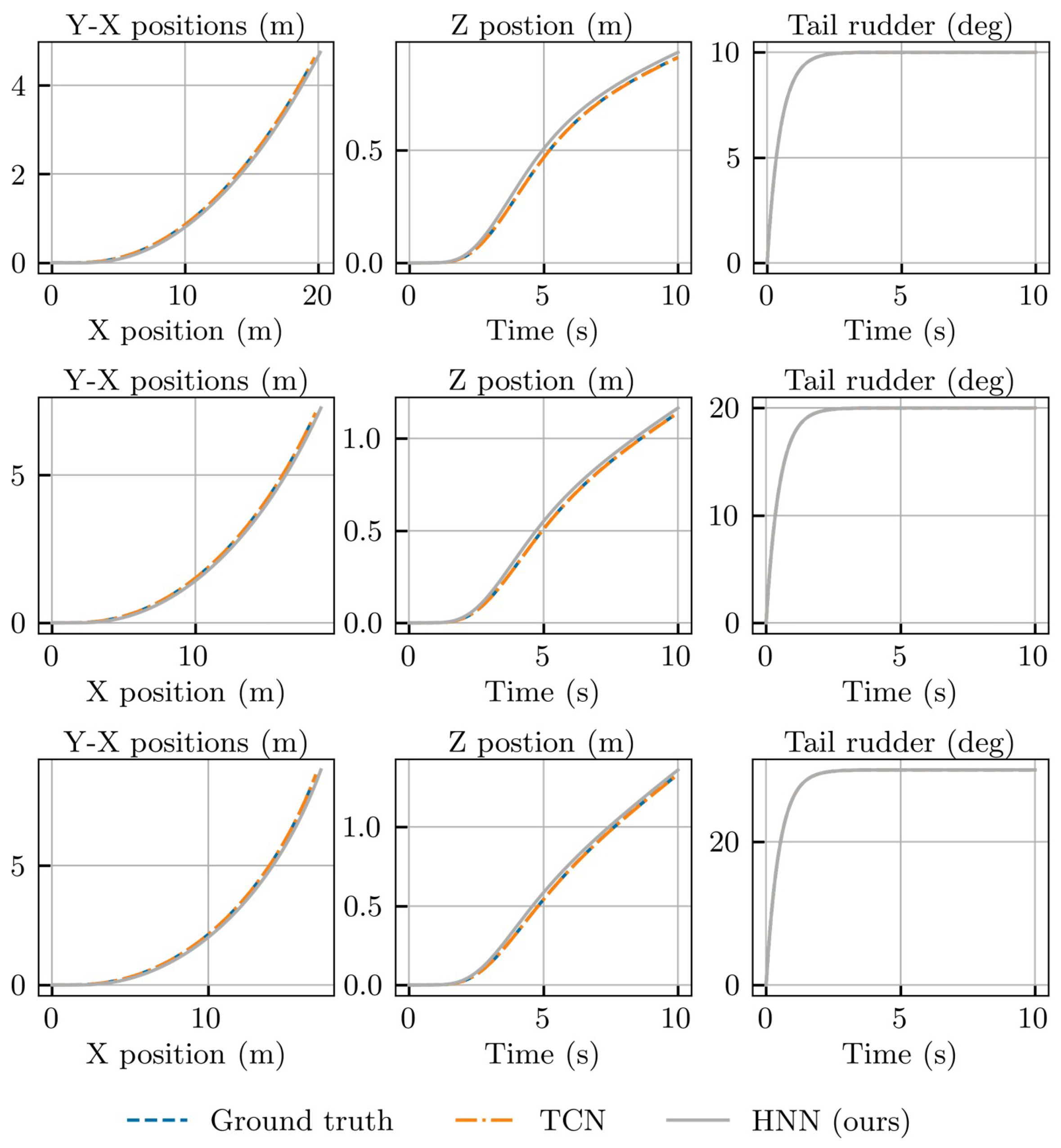

Figure 10 shows the motion state of the underwater vehicle after it is steered 10, 20, and 30 degrees to the right while the propeller speed is held at 1500 rpm. The first two columns depict the vehicle's position over time, and the third column shows the yaw angle. The predictions of the two neural network-based methods are almost identical, suggesting that both the TCN and HNN predict the vehicle's turning motion with high accuracy.

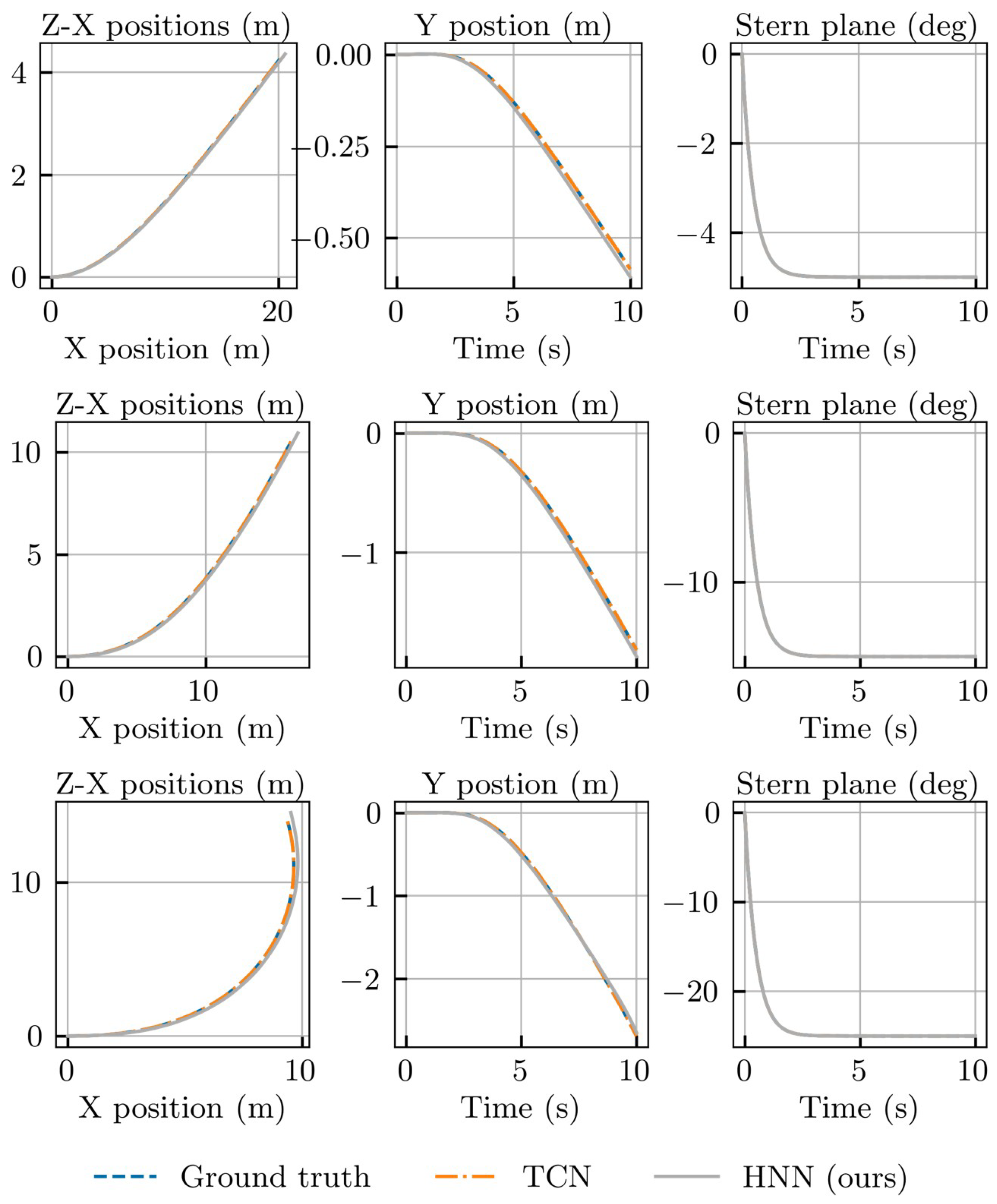

Figure 11 illustrates the motion of the underwater vehicle as the pitch angle is adjusted by 5, 10, and 15 degrees while maintaining a constant propeller speed of 1500 rpm. The vehicle initiates a descent in this scenario. The first two columns display the temporal evolution of the vehicle’s position, and the third column demonstrates the changes in the pitch angle. Both methods adeptly model the descent motion of the underwater vehicle with predictive outcomes closely aligning with benchmark data.

4.3.2. Complex Scenarios

The above analysis shows that the TCN performs exceptionally well in simple scenarios involving straight-line, turning, and diving motions, even without physical priors. To demonstrate the benefits of the proposed HNN method, we therefore analyze more complex motion scenarios.

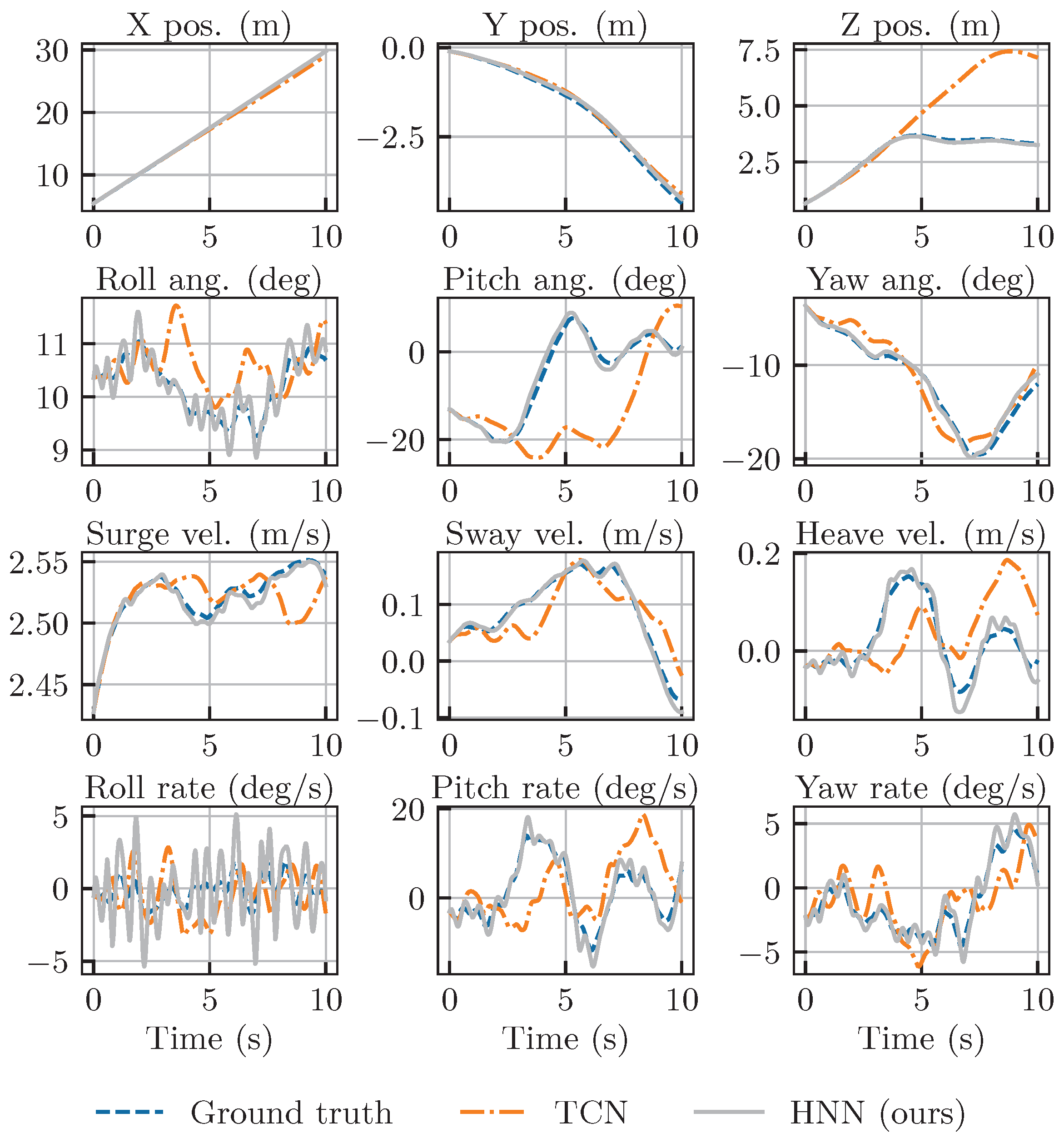

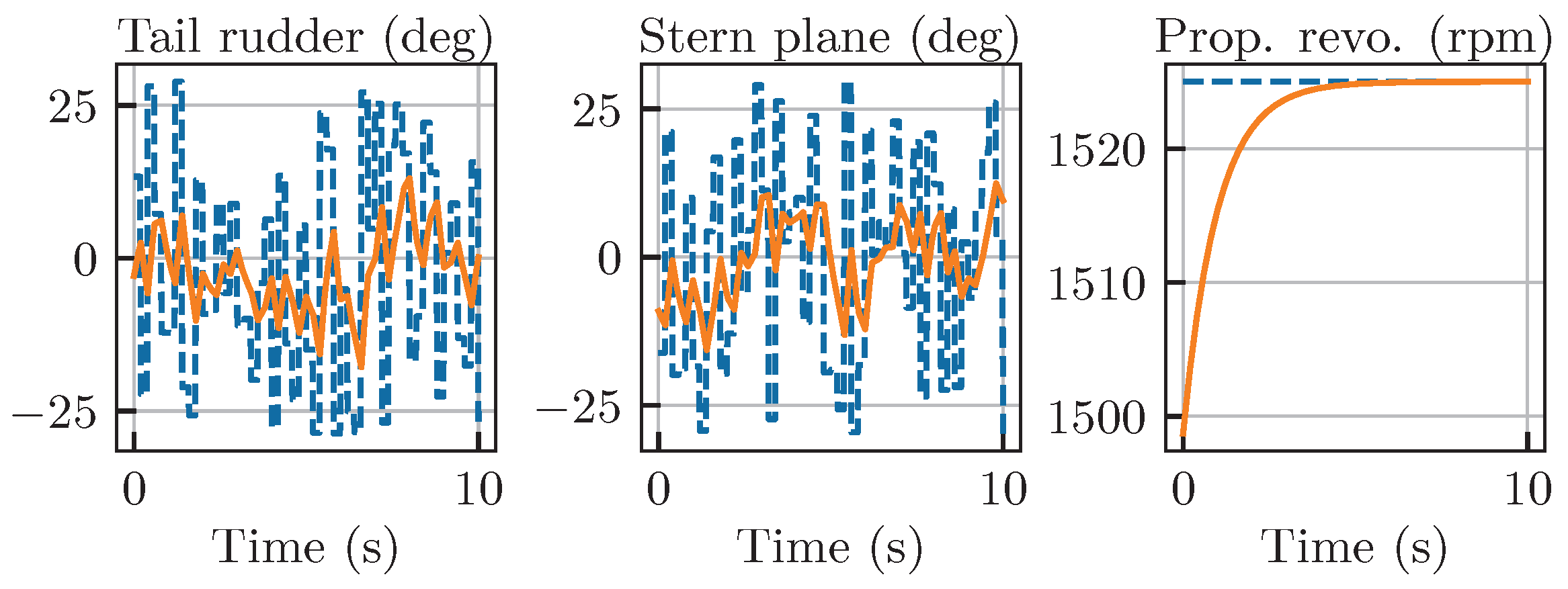

Figure 12 shows the changes in the motion state of the underwater vehicle due to random controls. The first two rows demonstrate the vehicle’s orientation in three-dimensional space, and the following two rows show the variations in velocity and angular velocity over time.

Figure 13 shows the control inputs corresponding to Figure 12, where dashed lines represent the randomly generated desired controls and solid lines represent the applied inputs.

As the complexity of the control inputs increases, the TCN maintains relatively accurate predictions of the vehicle's motion only for a short time. Approximately 2 s after the motion begins, the TCN's predictions of all velocity components other than the longitudinal velocity start to deviate from the benchmark, producing a growing discrepancy between the predicted and actual motion of the vehicle. This is notable given the TCN's excellent performance in the preceding simple scenarios.

Although the HNN did not outperform the TCN in the previous scenarios, it outperforms the TCN in this more complex control scenario. Despite slightly overestimating the vehicle's velocity, the HNN consistently predicts the vehicle's pose accurately, closely matching the actual outcomes. This indicates that the HNN-based motion modeling approach, grounded in sound physical principles, better captures the dynamic characteristics of underwater vehicles rather than merely fitting data. The HNN maintains consistent predictive accuracy across control inputs ranging from simple to complex.

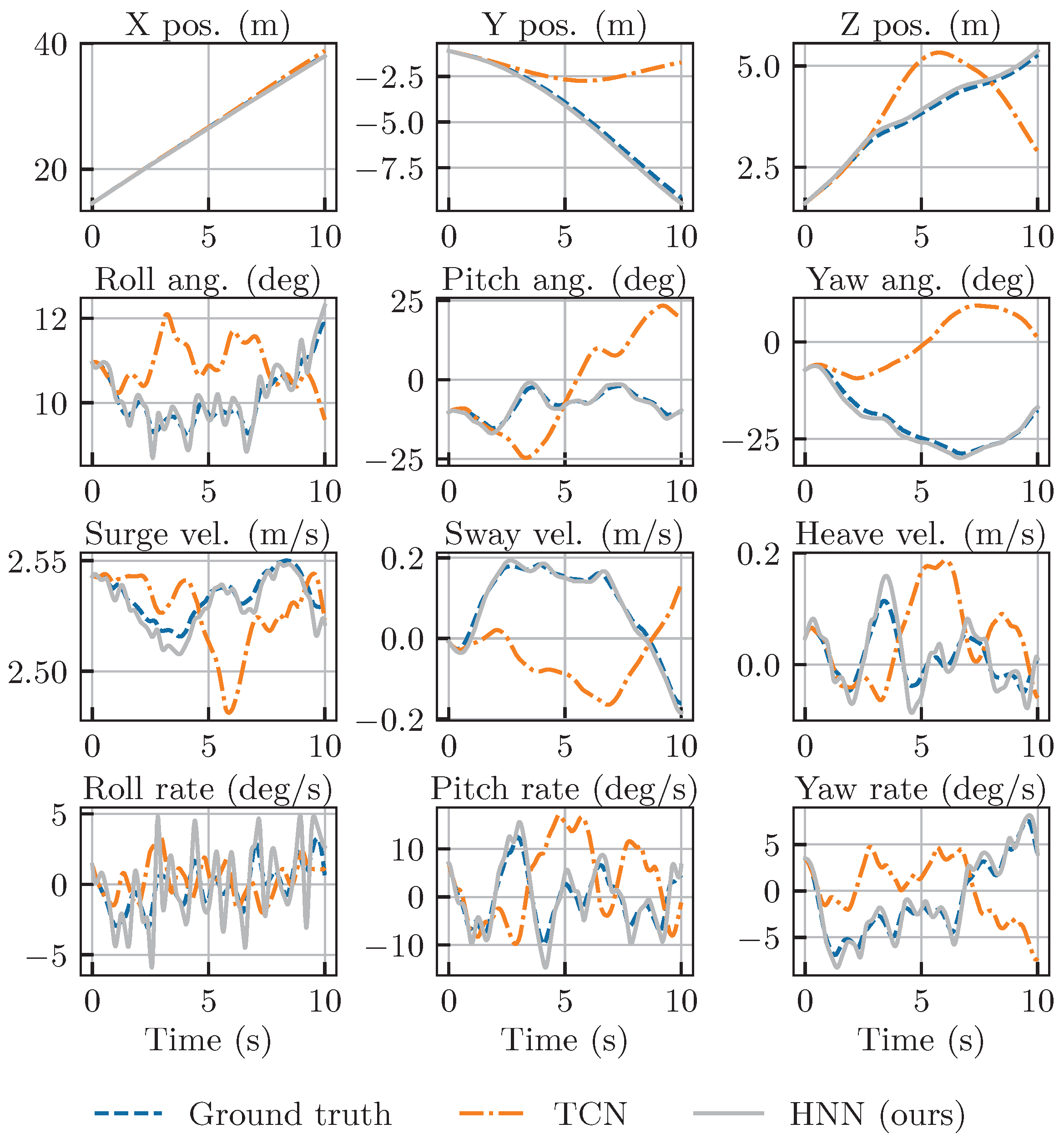

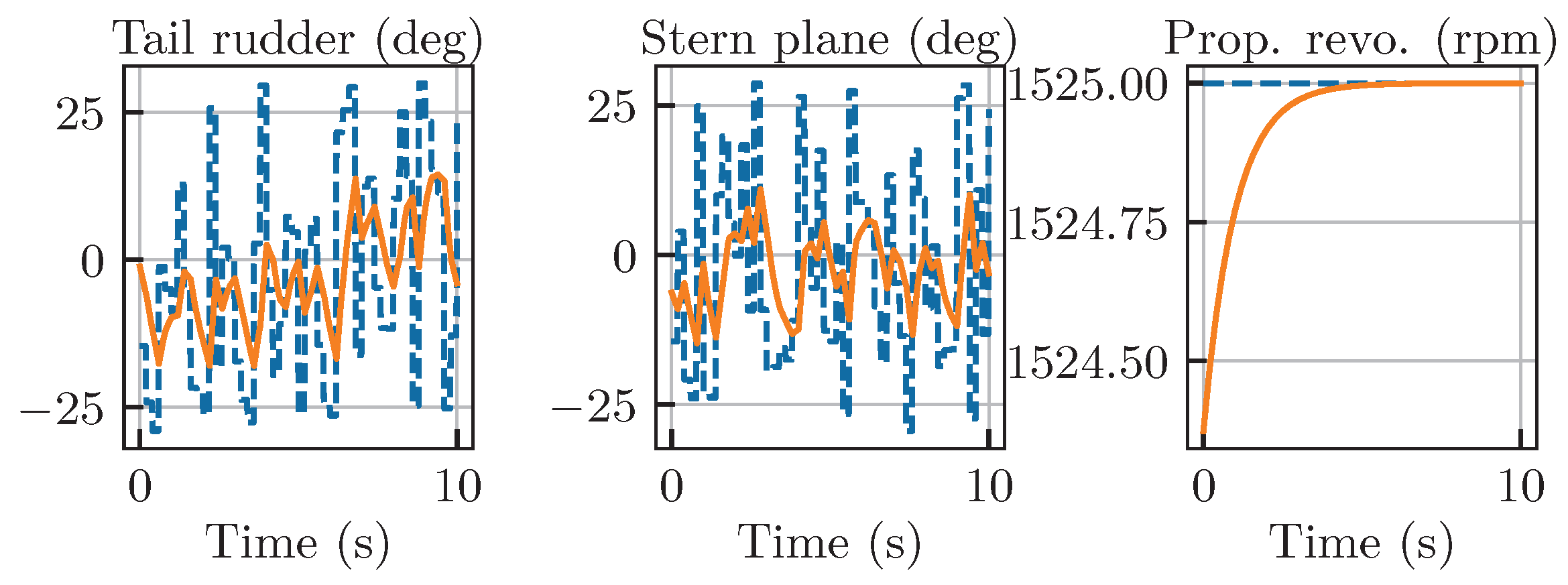

Figure 14, Figure 15, Figure 16 and Figure 17 show the motion in response to two additional sets of random control inputs. The TCN maintains high accuracy only during the initial stage of motion, after which its performance declines sharply. In contrast, our HNN remains accurate throughout and produces predictions that closely match the true motion. It is worth noting that both the TCN and HNN predict the longitudinal motion of the vehicle relatively accurately. This is because torpedo-shaped vehicles such as the REMUS 100 move predominantly in the longitudinal direction, which accounts for a considerable share of the overall motion; with extensive training data, the TCN can also capture this dominant motion pattern. However, the proposed HNN, which is built on the physical principles of underwater vehicle motion, consistently predicts all motion components well. Consequently, the HNN outperforms the TCN across these scenarios.

While the trajectory plots in Figure 14, Figure 15, Figure 16 and Figure 17 provide a compelling qualitative illustration of the HNN's superior robustness, a quantitative analysis is necessary to rigorously assess the performance gap between the two models. To this end, we conducted 10 tests, each with a unique, randomly generated set of complex control inputs mirroring the conditions of the qualitative examples. In each test, we evaluated the 10 s predictions of both the HNN and TCN models against the ground truth, computing the root mean square error (RMSE) over the entire trajectory for key state variables.

The aggregated results of this analysis are presented in Table 2. The data reveal a dramatic and unambiguous difference in performance. The HNN consistently achieves very low prediction errors across all metrics, with a mean position error of only 3.3 cm and very low variance, indicating stable and reliable performance across different complex scenarios. In stark contrast, the TCN's predictions diverge significantly, resulting in a mean position error of over 5.4 m, more than 160 times that of the HNN. Its errors in attitude, linear velocity, and angular velocity are also one to two orders of magnitude higher than those of our model.
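The metric and the headline ratio can be reproduced as follows. `rmse` is the standard root mean square error over all time steps and components of a trajectory; how the paper aggregates components within each state group is not specified here, so that detail, like the use of the sample standard deviation (`ddof=1`) across tests, is our assumption. The ratio uses only the two reported mean position errors (0.033 m and 5.4 m).

```python
import numpy as np

def rmse(pred, truth):
    """Root mean square error over all time steps and components of a trajectory."""
    pred, truth = np.asarray(pred, float), np.asarray(truth, float)
    return float(np.sqrt(np.mean((pred - truth) ** 2)))

def summarize(per_test_errors):
    """Mean and sample standard deviation of an error metric across repeated tests."""
    e = np.asarray(per_test_errors, float)
    return e.mean(), e.std(ddof=1)

# Ratio of the reported mean position errors (TCN vs. HNN).
ratio = 5.4 / 0.033        # about 164, i.e. "more than 160 times"

# Toy check of rmse: a constant 3 cm offset on every coordinate gives 0.03 m.
truth = np.zeros((500, 3))
pred = truth + 0.03
```

Because RMSE squares the errors before averaging, a trajectory that diverges late in the 10 s horizon is penalized heavily, which helps explain the large gap between the two models.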

This quantitative analysis provides strong evidence that the physics-informed structure of the HNN is essential for maintaining long-term prediction stability and accuracy under complex, out-of-distribution control inputs. Under these challenging conditions, the purely data-driven TCN, despite its strong performance in simple scenarios, proves unreliable and fails catastrophically.