1. Introduction

In the context of the rapid advancement of intelligent shipping and autonomous unmanned systems, unmanned surface vehicles (USVs) have shown considerable application potential in fields such as environmental monitoring and maritime search and rescue, owing to their flexibility and low cost [1]. However, current USV obstacle avoidance technologies still face two challenges. First, the perception systems of single-platform USVs are limited by the sensors' field of view and are susceptible to environmental occlusion, resulting in notable perceptual blind spots in complex scenarios. Second, traditional collision avoidance algorithms generally suffer from inefficient path planning and poor generalization, making it difficult for them to adapt to complex and dynamic navigation environments. Given these shortcomings in both perception and decision-making, improving the autonomous obstacle avoidance capability of USVs in complex waters has become crucial to overcoming the bottlenecks that restrict their wider application [2,3].

Currently, most USVs rely on lidar for environmental perception to support obstacle avoidance decision-making [4]. Although lidar meets basic perception requirements in conventional scenarios, it has clear limitations. Because of laser signal attenuation, the detection range of lidar is generally confined to within 200 m, which hinders the perception of distant obstacles. More critically, in environments with dense obstacles, lidar has difficulty detecting vessels occluded by other objects, leading to localized perceptual blind spots [5,6]. These perceptual deficiencies considerably compromise the safety of autonomous USV navigation.

With the rapid development of the low-altitude economy, a growing number of researchers have introduced unmanned aerial vehicles (UAVs) into the maritime domain to exploit their high-altitude perception advantages and compensate for the perceptual limitations of USVs [7,8]. For instance, Wang et al. proposed a vision-based collaborative search-and-rescue system in which the UAV identifies targets and the USV serves as a rescue platform that navigates to the target location to carry out rescue operations [9]. Li et al. implemented cooperative tracking and landing between UAVs and USVs using nonlinear model predictive control, ensuring stable tracking of USVs by UAVs and enabling remote target detection in maritime combat systems [10]. Zhang et al. developed a cooperative control algorithm for USV-UAV teams performing maritime inspection tasks, achieving coordinated path following between the vehicles [11]. Chen et al. fused visual data from USVs and UAVs with multi-target recognition and semantic segmentation techniques to detect and classify various objects around USVs and to distinguish navigable from non-navigable regions [12]. Despite these achievements, existing studies predominantly treat UAVs as external sensing units and do not effectively integrate the perceptual information into the USV's decision-making system, which limits the potential of UAVs to enhance USV autonomous navigation. There is therefore a pressing need for methods that embed UAV-derived perceptual information into the USV's obstacle avoidance decision-making, thereby extending its environmental perception range and improving navigation safety in complex waters.

In the domain of collision avoidance algorithms for USVs, traditional path planning methods still face significant challenges. Although the A* algorithm guarantees globally optimal paths (given an admissible heuristic), its computational complexity in high-dimensional dynamic environments leads to inadequate real-time performance [13]. The RRT* algorithm is probabilistically complete but has limitations in path smoothness and dynamic adaptability [14]. The dynamic window approach (DWA) is prone to local optima, primarily because its velocity-space sampling relies on local perception information [15]. Meanwhile, the velocity obstacle (VO) method requires accurate prediction of obstacle motion and often responds with delay in complex dynamic environments [16]. In summary, these conventional approaches rely on manually predefined rules and model parameters, which limits their generalization in complex scenarios and directly constrains the efficiency of USV collision avoidance decision-making.

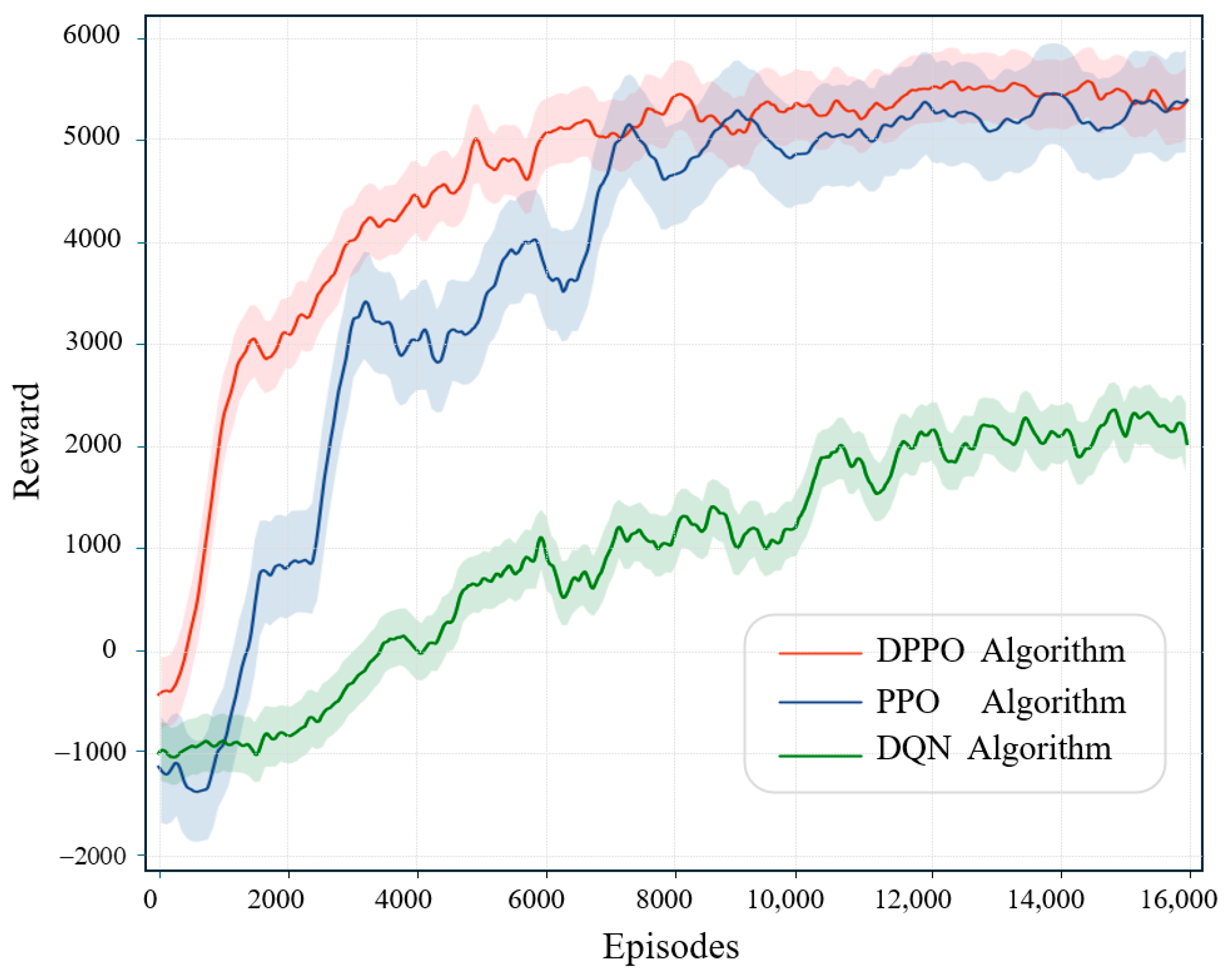

Breakthroughs in artificial intelligence have opened new pathways for collision avoidance research in USVs through learning-based intelligent decision-making methods [

17,

18,

19]. Among these, deep reinforcement learning (DRL) algorithms optimize navigation policies through continuous interaction with complex dynamic environments, leveraging autonomous learning mechanisms to enhance generalization capability in unknown scenarios. As a result, DRL has been widely adopted for ship collision avoidance tasks [

20,

21,

22]. For instance, Chen et al. developed a Q-learning-based path planning algorithm that enabled USVs to achieve autonomous navigation through iterative policy optimization, without relying on manual expertise [

23]. However, Q-learning was susceptible to the curse of dimensionality in high-dimensional state spaces. The Deep Q-Network (DQN) mitigated computational burden by substituting Q-tables with deep neural networks. Fan et al. implemented an enhanced DQN for USV obstacle avoidance, reporting favorable performance in dynamic settings [

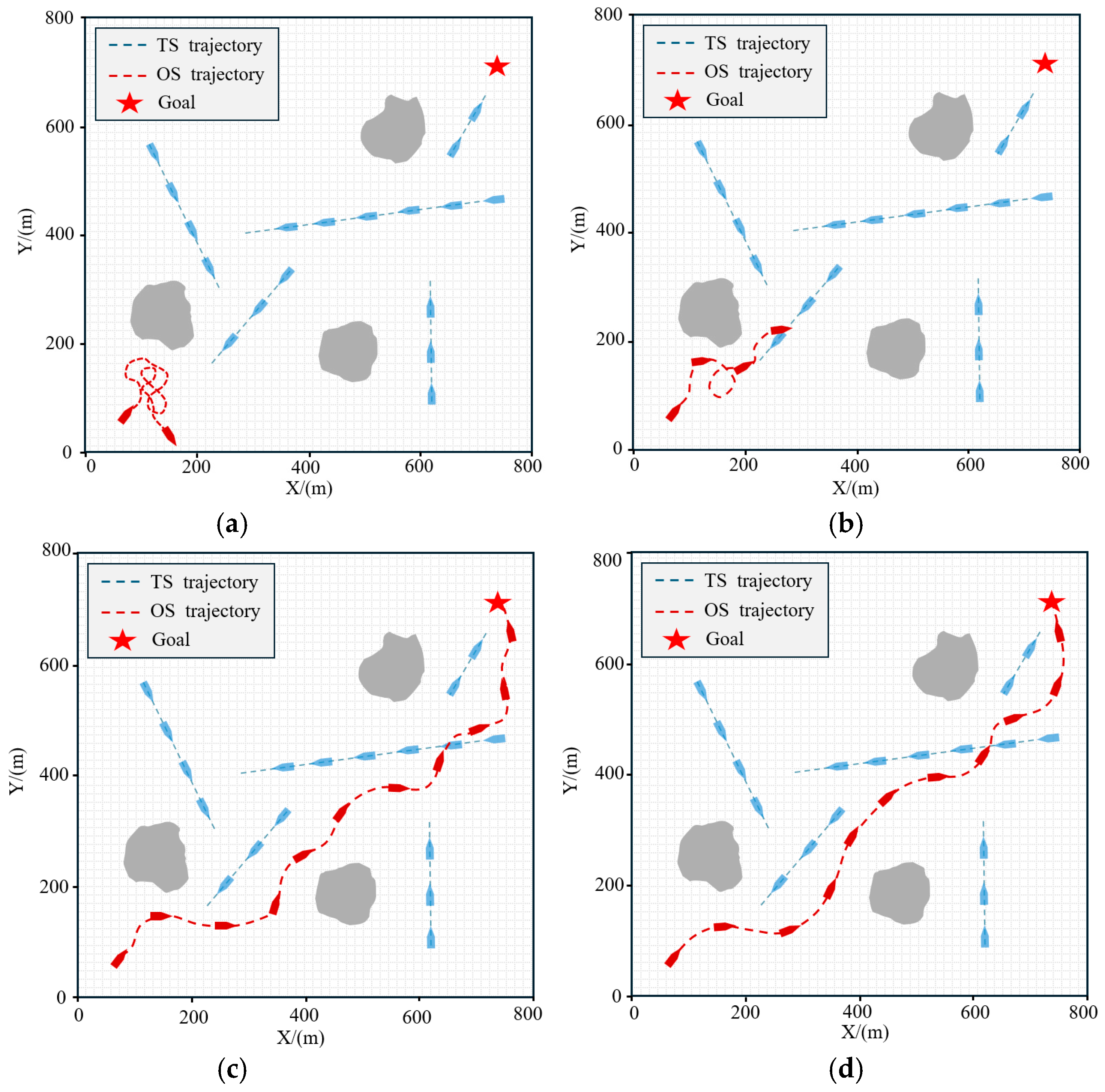

24]. Despite these advances, DQN and other value-based DRL algorithms operate on discrete action spaces, which limits fine-grained and continuous control in obstacle avoidance behavior and often results in oscillatory trajectories [

25].

To overcome the limitations of discrete action spaces, policy gradient-based DRL methods have become an increasingly active research focus. These approaches use an Actor network to output continuous actions directly, substantially improving control precision. For example, Cui et al. enhanced the Twin Delayed Deep Deterministic Policy Gradient (TD3) algorithm with multi-head self-attention and Long Short-Term Memory (LSTM) mechanisms, building a framework for processing historical environmental information that improves the stability of continuous action generation in complex environments [26]. Lou et al. combined the dynamic window approach and the velocity obstacle method within the Deep Deterministic Policy Gradient (DDPG) framework to optimize the reward mechanism, achieving a multi-objective balance between collision avoidance safety and navigation efficiency [27]. Xu et al. integrated prioritized experience replay into DDPG, dynamically adjusting sample weights to accelerate training convergence in critical collision avoidance scenarios [28]. Nevertheless, these methods still have limitations in training stability, convergence efficiency, or sensitivity to hyperparameters.

In contrast, the proximal policy optimization (PPO) algorithm markedly improves training stability and convergence reliability through importance sampling and a policy clipping mechanism. Xia et al. constructed a multi-layer perceptual state space with convolutional neural networks, demonstrating the effectiveness of PPO in complex obstacle avoidance scenarios [29]. Sun et al. further extended the environmental perception capability of PPO-based obstacle avoidance, achieving stronger generalization in unfamiliar waters [30]. Although these studies show promising results, their reward function designs have notable limitations. First, reward design often relies heavily on researchers' empirical knowledge and lacks a systematic theoretical foundation and interpretability. Second, most existing works focus on collision avoidance safety while overlooking other navigation metrics such as path smoothness [31]. This not only produces convoluted USV trajectories but also lowers learning efficiency during training because of sparse rewards [32]. These limitations substantially constrain further improvement of overall algorithm performance. Consequently, developing a theoretically sound, interpretable, and multi-objective reward mechanism has become a crucial research direction for improving USV collision avoidance systems.

To address the aforementioned challenges, this paper proposes an obstacle avoidance scheme for USVs incorporating vision assistance from a UAV, and develops the DWA-PPO (DPPO) collision avoidance algorithm. The principal contributions of this work are summarized as follows:

(1). By leveraging the high-altitude perspective of UAVs, a high-dimensional state space characterizing obstacle distribution is constructed. This representation is integrated with a low-dimensional state space derived from the USV’s own state information, forming a hierarchical state space framework that enhances the comprehensiveness and reliability of environmental information for decision-making during navigation.

(2). By integrating DWA's trajectory evaluation, a multi-layered dense reward mechanism is established, combining heading, distance, and proximity rewards with compliance incentives based on the International Regulations for Preventing Collisions at Sea (COLREGs) to guide USVs toward safe, efficient, and regulation-compliant collision avoidance.

The remainder of this paper is organized as follows. Section 2 introduces the mathematical models of the UAV and USV and the COLREGs, and constructs the collision risk assessment model. Section 3 describes the proposed collision avoidance method in detail. Section 4 presents the design of the simulation experiments and analyzes the results. Finally, Section 5 concludes the paper and outlines future research directions.

2. Materials and Methods

2.1. Mathematical Models of UAV and USV

To describe the UAV and USV models, the coordinate system architecture shown in Figure 1 is established. The inertial coordinate system has its origin fixed at a specific point. Assuming that both the UAV and the USV are rigid bodies, a body coordinate system is attached to the UAV and a USV-attached coordinate system to the USV, with their origins positioned at the respective centers of mass. Based on this coordinate system architecture, the mathematical models of the UAV and the USV are established in Equations (1) and (2), respectively.

In Equation (1), the model parameters are the total mass of the UAV, its position in the inertial coordinate system, its roll, pitch, and yaw angles, the total thrust generated by the rotors, its moment of inertia, its angular velocity in the body coordinate system, and the air resistance coefficient.

In Equation (2), the state comprises the ship's position and heading angle in the inertial frame, together with its longitudinal (surge) velocity, lateral (sway) velocity, and angular velocity about the z-axis (yaw rate) in the body-fixed frame. The steering dynamics are governed by the yaw response time constant and the maneuverability index, and the model includes three disturbance terms: disturbances caused by unmodeled dynamics, inherent uncertainties in the internal model, and uncertain external disturbances. The control input is the rudder angle: a change in the rudder angle alters the forces acting on the ship, which in turn changes its yaw rate and thereby steers the ship.
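Equation (2) is not reproduced here, but its parameters (a yaw response time constant and a maneuverability index driven by the rudder angle) are those of the first-order Nomoto steering model, $T\dot{r}+r=K\delta$. The minimal sketch below integrates that model with forward Euler under simplifying assumptions made only for this illustration: constant surge speed, zero sway velocity, and all disturbance terms set to zero. The function name `nomoto_step` and the values of `K`, `T`, `u`, and `dt` are hypothetical.

```python
import math

def nomoto_step(x, y, psi, r, delta, u, K, T, dt):
    """One forward-Euler step of a simplified USV model (illustrative assumptions).

    Yaw: first-order Nomoto model T * dr/dt + r = K * delta, disturbances omitted.
    Translation: constant surge speed u, zero sway velocity.
    Angles in radians, distances in metres, time in seconds.
    """
    r_dot = (K * delta - r) / T          # yaw acceleration implied by the Nomoto model
    x_next = x + u * math.cos(psi) * dt  # inertial-frame kinematics
    y_next = y + u * math.sin(psi) * dt
    psi_next = psi + r * dt
    r_next = r + r_dot * dt
    return x_next, y_next, psi_next, r_next

# Hold a constant 10-degree rudder for 100 s; the yaw rate should settle at K * delta.
delta = math.radians(10.0)
state = (0.0, 0.0, 0.0, 0.0)             # (x, y, psi, r)
for _ in range(2000):
    state = nomoto_step(*state, delta=delta, u=2.0, K=0.5, T=3.0, dt=0.05)
```

With a constant rudder angle the simulated yaw rate converges to $K\delta$, the steady-state turning rate the Nomoto model predicts; the time constant $T$ only controls how quickly it gets there.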

2.2. Ship Domain Model

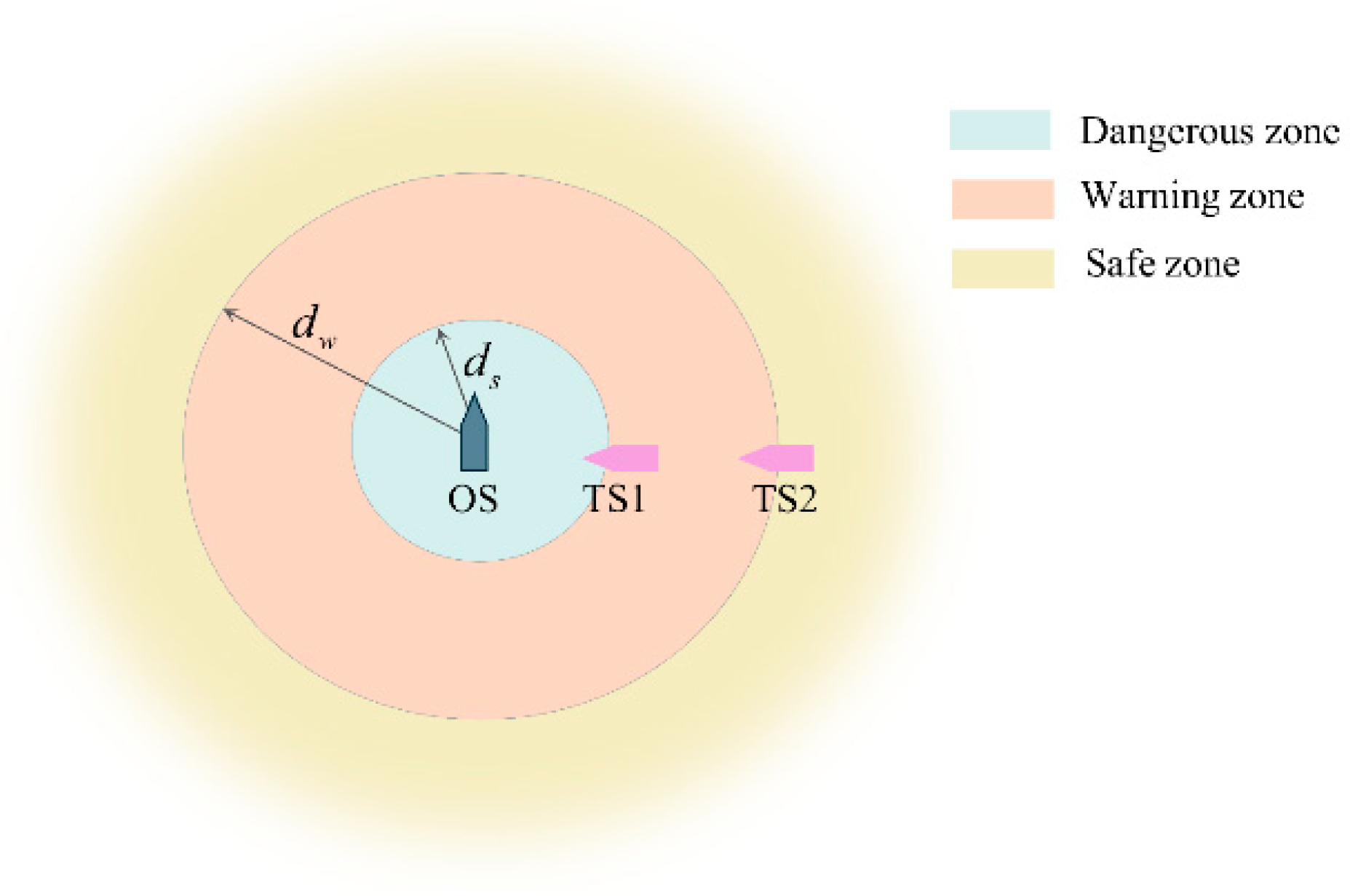

The ship domain serves as a critical criterion for assessing collision risk at sea. The intrusion of another vessel into this domain signifies a substantial increase in collision risk and makes immediate collision avoidance preparations mandatory. Drawing on the ship domain research of Lou et al. for USVs [27], this paper constructs a concentric circular ship domain model based on the Distance to Closest Point of Approach (DCPA), as illustrated in Figure 2. The model is centered on the USV and radiates outward, using two DCPA thresholds to define the risk zones: an inner threshold marks the boundary between the danger zone and the warning zone, and an outer threshold marks the boundary between the warning zone and the safe zone. These two thresholds clearly separate the collision risk levels. The characteristics of each zone and the corresponding collision avoidance strategies are as follows:

Safe zone: the region beyond the outer threshold. Here the USV has sufficient controllable maneuvering space, so collision avoidance measures are unnecessary.

Warning zone: the region between the inner and outer thresholds, which is the critical interval for collision avoidance decision-making. If the DCPA decreases over time, collision risk is increasing, and the USV must prepare to take avoiding action so that the other vessel does not enter the danger zone.

Danger zone: the region within the inner threshold. A vessel inside this region poses a direct threat to the navigation safety of the USV, and immediate emergency collision avoidance measures are required.

Based on this zone division, the USV can apply differentiated collision avoidance strategies according to the zone that an approaching vessel occupies, allowing collision risk to be managed in a graded manner.
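The zone assignment depends on the DCPA, which can be computed from the relative position and velocity of the two vessels. The sketch below uses the standard closest-point-of-approach formulas for constant-velocity motion and then maps the DCPA to a zone; the threshold values `d_danger` and `d_warn` are hypothetical placeholders, not the thresholds used in this paper.

```python
import math

def cpa(p_usv, v_usv, p_obs, v_obs):
    """Return (DCPA, TCPA) for two vessels moving at constant velocity."""
    rx, ry = p_obs[0] - p_usv[0], p_obs[1] - p_usv[1]   # relative position
    vx, vy = v_obs[0] - v_usv[0], v_obs[1] - v_usv[1]   # relative velocity
    v2 = vx * vx + vy * vy
    if v2 < 1e-12:                     # no relative motion: the distance never changes
        return math.hypot(rx, ry), 0.0
    tcpa = -(rx * vx + ry * vy) / v2   # negative TCPA means the vessels are separating
    dcpa = math.hypot(rx + vx * tcpa, ry + vy * tcpa)
    return dcpa, tcpa

def risk_zone(dcpa, d_danger=50.0, d_warn=150.0):
    """Map a DCPA value (m) to the danger / warning / safe zone (hypothetical thresholds)."""
    if dcpa <= d_danger:
        return "danger"
    if dcpa <= d_warn:
        return "warning"
    return "safe"

# Obstacle 100 m ahead and 20 m to the side, closing at 10 m/s.
dcpa, tcpa = cpa((0.0, 0.0), (5.0, 0.0), (100.0, 20.0), (-5.0, 0.0))
zone = risk_zone(dcpa)   # dcpa = 20.0, tcpa = 10.0, zone = "danger"
```

When TCPA is negative the closest approach has already passed; an implementation may prefer to treat such encounters as non-threatening, a choice this sketch leaves open.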

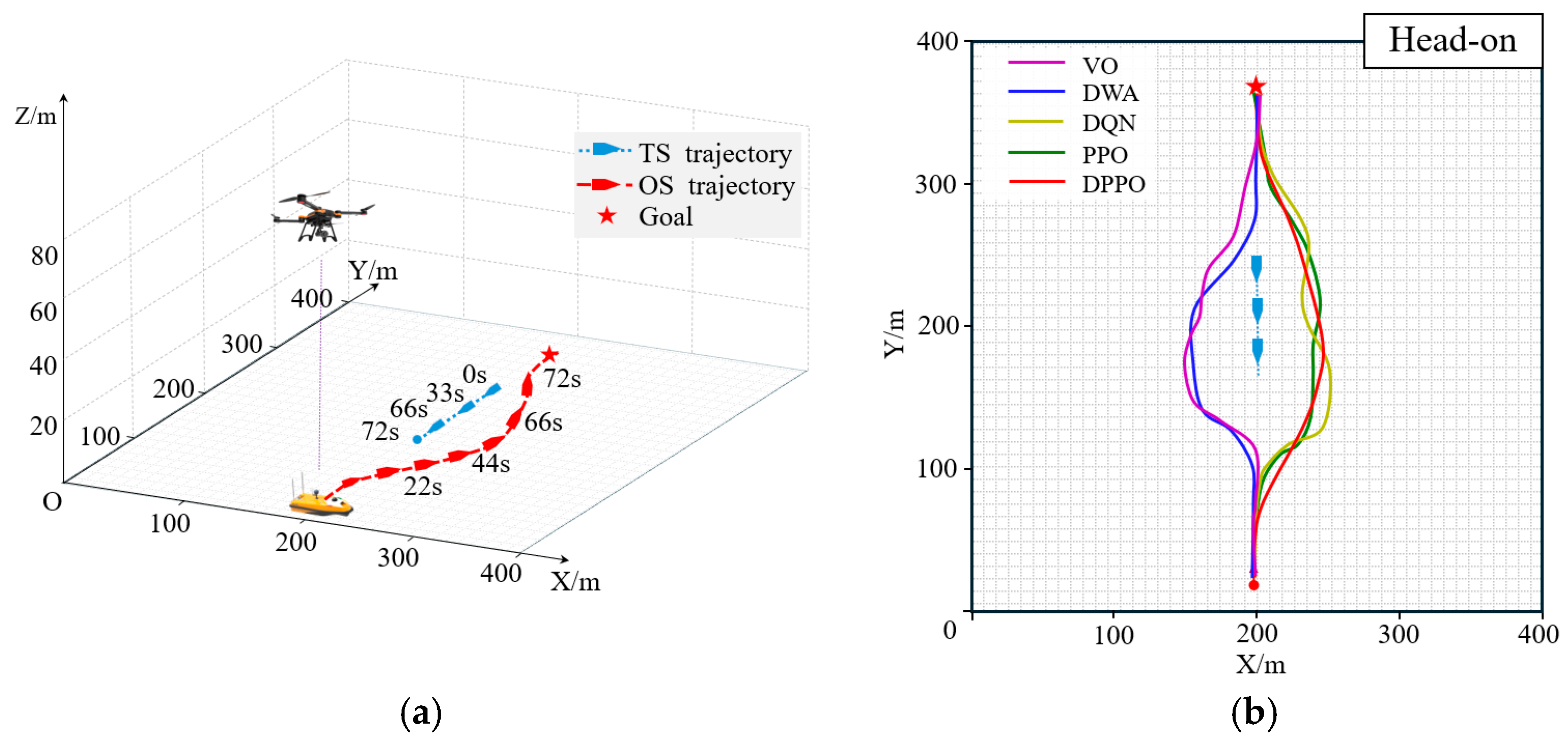

2.3. COLREGs

The core objective of COLREGs is to standardize ship operations to prevent maritime collisions. In the research of autonomous USV collision avoidance, strictly adhering to COLREGs rules is fundamental to ensuring safe navigation [

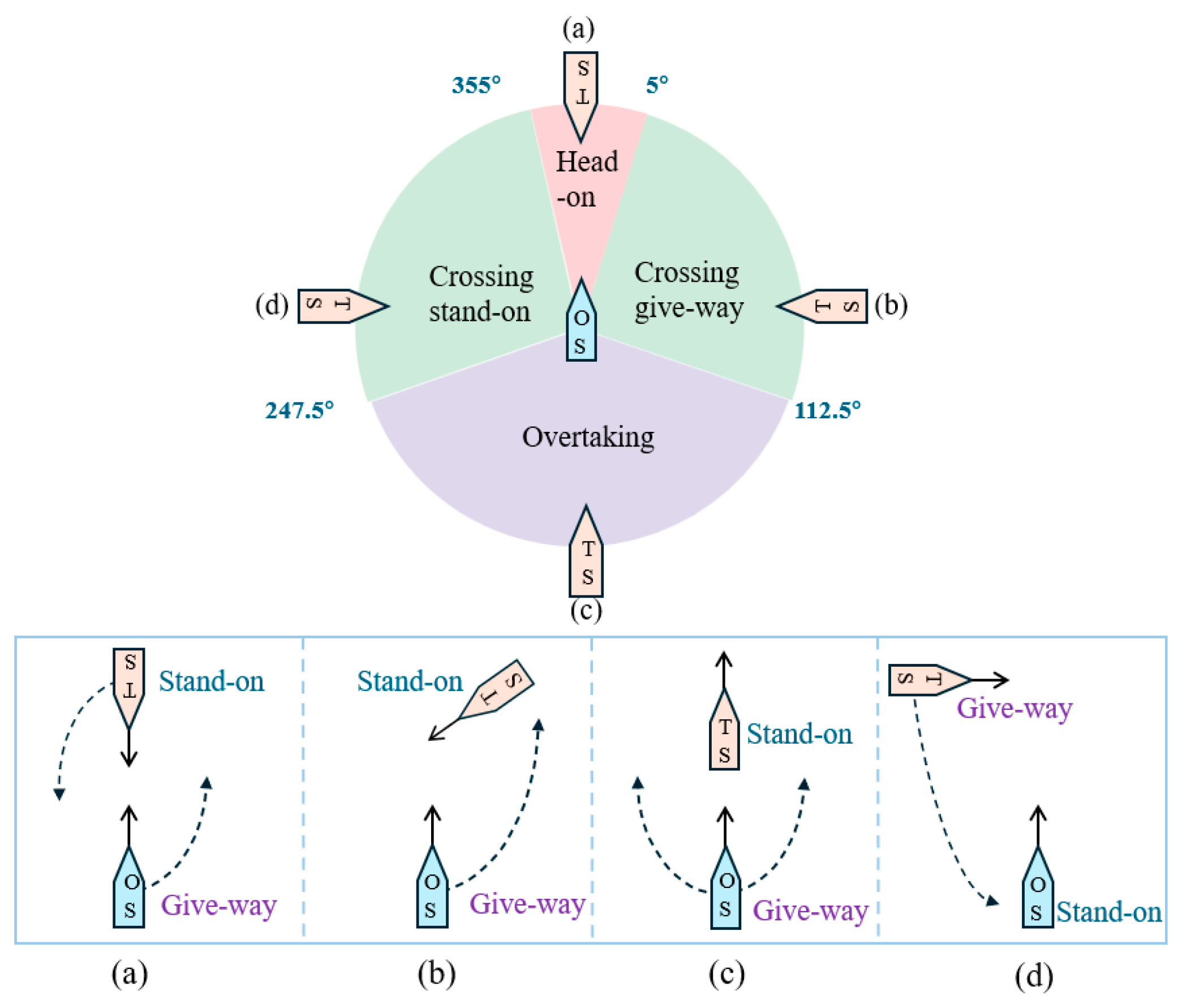

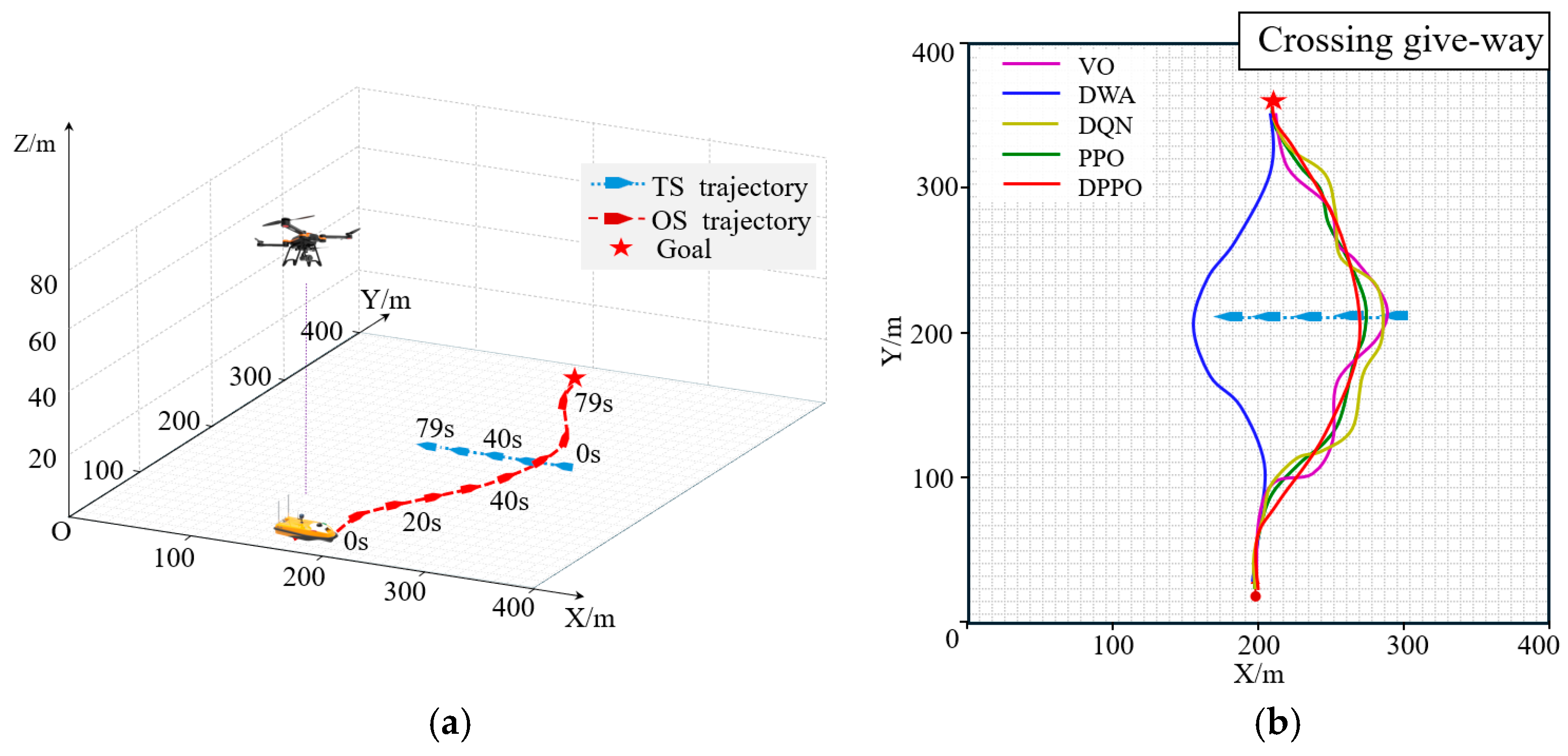

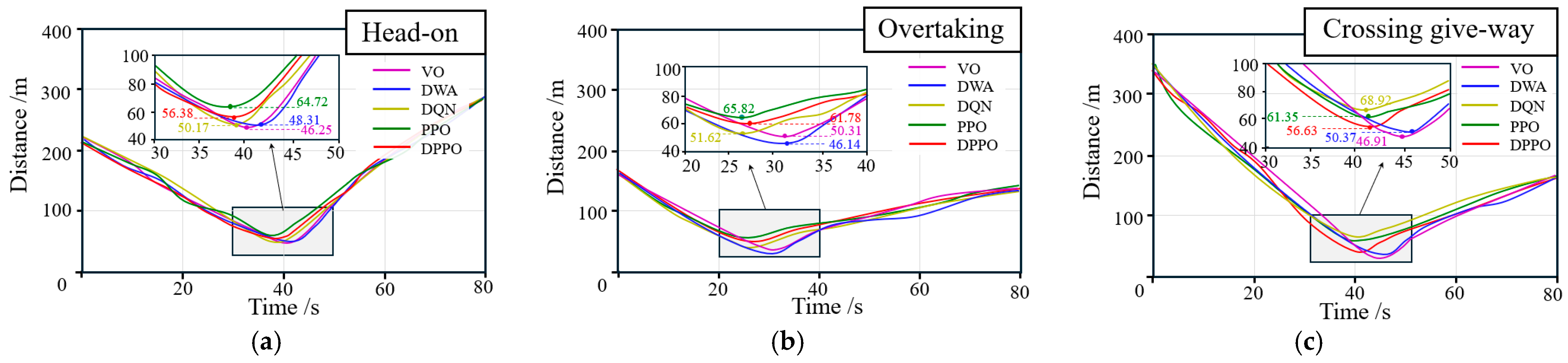

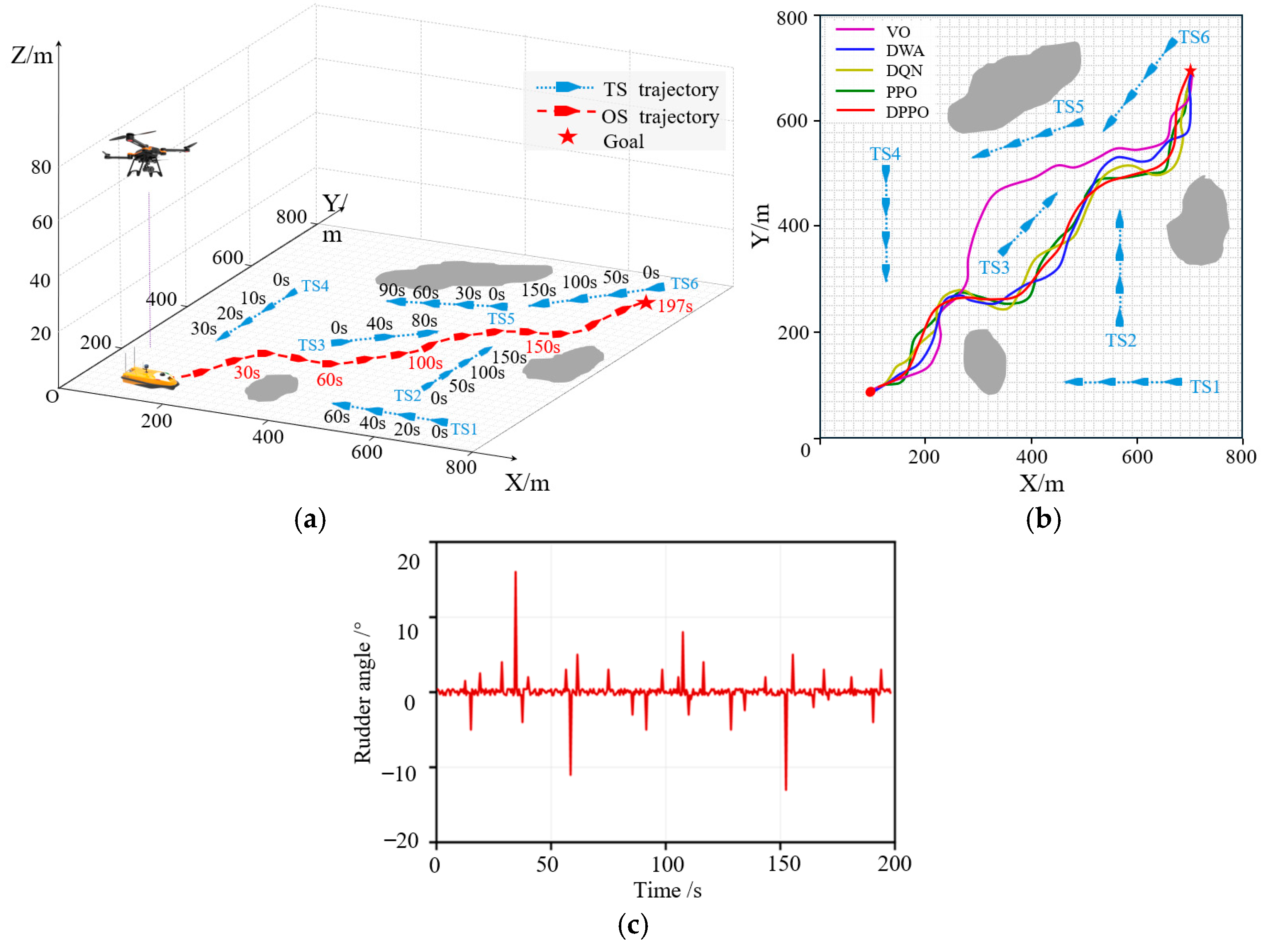

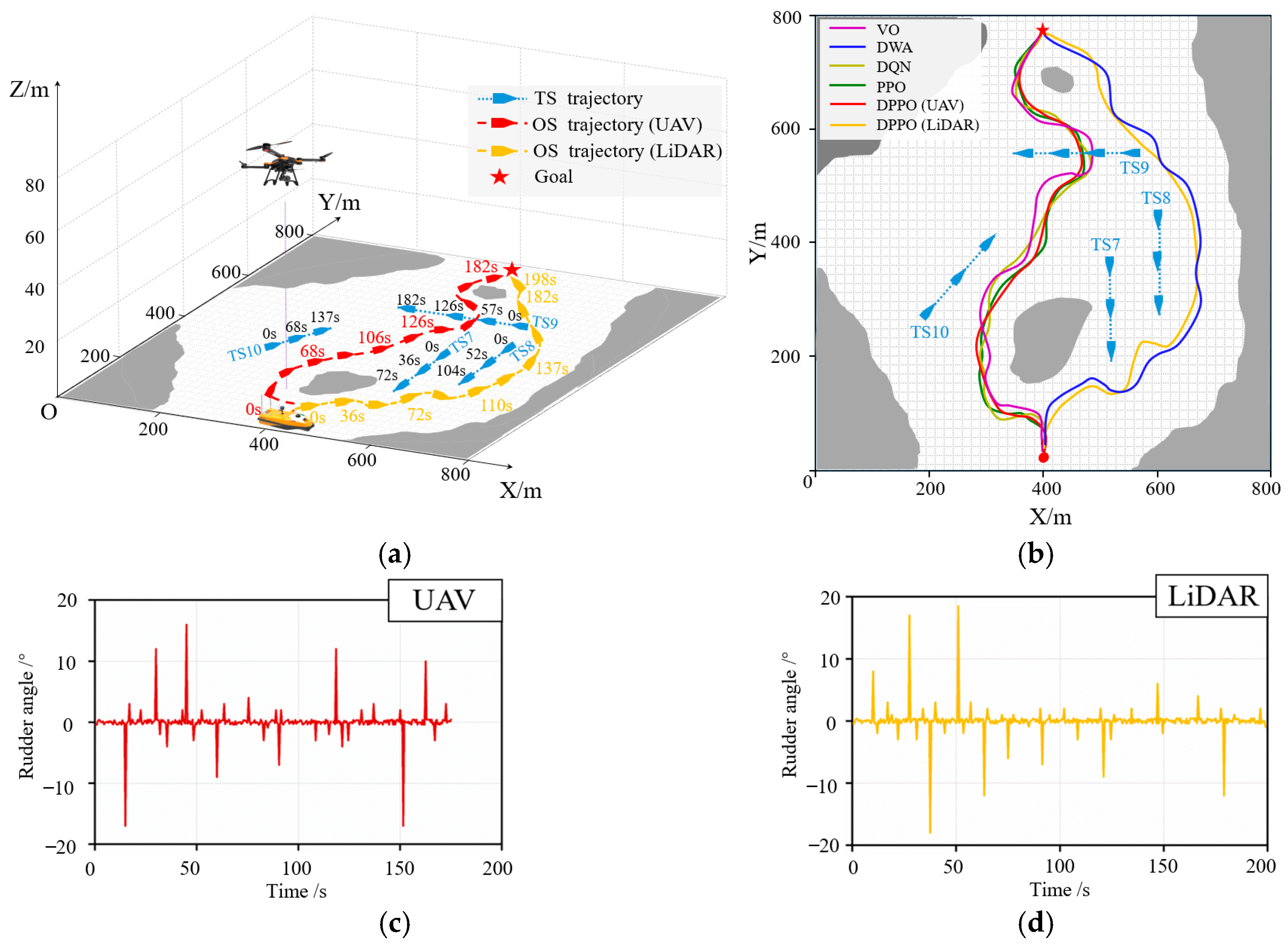

28]. This paper focuses on rules 13 to 17, which are directly related to ship collision avoidance. These rules clearly define the responsibilities for collision avoidance in three typical encounter scenarios: head-on encounter, overtaking encounter, and crossing encounter. As shown in

Figure 3, in a head-on encounter, both ships must turn to starboard. During an overtaking encounter, the overtaking ship is the give-way ship. In a crossing encounter, the ship with another ship on its starboard side is the stand-on ship, while the ship with another ship on its port side is the give-way ship.
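Encounter types are commonly identified from relative bearings. The sketch below is one simplified way to do this, not the procedure used in this paper: it applies the Rule 13 criterion of "more than 22.5 degrees abaft the beam" (relative bearings strictly between 112.5° and 247.5°) and an assumed ±6° head-on sector, a tolerance the rules do not fix numerically. Relative bearings are measured clockwise from each vessel's bow, and the function names are hypothetical.

```python
def _ahead(bearing_deg, tol_deg):
    """True if a relative bearing (deg, clockwise from the bow) is within +/- tol_deg of the bow."""
    b = bearing_deg % 360.0
    return b <= tol_deg or b >= 360.0 - tol_deg

def classify_encounter(brg_target, brg_own_from_target, head_on_tol=6.0):
    """Return (encounter, own_role) from the own ship's point of view.

    brg_target: bearing of the target relative to the own ship's bow (deg).
    brg_own_from_target: bearing of the own ship relative to the target's bow (deg).
    head_on_tol: assumed half-width of the head-on sector (not fixed by COLREGs).
    """
    bt = brg_target % 360.0
    bo = brg_own_from_target % 360.0
    if _ahead(bt, head_on_tol) and _ahead(bo, head_on_tol):
        return "head-on", "give-way"      # Rule 14: both ships alter course to starboard
    if 112.5 < bo < 247.5:
        return "overtaking", "give-way"   # own ship is overtaking the target (Rule 13)
    if 112.5 < bt < 247.5:
        return "overtaken", "stand-on"    # target is overtaking the own ship (Rules 13, 17)
    if bt <= 112.5:
        return "crossing", "give-way"     # target on own starboard side (Rule 15)
    return "crossing", "stand-on"         # target on own port side (Rules 15, 17)

print(classify_encounter(45.0, 290.0))    # ('crossing', 'give-way')
```

In the example the target lies 45° on the own ship's starboard bow, so under Rule 15 the own ship must keep out of the way.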

3. Proposed Approach

3.1. Improved PPO Algorithm

The PPO algorithm relies on importance sampling and a policy clipping mechanism, which markedly improve training stability and reduce sensitivity to hyperparameters, and it has performed well in USV collision avoidance scenarios [22,29,30]. However, it still suffers from relatively low sample efficiency and an imbalance between exploration and exploitation, so further optimization is needed.

The core goal of PPO is to solve the optimal policy to maximize the expected value of the long-term cumulative discounted reward. Its objective function is:

where

is the environmental state at time

,

is the action executed by the intelligent agent,

is the immediate reward, and

is the discount factor.

According to the policy gradient theorem, the gradient of the objective function is expressed as:

where

is the advantage function, which is used to guide the policy gradient update direction.

To address the problem of low sample efficiency of traditional policy gradient algorithms, PPO introduces the importance sampling technique, allowing the use of samples generated by the old policy to optimize the new policy. At this time, the objective function is converted to:

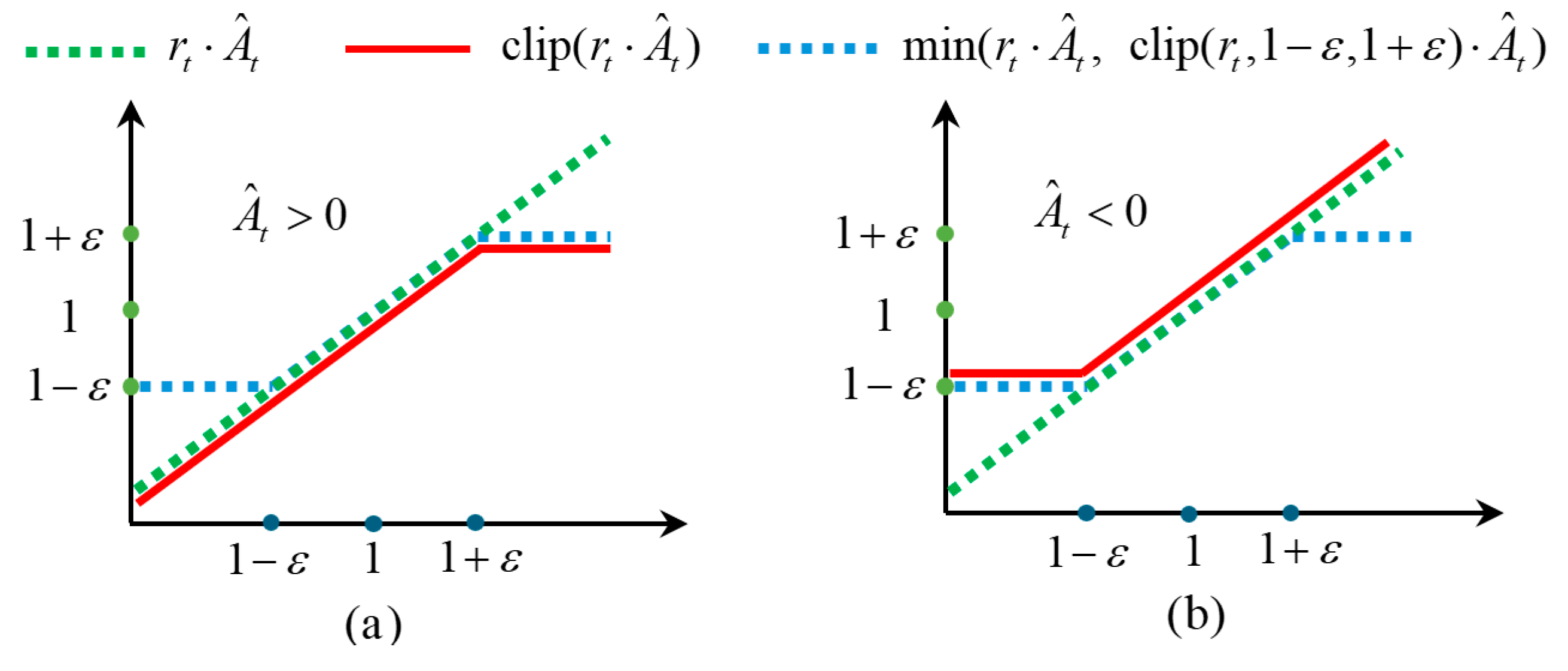

To prevent the performance degradation caused by an overly large policy update step, PPO adopts a clipping mechanism and modifies the objective function to:

where

represents the policy probability ratio,

is the estimated value of the advantage function,

is the clipping function, which limits the probability ratio within the interval

, and

is a hyperparameter. The two clipping situations are shown in

Figure 4.
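The clipped surrogate can be evaluated on a batch of samples in a few lines. The minimal sketch below works from log-probabilities, a common implementation choice, and uses plain Python lists; the function name and the default clipping hyperparameter ε = 0.2 are illustrative assumptions rather than values from this paper.

```python
import math

def clipped_surrogate(logp_new, logp_old, advantages, eps=0.2):
    """Mean PPO clipped surrogate objective over a batch (to be maximized)."""
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)                # probability ratio pi_new / pi_old
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)  # clip(ratio, 1 - eps, 1 + eps)
        total += min(ratio * adv, clipped * adv)         # pessimistic (lower) bound
    return total / len(advantages)

# Sample 1: ratio 1.5 with positive advantage, capped at 1 + eps -> 1.2.
# Sample 2: ratio 0.5 with negative advantage, floored at 1 - eps -> -0.8.
obj = clipped_surrogate([math.log(1.5), math.log(0.5)], [0.0, 0.0], [1.0, -1.0])
```

The two samples mirror the two clipping cases: once the ratio moves past the clip boundary in the direction favored by the advantage, the objective stops rewarding further movement, which keeps each update close to the old policy.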

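To further enhance exploration, this paper adds a policy entropy term to PPO, which broadens the policy search space and increases the probability of discovering higher-return policies. The improved objective function is:

$$L^{\text{CLIP+VF+S}}(\theta)=\hat{\mathbb{E}}_t\left[L_t^{\text{CLIP}}(\theta)-c_1L_t^{\text{VF}}(\theta)+c_2S[\pi_\theta](s_t)\right]$$

where $L_t^{\text{CLIP}}(\theta)$ is the per-sample clipped surrogate objective defined above, $S[\pi_\theta](s_t)$ is the policy entropy, $c_1$ is the weight coefficient of the value function loss, $c_2$ is the entropy coefficient, and $L_t^{\text{VF}}(\theta)$ is the value function loss:

$$L_t^{\text{VF}}(\theta)=\left(V_\theta(s_t)-\hat{R}_t\right)^2,\qquad \hat{R}_t=\hat{A}_t^{\text{GAE}}+V_{\theta_{\text{old}}}(s_t)$$

where $\hat{A}_t^{\text{GAE}}$ is the generalized advantage estimate.

Finally, the algorithm balances policy improvement and value estimation by optimizing the policy objective and the value function loss simultaneously. Its complete optimization objective is:

$$\theta^{*}=\arg\max_{\theta}L^{\text{CLIP+VF+S}}(\theta)$$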
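The remaining quantities in the complete objective (the generalized advantage estimate, the value function loss, and the policy entropy) can be computed as sketched below. This sketch assumes a one-dimensional Gaussian policy over the rudder action, so the entropy has the closed form $\tfrac{1}{2}\ln(2\pi e\sigma^2)$, and uses illustrative defaults γ = 0.99, λ = 0.95, c1 = 0.5, and c2 = 0.01; none of these values are taken from this paper. In a gradient-descent implementation, the negative of `total_objective` is minimized.

```python
import math

def gae(rewards, values, dones, last_value, gamma=0.99, lam=0.95):
    """Generalized advantage estimates and return targets R_t = A_t + V(s_t)."""
    advantages = [0.0] * len(rewards)
    running = 0.0
    next_value = last_value               # bootstrap value after the final step
    for t in reversed(range(len(rewards))):
        not_done = 0.0 if dones[t] else 1.0
        delta = rewards[t] + gamma * next_value * not_done - values[t]  # TD residual
        running = delta + gamma * lam * not_done * running
        advantages[t] = running
        next_value = values[t]
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns

def value_loss(values, returns):
    """Mean squared error between V(s_t) and the GAE return targets."""
    return sum((v - r) ** 2 for v, r in zip(values, returns)) / len(values)

def gaussian_entropy(sigma):
    """Entropy of a 1-D Gaussian policy with standard deviation sigma."""
    return 0.5 * math.log(2.0 * math.pi * math.e * sigma ** 2)

def total_objective(surrogate, v_loss, entropy, c1=0.5, c2=0.01):
    """Clipped surrogate - c1 * value loss + c2 * entropy, to be maximized."""
    return surrogate - c1 * v_loss + c2 * entropy

adv, ret = gae([1.0, 1.0], [0.0, 0.0], [False, True], last_value=5.0, gamma=0.9, lam=1.0)
```

With λ = 1 and zero value estimates, the advantages reduce to discounted returns (here 1 + 0.9 = 1.9 and 1.0), and the terminal flag correctly prevents bootstrapping from `last_value`.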

3.2. State Space Design

State space design is a core component of collision avoidance decision-making for USVs, and its representational capability directly impacts the algorithm’s decision-making performance. This paper divides the state space into two parts: a high-dimensional state space and a low-dimensional state space, aiming to achieve precise characterization of environmental information.

The high-dimensional state space characterizes the spatial distribution and dynamic changes of obstacles around the USV, providing the environmental perception foundation for subsequent collision avoidance decisions. Because USV-borne sensors are prone to occlusion in complex maritime environments, this study exploits the high-altitude visual perception of the UAV and constructs the high-dimensional state space primarily from UAV-acquired images. This design rests on a core premise: the UAV can track the USV stably with small tracking errors while capturing environmental information centered on the USV [33,34,35]. To satisfy this premise, the nonlinear model predictive control (NMPC) approach proposed in [10] is adopted to ensure reliable and stable tracking of the USV by the UAV.

In the specific implementation, the camera mounted on the UAV collects real-time overhead images centered on the USV, covering an area of 400 m × 400 m. The image resolution is chosen so that the contours and positions of both static obstacles and dynamic targets in the water area can be clearly distinguished [36]. A single frame, however, cannot capture the motion trends of obstacles, which may lead the decision-making system to misjudge dynamic risks. To address this issue, this paper introduces a sliding time window: the high-dimensional state space is constructed from a sequence of three consecutive frames and is defined as

$$S_t^{\text{high}}=\left\{I_{t-2},\,I_{t-1},\,I_t\right\}$$

where $I_{t-2}$, $I_{t-1}$, and $I_t$ represent the images collected at times $t-2$, $t-1$, and $t$, respectively.
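The sliding time window amounts to keeping a fixed-length queue of the most recent frames. The minimal sketch below uses `collections.deque` with `maxlen=3`, so the oldest frame is discarded automatically when a new one arrives. The class name `FrameStack` is hypothetical, and padding the window with the first frame at the start of an episode is an assumption, since the paper does not state how the first two steps are handled. Strings stand in for image arrays.

```python
from collections import deque

class FrameStack:
    """Keep the three most recent UAV frames as the high-dimensional state."""

    def __init__(self, k=3):
        self.k = k
        self.frames = deque(maxlen=k)   # oldest frame drops out automatically

    def reset(self, first_frame):
        """Start an episode by padding the window with the first frame (assumed)."""
        self.frames.clear()
        for _ in range(self.k):
            self.frames.append(first_frame)
        return list(self.frames)

    def push(self, frame):
        """Add the newest frame and return [I_{t-2}, I_{t-1}, I_t]."""
        self.frames.append(frame)
        return list(self.frames)

stack = FrameStack()
stack.reset("frame_0")
stack.push("frame_1")
window = stack.push("frame_2")  # ['frame_0', 'frame_1', 'frame_2']
```

In practice the three frames would typically be stacked along the channel axis before being passed to the convolutional feature extractor.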

The low-dimensional state space supplements the USV's own state information, including its position $(x, y)$, heading angle $\psi$, and target location $(x_g, y_g)$. Since such information is not suited to image representation, the low-dimensional state space is constructed from data collected by the USV's onboard sensors and is defined as

$$S_t^{\text{low}}=\left[x,\,y,\,\psi,\,x_g,\,y_g\right]$$

3.3. Action Space Design

The design of the action space takes full account of the physical characteristics of the USV's actual control system: a continuous range of rudder angles is selected as the action space. At each decision step, the algorithm outputs a scalar action representing the desired rudder angle at the current timestep. According to Equation (2), changes in the rudder angle directly affect the USV's yaw rate, enabling it to adjust its heading to avoid obstacles or navigate autonomously toward the target.
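The Actor network's final Tanh layer (Section 3.4) produces values in [−1, 1], but the paper does not state how these are scaled to a physical rudder angle. A common choice, assumed here, is a linear map onto a symmetric rudder limit. `MAX_RUDDER_DEG = 35.0` is a hypothetical value chosen only for illustration, and the clamp guards against sampled actions that fall outside the Tanh range.

```python
import math

MAX_RUDDER_DEG = 35.0  # hypothetical rudder limit, not taken from the paper

def action_to_rudder(a, max_rudder_deg=MAX_RUDDER_DEG):
    """Map a normalized action in [-1, 1] to a rudder angle in radians."""
    a = max(-1.0, min(1.0, a))          # clamp samples that exceed the Tanh range
    return a * math.radians(max_rudder_deg)

full_starboard = action_to_rudder(1.0)  # equals math.radians(35.0)
```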

3.4. Neural Network Architecture

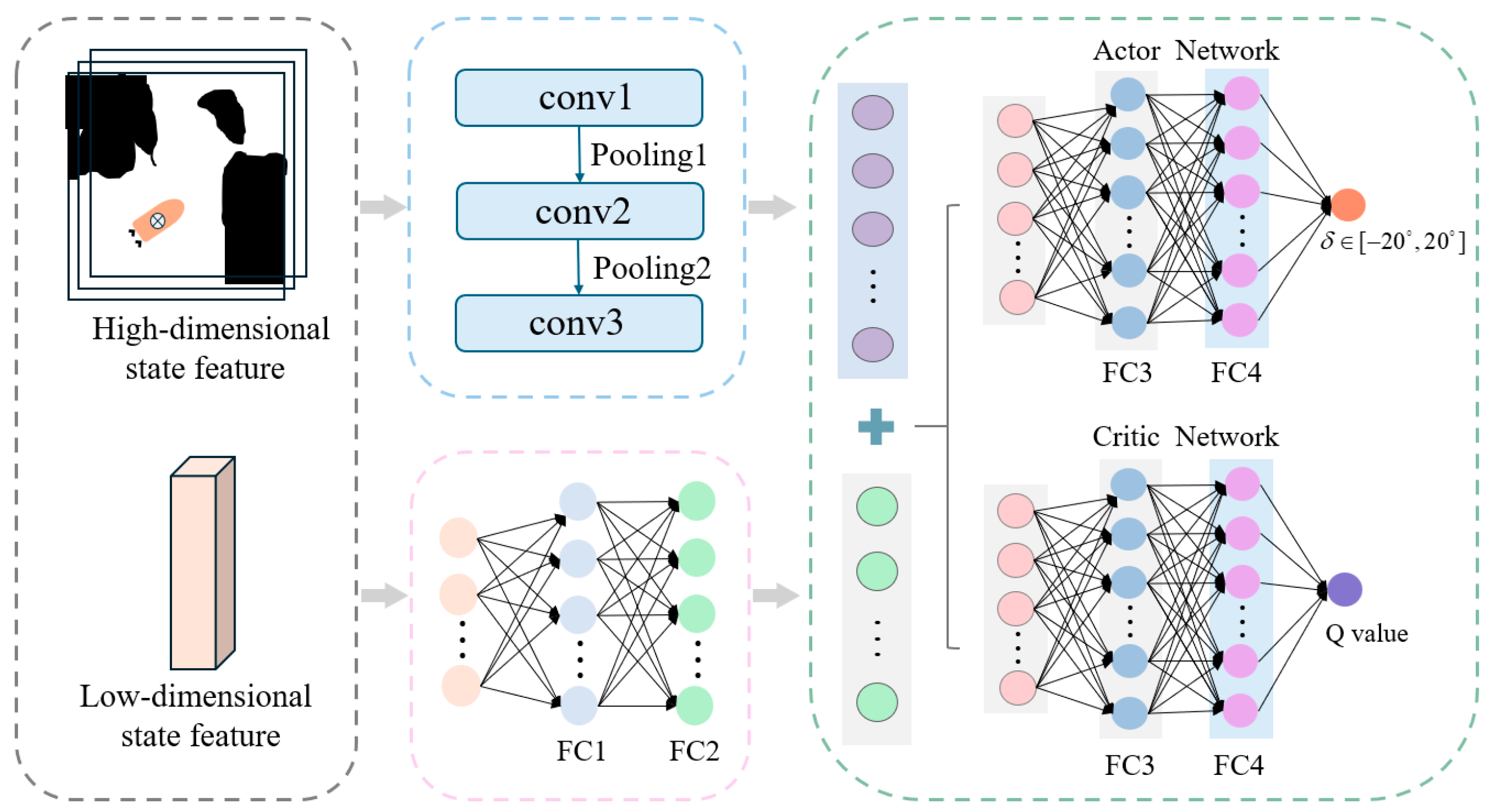

The neural network architecture is built around multi-level feature fusion: high-dimensional and low-dimensional state information are processed jointly to characterize complex aquatic environments precisely and support efficient decision-making. The detailed architecture is shown in Figure 5.

For the high-dimensional state space derived from image data, feature extraction is performed using a three-layer convolutional neural network. The first convolutional layer utilizes 16 filters of size 3 × 3 with a stride of 2, followed by a ReLU activation function and a 2 × 2 max-pooling operation, to capture initial local features representing obstacle distribution and motion trends. The second layer employs 32 filters of size 3 × 3 with a stride of 2, further enhancing feature abstraction while reducing dimensionality via an additional max-pooling layer. The third layer consists of 64 filters of size 3 × 3 with a stride of 1, producing a high-dimensional feature map that is subsequently flattened into a one-dimensional feature vector, thereby preserving information on both the static distribution and dynamic movement patterns of obstacles.

For the low-dimensional state space, a two-layer fully connected network is used. The first layer maps the raw low-dimensional vector into a 64-dimensional feature space, and the second layer compresses it into a 32-dimensional vector, improving nonlinear feature expression and producing the final low-dimensional feature vector.

The high-dimensional and low-dimensional feature vectors are concatenated into a fused feature vector, which is fed into both the Actor network and the Critic network. The Actor network consists of three fully connected layers: the first two use ReLU activation, while the final layer applies a Tanh activation to output a continuous rudder angle that directly guides the USV's steering. The Critic network also has three fully connected layers and outputs a state-value estimate used to evaluate the current policy and guide policy updates. Detailed network parameter configurations are listed in Table 1.
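The size of the flattened CNN feature vector, and hence of the fused vector, follows from the layer settings above. The worked example below traces them in plain Python for a hypothetical 84 × 84 input, assuming zero padding and 2 × 2 max pooling with stride 2; the actual image resolution and padding are not given in this section, so the numbers are illustrative only. The number of input channels (for example, three stacked frames) does not affect the spatial sizes.

```python
def conv_out(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

def fused_feature_dim(h, w):
    """Trace the CNN branch and return (cnn_dim, fused_dim); zero padding assumed."""
    # Conv1: 16 filters, 3x3, stride 2, then 2x2 max pooling (stride 2)
    h, w = conv_out(h, 3, 2), conv_out(w, 3, 2)
    h, w = conv_out(h, 2, 2), conv_out(w, 2, 2)
    # Conv2: 32 filters, 3x3, stride 2, then 2x2 max pooling (stride 2)
    h, w = conv_out(h, 3, 2), conv_out(w, 3, 2)
    h, w = conv_out(h, 2, 2), conv_out(w, 2, 2)
    # Conv3: 64 filters, 3x3, stride 1, then flatten
    h, w = conv_out(h, 3, 1), conv_out(w, 3, 1)
    cnn_dim = 64 * h * w
    low_dim = 32   # output of the two-layer MLP (64 -> 32)
    return cnn_dim, cnn_dim + low_dim

print(fused_feature_dim(84, 84))  # (256, 288)
```

For this input the spatial size shrinks as 84 → 41 → 20 → 9 → 4 → 2, giving a 64 × 2 × 2 = 256-dimensional image feature and a 288-dimensional fused vector for the Actor and Critic heads.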

3.5. The Novel Reward Mechanisms

The design of the reward function directly determines the convergence efficiency and decision-making performance of the agent's policy. In this paper, the collision penalty and the arrival (terminal) reward $r_{\text{ca}}$ serve as the main rewards, and a process reward $r_{\text{p}}$ based on the trajectory evaluation function of the DWA is introduced to provide denser feedback signals to the USV [32]. In addition, to encourage collision avoidance behavior that conforms to COLREGs, a dedicated COLREGs reward $r_{\text{c}}$ is designed as an auxiliary term, guiding the USV toward safe and compliant autonomous collision avoidance in complex environments. The components of the reward are as follows.

3.5.1. Process Rewards

The process reward $r_{\text{p}}$ generates dense feedback signals by quantifying the navigation status in real time. This reward is designed based on the content of Section 3.1 and consists of three components: a heading angle reward, an obstacle distance reward, and a target proximity reward. The specific design is as follows:

(1). Heading Angle Reward: By quantifying the deviation between the USV's current heading and the target direction, this term provides a continuous directional guidance signal. A positive reward is given when the USV's heading approaches the target direction, while a negative reward is applied to suppress inefficient paths when significant detours occur. The heading angle reward $r_{\text{h}}$ is a function of the heading deviation $\psi-\psi_g$, where $w_{\text{h}}$ is the weight of the heading reward, $\psi$ is the current heading angle, and $\psi_g$ is the bearing of the target position relative to the USV.

(2). Obstacle Distance Reward: Based on the layered risk zones (danger, warning, and safe) of the ship domain model in Section 2.2, a dynamic reward mechanism is designed. When an obstacle vessel is in the danger or warning zone, a linear distance-based penalty is applied, with a higher weight in the danger zone; no penalty is imposed when the obstacle is in the safe zone, so the reward and penalty signals are clearly distinguished across zones. The obstacle distance reward $r_{\text{d}}$ is parameterized by $w_{\text{dz}}$ and $w_{\text{wz}}$, the reward weights for the danger zone and the warning zone, respectively, and by $d$, the distance between the USV and the obstacle.

(3). Target Proximity Reward: This term evaluates the change in Euclidean distance between the USV and the target point. If the USV is closer to the target at the current moment than at the previous moment, a positive reward encourages it to keep approaching the target; if obstacle avoidance or other maneuvers increase the distance, a negative reward discourages excessive detours. The target proximity reward $r_{\text{g}}$ is defined as

$$r_{\text{g}}=w_{\text{g}}\left(d_{t-1}-d_t\right)$$

where $w_{\text{g}}$ is the weight of the target proximity reward, $d_t$ is the distance from the USV to the target position at the current moment, and $d_{t-1}$ is the distance from the USV to the target position at the previous moment.
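The three process-reward components can be sketched as follows. Only their qualitative behavior is taken from the text above; the specific functional forms are assumptions made for illustration: a cosine of the heading deviation for the heading term, a piecewise-linear penalty normalized by the warning threshold for the distance term, and a linear distance difference for the proximity term. All weights and thresholds are hypothetical, and only the nearest obstacle is considered.

```python
import math

def heading_reward(psi, psi_goal, w_h=1.0):
    """Assumed form: +w_h when heading at the goal, -w_h when heading away."""
    return w_h * math.cos(psi - psi_goal)

def distance_reward(d, d_danger, d_warn, w_danger=2.0, w_warn=0.5):
    """Assumed piecewise-linear penalty, heavier inside the danger zone."""
    if d >= d_warn:
        return 0.0                         # safe zone: no penalty
    w = w_danger if d < d_danger else w_warn
    return -w * (d_warn - d) / d_warn      # grows linearly as the obstacle closes in

def proximity_reward(d_prev, d_curr, w_g=1.0):
    """Positive when the USV moved closer to the goal, negative when it moved away."""
    return w_g * (d_prev - d_curr)

def process_reward(psi, psi_goal, d_obs, d_danger, d_warn, d_prev, d_curr):
    """Sum of the three dense process-reward components."""
    return (heading_reward(psi, psi_goal)
            + distance_reward(d_obs, d_danger, d_warn)
            + proximity_reward(d_prev, d_curr))

r = process_reward(0.0, 0.0, 200.0, 50.0, 150.0, 10.0, 8.0)  # 1.0 + 0.0 + 2.0 = 3.0
```

Because every term is nonzero during most of an episode, the agent receives feedback at each step rather than only at collision or arrival, which is the motivation for the process reward.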

Remark 1. The reward weights are determined by combining task priority analysis with experimental tuning. First, initial ranges for the coefficients are set according to the relative importance of each navigation objective, so that the rewards for core tasks dominate the learning signal. A series of controlled experiments is then used to select the coefficient configuration that yields the best path efficiency. This procedure helps ensure that the reward function effectively guides the USV toward safe and efficient obstacle avoidance decisions.

3.5.2. Collision and Arrival Reward

The collision reward penalizes collisions to enhance navigation safety, while the arrival reward provides a positive incentive when the USV reaches the target, making the navigation objective explicit. Together they form the fundamental framework of the reward function and keep the obstacle avoidance behavior safe and task oriented. The collision and arrival reward $r_{\text{ca}}$ takes the collision penalty value $R_{\text{col}}$ when the USV collides, the arrival reward value $R_{\text{arr}}$ when the USV position $\mathbf{p}$ reaches the target position $\mathbf{p}_g$, and zero otherwise.

3.5.3. COLREGs Reward

The COLREGs reward $r_{\text{c}}$, based on COLREGs Rules 13–17, guides the USV to take collision avoidance actions that comply with the regulations during interactive decision-making. A negative reward, whose magnitude is set by the COLREGs reward value $R_{\text{c}}$, is given when the USV's collision avoidance maneuver violates COLREGs.

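The overall reward is the sum of these components:

$$r_t=r_{\text{ca}}+r_{\text{p}}+r_{\text{c}},\qquad r_{\text{p}}=r_{\text{h}}+r_{\text{d}}+r_{\text{g}}$$

where $r_{\text{ca}}$, $r_{\text{p}}$, and $r_{\text{c}}$ are the collision and arrival, process, and COLREGs rewards, and $r_{\text{h}}$, $r_{\text{d}}$, and $r_{\text{g}}$ are the heading angle, obstacle distance, and target proximity rewards, respectively.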

Remark 2. Under conventional reward mechanisms, the USV receives explicit reward signals only when a collision occurs or when it reaches the target, and effective guidance is lacking during intermediate navigation phases. This frequently leads to aimless random exploration and considerably prolongs training. In this paper, a DWA-based process reward provides continuous, real-time feedback along three dimensions (heading angle deviation, obstacle distance, and target proximity), offering guidance throughout the voyage. This reduces ineffective exploration and speeds up policy convergence. Furthermore, combining COLREGs-based reward terms with the process reward establishes a dual-constraint mechanism that balances navigation efficiency and regulatory compliance, yielding a collision avoidance strategy that is both efficient and COLREGs compliant.
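The sparse collision and arrival term and the COLREGs term can be combined with a precomputed process reward as sketched below. The reward values and the violation test are hypothetical: the sketch flags a violation only when the own ship turns to port in a head-on encounter or as the give-way vessel in a crossing, a simplification of Rules 13–17, and it assumes that a positive yaw rate means turning to starboard.

```python
def terminal_reward(collided, reached, r_col=-100.0, r_arr=100.0):
    """Collision and arrival term: nonzero only at the end of an episode."""
    if collided:
        return r_col
    if reached:
        return r_arr
    return 0.0

def colregs_reward(encounter, role, yaw_rate, penalty=1.0):
    """Penalize a port turn where a starboard turn is expected (simplified check).

    Sign convention (assumed): positive yaw_rate means turning to starboard.
    """
    must_turn_starboard = encounter == "head-on" or (
        encounter == "crossing" and role == "give-way")
    if must_turn_starboard and yaw_rate < 0.0:
        return -penalty
    return 0.0

def total_reward(r_process, collided, reached, encounter, role, yaw_rate):
    """Collision/arrival term + process term + COLREGs term."""
    return (terminal_reward(collided, reached)
            + r_process
            + colregs_reward(encounter, role, yaw_rate))

r_step = total_reward(0.5, False, False, "head-on", "give-way", -0.1)  # 0.5 - 1.0 = -0.5
```

Keeping each term in its own function makes it straightforward to tune weights independently, in the spirit of the controlled-experiment procedure described in Remark 1.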

3.6. The Algorithm Flow of DPPO

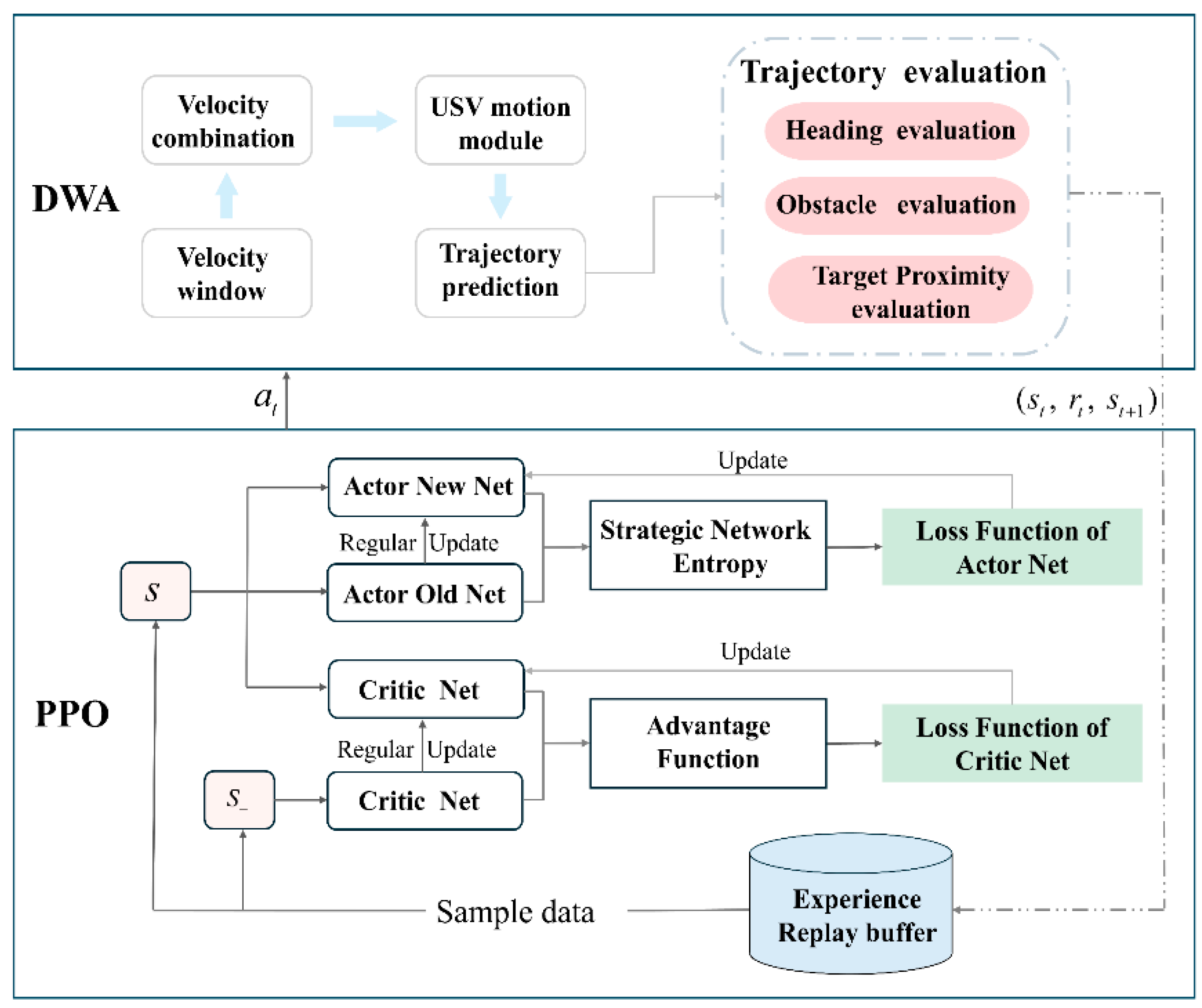

The algorithmic flow of DPPO is illustrated in Figure 6 and consists of three stages.

In the first stage, the parameters of the policy network and the value network are initialized, hyperparameters such as the discount factor $\gamma$ and the clipping coefficient $\epsilon$ are set, and an experience buffer $\mathcal{D}$ is established to store transitions $(s_t,a_t,r_t,s_{t+1})$.

In the second stage, the Actor network generates an action $a_t$ based on the current state $s_t$. This action is executed to produce a predicted trajectory, and the reward $r_t$ is calculated with the reward function designed from the DWA trajectory evaluation. The next state $s_{t+1}$ is obtained, and the transition $(s_t,a_t,r_t,s_{t+1})$ is stored in the experience buffer $\mathcal{D}$.

In the third stage, data sampled from the experience buffer $\mathcal{D}$ are combined with the output of the value network to compute the advantage function $\hat{A}_t$ and the target return $\hat{R}_t$ using GAE, and the probability of each action under the old policy, $\pi_{\theta_{\text{old}}}(a_t\mid s_t)$, is recorded. Following the PPO clipping mechanism, the probability ratio $\rho_t(\theta)$ of $a_t$ under the new and old policies is calculated and clipped to obtain the policy loss $L^{\text{CLIP}}$, and the value loss $L^{\text{VF}}$ is computed. The policy entropy $S$ is then combined with $L^{\text{CLIP}}$ and $L^{\text{VF}}$ through a weighted sum to form the total loss. The network parameters are updated by gradient descent, and the process is repeated over multiple rounds until the policy converges.
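The second and third stages can be outlined in code. The sketch below is a schematic, not the paper's implementation: `policy` and `env_step` are hypothetical callables standing in for the Actor network and the simulation environment (including the DWA-based reward), and the rollout stops at the first terminal transition instead of resetting the environment. The old-policy log-probability is stored with each transition so that the probability ratio can be formed during the update epochs.

```python
import random

def collect_rollout(policy, env_step, state, horizon):
    """Stage 2: roll out the current policy and store (s, a, r, s', done, logp_old)."""
    buffer = []
    for _ in range(horizon):
        action, logp = policy(state)                    # Actor output and its log-probability
        next_state, reward, done = env_step(state, action)
        buffer.append((state, action, reward, next_state, done, logp))
        state = next_state
        if done:
            break
    return buffer

def minibatches(n, batch_size, epochs, seed=0):
    """Stage 3: yield shuffled index minibatches for several PPO update epochs."""
    rng = random.Random(seed)
    idx = list(range(n))
    for _ in range(epochs):
        rng.shuffle(idx)
        for start in range(0, n, batch_size):
            yield idx[start:start + batch_size]

# Toy stand-ins: a constant action and an episode that ends once the state reaches 3.
toy_policy = lambda s: (1.0, 0.0)
toy_env = lambda s, a: (s + a, 1.0, s + a >= 3.0)
rollout = collect_rollout(toy_policy, toy_env, 0.0, horizon=10)
batches = list(minibatches(10, 4, epochs=2))
```

For each minibatch, the clipped surrogate, value loss, and entropy bonus would be evaluated and the combined loss minimized by gradient descent, as described in the third stage.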