YOLO-PFA: Advanced Multi-Scale Feature Fusion and Dynamic Alignment for SAR Ship Detection

Abstract

1. Introduction

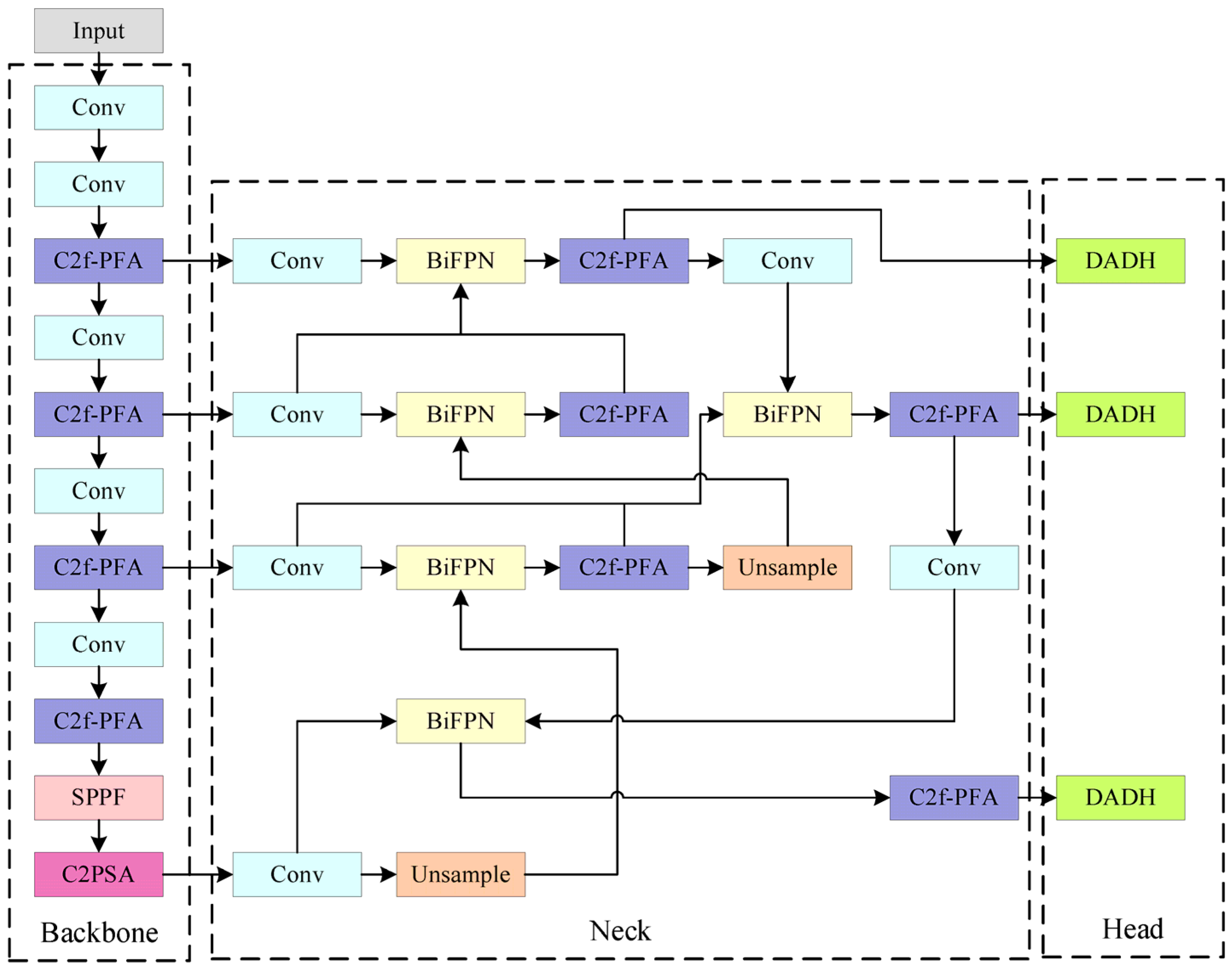

- (1)

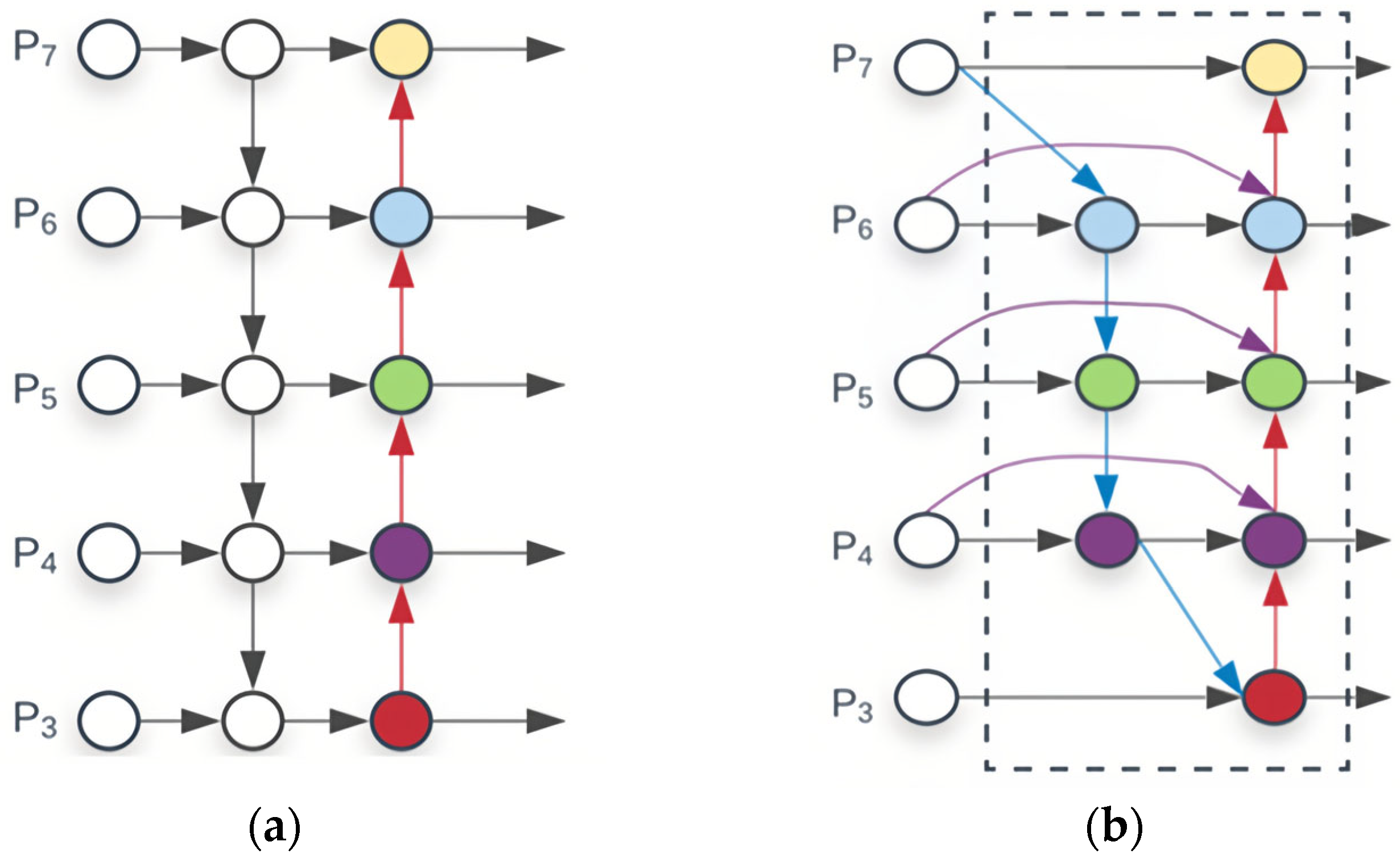

- To address the limitations of YOLOv11 in managing targets of varying scales—such as inefficient multi-scale feature fusion, inadequate adaptive balancing of cross-layer feature contributions, and suboptimal detection of small objects—this study incorporates the Bidirectional Feature Pyramid Network (BiFPN) [22]. BiFPN utilizes bidirectional connections to facilitate efficient information flow across multi-resolution feature maps, along with an adaptive weighting strategy that dynamically prioritizes salient features, thereby enhancing multi-scale fusion. These mechanisms significantly improve the capture of details for small objects, ultimately leading to enhanced detection accuracy and robustness for YOLOv11.

- (2)

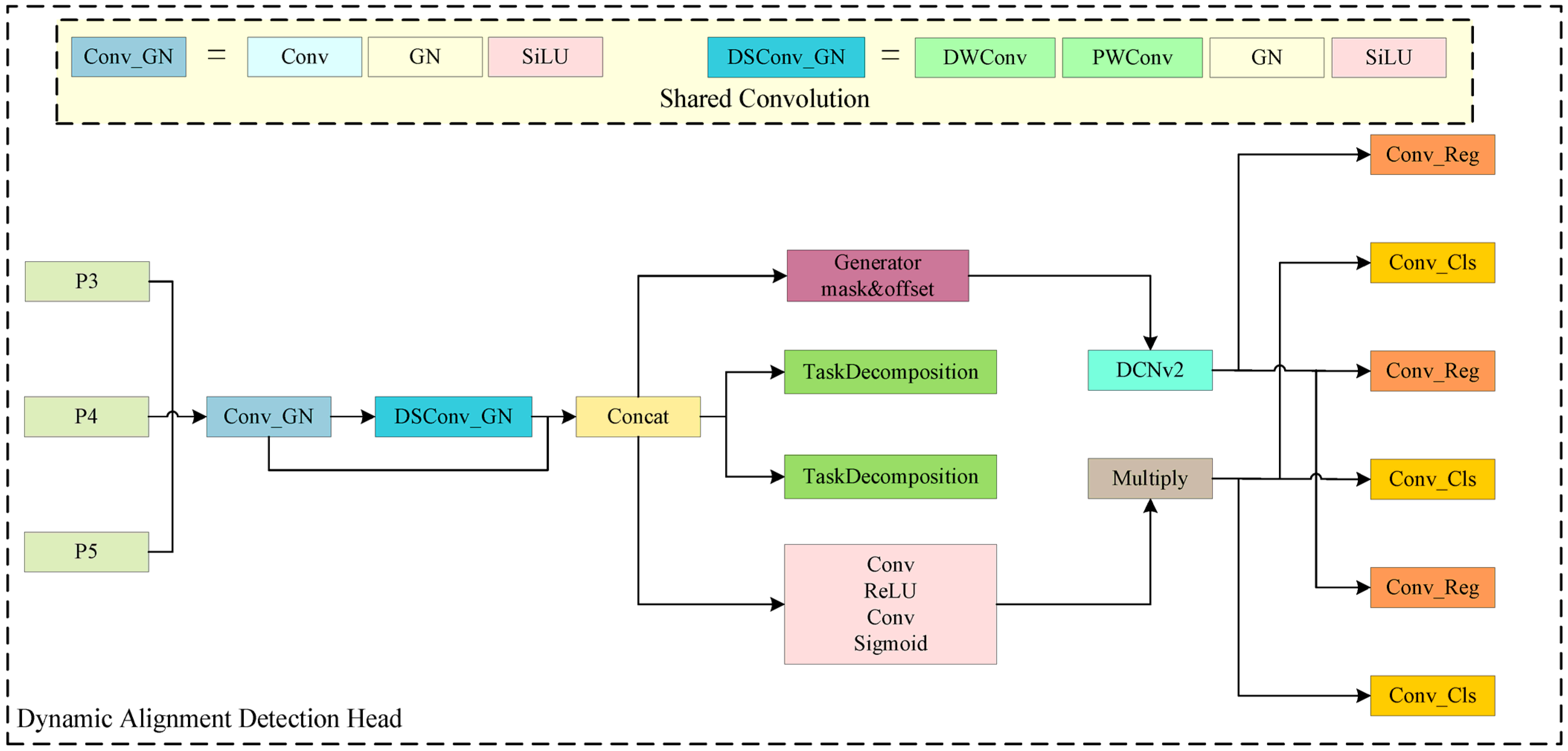

- To resolve the issue in ship detection where misalignment between classification and localization tasks makes their joint optimization difficult, this study integrates Depthwise Separable Convolution with Group Normalization (DSConv_GN) into the Task-aligned Dynamic Detection Head (TADDH) [23], proposing an enhanced Dynamic Alignment Detection Head (DADH). This module dynamically selects discriminative features critical for both tasks in complex marine scenarios, thereby improving detection accuracy across ship types and scales. Additionally, DSConv_GN mitigates overfitting and reduces computational overhead.

- (3) To address the low detection accuracy caused by large scale variations and heavy background clutter in ship detection, the C2f-Partial Feature Aggregation (C2f-PFA) module is proposed. It extracts multi-scale features with convolution kernels of different sizes and fuses the raw and processed multi-scale features to strengthen the overall representation of ships. In addition, 1 × 1 convolutions and residual connections refine key features, reduce information loss, and mitigate vanishing gradients.

- (4)

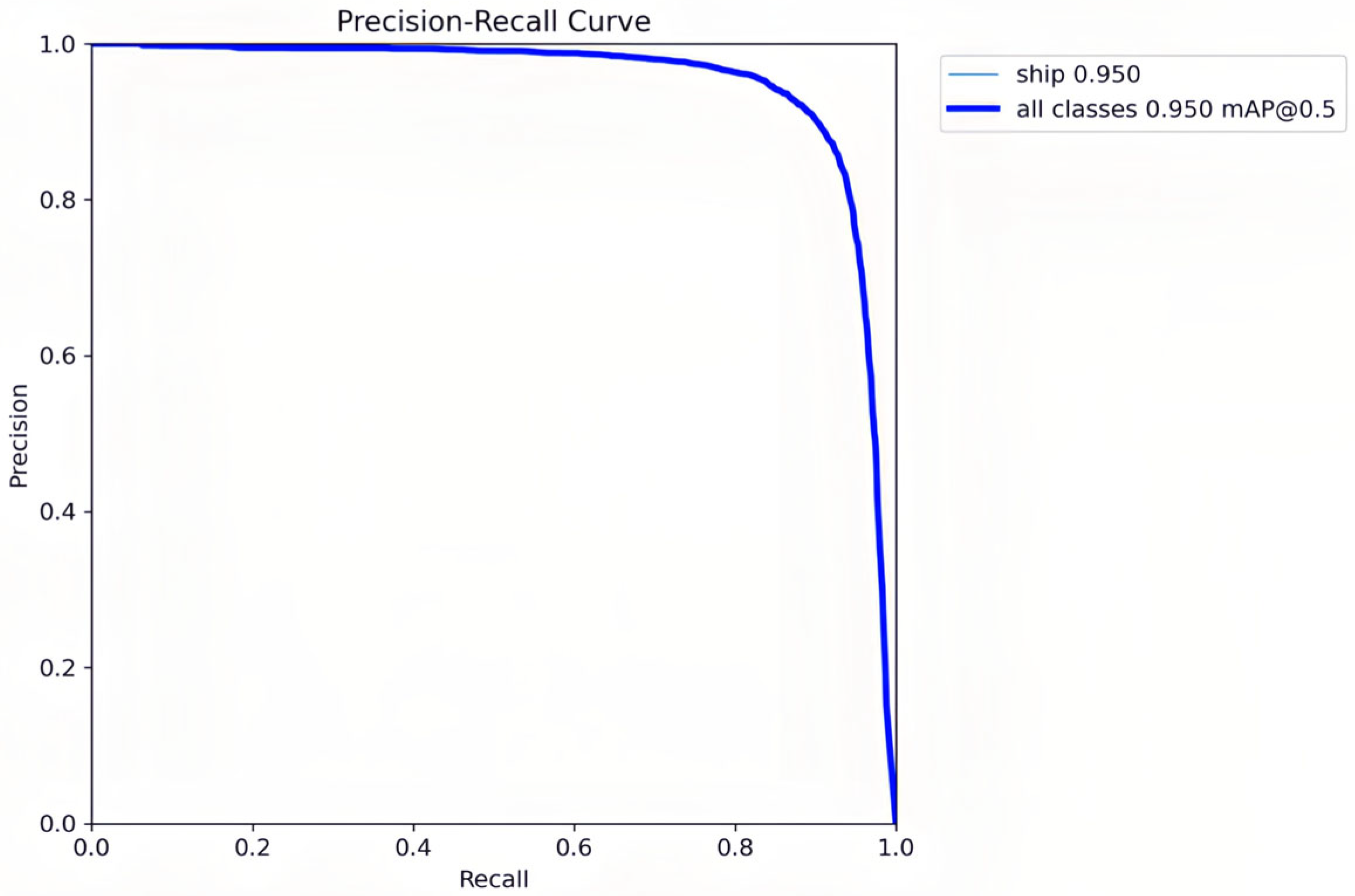

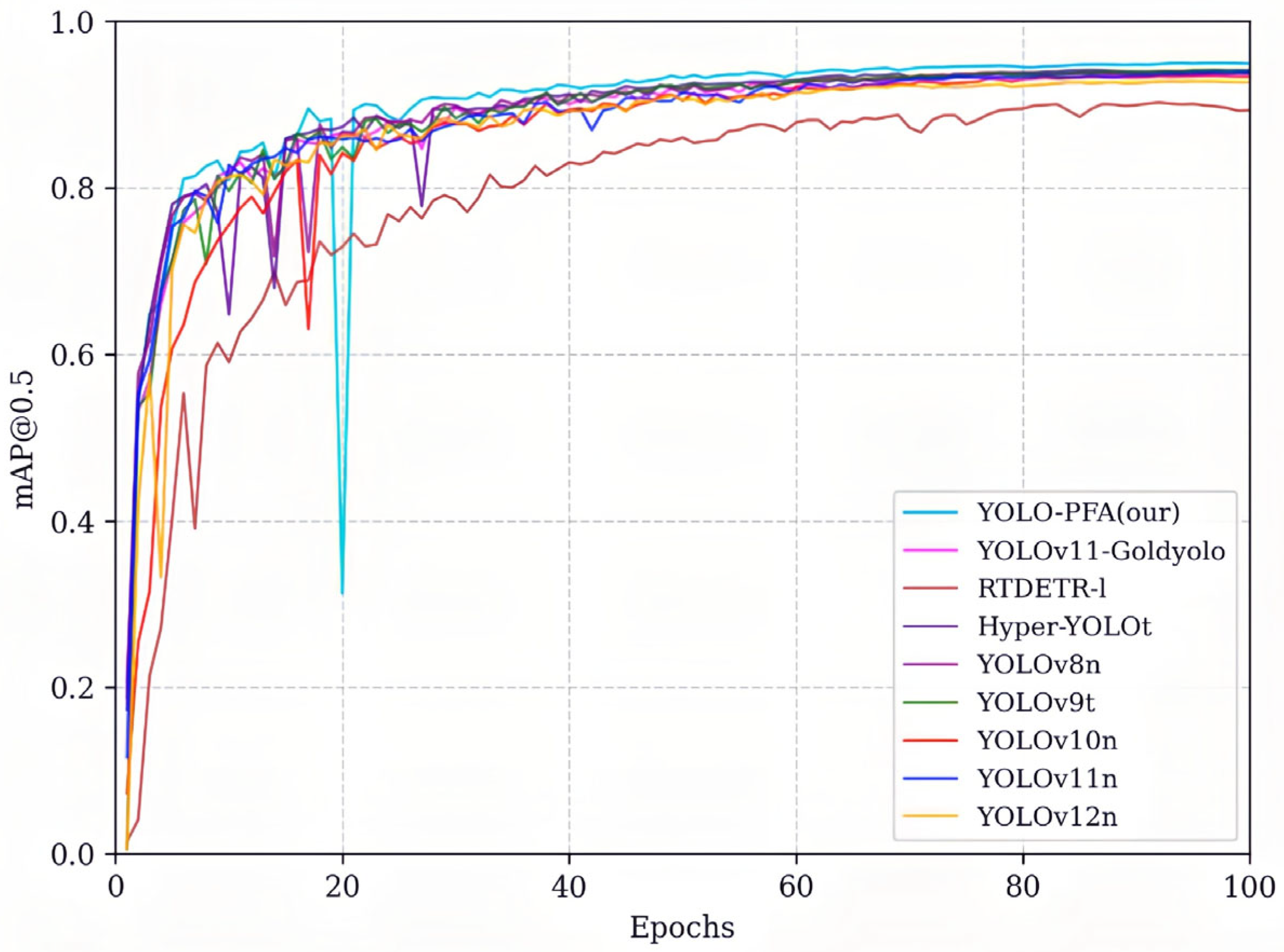

- Experiments on the public iVision-MRSSD ship dataset evaluate the impact of the proposed modules. Results show the improved YOLO-PFA achieves a mAP@0.5 of 95%, outperforming YOLOv11 by 1.2% and YOLOv12 by 2.8%.
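The gains stated in contribution (4) are absolute differences in mAP@0.5, i.e., percentage points, taken from the model comparison table. The short Python sketch below uses only those reported values to contrast absolute and relative improvement; the dictionary and helper names are illustrative and do not come from the paper.

```python
# mAP@0.5 values (%) as reported in the model comparison table.
REPORTED_MAP50 = {"YOLOv11n": 93.8, "YOLOv12n": 92.2, "YOLO-PFA": 95.0}


def absolute_gain(new: float, old: float) -> float:
    """Difference in percentage points, rounded to the table's precision."""
    return round(new - old, 1)


def relative_gain(new: float, old: float) -> float:
    """Improvement relative to the baseline score, in percent."""
    return round(100.0 * (new - old) / old, 2)


for baseline in ("YOLOv11n", "YOLOv12n"):
    new, old = REPORTED_MAP50["YOLO-PFA"], REPORTED_MAP50[baseline]
    print(f"vs {baseline}: +{absolute_gain(new, old)} pp "
          f"({relative_gain(new, old)}% relative)")
```

Running it prints `vs YOLOv11n: +1.2 pp (1.28% relative)` and `vs YOLOv12n: +2.8 pp (3.04% relative)`, so the "1.2%" and "2.8%" figures should be read as percentage points.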

2. Related Works

2.1. Ship Target Detection

2.2. Multi-Scale Feature Fusion

2.3. Target Detection Head

3. Methodology

3.1. YOLO-PFA Structure

3.2. Bidirectional Feature Pyramid Network
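BiFPN [22] implements the adaptive weighting described in contribution (1) with *fast normalized fusion*: every input $I_i$ of a fusion node receives a learnable scalar weight $w_i$, kept non-negative by a ReLU, and the node outputs $O = \sum_i \frac{w_i}{\epsilon + \sum_j w_j} I_i$. The Python sketch below shows only this fusion step on flattened feature maps, assuming the inputs have already been resized to a common shape. The convolution that follows each fusion node is omitted, and $\epsilon = 10^{-4}$ is the EfficientDet default rather than a value confirmed for YOLO-PFA.

```python
from typing import List, Sequence


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def fast_normalized_fusion(
    features: Sequence[Sequence[float]],
    weights: Sequence[float],
    eps: float = 1e-4,
) -> List[float]:
    """Fuse same-shaped (flattened) feature maps with learnable weights.

    In a real network the inputs would first be resized to a common
    resolution and channel count; that step is assumed done here.
    """
    if len(features) != len(weights):
        raise ValueError("need one weight per input feature map")
    size = len(features[0])
    if any(len(f) != size for f in features):
        raise ValueError("feature maps must share the same shape")
    w = [relu(v) for v in weights]   # non-negative fusion weights
    norm = eps + sum(w)              # normalizer: eps + sum_j w_j
    return [
        sum(wi * f[k] for wi, f in zip(w, features)) / norm
        for k in range(size)
    ]
```

For instance, fusing `[1.0, 2.0]` and `[3.0, 4.0]` with weights `[1.0, 3.0]` gives roughly `[2.5, 3.5]`, and a negative raw weight simply switches its input off. Compared with a softmax over the weights, this normalization avoids exponentials, which is why EfficientDet calls it "fast".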

3.3. DADH Detection Head Structure

- (1) Depthwise convolution operation:

- (2) Pointwise convolution operation:

- (3) Group normalization operation:

- (4) Application of the activation function:
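The four steps listed above (depthwise convolution, pointwise convolution, group normalization, activation) can be sketched in plain Python as below. This is a minimal, unoptimized illustration, not the authors' DSConv_GN: it assumes stride 1, zero "same" padding with odd kernels, no bias, GroupNorm affine parameters fixed to γ = 1 and β = 0, and SiLU as the activation. The kernel size, group count, and activation actually used in DADH are not stated in this section. The helper `param_counts` shows where the parameter savings of depthwise separable convolution come from.

```python
import math
from typing import List

# Hypothetical layout: a feature map is [channels][height][width] of floats.
Tensor3 = List[List[List[float]]]


def depthwise_conv(x: Tensor3, kernels: List[List[List[float]]]) -> Tensor3:
    """Step (1): each channel is filtered by its own k x k kernel."""
    out = []
    for ch, ker in zip(x, kernels):
        k, p = len(ker), len(ker) // 2
        h, w = len(ch), len(ch[0])
        out.append([
            [
                sum(
                    ker[i][j] * ch[r + i - p][c + j - p]
                    for i in range(k)
                    for j in range(k)
                    if 0 <= r + i - p < h and 0 <= c + j - p < w
                )
                for c in range(w)
            ]
            for r in range(h)
        ])
    return out


def pointwise_conv(x: Tensor3, weight: List[List[float]]) -> Tensor3:
    """Step (2): 1 x 1 conv; weight[o][i] mixes input channel i into output o."""
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(wo[i] * x[i][r][c] for i in range(len(x))) for c in range(w)]
         for r in range(h)]
        for wo in weight
    ]


def group_norm(x: Tensor3, groups: int, eps: float = 1e-5) -> Tensor3:
    """Step (3): normalize each group of channels over (channels, H, W).

    Uses the biased variance; gamma = 1 and beta = 0 in this sketch.
    """
    if len(x) % groups:
        raise ValueError("number of channels must be divisible by groups")
    size = len(x) // groups
    out: Tensor3 = []
    for g in range(groups):
        block = x[g * size:(g + 1) * size]
        vals = [v for ch in block for row in ch for v in row]
        mean = sum(vals) / len(vals)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        scale = 1.0 / math.sqrt(var + eps)
        for ch in block:
            out.append([[(v - mean) * scale for v in row] for row in ch])
    return out


def silu(v: float) -> float:
    """Step (4): SiLU activation (assumed; it is YOLOv11's default)."""
    return v / (1.0 + math.exp(-v))


def dsconv_gn(x: Tensor3, dw_kernels, pw_weight, groups: int) -> Tensor3:
    """Steps (1) to (4) chained: depthwise, pointwise, GroupNorm, SiLU."""
    y = group_norm(pointwise_conv(depthwise_conv(x, dw_kernels), pw_weight), groups)
    return [[[silu(v) for v in row] for row in ch] for ch in y]


def param_counts(c_in: int, c_out: int, k: int):
    """Weights (no bias) of a standard k x k conv vs. depthwise + pointwise."""
    return c_in * c_out * k * k, c_in * k * k + c_in * c_out
```

For example, `param_counts(64, 128, 3)` returns `(73728, 8768)`: for this layer the separable form needs roughly 8.4 times fewer weights than a standard 3 × 3 convolution. Group normalization is computed per sample, so unlike batch normalization it does not depend on the batch size.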

3.4. C2f-PFA Module

- (1) Initial convolution processing:

- (2) Feature splitting and first branch:

- (3) Secondary splitting and second branch:

- (4) Feature fusion:
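The Python sketch below traces channel counts through one hypothetical reading of these four steps, combined with the description in contribution (3) (multi-scale kernels, fusion of raw and processed features, 1 × 1 convolution, residual connection). Everything concrete in it is an assumption, not the paper's configuration: the half-splits, the 3 × 3 and 5 × 5 branch kernels, and channel-preserving 1 × 1 convolutions. It shows why the concatenated raw and processed parts must add back up to the input width for a residual connection to apply.

```python
from typing import Dict


def conv_weights(c_in: int, c_out: int, k: int) -> int:
    """Number of weights in a k x k convolution without bias."""
    return c_in * c_out * k * k


def pfa_channel_flow(c: int, k1: int = 3, k2: int = 5) -> Dict[str, int]:
    """Hypothetical channel bookkeeping for the four C2f-PFA steps.

    Assumed (not from the paper): 1x1 convs keep c channels, each split
    halves its input, branch 1 uses a k1 x k1 conv and branch 2 a k2 x k2 conv.
    """
    if c % 4:
        raise ValueError("c must be divisible by 4 for two successive half-splits")
    half, quarter = c // 2, c // 4
    flow = {
        "input": c,
        "step1_conv1x1": c,        # (1) initial 1x1 convolution
        "step2_raw": half,         # (2) half kept as raw features
        "step2_branch1": half,     # (2) other half -> k1 x k1 conv
        "step3_raw": quarter,      # (3) branch-1 output split again: kept part
        "step3_branch2": quarter,  # (3) remaining part -> k2 x k2 conv
    }
    # (4) concatenate raw and processed features, then fuse with a 1x1 conv
    flow["step4_concat"] = half + quarter + quarter
    flow["step4_fused"] = c   # matches "input", so a residual add is possible
    flow["weights"] = (conv_weights(c, c, 1)
                       + conv_weights(half, half, k1)
                       + conv_weights(quarter, quarter, k2)
                       + conv_weights(flow["step4_concat"], c, 1))
    return flow
```

With `c = 64` this hypothetical block has 23,808 weights, against 36,864 for a single 3 × 3 convolution over all 64 channels, which illustrates how convolving only part of the channels keeps such a module light.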

4. Experiments and Results Analysis

4.1. Experimental Settings and Evaluation Indicators

4.1.1. Experimental Environment

4.1.2. Datasets

4.1.3. Evaluation Indicators

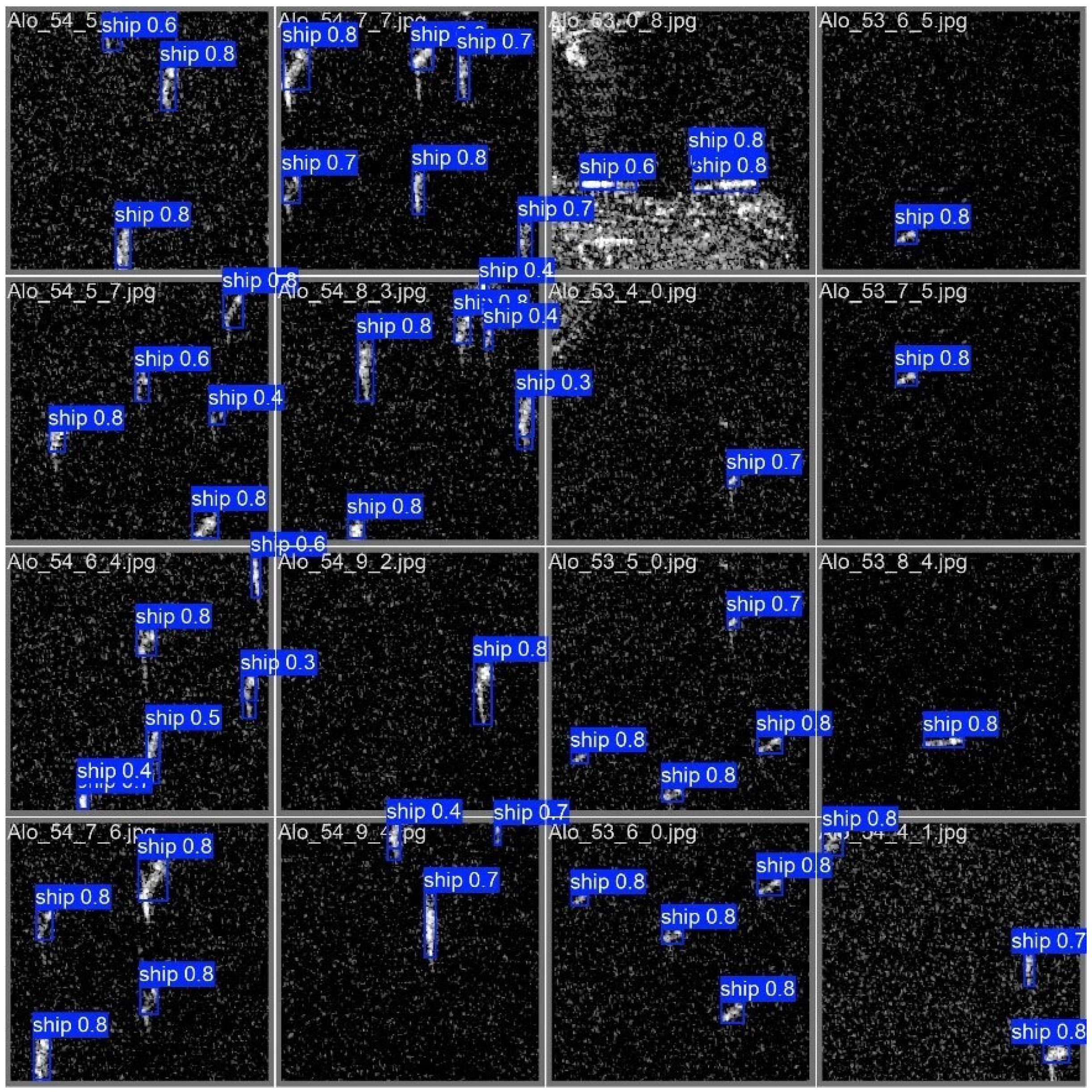

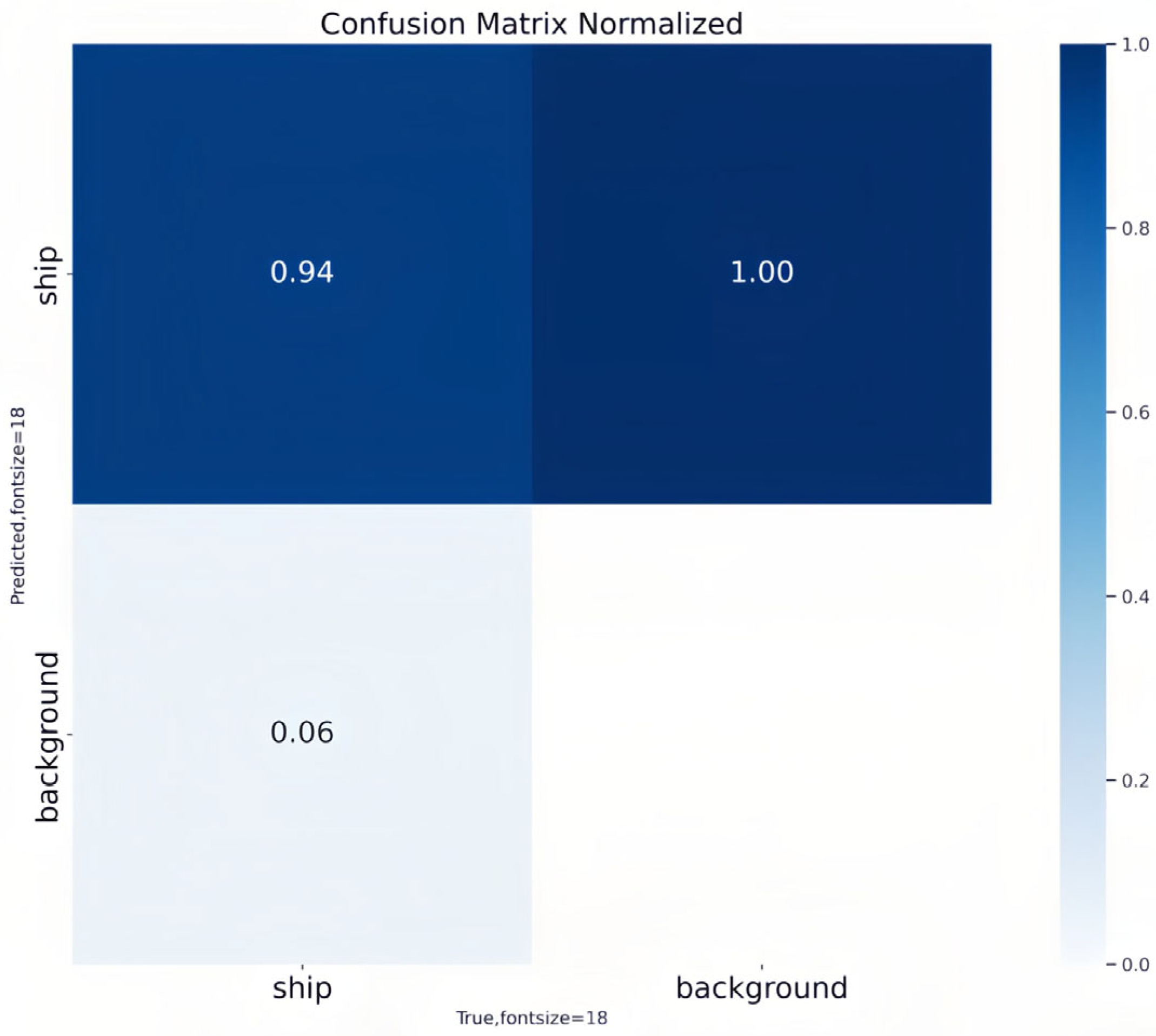

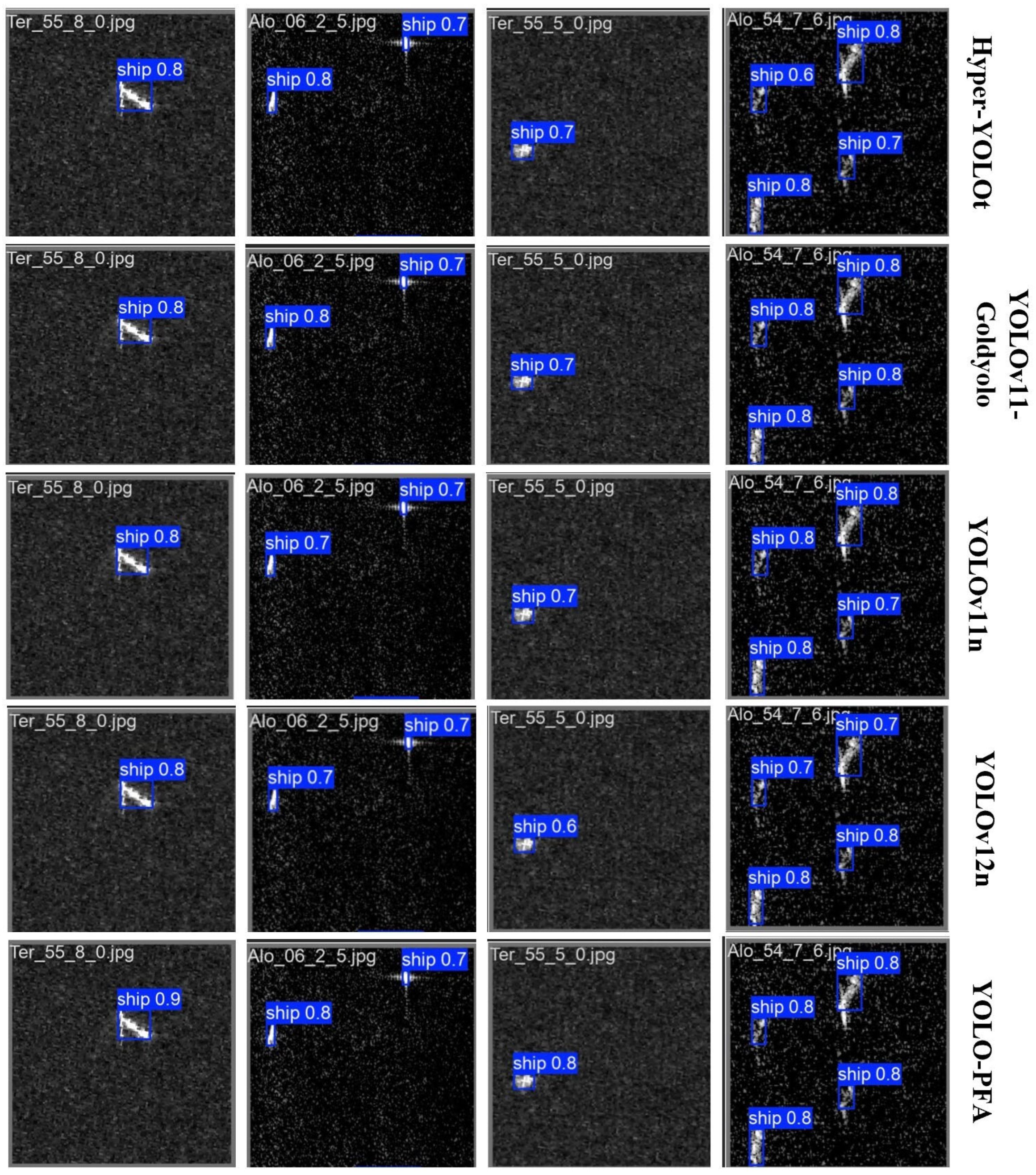
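The precision (P), recall (R), mAP@0.5, and mAP@0.5:0.95 values in the result tables follow the standard object-detection definitions: P = TP / (TP + FP), R = TP / (TP + FN), AP is the area under the precision-recall curve, and mAP averages AP over classes (and, for mAP@0.5:0.95, over IoU thresholds from 0.50 to 0.95 in steps of 0.05). The Python sketch below implements these definitions with all-point interpolation; the interpolation scheme of the authors' evaluation code is an assumption here, since other protocols such as COCO use 101-point interpolation.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def pr_curve(dets: Sequence[Tuple[float, bool]], n_gt: int) -> Tuple[List[float], List[float]]:
    """Cumulative precision and recall over detections ranked by confidence.

    Each detection is (confidence, is_tp); is_tp means it matched a not yet
    matched ground-truth box with IoU >= the chosen threshold (e.g. 0.5).
    """
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for _, is_tp in sorted(dets, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))  # P = TP / (TP + FP)
        recalls.append(tp / n_gt)          # R = TP / (TP + FN), TP + FN = n_gt
    return precisions, recalls


def average_precision(precisions: List[float], recalls: List[float]) -> float:
    """Area under the P-R curve using all-point interpolation."""
    mrec = [0.0] + recalls + [1.0]
    mpre = [1.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # make precision non-increasing
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


# mAP@0.5:0.95 averages AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def mean_ap(aps: Sequence[float]) -> float:
    """Mean of AP values (over classes, or over IoU thresholds)."""
    return sum(aps) / len(aps)
```

For three ranked detections (TP, FP, TP) against two ground-truth ships, `pr_curve` gives precisions `[1.0, 0.5, 0.667]` and recalls `[0.5, 0.5, 1.0]`, and `average_precision` returns 5/6 ≈ 0.833.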

4.2. Experimental Results

4.2.1. Ablation Experimental Results and Analysis

4.2.2. Comparative Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Fu, H.; Song, G.; Wang, Y. Improved YOLOv4 Marine Target Detection Combined with CBAM. Symmetry 2021, 13, 623.

- Yang, J.; Ran, L.; Dang, J.; Wang, Y.; Qu, Z. Deeper Multiscale Encoding–Decoding Feature Fusion Network for Change Detection of VHR Images. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6012105.

- Li, Q.; Zhang, W.; Li, M.; Niu, J.; Wu, Q.J. Automatic detection of ship targets based on wavelet transform for HF surface wavelet radar. IEEE Geosci. Remote Sens. Lett. 2017, 14, 714–718.

- Golubović, D.; Erić, M.; Vukmirović, N.; Orlić, V. High-Resolution Sea Surface Target Detection Using Bi-Frequency High-Frequency Surface Wave Radar. Remote Sens. 2024, 16, 3476.

- Zhang, W.; Li, Q.; Wu, Q.J.; Li, M. Sea surface target detection for RD images of HFSWR based on optimized error self-adjustment extreme learning machine. Acta Autom. Sin. 2019, 47, 108–120.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Yang, G.; Feng, W.; Jin, J.; Lei, Q.; Li, X.; Gui, G.; Wang, W. Face mask recognition system with YOLOv5 based on image recognition. In Proceedings of the 2020 IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020; pp. 1398–1404.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.

- Huang, Q.; Sun, H.; Wang, Y.; Yuan, Y.; Guo, X.; Gao, Q. Ship Detection Based on YOLO Algorithm for Visible Images. IET Image Process. 2024, 18, 481–492.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2023, 111, 257–276.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578.

- Cheng, S.; Zhu, Y.; Wu, S. Deep Learning Based Efficient Ship Detection from Drone-Captured Images for Maritime Surveillance. Ocean Eng. 2023, 285, 115440–115446.

- Chen, Z.; Liu, C.; Filaretov, V.F.; Yukhimets, D.A. Multi-Scale Ship Detection Algorithm Based on YOLOv7 for Complex Scene SAR Images. Remote Sens. 2023, 15, 2071.

- Liu, Y.; Yang, D.; Song, T.; Ye, Y.; Zhang, X. YOLO-SSP: An Object Detection Model Based on Pyramid Spatial Attention and Improved Downsampling Strategy for Remote Sensing Images. Vis. Comput. 2025, 41, 1467–1484.

- Luo, Y.; Li, M.; Wen, G.; Tan, Y.; Shi, C. SHIP-YOLO: A Lightweight Synthetic Aperture Radar Ship Detection Model Based on YOLOv8n Algorithm. IEEE Access 2024, 12, 37030–37041.

- Zhou, F.; Yang, T.; Tan, L.; Xu, X.; Xing, M. DAP-Net: Enhancing SAR Target Recognition with Dual-Channel Attention and Polarimetric Features. Vis. Comput. 2025, 41, 7641–7656.

- Shen, Y.; Gao, Q. DS-YOLO: A SAR Ship Detection Model for Dense Small Targets. Radioengineering 2025, 34, 407–421.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Gu, J.; Pan, Y.; Zhang, J. Deep Learning-Based Intelligent Detection Algorithm for Surface Disease in Concrete Buildings. Buildings 2024, 14, 3058.

- Jiang, J.; Fu, X.; Qin, R.; Wang, X.; Ma, Z. High-Speed Lightweight Ship Detection Algorithm Based on YOLO-V4 for Three-Channel RGB SAR Image. Remote Sens. 2021, 13, 1909.

- Leng, X.; Ji, K.; Yang, K.; Zou, H. A Bilateral CFAR Algorithm for Ship Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1536–1540.

- Liao, M.; Wang, C.; Wang, Y.; Jiang, L. Using SAR Images to Detect Ships from Sea Clutter. IEEE Geosci. Remote Sens. Lett. 2008, 5, 194–198.

- Zhou, K.; Zhang, M.; Wang, H.; Tan, J. Ship Detection in SAR Images Based on Multi-Scale Feature Extraction and Adaptive Feature Fusion. Remote Sens. 2022, 14, 755.

- Zhang, T.; Zhang, X. High-Speed Ship Detection in SAR Images Based on a Grid Convolutional Neural Network. Remote Sens. 2019, 11, 1206.

- Zwemer, M.H.; Wijnhoven, R.G.J.; de With, P.H.N. Ship Detection in Harbour Surveillance based on Large-Scale Data and CNNs. In Proceedings of the VISIGRAPP (5: VISAPP), Funchal, Portugal, 27–29 January 2018; pp. 153–160.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Xu, X.; Jiang, Y.; Chen, W.; Huang, Y.; Zhang, Y.; Sun, X. DAMO-YOLO: A report on real-time object detection design. arXiv 2022, arXiv:2211.15444.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Wang, Y.; Han, K. Gold-YOLO: Efficient Object Detector via Gather-and-Distribute Mechanism. Adv. Neural Inf. Process. Syst. 2023, 36, 51094–51112.

- Wang, Y.; Jiang, Y.; Xu, H.; Xiao, C.; Zhao, K. Detection Method of Key Ship Parts Based on YOLOv11. Processes 2025, 13, 201.

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets v2: More deformable, better results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9308–9316.

- Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic head: Unifying object detection heads with attentions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7373–7382.

- Humayun, M.F.; Bhatti, F.A.; Khurshid, K. iVision MRSSD: A Comprehensive Multi-Resolution SAR Ship Detection Dataset for State of the Art Satellite Based Maritime Surveillance Applications. Data Brief 2023, 50, 109505–109508.

| Component | Specification |
|---|---|
| CPU | Intel(R) Xeon(R) Platinum 8168 CPU @ 2.70 GHz |
| GPU | NVIDIA GeForce RTX 4090, 24,210 MiB |
| Operating system | Ubuntu 22.04 |
| Deep learning framework | PyTorch 2.5.1 |

| Parameter | Setting |
|---|---|
| Epochs | 100 |
| Batch size | 32 |
| Workers | 4 |
| Input image size | 640 × 640 |
| Optimizer | SGD |
| Data augmentation strategy | Mosaic |
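The training settings above can be expressed as command-line training arguments. The sketch below assumes the Ultralytics YOLO framework, which distributes YOLOv11; the paper names only PyTorch 2.5.1, so the framework, the file names `yolo-pfa.yaml` and `mrssd.yaml`, and the Mosaic probability of 1.0 are all hypothetical. The code only assembles a `yolo detect train` command line and does not run training.

```python
# Settings from the training-parameter table, keyed by Ultralytics-style
# training argument names (assumed mapping, not confirmed by the paper).
TRAIN_ARGS = {
    "epochs": 100,
    "batch": 32,
    "workers": 4,
    "imgsz": 640,        # 640 x 640 input images
    "optimizer": "SGD",
    "mosaic": 1.0,       # Mosaic augmentation probability (assumed)
}


def to_cli(model_cfg: str, data_cfg: str, args: dict) -> str:
    """Build a `yolo detect train` command; file names are placeholders."""
    parts = ["yolo", "detect", "train", f"model={model_cfg}", f"data={data_cfg}"]
    parts += [f"{key}={value}" for key, value in args.items()]
    return " ".join(parts)


print(to_cli("yolo-pfa.yaml", "mrssd.yaml", TRAIN_ARGS))
```

Keeping the settings in one dictionary makes it easy to pass the same values to the Python API instead of the CLI, should that framework be used.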

| DADH | BiFPN | C2f-PFA | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Parameters |
|---|---|---|---|---|---|---|---|
| - | - | - | 90.5 | 86.1 | 93.8 | 57.5 | 2,582,347 |
| √ | - | - | 90.4 | 88.4 | 94.5 | 58.6 | 2,168,012 |
| - | √ | - | 90.6 | 84.2 | 92.8 | 57.9 | 2,864,679 |
| - | - | √ | 90.3 | 86.8 | 93.7 | 57.3 | 2,629,203 |
| √ | √ | - | 90.0 | 88.7 | 94.3 | 57.6 | 2,587,064 |
| √ | - | √ | 91.2 | 88.0 | 94.6 | 57.9 | 2,229,556 |
| - | √ | √ | 89.8 | 87.7 | 93.9 | 58.4 | 2,969,135 |
| √ | √ | √ | 91.1 | 89.5 | 95.0 | 59.2 | 2,691,520 |
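Each ablation row can be read as a difference from the baseline in the first row (YOLOv11n, whose values match the comparison table). The Python sketch below recomputes those differences from the reported numbers only; the table encoding and helper name are illustrative.

```python
# Ablation rows: (DADH, BiFPN, C2f-PFA) -> (mAP@0.5, mAP@0.5:0.95, parameters).
ABLATION = {
    (False, False, False): (93.8, 57.5, 2_582_347),
    (True, False, False): (94.5, 58.6, 2_168_012),
    (False, True, False): (92.8, 57.9, 2_864_679),
    (False, False, True): (93.7, 57.3, 2_629_203),
    (True, True, False): (94.3, 57.6, 2_587_064),
    (True, False, True): (94.6, 57.9, 2_229_556),
    (False, True, True): (93.9, 58.4, 2_969_135),
    (True, True, True): (95.0, 59.2, 2_691_520),
}


def delta_vs_baseline(config):
    """Change in mAP@0.5 (pp), mAP@0.5:0.95 (pp) and parameter count."""
    base, row = ABLATION[(False, False, False)], ABLATION[config]
    return (round(row[0] - base[0], 1), round(row[1] - base[1], 1),
            row[2] - base[2])


for cfg in ABLATION:
    name = "+".join(n for n, on in zip(("DADH", "BiFPN", "C2f-PFA"), cfg) if on)
    print(f"{name or 'baseline':20s} {delta_vs_baseline(cfg)}")
```

The full model gains 1.2 points of mAP@0.5 and 1.7 points of mAP@0.5:0.95 for 109,173 extra parameters (about 4.2%), DADH alone removes 414,335 parameters, and BiFPN alone lowers mAP@0.5 by 1.0 point while adding 282,332 parameters.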

| Method | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Parameters |
|---|---|---|---|---|---|
| YOLOv8n | 90.1 | 87.6 | 93.8 | 57.7 | 3,005,843 |
| YOLOv9t | 90.6 | 87.1 | 94.1 | 58.5 | 1,970,979 |
| YOLOv10n [10] | 89.4 | 86.2 | 93.4 | 57.6 | 2,265,363 |
| YOLOv11-Goldyolo [33] | 88.9 | 87.6 | 93.7 | 56.8 | 5,896,539 |
| YOLOv12n [11] | 89.5 | 83.8 | 92.2 | 55.4 | 2,556,923 |
| RTDETR-l | 85.2 | 82.4 | 90.0 | 56.6 | 31,985,795 |
| Hyper-YOLOt | 90.1 | 87.7 | 94.1 | 58.4 | 2,682,899 |
| YOLOv11n | 90.5 | 86.1 | 93.8 | 57.5 | 2,582,347 |
| YOLO-PFA | 91.1 | 89.5 | 95.0 | 59.2 | 2,691,520 |
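Accuracy and model size can be weighed together by checking which models are Pareto-optimal, i.e., not beaten by another model on both mAP@0.5:0.95 and parameter count. The Python sketch below performs this check on the reported values only; the helper names are illustrative.

```python
# (name, mAP@0.5:0.95 %, parameters) for each model in the comparison table.
MODELS = [
    ("YOLOv8n", 57.7, 3_005_843),
    ("YOLOv9t", 58.5, 1_970_979),
    ("YOLOv10n", 57.6, 2_265_363),
    ("YOLOv11-Goldyolo", 56.8, 5_896_539),
    ("YOLOv12n", 55.4, 2_556_923),
    ("RTDETR-l", 56.6, 31_985_795),
    ("Hyper-YOLOt", 58.4, 2_682_899),
    ("YOLOv11n", 57.5, 2_582_347),
    ("YOLO-PFA", 59.2, 2_691_520),
]


def dominates(a, b) -> bool:
    """True if a is at least as accurate and as small as b, and better in one."""
    return a[1] >= b[1] and a[2] <= b[2] and (a[1] > b[1] or a[2] < b[2])


def pareto_front(models):
    """Names of models not dominated by any other, in table order."""
    return [m[0] for m in models
            if not any(dominates(o, m) for o in models if o is not m)]


print(pareto_front(MODELS))
```

On these numbers the front is `['YOLOv9t', 'YOLO-PFA']`: YOLOv9t is the smallest model and YOLO-PFA the most accurate. The same two models form the front when mAP@0.5 is used instead.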

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, S.; Liu, P.; Wang, Z.; Sun, M.; He, P. YOLO-PFA: Advanced Multi-Scale Feature Fusion and Dynamic Alignment for SAR Ship Detection. J. Mar. Sci. Eng. 2025, 13, 1936. https://doi.org/10.3390/jmse13101936

Liu S, Liu P, Wang Z, Sun M, He P. YOLO-PFA: Advanced Multi-Scale Feature Fusion and Dynamic Alignment for SAR Ship Detection. Journal of Marine Science and Engineering. 2025; 13(10):1936. https://doi.org/10.3390/jmse13101936

Chicago/Turabian Style: Liu, Shu, Peixue Liu, Zhongxun Wang, Mingze Sun, and Pengfei He. 2025. "YOLO-PFA: Advanced Multi-Scale Feature Fusion and Dynamic Alignment for SAR Ship Detection" Journal of Marine Science and Engineering 13, no. 10: 1936. https://doi.org/10.3390/jmse13101936

APA Style: Liu, S., Liu, P., Wang, Z., Sun, M., & He, P. (2025). YOLO-PFA: Advanced Multi-Scale Feature Fusion and Dynamic Alignment for SAR Ship Detection. Journal of Marine Science and Engineering, 13(10), 1936. https://doi.org/10.3390/jmse13101936