YOLOv11-MSE: A Multi-Scale Dilated Attention-Enhanced Lightweight Network for Efficient Real-Time Underwater Target Detection

Abstract

1. Introduction

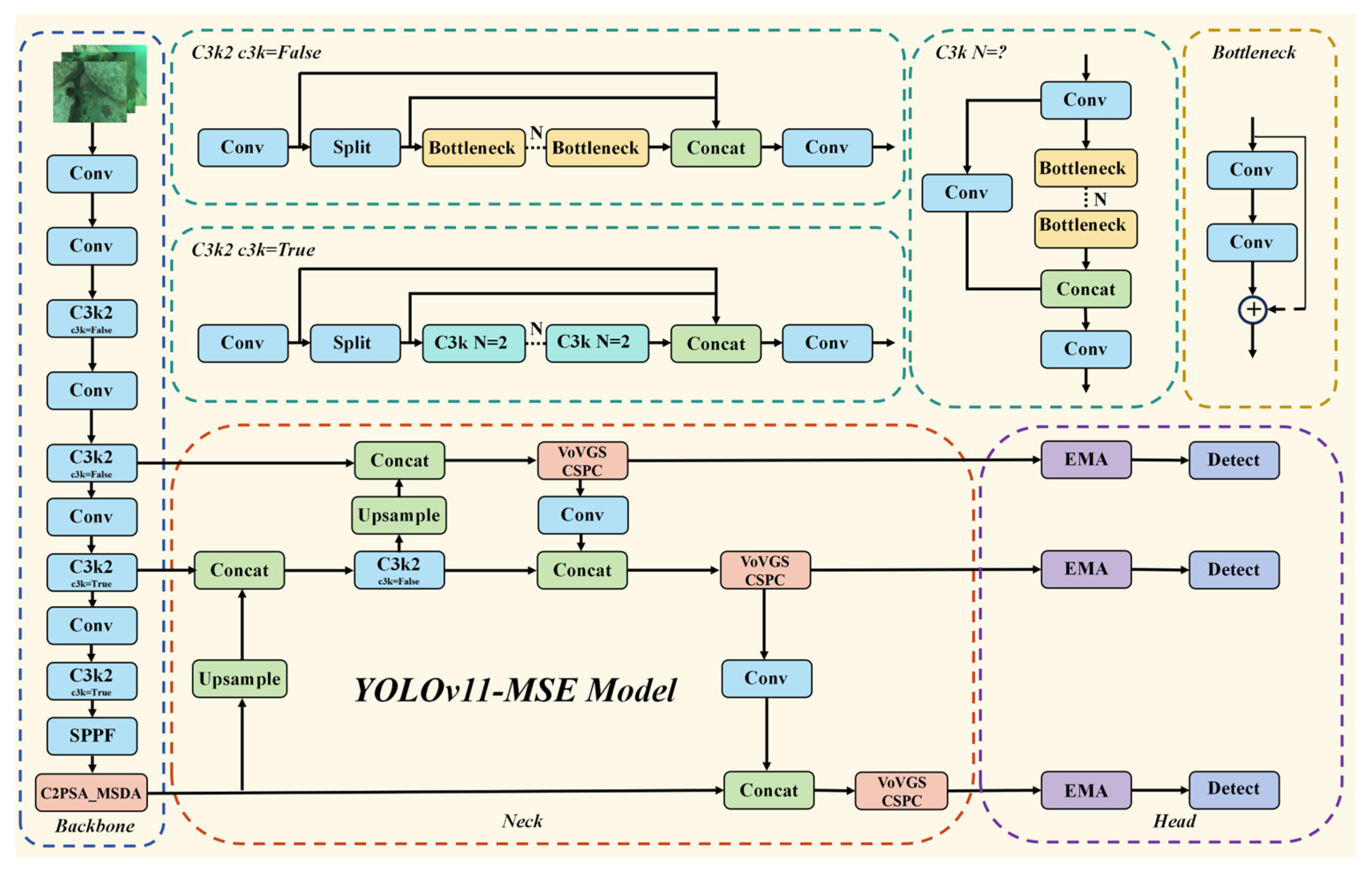

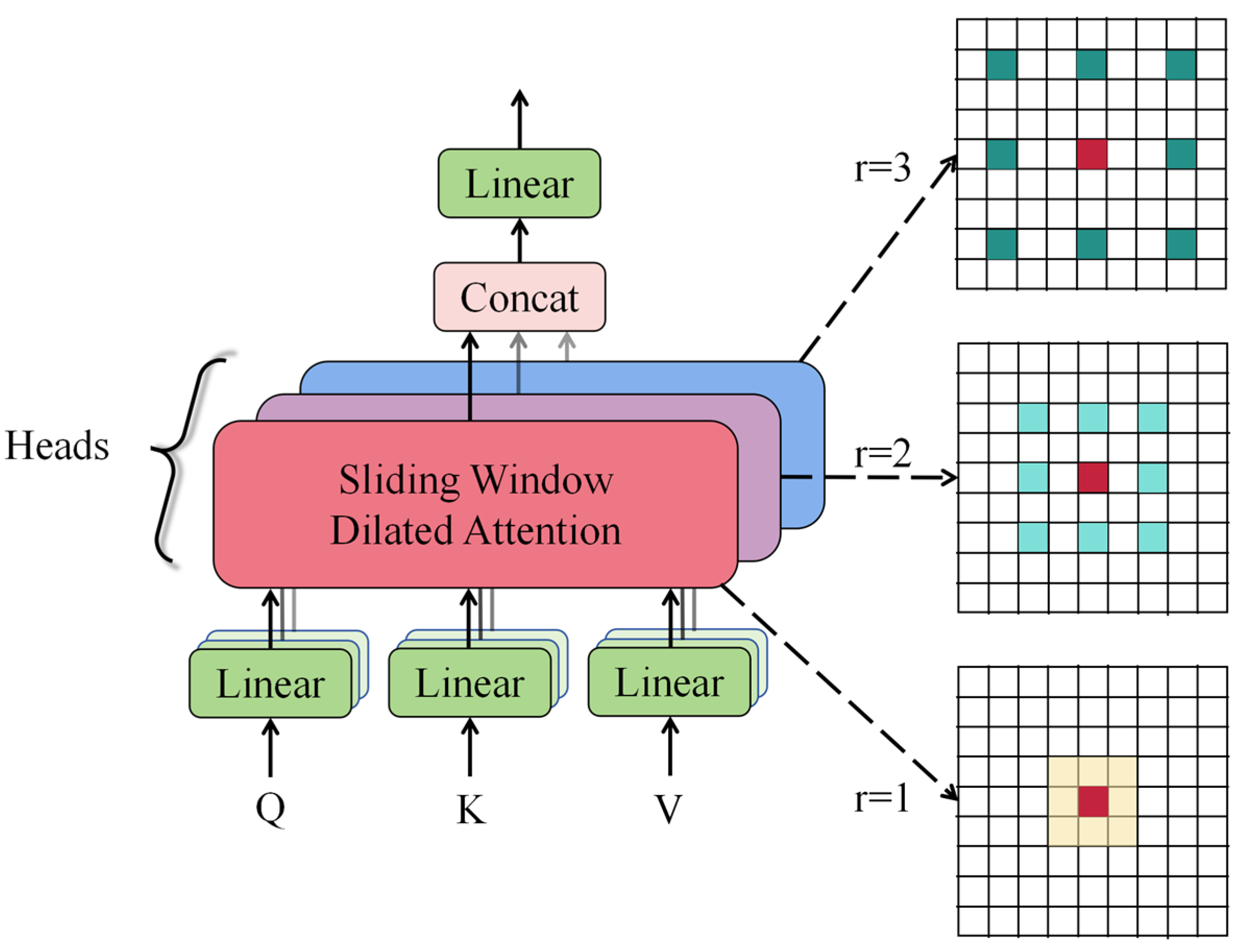

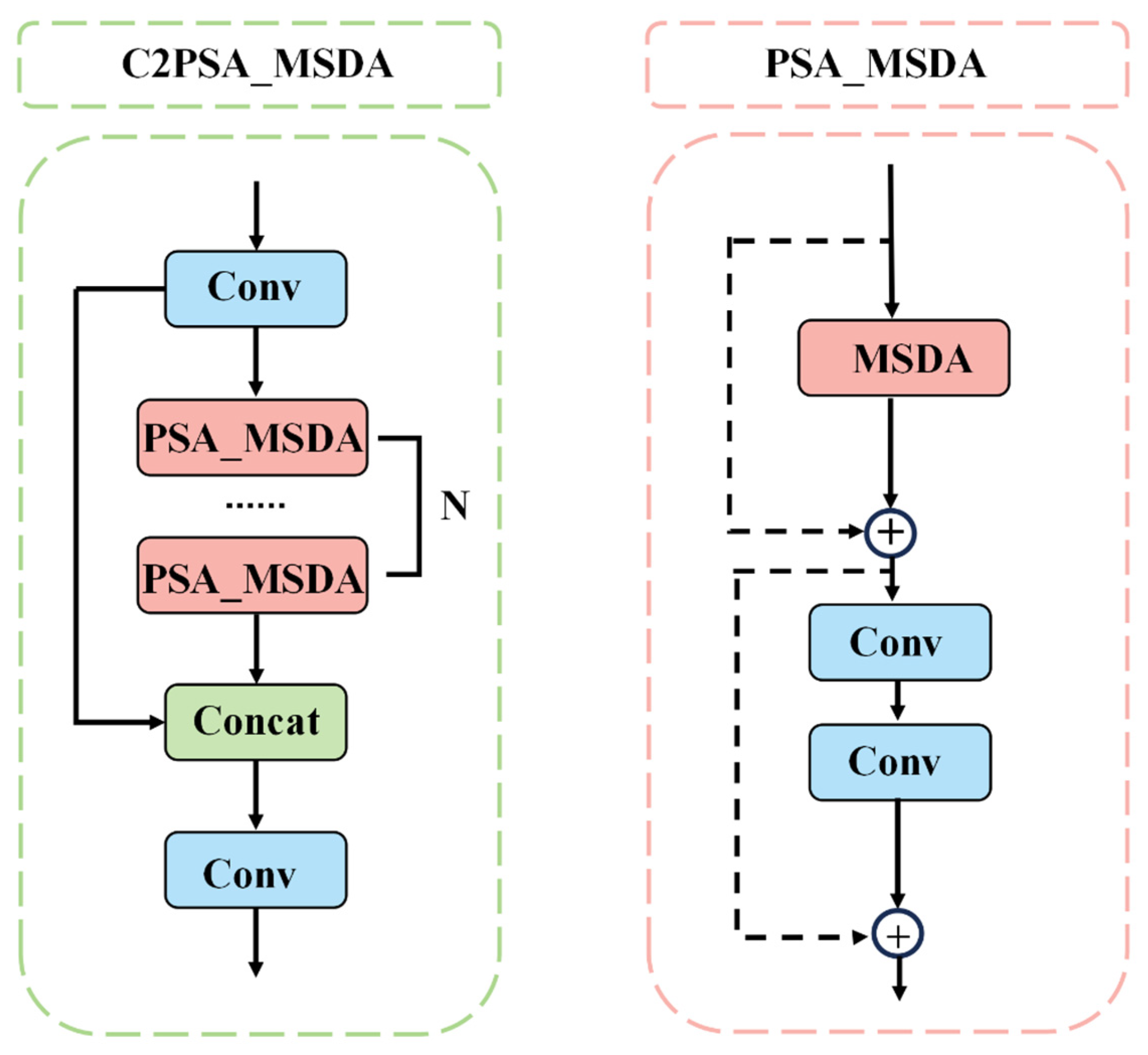

- The multi-scale dilated attention (MSDA) mechanism is integrated into the C2PSA module of the YOLOv11 backbone. This strengthens multi-scale feature extraction and the detection of multi-scale targets, sharpens the focus on key regions, and suppresses interference from complex backgrounds.

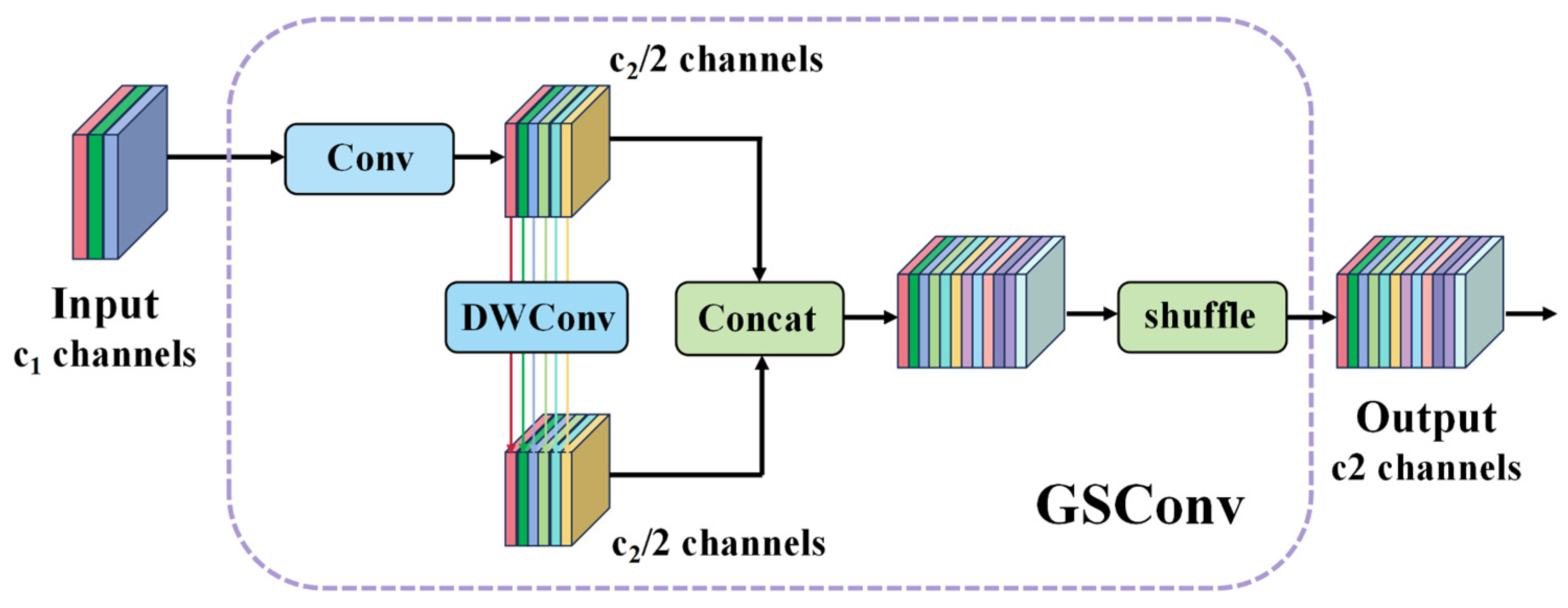

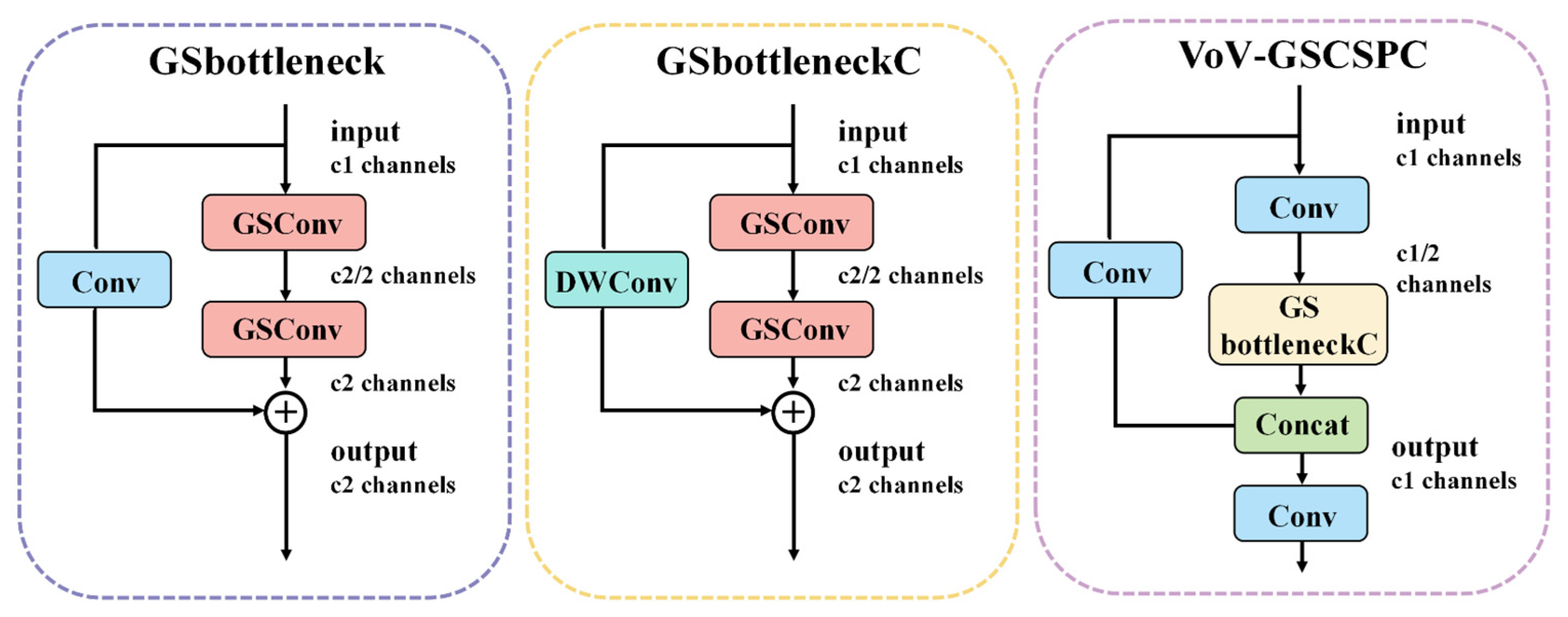

- GSConv and VoV-GSCSPC modules are introduced to build a Slim-Neck. Applying depthwise separable convolution (DWConv) with a fixed channel ratio makes the convolution operations more efficient while fusing feature maps from different stages more effectively. This further reduces model complexity without losing accuracy and increases detection speed, which makes the model better suited to practical detection tasks that require fast, lightweight networks.

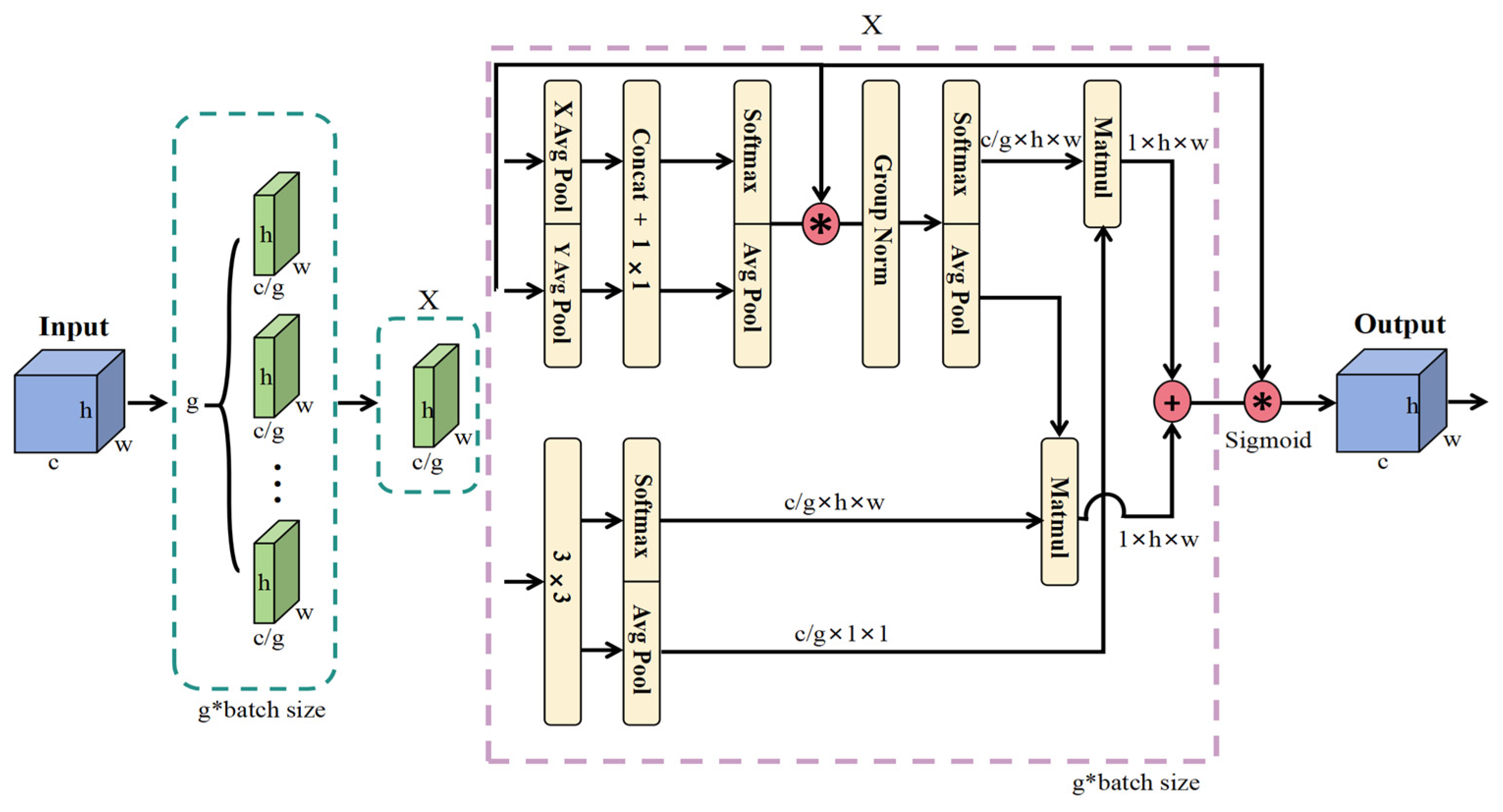

- Efficient Multi-Scale Attention (EMA) is used to enhance the detection head. After EMA is integrated, the channel and batch dimensions are reorganized, cross-dimensional interaction captures pixel-level relationships, and channel attention recalibration compensates for the feature loss caused by the lightweight design, which improves the detection of rare small targets.

2. Related Work

2.1. Underwater Target Detection

2.2. Small Target Detection

3. Proposed Method

3.1. Network Structure

3.2. C2PSA_MSDA Module
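The Introduction describes MSDA as attention that works at several scales at once. As a rough illustration only, the following is a 1-D toy of sliding-window dilated attention in the spirit of DilateFormer, not the C2PSA_MSDA module itself. The function names, the identity query/key/value projections, and the choice to drop out-of-range neighbours instead of padding are all assumptions made for brevity.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dilated_window(center, length, kernel_size, dilation):
    """Key indices visible to the query at `center` (out-of-range ones dropped)."""
    half = kernel_size // 2
    idx = [center + dilation * k for k in range(-half, half + 1)]
    return [i for i in idx if 0 <= i < length]


def swda_1d(q, k, v, kernel_size, dilation):
    """Sliding-window dilated attention over 1-D sequences of feature vectors."""
    dim = len(q[0])
    out = []
    for i, qi in enumerate(q):
        window = dilated_window(i, len(k), kernel_size, dilation)
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(dim) for j in window]
        weights = softmax(scores)
        out.append([sum(w * v[j][c] for w, j in zip(weights, window))
                    for c in range(len(v[0]))])
    return out


def msda_1d(x, head_dim, dilations, kernel_size=3):
    """One head per dilation rate: split channels, attend per head, concatenate."""
    out = [[] for _ in x]
    for h, rate in enumerate(dilations):
        channels = slice(h * head_dim, (h + 1) * head_dim)
        head = [token[channels] for token in x]
        # Assumption: identity projections, so q = k = v = head features.
        for merged, attended in zip(out, swda_1d(head, head, head, kernel_size, rate)):
            merged.extend(attended)
    return out


# Hypothetical input: 6 positions, 4 channels, two heads with dilation 1 and 2.
tokens = [[float(i), 1.0, 0.5 * i, 2.0] for i in range(6)]
mixed = msda_1d(tokens, head_dim=2, dilations=(1, 2))
print(dilated_window(3, 6, 3, 1), dilated_window(3, 6, 3, 2))  # [2, 3, 4] [1, 3, 5]
```

Each head sees the same number of keys, but a larger dilation rate spreads them over a wider span, which is how the heads cover different receptive fields at the same cost.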

3.3. Slim-Neck Structure
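To make the efficiency argument for GSConv concrete, the sketch below counts convolution weights for a standard convolution and for a GSConv-style layer. It assumes the layout of the public Slim-neck reference design: a dense convolution producing half of the output channels, followed by a depthwise convolution over that half (5 × 5 is assumed here), with the two halves concatenated and shuffled. Bias and normalization parameters are ignored, and the channel sizes are illustrative rather than taken from this model.

```python
def conv_params(c_in, c_out, k):
    """Weights of a dense k x k convolution (bias and BatchNorm ignored)."""
    return k * k * c_in * c_out


def depthwise_params(channels, k):
    """Weights of a k x k depthwise convolution: one filter per channel."""
    return k * k * channels


def gsconv_params(c_in, c_out, k, dw_kernel=5):
    """Dense conv to c_out/2 channels plus a depthwise conv on that half.

    Assumption: dw_kernel=5 follows the Slim-neck reference design; the
    concatenation and channel shuffle add no parameters.
    """
    half = c_out // 2
    return conv_params(c_in, half, k) + depthwise_params(half, dw_kernel)


# Hypothetical layer: 128 -> 256 channels with a 3 x 3 kernel.
standard = conv_params(128, 256, 3)
gs = gsconv_params(128, 256, 3)
print(standard, gs, round(gs / standard, 3))  # 294912 150656 0.511
```

Under these assumptions the GSConv-style layer needs roughly half the weights of the dense layer, because the depthwise branch contributes only a small number of parameters.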

3.4. EMA Module

4. Experiments and Discussion

4.1. Dataset

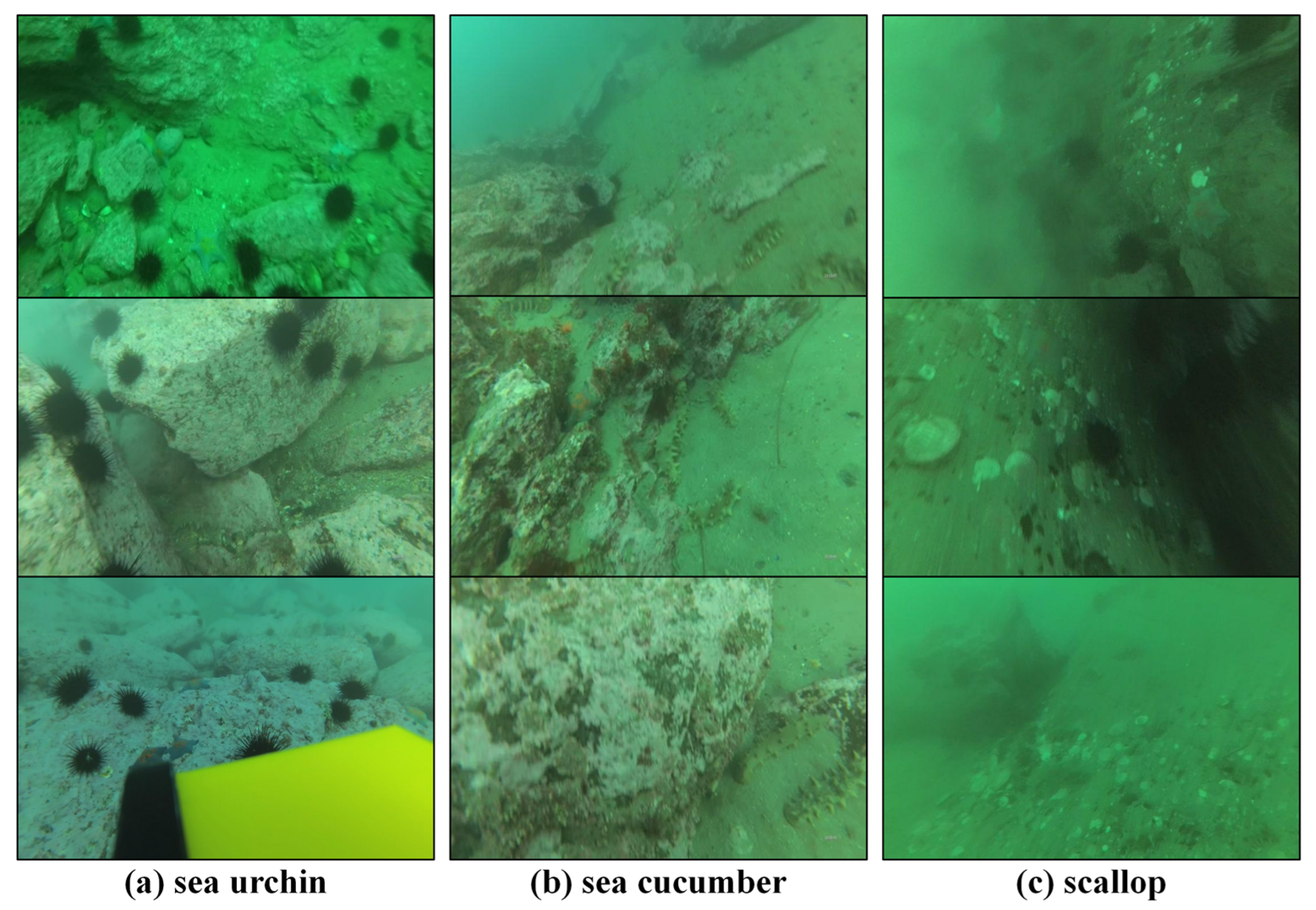

4.1.1. Overview of the Dataset

4.1.2. Image and Target Characteristics

- (1) Optical environment interference: The attenuation and scattering of underwater light cause image blurring and color distortion and make target features harder to recognize, which degrades the performance of the YOLOv11 detection algorithm.

- (2) Small targets and dense distribution: The three target types are generally small and highly aggregated. Existing detection networks perceive small-target features poorly, and distinguishing and localizing targets in dense scenes remains technically difficult.

- (3) Computational resource constraints: The edge devices carried by underwater robots have limited computing power. Deploying high-precision detection models in real time requires a balance between model complexity and inference efficiency, which places higher demands on lightweight network design.

4.2. Implementation Details

4.3. Evaluation Metrics

4.3.1. Precision

4.3.2. Recall
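Precision and recall follow their standard definitions: precision is TP / (TP + FP) and recall is TP / (TP + FN), where TP, FP, and FN are the numbers of true positives, false positives, and false negatives. A minimal sketch follows; the counts are made up for illustration and are not taken from the experiments.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from detection counts.

    tp: detections matched to a ground-truth target
    fp: detections with no matching ground-truth target
    fn: ground-truth targets that were missed
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts: 8 correct detections, 2 false alarms, 4 missed targets.
p, r = precision_recall(tp=8, fp=2, fn=4)
print(round(p, 3), round(r, 3))  # 0.8 0.667
```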

4.3.3. AP

4.3.4. mAP
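AP is the area under the precision-recall curve for one class, and mAP is the mean of AP over all classes. mAP50 uses an IoU threshold of 0.5, while mAP50-95 averages over thresholds from 0.5 to 0.95 in steps of 0.05. The sketch below assumes all-point interpolation and assumes that each detection has already been marked as a true or false positive at a given IoU threshold. The source does not state which interpolation scheme was used, and the sample detections are hypothetical.

```python
def average_precision(detections, num_gt):
    """AP for one class.

    detections: list of (confidence, is_true_positive) pairs
    num_gt: number of ground-truth targets of this class
    """
    if num_gt == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [0.0], [0.0]
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    recalls.append(1.0)
    precisions.append(0.0)
    # Make precision monotonically non-increasing (the PR-curve envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((recalls[i] - recalls[i - 1]) * precisions[i]
               for i in range(1, len(recalls)))


def mean_average_precision(ap_per_class):
    """mAP: the unweighted mean of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Hypothetical class with 2 ground-truth targets and 3 ranked detections.
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
print(round(ap, 4))  # 0.8333
```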

4.3.5. GFLOPs

4.4. Quantitative Analysis

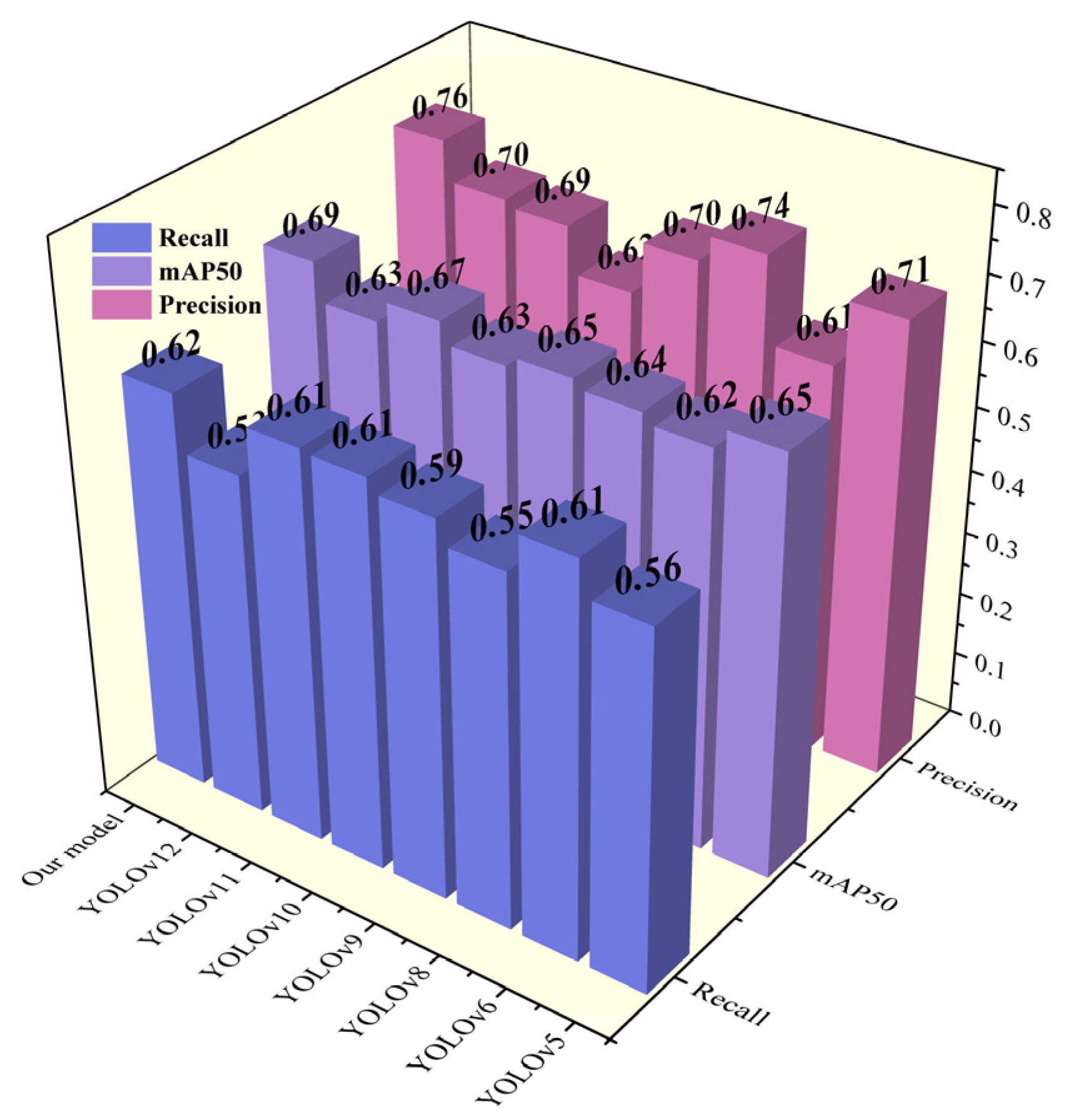

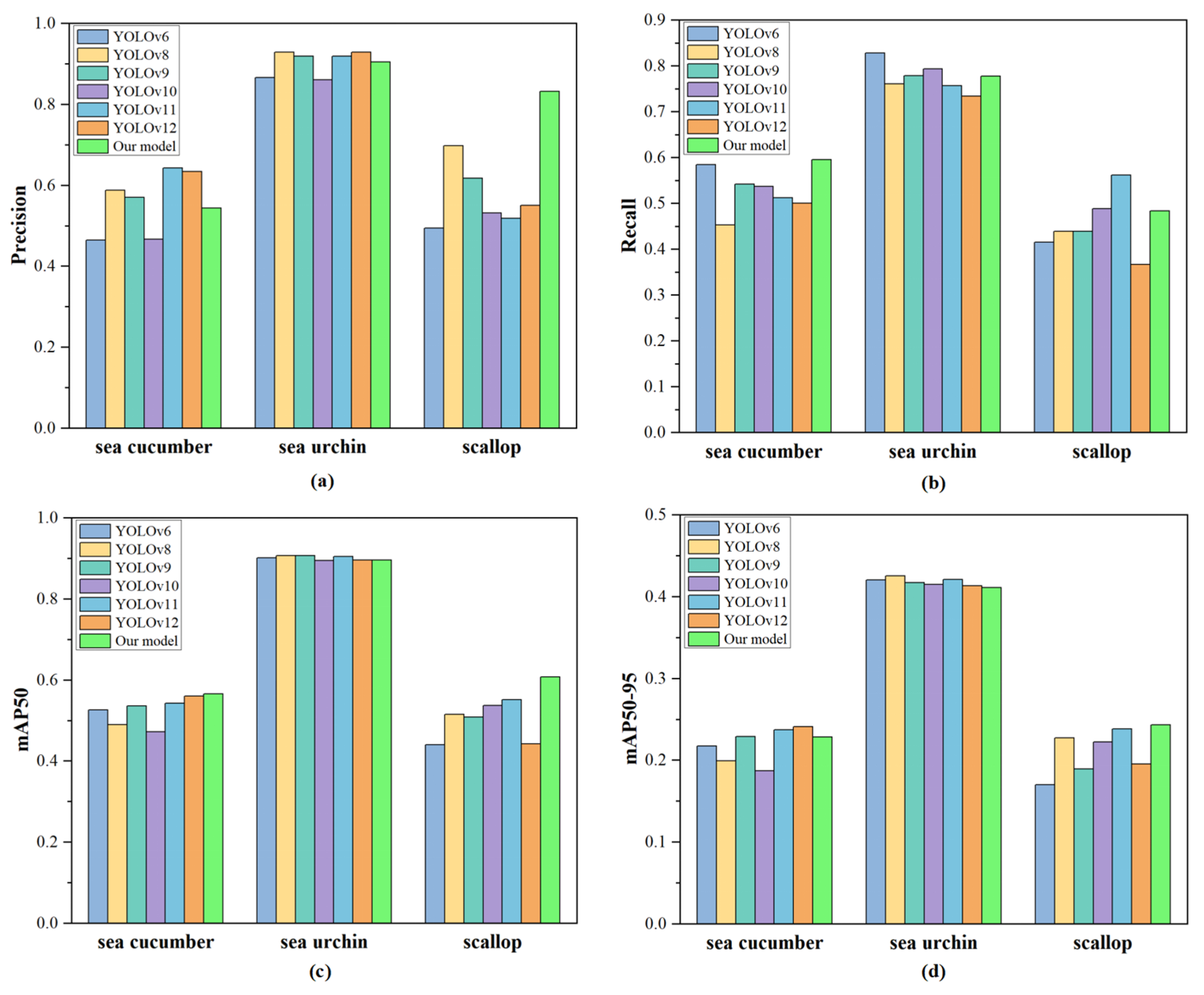

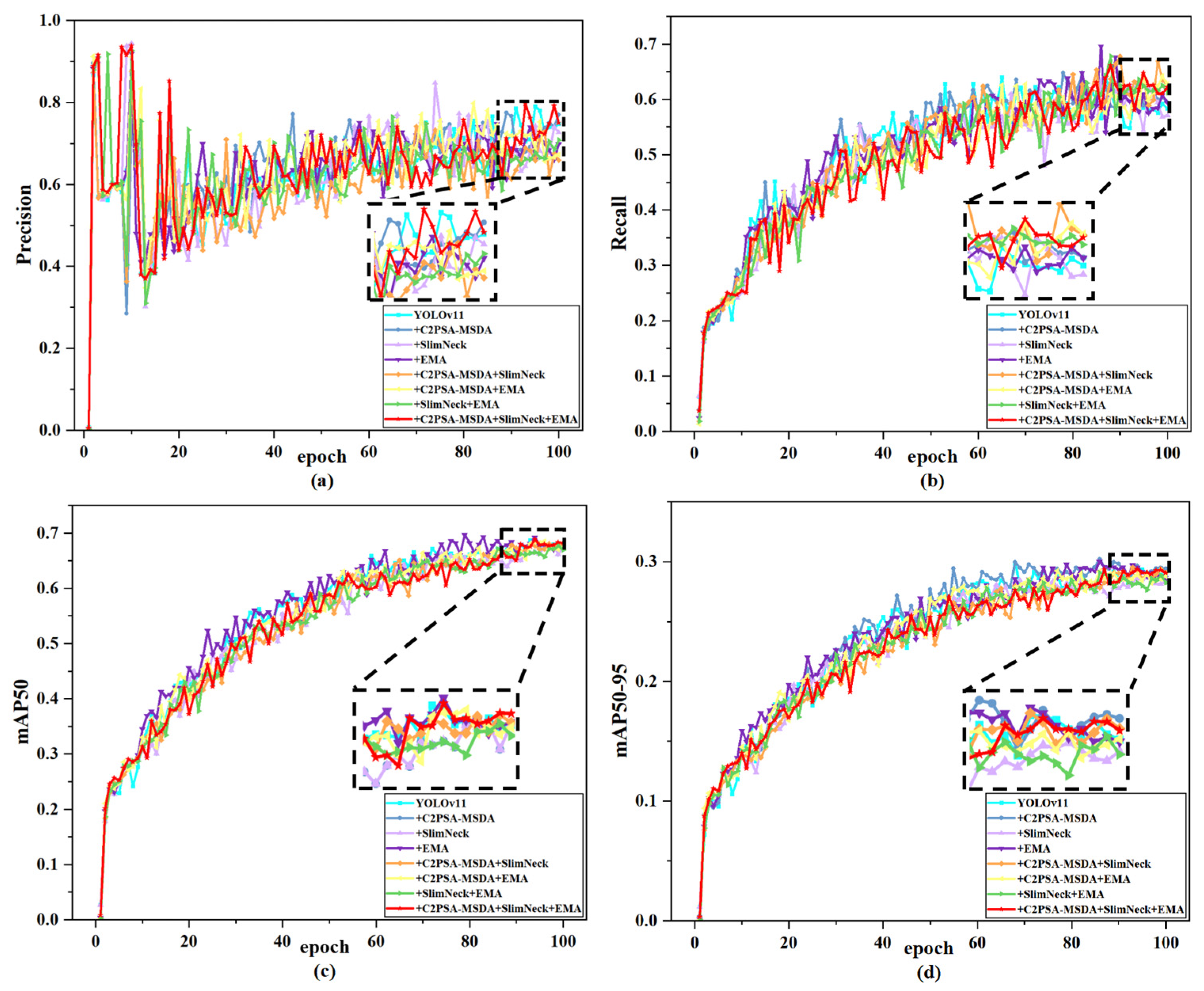

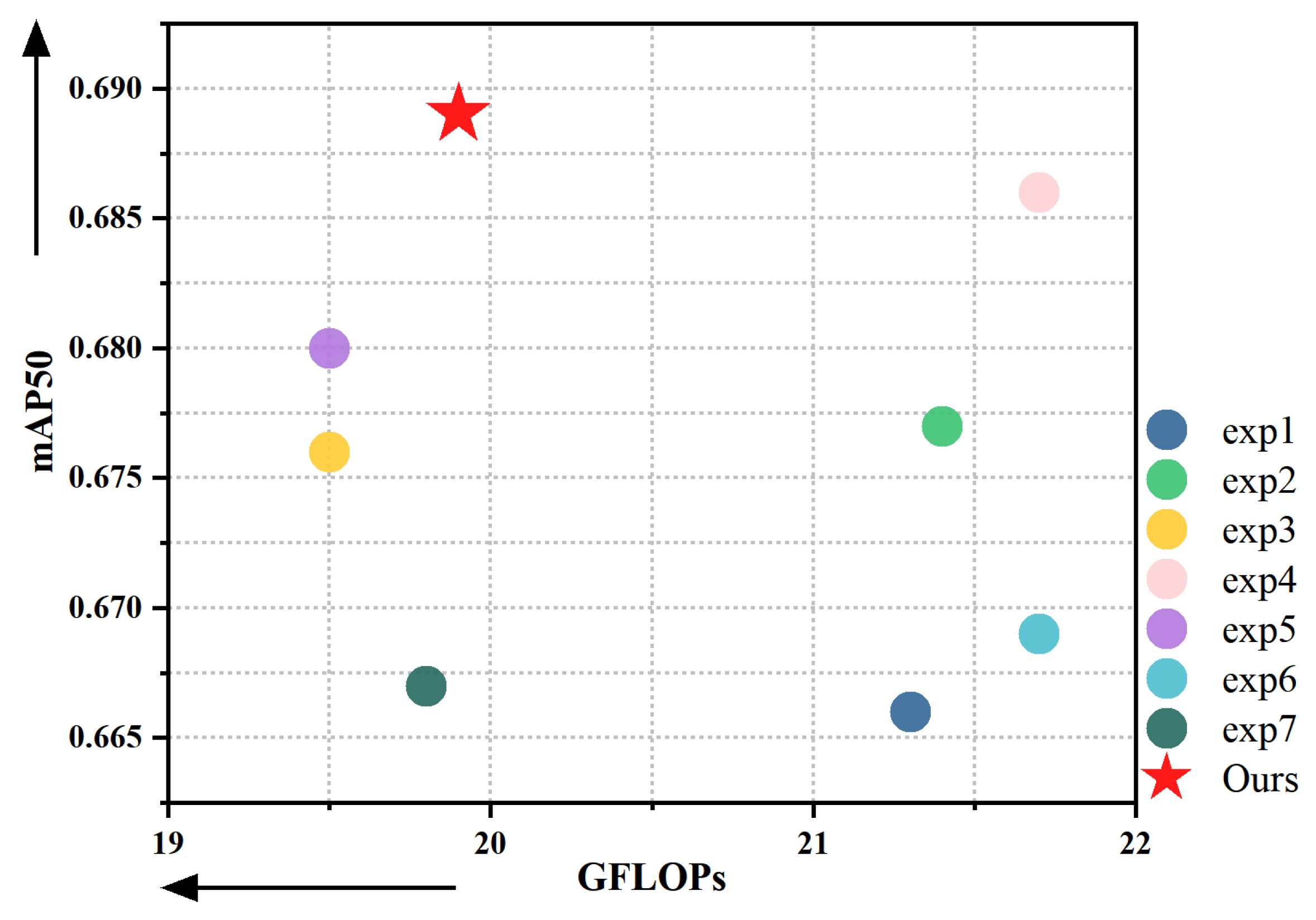

4.5. Ablation Experiments

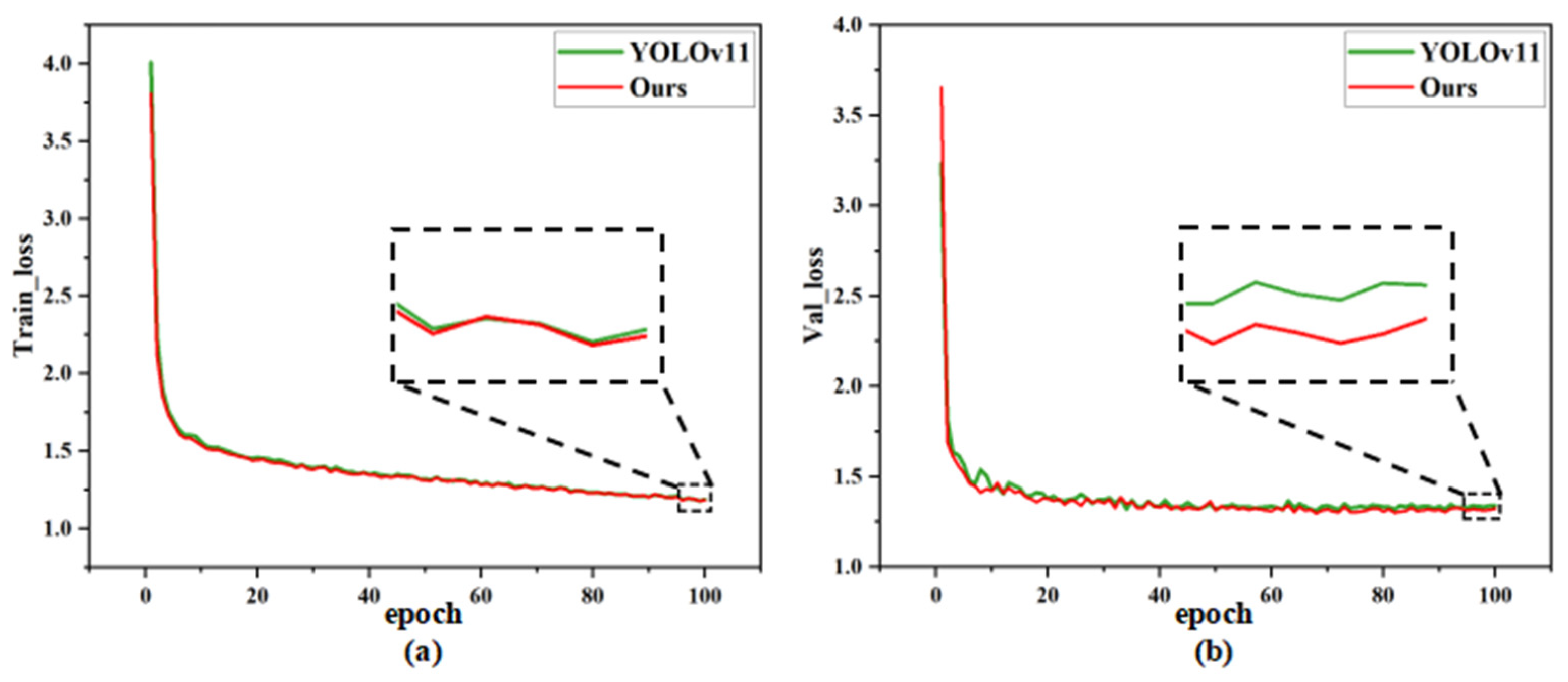

4.6. Model Loss Chart Comparison

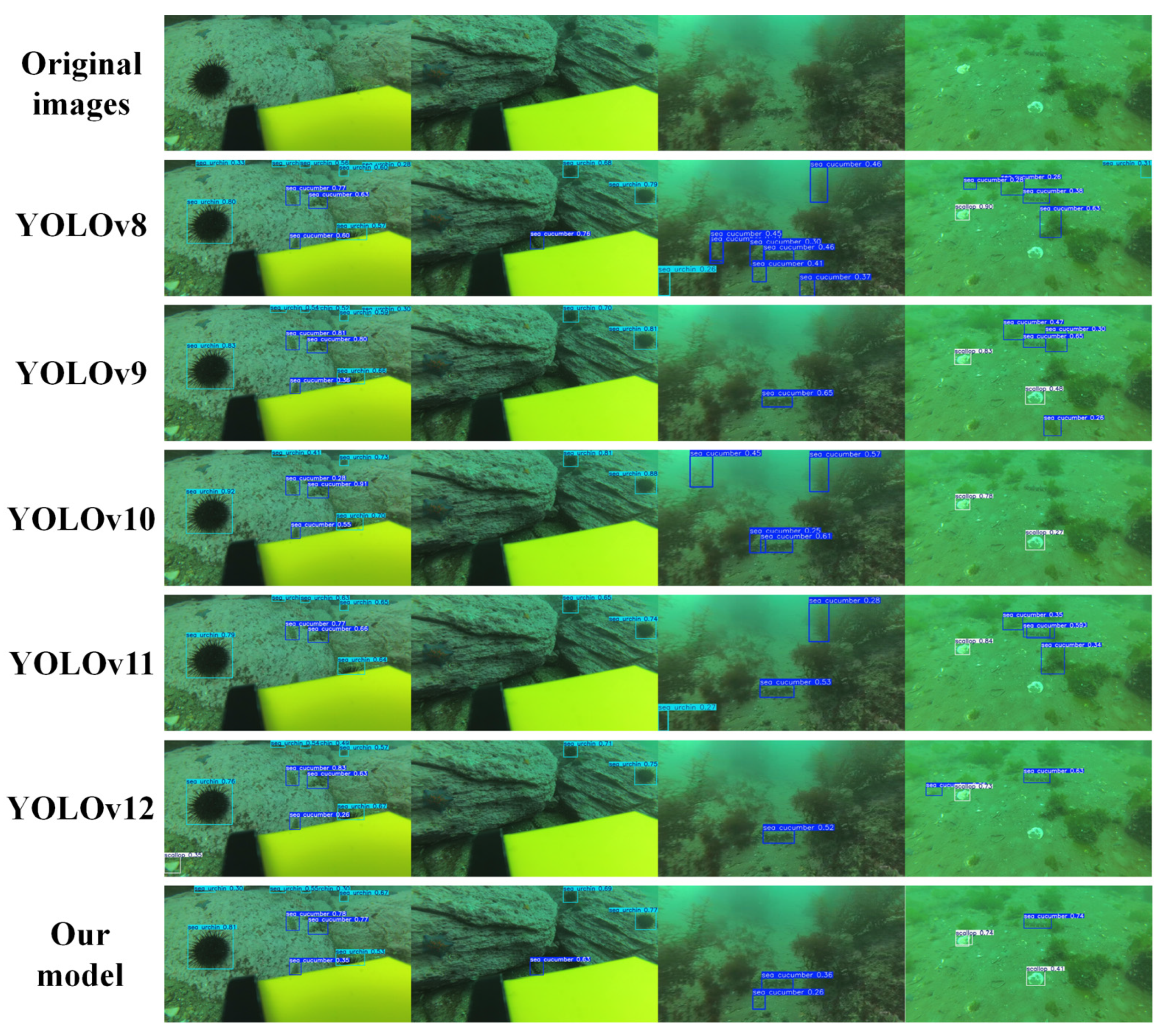

4.7. Visualization Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Category | Characteristics | Detection Challenges |
|---|---|---|
| Sea cucumbers | Elongated body; color similar to the rocks on the sea floor | Easily blends into the background; small targets are easily missed |
| Sea urchins | Black, spiny appearance; visual contrast with sandy background; aggregated distribution | Dense distribution; overlapping targets; fuzzy boundaries make bounding-box localization difficult |
| Scallops | Shell-shaped; varied open/closed states and occlusion conditions; scattered or clustered distribution | Irregular shape and complex occlusion; small scallops easily lose detail; class distribution in the dataset is imbalanced |

| Environment Configuration | Value |
|---|---|
| Operating system | Windows 11 |
| CPU | AMD Ryzen 7 5800H with Radeon Graphics |
| GPU | NVIDIA GeForce RTX 3060 (6 GB) |
| Development environment | PyCharm 2024.3.1.1 |
| Language | Python 3.10.16 |
| Operating platform | PyTorch 2.5.0 + CUDA 11.8 |

| Hyperparameter | Value |
|---|---|
| Epochs | 100 |
| Batch size | 16 |
| Learning rate | 0.01 |
| Momentum | 0.937 |
| Weight decay | 0.0005 |
| Input image size | 640 |
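As a sketch of how these hyperparameters might be passed to a training run, the snippet below maps them onto argument names from the Ultralytics training API (`epochs`, `batch`, `lr0`, `momentum`, `weight_decay`, `imgsz`). The use of Ultralytics, the mapping of "Learning rate" to the initial learning rate `lr0`, and the `train` helper with its `model_cfg` and `data_yaml` parameters are assumptions; the source does not name the training script.

```python
# Values taken from the hyperparameter table; key names are an assumption.
TRAIN_ARGS = {
    "epochs": 100,
    "batch": 16,
    "lr0": 0.01,            # assumed to be the initial learning rate
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "imgsz": 640,
}


def train(model_cfg: str, data_yaml: str):
    """Hypothetical helper: train a YOLO model with the settings above.

    model_cfg and data_yaml are placeholders for a model definition file
    and a dataset description file; neither path is given in the source.
    """
    from ultralytics import YOLO  # imported lazily so the config is usable alone

    model = YOLO(model_cfg)
    return model.train(data=data_yaml, **TRAIN_ARGS)
```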

| Model | Precision | Recall | mAP50 | mAP50-95 | GFLOPs |
|---|---|---|---|---|---|
| YOLOv5 | 0.714 | 0.556 | 0.653 | 0.281 | 23.8 |
| YOLOv6 | 0.608 | 0.609 | 0.622 | 0.269 | 44 |
| YOLOv8 | 0.737 | 0.55 | 0.637 | 0.284 | 28.4 |
| YOLOv9 | 0.702 | 0.586 | 0.65 | 0.278 | 26.7 |
| YOLOv10 | 0.619 | 0.606 | 0.634 | 0.275 | 24.5 |
| YOLOv11 | 0.693 | 0.61 | 0.666 | 0.299 | 21.3 |
| YOLOv12 | 0.704 | 0.533 | 0.632 | 0.283 | 21.2 |
| YOLOv11-MSE (ours) | 0.76 | 0.618 | 0.689 | 0.294 | 19.9 |
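The comparison is easier to read as relative change against the YOLOv11 baseline. The short calculation below uses only the YOLOv11 row and the proposed model's row from the table; the helper name `relative_change` is illustrative.

```python
BASELINE = {"precision": 0.693, "recall": 0.61, "mAP50": 0.666,
            "mAP50-95": 0.299, "GFLOPs": 21.3}   # YOLOv11 row
OURS = {"precision": 0.76, "recall": 0.618, "mAP50": 0.689,
        "mAP50-95": 0.294, "GFLOPs": 19.9}       # proposed model row


def relative_change(new, old):
    """Percentage change of `new` relative to `old`."""
    return (new - old) / old * 100.0


changes = {name: round(relative_change(OURS[name], BASELINE[name]), 2)
           for name in BASELINE}
for name, pct in changes.items():
    print(f"{name}: {pct:+.2f}%")
```

By this calculation, precision and mAP50 rise by about 9.7% and 3.5% relative to the baseline, recall rises by about 1.3%, GFLOPs fall by about 6.6%, and mAP50-95 drops by about 1.7%.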

| No. | Modules added (C2PSA-MSDA, Slim-Neck, EMA) | Precision | Recall | mAP50 | mAP50-95 | GFLOPs |
|---|---|---|---|---|---|---|
| exp1 | Baseline | 0.693 | 0.61 | 0.666 | 0.299 | 21.3 |
| exp2 | √ | 0.717 | 0.612 | 0.677 | 0.302 | 21.4 |
| exp3 | √ | 0.686 | 0.62 | 0.676 | 0.287 | 19.5 |
| exp4 | √ | 0.591 | 0.695 | 0.686 | 0.301 | 21.7 |
| exp5 | √ √ | 0.687 | 0.639 | 0.68 | 0.296 | 19.5 |
| exp6 | √ √ | 0.719 | 0.58 | 0.669 | 0.292 | 21.7 |
| exp7 | √ √ | 0.689 | 0.609 | 0.667 | 0.289 | 19.8 |
| exp8 | √ √ √ | 0.76 | 0.618 | 0.689 | 0.294 | 19.9 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ye, Z.; Peng, X.; Li, D.; Shi, F. YOLOv11-MSE: A Multi-Scale Dilated Attention-Enhanced Lightweight Network for Efficient Real-Time Underwater Target Detection. J. Mar. Sci. Eng. 2025, 13, 1843. https://doi.org/10.3390/jmse13101843
