Abstract

With the ongoing advancement of visual recognition and image processing technology, the application potential of unmanned aerial vehicles (UAVs) in maritime search and rescue has attracted increasing attention. However, limited computing resources, insufficient pixel representation of small objects in high-altitude images, and challenging visibility conditions hinder UAVs' target recognition performance in maritime search and rescue operations, highlighting the need for further optimization. This study introduces a detection framework, CFSD-UAVNet, designed to improve the accuracy of detecting small objects in imagery captured from high altitudes. First, a newly designed PHead structure was proposed to improve the feature pyramid network (FPN) and path aggregation network (PAN), with a focus on better leveraging shallow features. Then, structural pruning was applied to refine the model and enhance its capability to detect small objects. Moreover, a lightweight CED module was introduced to reduce the parameter count and conserve the UAV's computing resources. In addition, a lightweight CRE module was integrated into each detection layer, leveraging attention mechanisms together with the detection heads to improve precision for small objects. Finally, the WIoUv2 loss function was employed to balance the treatment of positive and negative samples and thereby enhance the model's robustness. The CFSD-UAVNet model was evaluated on the publicly available SeaDronesSee maritime dataset and compared with other cutting-edge algorithms. The experimental results showed that CFSD-UAVNet achieved an mAP@50 of 80.1% with only 1.7 M parameters and a computational cost of 10.2 GFLOPs, marking a 12.1% improvement over YOLOv8 and a 4.6% increase compared to DETR.
The CFSD-UAVNet model effectively balances the resource constraints of UAV scenarios with detection accuracy, demonstrating application potential and value for UAV-assisted maritime search and rescue.

1. Introduction

UAV-based object detection technology has shown considerable potential across various domains due to its flexibility and efficiency, particularly in applications like maritime search and rescue [1], traffic monitoring [2], and disaster assessment [3]. However, as an emerging research focus, UAV-based object detection remains in its infancy and faces numerous challenges in practical applications.

First, because UAVs fly at varying altitudes, the captured images often contain numerous small targets. Consequently, the ability of UAV-based object detection algorithms to precisely identify and quickly localize small objects is critical to improving rescue efficiency and success rates. In terrestrial UAV applications, ground targets are sometimes stationary and unaffected by disturbances such as wave-reflected light, so the images captured by UAVs are clearer. In contrast, maritime targets are typically smaller and constantly in motion due to waves. Additionally, the clarity of maritime targets is further degraded by factors such as sea clutter, wave motion, and fog, making their recognition and detection more challenging. Moreover, due to UAVs' limited payload capacity and hardware, onboard algorithms must achieve high-precision small object detection while remaining lightweight and low in complexity. These challenges are especially significant for UAV-based object detection in maritime environments [4].

Over the past few years, deep learning methods have significantly advanced the detection of small objects in maritime environments. Maritime targets, such as boats and buoys, pose substantial detection challenges due to limited pixel resolution from long-distance imaging. To address these challenges, numerous deep learning approaches have been proposed. The Single Shot MultiBox Detector (SSD) [5] uses convolutional layers to generate default boxes, effectively detecting multi-scale objects, including small ones, although its accuracy decreases with insufficient feature fusion. YOLOv4 enhances detection through multi-scale prediction but has high computational complexity, limiting deployment in resource-constrained environments. Feature Pyramid Networks (FPNs) [6] use a top–down pathway to improve feature pyramids, aiding the detection of distant small objects, although accuracy may drop for fast-moving targets. RetinaNet [7] tackles class imbalance with Focal Loss [8], which is beneficial in maritime environments where the background dominates, although challenges remain when targets and backgrounds are similar.

Researchers have advanced the field by devising techniques that integrate data from multiple sensor modalities, such as optical and thermal imaging, to enhance maritime detection capabilities. However, challenges in data synchronization and fusion strategy selection remain. EfficientDet [9], using BiFPN [10] and compound scaling, detects small objects with low computational cost and is suitable for real-time monitoring, but its performance can decline in extreme lighting conditions. Federated learning [11,12], a privacy-preserving distributed framework, enhances detection by integrating distributed data without uploading raw data, but issues like communication overhead and data heterogeneity need further exploration. These techniques have advanced maritime small object detection, supporting maritime safety, defense, and rescue operations, but resource constraints and detection accuracy remain critical challenges [13].

Building upon existing studies, this paper introduces a novel algorithm specifically designed for small object detection in UAV-based maritime applications, referred to as the Cross-Stage Focused Small Object Detection UAVNet (CFSD-UAVNet). To improve detection accuracy for small objects, a newly designed PHead structure was introduced to strengthen the FPN and PAN, with a particular focus on shallow feature information. The model was further optimized using structural pruning, which enhanced its ability to detect small objects. Additionally, to further emphasize small object detection, each detection layer incorporates a CSP-RepViT-EMA (CRE) module, which leverages attention mechanisms in conjunction with the detection heads to enhance precision. To conserve computational resources and improve processing speed, a lightweight CSP Efficient Dual-Layer Module (CED) was proposed, minimizing the parameter count and computational overhead. Finally, to enhance the model's robustness, the WIoUv2 loss function [14] was employed, ensuring a balanced treatment of positive and negative samples.

To assess the performance and generalization capability of CFSD-UAVNet, experiments were carried out on the publicly available SeaDronesSee [15] maritime dataset. First, CFSD-UAVNet's performance was evaluated against other advanced object detection algorithms on the same dataset. The results demonstrated that CFSD-UAVNet outperformed the other algorithms across metrics, achieving an mAP@50 of 80.1%, an improvement of approximately 10% over other mainstream algorithms. Subsequently, the model's parameter count and computational cost were analyzed. The results indicated that CFSD-UAVNet is lightweight, with only 1.7 M parameters and 10.2 G floating-point operations. These findings indicate that CFSD-UAVNet combines accurate detection with efficient computation.

The main contributions of this paper are summarized as follows:

- A high-precision detection model, CFSD-UAVNet, is proposed for UAV-based maritime small object detection applications.

- To improve small object detection accuracy, a new PHead structure is designed to enhance the FPN and PAN, with a greater emphasis on shallow feature information. Structural pruning is applied to optimize the network, improving the detection accuracy for small objects.

- To further focus on small object detection, the CRE module is incorporated into each detection layer, leveraging attention mechanisms in conjunction with detection heads to enhance small object detection accuracy.

- A lightweight CED module is proposed, simplifying the model by decreasing parameters and the computational burden.

2. Related Work

To overcome the difficulty of identifying small targets in UAV images, which often occupy only a few pixels and are difficult to detect accurately, researchers have proposed various improvements. Xu et al. [16] proposed a DRSR to improve the clarity of small targets degraded by transformations. This module recovers and enhances the details of small objects, increasing detection accuracy by improving their resolution in the image. Li et al. [17] used the k-means clustering algorithm to identify the most appropriate anchor box sizes and ratios for specific target dimensions and shapes, which improves the precision of small target identification and adapts to the varying demands of object detection across diverse settings. Bi et al. [18] proposed APFNet, a network aimed at integrating multi-level features. This network directly integrates the final feature fusion path with the backbone network, thereby enhancing the positional and semantic information of deep features. Nevertheless, some small-target images captured by UAVs still suffer from false negatives and misidentifications. To address these issues, techniques such as multi-scale fusion and feature enhancement mechanisms have been adopted to improve the detection accuracy of small targets in UAV images.

Apart from the methods mentioned above, multi-scale fusion techniques play a vital role in enhancing small object detection. Zhou et al. [19] proposed a GAFN to enhance feature fusion efficiency. The network employs a three-tier adaptive feature fusion method, which dynamically integrates multi-scale features based on the significance of each feature layer, thereby enhancing the accuracy of detecting both small and multi-scale targets. Sun et al. [20] introduced GD-PAN, which combines the advantages of classical path aggregation networks with the collect–distribute mechanism, enabling a deeper and more extensive integration of feature maps across scales and thereby enhancing fine-grained processing for small object detection. Zhou et al. [21] enhanced the original detection head with the CASFF method, resolving conflicts in multi-scale information and improving both the accuracy of small object localization and the perception of long-range dependencies. Jiang et al. [22] designed an MSFEM to improve the network's capacity to capture detailed features of small-scale targets. This module extracts rich multi-scale features through convolution operations at different scales across multiple branches. However, real-time performance remains a challenge for such multi-scale methods in maritime small object detection; it can be improved through lightweight networks and techniques such as pruning.

Since multi-scale fusion techniques have proven effective in boosting small object detection accuracy, researchers have also incorporated attention mechanisms to better discern target characteristics across scales. Chen et al. [23] introduced the BRA method and combined it with attention-enhanced dynamic detection heads to intensify the model's attention to small objects and improve detection accuracy. However, this study did not fully address occlusion or model lightweighting. Zeng et al. [24] developed a dual-branch fusion attention block based on the coordinate attention strategy. By combining features enhanced through coordinate attention with lower-level features, it strengthens the network's ability to exploit rich features from multiple layers. Tang et al. [25] proposed an attention mechanism called SCPMA, which effectively models the spatial and channel dependencies of small objects at different scales. Despite these optimizations, false positives and missed detections persist; they could be further mitigated by advanced post-processing techniques such as Soft-NMS and by domain-specific data augmentation that enhances model robustness.

3. Method

3.1. Overall Network Architecture of the Model

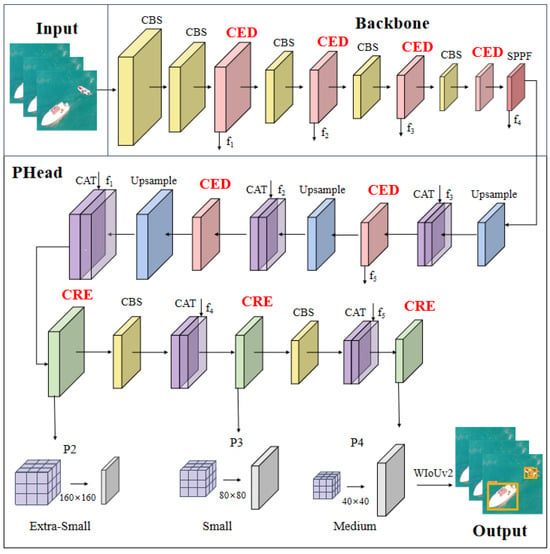

Figure 1 illustrates the overall structure of the proposed CFSD-UAVNet model, which consists of two main components: the Backbone and the PHead. The Backbone consists of lightweight CED modules, CBS convolutions, and an SPPF module. The input is first processed by consecutive CBS modules for feature extraction, and alternating CED modules further enhance the feature representation. After multiple layers of processing, the Backbone generates four sets of multi-scale feature maps: f1, f2, f3, and f4. Finally, the SPPF module integrates deep features to capture global contextual information for subsequent operations. The PHead structure then further enhances the model's capacity to detect small objects through hierarchical processing and feature fusion. First, the resolution of deep features is progressively restored through three upsampling layers, and the upsampled features are concatenated with shallow features from the Backbone, thus fully integrating spatial and semantic information from different levels. After each feature concatenation, the CRE module refines the feature maps of the detection layers, allowing the model to pay more attention to small objects. The resulting feature maps from the three detection layers are fed into three detection heads, P2, P3, and P4, which detect targets at different scales: P2 focuses on the smallest targets, while P3 and P4 handle both small and medium-sized targets. This multi-scale detection mechanism adapts to the significant variation in target size in UAV-based maritime scenarios. Additionally, the model adopts the WIoUv2 loss function to improve the accuracy and robustness of bounding box predictions.
Overall, through lightweight feature extraction and multi-scale fusion, CFSD-UAVNet forms an efficient model for UAV-based maritime small object detection.

Figure 1.

Overall network architecture of the CFSD-UAVNet.
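As a rough illustration of what the P2/P3/P4 head layout implies, the sketch below computes the grid size and number of grid-cell predictions for each head. It assumes a 640 × 640 input (the input size is not given in this section) and the usual YOLO convention that head Pk runs at stride 2^k, and it compares the result with a conventional P3/P4/P5 layout; `head_grids` and `total_predictions` are hypothetical helper names.

```python
# Sketch: grid size and number of grid-cell predictions per detection head.
# Assumptions (not from the paper): square 640x640 input, one prediction per
# grid cell, strides 4/8/16 for P2/P3/P4 and 8/16/32 for P3/P4/P5.

def head_grids(input_size, strides):
    """Return {stride: (grid_side, cells)} for each detection head."""
    grids = {}
    for s in strides:
        side = input_size // s          # feature-map side length at this stride
        grids[s] = (side, side * side)  # one prediction per grid cell
    return grids


def total_predictions(grids):
    """Sum of grid cells over all heads."""
    return sum(cells for _, cells in grids.values())


if __name__ == "__main__":
    cfsd = head_grids(640, [4, 8, 16])       # P2, P3, P4 (32x head pruned)
    baseline = head_grids(640, [8, 16, 32])  # conventional P3, P4, P5
    for name, g in [("P2/P3/P4", cfsd), ("P3/P4/P5", baseline)]:
        print(name, g, "total:", total_predictions(g))
```

Under these assumptions the P2 head alone contributes 25,600 of the 33,600 grid-cell predictions, four times the 8,400 of the P3/P4/P5 layout, which illustrates the extra cost that a finer head introduces.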

3.2. PHead

To tackle the issue of small objects occupying a small portion of the image and the challenges in extracting their features, we proposed a PHead structure optimized with FPN and PAN. This structure, while incorporating a small object detection head, removes redundant large object detection heads, focusing on improving the model’s capability to detect small object features.

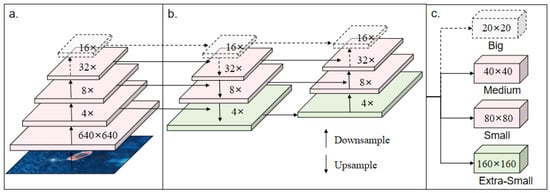

Traditional network structures typically utilize a two-level feature pyramid, which is suitable for real-time object detection in high-resolution aerial images but performs poorly in UAV-based small object detection tasks. Therefore, we extend the feature pyramid from two levels to three, strengthening the combined fusion capability of FPN and PAN. The additional pyramid level fuses features obtained from 4× downsampling, improving the extraction and utilization of high-resolution features and thus the network's sensitivity to small objects and its detection accuracy. To detect even smaller objects, an additional 4× downsampling detection head is introduced. With the extended pyramid, this detection head can fully leverage shallow feature maps, capturing more comprehensive feature and positional information.

The optimized network structure is shown in Figure 2. Figure 2a shows the feature maps extracted from the original input at different downsampling rates by the feature extraction network; Figure 2b shows the feature maps after fusion through the FPN and PAN structures; and Figure 2c shows the detection heads tailored for different object sizes. While this design improves small object detection accuracy, it also increases network complexity. In the SeaDronesSee dataset, small objects dominate, while large objects are relatively sparse. To reduce network complexity, we prune the modules related to 32× downsampling: specifically, the 32× downsampling feature extraction module and the 32× downsampling detection head, highlighted in gray in Figure 2, are removed. This pruning improves computational efficiency, reduces memory usage, and shortens training time, increasing the model's practical value. Tailored to small object detection in marine environments, it eliminates redundant processing of large-object features and makes more efficient use of computational resources.

Figure 2.

Overall network architecture of the PHead. (a) Feature maps extracted from the original input at different downsampling rates by the feature extraction network; (b) feature maps after fusion through the FPN and PAN structures; (c) detection heads tailored for different object sizes.
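To see why a stride-4 head helps while a stride-32 head adds little for small targets, one can compute how many feature-map cells an object spans at each stride. The sketch below does so for a hypothetical 16 × 16 px swimmer; the object size is illustrative rather than a SeaDronesSee statistic, and `cells_covered` is a hypothetical helper.

```python
# Sketch: approximate number of feature-map cells covered by a box at each stride.
# The 16x16 px object is illustrative only.

def cells_covered(obj_w, obj_h, stride):
    """Area of an obj_w x obj_h pixel box measured in stride x stride grid cells."""
    return (obj_w / stride) * (obj_h / stride)


if __name__ == "__main__":
    for stride in (4, 8, 16, 32):
        print(f"stride {stride:>2}: {cells_covered(16, 16, stride):.2f} cells")
```

At stride 32 the object covers a quarter of one cell, so its features are largely mixed with the surrounding sea, whereas at stride 4 it spans a 4 × 4 block of cells.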

3.3. CSP-RepViT-EMA Module (CRE)

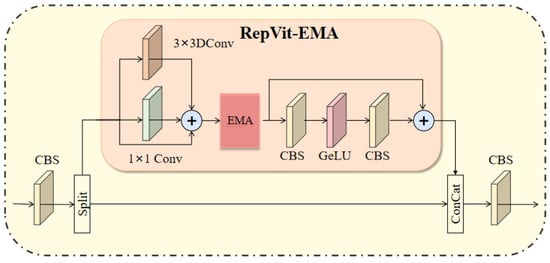

To improve the UAV’s ability to detect small targets in challenging maritime environments, the improved RepViTBlock module [26] is integrated into the detection layer and combined with the CSP structure [27], forming an attention-based lightweight CSP-RepViT-EMA (CRE) module, as shown in Figure 3. This integration further strengthens the model’s ability to recognize and focus on small targets.

Figure 3.

Structural diagram of the CRE module.

The RepViT module is a compact and efficient network structure that integrates the strengths of the Vision Transformer [28] and Convolutional Neural Networks (CNNs). The RepViT module first employs 3 × 3 depthwise convolutions to extract spatial features, followed by 1 × 1 convolutional layers for channel expansion and mapping. This design effectively captures local features, such as the fine details of ocean waves and small targets, which helps suppress background interference. A feed-forward network (FFN) with residual connections both deepens the network and mitigates the information loss caused by resolution reduction. In addition, the SE attention module [29] enhances the model's focus on critical features by dynamically recalibrating channel weights, thereby amplifying significant channels and increasing sensitivity to essential details.

However, the fully connected (FC) layers and activation functions of the SE module introduce computational overhead and latency, which reduces efficiency in resource-limited environments. To tackle this challenge, the SE module is replaced with the EMA module [30], resulting in the more efficient RepViT-EMA module. As a multi-scale attention mechanism, EMA not only reduces computational overhead but also captures contextual information of multi-scale targets. This enables the model to better handle variations in target size, thereby enhancing its robustness in dynamic maritime scenarios. When the RepViT-EMA module is integrated into the CSP structure, the feature map is divided into two parts: one part is processed by the RepViT-EMA module, while the other remains unchanged. The two parts are then concatenated to form the lightweight CRE module. This cross-stage connection integrates shallow texture information with deep semantic information, enhancing the module's ability to detect small maritime targets. The CRE module is applied to the critical detection layers of the model, ensuring that small target features are effectively captured across different scales.
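The split, transform, and concatenate pattern that the CSP structure contributes to the CRE module can be sketched in plain Python. This is a toy illustration under stated assumptions: channels are lists of floats, `csp_block` and `toy_branch` are hypothetical names, and `toy_branch` merely scales values as a stand-in for the RepViT-EMA block.

```python
# Sketch of CSP-style partial processing: split channels, transform one part,
# concatenate it with the untouched part. `toy_branch` is a placeholder, not
# an actual RepViT-EMA block.
from typing import Callable, List

Channel = List[float]  # one channel, spatially flattened


def csp_block(x: List[Channel],
              branch: Callable[[List[Channel]], List[Channel]]) -> List[Channel]:
    half = len(x) // 2
    shortcut, processed = x[:half], x[half:]  # split along the channel axis
    processed = branch(processed)             # only this part is transformed
    return shortcut + processed               # concatenate along channels


def toy_branch(chs: List[Channel]) -> List[Channel]:
    """Placeholder transform: doubles every value."""
    return [[2.0 * v for v in ch] for ch in chs]


if __name__ == "__main__":
    feats = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]  # 4 channels, 2 "pixels"
    print(csp_block(feats, toy_branch))  # first two channels unchanged, last two doubled
```

Practical CSP-style blocks (e.g., the C2f block in YOLOv8) also apply 1 × 1 convolutions before the split and after the concatenation; they are omitted here to keep the data flow visible.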

3.4. CSP Efficient Dual-Layer Module (CED)

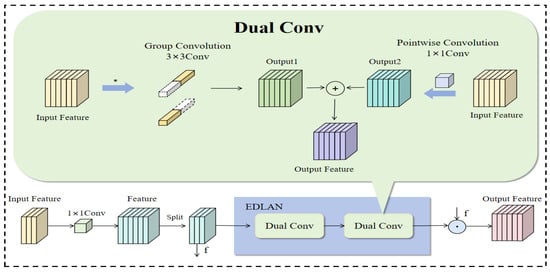

In UAV-based maritime small object detection tasks, to improve detection efficiency and reduce computational resource consumption, this paper designs a lightweight backbone network module called the CSP Efficient Dual-Layer Module (CED). This module is specifically designed to efficiently extract and aggregate multi-level features while optimizing computational complexity.

The core of CED lies in the EDLAN structure, which is primarily composed of Dual Conv [31]. By combining group convolution and pointwise convolution, this convolutional structure efficiently extracts both local and global features of small maritime targets. Group convolution reduces computational complexity by dividing the input feature map into several groups and convolving each group independently; it focuses on capturing fine-grained local features, such as wave textures, sunlight reflections, and the edges of small objects. Pointwise convolution then integrates information across all channels, merging local features into global semantic information, maintaining context across multi-scale features, and enriching the diversity of feature representations. The output of Dual Conv is obtained by adding the local features from the grouped convolution to the cross-channel features from the pointwise convolution. This operation helps capture the distinctive textures and dynamic characteristics of maritime scenes, such as wave patterns, vessel contours, and the locations of persons overboard. The EDLAN structure stacks two Dual Conv layers, enabling progressive extraction and fusion of small target features across multiple levels and yielding efficient, lightweight deep feature representations.
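The parameter savings of a Dual Conv-style layer can be estimated with a short calculation. The sketch assumes bias-free convolutions and a 3 × 3 group convolution with G = 4 groups running in parallel with a 1 × 1 pointwise convolution whose outputs are summed, as described above; the kernel size and G are illustrative choices rather than settings stated in the paper, and the function names are hypothetical.

```python
# Sketch: weight count of a Dual Conv-style layer vs. a standard 3x3 convolution.
# Assumptions: no biases; a 3x3 group conv (G groups) in parallel with a 1x1
# pointwise conv, outputs summed; kernel size and G are illustrative.

def standard_conv_params(c_in, c_out, k=3):
    """Weights of a dense k x k convolution."""
    return k * k * c_in * c_out


def dualconv_params(c_in, c_out, groups=4, k=3):
    """Weights of the grouped k x k branch plus the 1x1 pointwise branch."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by the number of groups")
    grouped = k * k * (c_in // groups) * c_out  # each filter sees c_in / G input channels
    pointwise = c_in * c_out                    # 1x1 filters see all input channels
    return grouped + pointwise


if __name__ == "__main__":
    std = standard_conv_params(64, 64)
    dual = dualconv_params(64, 64, groups=4)
    print(std, dual, round(dual / std, 3))
```

Per output channel, the weight count falls from 9·C_in to (9/G + 1)·C_in, so with G = 4 the layer needs about 36% of the weights of a plain 3 × 3 convolution.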

To adapt to the complex environment of maritime small object detection, the CED module employs the CSP architecture. This architecture divides the feature map into two parts for independent processing, followed by the fusion of the processed features. This design not only effectively integrates feature information from different layers but also reduces redundant computations, further alleviating the computational burden of the network. Figure 4 illustrates the structure of the CED module.

Figure 4.

Structural diagram of the CED module. * represents the convolution operation.

3.5. WIoUv2

In UAV-based maritime object detection, sea-surface reflections and waves can blur the boundaries of small targets, and targets are often densely distributed.

An appropriate bounding-box regression loss can therefore improve the model's performance. WIoUv2 is chosen as the loss function because it strengthens the model's attention to small, hard samples in UAV-based maritime rescue scenarios.

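The WIoU (Wise-IoU) loss function builds upon the traditional IoU loss, $\mathcal{L}_{IoU} = 1 - IoU$. Its first version, WIoUv1, adds a distance-based attention term that increases the loss of predicted boxes whose centres lie far from the ground-truth centre:

$$\mathcal{L}_{WIoUv1} = \mathcal{R}_{WIoU}\,\mathcal{L}_{IoU}, \qquad \mathcal{R}_{WIoU} = \exp\left(\frac{(x - x_{gt})^{2} + (y - y_{gt})^{2}}{\left(W_{g}^{2} + H_{g}^{2}\right)^{*}}\right)$$

where $(x, y)$ and $(x_{gt}, y_{gt})$ are the centres of the predicted and ground-truth boxes, $W_g$ and $H_g$ are the width and height of their smallest enclosing box, and the superscript $*$ denotes detachment from the computational graph. This paper adopts WIoUv2 among the three available versions of WIoU. Drawing inspiration from the monotonic focusing mechanism that Focal Loss applies to cross-entropy, WIoUv2 multiplies $\mathcal{L}_{WIoUv1}$ by a monotonic focusing coefficient, which reduces the contribution of easy examples to the loss value. This allows the model to concentrate on hard examples, thereby improving bounding-box regression. The formula is as follows:

$$\mathcal{L}_{WIoUv2} = \left(\mathcal{L}_{IoU}^{*}\right)^{\gamma}\mathcal{L}_{WIoUv1}, \qquad \gamma > 0$$

Because the gradient gain decreases as $\mathcal{L}_{IoU}$ decreases, which slows model convergence, WIoUv2 introduces the mean value of $\mathcal{L}_{IoU}$ as a normalization factor, as shown in the following formula:

$$\mathcal{L}_{WIoUv2} = \left(\frac{\mathcal{L}_{IoU}^{*}}{\overline{\mathcal{L}_{IoU}}}\right)^{\gamma}\mathcal{L}_{WIoUv1}$$

where $\overline{\mathcal{L}_{IoU}}$ denotes the moving average of $\mathcal{L}_{IoU}$ with momentum $m$, which keeps the gradient gain consistently high and thus tackles the problem of slow convergence. In this work, WIoUv2 is applied to maritime small object detection, where its normalized gradient gain improves detection in UAV-based scenarios, accelerating convergence and reducing the risk of overfitting.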
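A minimal, framework-free sketch of the WIoUv2 computation for one predicted/ground-truth box pair is given below, following the WIoU formulation [14]: $\mathcal{L}_{IoU} = 1 - IoU$, a v1 attention factor $\exp\big(((x - x_{gt})^2 + (y - y_{gt})^2)/(W_g^2 + H_g^2)\big)$ built from the centre distance and the smallest enclosing box, and a v2 focusing factor $(\mathcal{L}_{IoU}/\overline{\mathcal{L}_{IoU}})^{\gamma}$ that uses a running mean. Boxes in (x1, y1, x2, y2) format, γ = 0.5, momentum m = 0.01, and an initial mean of 1.0 are assumptions for illustration, and the class and function names are hypothetical. In an autograd framework, the enclosing-box term and the focusing factor would be detached from the gradient.

```python
import math

# Sketch of WIoUv1/WIoUv2 for a single box pair. Boxes are (x1, y1, x2, y2).
# gamma, momentum, and the initial running mean are illustrative assumptions.


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def wiou_v1(pred, gt):
    """Return (L_WIoUv1, L_IoU)."""
    l_iou = 1.0 - iou(pred, gt)
    # Centre offsets between predicted and ground-truth boxes.
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    # Width and height of the smallest enclosing box (detached in practice).
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    r_wiou = math.exp((dx * dx + dy * dy) / (wg * wg + hg * hg))
    return r_wiou * l_iou, l_iou


class WIoUv2:
    """Keeps a running mean of L_IoU used to normalise the focusing factor."""

    def __init__(self, gamma=0.5, momentum=0.01, init_mean=1.0):
        self.gamma = gamma
        self.momentum = momentum
        self.mean_l_iou = init_mean

    def __call__(self, pred, gt):
        l_v1, l_iou = wiou_v1(pred, gt)
        # Moving average of L_IoU with momentum m (no gradient in a real framework).
        self.mean_l_iou = (1 - self.momentum) * self.mean_l_iou + self.momentum * l_iou
        focus = (l_iou / self.mean_l_iou) ** self.gamma
        return focus * l_v1


if __name__ == "__main__":
    loss_fn = WIoUv2()
    print(loss_fn((0, 0, 10, 10), (5, 0, 15, 10)))
```

Updating the running mean before computing the focusing factor is one possible ordering; a batched implementation would typically update it once per batch using the mean $\mathcal{L}_{IoU}$ of that batch.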

4. Experimental Results and Discussion

4.1. Dataset Description and Experimental Design

1. SeaDronesSee dataset and MOBDrone dataset

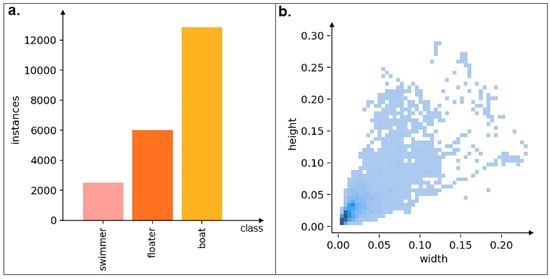

The SeaDronesSee dataset serves as a benchmark for visual object detection and tracking, aiming to bridge the gap between land-based and sea-based vision systems. The dataset contains over 54,000 frames and approximately 400,000 instances, captured by UAVs at altitudes from 5 to 260 m and viewing angles from 0 to 90 degrees. It supports multi-object and multi-scale detection. This diversity makes the dataset rich but also challenging, which affects the performance of detection models. The dataset used in this study includes 5630 images and 24,131 targets, with re-labeled imbalanced samples, and maintains the original dataset partition used in the competition to ensure a fair comparison: the training set includes 2975 images (52.8%), the validation set 859 images (15.3%), and the test set 1796 images (31.9%). Figure 5 illustrates the quantity and size of each category. Figure 5a presents a bar chart of the number of samples in each category (e.g., swimmer, floater, boat). Figure 5b displays a scatter density plot of the target size distribution, where the data points are concentrated in the lower-left corner, indicating that most targets in the dataset have the small widths and heights typical of small object detection.

Figure 5.

Category quantity and size of the SeaDronesSee Dataset. (a) Bar chart of the number of samples per category. (b) Scatter density plot of width and height distribution.

The MOBDrone dataset is a large-scale collection of aerial imagery focused on maritime environments, specifically capturing images under varying heights, camera angles, and lighting conditions. This dataset comprises 126,170 images extracted from 66 video clips recorded by a UAV flying at altitudes ranging from 10 to 60 m. The images are annotated with over 180,000 bounding boxes to label five object categories: humans, boats, lifebuoys, surfboards, and wood. Particular emphasis is placed on annotating humans in water to simulate scenarios requiring rescue operations for individuals overboard. To ensure the dataset’s diversity and representativeness, 8000 images were randomly sampled for experiments in this study. The chosen samples were divided into training, validation, and testing subsets in a ratio of 7:2:1, which is conducive to the construction and assessment of models tailored for maritime applications.
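The split sizes above can be checked with simple arithmetic. The sketch recomputes the SeaDronesSee split percentages from the stated image counts and derives integer 7:2:1 subset sizes for the 8000 sampled MOBDrone images; the helper names and the remainder-handling rule are hypothetical.

```python
# Sketch: split bookkeeping for the two datasets. Helper names are hypothetical.

def split_percentages(counts):
    """Return the total and each subset's share in percent (1 decimal)."""
    total = sum(counts.values())
    return total, {name: round(100 * n / total, 1) for name, n in counts.items()}


def ratio_split(n, ratios=(7, 2, 1)):
    """Integer subset sizes for a ratio split; any remainder goes to the first subset."""
    denom = sum(ratios)
    sizes = [n * r // denom for r in ratios]
    sizes[0] += n - sum(sizes)
    return sizes


if __name__ == "__main__":
    print(split_percentages({"train": 2975, "val": 859, "test": 1796}))
    print(ratio_split(8000))
```

For 8000 images the 7:2:1 ratio divides evenly into 5600 training, 1600 validation, and 800 test images.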

2. Experimental Setup and Configuration

The CFSD-UAVNet method was implemented using the PyTorch framework. The deep learning environment ran on an Ubuntu 18.04 system with 15 vCPUs (AMD EPYC 7642 48-Core Processor), 80 GB of memory, and an RTX 3090 GPU with 24 GB of VRAM. Other experimental settings are shown in Table 1.

Table 1.

Experimental setup parameters.

3. Evaluation Metrics

The evaluation metrics in this study are standard object detection metrics used to assess the performance of CFSD-UAVNet. The key metrics are as follows.

Precision (P): Reflects the proportion of correctly identified positive samples out of all predicted positive samples, calculated using true positives (TPs) and false positives (FPs), as expressed in the following formula:

$$P = \frac{TP}{TP + FP}$$

Recall (R): Indicates the ratio of true positives to the total number of actual positive samples, derived from TPs and false negatives (FNs), as expressed in the following formula:

$$R = \frac{TP}{TP + FN}$$

Average Precision (AP): Represents the detection accuracy for a specific class, calculated as the area under the Precision–Recall (P-R) curve. A higher AP value signifies better detection performance. It is expressed by the following formula:

$$AP = \int_{0}^{1} P(R)\,dR$$

Mean Average Precision (mAP): Aggregates the AP across all N classes, measuring the model's overall detection accuracy; mAP@50 denotes the mAP computed at an IoU threshold of 0.5. It is expressed by the following formula:

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$
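The precision, recall, AP, and mAP definitions can be sketched in plain Python. AP is computed here by all-point interpolation over the monotone precision envelope (the PASCAL VOC 2010+ convention); this section does not state which interpolation the evaluation used (the Ultralytics YOLOv8 evaluator, for example, interpolates at 101 recall points), so values may differ slightly from those reported. Function names are hypothetical, and PR points are assumed to be sorted by increasing recall.

```python
# Sketch of detection metrics: precision, recall, all-point AP, and mAP.
# Function names are hypothetical; PR points must be sorted by recall.

def precision_recall(tp, fp, fn):
    """Precision = TP/(TP+FP), Recall = TP/(TP+FN); 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(recalls, precisions):
    """Area under the PR curve using the monotone precision envelope."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from right to left (the envelope).
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def mean_average_precision(ap_per_class):
    """mAP = (1/N) * sum of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    print(precision_recall(tp=8, fp=2, fn=4))
    ap = average_precision([0.5, 1.0], [1.0, 0.5])
    print(ap, mean_average_precision([ap, 0.25]))
```

Computing mAP@50 applies this per class after matching detections to ground truth at IoU ≥ 0.5; the matching step is omitted here.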

Additional practical metrics include the following.

Floating-Point Operations (FLOPs): Quantifies a model’s computational complexity by calculating the total number of floating-point operations performed.

Parameter Count (Param): Indicates the total parameters in the model, reflecting its size and efficiency.

Detection Speed: The number of images in which the system can identify and locate targets per unit time, typically reported in frames per second (FPS).
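The practical metrics can be illustrated with a per-layer calculation. The sketch counts the parameters and FLOPs of a single bias-free 2-D convolution and converts per-image latency into frames per second. It assumes the convention of counting one multiply-accumulate (MAC) as 2 FLOPs, which YOLO codebases such as Ultralytics commonly use; other tools report MACs directly, so published FLOP figures can differ by a factor of two. Function names are hypothetical.

```python
# Sketch: parameters and FLOPs of one bias-free Conv2d, and FPS from latency.
# Convention (assumption): 1 multiply-accumulate (MAC) = 2 FLOPs.

def conv_params(c_in, c_out, k, groups=1):
    """Weights of a k x k convolution with the given number of groups."""
    return k * k * (c_in // groups) * c_out


def conv_flops(c_in, c_out, k, h_out, w_out, groups=1):
    """FLOPs = 2 * MACs; every weight is applied once per output position."""
    macs = conv_params(c_in, c_out, k, groups) * h_out * w_out
    return 2 * macs


def fps(latency_ms):
    """Frames per second from the average per-image latency in milliseconds."""
    return 1000.0 / latency_ms


if __name__ == "__main__":
    print(conv_params(64, 64, 3))                 # weights
    print(conv_flops(64, 64, 3, 160, 160) / 1e9)  # GFLOPs
    print(fps(5.0))
```

For example, a 3 × 3 convolution mapping 64 to 64 channels on a 160 × 160 feature map has 36,864 parameters and about 1.89 GFLOPs under this convention; model-level figures such as 1.7 M parameters and 10.2 G are sums of such per-layer terms over the whole network.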

4.2. Model Performance Evaluation

4.2.1. Effectiveness of Attention in the CRE

To assess the effectiveness of EMA attention in the proposed CRE module, it was compared with several other attention mechanisms: SE, CBAM [32], SimAM [33], and GAM [34]. The experimental results are presented in Table 2.

Table 2.

Experimental results of attention mechanisms.

Different attention mechanisms clearly have different impacts on the CRE module. EMA achieves the best results on the key accuracy indicators: its mAP@50 reaches 71.5% and its mAP@95 reaches 42.4%, both the highest among all tested attention mechanisms. This result highlights EMA's precision in detecting small objects and reflects its robustness in complex scenarios.

A further analysis of EMA’s performance reveals that it has a precision (P) of 80.8% and a recall (R) of 63.9%. In terms of model lightweighting, EMA also performs well. Its parameter count (Params) and floating-point operations (FLOPs) are relatively low. Compared with SE, CBAM, SimAM, and GAM, the advantages of EMA become even more apparent. Although these mechanisms each have their unique features, none outperform EMA in overall performance. EMA, through its distinctive channel and spatial feature recalibration strategy, effectively enhances feature representation, improving the model’s capacity to detect and recognize small objects. Consequently, EMA was ultimately chosen as the attention mechanism for the CRE module.

4.2.2. Effectiveness of Loss Function

To assess the effect of the loss function, five loss functions with distinct characteristics were evaluated in detail: CIoU, EIoU [35], SIoU [36], DIoU, and WIoU. The goal of this comparison is to evaluate how each loss function affects detection accuracy and precision in maritime object detection. The experimental findings are summarized in Table 3.

Table 3.

Experimental results of loss functions.

The results show that the WIoU loss function outperforms the other loss functions in accuracy across all categories. Among its versions, WIoUv2 shows a relatively large improvement, achieving an mAP@50 of 70.6%. This indicates that WIoUv2 is well suited to the SeaDronesSee dataset and performs well for small object detection in maritime environments. By capturing target features more accurately, WIoUv2 improves detection accuracy while reducing false positives and false negatives.

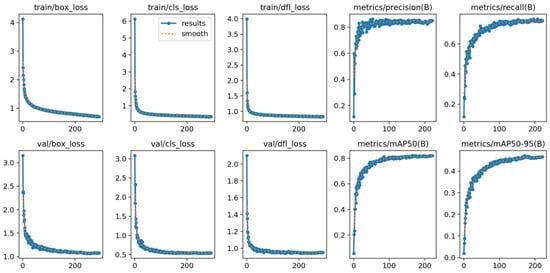

4.3. Model Loss

On the SeaDronesSee dataset, as shown in Figure 6, the box_loss, the cls_loss, and the dfl_loss curves for the proposed CFSD-UAVNet model on both the training and validation sets demonstrate its excellent convergence characteristics. In the initial phase, the loss curves exhibit a clear downward trend, followed by a stabilization period, with fluctuations within a narrow range. This reflects that the CFSD-UAVNet model not only learned the features of the dataset but also maintained stability during the learning process, avoiding overfitting and underfitting. This robust loss reduction and stable performance demonstrate the efficient fitting ability of the CFSD-UAVNet model for maritime small object detection. As training progresses, the model’s precision, recall, and mAP indicators show improvement. The rapid growth of precision and recall, followed by a period of gradual increase and eventually stabilizing at a high level, not only showcases the model’s learning capacity but also reflects its reliability in object detection tasks.

Figure 6.

Variation curves during the training and validation process.
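The convergence statements above are read visually from the curves in Figure 6. A minimal numeric check, assuming the per-epoch losses are available as Python lists (for example, exported from the training logs), compares the mean loss over the last two windows of epochs. The function names, the 10-epoch window, and the 1% tolerance are hypothetical choices for illustration.

```python
def _mean(xs):
    return sum(xs) / len(xs)


def relative_drop(curve, window):
    """Relative decrease of the mean value between the last two windows of epochs."""
    prev = _mean(curve[-2 * window:-window])
    last = _mean(curve[-window:])
    return (prev - last) / prev


def diagnose_curves(train_loss, val_loss, window=10, tol=0.01):
    """Coarse label for a pair of per-epoch loss curves (thresholds are hypothetical)."""
    if len(train_loss) != len(val_loss) or len(train_loss) < 2 * window:
        raise ValueError("need equal-length curves with at least 2 * window epochs")
    train_drop = relative_drop(train_loss, window)
    val_drop = relative_drop(val_loss, window)
    if train_drop > 0 and val_drop < -tol:
        return "overfitting"        # training loss still falls while validation loss rises
    if abs(train_drop) < tol and abs(val_drop) < tol:
        return "converged"          # both curves flat within tol over the last window
    return "not yet converged"


falling = [2.0 - 0.05 * i for i in range(20)]
print(diagnose_curves(falling, falling))
```

A flat loss curve alone cannot rule out underfitting; that judgment still relies on the accuracy metrics.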

4.4. Ablation Experiment

To assess the contribution of each module, ablation experiments were conducted on the SeaDronesSee dataset with YOLOv8n as the baseline. The results for the individual modules are listed in Table 4, allowing a detailed comparison of each module’s contribution to the model’s performance.

Table 4.

The ablation experiment results table for different modules.

According to Table 4, adding the PHead and CRE modules mainly improved detection accuracy while reducing the number of parameters, and both precision and recall increased. Adding the CRE and CED modules reduced the parameter count while raising average precision by 3.5% and 1.2%, respectively. The WIoUv2 loss function helped balance positive and negative samples, thereby improving the model’s robustness. Together, these results show that the modules reduce the parameter count while maintaining precision in maritime small object detection, which supports efficient data processing in maritime rescue systems.

Table 5 presents the ablation results for the stepwise integration of modules. Adding the CRE module to PHead combines an efficient Vision Transformer architecture with a lightweight CNN [37]; this lowers the parameter count while increasing mAP@50 by 2.3%. Building on this, introducing the CED module increases mAP@50 by a further 0.8% and mAP@95 by 0.5%, and improves recall (R) by 3.1%, while the parameter count is reduced. Finally, adding the WIoUv2 loss function improves performance without increasing computational complexity or model size, indicating that WIoUv2 helps the network detect small objects in complex environments and thus improves both generalization and accuracy.

Table 5.

Ablation experiment results for the stepwise integration of modules.

In summary, CFSD-UAVNet achieved an mAP@50 of 80.1% and an mAP@95 of 46.3%, improvements of 12.1% and 7.2% over the baseline. Precision and recall improved by 5.4% and 16.7%, respectively, indicating more accurate classification and a better balance between false positives and false negatives, particularly for small objects. At the same time, the model has 1.6 M fewer parameters than the baseline, allowing high detection accuracy with reduced computational resources. CFSD-UAVNet therefore improves detection performance while reducing the parameter count, which makes it better suited to resource-constrained environments.
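The text does not state whether these gains are absolute (percentage points) or relative. Assuming they are absolute, the baseline values and the corresponding relative gains follow from simple arithmetic; the sketch below also derives the parameter reduction from the 1.7 M parameters reported in the abstract and the 1.6 M reduction stated here. The helper name is hypothetical.

```python
def baseline_and_relative_gain(proposed, gain):
    """Return (baseline, relative gain in %) implied by an absolute gain.

    Assumes `gain` is in the same units as `proposed`, i.e. percentage points for mAP.
    """
    baseline = proposed - gain
    return baseline, 100.0 * gain / baseline


# (final value, reported gain over the YOLOv8n baseline) taken from the text above.
reported = {"mAP@50": (80.1, 12.1), "mAP@95": (46.3, 7.2)}
for metric, (value, gain) in reported.items():
    base, rel = baseline_and_relative_gain(value, gain)
    print(f"{metric}: baseline {base:.1f}%, relative gain {rel:.1f}%")

# Parameters: 1.7 M remain after removing 1.6 M, so the baseline had about 3.3 M.
params_final, params_removed = 1.7, 1.6
reduction = 100.0 * params_removed / (params_final + params_removed)
print(f"parameter reduction: {reduction:.1f}%")
```

Under that assumption, and allowing for rounding of the reported values, the baseline mAP@50 and mAP@95 would be 68.0% and 39.1%, corresponding to relative gains of about 17.8% and 18.4%, and the baseline would have about 3.3 M parameters, a reduction of roughly 48.5%.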

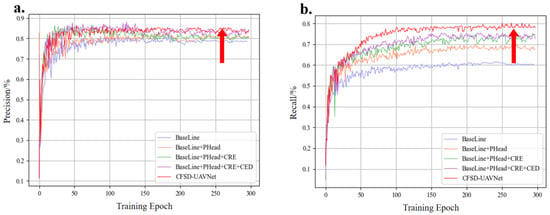

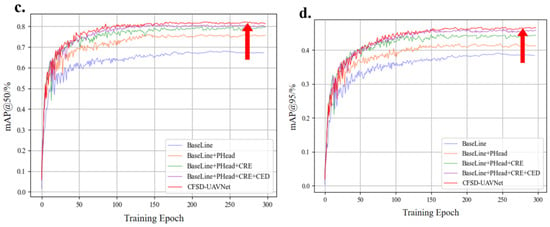

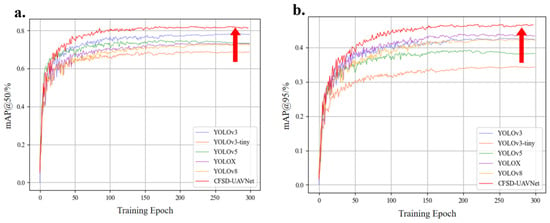

Figure 7 compares precision, recall, mAP@0.5, and mAP@0.95 across the ablation configurations for UAV-based maritime small object detection. As Figure 7a shows, the precision of CFSD-UAVNet is consistently higher than that of the other configurations throughout training, especially in the early stages. In the recall comparison in Figure 7b, CFSD-UAVNet also outperforms the other configurations, with the gap becoming pronounced in the later stages of training. For mAP@0.5 (Figure 7c), CFSD-UAVNet initially lags behind some configurations but quickly overtakes them and stays ahead thereafter. For mAP@0.95 (Figure 7d), CFSD-UAVNet also performs well, surpassing the other configurations after the middle of training. With the PHead, CRE, and CED improvements, CFSD-UAVNet leads on all four metrics, which supports the proposed design. Compared with the baseline, it reaches higher precision and recall earlier in training and remains stable, indicating improved detection accuracy and robustness for UAV-based maritime small object detection.

Figure 7.

Ablation experiment comparison curves for different metrics. (a) Precision, (b) recall, (c) mAP@50, (d) mAP@95. The red arrow indicates the position of the proposed algorithm CFSD-UAVNet in the figure.
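Statements in the discussion of Figure 7 such as "initially lags behind some methods but rapidly surpasses them and maintains a leading position" can be made precise by finding the epoch from which one curve stays above all others until the end of training. A minimal sketch, assuming each curve is a list of per-epoch metric values of equal length; the function name is hypothetical.

```python
def sustained_lead_epoch(target, others):
    """First epoch index from which `target` stays strictly above every curve in
    `others` until the last epoch, or None if it never holds such a lead."""
    n = len(target)
    if any(len(curve) != n for curve in others):
        raise ValueError("all curves must have the same number of epochs")
    lead_from = None
    for epoch in range(n):
        leads = all(target[epoch] > curve[epoch] for curve in others)
        if leads and lead_from is None:
            lead_from = epoch      # a new lead starts here
        elif not leads:
            lead_from = None       # lead lost; any earlier start no longer counts
    return lead_from


proposed = [0.10, 0.20, 0.50, 0.60, 0.70]
baseline = [0.20, 0.30, 0.40, 0.50, 0.60]
print(sustained_lead_epoch(proposed, [baseline]))
```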

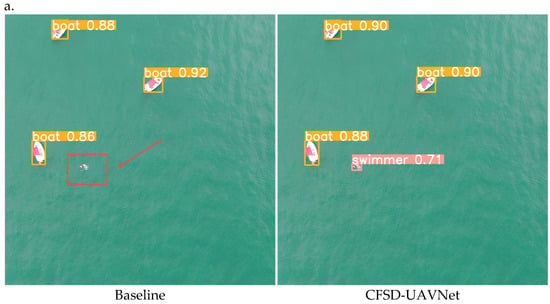

The ablation results comparing the baseline and CFSD-UAVNet on the SeaDronesSee dataset are visualized in Figure 8. In Figure 8a, CFSD-UAVNet performs well on the challenging multi-class recognition task, correctly distinguishing the various targets, whereas the baseline model misses some detections. In the high-altitude image in Figure 8b, CFSD-UAVNet copes with both complex sea surface textures and blurred small-target edges and correctly detects the floater category, which the baseline fails to detect. In the UAV image in Figure 8c, the baseline misclassifies a floater as a swimmer, whereas CFSD-UAVNet identifies all targets correctly, without false positives or missed detections. Taken together, Figure 8a–c show that CFSD-UAVNet performs well across different flight heights and image clarity conditions in complex maritime environments. However, its performance under varying lighting and weather conditions requires further investigation.

Figure 8.

Ablation experiment visualization results. (a) Multi-class object recognition, (b) complex sea surface texture recognition, (c) low-altitude UAV recognition. The red arrow and dotted square in the figure indicate cases of missed or false detections by the comparison algorithm.

4.5. Comparison Experiment on SeaDronesSee Dataset

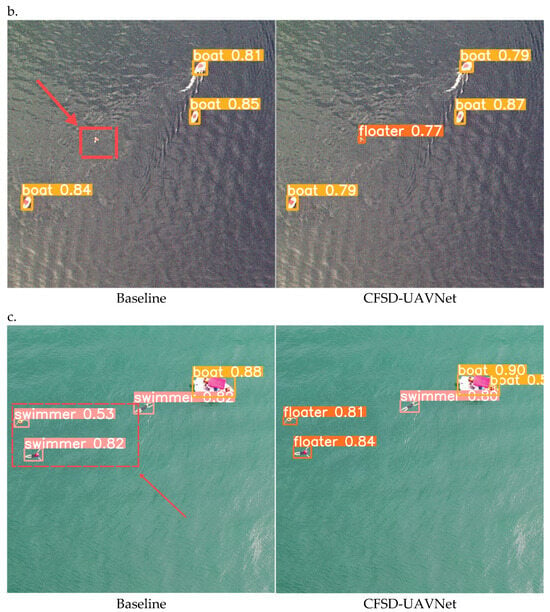

To evaluate the effect of the attention mechanism in the proposed CRE module, we visualized the detection results of five models (YOLOv3, YOLOv3-tiny, YOLOv5, YOLOv8, and CFSD-UAVNet) as heatmaps, shown in Figure 9. Figure 9a shows the ground truth, and Figure 9b–f show the heatmaps of YOLOv3-tiny, YOLOv5, YOLOv8, YOLOv3, and CFSD-UAVNet, respectively. Color intensity indicates how strongly the model attends to each image region during detection. YOLOv3-tiny, YOLOv5, YOLOv3, and YOLOv8 pay little or no attention to the two floater objects inside the red box, whereas CFSD-UAVNet focuses accurately on these small maritime targets. In addition, as Figure 9b shows, YOLOv3-tiny wrongly directs attention to the sea background (yellow box), whereas CFSD-UAVNet suppresses such background interference. The EMA attention mechanism strengthens the representation of object features and thereby improves the model’s perception of these targets.

Figure 9.

Heatmap of attention mechanisms in comparison experiments. (a) The ground truth, (b) the heatmap of YOLOv3-tiny, (c) the heatmap of YOLOv5, (d) the heatmap of YOLOv8, (e) the heatmap of YOLOv3, and (f) the heatmap of CFSD-UAVNet. The red squares show the varying attention of different algorithms to small targets, with the CFSD-UAVNet algorithm performing the best. The yellow square indicates that the YOLOv3-tiny algorithm focuses its attention on the sea surface background.
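The discussion of Figure 9 does not state how the heatmaps were generated. One widely used option for such visualizations is Grad-CAM (Selvaraju et al.), which weights each channel of a chosen feature map by the spatial mean of the gradient of a target score and keeps the positive part of the weighted sum. The pure-Python sketch below assumes the activations and gradients of one layer are already available as K lists of H×W values (in practice they would be taken from a detector layer with respect to, for example, a detection's class score); it illustrates the technique and is not necessarily the method used for Figure 9.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from one layer.

    activations, gradients: K channels, each an H x W list of lists; gradients
    are d(target score)/d(activation) for the same layer.
    Returns an H x W map scaled to [0, 1].
    """
    channels = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # Channel weight alpha_k: spatial mean of the gradient (global average pooling).
    weights = [sum(sum(row) for row in gradients[k]) / (h * w) for k in range(channels)]
    cam = [[0.0] * w for _ in range(h)]
    for k in range(channels):
        for i in range(h):
            for j in range(w):
                cam[i][j] += weights[k] * activations[k][i][j]
    # ReLU keeps only regions whose activation increases the target score.
    cam = [[max(0.0, v) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    # Normalize for color mapping; an all-zero map is returned unchanged.
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(acts, grads))
```

The resulting low-resolution map would then be upsampled to the input size and overlaid on the image as a color layer.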

To evaluate the detection performance of CFSD-UAVNet, it was compared on the SeaDronesSee dataset with recent mainstream algorithms, including Faster R-CNN, DETR [36], YOLOv3, YOLOv3-tiny, YOLOv5, YOLOv6, YOLOX, and YOLOv8. The evaluation metrics were mAP@50, mAP@95, parameter count, computational complexity, recall, and detection speed. The experimental settings were kept largely consistent across methods, using the same dataset and scale, to ensure a fair comparison. The results in Table 6 show that CFSD-UAVNet performs strongly on this dataset, combining low computational complexity and parameter count with higher accuracy and overall effectiveness than the other methods.

Table 6.

Comparison of experimental results on the SeaDronesSee dataset.
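The comparison above reports mAP@50, the mean over classes of the average precision (AP) at an IoU threshold of 0.5. In YOLO-style evaluations, the stricter metric labeled mAP@95 here is usually the COCO-style average over IoU thresholds from 0.50 to 0.95 in steps of 0.05; the sketch below assumes that convention. It is a simplified evaluator (greedy score-ordered matching and all-point interpolation, without COCO's 101-point sampling or area ranges) with hypothetical function names, not the evaluation code behind Table 6.

```python
def box_iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, iou_thr):
    """AP of one class at one IoU threshold.

    dets: list of (image_id, score, box); gts: dict image_id -> list of boxes.
    """
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in gts.items()}
    hits = []
    # Visit detections from most to least confident; each claims its best free match.
    for img, _score, box in sorted(dets, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, g in enumerate(gts.get(img, [])):
            iou = box_iou(box, g)
            if not used[img][j] and iou > best_iou:
                best_iou, best_j = iou, j
        if best_j >= 0 and best_iou >= iou_thr:
            used[img][best_j] = True
            hits.append(1)
        else:
            hits.append(0)
    # Precision and recall after each ranked detection.
    precision, recall, tp = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        tp += hit
        precision.append(tp / rank)
        recall.append(tp / n_gt)
    # Precision envelope: best precision achievable at this recall or higher.
    for i in range(len(precision) - 2, -1, -1):
        precision[i] = max(precision[i], precision[i + 1])
    # All-point interpolation: sum precision over each recall increment.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


IOU_THRESHOLDS_50_95 = [t / 100 for t in range(50, 100, 5)]


def mean_ap(per_class, thresholds):
    """Mean over classes of AP averaged over the given IoU thresholds.

    per_class: dict class_name -> (dets, gts) in the format used above.
    """
    per_class_ap = [
        sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)
        for dets, gts in per_class.values()
    ]
    return sum(per_class_ap) / len(per_class_ap)
```

With this helper, mean_ap(per_class, [0.5]) gives mAP@50 and mean_ap(per_class, IOU_THRESHOLDS_50_95) gives the threshold-averaged variant; a box with IoU 0.8 counts as correct at seven of the ten thresholds, which is why the averaged metric rewards tight localization.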

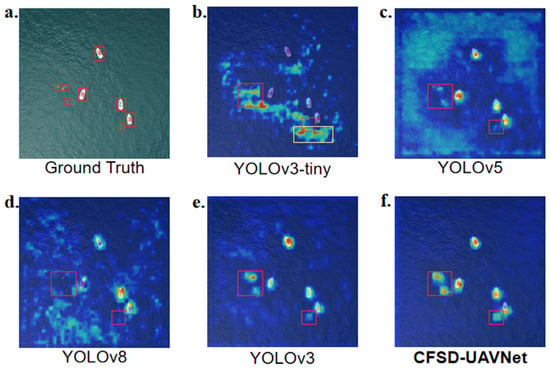

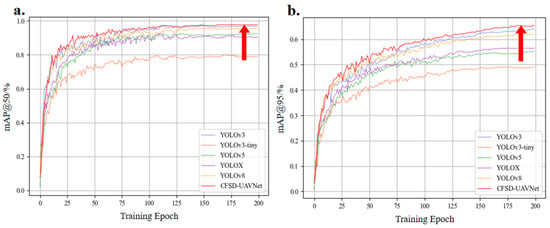

Figure 10 presents the comparison of mAP@0.5 and mAP@0.95 during the training process for YOLOv3-tiny, YOLOv3, YOLOv5, YOLOX, YOLOv8, and the proposed CFSD-UAVNet algorithm. As shown in Figure 10a, in the mAP@0.5 comparison, the CFSD-UAVNet curve consistently outperforms the other methods, especially during the early stages of training, showing its efficiency in recognizing small targets. Figure 10b shows the mAP@0.95 comparison, where CFSD-UAVNet maintains a leading position throughout, with the gap widening during the later stages of training, indicating its advantage in high-precision object detection.

Figure 10.

Comparison of mAP curves for selected algorithms in the comparison experiment on the SeaDronesSee dataset. (a) mAP@50, (b) mAP@95. The red arrow indicates the position of the proposed algorithm CFSD-UAVNet in the figure.

Taken together, the two panels show that CFSD-UAVNet maintains a high mAP throughout training, performing well both at the lenient threshold (mAP@0.5) and under the stricter localization requirement (mAP@0.95). These results indicate the advantage of CFSD-UAVNet in UAV-based maritime small object detection, where it combines high detection accuracy with faster convergence and higher detection quality. Compared with the YOLO-based algorithms, CFSD-UAVNet improves both mAP@0.5 and mAP@0.95, indicating that the proposed modifications enhance both detection capability and stability.

4.6. Comparison Experiment on MOBDrone Dataset

To assess the generalization ability of the proposed algorithm, comparative experiments against state-of-the-art algorithms were conducted on the MOBDrone dataset, using the same algorithms and metrics as in the SeaDronesSee analysis. As shown in Table 7, CFSD-UAVNet performed remarkably well on this dataset, surpassing the competing algorithms in small object detection accuracy and overall model capability.

Table 7.

Comparison experimental results on the MOBDrone dataset.

Figure 11a,b show the mAP@0.5 and mAP@0.95 curves, respectively, during training for selected algorithms. The x-axis indicates the number of training epochs, and the y-axis shows the metric value. CFSD-UAVNet surpasses the other algorithms in mAP@0.5: as training progresses, the performance of all algorithms rises steadily and approaches a common level, but CFSD-UAVNet reaches higher values after convergence. For the stricter mAP@0.95 metric, CFSD-UAVNet also achieves higher final precision than its counterparts, and the gap widens in the later stages of training, highlighting its robustness in demanding detection tasks. Overall, CFSD-UAVNet achieves higher detection accuracy than the common YOLO-series algorithms on both key metrics for UAV-based maritime object detection.

Figure 11.

Comparison of mAP curves for selected algorithms in the comparison experiment on the MOBDrone dataset. (a) mAP@50, (b) mAP@95. The red arrow indicates the position of the proposed algorithm CFSD-UAVNet in the figure.

4.7. Analysis of Visualization Results

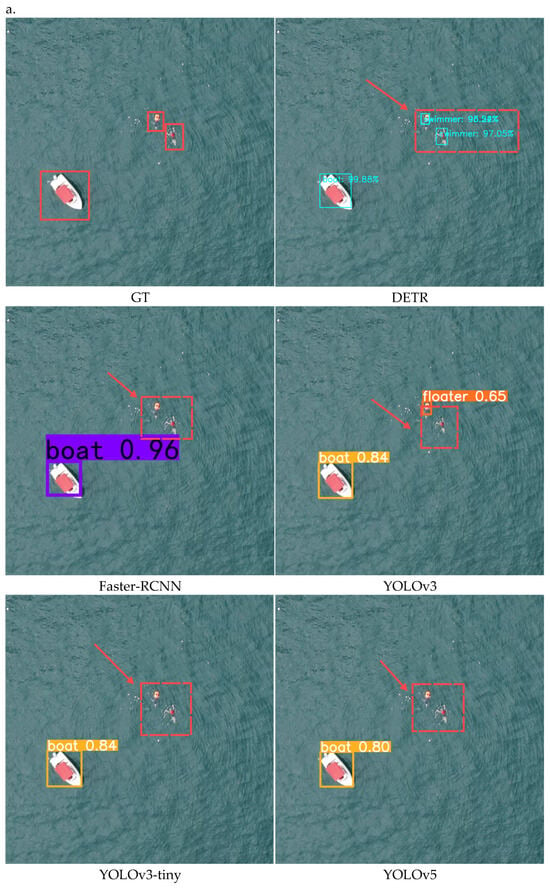

The visualization results of the comparative experiments on the SeaDronesSee dataset are presented in Figure 12. Figure 12a shows a marine scene with high brightness, complex textures, and background interference. The image contains one boat and two floaters. All algorithms detect the boat, but Faster R-CNN, YOLOv3-tiny, YOLOv5, and YOLOv8 miss the floaters, likely because of their limited ability to extract key features of small objects against this challenging background. YOLOv3 detects only one floater, indicating insufficient local feature extraction for small objects. DETR detects the targets but assigns the wrong categories, which may reflect its limited ability to discriminate target semantics. In contrast, CFSD-UAVNet detects and correctly classifies all expected targets, demonstrating better detection completeness and accuracy in complex backgrounds.

Figure 12.

Visualization results of comparison experiment on the SeaDronesSee dataset. (a) A high-brightness scene with a complex, textured background; (b) a low-brightness scene with a dark sea surface background. The red arrow and dotted square in the figure indicate cases of missed or false detections by the comparison algorithm.

Figure 12b shows a low-brightness scene with a darker sea surface, where blurred target boundaries and low contrast make detection harder, particularly for closely spaced targets. The image contains one boat, two floaters, and two swimmers. DETR, YOLOv3-tiny, and YOLOv5 confuse the floater and swimmer classes, likely because they struggle to distinguish blurred target features in low light. Faster R-CNN produces overlapping bounding boxes for the floater category, which may result from interference with boundary feature extraction in its backbone network. YOLOv3 and YOLOv8 detect only one floater, indicating their limitations in detecting weak-feature targets in multi-object scenes. In contrast, CFSD-UAVNet correctly detects and classifies all targets, further confirming its robustness to blurred boundaries in low-brightness, complex backgrounds.

In summary, CFSD-UAVNet shows clear advantages in complex maritime scenarios and under varying lighting conditions, whereas the detection errors of the other algorithms reveal limitations in feature extraction, target differentiation, and background interference suppression.
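The error types discussed above (missed detections, category misclassifications, and duplicate or spurious boxes) can be counted automatically instead of by visual inspection. A minimal sketch, assuming per-image predictions as (label, score, box) and ground truth as (label, box), with class-agnostic IoU matching at a hypothetical threshold of 0.5; the function names are illustrative.

```python
def box_iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def categorize_detections(preds, gts, iou_thr=0.5):
    """Split one image's predictions into correct / misclassified / false positive
    and list the ground-truth objects that no prediction matched."""
    matched = set()
    out = {"correct": [], "misclassified": [], "false_positive": [], "missed": []}
    # Higher-confidence predictions claim ground-truth objects first.
    for label, _score, box in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = None, iou_thr
        for j, (_, g) in enumerate(gts):
            iou = box_iou(box, g)
            if j not in matched and iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j is None:
            # No free object overlaps enough: spurious box or duplicate of a found one.
            out["false_positive"].append((label, box))
        elif gts[best_j][0] == label:
            matched.add(best_j)
            out["correct"].append((label, box))
        else:
            matched.add(best_j)
            out["misclassified"].append((label, gts[best_j][0], box))
    out["missed"] = [gt for j, gt in enumerate(gts) if j not in matched]
    return out
```

Under this scheme a second box on an already matched object, like the overlapping Faster R-CNN boxes described for Figure 12b, is counted as a false positive.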

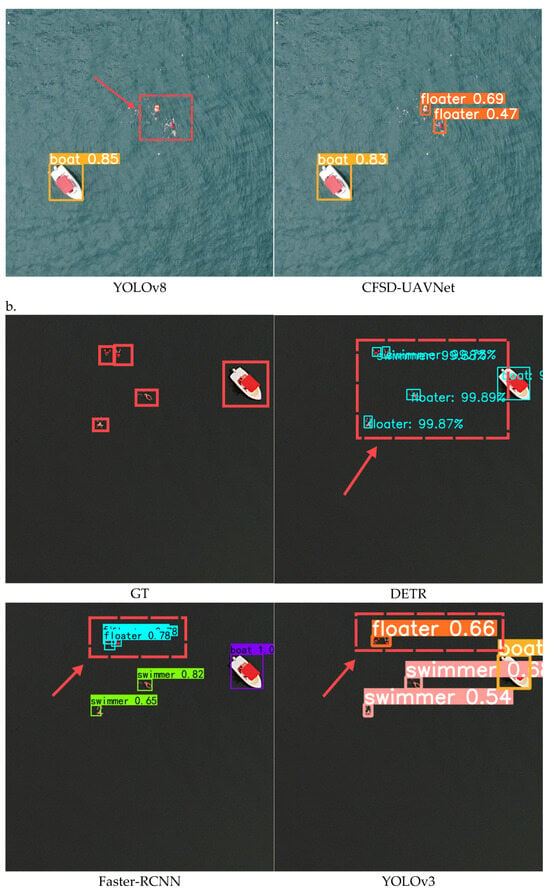

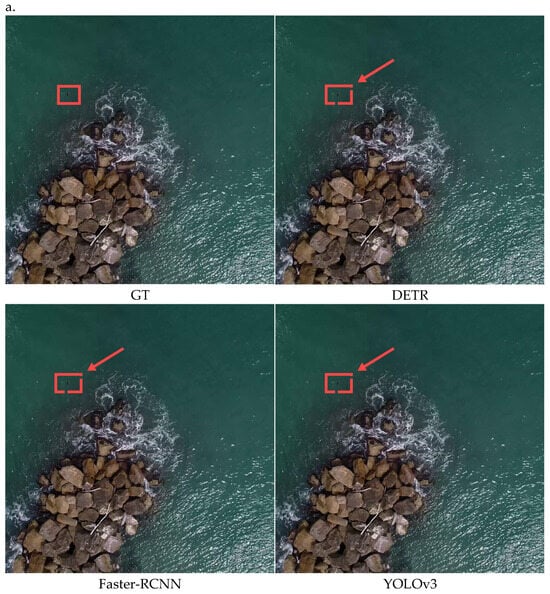

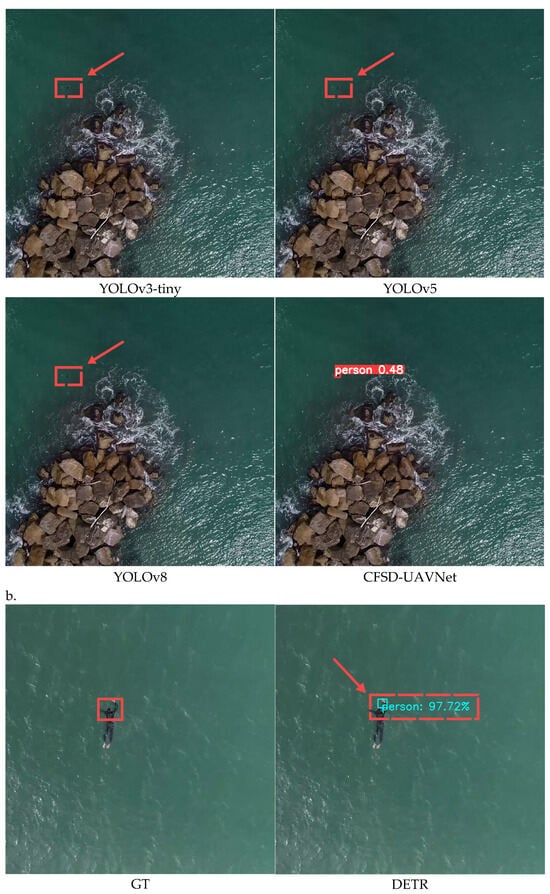

The comparative visualizations on the MOBDrone dataset are shown in Figure 13. Figure 13a shows small object detection against a complex natural background in a high-altitude UAV image. The drowning person is located near coastal rocks, and the person’s appearance resembles the textures of the rocks and waves, which makes detection highly challenging. None of the algorithms except CFSD-UAVNet detected the drowning person, indicating that the others lack robustness in this scenario and are weaker at recognizing small objects against such backgrounds.

Figure 13.

Visualization results of comparison experiment on the MOBDrone dataset. (a) A high-altitude UAV image with a complex, natural background; (b) a UAV image with a distant open-sea background. The red arrow and dotted square in the figure indicate cases of missed or false detections by the comparison algorithm.

Figure 13b shows the detection of a distant small object in the open sea. The target’s small size and low visibility against the background make detection difficult, and wave motion blurs its edges, so detection algorithms must be robust. The correct bounding box should be positioned above and around the head of the drowning person. DETR, however, places its bounding box at the person’s hand, and YOLOv8 places it at the person’s feet, so neither fully captures the individual, possibly because these algorithms extract incomplete features for small objects. Faster R-CNN, YOLOv3-tiny, and YOLOv5 all fail to detect the target, potentially because of wave interference in distant small object detection. YOLOv3 detects the target but misclassifies the drowning person as a boat, likely because its small-object feature representation is insufficient, so that in complex backgrounds targets are easily confused with similarly textured regions. Only CFSD-UAVNet detects the drowning person correctly, demonstrating strong feature extraction and object recognition in complex scenarios.

5. Conclusions

To address the challenges of maritime small object detection, including low accuracy, slow detection speed, and insufficient model lightweighting, this paper proposes the CFSD-UAVNet model. The model incorporates four key components, the PHead structure, the CRE module, the CED module, and the WIoUv2 loss function, each addressing a critical aspect of detection performance. Specifically, the PHead structure enhances feature extraction for small targets, the CRE module strengthens multi-scale target perception, and the lightweight CED module reduces the computational load while preserving high accuracy. In addition, the WIoUv2 loss function improves training, particularly bounding box regression and small object detection accuracy. Experimental evaluations on the SeaDronesSee and MOBDrone datasets show that CFSD-UAVNet outperforms mainstream detection frameworks in small object detection accuracy and overall evaluation metrics. These results highlight the model’s effectiveness in maritime UAV applications such as surveillance, rescue, and target tracking, and provide a robust technical foundation for practical deployment.

Looking ahead, several specific directions can be explored to further enhance the model’s practical applicability. First, optimization techniques, such as model quantization, pruning, or knowledge distillation, could be applied to further reduce computational complexity, reduce latency, and enable real-time deployment on UAV hardware. Second, enhancing the robustness of the CRE module’s multi-scale perception capability could improve performance in scenarios with varying lighting, extreme weather, or dynamic sea states. Furthermore, advanced data augmentation strategies or the integration of self-supervised learning could help the model better adapt to data-scarce or unseen environments. Additionally, exploring how the CRE and PHead structures can be extended to multi-target scenarios may further unlock the model’s potential in complex maritime conditions.
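As an illustration of the first direction, the sketch below shows symmetric per-tensor int8 quantization, one common post-training scheme in which each weight is stored as an 8-bit integer q with w ≈ scale · q. It is a generic example on a plain list of weights with hypothetical function names, not tied to any deployment toolchain or to CFSD-UAVNet; practical quantization also calibrates activations and is normally performed with a framework's own tools.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~ scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer level and clip to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 levels back to approximate floating-point weights."""
    return [scale * v for v in q]


w = [0.3, -1.0, 0.1, 0.0]
q, s = quantize_int8(w)
w_hat = dequantize(q, s)
print(q, max(abs(a - b) for a, b in zip(w, w_hat)))
```

Each weight then needs one byte instead of four, and the reconstruction error per weight is at most half a quantization step (scale / 2).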

In summary, the CFSD-UAVNet model provides a practical and effective solution for maritime UAV-based small object detection. By addressing key challenges, such as low accuracy and high computational demands, it offers improvements in detection performance and efficiency.

Author Contributions

Conceptualization, X.G. and G.D.; methodology, J.L.; validation, X.F., Y.Z. and M.Z.; formal analysis, W.L.; data curation, Y.W.; writing—original draft preparation, G.D. and J.L.; writing—review and editing, X.G. and C.L.; visualization, D.L. and W.L.; funding acquisition, X.G. and C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Key Research and Development Program of China (2022YFB4301401, 2022YFB4300401, 2022YFB4300400), the Young Elite Scientist Sponsorship Program by CAST (No. YESS20230004, 2023021K), the Science and technology innovation project of China Waterborne Transport Research Institute (182407, 182408, 182410, 182414), the Natural Science Foundation of Liaoning Province (No. 2024-MS-168), the Fundamental Research Funds for the Provincial Universities of Liaoning (No. LJ212410150030, LJ212410150024), and the Research Foundation of Liaoning Province (No. LJKQZ20222447).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this paper are publicly available: the SeaDronesSee dataset at https://seadronessee.cs.uni-tuebingen.de/ (accessed on 25 June 2023) and the MOBDrone dataset at https://aimh.isti.cnr.it/dataset/mobdrone/ (accessed on 11 March 2024).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fu, Z.; Xiao, Y.; Tao, F.; Si, P.; Zhu, L. DLSW-YOLOv8n: A Novel Small Maritime Search and Rescue Object Detection Framework for UAV Images with Deformable Large Kernel Net. Drones 2024, 8, 310.

- Cherif, B.; Ghazzai, H.; Alsharoa, A. LiDAR From the Sky: UAV Integration and Fusion Techniques for Advanced Traffic Monitoring. IEEE Syst. J. 2024, 18, 1639–1650.

- Yang, Y.; Miao, Z.; Zhang, H.; Wang, B.; Wu, L. Lightweight Attention-Guided YOLO With Level Set Layer for Landslide Detection From Optical Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 3543–3559.

- Wang, Q.; Wang, J.; Wang, X.; Wu, L.; Feng, K.; Wang, G. A YOLOv7-Based Method for Ship Detection in Videos of Drones. J. Mar. Sci. Eng. 2024, 12, 1180.

- Zhang, J.; Xie, R.; Meng, Z.; Li, G.; Xin, S. A Mini-UAV Lightweight Target Detection Model Based on SSD. In International Conference on Autonomous Unmanned Systems; Springer: Singapore, 2022; pp. 2999–3013.

- Zhao, H.; Wang, L.; Zhao, Z.; Deng, W. A New Fault Diagnosis Approach Using Parameterized Time-Reassigned Multisynchrosqueezing Transform for Rolling Bearings. IEEE Trans. Reliab. 2024; early access.

- Wang, B.; Yang, G.; Yang, H.; Gu, J.; Xu, S.; Zhao, D.; Xu, B. Multiscale Maize Tassel Identification Based on Improved RetinaNet Model and UAV Images. Remote Sens. 2023, 15, 2530.

- Li, Y.; Zou, G.; Zou, H.; Zhou, C.; An, S. Insulators and defect detection based on the improved focal loss function. Appl. Sci. 2022, 12, 10529.

- Yang, S.; Li, J.; Li, Y.; Nie, J.; Qiao, Y.; Ercisli, S. Fuzzy EfficientDet: An approach for precise detection of larch infestation severity in UAV imagery under dynamic environmental conditions. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 8810–8822.

- Zhang, H.; Shao, F.; He, X.; Zhang, Z.; Cai, Y.; Bi, S. Research on object detection and recognition method for UAV aerial images based on improved YOLOv5. Drones 2023, 7, 402.

- Deng, W.; Li, X.; Xu, J.; Li, W.; Zhu, G.; Zhao, H. BFKD: Blockchain-based federated knowledge distillation for aviation Internet of Things. IEEE Trans. Reliab. 2024; early access.

- Li, X.; Zhao, H.; Xu, J.; Zhu, G.; Deng, W. APDPFL: Anti-Poisoning Attack Decentralized Privacy Enhanced Federated Learning Scheme for Flight Operation Data Sharing. IEEE Trans. Wirel. Commun. 2024; early access.

- Alsamhi, S.H.; Shvetsov, A.V.; Kumar, S.; Shvetsova, S.V.; Alhartomi, M.A.; Hawbani, A.; Rajput, N.S.; Srivastava, S.; Saif, A.; Nyangaresi, V.O. UAV computing-assisted search and rescue mission framework for disaster and harsh environment mitigation. Drones 2022, 6, 154.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding box regression loss with dynamic focusing mechanism. arXiv 2023, arXiv:2301.10051.

- Varga, L.A.; Kiefer, B.; Messmer, M.; Zell, A. SeaDronesSee: A maritime benchmark for detecting humans in open water. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 2260–2270.

- Hui, Y.; Wang, J.; Li, B. DSAA-YOLO: UAV remote sensing small target recognition algorithm for YOLOV7 based on dense residual super-resolution and anchor frame adaptive regression strategy. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 101863.

- Li, C.; Zhou, S.; Yu, H.; Guo, T.; Guo, Y.; Gao, J. An efficient method for detecting dense and small objects in UAV images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 6601–6615.

- Bi, L.; Deng, L.; Lou, H.; Zhang, H.; Lin, S.; Liu, X.; Wan, D.; Dong, J.; Liu, H. URS-YOLOv5s: Object Detection Algorithm for UAV Remote Sensing Images. Phys. Scr. 2024, 99, 086005.

- Zhou, L.; Zhao, S.; Wan, Z.; Liu, Y.; Wang, Y.; Zuo, X. MFEFNet: A Multi-Scale Feature Information Extraction and Fusion Network for Multi-Scale Object Detection in UAV Aerial Images. Drones 2024, 8, 186.

- Sun, F.; He, N.; Li, R.; Wang, X.; Xu, S. GD-PAN: A multiscale fusion architecture applied to object detection in UAV aerial images. Multimed. Syst. 2024, 30, 143.

- Zhou, S.; Zhou, H. Detection Based on Semantics and a Detail Infusion Feature Pyramid Network and a Coordinate Adaptive Spatial Feature Fusion Mechanism Remote Sensing Small Object Detector. Remote Sens. 2024, 16, 2416.

- Jiang, L.; Yuan, B.; Du, J.; Chen, B.; Xie, H.; Tian, J.; Yuan, Z. MFFSODNet: Multi-Scale Feature Fusion Small Object Detection Network for UAV Aerial Images. IEEE Trans. Instrum. Meas. 2024, 73, 5015214.

- Chen, J.; Wen, R.; Ma, L. Small object detection model for UAV aerial image based on YOLOv7. Signal Image Video Process. 2024, 18, 2695–2707.

- Zeng, Y.; Guo, D.; He, W.; Zhang, T.; Liu, Z. ARF-YOLOv8: A novel real-time object detection model for UAV-captured images detection. J. Real-Time Image Process. 2024, 21, 107.

- Tang, X.; Ruan, C.; Li, X.; Li, B.; Fu, C. MSC-YOLO: Improved YOLOv7 Based on Multi-Scale Spatial Context for Small Object Detection in UAV-View. Comput. Mater. Contin. 2024, 79, 983–1003.

- Wang, A.; Chen, H.; Lin, Z.; Han, J.; Ding, G. RepViT: Revisiting mobile CNN from ViT perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 15909–15920.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 390–391.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient multi-scale attention module with cross-spatial learning. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Zhong, J.; Chen, J.; Mian, A. DualConv: Dual convolutional kernels for lightweight deep neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 9528–9535.

- Ma, R.; Wang, J.; Zhao, W.; Guo, H.; Dai, D.; Yun, Y.; Li, L.; Hao, F.; Bai, J.; Ma, D. Identification of maize seed varieties using MobileNetV2 with improved attention mechanism CBAM. Agriculture 2022, 13, 11.

- Cai, Z.; Qiao, X.; Zhang, J.; Feng, Y.; Hu, X.; Jiang, N. RepVGG-SimAM: An efficient bad image classification method based on RepVGG with simple parameter-free attention module. Appl. Sci. 2023, 13, 11925.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561.

- Zhao, H.; Gao, Y.; Deng, W. Defect Detection Using Shuffle Net-CA-SSD Lightweight Network for Turbine Blades in IoT. IEEE Internet Things J. 2024, 11, 32804–32812.

- Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.

- Li, W.; Liu, D.; Li, Y.; Hou, M.; Liu, J.; Zhao, Z.; Guo, A.; Zhao, H.; Deng, W. Fault diagnosis using variational autoencoder GAN and focal loss CNN under unbalanced data. Struct. Health Monit. 2024. Available online: https://journals.sagepub.com/doi/abs/10.1177/14759217241254121 (accessed on 11 December 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).