A Stable Multi-Object Tracking Method for Unstable and Irregular Maritime Environments

Abstract

1. Introduction

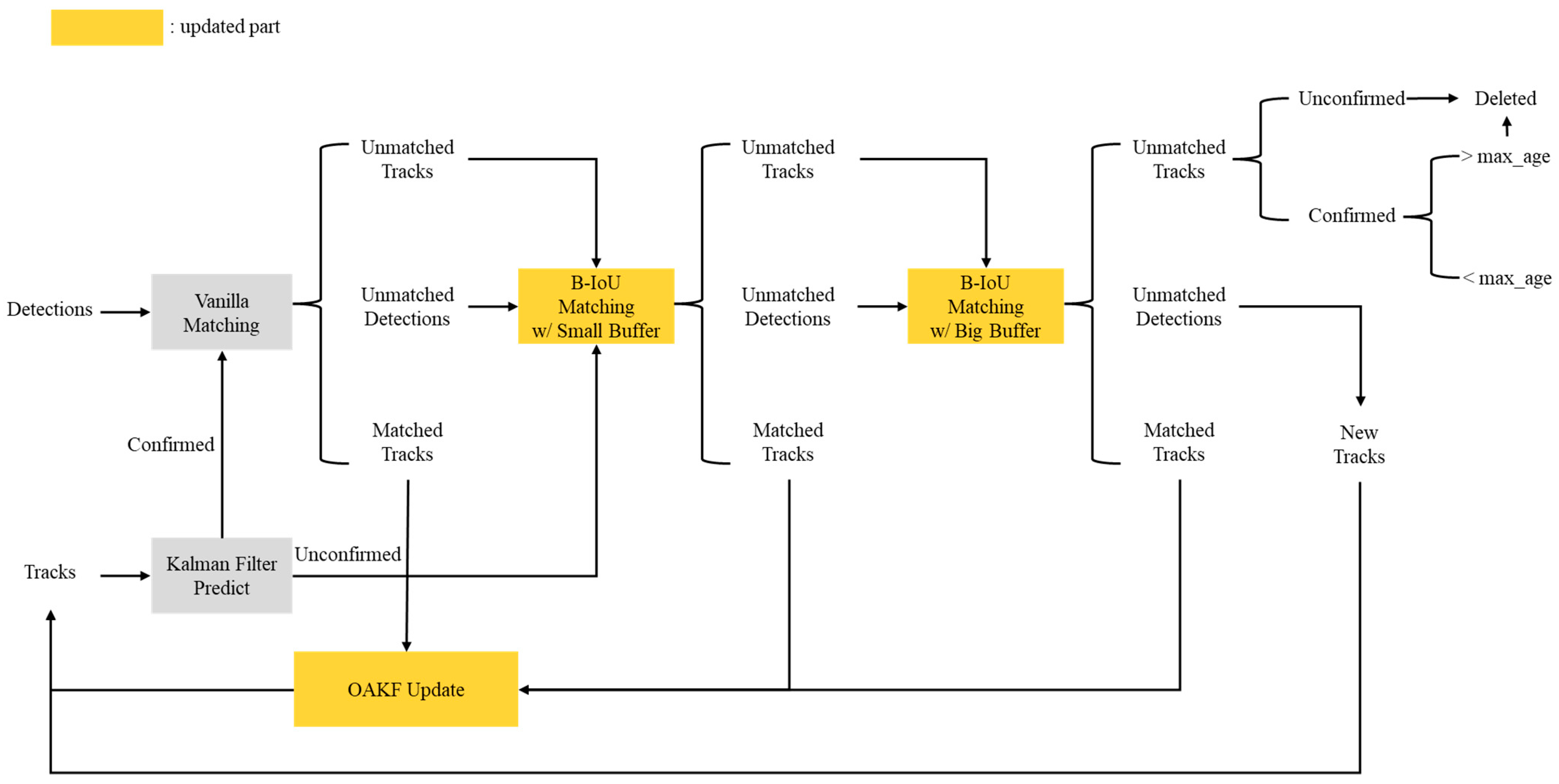

- The improved algorithm, StableSORT, enhances robustness and stability in multi-object tracking by integrating Buffered Intersection over Union (B-IoU) and an Observation-Adaptive Kalman Filter (OAKF) into the StrongSORT framework. These enhancements address camera instability and irregular object motion in maritime environments, improving tracking accuracy.

- A real-world dataset was collected using small ASVs under challenging maritime conditions. This dataset was used to validate StableSORT’s performance against state-of-the-art algorithms, demonstrating improvements in key metrics, including HOTA, AssA, and IDF1.

2. Related Work

2.1. Object Tracking

2.2. Deep Learning-Based Ship Tracking

3. Proposed Method

3.1. Overview of StrongSORT

- $A_a$ denotes the appearance cost, which quantifies the visual similarity between detected objects across frames. This cost is derived from deep feature embeddings extracted by a neural network, so that objects with similar visual features are more likely to be associated.

- $A_m$ denotes the motion cost, which measures the positional consistency of objects between consecutive frames. This cost relies on predictions from a Kalman filter, which forecasts the location of each object from its previous trajectory.

- λ is a weighting factor that adjusts the effect of appearance and motion information.
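The three terms above can be illustrated with a minimal sketch. It assumes the weighted-sum form C = λ·A_a + (1 − λ)·A_m, uses cosine distance as a stand-in for the appearance cost, and takes the motion cost as a given matrix. The function names, the toy embeddings, the motion values, and λ = 0.98 are all illustrative assumptions, not the authors' implementation.

```python
import math

def cosine_distance(u, v):
    """Appearance cost between two embeddings: 1 - cosine similarity."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm

def combined_cost(appearance, motion, lam):
    """Element-wise C = lam * A_a + (1 - lam) * A_m (assumed weighted-sum form)."""
    return [
        [lam * a + (1.0 - lam) * m for a, m in zip(row_a, row_m)]
        for row_a, row_m in zip(appearance, motion)
    ]

# Toy data: two tracks and two detections (hypothetical values).
track_embs = [[1.0, 0.0], [0.0, 1.0]]
det_embs = [[0.9, 0.1], [0.1, 0.9]]

# Rows are tracks, columns are detections.
A_a = [[cosine_distance(t, d) for d in det_embs] for t in track_embs]
A_m = [[0.1, 0.8], [0.7, 0.2]]  # hypothetical, already-normalized motion costs

C = combined_cost(A_a, A_m, lam=0.98)

# Greedy row-wise minimum, used here only to keep the sketch dependency-free.
# A real tracker would solve the assignment globally (e.g., Hungarian method).
best = [min(range(len(row)), key=row.__getitem__) for row in C]
print(best)
```

With λ close to 1 the appearance term dominates, so each track is paired with the detection whose embedding it most resembles; lowering λ shifts the decision toward positional consistency.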

3.2. Buffered Intersection over Union
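As a minimal sketch of the idea behind B-IoU, the code below assumes a common formulation in which each box is expanded on every side in proportion to its own width and height, and the ordinary IoU is then computed on the expanded boxes. The function names, the `(x1, y1, x2, y2)` box layout, and the buffer scale of 0.3 are illustrative assumptions.

```python
def buffer_box(box, b):
    """Expand an (x1, y1, x2, y2) box by b * width and b * height on each side."""
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    return (x1 - b * w, y1 - b * h, x2 + b * w, y2 + b * h)

def iou(a, b):
    """Plain intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def buffered_iou(a, b, buf):
    """IoU computed after buffering both boxes with the same scale."""
    return iou(buffer_box(a, buf), buffer_box(b, buf))

# A predicted track box and a detection that no longer overlap,
# e.g., after an abrupt camera shake (hypothetical coordinates).
track = (0.0, 0.0, 10.0, 10.0)
det = (11.0, 0.0, 21.0, 10.0)

plain = iou(track, det)
buffered = buffered_iou(track, det, 0.3)
print(plain, round(buffered, 3))
```

In this toy case the plain IoU is zero, so an IoU-gated matcher would reject the pair, while the buffered boxes still overlap and the pair remains a candidate for association.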

3.3. Observation-Adaptive Kalman Filter

- High confidence: When the confidence level meets or exceeds the threshold, the detection is fully trusted, and is set to 1.0, resulting in . This removes any additional measurement noise, allowing the detection to exert maximum influence on the state update.

- Low confidence: When the confidence level is below the threshold, the measurement noise covariance increases according to (3), effectively scaling up the noise and thereby reducing the influence of the detection on the state update.
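The effect of the two cases on the Kalman update can be sketched in one dimension. Equation (3) is not reproduced in this section, so the inflation rule below (dividing the base noise by confidence / threshold) is only a placeholder, and the high-confidence case is assumed to leave the base noise unchanged. The function names, the threshold of 0.6, and all numeric values are hypothetical.

```python
def adaptive_measurement_noise(base_r, confidence, threshold):
    """Return the measurement noise variance to use for one detection.

    ASSUMPTION: the low-confidence rule is a stand-in for Equation (3),
    chosen only so that the noise grows as confidence falls.
    """
    if confidence >= threshold:
        return base_r  # fully trusted: no additional measurement noise
    ratio = max(confidence / threshold, 1e-6)  # guard against division by zero
    return base_r / ratio

def kalman_update_1d(x_pred, p_pred, z, r):
    """Scalar Kalman update; returns (state, variance, gain)."""
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new, k

x_pred, p_pred, z = 0.0, 1.0, 10.0  # predicted state, its variance, measurement
base_r, threshold = 1.0, 0.6        # hypothetical base noise and threshold

r_high = adaptive_measurement_noise(base_r, 0.9, threshold)
r_low = adaptive_measurement_noise(base_r, 0.3, threshold)

x_high, _, k_high = kalman_update_1d(x_pred, p_pred, z, r_high)
x_low, _, k_low = kalman_update_1d(x_pred, p_pred, z, r_low)
print(k_high, k_low)
```

Under these assumptions the low-confidence detection receives a smaller Kalman gain, so the updated state stays closer to the prediction than it does for the high-confidence detection.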

3.4. StableSORT: Integrating B-IoU and OAKF into StrongSORT

4. Experimental Setup

4.1. Dataset

4.2. Evaluation Measures

5. Experimental Results

5.1. Detection Results

5.2. Tracking Results

5.3. Ablation Study

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Sequences | Length (frames) | GT Boxes | Trajectories |
|---|---|---|---|---|
| Train | 77 | 19,985 | 10,681 | 185 |
| Test | 7 | 1,944 | 3,604 | 38 |

| Model | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|
| YOLOv5m | 0.886 | 0.759 | 0.848 | 0.553 |
| YOLOv5l | 0.907 | 0.808 | 0.882 | 0.582 |
| YOLOv5x | 0.914 | 0.844 | 0.905 | 0.608 |

| Test Sequence | Tracking Method | HOTA | MOTA | AssA | IDF1 |
|---|---|---|---|---|---|
| Sequence 1 (Length 273) | ByteTrack | 15.082 | 17.204 | 10.580 | 25.210 |
| | OC-SORT | 13.655 | 12.903 | 5.388 | 15.152 |
| | StrongSORT | 62.334 | 74.194 | 62.334 | 85.366 |
| | StableSORT | 63.325 | 74.194 | 63.325 | 85.542 |
| Sequence 2 (Length 293) | ByteTrack | 36.579 | 31.319 | 38.633 | 43.041 |
| | OC-SORT | 50.197 | 36.081 | 51.538 | 49.948 |
| | StrongSORT | 50.878 | 53.480 | 51.949 | 53.112 |
| | StableSORT | 54.638 | 51.465 | 59.075 | 58.824 |
| Sequence 3 (Length 271) | ByteTrack | 20.576 | 39.786 | 11.688 | 23.349 |
| | OC-SORT | 19.908 | 34.917 | 8.0646 | 16.286 |
| | StrongSORT | 57.543 | 72.447 | 59.798 | 76.003 |
| | StableSORT | 58.42 | 72.447 | 60.581 | 76.003 |
| Sequence 4 (Length 275) | ByteTrack | 31.654 | 54.487 | 20.800 | 41.901 |
| | OC-SORT | 29.032 | 49.519 | 12.574 | 25.509 |
| | StrongSORT | 67.611 | 86.378 | 62.634 | 76.818 |
| | StableSORT | 68.167 | 87.179 | 63.63 | 77.92 |
| Sequence 5 (Length 270) | ByteTrack | 54.830 | 68.571 | 51.177 | 73.697 |
| | OC-SORT | 44.864 | 59.560 | 26.909 | 40.670 |
| | StrongSORT | 73.414 | 84.176 | 67.14 | 79.206 |
| | StableSORT | 79.914 | 84.615 | 79.945 | 86.814 |
| Sequence 6 (Length 271) | ByteTrack | 62.513 | 63.127 | 65.323 | 81.049 |
| | OC-SORT | 74.242 | 83.776 | 74.202 | 87.770 |
| | StrongSORT | 75.306 | 83.776 | 76.983 | 89.224 |
| | StableSORT | 75.157 | 83.776 | 76.768 | 89.224 |
| Sequence 7 (Length 291) | ByteTrack | 36.209 | 55.738 | 24.591 | 40.945 |
| | OC-SORT | 48.033 | 48.087 | 33.213 | 52.199 |
| | StrongSORT | 74.353 | 86.612 | 75.574 | 92.909 |
| | StableSORT | 74.288 | 86.612 | 75.598 | 92.909 |
| Overall Average | ByteTrack | 36.778 | 47.176 | 31.827 | 47.027 |
| | OC-SORT | 39.990 | 46.406 | 30.270 | 41.076 |
| | StrongSORT | 65.920 | 77.295 | 65.202 | 78.948 |
| | StableSORT | 67.701 | 77.184 | 68.417 | 81.034 |
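The "Overall Average" rows appear to be unweighted means over the seven test sequences rather than frame-weighted averages. The short check below recomputes the HOTA averages for StrongSORT and StableSORT from the per-sequence values in the table above; the variable names are illustrative.

```python
# Per-sequence HOTA values copied from the tracking results table
# (Sequence 1 through Sequence 7).
hota = {
    "StrongSORT": [62.334, 50.878, 57.543, 67.611, 73.414, 75.306, 74.353],
    "StableSORT": [63.325, 54.638, 58.42, 68.167, 79.914, 75.157, 74.288],
}

# Unweighted mean over sequences, ignoring sequence length.
means = {name: sum(values) / len(values) for name, values in hota.items()}

# Absolute HOTA gain of StableSORT over the StrongSORT baseline.
gain = means["StableSORT"] - means["StrongSORT"]

for name, value in means.items():
    print(f"{name}: {value:.3f}")
print(f"gain: {gain:.3f}")
```

The recomputed means agree with the reported 65.920 and 67.701 to three decimals, which gives a HOTA gain of roughly 1.78 points on this test set.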

| Tracking Method | HOTA | MOTA | AssA | IDF1 |
|---|---|---|---|---|
| StrongSORT | 65.920 | 77.295 | 65.202 | 78.948 |
| StrongSORT+B-IoU | 67.479 | 76.605 | 68.333 | 80.805 |
| StrongSORT+OAKF | 66.113 | 77.347 | 65.529 | 79.444 |
| StrongSORT+B-IoU+OAKF (StableSORT) | 67.701 | 77.184 | 68.417 | 81.034 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, Y.-S.; Jung, J.-Y. A Stable Multi-Object Tracking Method for Unstable and Irregular Maritime Environments. J. Mar. Sci. Eng. 2024, 12, 2252. https://doi.org/10.3390/jmse12122252
