1. Introduction

Ship detection, as one of the most important intelligent maritime perception technologies, has recently attracted significant interest from researchers and scholars in ocean surveillance [1,2,3]. With the development of artificial intelligence, deep-learning-based ship detection methods now dominate the maritime domain awareness (MDA) field due to their remarkable data fitting ability [4,5], achieving a breakthrough in ship identification accuracy compared to traditional methods.

However, most deep-learning-based ship detection methods are trained in a traditional training mode [6,7,8,9], i.e., all the monitoring examples are presented to the model in random order, neglecting the intricacies of the data samples and the model’s current learning progress, which inevitably leads to low model robustness and low generalization performance.

Furthermore, the oceanic environment is characterized by its dynamic, high-dimensional, and combinatorially complex nature. The presence of diverse factors, such as fog, clouds, rain, currents, and clutter, further complicates the detection process. Meanwhile, training a deep learning model requires training data, and those training datasets are subject to various historical, representative, measurement, aggregation, and evaluation biases, thereby creating discrepancies between the acquired data and the actual oceanic environment. This issue raises higher demands on the robustness and generalization ability of current deep-learning-based ship detection models. Expanding the training dataset is a potential approach to enhance the generalization ability and robustness of a deep-learning-based model. However, this method is not without its challenges, as data collection is a labor-intensive and time-consuming task that requires significant financial and material support to obtain a large amount of labeled data [10]. Furthermore, enlarging the dataset is not a panacea for improving the model’s robustness and generalization ability. Rather, the diversity of the data is the key factor that enhances the model’s ability to generalize and be robust. Therefore, relying solely on expanding the dataset to improve the model’s robustness is not a wise strategy.

Hence, in order to make up for the issues of low robustness and low generalization performance in current deep-learning-based ship detection methods, a novel network, LFLD-CLbased NET, is proposed for monitoring ships in real maritime scenarios.

The LFLD-CLbased NET adopts data augmentation techniques purposefully to construct a diversified training dataset that matches the real maritime environment, establishing a communication platform between the real and training environments. Then, the LFLD-CLbased NET incorporates the principles of curriculum learning (CL), organizing the extended training dataset in a meaningful order, by “starting small” and gradually presenting more complex concepts to acquire greater generalization and robustness in ship detection. Additionally, we propose a Leap-Forward-Learning-Decay (LFLD) strategy to the LFLD-CLbased NET that dynamically adjusts the difficulty and learning rate of curriculum learning, enhancing the model’s learning speed, reducing training time, and mitigating the occurrence of gradient explosion.

The experimental results on a real world ship monitoring dataset reveal that LFLD-CLbased NET achieves a state-of-the-art ship identification accuracy and outperforms the modern deep-learning-based methods. Furthermore, we conducted a series of supplementary experiments to assess the efficacy of both the curriculum learning and the Leap-Forward-Learning-Decay strategy. In general, we clarify the contributions of our work as follows:

One of the main contributions is that we propose a new deep-learning-based model that combines the curriculum learning mechanism for real-scenario ship detection. The curriculum learning mechanism is essentially more suitable for making model generalization ability stronger and the generated proposals more realistic.

We deliberately design a novel learning rate decay strategy, the Leap-Forward-Learning-Decay strategy, which facilitates fast and efficient training of a model. We further evaluate the performance of the Leap-Forward-Learning-Decay strategy, and the supplementary experimental results indicate that it effectively aids in training the model and can even enhance the model’s accuracy.

Another contribution is that we collected a small real maritime ship detection dataset. This dataset is more realistic compared to current ship detection datasets, and is able to reflect the robustness and generalization ability of models.

Experiments show that LFLD-CLbased NET achieves the highest accuracy and outperforms the current deep-learning-based models, achieving 86.64% accuracy in ship detection tasks and improving detection accuracy by 10%.

The remainder of this article is organized as follows: Section 2 reviews the related work, Section 3 describes the details of LFLD-CLbased NET, Section 4 discusses the experimental setup and the analysis of the results, and Section 5 concludes with a summary of the findings and future work.

3. LFLD-CLbased NET

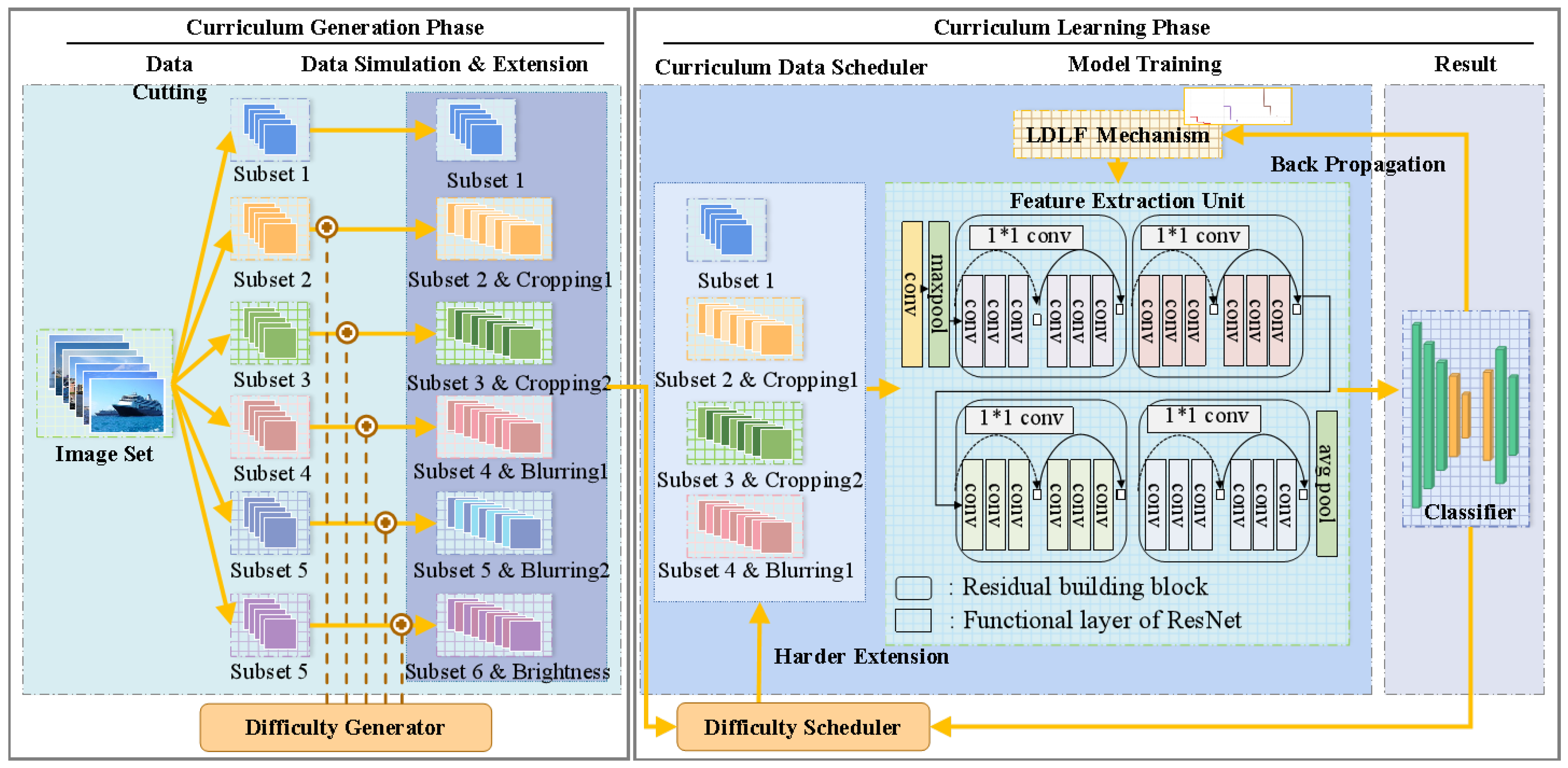

This paper introduces a novel Leap-Forward-Learning-Decay neural network, namely, LFLD-CLbased NET, which integrates curriculum learning for ship detection in a more realistic scenario. The LFLD-CLbased NET is a two-step pipeline model that consists of a curriculum generation phase and a curriculum learning phase.

Figure 1 shows the details of LFLD-CLbased NET. The LFLD-CLbased NET is built on the ResNet34 framework and employs a difficulty generator to produce a range of realistic and varied sample data. The resultant data are systematically arranged and converted into curricula by a difficulty scheduler. The curriculum datasets are then ordered to match the learning progression of LFLD-CLbased NET, enabling structured curriculum training and optimizing the performance of curriculum learning. During the training phase, the LFLD mechanism is integrated, and a learning rate decay factor is devised to accommodate varying levels of learning complexity. By employing a Leap-Forward of the learning rate, the data information is continuously modified, thereby mitigating slow convergence and entrapment in local optima. The capacity of the model to explore beyond local optima is strengthened, and its generalization performance is enhanced, making the LFLD-CLbased NET an effective approach for maritime monitoring.

Subsequently, a comprehensive exposition of the model is presented, encompassing the problem statement, curriculum generation phase, curriculum learning, and LFLD mechanism.

3.1. Problem Statement

In this study, we approached the task as a supervised classification problem, with the objective of recognizing images of ship types. The model was designed to classify the type of ship shown in an outboard profile image captured by optical surveillance cameras. Within the provided dataset $D = \{x_i\}_{i=1}^{N}$, $x_i$ corresponds to the ith outboard profile image, while $N$ signifies the total number of images in the dataset. The primary aim is to predict the class of the ship, which is identified by the label $y_i$. The task’s output is characterized as the predicted label $\hat{y}_i$.

3.2. Curriculum Generation Phase

The curriculum generation phase is the preprocessing step for curriculum training. During this phase, the original outboard profile ship detection dataset is segmented into multiple independent subsets, and then the subsets are sequentially fed into a difficulty generator for data transformation. The difficulty generator is a module consisting of a random cropping unit, a noise addition unit, and a brightness adjustment unit, each of which is built from data augmentation techniques.

The random cropping unit performs image cropping to enhance the model’s ability to process images with only a small portion of ship features. The random cropping unit can be written as

$$x' = \mathrm{Crop}(x),$$

where $x$ represents an original image, and $x'$ denotes the image obtained by applying the cropping function $\mathrm{Crop}(\cdot)$, which returns a randomly positioned region of $x$ whose extent is the size of the clipped image.

The noise addition unit is utilized to simulate noise interference and enhance the model’s robustness against noise. The commonly used noise distributions include Gaussian noise, salt noise, and so on. Taking salt noise addition as an instance, salt noise can be regarded as the random addition of white pixels to an image: the pixel points in the original image $x$ are randomly replaced with white pixels. The density of salt noise is represented as $p$, which lies within the range [0, 1]. The method of adding salt noise is mathematically described as follows:

$$x'_{i,j,k} = \begin{cases} 255, & \text{with probability } p \\ x_{i,j,k}, & \text{with probability } 1 - p \end{cases}$$

where $x_{i,j,k}$ represents the pixel value of the ith channel in the jth row and kth column of the image, and 255 is the white level of an 8-bit image. It should be noted that the density value affects the quality of the generated data. When the value of $p$ is too high, the image may be overly distorted by noise, which can negatively affect the performance of the model. Conversely, when the value of $p$ is too low, the diversity of the data may not be effectively increased.
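The salt noise operation described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `add_salt_noise`, the nested-list image layout `image[channel][row][col]`, and the white level of 255 (8-bit images) are assumptions made for the example.

```python
import random

def add_salt_noise(image, p, white=255, seed=None):
    """Return a copy of `image` in which each pixel value is replaced by
    `white` with probability p and left unchanged otherwise.

    `image` is a nested list indexed as image[channel][row][col].
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    rng = random.Random(seed)
    return [
        [[white if rng.random() < p else value for value in row] for row in channel]
        for channel in image
    ]

# A single-channel, 4x4 all-black toy image.
image = [[[0] * 4 for _ in range(4)]]
noisy = add_salt_noise(image, p=0.25, seed=0)
salted = sum(value == 255 for row in noisy[0] for value in row)
print(f"{salted} of 16 pixel values were set to white")
```

Setting `p` close to 1 whitens nearly the whole image, while `p` close to 0 leaves it almost untouched, which mirrors the trade-off between distortion and diversity noted in the text.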

The brightness adjustment unit enhances the diversity of the data by adjusting the brightness of the image:

$$x' = \mathrm{Bright}(x, \delta),$$

where $\delta$ represents the adjusted value of brightness.

The difficulty generator serves as a bridge between real-world data and experimental data, and the data processed by the difficulty generator gain diversity. Its detailed operations are described in Algorithm 1.

Algorithm 1 Detection Difficulty Generator Algorithm.

Input: outboard profile ship detection dataset; hyper-parameters; flag_list

Require: Dataset Cutting function; Random Cropping function; Noise Addition function; Brightness Adjustment function.

- Apply the Dataset Cutting function to the dataset. # obtain multiple subsets
- for each subset j do
  - flag = flag_list[j] # flag_list is a hyperparameter of the data enhancement type
  - if flag == Cutting then keep subset j as produced by the Dataset Cutting function
  - if flag == Cropping then apply the Random Cropping function to subset j
  - if flag == Noising then apply the Noise Addition function to subset j
  - if flag == Brightness then apply the Brightness Adjustment function to subset j
- endfor

Output: preprocessed subsets of the outboard profile ship detection dataset
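As an illustration of how the difficulty generator might be organized, the following Python sketch splits a toy dataset into subsets and applies one transformation per subset according to `flag_list`. Everything beyond the four flag names is an assumption made for the example: the round-robin split, the additive brightness shift, the treatment of the `Cutting` flag as "leave unchanged", and the default values of `crop_size`, `p`, and `delta`.

```python
import random

def cut_dataset(dataset, n_subsets):
    """Split the dataset into n_subsets disjoint subsets (round-robin)."""
    return [dataset[i::n_subsets] for i in range(n_subsets)]

def random_crop(image, size, rng):
    """Return a size x size window taken at a random position."""
    top = rng.randint(0, len(image) - size)
    left = rng.randint(0, len(image[0]) - size)
    return [row[left:left + size] for row in image[top:top + size]]

def add_salt(image, p, rng):
    """Replace each pixel by white (255) with probability p."""
    return [[255 if rng.random() < p else v for v in row] for row in image]

def adjust_brightness(image, delta):
    """Shift every pixel by delta, clipped to [0, 255] (assumed additive form)."""
    return [[min(255, max(0, v + delta)) for v in row] for row in image]

def difficulty_generator(dataset, flag_list, crop_size=2, p=0.1, delta=40, seed=0):
    """Apply one transformation per subset, selected by flag_list."""
    rng = random.Random(seed)
    transforms = {
        "Cutting": lambda img: [row[:] for row in img],  # assumption: unchanged copy
        "Cropping": lambda img: random_crop(img, crop_size, rng),
        "Noising": lambda img: add_salt(img, p, rng),
        "Brightness": lambda img: adjust_brightness(img, delta),
    }
    subsets = cut_dataset(dataset, len(flag_list))
    return [[transforms[flag](img) for img in subset]
            for subset, flag in zip(subsets, flag_list)]

# Eight 4x4 grayscale toy images with constant intensity 100.
dataset = [[[100] * 4 for _ in range(4)] for _ in range(8)]
flags = ["Cutting", "Cropping", "Noising", "Brightness"]
processed = difficulty_generator(dataset, flags)
for flag, subset in zip(flags, processed):
    print(flag, len(subset), "images of size", len(subset[0]), "x", len(subset[0][0]))
```

Keeping the transformations in a dictionary keyed by flag makes it straightforward to add further augmentation types without touching the loop.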

3.3. Curriculum Learning Phase

The output from the curriculum generation phase is subsequently fed into the difficulty scheduler to generate a training curriculum from easy to difficult, which is transmitted to an information processing unit for curriculum training. The training process of the curriculum learning phase is shown in Figure 2. The complexity of ship monitoring in real sea conditions can lead to poor model performance and low generalization ability when directly training the model for automatic monitoring; however, this problem can be effectively alleviated by utilizing curriculum training.

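The definition of curriculum learning in this paper follows [26]: a curriculum is a sequence of training criteria over $T$ training steps, $C = \langle Q_1, \ldots, Q_t, \ldots, Q_T \rangle$, and each criterion $Q_t$ is a reweighting of the target training distribution $P(z)$:

$$Q_t(z) \propto W_t(z)\, P(z) \quad \forall \text{ example } z \in \text{training set } D,$$

such that the following three conditions are satisfied:

(1) The entropy of distributions gradually increases, i.e., $H(Q_t) < H(Q_{t+1})$.

(2) The weight for any example increases, i.e., $W_t(z) \le W_{t+1}(z) \;\; \forall z \in D$.

(3) $Q_T(z) = P(z)$.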

In this definition, in accordance with Condition (1), the diversity and information of the training set should progressively increase. This is achieved by increasing the probability of sampling slightly more difficult examples through the reweighting of examples in later steps. Condition (2) involves gradually adding more training examples, either in a binary or soft manner, to expand the size of the training set. Finally, Condition (3) requires uniform reweighting of all examples and training on the target training set. The definition of curriculum learning serves the purpose of providing a framework for designing a training curriculum that gradually increases in difficulty to better facilitate the learning process for a model. It allows for a more effective and efficient training process by introducing examples in a way that is more conducive to learning, and can lead to improved performance and generalization ability of the model.
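The three conditions can be checked numerically on a toy curriculum. The sketch below is only an illustration: the four-example target distribution `P` and the weight schedule `W` are hypothetical values chosen so that harder examples are switched on step by step.

```python
import math

def reweight(weights, target):
    """Q_t(z) proportional to W_t(z) * P(z), normalized to sum to 1."""
    unnormalized = [w * p for w, p in zip(weights, target)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

def entropy(dist):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(q * math.log(q) for q in dist if q > 0)

# Target distribution P over four examples ordered from easy to hard.
P = [0.25, 0.25, 0.25, 0.25]
# Hypothetical weights W_t for T = 4 training steps.
W = [
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 0.5],
    [1.0, 1.0, 1.0, 1.0],
]
Q = [reweight(w, P) for w in W]
H = [entropy(q) for q in Q]

# Condition (1): entropy strictly increases from step to step.
cond1 = all(H[t] < H[t + 1] for t in range(len(H) - 1))
# Condition (2): the weight of every example never decreases.
cond2 = all(W[t][z] <= W[t + 1][z] for t in range(len(W) - 1) for z in range(len(P)))
# Condition (3): the final criterion equals the target distribution.
cond3 = all(math.isclose(q, p) for q, p in zip(Q[-1], P))
print(cond1, cond2, cond3)
```

The third row of `W` uses a soft weight of 0.5, which corresponds to adding an example "in a soft manner" rather than switching it on fully.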

The difficulty scheduler is a crucial processing unit in the curriculum learning phase, which continuously generates a curriculum training set based on the model’s training process. The concept of complexity in curriculum learning refers to the level of difficulty or sophistication of the training examples. Specifically, the level of difficulty of the training examples varies with the entropy of the distributions in the curriculum training set, in line with Condition (1). In other words, the samples in the next stage of the curriculum training set are more varied (higher entropy) than those in the previous stage. It is worth noting that each new curriculum training set is composed by fusing and reorganizing the training set from the previous curriculum with a preprocessed subset that has not yet been used for training.
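The fusion-and-reorganization step of the difficulty scheduler can be sketched as follows. This is an assumed, simplified form: the function name `build_curricula`, the use of shuffling as the "reorganization", and the sample identifiers are all hypothetical.

```python
import random

def build_curricula(preprocessed_subsets, seed=0):
    """Return one training set per stage. Stage t contains every sample of
    stage t-1 plus the t-th (not yet used) preprocessed subset, reshuffled."""
    rng = random.Random(seed)
    curricula = []
    current = []
    for subset in preprocessed_subsets:
        current = current + list(subset)   # fuse previous curriculum with the new subset
        rng.shuffle(current)               # reorganize the merged samples
        curricula.append(list(current))    # store a snapshot for this stage
    return curricula

# Hypothetical preprocessed subsets, ordered from easy to difficult.
subsets = [
    ["clean_0", "clean_1", "clean_2"],
    ["crop_0", "crop_1"],
    ["salt_0", "salt_1"],
    ["bright_0"],
]
curricula = build_curricula(subsets)
for stage, train_set in enumerate(curricula, start=1):
    print(f"curriculum {stage}: {len(train_set)} samples")
```

Because each stage is a superset of the previous one, the sample variety can only grow from stage to stage, and the last stage covers the full preprocessed dataset.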

The feature extraction unit is another important processing unit that is designed based on the principles of deep learning, possessing both learning and recognition capabilities. We utilized a residual network as the underlying framework for this unit.

In the feature extraction unit, each image sample of the curriculum training set is first passed through a convolution layer to perform feature extraction and obtain higher-level representations. The convolution layer comprises a kernel scanning layer and an activation layer, which extract advanced information by scanning with kernels and applying an activation function. The most essential features are obtained through a max pooling layer and are then passed through multiple residual building blocks for deeper extraction. Each residual building block includes a convolution layer, a batch normalization layer, and an activation layer.

A breakdown of the curriculum learning phase applied to the ship detection task is presented in Algorithm 2, which includes essential specifics.

Algorithm 2 Curriculum learning algorithm.

Input: preprocessed subsets

Require: Residual, Conv, Maxpool, Avgpool, and FC functions, which encompass the essential elements of residual networks, such as convolution layers, max pooling functions, average pooling functions, and fully connected layers.

- # Begin curriculum training
- c = []
- for t in 1 to len(preprocessed subsets) do
  - merge the tth preprocessed subset into c # obtaining the tth curriculum training set
  - apply Conv to the images in c
  - apply Maxpool
  - for i in [3,4,6,3] do # [3,4,6,3] is the number of residual basic blocks
    - for j in 1 to i do
      - apply Residual
    - endfor
  - endfor
  - apply Avgpool
  - flatten the pooled features # Dimensionality reduction
  - # Classifier:
  - apply FC
- endfor

Output: ship detection labels
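The block counts [3,4,6,3] used in Algorithm 2 are what give ResNet34 its name, which the short calculation below makes explicit. It assumes the standard ResNet basic block with two convolution layers per block; that detail comes from the usual ResNet design, not from this paper's text.

```python
BLOCKS_PER_STAGE = [3, 4, 6, 3]   # residual basic blocks per stage, from Algorithm 2
CONVS_PER_BASIC_BLOCK = 2         # assumption: standard ResNet basic block

def weighted_layer_count(blocks_per_stage, convs_per_block=CONVS_PER_BASIC_BLOCK):
    """Count layers with learnable weights: stem conv + residual convs + FC."""
    stem_conv = 1
    residual_convs = sum(blocks_per_stage) * convs_per_block
    fully_connected = 1
    return stem_conv + residual_convs + fully_connected

total = weighted_layer_count(BLOCKS_PER_STAGE)
print(sum(BLOCKS_PER_STAGE), "basic blocks,", total, "weighted layers")
```

Pooling, batch normalization, and activation layers are conventionally not included in this count.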

3.4. LFLD Mechanism

The curriculum learning strategy is able to effectively facilitate the model to achieve high-quality learning, but compared to the traditional training strategy, curriculum learning requires more training time. As can be seen from Algorithm 2, curriculum learning needs to repeatedly train the model based on data subsets, resulting in a multiple-fold increase in training time.

In order to enhance the efficiency of model training, we developed the LFLD Mechanism, integrating the concept of learning rate decay. The LFLD Mechanism is capable of dynamically adapting the learning rate, whereby in the initial stages of course training, the learning rate undergoes a significant increase, facilitating the model to approach the optimal solution rapidly. However, during the middle and later stages of course training, a high learning rate may result in the model experiencing gradient explosion or gradient disappearance, or failing to converge to the optimal solution. Under the influence of the LFLD Mechanism, the learning rate gradually decreases with a fixed step size, thereby precluding the model from encountering issues such as gradient explosion or gradient disappearance during the course of training, and improving the stability of gradient descent.

The learning rate is a hyperparameter in deep learning model training that determines the size of the weight updates made by the model during each iteration. The magnitude of the learning rate affects both the speed and the effectiveness of model training. The weight update governed by the learning rate is as follows:

$$w_{t+1} = w_t - \eta \, \nabla_{w} L(w_t),$$

where $\eta$ means the learning rate, $w_t$ represents the weight value of the tth iteration, and $\nabla_{w} L(w_t)$ represents the gradient of the loss function with respect to the weight.
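To make the role of the learning rate concrete, the sketch below applies the plain gradient descent update to a one-dimensional toy loss. The loss $L(w) = (w - 3)^2$, the starting point, and the three learning rates are illustrative choices, not values from the paper.

```python
def gradient_descent(grad, w0, lr, steps):
    """Repeatedly apply w <- w - lr * grad(w) and return the final weight."""
    w = w0
    for _ in range(steps):
        w = w - lr * grad(w)
    return w

def grad(w):
    """Gradient of the toy loss L(w) = (w - 3)^2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)

results = {lr: gradient_descent(grad, w0=0.0, lr=lr, steps=20) for lr in (0.01, 0.1, 1.1)}
for lr, w in results.items():
    print(f"lr={lr}: w after 20 steps = {w:.4f}, distance to optimum = {abs(w - 3.0):.4f}")
```

With the smallest rate the weight is still far from the optimum after 20 steps, the middle rate gets close, and the largest rate overshoots further on every step and diverges. This is the tension that motivates starting each curriculum with a large learning rate and decaying it afterwards.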

As the training progresses, the LFLD Mechanism sets a sequence of increasing initial learning rates, one for each curriculum, and then adopts a fixed-step-size linear decay strategy for the learning rate in the middle and later stages of each curriculum, in which the learning rate of iteration t is reduced according to an attenuation coefficient.
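One possible reading of this schedule is sketched below. It is an assumption-laden illustration, not the authors' formula: the learning rate leaps to a larger initial value at the start of each curriculum, is held during the first half (`hold_fraction`, hypothetical), and is then lowered by a fixed amount `decay` every `step` epochs. The initial learning rates and `decay` are hypothetical values; the 100-epoch curriculum length and the 20-epoch step mirror figures quoted elsewhere in the paper. A multiplicative attenuation would only change the line that computes the rate.

```python
def lfld_schedule(initial_lrs, epochs_per_curriculum, decay, step,
                  hold_fraction=0.5, min_lr=0.0):
    """Return the per-epoch learning rates over all curricula.

    Each curriculum starts from its own (increasing) initial learning rate,
    which is held for the first hold_fraction of the curriculum and then
    reduced by `decay` every `step` epochs (assumed subtractive form).
    """
    hold = int(epochs_per_curriculum * hold_fraction)
    schedule = []
    for lr0 in initial_lrs:                       # leap forward at each new curriculum
        for epoch in range(epochs_per_curriculum):
            n_decays = 0 if epoch < hold else (epoch - hold) // step + 1
            schedule.append(max(min_lr, lr0 - n_decays * decay))
    return schedule

schedule = lfld_schedule(
    initial_lrs=[1e-3, 2e-3, 4e-3],   # hypothetical increasing initial rates
    epochs_per_curriculum=100,
    decay=2e-4,                       # hypothetical attenuation per decay step
    step=20,
)
for epoch in (0, 50, 70, 90, 100, 200):
    print(epoch, schedule[epoch])
```

Plotting `schedule` would show a sawtooth: a jump at epochs 100 and 200 followed by a staircase decline within each curriculum.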

4. Experiments and Results

4.1. Dataset

Two ship detection datasets, the Deep Learning Vessel Dataset and the Real-World Ship Detection Dataset, were utilized to train or evaluate the LFLD-CLbased NET. The Deep Learning Vessel Dataset is a publicly available dataset for an image recognition task (https://www.kaggle.com/datasets/arpitjain007/game-of-deep-learning-ship-datasets), consisting of 6252 images of five different types of vessel. This dataset contains a significant number of high-quality images, with clear contrasts and sharp focus, and an adequate number of images for each type, making it a suitable choice for training deep learning models. The Real-World Ship Detection Dataset, on the other hand, is a small dataset that we collected specifically for testing the robustness and generalization ability of models. The image quality in this dataset is more reflective of nearshore camera conditions, including various weather-related factors, providing a more accurate and representative assessment. The data in this dataset were captured using optical surveillance cameras. Compared to the Deep Learning Vessel Dataset, the images in this dataset are more realistic and can effectively reflect the robustness and generalization ability of models.

Table 1 and Table 2 present the summary statistics for both datasets, and Figure 3 and Figure 4 provide a visual representation of the data.

4.2. Evaluation Metrics

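The ship identification performance of the LFLD-CLbased NET is evaluated quantitatively in terms of accuracy, precision, recall, and F1, which are defined as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

Positive samples correctly predicted as positive are represented by TP, while negative samples correctly predicted as negative are represented by TN. FP and FN denote negative and positive samples, respectively, that are incorrectly predicted.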
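The four metrics can be computed directly from the confusion counts, as in the following sketch. The counts in the example are made up for illustration; for a multi-class task such as ship type recognition, the same function would be applied per class in a one-vs-rest fashion and then averaged, which is an assumption about the evaluation protocol rather than something stated in the paper.

```python
import math

def classification_metrics(tp, tn, fp, fn):
    """Return (accuracy, precision, recall, f1) from binary confusion counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return accuracy, precision, recall, f1

# Hypothetical counts for one ship class treated as the positive class.
accuracy, precision, recall, f1 = classification_metrics(tp=40, tn=45, fp=5, fn=10)
print(f"accuracy={accuracy:.3f} precision={precision:.3f} "
      f"recall={recall:.3f} f1={f1:.3f}")
```

The guards against zero denominators return 0.0 when a class is never predicted or never present, which avoids a division error on very small test sets.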

4.3. Experiment Setup

The model architecture was based on ResNet34. The whole model was optimized with the proposed loss function, which integrates the probabilistic classification loss with the multiclass cross-entropy loss. The Adam optimizer was used with a batch size of 64, and the learning rate decay step size was set to 20 epochs based on empirical evaluation.

4.4. Results of Experiments

In order to thoroughly investigate the performance of the LFLD-CLbased NET, comparative experiments were conducted against multiple widely used deep learning models for image classification. These models include the renowned AlexNet, GoogLeNet, VGG, ResNet, Wide-ResNet, DenseNet, and MobileNet, as well as their variant forms. All the baseline models and their variant forms were trained and evaluated on the Real-World Ship Detection Dataset. Model selection was grounded in their established reputation in the field of image classification, as well as their potential to achieve high accuracy in detection tasks.

Table 3 presents the comparison experiment results. The experimental results indicate that LFLD-CLbased NET significantly improved upon the baseline models in the real maritime scenario ship detection task, achieving a detection accuracy of 86.635%, coupled with a precision of 87.090%, a recall of 86.226%, and an F1 score of 86.431%. The LFLD-CLbased NET demonstrated a significant increase in accuracy compared to the baseline models, whose accuracy ranged from 37.855% to 72.555%.

To further validate the impact of the curriculum learning and LFLD mechanisms on the monitoring and detection performance of the model, we employed the control variable method and selected our ResNet34 skeleton model as the reference point. By establishing ResNet34-Without CL-320 and ResNet34-Without CL-9437, we aimed to conduct more comprehensive comparative experiments. The ResNet34 model trained on the Real-World Ship Detection Dataset is denoted as ResNet34-Without CL-320, while ResNet34-Without CL-9437 refers to the ResNet34 model trained on the Deep Learning Vessel Dataset. It is worth noting that neither of these models used the curriculum training strategy or the LFLD learning rate decay strategy during training. In comparison, LFLD-CLbased NET-9437 was trained on the Deep Learning Vessel Dataset with both the curriculum training strategy and the LFLD learning rate decay strategy. The experimental outcomes are presented in the last three rows of Table 3. It is noteworthy that the results in Table 3 were evaluated using the test set of the Real-World Ship Detection Dataset.

The inferior performance of ResNet34-Without CL-320 was primarily due to the limited size of the training set in the Real-World Ship Detection Dataset, resulting in overfitting despite a more similar distribution between the training and test sets. Comparing ResNet34-Without CL-9437 with ResNet34-Without CL-320 revealed a 3% increase in detection accuracy for the former, highlighting the potential benefits of expanding the dataset for enhanced model convergence. Notably, LFLD-CLbased NET-9437 improved detection accuracy by 13% and 10% compared to ResNet34-Without CL-320 and ResNet34-Without CL-9437, respectively, providing further evidence of the effectiveness of curriculum training and LFLD.

The results of the experiment provide additional evidence supporting the superior generalization ability and robustness of the LFLD-CLbased NET. Both LFLD-CLbased NET-9437 and ResNet34-Without CL-9437 were trained on the Deep Learning Vessel Dataset, but the testing was conducted using the Real-World Ship Detection Dataset, which exhibits a distribution bias compared to the training data. Thus, the testing results can better reflect the model’s generalization ability and stability. The experimental outcomes revealed that the F1 score for ResNet34-Without CL was 74.678%, while that for LFLD-CLbased NET was 86.431%, an improvement of 11.753 percentage points.

We also analyzed the balance between model precision and recall, and the experimental results are shown in Figure 5. It can be seen that the AUC-PR value of LFLD-CLbased NET is significantly higher than that of other models, indicating better generalization ability and robustness. The AUC-PR value of 0.94 for LFLD-CLbased NET indicates that the model strikes a very good balance between precision and recall and can effectively distinguish positive and negative examples, so it has high predictive power. In contrast, LeNet’s AUC-PR value is 0.37, which makes it difficult to effectively distinguish positive and negative examples.

4.5. Comparison of the Training Process for LFLD-CLbased NET

We conducted an analysis of the training process for ResNet34-Without CL-320, ResNet34-Without CL-9437, and LFLD-CLbased NET-9437, and the experimental results are shown in Figure 6.

During the initial stages of training, the testing accuracy of ResNet34-Without CL-320 exhibited a significant increase, surpassing that of ResNet34-Without CL-9437 and LFLD-CLbased NET-9437 at certain points. This improvement can be ascribed to the small real-world dataset used to train ResNet34-Without CL-320, which had a relatively uniform distribution between the training and test sets, enabling the model to converge rapidly in the early training phase. As the number of training epochs increased, the accuracy of ResNet34-Without CL-320 reached a peak value of 73.501 at epoch 99 before displaying a slow decline, consistent with our earlier analysis. This decline can be attributed to the dataset’s limited number of training samples, which prevented the model from making further improvements.

In contrast to ResNet34-Without CL-320, ResNet34-Without CL-9437 employed a more extensive and varied dataset for training, contributing to a gradual but consistent improvement in accuracy throughout a prolonged period as the number of training epochs increased. Notably, the model achieved its highest accuracy at epoch 422.

In contrast to the previous two models, LFLD-CLbased NET-9437 exhibited a step-like increase in accuracy during training, following the approach of curriculum learning. The curriculum learning mechanism involved training the model on a dataset that gradually increased in difficulty, with the difficulty level advancing by one step every 100 epochs. As a result, the accuracy of the model exhibited a cyclic increase with a period of 100 epochs. Simultaneously, our findings indicate that during the early stages of each 100-epoch period (i.e., the initial phase of a new curriculum), the model’s accuracy occasionally exhibited a rapid decline, attributable to the LFLD mechanism and the curriculum learning mechanism. Those two mechanisms prompted the model to undergo a phase of rapid parameter adjustment, resulting from the addition of numerous challenging courses and learning rate adjustments, leading to a momentary drop in prediction accuracy. However, as the model progressed to learn and deepen its understanding of the new courses, its accuracy steadily increased, surpassing the previous highest point. These experimental outcomes provide further evidence of the effectiveness of the curriculum learning mechanism and the LFLD mechanism, which can enhance the model fitting process, learning efficiency, and robustness of the model.

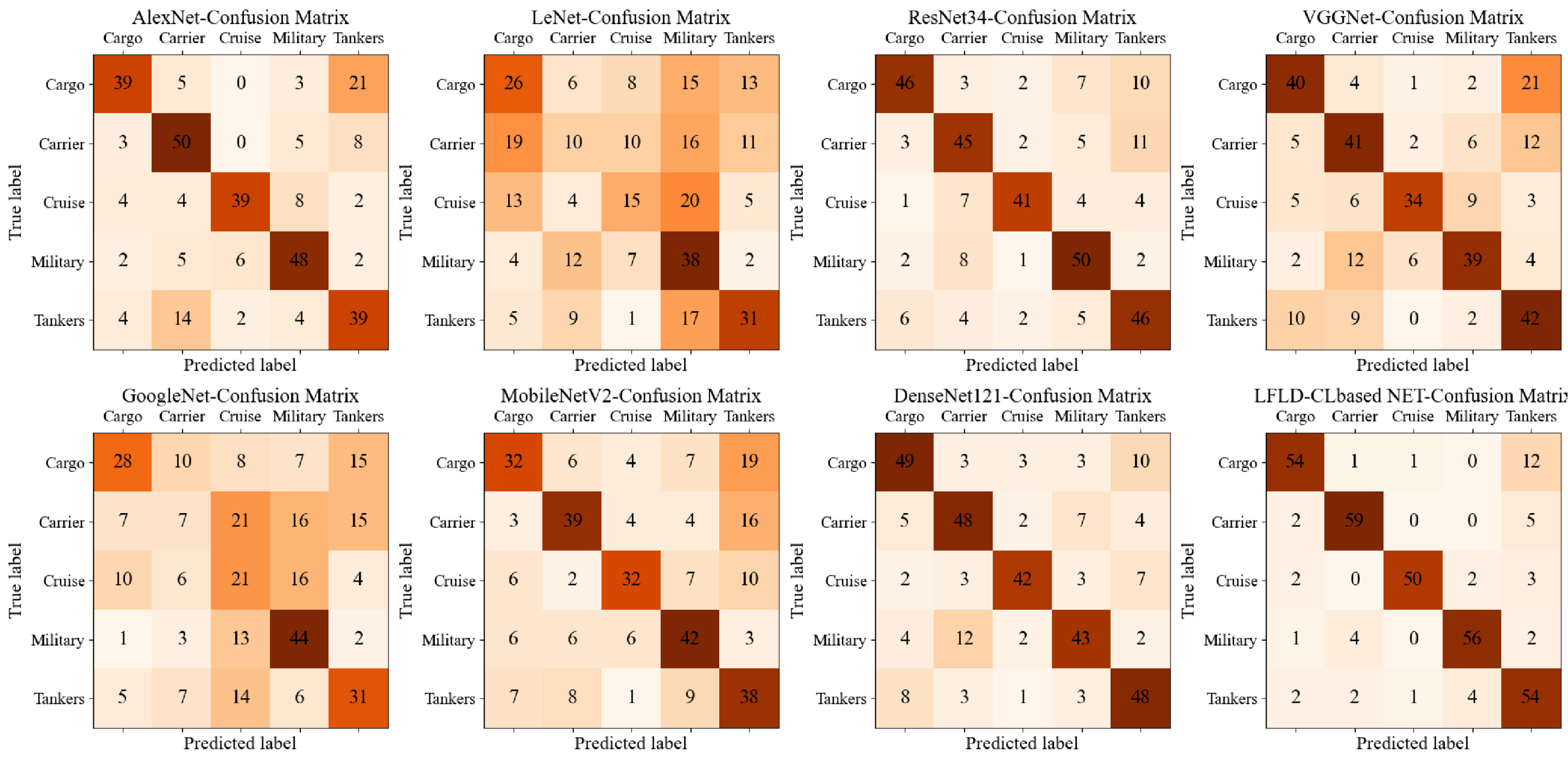

4.6. Error Investigation

We carried out a comprehensive examination of errors in the baseline deep learning models and LFLD-CLbased NET using precision–recall curves and confusion matrices. The experimental results, shown in Figure 7 and Figure 8, reveal that the overall misclassification rate of our model is still significantly lower than that of the baseline models.

The outcomes of the ship type prediction error analysis for different models are presented in Figure 7 and Figure 8. Overall, LFLD-CLbased NET outperformed other deep learning baseline models. However, we identified a tendency of LFLD-CLbased NET to misclassify cargo as tankers. Nonetheless, this tendency was also observed among other models, with most other models exhibiting a misclassification rate approximately two times higher than our model. Furthermore, most deep-learning-based models demonstrated a tendency to misclassify tankers as carriers, whereas LFLD-CLbased NET showed significant improvement in this regard. Additionally, we were pleasantly surprised to find that our model exhibited high accuracy in predicting carriers, with significantly lower misclassification rates compared to other models.

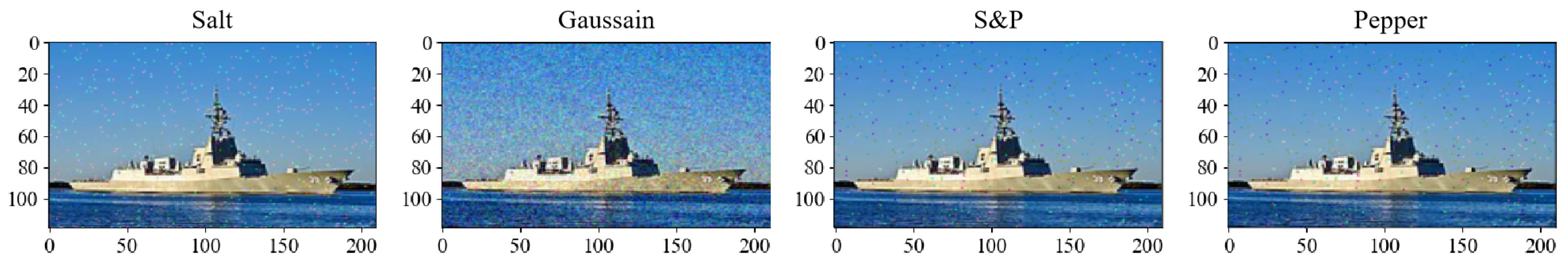

4.7. Exploratory Analysis of the Impact of Noise Types

An extensive exploration was carried out to investigate how different noise types impact ship monitoring performance in real-world scenarios. We tested the effects of four of the most common noise types, namely Gaussian noise, pepper noise, s&p noise, and salt noise, on the model. Figure 9 illustrates sample images of these four noise types.

The ResNet-34 served as the primary test model in this study. To enable comparison, we utilized the curriculum learning mechanism to train the model with course learning taking place every 100 epochs. The course design method remained consistent with previous experiments, except for the substitution of the third and fourth course data with corresponding noise type data. It is worth noting that our model did not adopt the LFLD updating method in this experiment.

In the experiment, the amount values of noise data were set to 0.01 and 0.03 for the third and fourth course, respectively. The outcomes are illustrated in Figure 10. The results demonstrate that salt noise is the most suitable noise type for real-world scenarios, yielding a model accuracy of up to 86.64% when salt noise is used for course learning. This represents an approximate 3% improvement compared to the testing results obtained using Gaussian noise. The second-best noise type is pepper noise, with a model accuracy of 85.22%, slightly lower than that achieved with salt noise.

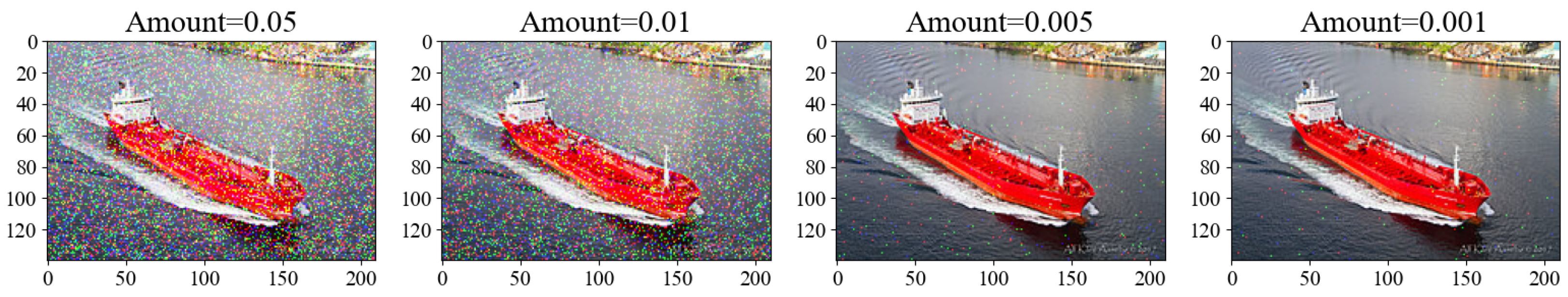

4.8. Analysis of the Impact of Noise Intensity

We conducted a further analysis of the impact of various noise intensities on the model. In the context of data augmentation techniques, noise data commonly refers to the incorporation of a certain degree of random perturbation into the original data. The amount value is a frequently used parameter for noise data, which aims to regulate the intensity and quantity of noise. Specifically, the amount value typically denotes the magnitude of the noise amplitude, which can be leveraged to control the proportion and intensity of noise in the data. For instance, higher amount values produce more pronounced noise perturbations, while lower amount values result in weaker noise perturbations. In the data augmentation process, the noise intensity can be precisely controlled by adjusting the amount value to enhance the effectiveness and stability of data augmentation.

Figure 11 presents the noise data generated by different amount values.

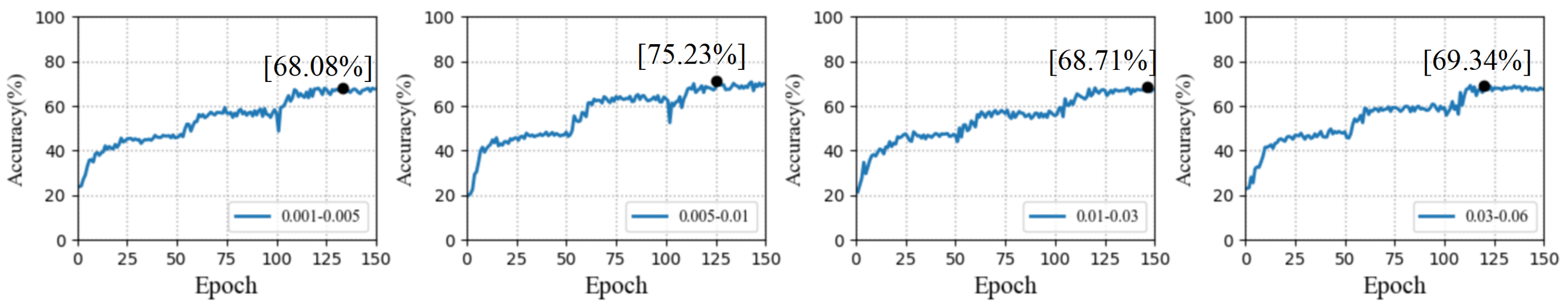

In this study, we continued to use ResNet-34 as the reference model, employed salt noise as the primary noise source, adopted curriculum learning as the fundamental model training approach, and set the duration of each basic course learning stage to 50 epochs. We investigated various combinations of noise intensities, and the experimental outcomes are depicted in Figure 12. Notably, the icons in the figure represent different intensities of noise; for example, the icon for test 1 signifies that the amount value is set to 0.001 for the third course learning stage and 0.005 for the fourth course learning stage.

From Figure 12, it is evident that varying noise intensities also exert a substantial influence on the model’s performance. The combination of amount values 0.005 and 0.01 enables the model to better learn the distribution pattern of the data, achieving an accuracy of 71.23%, which is the highest among all noise combinations. The experimental results also reveal that adding a larger amount of noise information to the model does not necessarily lead to better performance. For instance, test 4, which adopts noise amount values 0.03 and 0.06, can enhance the model’s adaptability to noise and interference to some extent, thereby improving its robustness. However, this combination does not conform to the noise distribution in real-world scenarios. Additionally, we observed that weak noise provides limited assistance to the model, as demonstrated by the results of test 1.

4.9. Analysis of the Effectiveness of Learning Rate Decay Strategy

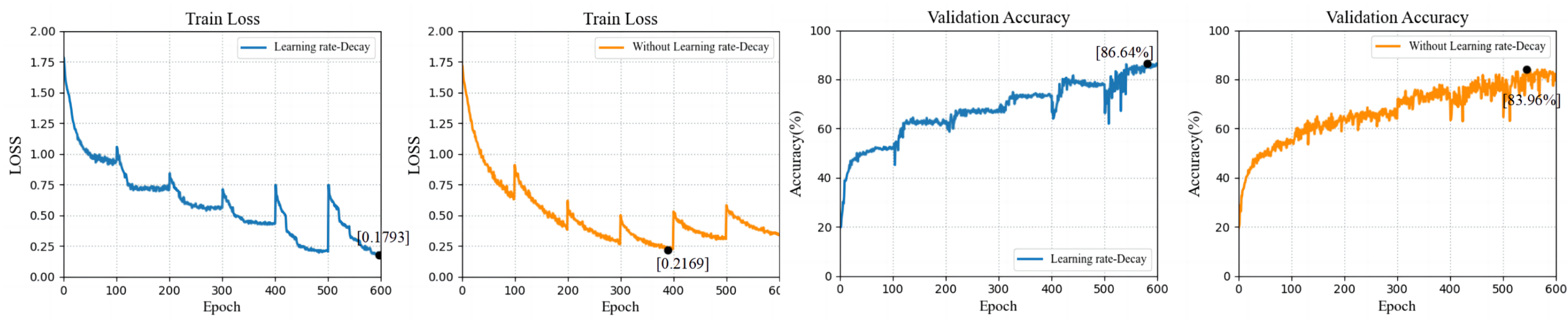

To further investigate the effectiveness of the learning rate decay strategy, we conducted an ablation study to confirm its role in the ship detection task. We devised two models, LD-CLbased NET and CLbased NET, using our original model as the backbone. LD-CLbased NET employed the conventional constant decay strategy for learning rate decay, while CLbased NET did not incorporate any learning rate decay strategy. The experimental outcomes are shown in Figure 13.

The experimental results indicate that the model’s accuracy is higher when the learning rate decay strategy is employed, reaching 86.3%, which is 3% higher than the model without a learning rate decay strategy. This implies that the learning rate decay strategy can help the model escape local optima and enhance its performance in ship detection tasks. Moreover, the training loss plot shows that the learning rate decay strategy promotes rapid progress toward the optimal solution in the early stages of training while preventing overfitting in the later stages, resulting in improved training efficiency. This outcome is also evident from the valid acc plot: when the learning rate decay strategy is employed, the model’s validation accuracy increases rapidly during the first half of each course learning stage and then remains stable in the latter half.

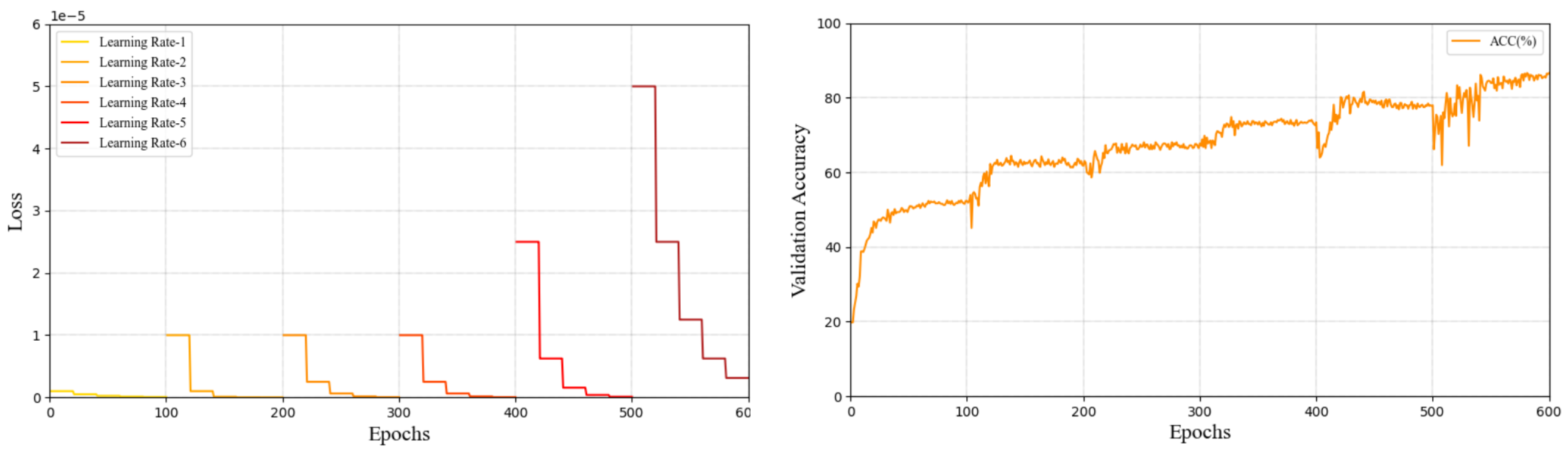

4.10. Exploratory Analysis of Learning Rate Initialization for LFLD Mechanism

In Section 4.6, we presented evidence supporting the efficacy of the learning rate decay strategy in the ship detection task. In this section, we conduct an exploratory analysis of the performance of the LFLD mechanism. Specifically, we investigate the influence of various combinations of initial learning rates on model performance across six course learning stages for the LFLD mechanism. Notably, the learning rate decay values applied in this section are set uniformly to

, with each course unit comprising 50 epochs. The initial learning rate settings for every course stage are tabulated in Table 4, and the experimental outcomes are illustrated in Figure 14.

The results reveal that the choice of initial learning rate affects the model’s learning process. When the initial learning rate is set to a large value, the model’s performance oscillates significantly during the early stages of course learning and may even degrade briefly, because a large learning rate produces large weight adjustments. However, this decline is transient, and as learning progresses, a larger learning rate helps the model fit the data rapidly. The most representative example of this phenomenon is the LR4 learning rate scheme, whose learning rate values are large relative to the other schemes: the model’s performance oscillates during learning, yet it also increases rapidly through these oscillations.

After continuous adjustments, the optimal learning rate value and the corresponding learning process for LFLD-CLbased NET are shown in Figure 15. It can be observed that the learning rate exhibits a leapfrog-like increase across different course learning stages, while within each course learning stage, the learning rate decays continuously with a fixed step size.
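The leapfrog behaviour described above can be sketched as a simple schedule function. This is a minimal illustration under stated assumptions, not the exact LFLD implementation: the function name `lfld_learning_rate`, the per-stage initial learning rates, and the `decay_step` value are all hypothetical placeholders (the actual settings are those in Table 4 and Figure 15), and the decay is assumed to be a fixed subtraction per epoch.

```python
def lfld_learning_rate(epoch, stage_initial_lrs, epochs_per_stage=50,
                       decay_step=1e-4, min_lr=0.0):
    """Return the learning rate for a 0-based global `epoch`.

    At the first epoch of each course stage the rate jumps to that
    stage's initial value (the "leap"); within the stage it decreases
    by a fixed `decay_step` per epoch. Epochs beyond the last stage
    keep decaying from the last stage's initial value, floored at
    `min_lr`.
    """
    last_stage = len(stage_initial_lrs) - 1
    stage = min(epoch // epochs_per_stage, last_stage)
    epoch_in_stage = epoch - stage * epochs_per_stage
    lr = stage_initial_lrs[stage] - decay_step * epoch_in_stage
    return max(lr, min_lr)


if __name__ == "__main__":
    # Hypothetical initial learning rates for six course stages.
    initial_lrs = [0.010, 0.008, 0.008, 0.006, 0.006, 0.004]
    schedule = [lfld_learning_rate(e, initial_lrs) for e in range(300)]
    # Print the rate just before and just after each stage boundary.
    for boundary in range(50, 300, 50):
        print(boundary, schedule[boundary - 1], schedule[boundary])
```

In a training loop, the returned value would be assigned to the optimizer’s learning rate at the start of each epoch, so that the leap coincides with the switch to the next course stage.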

5. Conclusions

Existing deep-learning-based ship detection approaches typically present the training samples in a random or unstructured manner, resulting in models that are less stable, prone to overfitting, difficult to optimize, and poor in performance. In this article, we introduce a novel model, LFLD-CLbased NET, which incorporates the concept of curriculum learning. Specifically, the model leverages a difficulty generator and scheduler to present training data in a structured order of gradually increasing complexity, tailored to real oceanic scenarios. Experimental findings demonstrate that the curriculum learning strategy leads to a significant improvement in the model’s training performance and detection accuracy, increasing its robustness and generalization capability so that it can effectively handle noise and variations in the data. Additionally, to further enhance training efficiency, we introduce the LFLD learning rate decay strategy, which dynamically adjusts the model’s backpropagation update rate, accelerates convergence, and improves generalization ability and training efficiency by enabling the model to overcome local optima. Both curriculum learning and LFLD are therefore valuable for deep-learning-based ship detection models. In future research, we will integrate other information generation and scheduling techniques to obtain a more comprehensive training dataset and further enhance the robustness and generalization capability of the model. Additionally, semisupervised learning or PU-learning techniques will be incorporated into our model to address the problem of ship images that lack ground truth.