Autonomous Obstacle Avoidance in Crowded Ocean Environment Based on COLREGs and POND

Abstract

1. Introduction

2. Related Work

3. Collision Avoidance Path Planning in Crowded Ocean Environment

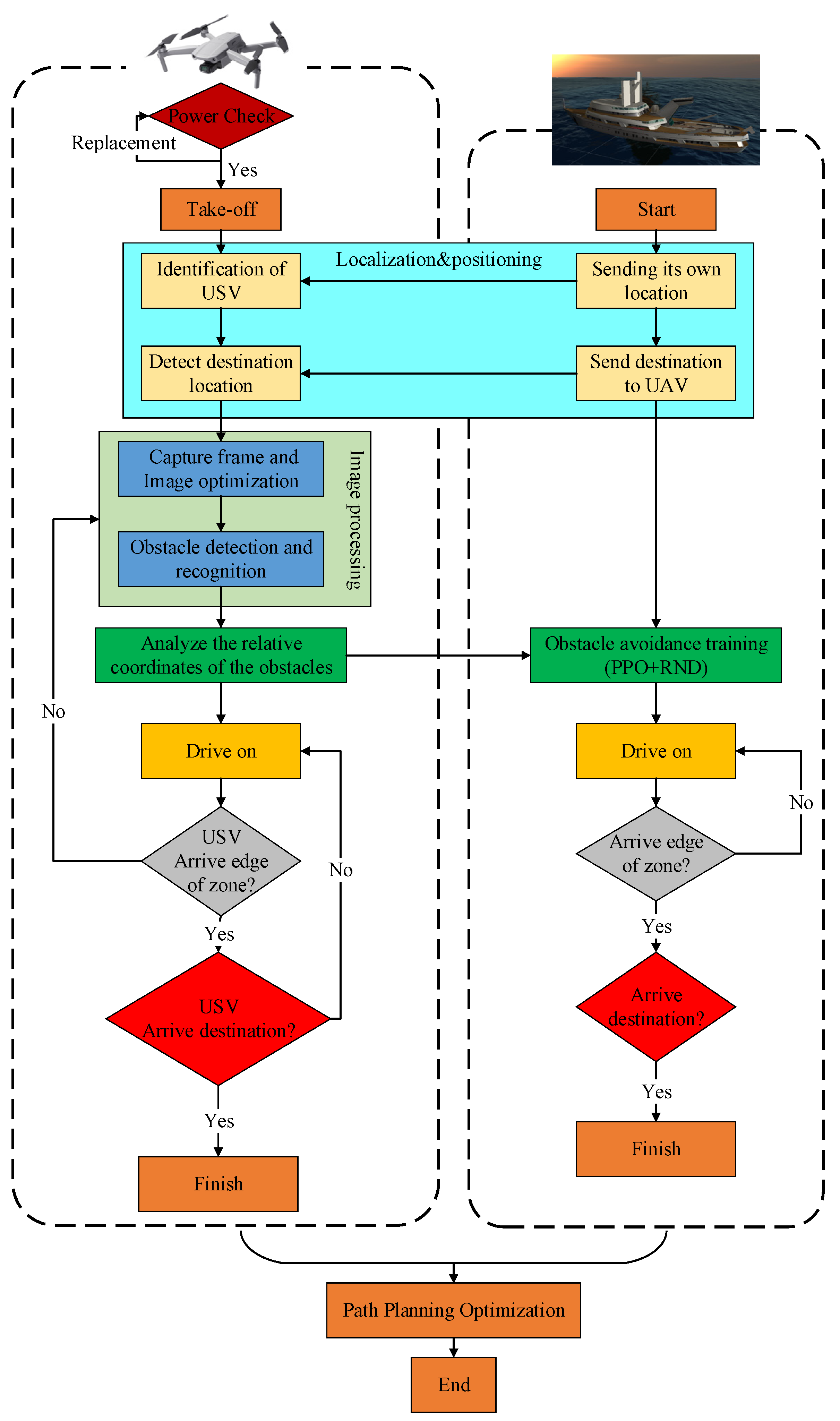

3.1. Framework for a Ship Path Planning System

3.2. International Regulations for Preventing Collisions at Sea

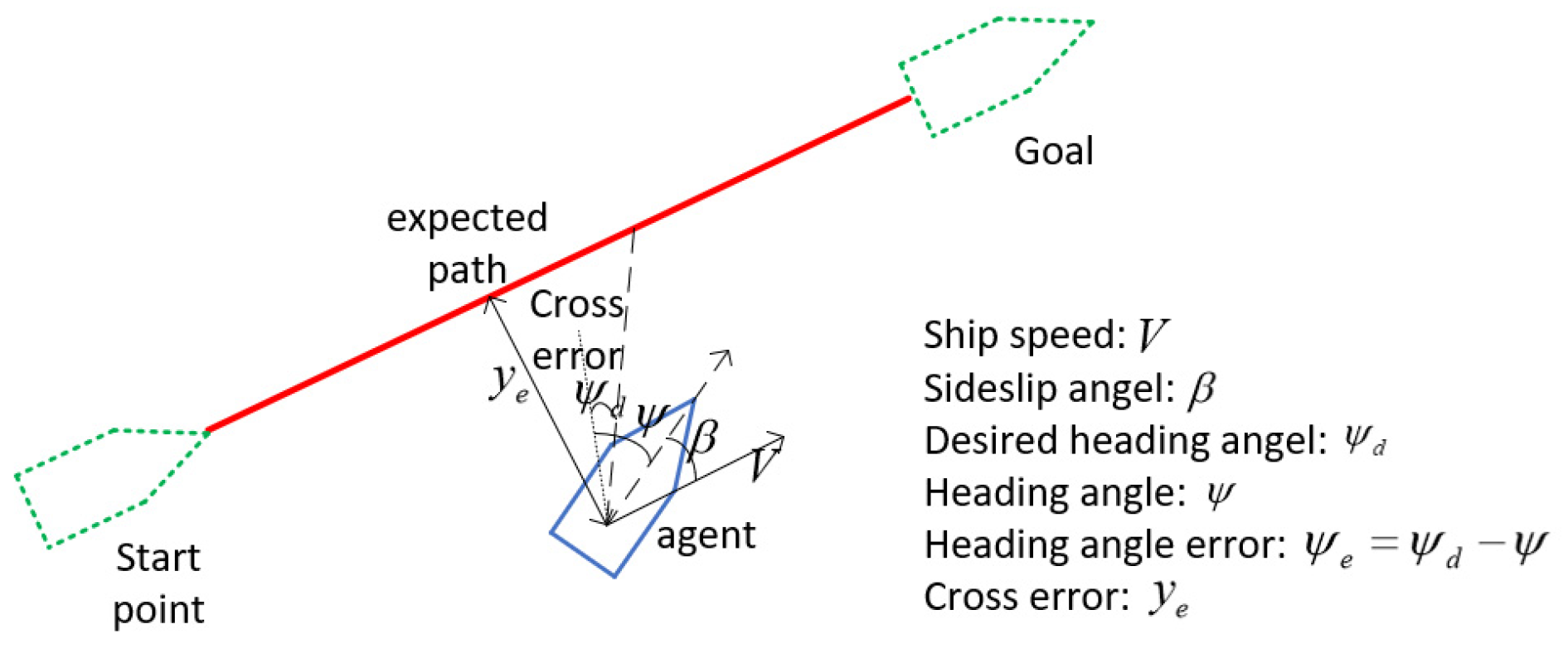

3.3. Problem Definition for Autonomous Vessel Path Planning

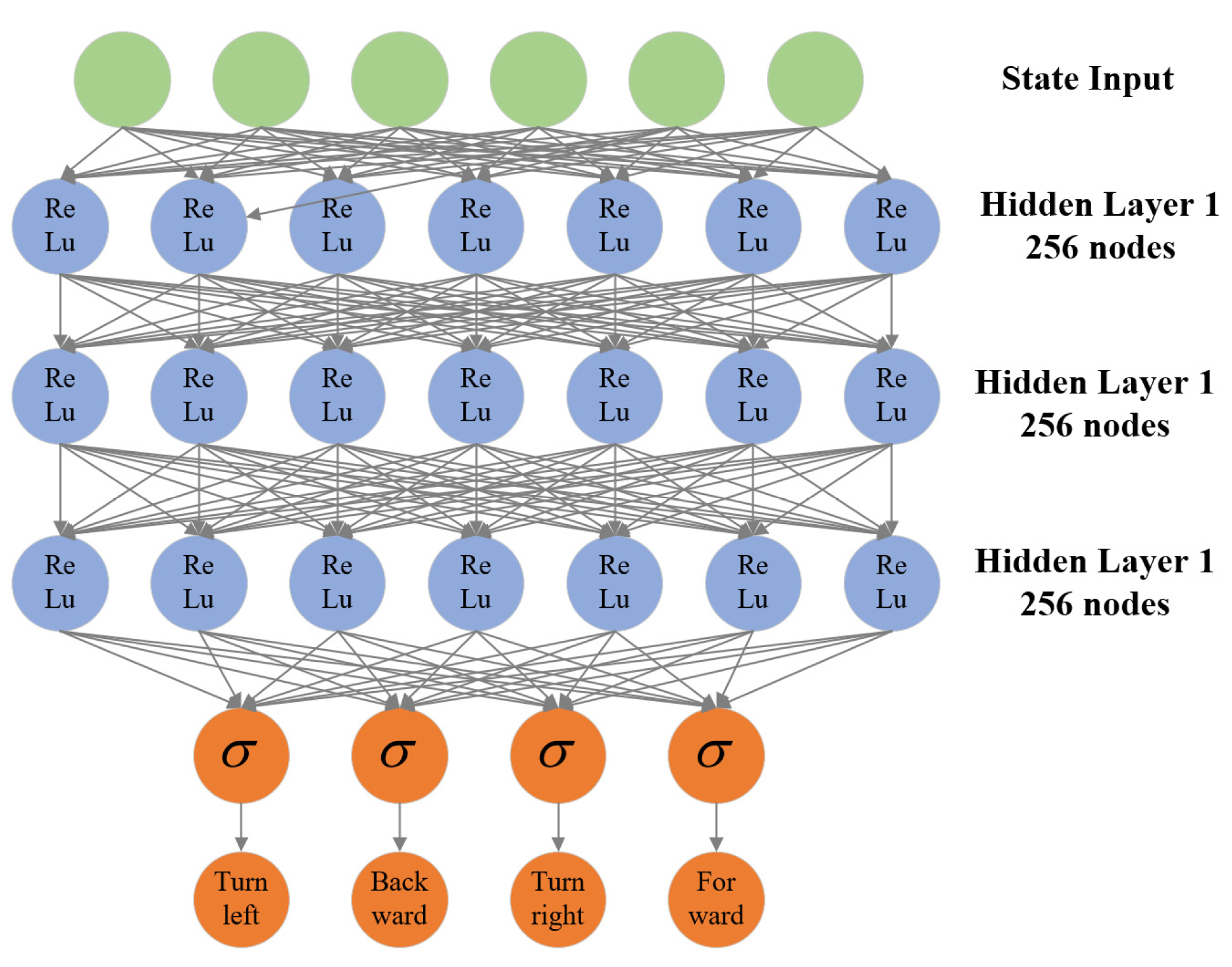

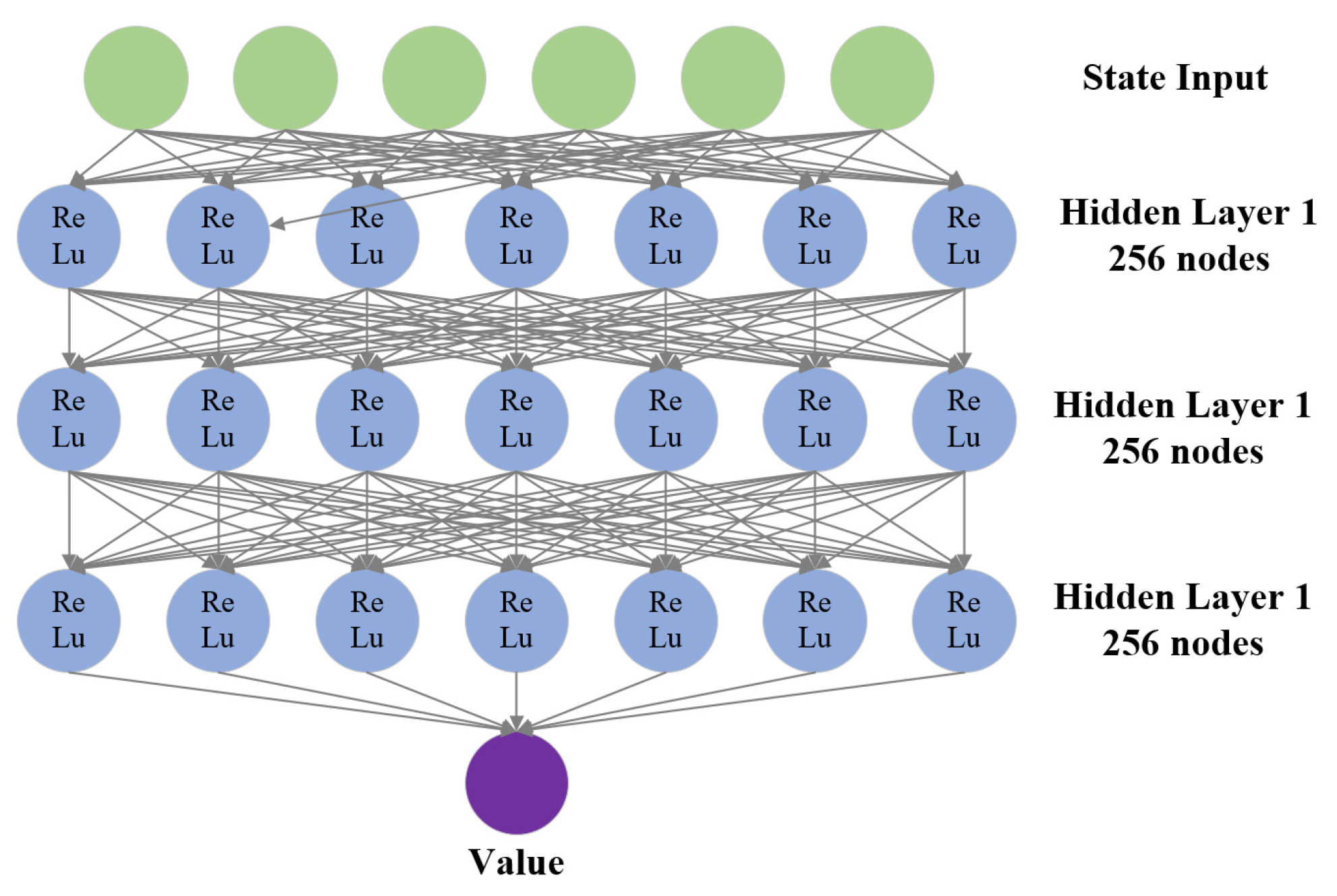

4. The Model of the Deep Reinforcement Learning Network

4.1. Proximal Policy Optimization Algorithm

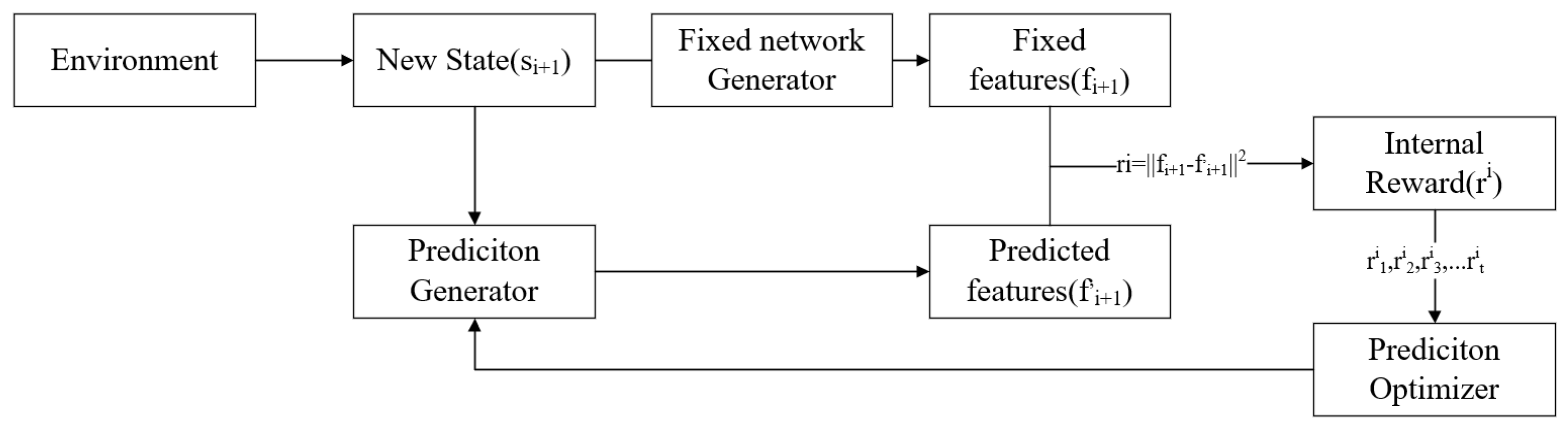

4.2. Random Network Distillation (RND)

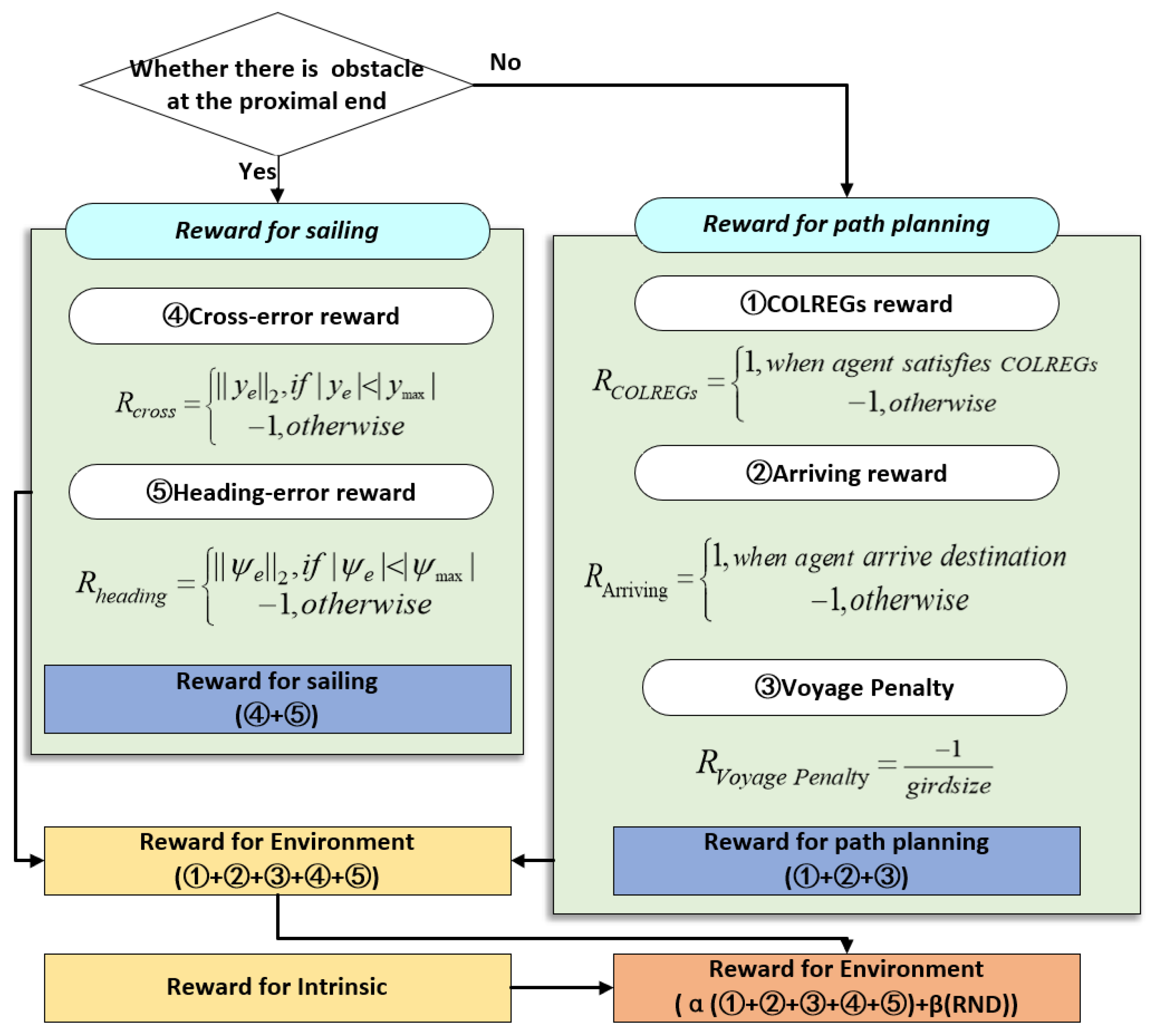

4.3. POND Fusion Algorithm

1. Voyage penalty

2. Arriving reward

3. COLREGs reward

4. Heading-error and cross-error reward
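
The four reward terms listed above could be combined along the following lines. This is a minimal sketch, not the paper's reward function: the `StepInfo` fields, the `step_reward` name, every weight, and the choice to treat the heading-error and cross-error term as a penalty that grows with the error are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class StepInfo:
    """Per-step quantities the reward needs (all field names are hypothetical)."""
    reached_goal: bool       # True once the vessel arrives at the target
    in_encounter: bool       # True if another vessel triggers a COLREGs rule
    colregs_compliant: bool  # True if the manoeuvre obeys the applicable rule
    heading_error: float     # radians, between heading and course to target
    cross_error: float       # metres, lateral offset from the planned track


def step_reward(info: StepInfo,
                w_voyage: float = 0.01,
                w_arrive: float = 10.0,
                w_colregs: float = 1.0,
                w_heading: float = 0.1,
                w_cross: float = 0.01) -> float:
    """Sum the four reward terms; every weight is an illustrative placeholder."""
    reward = -w_voyage                   # voyage penalty, paid on every step
    if info.reached_goal:
        reward += w_arrive               # arriving reward
    if info.in_encounter:                # COLREGs reward or penalty
        reward += w_colregs if info.colregs_compliant else -w_colregs
    # Heading-error and cross-error term: larger errors cost more (assumption).
    reward -= w_heading * abs(info.heading_error)
    reward -= w_cross * abs(info.cross_error)
    return reward
```

Keeping each term behind its own weight makes it easy to check how much each one contributes to the behavior the agent learns.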

4.4. Implementation of POND Algorithm

| Algorithm 1 POND for navigation of USVs |
|---|
| number of pre-training steps |
| number of optimization steps |
| for to do |
| sample |
| sample |
| update observation normalization parameters |
| end for |
| for to do |
| sample |
| sample |
| calculate intrinsic reward |
| for do |
| calculate environment reward |
| calculate combined advantage estimates |
| end for |
| update reward normalization parameters |
| update observation normalization parameters |
| optimize objective function |
| end for |
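
The "calculate combined advantage estimates" step of Algorithm 1 can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: it computes a generalized advantage estimate (GAE) separately for the environment reward and the intrinsic reward and adds the two. The function names, the extrinsic discount `gamma_ext=0.99`, and the choice to apply the intrinsic weight to the advantage are assumptions; `lam=0.95`, `gamma_int=0.9`, and `strength=1.0` mirror the values in the parameter tables.

```python
import numpy as np


def gae(rewards, values, gamma, lam):
    """Generalized advantage estimation over one rollout.

    `values` must hold one more entry than `rewards`; the extra entry is the
    bootstrap value of the state reached after the last step.
    """
    rewards = np.asarray(rewards, dtype=float)
    values = np.asarray(values, dtype=float)
    advantages = np.zeros_like(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]  # TD residual
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


def combined_advantage(r_ext, v_ext, r_int, v_int,
                       gamma_ext=0.99, gamma_int=0.9, lam=0.95, strength=1.0):
    """Add the extrinsic advantage and the weighted intrinsic advantage."""
    adv_ext = gae(r_ext, v_ext, gamma_ext, lam)
    adv_int = gae(r_int, v_int, gamma_int, lam)
    return adv_ext + strength * adv_int
```

Using two value streams lets the two rewards have different discount factors, which is why each call to `gae` receives its own `gamma`.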

5. Experiment and Results

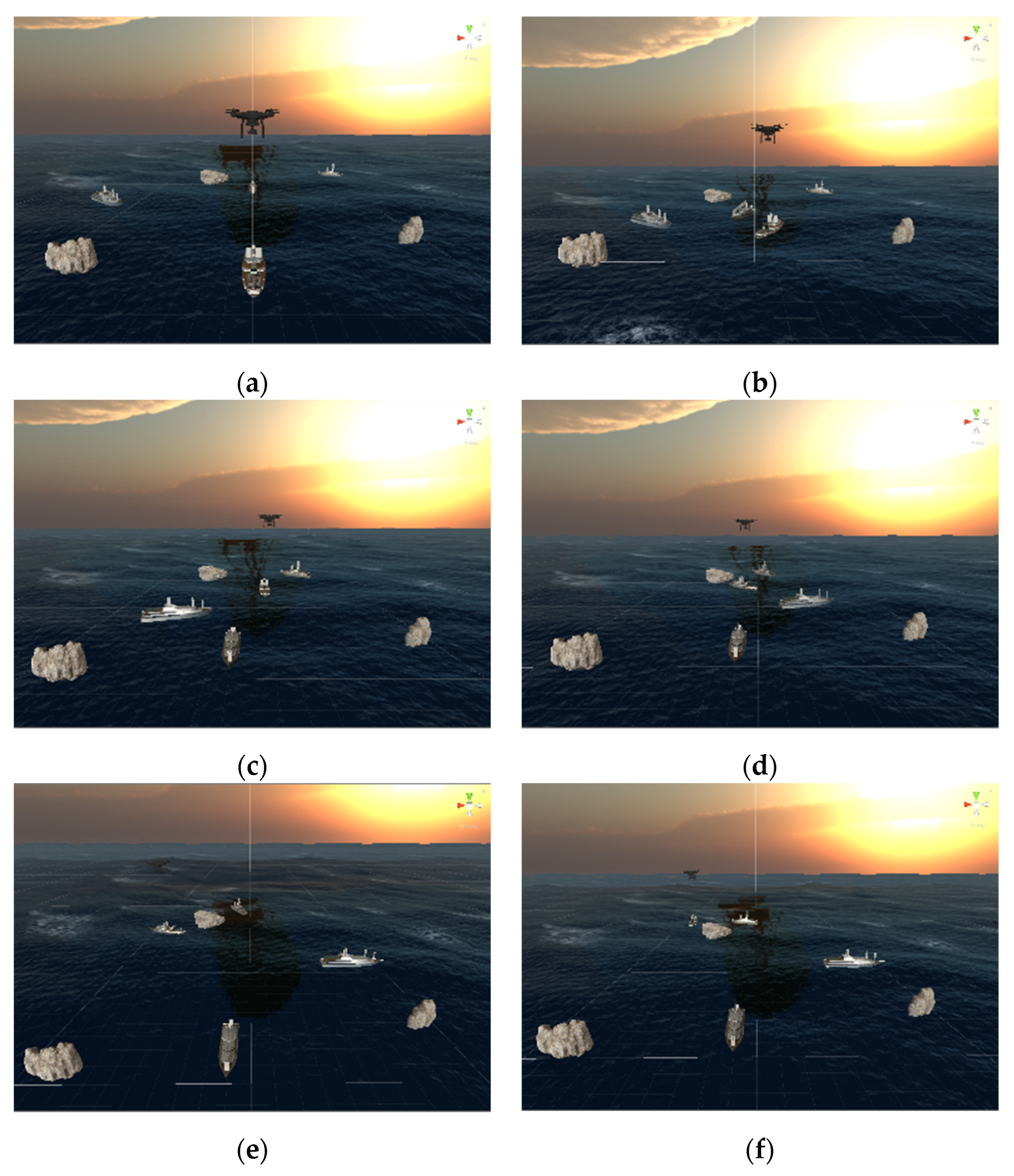

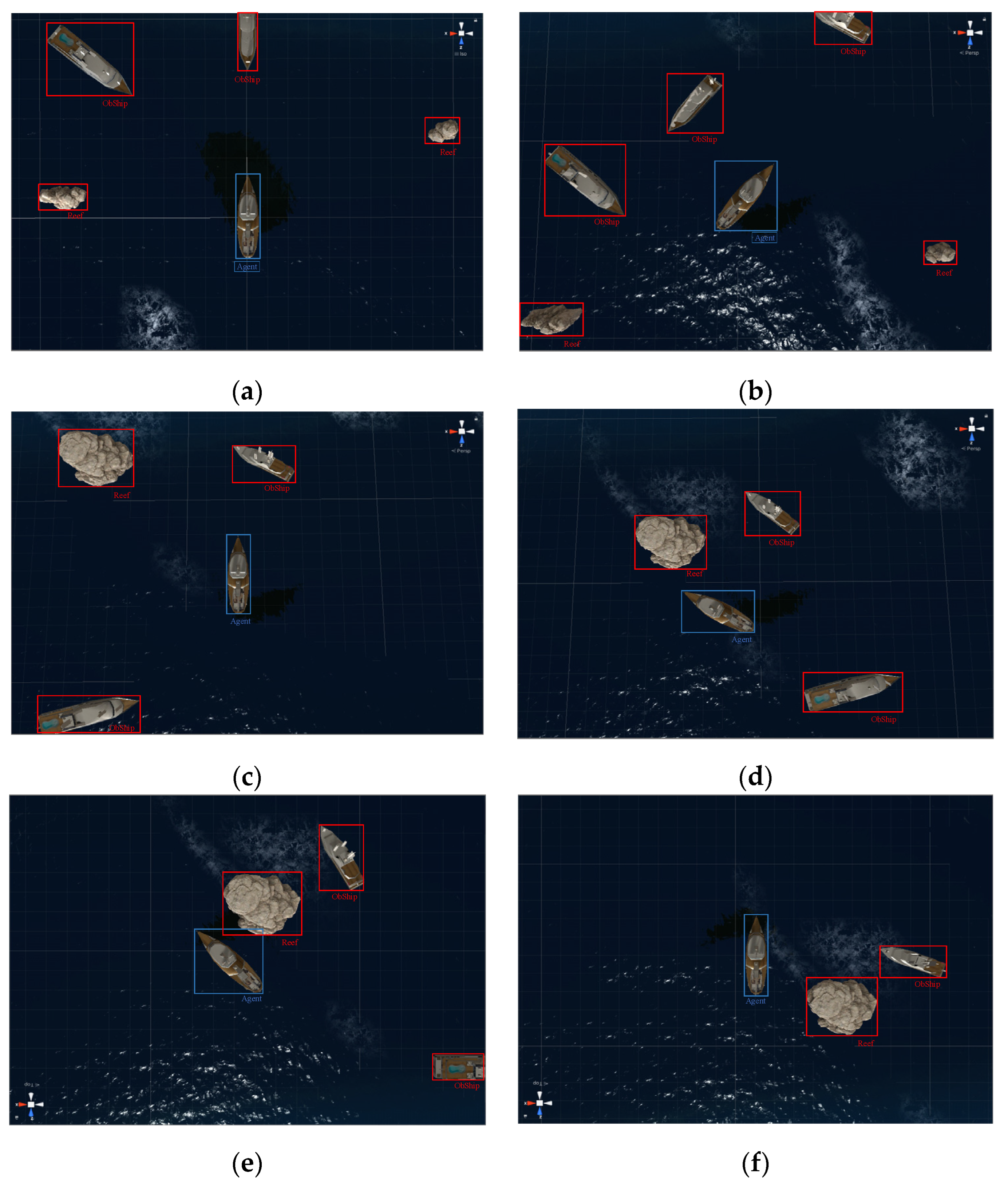

5.1. Simulation Platform Settings

- The autonomous ship module sets the ship's physical parameters, such as its principal dimensions, and its motion parameters, and handles behavior selection and decision-making;

- The UAV module identifies obstacles and determines their geographic locations and their distances from the autonomous ship;

- The marine map module sets the size of the area in which the autonomous ship operates, as well as the grid size and the number of grid cells;

- The obstacle module sets the basic physical characteristics, positions, and number of obstacles, as well as the motion of movable obstacles.

5.2. Algorithm Model and Parameter Settings

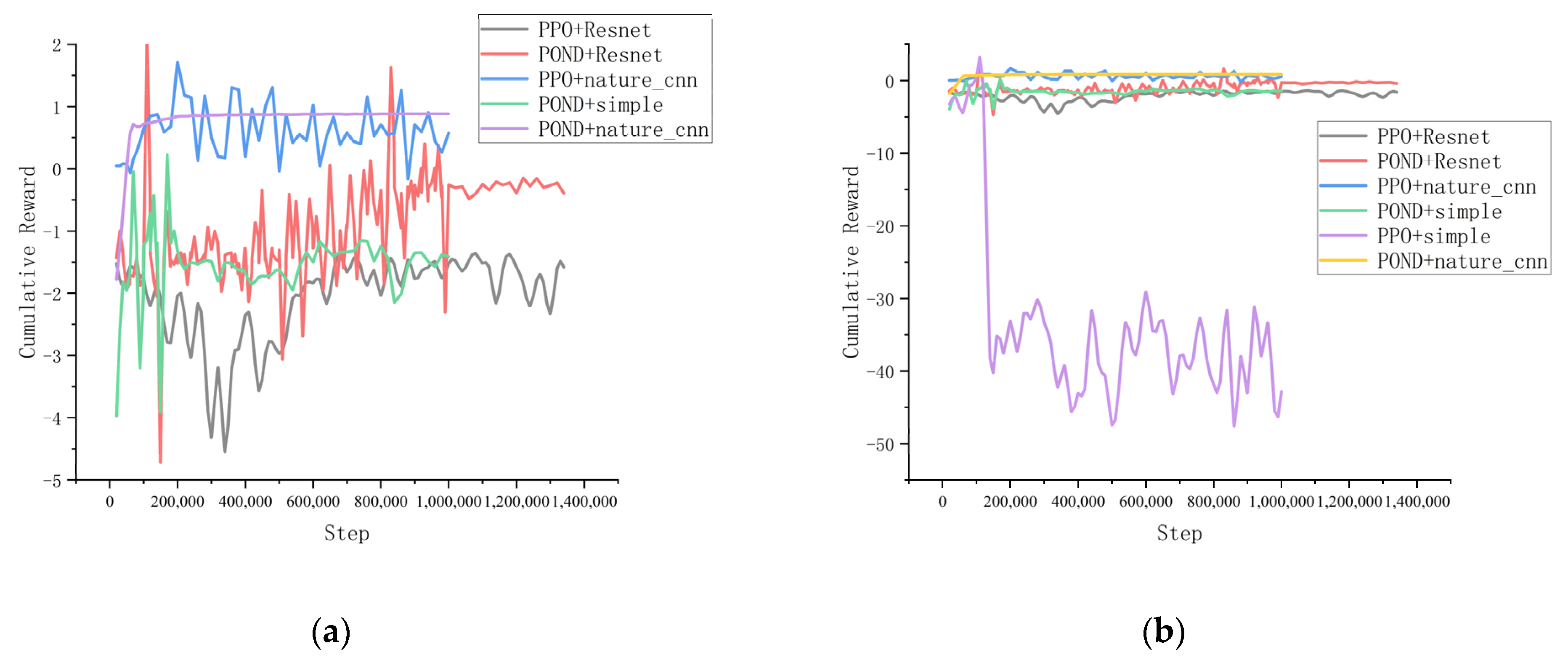

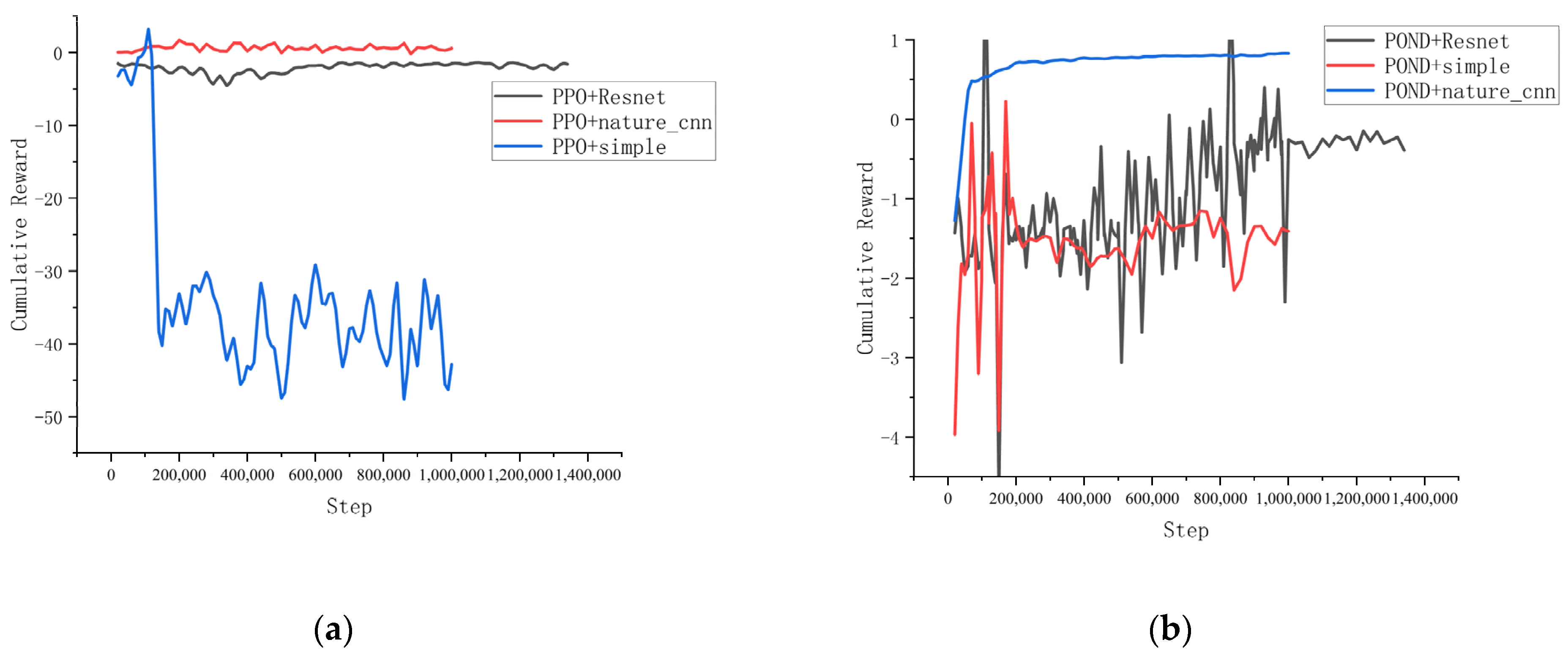

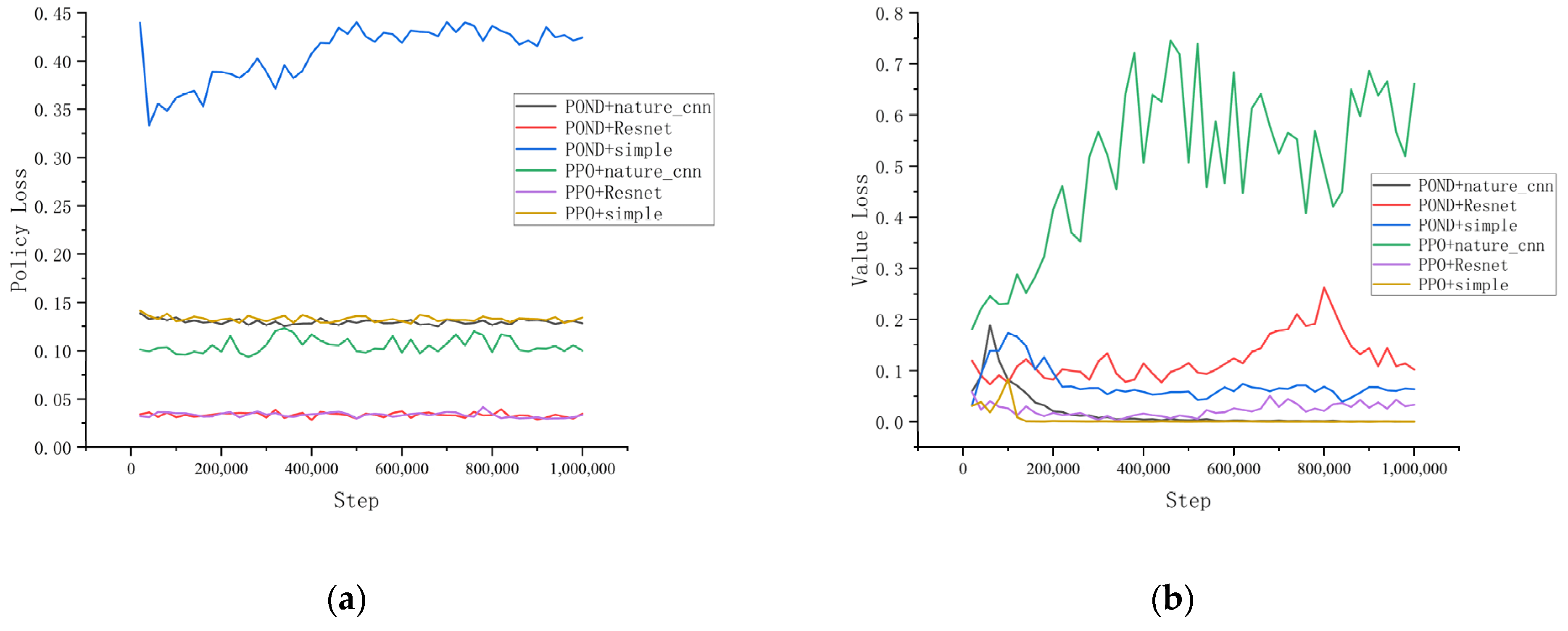

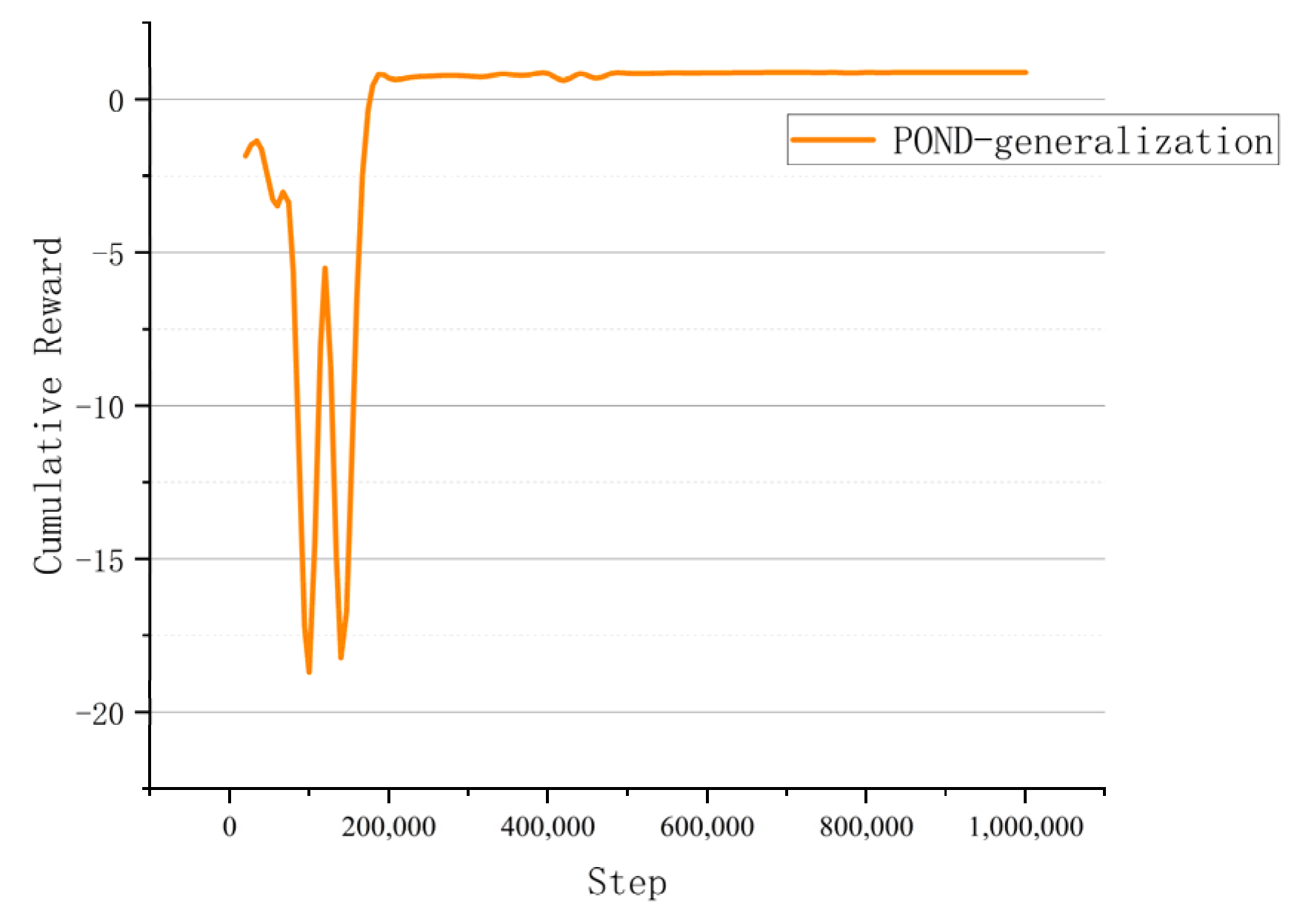

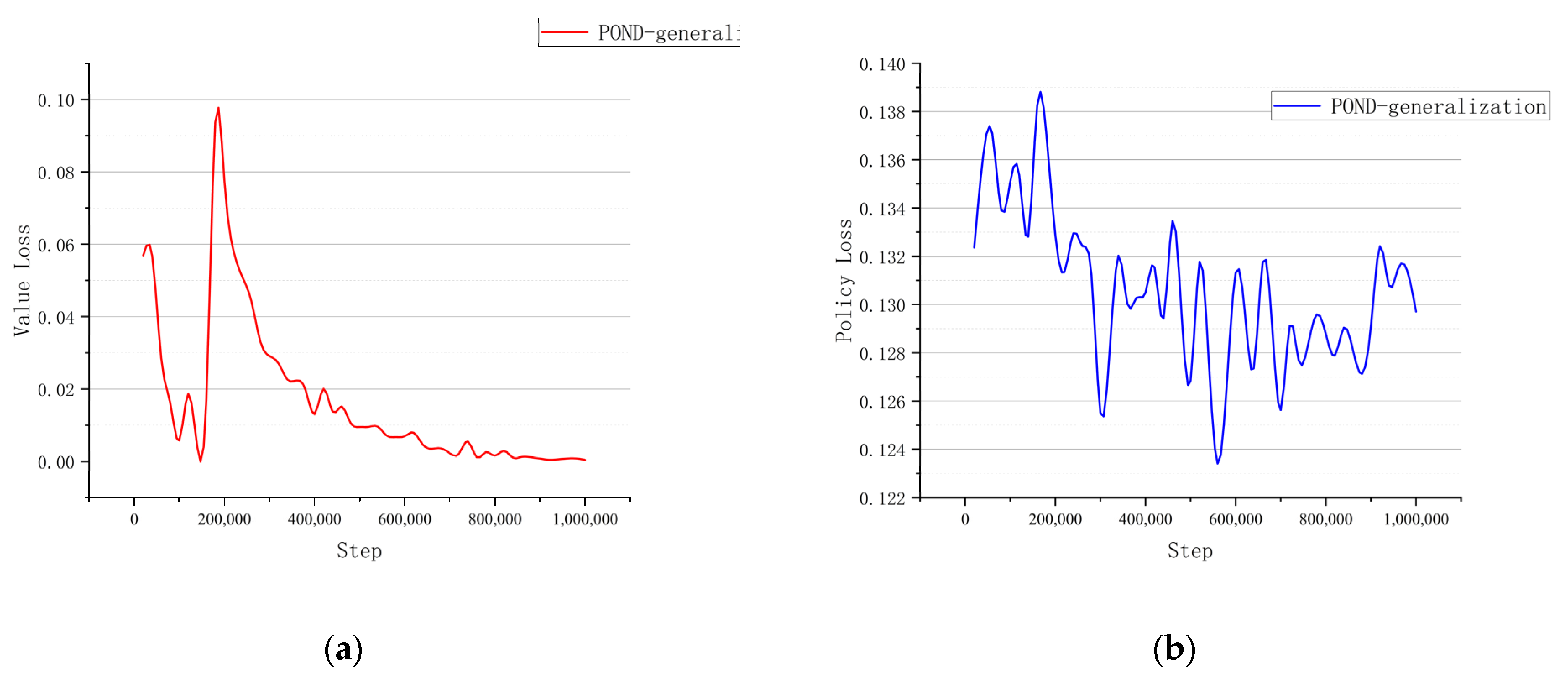

5.3. Experimental Results of Reinforcement Methods in Crowded Marine Environments

6. Conclusions and Discussion

6.1. Conclusions

- Existing research on autonomous collision avoidance relies mainly on measurements from sensors onboard the vessel, which capture only limited information about the surrounding ocean. This study used an integrated UAV-USV decision system model in which UAV observations are used to build the environmental model, maximizing the data collected around the vessel.

- Existing algorithms generally build the COLREGs rules into the reward function but give little consideration to other reward factors, which limits the reduction in energy consumption. This study introduced a reward function that combines environmental data with an intrinsic reward mechanism. It encourages exploration during training and rewards optimal navigational actions, which improves collision avoidance and is consistent with sustainable navigation practices.

- Unlike most collision avoidance models, which consider only static obstacles, this study considered both dynamic and static obstacles and generated them randomly to improve model generalization.

6.2. Discussion

- The collision avoidance model has so far been evaluated only in simulated environments. The next phase will be sea trials, in which the model is deployed aboard research support vessels for empirical validation.

- Future work should incorporate unexpected factors into the collision avoidance model to improve navigational safety, particularly erratic changes in position by human-navigated vessels in real maritime environments.

- The current model is limited to path planning. Maritime navigation, however, also requires attention to the vessel’s state, particularly for crewed ships. Future work should add the vessel’s hydrodynamic performance, such as wave resistance and maneuverability, to the environmental data. It should also explore how to account for the vessel’s orientation and the hydrodynamic forces acting on it, and how to adjust the vessel’s orientation, speed, and trajectory autonomously according to current conditions.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Millefiori, L.M.; Braca, P.; Zissis, D.; Spiliopoulos, G.; Marano, S.; Willett, P.K.; Carniel, S. COVID-19 impact on global maritime mobility. Sci. Rep. 2021, 11, 18.

2. Wang, X.; Liu, Z.; Cai, Y. The ship maneuverability based collision avoidance dynamic support system in close-quarters situation. Ocean Eng. 2017, 146, 486–497.

3. EMSA. Annual Overview of Marine Casualties and Incidents; EMSA: Lisbon, Portugal, 2021; pp. 4–5.

4. Song, R.; Liu, Y.; Bucknall, R. Smoothed A* algorithm for practical unmanned surface vehicle path planning. Appl. Ocean Res. 2019, 83, 9–20.

5. Zhang, Z.; Wu, D.; Gu, J.; Li, F. A Path-Planning strategy for unmanned surface vehicles based on an adaptive hybrid dynamic stepsize and target attractive force-RRT algorithm. J. Mar. Sci. Eng. 2019, 7, 132.

6. Wang, D.; Wang, P.; Zhang, X.; Guo, X.; Shu, Y.; Tian, X. An obstacle avoidance strategy for the wave glider based on the improved artificial potential field and collision prediction model. Ocean Eng. 2020, 206, 107356.

7. Ding, F.; Zhang, Z.; Fu, M.; Wang, Y.; Wang, C. Energy-efficient path planning and control approach of USV based on particle swarm optimization. In Proceedings of the OCEANS 2018 MTS/IEEE Charleston, Charleston, SC, USA, 22–25 October 2018.

8. Liu, X.; Li, Y.; Zhang, J.; Zheng, J.; Yang, C. Self-adaptive dynamic obstacle avoidance and path planning for USV under complex maritime environment. IEEE Access 2019, 7, 114945–114954.

9. Kozynchenko, A.I.; Kozynchenko, S.A. Applying the dynamic predictive guidance to ship collision avoidance: Crossing case study simulation. Ocean Eng. 2018, 164, 640–649.

10. Chae, H.; Kang, C.M.; Kim, B.; Kim, J.; Chung, C.C.; Choi, J.W. Autonomous braking system via deep reinforcement learning. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems, Yokohama, Japan, 16–19 October 2017.

11. Kahn, G.; Villaflor, A.; Pong, V.; Abbeel, P.; Levine, S. Uncertainty-aware reinforcement learning for collision avoidance. arXiv 2017, arXiv:1702.01182.

12. Everett, M.; Chen, Y.F.; How, J.P. Motion planning among dynamic, decision-making agents with deep reinforcement learning. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018.

13. Duguleana, M.; Mogan, G. Neural networks based reinforcement learning for mobile robots obstacle avoidance. Expert Syst. Appl. 2016, 62, 104–115.

14. Chen, C.; Chen, X.-Q.; Ma, F.; Zeng, X.-J.; Wang, J. A knowledge-free path planning approach for smart ships based on reinforcement learning. Ocean Eng. 2019, 189, 106299.

15. Tai, L.; Liu, M. Towards cognitive exploration through deep reinforcement learning for mobile robots. arXiv 2016, arXiv:1610.01733.

16. Zhang, R.; Tang, P.; Su, Y.; Li, X.; Yang, G.; Shi, C. An adaptive obstacle avoidance algorithm for unmanned surface vehicle in complicated marine environments. IEEE/CAA J. Autom. Sin. 2014, 1, 385–396.

17. Woo, J.; Kim, N. Collision avoidance for an unmanned surface vehicle using deep reinforcement learning. Ocean Eng. 2020, 199, 107001.

18. Du, Y.; Zhang, X.; Cao, Z.; Wang, S.; Liang, J.; Zhang, F.; Tang, J. An optimized path planning method for coastal ships based on improved DDPG and DP. J. Adv. Transp. 2021, 2021, 7765130.

19. Cao, X.; Sun, C.; Yan, M. Target search control of AUV in underwater environment with deep reinforcement learning. IEEE Access 2019, 7, 96549–96559.

20. He, Y.; Jin, Y.; Huang, L.; Xiong, Y.; Chen, P.; Mou, J. Quantitative analysis of COLREG rules and seamanship for autonomous collision avoidance at open sea. Ocean Eng. 2017, 140, 281–291.

21. Zhao, Y.; Li, W.; Shi, P. A real-time collision avoidance learning system for Unmanned Surface Vessels. Neurocomputing 2016, 182, 255–266.

22. Zhang, J.; Zhang, D.; Yan, X.; Haugen, S.; Soares, C.G. A distributed anti-collision decision support formulation in multi-ship encounter situations under COLREGs. Ocean Eng. 2015, 105, 336–348.

23. Zhao, L. Simulation Method to Support Autonomous Navigation and Installation Operation of an Offshore Support Vessel. Doctoral Dissertation, Seoul National University, Seoul, Republic of Korea, 2019.

24. Zhao, L.; Roh, M.-I. COLREGs-compliant multiship collision avoidance based on deep reinforcement learning. Ocean Eng. 2019, 191, 106436.

25. Wang, W.; Huang, L.; Liu, K.; Wu, X.; Wang, J. A COLREGs-Compliant Collision Avoidance Decision Approach Based on Deep Reinforcement Learning. J. Mar. Sci. Eng. 2022, 10, 944.

26. Zhai, P.; Zhang, Y.; Shaobo, W. Intelligent Ship Collision Avoidance Algorithm Based on DDQN with Prioritized Experience Replay under COLREGs. J. Mar. Sci. Eng. 2022, 10, 585.

27. Vagale, A.; Oucheikh, R.; Bye, R.T.; Osen, O.L.; Fossen, T.I. Path planning and collision avoidance for autonomous surface vehicles I: A review. J. Mar. Sci. Technol. 2021, 26, 1292–1306.

28. Papadimitriou, C.H.; Tsitsiklis, J.N. The complexity of Markov decision processes. Math. Oper. Res. 1987, 12, 441–450.

29. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

30. Burda, Y.; Edwards, H.; Storkey, A.; Klimov, O. Exploration by random network distillation. arXiv 2018, arXiv:1810.12894.

31. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

32. Espeholt, L.; Soyer, H.; Munos, R.; Simonyan, K.; Mnih, V.; Ward, T.; Kavukcuoglu, K. IMPALA: Scalable distributed deep-RL with importance weighted actor-learner architectures. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.

33. Juliani, A.; Berges, V.P.; Teng, E.; Cohen, A.; Harper, J.; Elion, C.; Lange, D. Unity: A general platform for intelligent agents. arXiv 2018, arXiv:1809.02627.

34. Keras-rl. Available online: https://github.com/keras-rl/keras-rl (accessed on 6 June 2021).

35. Schulman, J.; Moritz, P.; Levine, S.; Jordan, M.; Abbeel, P. High-dimensional continuous control using generalized advantage estimation. arXiv 2015, arXiv:1506.02438.

| Parameter | Content | Value |
|---|---|---|
| Trainer_type | Type of RL trainer | PPO |
| Summary_freq | Number of experiences collected between training summaries | 20,000 |
| Time_horizon | Number of steps collected per agent before they are added to the experience buffer | 5 |
| Max_steps | Total number of training steps | 1,000,000 |
| Learning_rate | Initial learning rate for gradient descent | 3.0 × 10⁻⁴ |
| Learning_rate_schedule | How the learning rate changes over training | Linear |
| Batch_size | Number of experiences used in each gradient descent iteration | 32 |
| Buffer_size | Number of experiences collected before each model update | 256 |
| Hidden_units | Number of units in each hidden layer of the POND network | 256 |
| Num_layers | Number of hidden layers in the POND network | 256 |
| Normalize | Normalization of the environment vector input | 3 |
| Vis_encode_type | Encoder type for vision sensor data | Simple/Resnet/Cnn |
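
As an illustration of the "Linear" learning-rate schedule in the table above, the sketch below decays a value from its initial setting to a floor over `Max_steps`. The function name `linear_schedule` and the `floor` argument are assumptions; the trainer used in the paper may apply a different floor or rounding.

```python
def linear_schedule(initial: float, step: int, max_steps: int,
                    floor: float = 0.0) -> float:
    """Linearly decay `initial` towards `floor` as `step` approaches `max_steps`."""
    # Clamp progress to [0, 1] so steps past max_steps stay at the floor.
    progress = min(max(step / max_steps, 0.0), 1.0)
    return max(initial * (1.0 - progress), floor)


# Learning rate halfway through training, using the values from the table.
halfway_lr = linear_schedule(3.0e-4, 500_000, 1_000_000)
```

With the tabulated values, the learning rate starts at 3.0 × 10⁻⁴ and has halved by step 500,000.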

| Parameter | Content | Value |
|---|---|---|
| beta | Strength of the entropy regularization, which keeps the policy random enough to explore | 5.0 × 10⁻³ |
| epsilon | Clipping range that limits how far the policy can change in one update | 0.2 |
| lambd | λ parameter used when calculating the generalized advantage estimate (GAE) [35] | 0.95 |
| beta_schedule | How beta changes over training | Linear |
| epsilon_schedule | How epsilon changes over training | Linear |
| num_epoch | Number of complete passes through the training data set | 3 |
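
To show where `epsilon` and `beta` enter the PPO update, the following sketch evaluates the clipped surrogate objective with an entropy bonus on a batch of samples. It is a simplified NumPy illustration, not the training code used in the paper: the function name `ppo_loss` and its argument layout are assumptions, and the value-function loss is left out.

```python
import numpy as np


def ppo_loss(new_logp, old_logp, advantages, entropy,
             epsilon=0.2, beta=5.0e-3):
    """Negative PPO objective: clipped surrogate plus entropy bonus."""
    new_logp = np.asarray(new_logp, dtype=float)
    old_logp = np.asarray(old_logp, dtype=float)
    adv = np.asarray(advantages, dtype=float)
    ratio = np.exp(new_logp - old_logp)            # pi_new(a|s) / pi_old(a|s)
    unclipped = ratio * adv
    clipped = np.clip(ratio, 1.0 - epsilon, 1.0 + epsilon) * adv
    surrogate = np.minimum(unclipped, clipped).mean()
    # Minimizing this loss maximizes the surrogate and the policy entropy.
    return -(surrogate + beta * np.mean(entropy))
```

Taking the minimum of the clipped and unclipped terms removes any incentive to move the probability ratio outside the interval [1 − epsilon, 1 + epsilon] in a single update.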

| Parameter | Content | Value |
|---|---|---|
| strength | Weight applied to the intrinsic reward | 1.0 |
| gamma | Discount factor for the intrinsic reward | 0.9 |
| learning_rate | Learning rate used to update the intrinsic reward model | 3 × 10⁻⁴ |
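
The role of `strength` and `learning_rate` in random network distillation can be sketched as below. This is a deliberately small illustration under stated assumptions: both networks are single linear layers (the method normally uses deeper nonlinear networks), and the class name, `feat_dim`, and `seed` are hypothetical. The intrinsic reward is the predictor's squared error against a fixed random target, scaled by `strength`.

```python
import numpy as np


class RandomNetworkDistillation:
    """Linear-layer sketch of RND: a fixed random target and a trained predictor."""

    def __init__(self, obs_dim, feat_dim=16, learning_rate=3e-4,
                 strength=1.0, seed=0):
        rng = np.random.default_rng(seed)
        self.target = rng.normal(size=(obs_dim, feat_dim))     # never trained
        self.predictor = rng.normal(size=(obs_dim, feat_dim))  # trained
        self.learning_rate = learning_rate
        self.strength = strength

    def _error(self, obs):
        obs = np.atleast_2d(np.asarray(obs, dtype=float))
        return obs, obs @ self.predictor - obs @ self.target

    def intrinsic_reward(self, obs):
        """Per-observation prediction error, scaled by `strength`."""
        _, err = self._error(obs)
        return self.strength * np.mean(err ** 2, axis=1)

    def update(self, obs):
        """One gradient descent step on the mean squared prediction error."""
        obs, err = self._error(obs)
        grad = 2.0 * obs.T @ err / err.size
        self.predictor -= self.learning_rate * grad
```

Observations the predictor has been trained on produce a shrinking reward, while unfamiliar observations keep a high one, which is what drives exploration.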

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Peng, X.; Han, F.; Xia, G.; Zhao, W.; Zhao, Y. Autonomous Obstacle Avoidance in Crowded Ocean Environment Based on COLREGs and POND. J. Mar. Sci. Eng. 2023, 11, 1320. https://doi.org/10.3390/jmse11071320
