Enhanced Detection Method for Small and Occluded Targets in Large-Scene Synthetic Aperture Radar Images

Abstract

1. Introduction

2. Related Work

3. A Target Detection Model Incorporating Multiple Attention Mechanisms

3.1. Multi-Feature Fusion-Based Backbone Network

- M5 = C5.Conv(256, (1, 1))

- M4 = Upsampling(M5) + C4.Conv(256, (1, 1))

- M3 = Upsampling(M4) + C3.Conv(256, (1, 1))

- M2 = Upsampling(M3) + C2.Conv(256, (1, 1))
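The top-down fusion listed above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: it assumes nearest-neighbour 2x upsampling, bias-free 1x1 convolutions with random weights, and the usual ResNet stage widths (256, 512, 1024, 2048) for C2 to C5. The helper names `conv1x1`, `upsample2x`, and `top_down` are hypothetical.

```python
import numpy as np

def conv1x1(x, weight):
    """1x1 convolution without bias: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)

def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map (assumed mode)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def top_down(feats, weights):
    """Apply the four fusion equations; returns (M2, M3, M4, M5)."""
    m5 = conv1x1(feats["c5"], weights["c5"])
    m4 = upsample2x(m5) + conv1x1(feats["c4"], weights["c4"])
    m3 = upsample2x(m4) + conv1x1(feats["c3"], weights["c3"])
    m2 = upsample2x(m3) + conv1x1(feats["c2"], weights["c2"])
    return m2, m3, m4, m5

# Hypothetical stage widths and spatial sizes, chosen only for the demo.
rng = np.random.default_rng(0)
channels = {"c2": 256, "c3": 512, "c4": 1024, "c5": 2048}
sizes = {"c2": 32, "c3": 16, "c4": 8, "c5": 4}
feats = {k: rng.standard_normal((channels[k], sizes[k], sizes[k])) for k in channels}
weights = {k: 0.01 * rng.standard_normal((256, channels[k])) for k in channels}

m2, m3, m4, m5 = top_down(feats, weights)
print([m.shape for m in (m2, m3, m4, m5)])
```

Every fused map ends up with 256 channels, which is what allows the element-wise addition between the upsampled coarser map and the laterally projected finer map.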

3.2. Transform Attention Component (TAC)

3.3. Channel and Spatial Attention Component (CSAC)

3.4. TAC_CSAC_Net

| Algorithm 1 Calculating the IoU and GIoU loss functions |
|---|
| Input: coordinates of the prediction frame, pb, and of the real frame, gt |
| Output: IoU, L_GIoU |
| I_pg is the intersection of the prediction frame and the true frame; U_pg is their union. |
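For orientation, the quantities named in Algorithm 1 can be computed as in the sketch below. It follows the standard GIoU definition of Rezatofighi et al. (cited in the reference list), so it may differ in detail from the authors' exact steps. The function name `iou_and_giou_loss` and the `(x1, y1, x2, y2)` corner format for boxes are assumptions made for the example.

```python
def iou_and_giou_loss(pb, gt):
    """Return (IoU, L_GIoU) for two axis-aligned boxes.

    Boxes are assumed to be (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
    """
    px1, py1, px2, py2 = pb
    gx1, gy1, gx2, gy2 = gt

    area_p = (px2 - px1) * (py2 - py1)
    area_g = (gx2 - gx1) * (gy2 - gy1)

    # Intersection I_pg: overlap width and height, clamped at zero.
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    i_pg = inter_w * inter_h

    # Union U_pg and plain IoU.
    u_pg = area_p + area_g - i_pg
    iou = i_pg / u_pg

    # Area of the smallest axis-aligned box enclosing both boxes.
    area_c = (max(px2, gx2) - min(px1, gx1)) * (max(py2, gy2) - min(py1, gy1))

    # GIoU subtracts the fraction of the enclosing box not covered by the union.
    giou = iou - (area_c - u_pg) / area_c
    return iou, 1.0 - giou

iou, loss = iou_and_giou_loss((0, 0, 2, 2), (1, 1, 3, 3))
print(iou, loss)
```

Unlike a plain IoU loss, the GIoU loss keeps growing as two non-overlapping boxes move apart, so it still gives a useful training signal when the prediction misses the target entirely.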

4. Results and Discussion

4.1. Experimental Procedure

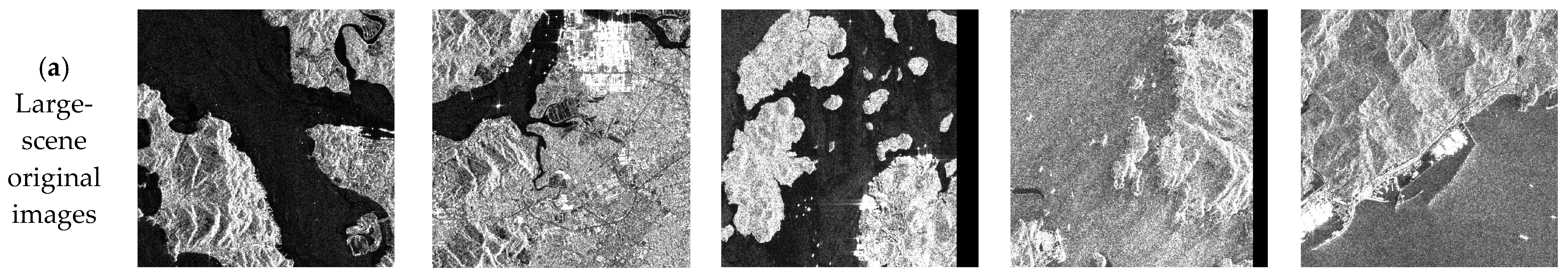

4.1.1. Datasets

4.1.2. Evaluation Metrics

4.2. Experimental Analysis

4.3. Comparative Experiments with Different Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Xiao, X.; Zhou, Z.; Wang, B.; Li, L.; Miao, L. Ship Detection under Complex Backgrounds Based on Accurate Rotated Anchor Boxes from Paired Semantic Segmentation. Remote Sens. 2019, 11, 2506.

- Kang, M.; Leng, X.; Lin, Z.; Ji, K. A Modified Faster R-CNN Based on CFAR Algorithm for SAR Ship Detection. In Proceedings of the 2017 International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shanghai, China, 19–21 May 2017; pp. 1–4.

- An, Q.; Pan, Z.; You, H. Ship Detection in Gaofen-3 SAR Images Based on Sea Clutter Distribution Analysis and Deep Convolutional Neural Network. Sensors 2018, 18, 334.

- Yue, B.; Zhao, W.; Han, S. SAR Ship Detection Method Based on Convolutional Neural Network and Multi-Layer Feature Fusion. In Advances in Natural Computation, Fuzzy Systems and Knowledge Discovery; Springer: Berlin, Germany, 2020; Volume 1, pp. 41–53.

- Shi, W.; Jiang, J.; Bao, S. Ship Detection Method in Remote Sensing Image Based on Feature Fusion. Acta Photonica Sin. 2020, 49, 57–67.

- Li, Y.; Zhu, W.; Li, C.; Zeng, C. SAR Image Near-Shore Ship Target Detection Method in Complex Background. Int. J. Remote Sens. 2023, 44, 924–952.

- Ma, W.; Li, N.; Zhu, H.; Jiao, L.; Tang, X.; Guo, Y.; Hou, B. Feature Split–Merge–Enhancement Network for Remote Sensing Object Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Ting, L.; Baijun, Z.; Yongsheng, Z.; Shun, Y. Ship Detection Algorithm Based on Improved YOLO V5. In Proceedings of the 2021 6th International Conference on Automation, Control and Robotics Engineering (CACRE), IEEE, Dalian, China, 15–17 July 2021; pp. 483–487.

- Tian, Y.; Luo, P.; Wang, X.; Tang, X. Deep Learning Strong Parts for Pedestrian Detection. In Proceedings of the IEEE International Conference on Computer Vision, Araucano Park, Chile, 11–18 December 2015; pp. 1904–1912.

- Ouyang, W.; Zhou, H.; Li, H.; Li, Q.; Yan, J.; Wang, X. Jointly Learning Deep Features, Deformable Parts, Occlusion and Classification for Pedestrian Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1874–1887.

- Jiao, J.; Zhang, Y.; Sun, H.; Yang, X.; Gao, X.; Hong, W.; Fu, K.; Sun, X. A Densely Connected End-to-End Neural Network for Multiscale and Multiscene SAR Ship Detection. IEEE Access 2018, 6, 20881–20892.

- Sun, Z.; Meng, C.; Cheng, J.; Zhang, Z.; Chang, S. A Multi-Scale Feature Pyramid Network for Detection and Instance Segmentation of Marine Ships in SAR Images. Remote Sens. 2022, 14, 6312.

- Yang, R.; Pan, Z.; Jia, X.; Zhang, L.; Deng, Y. A Novel CNN-Based Detector for Ship Detection Based on Rotatable Bounding Box in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 1938–1958.

- Cui, Z.; Li, Q.; Cao, Z.; Liu, N. Dense Attention Pyramid Networks for Multi-Scale Ship Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8983–8997.

- Zhao, Y.; Zhao, L.; Xiong, B.; Kuang, G. Attention Receptive Pyramid Network for Ship Detection in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2738–2756.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Zhang, T.; Zhang, X.; Ke, X. Quad-FPN: A Novel Quad Feature Pyramid Network for SAR Ship Detection. Remote Sens. 2021, 13, 2771.

- Wu, Y.; Chen, Y.; Yuan, L.; Liu, Z.; Wang, L.; Li, H.; Fu, Y. Rethinking Classification and Localization for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2020; pp. 10186–10195.

- Amjoud, A.B.; Amrouch, M. Object Detection Using Deep Learning, CNNs and Vision Transformers: A Review. IEEE Access 2023, 11, 35479–35516.

- Khan, A.; Rauf, Z.; Sohail, A.; Rehman, A.; Asif, H.; Asif, A.; Farooq, U. A Survey of the Vision Transformers and Its CNN-Transformer Based Variants. arXiv 2023, arXiv:2305.09880.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; et al. LS-SSDD-v1.0: A Deep Learning Dataset Dedicated to Small Ship Detection from Large-Scale Sentinel-1 SAR Images. Remote Sens. 2020, 12, 2997.

- Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. A SAR Dataset of Ship Detection for Deep Learning under Complex Backgrounds. Remote Sens. 2019, 11, 765.

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A High-Resolution SAR Images Dataset for Ship Detection and Instance Segmentation. IEEE Access 2020, 8, 120234–120254.

- Wan, S.; Goudos, S. Faster R-CNN for Multi-Class Fruit Detection Using a Robotic Vision System. Comput. Netw. 2020, 168, 107036.

- Lin, Z.; Ji, K.; Leng, X.; Kuang, G. Squeeze and Excitation Rank Faster R-CNN for Ship Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 751–755.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

- Jocher, G.; Stoken, A.; Borovec, J.; Chaurasia, A.; Changyu, L.; Hogan, A.; Hajek, J.; Diaconu, L.; Kwon, Y.; Defretin, Y.; et al. ultralytics/yolov5: v5.0 - YOLOv5-P6 1280 Models, AWS, Supervise.ly and YouTube Integrations. Zenodo 2021.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, USA, 18–22 June 2023; pp. 7464–7475.

| Models | Characteristics |
|---|---|
| DAPN | DAPN uses a pyramid structure in which a Convolutional Block Attention Module (CBAM) is densely connected to each concatenated feature map, from the top of the network to the bottom. This design helps filter out negative objects and suppress interference from the surroundings in the top-down pathway of the lateral connections. |
| ARPN | ARPN is a two-stage detector designed to improve the detection of multi-scale ships in SAR images. It presents a Receptive Fields Block (RFB) and uses it to capture the characteristics of multi-scale ships with different orientations. The RFB enhances local features with their global dependencies. |
| Double-Head R-CNN | Double-Head R-CNN gives an R-CNN-based detector two heads for the classification and localization tasks: a fully connected head responsible for classification and a convolutional head used for bounding box regression. |
| CBAM Faster R-CNN | CBAM Faster R-CNN uses channel and spatial attention mechanisms to enhance the significant features of ships and suppress interference from the surroundings. |
| Quad-FPN | Quad-FPN is a two-stage detector designed to improve ship detection in SAR images. It consists of four distinct Feature Pyramid Networks (FPNs). Each FPN is a targeted improvement with no redundant components, and together they give Quad-FPN strong detection performance and good adaptability to ship scale and detection scene. |
| Our model | Unlike the previous models, we build a backbone network with multi-feature fusion and a self-attention mechanism module. We also introduce the transform attention component (TAC) and the channel and spatial attention component (CSAC), and we use a GIoU-based loss function. |

| Datasets | Total Number of Images | Occlusion | Small Target |
|---|---|---|---|
| Training set | 21,420 | 12,840 | 8,580 |
| Verification set | 6,120 | 3,660 | 2,460 |
| Testing set | 3,060 | 1,836 | 1,224 |

| Backbone Network (+Multi-Feature Fusion) | Attention Mechanism | P (%) | R (%) | F1-Score | mAP (%) |
|---|---|---|---|---|---|
| Resnet50 | - | 92.7 | 95.4 | 0.940 | 92.3 |
| Resnet50 | TAC | 92.9 | 96.2 | 0.945 | 92.9 |
| Resnet50 | CSAC | 92.9 | 96.4 | 0.946 | 92.8 |
| Resnet50 | TAC + CSAC | 93.9 | 96.5 | 0.952 | 93.5 |
| Resnet50 | TAC_CSAC_Net | 94.5 | 96.9 | 0.957 | 94.2 |
| Resnet101 | - | 93.0 | 96.3 | 0.946 | 91.6 |
| Resnet101 | TAC | 93.3 | 96.4 | 0.948 | 92.7 |
| Resnet101 | CSAC | 93.7 | 96.5 | 0.951 | 93.7 |
| Resnet101 | TAC + CSAC | 95.1 | 96.5 | 0.958 | 95.1 |
| Resnet101 | TAC_CSAC_Net | 95.6 | 97.0 | 0.963 | 95.3 |
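The F1-Score column can be cross-checked against the P and R columns, assuming F1 is the usual harmonic mean of precision and recall. The short sketch below recomputes it for the first and last rows of the table above; the function name `f1_score` is a hypothetical helper, not part of the original work.

```python
def f1_score(precision_pct, recall_pct):
    """Harmonic mean of precision and recall, both given in percent."""
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    return 2.0 * p * r / (p + r)

# (P, R) pairs taken from the first and last rows of the table above.
baseline = f1_score(92.7, 95.4)   # Resnet50, no attention mechanism
full_model = f1_score(95.6, 97.0) # Resnet101, TAC_CSAC_Net

print(round(baseline, 3), round(full_model, 3))
```

Both values round to the figures reported in the table (0.940 and 0.963), which suggests that the F1-Score is expressed as a fraction while P and R are expressed in percent.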

| Backbone Network (+Multi-Feature Fusion) | Attention Mechanism | P (%) | R (%) | F1-Score | mAP (%) |
|---|---|---|---|---|---|
| Resnet50 | - | 90.5 | 97.0 | 0.936 | 90.5 |
| Resnet50 | TAC | 90.9 | 97.1 | 0.939 | 90.9 |
| Resnet50 | CSAC | 92.4 | 97.4 | 0.948 | 91.4 |
| Resnet50 | TAC + CSAC | 94.2 | 97.4 | 0.958 | 94.2 |
| Resnet50 | TAC_CSAC_Net | 97.5 | 98.0 | 0.977 | 97.3 |
| Resnet101 | - | 90.7 | 98.0 | 0.942 | 91.1 |
| Resnet101 | TAC | 91.2 | 98.3 | 0.946 | 92.5 |
| Resnet101 | CSAC | 91.5 | 98.1 | 0.947 | 92.7 |
| Resnet101 | TAC + CSAC | 95.4 | 98.3 | 0.968 | 95.4 |
| Resnet101 | TAC_CSAC_Net | 97.7 | 98.3 | 0.979 | 97.7 |

| Backbone Network (+Multi-Feature Fusion) | Attention Mechanism | P (%) | R (%) | F1-Score | mAP (%) |
|---|---|---|---|---|---|
| Resnet50 | - | 88.2 | 92.1 | 0.901 | 88.2 |
| Resnet50 | TAC | 88.7 | 93.0 | 0.907 | 88.7 |
| Resnet50 | CSAC | 89.3 | 92.6 | 0.909 | 89.3 |
| Resnet50 | TAC + CSAC | 89.2 | 93.2 | 0.911 | 89.2 |
| Resnet50 | TAC_CSAC_Net | 89.2 | 93.4 | 0.912 | 89.2 |
| Resnet101 | - | 89.1 | 93.0 | 0.910 | 89.1 |
| Resnet101 | TAC | 89.3 | 93.0 | 0.911 | 89.3 |
| Resnet101 | CSAC | 89.7 | 93.0 | 0.913 | 89.7 |
| Resnet101 | TAC + CSAC | 89.6 | 93.1 | 0.913 | 89.6 |
| Resnet101 | TAC_CSAC_Net | 89.6 | 93.3 | 0.914 | 89.8 |

| Backbone Network (+Multi-Feature Fusion) | Attention Mechanism | P (%) | R (%) | F1-Score | mAP (%) |
|---|---|---|---|---|---|
| Resnet50 | - | 81.2 | 88.7 | 0.851 | 81.7 |
| Resnet50 | TAC | 82.6 | 87.9 | 0.852 | 79.6 |
| Resnet50 | CSAC | 84.2 | 89.4 | 0.867 | 84.2 |
| Resnet50 | TAC + CSAC | 84.5 | 89.8 | 0.871 | 84.5 |
| Resnet50 | TAC_CSAC_Net | 84.5 | 89.9 | 0.871 | 84.5 |
| Resnet101 | - | 81.2 | 88.8 | 0.848 | 81.2 |
| Resnet101 | TAC | 81.7 | 88.0 | 0.847 | 80.1 |
| Resnet101 | CSAC | 84.8 | 89.4 | 0.870 | 84.8 |
| Resnet101 | TAC + CSAC | 89.5 | 93.1 | 0.913 | 89.5 |
| Resnet101 | TAC_CSAC_Net | 89.6 | 93.3 | 0.914 | 89.6 |

| Backbone Network (+Multi-Feature Fusion) | Attention Mechanism | P (%) | R (%) | F1-Score | mAP (%) |
|---|---|---|---|---|---|
| Resnet50 | - | 73.1 | 65.8 | 0.693 | 63.0 |
| Resnet50 | TAC | 73.7 | 71.4 | 0.725 | 69.2 |
| Resnet50 | CSAC | 78.3 | 72.3 | 0.752 | 72.6 |
| Resnet50 | TAC + CSAC | 82.5 | 71.3 | 0.765 | 75.3 |
| Resnet50 | TAC_CSAC_Net | 83.8 | 73.6 | 0.784 | 76.4 |
| Resnet101 | - | 73.5 | 71.3 | 0.724 | 70.9 |
| Resnet101 | TAC | 75.1 | 74.4 | 0.747 | 72.2 |
| Resnet101 | CSAC | 78.4 | 74.9 | 0.766 | 74.8 |
| Resnet101 | TAC + CSAC | 83.5 | 72.7 | 0.777 | 75.3 |
| Resnet101 | TAC_CSAC_Net | 87.7 | 77.3 | 0.822 | 78.6 |

| Models | P (%) | R (%) | F1-Score | mAP (%) | FPS |
|---|---|---|---|---|---|
| Faster R-CNN (Ren et al., 2015) [25] | 72.8 | 72.1 | 0.724 | 74.4 | 4.82 |
| SER Faster R-CNN (Lin et al., 2018) [26] | 73.5 | 71.6 | 0.725 | 75.2 | 7.15 |
| PANet (Liu et al., 2018) [27] | 72.9 | 73.2 | 0.730 | 72.9 | 9.45 |
| Cascade R-CNN (Cai and Vasconcelos, 2018) [28] | 74.0 | 72.8 | 0.733 | 74.1 | 8.83 |
| DAPN (Cui et al., 2019) [14] | 73.8 | 75.1 | 0.744 | 74.1 | 12.22 |
| ARPN (Zhao et al., 2020) [15] | 73.5 | 71.6 | 0.725 | 75.2 | 12.15 |
| Double-Head R-CNN (Wu et al., 2020) [16] | 81.4 | 77.7 | 0.795 | 79.9 | 6.25 |
| CBAM Faster R-CNN [17] | 83.0 | 77.9 | 0.803 | 75.2 | 7.39 |
| Quad-FPN (Zhang et al., 2021a) [18] | 80.1 | 78.9 | 0.794 | 77.1 | 11.37 |
| YOLOv5 (Jocher et al., 2021) [29] | 72.8 | 77.1 | 0.748 | 74.4 | 21.76 |
| YOLOv7 (Wang et al., 2022) [30] | 78.2 | 76.1 | 0.771 | 76.3 | 22.43 |
| Our model | 87.7 | 77.3 | 0.822 | 78.6 | 8.06 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, H.; Chen, P.; Li, Y.; Wang, B. Enhanced Detection Method for Small and Occluded Targets in Large-Scene Synthetic Aperture Radar Images. J. Mar. Sci. Eng. 2023, 11, 2081. https://doi.org/10.3390/jmse11112081
