Deep Learning-Based Classification of Raw Hydroacoustic Signal: A Review

Abstract

1. Introduction

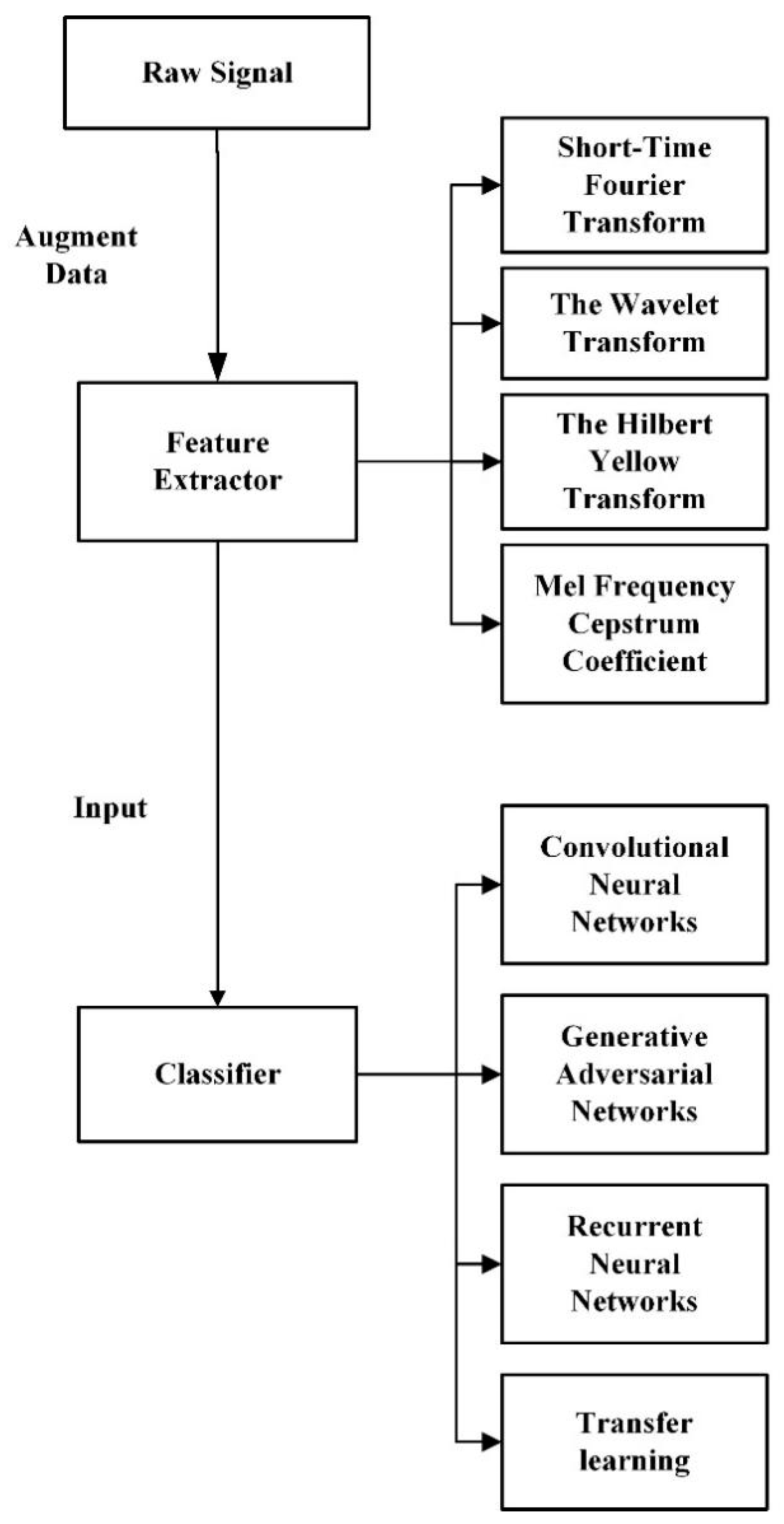

- This paper clarifies and reviews the methods used for signal processing. It presents them in the order of their historical development: the Fourier transform, the short-time Fourier transform (STFT), the Hilbert–Huang transform, the Mel spectrum and Mel-frequency cepstral coefficients (MFCC), and the wavelet transform (WT). It then explores the advantages and disadvantages of each method, and future development directions, by comparing their structures in practical applications.

- This paper introduces the neural networks used in hydroacoustic signal recognition. In addition, unsupervised adaptive methods for sound signals, such as transfer learning and adversarial learning, are presented.

- This paper also provides an in-depth analysis of the problems of hydroacoustic signal processing and the corresponding directions of future development by comparing these methods with data augmentation methods.
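As a concrete illustration of the short-time Fourier transform named in the first contribution above, the following is a minimal sketch using only the Python standard library. The test signal, sampling rate, frame length, and hop size are assumptions chosen for illustration and do not come from the reviewed works; a practical pipeline would use an FFT library rather than this naive DFT.

```python
import cmath
import math

FRAME_LEN = 64   # samples per analysis frame (assumed value)
HOP = 32         # samples between frame starts (assumed value)
FS = 1600        # sampling rate in Hz (assumed value)

def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]

def dft_magnitudes(frame):
    """Magnitudes of the non-negative-frequency bins of a naive O(n^2) DFT."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]

def stft(signal, frame_len=FRAME_LEN, hop=HOP):
    """Return one magnitude spectrum per windowed frame of the signal."""
    window = hann(frame_len)
    spectra = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_len)]
        spectra.append(dft_magnitudes(frame))
    return spectra

def tone(freq_hz, n_samples, fs=FS):
    """Unit-amplitude sine wave starting at zero phase."""
    return [math.sin(2 * math.pi * freq_hz * i / fs) for i in range(n_samples)]

# Hypothetical test signal: a 100 Hz tone followed by a 300 Hz tone.
signal = tone(100, 256) + tone(300, 256)

spectrogram = stft(signal)
# Index of the strongest frequency bin in each frame, and its frequency in Hz.
peak_bins = [max(range(len(s)), key=lambda k: s[k]) for s in spectrogram]
peak_hz = [b * FS / FRAME_LEN for b in peak_bins]
```

In this sketch the dominant frequency moves from the first tone to the second as the frames advance, which is the time-localized view that a single Fourier transform over the whole signal cannot give.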

2. Raw Signal

2.1. The Fourier Transform

2.2. The LOFAR Spectrum

2.3. The Wavelet Transform

2.4. The Hilbert–Huang Transform

2.5. The Mel Spectrum

2.6. Feature Fusion

3. Deep Learning-Based Hydroacoustic Signal Recognition

3.1. Convolutional Neural Networks (CNN)

3.2. Generative Adversarial Networks (GAN)

3.3. Recurrent Neural Networks (RNN)

3.4. Transfer Learning

4. Data Augmentation

4.1. Traditional Data Augmentation Based on Original Audio

4.2. Neural Network Data Augmentation

5. Discussion

6. Conclusions

- (1) The development of signal preprocessing for feature extraction is crucial (Table 1). Although processing methods such as the short-time Fourier transform, the Mel spectrum, and the Hilbert–Huang transform have been proposed to address parts of the feature-extraction problem, each algorithm has shortcomings, and feature extraction based on a single signal-processing method can no longer improve the efficiency of the classifier. Therefore, multi-spectrum feature fusion will be one of the development directions of hydroacoustic signal recognition.

- (2) The improvement of classifier neural networks is necessary (Table 2). At the back end, the efficiency of the classifier network determines the accuracy and speed of recognition. Back-end decision algorithms commonly used in computer vision, such as random forest, can be introduced into hydroacoustic signal recognition. Improving the neural network’s efficiency will be a critical issue.

- (3) Hydroacoustic data augmentation is essential (Table 3). Due to the complexity of the marine environment, ambient noise varies significantly across sea conditions, sea areas, and times. Improving the generalization of classification models through data augmentation remains a problem to be solved.

- (4) The small-sample problem must be solved. Applying deep-learning models such as convolutional neural networks to hydroacoustic target recognition can significantly improve classification accuracy and constitutes a new research direction in hydroacoustic detection, one that should improve performance in faint-signal detection as well as in underwater target identification and localization. However, the computational complexity of these algorithms needs further attention. In addition, hydroacoustic targets are usually combat divers, unmanned underwater vehicles, submarines, and similar platforms, which are concealed and confidential to some degree, so it is difficult to build a target database for them. Because data-driven deep learning requires a large number of samples for training, the small-sample problem also needs to be addressed.
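The multi-spectrum feature fusion mentioned in conclusion (1) can be sketched in its simplest form as feature-level concatenation. The sketch below assumes that each spectrum has already been reduced to a fixed-length feature vector; the vector names and values are hypothetical, and the fusion schemes used in the reviewed works may differ (for example, fusion inside a network rather than at the input).

```python
import math

def zscore(vec):
    """Standardize one feature vector to zero mean and unit variance."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    std = math.sqrt(var) or 1.0  # guard against constant vectors
    return [(v - mean) / std for v in vec]

def fuse(*feature_vectors):
    """Feature-level fusion: standardize each vector, then concatenate."""
    fused = []
    for vec in feature_vectors:
        fused.extend(zscore(vec))
    return fused

# Hypothetical per-signal features on very different numeric scales.
mfcc_features = [12.0, -3.5, 1.2, 0.4]
lofar_features = [0.01, 0.20, 0.03]
wavelet_energies = [150.0, 90.0]

fused = fuse(mfcc_features, lofar_features, wavelet_energies)
```

Standardizing each block before concatenation keeps a feature set with large raw values from dominating the others. The fused vector has as many dimensions as all inputs combined, so its size grows with every spectrum added.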

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Strengths/Features | Limitations |
|---|---|---|
| Short-time Fourier transform (STFT) | Obtains the signal power spectrum at different moments; creates a time–frequency analysis chart of hydroacoustic signals; includes multimodal fusion features; highly distinguishable | Lacks time- and frequency-locating functions; low time–frequency resolution for hydroacoustic signals |
| Wavelet transform | Features multiple resolutions; reduces high-frequency interference components; provides a significant noise-reduction effect for hydroacoustic signals; widely used in the hydroacoustic field | Lacks adaptivity compared with other modal decomposition methods |
| Hilbert–Huang transform | Analyzes nonlinear, non-stationary signals and is applicable to hydroacoustic signals; suitable for abruptly changing signals | Theoretical framework is difficult to establish; endpoint effect problem exists |
| Mel-frequency analysis | High resolution in the low-frequency section of the hydroacoustic signal; good recognition performance even when the signal-to-noise ratio is reduced; widely used in speech recognition | The dimensionality reduction process leads to the loss of some of the original data |
| Mel-frequency cepstral coefficients (MFCC) | Combination of dynamic and static features; high hydroacoustic signal recognition capability; widely used in speech recognition | Not sensitive to the high-frequency part |
| Feature fusion | Compensates for the features missing from individual spectrum features; more features can be extracted from a small amount of training data; high efficiency with deep-learning networks | Model performance decreases once the feature dimension reaches a certain size |
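The table above credits Mel-frequency analysis with high resolution in the low-frequency section. A short sketch makes this visible by converting between hertz and mels. The conversion used here, m = 2595 log10(1 + f/700), is one commonly used form of the Mel scale and is an assumption, since the source does not state which variant it relies on; the frequency range and number of bands are also illustrative.

```python
import math

def hz_to_mel(f_hz):
    """Hertz to mels, using a commonly cited form of the Mel scale."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_band_edges(f_min, f_max, n_bands):
    """Edges in Hz of n_bands bands that are equally wide on the Mel scale."""
    m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_max - m_min) / n_bands
    return [mel_to_hz(m_min + i * step) for i in range(n_bands + 1)]

# Hypothetical analysis range: 0 Hz to 8 kHz split into 10 Mel bands.
edges = mel_band_edges(0.0, 8000.0, 10)
widths = [hi - lo for lo, hi in zip(edges, edges[1:])]
```

The band widths in hertz increase monotonically with frequency, so low frequencies are covered by narrower bands. This is the sense in which the Mel representation resolves the low-frequency section more finely.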

| Method | Strengths/Features | Limitations |
|---|---|---|
| Convolutional Neural Network (CNN) | Convolution layers make it easy to extract features from spectrograms; handles high-dimensional data; highly versatile | A great deal of valuable information will be lost; requires a large amount of labeled training data, which conflicts with the scarcity of hydroacoustic data |
| Generative Adversarial Network (GAN) | High unsupervised learning ability; suitable for small data sets | Generates only a single type of data; low network universality |
| Recurrent Neural Network (RNN) | Widely used in text and speech analysis; its mathematical basis can be regarded as Markov chains with memory capacity | Unable to support long sequences; cannot distinguish between ambient noise and ship noise |
| Transfer learning | High learning capability; no reliance on large data sets | Reliant on pre-trained networks; scarce hydroacoustic data leads to inadequately pre-trained networks |
| Temporal Convolutional Network (TCN) | Trained directly on the original audio; has applications in speech recognition | Unable to handle noise in an aquatic environment; low accuracy in recognizing hydroacoustic targets |
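To illustrate how a convolution layer extracts features from a spectrogram, as noted for the CNN in the table above, the sketch below applies one hand-set kernel to a toy spectrogram. Everything here is an assumption made for illustration: the spectrogram values, the kernel, and the choice of a single filter. In a trained CNN the kernels are learned from labeled data rather than set by hand.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers call convolution."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows) for j in range(k_cols))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

def relu(feature_map):
    """Element-wise rectified linear unit."""
    return [[max(0.0, v) for v in row] for row in feature_map]

# Toy spectrogram: rows are frequency bins, columns are time frames.
# A steady tonal line occupies frequency bin 2 in every frame.
spectrogram = [[0.0] * 6 for _ in range(5)]
spectrogram[2] = [1.0] * 6

# Hand-set kernel that responds to a horizontal ridge (a constant-frequency line).
kernel = [[-1.0] * 3, [2.0] * 3, [-1.0] * 3]

feature_map = relu(conv2d_valid(spectrogram, kernel))
```

The resulting feature map is nonzero only in the row where the kernel is centered on the tonal line, which shows how a small local filter turns a spectrogram pattern into a localized activation.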

| Method | Strengths/Features | Limitations |
|---|---|---|
| Traditional data augmentation methods (audio editing and synthesis, etc.) | Simple operation | Highly dependent on original audio data |
| Neural network data augmentation | Able to handle unrelated features; suitable for processing samples with missing attributes; compensates for the lack of hydroacoustic data | Ignores correlation between data |
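The traditional augmentation methods in the table above operate directly on the recorded audio. The following sketch shows three simple edits of that kind: additive white Gaussian noise at a target signal-to-noise ratio, a circular time shift, and amplitude scaling. The specific transforms, parameter values, and the synthetic test tone are assumptions for illustration; white noise is only a crude stand-in for real marine ambient noise.

```python
import math
import random

def add_noise(signal, snr_db, rng):
    """Add white Gaussian noise so the result has roughly the requested SNR."""
    signal_power = sum(x * x for x in signal) / len(signal)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, sigma) for x in signal]

def time_shift(signal, shift):
    """Circularly shift the signal to the right by `shift` samples."""
    shift %= len(signal)
    return signal[-shift:] + signal[:-shift] if shift else list(signal)

def scale(signal, gain):
    """Multiply every sample by a constant gain."""
    return [gain * x for x in signal]

def snr_db(clean, noisy):
    """Measured SNR in dB of `noisy` relative to `clean`."""
    signal_power = sum(x * x for x in clean) / len(clean)
    noise_power = sum((n - c) ** 2 for c, n in zip(clean, noisy)) / len(clean)
    return 10 * math.log10(signal_power / noise_power)

# Hypothetical clean recording: a 50 Hz tone sampled at 1 kHz for 2 s.
rng = random.Random(0)  # fixed seed so the example is repeatable
clean = [math.sin(2 * math.pi * 50 * i / 1000) for i in range(2000)]

noisy = add_noise(clean, snr_db=10.0, rng=rng)
shifted = time_shift(clean, 125)
quieter = scale(clean, 0.5)
measured = snr_db(clean, noisy)
```

Each call yields a new training sample derived from the same recording, which is why the table lists dependence on the original audio data as the limitation of this family of methods.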

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, X.; Dong, R.; Lv, Z. Deep Learning-Based Classification of Raw Hydroacoustic Signal: A Review. J. Mar. Sci. Eng. 2023, 11, 3. https://doi.org/10.3390/jmse11010003