Underwater Image Enhancement Based on Color Correction and Detail Enhancement

Abstract

1. Introduction

2. Materials and Methods

2.1. Underwater Image Imaging Model

2.2. Underwater Image Denoising

2.2.1. NL-Means

2.2.2. Improved NL-Means

2.3. Underwater Image Color Correction

2.3.1. U-Net

2.3.2. Improved U-Net

2.3.3. CBAM

2.4. Underwater Image Detail Enhancement

2.4.1. Image Degradation Model

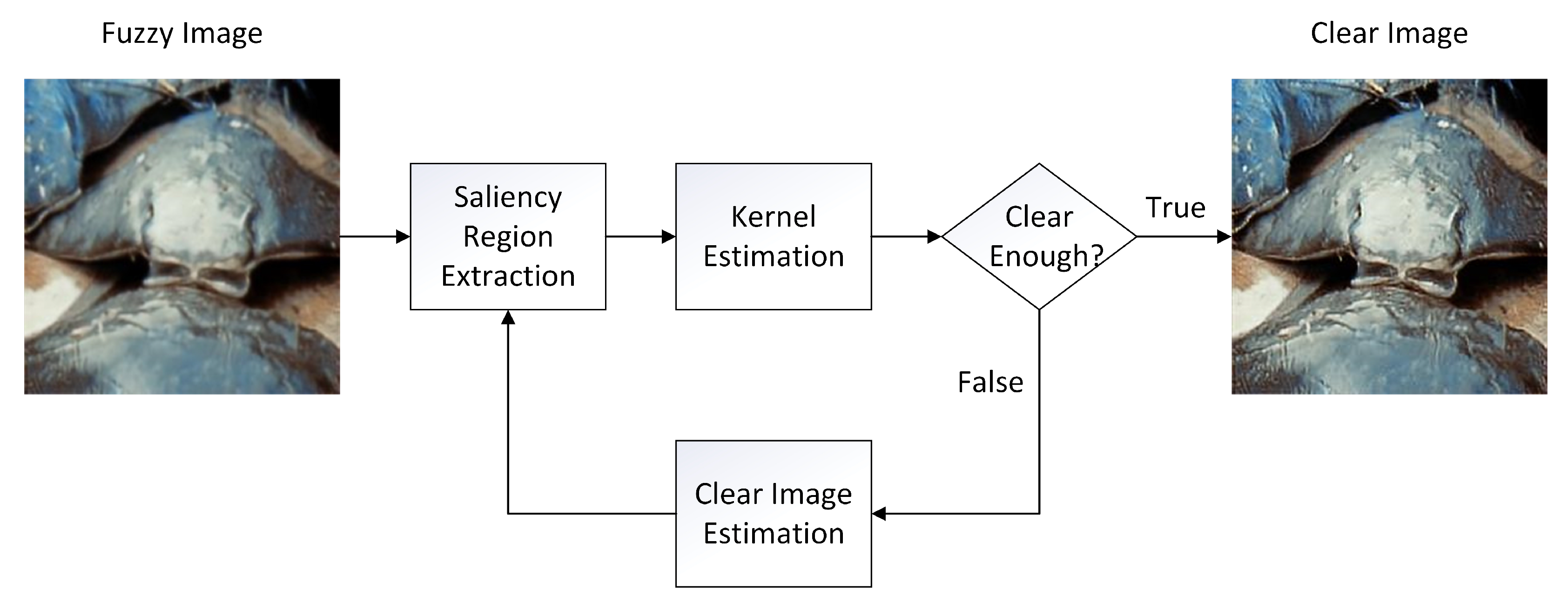

2.4.2. The Process of Sharpening Algorithm

2.4.3. Saliency Region Extraction

2.4.4. The Estimation of Fuzzy Kernel

2.4.5. The Estimation of Clear Images

3. Results and Discussion

3.1. Color Correction Experiment

3.2. Detail Enhancement Experiment

3.3. Image Quality Assessment

3.4. Running Time Experiment

3.5. Validation of Algorithm Effectiveness

3.6. Ablation Study

3.7. Application Test

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Image | IBLA | UDCP | ULAP | RGHS | Sea-thru | UWGAN | FunieGAN | Ours |
|---|---|---|---|---|---|---|---|---|
| 1 | 1.452 | 1.477 | 1.343 | 1.242 | 1.502 | 1.607 | 1.622 | 1.375 |
| 2 | 1.405 | 1.331 | 1.272 | 0.992 | 1.422 | 1.507 | 1.423 | 1.221 |
| 3 | 0.792 | 2.220 | 1.721 | 1.523 | 2.204 | 2.332 | 2.215 | 2.274 |
| 4 | 0.541 | 0.605 | 1.023 | 1.005 | 1.652 | 1.552 | 1.476 | 1.775 |
| 5 | 1.305 | 1.427 | 1.275 | 1.121 | 1.445 | 1.307 | 1.502 | 2.513 |
| Average | 1.099 | 1.412 | 1.327 | 1.177 | 1.645 | 1.661 | 1.648 | 1.832 |
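
The Average row of the per-image table above can be reproduced from the five image rows. The following is a minimal sketch, assuming the values are transcribed correctly from the table; the names `rows`, `reported_average`, and `column_means` are illustrative and not taken from the paper.

```python
# Per-image scores transcribed from the table above (images 1-5).
# Column order follows the table header.
methods = ["IBLA", "UDCP", "ULAP", "RGHS", "Sea-thru", "UWGAN", "FunieGAN", "Ours"]
rows = [
    [1.452, 1.477, 1.343, 1.242, 1.502, 1.607, 1.622, 1.375],
    [1.405, 1.331, 1.272, 0.992, 1.422, 1.507, 1.423, 1.221],
    [0.792, 2.220, 1.721, 1.523, 2.204, 2.332, 2.215, 2.274],
    [0.541, 0.605, 1.023, 1.005, 1.652, 1.552, 1.476, 1.775],
    [1.305, 1.427, 1.275, 1.121, 1.445, 1.307, 1.502, 2.513],
]
# "Average" row as printed in the table.
reported_average = [1.099, 1.412, 1.327, 1.177, 1.645, 1.661, 1.648, 1.832]


def column_means(table):
    """Return the arithmetic mean of each column of a row-major table."""
    n = len(table)
    return [sum(col) / n for col in zip(*table)]


means = column_means(rows)
for name, mean, reported in zip(methods, means, reported_average):
    print(f"{name:9s} computed={mean:.3f} reported={reported:.3f}")

# Method with the highest mean score across the five images.
best = methods[means.index(max(means))]
print("highest average:", best)
```

Under these assumptions, each computed mean agrees with the reported Average row to three decimal places, and the highest average belongs to the "Ours" column.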

| Scores | Input | IBLA | UDCP | ULAP | RGHS | Sea-thru | UWGAN | FunieGAN | Ours |
|---|---|---|---|---|---|---|---|---|---|
| SSIM | 0.794 | 0.694 | 0.579 | 0.756 | 0.759 | 0.804 | 0.827 | 0.779 | 0.745 |
| PSNR | 17.216 | 16.631 | 13.128 | 17.532 | 16.488 | 15.921 | 14.743 | 15.301 | 17.637 |
| UIQM | 1.377 | 2.772 | 2.987 | 2.270 | 2.208 | 1.066 | 1.875 | 2.240 | 4.035 |
| UCIQE | 0.379 | 0.459 | 0.497 | 0.452 | 0.447 | 0.378 | 0.476 | 0.431 | 0.429 |

| Methods | IBLA | UDCP | ULAP | RGHS | Sea-thru | UWGAN | FunieGAN | Ours |
|---|---|---|---|---|---|---|---|---|
| Time (s) | 7.4774 | 3.1425 | 0.6091 | 1.4407 | 3.3012 | 1.5014 | 1.7256 | 1.4770 |

| Network | SSIM | PSNR | UIQM | UCIQE |
|---|---|---|---|---|
| (a) | 0.703 | 17.521 | 1.422 | 0.383 |
| (b) | 0.711 | 17.755 | 1.451 | 0.385 |
| (c) | 0.723 | 17.968 | 1.570 | 0.390 |
| (d) | 0.731 | 18.201 | 1.782 | 0.392 |

| Experiment | SSIM | PSNR | UIQM | UCIQE |
|---|---|---|---|---|
| (a) | 0.724 | 17.415 | 1.427 | 0.388 |
| (b) | 0.731 | 18.201 | 1.782 | 0.392 |
| (c) | 0.716 | 17.338 | 1.845 | 0.401 |
| (d) | 0.733 | 18.272 | 2.329 | 0.413 |
| (e) | 0.745 | 18.637 | 4.035 | 0.429 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, Z.; Ji, Y.; Song, L.; Sun, J. Underwater Image Enhancement Based on Color Correction and Detail Enhancement. J. Mar. Sci. Eng. 2022, 10, 1513. https://doi.org/10.3390/jmse10101513
