1. Introduction

The assessment and perception of pig information are key to evaluating the quality of husbandry management and production efficiency, and they have attracted significant attention [1,2]. As one of the most important livestock species in animal husbandry, pigs are a primary source of economic income. Traditional livestock management has largely relied on manual visual judgment and analysis and lacks objective, data-based evaluation. With the rapid development and widespread application of deep learning and artificial intelligence, precision animal husbandry has seen the large-scale deployment of intelligent devices and algorithm models [3,4,9,10,11,12]. The Internet of Things (IoT), big data, and artificial intelligence provide strong technological support for the transformation and upgrading of traditional animal husbandry [5,6,7]. However, the industry still faces many challenges during this transformation, which makes upgrading the precision pig farming industry difficult [8,13]. In factory farming systems, factors such as pig performance, behavior, and environmental conditions directly affect pig health and farming efficiency. Body weight is a critical indicator for assessing feed conversion and health status, and weight estimation methods based on depth cameras and 3D point cloud technology have therefore emerged [14,15,16,17].

In behavior analysis, many researchers have built models using technologies such as 3D sensors and video. In the pigpen environment, suitable temperature, humidity, and gas conditions are important for ensuring pig welfare during production, and numerous researchers have conducted environmental perception studies based on environmental sensors to optimize production management [18,19]. Physiological information from pigs is commonly obtained with either contact-based or non-contact methods [20]. Contact-based methods are time-consuming and labor-intensive and can induce stress in animals. In contrast, non-contact video detection is efficient and convenient and avoids stressing the animals. Most importantly, it does not compromise African swine fever prevention measures. Video detection also enables continuous 24 h monitoring, so critical behavioral information is not missed. In pig production, physiological information provides essential guidance for management, particularly during key stages such as sow estrus, farrowing, and lactation [21]. Behavior is also one of the key indicators of pig health and welfare. To perceive pigs' physiological parameters, many researchers have monitored pig behavior with multi-sensor fusion technologies and developed related behavior evaluation models. Real-time monitoring of livestock behavior is a complex and challenging research task, but visual technology and artificial intelligence now make it possible to monitor livestock in real time and perceive animal information promptly.

During the sow production cycle, accurate perception of estrus behavior is crucial because it directly affects the overall productivity and profitability of the pig farm. Estrus is the prerequisite for successful breeding, so precise tracking of the estrus cycle is essential for improving the breeding success rate and the survival rate of weaned piglets. The estrus cycle of a sow typically lasts 21 days. In early estrus, sows exhibit restlessness and fence-biting. In mid-estrus, sows stand still with ears erect, which marks the optimal breeding time. Traditionally, estrus detection in sows has relied mainly on manual observation, in which farm workers look for signs such as reduced appetite, frequent grunting, vulvar swelling, and mounting of other sows. The Back Pressure Test (BPT) is also commonly used: a person applies pressure to the sow's back and checks whether she stands still with ears erect. Another method uses boar stimulation, in which the sow's reaction to boar contact and to boar pheromones is observed to determine estrus status.

With the aid of modern information technologies, precision management in animal husbandry has become achievable, making intelligent sensing and real-time monitoring of animal production possible and driving the development of low-cost, high-efficiency production models. Radio Frequency Identification (RFID) technology has been widely applied to individual identification, target tracking, and traceability, further improving the precision and efficiency of livestock management [22,23,24]. With visual sensors and neural network technologies, key information such as animal growth status and physiological behaviors can be observed non-invasively and without causing stress [25]. As a core production force in animal husbandry, pigs directly affect economic benefits, and their posture and behavior are crucial indicators of health status and animal welfare. Researchers have also made significant advances in applying neural network models to animal behaviors such as feeding, drinking, fighting, and posture [26,27,28,29].

In summary, the development of precision animal husbandry is still limited: many farms rely on traditional methods of operation, the degree of intelligence is low, and pig behavior models still have many deficiencies. High-tech sensors and large models are now further driving the intelligence of livestock management. On this basis, this paper combines deep learning models with video detection technology to analyze pig behavior, enabling large-scale video monitoring that runs alongside normal pig production. The goal is to provide scientific evidence and technical support for the modernization of animal husbandry through precise monitoring and comprehensive analysis of various pig behaviors. Without disturbing normal production, the approach enables systematic collection of information during the production process, so that potential issues can be identified and resolved in a timely manner.

2. Materials and Methods

2.1. Environment and Animals

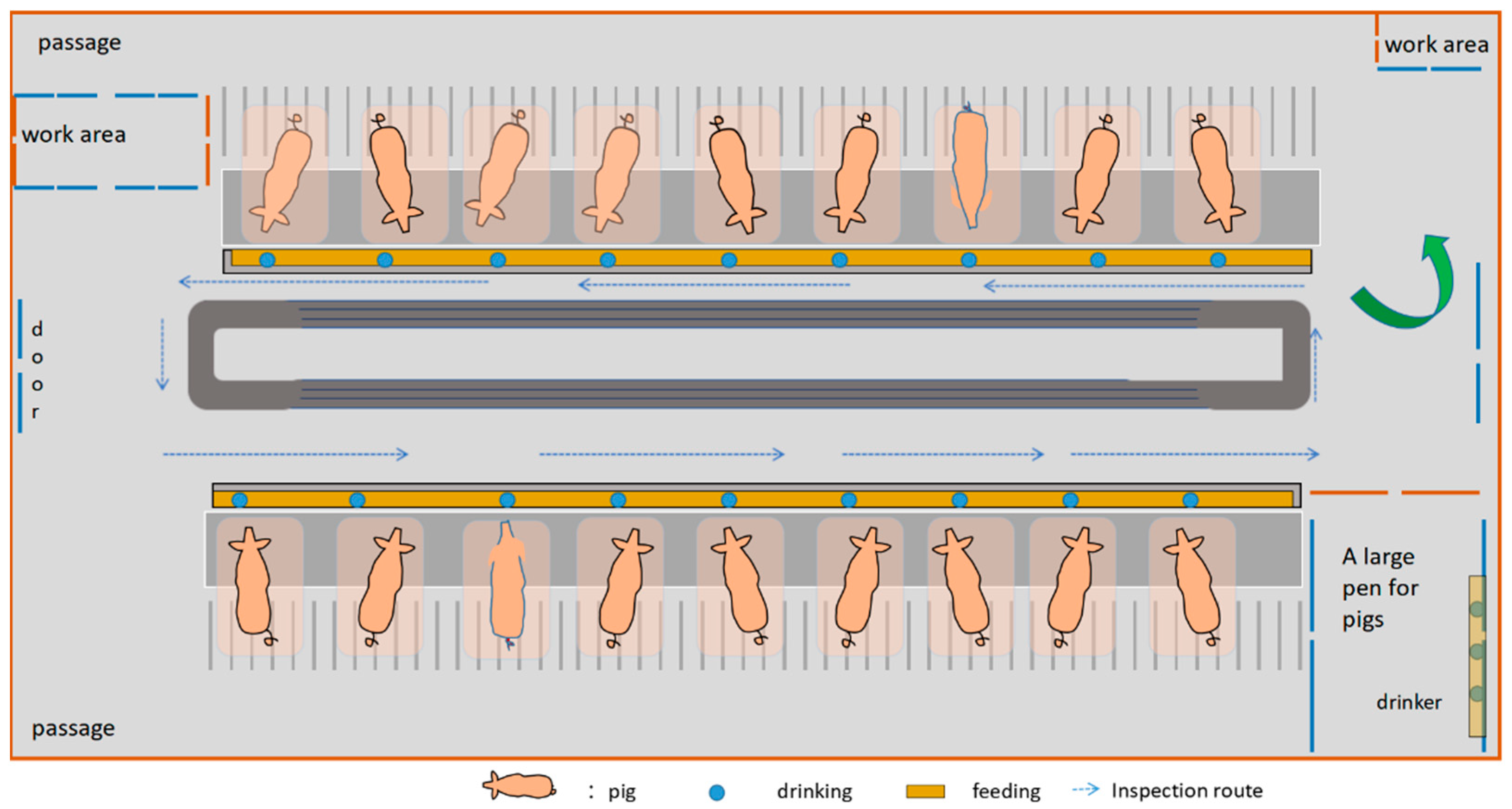

This study was conducted from 2020 to 2024 at the Core Breeding Center of the Chongqing Academy of Animal Science Farm and in Shandong, China. The experimental subjects were 68 Canadian Landrace sows in their 2nd to 3rd parity, each weighing approximately 250 kg. The pig house was equipped with a complete feeding system and good ventilation, providing the pigs with a comfortable environment. Video data were collected for each pig at fixed points in the pig house. The dataset was divided into training, validation, and test sets at a ratio of 7:2:1 for model training, tuning, and evaluation. The data collection platform consists mainly of an edge computing device and an intelligent imaging camera. The computer processor is an Intel® Core™ i7-4210M CPU @ 2.60 GHz, with 16 GB of RAM. For model training, we set up a high-performance laboratory server with an Intel® Core™ i7-10700F CPU @ 2.90 GHz and 32 GB GDDR6X. Figure 1 gives an overall overview of data acquisition.
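The 7:2:1 split can be sketched as follows. This is a minimal illustration, not the authors' code: the clip identifiers, the fixed random seed, and the helper name `split_clips` are hypothetical, and the paper does not state whether clips were split at random or by sow.

```python
import random

def split_clips(clip_ids, weights=(7, 2, 1), seed=0):
    """Shuffle clip IDs and split them into train/validation/test by integer weights."""
    ids = list(clip_ids)
    random.Random(seed).shuffle(ids)  # fixed seed -> reproducible split
    total = sum(weights)
    n_train = len(ids) * weights[0] // total
    n_val = len(ids) * weights[1] // total
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]  # any rounding remainder goes to the test set
    return train, val, test

# Hypothetical clip names, for illustration only
train, val, test = split_clips([f"clip_{i:03d}" for i in range(100)])
print(len(train), len(val), len(test))  # 70 20 10
```

Splitting by clip is the simplest option; if several clips come from the same sow, grouping them by sow before splitting would keep near-duplicate footage out of the test set.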

2.2. Pig Behavior and Video Analysis

With the rapid advancement of deep learning, real-time video-based monitoring has emerged as both a focal point and a challenge in the study of pig behavior. Analyzing pig behavior not only enhances farm management efficiency and biosecurity but also plays a crucial role in advancing intelligent livestock farming. AI-driven video-based pig behavior recognition represents an innovative, efficient, sustainable, and health-conscious approach to farming, significantly improving the quality of management and production efficiency while optimizing traditional rearing practices.

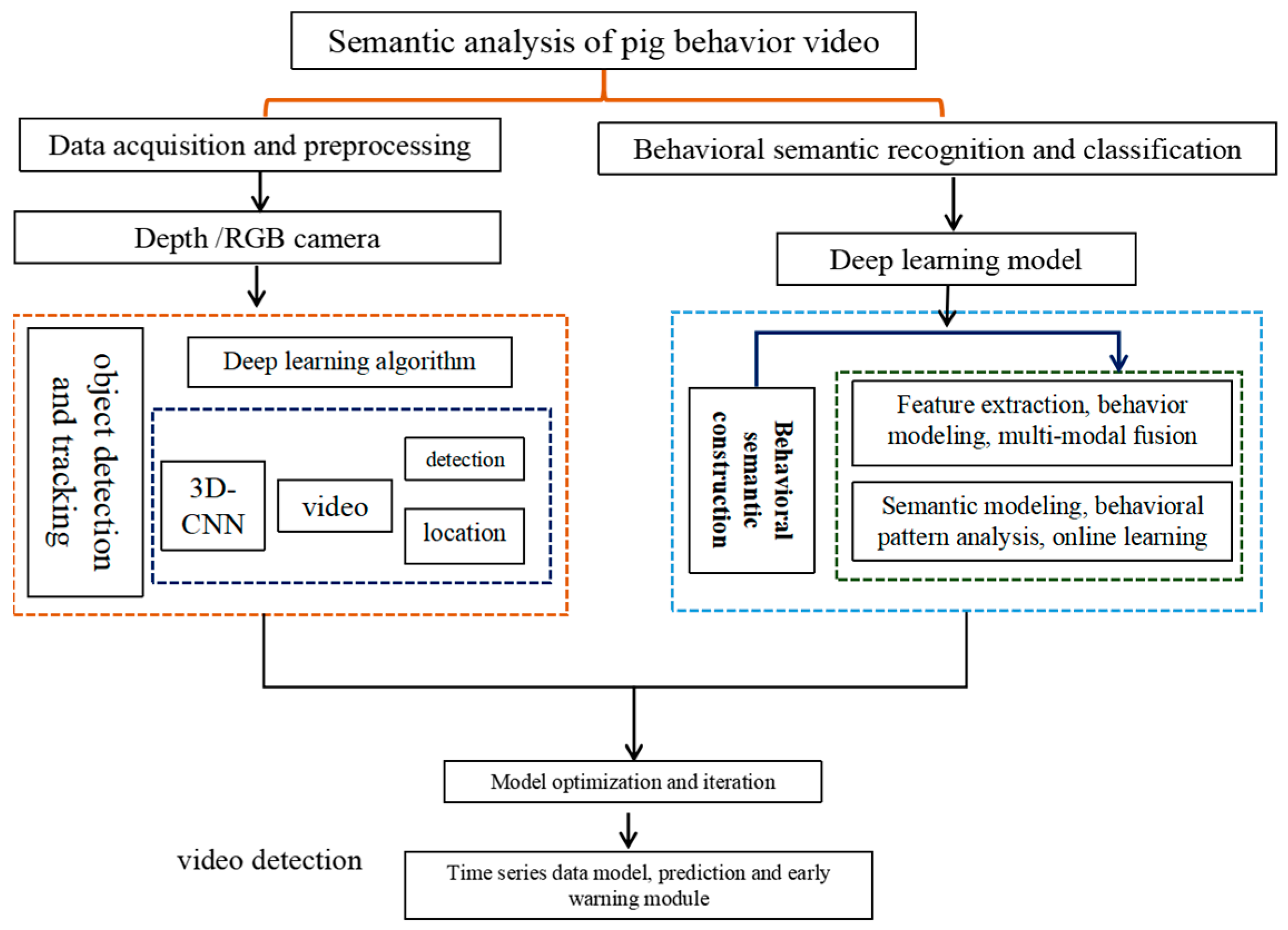

Through behavior recognition technologies, farm personnel can monitor key aspects of pigs in real time, such as behavioral patterns, movement trajectories, feeding and drinking habits, and disease risks. However, this field faces numerous challenges. Data collection is difficult and prone to background interference, behavioral features are often subtle and easily affected by stress responses, and hardware devices tend to have slow response times. Meanwhile, the high costs associated with high-performance equipment further hinder widespread adoption. This study proposes a video-based semantic behavior recognition method using a 3D convolutional neural network (3D-CNN) to analyze pig behaviors. Additionally, a cross-temporal video behavior analysis model based on the 3D-CNN was developed to improve the accuracy and efficiency of behavior analysis. Finally, an integrated model combining posture estimation and behavior detection is presented, enabling a more comprehensive and precise approach to monitoring pig behavior. The study specifically focuses on video semantic analysis of pig behavior, utilizing 3D-CNN for behavior detection and localization while constructing a behavioral semantic recognition model. The analysis covers key aspects such as feature extraction, behavior modeling, and multimodal fusion.

Figure 2 shows the technical roadmap of the overall study.

Implementing intelligent monitoring in pig housing requires not only tracking physiological behaviors of pigs but also achieving human–machine collaboration, which can significantly enhance production efficiency. However, the uncertainty and randomness of sow behavior pose considerable challenges for data collection and model development. After data collection, it is essential to perform data cleaning and annotation, as this process helps improve model accuracy and ensures reliable behavior recognition.
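Cleaning and preparing annotated clips could look like the sketch below. It assumes each annotation record is a `(clip_id, num_frames, label)` tuple and that every clip is reduced to 30 frames, the clip length implied by the `(batch, 30, feature_map_size)` shape in Section 3.1; the record format, the rule of discarding clips shorter than 30 frames, and the function names are illustrative assumptions.

```python
VALID_LABELS = {"SOB", "SOS", "SOC", "SOW"}

def sample_frame_indices(num_frames, clip_len=30):
    """Pick clip_len frame indices spread evenly over a video of num_frames frames.

    Returns None when the video is shorter than clip_len (such clips are dropped).
    """
    if num_frames < clip_len:
        return None
    step = num_frames / clip_len
    # take the centre frame of each of clip_len equal-width segments
    return [int(step * i + step / 2) for i in range(clip_len)]

def clean_annotations(records, clip_len=30):
    """Keep records with a known behavior label and enough frames."""
    kept = []
    for clip_id, num_frames, label in records:
        if label not in VALID_LABELS:
            continue  # unknown or mistyped label
        indices = sample_frame_indices(num_frames, clip_len)
        if indices is None:
            continue  # too short to yield a full clip
        kept.append((clip_id, label, indices))
    return kept

print(clean_annotations([("a", 60, "SOB"), ("b", 10, "SOC"), ("c", 90, "XYZ")])[0][0])  # a
```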

2.3. Data Analysis and Model Establishment

The collected video data were preprocessed in Python 3.7, mainly through video annotation and data augmentation. A 3D convolutional neural network was then constructed, consisting of 3D convolutional layers, pooling layers, fully connected layers, and a Softmax output layer; this model provides a reference for further analysis of pig video semantics. Based on the physiological characteristics of sows, we define four behaviors: fence-biting during the estrus cycle (SOB), head stillness with erect ears during estrus (SOS), sniffing boar pheromones during estrus (SOC), and significant head swinging during estrus (SOW). Sow behavior is a key observational indicator of estrus status and is strongly influenced by physiological conditions and environmental factors. During estrus, sows actively seek out and interact with boars. When a person or a boar applies back pressure, the sow stands still with distinctly erect ears. Sows may also show frequent restlessness, sniffing behavior, and visible vulvar swelling.
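The Softmax output layer turns the network's four raw scores (logits) into class probabilities. The plain-Python sketch below illustrates this step; the label order 0 = SOB, 1 = SOC, 2 = SOS, 3 = SOW follows the numbering given for Figure 9 in Section 3.2, and the logits are made-up values.

```python
import math

# Index order follows the Figure 9 labelling: 0=SOB, 1=SOC, 2=SOS, 3=SOW
BEHAVIORS = ["SOB", "SOC", "SOS", "SOW"]

def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict_behavior(logits):
    """Return the most probable behavior code and the full probability vector."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return BEHAVIORS[best], probs

label, probs = predict_behavior([0.2, 1.5, 3.1, 0.4])  # hypothetical logits
print(label)  # SOS
```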

2.3.1. Three-Dimensional Convolutional Neural Network (3D-CNN)

Deep learning uses artificial neural networks, models loosely inspired by the working principles of the human brain. A neural network is a hierarchical structure of neurons arranged in multiple layers: an input layer, hidden layers, and an output layer. Nonlinear activation functions enable the network to learn complex functions [30]. The main steps are video dataset preparation, model construction, parameter setting, loss calculation, model evaluation, and model optimization. Because a single image cannot capture the motion relationship across consecutive frames, pig actions are difficult to recognize and analyze continuously from still images. To address this, the convolutional neural network is extended to three dimensions by adding a temporal dimension, which allows it to learn spatial and temporal features simultaneously. As such models have evolved, research on livestock behavior monitoring based on skeletal key points has also emerged [31,32,33].
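For the loss-calculation step, a classifier with a Softmax output is typically trained with cross-entropy loss; the paper does not name its loss function, so this choice is an assumption. The sketch computes the mean cross-entropy over a batch from predicted probabilities and integer class labels.

```python
import math

def cross_entropy(probs, target, eps=1e-12):
    """Loss for one clip: negative log-probability assigned to the true class."""
    return -math.log(max(probs[target], eps))  # eps guards against log(0)

def mean_cross_entropy(batch_probs, targets):
    """Average the per-clip losses over a batch."""
    losses = [cross_entropy(p, t) for p, t in zip(batch_probs, targets)]
    return sum(losses) / len(losses)

# A uniform guess over four classes costs log(4) per clip;
# a confident correct prediction costs almost nothing.
batch = [[0.25, 0.25, 0.25, 0.25], [0.97, 0.01, 0.01, 0.01]]
print(mean_cross_entropy(batch, [0, 0]))
```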

A Convolutional Neural Network (CNN) is a type of artificial neural network commonly used for computer vision tasks such as image classification, object detection, and behavior recognition. Because pig behavior videos consist of consecutive frames, adjacent frames are related by motion in both time and space. A 3D-CNN convolves over three dimensions of the input data (width, height, and time), so each convolution spans several adjacent video frames and captures temporal information.
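To make the temporal dimension concrete, the sketch below implements a single-channel 3D convolution in plain Python (stride 1, no padding; like CNN layers, it computes cross-correlation without flipping the kernel). The toy kernel takes the difference between consecutive frames, so it responds only where something changes over time; the volume and kernel values are made up for illustration.

```python
def conv3d_single(volume, kernel):
    """'Valid' 3D convolution of one channel, stride 1.

    volume: nested list indexed [t][y][x] (frames x height x width)
    kernel: nested list indexed [dt][dy][dx]
    """
    T, H, W = len(volume), len(volume[0]), len(volume[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                s = 0.0
                # sum over the kernel window in time, height, and width
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            s += volume[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(s)
            plane.append(row)
        out.append(plane)
    return out

# Three 2x2 frames: one pixel switches on between frame 0 and frame 1.
frames = [[[0, 0], [0, 0]], [[0, 1], [0, 0]], [[0, 1], [0, 0]]]
diff_kernel = [[[-1.0]], [[1.0]]]  # shape 2x1x1: frame[t+1] - frame[t]
print(conv3d_single(frames, diff_kernel))
# [[[0.0, 1.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]]
```

A 2D convolution applied to each frame separately could not produce this output, because no single frame contains the change.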

2.3.2. Analysis and Comparison of 3D-CNN

In the process of model construction, accurately analyzing and monitoring production information is crucial for practical applications. Despite the rapid development of various models, challenges remain in application scenarios within livestock and poultry housing. Firstly, the target subjects, freely moving pigs, exhibit unpredictable behaviors. Secondly, the presence of other individuals in the pig house adds uncertainty, increasing the complexity of the model. Focusing on the estrus inspection workflow in sow housing, we define specific behaviors during the estrus cycle: a sow biting the fence during estrus is classified as SOB, a sow standing still or with ears erect is defined as SOS, a sow sniffing boar pheromones is categorized as SOC, and a sow exhibiting significant head swinging is labeled as SOW. Therefore, it is essential to compare multiple typical behaviors to analyze the recognition of characteristic actions during the estrus cycle, ultimately providing better guidance for production management.

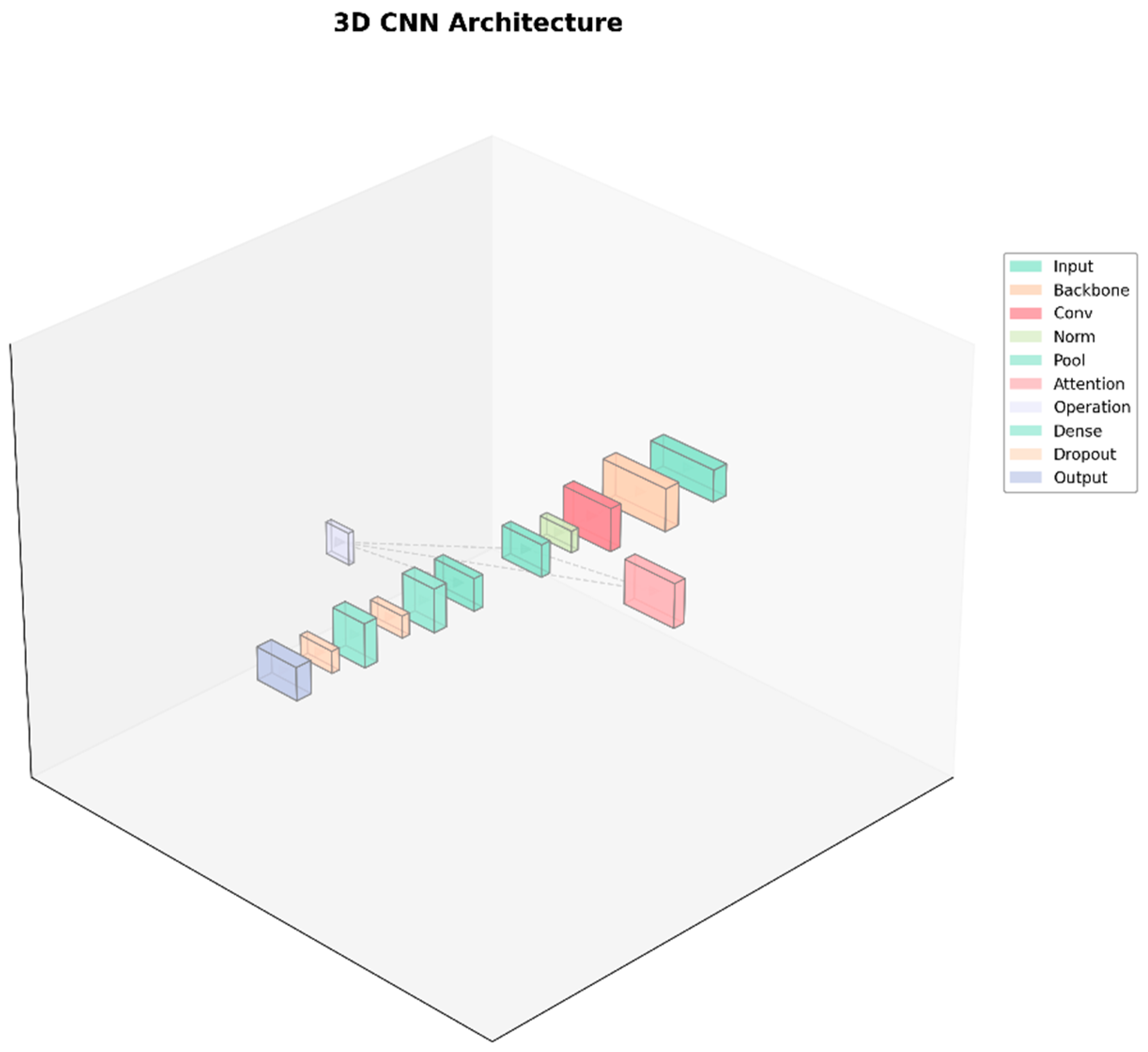

During model training, we performed behavior classification and detection with the 3D-CNN, which we refer to in this study as 3D-CNN-d.

Figure 3 shows the overall three-dimensional structure of the model.

2.3.3. Typical Behavior Semantic Recognition Based on 3D-CNN

Advanced video analysis of pig behavior offers a contactless and stress-free advantage in precision livestock farming while also contributing to emission reduction. Video semantic analysis, particularly in pig behavior recognition, holds significant theoretical research value and broad application potential in real-world scenarios. Since sows move freely and randomly, their behavior exhibits greater diversity and increased intra-species complexity. Moreover, the complex pen structure, lighting variations, and interference from other pigs in the pig house environment further raise application challenges. To address this, we define the following four behaviors: fence-biting during the estrus cycle (SOB), head stillness and ear erect posture during estrus (SOS), sniffing boar pheromones during estrus (SOC), and significant head swinging during estrus (SOW).

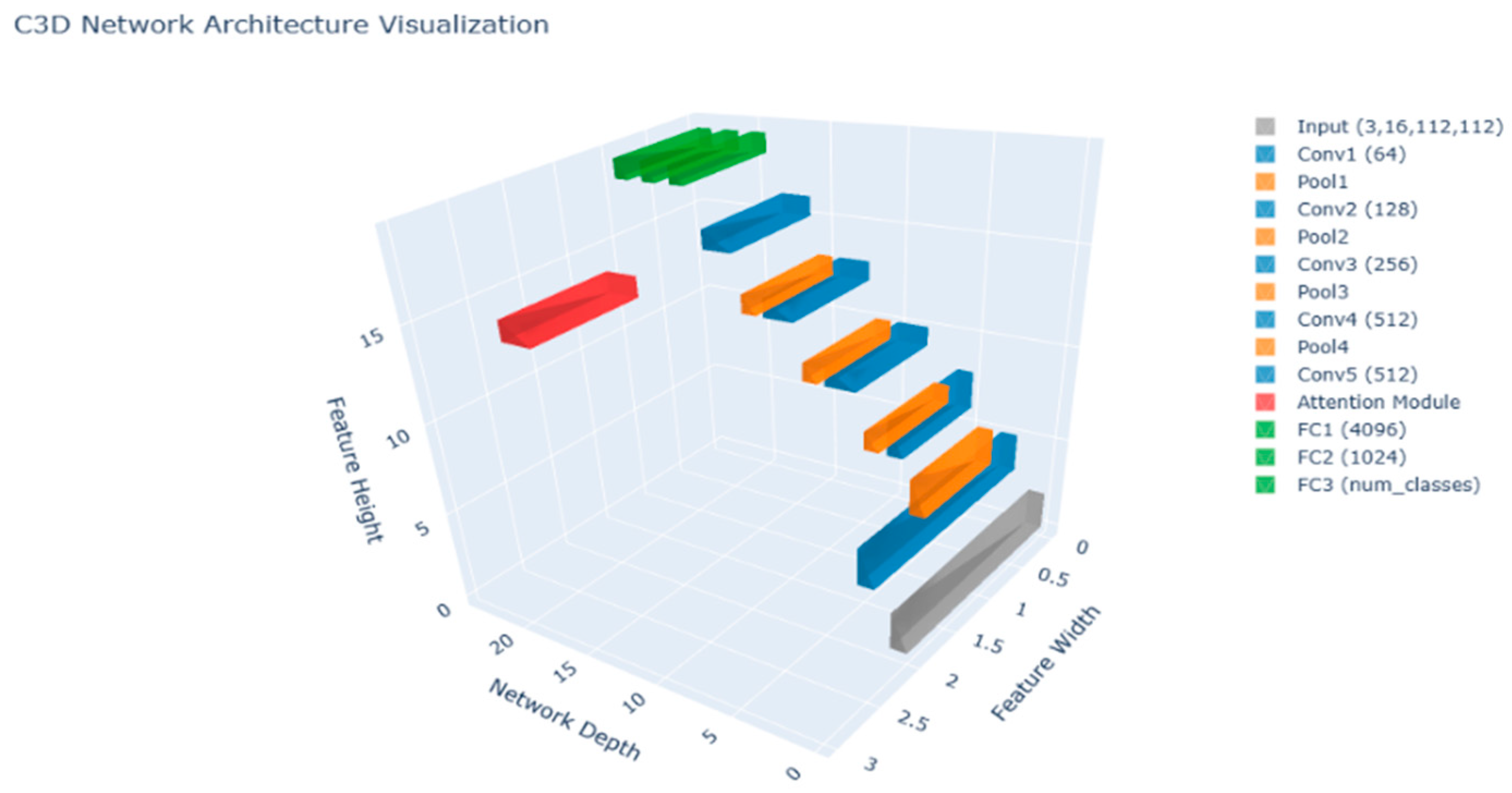

In this study, we constructed an optimized C3D (Convolutional 3D) model for semantic recognition, which improves video action recognition. The optimization focuses on the attention mechanism and residual connections, and the fully connected layers are simplified, which improves training stability and recognition performance. Batch Normalization stabilizes gradient propagation and accelerates convergence. In the attention mechanism, AdaptiveAvgPool3d pools the features from which the attention weights are generated, strengthening responses to key features and improving discriminative ability. In the residual connections, a downsample layer is introduced so that deeper networks can be trained more efficiently.
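The channel-attention and residual ideas can be sketched in plain Python. This is a simplified squeeze-and-excitation-style gate, not the authors' module: each channel's activations are averaged (which is what `AdaptiveAvgPool3d` with output size 1 computes), mapped through a hypothetical per-channel weight and bias and a sigmoid, and used to rescale that channel. A standard SE block would instead pass the pooled vector through two small fully connected layers. Features are represented as one flat list of T·H·W activations per channel.

```python
import math

def channel_attention(features, weights, biases):
    """Simplified SE-style gate; features[c] is a flat list of T*H*W activations.

    1) squeeze: global average per channel (AdaptiveAvgPool3d(1) equivalent)
    2) excite: hypothetical per-channel affine map + sigmoid -> gate in (0, 1)
    3) rescale every activation of the channel by its gate
    """
    gates = []
    for chan, w, b in zip(features, weights, biases):
        pooled = sum(chan) / len(chan)
        gates.append(1.0 / (1.0 + math.exp(-(w * pooled + b))))
    scaled = [[v * g for v in chan] for chan, g in zip(features, gates)]
    return scaled, gates

def residual_add(branch, shortcut):
    """Identity shortcut: element-wise sum of the block output and its input."""
    return [[a + s for a, s in zip(bc, sc)] for bc, sc in zip(branch, shortcut)]

feats = [[2.0, 4.0], [0.0, 0.0]]  # two channels, two activations each (toy values)
scaled, gates = channel_attention(feats, weights=[1.0, 1.0], biases=[-3.0, 0.0])
print(gates)                         # [0.5, 0.5]
print(residual_add(scaled, feats))   # [[3.0, 6.0], [0.0, 0.0]]
```

When a block changes the number of channels or the resolution, the identity shortcut no longer matches the branch output; that is the case a downsample layer handles, in ResNet-style networks usually with a strided 1×1×1 convolution.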

Figure 4 shows the overall three-dimensional structure of the model.

3. Results and Discussion

3.1. Model Processing

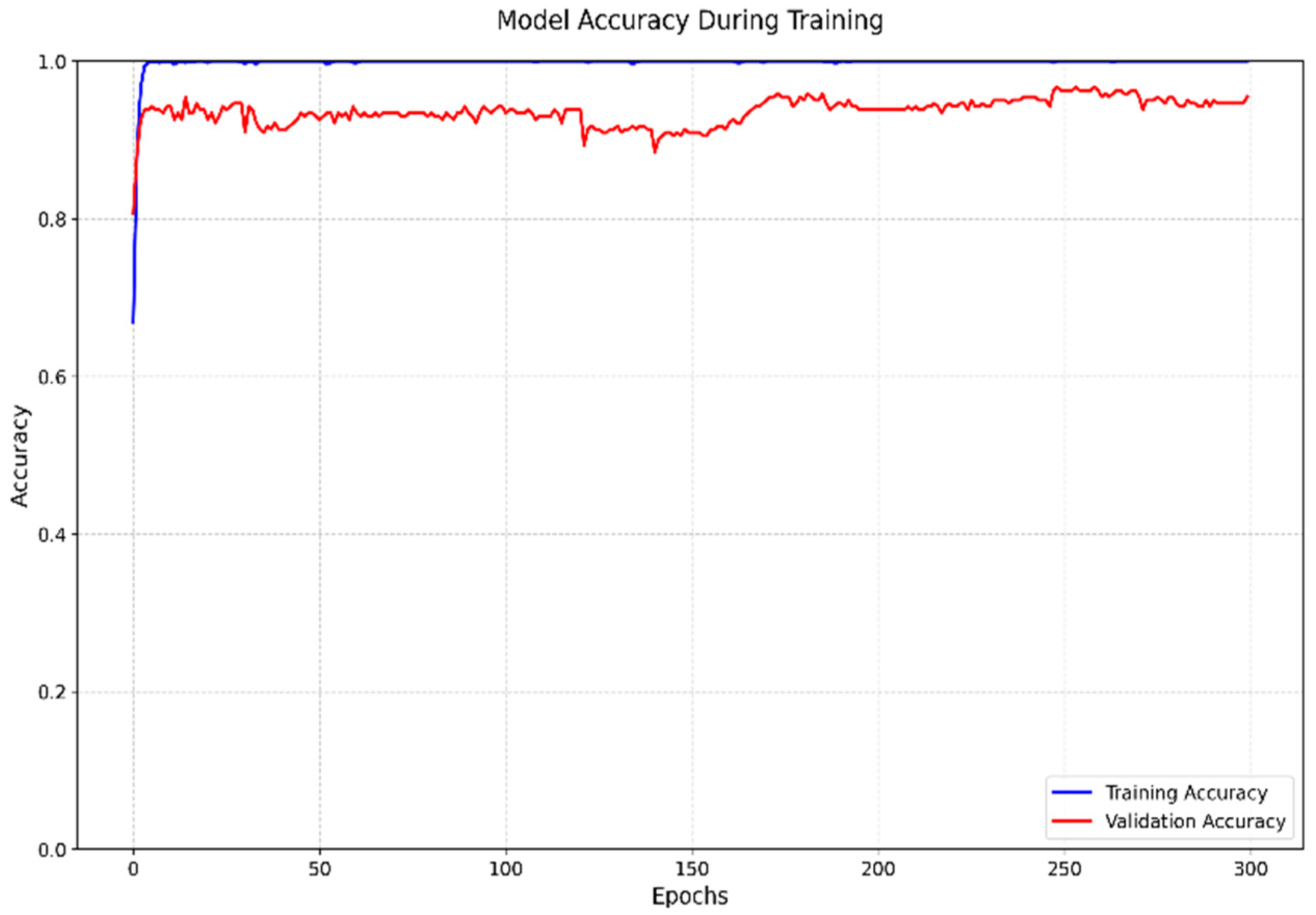

For the classification and detection of four typical behaviors during the sow estrus cycle, processing was carried out mainly in Python. We constructed a 3D-CNN model for classifying typical actions, with ResNet50V2 serving as the feature extractor and a 3D convolutional layer (Conv3D) for spatiotemporal feature learning. The model consists of five core components, and its central idea is spatiotemporal feature extraction that integrates a 2D-CNN (ResNet50V2), a 3D-CNN, and an attention mechanism. ResNet50V2 extracts spatial features, and TimeDistributed applies ResNet to each frame independently, producing an output of shape (batch, 30, feature_map_size). Conv3D learns dynamic features between frames, capturing behavioral patterns along the temporal dimension with a focus on changes in sow behavior (e.g., head swinging, standing still). The attention mechanism emphasizes key moments, strengthening crucial estrus behavior frames while reducing interference from irrelevant actions (e.g., normal movement, resting). Finally, the classification head uses global average pooling (GlobalAveragePooling3D) to reduce computational complexity and a fully connected Dense layer with Softmax to output the probabilities of the categories SOB, SOC, SOS, and SOW. The training and validation accuracy curves are shown in Figure 5. The model achieves an overall accuracy of approximately 96%, but SOB is often misclassified as SOW. In real-world scenarios, fence-biting and excessive restlessness in sows tend to overlap, leading to misclassification; during estrus, sows may also become restless, which in turn leads to fence-biting.
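The role of the attention step, giving more weight to informative frames, can be illustrated with simple softmax attention pooling over per-frame feature vectors. This is a generic sketch under stated assumptions, not the model's actual layer: the `query` vector stands in for learned attention parameters, and the toy features are made up.

```python
import math

def temporal_attention_pool(frame_features, query):
    """Weight per-frame feature vectors by softmax(score) and sum them.

    frame_features: list of T vectors, one per frame
    query: vector of the same length, a stand-in for learned attention parameters
    """
    scores = [sum(f * q for f, q in zip(vec, query)) for vec in frame_features]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # stable softmax over frames
    total = sum(exps)
    alphas = [e / total for e in exps]
    dim = len(frame_features[0])
    pooled = [sum(a * vec[d] for a, vec in zip(alphas, frame_features)) for d in range(dim)]
    return pooled, alphas

frames = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]  # toy 2-D features for 3 frames
_, uniform = temporal_attention_pool(frames, [0.0, 0.0])
_, focused = temporal_attention_pool(frames, [10.0, 0.0])
print(uniform)  # equal weights when the query carries no preference
print(focused)  # almost all weight on the first frame
```

With 30 frames per clip, `frame_features` would hold 30 vectors, matching the temporal length of the TimeDistributed output described above.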

Figure 5 shows the accuracy curves of model training and validation. The model performs well on the training set and reaches high accuracy on the validation set, which can meet production demands.

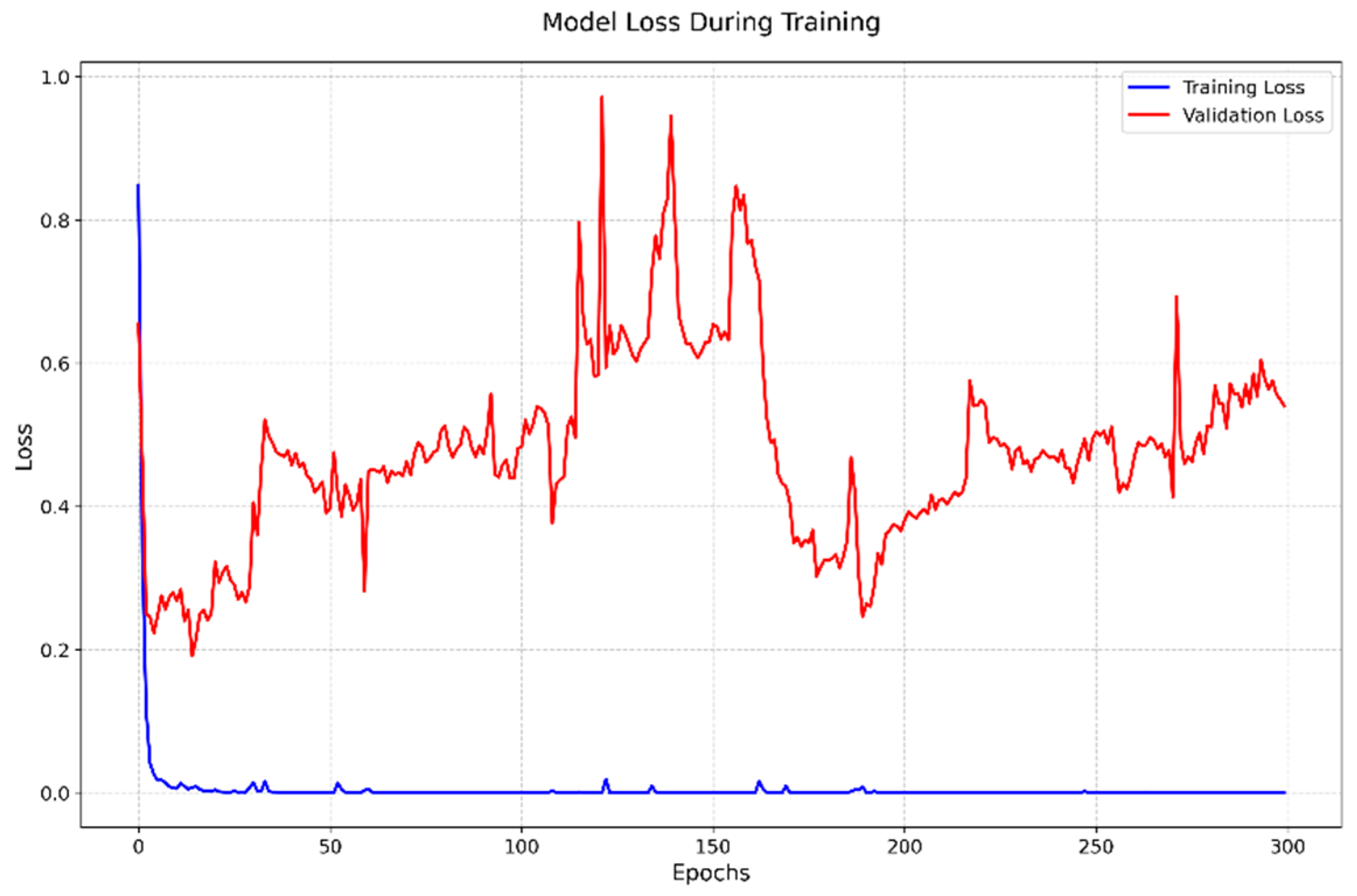

The following figure shows the loss curve of model training and the confusion matrix diagram of the model.

Figure 6 shows changes in the loss curve in the training and verification process of the model, and

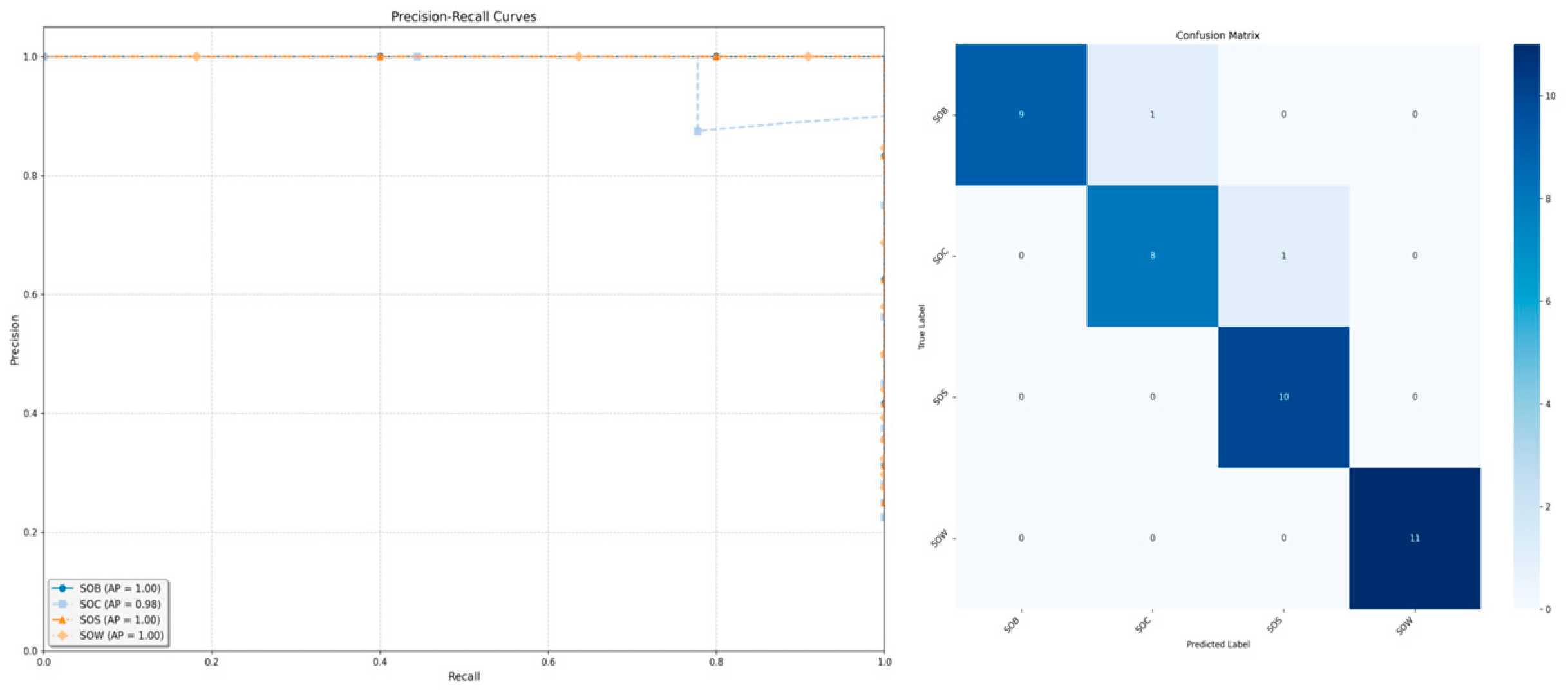

Figure 7 the confusion matrix diagram of the model. In actual sow production, because the sow is in the estrus cycle, the sow’s large agitation and gnawing railings will overlap, so there will be misjudgments.

3.2. Semantic Recognition of Typical Behaviors of Sows During the Estrus Cycle

Estrus detection in sows is one of the most critical aspects of reproductive management. In sow production, farm staff must accurately determine whether a sow is in estrus so that she is bred at the optimal time, thereby improving embryo implantation rates and PSY (piglets per sow per year). In practice, the free and random nature of pig behavior leads to significant irregularity and behavioral overlap, which poses considerable challenges for model-based detection. Traditional behavioral observation still relies on human visual assessment and is limited by subjective judgment. To address this, we defined typical behaviors for semantic recognition during the sow estrus cycle: fence-biting during estrus (SOB), head stillness with ears erect (SOS), sniffing boar pheromones (SOC), and distinct head swinging (SOW). Video behavior recognition combined with deep learning models can be widely applied in estrus behavior monitoring and early warning systems, enhancing the automation and intelligence of sow production management.

To this end, we built a 3D-CNN for typical behavior semantic recognition, covering data loading, training, and validation. The C3D model consists of five 3D convolutional layers and three fully connected layers, and each convolutional layer is followed by a 3D max pooling layer (3D MaxPooling). The core training techniques are automatic mixed-precision (AMP) training and gradient optimization. The model shows strong spatiotemporal feature extraction capability and, combined with these training techniques, accomplishes the target task effectively.
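The effect of stacking five convolution and pooling stages on the clip shape can be traced with simple arithmetic. The sketch assumes a 16-frame 112 × 112 input, 3 × 3 × 3 convolutions with padding 1 and stride 1, a first pooling layer that keeps the temporal length (1 × 2 × 2), and 2 × 2 × 2 pooling afterwards, as in the original C3D design; the paper does not report these settings, so they are assumptions, and AMP is not modeled.

```python
def out_len(size, kernel=3, stride=1, padding=1):
    """Output length along one axis for a convolution or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_c3d_shapes(t=16, h=112, w=112):
    """Return the (time, height, width) shape after each conv + max-pool stage."""
    # Pooling kernels per stage; pool1 keeps the temporal length (assumed, C3D-style)
    pools = [(1, 2, 2), (2, 2, 2), (2, 2, 2), (2, 2, 2), (2, 2, 2)]
    shapes = []
    for pt, ph, pw in pools:
        # 3x3x3 convolution, padding 1, stride 1: shape unchanged
        t, h, w = out_len(t), out_len(h), out_len(w)
        # max pooling with kernel == stride and no padding
        t = out_len(t, kernel=pt, stride=pt, padding=0)
        h = out_len(h, kernel=ph, stride=ph, padding=0)
        w = out_len(w, kernel=pw, stride=pw, padding=0)
        shapes.append((t, h, w))
    return shapes

print(trace_c3d_shapes())
# [(16, 56, 56), (8, 28, 28), (4, 14, 14), (2, 7, 7), (1, 3, 3)]
```

Multiplying the final (t, h, w) by the channel count of the last stage gives the length of the vector fed to the fully connected layers.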

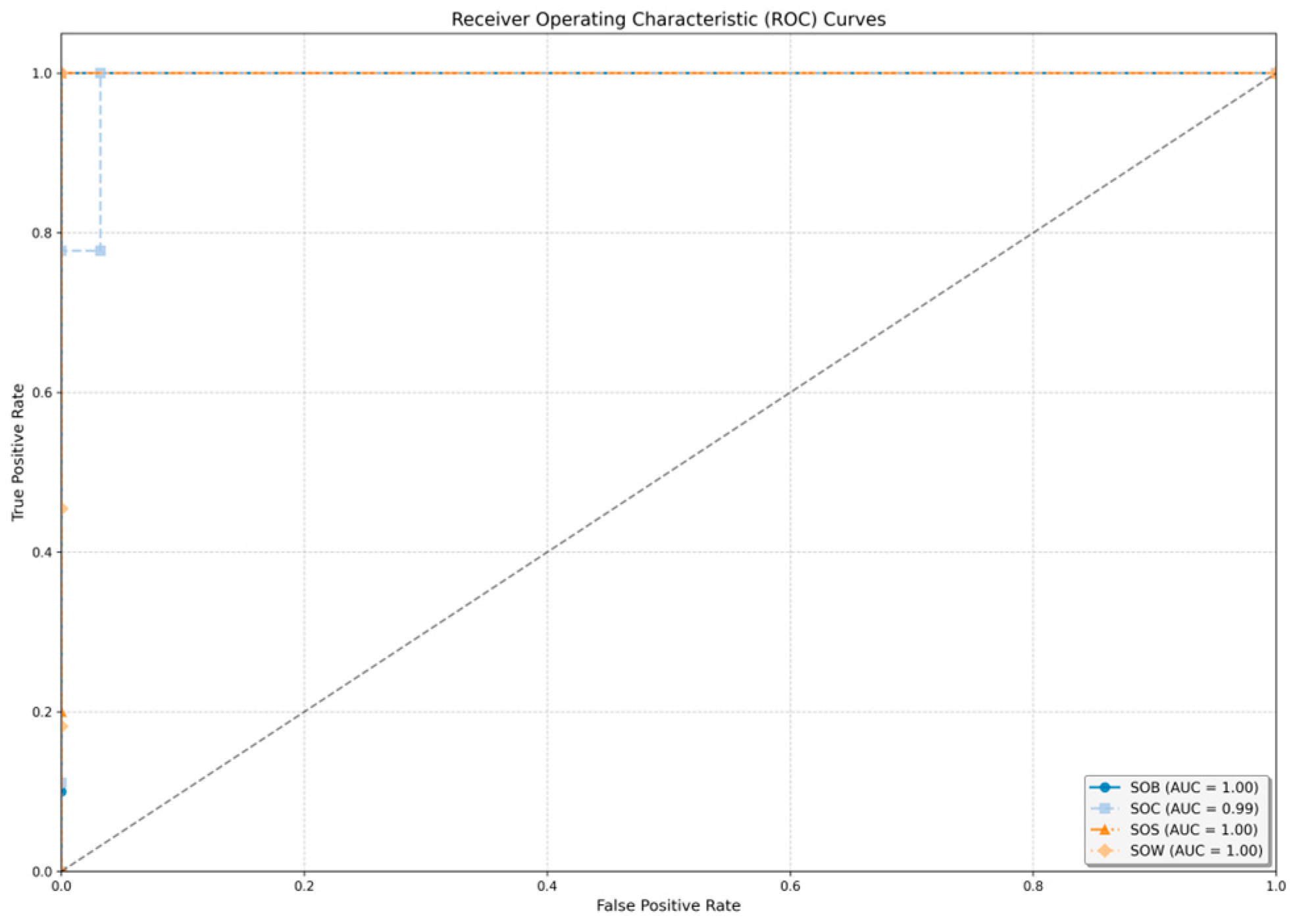

Figure 8 presents the ROC curves of the 3D-CNN for typical behavior semantic recognition. In Figure 9, the labels 0, 1, 2, and 3 represent SOB, SOC, SOS, and SOW, respectively.
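For a four-class model, ROC curves such as those in Figure 8 are usually drawn one-vs-rest, treating each behavior in turn as the positive class. As a minimal sketch (not the plotting code used for the figure), the area under each curve can be computed from the Softmax probabilities with the rank-based Mann-Whitney formulation, using the label order 0 = SOB, 1 = SOC, 2 = SOS, 3 = SOW.

```python
def roc_auc(scores, positives):
    """Area under the ROC curve via the Mann-Whitney U statistic.

    scores: predicted probability of the positive class for each clip
    positives: matching booleans (True = clip belongs to the class)
    AUC = P(random positive scores above random negative); ties count 1/2.
    """
    pos = [s for s, p in zip(scores, positives) if p]
    neg = [s for s, p in zip(scores, positives) if not p]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative clip")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def one_vs_rest_auc(prob_rows, labels, num_classes=4):
    """Per-class AUC: class k is positive, all other classes are negative."""
    return [roc_auc([row[k] for row in prob_rows], [y == k for y in labels])
            for k in range(num_classes)]

# Toy check: perfectly separated scores give AUC 1.0 for every class
probs = [[0.7, 0.1, 0.1, 0.1], [0.1, 0.7, 0.1, 0.1],
         [0.1, 0.1, 0.7, 0.1], [0.1, 0.1, 0.1, 0.7]]
print(one_vs_rest_auc(probs, [0, 1, 2, 3]))  # [1.0, 1.0, 1.0, 1.0]
```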

3.3. Typical Behavior Classification and Semantic Recognition Evaluation of Sows

The 3D-CNN model constructed in this study has significant advantages over traditional 2D-CNN or LSTM models, as it combines a 2D-CNN (ResNet50V2) and a 3D-CNN to enhance spatiotemporal modeling. ResNet50V2 extracts spatial information from each frame and enables rapid convergence. The 3D-CNN captures behavioral patterns along the temporal dimension, while TimeDistributed reduces computational complexity. More importantly, using ResNet50V2 reduces the number of parameters in the 3D-CNN and accelerates training. These improvements make sow estrus behavior detection more robust. The model achieves a relatively high overall recognition rate, although SOW has the lowest precision because fence-biting (SOB) clips are often misclassified as SOW. Overall, as shown in Table 1, the model performs strongly and achieves good detection accuracy when validated with videos collected in real-world scenarios. It performs particularly well on SOB, SOC, and SOS, while the precision for SOW is 0.86. This slight reduction is due to the overlap between fence-biting (SOB) and large head movements (SOW) exhibited by sows during the estrus cycle. Table 1 and Table 2 show the results for the four typical behaviors.
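Per-class precision, recall, and F1-score of the kind reported in Table 1 can be derived from a confusion matrix such as Figure 7. The sketch below uses a hypothetical matrix (not the paper's data) in which one SOB clip is predicted as SOW, reproducing the error pattern described above; this single error lowers SOB recall and SOW precision at the same time.

```python
BEHAVIORS = ["SOB", "SOC", "SOS", "SOW"]

def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def per_class_metrics(cm, names=BEHAVIORS):
    """cm[i][j] = number of clips whose true class is i and predicted class is j."""
    n = len(cm)
    results = {}
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        results[names[k]] = (precision, recall, f1_score(precision, recall))
    return results

# Hypothetical counts (rows = true class, columns = predicted class)
cm = [[9, 0, 0, 1],
      [0, 10, 0, 0],
      [0, 0, 10, 0],
      [0, 0, 0, 10]]
for name, (p, r, f) in per_class_metrics(cm).items():
    print(f"{name}: precision={p:.2f} recall={r:.2f} F1={f:.2f}")

# Consistency check on a reported row: SOB precision 1.00, recall 0.90 -> F1 0.95
print(round(f1_score(1.00, 0.90), 2))  # 0.95
```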

Other researchers have also studied pig behavior, but there are few reports on SOB, SOC, SOS, and SOW during estrus. Zhang's study, Real-time Sow Behavior Detection Based on Deep Learning, focuses primarily on drinking, urination, and mounting behaviors [34]; Zhou developed a behavior detection method for sows and piglets during lactation based on an inspection robot, aiming to reduce labor costs [35]; and Zhang, in Automated Video Behavior Recognition of Pigs Using Two-Stream Convolutional Networks, achieved an average accuracy of 98.99% for behaviors such as feeding, lying down, walking, scratching, and crawling. However, comprehensive video analysis of sow behavior across different cycles is still lacking. The model proposed in this study better supports the analysis of sow behavior across cycles, offering certain advantages over other researchers' work [36].

In production piggeries, the model can analyze sow behavior from collected physiological behavior data. By simulating typical sow behaviors and integrating them into a digital twin platform, real physiological behaviors can be mapped into the virtual world. Using historical data, the entire estrus cycle of a sow can be simulated and mapped to analyze and determine the optimal breeding time. Farmers can then analyze and operate remotely based on (near) real-time estrus behavior data and typical-cycle physiological behavior information, reducing African swine fever prevention and control risks while achieving efficient, sustainable, and healthy precision sow farming.
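As a toy illustration of using historical data, the next estrus onset of a sow can be projected from her recorded onset dates. The helper below is hypothetical and far simpler than a digital twin: it averages the intervals between past onsets and falls back to the typical 21-day cycle stated in the Introduction when fewer than two onsets are recorded. It predicts onset only, not the optimal breeding time within estrus.

```python
from datetime import date, timedelta

def predict_next_estrus(onsets, default_cycle_days=21):
    """Project the next estrus onset from one sow's past onset dates.

    Uses the mean interval between recorded onsets; with a single record
    it falls back to the typical 21-day cycle.
    """
    if not onsets:
        raise ValueError("need at least one recorded onset")
    onsets = sorted(onsets)
    if len(onsets) >= 2:
        gaps = [(b - a).days for a, b in zip(onsets, onsets[1:])]
        cycle = sum(gaps) / len(gaps)
    else:
        cycle = default_cycle_days
    return onsets[-1] + timedelta(days=round(cycle))

# Hypothetical onset history for one sow
history = [date(2024, 1, 1), date(2024, 1, 22), date(2024, 2, 12)]
print(predict_next_estrus(history))  # 2024-03-04
```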

3.4. Future Application Scenarios and Shortcomings

The behavior of pigs is an important indicator of their health; in sows, it can also reflect reproductive performance and PSY (pigs per sow per year). However, traditional operational models do little to improve the intelligence of operations. Given the threat of African Swine Fever (ASF) and low levels of automation, the model presented in this paper can effectively monitor sow behavior and mitigate the risks associated with ASF prevention and control. Farm and research personnel can analyze data and make informed decisions remotely, significantly improving the operational efficiency and intelligence of pig farms and enhancing the economic benefits of larger farms. However, this study still has some limitations: ongoing ASF control measures make farm access difficult, predicting sow behavior remains challenging, and the current model depends heavily on hardware, which leads to high deployment costs in field applications. Future work could address these issues through further data collection and on-site validation with lower-cost hardware.

4. Conclusions

This study uses optoelectronic video technology and a deep learning-based 3D-CNN model designed specifically for the sow estrus cycle. The model uses convolutional neural networks to classify and semantically recognize four key behaviors: fence-biting during estrus (SOB), head stillness with ears erect (SOS), sniffing boar pheromones during estrus (SOC), and significant head swinging during estrus (SOW). The 3D-CNN model successfully recognizes these behaviors, with precision, recall, and F1-score of (1.00, 0.90, 0.95) for SOB, (0.96, 0.98, 0.97) for SOC, (1.00, 0.96, 0.98) for SOS, and (0.86, 1.00, 0.93) for SOW. Under multi-pig interference and with non-specifically labeled data, the corresponding precision, recall, and F1-score values for SOB, SOC, SOS, and SOW are (1.00, 0.90, 0.95), (0.89, 0.89, 0.89), (0.91, 1.00, 0.95), and (1.00, 1.00, 1.00), respectively. Future research can integrate digital twin technology to reconstruct and simulate sow estrus cycle behavior patterns, providing key technical support and reference for identifying typical behaviors associated with the sow estrus physiological cycle.