Accurate Parcel Extraction Combined with Multi-Resolution Remote Sensing Images Based on SAM

Abstract

1. Introduction

2. Materials and Methods

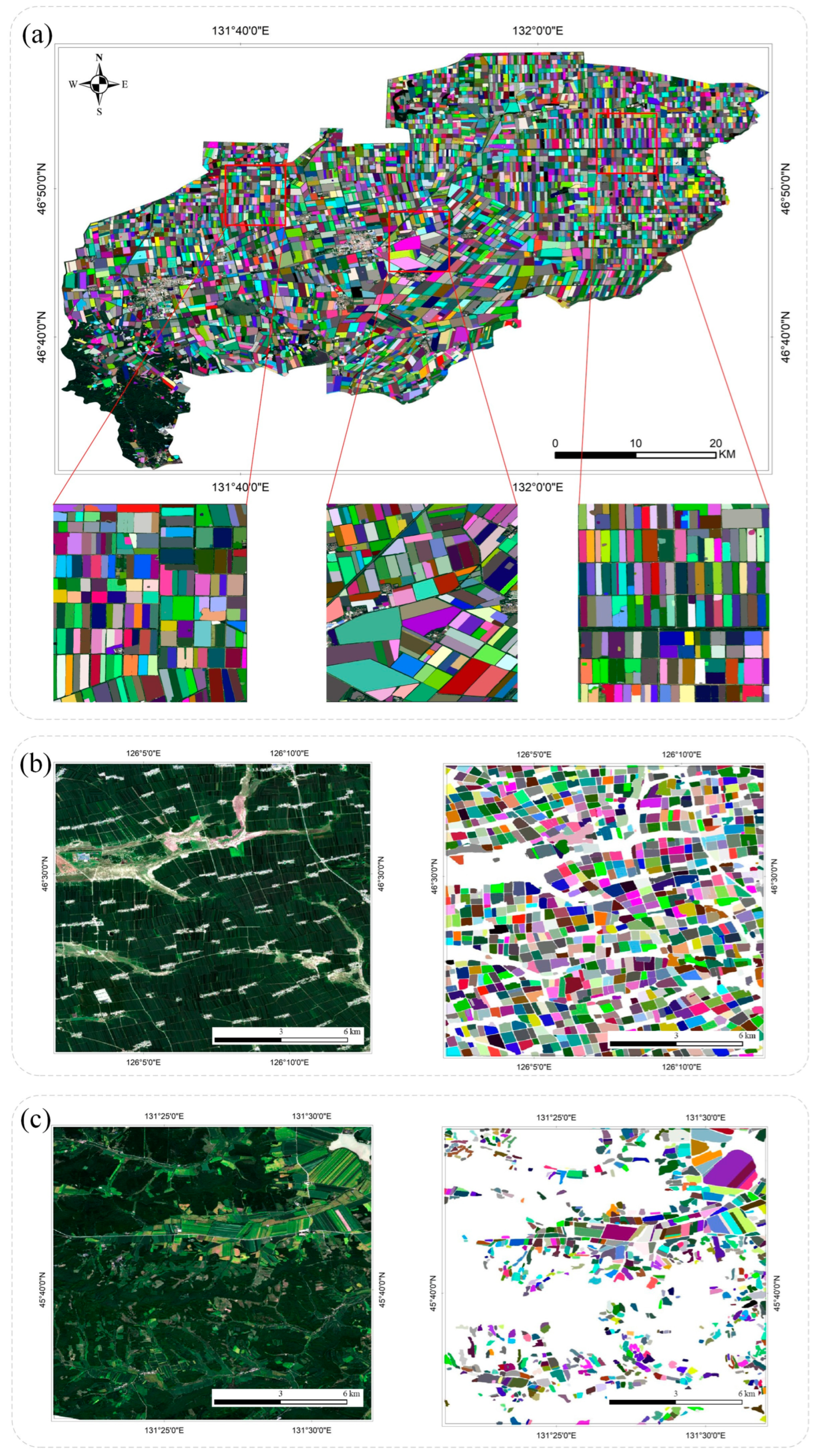

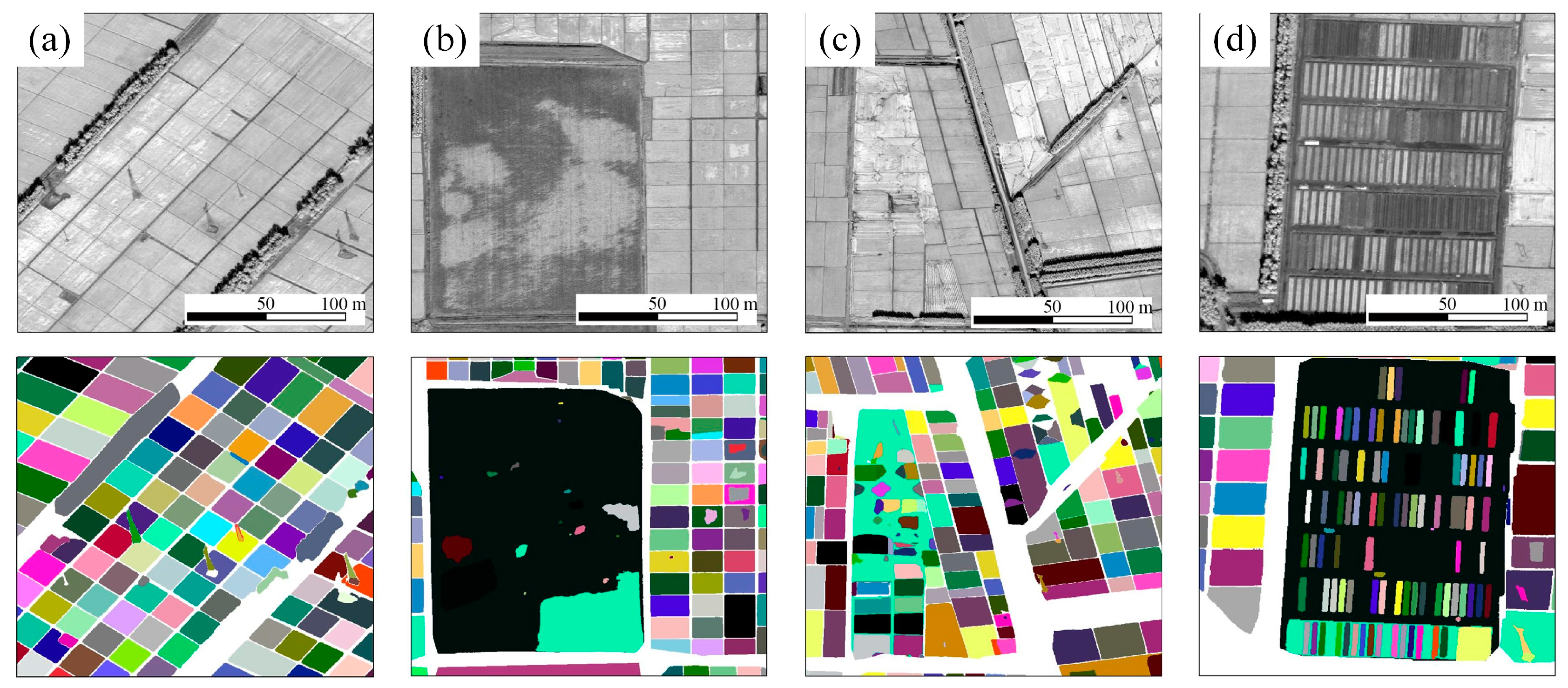

2.1. Study Areas

2.2. Data

2.2.1. Satellite Data and Preprocessing

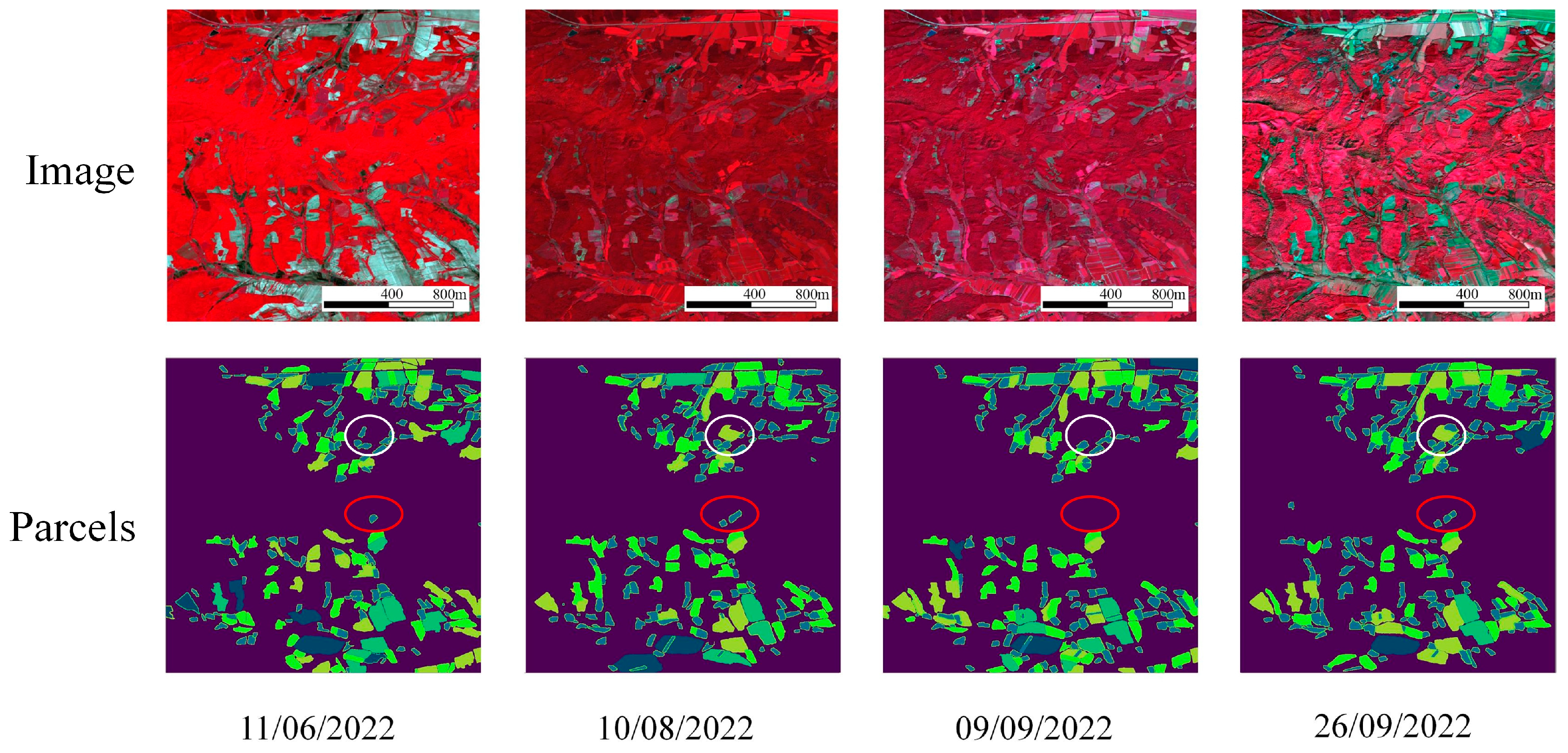

- Sentinel-2 data

- GF-7 data

2.2.2. Auxiliary Data

2.3. Method

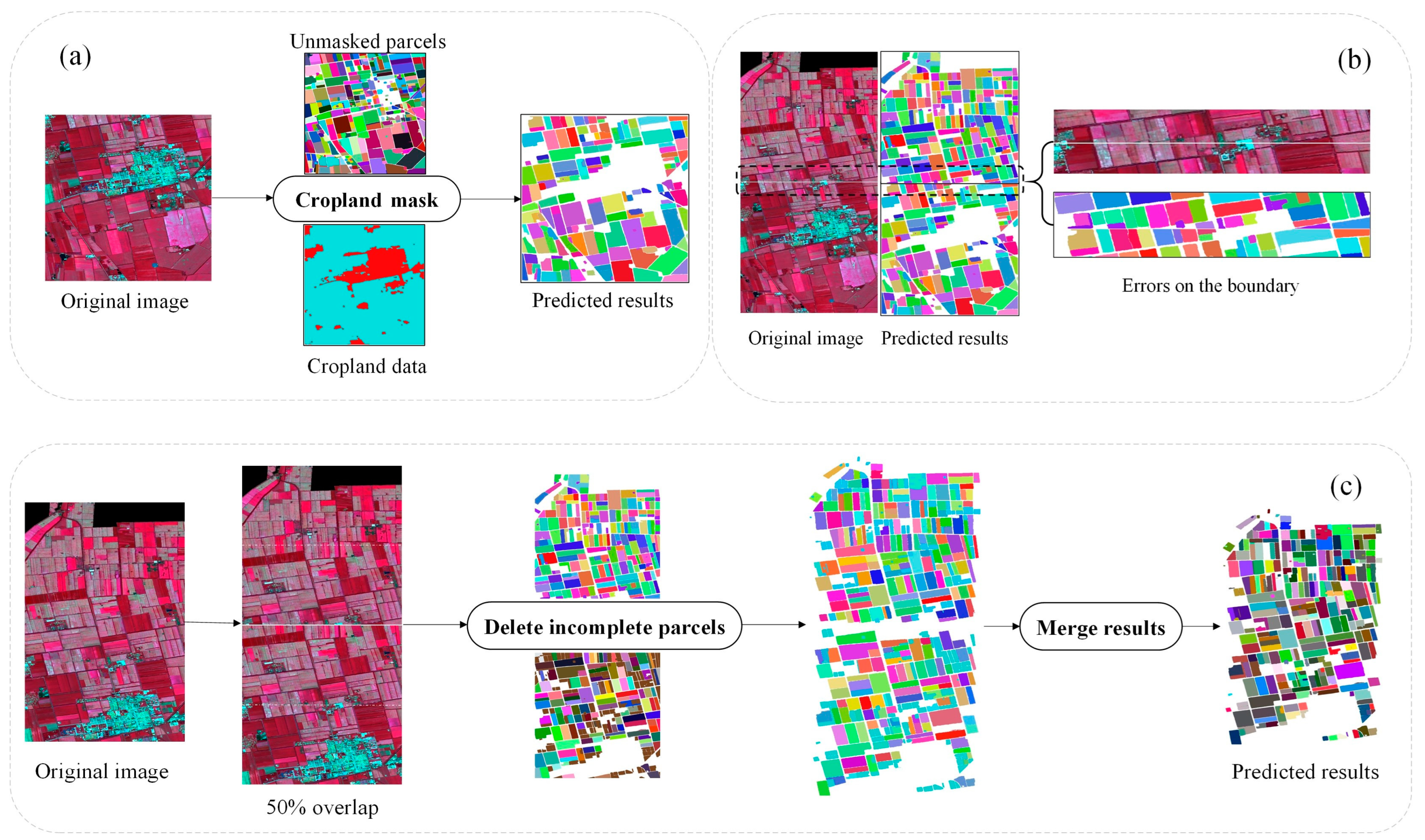

2.3.1. Sentinel-2 Image Parcel Extraction

- Cropland mask

- Overlap prediction
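The outline names an overlap prediction step without detailing it here. One common reading is that a large scene is split into tiles that share a margin, each tile is predicted separately, and the results are merged. The sketch below only generates such tile windows; the function names, tile size, and overlap are illustrative assumptions, not values taken from the article.

```python
def tile_origins(length, tile, overlap):
    """Start offsets of tiles of size `tile` that cover [0, length).

    Neighbouring tiles share `overlap` pixels; the last tile is shifted
    back so that it ends flush with the image edge.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    if length <= tile:
        return [0]
    stride = tile - overlap
    origins = list(range(0, length - tile, stride))
    origins.append(length - tile)  # final tile aligned to the edge
    return origins


def tile_windows(height, width, tile, overlap):
    """(row_start, col_start, row_stop, col_stop) windows for a 2-D image."""
    rows = tile_origins(height, tile, overlap)
    cols = tile_origins(width, tile, overlap)
    return [
        (r, c, min(r + tile, height), min(c + tile, width))
        for r in rows
        for c in cols
    ]


# Hypothetical sizes, chosen only to keep the example small.
windows = tile_windows(height=10, width=4, tile=4, overlap=1)
```

Each window can then be passed to the segmentation model on its own. How overlapping predictions are reconciled (for example, keeping the larger mask or voting per pixel) is a separate design choice that this sketch does not cover.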

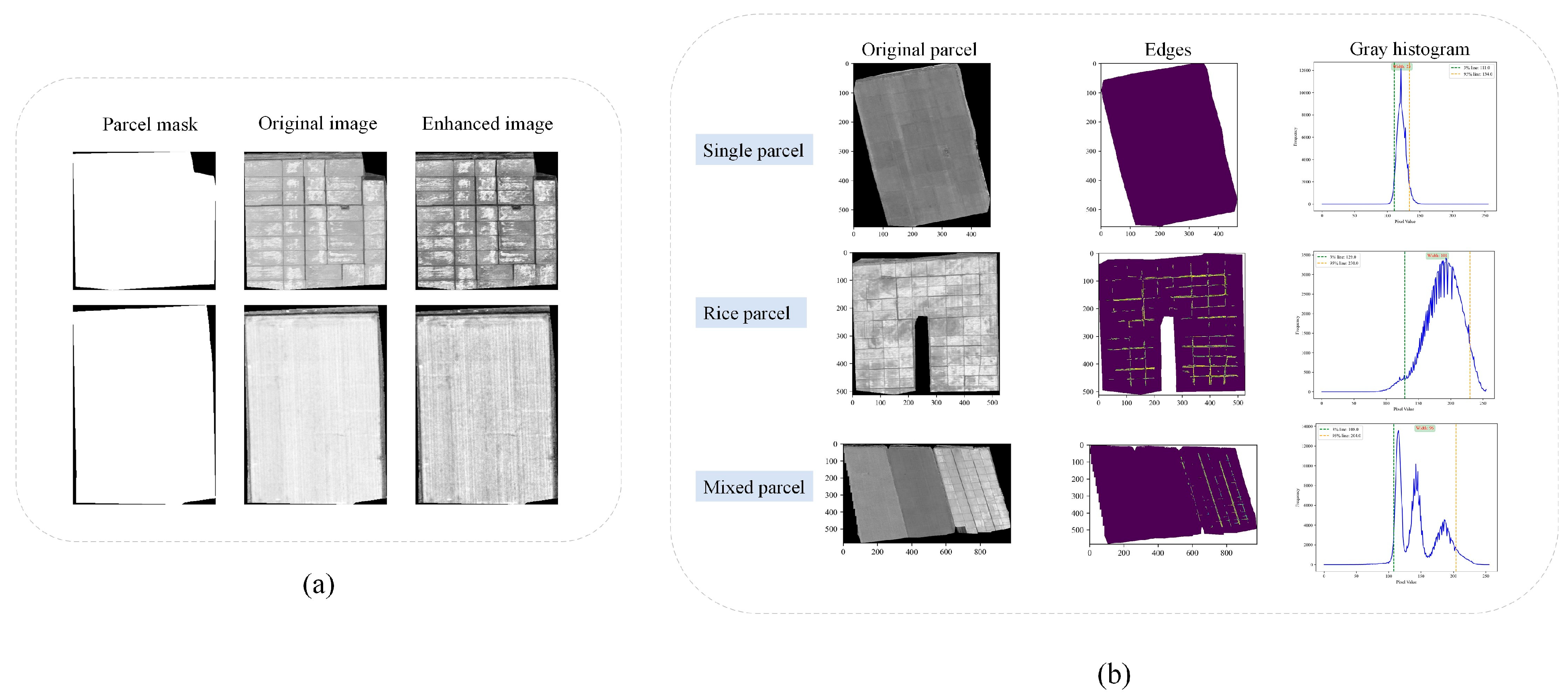

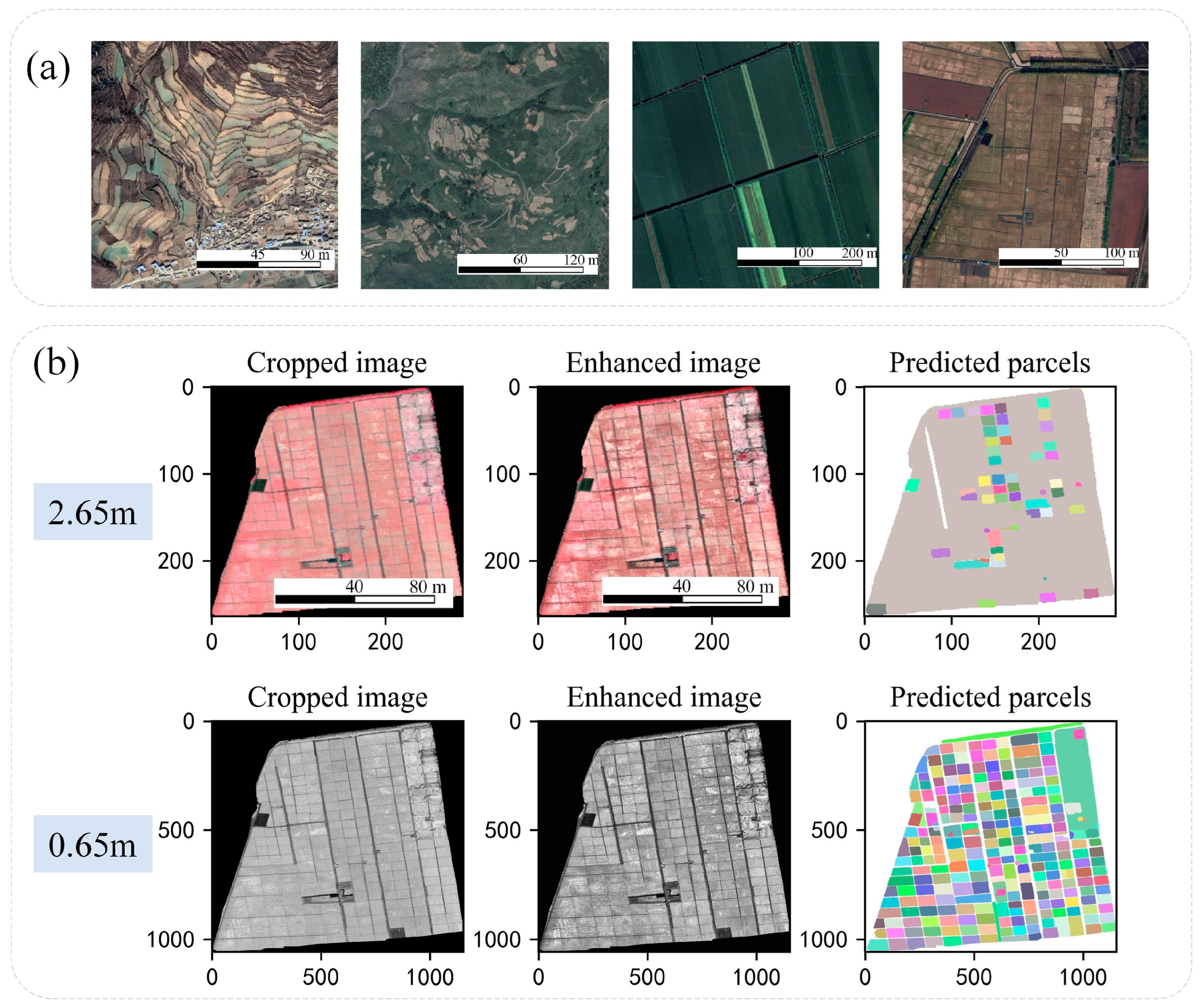

2.3.2. GF-7 Image Parcel Extraction

- Image cropping and histogram equalization

- Downward segmentation features

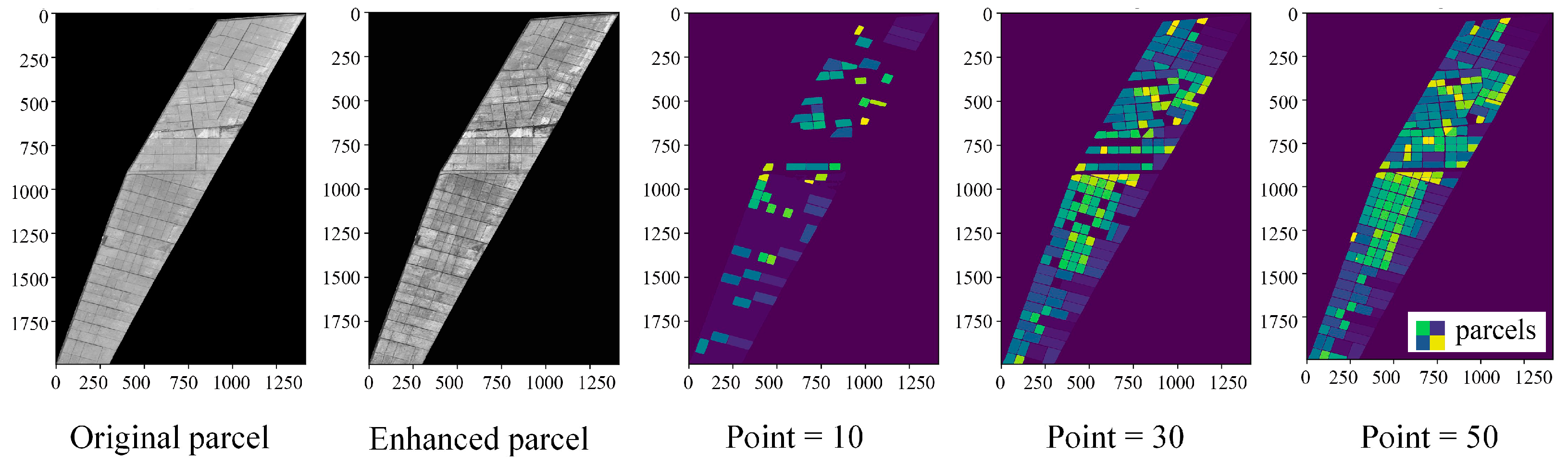

- Parameter adaptation
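The histogram equalization (HE) step listed above can be illustrated with a minimal sketch of plain global HE on 8-bit grey levels. It assumes a flat sequence of integer pixel values and uses the standard cumulative-distribution mapping; the function name is hypothetical, and the article's own preprocessing settings are not shown in this outline.

```python
def equalize_histogram(pixels, levels=256):
    """Global histogram equalization for a flat sequence of integer grey levels."""
    n = len(pixels)
    hist = [0] * levels
    for value in pixels:
        hist[value] += 1

    # Cumulative distribution of grey levels.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    # Smallest non-zero CDF value (the darkest grey level that occurs).
    cdf_min = next((c for c in cdf if c > 0), 0)
    if n == 0 or n == cdf_min:
        return list(pixels)  # empty or constant image: nothing to stretch

    scale = (levels - 1) / (n - cdf_min)
    return [round((cdf[value] - cdf_min) * scale) for value in pixels]


stretched = equalize_histogram([10, 20, 30, 40])
```

CLAHE, also listed in the abbreviations, differs in that the equalization is applied per local tile with a clipped histogram, which limits noise amplification in flat regions.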

2.3.3. Accuracy Assessment
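
The result tables report precision (P), recall (R), F1, and IoU. As a minimal sketch, these can be computed from true-positive, false-positive, and false-negative counts using the standard definitions. Whether the article counts pixels or parcel objects is not stated in this outline, so the counting unit here is an assumption, as is the function name.

```python
def parcel_metrics(tp, fp, fn):
    """Precision, recall, F1, and IoU from confusion counts.

    tp, fp, fn are non-negative counts (assumed here to be pixels).
    Ratios with a zero denominator are reported as 0.0.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"P": precision, "R": recall, "F1": f1, "IoU": iou}


# Hypothetical counts for illustration only.
scores = parcel_metrics(tp=8, fp=2, fn=2)
```

F1 and IoU are computed from the same three counts, so for a single evaluation they rise and fall together, with IoU never exceeding F1.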

3. Results

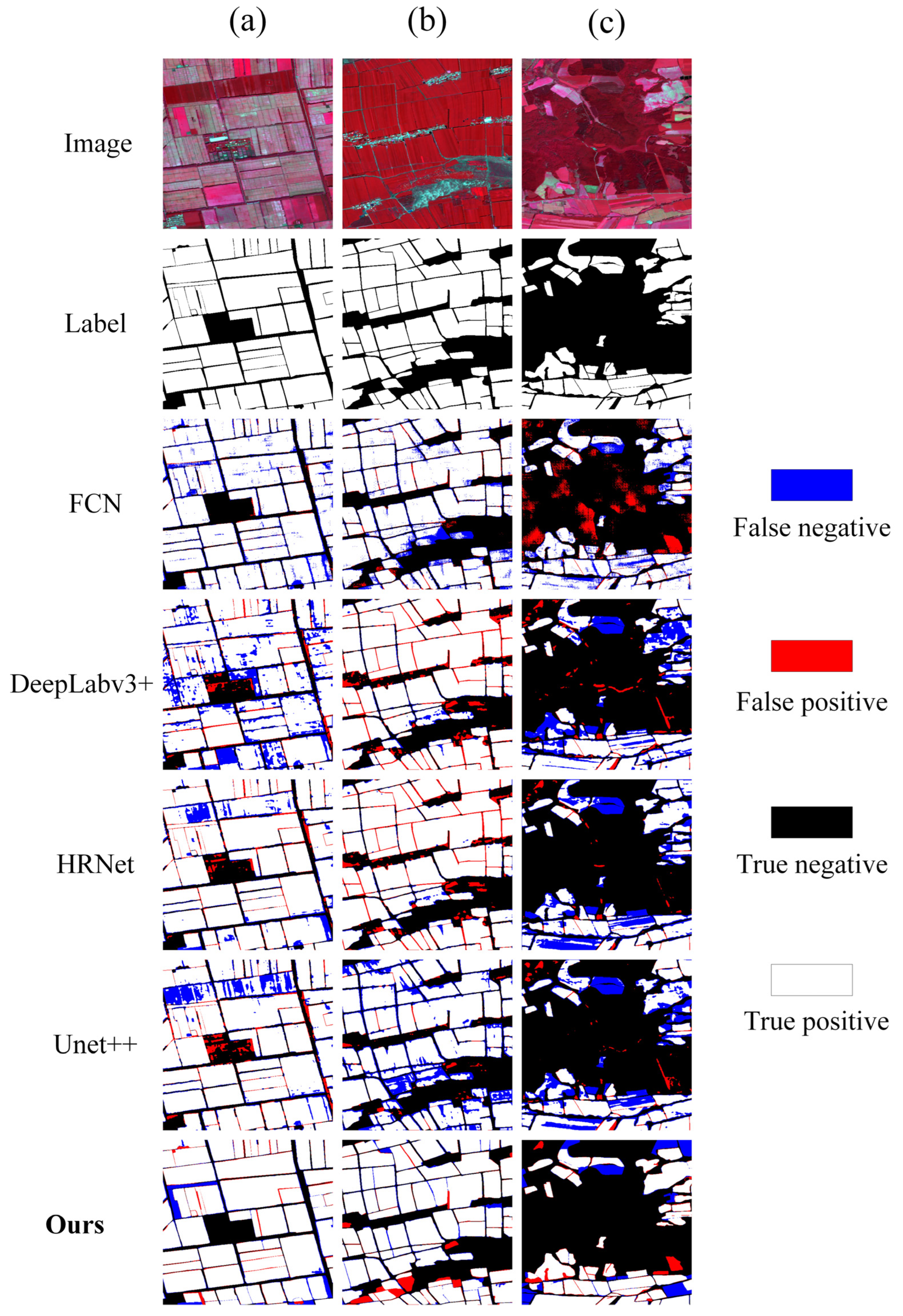

3.1. Accuracy and Spatial Distribution of 10 m Parcels

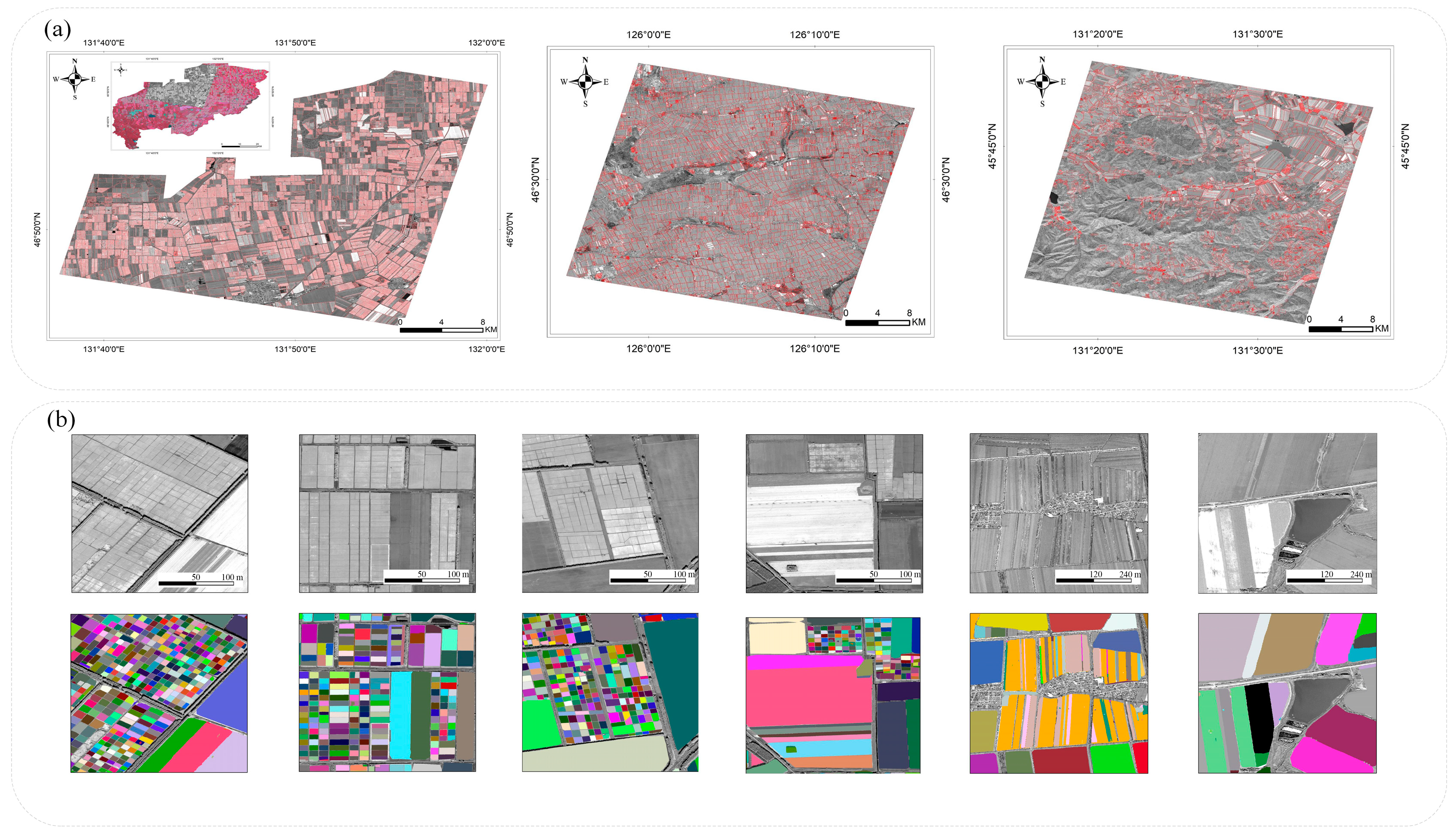

3.2. High-Resolution Parcels

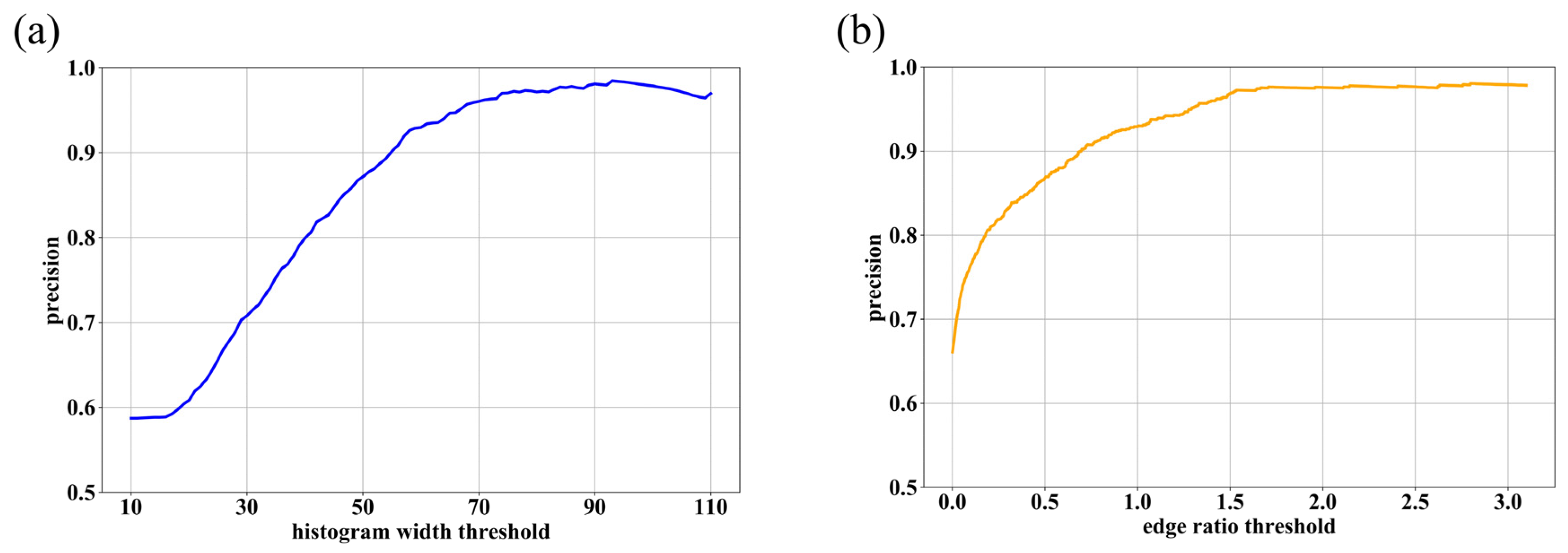

3.2.1. Results of Threshold Selection for Downward Segmentation Features

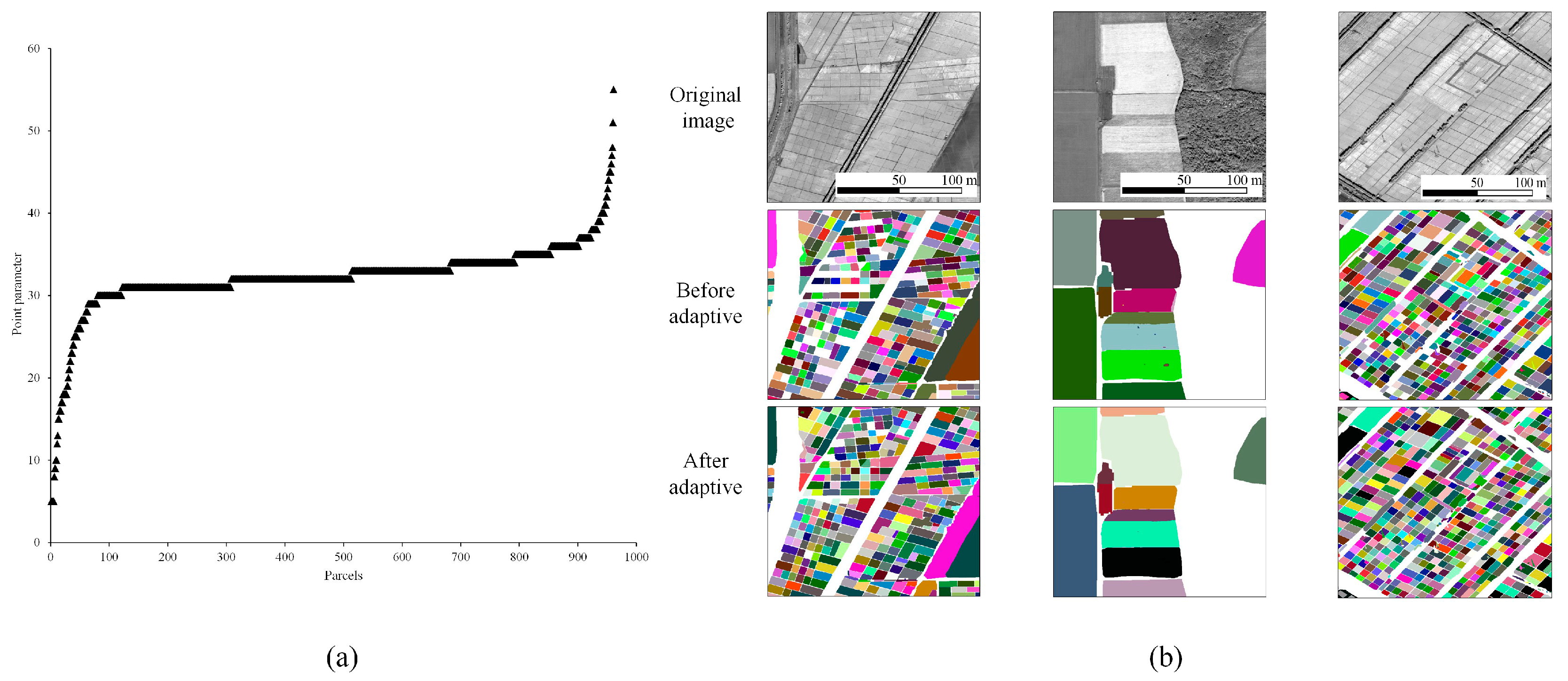

3.2.2. Parameter Adaptation Results

3.2.3. Parcel Extraction Results

4. Discussion

4.1. Advantages of This Method

4.2. Uncertainty Analysis

4.3. The Role of Multi-Temporal Data in Parcel Extraction

4.4. Effects of Different Resolutions on Parcel Extraction

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SAM | Segment Anything Model |
| GEE | Google Earth Engine |
| HE | Histogram equalization |
| CLAHE | Contrast-limited adaptive histogram equalization |
| NDVI | Normalized Difference Vegetation Index |


| Satellite | Resolution | Area | Date (DD/MM/YYYY) |
|---|---|---|---|
| Sentinel-2 | 10 m | Youyi County | 09/09/2022 |
| Sentinel-2 | 10 m | A1 | 16/08/2022 |
| Sentinel-2 | 10 m | A2 | 11/06/2022, 10/08/2022, 09/09/2022, 26/09/2022 |
| GF-7 | 0.65 m | Youyi County | 27/09/2022 |
| GF-7 | 0.65 m | A1 | 01/09/2022 |
| GF-7 | 0.65 m | A2 | 27/09/2022 |

| Model | Youyi P | Youyi R | Youyi F1 | Youyi IoU | A1 P | A1 R | A1 F1 | A1 IoU | A2 P | A2 R | A2 F1 | A2 IoU |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FCN8s | 0.95 | 0.88 | 0.93 | 0.87 | 0.94 | 0.90 | 0.93 | 0.87 | 0.87 | 0.72 | 0.79 | 0.65 |
| DeepLabv3+ | 0.94 | 0.86 | 0.90 | 0.82 | 0.90 | 0.97 | 0.93 | 0.87 | 0.87 | 0.61 | 0.71 | 0.56 |
| HRNet | 0.94 | 0.88 | 0.92 | 0.84 | 0.90 | 0.97 | 0.93 | 0.88 | 0.93 | 0.62 | 0.74 | 0.59 |
| UNet++ | 0.94 | 0.91 | 0.93 | 0.87 | 0.90 | 0.79 | 0.87 | 0.77 | 0.91 | 0.64 | 0.75 | 0.60 |
| Ours | 0.89 | 0.91 | 0.91 | 0.87 | 0.91 | 0.91 | 0.92 | 0.88 | 0.88 | 0.76 | 0.81 | 0.69 |

| Feature | P | R | F1 |
|---|---|---|---|
| Histogram feature (70) | 0.96 | 0.80 | 0.87 |
| Edge feature (1.5%) | 0.96 | 0.89 | 0.93 |
| Histogram feature + edge feature | 0.95 | 0.97 | 0.95 |

| Model | Prediction Time (s) | Sample Sets | Pre-Training |
|---|---|---|---|
| FCN8s | 0.0659 | √ | √ |
| DeepLabv3+ | 0.1902 | √ | √ |
| HRNet | 0.0868 | √ | √ |
| UNet++ | 0.1263 | √ | √ |
| Ours | 1.3645 | × | × |

| Date (DD/MM/2022) | P | ΔP | R | ΔR | F1 | ΔF1 | IoU | ΔIoU |
|---|---|---|---|---|---|---|---|---|
| 11/06 | 0.87 | −0.02 | 0.74 | −0.09 | 0.80 | −0.04 | 0.67 | −0.05 |
| 10/08 | 0.88 | −0.01 | 0.70 | −0.13 | 0.78 | −0.06 | 0.64 | −0.08 |
| 09/09 | 0.88 | −0.01 | 0.76 | −0.07 | 0.81 | −0.03 | 0.69 | −0.03 |
| 26/09 | 0.87 | −0.02 | 0.73 | −0.10 | 0.79 | −0.05 | 0.66 | −0.06 |
| 11/06 + 10/08 + 09/09 + 26/09 | 0.89 | - | 0.83 | - | 0.84 | - | 0.72 | - |
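
The Δ columns in the table above appear to be each single-date score minus the corresponding score from the four-date combination. That reading is inferred from the numbers rather than stated in this outline. A short check using the first single-date row (P 0.87, R 0.74, F1 0.80, IoU 0.67) and the combined row:

```python
def score_deltas(single, combined):
    """Single-date score minus combined score, rounded to two decimals."""
    return {name: round(single[name] - combined[name], 2) for name in combined}


# Values copied from the table: combined row and the first single-date row.
combined = {"P": 0.89, "R": 0.83, "F1": 0.84, "IoU": 0.72}
first_date = {"P": 0.87, "R": 0.74, "F1": 0.80, "IoU": 0.67}

deltas = score_deltas(first_date, combined)
# deltas == {"P": -0.02, "R": -0.09, "F1": -0.04, "IoU": -0.05}
```

Under this reading, every Δ is negative, and recall shows the largest drop when only one acquisition date is used.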

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, Y.; Wang, H.; Zhang, Y.; Du, X.; Li, Q.; Wang, Y.; Shen, Y.; Zhang, S.; Xiao, J.; Xu, J.; Yan, S.; Gong, S.; Hu, H. Accurate Parcel Extraction Combined with Multi-Resolution Remote Sensing Images Based on SAM. Agriculture 2025, 15, 976. https://doi.org/10.3390/agriculture15090976